
SEG 4630 2009-2010 Tutorial 1: Classification (Decision Tree, Naïve Bayes & k-NN)

CHANG Lijun

Classification: Definition
- Given a collection of records (training set)
  - Each record contains a set of attributes; one of the attributes is the class.
- Find a model for the class attribute as a function of the values of the other attributes (e.g., decision tree, Naïve Bayes, k-NN).
- Goal: previously unseen records should be assigned a class as accurately as possible.

Decision Tree
- Goal
  - Construct a tree so that instances belonging to different classes are separated
- Basic algorithm (a greedy algorithm)
  - The tree is constructed in a top-down, recursive manner
  - At the start, all the training examples are at the root
  - Test attributes are selected on the basis of a heuristic or statistical measure (e.g., information gain)
  - Examples are partitioned recursively based on the selected attributes
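The greedy, top-down procedure above can be sketched as an ID3-style recursion. This is a minimal sketch, not the course's reference code: it assumes records are dicts, uses information gain as the selection measure, stops when a node is pure or no attributes remain, and represents a tree as nested dicts `{attribute: {value: subtree}}` with class labels as leaves. The names `entropy`, `info_gain` and `build_tree` are illustrative. The sample rows are the five Outlook = Sunny records of the Play Tennis example used later in this tutorial.

```python
import math
from collections import Counter

def entropy(labels):
    """Info(D) = -sum_i p_i * log2(p_i) over the classes present in `labels`."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())

def info_gain(rows, attr, target):
    """Gain(A) = Info(D) - sum_j |D_j|/|D| * Info(D_j)."""
    remainder = 0.0
    for value in {r[attr] for r in rows}:
        part = [r[target] for r in rows if r[attr] == value]
        remainder += len(part) / len(rows) * entropy(part)
    return entropy([r[target] for r in rows]) - remainder

def build_tree(rows, attributes, target):
    labels = [r[target] for r in rows]
    # Stop if the node is pure or no test attributes are left: predict the majority class.
    if len(set(labels)) == 1 or not attributes:
        return Counter(labels).most_common(1)[0][0]
    # Greedy choice: the attribute with the highest information gain at this node.
    best = max(attributes, key=lambda a: info_gain(rows, a, target))
    rest = [a for a in attributes if a != best]
    # Partition the examples on the chosen attribute and recurse on each partition.
    return {best: {v: build_tree([r for r in rows if r[best] == v], rest, target)
                   for v in sorted({r[best] for r in rows})}}

COLS = ("Outlook", "Temperature", "Humidity", "Wind", "Play")
sunny = [dict(zip(COLS, r)) for r in [
    ("Sunny", ">25", "High", "Weak", "No"),
    ("Sunny", ">25", "High", "Strong", "No"),
    ("Sunny", "15-25", "High", "Weak", "No"),
    ("Sunny", "<15", "Normal", "Weak", "Yes"),
    ("Sunny", "15-25", "Normal", "Strong", "Yes"),
]]

tree = build_tree(sunny, ["Temperature", "Humidity", "Wind"], "Play")
print(tree)  # {'Humidity': {'High': 'No', 'Normal': 'Yes'}}
```

Because the choice is made greedily at each node and never revisited, the result is not guaranteed to be the smallest possible tree.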

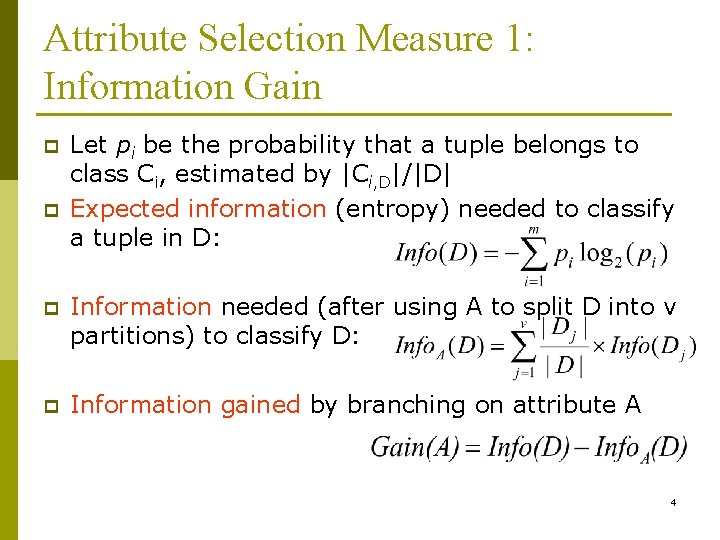

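Attribute Selection Measure 1: Information Gain
- Let $p_i$ be the probability that a tuple in D belongs to class $C_i$, estimated by $|C_{i,D}|/|D|$.
- Expected information (entropy) needed to classify a tuple in D (with m classes):
  $$\text{Info}(D) = -\sum_{i=1}^{m} p_i \log_2(p_i)$$
- Information needed (after using A to split D into v partitions) to classify D:
  $$\text{Info}_A(D) = \sum_{j=1}^{v} \frac{|D_j|}{|D|} \times \text{Info}(D_j)$$
- Information gained by branching on attribute A:
  $$\text{Gain}(A) = \text{Info}(D) - \text{Info}_A(D)$$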
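The three formulas above translate almost line for line into code when each node is described by its per-class counts. A minimal sketch, assuming counts are given as lists such as `[9, 5]` for [9+, 5-]; the function names are illustrative. Classes with zero count are skipped, which implements the usual convention 0 · log2 0 = 0.

```python
import math

def info(counts):
    """Expected information (entropy) of a node given its per-class counts."""
    n = sum(counts)
    # Zero counts contribute nothing (convention 0 * log2 0 = 0).
    return -sum(c / n * math.log2(c / n) for c in counts if c > 0)

def info_after_split(partitions):
    """Info_A(D): weighted entropy of the partitions D_1..D_v produced by A."""
    total = sum(sum(p) for p in partitions)
    return sum(sum(p) / total * info(p) for p in partitions if sum(p) > 0)

def gain(parent, partitions):
    """Gain(A) = Info(D) - Info_A(D)."""
    return info(parent) - info_after_split(partitions)

# Play Tennis root S[9+, 5-] split on Outlook: Sunny [2+,3-], Overcast [4+,0-], Rain [3+,2-]
print(round(info([9, 5]), 3))                            # 0.94
print(round(gain([9, 5], [[2, 3], [4, 0], [3, 2]]), 3))  # 0.247
```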

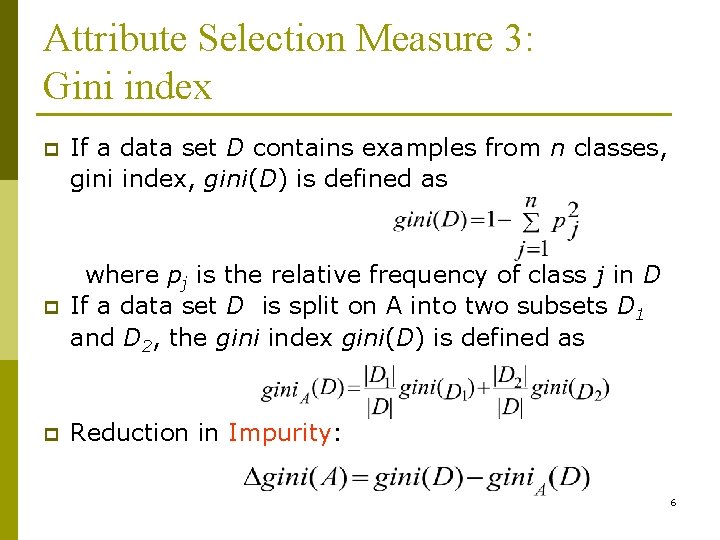

Attribute Selection Measure 2: Gain Ratio
- The information gain measure is biased towards attributes with a large number of values.
- C4.5 (a successor of ID3) uses the gain ratio to overcome this problem (a normalization of information gain):
  $$\text{SplitInfo}_A(D) = -\sum_{j=1}^{v} \frac{|D_j|}{|D|} \log_2\left(\frac{|D_j|}{|D|}\right)$$
  $$\text{GainRatio}(A) = \frac{\text{Gain}(A)}{\text{SplitInfo}_A(D)}$$
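SplitInfo is simply the entropy of the partition sizes, so it can reuse the entropy helper. A minimal sketch, assuming the same count-list representation (names illustrative), applied to the Outlook split of the Play Tennis example (partition sizes 5, 4, 5):

```python
import math

def info(counts):
    n = sum(counts)
    return -sum(c / n * math.log2(c / n) for c in counts if c > 0)

def split_info(partitions):
    """SplitInfo_A(D) = -sum_j |D_j|/|D| * log2(|D_j|/|D|): entropy of the partition sizes."""
    return info([sum(p) for p in partitions])

def gain_ratio(parent, partitions):
    total = sum(parent)
    info_a = sum(sum(p) / total * info(p) for p in partitions if sum(p) > 0)
    return (info(parent) - info_a) / split_info(partitions)

outlook = [[2, 3], [4, 0], [3, 2]]  # Sunny, Overcast, Rain
print(round(split_info(outlook), 3))          # 1.577
print(round(gain_ratio([9, 5], outlook), 3))  # 0.156
```

An attribute with many small partitions gets a large SplitInfo, which pulls its ratio down. Note that SplitInfo is 0 when all tuples fall into a single partition, so a real implementation would need to guard that division.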

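Attribute Selection Measure 3: Gini Index
- If a data set D contains examples from n classes, the gini index gini(D) is defined as
  $$\text{gini}(D) = 1 - \sum_{j=1}^{n} p_j^2$$
  where $p_j$ is the relative frequency of class j in D.
- If a data set D is split on A into two subsets $D_1$ and $D_2$, the gini index $\text{gini}_A(D)$ is defined as
  $$\text{gini}_A(D) = \frac{|D_1|}{|D|}\,\text{gini}(D_1) + \frac{|D_2|}{|D|}\,\text{gini}(D_2)$$
- Reduction in impurity:
  $$\Delta\text{gini}(A) = \text{gini}(D) - \text{gini}_A(D)$$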
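The Gini formulas can be checked with exact rational arithmetic. A minimal sketch, assuming count lists as before (names illustrative), applied to the binary Humidity split of the Play Tennis root (High [3+, 4-], Normal [6+, 1-]); `fractions.Fraction` keeps the results exact.

```python
from fractions import Fraction

def gini(counts):
    """gini(D) = 1 - sum_j p_j^2, with p_j the relative frequency of class j."""
    n = sum(counts)
    return 1 - sum(Fraction(c, n) ** 2 for c in counts)

def gini_split(d1, d2):
    """gini_A(D) = |D1|/|D| gini(D1) + |D2|/|D| gini(D2) for a binary split."""
    n = sum(d1) + sum(d2)
    return Fraction(sum(d1), n) * gini(d1) + Fraction(sum(d2), n) * gini(d2)

root = gini([9, 5])                             # S[9+, 5-]
reduction = root - gini_split([3, 4], [6, 1])   # split on Humidity
print(root)       # 45/98
print(reduction)  # 9/98
```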

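Example

| Outlook | Temperature | Humidity | Wind | Play Tennis |
|---|---|---|---|---|
| Sunny | >25 | High | Weak | No |
| Sunny | >25 | High | Strong | No |
| Overcast | >25 | High | Weak | Yes |
| Rain | 15-25 | High | Weak | Yes |
| Rain | <15 | Normal | Weak | Yes |
| Rain | <15 | Normal | Strong | No |
| Overcast | <15 | Normal | Strong | Yes |
| Sunny | 15-25 | High | Weak | No |
| Sunny | <15 | Normal | Weak | Yes |
| Rain | 15-25 | Normal | Weak | Yes |
| Sunny | 15-25 | Normal | Strong | Yes |
| Overcast | 15-25 | High | Strong | Yes |
| Overcast | >25 | Normal | Weak | Yes |
| Rain | 15-25 | High | Strong | No |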
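The table can be encoded directly as data so that the [p+, n-] counts on the following slides can be checked. A minimal sketch, assuming each record is a dict keyed by hypothetical column names (`Outlook`, `Temperature`, `Humidity`, `Wind`, `Play`); `class_counts` is an illustrative helper. The 14 rows include the Rain, <15, Normal, Weak, Yes record implied by S[9+, 5-] and the per-branch counts.

```python
from collections import Counter

COLS = ("Outlook", "Temperature", "Humidity", "Wind", "Play")
ROWS = [
    ("Sunny", ">25", "High", "Weak", "No"),
    ("Sunny", ">25", "High", "Strong", "No"),
    ("Overcast", ">25", "High", "Weak", "Yes"),
    ("Rain", "15-25", "High", "Weak", "Yes"),
    ("Rain", "<15", "Normal", "Weak", "Yes"),
    ("Rain", "<15", "Normal", "Strong", "No"),
    ("Overcast", "<15", "Normal", "Strong", "Yes"),
    ("Sunny", "15-25", "High", "Weak", "No"),
    ("Sunny", "<15", "Normal", "Weak", "Yes"),
    ("Rain", "15-25", "Normal", "Weak", "Yes"),
    ("Sunny", "15-25", "Normal", "Strong", "Yes"),
    ("Overcast", "15-25", "High", "Strong", "Yes"),
    ("Overcast", ">25", "Normal", "Weak", "Yes"),
    ("Rain", "15-25", "High", "Strong", "No"),
]
data = [dict(zip(COLS, r)) for r in ROWS]

def class_counts(rows, attr):
    """Map each value of `attr` to its (Yes, No) counts, i.e. the [p+, n-] of a branch."""
    counts = {}
    for r in rows:
        yes, no = counts.get(r[attr], (0, 0))
        counts[r[attr]] = (yes + 1, no) if r["Play"] == "Yes" else (yes, no + 1)
    return counts

print(Counter(r["Play"] for r in data))  # Counter({'Yes': 9, 'No': 5})
print(class_counts(data, "Temperature"))  # {'>25': (2, 2), '15-25': (4, 2), '<15': (3, 1)}
```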

![Tree induction example: splitting S on Outlook and Temperature](https://slidetodoc.com/presentation_image_h/2be133c9852cccafba4cf20b274d4c58/image-8.jpg)

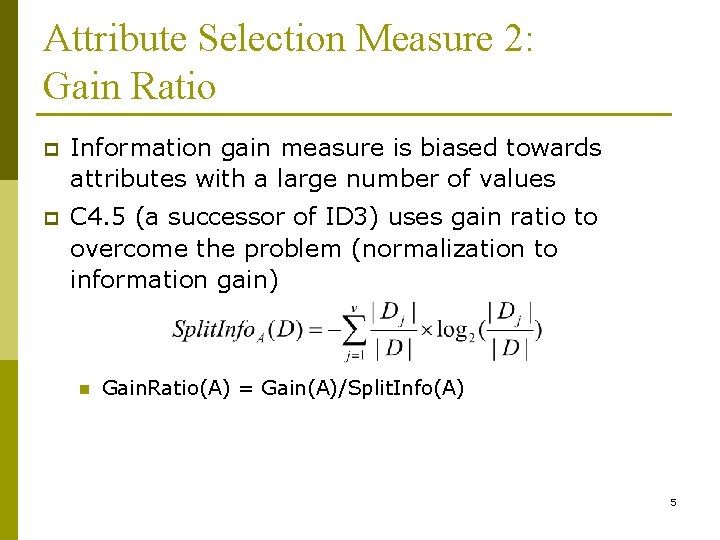

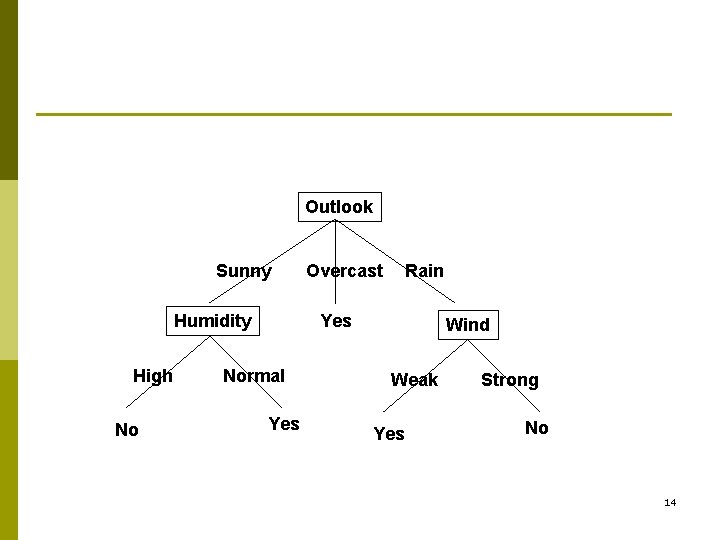

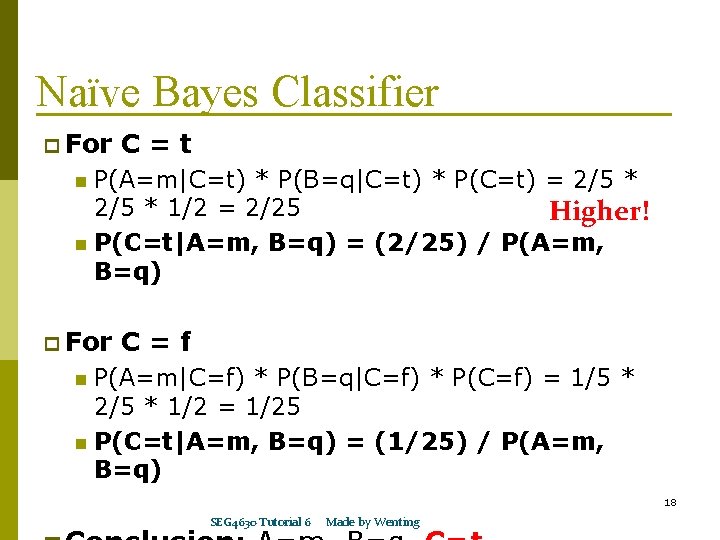

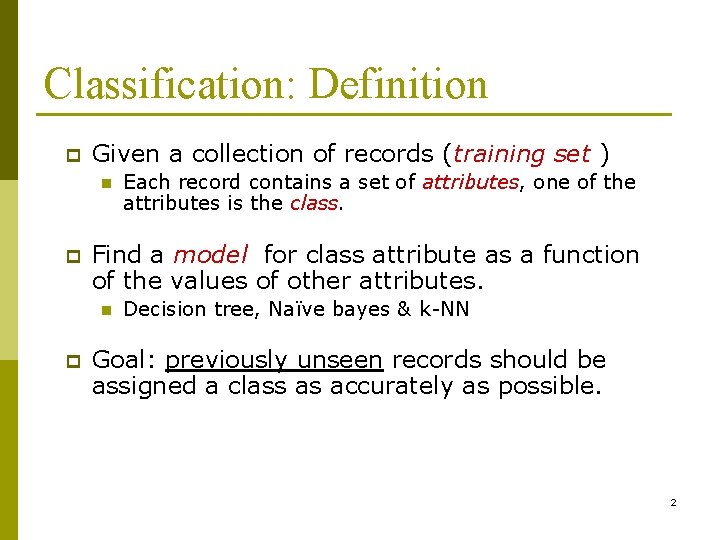

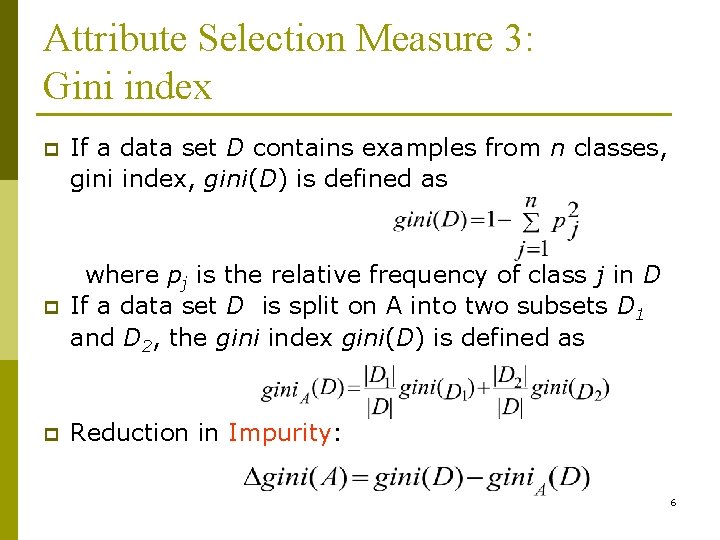

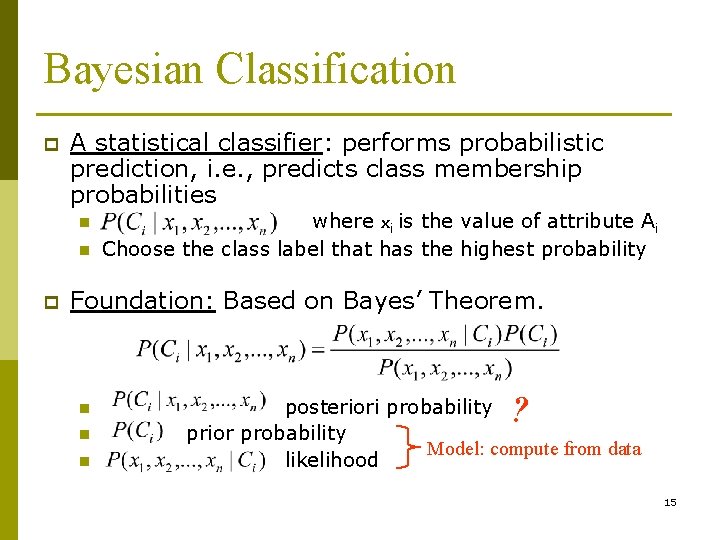

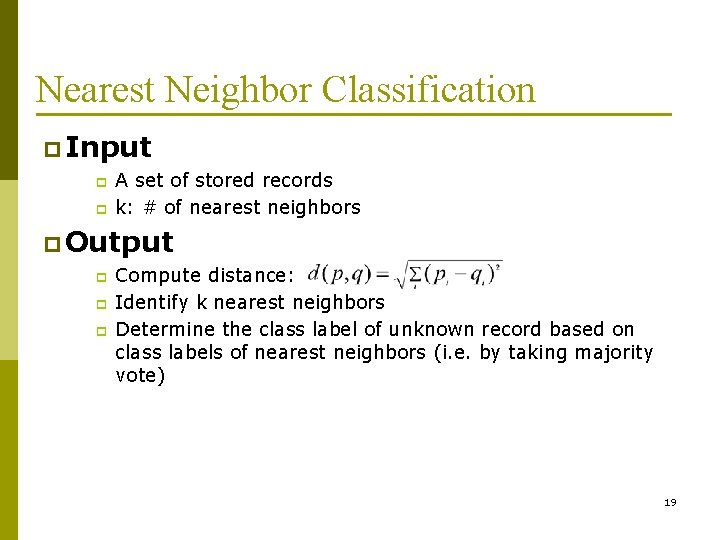

Tree induction example S[9+, 5 -] Outlook Sunny [2+, 3 -] Info(S) = -9/14(log 2(9/14))-5/14(log 2(5/14)) Overcast [4+, 0 -] = 0. 94 Rain [3+, 2 -] Gain(Outlook) = 0. 94 – 5/14[-2/5(log 2(2/5))-3/5(log 2(3/5))] – 4/14[-4/4(log 2(4/4))-0/4(log 2(0/4))] – 5/14[-3/5(log 2(3/5))-2/5(log 2(2/5))] = 0. 94 – 0. 69 = 0. 25 S[9+, 5 -] Temperature <15 [3+, 1 -] 15 -25 [5+, 1 -] >25 [2+, 2 -] Gain(Temperature) = 0. 94 – 4/14[-3/4(log 2(3/4))-1/4(log 2(1/4))] – 6/14[-5/6(log 2(5/6))-1/6(log 2(1/6))] – 4/14[-2/4(log 2(2/4))] = 0. 94 – 0. 80 = 0. 14 8

![High 3 4 S9 5 Humidity Normal 6 1 GainHumidity High [3+, 4 -] S[9+, 5 -] Humidity Normal [6+, 1 -] Gain(Humidity) =](https://slidetodoc.com/presentation_image_h/2be133c9852cccafba4cf20b274d4c58/image-9.jpg)

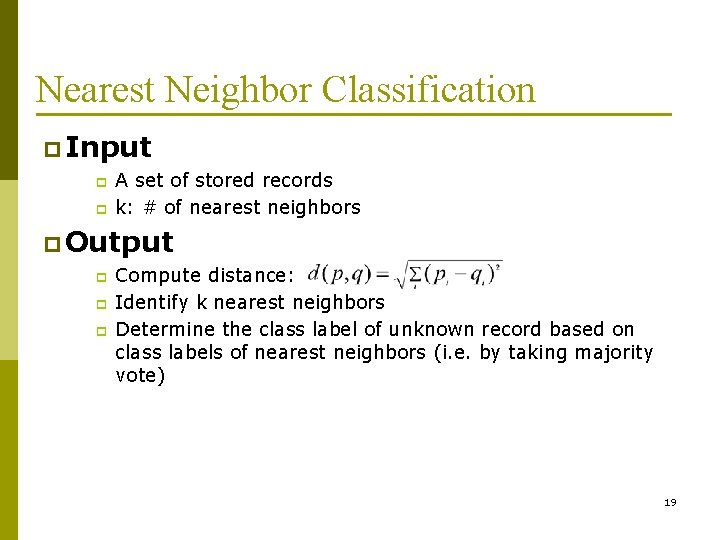

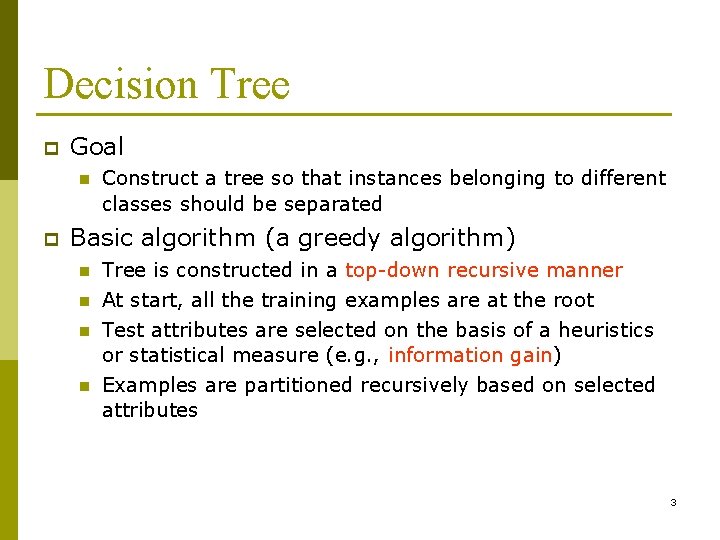

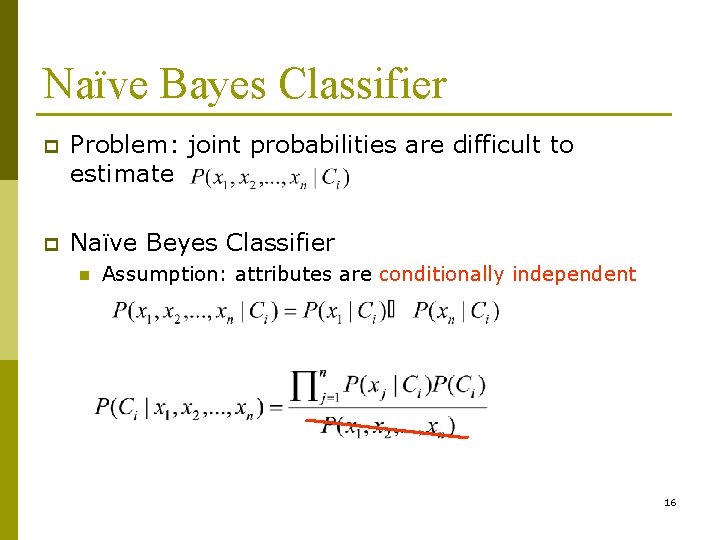

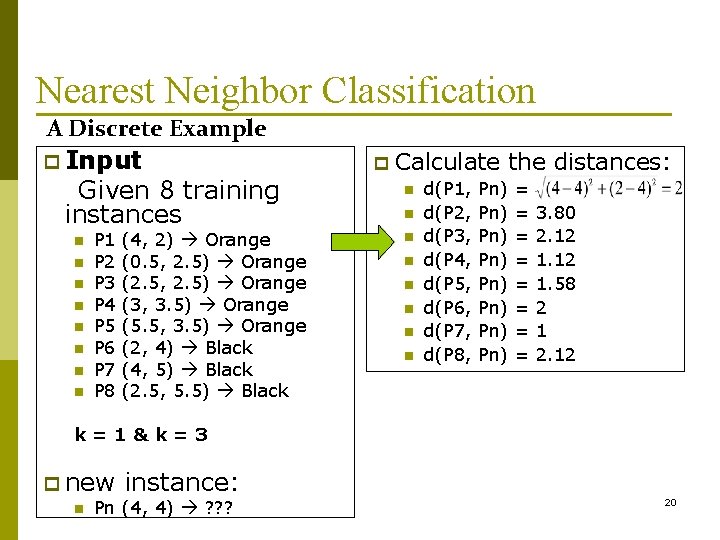

High [3+, 4 -] S[9+, 5 -] Humidity Normal [6+, 1 -] Gain(Humidity) = 0. 94 – 7/14[-3/7(log 2(3/7))-4/7(log 2(4/7))] – 7/14[-6/7(log 2(6/7))-1/7(log 2(1/7))] = 0. 94 – 0. 79 = 0. 15 Weak [6+, 2 -] S[9+, 5 -] Wind Strong [3+, 3 -] Gain(Wind) = 0. 94 – 8/14[-6/8(log 2(6/8))-2/8(log 2(2/8))] – 6/14[-3/6(log 2(3/6))] = 0. 94 – 0. 89 = 0. 05 9

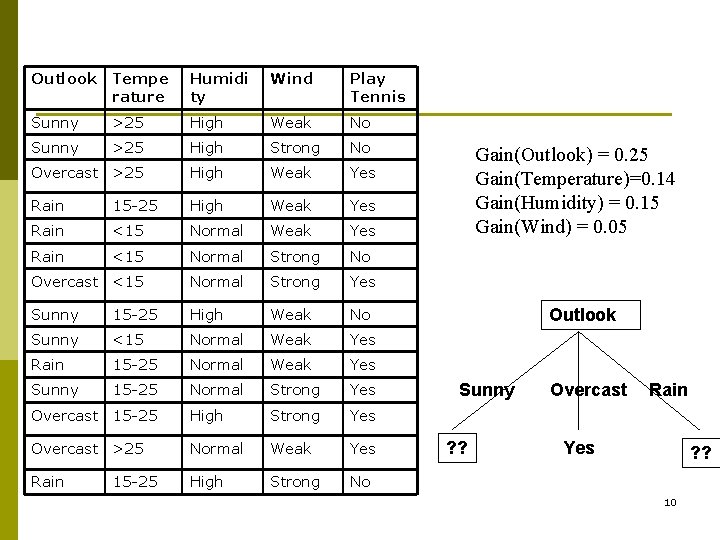

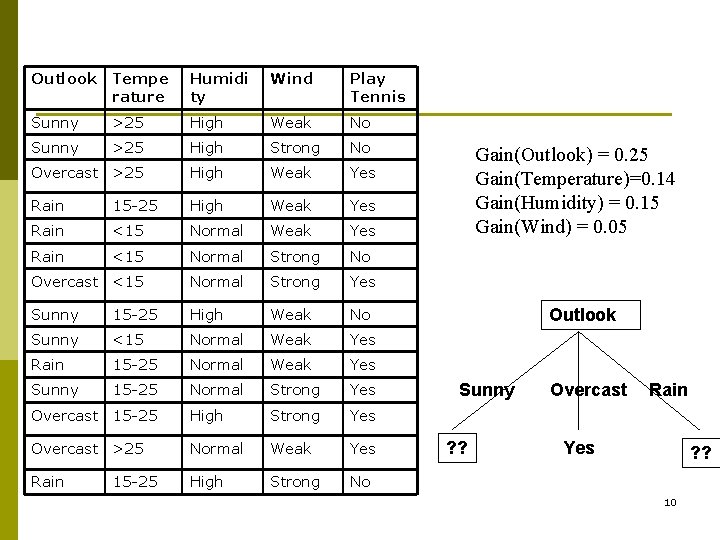

Outlook Tempe rature Humidi ty Wind Play Tennis Sunny >25 High Weak No Sunny >25 High Strong No Overcast >25 High Weak Yes Rain 15 -25 High Weak Yes Rain <15 Normal Strong No Overcast <15 Normal Strong Yes Sunny 15 -25 High Weak No Sunny <15 Normal Weak Yes Rain 15 -25 Normal Weak Yes Sunny 15 -25 Normal Strong Yes Overcast 15 -25 High Strong Yes Overcast >25 Normal Weak Yes Rain High Strong No 15 -25 Gain(Outlook) = 0. 25 Gain(Temperature)=0. 14 Gain(Humidity) = 0. 15 Gain(Wind) = 0. 05 Outlook Sunny ? ? Overcast Rain Yes ? ? 10
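The root-level comparison can be checked mechanically from the raw table rows. A minimal sketch, assuming the table is typed in as whitespace-separated lines and parsed into dicts keyed by hypothetical column names; `gain` computes Info(S) minus the weighted entropy of each branch and the attribute with the largest value is picked as the root.

```python
import math
from collections import Counter

COLS = ("Outlook", "Temperature", "Humidity", "Wind", "Play")
ROWS = """
Sunny >25 High Weak No
Sunny >25 High Strong No
Overcast >25 High Weak Yes
Rain 15-25 High Weak Yes
Rain <15 Normal Weak Yes
Rain <15 Normal Strong No
Overcast <15 Normal Strong Yes
Sunny 15-25 High Weak No
Sunny <15 Normal Weak Yes
Rain 15-25 Normal Weak Yes
Sunny 15-25 Normal Strong Yes
Overcast 15-25 High Strong Yes
Overcast >25 Normal Weak Yes
Rain 15-25 High Strong No
"""
data = [dict(zip(COLS, line.split())) for line in ROWS.strip().splitlines()]

def entropy(labels):
    n = len(labels)
    return -sum(c / n * math.log2(c / n) for c in Counter(labels).values())

def gain(rows, attr):
    """Info(S) minus the weighted entropy of the branches produced by `attr`."""
    total = entropy([r["Play"] for r in rows])
    for v in {r[attr] for r in rows}:
        part = [r["Play"] for r in rows if r[attr] == v]
        total -= len(part) / len(rows) * entropy(part)
    return total

gains = {a: gain(data, a) for a in COLS[:-1]}
best = max(gains, key=gains.get)
print({a: round(g, 2) for a, g in gains.items()})
# {'Outlook': 0.25, 'Temperature': 0.03, 'Humidity': 0.15, 'Wind': 0.05}
print(best)  # Outlook
```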

![Sunny branch: splitting on Temperature, Humidity and Wind](https://slidetodoc.com/presentation_image_h/2be133c9852cccafba4cf20b274d4c58/image-11.jpg)

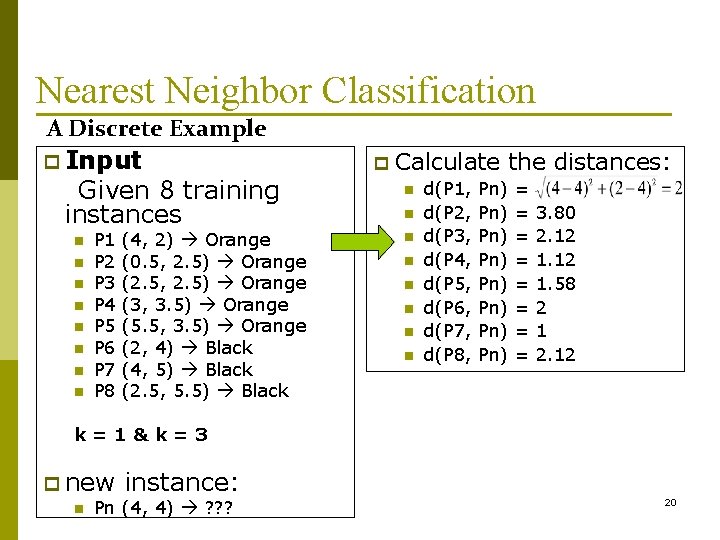

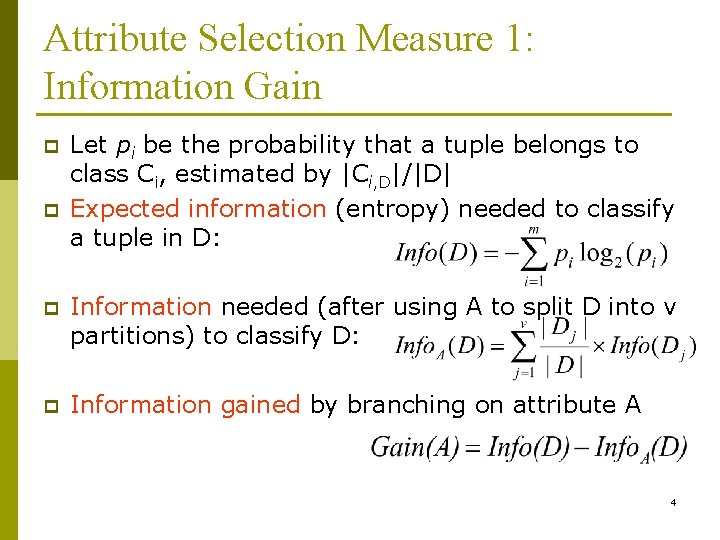

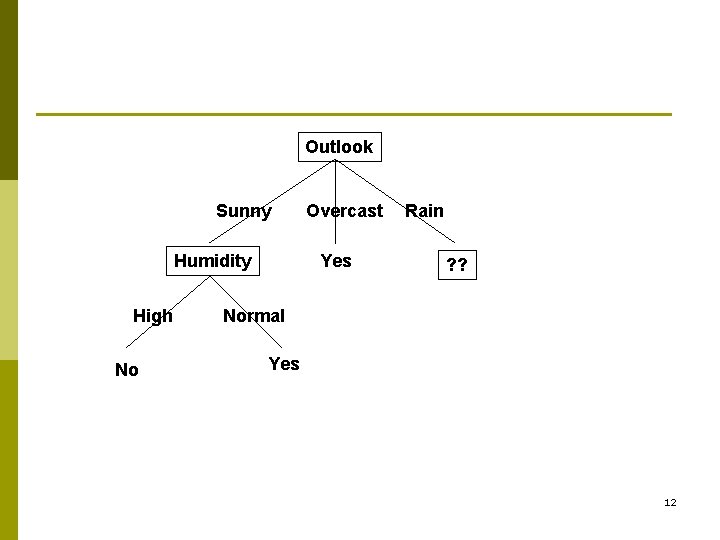

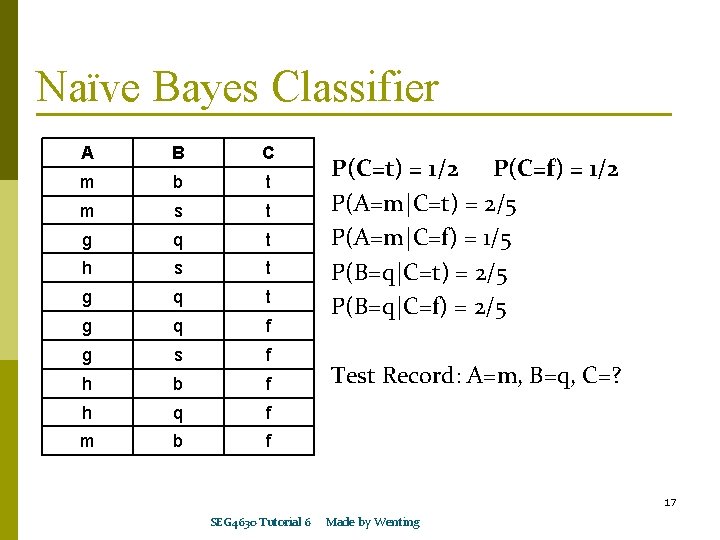

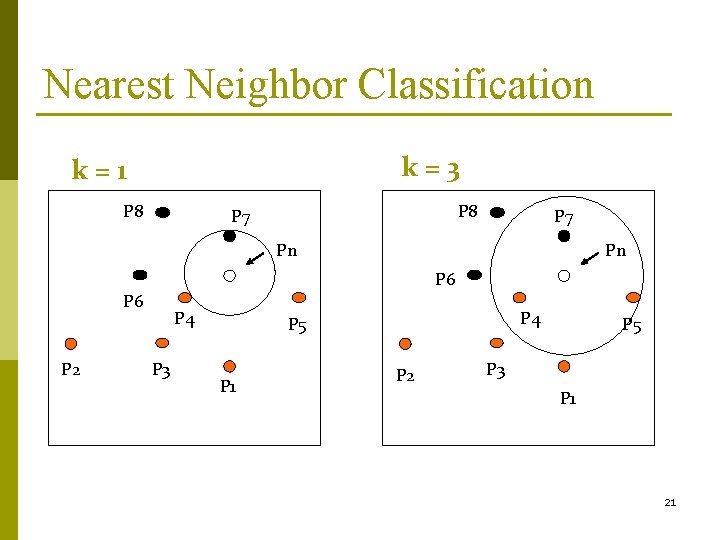

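Sunny branch: Sunny[2+, 3-]

$$\text{Info}(\text{Sunny}) = -\tfrac{2}{5}\log_2\tfrac{2}{5} - \tfrac{3}{5}\log_2\tfrac{3}{5} = 0.97$$

Temperature: <15 [1+, 0-], 15-25 [1+, 1-], >25 [0+, 2-]

$$\text{Gain}(\text{Temperature}) = 0.97 - \tfrac{1}{5}\left[-\tfrac{1}{1}\log_2\tfrac{1}{1} - \tfrac{0}{1}\log_2\tfrac{0}{1}\right] - \tfrac{2}{5}\left[-\tfrac{1}{2}\log_2\tfrac{1}{2} - \tfrac{1}{2}\log_2\tfrac{1}{2}\right] - \tfrac{2}{5}\left[-\tfrac{0}{2}\log_2\tfrac{0}{2} - \tfrac{2}{2}\log_2\tfrac{2}{2}\right] = 0.97 - 0.40 = 0.57$$

Humidity: High [0+, 3-], Normal [2+, 0-]

$$\text{Gain}(\text{Humidity}) = 0.97 - \tfrac{3}{5}\left[-\tfrac{0}{3}\log_2\tfrac{0}{3} - \tfrac{3}{3}\log_2\tfrac{3}{3}\right] - \tfrac{2}{5}\left[-\tfrac{2}{2}\log_2\tfrac{2}{2} - \tfrac{0}{2}\log_2\tfrac{0}{2}\right] = 0.97 - 0 = 0.97$$

Wind: Weak [1+, 2-], Strong [1+, 1-]

$$\text{Gain}(\text{Wind}) = 0.97 - \tfrac{3}{5}\left[-\tfrac{1}{3}\log_2\tfrac{1}{3} - \tfrac{2}{3}\log_2\tfrac{2}{3}\right] - \tfrac{2}{5}\left[-\tfrac{1}{2}\log_2\tfrac{1}{2} - \tfrac{1}{2}\log_2\tfrac{1}{2}\right] = 0.97 - 0.95 = 0.02$$

Humidity has the highest gain, so it is used to split the Sunny branch.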
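The same gain computation applies unchanged inside a branch: only the parent counts change from S[9+, 5-] to Sunny[2+, 3-]. A minimal sketch, assuming the per-branch counts are read off the slide (names illustrative):

```python
import math

def info(counts):
    n = sum(counts)
    return -sum(c / n * math.log2(c / n) for c in counts if c > 0)

def gain(parent, partitions):
    n = sum(parent)
    return info(parent) - sum(sum(p) / n * info(p) for p in partitions)

# Branch counts [p+, n-] inside the Sunny node Sunny[2+, 3-]
sunny = [2, 3]
splits = {
    "Temperature": [[1, 0], [1, 1], [0, 2]],  # <15, 15-25, >25
    "Humidity": [[0, 3], [2, 0]],              # High, Normal
    "Wind": [[1, 2], [1, 1]],                  # Weak, Strong
}
for attr, parts in splits.items():
    print(attr, round(gain(sunny, parts), 2))
# Temperature 0.57
# Humidity 0.97
# Wind 0.02
```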

Outlook
- Sunny → Humidity
  - High → No
  - Normal → Yes
- Overcast → Yes
- Rain → ?

![Rain branch: splitting on Temperature, Humidity and Wind](https://slidetodoc.com/presentation_image_h/2be133c9852cccafba4cf20b274d4c58/image-13.jpg)

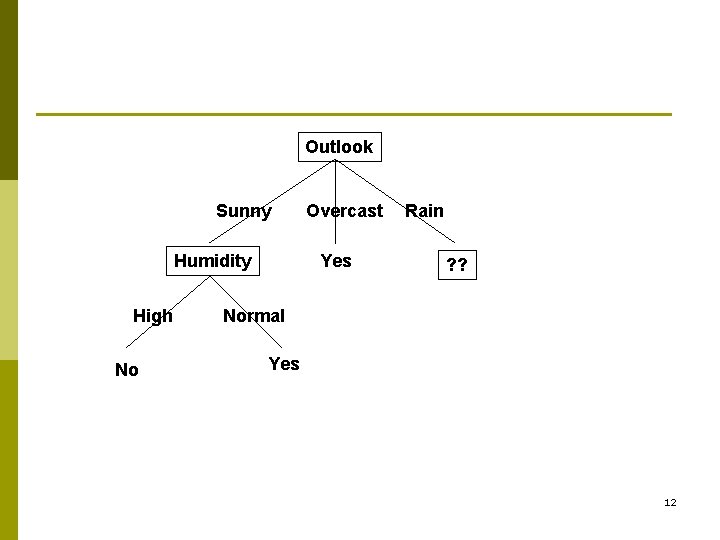

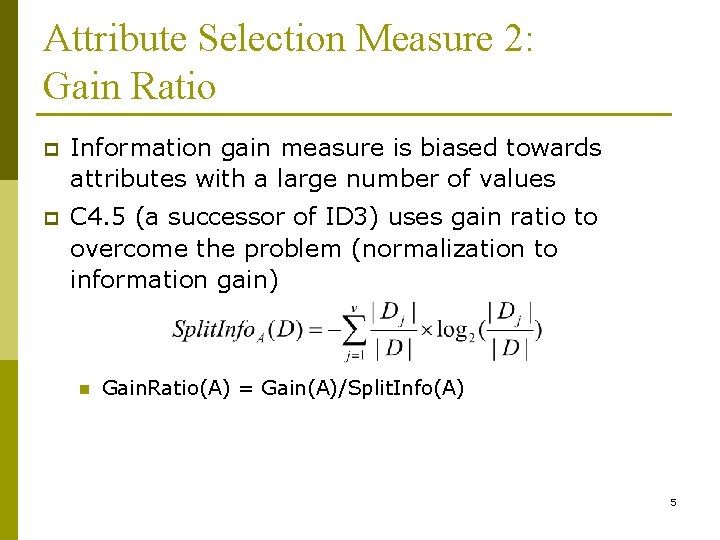

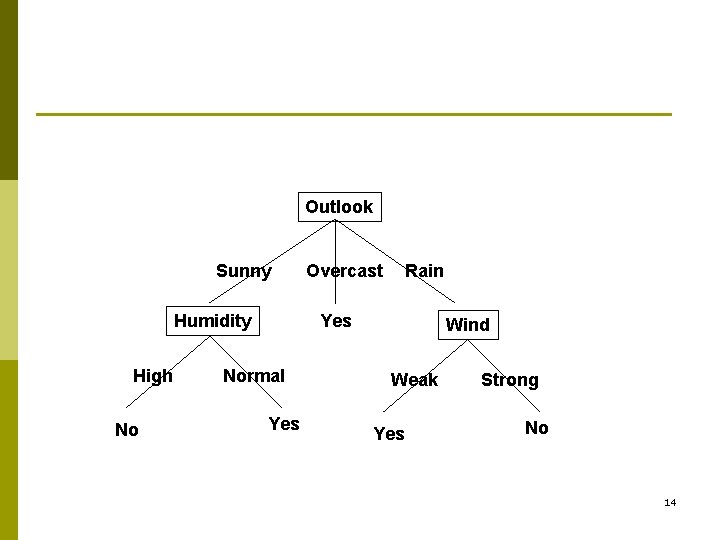

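Rain branch: Rain[3+, 2-]

$$\text{Info}(\text{Rain}) = -\tfrac{3}{5}\log_2\tfrac{3}{5} - \tfrac{2}{5}\log_2\tfrac{2}{5} = 0.97$$

Temperature: <15 [1+, 1-], 15-25 [2+, 1-], >25 [0+, 0-] (the empty >25 branch has weight 0/5 and contributes nothing)

$$\text{Gain}(\text{Temperature}) = 0.97 - \tfrac{2}{5}\left[-\tfrac{1}{2}\log_2\tfrac{1}{2} - \tfrac{1}{2}\log_2\tfrac{1}{2}\right] - \tfrac{3}{5}\left[-\tfrac{2}{3}\log_2\tfrac{2}{3} - \tfrac{1}{3}\log_2\tfrac{1}{3}\right] = 0.97 - 0.95 = 0.02$$

Humidity: High [1+, 1-], Normal [2+, 1-]

$$\text{Gain}(\text{Humidity}) = 0.97 - \tfrac{2}{5}\left[-\tfrac{1}{2}\log_2\tfrac{1}{2} - \tfrac{1}{2}\log_2\tfrac{1}{2}\right] - \tfrac{3}{5}\left[-\tfrac{2}{3}\log_2\tfrac{2}{3} - \tfrac{1}{3}\log_2\tfrac{1}{3}\right] = 0.97 - 0.95 = 0.02$$

Wind: Weak [3+, 0-], Strong [0+, 2-]

$$\text{Gain}(\text{Wind}) = 0.97 - \tfrac{3}{5}\left[-\tfrac{3}{3}\log_2\tfrac{3}{3} - \tfrac{0}{3}\log_2\tfrac{0}{3}\right] - \tfrac{2}{5}\left[-\tfrac{0}{2}\log_2\tfrac{0}{2} - \tfrac{2}{2}\log_2\tfrac{2}{2}\right] = 0.97 - 0 = 0.97$$

Wind has the highest gain, so it is used to split the Rain branch.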
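The Rain branch adds one wrinkle: the >25 partition is empty. A minimal sketch, assuming the counts are read off the slide (names illustrative); empty partitions are skipped, since their weight |D_j|/|D| is 0 and computing their entropy would divide by zero.

```python
import math

def info(counts):
    n = sum(counts)
    return -sum(c / n * math.log2(c / n) for c in counts if c > 0)

def gain(parent, partitions):
    n = sum(parent)
    # An empty branch (|D_j| = 0) has weight 0, so it is skipped instead of computing 0/0.
    return info(parent) - sum(sum(p) / n * info(p) for p in partitions if sum(p) > 0)

rain = [3, 2]  # Rain[3+, 2-]
splits = {
    "Temperature": [[1, 1], [2, 1], [0, 0]],  # <15, 15-25, >25 (empty)
    "Humidity": [[1, 1], [2, 1]],              # High, Normal
    "Wind": [[3, 0], [0, 2]],                  # Weak, Strong
}
gains = {a: gain(rain, p) for a, p in splits.items()}
best = max(gains, key=gains.get)
print({a: round(g, 2) for a, g in gains.items()})
# {'Temperature': 0.02, 'Humidity': 0.02, 'Wind': 0.97}
print(best)  # Wind
```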

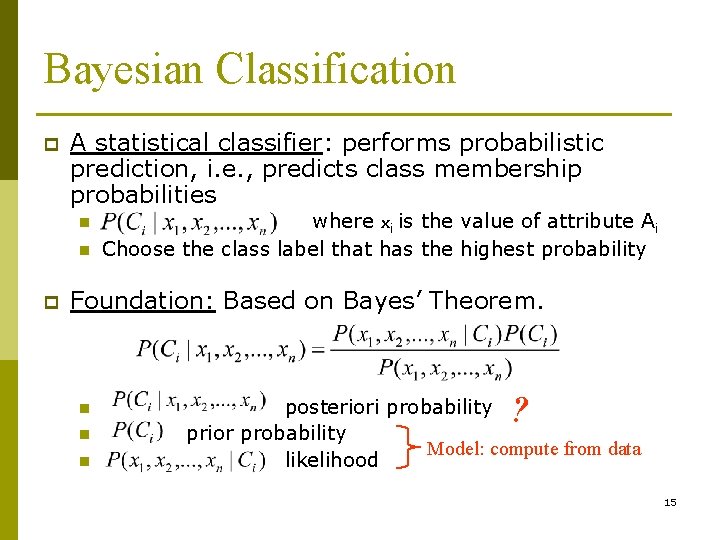

Final decision tree:

Outlook
- Sunny → Humidity
  - High → No
  - Normal → Yes
- Overcast → Yes
- Rain → Wind
  - Weak → Yes
  - Strong → No
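Once built, the tree classifies a new record by following one branch per test node until it reaches a leaf. A minimal sketch, assuming the tree above is stored as nested dicts `{attribute: {value: subtree or class label}}`; `TREE` and `classify` are illustrative names.

```python
# The final tree, as nested dicts: {attribute: {value: subtree or class label}}
TREE = {"Outlook": {
    "Sunny": {"Humidity": {"High": "No", "Normal": "Yes"}},
    "Overcast": "Yes",
    "Rain": {"Wind": {"Weak": "Yes", "Strong": "No"}},
}}

def classify(tree, record):
    """Follow the branch matching the record's value at each test node until a leaf."""
    while isinstance(tree, dict):
        attr, branches = next(iter(tree.items()))
        tree = branches[record[attr]]
    return tree

record = {"Outlook": "Rain", "Temperature": "<15", "Humidity": "Normal", "Wind": "Strong"}
print(classify(TREE, record))  # No
```

Only the attributes tested along the path are consulted (Temperature is never used). A value that never appeared at a node during training would raise `KeyError` here; a fuller implementation would fall back to, for example, the majority class at that node.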

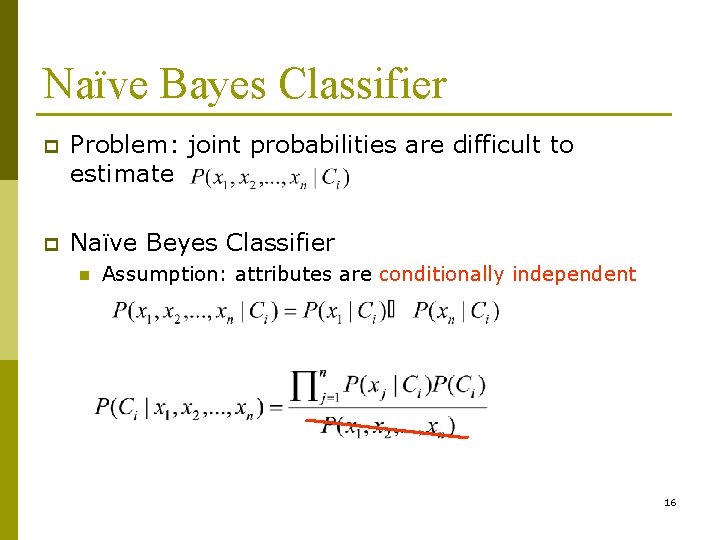

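Bayesian Classification
- A statistical classifier: performs probabilistic prediction, i.e., predicts class membership probabilities $P(C \mid x_1, \dots, x_n)$, where $x_i$ is the value of attribute $A_i$
  - Choose the class label that has the highest probability
- Foundation: based on Bayes' theorem
  $$P(C \mid x_1, \dots, x_n) = \frac{P(x_1, \dots, x_n \mid C)\, P(C)}{P(x_1, \dots, x_n)}$$
  - $P(C \mid x_1, \dots, x_n)$: posterior probability
  - $P(C)$: prior probability
  - $P(x_1, \dots, x_n \mid C)$: likelihood
  - Model: compute these quantities from the data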

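Naïve Bayes Classifier
- Problem: joint probabilities $P(x_1, \dots, x_n \mid C)$ are difficult to estimate
- Naïve Bayes classifier
  - Assumption: attributes are conditionally independent given the class, so
  $$P(x_1, \dots, x_n \mid C) = \prod_{i=1}^{n} P(x_i \mid C)$$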

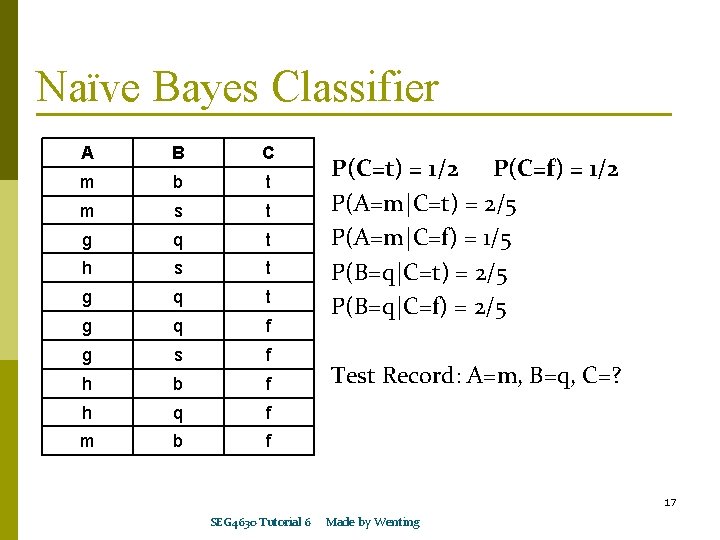

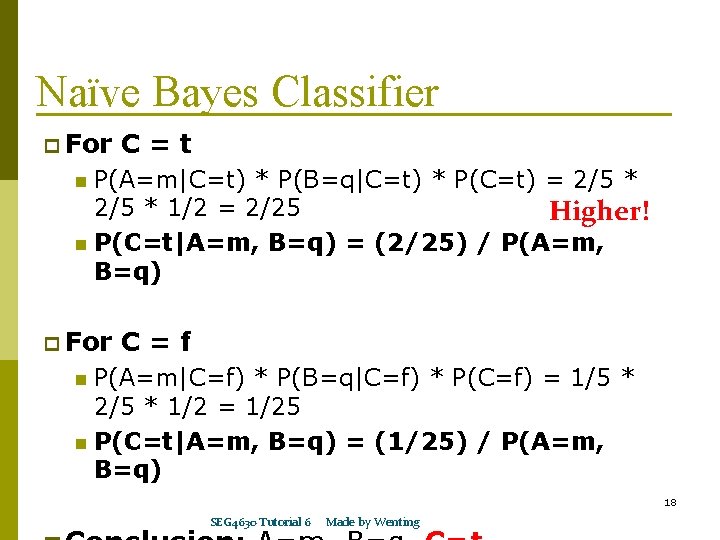

Naïve Bayes Classifier

| A | B | C |
|---|---|---|
| m | b | t |
| m | s | t |
| g | q | t |
| h | s | t |
| g | q | f |
| g | s | f |
| h | b | f |
| h | q | f |
| m | b | f |

- P(C=t) = 1/2, P(C=f) = 1/2
- P(A=m|C=t) = 2/5, P(A=m|C=f) = 1/5
- P(B=q|C=t) = 2/5, P(B=q|C=f) = 2/5
- Test record: A=m, B=q, C=?

(SEG 4630 Tutorial 6, made by Wenting)

Naïve Bayes Classifier
- For C=t
  - P(A=m|C=t) * P(B=q|C=t) * P(C=t) = 2/5 * 2/5 * 1/2 = 2/25 (higher)
  - P(C=t|A=m, B=q) = (2/25) / P(A=m, B=q)
- For C=f
  - P(A=m|C=f) * P(B=q|C=f) * P(C=f) = 1/5 * 2/5 * 1/2 = 1/25
  - P(C=f|A=m, B=q) = (1/25) / P(A=m, B=q)
- The denominator P(A=m, B=q) is the same for both classes, so the larger numerator decides: the test record is classified as C=t.
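The calculation above can be reproduced with exact fractions. A minimal sketch that takes the priors and conditional probabilities exactly as stated on the slide (it does not re-estimate them from the table); `naive_bayes_scores` is an illustrative name. Normalizing the two scores by their sum gives the posteriors, under the assumption that t and f are the only classes.

```python
from fractions import Fraction as F

# Probabilities as given on the slide: priors and per-attribute likelihoods
prior = {"t": F(1, 2), "f": F(1, 2)}
likelihood = {
    "t": {("A", "m"): F(2, 5), ("B", "q"): F(2, 5)},
    "f": {("A", "m"): F(1, 5), ("B", "q"): F(2, 5)},
}

def naive_bayes_scores(record):
    """Unnormalised P(C) * prod_i P(x_i | C) for each class C."""
    scores = {}
    for c in prior:
        s = prior[c]
        for attr_value in record.items():  # e.g. ("A", "m")
            s *= likelihood[c][attr_value]
        scores[c] = s
    return scores

scores = naive_bayes_scores({"A": "m", "B": "q"})
evidence = sum(scores.values())  # P(A=m, B=q) under the model
posterior = {c: s / evidence for c, s in scores.items()}
print(scores)     # {'t': Fraction(2, 25), 'f': Fraction(1, 25)}
print(posterior)  # {'t': Fraction(2, 3), 'f': Fraction(1, 3)}
print(max(scores, key=scores.get))  # t
```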

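Nearest Neighbor Classification
- Input
  - A set of stored records
  - k: the number of nearest neighbors
- Procedure
  - Compute the distance from the unknown record to each stored record, e.g. the Euclidean distance $d(p, q) = \sqrt{\sum_i (p_i - q_i)^2}$
  - Identify the k nearest neighbors
  - Determine the class label of the unknown record from the class labels of its nearest neighbors (e.g., by taking a majority vote)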

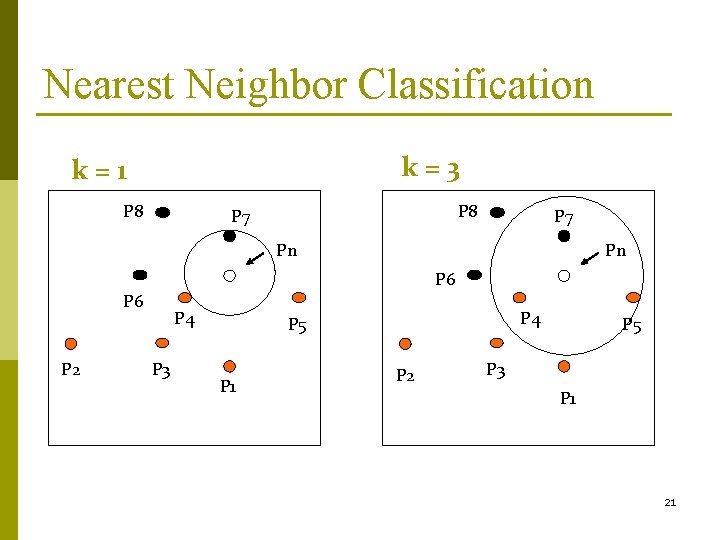

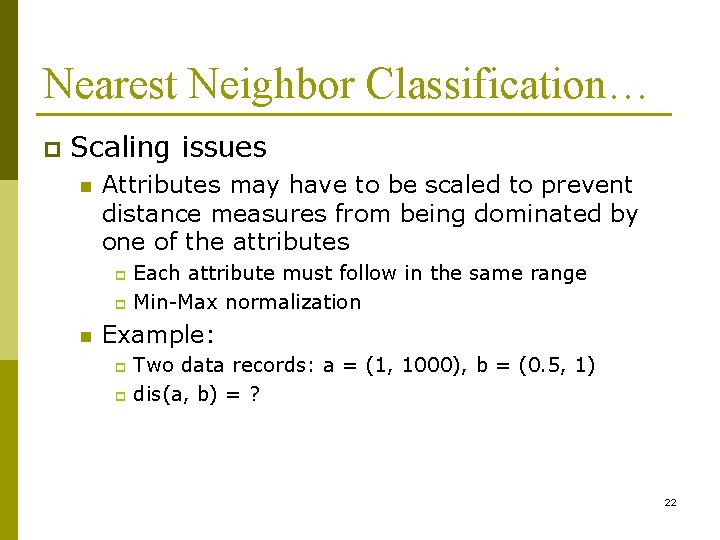

Nearest Neighbor Classification: A Discrete Example
- Input: 8 training instances
  - P1 (4, 2) Orange
  - P2 (0.5, 2.5) Orange
  - P3 (2.5, 2.5) Orange
  - P4 (3, 3.5) Orange
  - P5 (5.5, 3.5) Orange
  - P6 (2, 4) Black
  - P7 (4, 5) Black
  - P8 (2.5, 5.5) Black
- New instance: Pn (4, 4), class = ?
- Calculate the distances:
  - d(P1, Pn) = 2
  - d(P2, Pn) = 3.81
  - d(P3, Pn) = 2.12
  - d(P4, Pn) = 1.12
  - d(P5, Pn) = 1.58
  - d(P6, Pn) = 2
  - d(P7, Pn) = 1
  - d(P8, Pn) = 2.12
- k = 1: the nearest neighbor is P7 (Black), so Pn is classified as Black
- k = 3: the nearest neighbors are P7 (Black), P4 (Orange) and P5 (Orange), so the majority vote gives Orange
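The example can be reproduced with a few lines of Python. A minimal sketch, assuming Euclidean distance (`math.dist`, Python 3.8+) and a plain majority vote; `TRAIN` and `knn` are illustrative names, and ties in the vote are broken by whichever label `Counter.most_common` lists first.

```python
import math
from collections import Counter

# Training instances from the slide: name -> ((x, y), class label)
TRAIN = {
    "P1": ((4, 2), "Orange"), "P2": ((0.5, 2.5), "Orange"),
    "P3": ((2.5, 2.5), "Orange"), "P4": ((3, 3.5), "Orange"),
    "P5": ((5.5, 3.5), "Orange"), "P6": ((2, 4), "Black"),
    "P7": ((4, 5), "Black"), "P8": ((2.5, 5.5), "Black"),
}

def knn(query, k):
    """Majority vote among the k training points closest to `query` (Euclidean)."""
    ranked = sorted(TRAIN.values(), key=lambda item: math.dist(query, item[0]))
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]

pn = (4, 4)
print({name: round(math.dist(pn, p), 2) for name, (p, _) in TRAIN.items()})
# {'P1': 2.0, 'P2': 3.81, 'P3': 2.12, 'P4': 1.12, 'P5': 1.58, 'P6': 2.0, 'P7': 1.0, 'P8': 2.12}
print(knn(pn, 1), knn(pn, 3))  # Black Orange
```

No model is built in advance: all the work happens at prediction time, when every stored record is compared with the query.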

Nearest Neighbor Classification (figure: Pn among the training points P1 to P8, with its k = 1 and k = 3 neighborhoods)

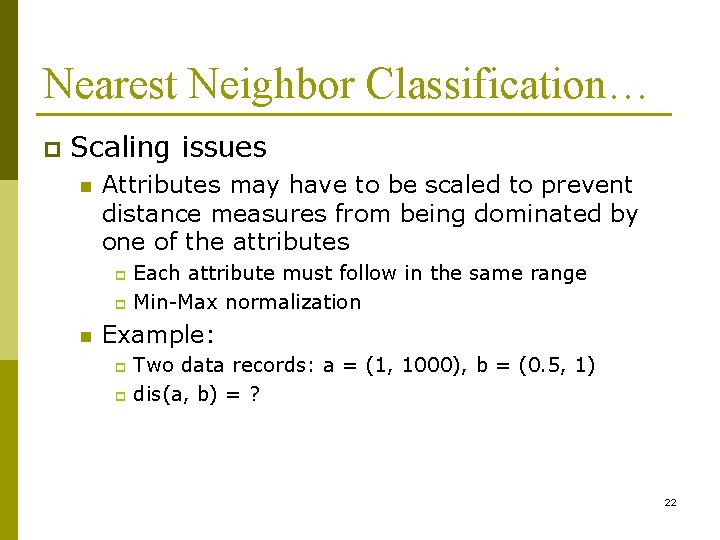

Nearest Neighbor Classification…
- Scaling issues
  - Attributes may have to be scaled to prevent distance measures from being dominated by one of the attributes
  - Each attribute should lie in the same range
- Min-Max normalization, e.g. to the range [0, 1]:
  $$v' = \frac{v - \min_A}{\max_A - \min_A}$$
- Example: two data records a = (1, 1000) and b = (0.5, 1)
  - dis(a, b) = ?
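The scaling problem is easy to see numerically. A minimal sketch, assuming Euclidean distance and min-max normalization to [0, 1]; for illustration the minimum and maximum of each attribute are taken from the two records a and b alone (in practice they would come from the whole training set). `min_max` is an illustrative name.

```python
import math

def min_max(records):
    """Rescale each attribute to [0, 1]: v' = (v - min_A) / (max_A - min_A)."""
    cols = list(zip(*records))
    lo = [min(c) for c in cols]
    hi = [max(c) for c in cols]
    # A constant attribute (max == min) is mapped to 0.0 to avoid dividing by zero.
    return [tuple((v - l) / (h - l) if h > l else 0.0 for v, l, h in zip(r, lo, hi))
            for r in records]

a, b = (1, 1000), (0.5, 1)
print(math.dist(a, b))  # about 999.0: almost entirely from the second attribute
na, nb = min_max([a, b])
print(na, nb)           # (1.0, 1.0) (0.0, 0.0)
print(math.dist(na, nb))  # sqrt(2): both attributes now contribute equally
```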

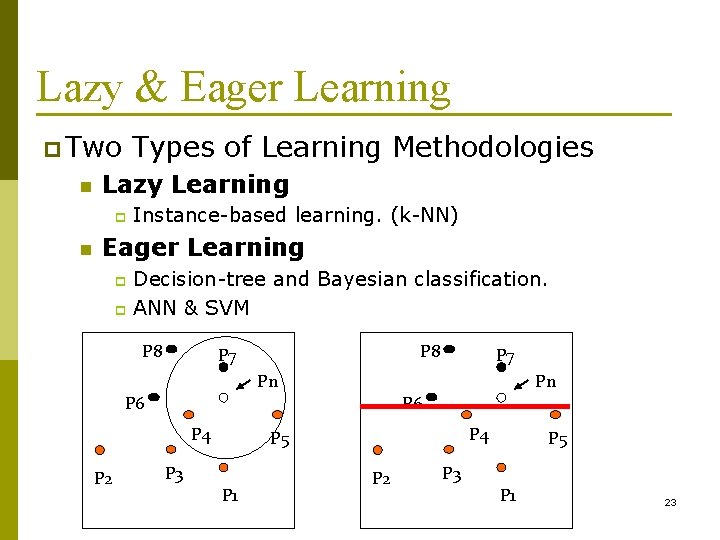

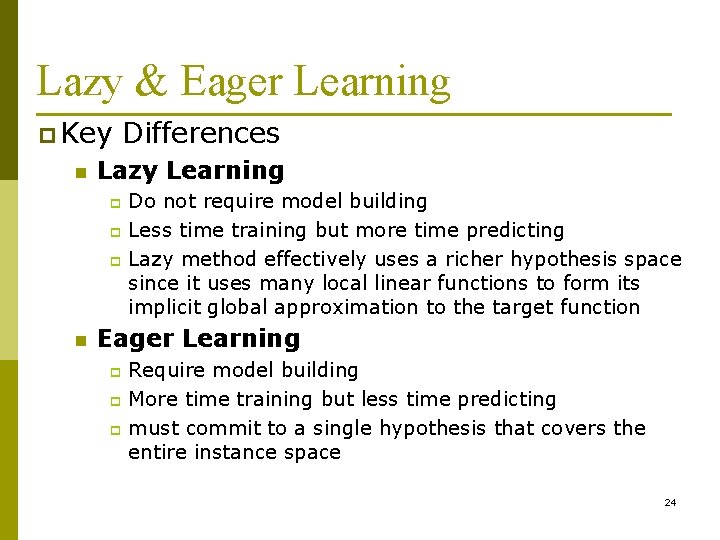

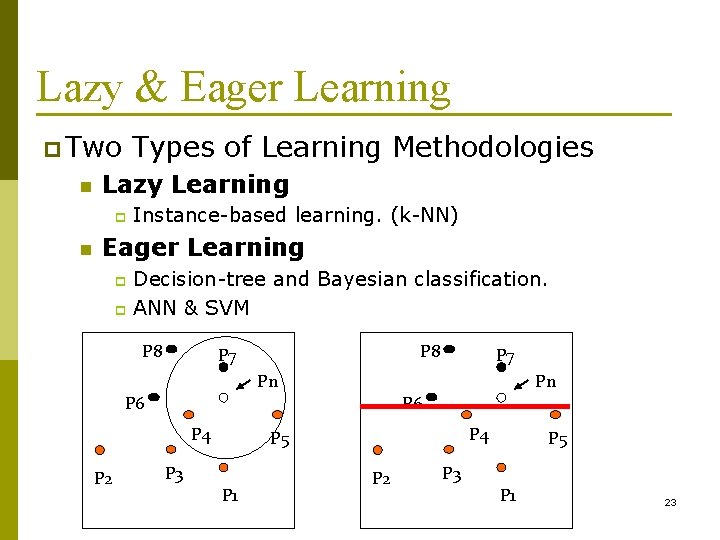

Lazy & Eager Learning
- Two types of learning methodologies
  - Lazy learning
    - Instance-based learning (k-NN)
  - Eager learning
    - Decision-tree and Bayesian classification
    - ANN & SVM

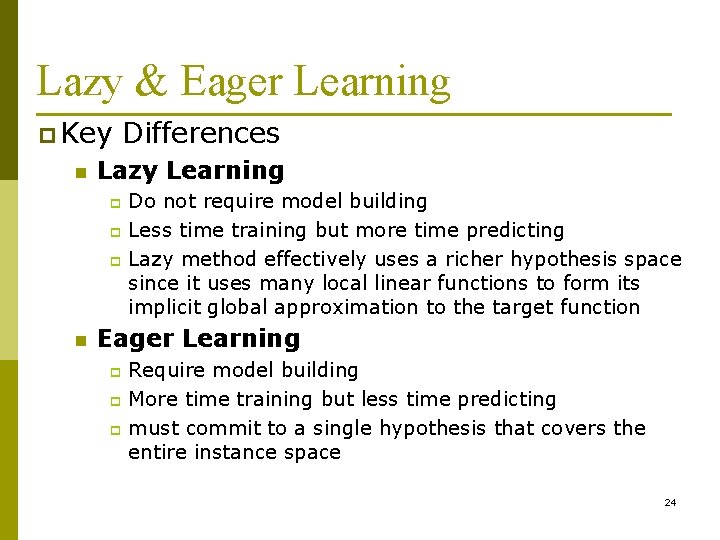

Lazy & Eager Learning
- Key differences
  - Lazy learning
    - Does not require model building
    - Less time training but more time predicting
    - Effectively uses a richer hypothesis space, since it uses many local linear functions to form its implicit global approximation to the target function
  - Eager learning
    - Requires model building
    - More time training but less time predicting
    - Must commit to a single hypothesis that covers the entire instance space