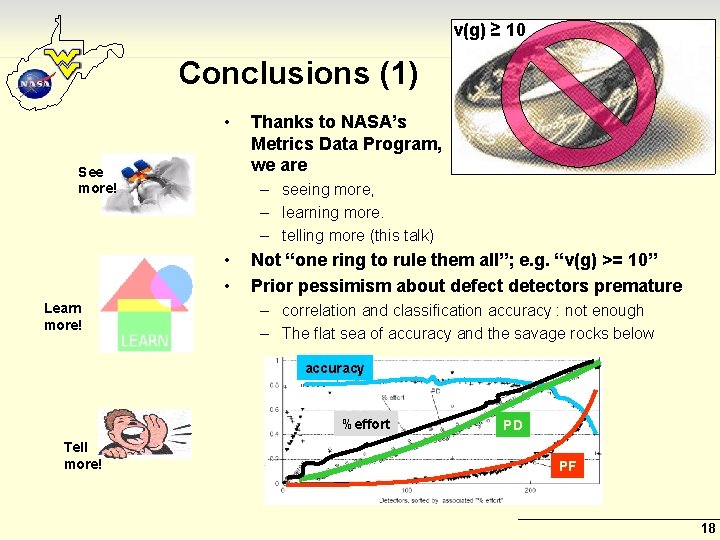

See More Learn More Tell More Tim Menzies

- Slides: 27

See More, Learn More, Tell More

Tim Menzies, West Virginia University, tim@menzies.us

Organizations: Galaxy Global, NASA IV&V, WVU, JPL, DN American

People: Robert (Mike) Chapman, Justin Di Stefano, Kenneth McGill, Pat Callis, Kareem Ammar, Allen Nikora, John Davis

Research Heaven, West Virginia

What’s unique about OSMA research?

- See more!
- Learn more!
- Tell more!
- Important: transition to the broader NASA software community

Show me the data! (not the money!)

- Old dialogue: “v(G) > 10 is a good thing”
- New dialogue:
  - A core method in my analysis was M1
  - I have compared M1 to M2
    - on NASA-related data
    - using criteria C1
  - I argue for the merits of C1 as follows
    - possible via a discussion on C2, C3, …
  - I endorse/reject M1 because of that comparison

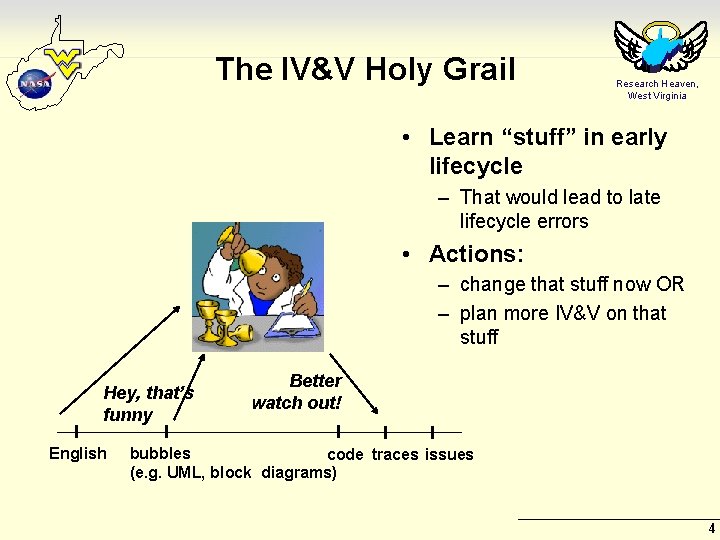

The IV&V Holy Grail

- Learn “stuff” in early lifecycle
  - that would lead to late lifecycle errors
- Actions:
  - change that stuff now, OR
  - plan more IV&V on that stuff

[Figure labels: English, bubbles (e.g. UML, block diagrams), code, traces, issues; callouts “Hey, that’s funny” and “Better watch out!”]

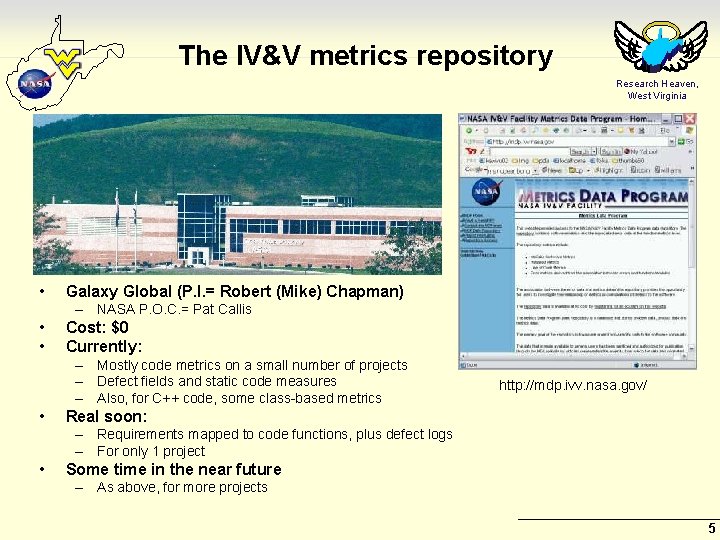

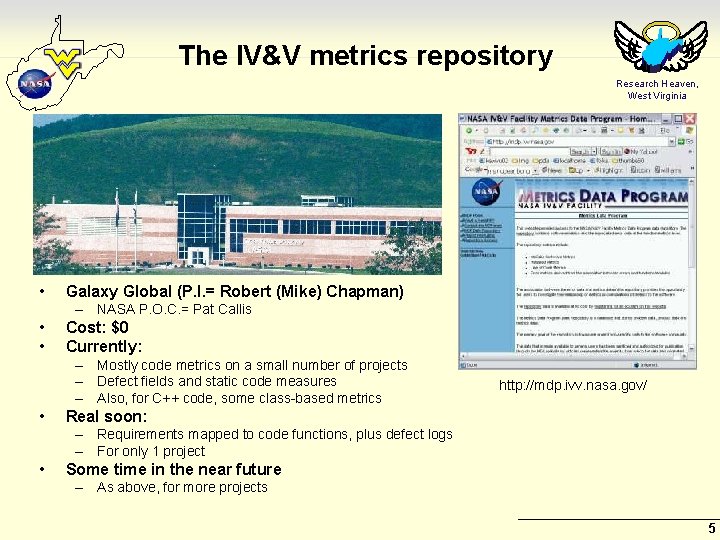

The IV&V metrics repository

- Galaxy Global (P.I. = Robert (Mike) Chapman)
  - NASA P.O.C. = Pat Callis
- Cost: $0
- http://mdp.ivv.nasa.gov/
- Currently:
  - Mostly code metrics on a small number of projects
  - Defect fields and static code measures
  - Also, for C++ code, some class-based metrics
- Real soon:
  - Requirements mapped to code functions, plus defect logs
  - For only 1 project
- Some time in the near future:
  - As above, for more projects

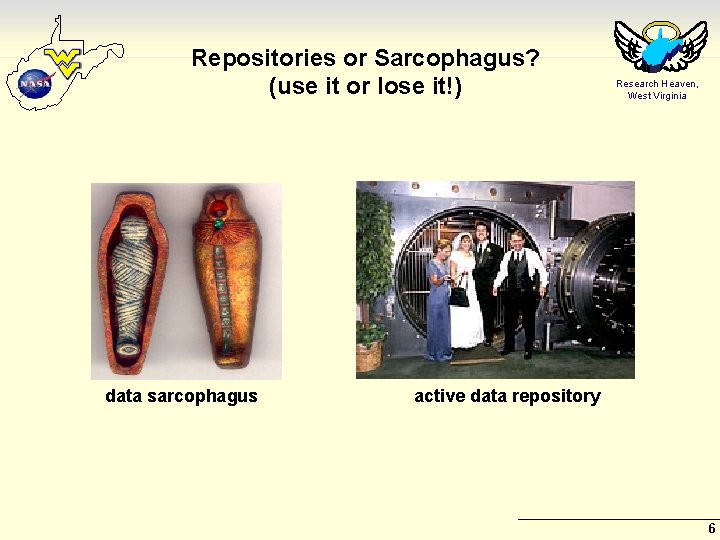

Repositories or Sarcophagus? (Use it or lose it!)

[Figure: a data sarcophagus versus an active data repository]

Who’s using MDP data?

- Ammar, Kareem
- Callis, Pat
- Chapman, Mike
- Cukic, Bojan
- Davis, John
- Di Stefano, Justin
- Goa, Lan
- McGill, Kenneth
- Menzies, Tim
- Dekhtyar, Alex
- Hayes, Jane
- Merritt, Phillip
- Nikora, Allen
- Orrego, Andres
- Wallace, Dolores
- Wilson, Aaron

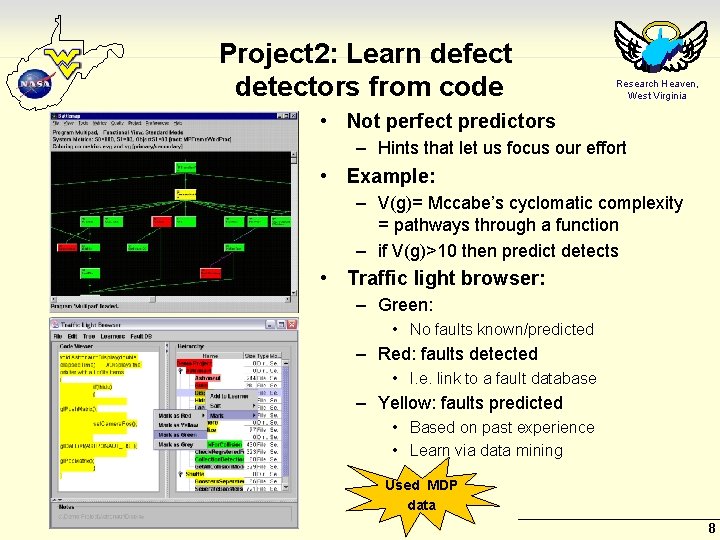

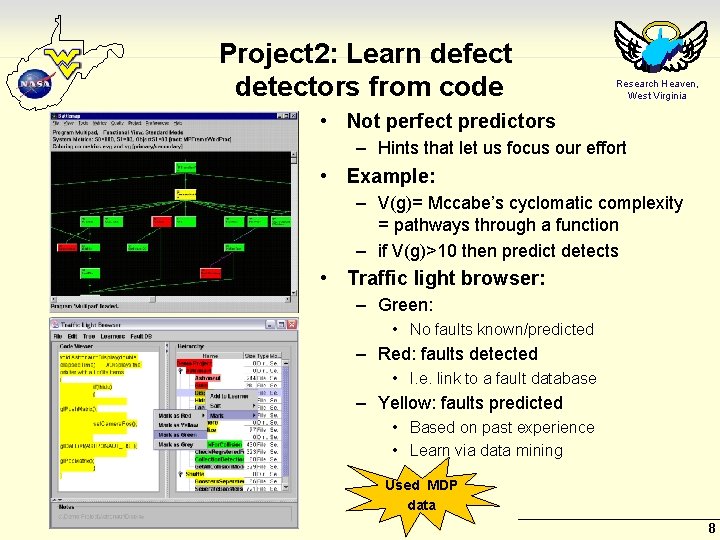

Project 2: Learn defect detectors from code

- Not perfect predictors
  - Hints that let us focus our effort
- Example:
  - v(g) = McCabe’s cyclomatic complexity = pathways through a function
  - if v(g) > 10 then predict defects
- Traffic light browser:
  - Green: no faults known/predicted
  - Red: faults detected
    - i.e. link to a fault database
  - Yellow: faults predicted
    - based on past experience
    - learn via data mining

Used MDP data
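The traffic-light idea can be sketched in a few lines. This is only an illustration: the `Light` enum, the function name, and the rule that known faults take priority over predicted ones are assumptions, and the `v(g) > 10` rule stands in for whatever detector a data miner would actually learn.

```python
from enum import Enum

class Light(Enum):
    GREEN = "no faults known/predicted"
    YELLOW = "faults predicted"
    RED = "faults detected"

def traffic_light(vg: float, known_faults: int, threshold: float = 10.0) -> Light:
    """Colour one function for the browser.

    known_faults: number of entries for this function in a fault database
    (hypothetical input). vg: McCabe's cyclomatic complexity.
    """
    if known_faults > 0:
        return Light.RED       # faults already detected
    if vg > threshold:
        return Light.YELLOW    # the detector predicts faults
    return Light.GREEN

print(traffic_light(vg=12, known_faults=0))
```

A learned detector would replace the `vg > threshold` test; the colouring logic stays the same.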

Static code metrics for defect detection = a very bad idea?

- Better idea:
  - model-based methods to study deep semantics of this code
  - e.g. Heimdahl, Menzies, Owen, et al.
  - e.g. Owen, Menzies
- High cost of model-based methods
- How about cheaper alternatives?

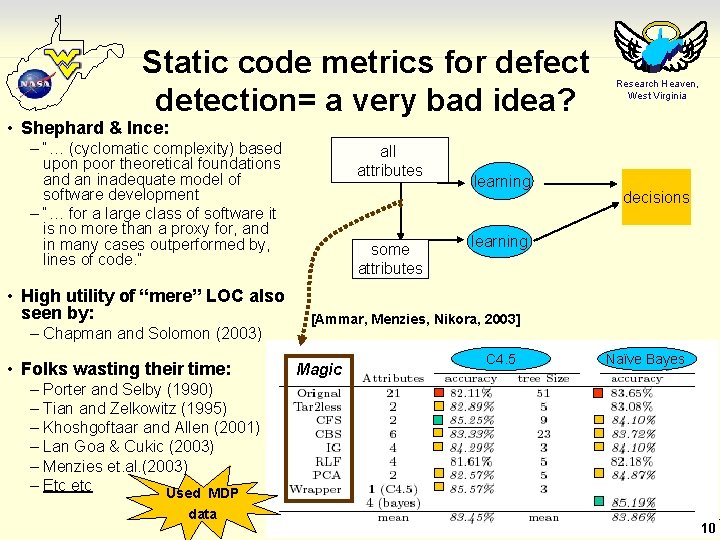

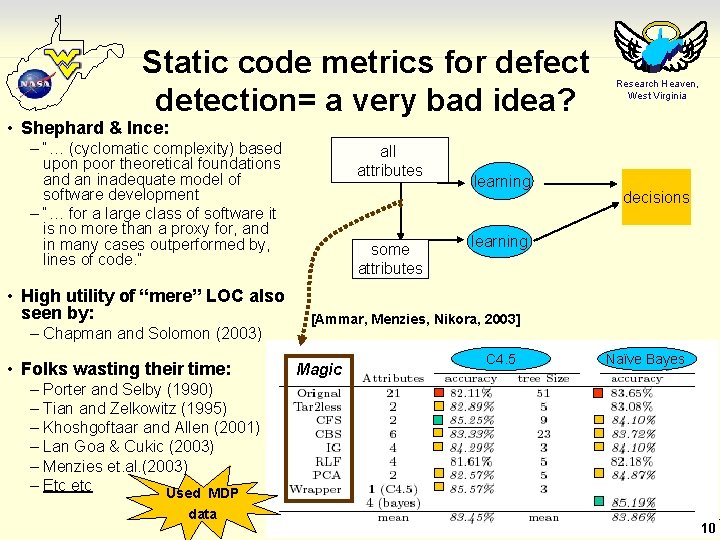

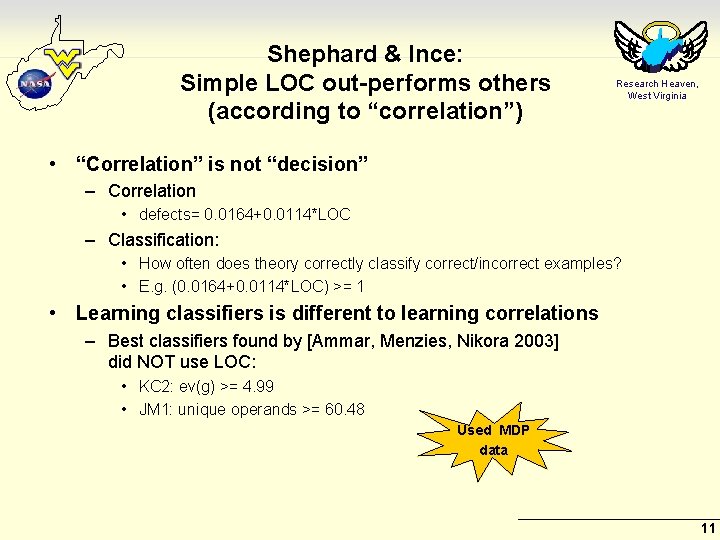

Static code metrics for defect detection = a very bad idea?

- Shepperd & Ince:
  - “… (cyclomatic complexity) based upon poor theoretical foundations and an inadequate model of software development”
  - “… for a large class of software it is no more than a proxy for, and in many cases outperformed by, lines of code.”
- High utility of “mere” LOC also seen by:
  - Chapman and Solomon (2003)
- Folks wasting their time:
  - Porter and Selby (1990)
  - Tian and Zelkowitz (1995)
  - Khoshgoftaar and Allen (2001)
  - Lan Goa & Cukic (2003)
  - Menzies et al. (2003)
  - etc.

[Figure labels: all attributes, “Magic”, some attributes, learning (C4.5, Naïve Bayes), decisions; cited as [Ammar, Menzies, Nikora, 2003]]

Used MDP data

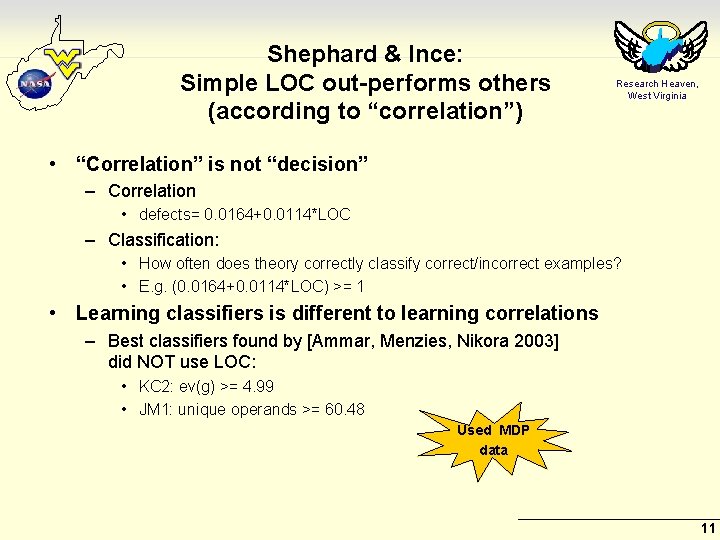

Shepperd & Ince: simple LOC out-performs others (according to “correlation”)

- “Correlation” is not “decision”
  - Correlation:
    - defects = 0.0164 + 0.0114*LOC
  - Classification:
    - How often does the theory correctly classify correct/incorrect examples?
    - E.g. (0.0164 + 0.0114*LOC) >= 1
- Learning classifiers is different to learning correlations
  - Best classifiers found by [Ammar, Menzies, Nikora 2003] did NOT use LOC:
    - KC2: ev(g) >= 4.99
    - JM1: unique operands >= 60.48

Used MDP data
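To make the correlation/classification distinction concrete, here is a small sketch that turns the regression line from the slide into a yes/no detector. The coefficients and the `>= 1` cut-off come from the slide; the function names are illustrative.

```python
INTERCEPT = 0.0164   # from the slide
SLOPE = 0.0114       # from the slide

def predicted_defects(loc: float) -> float:
    """Regression view: a numeric estimate of defects for a module."""
    return INTERCEPT + SLOPE * loc

def predicts_defect(loc: float, cutoff: float = 1.0) -> bool:
    """Classification view: threshold the regression output."""
    return predicted_defects(loc) >= cutoff

# The cut-off on the regression output is equivalent to a cut-off on LOC.
loc_threshold = (1.0 - INTERCEPT) / SLOPE
print(f"modules with LOC >= {loc_threshold:.2f} are flagged")
print(predicts_defect(86), predicts_defect(87))
```

A regression can fit well and still classify poorly, because the classifier's quality depends only on which side of the cut-off each module falls.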

![[Ammar, Menzies, Nikora 2003] Astonishingly few metrics required to generate accurate defect detectors](https://slidetodoc.com/presentation_image/c96fb0e7dd76ae4ae6645479e95ab066/image-12.jpg)

[Ammar, Menzies, Nikora 2003] Astonishingly few metrics required to generate accurate defect detectors

- Accuracy, like correlation, can miss vital features
  - Same accuracy/correlations
  - Different detection, false alarm rates
- Detector 1 (LSR: loc): 0.0164 + 0.0114*LOC
  - correlation(0.0164 + 0.0114*LOC) = 0.66%
  - classification((0.0164 + 0.0114*LOC) > 1) = 80%
- Detector 2 (LSR: McCabes): 0.0216 + 0.0954*v(g) - 0.109*ev(g) + 0.0598*iv(g)
- Detector 3 (LSR: Halstead): 0.00892 - 0.00432*uniqOp + 0.0147*uniqOpnd - 0.01*totalOp + 0.0225*totalOpnd

| Detected \ Truth | no | yes |
|---|---|---|
| no | A, locA | B, locB |
| yes | C, locC | D, locD |

- Accuracy = (A+D)/(A+B+C+D)
- False alarm = PF = C/(A+C)
- Got it right = PD = D/(B+D)
- %effort = (locC+locD) / (locA + locB + locC + locD)

Used MDP data
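The four measures defined on this slide can be sketched as follows, assuming each cell of the truth/detected table carries a module count and a LOC total. The `Cell` type, the function name, and the sample counts are illustrative and are not taken from the MDP data.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Cell:
    """One cell of the truth/detected table: module count and total LOC."""
    modules: int
    loc: int

def detector_measures(a: Cell, b: Cell, c: Cell, d: Cell) -> dict:
    """a: detected=no, truth=no    b: detected=no, truth=yes
    c: detected=yes, truth=no   d: detected=yes, truth=yes"""
    total = a.modules + b.modules + c.modules + d.modules
    total_loc = a.loc + b.loc + c.loc + d.loc
    return {
        "accuracy": (a.modules + d.modules) / total,
        "pf": c.modules / (a.modules + c.modules),   # false alarm rate
        "pd": d.modules / (b.modules + d.modules),   # probability of detection
        "effort": (c.loc + d.loc) / total_loc,       # share of LOC flagged for inspection
    }

# Hypothetical counts, for illustration only.
measures = detector_measures(Cell(70, 7000), Cell(10, 2000), Cell(5, 1500), Cell(15, 4500))
print(measures)
```

Two detectors can share the same accuracy while differing widely on `pd`, `pf`, and `effort`, which is why all four are reported.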

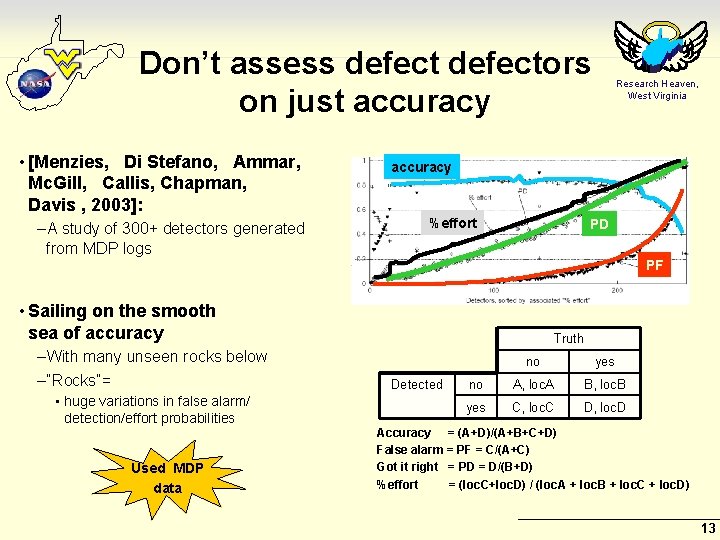

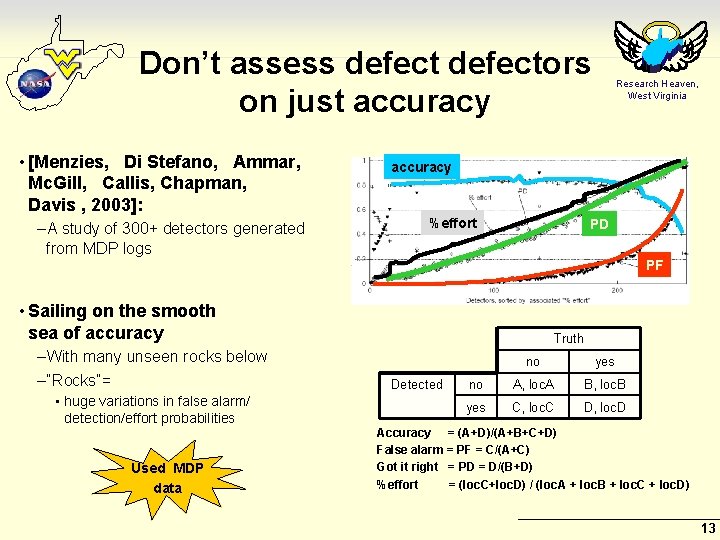

Don’t assess detectors on just accuracy

- [Menzies, Di Stefano, Ammar, McGill, Callis, Chapman, Davis, 2003]:
  - A study of 300+ detectors generated from MDP logs
- Sailing on the smooth sea of accuracy
  - with many unseen rocks below
  - “Rocks” = huge variations in false alarm/detection/effort probabilities

[Figure: accuracy, %effort, PD, and PF for the generated detectors]

Used MDP data

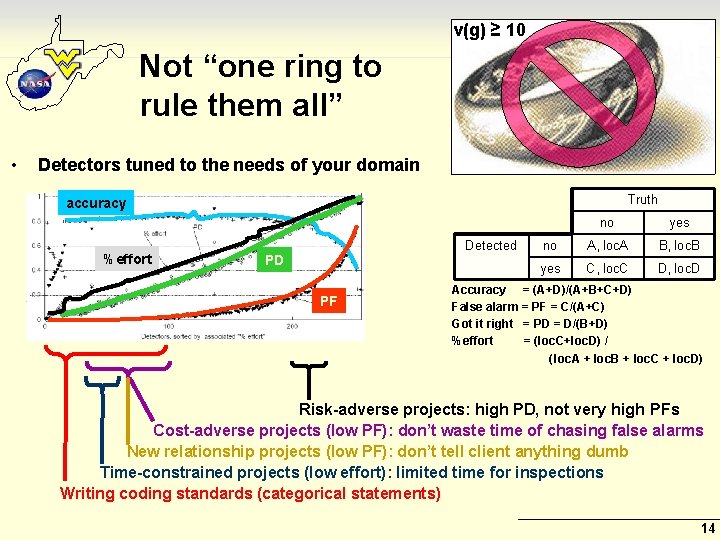

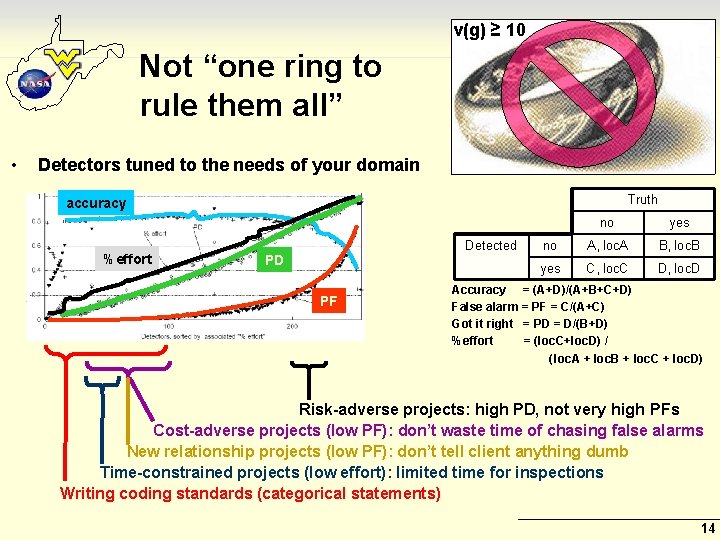

Not “one ring to rule them all” (e.g. v(g) ≥ 10)

- Detectors tuned to the needs of your domain:
  - Risk-averse projects: high PD, not very high PF
  - Cost-averse projects (low PF): don’t waste time chasing false alarms
  - New relationship projects (low PF): don’t tell the client anything dumb
  - Time-constrained projects (low effort): limited time for inspections
  - Writing coding standards (categorical statements)

[Figure: accuracy, %effort, PD, and PF]

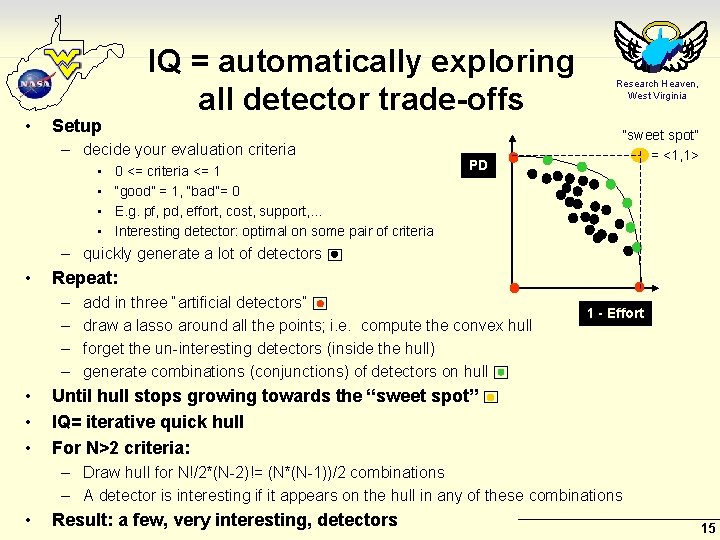

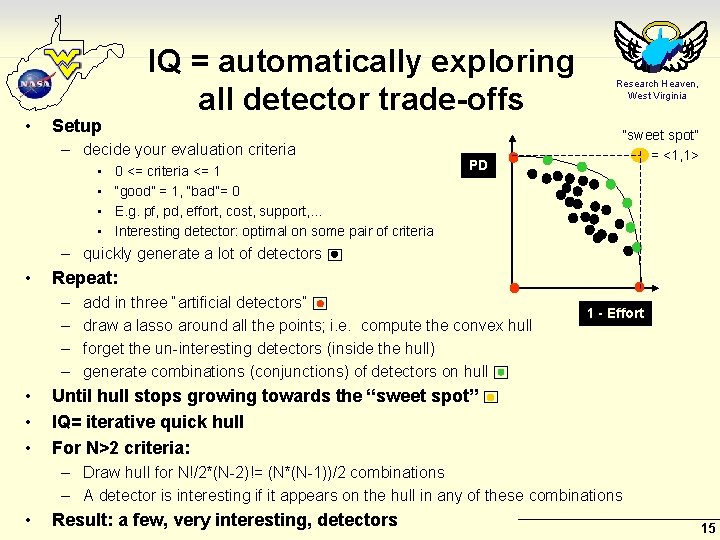

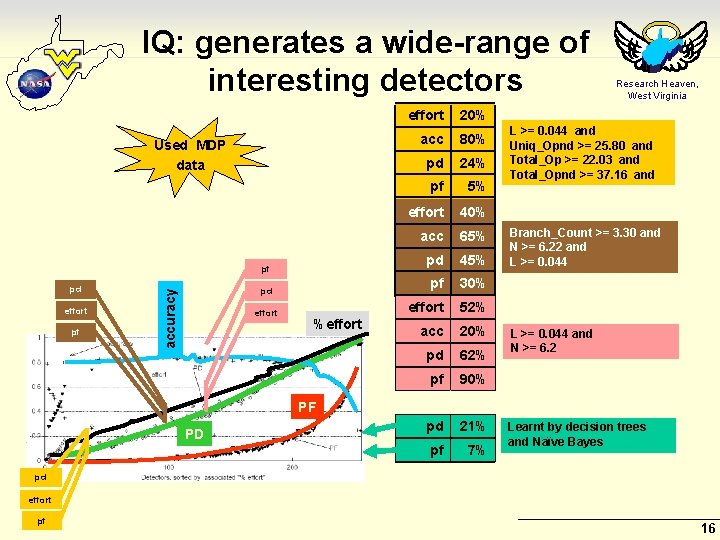

IQ = automatically exploring all detector trade-offs

- Setup:
  - decide your evaluation criteria
    - 0 <= criteria <= 1
    - “good” = 1, “bad” = 0
    - e.g. pf, pd, effort, cost, support, …
  - quickly generate a lot of detectors
- Interesting detector: optimal on some pair of criteria
  - “sweet spot” = <1, 1>
- Repeat:
  - add in three “artificial detectors”
  - draw a lasso around all the points; i.e. compute the convex hull
  - forget the un-interesting detectors (inside the hull)
  - generate combinations (conjunctions) of detectors on the hull
- Until the hull stops growing towards the “sweet spot”
- IQ = iterative quick hull
- For N > 2 criteria:
  - Draw the hull for N!/(2*(N-2)!) = (N*(N-1))/2 combinations
  - A detector is interesting if it appears on the hull in any of these combinations
- Result: a few, very interesting, detectors

[Figure axes: PD versus 1 - Effort]
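One pass of the hull step can be sketched as below. This is not the IQ implementation: it covers only "add artificial detectors, compute the convex hull, keep what lies on it" for a single pair of criteria, and it uses Andrew's monotone chain rather than quickhull for brevity. The coordinates of the three artificial detectors and the sample scores are assumptions.

```python
from itertools import combinations

Point = tuple[float, float]

def cross(o: Point, a: Point, b: Point) -> float:
    """Z-component of (a - o) x (b - o); positive for a left turn."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

def convex_hull(points: list[Point]) -> list[Point]:
    """Andrew's monotone chain; returns hull vertices, collinear points dropped."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower: list[Point] = []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    upper: list[Point] = []
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]

# Assumed positions for the three artificial detectors (not given on the slide).
ARTIFICIAL: list[Point] = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]

def interesting(detectors: dict[str, Point]) -> set[str]:
    """Names of real detectors that sit on the hull; the rest are forgotten."""
    hull = set(convex_hull(list(detectors.values()) + ARTIFICIAL))
    return {n for n, s in detectors.items() if s in hull and s not in ARTIFICIAL}

# Hypothetical detectors scored as (1 - effort, pd); both criteria have "good" = 1.
scores = {"d1": (0.8, 0.3), "d2": (0.5, 0.7), "d3": (0.4, 0.4),
          "d4": (0.2, 0.9), "d5": (0.6, 0.5)}
kept = interesting(scores)
print(sorted(kept))

# With N criteria there are N*(N-1)/2 pairs to draw hulls for.
criteria = ["pf", "pd", "effort", "cost", "support"]
pairs = list(combinations(criteria, 2))
print(len(pairs))
```

The full loop would then form conjunctions of the kept detectors, score them, and repeat until the hull stops moving towards <1, 1>.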

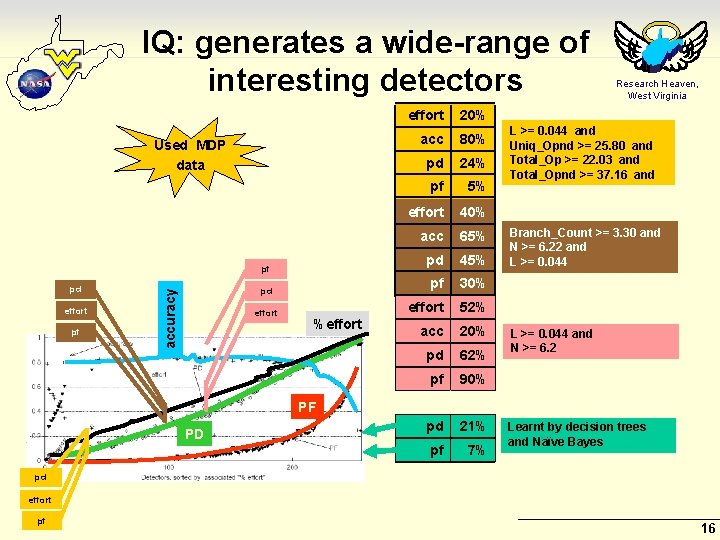

IQ: generates a wide range of interesting detectors

| effort | acc | pd | pf |
|---|---|---|---|
| 20% | 80% | 24% | 5% |
| 40% | 65% | 45% | 30% |
| 52% | 20% | 62% | 90% |

Learnt by decision trees and Naïve Bayes: pd 21%, pf 7%

Detector conditions shown on the slide: L >= 0.044 and Uniq_Opnd >= 25.80 and Total_Op >= 22.03 and Total_Opnd >= 37.16 and Branch_Count >= 3.30 and N >= 6.22 and L >= 0.044 and N >= 6.2

[Figure: plots of pf, pd, effort, and accuracy]

Used MDP data

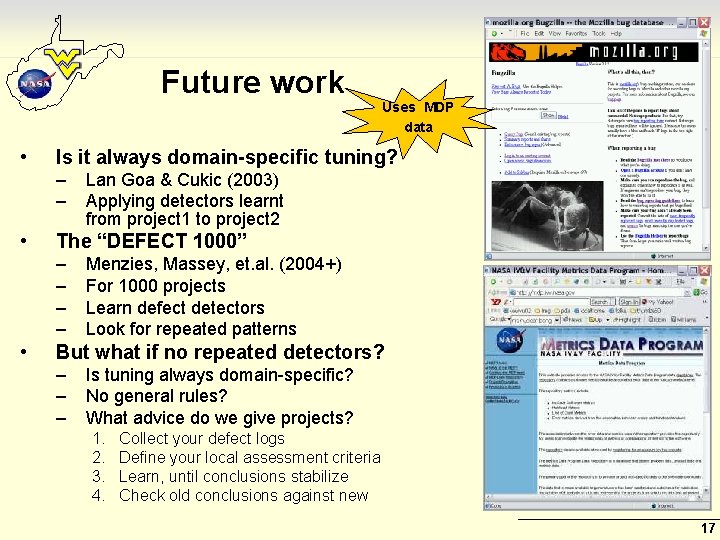

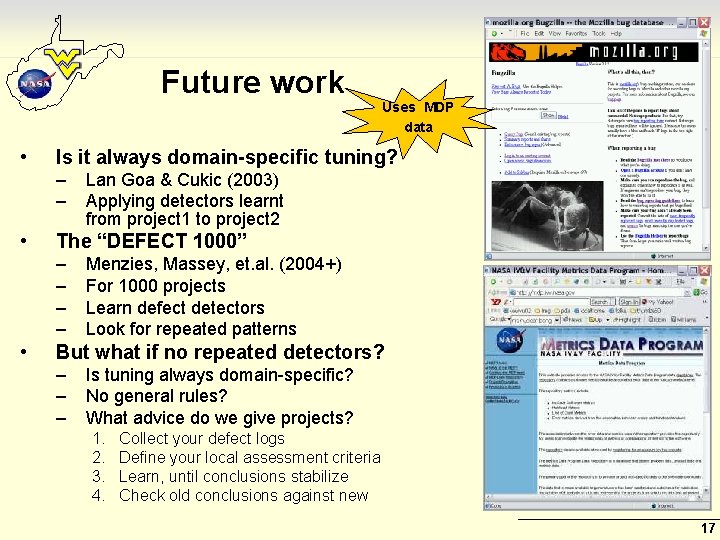

Future work

- Is it always domain-specific tuning?
  - Lan Goa & Cukic (2003)
  - Applying detectors learnt from project 1 to project 2
- The “DEFECT 1000”
  - Menzies, Massey, et al. (2004+)
  - For 1000 projects:
    - Learn defect detectors
    - Look for repeated patterns
- But what if no repeated detectors?
  - Is tuning always domain-specific?
  - No general rules?
  - What advice do we give projects?
    1. Collect your defect logs
    2. Define your local assessment criteria
    3. Learn, until conclusions stabilize
    4. Check old conclusions against new

Uses MDP data

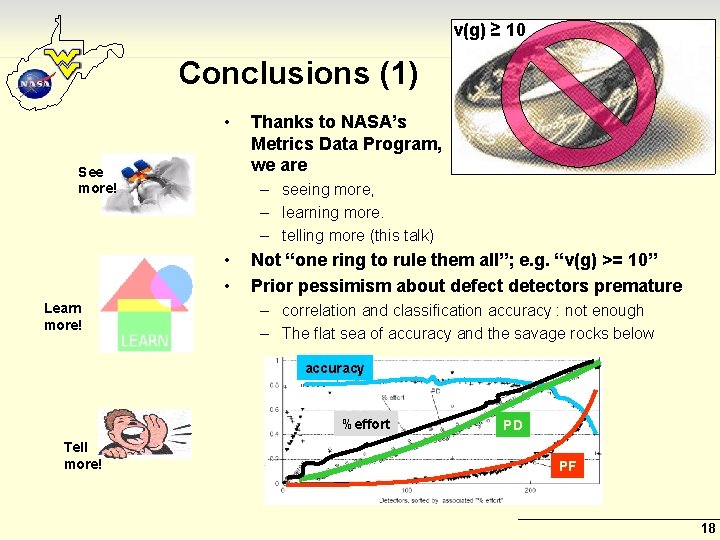

Conclusions (1)

- Thanks to NASA’s Metrics Data Program, we are
  - seeing more,
  - learning more,
  - telling more (this talk)
- Not “one ring to rule them all”; e.g. “v(g) >= 10”
- Prior pessimism about defect detectors premature
  - correlation and classification accuracy: not enough
  - the flat sea of accuracy and the savage rocks below

See more! Learn more! Tell more!

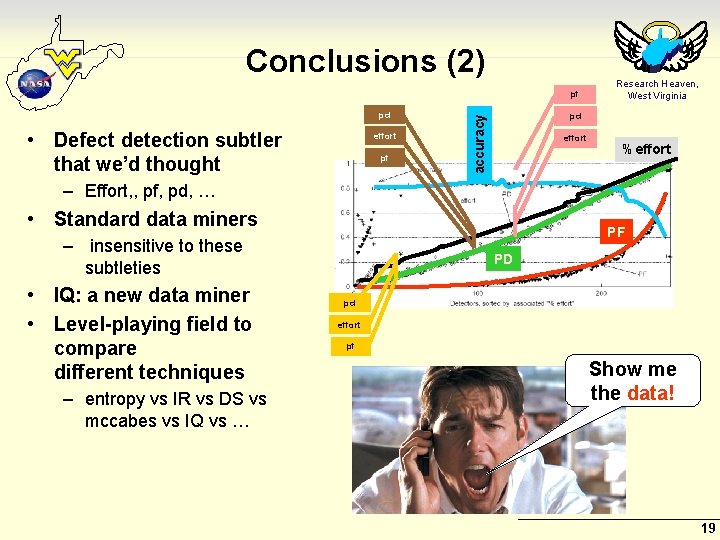

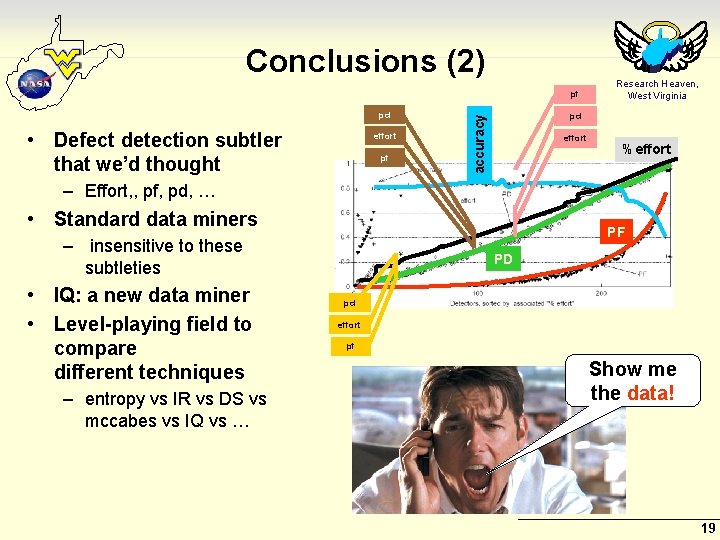

Conclusions (2)

- Defect detection subtler than we’d thought
  - effort, pf, pd, …
- Standard data miners
  - insensitive to these subtleties
- IQ: a new data miner
- Level playing field to compare different techniques
  - entropy vs IR vs DS vs McCabes vs IQ vs …

Show me the data!

Conclusions (3)

Use MDP data: http://mdp.ivv.nasa.gov/

Supplemental material

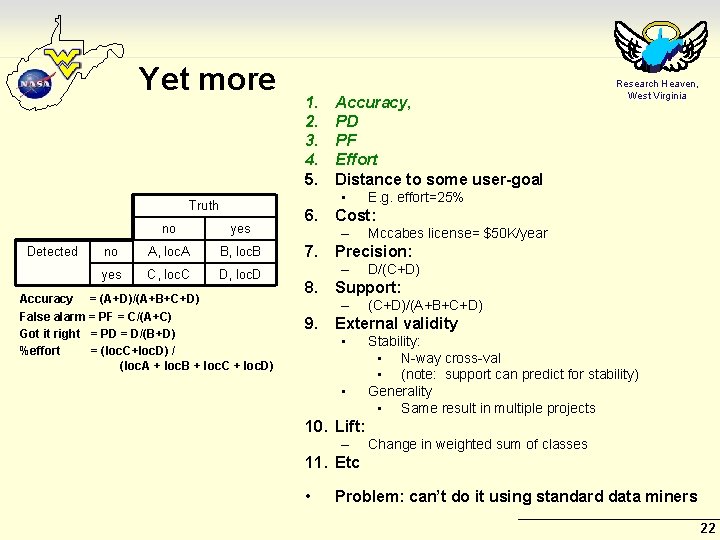

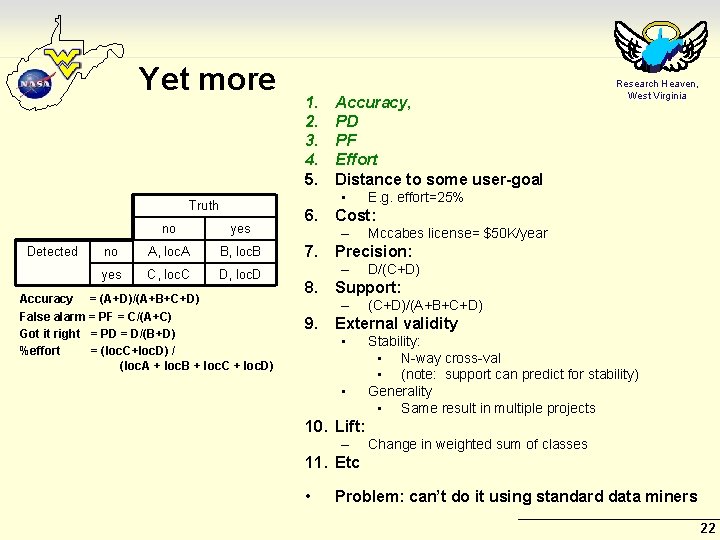

Yet more

1. Accuracy
2. PD
3. PF
4. Effort
5. Distance to some user goal
   - e.g. effort = 25%
6. Cost:
   - McCabes license = $50K/year
7. Precision:
   - D/(C+D)
8. Support:
   - (C+D)/(A+B+C+D)
9. External validity
   - Stability:
     - N-way cross-val
     - (note: support can predict for stability)
   - Generality:
     - Same result in multiple projects
10. Lift:
    - Change in weighted sum of classes
11. Etc.

Problem: can’t do it using standard data miners

| Detected \ Truth | no | yes |
|---|---|---|
| no | A, locA | B, locB |
| yes | C, locC | D, locD |

- Accuracy = (A+D)/(A+B+C+D)
- False alarm = PF = C/(A+C)
- Got it right = PD = D/(B+D)
- %effort = (locC+locD) / (locA + locB + locC + locD)
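Precision and support, as defined in items 7 and 8, use the same A to D cells of the truth/detected table. A minimal sketch with hypothetical counts (function names are illustrative):

```python
def precision(c: int, d: int) -> float:
    """D/(C+D): of the modules the detector flags, the share that are truly defective."""
    return d / (c + d)

def support(a: int, b: int, c: int, d: int) -> float:
    """(C+D)/(A+B+C+D): the share of all modules the detector flags."""
    return (c + d) / (a + b + c + d)

# Hypothetical counts: A=70, B=10, C=5, D=15.
p = precision(c=5, d=15)
s = support(a=70, b=10, c=5, d=15)
print(p, s)
```

A detector with very low support fires on few modules, so its measured scores rest on little evidence, which fits the slide's note that support can predict for stability.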

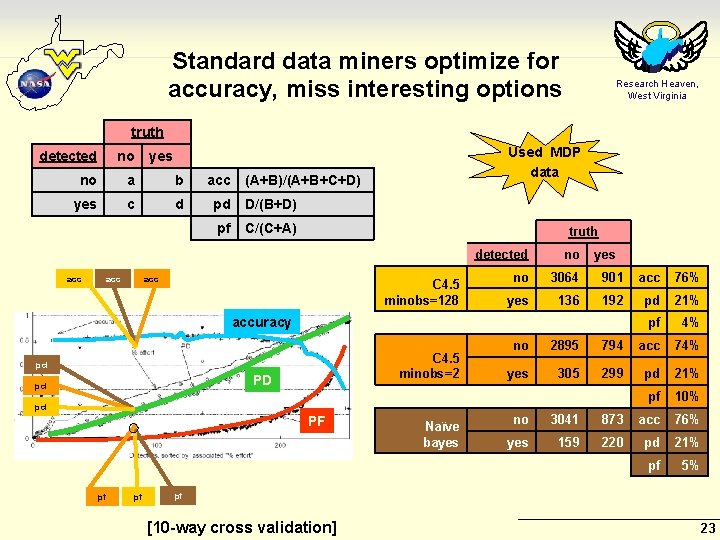

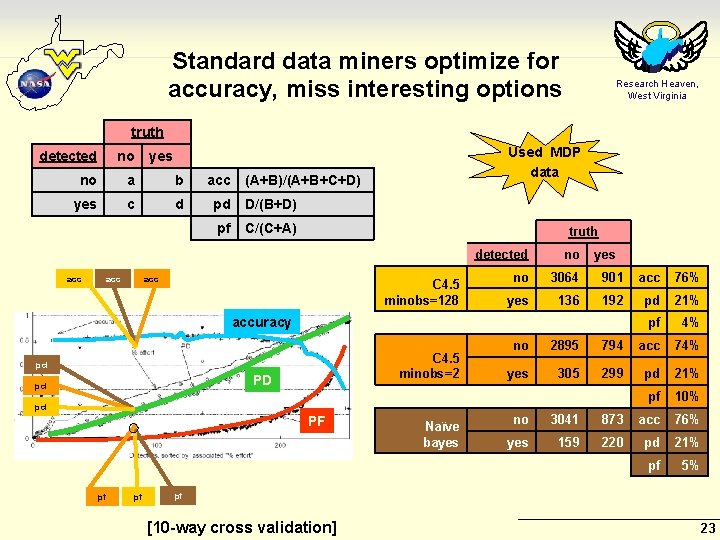

Standard data miners optimize for accuracy, miss interesting options

| Detected \ Truth | no | yes |
|---|---|---|
| no | A | B |
| yes | C | D |

- acc = (A+D)/(A+B+C+D)
- pd = D/(B+D)
- pf = C/(C+A)

| Learner | A | B | C | D | acc | pd | pf |
|---|---|---|---|---|---|---|---|
| C4.5, minobs=128 | 3064 | 901 | 136 | 192 | 76% | 21% | 4% |
| C4.5, minobs=2 | 2895 | 794 | 305 | 299 | 74% | 21% | 10% |
| Naïve Bayes | 3041 | 873 | 159 | 220 | 76% | 21% | 5% |

[10-way cross validation]

Used MDP data
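As a check on how the rates follow from the counts, the sketch below recomputes acc, pd, and pf for the three learners from the counts printed on this slide (function and variable names are illustrative). On these counts the accuracy and pf figures are reproduced, while pd comes out at roughly 18%, 27%, and 20% rather than the 21% printed for every learner, so the pd figures or the counts may have been altered in transcription.

```python
def rates(a: int, b: int, c: int, d: int) -> dict:
    """a, b = detected 'no' (truth no, yes); c, d = detected 'yes' (truth no, yes)."""
    total = a + b + c + d
    return {
        "acc": (a + d) / total,
        "pd": d / (b + d),
        "pf": c / (a + c),
    }

# Counts as printed on the slide.
counts = {
    "C4.5 minobs=128": (3064, 901, 136, 192),
    "C4.5 minobs=2": (2895, 794, 305, 299),
    "Naive Bayes": (3041, 873, 159, 220),
}

results = {name: rates(*abcd) for name, abcd in counts.items()}
for name, r in results.items():
    print(name, {k: f"{v:.0%}" for k, v in r.items()})
```

All three learners land within two points of each other on accuracy, which is the slide's point: optimizing for accuracy alone hides the differences in pd and pf.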

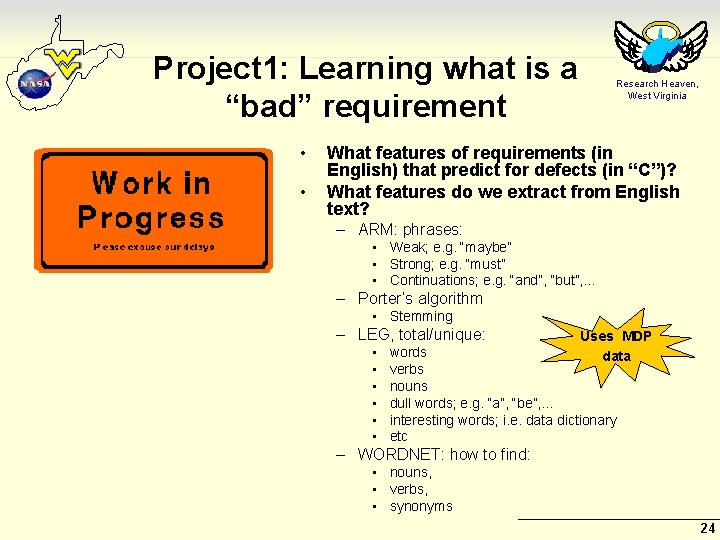

Project 1: Learning what is a “bad” requirement

- What features of requirements (in English) predict for defects (in “C”)?
- What features do we extract from English text?
  - ARM: phrases:
    - Weak; e.g. “maybe”
    - Strong; e.g. “must”
    - Continuations; e.g. “and”, “but”, …
  - Porter’s algorithm
    - Stemming
  - LEG, total/unique:
    - words
    - verbs
    - nouns
    - dull words; e.g. “a”, “be”, …
    - interesting words; i.e. data dictionary etc.
  - WORDNET: how to find:
    - nouns,
    - verbs,
    - synonyms

Uses MDP data
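A rough sketch of the phrase-counting features is given below. It is not the ARM or LEG tooling: the word sets contain only the examples named on the slide, the tokenizer is a plain regular expression, and the feature names are made up for illustration. Stemming and part-of-speech tagging are left out.

```python
import re
from collections import Counter

# Only the examples from the slide; real phrase lists would be longer.
WEAK = {"maybe"}
STRONG = {"must"}
CONTINUATIONS = {"and", "but"}

def phrase_features(requirement: str) -> dict:
    """Count weak, strong, and continuation words, plus total/unique word counts."""
    words = re.findall(r"[a-z']+", requirement.lower())
    counts = Counter(words)
    return {
        "weak": sum(counts[w] for w in WEAK),
        "strong": sum(counts[w] for w in STRONG),
        "continuations": sum(counts[w] for w in CONTINUATIONS),
        "total_words": len(words),
        "unique_words": len(counts),
    }

features = phrase_features(
    "The system must log errors and maybe retry, but must not crash."
)
print(features)
```

Each requirement then becomes one row of such features, which a learner can relate to the defects later logged against the code that implements it.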

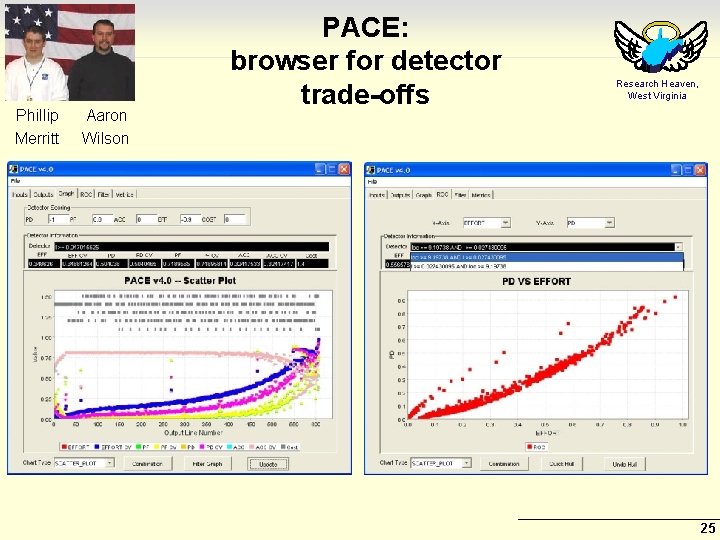

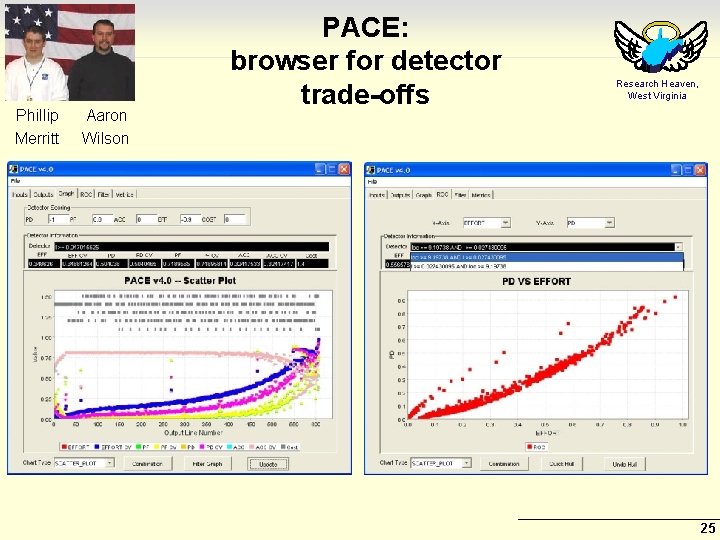

PACE: browser for detector trade-offs

Phillip Merritt, Aaron Wilson

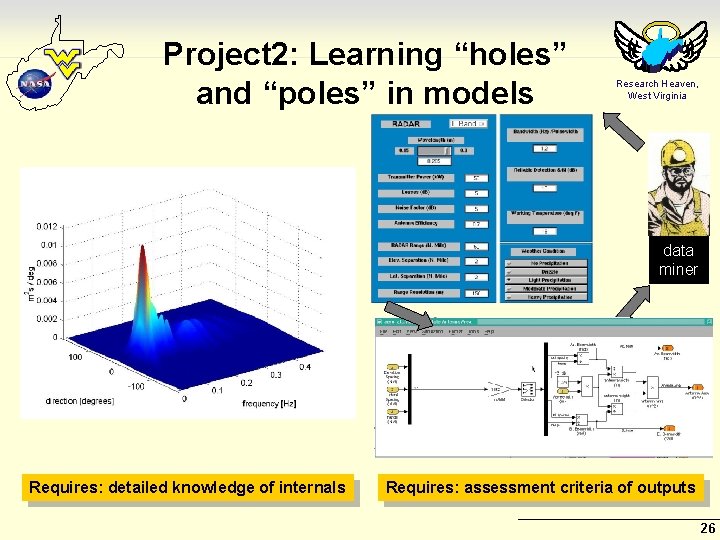

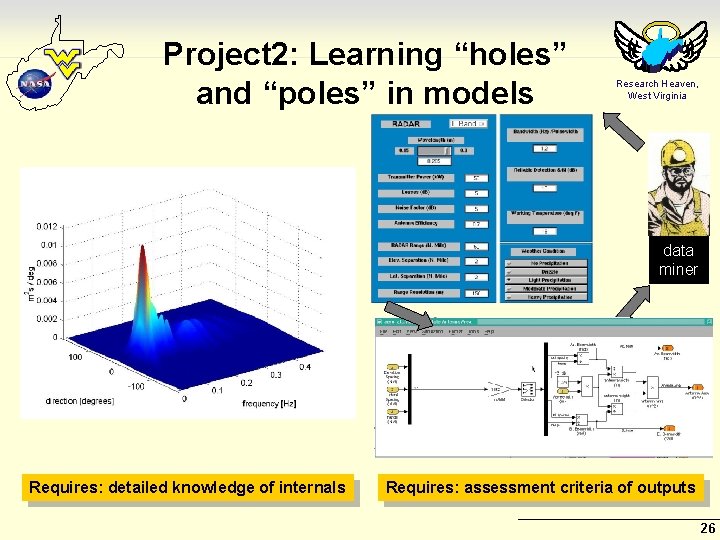

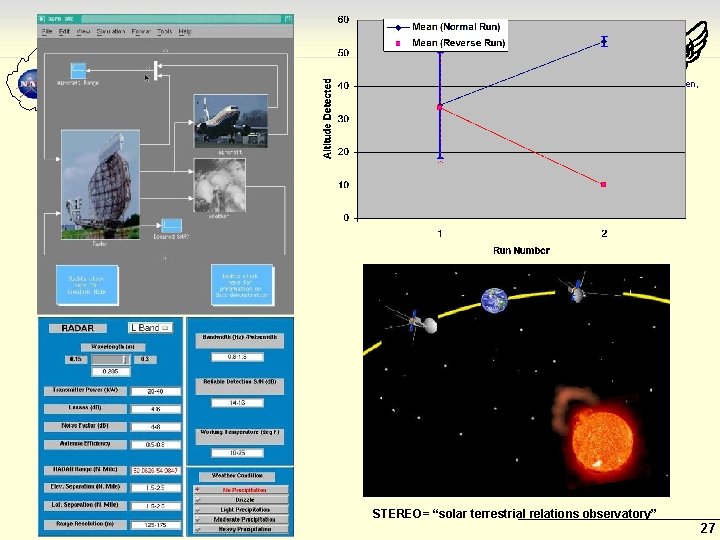

Project 2: Learning “holes” and “poles” in models

[Figure: a data miner applied to a model; annotations “Requires: detailed knowledge of internals” and “Requires: assessment criteria of outputs”]

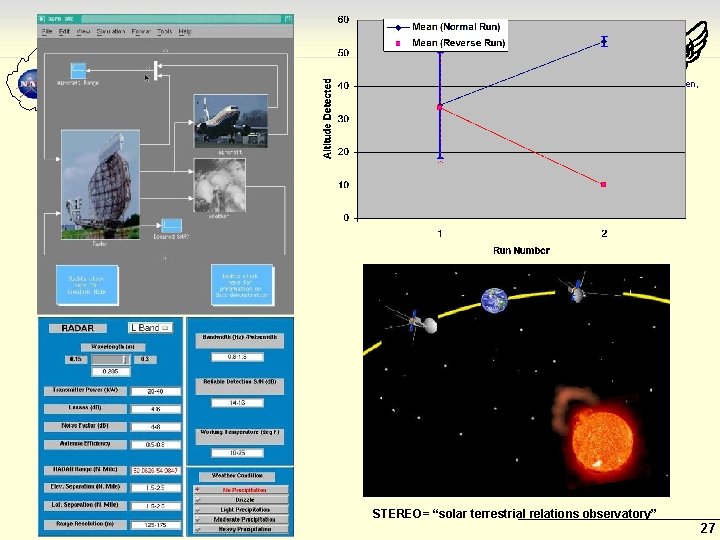

STEREO = “solar terrestrial relations observatory”