Search
CPSC 386 Artificial Intelligence
Ellen Walker
Hiram College

Problem Solving Agent
• Goal-based agent (next slide)
• Must find a sequence of actions that will satisfy the goal
• No knowledge of the problem other than its definition
  – No rules
  – No prior experience

Model-Based, Goal-Based Agent

Defining the Problem
• World state
  – All relevant information about the world (even if it cannot be perceived)
  – E.g. “vacuum at left, left is dirty, right is clean”
• Initial state
  – World state in which the agent starts
• Goal state
  – World state in which the problem is solved (there can be more than one)
• Actions
  – What the agent can do
  – Table: (current state, action) -> new state
• Solution
  – A recommended action sequence that will lead from the current state to a goal state
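A minimal sketch of the vacuum world from the example above, assuming a state is the tuple (vacuum location, left dirty, right dirty) and the actions are "Left", "Right", and "Suck"; these names are illustrative, not from the slides. It builds the (current state, action) -> new state table explicitly, which is only feasible because this world has eight states.

```python
from itertools import product

# Assumed representation: state = (vacuum_location, left_dirty, right_dirty).
ACTIONS = ("Left", "Right", "Suck")

def result(state, action):
    """Transition model: (current state, action) -> new state."""
    loc, left_dirty, right_dirty = state
    if action == "Left":
        return ("L", left_dirty, right_dirty)
    if action == "Right":
        return ("R", left_dirty, right_dirty)
    if action == "Suck":
        # Sucking cleans whichever square the vacuum is on.
        if loc == "L":
            return (loc, False, right_dirty)
        return (loc, left_dirty, False)
    raise ValueError(f"unknown action: {action}")

# Enumerate all 8 world states and build the full transition table.
STATES = list(product(("L", "R"), (True, False), (True, False)))
TABLE = {(s, a): result(s, a) for s in STATES for a in ACTIONS}

def is_goal(state):
    """Goal: both squares clean, wherever the vacuum is (two goal states)."""
    return not state[1] and not state[2]

initial = ("L", True, False)  # "vacuum at left, left is dirty, right is clean"
print(TABLE[(initial, "Suck")])           # ('L', False, False)
print(is_goal(TABLE[(initial, "Suck")]))  # True
```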

Searching for a Solution
• If the initial state is a goal state, return an empty sequence of actions
• Repeat until a sequence is found [SEARCH]
  – Choose an action sequence
  – If the sequence leads to a goal, save it
• Repeat until the sequence is empty [EXECUTE]
  – Remove the first step from the sequence and execute it
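The SEARCH and EXECUTE phases can be sketched as follows. This is one possible reading, not the course's own code: "choose an action sequence" is done here by breadth-first enumeration, and `successors`, `is_goal`, and `execute` are hypothetical callables supplied by the caller.

```python
from collections import deque

def search(initial, successors, is_goal):
    """Return a list of actions from `initial` to a goal state, or None."""
    if is_goal(initial):
        return []                           # already at a goal: empty plan
    frontier = deque([(initial, [])])       # (state, action sequence so far)
    seen = {initial}
    while frontier:
        state, plan = frontier.popleft()    # choose an action sequence
        for action, nxt in successors(state):
            if nxt in seen:
                continue
            if is_goal(nxt):
                return plan + [action]      # sequence reaches a goal: save it
            seen.add(nxt)
            frontier.append((nxt, plan + [action]))
    return None

def run_agent(initial, successors, is_goal, execute):
    plan = search(initial, successors, is_goal)   # [SEARCH]
    if plan is None:
        return False
    while plan:                                   # [EXECUTE]
        execute(plan.pop(0))                      # remove first step, run it
    return True

# Hypothetical example: states are integers, actions are "+1" and "*2".
def succ(n):
    return [("+1", n + 1), ("*2", n * 2)]

print(search(1, succ, lambda n: n == 10))  # ['+1', '*2', '+1', '*2']
```

Keeping search and execution separate mirrors the slide: the agent commits to the whole plan before acting, which is only safe under the environmental assumptions listed next.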

Environmental Requirements
• Static – so the plan will still work later
• Fully observable – so the agent knows the initial state
• Discrete – so the agent can enumerate its choices
• Deterministic – so the agent can plan a sequence of actions, believing each will lead to a specific intermediate state

State Space Formulation
• State space
  – Graph of states connected by actions
  – Usually too big to represent explicitly, so we define a successor function instead
• Successor(state) -> ((action state) …)
• Goal test
  – Is this state a goal state?
• Path cost
  – (we assume) the sum of the costs of the actions in a path
• Solution
  – Path from the initial state to a goal state
  – Optimal: least-cost path from the initial state to a goal state
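A small sketch of the three pieces of a state-space formulation, using a hypothetical road map (the map, city names, and "go X" action labels are invented for illustration). The successor function returns ((action, state), …) pairs, and the path cost is the sum of the step costs, as the slide assumes.

```python
# Hypothetical map: state -> {neighbor: step cost}
ROADS = {
    "S": {"A": 2, "B": 5},
    "A": {"B": 1, "G": 6},
    "B": {"G": 2},
    "G": {},
}

def successor(state):
    """Successor(state) -> ((action, state), ...)."""
    return tuple((f"go {nxt}", nxt) for nxt in ROADS[state])

def goal_test(state):
    return state == "G"

def path_cost(path):
    """Sum of step costs along a path given as a list of states."""
    return sum(ROADS[a][b] for a, b in zip(path, path[1:]))

print(successor("S"))                   # (('go A', 'A'), ('go B', 'B'))
print(path_cost(["S", "A", "G"]))       # 8
print(path_cost(["S", "A", "B", "G"]))  # 5, the optimal solution on this map
```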

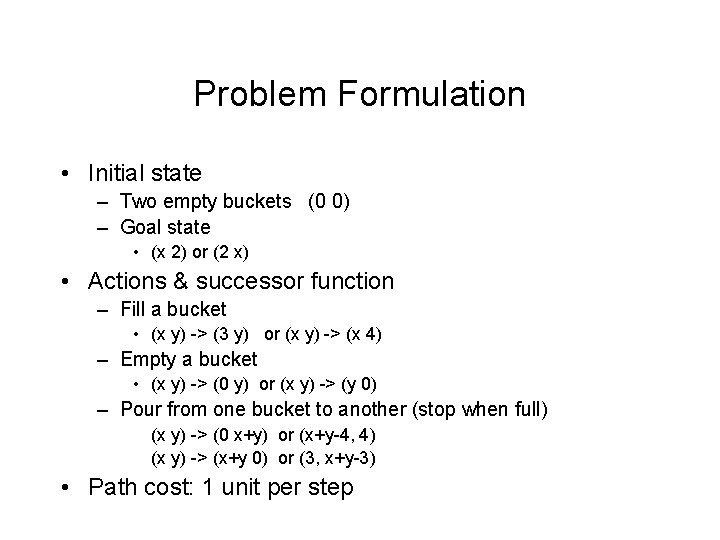

Example: Water Pouring
• Problem
  – We have two buckets; one holds 4 gallons, and one holds 3 gallons. There are no markings on the buckets.
  – How can we get exactly 2 gallons into one bucket?

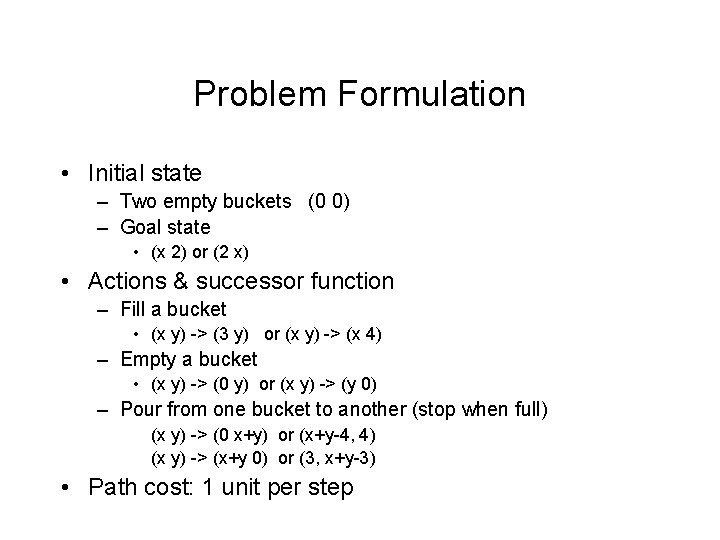

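Problem Formulation
• State (x y): x = gallons in the 3-gallon bucket, y = gallons in the 4-gallon bucket
• Initial state
  – Two empty buckets (0 0)
• Goal state
  – (x 2) or (2 y)
• Actions & successor function
  – Fill a bucket
    • (x y) -> (3 y) or (x y) -> (x 4)
  – Empty a bucket
    • (x y) -> (0 y) or (x y) -> (x 0)
  – Pour from one bucket into the other (stop when the receiving bucket is full or the pouring bucket is empty)
    • 3 into 4: (x y) -> (0 x+y) if x+y <= 4, else (x+y-4 4)
    • 4 into 3: (x y) -> (x+y 0) if x+y <= 3, else (3 x+y-3)
• Path cost: 1 unit per step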
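The formulation above translates almost line for line into a successor function. This sketch assumes the state is a Python tuple (x, y) as defined on the slide and uses breadth-first search (a strategy introduced later in these slides) to find a fewest-step solution; the action labels are made up for readability.

```python
from collections import deque

CAP_X, CAP_Y = 3, 4   # x: 3-gallon bucket, y: 4-gallon bucket

def successors(state):
    x, y = state
    pour_xy = min(x, CAP_Y - y)   # amount that moves when pouring x into y
    pour_yx = min(y, CAP_X - x)   # amount that moves when pouring y into x
    return [
        ("fill 3", (CAP_X, y)),
        ("fill 4", (x, CAP_Y)),
        ("empty 3", (0, y)),
        ("empty 4", (x, 0)),
        ("pour 3->4", (x - pour_xy, y + pour_xy)),
        ("pour 4->3", (x + pour_yx, y - pour_yx)),
    ]

def is_goal(state):
    return 2 in state             # (x 2) or (2 y)

def solve(start=(0, 0)):
    """Breadth-first search; returns [(action, resulting state), ...]."""
    parent = {start: None}        # state -> (previous state, action)
    queue = deque([start])
    while queue:
        state = queue.popleft()
        if is_goal(state):
            steps = []
            while parent[state] is not None:
                prev, action = parent[state]
                steps.append((action, state))
                state = prev
            return steps[::-1]
        for action, nxt in successors(state):
            if nxt not in parent:             # skip states already reached
                parent[nxt] = (state, action)
                queue.append(nxt)
    return None

for action, state in solve():
    print(action, state)
```

Using `min` for the pour amount covers both cases of each pour rule at once: if everything fits, the whole bucket is emptied; otherwise the receiving bucket is filled to capacity.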

Partial State Space for Water Pouring

Graph Node Formulation
• State (world state at this node)
• Parent node (previous node on the path)
• Action (action taken to generate this node)
• Path cost, g(n) (from the initial state to this node)
• Depth (number of steps taken so far)
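The node record can be sketched as a Python dataclass. The field names mirror the slide; the helper methods `child` and `solution` are hypothetical additions showing how path cost and depth accumulate and how the parent links recover the solution.

```python
from dataclasses import dataclass
from typing import Any, Optional

@dataclass
class Node:
    state: Any                        # world state at this node
    parent: Optional["Node"] = None   # previous node on the path
    action: Any = None                # action taken to generate this node
    path_cost: float = 0.0            # g(n): cost from the initial state
    depth: int = 0                    # number of steps taken so far

    def child(self, action, state, step_cost=1.0):
        """Create the node reached by applying `action` from this node."""
        return Node(state, self, action, self.path_cost + step_cost, self.depth + 1)

    def solution(self):
        """Follow parent links back to the root; return the actions in order."""
        actions, node = [], self
        while node.parent is not None:
            actions.append(node.action)
            node = node.parent
        return actions[::-1]

root = Node((0, 0))
n = root.child("fill 3", (3, 0)).child("pour 3->4", (0, 3))
print(n.solution(), n.depth, n.path_cost)  # ['fill 3', 'pour 3->4'] 2 2.0
```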

Generic Searching
• Create a node for the initial state, and put it into the set of unexpanded nodes
• While the problem is not solved…
  – If no unexpanded nodes remain, report failure
  – Pick an unexpanded node [according to strategy]
  – Stop (and report the path) if it is a goal state
  – Expand it (create nodes for every possible action that can be applied to that state)
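A sketch of the generic loop, assuming the strategy is passed in as a function `pick` that removes and returns one node from the unexpanded list (a hypothetical interface). Nodes are simplified to (state, path) pairs. This is tree search: it keeps no record of expanded states, so on graphs with cycles it may revisit states.

```python
def generic_search(initial, successors, is_goal, pick):
    unexpanded = [(initial, [initial])]        # node = (state, path to state)
    while unexpanded:
        state, path = pick(unexpanded)         # pick a node by strategy
        if is_goal(state):
            return path                        # stop and report the path
        for _action, nxt in successors(state): # expand: one child per action
            unexpanded.append((nxt, path + [nxt]))
    return None                                # nothing left: failure

def breadth_first(nodes):
    return nodes.pop(0)   # least recently generated node

def depth_first(nodes):
    return nodes.pop()    # most recently generated node

# Hypothetical tree for illustration.
TREE = {"A": ["B", "C"], "B": ["D", "E"], "C": ["F"], "D": [], "E": [], "F": []}

def tree_successors(state):
    return [(f"to {c}", c) for c in TREE[state]]

print(generic_search("A", tree_successors, lambda s: s == "F", breadth_first))  # ['A', 'C', 'F']
print(generic_search("A", tree_successors, lambda s: s == "E", depth_first))    # ['A', 'B', 'E']
```

Only `pick` changes between strategies; the rest of the loop is identical, which is the point of the generic formulation.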

Terminology
• Expanded nodes (visited)
  – Nodes that we have already created child nodes for
  – Save these to avoid repetition
• Fringe nodes (generated but unvisited)
  – Nodes that exist but that we have not yet created child nodes for
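To show how saving expanded nodes avoids repetition, here is a breadth-first graph-search sketch that keeps an explicit `expanded` set and skips any state that is already expanded or already on the fringe. The cyclic graph is a made-up example.

```python
from collections import deque

def graph_search(initial, neighbors, is_goal):
    """Return (path or None, number of expanded states)."""
    fringe = deque([(initial, [initial])])  # generated but not yet expanded
    on_fringe = {initial}
    expanded = set()                        # states whose children we created
    while fringe:
        state, path = fringe.popleft()
        on_fringe.discard(state)
        if is_goal(state):
            return path, len(expanded)
        expanded.add(state)
        for nxt in neighbors(state):
            if nxt not in expanded and nxt not in on_fringe:
                fringe.append((nxt, path + [nxt]))
                on_fringe.add(nxt)
    return None, len(expanded)

# Cyclic graph: without the two checks, A and B would be regenerated repeatedly.
GRAPH = {"A": ["B", "C"], "B": ["A", "D"], "C": ["A", "D"],
         "D": ["B", "C", "E"], "E": []}
print(graph_search("A", lambda s: GRAPH[s], lambda s: s == "E"))  # (['A', 'B', 'D', 'E'], 4)
```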

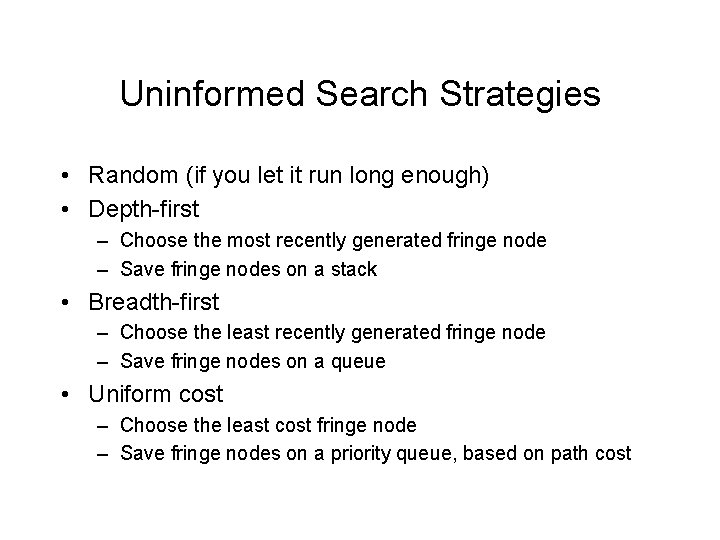

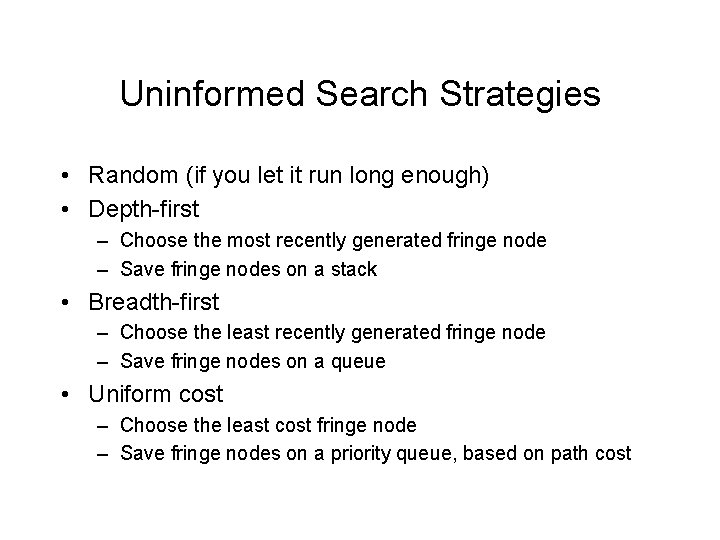

Uninformed Search Strategies
• Random (eventually finds a solution, if you let it run long enough)
• Depth-first
  – Choose the most recently generated fringe node
  – Save fringe nodes on a stack
• Breadth-first
  – Choose the least recently generated fringe node
  – Save fringe nodes on a queue
• Uniform cost
  – Choose the least-cost fringe node
  – Save fringe nodes on a priority queue, ordered by path cost
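The three systematic strategies differ only in the fringe data structure. This sketch uses a Python list as a stack (depth-first), `collections.deque` as a queue (breadth-first), and `heapq` as a priority queue on path cost (uniform cost). The weighted graph is hypothetical, and each version only avoids cycles along the current path.

```python
import heapq
from collections import deque

# Hypothetical graph: state -> list of (neighbor, step cost)
GRAPH = {"S": [("A", 1), ("B", 4)], "A": [("B", 2), ("G", 10)],
         "B": [("G", 3)], "G": []}

def dfs(start, goal):
    stack = [[start]]
    while stack:
        path = stack.pop()                  # most recently generated
        if path[-1] == goal:
            return path
        for nxt, _ in GRAPH[path[-1]]:
            if nxt not in path:             # no cycles along this path
                stack.append(path + [nxt])
    return None

def bfs(start, goal):
    queue = deque([[start]])
    while queue:
        path = queue.popleft()              # least recently generated
        if path[-1] == goal:
            return path
        for nxt, _ in GRAPH[path[-1]]:
            if nxt not in path:
                queue.append(path + [nxt])
    return None

def ucs(start, goal):
    heap = [(0, [start])]
    while heap:
        cost, path = heapq.heappop(heap)    # least path cost so far
        if path[-1] == goal:
            return cost, path
        for nxt, step in GRAPH[path[-1]]:
            if nxt not in path:
                heapq.heappush(heap, (cost + step, path + [nxt]))
    return None

print(dfs("S", "G"))  # ['S', 'B', 'G']
print(bfs("S", "G"))  # ['S', 'A', 'G']  (fewest steps, cost 11)
print(ucs("S", "G"))  # (6, ['S', 'A', 'B', 'G'])  (least cost)
```

On this graph the three strategies return three different paths: breadth-first minimizes the number of steps, while uniform cost minimizes the total path cost.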

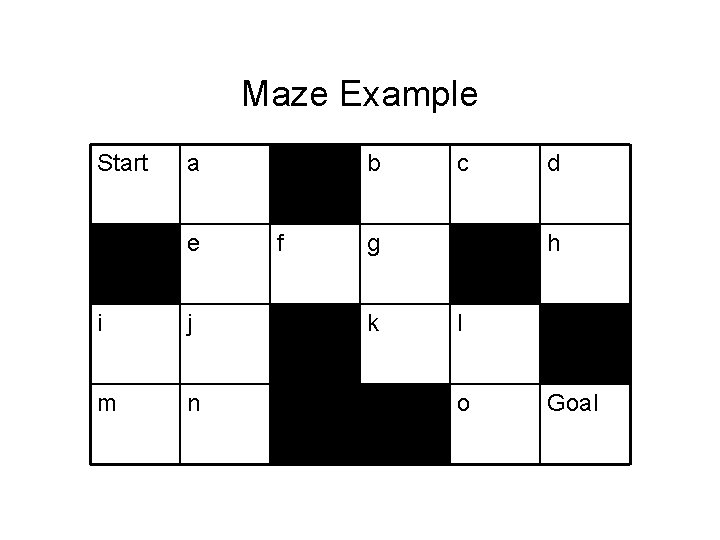

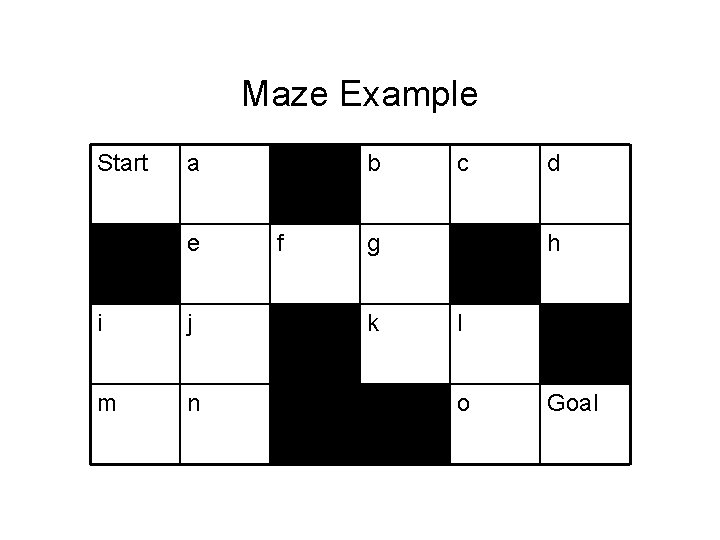

Maze Example (figure: maze of cells a–o, from Start to Goal)

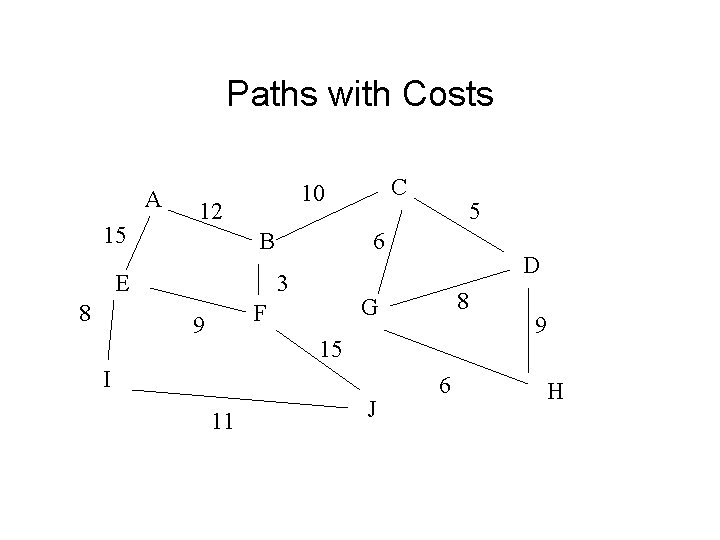

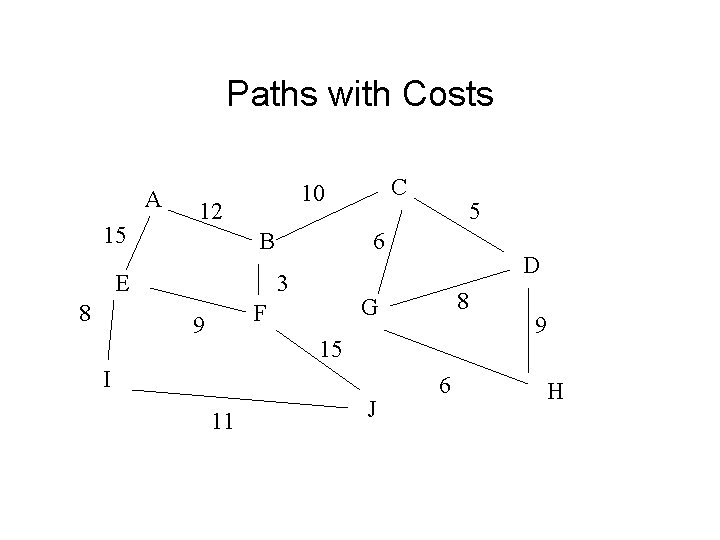

Paths with Costs (figure: graph with nodes A–J and a cost on each edge)

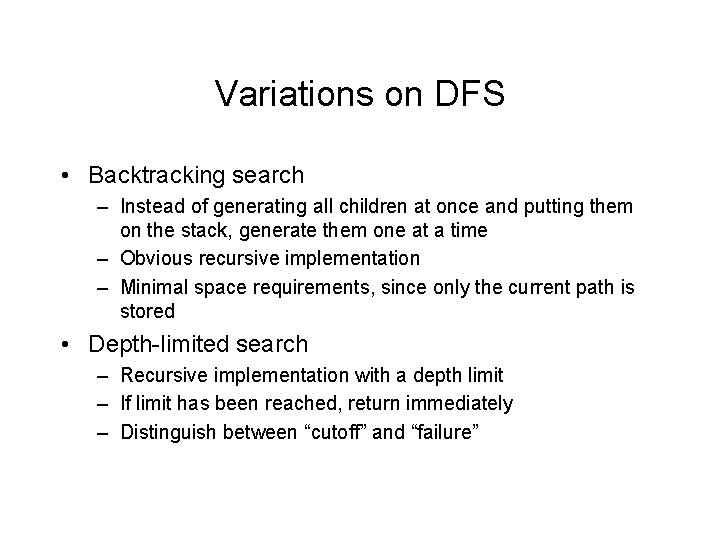

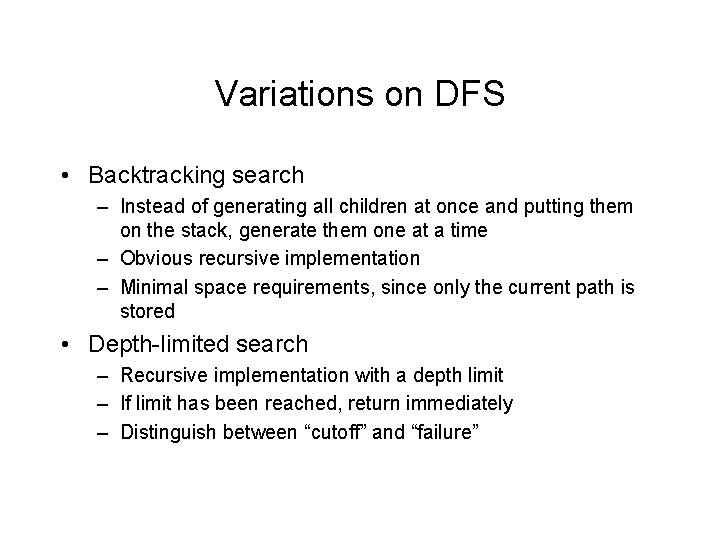

Variations on DFS
• Backtracking search
  – Instead of generating all children at once and putting them on the stack, generate them one at a time
  – Obvious recursive implementation
  – Minimal space requirements, since only the current path is stored
• Depth-limited search
  – Recursive implementation with a depth limit
  – If the limit has been reached, return immediately
  – Distinguish between “cutoff” (the limit was reached) and “failure” (no solution exists below this node)
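A recursive depth-limited search, written as a backtracking DFS that generates children one at a time, might look like the sketch below. It returns a path on success, the string "cutoff" if the limit stopped the search somewhere below, or `None` for failure. The tree and the `children` parameter are hypothetical.

```python
# Hypothetical tree for illustration.
TREE = {"A": ["B", "C"], "B": ["D"], "C": ["E", "F"],
        "D": [], "E": [], "F": ["G"], "G": []}

CUTOFF = "cutoff"

def depth_limited(state, goal, limit, children=lambda s: TREE[s]):
    if state == goal:
        return [state]
    if limit == 0:
        return CUTOFF                   # limit reached: return immediately
    cutoff_occurred = False
    for child in children(state):       # generate children one at a time
        result = depth_limited(child, goal, limit - 1, children)
        if result == CUTOFF:
            cutoff_occurred = True      # a deeper search might still succeed
        elif result is not None:
            return [state] + result
    return CUTOFF if cutoff_occurred else None   # None means true failure

print(depth_limited("A", "G", 3))  # ['A', 'C', 'F', 'G']
print(depth_limited("A", "G", 2))  # cutoff
print(depth_limited("A", "Z", 5))  # None
```

Only the recursion stack (the current path) is stored, which is where the minimal space requirement comes from.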

Issues with DFS and BFS
• DFS can waste a very long time on the wrong path!
• BFS requires much more space to hold the fringe
  – DFS stores approximately 1 path
  – BFS stores all “current” nodes on all paths simultaneously
• BFS can expand nodes beyond the solution
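The space difference can be measured directly. This sketch traverses a hypothetical complete tree with branching factor b and depth d (nodes are tuples of child indices, generated on demand) and records the largest fringe size. Because this DFS pushes all children at once, it stores the current path plus unexpanded siblings, (b-1)·d + 1 nodes at most, while BFS eventually holds the entire bottom level, b^d nodes.

```python
from collections import deque

def children(node, b, d):
    """Children of a node in a complete tree of branching b and depth d."""
    return [node + (i,) for i in range(b)] if len(node) < d else []

def max_fringe(b, d, use_stack):
    """Largest fringe seen while traversing the whole tree (no goal)."""
    fringe = deque([()])
    largest = 1
    while fringe:
        node = fringe.pop() if use_stack else fringe.popleft()
        fringe.extend(children(node, b, d))
        largest = max(largest, len(fringe))
    return largest

print(max_fringe(3, 6, use_stack=True))   # DFS: 13 = (b-1)*d + 1
print(max_fringe(3, 6, use_stack=False))  # BFS: 729 = b**d
```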

Iterative Deepening Search
• Do a depth-limited search with limit 1, then with limit 2, and so on, until a solution is found
• Properties
  – Finds the shortest path in number of steps (like BFS)
  – The repeated searches add little cost in the big-O sense, because the number of nodes at the deepest level is at least as large as the number of nodes at all earlier levels combined (for branching factor b ≥ 2)
  – Doesn't expand nodes beyond the solution (which BFS does)
  – Time cost is O(b^d), while BFS is O(b^(d+1)), where b is the branching factor and d is the depth of the shallowest solution
• IDS is the preferred uninformed search method when the search space is large and the depth of the solution is not known
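A sketch of iterative deepening as repeated depth-limited DFS with limits 1, 2, 3, … until the result is no longer "cutoff". The graph is hypothetical and was chosen so that plain DFS (which tries B first) would find the long path S, B, D, E, G, whereas IDS returns the shallower path through C. The `max_depth` bound is an added safety assumption.

```python
# Hypothetical graph: state -> list of successor states
GRAPH = {"S": ["B", "C"], "B": ["D"], "D": ["E"], "E": ["G"],
         "C": ["G"], "G": []}

def dls(state, goal, limit):
    """Depth-limited DFS: a path, "cutoff", or None (failure)."""
    if state == goal:
        return [state]
    if limit == 0:
        return "cutoff"
    cutoff = False
    for nxt in GRAPH[state]:
        result = dls(nxt, goal, limit - 1)
        if result == "cutoff":
            cutoff = True
        elif result is not None:
            return [state] + result
    return "cutoff" if cutoff else None

def iterative_deepening(start, goal, max_depth=50):
    for limit in range(1, max_depth + 1):
        result = dls(start, goal, limit)
        if result != "cutoff":
            return result          # a shortest path, or None if none exists
    return "cutoff"                # gave up at max_depth

print(iterative_deepening("S", "G"))  # ['S', 'C', 'G']
```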

Bidirectional Search
• Two searches at once
  – Initial state toward goal state
  – Goal state toward initial state
• Before expanding a node, check whether it is on the fringe of the other tree
  – If so, you have found a complete path
• 2 trees of depth d/2 have far fewer nodes than 1 tree of depth d (about 2b^(d/2) versus b^d)
• Space cost is high, though: one tree must be kept in memory to test against
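A sketch of bidirectional breadth-first search, assuming an undirected graph so the backward search can use the same neighbor lists. It is a small variant of the slide's test: each newly generated node is checked against every node the other search has reached, not only its fringe. It returns a complete path when the trees meet, but this simple version is not guaranteed to return the shortest one. The graph and the helper name `join_paths` are hypothetical.

```python
from collections import deque

def bidirectional(graph, start, goal):
    if start == goal:
        return [start]
    parents = ({start: None}, {goal: None})   # forward tree, backward tree
    queues = (deque([start]), deque([goal]))
    while queues[0] and queues[1]:
        for side in (0, 1):                   # alternate one layer per side
            for _ in range(len(queues[side])):
                node = queues[side].popleft()
                for nxt in graph[node]:
                    if nxt in parents[side]:
                        continue
                    parents[side][nxt] = node
                    if nxt in parents[1 - side]:   # the two trees meet
                        return join_paths(parents, nxt)
                    queues[side].append(nxt)
    return None                               # one side ran out: no path

def join_paths(parents, meet):
    forward, backward = parents
    path, node = [], meet
    while node is not None:                   # walk back to the start
        path.append(node)
        node = forward[node]
    path.reverse()
    node = backward[meet]
    while node is not None:                   # walk on to the goal
        path.append(node)
        node = backward[node]
    return path

G = {1: [2], 2: [1, 3], 3: [2, 4], 4: [3, 5], 5: [4], 6: []}
print(bidirectional(G, 1, 5))  # [1, 2, 3, 4, 5]
```

Both parent maps must stay in memory for the membership test, which is the space cost the slide mentions.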

Evaluating Search Strategies
• Completeness
  – Will a solution always be found if one exists?
• Optimality
  – Will the optimal (least-cost) solution be found?
• Time complexity
  – How long does it take to find the solution?
  – Often measured by the number of nodes expanded
• Space complexity
  – How much memory is needed to perform the search?
  – Measured by the maximum number of nodes stored at once