SE292 High Performance Computing Intro To Concurrent Programming


- Slides: 27

SE-292 High Performance Computing
Intro to Concurrent Programming & Parallel Architecture
R. Govindarajan, govind@serc

PARALLEL ARCHITECTURE
- Parallel machine: a computer system with more than one processor
- Questions:
  - What about multicores?
  - What about a network of machines?
    - Yes, but the time involved in interaction (communication) might be high, as the system is designed assuming that the machines are more or less independent
- Special parallel machines are designed to make this interaction overhead less

Classification of Parallel Machines
- Flynn's Classification
  - In terms of the number of instruction streams and data streams
  - Instruction stream: path to instruction memory (PC)
  - Data stream: path to data memory
- SISD: single instruction stream, single data stream
- SIMD: single instruction stream, multiple data streams
- MIMD: multiple instruction streams, multiple data streams

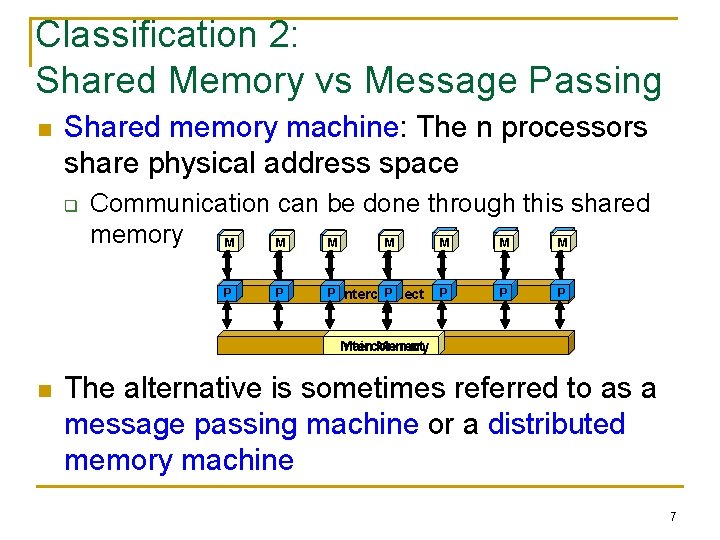

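PARALLEL PROGRAMMING
- Recall Flynn: SIMD, MIMD
- Programming side: SPMD (single program, multiple data), MPMD (multiple program, multiple data)
  - Shared memory: threads
  - Message passing: using messages
- $\text{Speedup} = \dfrac{\text{execution time on one processor}}{\text{execution time on } n \text{ processors}}$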

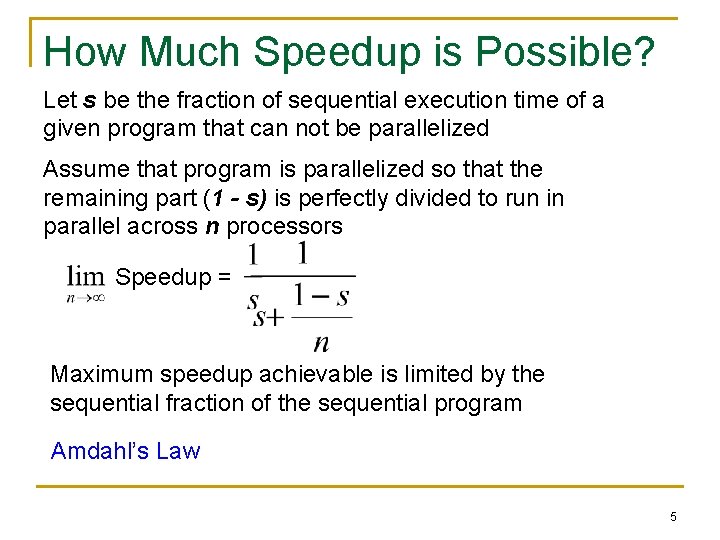

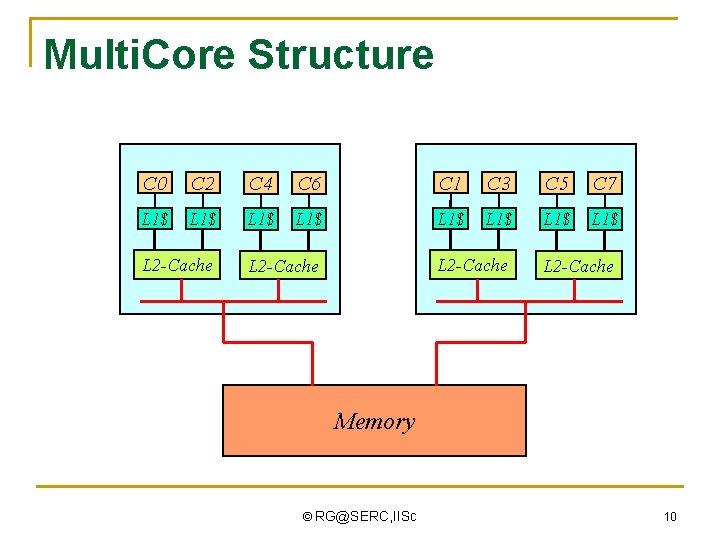

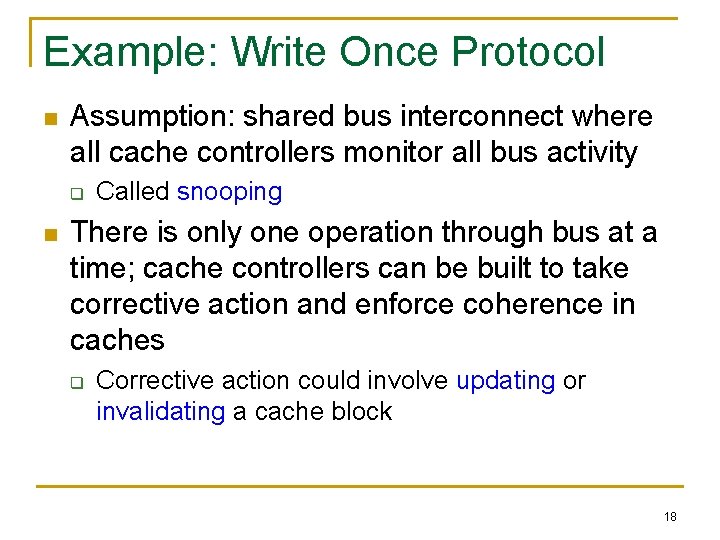

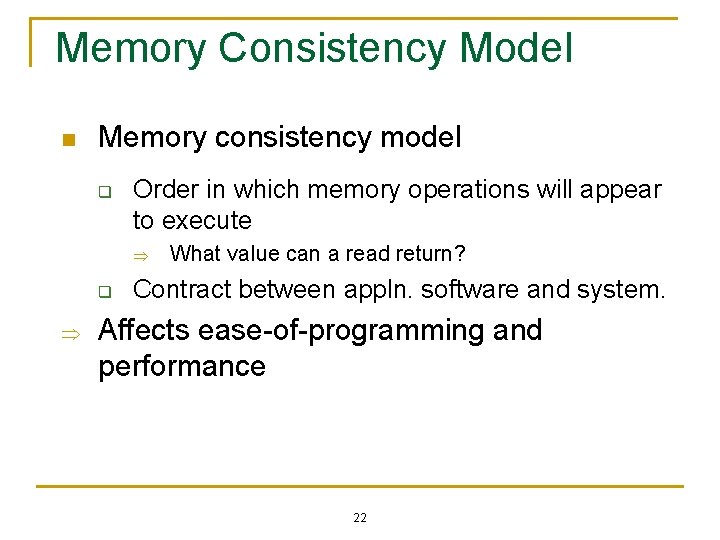

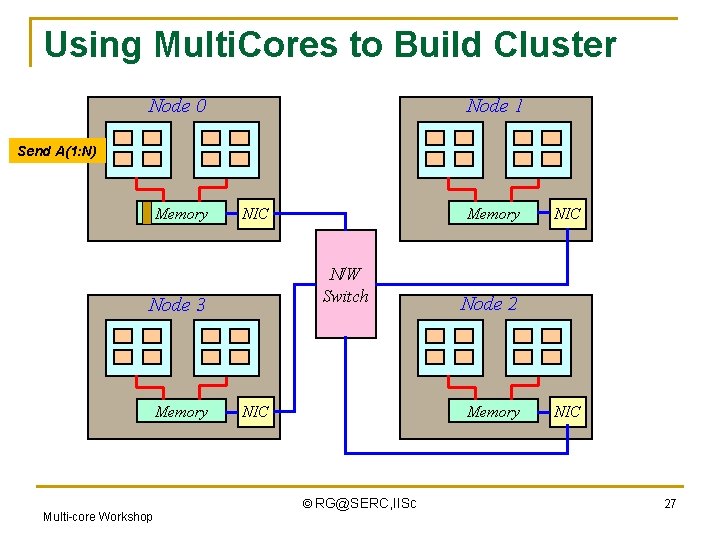

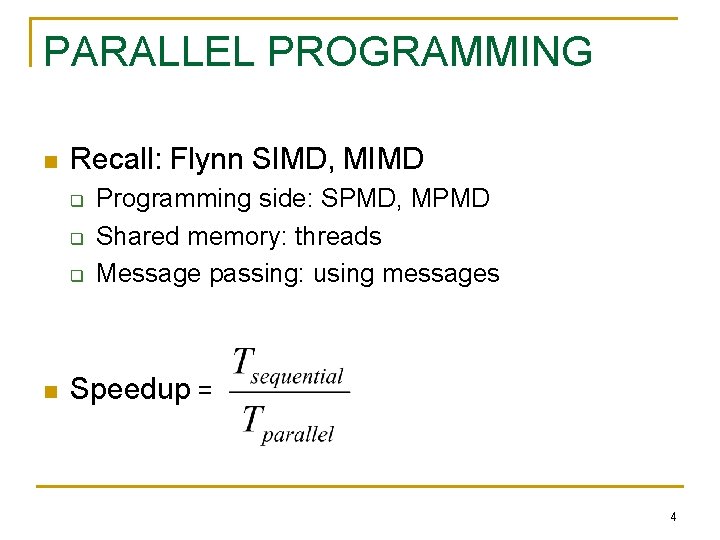

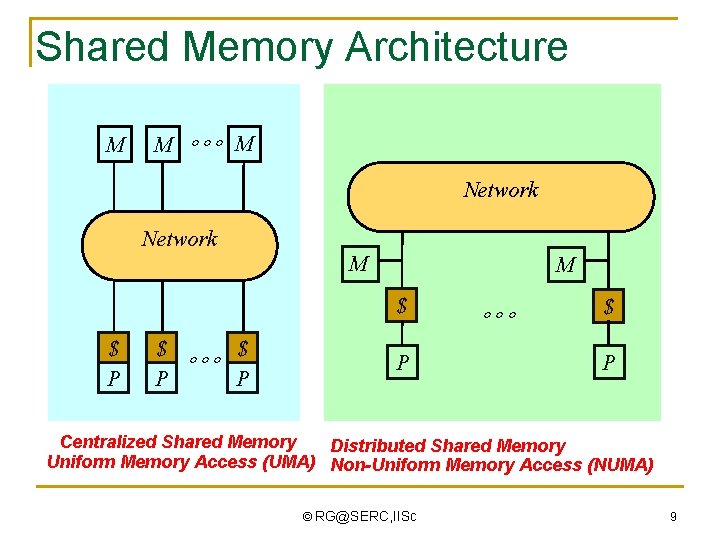

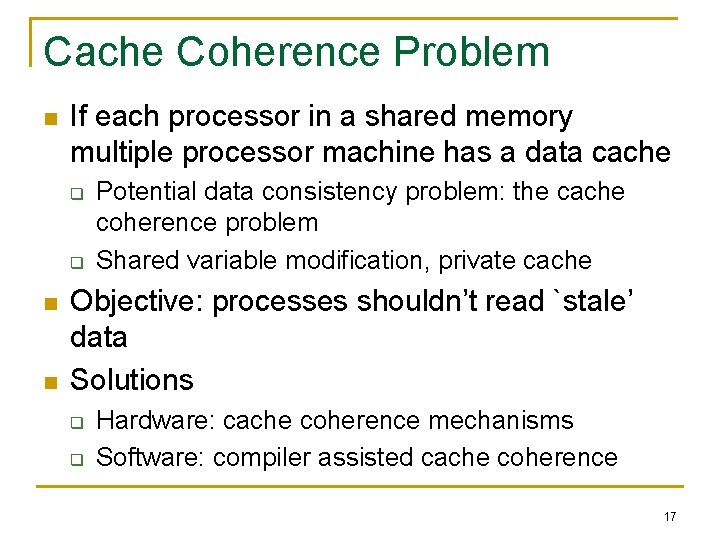

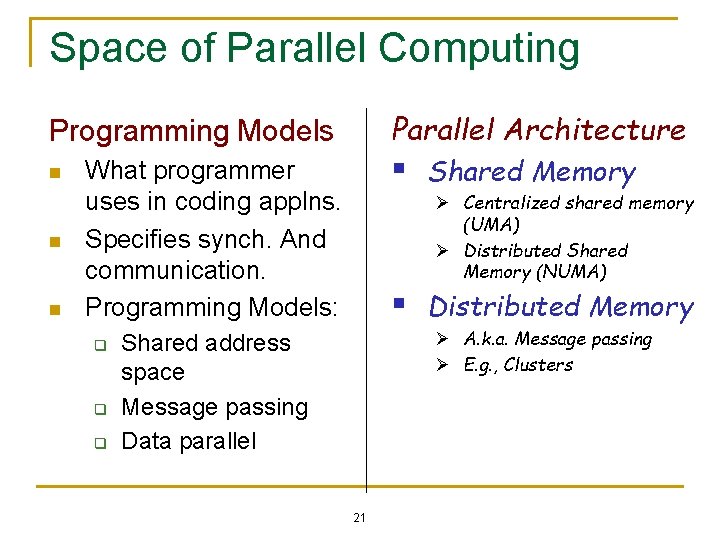

How Much Speedup is Possible?
- Let $s$ be the fraction of the sequential execution time of a given program that cannot be parallelized
- Assume that the program is parallelized so that the remaining part $(1 - s)$ is perfectly divided to run in parallel across $n$ processors
- $\text{Speedup} = \dfrac{1}{s + \dfrac{1 - s}{n}}$
- The maximum speedup achievable is limited by the sequential fraction of the sequential program: as $n$ grows, the speedup approaches $1/s$
- This is Amdahl's Law
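The speedup bound can be checked numerically. The following is a minimal sketch, not part of the original slides; the function names `amdahl_speedup` and `max_speedup` are illustrative, and `s` and `n` follow the definitions on the slide.

```python
def amdahl_speedup(s, n):
    """Speedup on n processors when a fraction s of the sequential time is serial."""
    if not 0.0 <= s <= 1.0:
        raise ValueError("s must be in [0, 1]")
    if n < 1:
        raise ValueError("n must be >= 1")
    # Serial part takes s; the parallel part (1 - s) is split evenly over n.
    return 1.0 / (s + (1.0 - s) / n)


def max_speedup(s):
    """Limit of the speedup as n grows without bound, i.e. 1/s."""
    return float("inf") if s == 0 else 1.0 / s


if __name__ == "__main__":
    # With a 10% sequential fraction, adding processors gives diminishing returns.
    for n in (1, 2, 8, 64, 1024):
        print(n, round(amdahl_speedup(0.1, n), 2))
    print("limit:", max_speedup(0.1))
```

Even a small sequential fraction caps the benefit: in this sketch the speedup for `s = 0.1` never exceeds 10, however many processors are used.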

![Understanding Amdahls Law concurrency Compute min Ai j Bi i 1 Understanding Amdahl’s Law concurrency Compute : min ( |A[i, j]- B[i, i]| ) 1](https://slidetodoc.com/presentation_image_h2/82e56cf493305d26fde85d8d5f733741/image-6.jpg)

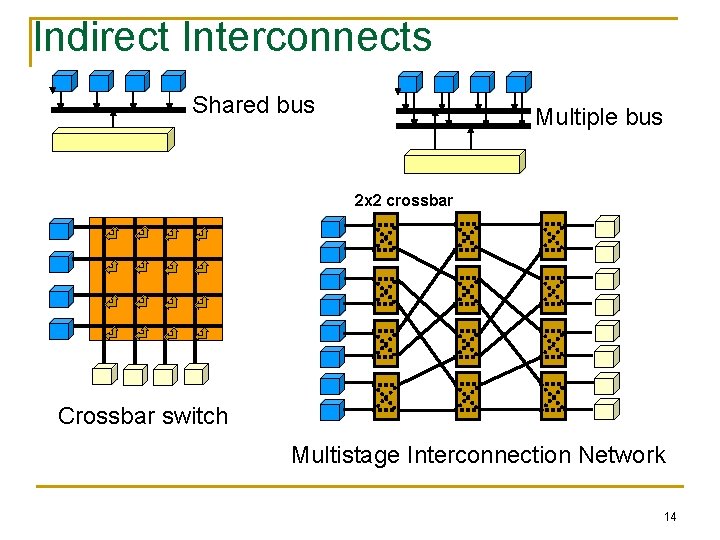

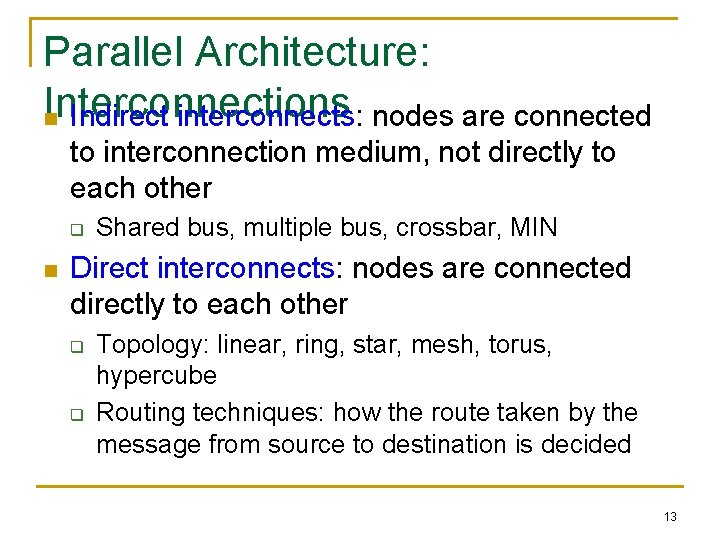

Understanding Amdahl's Law
- Compute: min(|A[i, j] - B[i, i]|)
- [Figure: concurrency versus time for three versions: (a) Serial, (b) Naïve Parallel, (c) Parallel. Labels in the figure: n², n²/p, p, 1]

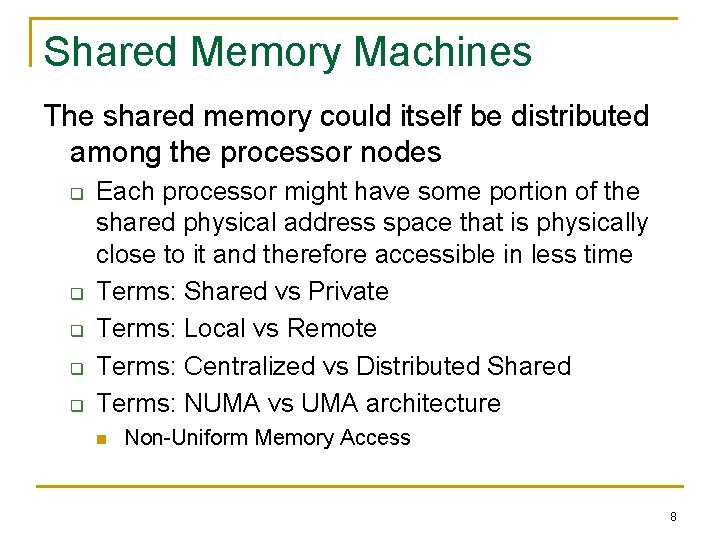

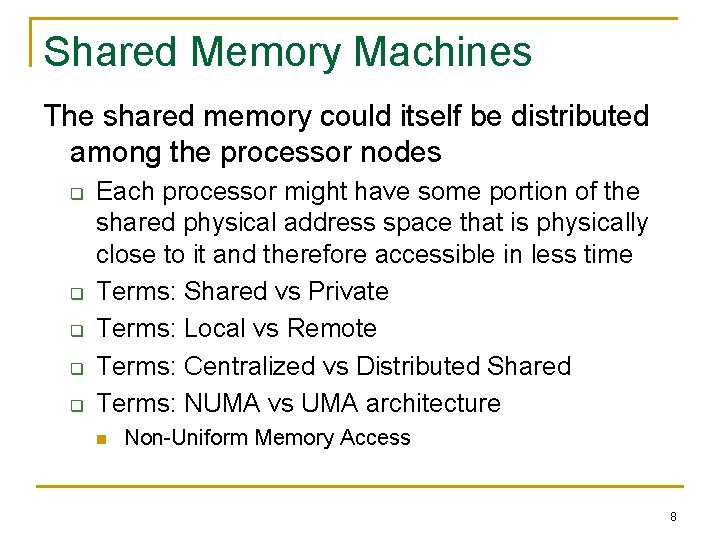

Classification 2: Shared Memory vs Message Passing
- Shared memory machine: the n processors share a physical address space
  - Communication can be done through this shared memory
- The alternative is sometimes referred to as a message passing machine or a distributed memory machine
- [Figure: processors (P) each paired with its own memory (M), and processors (P) connected through an interconnect to main memory]
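The two communication styles can be contrasted in a few lines. This is a rough sketch under stated assumptions rather than anything from the slides: both versions run as threads inside one Python process, and a `queue.Queue` merely stands in for the message channel of a real distributed memory machine. The function names `sum_shared` and `sum_messages` are hypothetical.

```python
import queue
import threading


def _run(target, chunks):
    """Start one thread per chunk and wait for all of them to finish."""
    threads = [threading.Thread(target=target, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    for t in threads:
        t.join()


def sum_shared(data, workers=4):
    """Shared-memory style: workers update one shared variable under a lock."""
    total = [0]
    lock = threading.Lock()

    def work(chunk):
        partial = sum(chunk)
        with lock:              # communication happens through shared memory
            total[0] += partial

    _run(work, [data[i::workers] for i in range(workers)])
    return total[0]


def sum_messages(data, workers=4):
    """Message-passing style: workers share no variables and send partial sums."""
    mailbox = queue.Queue()     # stands in for the interconnect

    def work(chunk):
        mailbox.put(sum(chunk))  # "send" the result as a message

    _run(work, [data[i::workers] for i in range(workers)])
    return sum(mailbox.get() for _ in range(workers))  # "receive" each message


if __name__ == "__main__":
    values = list(range(100))
    print(sum_shared(values), sum_messages(values))
```

In the first version the lock is needed because every worker touches the same location; in the second, each worker only touches its own data and the channel.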

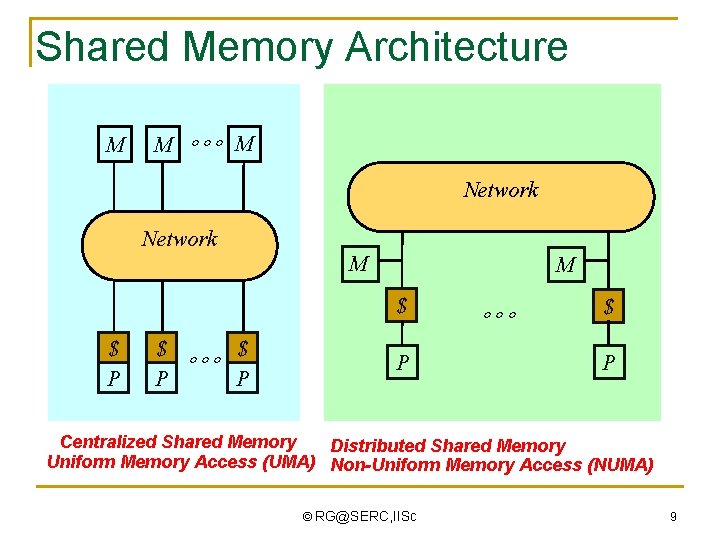

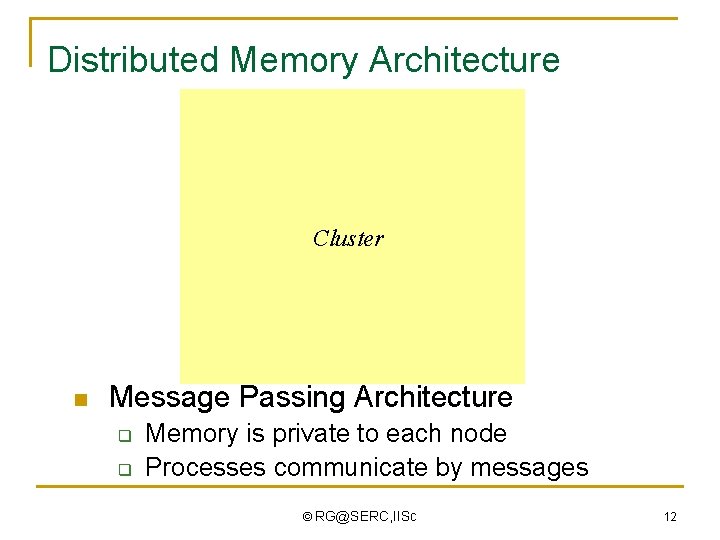

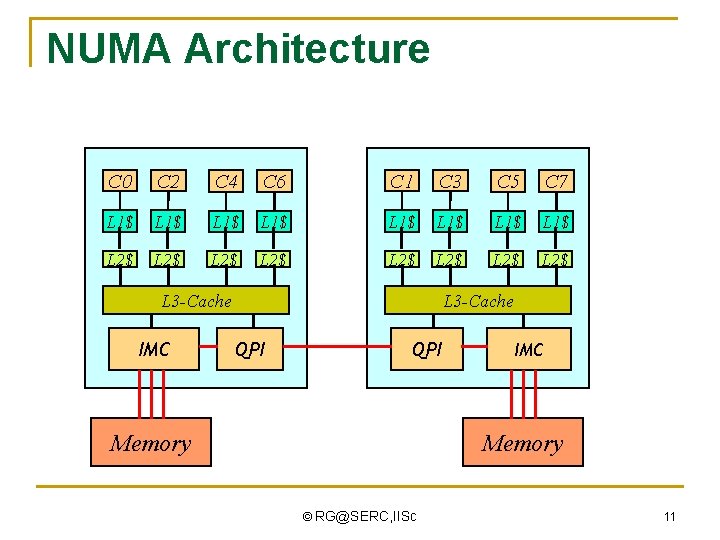

Shared Memory Machines
- The shared memory could itself be distributed among the processor nodes
  - Each processor might have some portion of the shared physical address space that is physically close to it and therefore accessible in less time
- Terms: shared vs private
- Terms: local vs remote
- Terms: centralized vs distributed shared
- Terms: NUMA vs UMA architecture
  - NUMA: Non-Uniform Memory Access

Shared Memory Architecture
- Centralized shared memory: Uniform Memory Access (UMA)
- Distributed shared memory: Non-Uniform Memory Access (NUMA)
- [Figure: processors (P) with caches ($) and memory modules (M) connected by a network, shown in centralized and distributed arrangements]

© RG@SERC, IISc

MultiCore Structure
- [Figure: eight cores C0 to C7, L1 caches (L1$), an L2 cache, and memory]

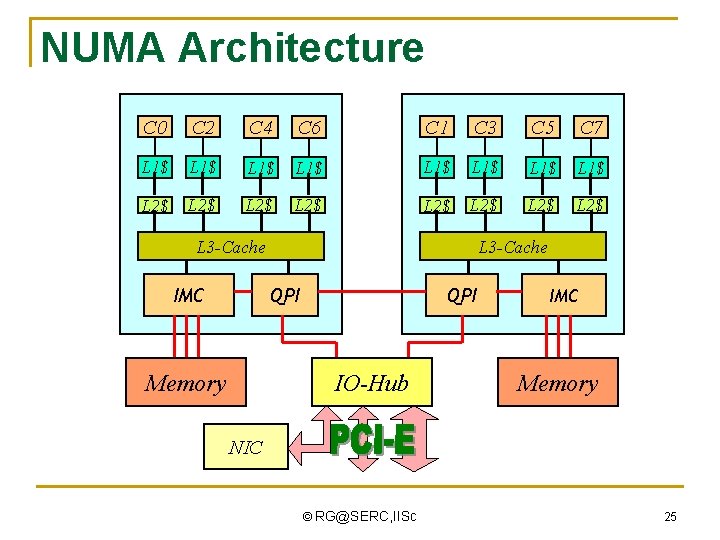

NUMA Architecture
- [Figure: eight cores C0 to C7 with L1 and L2 caches (L1$, L2$). Other labels in the figure: two L3 caches, two IMC blocks each attached to a memory, and a QPI link]

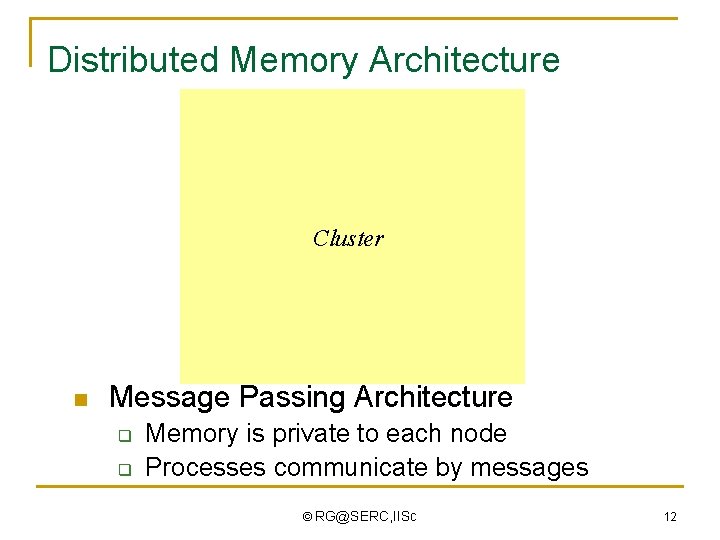

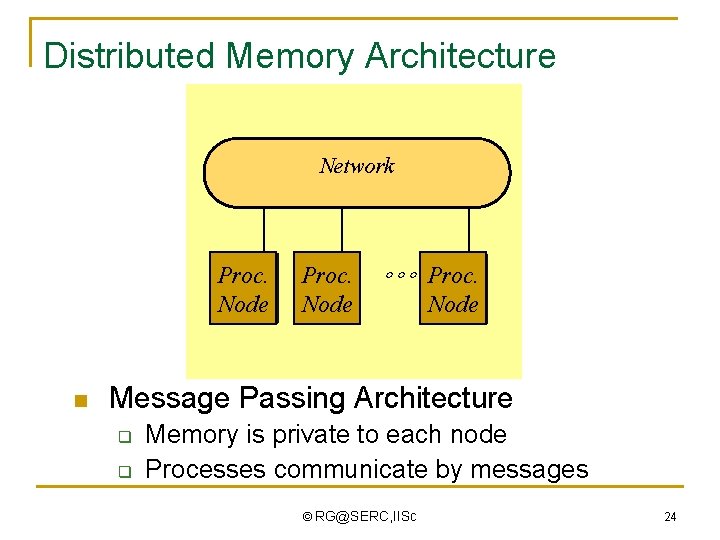

Distributed Memory Architecture
- Message passing architecture
  - Memory is private to each node
  - Processes communicate by messages
- [Figure: a cluster of nodes, each with a processor (P), cache ($), and memory (M), connected by a network]

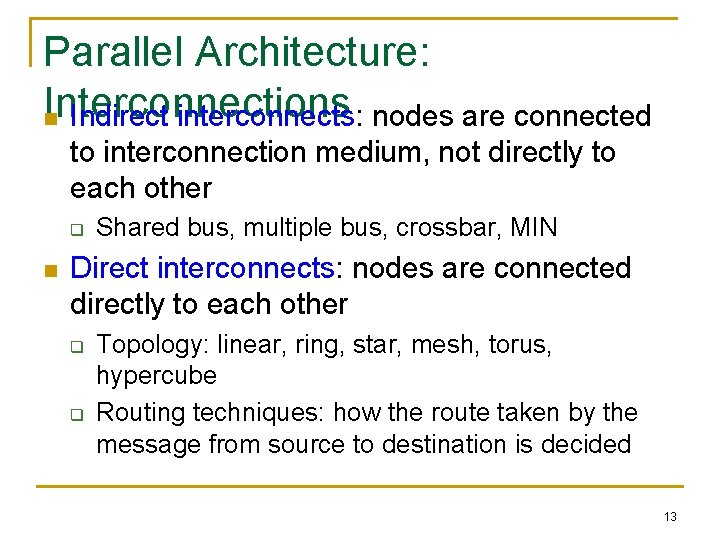

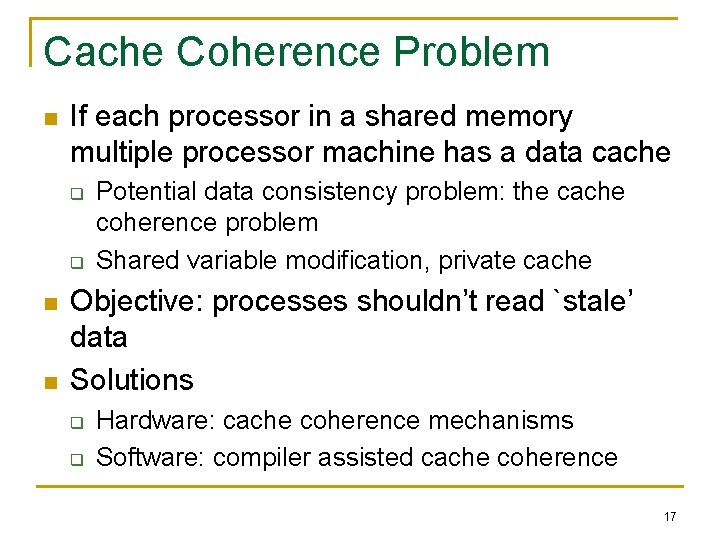

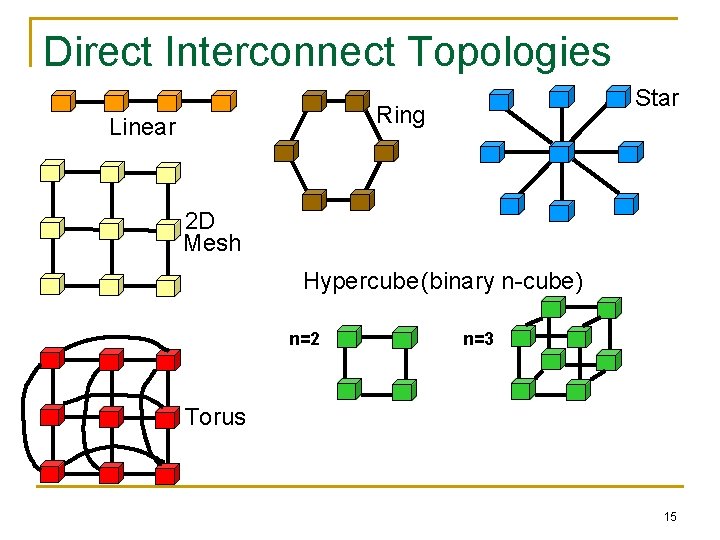

Parallel Architecture: Interconnections
- Indirect interconnects: nodes are connected to an interconnection medium, not directly to each other
  - Shared bus, multiple bus, crossbar, MIN (multistage interconnection network)
- Direct interconnects: nodes are connected directly to each other
  - Topology: linear, ring, star, mesh, torus, hypercube
  - Routing techniques: how the route taken by a message from source to destination is decided
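As one concrete case of a direct topology and a routing technique, the sketch below models a binary n-cube (hypercube), where nodes are numbered 0 to 2^n - 1 and two nodes are linked when their numbers differ in exactly one bit. The routing shown is dimension-order routing, chosen here only as an illustration; the slides do not say which routing scheme the course uses, and the function names are hypothetical.

```python
def hypercube_neighbors(node, n):
    """Nodes directly linked to `node` in a binary n-cube: flip one bit each."""
    return [node ^ (1 << d) for d in range(n)]


def hypercube_route(src, dst, n):
    """Dimension-order routing: correct differing bits from dimension 0 upward."""
    path = [src]
    cur = src
    for d in range(n):
        if (cur ^ dst) & (1 << d):   # bit d differs, so cross dimension d
            cur ^= 1 << d
            path.append(cur)
    return path


def ring_neighbors(node, size):
    """For comparison: the two neighbors of `node` in a ring of `size` nodes."""
    return [(node - 1) % size, (node + 1) % size]


if __name__ == "__main__":
    print(hypercube_neighbors(0, 3))          # neighbors of node 000 in a 3-cube
    print(hypercube_route(0b000, 0b101, 3))   # route from 000 to 101
    print(ring_neighbors(0, 8))
```

The number of hops on a hypercube route equals the number of bit positions in which source and destination differ, so no route in an n-cube is longer than n hops.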

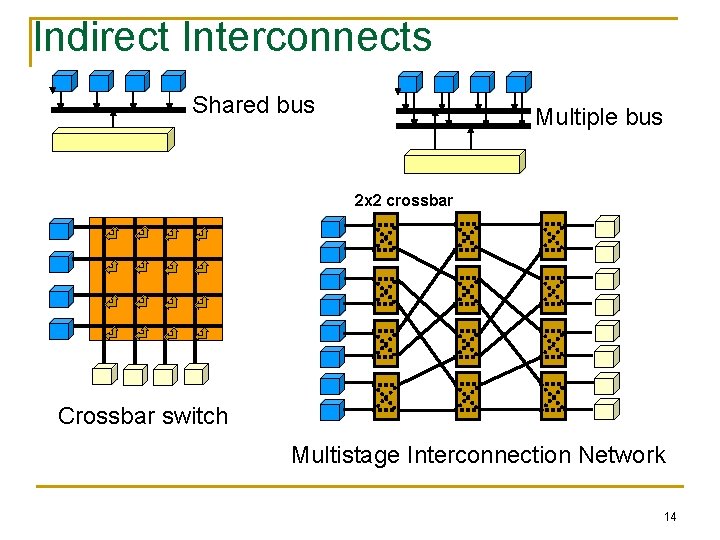

Indirect Interconnects
- [Figure: shared bus, multiple bus, crossbar switch, 2 x 2 crossbar, multistage interconnection network]

Direct Interconnect Topologies
- [Figure: star, ring, linear, 2D mesh, torus, and hypercube (binary n-cube) for n = 2 and n = 3]

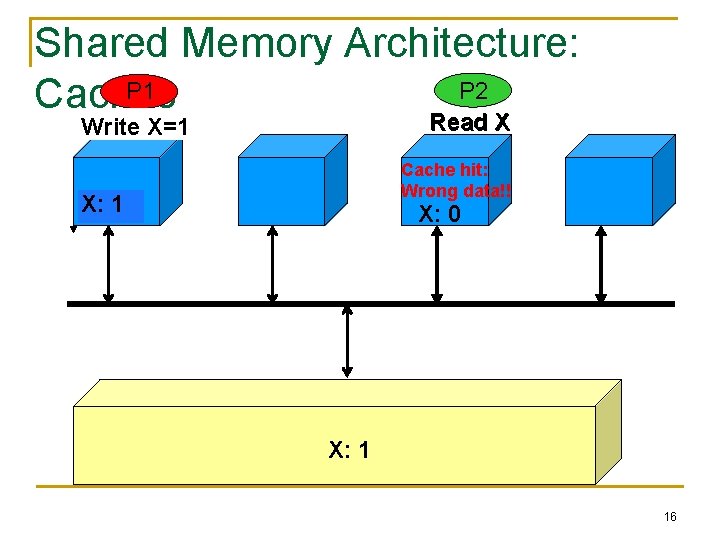

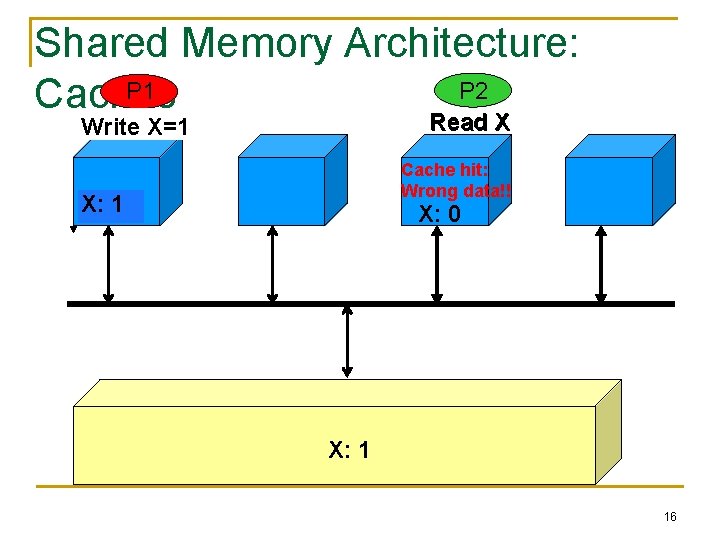

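Shared Memory Architecture: Caches
- [Figure: P1 and P2 each read X and hold the value 0 in their caches; one processor then writes X = 1; a later Read X on the other processor is a cache hit on its old copy. Cache hit: wrong data!]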

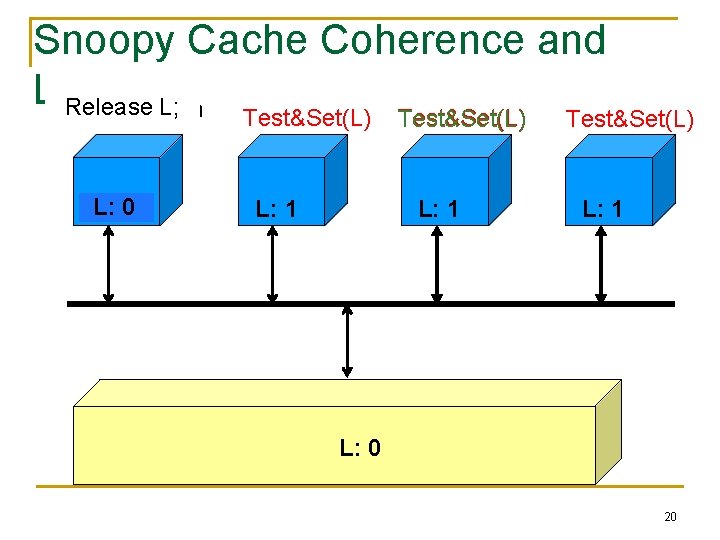

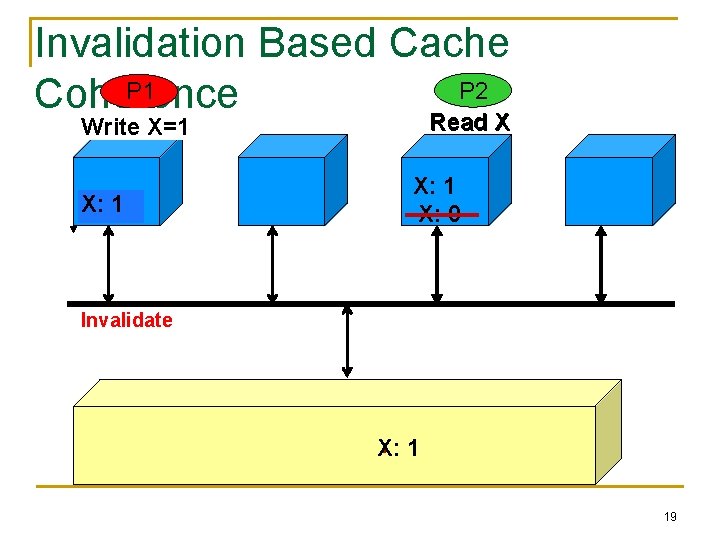

Cache Coherence Problem
- If each processor in a shared memory multiprocessor machine has a data cache
  - Potential data consistency problem: the cache coherence problem
  - Shared variable modification, private cache
- Objective: processes shouldn't read 'stale' data
- Solutions
  - Hardware: cache coherence mechanisms
  - Software: compiler-assisted cache coherence

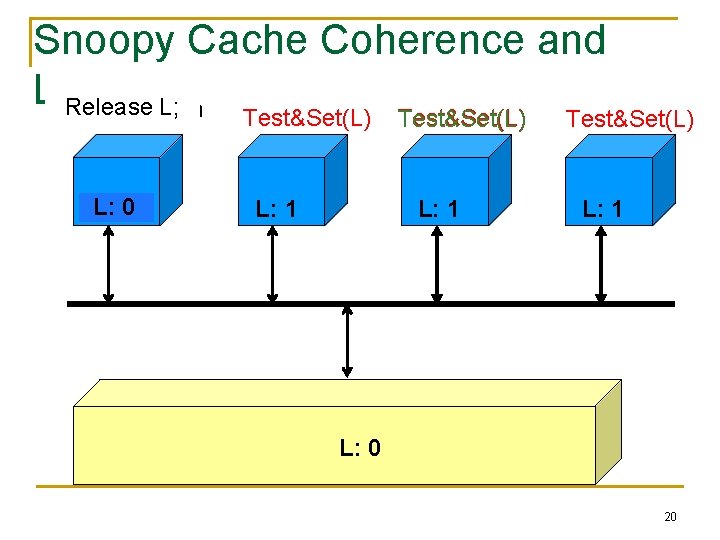

Example: Write Once Protocol
- Assumption: shared bus interconnect where all cache controllers monitor all bus activity
  - Called snooping
- There is only one operation through the bus at a time; cache controllers can be built to take corrective action and enforce coherence in caches
  - Corrective action could involve updating or invalidating a cache block
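The snooping idea can be sketched as a toy simulation. This is a deliberately simplified write-through, write-invalidate scheme and not the Write Once protocol itself: it keeps no per-block state, and the `Bus` and `Cache` classes and their methods are hypothetical names introduced for illustration.

```python
class Bus:
    """Shared bus: carries one operation at a time and is visible to every cache."""

    def __init__(self, memory=None):
        self.memory = dict(memory or {})
        self.caches = []

    def broadcast_write(self, writer, addr, value):
        self.memory[addr] = value          # write-through to main memory
        for cache in self.caches:
            if cache is not writer:
                cache.snoop_write(addr)    # every other controller sees the write


class Cache:
    """Private cache whose controller snoops the bus."""

    def __init__(self, name, bus):
        self.name = name
        self.bus = bus
        self.lines = {}                    # addr -> value; absent means invalid
        bus.caches.append(self)

    def read(self, addr):
        if addr not in self.lines:         # miss: fetch the block from memory
            self.lines[addr] = self.bus.memory.get(addr, 0)
        return self.lines[addr]            # hit: served from the private copy

    def write(self, addr, value):
        self.bus.broadcast_write(self, addr, value)
        self.lines[addr] = value

    def snoop_write(self, addr):
        self.lines.pop(addr, None)         # corrective action: invalidate


if __name__ == "__main__":
    bus = Bus({"X": 0})
    p1, p2 = Cache("P1", bus), Cache("P2", bus)
    p1.read("X"), p2.read("X")             # both caches now hold X = 0
    p1.write("X", 1)                       # P2's copy is invalidated
    print(p2.read("X"))                    # miss, refetched from memory
```

Replacing the `pop` in `snoop_write` with an assignment of the new value would give the update-based alternative mentioned on the slide.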

Invalidation Based Cache Coherence
- [Figure: P1 and P2 both read X and cache X = 0; a write of X = 1 by one processor sends an Invalidate for the other processor's copy, so its next Read X obtains X = 1]

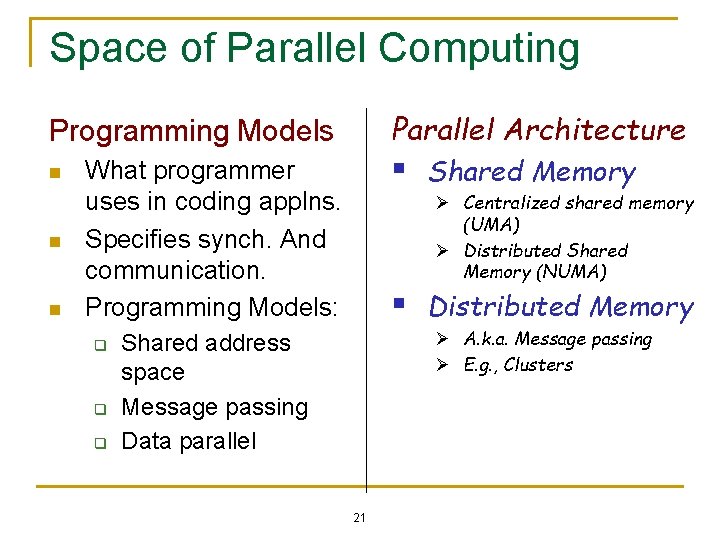

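Snoopy Cache Coherence and Locks
- [Figure: two processors execute Test&Set(L) on a lock variable L; one holds the lock and is in its critical section, then executes Release L. The cached values of L change between 0 and 1 as these operations occur]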
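The lock in the figure can be approximated in software. The sketch below is an assumption-laden illustration: Python exposes no hardware Test&Set instruction, so an internal `threading.Lock` stands in for the atomicity that hardware would provide, and the class and function names are hypothetical.

```python
import threading
import time


class TestAndSetLock:
    """Spin lock built on a simulated Test&Set(L)."""

    def __init__(self):
        self.L = 0                        # 0 = free, 1 = held
        self._atomic = threading.Lock()   # stands in for hardware atomicity

    def test_and_set(self):
        """Atomically set L to 1 and return its previous value."""
        with self._atomic:
            old = self.L
            self.L = 1
            return old

    def acquire(self):
        while self.test_and_set() == 1:   # lock was already held: keep trying
            time.sleep(0)                 # yield so the holder can make progress

    def release(self):
        self.L = 0                        # Release L


def count_with_lock(threads=4, increments=500):
    """Each thread increments a shared counter inside the critical section."""
    lock = TestAndSetLock()
    counter = [0]

    def work():
        for _ in range(increments):
            lock.acquire()
            counter[0] += 1               # critical section
            lock.release()

    pool = [threading.Thread(target=work) for _ in range(threads)]
    for t in pool:
        t.start()
    for t in pool:
        t.join()
    return counter[0]


if __name__ == "__main__":
    print(count_with_lock())
```

On real hardware every failed Test&Set is a write to L, so spinning processors repeatedly invalidate each other's cached copy of the lock variable; that bus traffic is the interaction with snoopy coherence that the slide title points to.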

Space of Parallel Computing
- Parallel Architecture
  - Shared memory
    - Centralized shared memory (UMA)
    - Distributed shared memory (NUMA)
  - Distributed memory
    - A.k.a. message passing
    - E.g., clusters
- Programming Models
  - What the programmer uses in coding applications
  - Specifies synchronization and communication
  - Programming models:
    - Shared address space
    - Message passing
    - Data parallel

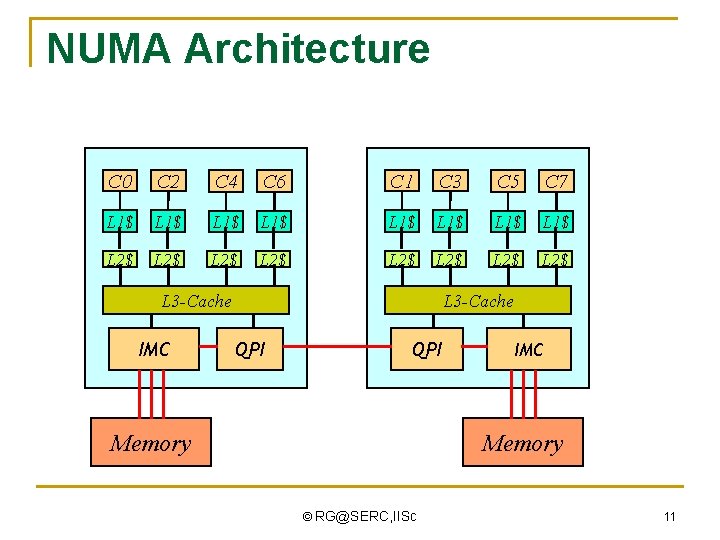

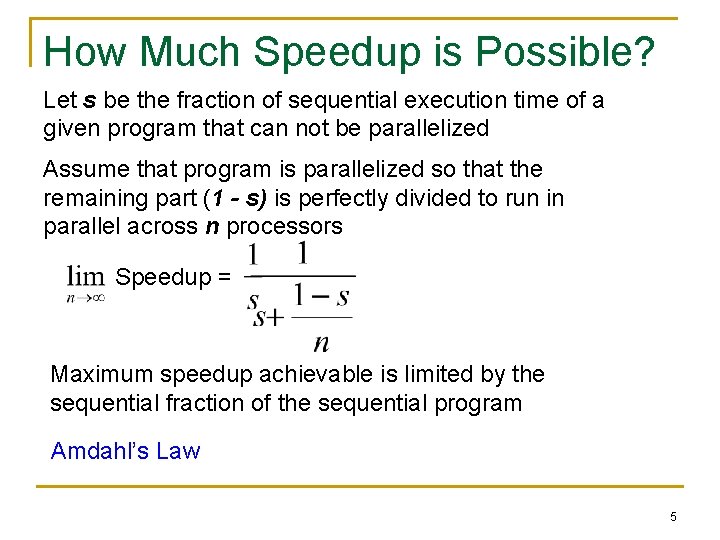

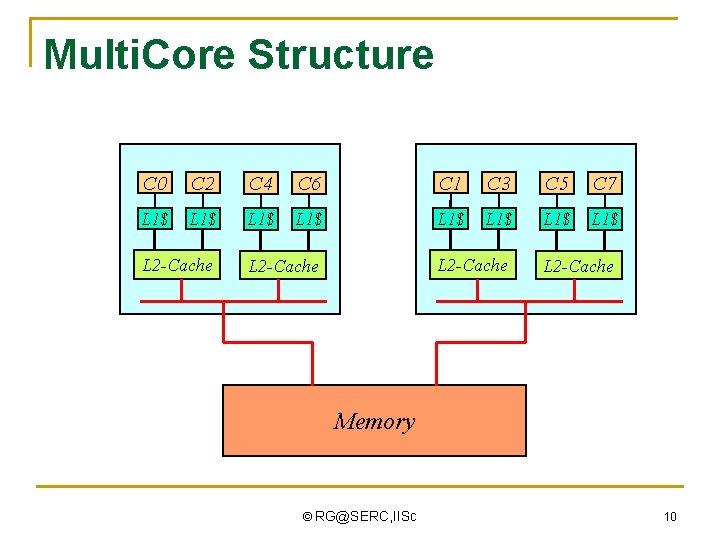

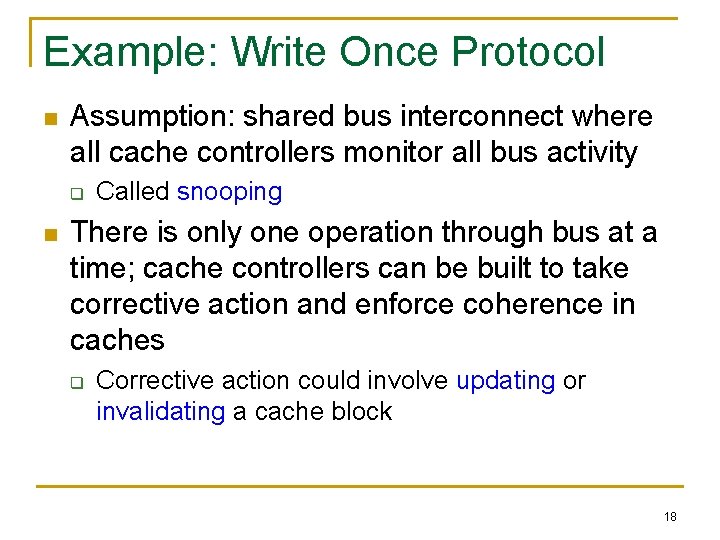

Memory Consistency Model
- Memory consistency model
  - Order in which memory operations will appear to execute
    - What value can a read return?
  - Contract between application software and system
    - Affects ease of programming and performance

![Implicit Memory Model n Sequential consistency SC Lamport q Result of an execution appears Implicit Memory Model n Sequential consistency (SC) [Lamport] q Result of an execution appears](https://slidetodoc.com/presentation_image_h2/82e56cf493305d26fde85d8d5f733741/image-23.jpg)

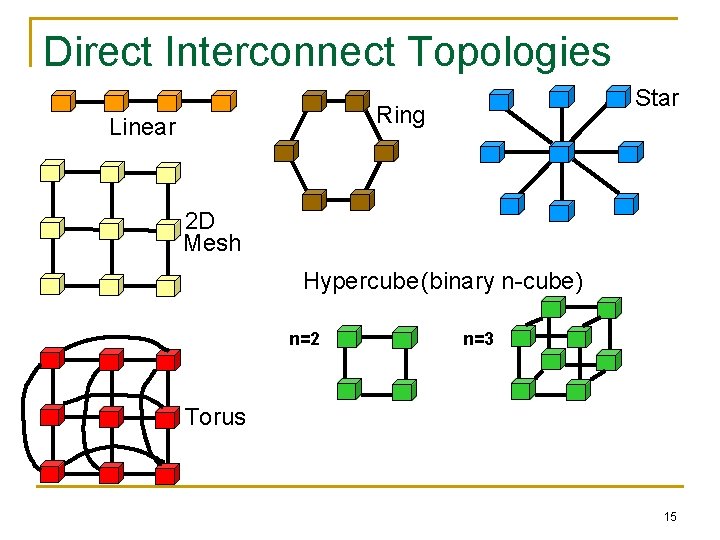

Implicit Memory Model
- Sequential consistency (SC) [Lamport]
  - Result of an execution appears as if
    - Operations from different processors executed in some sequential (interleaved) order
    - Memory operations of each process in program order
- [Figure: processors P1, P2, P3, ..., Pn connected to a single MEMORY]
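The definition can be made concrete by enumerating every interleaving of a small two-processor program and collecting the results a read may return. The example program below (each processor writes one variable, then reads the other) is a standard illustration chosen here, not taken from the slides; the names `P1`, `P2`, `interleavings`, `run`, and `sc_outcomes` are hypothetical.

```python
from itertools import combinations

# P1: x = 1; r1 = y        P2: y = 1; r2 = x        (x and y start at 0)
P1 = [("write", "x", 1), ("read", "y", "r1")]
P2 = [("write", "y", 1), ("read", "x", "r2")]


def interleavings(a, b):
    """Yield every merge of a and b that keeps each program's own order."""
    n = len(a) + len(b)
    for slots in combinations(range(n), len(a)):
        chosen = set(slots)                 # positions taken by operations of a
        ia, ib = iter(a), iter(b)
        yield [next(ia) if i in chosen else next(ib) for i in range(n)]


def run(schedule):
    """Execute one interleaved schedule against a single shared memory."""
    memory = {"x": 0, "y": 0}
    regs = {}
    for op, var, arg in schedule:
        if op == "write":
            memory[var] = arg
        else:
            regs[arg] = memory[var]
    return regs["r1"], regs["r2"]


def sc_outcomes():
    """All (r1, r2) results that sequential consistency allows."""
    return {run(s) for s in interleavings(P1, P2)}


if __name__ == "__main__":
    print(sorted(sc_outcomes()))
```

The result (0, 0) never appears: in any interleaving that respects program order, at least one write precedes the other processor's read. A system that can produce (0, 0) for this program is therefore not sequentially consistent.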

Distributed Memory Architecture
- Message passing architecture
  - Memory is private to each node
  - Processes communicate by messages
- [Figure: processor nodes (Proc. Node), each with a processor (P), cache ($), and memory (M), connected by a network]

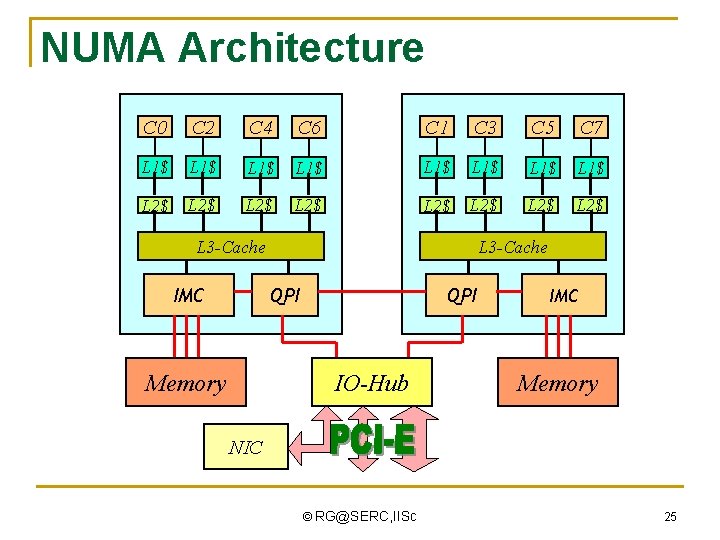

NUMA Architecture
- [Figure: eight cores C0 to C7 with L1 and L2 caches (L1$, L2$). Other labels in the figure: two L3 caches, two IMC blocks each attached to a memory, QPI links, an IO-Hub, and a NIC]

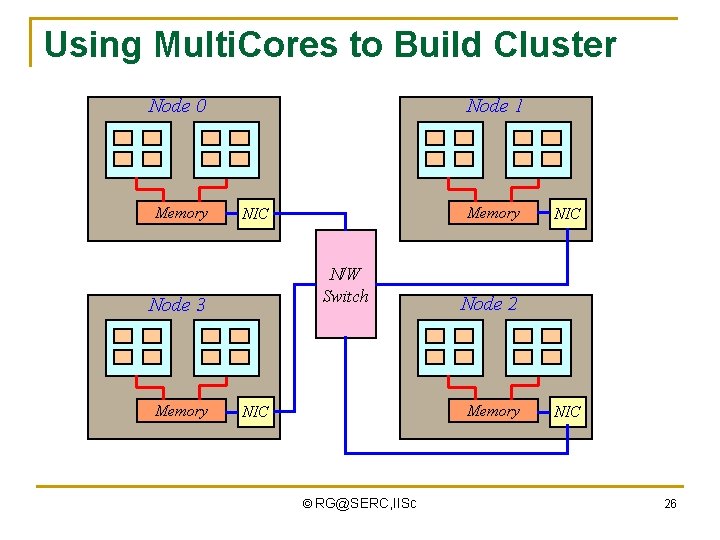

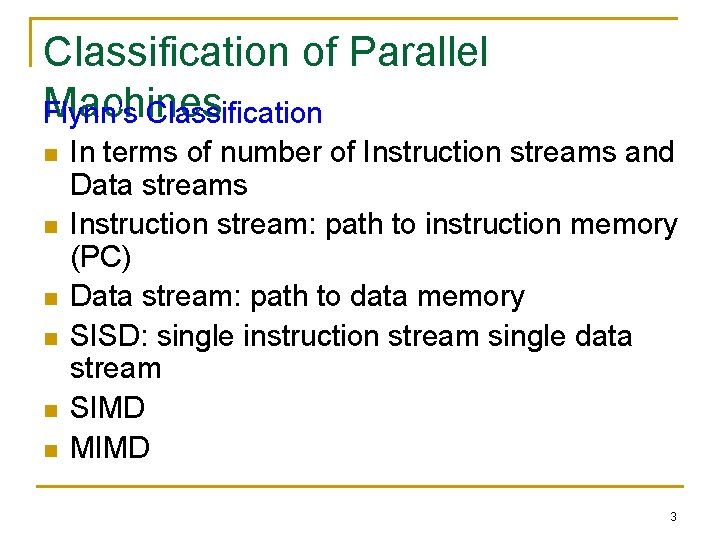

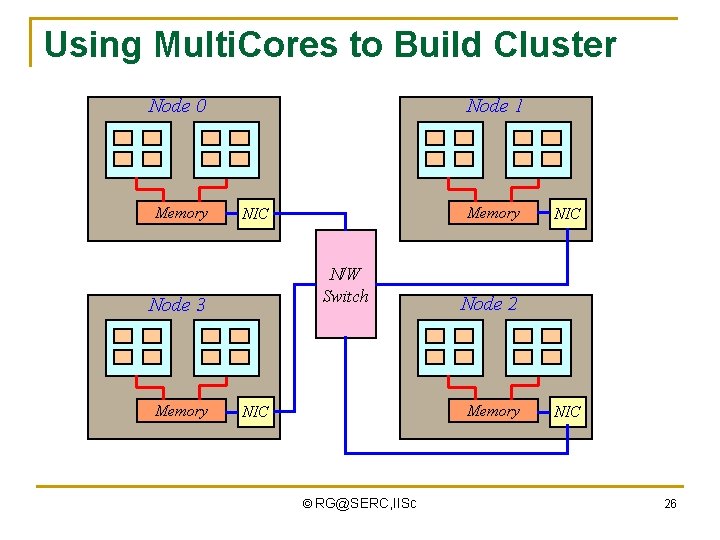

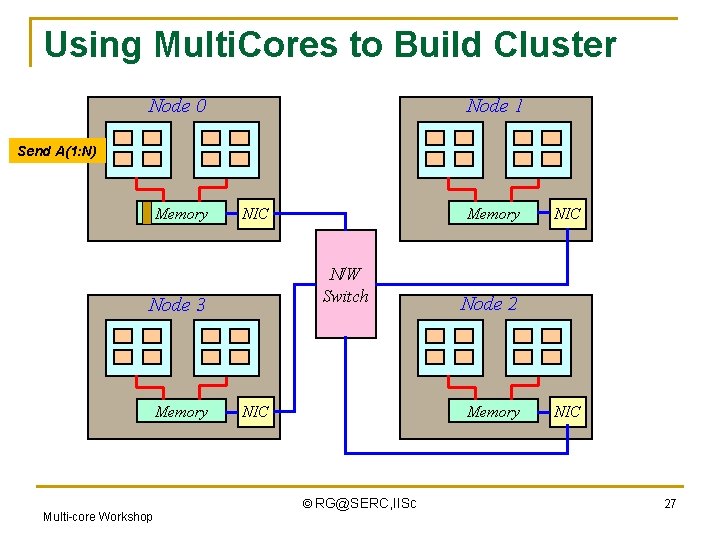

Using MultiCores to Build Cluster
- [Figure: Node 0 to Node 3, each with memory and a NIC, connected through a network (N/W) switch]

Using MultiCores to Build Cluster
- [Figure: Node 0 to Node 3, each with memory and a NIC, connected through a network (N/W) switch, with a Send A(1:N) message shown between nodes]