SE292 High Performance Computing Intro To Concurrent Programming

![Producers-Consumers Problem shared Buffer[0. . N-1] Producer: repeatedly Produce x; if (buffer is full) Producers-Consumers Problem shared Buffer[0. . N-1] Producer: repeatedly Produce x; if (buffer is full)](https://slidetodoc.com/presentation_image_h/b4ff141c521be3f882ca71139c171488/image-18.jpg)

- Slides: 48

SE-292 High Performance Computing Intro. To Concurrent Programming & Parallel Architecture Sathish Vadhiyar

Concurrent Programming n n Until now: execution involved one flow of control through program Concurrent programming is about programs with multiple flows of control For example: a program that runs as multiple processes cooperating to achieve a common goal To cooperate, processes must somehow communicate 2

Inter Process Communication 1. Using files (IPC) q q q Parent process creates 2 files before forking child process Child inherits file descriptors from parent, and they share the file pointers Can use one for parent to write and child to read, other for child to write and parent to read OS supports something called a pipe 2. q q Producer writes at one end (write-end) and consumer reads from the other end (read-end) corresponds to 2 file descriptors (int fd[2]) Read from fd[0] accesses data written to fd[1] in FIFO order and vice versa Used with fork - parent process creates a pipe and uses it to communicate with a child process 3

Other IPC Mechanisms Processes could communicate through variables that are shared between them 3. q q q Shared variables, shared memory; other variables are private to a process Special OS support for program to specify objects that are to be in shared regions of address space Posix shared memory – shmget, shmat Processes could communicate by sending and receiving messages to each other 4. q Special OS support for these messages 4

More Ideas on IPC Mechanisms Sometimes processes don’t need to communicate explicit values to cooperate 5. They might just have to synchronize their activities Example: Process 1 reads 2 matrices, Process 2 multiplies them, Process 3 writes the result matrix Process 2 should not start work until Process 1 finishes reading, etc. Called process synchronization Synchronization primitives q q q n Examples: mutex lock, semaphore, barrier 5

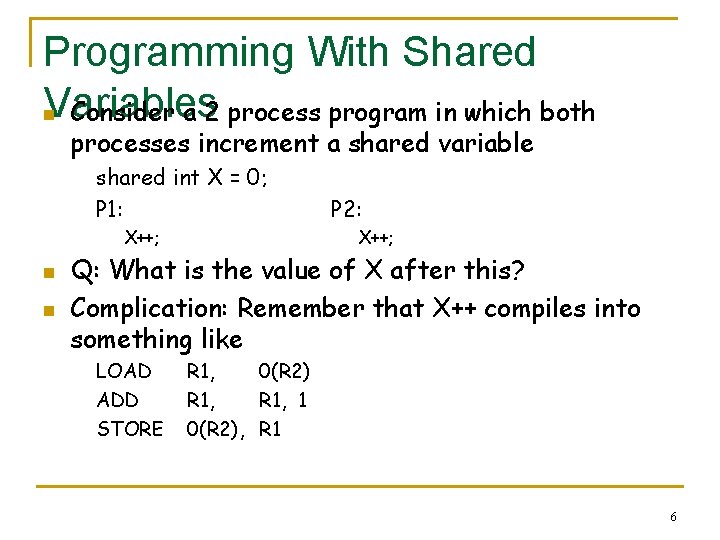

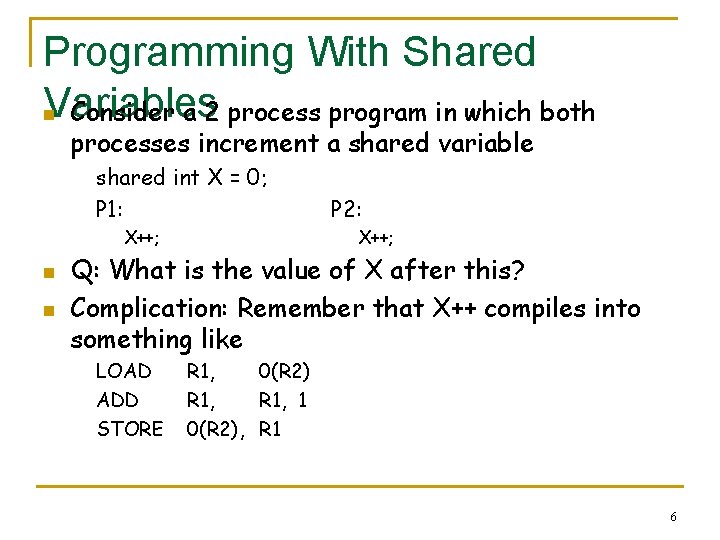

Programming With Shared Variables n Consider a 2 process program in which both processes increment a shared variable shared int X = 0; P 1: X++; n n P 2: X++; Q: What is the value of X after this? Complication: Remember that X++ compiles into something like LOAD ADD STORE R 1, 0(R 2) R 1, 1 0(R 2), R 1 6

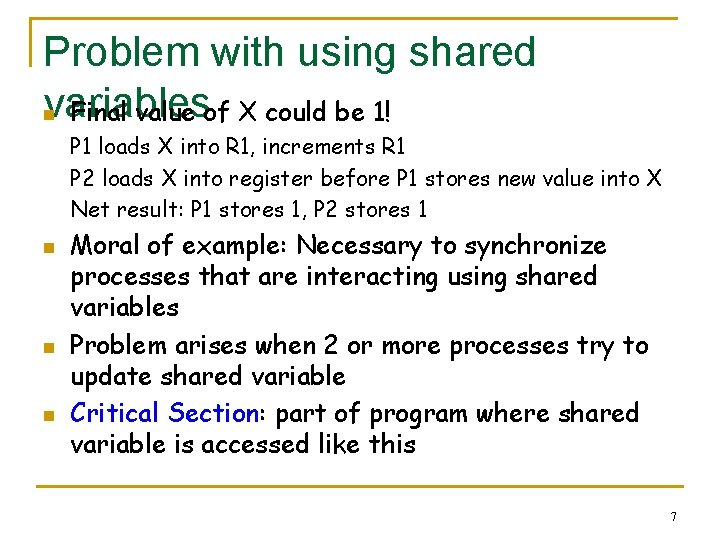

Problem with using shared variables n Final value of X could be 1! P 1 loads X into R 1, increments R 1 P 2 loads X into register before P 1 stores new value into X Net result: P 1 stores 1, P 2 stores 1 n n n Moral of example: Necessary to synchronize processes that are interacting using shared variables Problem arises when 2 or more processes try to update shared variable Critical Section: part of program where shared variable is accessed like this 7

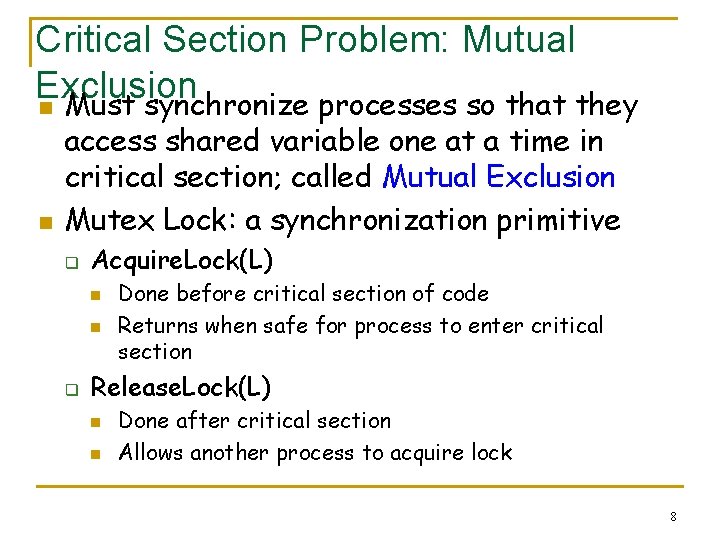

Critical Section Problem: Mutual Exclusion n Must synchronize processes so that they n access shared variable one at a time in critical section; called Mutual Exclusion Mutex Lock: a synchronization primitive q Acquire. Lock(L) n n q Done before critical section of code Returns when safe for process to enter critical section Release. Lock(L) n n Done after critical section Allows another process to acquire lock 8

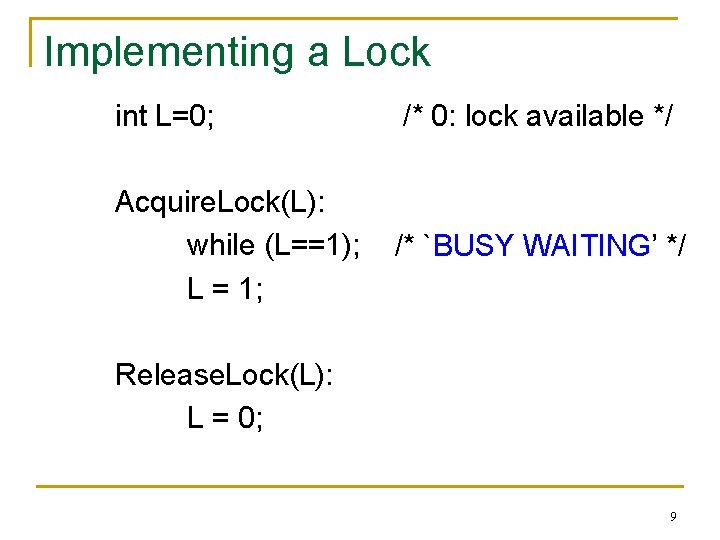

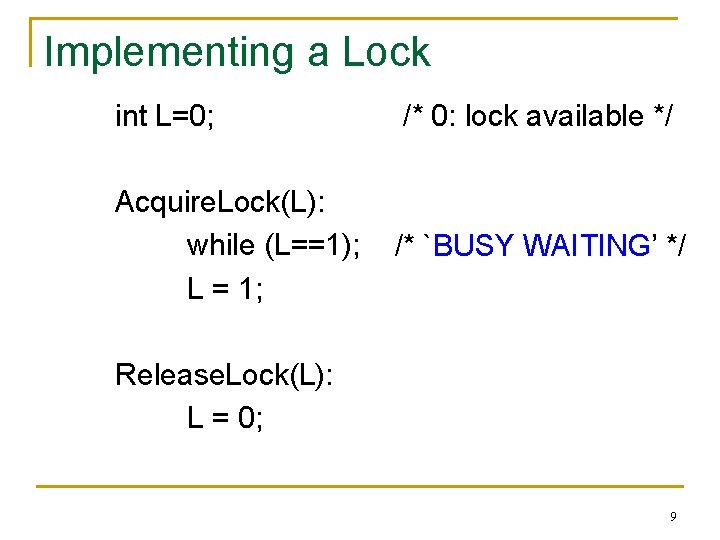

Implementing a Lock int L=0; /* 0: lock available */ Acquire. Lock(L): while (L==1); L = 1; /* `BUSY WAITING’ */ Release. Lock(L): L = 0; 9

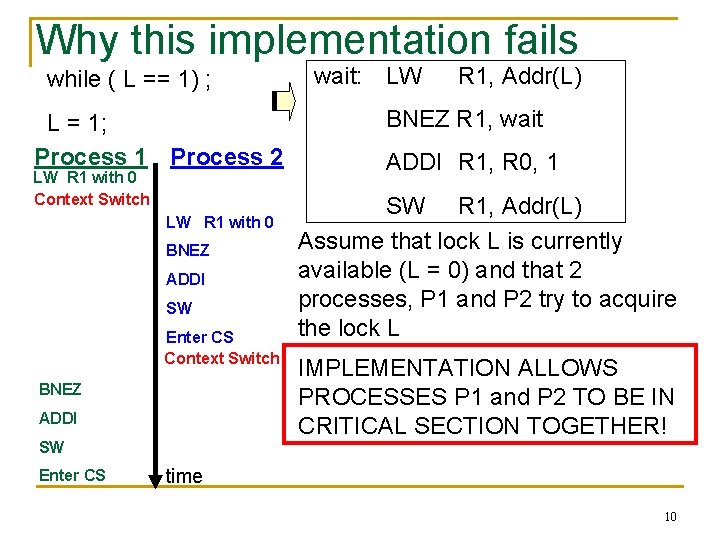

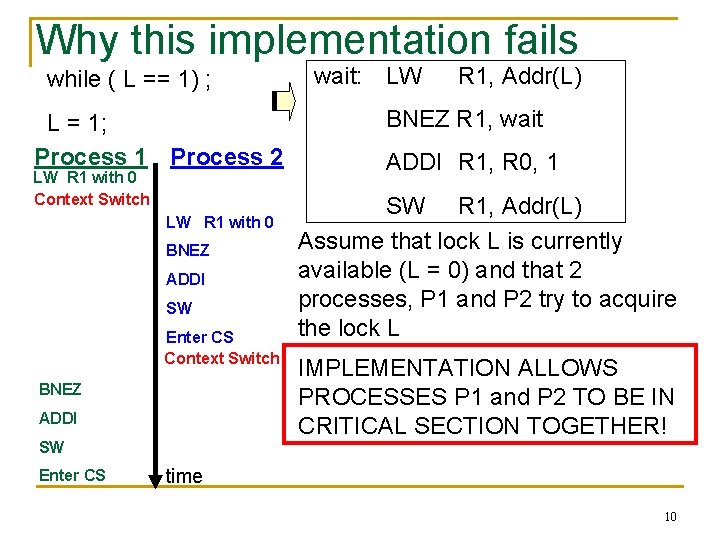

Why this implementation fails while ( L == 1) ; L = 1; Process 1 Process 2 LW R 1 with 0 Context Switch LW R 1 with 0 BNEZ ADDI SW Enter CS Context Switch BNEZ ADDI SW Enter CS wait: LW R 1, Addr(L) BNEZ R 1, wait ADDI R 1, R 0, 1 SW R 1, Addr(L) Assume that lock L is currently available (L = 0) and that 2 processes, P 1 and P 2 try to acquire the lock L IMPLEMENTATION ALLOWS PROCESSES P 1 and P 2 TO BE IN CRITICAL SECTION TOGETHER! time 10

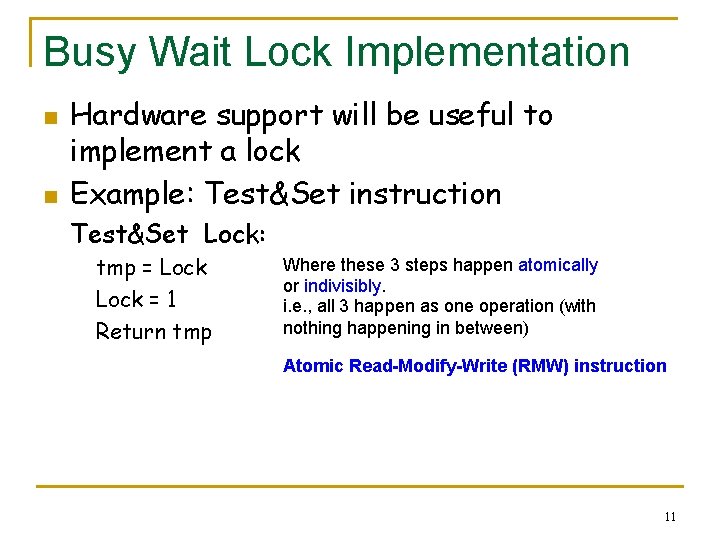

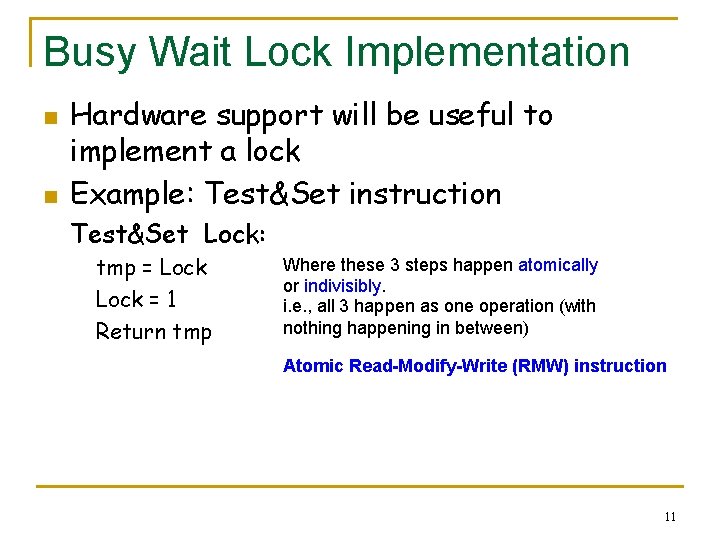

Busy Wait Lock Implementation n n Hardware support will be useful to implement a lock Example: Test&Set instruction Test&Set Lock: tmp = Lock = 1 Return tmp Where these 3 steps happen atomically or indivisibly. i. e. , all 3 happen as one operation (with nothing happening in between) Atomic Read-Modify-Write (RMW) instruction 11

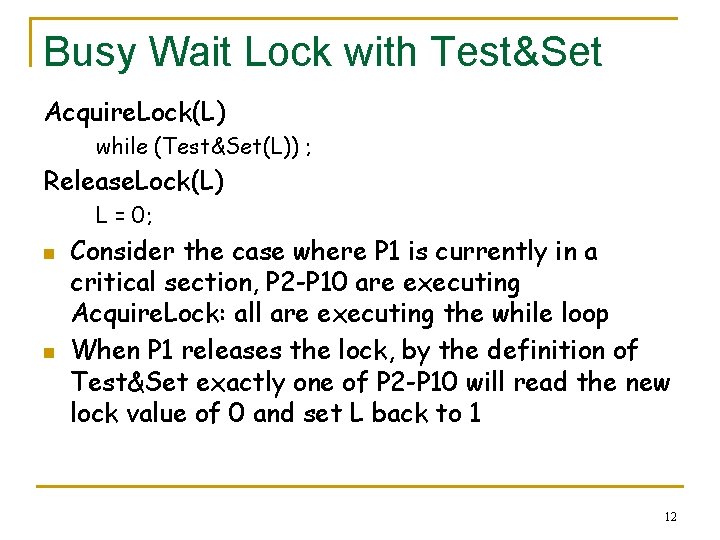

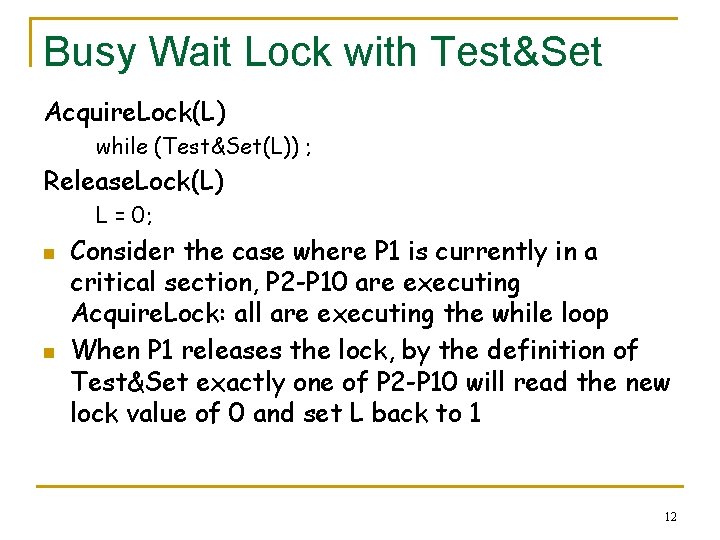

Busy Wait Lock with Test&Set Acquire. Lock(L) while (Test&Set(L)) ; Release. Lock(L) L = 0; n n Consider the case where P 1 is currently in a critical section, P 2 -P 10 are executing Acquire. Lock: all are executing the while loop When P 1 releases the lock, by the definition of Test&Set exactly one of P 2 -P 10 will read the new lock value of 0 and set L back to 1 12

More on Locks n Other names for this kind of lock q q q n Mutex Spin wait lock Busy wait lock Can have locks where instead of busy waiting, an unsuccessful process gets blocked by the operating system 13

Semaphore n n n A more general synchronization mechanism Operations: P (wait) and V (signal) P(S) q q q n if S is nonzero, decrements S and returns Else, suspends the process until S becomes nonzero, when the process is restarted After restarting, decrements S and returns V(S) q q Increments S by 1 If there are processes blocked for S, restarts exactly one of them 14

Critical Section Problem & Semaphore n Semaphore S = 1; n n n Before critical section: P(S) After critical section: V(S) Semaphores can do more than mutex locks q q Initialize S to 10 and 10 processes will be allowed to proceed P 1: read matrices; P 2: multiply; P 3: write product Semaphores S 1=S 2=0; End of P 1: V(S 1), beginning of P 2: P(S 1) etc 15

Deadlock Consider the following process: P 1: lock (L); wait(L); n n n P 1 is waiting for something (release of lock that it is holding) that will never happen Simple case of a general problem called deadlock Cycle of processes waiting for resources held by others while holding resources needed by others 16

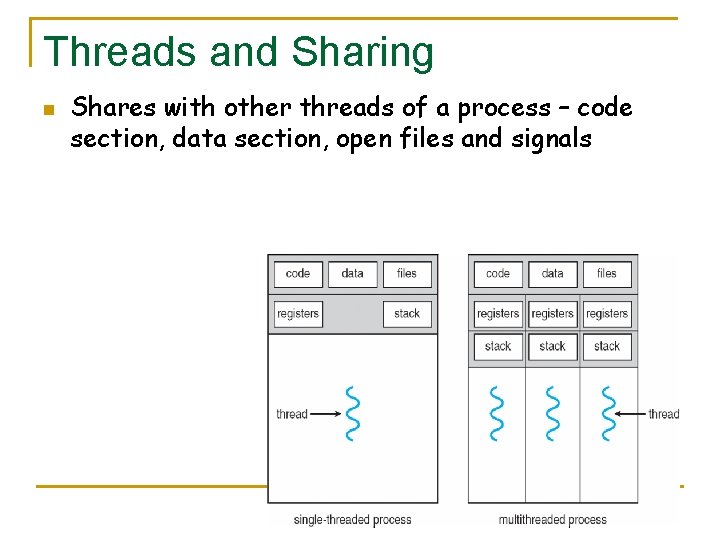

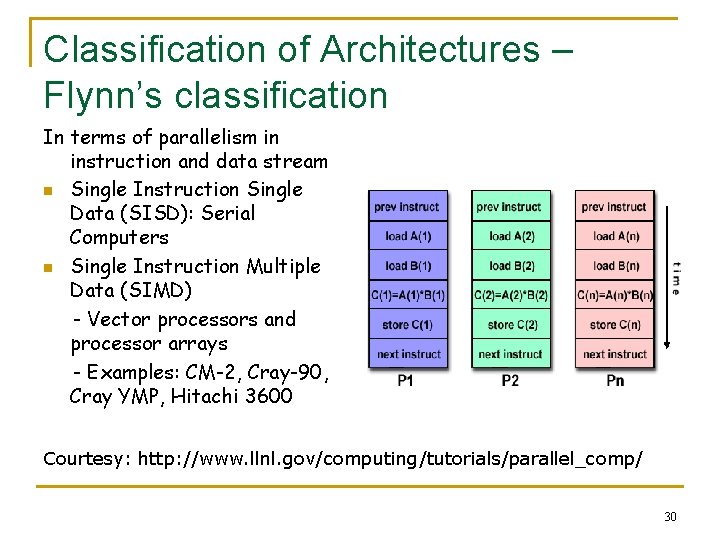

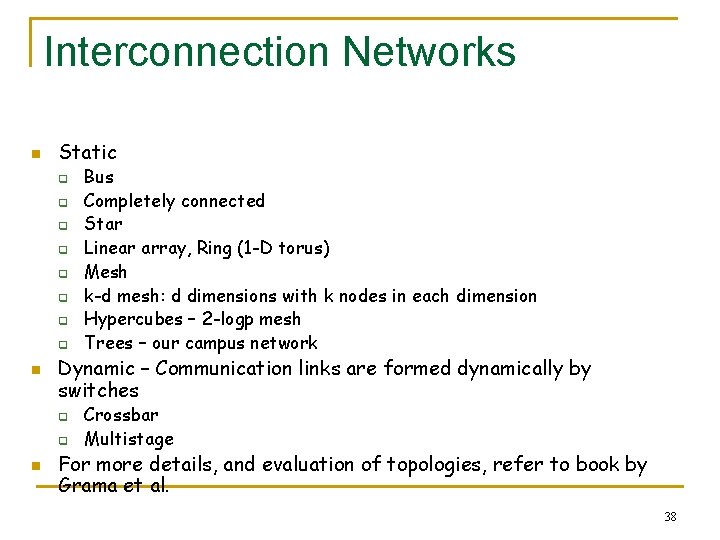

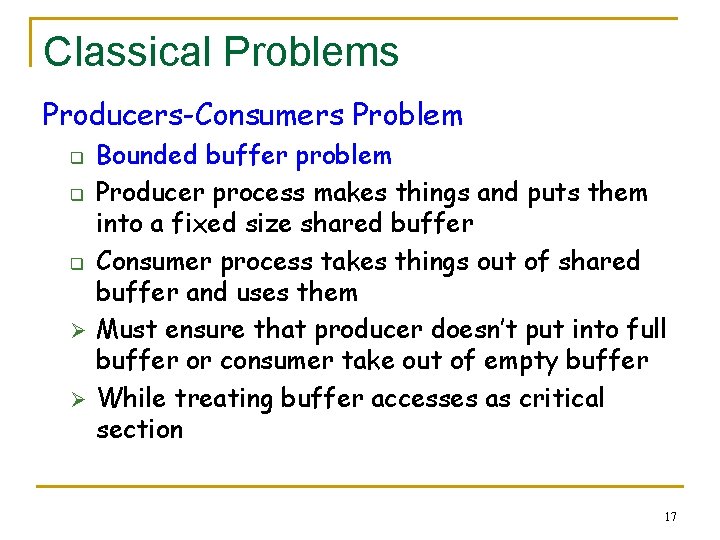

Classical Problems Producers-Consumers Problem Bounded buffer problem q Producer process makes things and puts them into a fixed size shared buffer q Consumer process takes things out of shared buffer and uses them Ø Must ensure that producer doesn’t put into full buffer or consumer take out of empty buffer Ø While treating buffer accesses as critical section q 17

![ProducersConsumers Problem shared Buffer0 N1 Producer repeatedly Produce x if buffer is full Producers-Consumers Problem shared Buffer[0. . N-1] Producer: repeatedly Produce x; if (buffer is full)](https://slidetodoc.com/presentation_image_h/b4ff141c521be3f882ca71139c171488/image-18.jpg)

Producers-Consumers Problem shared Buffer[0. . N-1] Producer: repeatedly Produce x; if (buffer is full) wait for consumption Buffer[i++] = x ; signal consumer Consumer: repeatedly If (buffer is empty) wait for production y = Buffer[- - i] Consume y ; signal producer 18

Dining Philosophers Problem n n N philosophers sitting around a circular table with a plate of food in front of each and a fork between each 2 philosophers Philosopher does: repeatedly Eat (using 2 forks) Think n Problem: Avoid deadock; be fair 19

THREADS Thread q q q n Weight related to q q q n a basic unit of CPU utilization Thread of control in a process `Light weight process’ Time for creation Time for context switch Size of context Recall context of process 20

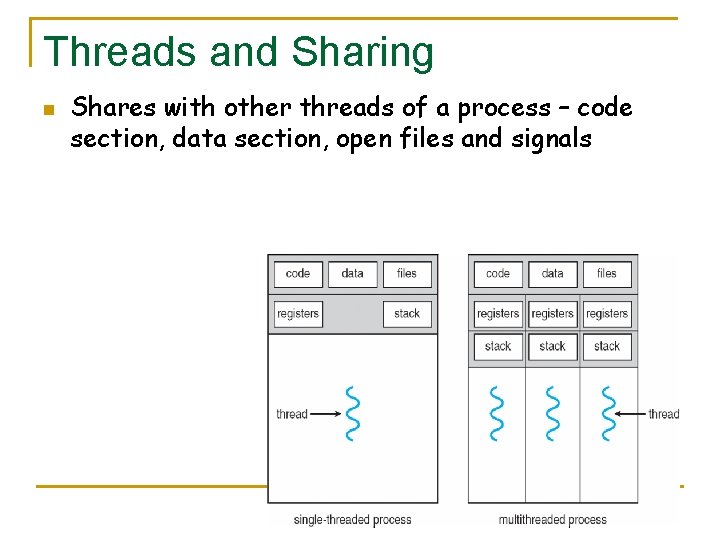

Threads and Processes n Thread context q q q n n So, thread context switching can be fast Many threads in same process that share parts of process context q n Thread id Stack pointer, PC, GPR values Virtual address space (other than stack) So, threads in the same process share variables that are not stack allocated 21

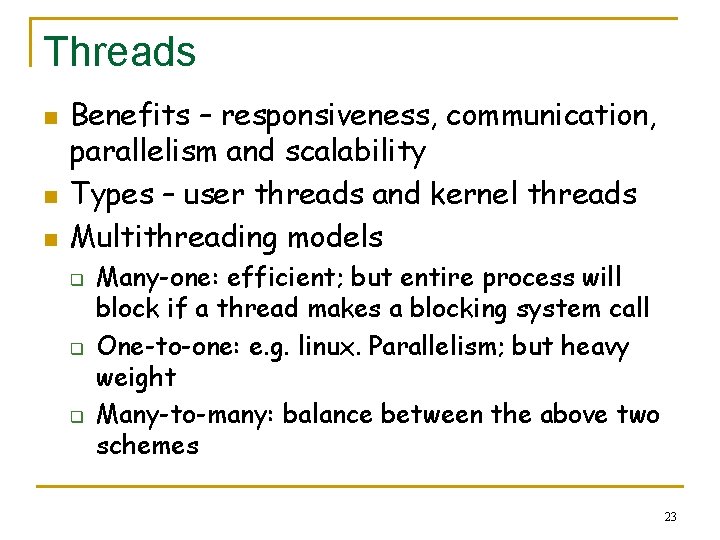

Threads and Sharing n Shares with other threads of a process – code section, data section, open files and signals 22

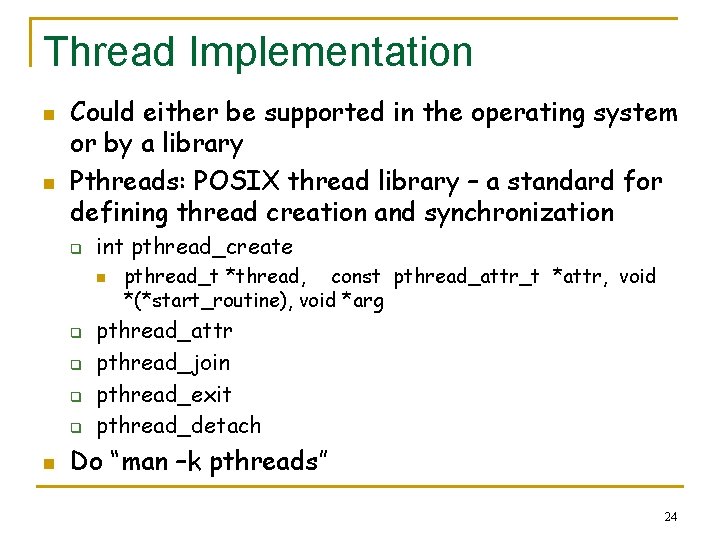

Threads n n n Benefits – responsiveness, communication, parallelism and scalability Types – user threads and kernel threads Multithreading models q q q Many-one: efficient; but entire process will block if a thread makes a blocking system call One-to-one: e. g. linux. Parallelism; but heavy weight Many-to-many: balance between the above two schemes 23

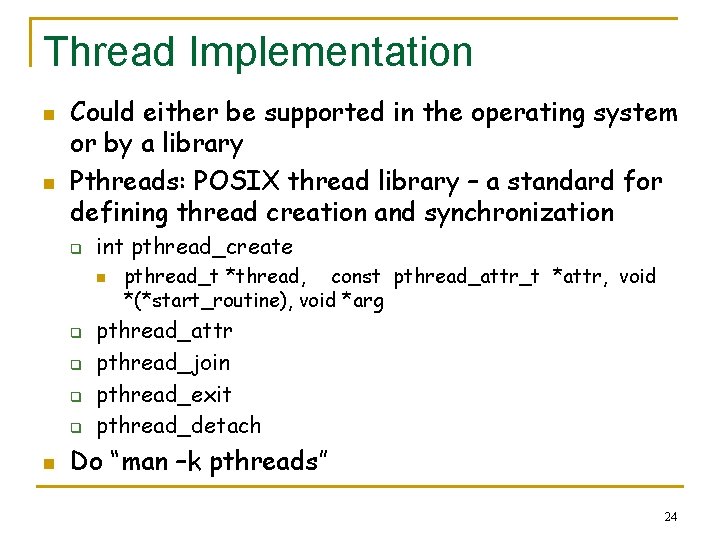

Thread Implementation n n Could either be supported in the operating system or by a library Pthreads: POSIX thread library – a standard for defining thread creation and synchronization q int pthread_create n q q n pthread_t *thread, const pthread_attr_t *attr, void *(*start_routine), void *arg pthread_attr pthread_join pthread_exit pthread_detach Do “man –k pthreads” 24

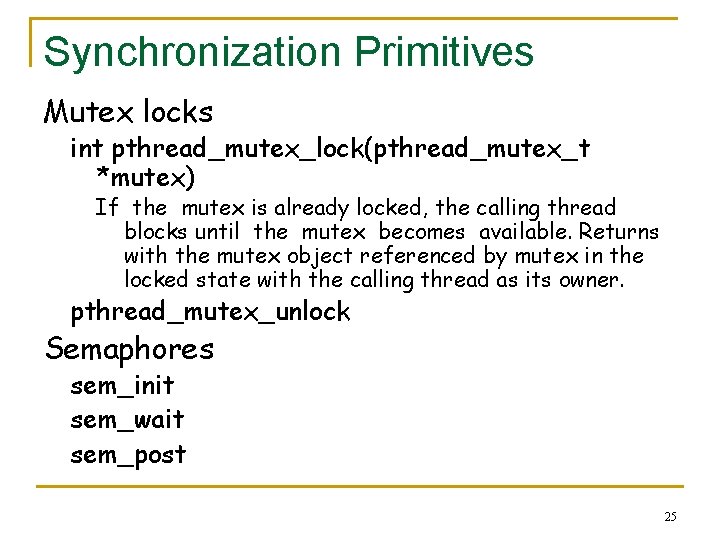

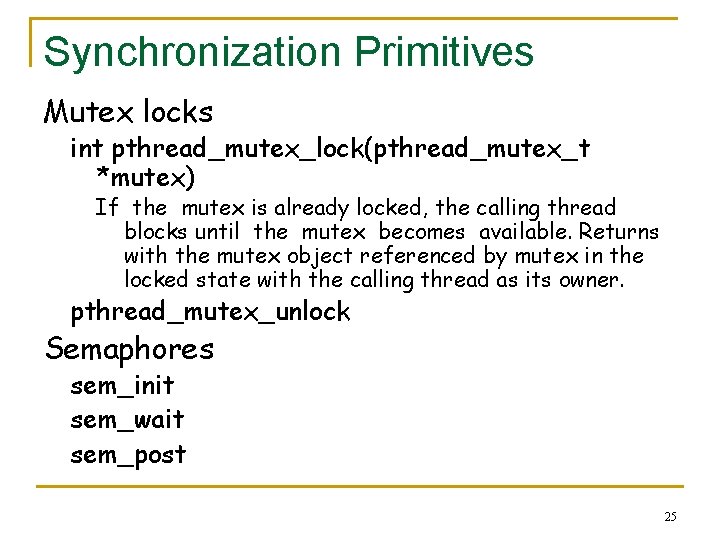

Synchronization Primitives Mutex locks int pthread_mutex_lock(pthread_mutex_t *mutex) If the mutex is already locked, the calling thread blocks until the mutex becomes available. Returns with the mutex object referenced by mutex in the locked state with the calling thread as its owner. pthread_mutex_unlock Semaphores sem_init sem_wait sem_post 25

Pthread scheduling n n n Process contention scope – scheduling userlevel threads among a set of kernel threads. System contention scope – scheduling kernel threads for CPU. Functions for setting the scope pthread_attr_setscope, pthread_attr_getscope q Can use PTHREAD_SCOPE_PROCESS for PCS and PTHREAD_SCOPE_SYSTEM for SCS 26

Thread Safety n n A function is thread safe if it always produces correct results when called repeatedly from concurrent multiple threads Thread Unsafe functions q q n That don’t protect shared variables That keep state across multiple invocations That return a pointer to a static variable That call thread unsafe functions Races q When correctness of a program depends on one thread reaching a point x before another thread reaching a point y 27

PARALLEL ARCHITECTURE 28

PARALLEL ARCHITECTURE Parallel Machine: a computer system with more than one processor Motivations • Faster Execution time due to non-dependencies between regions of code • Presents a level of modularity • Resource constraints. Large databases. • Certain class of algorithms lend themselves • Aggregate bandwidth to memory/disk. Increase in data throughput. • Clock rate improvement in the past decade – 40% • Memory access time improvement in the past decade – 10% 29

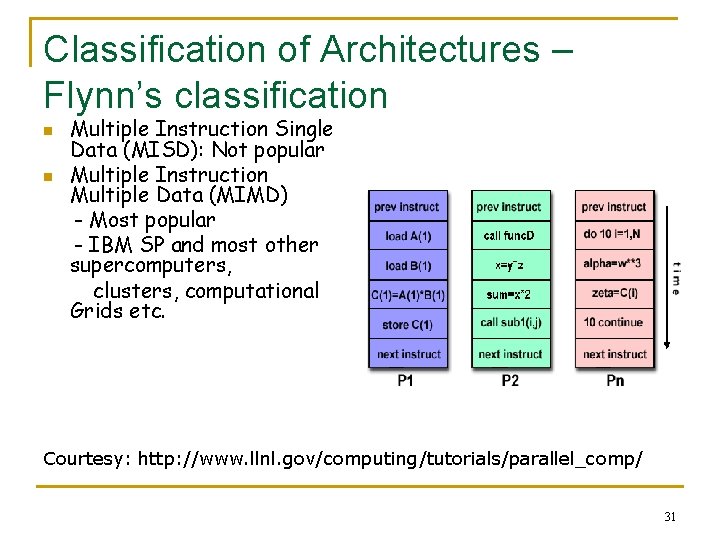

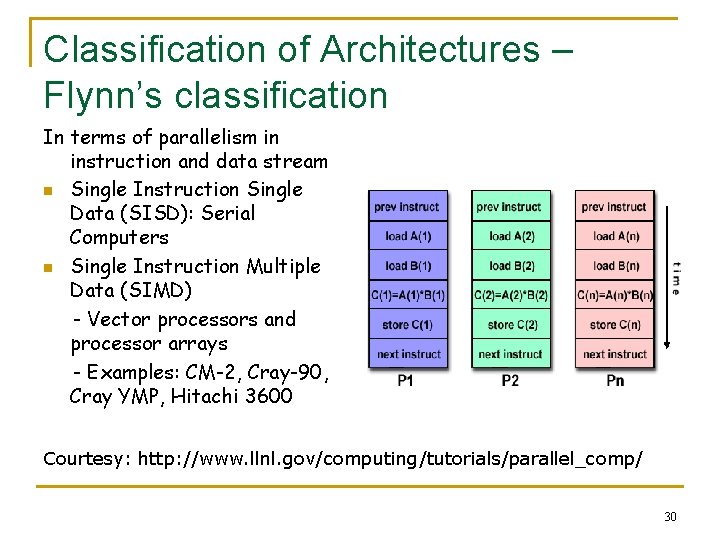

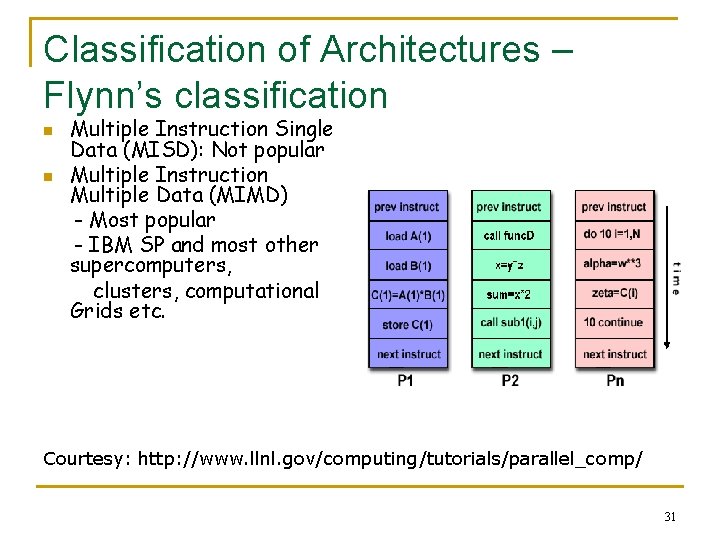

Classification of Architectures – Flynn’s classification In terms of parallelism in instruction and data stream n Single Instruction Single Data (SISD): Serial Computers n Single Instruction Multiple Data (SIMD) - Vector processors and processor arrays - Examples: CM-2, Cray-90, Cray YMP, Hitachi 3600 Courtesy: http: //www. llnl. gov/computing/tutorials/parallel_comp/ 30

Classification of Architectures – Flynn’s classification n n Multiple Instruction Single Data (MISD): Not popular Multiple Instruction Multiple Data (MIMD) - Most popular - IBM SP and most other supercomputers, clusters, computational Grids etc. Courtesy: http: //www. llnl. gov/computing/tutorials/parallel_comp/ 31

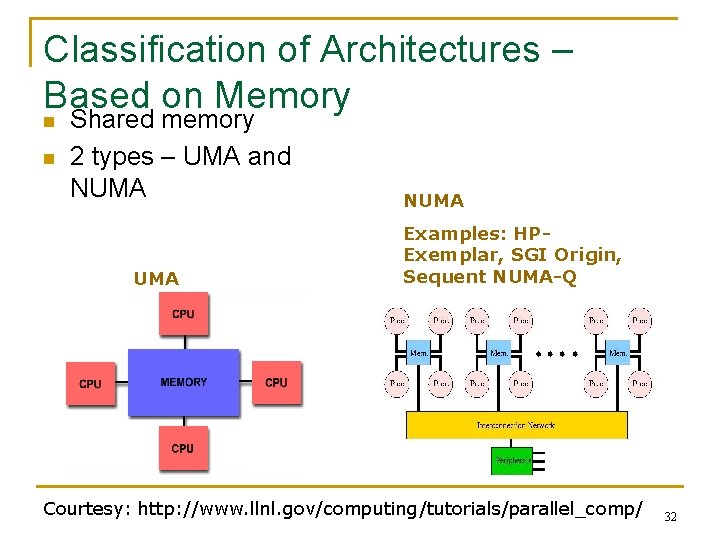

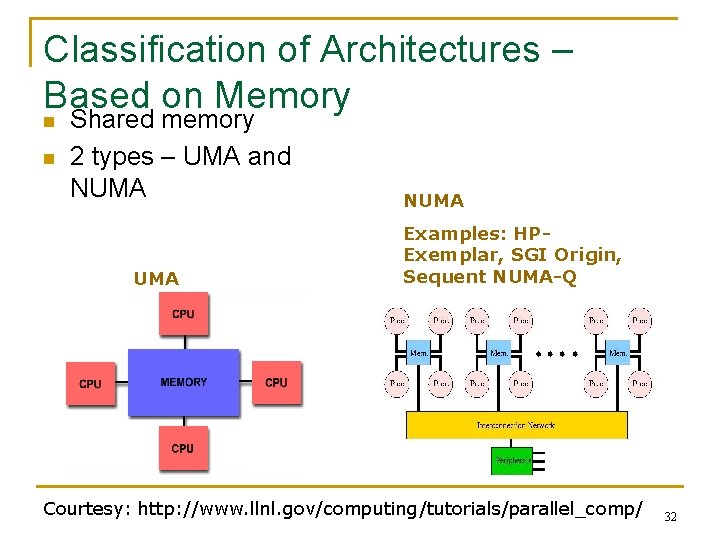

Classification of Architectures – Based on Memory n n Shared memory 2 types – UMA and NUMA Examples: HPExemplar, SGI Origin, Sequent NUMA-Q Courtesy: http: //www. llnl. gov/computing/tutorials/parallel_comp/ 32

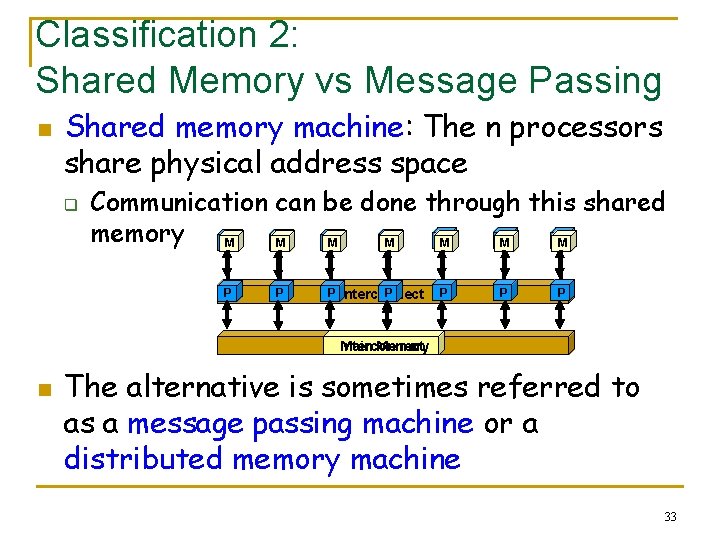

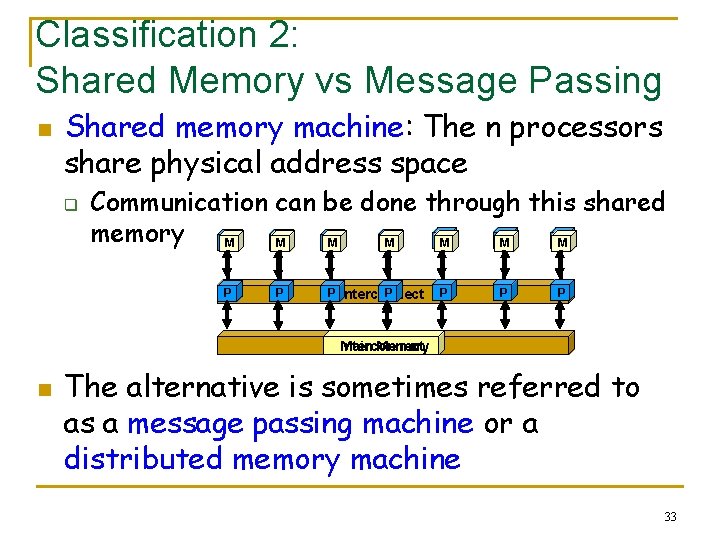

Classification 2: Shared Memory vs Message Passing n Shared memory machine: The n processors share physical address space q Communication can be done through this shared memory P P P P M M M M P P P Interconnect Main Memory n The alternative is sometimes referred to as a message passing machine or a distributed memory machine 33

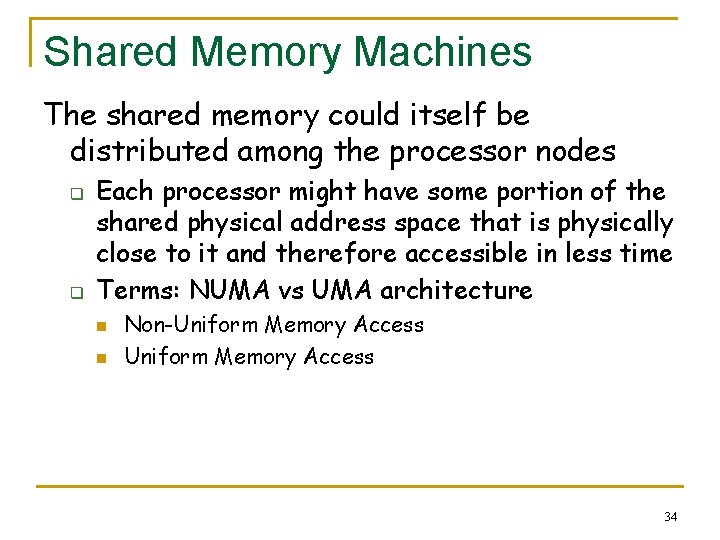

Shared Memory Machines The shared memory could itself be distributed among the processor nodes q q Each processor might have some portion of the shared physical address space that is physically close to it and therefore accessible in less time Terms: NUMA vs UMA architecture n n Non-Uniform Memory Access 34

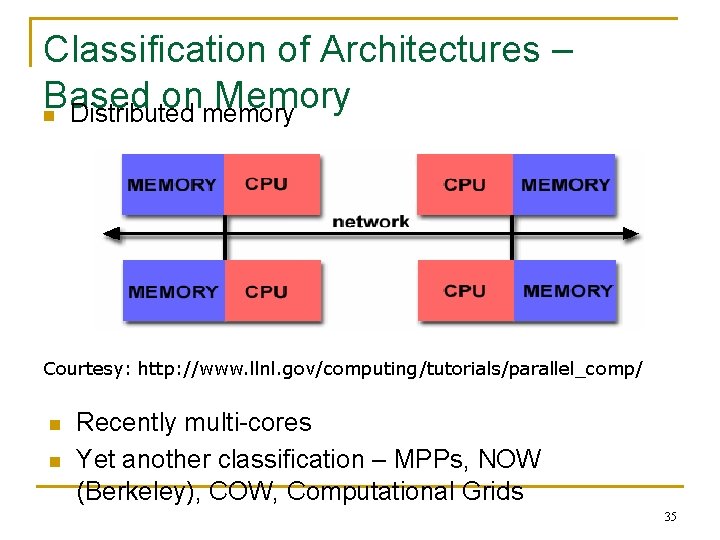

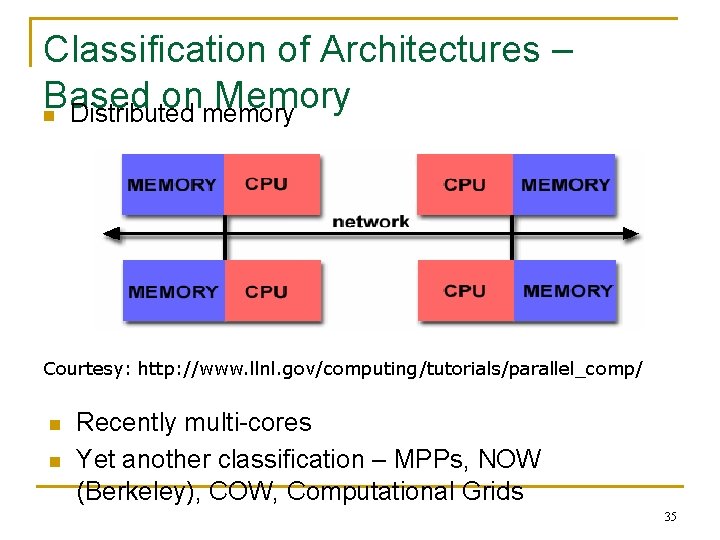

Classification of Architectures – Based on Memory n Distributed memory Courtesy: http: //www. llnl. gov/computing/tutorials/parallel_comp/ n n Recently multi-cores Yet another classification – MPPs, NOW (Berkeley), COW, Computational Grids 35

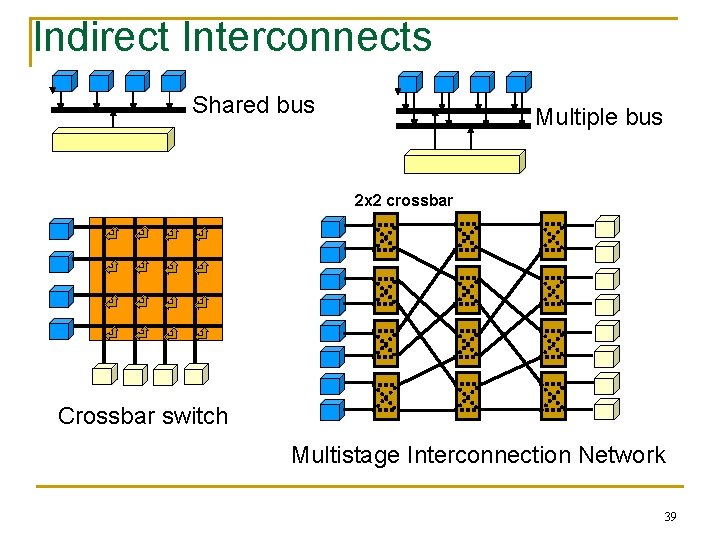

Parallel Architecture: Interconnection Networks n An interconnection network defined by switches, links and interfaces q q Switches – provide mapping between input and output ports, buffering, routing etc. Interfaces – connects nodes with network 36

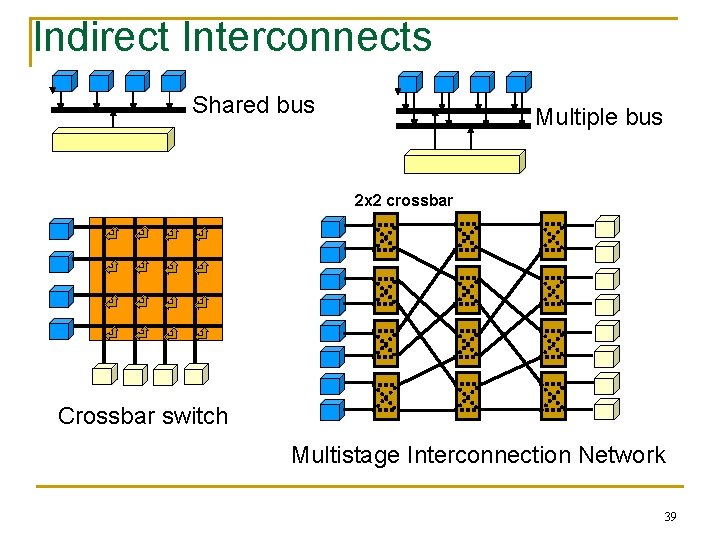

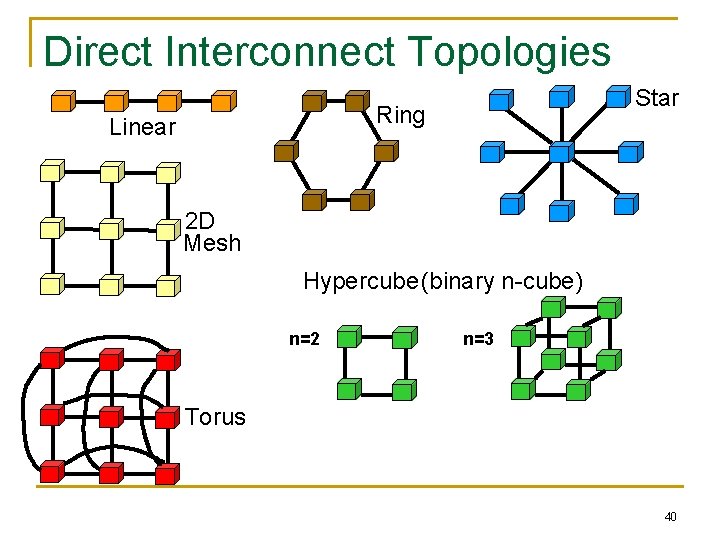

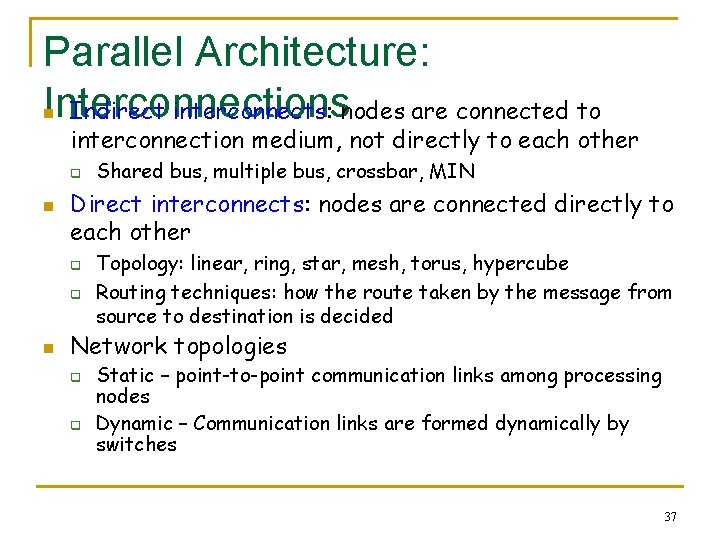

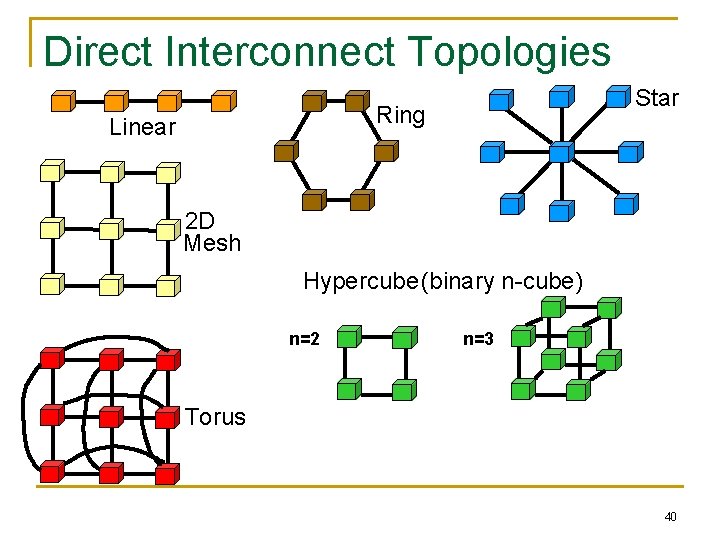

Parallel Architecture: Interconnections Indirect interconnects: nodes are connected to n interconnection medium, not directly to each other q n Direct interconnects: nodes are connected directly to each other q q n Shared bus, multiple bus, crossbar, MIN Topology: linear, ring, star, mesh, torus, hypercube Routing techniques: how the route taken by the message from source to destination is decided Network topologies q q Static – point-to-point communication links among processing nodes Dynamic – Communication links are formed dynamically by switches 37

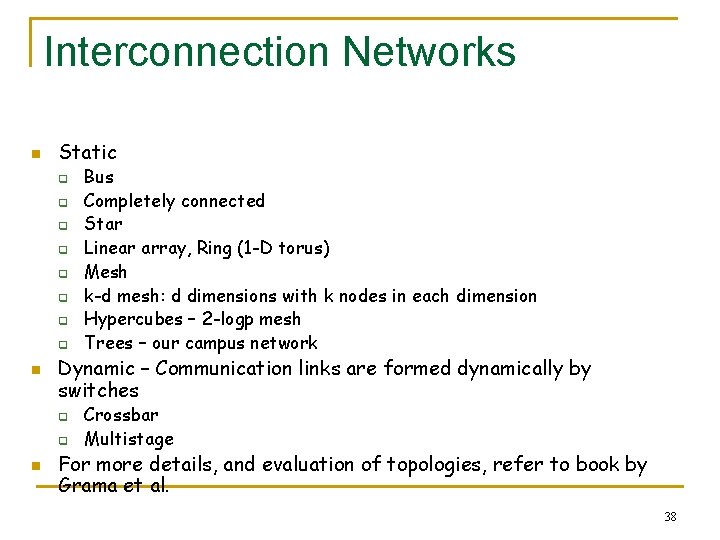

Interconnection Networks n Static q q q q n Dynamic – Communication links are formed dynamically by switches q q n Bus Completely connected Star Linear array, Ring (1 -D torus) Mesh k-d mesh: d dimensions with k nodes in each dimension Hypercubes – 2 -logp mesh Trees – our campus network Crossbar Multistage For more details, and evaluation of topologies, refer to book by Grama et al. 38

Indirect Interconnects Shared bus Multiple bus 2 x 2 crossbar Crossbar switch Multistage Interconnection Network 39

Direct Interconnect Topologies Star Ring Linear 2 D Mesh Hypercube(binary n-cube) n=2 n=3 Torus 40

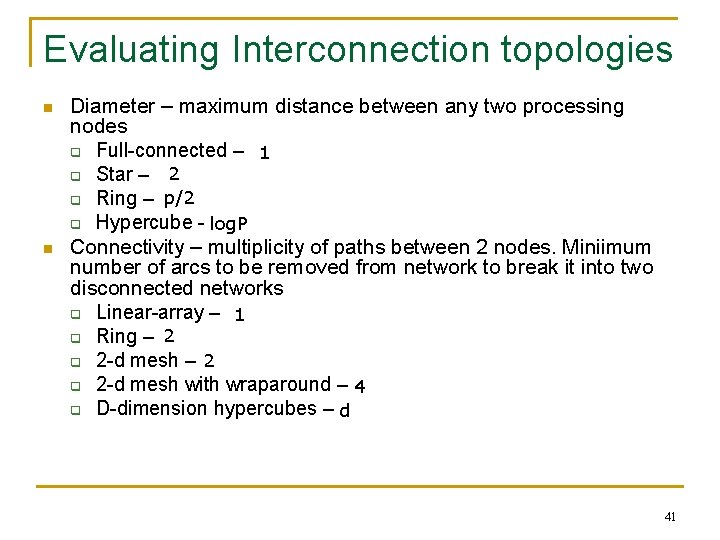

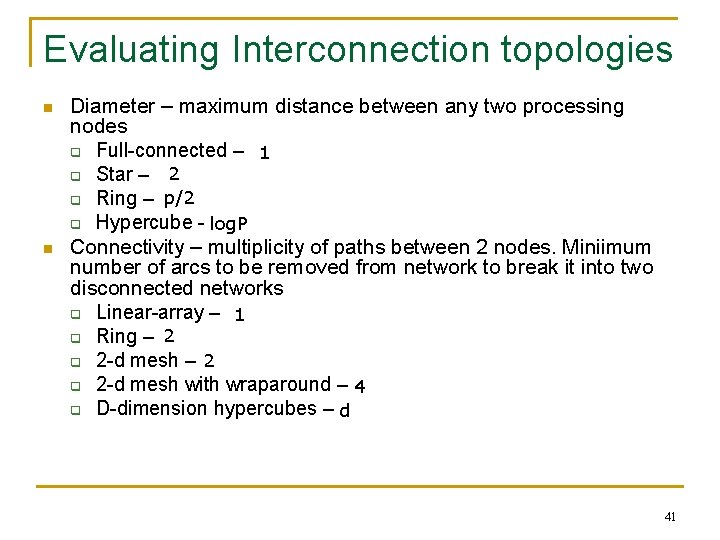

Evaluating Interconnection topologies n n Diameter – maximum distance between any two processing nodes q Full-connected – 1 2 q Star – q Ring – p/2 q Hypercube - log. P Connectivity – multiplicity of paths between 2 nodes. Miniimum number of arcs to be removed from network to break it into two disconnected networks q Linear-array – 1 q Ring – 2 q 2 -d mesh with wraparound – 4 q D-dimension hypercubes – d 41

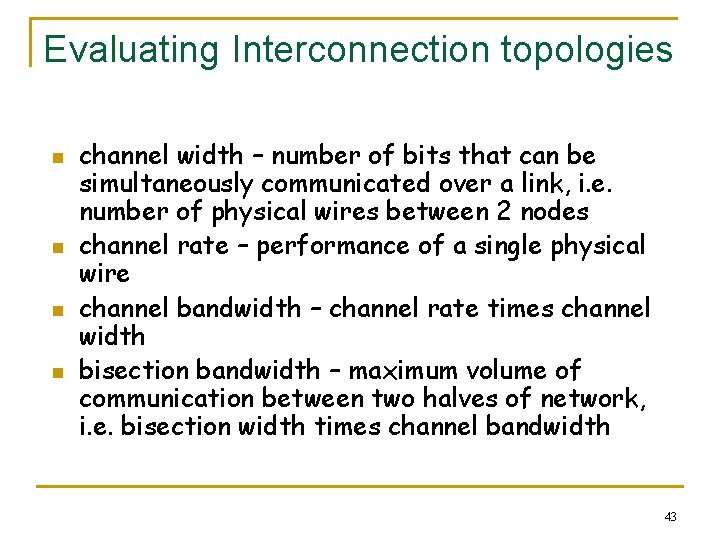

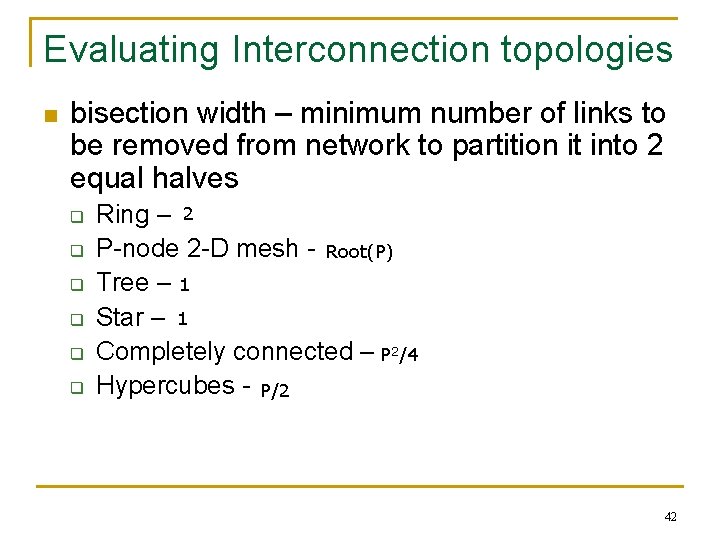

Evaluating Interconnection topologies n bisection width – minimum number of links to be removed from network to partition it into 2 equal halves q q q Ring – 2 P-node 2 -D mesh - Root(P) Tree – 1 Star – 1 Completely connected – P 2/4 Hypercubes - P/2 42

Evaluating Interconnection topologies n n channel width – number of bits that can be simultaneously communicated over a link, i. e. number of physical wires between 2 nodes channel rate – performance of a single physical wire channel bandwidth – channel rate times channel width bisection bandwidth – maximum volume of communication between two halves of network, i. e. bisection width times channel bandwidth 43

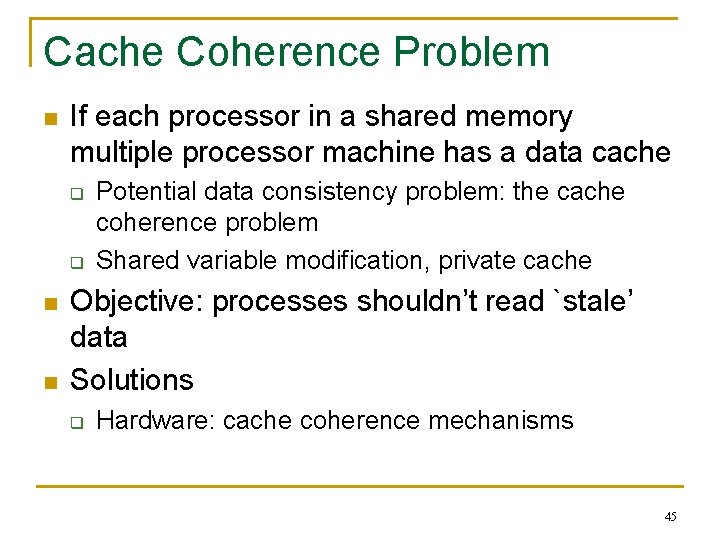

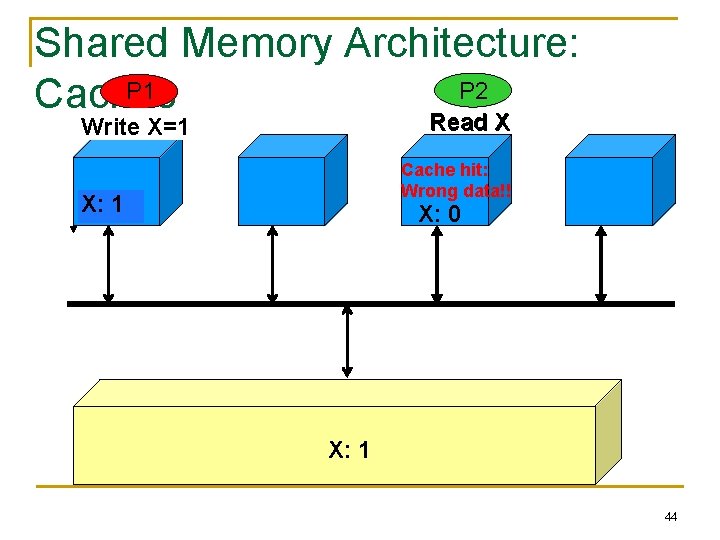

Shared Memory Architecture: P 2 P 1 Caches Read. X=1 X Write Read X Cache hit: Wrong data!! X: X: 10 X: 1 0 44

Cache Coherence Problem n If each processor in a shared memory multiple processor machine has a data cache q q n n Potential data consistency problem: the cache coherence problem Shared variable modification, private cache Objective: processes shouldn’t read `stale’ data Solutions q Hardware: cache coherence mechanisms 45

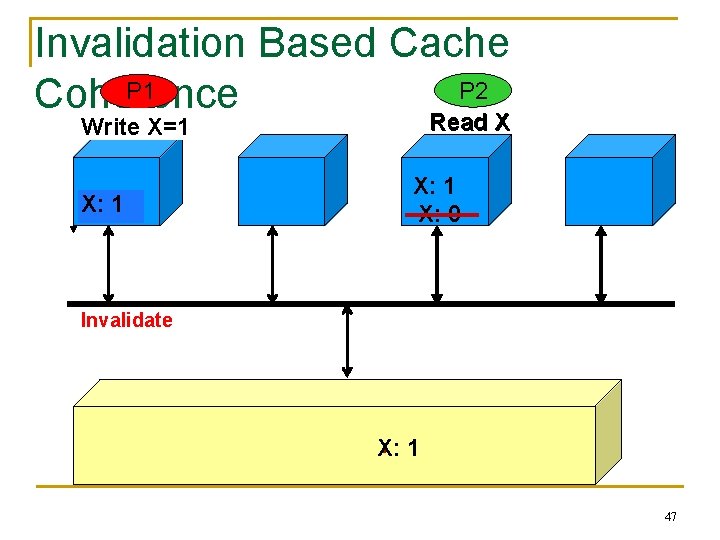

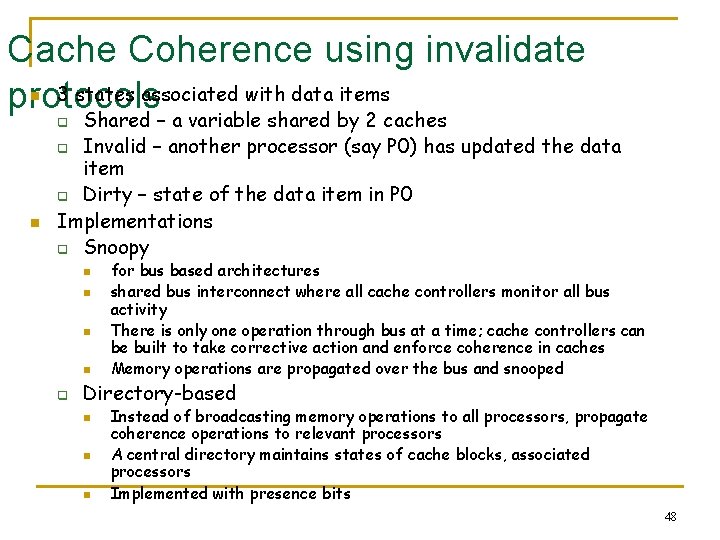

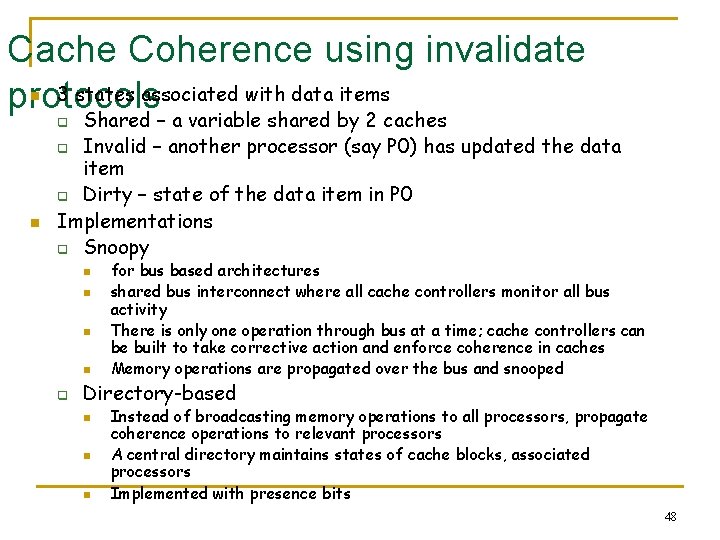

Cache Coherence Protocols n n n Write update – propagate cache line to other processors on every write to a processor Write invalidate – each processor gets the updated cache line whenever it reads stale data Which is better? 46

Invalidation Based Cache P 2 P 1 Coherence Read. X=1 X Write X: X: 10 Read X X: 1 X: 0 Invalidate X: 0 X: 1 47

Cache Coherence using invalidate 3 states associated with data items protocols n Shared – a variable shared by 2 caches q Invalid – another processor (say P 0) has updated the data item q Dirty – state of the data item in P 0 Implementations q Snoopy q n n n q for bus based architectures shared bus interconnect where all cache controllers monitor all bus activity There is only one operation through bus at a time; cache controllers can be built to take corrective action and enforce coherence in caches Memory operations are propagated over the bus and snooped Directory-based n n n Instead of broadcasting memory operations to all processors, propagate coherence operations to relevant processors A central directory maintains states of cache blocks, associated processors Implemented with presence bits 48