SE 433/333 Software Testing & Quality Assurance, Dennis Mumaugh

SE 433/333 Software Testing & Quality Assurance Dennis Mumaugh, Instructor dmumaugh@depaul.edu Office: CDM, Room 428 Office Hours: Tuesday, 4:00 – 5:30 April 18, 2017 SE 433: Lecture 4 1 of 101

Administrivia

- Comments and feedback
- Announcements
  - Solutions to Assignments 1 and 2 have been posted to D2L
- Hints
  - Look at the Java documentation. For example: http://docs.oracle.com/javase/8/docs/api/java/lang/Double.html
  - Look at the examples (JUnit2.zip) mentioned on the reading list and provided in D2L.
  - In solving a problem, try getting the example working first.

SE 433 – Class 4

Topic:

- Black Box Testing, JUnit Part 2

Reading:

- Pezze and Young: Chapters 9-10
- JUnit documentation: http://junit.org
- An example of parameterized test: JUnit2.zip in D2L
- See also reading list

Assignments 4 and 5

Assignment 4: Parameterized Test

- The objective of this assignment is to develop a parameterized JUnit test and run JUnit tests using Eclipse IDE. [See JUnit2.zip]
- Due date: April 25, 2017, 11:59 pm

Assignment 5: Black Box Testing – Part 1: Test Case Design

- The objective of this assignment is to design test suites using black-box techniques to adequately test the programs specified below. You may use any combination of black-box testing techniques to design the test cases.
- Due date: May 2, 2017, 11:59 pm

Thought for the Day “More than the act of testing, the act of designing tests is one of the best bug preventers known. The thinking that must be done to create a useful test can discover and eliminate bugs before they are coded – indeed, test-design thinking can discover and eliminate bugs at every stage in the creation of software, from conception to specification, to design, coding and the rest. ” – Boris Beizer April 18, 2017 SE 433: Lecture 4 5 of 101

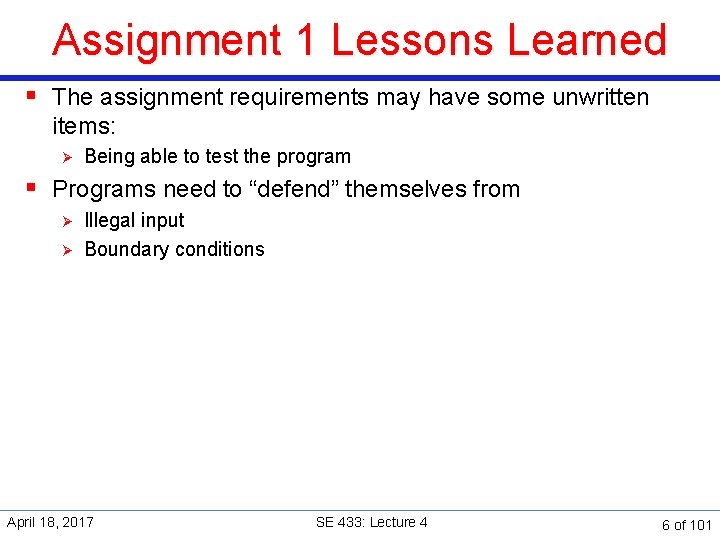

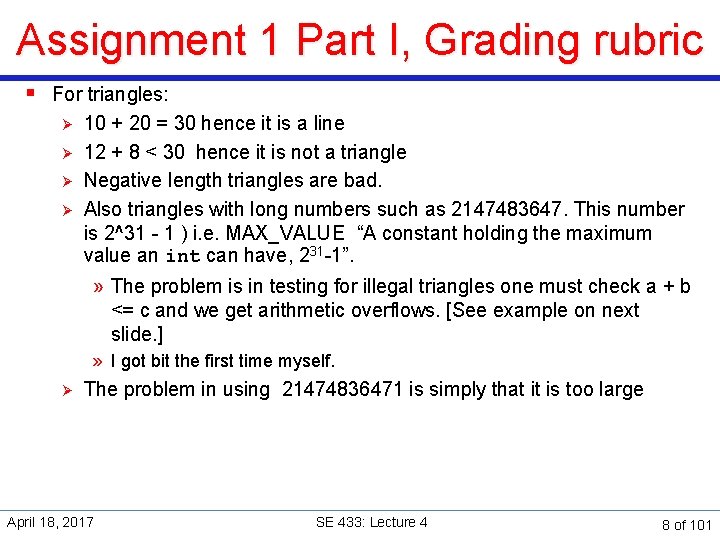

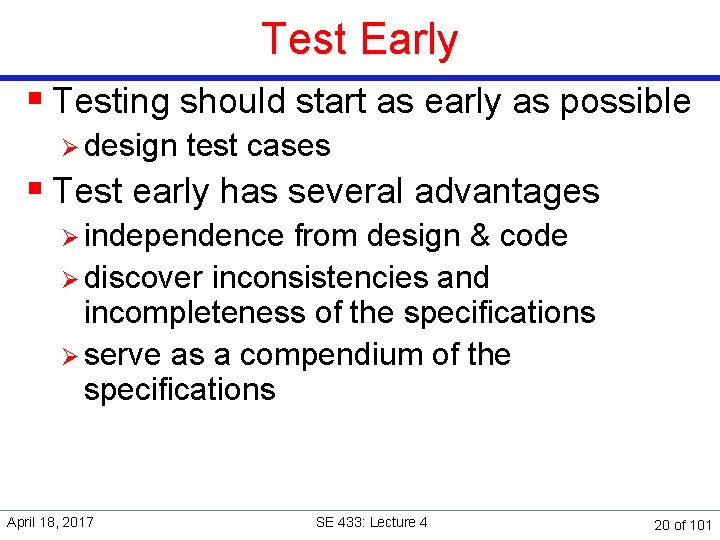

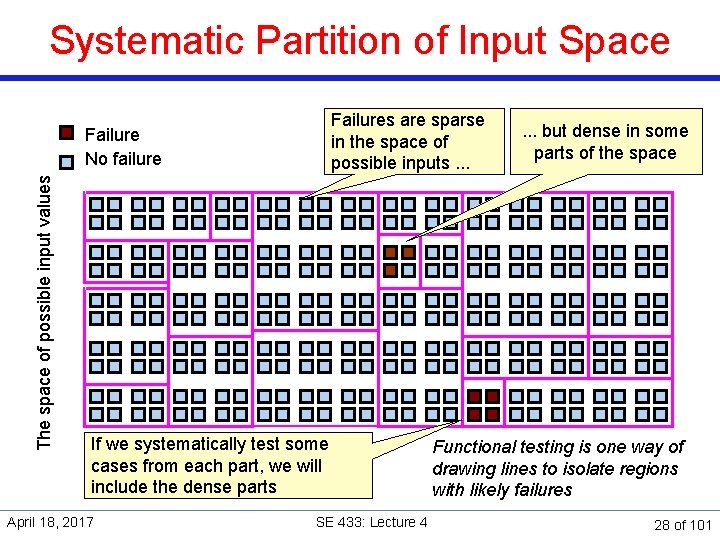

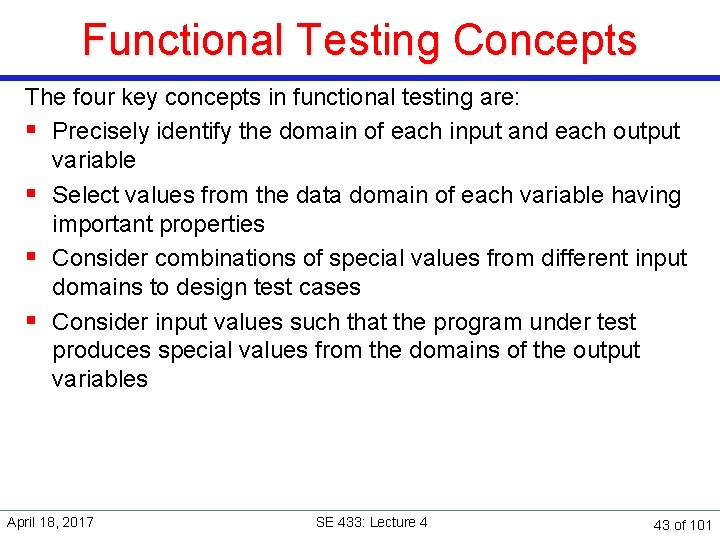

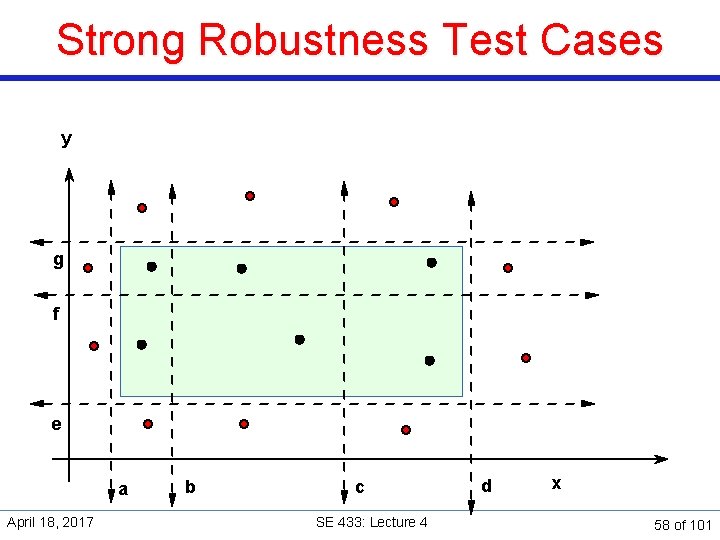

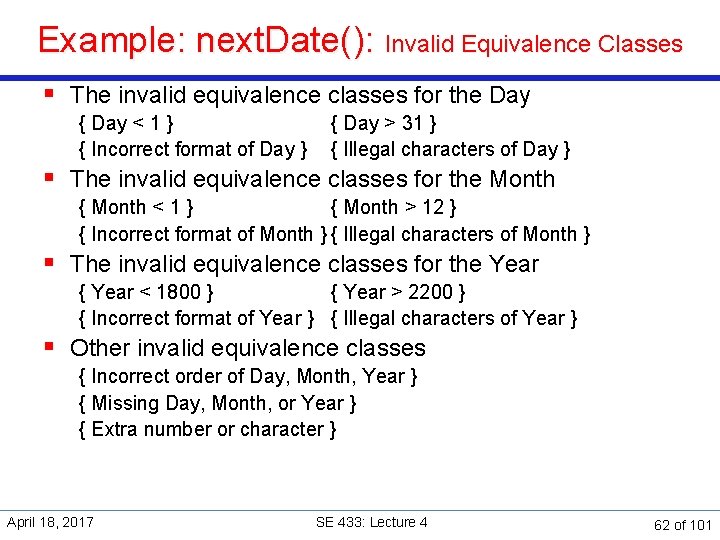

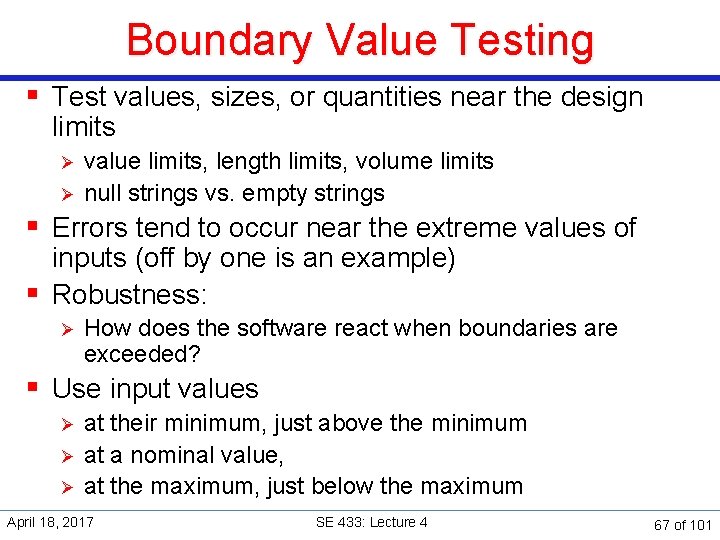

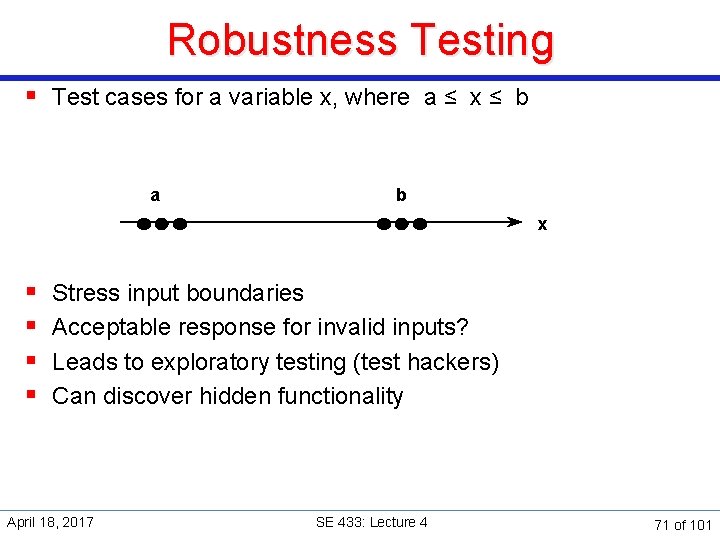

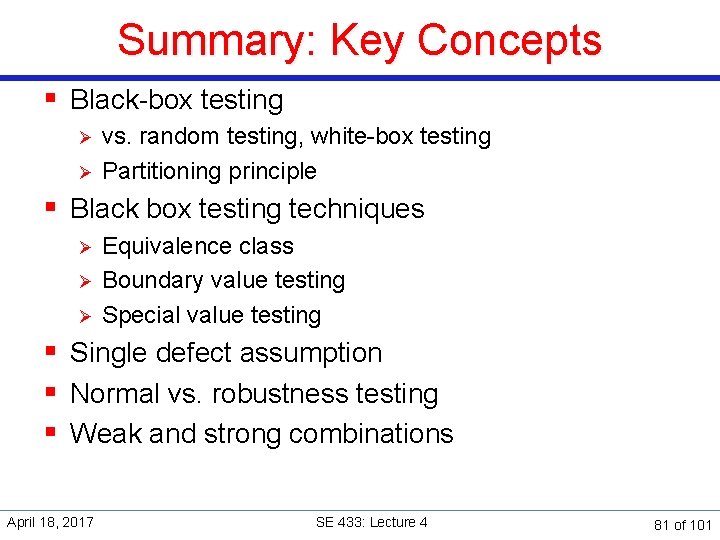

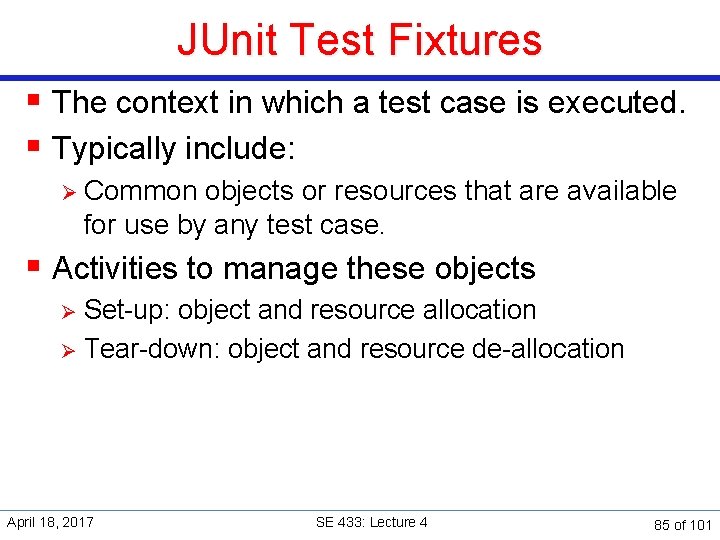

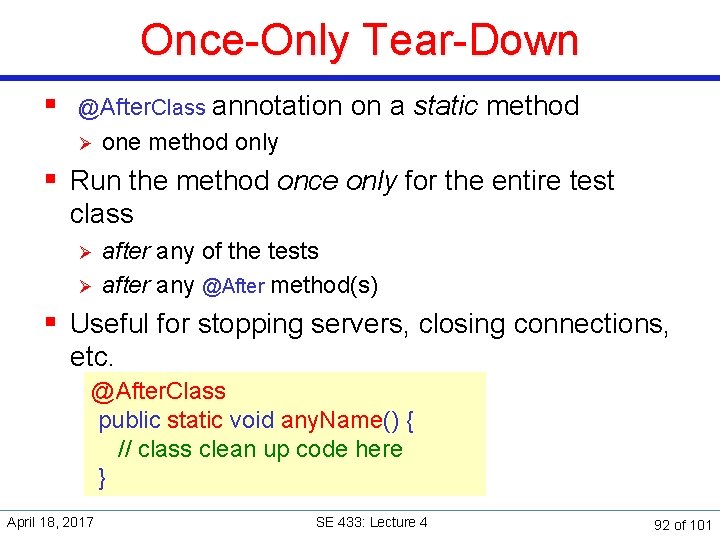

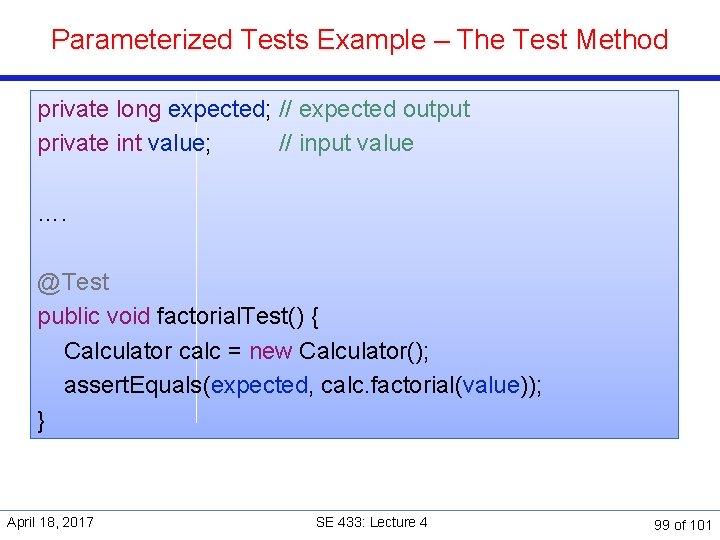

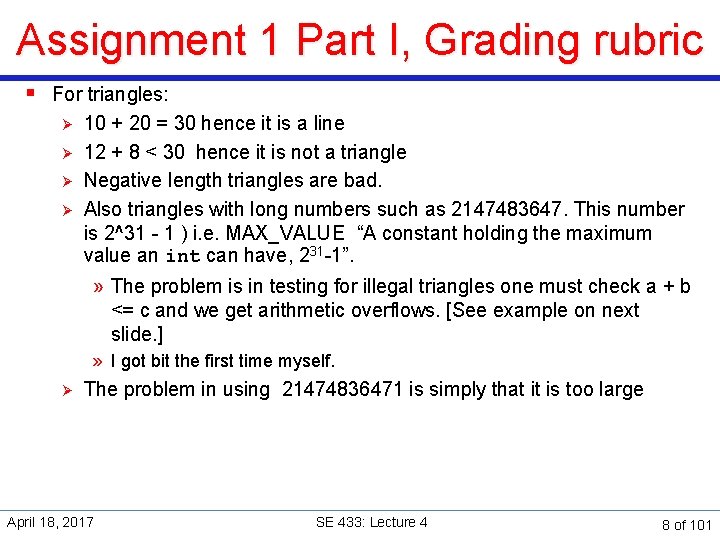

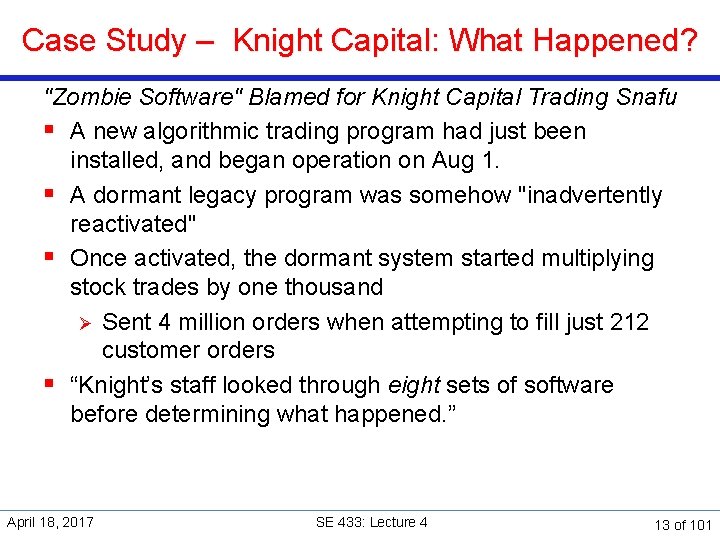

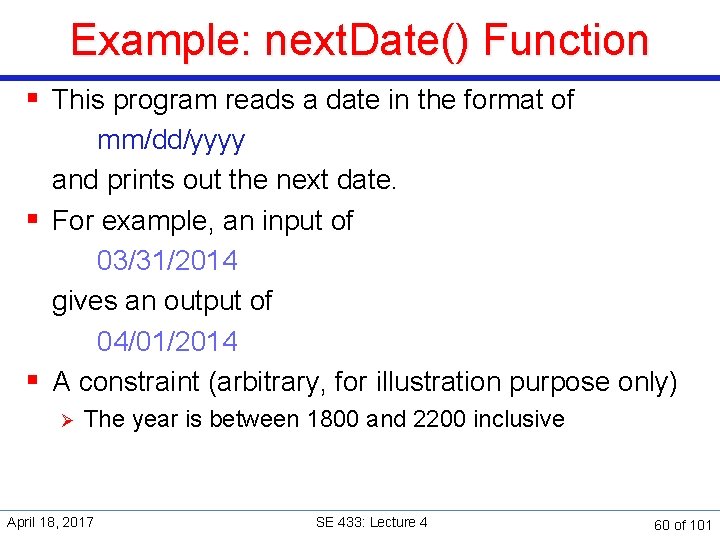

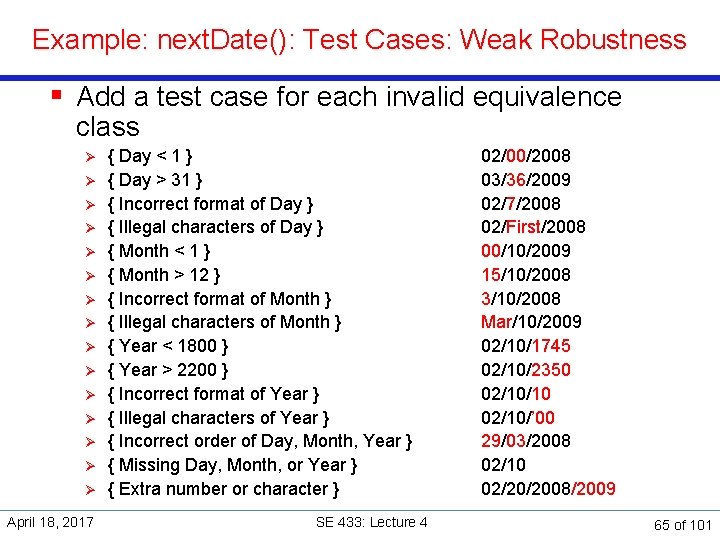

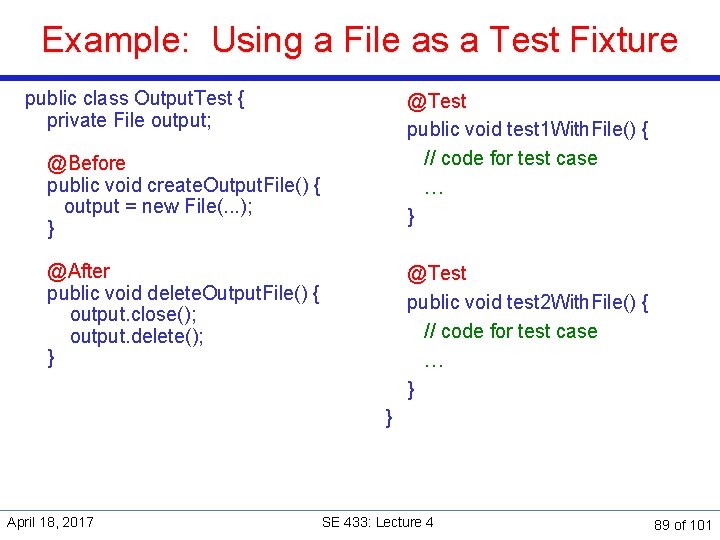

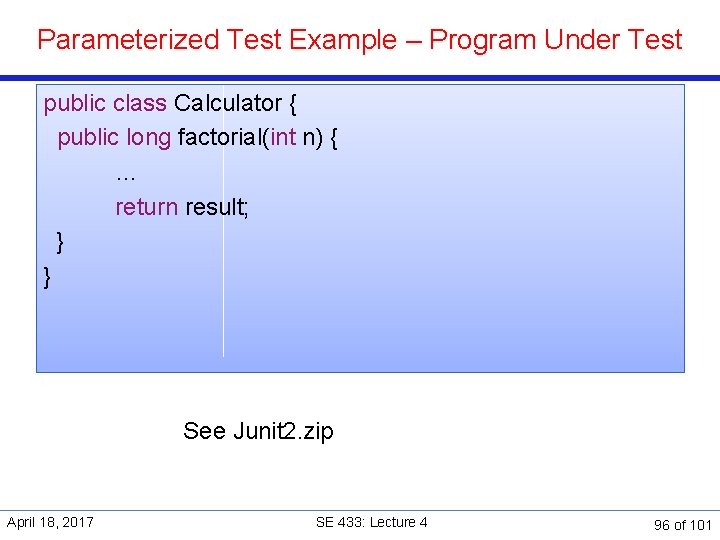

Assignment 1 Lessons Learned

- The assignment requirements may have some unwritten items:
  - Being able to test the program
- Programs need to “defend” themselves from
  - Illegal input
  - Boundary conditions

Assignment 1 Part I, Grading rubric

Part I: 10 Points

- Compiles and runs and handles standard tests
- Handles invalid cases: -1
  - Not a triangle (a + b < c)
  - Degenerate triangle (a + b = c), aka line
- Handles illegal input: -1
  - Negative numbers
  - Floating point numbers
  - Extra large integers and MAXINT [See above triangle test].
  - Text
- If the code handles most of the special conditions, give it the point.

Assignment 1 Part I, Grading rubric

- For triangles:
  - 10 + 20 = 30, hence it is a line
  - 12 + 8 < 30, hence it is not a triangle
  - Negative-length triangles are bad.
  - So are triangles with very large sides such as 2147483647. This number is 2^31 - 1, i.e. Integer.MAX_VALUE: “A constant holding the maximum value an int can have, 2^31-1”.
    - The problem is that in testing for illegal triangles one must check a + b <= c, and that addition overflows. [See example on next slide.]
    - I got bit the first time myself.
  - The problem in using 21474836471 is simply that it is too large to fit in an int.

Assignment 1 Lessons Learned

- Consider:

```java
public class Triangle {
    public static void main(String[] args) {
        int a = 2147483647;
        int b = 2147483647;
        int c = 2147483647;
        // a + b overflows int and wraps around to -2, so the test below is true
        if (a + b <= c) {
            System.out.println("Not a triangle");
        } else {
            System.out.println("Triangle");
        }
    }
}
```

Result: Not a triangle
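One way to avoid the overflow is to widen the operands to `long` before adding. The sketch below is an illustration, not the posted assignment solution; the class name `TriangleCheck` and the method `isTriangle` are made up for this example.

```java
// Sketch only: overflow-safe triangle test. Names are illustrative assumptions.
class TriangleCheck {
    /** Returns true when all sides are positive and satisfy the strict triangle inequality. */
    static boolean isTriangle(int a, int b, int c) {
        if (a <= 0 || b <= 0 || c <= 0) {
            return false; // zero or negative lengths are illegal input
        }
        // Widen to long so that the sums cannot wrap around.
        long la = a, lb = b, lc = c;
        return la + lb > lc && la + lc > lb && lb + lc > la;
    }

    public static void main(String[] args) {
        int max = Integer.MAX_VALUE; // 2147483647
        System.out.println(isTriangle(max, max, max)); // equilateral with maximal sides
        System.out.println(isTriangle(10, 20, 30));    // degenerate: a line
        System.out.println(isTriangle(12, 8, 30));     // not a triangle
    }
}
```

`Math.addExact` is an alternative: it throws `ArithmeticException` on overflow instead of silently wrapping.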

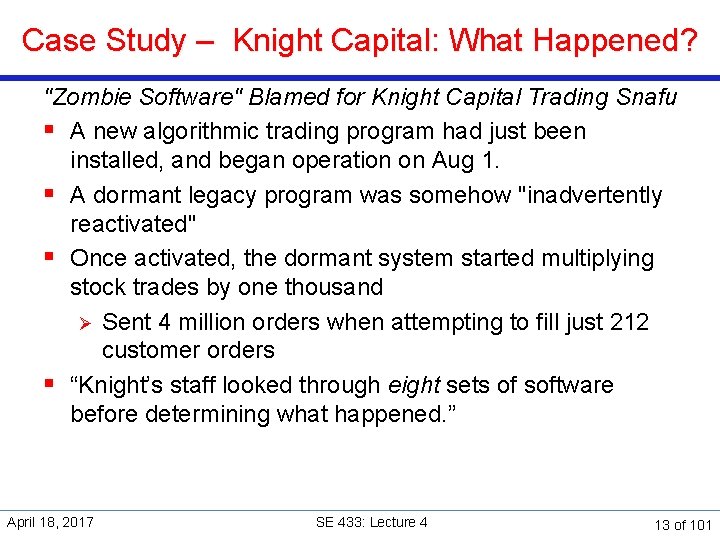

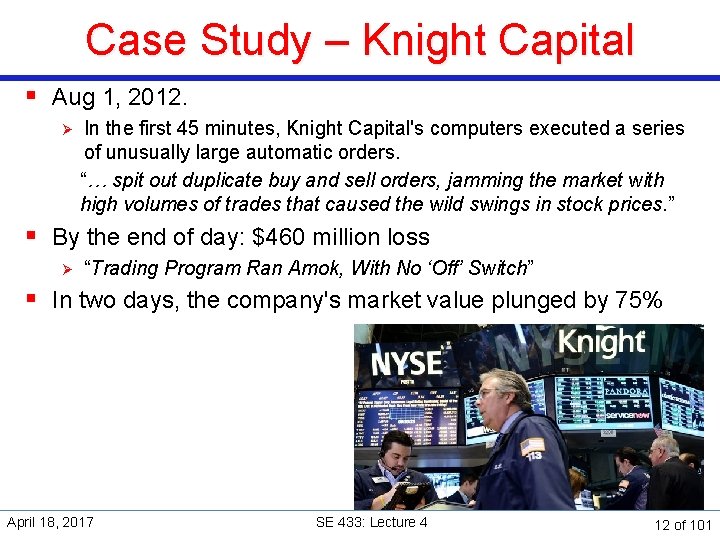

Case Study – Knight Capital High Frequency Trading (HFT) April 18, 2017 SE 433: Lecture 4 10 of 101

Case Study – Knight Capital: High Frequency Trading (HFT)

- The Knight Capital Group is an American global financial services firm.
- With its high-frequency trading algorithms, Knight was the largest trader in U.S. equities
  - with a market share of 17.3% on NYSE and 16.9% on NASDAQ.

Case Study – Knight Capital

- Aug 1, 2012.
  - In the first 45 minutes, Knight Capital's computers executed a series of unusually large automatic orders. “… spit out duplicate buy and sell orders, jamming the market with high volumes of trades that caused the wild swings in stock prices.”
- By the end of day: $460 million loss
  - “Trading Program Ran Amok, With No ‘Off’ Switch”
- In two days, the company's market value plunged by 75%

Case Study – Knight Capital: What Happened? "Zombie Software" Blamed for Knight Capital Trading Snafu § A new algorithmic trading program had just been installed, and began operation on Aug 1. § A dormant legacy program was somehow "inadvertently reactivated" § Once activated, the dormant system started multiplying stock trades by one thousand Ø Sent 4 million orders when attempting to fill just 212 customer orders § “Knight’s staff looked through eight sets of software before determining what happened. ” April 18, 2017 SE 433: Lecture 4 13 of 101

Case Study – Knight Capital: The Investigation and Findings

- SEC launched an investigation in Nov 2012. Findings:
  - Code changes in 2005 introduced defects. Although the defective function was not meant to be used, it was kept in.
  - New code deployed in late July 2012. The defective function was triggered under new rules. Unable to recognize when orders have been filled.
  - Ignored system generated warning emails.
  - Inadequate controls and procedures for code deployment and testing.
- Charges filed in Oct 2013
  - Knight Capital settled charges for $12 million

Regression Test April 18, 2017 SE 433: Lecture 4 15 of 101

Software Evolution § Change happens throughout the software development life cycle. Ø Before and after delivery § Change can happen to every aspect of software § Changes can affect unchanged areas » break code, introduce new bugs » uncover previous unknown bugs » reintroduce old bugs April 18, 2017 SE 433: Lecture 4 16 of 101

Regression Test § Testing of a previously tested program following modification to ensure that new defects have not been introduced or uncovered in unchanged areas of the software, as a result of the changes made. § It should be performed whenever the software or its environment is changed. § It applies to testing at all levels. April 18, 2017 SE 433: Lecture 4 17 of 101

Regression Test § Keep a test suite § Use the test suite after every change § Compare output with previous tests Ø Understand all changes § If new tests are needed, add to the test suite. April 18, 2017 SE 433: Lecture 4 18 of 101

Test Driven Development (TDD) April 18, 2017 SE 433: Lecture 4 19 of 101

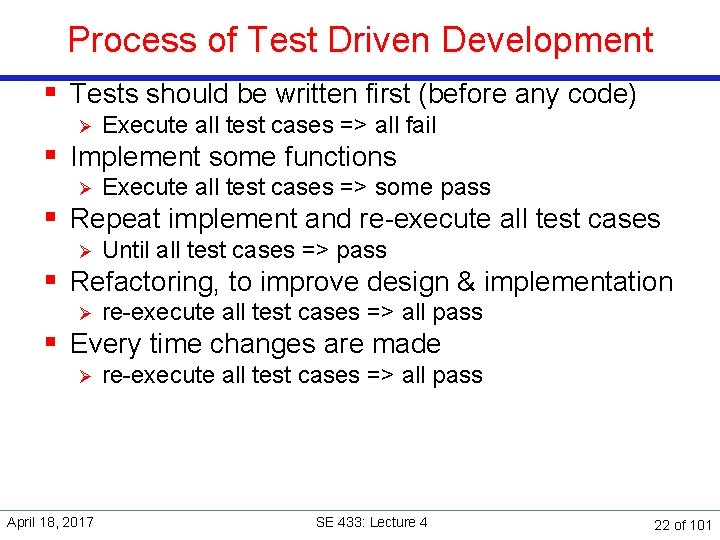

Test Early § Testing should start as early as possible Ø design test cases § Test early has several advantages Ø independence from design & code Ø discover inconsistencies and incompleteness of the specifications Ø serve as a compendium of the specifications April 18, 2017 SE 433: Lecture 4 20 of 101

Test Driven Development § Test driven development (TDD) is one of the corner stones of agile software development § Agile, iterative, incremental development Ø Small iterations, a few units § Verification and validation carried out for each iteration. Ø Ø Design & implement test cases before implementing the functionality Run automated regression test of whole system continuously April 18, 2017 SE 433: Lecture 4 21 of 101

Process of Test Driven Development

- Tests should be written first (before any code)
  - Execute all test cases => all fail
- Implement some functions
  - Execute all test cases => some pass
- Repeat implement and re-execute all test cases
  - Until all test cases => pass
- Refactoring, to improve design & implementation
- Every time changes are made
  - re-execute all test cases => all pass

Black Box Testing April 18, 2017 SE 433: Lecture 4 23 of 101

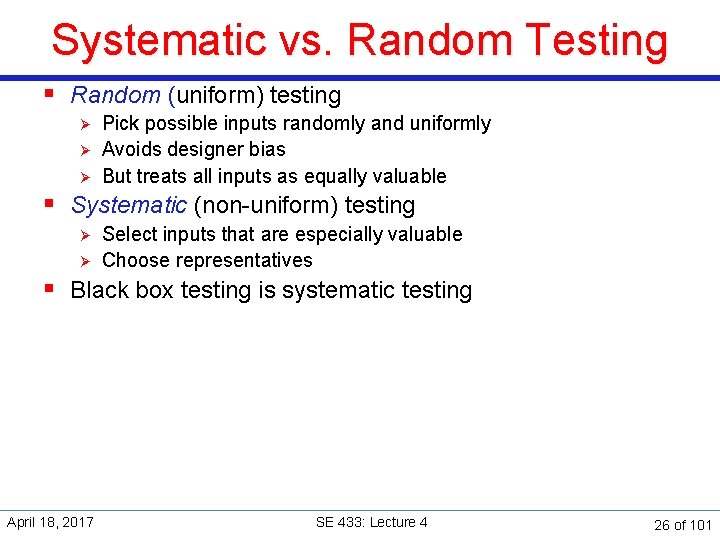

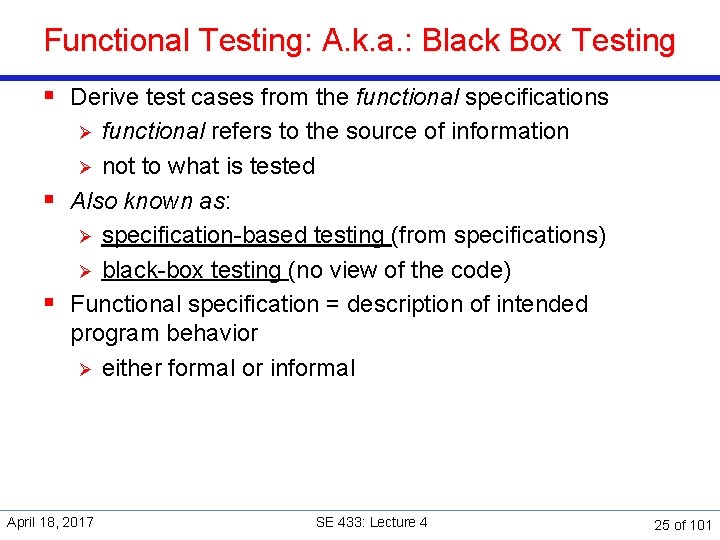

Black Box View § The system is viewed as a black box Ø Provide some input Ø Observe the output April 18, 2017 SE 433: Lecture 4 24 of 101

Functional Testing: A.k.a.: Black Box Testing

- Derive test cases from the functional specifications
  - functional refers to the source of information
  - not to what is tested
- Also known as:
  - specification-based testing (from specifications)
  - black-box testing (no view of the code)
- Functional specification = description of intended program behavior
  - either formal or informal

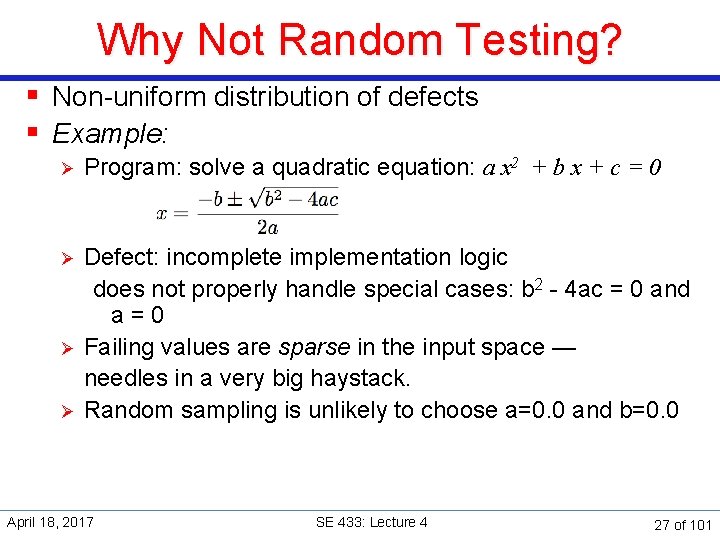

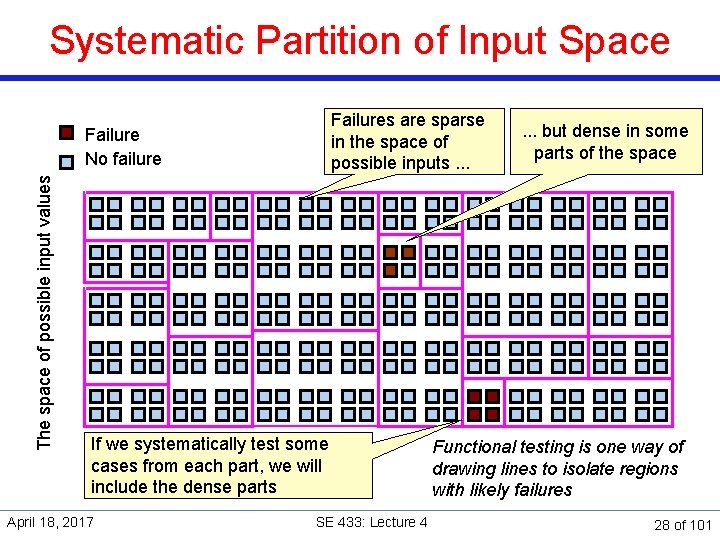

Systematic vs. Random Testing

- Random (uniform) testing
  - Pick possible inputs randomly and uniformly
  - Avoids designer bias
  - But treats all inputs as equally valuable
- Systematic (non-uniform) testing
  - Select inputs that are especially valuable
  - Choose representatives
- Black box testing is systematic testing

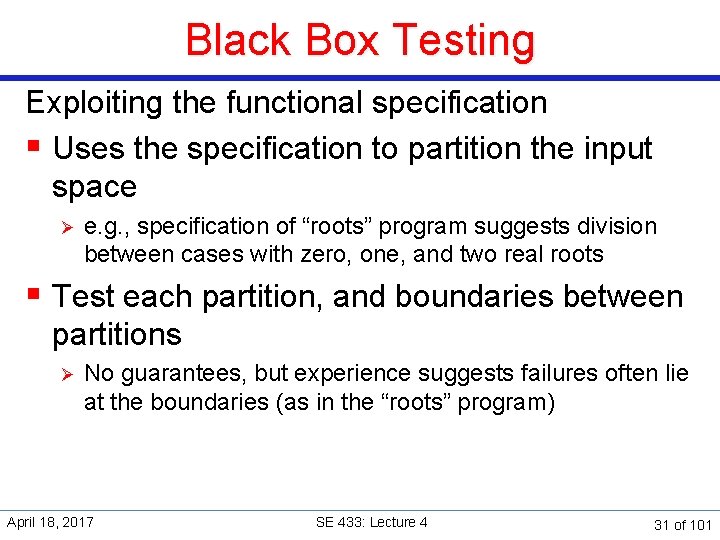

Why Not Random Testing?

- Non-uniform distribution of defects
- Example:
  - Program: solve a quadratic equation: $ax^2 + bx + c = 0$
  - Defect: incomplete implementation logic does not properly handle special cases: $b^2 - 4ac = 0$ and $a = 0$
  - Failing values are sparse in the input space: needles in a very big haystack.
  - Random sampling is unlikely to choose a=0.0 and b=0.0

Systematic Partition of Input Space The space of possible input values Failure No failure Failures are sparse in the space of possible inputs. . . If we systematically test some cases from each part, we will include the dense parts April 18, 2017 SE 433: Lecture 4 . . . but dense in some parts of the space Functional testing is one way of drawing lines to isolate regions with likely failures 28 of 101

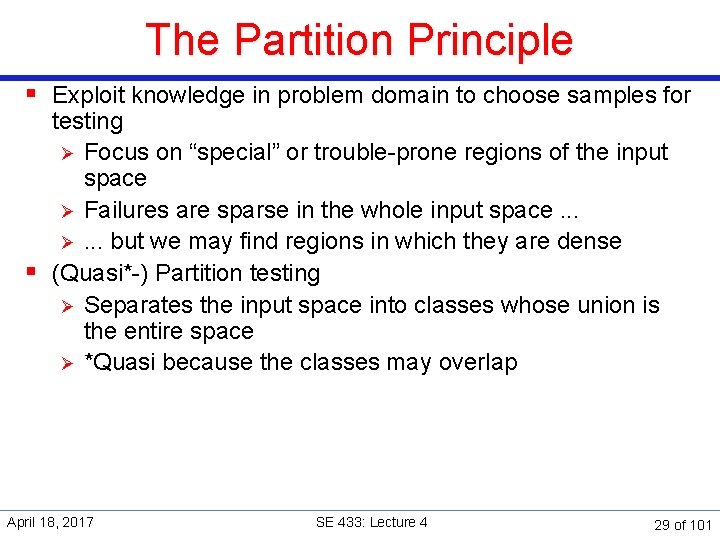

The Partition Principle § Exploit knowledge in problem domain to choose samples for testing Ø Focus on “special” or trouble-prone regions of the input space Ø Failures are sparse in the whole input space. . . Ø. . . but we may find regions in which they are dense § (Quasi*-) Partition testing Ø Separates the input space into classes whose union is the entire space Ø *Quasi because the classes may overlap April 18, 2017 SE 433: Lecture 4 29 of 101

The Partition Principle

- Desirable case for partitioning
  - Input values that lead to failures are dense (easy to find) in some classes of input space
  - Sampling each class in the quasi-partition then selects at least one input value that leads to a failure, revealing the defect
- Seldom guaranteed; depends on experience-based heuristics

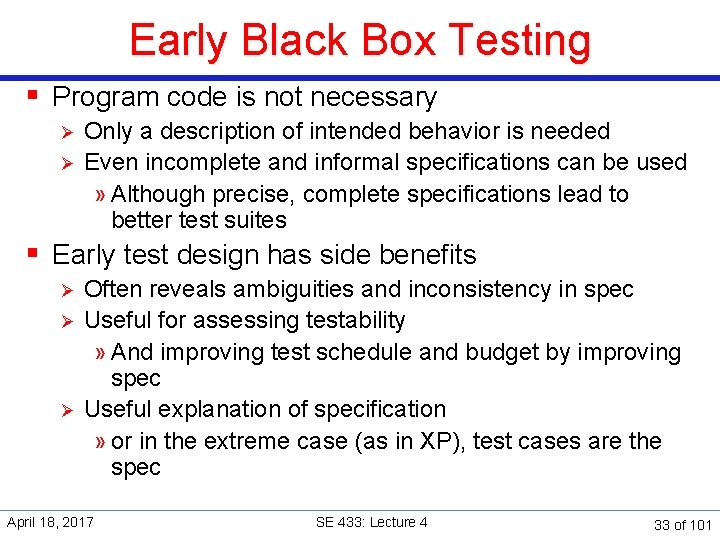

Black Box Testing: Exploiting the functional specification

- Uses the specification to partition the input space
  - e.g., specification of “roots” program suggests division between cases with zero, one, and two real roots
- Test each partition, and boundaries between partitions
  - No guarantees, but experience suggests failures often lie at the boundaries (as in the “roots” program)
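The partition suggested by the “roots” specification can be sketched in Java as follows. This is a minimal illustration under assumptions: the class `Roots`, the enum `Kind`, and the method `classify` are hypothetical names, and a real solver would also need a tolerance for floating-point discriminants close to zero.

```java
// Sketch only: classify quadratic inputs into the partitions named in the specification.
class Roots {
    enum Kind { NOT_QUADRATIC, NO_REAL_ROOTS, ONE_REAL_ROOT, TWO_REAL_ROOTS }

    static Kind classify(double a, double b, double c) {
        if (a == 0.0) {
            return Kind.NOT_QUADRATIC; // special case a = 0: the equation is not quadratic
        }
        double discriminant = b * b - 4 * a * c;
        if (discriminant < 0) {
            return Kind.NO_REAL_ROOTS;
        } else if (discriminant == 0) {
            return Kind.ONE_REAL_ROOT; // boundary between the other two partitions
        }
        return Kind.TWO_REAL_ROOTS;
    }

    public static void main(String[] args) {
        // One representative input per partition, plus the a = 0 special case.
        System.out.println(classify(1, 0, 1));
        System.out.println(classify(1, 2, 1));
        System.out.println(classify(1, -3, 2));
        System.out.println(classify(0, 2, 1));
    }
}
```

A test suite built this way picks at least one input per `Kind` value and pays extra attention to the discriminant-equals-zero boundary.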

Why Black Box Testing?

- Early.
  - can start before code is written
- Effective.
  - find some classes of defects, e.g., missing logic
- Widely applicable
  - any description of program behavior as spec
  - at any level of granularity, from module to system testing.
- Economical
  - less expensive than structural (white box) testing

The base-line technique for designing test cases

Early Black Box Testing

- Program code is not necessary
  - Only a description of intended behavior is needed
  - Even incomplete and informal specifications can be used
    - Although precise, complete specifications lead to better test suites
- Early test design has side benefits
  - Often reveals ambiguities and inconsistency in spec
  - Useful for assessing testability
    - And improving test schedule and budget by improving spec
  - Useful explanation of specification
    - or in the extreme case (as in XP), test cases are the spec

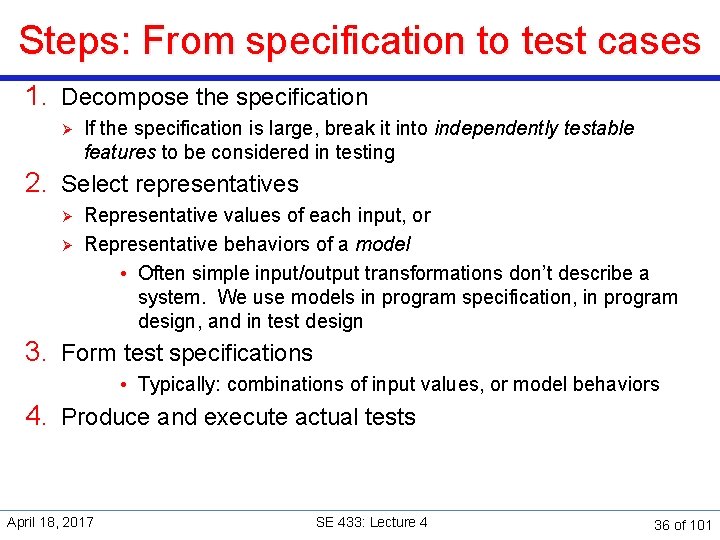

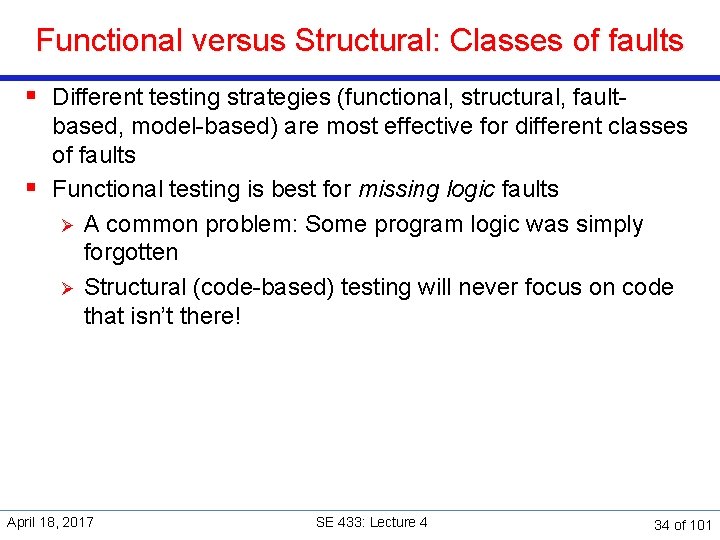

Functional versus Structural: Classes of faults

- Different testing strategies (functional, structural, fault-based, model-based) are most effective for different classes of faults
- Functional testing is best for missing logic faults
  - A common problem: Some program logic was simply forgotten
  - Structural (code-based) testing will never focus on code that isn’t there!

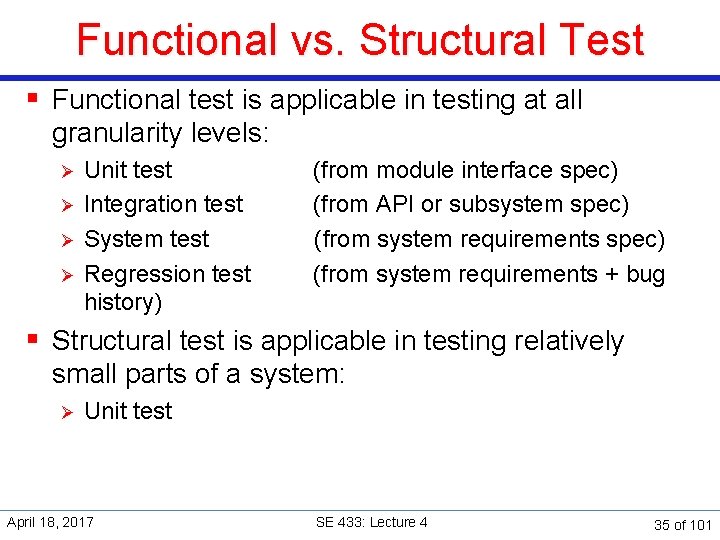

Functional vs. Structural Test

- Functional test is applicable in testing at all granularity levels:
  - Unit test (from module interface spec)
  - Integration test (from API or subsystem spec)
  - System test (from system requirements spec)
  - Regression test (from system requirements + bug history)
- Structural test is applicable in testing relatively small parts of a system:
  - Unit test

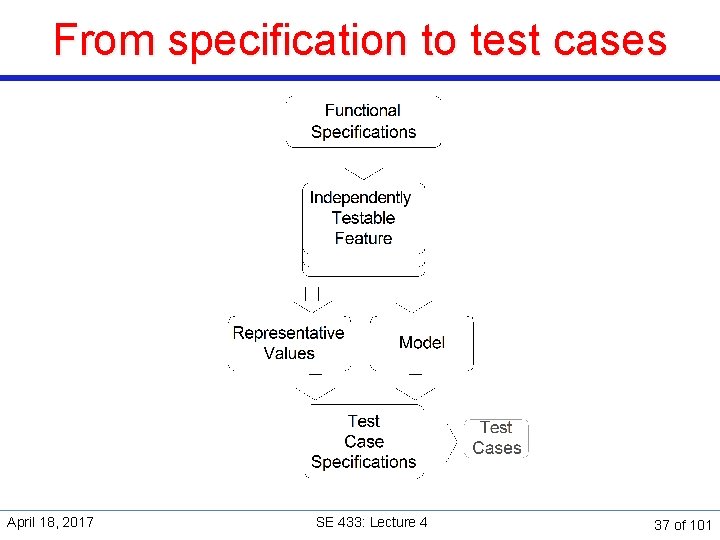

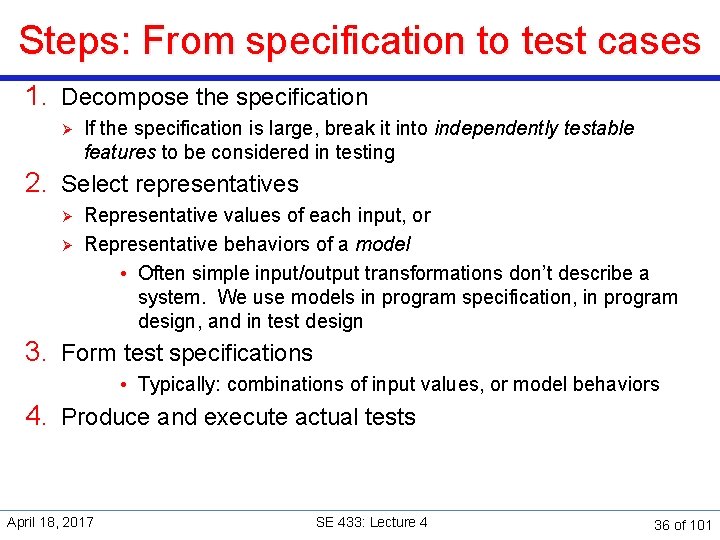

Steps: From specification to test cases

1. Decompose the specification
   - If the specification is large, break it into independently testable features to be considered in testing
2. Select representatives
   - Representative values of each input, or
   - Representative behaviors of a model
     - Often simple input/output transformations don’t describe a system. We use models in program specification, in program design, and in test design
3. Form test specifications
   - Typically: combinations of input values, or model behaviors
4. Produce and execute actual tests

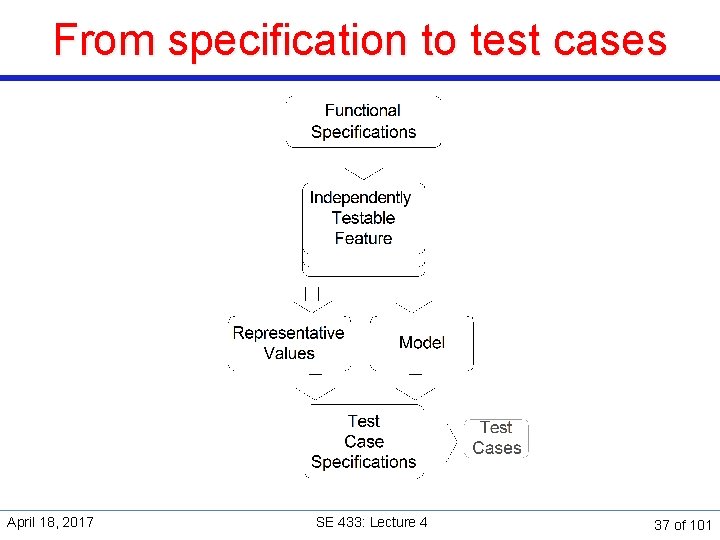

From specification to test cases April 18, 2017 SE 433: Lecture 4 37 of 101

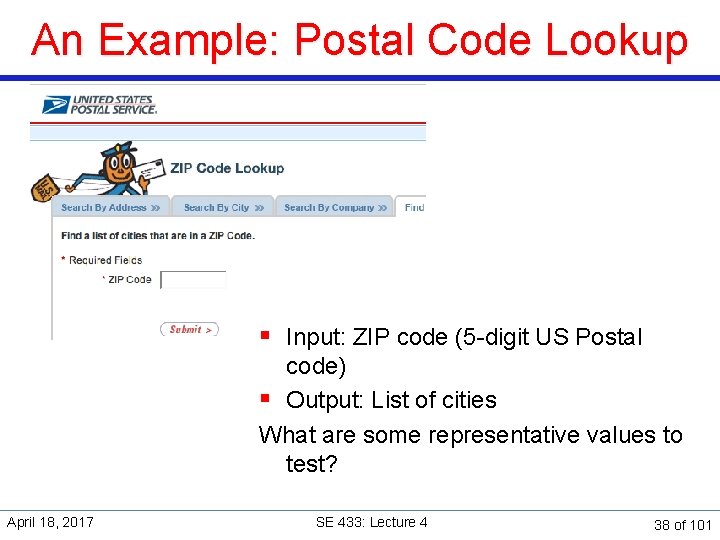

An Example: Postal Code Lookup § Input: ZIP code (5 -digit US Postal code) § Output: List of cities What are some representative values to test? April 18, 2017 SE 433: Lecture 4 38 of 101

Example: Representative Values

Simple example with one input, one output

- Correct zip code
  - With 0, 1, or many cities
- Malformed zip code
  - Empty; 1-4 characters; 6 characters; very long
  - Non-digit characters
  - Non-character data

Note prevalence of boundary values (0 cities, 6 characters) and error cases
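The malformed-input classes can be exercised against a simple format check. The sketch below covers only the well-formedness part of the example; the city lookup itself is not shown, and `ZipCodeCheck.isWellFormed` is a hypothetical helper introduced for illustration.

```java
// Sketch only: a format check for 5-digit ZIP codes, with one sample per input class.
class ZipCodeCheck {
    /** True when the input is exactly five ASCII digits. */
    static boolean isWellFormed(String zip) {
        return zip != null && zip.matches("[0-9]{5}");
    }

    public static void main(String[] args) {
        // Representatives: well-formed, empty, too short, too long, non-digit.
        String[] samples = { "60604", "", "6060", "606040", "6060a" };
        for (String s : samples) {
            System.out.println("\"" + s + "\" -> " + isWellFormed(s));
        }
    }
}
```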

Summary § Functional testing, i. e. , generation of test cases from specifications is a valuable and flexible approach to software testing Ø Applicable from very early system specs right through module specifications § (quasi-)Partition testing suggests dividing the input space into (quasi-)equivalent classes Ø Ø Systematic testing is intentionally non-uniform to address special cases, error conditions, and other small places Dividing a big haystack into small, hopefully uniform piles where the needles might be concentrated April 18, 2017 SE 433: Lecture 4 40 of 101

Basic Techniques of Black Box Testing April 18, 2017 SE 433: Lecture 4 41 of 101

Single Defect Assumption Failures are rarely the result of the simultaneous effects of two (or more) defects. April 18, 2017 SE 433: Lecture 4 42 of 101

Functional Testing Concepts The four key concepts in functional testing are: § Precisely identify the domain of each input and each output variable § Select values from the data domain of each variable having important properties § Consider combinations of special values from different input domains to design test cases § Consider input values such that the program under test produces special values from the domains of the output variables April 18, 2017 SE 433: Lecture 4 43 of 101

Developing Test Cases

- Consider: test cases for an input box accepting numbers between 1 and 1000
  - There is no use in writing a thousand test cases for all 1000 valid input numbers, plus other test cases for invalid data.
- Using the equivalence partitioning method, the test cases can be divided into three sets of input data, called classes. Each test case is a representative of its class.
- Here the three equivalence classes consist of one class of valid inputs and two classes of invalid inputs.

Developing Test Cases

1. One input data class with all valid inputs. Pick a single value from the range 1 to 1000 as a valid test case. If you select other values between 1 and 1000, the result is going to be the same, so one test case for valid input data should be sufficient.
2. An input data class with all values below the lower limit, i.e. any value below 1, as an invalid input data test case.
3. An input data class with any value greater than 1000, to represent the third (invalid) input class.

So using equivalence partitioning you have categorized all possible test cases into three classes. Test cases with other values from any class should give you the same result.
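As a small illustration, the three classes can be checked with one representative each. `RangeInput.accepts` is a hypothetical stand-in for the input box's validation logic, and the representatives 500, -7, and 1500 are arbitrary picks from each class.

```java
// Sketch only: one representative per equivalence class for an input accepting 1..1000.
class RangeInput {
    static final int MIN = 1;
    static final int MAX = 1000;

    /** Hypothetical validation logic of the input box. */
    static boolean accepts(int n) {
        return n >= MIN && n <= MAX;
    }

    public static void main(String[] args) {
        int valid = 500;      // class 1: any value in 1..1000
        int tooSmall = -7;    // class 2: any value below 1
        int tooLarge = 1500;  // class 3: any value above 1000
        System.out.println(accepts(valid));
        System.out.println(accepts(tooSmall));
        System.out.println(accepts(tooLarge));
    }
}
```

Equivalence partitioning alone would not distinguish 1 from 500; boundary value testing adds the values at and next to the limits.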

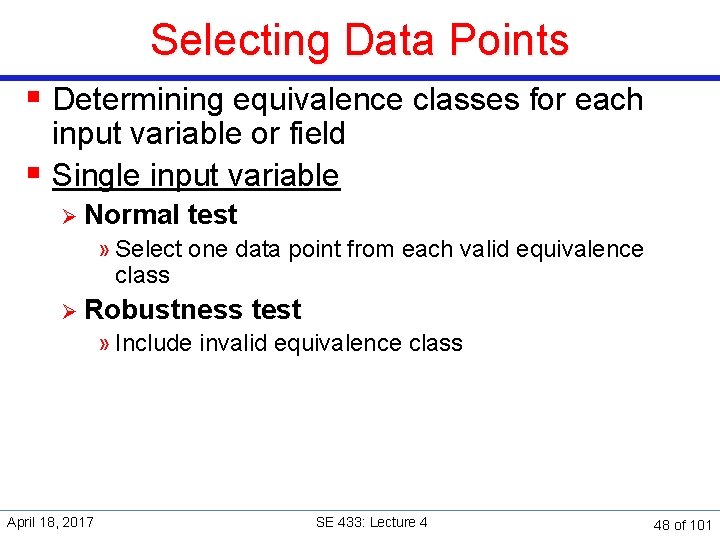

Equivalence Classes § Equivalence classes are the sets of values in a (quasi-) partition of the input, or output domain § Values in an equivalence class cause the program to behave in a similar way: Ø failure or success § Motivation: Ø gain a sense of complete testing and avoid redundancy § First determine the boundaries … then determine the equivalencies April 18, 2017 SE 433: Lecture 4 46 of 101

Determining Equivalence Classes

- Look for ranges of numbers or values
- Look for memberships in groups
- Some may be based on time
- Include invalid inputs
- Look for internal boundaries
- Don’t worry if they overlap with each other
  - better to be redundant than to miss something
- However, test cases will easily overlap with boundary value test cases

Selecting Data Points § Determining equivalence classes for each input variable or field § Single input variable Ø Normal test » Select one data point from each valid equivalence class Ø Robustness test » Include invalid equivalence class April 18, 2017 SE 433: Lecture 4 48 of 101

Selecting Data Points § Multiple input variables Ø Weak normal test: » Select one data point from each valid equivalence class Ø Strong normal test: » Select one data point from each combination of (the cross product of) the valid equivalence classes Ø Weak/strong robustness test: » Include invalid equivalence classes Ø How many test cases do we need? April 18, 2017 SE 433: Lecture 4 49 of 101

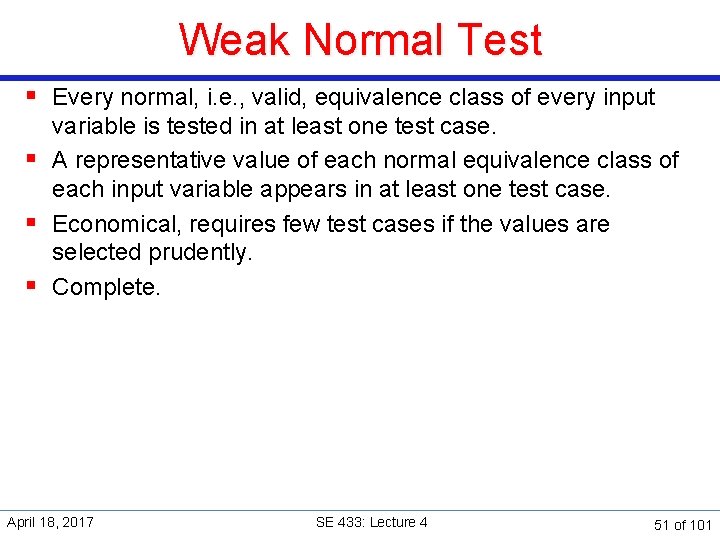

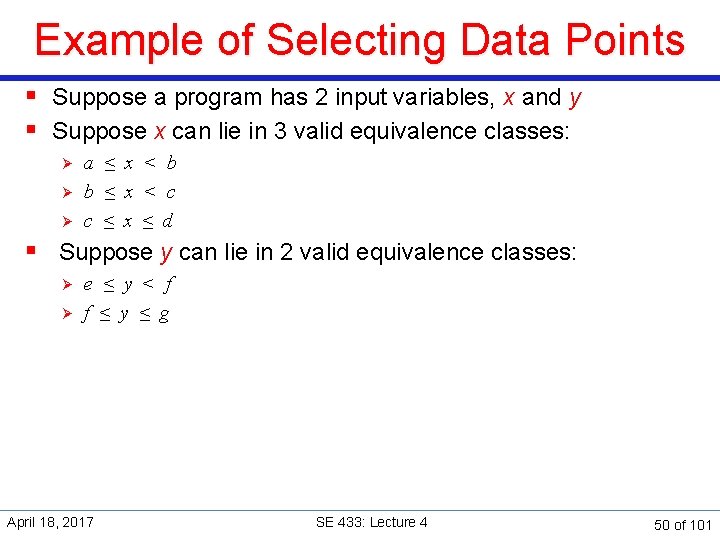

Example of Selecting Data Points

- Suppose a program has 2 input variables, x and y
- Suppose x can lie in 3 valid equivalence classes:
  - a ≤ x < b
  - b ≤ x < c
  - c ≤ x ≤ d
- Suppose y can lie in 2 valid equivalence classes:
  - e ≤ y < f
  - f ≤ y ≤ g
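For this two-variable example, the difference between weak and strong normal testing can be sketched in code. The class `NormalTests` and the labels `x1`..`x3`, `y1`, `y2` (one representative per valid class) are assumptions made for illustration. Weak normal testing needs as many cases as the variable with the most classes; strong normal testing takes the cross product.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch only: build weak and strong normal test cases from class representatives.
class NormalTests {
    /** Weak normal: every class appears in at least one case; smaller lists are reused cyclically. */
    static List<String> weak(List<String> xs, List<String> ys) {
        List<String> cases = new ArrayList<>();
        int n = Math.max(xs.size(), ys.size());
        for (int i = 0; i < n; i++) {
            cases.add(xs.get(i % xs.size()) + "," + ys.get(i % ys.size()));
        }
        return cases;
    }

    /** Strong normal: one case per combination of classes (cross product). */
    static List<String> strong(List<String> xs, List<String> ys) {
        List<String> cases = new ArrayList<>();
        for (String x : xs) {
            for (String y : ys) {
                cases.add(x + "," + y);
            }
        }
        return cases;
    }

    public static void main(String[] args) {
        List<String> xs = List.of("x1", "x2", "x3"); // representatives of the 3 classes of x
        List<String> ys = List.of("y1", "y2");       // representatives of the 2 classes of y
        System.out.println(weak(xs, ys));   // 3 cases
        System.out.println(strong(xs, ys)); // 3 * 2 = 6 cases
    }
}
```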

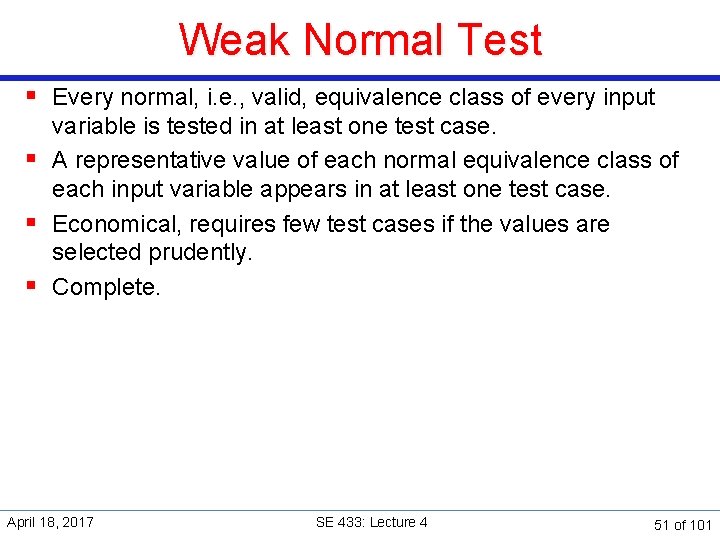

Weak Normal Test § Every normal, i. e. , valid, equivalence class of every input variable is tested in at least one test case. § A representative value of each normal equivalence class of each input variable appears in at least one test case. § Economical, requires few test cases if the values are selected prudently. § Complete. April 18, 2017 SE 433: Lecture 4 51 of 101

Weak Normal Test y g f e a April 18, 2017 b c SE 433: Lecture 4 d x 52 of 101

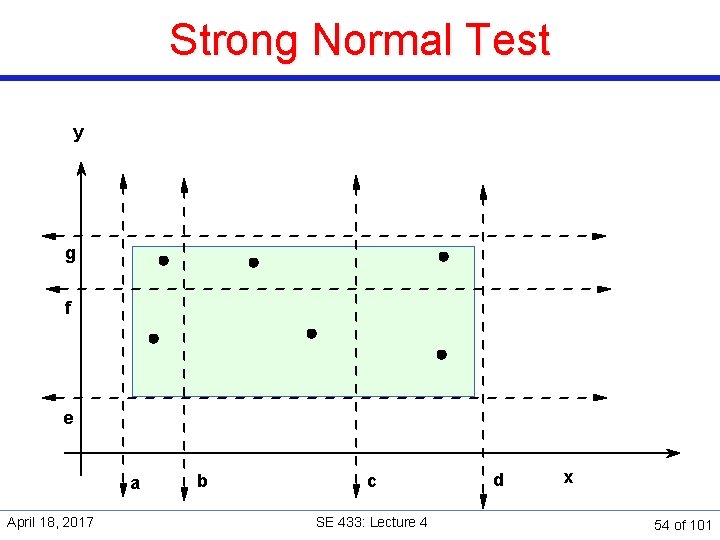

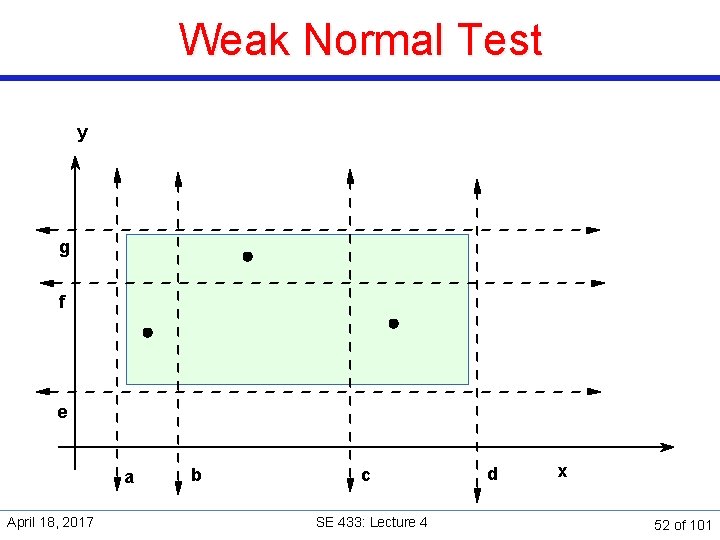

Strong Normal Test § Every combination of normal equivalence classes of every input variable is tested in at least one test cases. § More comprehensive. § Requires more test cases. § May not be practical for programs with large number of input variables. April 18, 2017 SE 433: Lecture 4 53 of 101

Strong Normal Test y g f e a April 18, 2017 b c SE 433: Lecture 4 d x 54 of 101

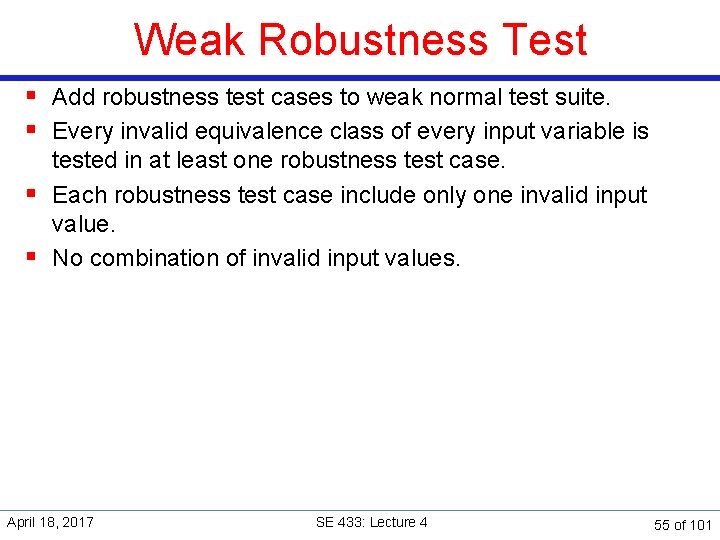

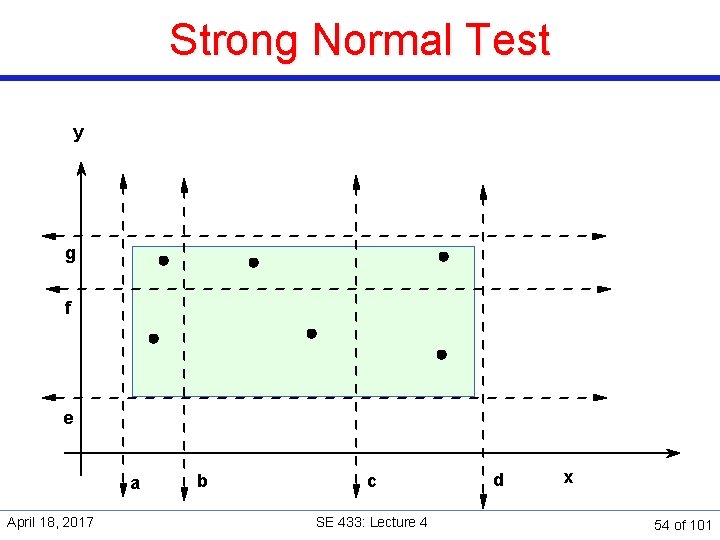

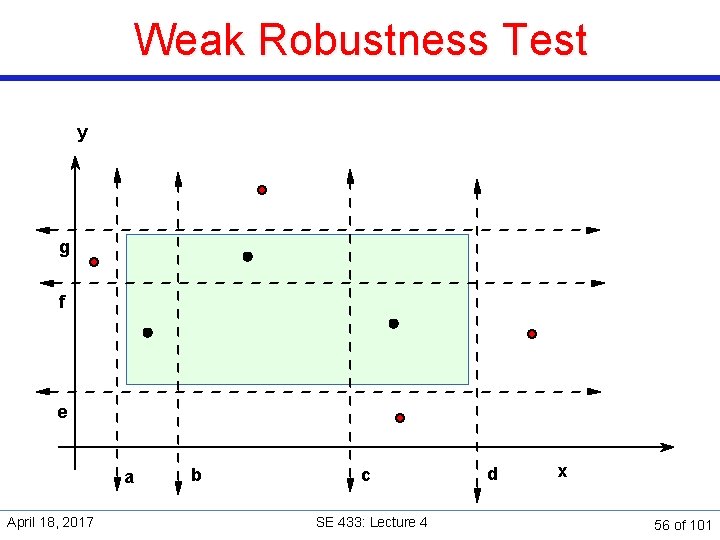

Weak Robustness Test § Add robustness test cases to weak normal test suite. § Every invalid equivalence class of every input variable is tested in at least one robustness test case. § Each robustness test case include only one invalid input value. § No combination of invalid input values. April 18, 2017 SE 433: Lecture 4 55 of 101

Weak Robustness Test y g f e a April 18, 2017 b c SE 433: Lecture 4 d x 56 of 101

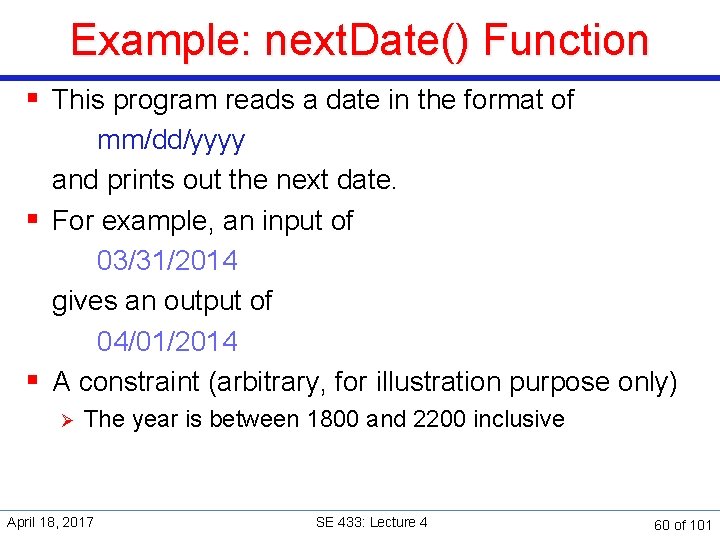

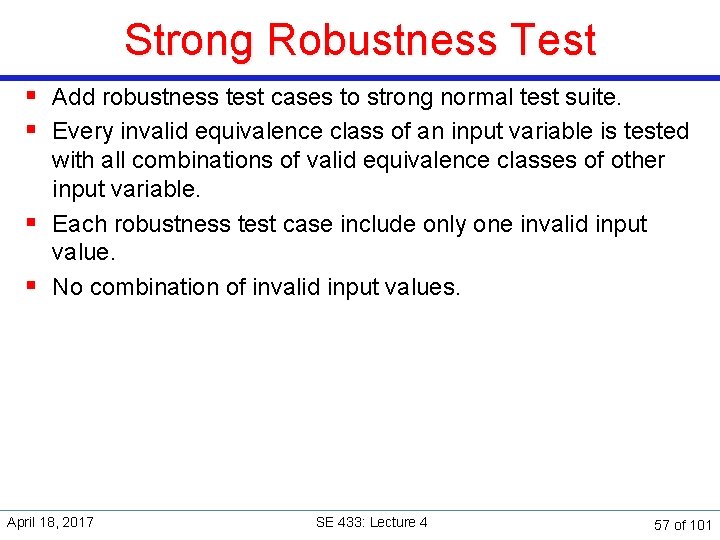

Strong Robustness Test § Add robustness test cases to strong normal test suite. § Every invalid equivalence class of an input variable is tested with all combinations of valid equivalence classes of other input variable. § Each robustness test case include only one invalid input value. § No combination of invalid input values. April 18, 2017 SE 433: Lecture 4 57 of 101

Strong Robustness Test Cases y g f e a April 18, 2017 b c SE 433: Lecture 4 d x 58 of 101

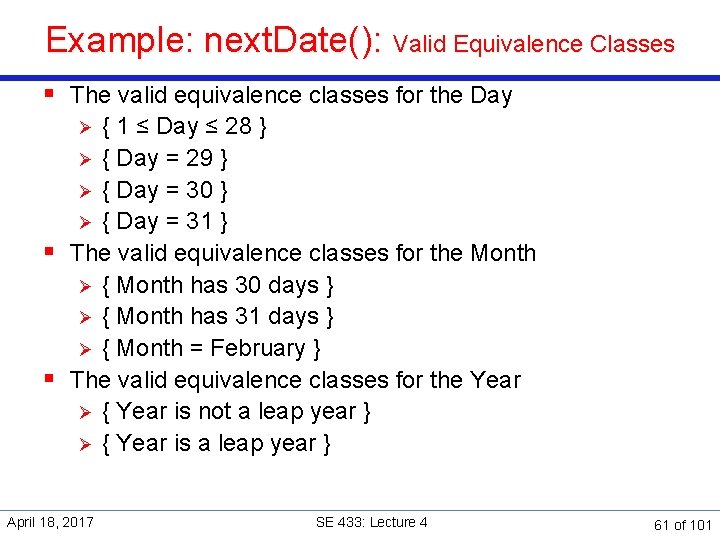

Summary § For Multiple input variables Ø Weak normal test: » Select one data point from each valid equivalence class Ø Strong normal test: » Select one data point from each combination of (the cross product of) the valid equivalence classes Ø Weak/strong robustness test: » Include invalid equivalence classes April 18, 2017 SE 433: Lecture 4 59 of 101

Example: nextDate() Function

- This program reads a date in the format of mm/dd/yyyy and prints out the next date.
- For example, an input of 03/31/2014 gives an output of 04/01/2014
- A constraint (arbitrary, for illustration purpose only)
  - The year is between 1800 and 2200 inclusive
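To make the example concrete, here is one possible sketch of such a function built on `java.time.LocalDate`. It is an assumption about how the program under test might look, not the course's reference implementation; the class name `NextDate` and the choice to signal invalid input with `IllegalArgumentException` are illustrative.

```java
import java.time.DateTimeException;
import java.time.LocalDate;

// Sketch only: a possible nextDate implementation for input in mm/dd/yyyy format.
class NextDate {
    static String nextDate(String input) {
        // Format check: exactly two digits, two digits, four digits.
        if (input == null || !input.matches("[0-9]{2}/[0-9]{2}/[0-9]{4}")) {
            throw new IllegalArgumentException("expected mm/dd/yyyy: " + input);
        }
        String[] parts = input.split("/");
        int month = Integer.parseInt(parts[0]);
        int day = Integer.parseInt(parts[1]);
        int year = Integer.parseInt(parts[2]);
        if (year < 1800 || year > 2200) {
            throw new IllegalArgumentException("year out of range: " + year);
        }
        LocalDate date;
        try {
            date = LocalDate.of(year, month, day); // rejects e.g. 02/30 or month 13
        } catch (DateTimeException e) {
            throw new IllegalArgumentException("not a valid date: " + input, e);
        }
        LocalDate next = date.plusDays(1);
        return String.format("%02d/%02d/%04d",
                next.getMonthValue(), next.getDayOfMonth(), next.getYear());
    }

    public static void main(String[] args) {
        System.out.println(nextDate("03/31/2014"));
        System.out.println(nextDate("02/28/2008"));
    }
}
```

The black-box test cases on the following slides can be run against a function like this without looking at its code.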

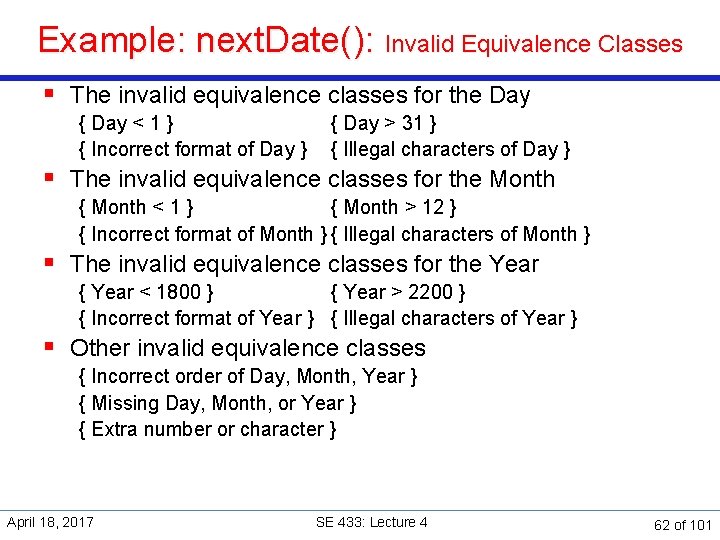

Example: nextDate(): Valid Equivalence Classes

- The valid equivalence classes for the Day
  - { 1 ≤ Day ≤ 28 }
  - { Day = 29 }
  - { Day = 30 }
  - { Day = 31 }
- The valid equivalence classes for the Month
  - { Month has 30 days }
  - { Month has 31 days }
  - { Month = February }
- The valid equivalence classes for the Year
  - { Year is not a leap year }
  - { Year is a leap year }

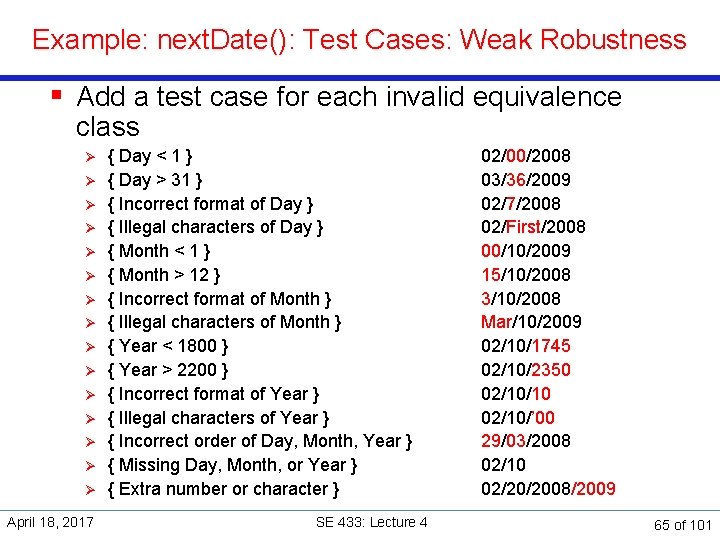

Example: nextDate(): Invalid Equivalence Classes

- The invalid equivalence classes for the Day
  - { Day < 1 }
  - { Day > 31 }
  - { Incorrect format of Day }
  - { Illegal characters of Day }
- The invalid equivalence classes for the Month
  - { Month < 1 }
  - { Month > 12 }
  - { Incorrect format of Month }
  - { Illegal characters of Month }
- The invalid equivalence classes for the Year
  - { Year < 1800 }
  - { Year > 2200 }
  - { Incorrect format of Year }
  - { Illegal characters of Year }
- Other invalid equivalence classes
  - { Incorrect order of Day, Month, Year }
  - { Missing Day, Month, or Year }
  - { Extra number or character }

Example: nextDate(): Test Cases: Weak Normal

Valid equivalence classes and data points

| Variable | Equivalence class | Data point |
|---|---|---|
| Day | { 1 ≤ Day ≤ 28 } | 10 |
| Day | { Day = 29 } | 29 |
| Day | { Day = 30 } | 30 |
| Day | { Day = 31 } | 31 |
| Month | { Month has 30 days } | 04 |
| Month | { Month has 31 days } | 03 |
| Month | { Month = February } | 02 |
| Year | { Year is not a leap year } | 2009 |
| Year | { Year is a leap year } | 2008 |

Weak normal test cases (4 cases)

1. 02/10/2009
2. 04/29/2009
3. 03/30/2008
4. 03/31/2008

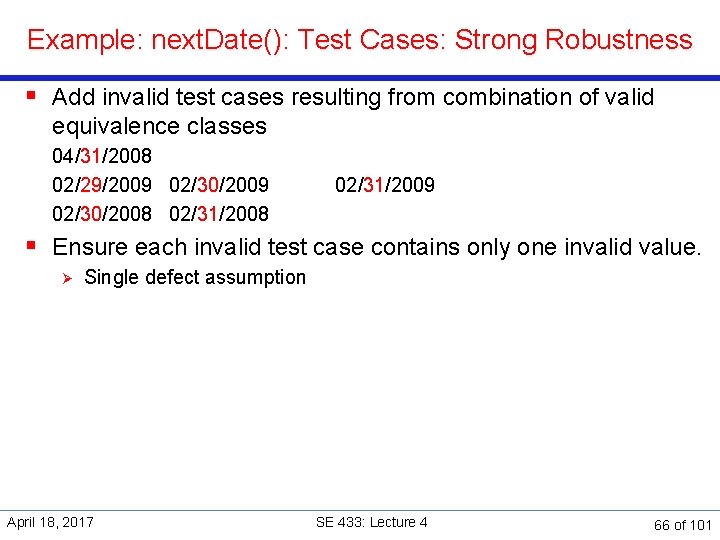

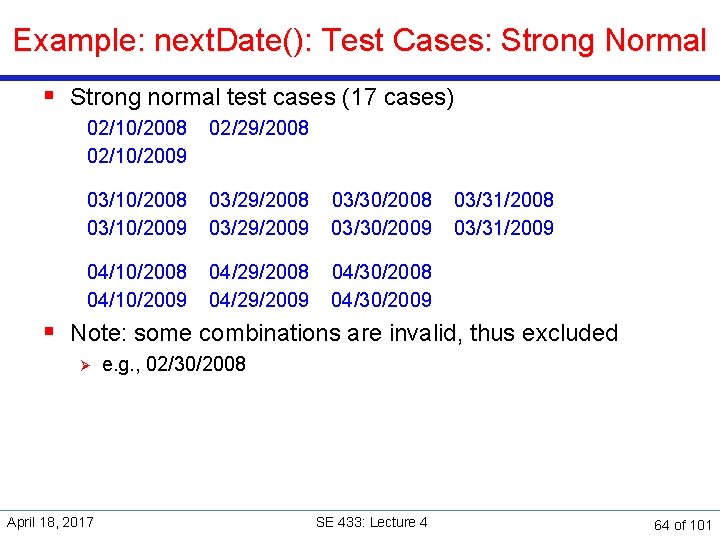

Example: nextDate(): Test Cases: Strong Normal

- Strong normal test cases (17 cases)
  - 02/10/2008, 02/29/2008, 02/10/2009
  - 03/10/2008, 03/29/2008, 03/30/2008, 03/31/2008
  - 03/10/2009, 03/29/2009, 03/30/2009, 03/31/2009
  - 04/10/2008, 04/29/2008, 04/30/2008
  - 04/10/2009, 04/29/2009, 04/30/2009
- Note: some combinations are invalid, thus excluded
  - e.g., 02/30/2008

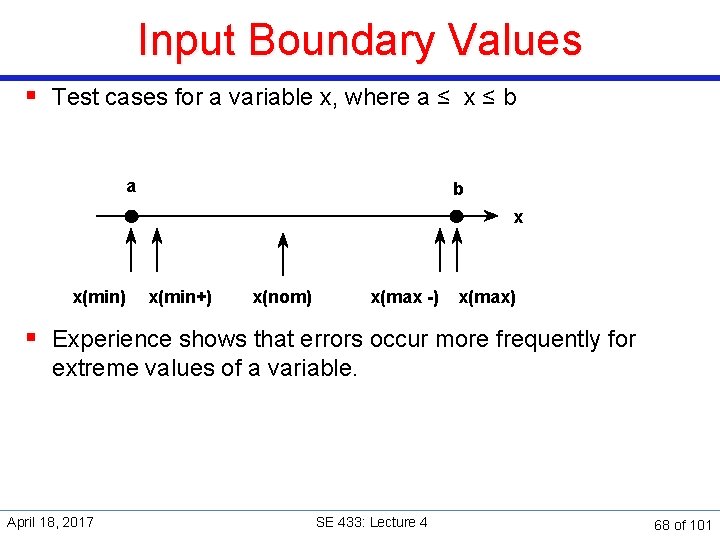

Example: nextDate(): Test Cases: Weak Robustness

Add a test case for each invalid equivalence class

| Invalid equivalence class | Test case |
|---|---|
| { Day < 1 } | 02/00/2008 |
| { Day > 31 } | 03/36/2009 |
| { Incorrect format of Day } | 02/7/2008 |
| { Illegal characters of Day } | 02/First/2008 |
| { Month < 1 } | 00/10/2009 |
| { Month > 12 } | 15/10/2008 |
| { Incorrect format of Month } | 3/10/2008 |
| { Illegal characters of Month } | Mar/10/2009 |
| { Year < 1800 } | 02/10/1745 |
| { Year > 2200 } | 02/10/2350 |
| { Incorrect format of Year } | 02/10/10 |
| { Illegal characters of Year } | 02/10/’00 |
| { Incorrect order of Day, Month, Year } | 29/03/2008 |
| { Missing Day, Month, or Year } | 02/10 |
| { Extra number or character } | 02/20/2008/2009 |

Example: nextDate(): Test Cases: Strong Robustness

- Add invalid test cases resulting from combination of valid equivalence classes
  - 04/31/2008
  - 02/29/2009
  - 02/30/2008
  - 02/31/2009
- Ensure each invalid test case contains only one invalid value.
  - Single defect assumption

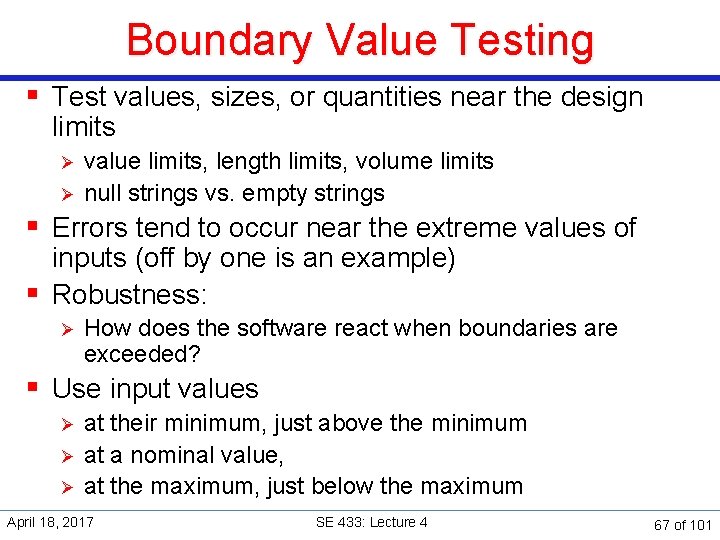

Boundary Value Testing

- Test values, sizes, or quantities near the design limits
  - value limits, length limits, volume limits
  - null strings vs. empty strings
- Errors tend to occur near the extreme values of inputs (off by one is an example)
- Robustness:
  - How does the software react when boundaries are exceeded?
- Use input values
  - at their minimum, just above the minimum
  - at a nominal value
  - at the maximum, just below the maximum

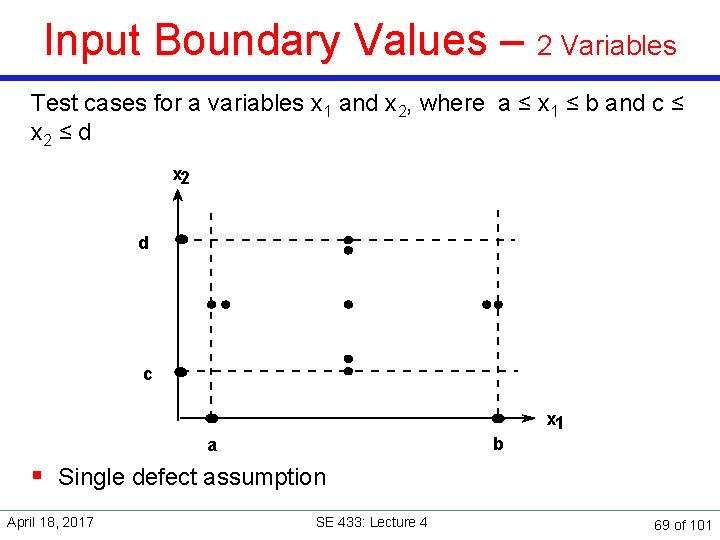

Input Boundary Values

- Test cases for a variable x, where a ≤ x ≤ b: x(min), x(min+), x(nom), x(max-), x(max)
- Experience shows that errors occur more frequently for extreme values of a variable.
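The five values can be generated mechanically for an integer range. In the sketch below, the class `BoundaryValues` is a made-up name, the nominal value is taken as the midpoint (any interior value would do), and the range is assumed to contain at least three integers.

```java
import java.util.Arrays;

// Sketch only: boundary test values for an int variable x with min <= x <= max.
class BoundaryValues {
    /** Returns { min, min+, nom, max-, max }; assumes max - min >= 2. */
    static int[] boundaryValues(int min, int max) {
        int nom = min + (max - min) / 2; // midpoint chosen as the nominal value
        return new int[] { min, min + 1, nom, max - 1, max };
    }

    public static void main(String[] args) {
        // For an input accepting 1..1000:
        System.out.println(Arrays.toString(boundaryValues(1, 1000)));
    }
}
```

Robustness testing extends the same list with `min - 1` and `max + 1`.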

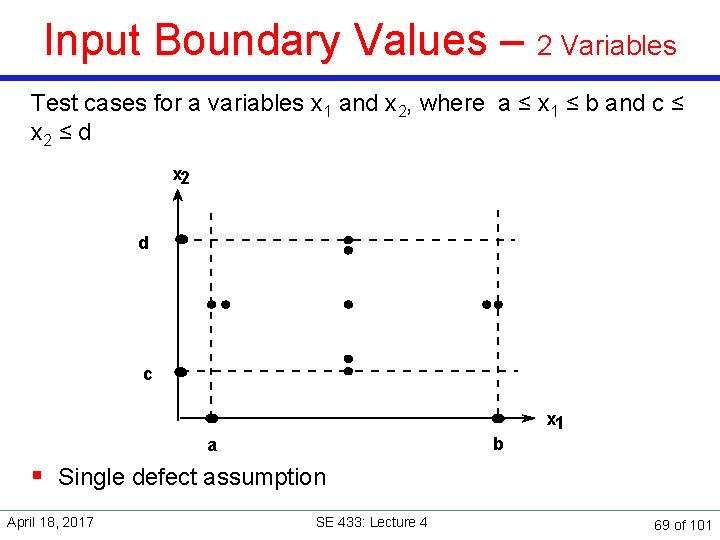

Input Boundary Values – 2 Variables

Test cases for variables x1 and x2, where a ≤ x1 ≤ b and c ≤ x2 ≤ d

[Figure: x1 axis with boundaries a and b; x2 axis with boundaries c and d]

- Single defect assumption

Example: nextDate() – Test Cases: Boundary Values

- Additional test cases, valid input
  - 04/01/2009, 04/30/2009
  - 03/01/2009, 03/31/2009
  - 02/29/2008, 02/28/2009
  - 01/01/1800, 12/31/2008
  - 12/31/2200

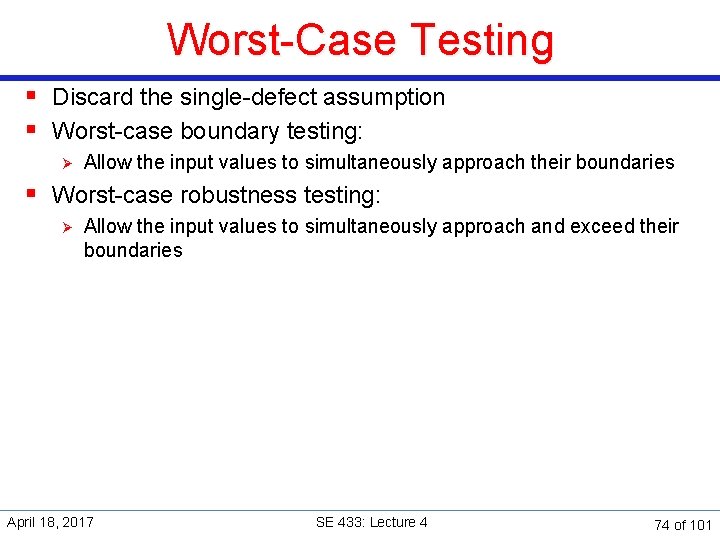

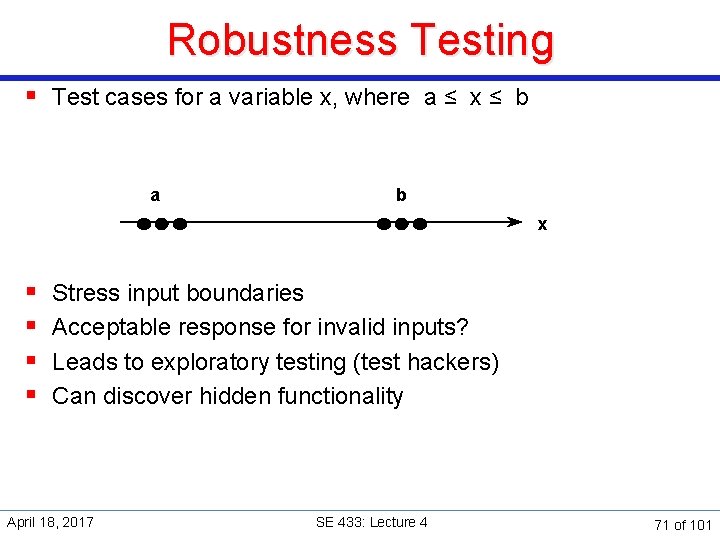

Robustness Testing

- Test cases for a variable x, where a ≤ x ≤ b
- Stress input boundaries
- Acceptable response for invalid inputs?
- Leads to exploratory testing (test hackers)
- Can discover hidden functionality

Robustness Testing – 2 Variables x 2 d c x 1 b a April 18, 2017 SE 433: Lecture 4 72 of 101

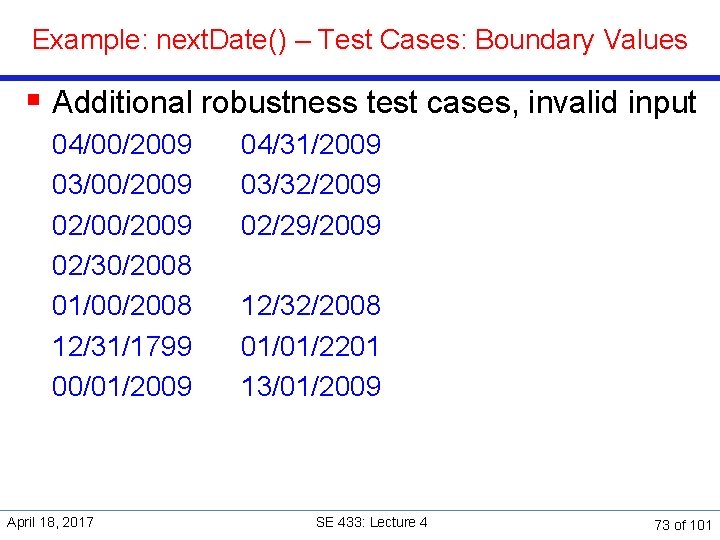

Example: nextDate() – Test Cases: Boundary Values

- Additional robustness test cases, invalid input
  - 04/00/2009, 04/31/2009
  - 03/00/2009, 03/32/2009
  - 02/30/2008, 02/29/2009
  - 01/00/2008, 12/32/2008
  - 12/31/1799, 01/01/2201
  - 00/01/2009, 13/01/2009

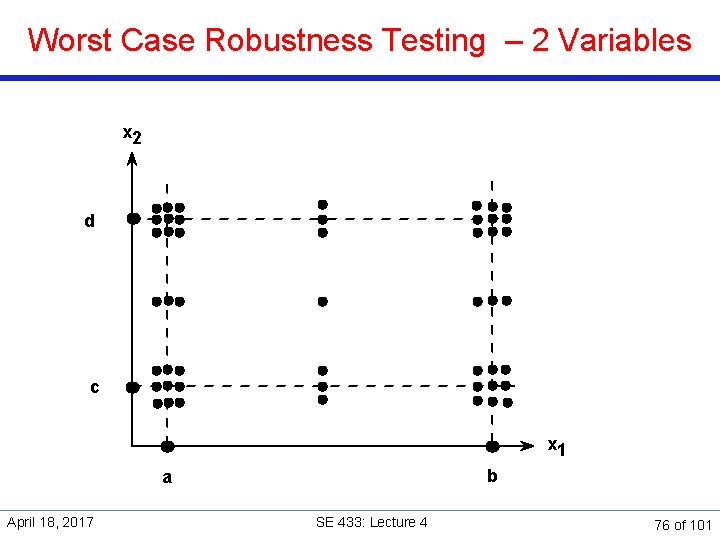

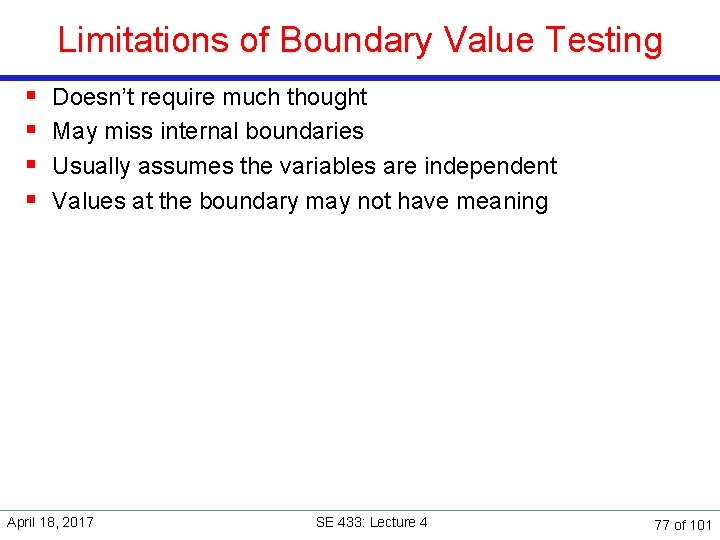

Worst-Case Testing § Discard the single-defect assumption § Worst-case boundary testing: Ø Allow the input values to simultaneously approach their boundaries § Worst-case robustness testing: Ø Allow the input values to simultaneously approach and exceed their boundaries April 18, 2017 SE 433: Lecture 4 74 of 101
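A rough sketch of how worst-case test inputs can be generated for two integer variables: take the per-variable boundary values and form their cross product. The class `WorstCase` and its methods are illustrative assumptions, and the nominal value is again the midpoint. With five values per variable this yields 25 pairs, and with the two out-of-range values added it yields 49.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch only: worst-case boundary and robustness inputs for two int variables.
class WorstCase {
    /** Per-variable values; robust adds the values just outside the range. */
    static int[] values(int min, int max, boolean robust) {
        int nom = min + (max - min) / 2;
        return robust
                ? new int[] { min - 1, min, min + 1, nom, max - 1, max, max + 1 }
                : new int[] { min, min + 1, nom, max - 1, max };
    }

    /** Cross product for a <= x1 <= b and c <= x2 <= d. */
    static List<int[]> pairs(int a, int b, int c, int d, boolean robust) {
        List<int[]> out = new ArrayList<>();
        for (int x1 : values(a, b, robust)) {
            for (int x2 : values(c, d, robust)) {
                out.add(new int[] { x1, x2 });
            }
        }
        return out;
    }

    public static void main(String[] args) {
        // Example ranges: month 1..12 and day 1..31.
        System.out.println(pairs(1, 12, 1, 31, false).size()); // 5 * 5 pairs
        System.out.println(pairs(1, 12, 1, 31, true).size());  // 7 * 7 pairs
    }
}
```

The count grows exponentially with the number of variables, which is the price of discarding the single-defect assumption.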

Worst Case Boundary Testing – 2 Variables [Figure: x1 axis with boundaries a and b; x2 axis with boundaries c and d]

Worst Case Robustness Testing – 2 Variables [Figure: x1 axis with boundaries a and b; x2 axis with boundaries c and d]

Limitations of Boundary Value Testing

- Doesn’t require much thought
- May miss internal boundaries
- Usually assumes the variables are independent
- Values at the boundary may not have meaning

Special Value Testing § The most widely practiced form of functional testing § The tester uses his or her domain knowledge, experience, or intuition to probe areas of probable errors § Other terms: “hacking”, “out-of-box testing”, “ad hoc testing”, “seat of the pants testing”, “guerilla testing” April 18, 2017 SE 433: Lecture 4 78 of 101

Uses of Special Value Testing

- Complex mathematical (or algorithmic) calculations
- Worst case situations (similar to robustness)
- Problematic situations from past experience
- “Second guess” the likely implementation

Characteristics of Special Value Testing § Experience really helps § Frequently done by the customer or user § Defies measurement § Highly intuitive § Seldom repeatable § Often, very effective April 18, 2017 SE 433: Lecture 4 80 of 101

Summary: Key Concepts

- Black-box testing
  - vs. random testing, white-box testing
  - Partitioning principle
- Black box testing techniques
  - Equivalence class
  - Boundary value testing
  - Special value testing
- Single defect assumption
- Normal vs. robustness testing
- Weak and strong combinations

Guidelines and observations § Equivalence Class Testing is appropriate when input data is defined in terms of intervals and sets of discrete values. § Equivalence Class Testing is strengthened when combined with Boundary Value Testing § Strong equivalence takes the presumption that variables are independent. If that is not the case, redundant test cases may be generated April 18, 2017 SE 433: Lecture 4 82 of 101

An Introduction to JUnit Part 2 April 18, 2017 SE 433: Lecture 4 83 of 101

JUnit Best Practices § Each test case should be independent. § Test cases should be independent of execution order. § No dependencies on the state of previous tests. April 18, 2017 SE 433: Lecture 4 84 of 101

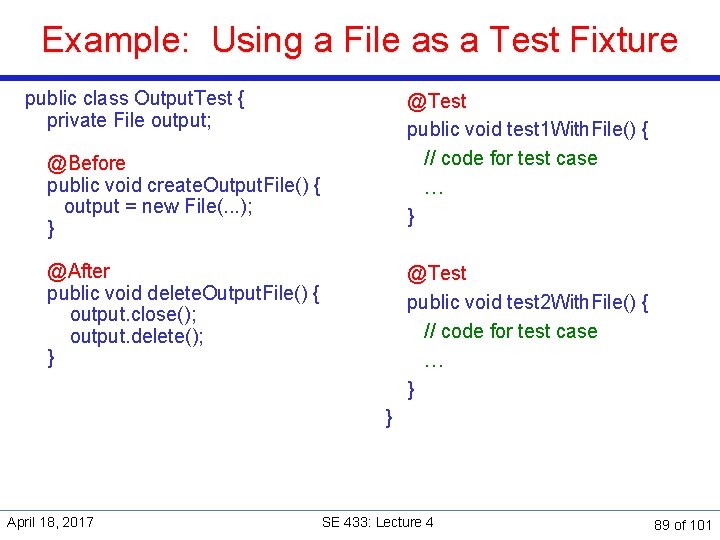

JUnit Test Fixtures

- The context in which a test case is executed.
- Typically include:
  - Common objects or resources that are available for use by any test case.
- Activities to manage these objects
  - Set-up: object and resource allocation
  - Tear-down: object and resource de-allocation

Set-Up § Tasks that must be done prior to each test case § Examples: Ø Create some objects to work with Ø Open a network connection Ø Open a file to read/write April 18, 2017 SE 433: Lecture 4 86 of 101

Tear-Down § Tasks to clean up after execution of each test case. § Ensures Ø Resources are released Ø the system is in a known state for the next test case § Clean up should not be done at the end of a test case, Ø since a failure ends execution of a test case at that point April 18, 2017 SE 433: Lecture 4 87 of 101

Method Annotations for Set-Up and Tear-Down

- @Before annotation: set-up
  - code to run before each test case.
- @After annotation: tear-down
  - code to run after each test case.
  - will run regardless of the verdict, even if exceptions are thrown in the test case or an assertion fails.
- Multiple annotations are allowed
  - all methods annotated with @Before will be run before each test case
  - but no guarantee of execution order

Example: Using a File as a Test Fixture

    import java.io.File;
    import org.junit.After;
    import org.junit.Before;
    import org.junit.Test;

    public class OutputTest {
        private File output;

        @Before
        public void createOutputFile() {
            output = new File(...);
        }

        @After
        public void deleteOutputFile() {
            // java.io.File has no close() method; only streams opened on the file need closing
            output.delete();
        }

        @Test
        public void test1WithFile() {
            // code for test case …
        }

        @Test
        public void test2WithFile() {
            // code for test case …
        }
    }

Method Execution Order
1. createOutputFile()
2. test1WithFile()
3. deleteOutputFile()
4. createOutputFile()
5. test2WithFile()
6. deleteOutputFile()
Not guaranteed: test1WithFile runs before test2WithFile (the two test cases may run in either order).
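The execution order above can be imitated in plain Java to see why tear-down belongs in its own method rather than at the end of a test case. This is a simplified illustration only, not how JUnit is implemented: `LifecycleSketch` and `runOne` are hypothetical names, and the log entries simply borrow the method names from the fixture example.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified illustration; NOT JUnit's actual implementation.
class LifecycleSketch {
    static final List<String> log = new ArrayList<>();

    // Mimics what a runner does for ONE test case: set-up, test body, tear-down.
    // Returns true if the test body completed without failing.
    static boolean runOne(String testName, Runnable testBody) {
        log.add("createOutputFile()");      // plays the role of the @Before method
        try {
            log.add(testName + "()");
            testBody.run();
            return true;
        } catch (AssertionError | RuntimeException e) {
            return false;                   // the test case failed
        } finally {
            log.add("deleteOutputFile()");  // plays the role of @After: runs on pass or fail
        }
    }

    public static void main(String[] args) {
        // The first test case fails on purpose; tear-down is still logged.
        runOne("test1WithFile", () -> { throw new AssertionError("simulated failure"); });
        runOne("test2WithFile", () -> { });
        log.forEach(System.out::println);
    }
}
```

Running `main` prints the same six steps as the list above, even though the first test case fails, because the tear-down step sits in a `finally` block outside the test body.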

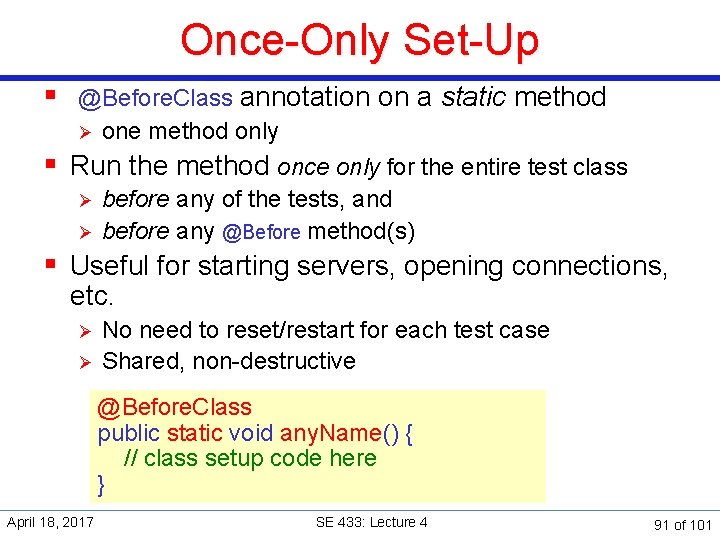

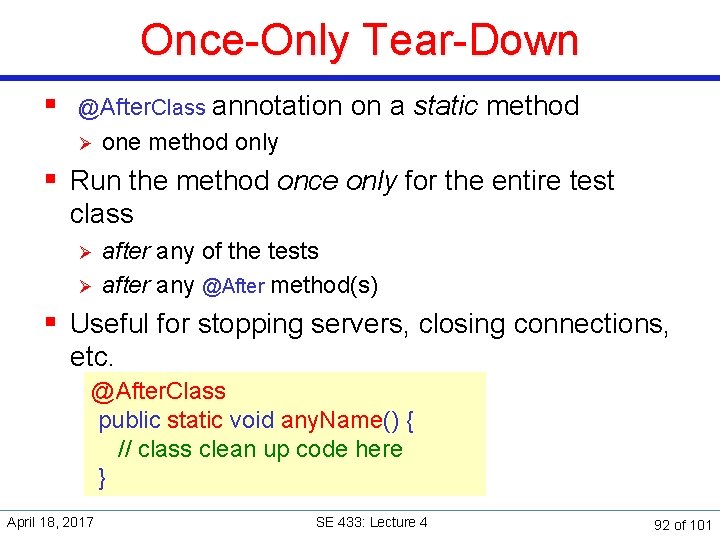

Once-Only Set-Up
§ @BeforeClass annotation on a static method
  Ø one method only
§ Run the method once only for the entire test class
  Ø before any of the tests, and
  Ø before any @Before method(s)
§ Useful for starting servers, opening connections, etc.
  Ø No need to reset/restart for each test case
  Ø Shared, non-destructive

    @BeforeClass
    public static void anyName() {
        // class setup code here
    }

Once-Only Tear-Down
§ @AfterClass annotation on a static method
  Ø one method only
§ Run the method once only for the entire test class
  Ø after all of the tests, and
  Ø after any @After method(s)
§ Useful for stopping servers, closing connections, etc.

    @AfterClass
    public static void anyName() {
        // class clean up code here
    }

Timed Tests
§ Useful for simple performance tests
  Ø Network communication
  Ø Complex computation
§ The timeout parameter of the @Test annotation
  Ø in milliseconds

    @Test(timeout=5000)
    public void testLengthyOperation() {
        ...
    }

§ The test fails
  Ø if the timeout occurs before the test method completes
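One way to picture the timeout rule is to run the test body on a separate thread and wait for it only as long as the timeout allows. The sketch below uses the standard `java.util.concurrent` classes. `TimeoutSketch`, `passesWithin`, and `sleepTwoSeconds` are hypothetical names, and the mechanism JUnit itself uses may differ.

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

// Simplified illustration of a timed test; NOT JUnit's actual implementation.
class TimeoutSketch {
    // Returns true only if testBody finishes normally within timeoutMillis.
    static boolean passesWithin(long timeoutMillis, Runnable testBody) {
        ExecutorService executor = Executors.newSingleThreadExecutor();
        Future<?> result = executor.submit(testBody);
        try {
            result.get(timeoutMillis, TimeUnit.MILLISECONDS);
            return true;
        } catch (TimeoutException e) {
            return false;                        // timeout occurred first: the test fails
        } catch (ExecutionException e) {
            return false;                        // the test body itself threw
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();  // preserve the interrupt status
            return false;
        } finally {
            executor.shutdownNow();              // interrupt the body if it is still running
        }
    }

    // A deliberately slow operation, standing in for a lengthy test body.
    static void sleepTwoSeconds() {
        try {
            Thread.sleep(2000);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    public static void main(String[] args) {
        System.out.println(passesWithin(5000, () -> { }));                 // fast body
        System.out.println(passesWithin(100, TimeoutSketch::sleepTwoSeconds)); // slow body
    }
}
```

A timing-based verdict depends on the machine running the test, which is one reason the slide limits timed tests to simple performance checks.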

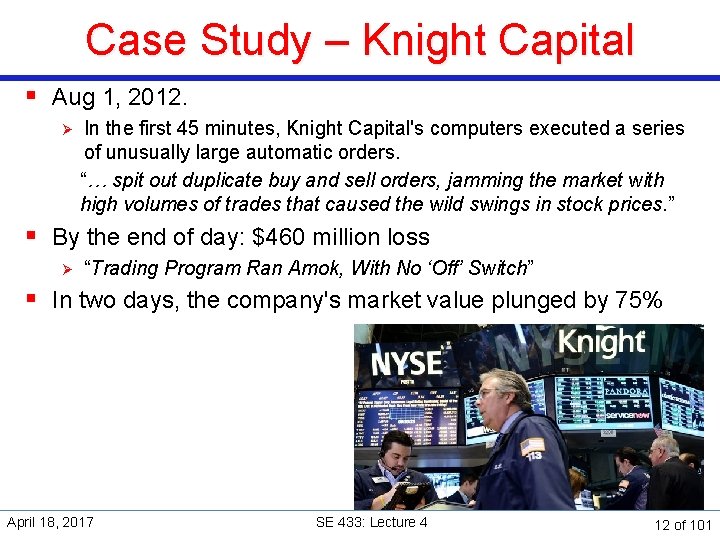

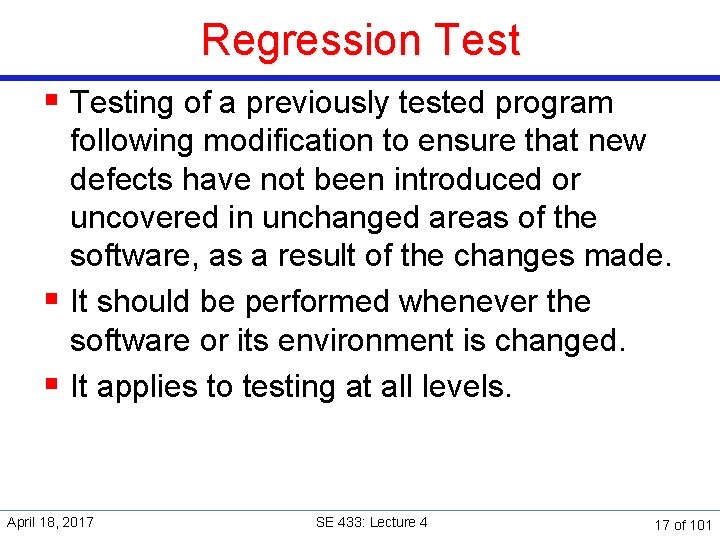

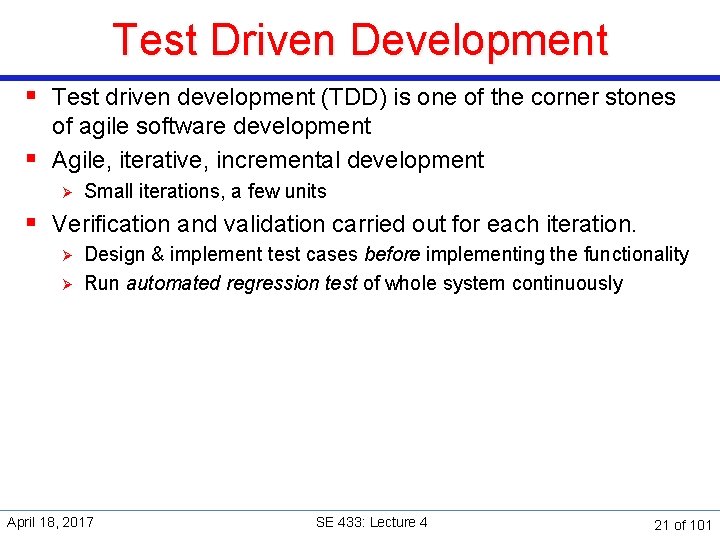

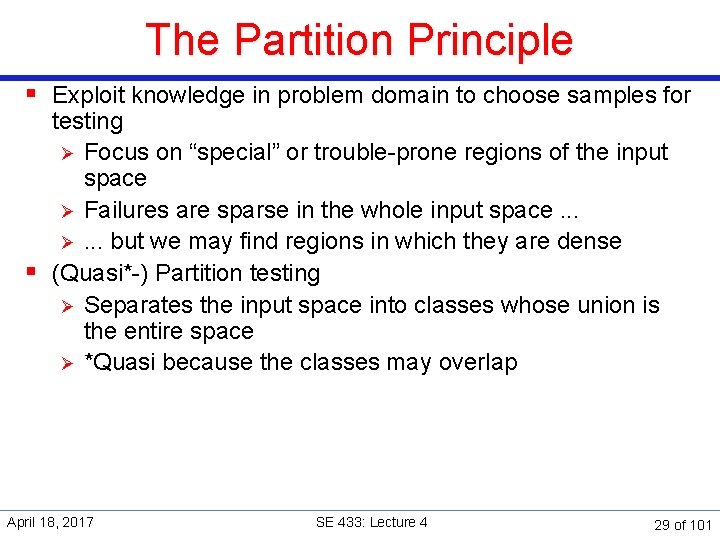

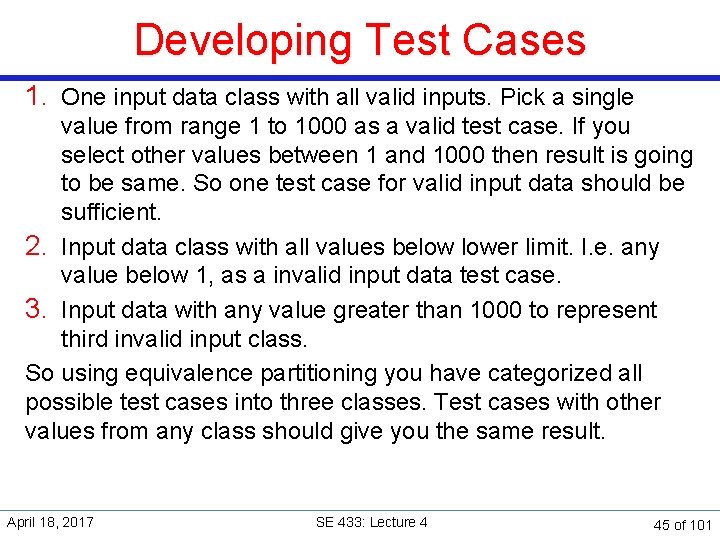

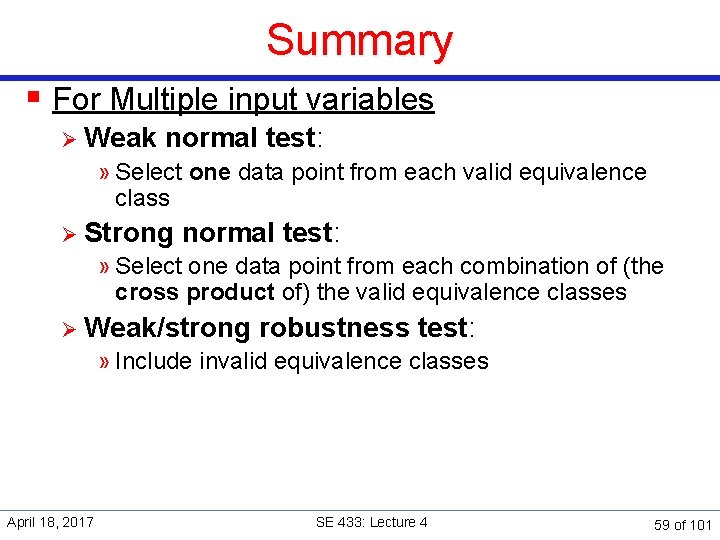

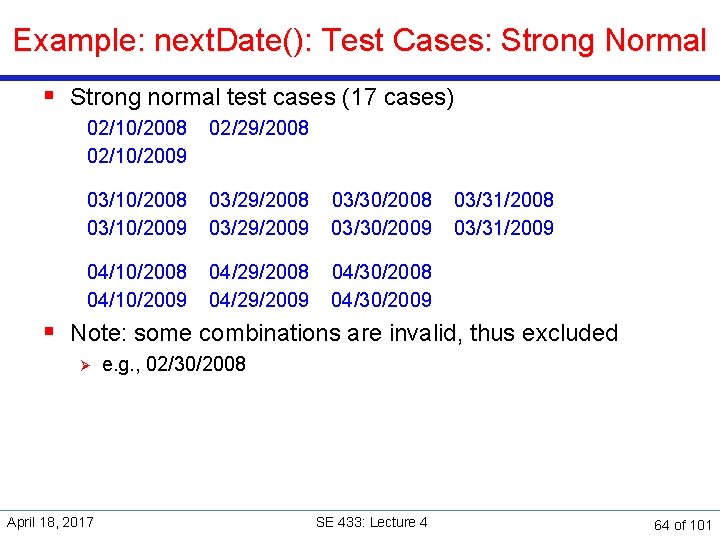

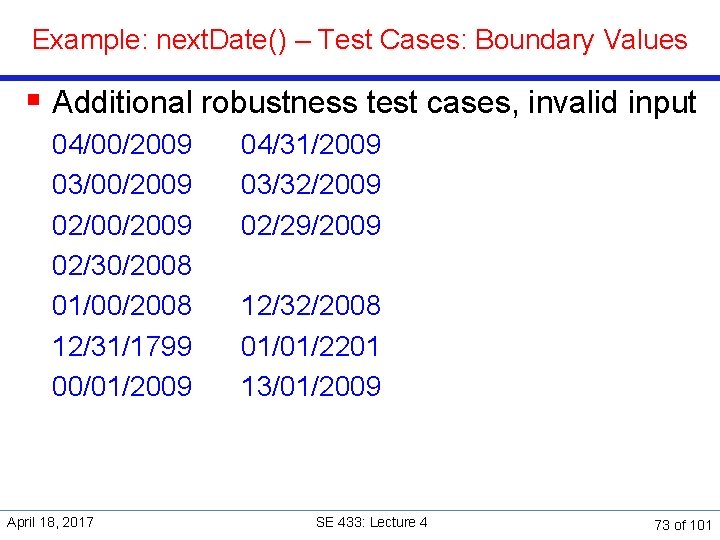

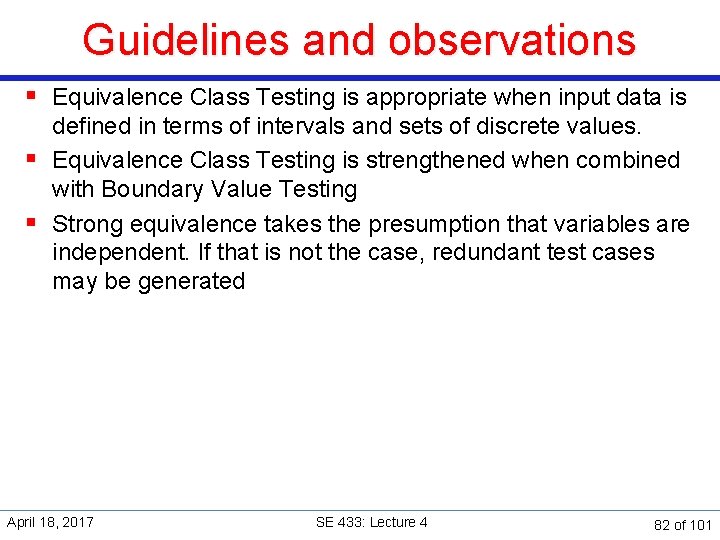

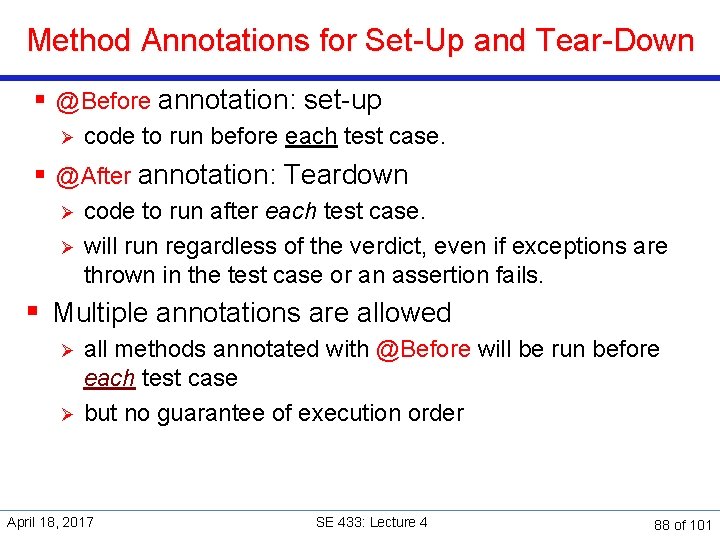

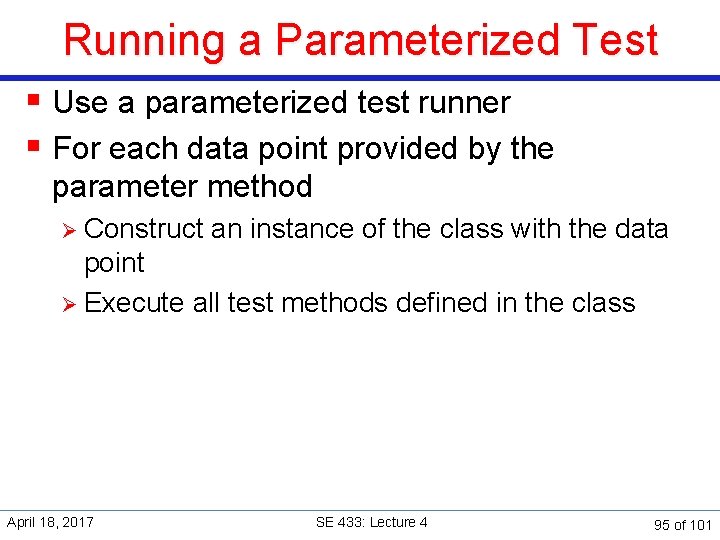

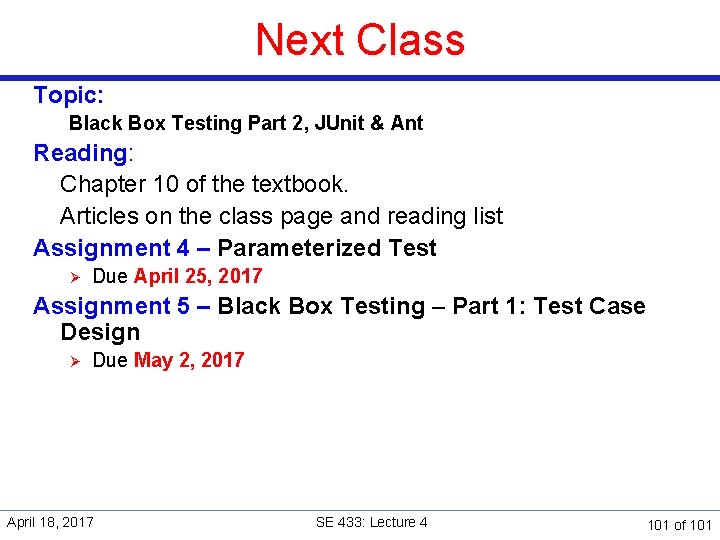

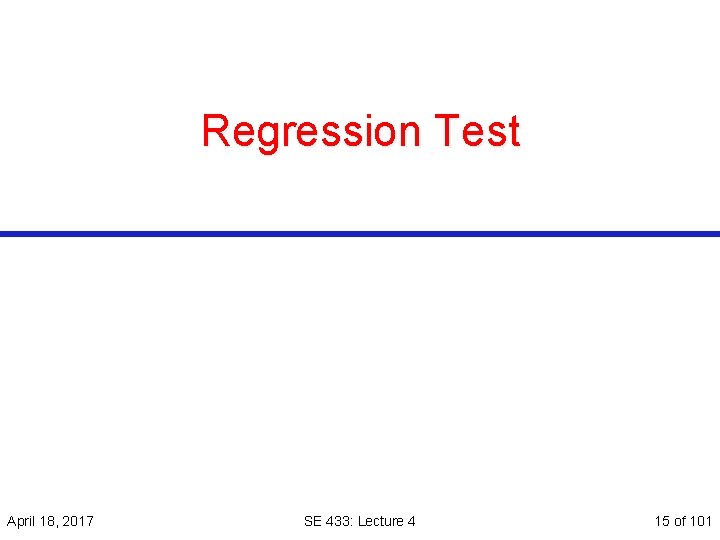

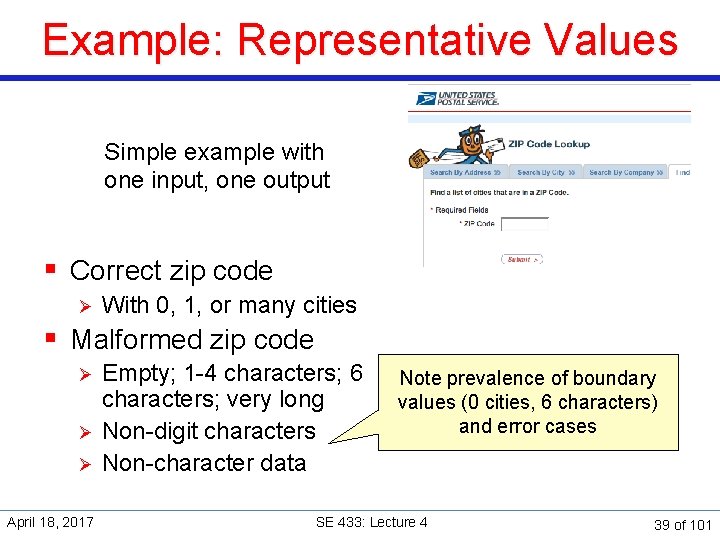

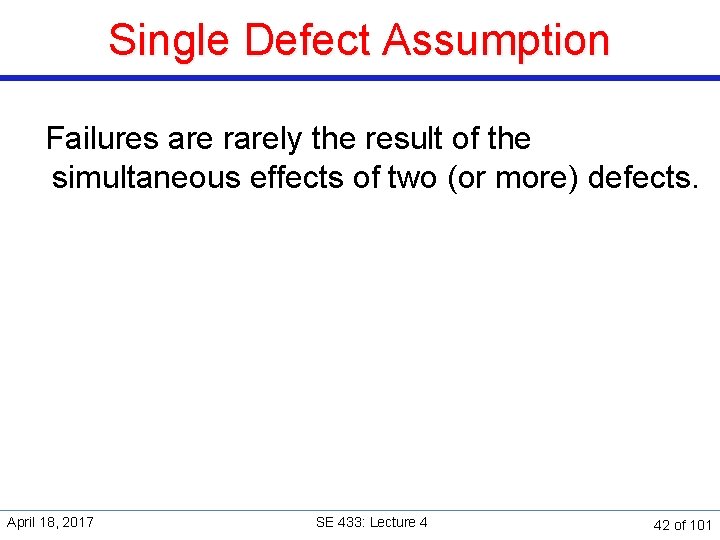

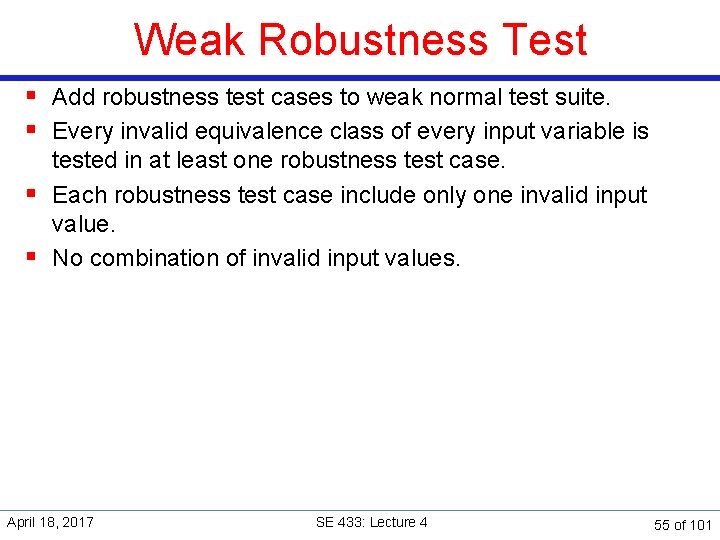

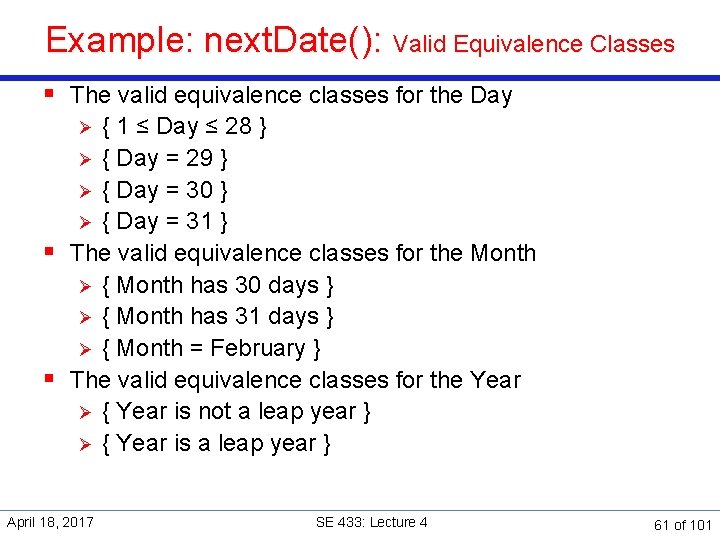

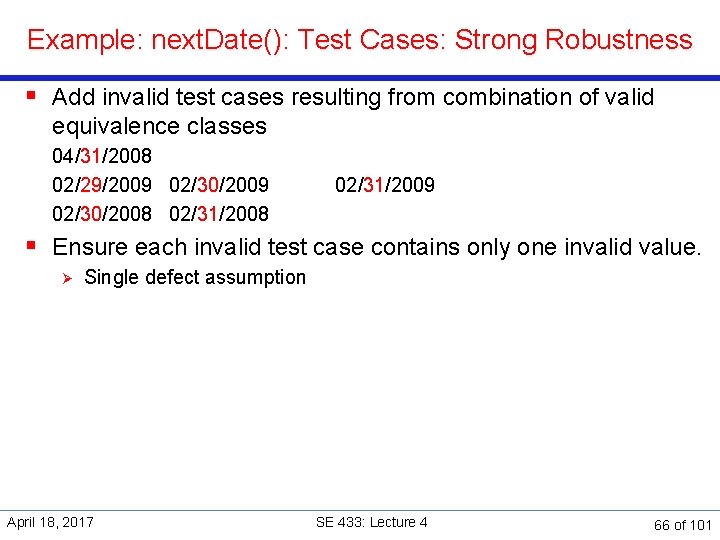

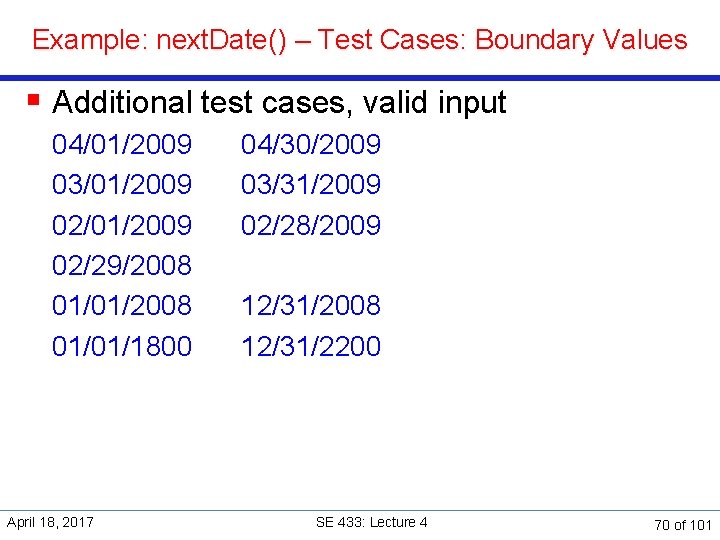

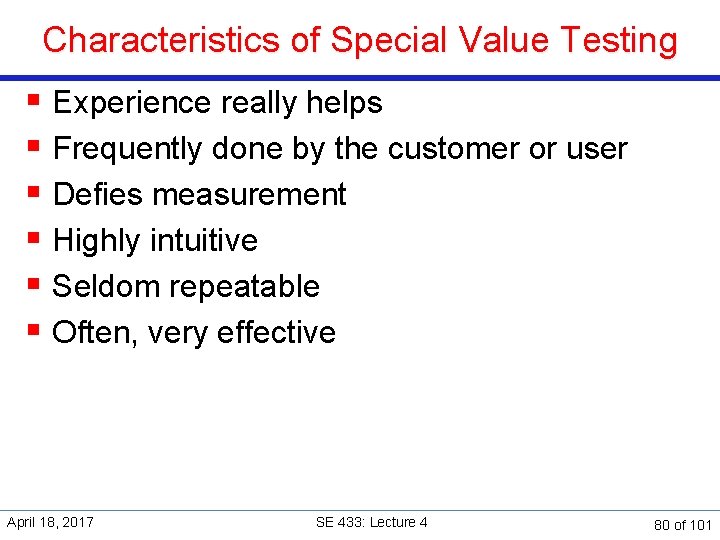

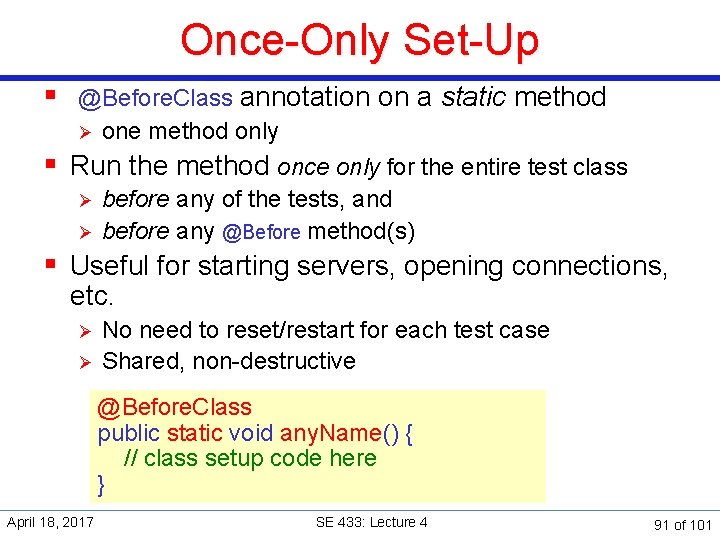

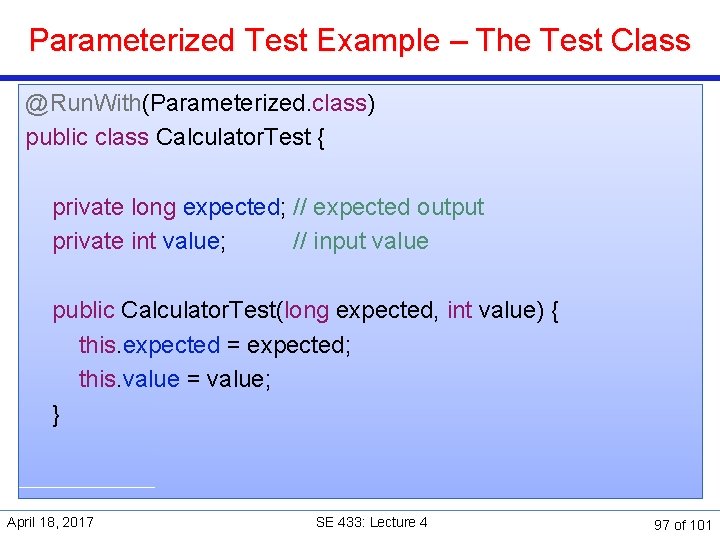

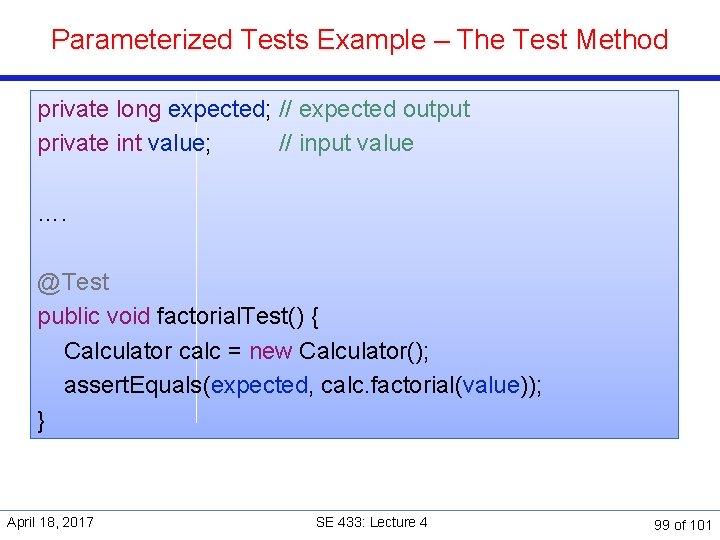

Parameterized Tests
§ Repeat a test case multiple times with different data
§ Define a parameterized test
  Ø Class annotation, defines a test runner: @RunWith(Parameterized.class)
  Ø Define a constructor
    » Input and expected output values for one data point
  Ø Define a static method that returns a Collection of data points
    » Annotation: @Parameters (not @Parameter, which marks fields for value injection as an alternative to the constructor)
    » Each data point: an array, whose elements match the constructor arguments

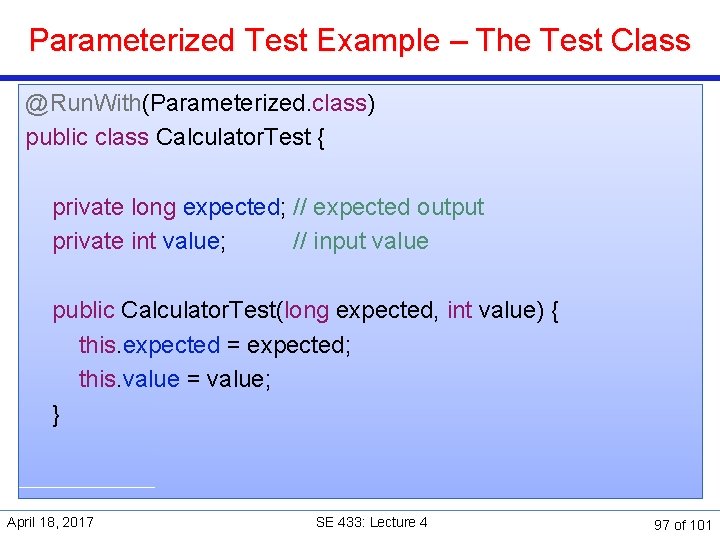

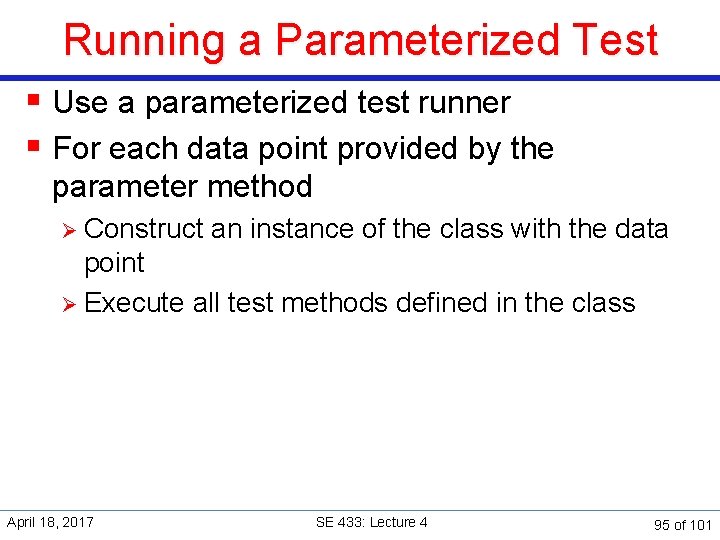

Running a Parameterized Test
§ Use a parameterized test runner
§ For each data point provided by the parameter method
  Ø Construct an instance of the class with the data point
  Ø Execute all test methods defined in the class
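The loop described above can be sketched in plain Java. This is only an illustration of the idea, not the code of JUnit's Parameterized runner: `SquareTest`, `ParameterizedLoopSketch`, and the data points are all hypothetical.

```java
import java.util.Arrays;
import java.util.List;

// Plays the role of a parameterized test class (hypothetical example).
class SquareTest {
    private final int input;
    private final int expected;

    // One instance is constructed per data point.
    SquareTest(int input, int expected) {
        this.input = input;
        this.expected = expected;
    }

    // Plays the role of a @Test method; returns true if the check passes.
    boolean squareIsCorrect() {
        return input * input == expected;
    }
}

// Simplified illustration of the runner's loop; NOT JUnit's implementation.
class ParameterizedLoopSketch {
    // Plays the role of the parameter method: each array is one data point.
    static List<int[]> data() {
        return Arrays.asList(new int[][] { { 0, 0 }, { 3, 9 }, { -4, 16 } });
    }

    // Constructs one instance per data point, runs its test method,
    // and counts how many data points pass.
    static int runAll() {
        int passed = 0;
        for (int[] point : data()) {
            SquareTest instance = new SquareTest(point[0], point[1]);
            if (instance.squareIsCorrect()) {
                passed++;
            }
        }
        return passed;
    }

    public static void main(String[] args) {
        System.out.println(runAll() + " of " + data().size() + " data points passed");
    }
}
```

Because every data point gets a fresh instance, no state leaks from one data point to the next, which keeps the test cases independent.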

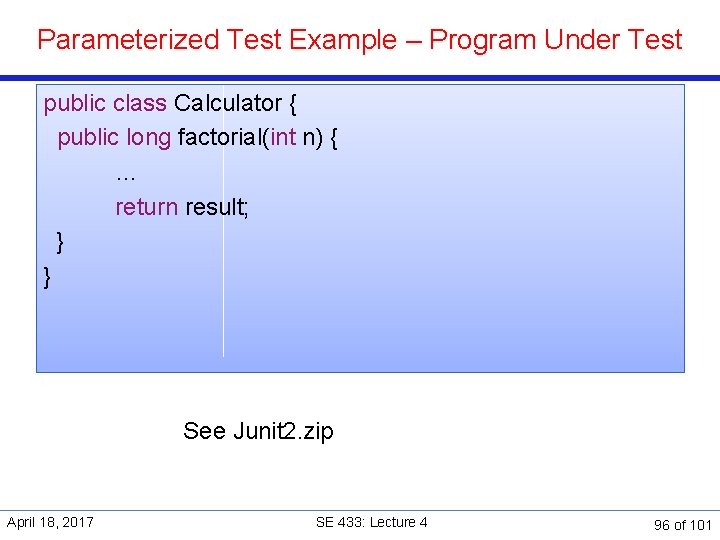

Parameterized Test Example – Program Under Test

    public class Calculator {
        public long factorial(int n) {
            …
            return result;
        }
    }

See JUnit2.zip
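The slide elides the body of factorial. For readers who want something to run the tests against, one possible body is sketched below. It is an assumption for illustration and may differ from the version in JUnit2.zip; in particular, rejecting negative input with an exception is a choice made here, not something the lecture specifies.

```java
// Hypothetical implementation; the version in JUnit2.zip may differ.
class Calculator {
    // Computes n! iteratively. The result overflows a long for n > 20.
    public long factorial(int n) {
        if (n < 0) {
            // Assumption: negative input is rejected rather than given a value.
            throw new IllegalArgumentException("n must be non-negative");
        }
        long result = 1;
        for (int i = 2; i <= n; i++) {
            result *= i;
        }
        return result;
    }
}

class CalculatorDemo {
    public static void main(String[] args) {
        Calculator calc = new Calculator();
        int[] inputs = { 0, 1, 2, 4, 7 };
        for (int n : inputs) {
            System.out.println(n + "! = " + calc.factorial(n));
        }
    }
}
```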

Parameterized Test Example – The Test Class

    @RunWith(Parameterized.class)
    public class CalculatorTest {
        private long expected; // expected output
        private int value;     // input value

        public CalculatorTest(long expected, int value) {
            this.expected = expected;
            this.value = value;
        }


Parameterized Tests Example – The Parameter Method

    @Parameters
    public static Collection<Integer[]> data() {
        return Arrays.asList(new Integer[][] {
            { 1, 0 }, // expected, value
            { 1, 1 },
            { 2, 2 },
            { 24, 4 },
            { 5040, 7 },
        });
    }

Parameterized Tests Example – The Test Method

    private long expected; // expected output
    private int value;     // input value
    …
    @Test
    public void factorialTest() {
        Calculator calc = new Calculator();
        assertEquals(expected, calc.factorial(value));
    }

Readings and References
§ Chapter 10 of the textbook.
§ JUnit documentation: http://junit.org
§ An example of parameterized test
  Ø JUnit2.zip in D2L

Next Class
Topic: Black Box Testing Part 2, JUnit & Ant
Reading:
  Ø Chapter 10 of the textbook.
  Ø Articles on the class page and reading list
Assignment 4 – Parameterized Test
  Ø Due April 25, 2017
Assignment 5 – Black Box Testing – Part 1: Test Case Design
  Ø Due May 2, 2017