SDM Integration Framework in the Hurricane of Data

- Slides: 32

SDM Integration Framework in the Hurricane of Data
SDM AHM, 10/07/2008
Scott A. Klasky, klasky@ornl.gov
ANL: Ross
Caltech: Cummings
GT: Abbasi, Lofstead, Schwan, Wolf, Zheng
LBNL: Shoshani, Sim, Wu
LLNL: Kamath
ORNL: Barreto, Hodson, Jin, Kora, Podhorszki
NCSU: Breimyer, Mouallem, Nagappan, Samatova, Vouk
NWU: Choudhary, Liao
NYU: Chang, Ku
PNNL: Critchlow
PPPL: Ethier, Fu, Samtaney, Stotler
Rutgers: Bennett, Docan, Parashar, Silver
SUN: Di
Utah: Kahn, Parker, Silva
UCI: Lin, Xiao
UCD: Ludaescher
UCSD: Altintas, Crawl

Outline
• Current success stories and future customers of SEIF.
• The problem statement.
• Vision statement.
• SEIF.
• ADIOS.
• Workflows.
• Provenance.
• Security.
• Dashboard.
• The movie.
• Vision for the future.

Current success stories
• Data Management/Workflow
  - "Finally, our project benefits greatly from the expertise of the computational science team at NCCS in extracting physics out of a large amount of simulation data, in particular, through collaborations with Dr. Klasky on visualization, data management, and work flow…" (HPCwire 2/2007)
  - Success in using workflows. Used several times with GTC and GTS groups. (Working on everyday use.)
• ADIOS
  - July 14 -- A team of researchers from the University of California-Irvine (UCI), in conjunction with staff at Oak Ridge National Laboratory's National Center for Computational Sciences (NCCS), has just completed what it says is the largest run in fusion simulation history. "This huge amount of data needs fast and smooth file writing and reading," said Xiao. "With poor I/O, the file writing takes up precious computer time and the parallel file system on machines such as Jaguar can choke." With ADIOS, the I/O was vastly improved, consuming less than 3 percent of run time and allowing the researchers to write tens of terabytes of data smoothly without file system failure. (HPCwire 7/2008)
  - "Chimera code ran 1000x faster I/O with ADIOS on Jaguar." (Messer, SciDAC 08)
  - S3D will include ADIOS. (J. Chen)
  - ESMF team looking into ADIOS. (C. DeLuca)
  - R. Harrison (ORNL) looking into ADIOS.
  - XGC1 code using ADIOS every day.
  - GTS code now working with ADIOS.

Current success stories
• Workflow automation.
  - S3D data archiving workflow moved 10 TB of data from NERSC to ORNL. (J. Chen)
  - GTC workflow automation saved valuable time during early simulations on Jaguar, and simulations on Seaborg.
• "From a data management perspective, the CPES project is using state-of-the-art technology and driving development of the technology in new and interesting ways. The workflows developed under this project are extremely complex and are pushing the Kepler infrastructure into the HPC arena. The work on ADIOS is a novel and exciting approach to handling high-volume I/O and could be extremely useful for a variety of scientific applications. The current dashboard technology is using existing technology, and not pushing the state of the art in web-based interfaces. Nonetheless, it is providing the CPES scientists an important capability in a useful and intuitive manner." (CPES SciDAC review, reviewer #4)
• "There are many approaches to creating user environments for suites of computational codes, data and visualization. This project made choices that worked out well. The effort to construct and deploy the dashboard and workflow framework has been exemplary. It seems clear that the dashboard has already become the tool of choice for interacting with and managing multi-phase edge simulations. The integration of visualization and diagnostics into the dashboard meets many needs of the physicists who use the codes. The effort to capture all the dimensions of provenance is also well conceived, however the capabilities have not reached critical mass yet. Much remains to be done to enable scientists to get the most from the provenance database. This project is making effective use of the HPC resources at ORNL and NERSC. The Dashboard provides run time diagnostics and visualization of intermediate results which allows physicists to determine whether the computation is proceeding as expected. This capability has the potential to save considerable computer time by identifying runs that are going awry so they can be canceled before they would otherwise have finished." (CPES SciDAC review, reviewer #1)

Current success stories
User e-mail (Seung-Hoe, 10/3/2008):
• The connection to ewok.ccs.ornl.gov is unstable.
• I got error an error message like "ssh_exchange_identification: Connection closed by remote host" when I tried 'ssh ewok' from jaguar. This error doesn't happen always (about 30% chance), but running kepler workflow is interrupted by this error.
• Please check the system.
• Thank you,
• Seung-Hoe
User e-mail:
• Hi,
• I have a few things to ask you to consider.
• Currently workflow copies all *.bp files including restart.*.bp. And It seems that these restart*.bp files are converted into hdf5 and sent to AVS (not sure. Tell me if I am wrong). This takes really long time for large runs. Could you make workflow not do all of these or do it after movie of other variables are generated?
• Currently, dashboard shows only movies after the simulation ends. However, generating movies takes certain amount of time after the simulation ends. If restart files are exist, the time is very long. If I uses -t option for a already completed simulation, the time is about a few hours to generates all AVS graphs. So, if you can make a button which makes dashboard think the simulation is not ended, it would be useful.
• The min/max routine doesn't seem to catch real min/max for very tiny numbers. Some of data has value of ~1E-15 and global plot is just zero. One example is 'ion__total_E_flux(avg)' of shot j38.
• When I tried workflow of restarted xgc run with the same shot number and different jobID, txt files are overwritten.
• When I run workflow for franklin job, txt_file is ignored. Maybe I set something wrong. Could you check txt_file copy of franklin working OK?
• Maybe some of the features are not problems with ADIOS version of workflow. If so, let me know please.
• Thanks,

Current and Future customers
• GTC.
  - Monitoring workflows.
  - Will want post-processing/data analysis workflows.
• GTS.
  - Similar to GTC, but will need to get data from experimental source (MDS+).
• XGC1
  - Monitoring workflows.
• XGC0-M3D code coupling.
  - Code coupling.
• XGC1-GEM code coupling.
• GEM
• M3D-K / GKM
• S3D
• Chimera.
• Climate (with ESMF team). Still working out details.

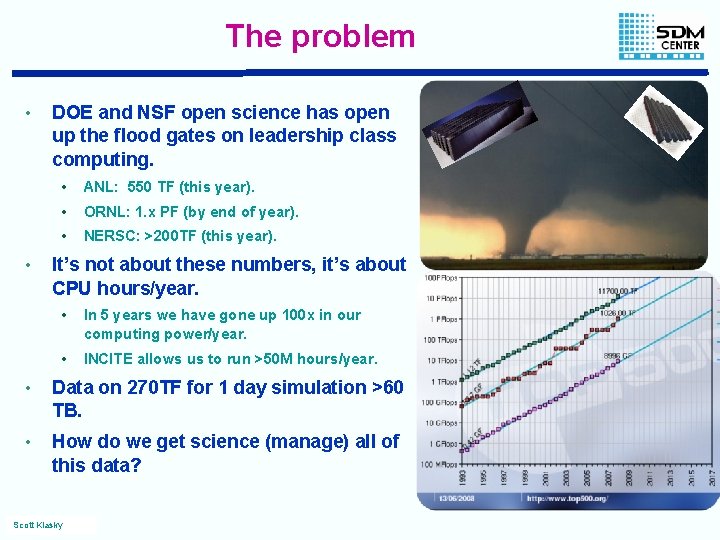

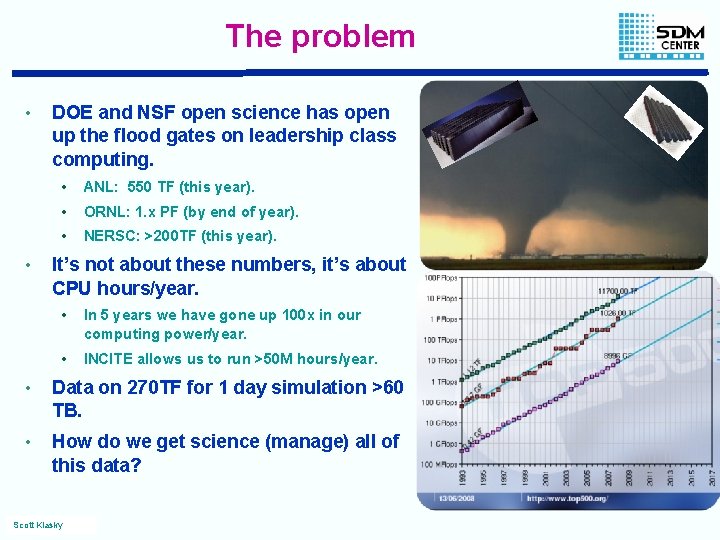

The problem
• DOE and NSF open science have opened the floodgates on leadership-class computing.
  - ANL: 550 TF (this year).
  - ORNL: 1.x PF (by end of year).
  - NERSC: >200 TF (this year).
• It's not about these numbers, it's about CPU hours/year.
  - In 5 years we have gone up 100x in our computing power/year.
  - INCITE allows us to run >50 M hours/year.
• Data on 270 TF for a 1-day simulation: >60 TB.
• How do we get science from (and manage) all of this data?

Vision
• Problem: Managing the data from a petascale simulation, debugging the simulation, and extracting the science involves:
  - Tracking
    - the codes: simulation, analysis
    - the input files/parameters
    - the output files, from the simulation and analysis programs
    - the machines and environment the codes ran on.
  - Gluing all of the pieces together with workflow automation to automate the mundane tasks.
  - Monitoring the simulation in real time.
  - Analyzing and visualizing the results without requiring users to know all of the file names, and making this happen in the same place where we can monitor the codes.
  - Fast I/O which can be easily tracked.
  - Moving data to remote locations without babysitting data movement.

Vision
• Requirements.
  - Want all enabling technologies to play well together.
  - Componentized approach for building pieces in SEIF.
  - Components should work well by themselves, and work inside of the framework.
  - Fast
  - Scalable
  - Easy to use!!!!
• Simplify the life of application scientists!!!

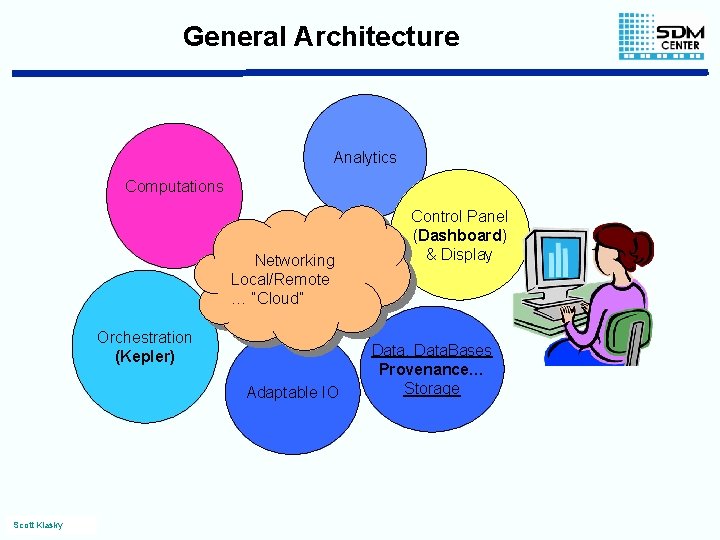

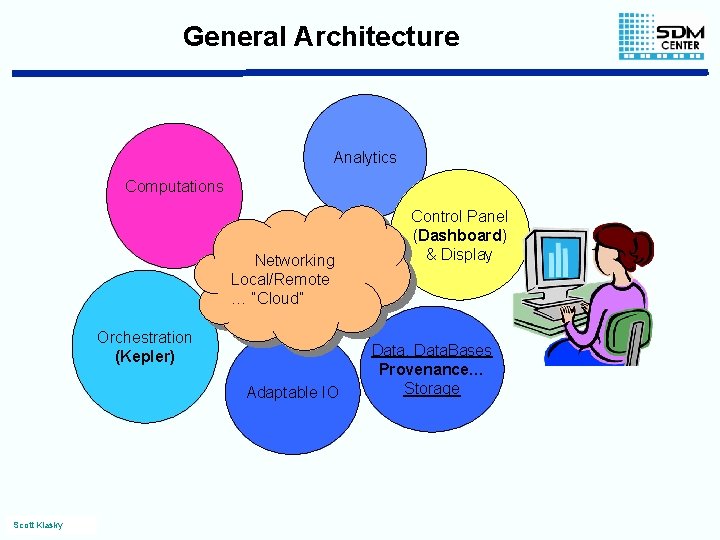

General Architecture
[Diagram. Labels: Analytics; Computations; Networking; Local/Remote … "Cloud"; Orchestration (Kepler); Adaptable IO; Control Panel (Dashboard) & Display; Data, Databases, Provenance…; Storage]

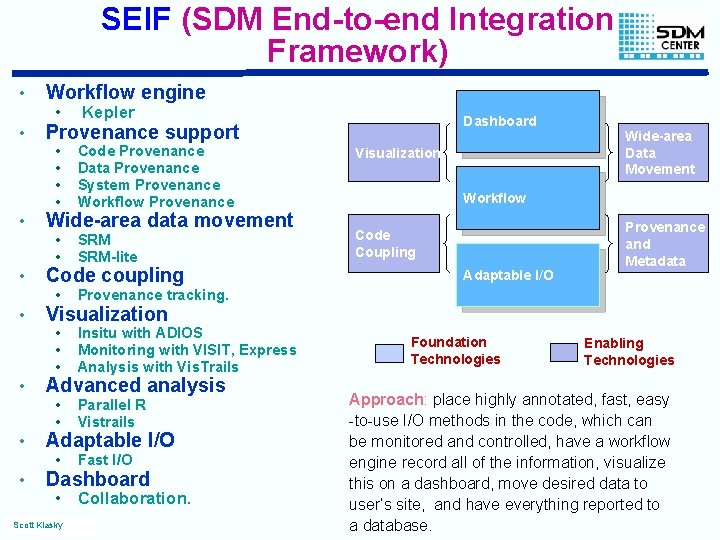

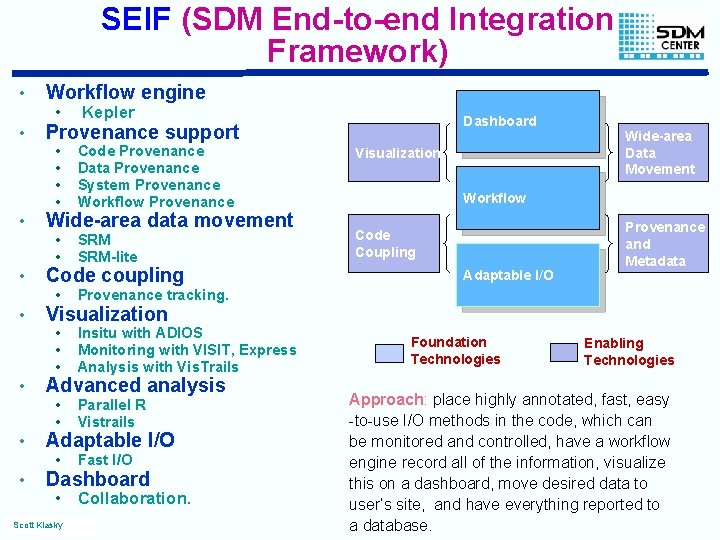

SEIF (SDM End-to-end Integration Framework)
• Workflow engine
  - Kepler
• Provenance support
  - Code provenance
  - Data provenance
  - System provenance
  - Workflow provenance
• Wide-area data movement
  - SRM-lite
• Code coupling
  - Provenance tracking.
• Visualization
  - In situ with ADIOS
  - Monitoring with VisIt, Express
  - Analysis with VisTrails
• Advanced analysis
  - Parallel R
  - VisTrails
• Adaptable I/O
  - Fast I/O
• Dashboard
  - Collaboration.
[Diagram. Labels: Wide-area Data Movement; Workflow; Adaptable I/O; Provenance and Metadata; Visualization; Advanced Analysis; Code Coupling; Dashboard; grouped under Foundation Technologies and Enabling Technologies]
Approach: place highly annotated, fast, easy-to-use I/O methods in the code, which can be monitored and controlled; have a workflow engine record all of the information; visualize this on a dashboard; move desired data to the user's site; and have everything reported to a database.

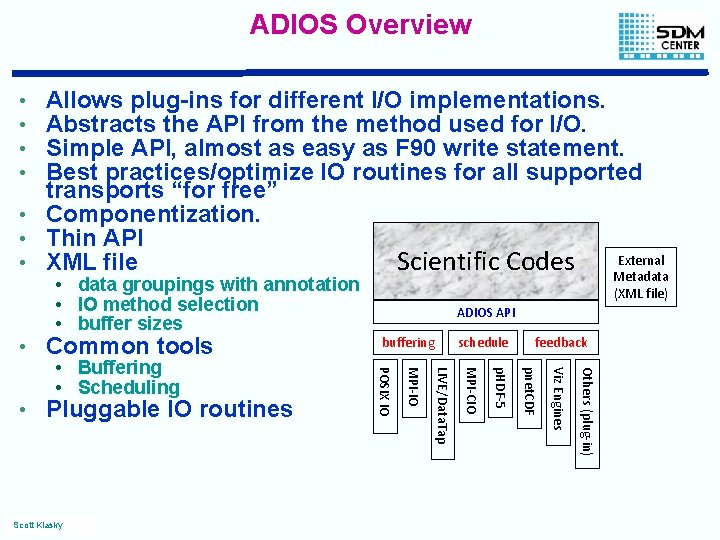

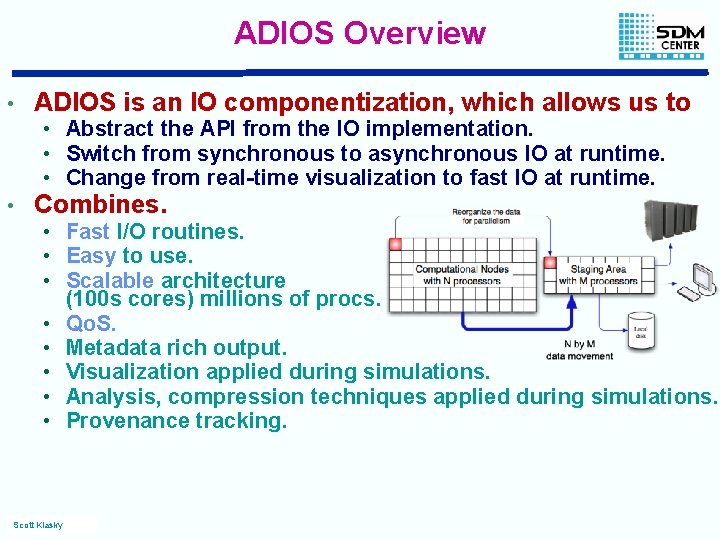

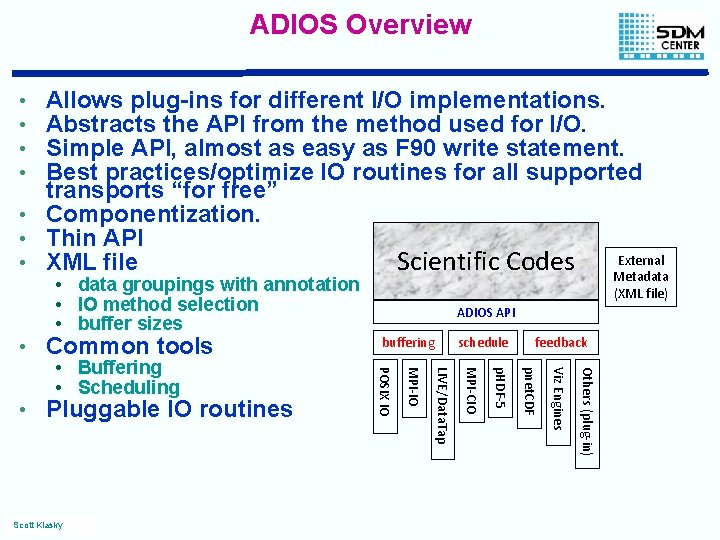

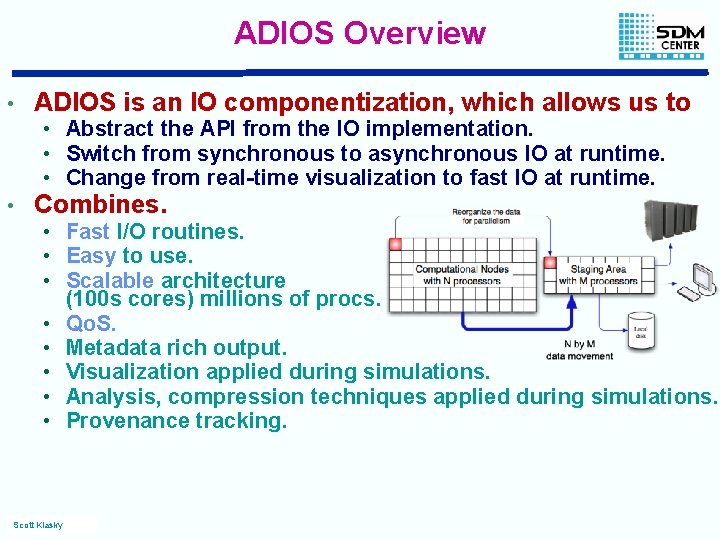

ADIOS Overview
• Allows plug-ins for different I/O implementations.
• Abstracts the API from the method used for I/O.
• Simple API, almost as easy as an F90 write statement.
• Best-practice/optimized IO routines for all supported transports "for free".
• Componentization.
• Thin API
• XML file
  - data groupings with annotation
  - IO method selection
  - buffer sizes
• Common tools
  - Buffering
  - Scheduling
• Pluggable IO routines
[Diagram. Labels: External Scientific Codes; Metadata (XML file); ADIOS API; buffering; schedule; feedback; pluggable IO routines: MPI-IO, MPI-CIO, POSIX IO, pHDF-5, pnetCDF, LIVE/DataTap, Viz Engines, Others (plug-in)]

ADIOS Overview
• ADIOS is an IO componentization, which allows us to
  - Abstract the API from the IO implementation.
  - Switch from synchronous to asynchronous IO at runtime.
  - Change from real-time visualization to fast IO at runtime.
• Combines.
  - Fast I/O routines.
  - Easy to use.
  - Scalable architecture (100s of cores to millions of procs).
  - QoS.
  - Metadata-rich output.
  - Visualization applied during simulations.
  - Analysis, compression techniques applied during simulations.
  - Provenance tracking.
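
The idea of abstracting the API from the I/O method, with the method selected in an XML file, can be sketched in a few lines. This is only an illustration of the plug-in pattern, not the ADIOS API: the XML element names, the `TRANSPORTS` registry, and the `write_group` call are all hypothetical names invented for this example.

```python
import io
import xml.etree.ElementTree as ET

# Hypothetical configuration: element and attribute names are illustrative
# and are NOT the real ADIOS XML schema.
CONFIG = """
<io-config>
  <group name="restart" method="POSIX"/>
  <group name="diagnostics" method="NULL"/>
</io-config>
"""


class PosixLikeTransport:
    """Stands in for a file-based method by appending to an in-memory buffer."""

    def __init__(self):
        self.buffer = io.BytesIO()

    def write(self, name, payload):
        self.buffer.write(payload)
        return len(payload)


class NullTransport:
    """Discards the data, e.g. to time a run without any I/O."""

    def write(self, name, payload):
        return 0


# Registry of pluggable transports, keyed by the name used in the XML.
TRANSPORTS = {"POSIX": PosixLikeTransport, "NULL": NullTransport}


def load_methods(xml_text):
    """Build one transport instance per data group named in the XML."""
    root = ET.fromstring(xml_text.strip())
    return {g.get("name"): TRANSPORTS[g.get("method")]() for g in root.findall("group")}


def write_group(methods, group, name, payload):
    """Application-facing call: identical whatever transport is configured."""
    return methods[group].write(name, payload)


methods = load_methods(CONFIG)
written = write_group(methods, "restart", "temperature", b"\x00" * 16)
skipped = write_group(methods, "diagnostics", "flux", b"\x00" * 16)
```

Changing `method="POSIX"` to another registered name in the configuration switches the transport without touching the calling code, which is the property the slide describes.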

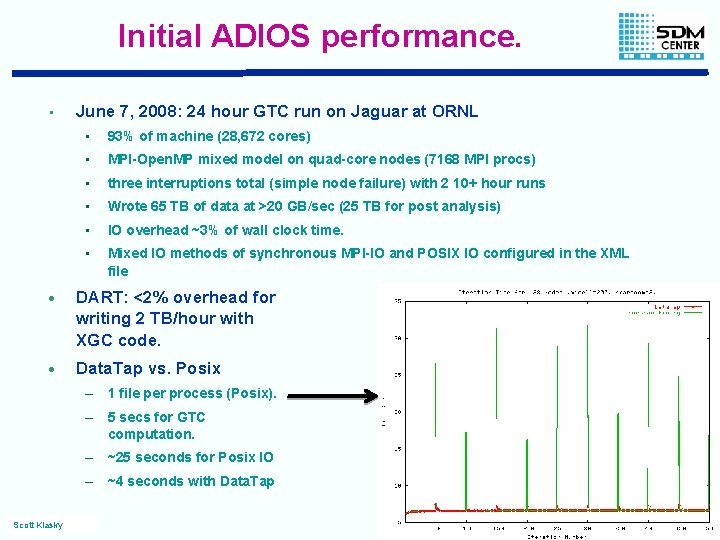

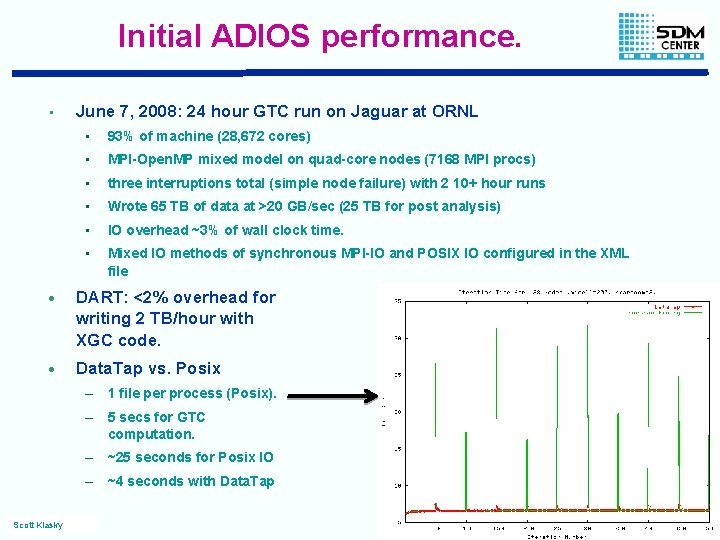

Initial ADIOS performance.
• June 7, 2008: 24-hour GTC run on Jaguar at ORNL
  - 93% of machine (28,672 cores)
  - MPI-OpenMP mixed model on quad-core nodes (7168 MPI procs)
  - three interruptions total (simple node failure) with two 10+ hour runs
  - Wrote 65 TB of data at >20 GB/sec (25 TB for post analysis)
  - IO overhead ~3% of wall clock time.
  - Mixed IO methods of synchronous MPI-IO and POSIX IO configured in the XML file
• DART: <2% overhead for writing 2 TB/hour with XGC code.
• DataTap vs. POSIX
  - 1 file per process (POSIX).
  - 5 secs for GTC computation.
  - ~25 seconds for POSIX IO
  - ~4 seconds with DataTap
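
As a rough consistency check on the GTC figures above, the time spent writing can be estimated from the data volume and the aggregate rate. The sketch assumes decimal units (1 TB = 1e12 bytes, 1 GB = 1e9 bytes) and that all 65 TB were written at a constant aggregate rate; both are assumptions, and the 25 GB/s case is illustrative rather than a measured number.

```python
SECONDS_PER_HOUR = 3600
run_seconds = 24 * SECONDS_PER_HOUR   # 24-hour GTC run
data_bytes = 65e12                    # 65 TB written (decimal units assumed)


def io_fraction(rate_bytes_per_s):
    """Fraction of wall-clock time spent writing at a constant aggregate rate."""
    return (data_bytes / rate_bytes_per_s) / run_seconds


at_20 = io_fraction(20e9)   # lower bound on the rate quoted on the slide
at_25 = io_fraction(25e9)   # illustrative rate above 20 GB/s

print(f"I/O share at 20 GB/s: {at_20:.1%}")
print(f"I/O share at 25 GB/s: {at_25:.1%}")
```

At exactly 20 GB/s the share comes out a little under 4%, and at about 25 GB/s it is about 3%, so the "~3% of wall clock time" and ">20 GB/sec" figures are mutually consistent.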

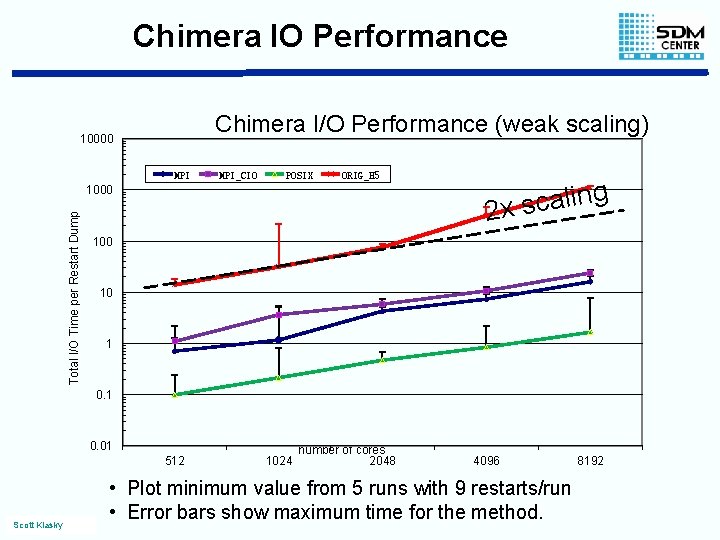

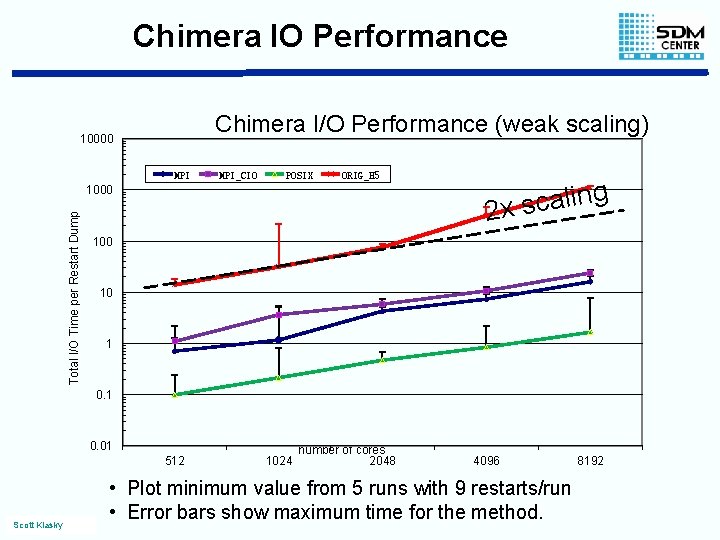

Chimera IO Performance
[Plot: Chimera I/O Performance (weak scaling). Total I/O time per restart dump (axis ticks from 0.01 to 10000) versus number of cores (512, 1024, 2048, 4096, 8192) for MPI_CIO, POSIX, and ORIG_H5, with a "2x scaling" reference]
• Plot shows the minimum value from 5 runs with 9 restarts/run.
• Error bars show the maximum time for the method.

ADIOS challenges
• Faster reading.
• Faster writing on new petascale/exascale machines.
• Work with file system experts to refine our file format for 'optimal' performance.
• More characteristics in the file by working with analysis and application experts.
• Index files better using FastBit.
• In situ visualization methods.

Controlling metadata in simulations.
• Problem: Codes are producing large amounts of
  - Files
  - Data in files.
  - Information in data.
• Need to keep track of this data for extracting the science from the simulations.
• Workflows need to keep track of file locations, what information is in the files, etc.
  - Makes it easier to develop generic (template) workflows if we can 'gain' this information inside of Kepler.
• Solution:
  - Our solution is to provide a link from ADIOS into the provenance (Kepler, …).

Current Workflows.
• Pre-production. (Uston)
  - Starting to work on this.
• Production workflows. (Norbert)
  - Currently our most active area of use for SEIF.
• Analysis workflows. (Ayla, Norbert)
  - Current area of interest.
  - Need to work with 3D graphics + parallel data analysis.

Monitoring a simulation + archiving (XGC)
• NetCDF files
  - Transfer files to e2e system on-the-fly
  - Generate plots using grace library
  - Archive NetCDF files at the end of simulation
• Binary files (BP, ADIOS output)
  - Transfer to e2e system using bbcp
  - Convert to HDF5 format
  - Start up AVS/Express service
  - Generate images with AVS/Express
  - Archive HDF5 files in large chunks to HPSS
• Generate movies from the images
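
The per-file routing in this monitoring workflow can be sketched as a small planning function. This is a hedged illustration only: the step names are labels invented here, the `.nc` extension for NetCDF output is an assumption (only `.bp` appears elsewhere in the deck), and nothing below invokes bbcp, AVS/Express, or HPSS.

```python
from pathlib import PurePosixPath

# Step names are hypothetical labels for the stages listed on the slide.
NETCDF_STEPS = ["transfer_on_the_fly", "plot_with_grace"]
BP_STEPS = ["transfer_with_bbcp", "convert_to_hdf5", "render_with_avs_express"]


def plan_steps(filename):
    """Return the ordered per-file monitoring steps, chosen by file extension."""
    suffix = PurePosixPath(filename).suffix
    if suffix == ".nc":      # assumed NetCDF extension
        return list(NETCDF_STEPS)
    if suffix == ".bp":      # ADIOS BP output
        return list(BP_STEPS)
    return []                # other files are not part of monitoring


def plan_run(filenames):
    """Per-file steps first, then the steps that happen once per run."""
    plan = [(name, step) for name in filenames for step in plan_steps(name)]
    plan.append(("*", "archive"))
    plan.append(("*", "make_movies"))
    return plan


plan = plan_run(["flux.nc", "field.bp", "notes.txt"])
```

Keeping the plan as plain data makes it easy to log or display before any transfer is started, which is the kind of information a dashboard could show.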

Coupling Fusion codes for Full ELM, multi-cycles
• Run XGC until unstable conditions
• M3D coupling data from XGC
  - Transfer to end-to-end system
  - Execute M3D: compute new equilibrium
  - Transfer back the new equilibrium to XGC
  - Execute ELITE: compute growth rate and test linear stability
  - Execute M3D-MPP: to study unstable states (ELM crash)
• Restart XGC with new equilibrium from M3D-MPP
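
The control flow of this coupling cycle can be sketched as a driver loop. The four callables are stand-ins for the real codes, the `Equilibrium` type is hypothetical, and the branch that runs M3D-MPP only when ELITE reports instability is an assumption about how the stability test is used; the slide does not spell it out.

```python
from dataclasses import dataclass


@dataclass
class Equilibrium:
    cycle: int  # hypothetical placeholder for the real equilibrium data


def couple(run_xgc, run_m3d, run_elite, run_m3d_mpp, max_cycles):
    """Drive the XGC -> M3D -> ELITE -> (M3D-MPP) cycle with stand-in callables."""
    log = []
    eq = Equilibrium(cycle=0)
    for _ in range(max_cycles):
        coupling_data = run_xgc(eq)   # run XGC, hand coupling data to M3D
        eq = run_m3d(coupling_data)   # compute new equilibrium
        stable = run_elite(eq)        # growth rate + linear stability test
        log.append(("elite", stable))
        if not stable:
            eq = run_m3d_mpp(eq)      # study the unstable state (ELM crash)
            log.append(("m3d_mpp", eq.cycle))
        # the next iteration restarts XGC from the latest equilibrium
    return eq, log


# Stub codes so the sketch runs end to end.
def stub_xgc(eq):
    return {"from_cycle": eq.cycle}


def stub_m3d(data):
    return Equilibrium(cycle=data["from_cycle"] + 1)


def stub_elite(eq):
    return eq.cycle % 2 == 1          # stub rule: odd cycles count as stable


def stub_m3d_mpp(eq):
    return Equilibrium(cycle=eq.cycle)


final_eq, log = couple(stub_xgc, stub_m3d, stub_elite, stub_m3d_mpp, max_cycles=2)
```

In a real workflow each callable would wrap a job submission plus the file transfers to and from the end-to-end system.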

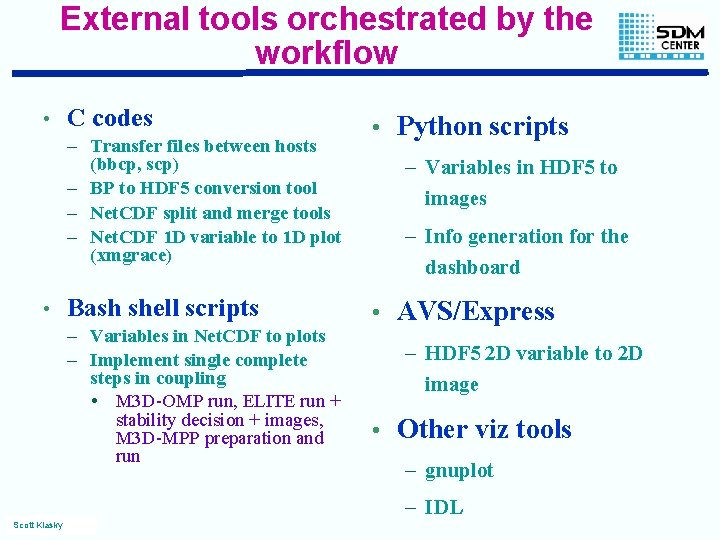

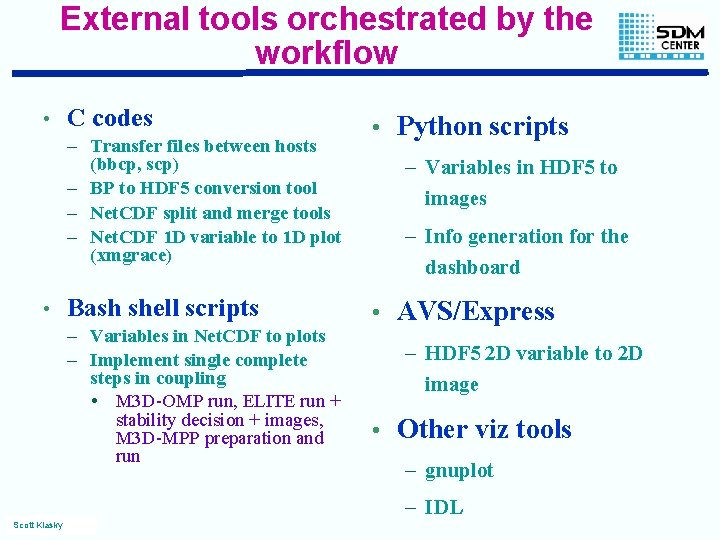

External tools orchestrated by the workflow
• C codes
  - Transfer files between hosts (bbcp, scp)
  - BP to HDF5 conversion tool
  - NetCDF split and merge tools
  - NetCDF 1D variable to 1D plot (xmgrace)
• Bash shell scripts
  - Variables in NetCDF to plots
  - Implement single complete steps in coupling
    - M3D-OMP run, ELITE run + stability decision + images, M3D-MPP preparation and run
• Python scripts
  - Variables in HDF5 to images
  - Info generation for the dashboard
• AVS/Express
  - HDF5 2D variable to 2D image
• Other viz tools
  - gnuplot
  - IDL

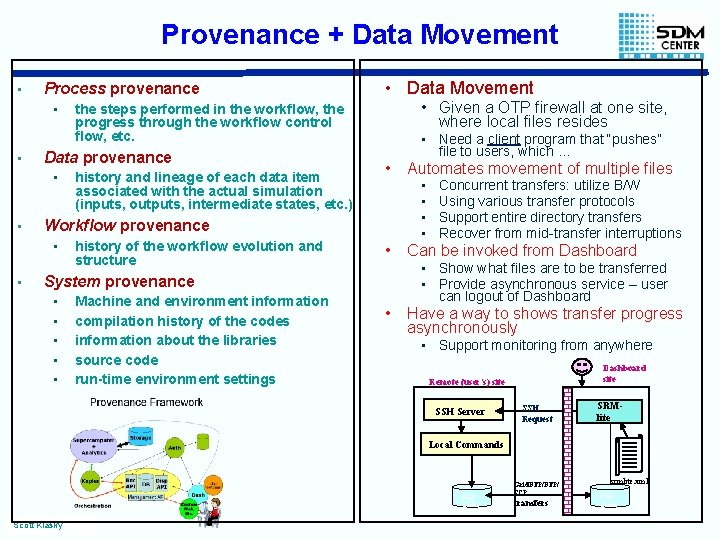

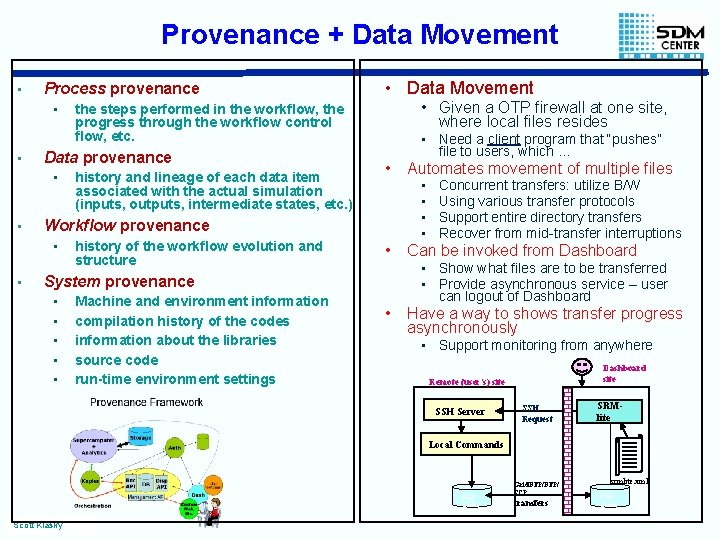

Provenance + Data Movement
Provenance
• Process provenance
  - the steps performed in the workflow, the progress through the workflow control flow, etc.
• Data provenance
  - history and lineage of each data item associated with the actual simulation (inputs, outputs, intermediate states, etc.)
• Workflow provenance
  - history of the workflow evolution and structure
• System provenance
  - machine and environment information
  - compilation history of the codes
  - information about the libraries
  - source code
  - run-time environment settings
Data Movement
• Given an OTP firewall at one site, where local files reside
• Need a client program that "pushes" files to users, which …
  - Automates movement of multiple files
  - Concurrent transfers: utilize B/W
  - Uses various transfer protocols
  - Supports entire directory transfers
  - Recovers from mid-transfer interruptions
• Can be invoked from Dashboard
  - Show what files are to be transferred
  - Provide asynchronous service: user can log out of Dashboard
  - Have a way to show transfer progress asynchronously
  - Support monitoring from anywhere
[Diagram. Labels: Dashboard site; Remote (user's) site; SSH Server; SSH Request; SRMlite; Local Commands; Disk Cache (at each site); GridFTP/SCP transfers; srmlite.xml]

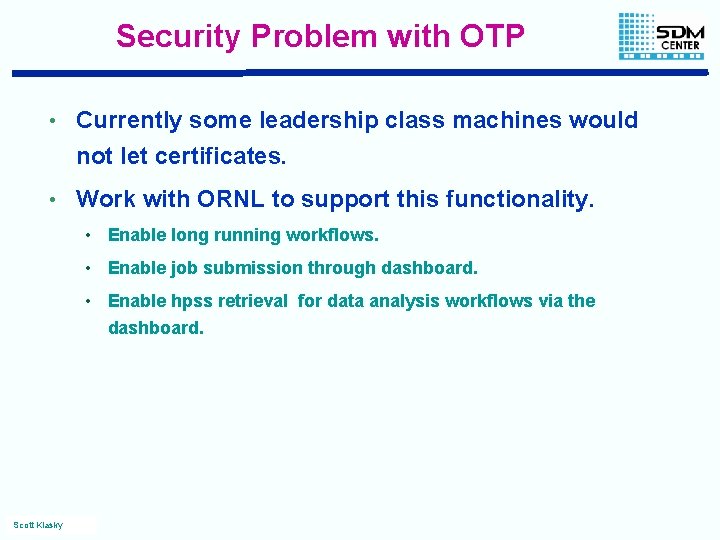

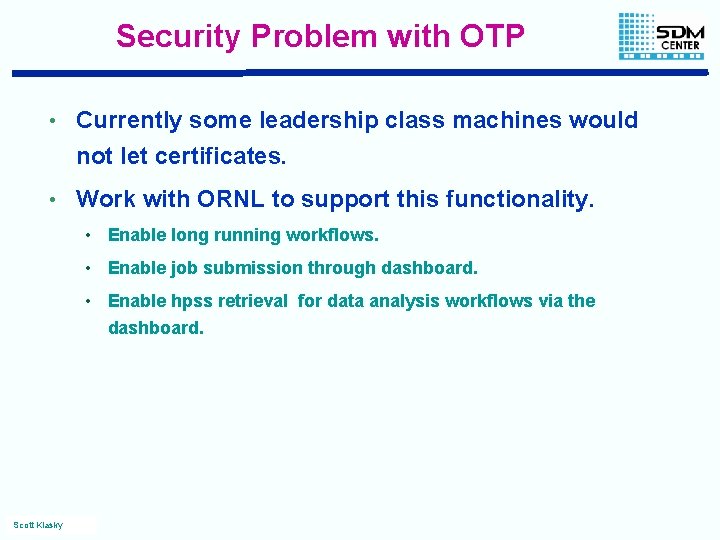

Security Problem with OTP
• Currently some leadership-class machines do not allow certificates.
• Work with ORNL to support this functionality.
• Enable long-running workflows.
• Enable job submission through the dashboard.
• Enable HPSS retrieval for data analysis workflows via the dashboard.

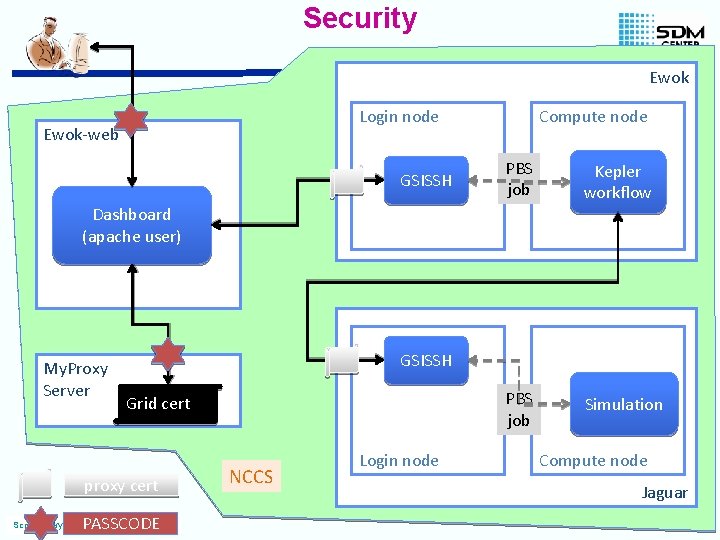

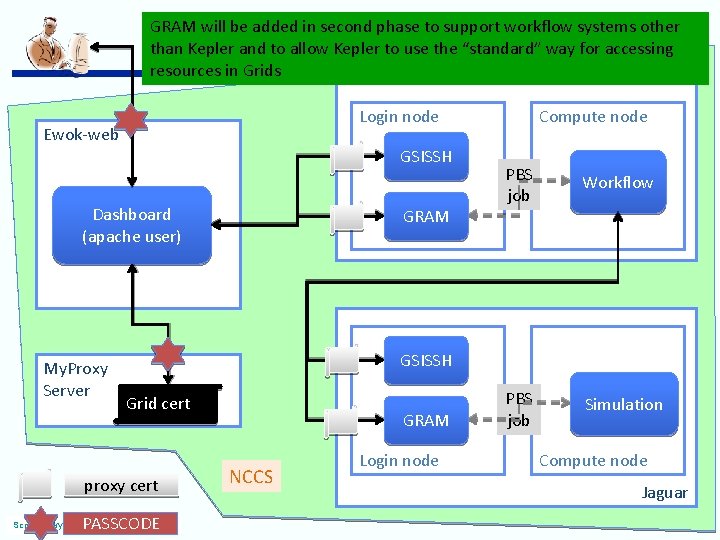

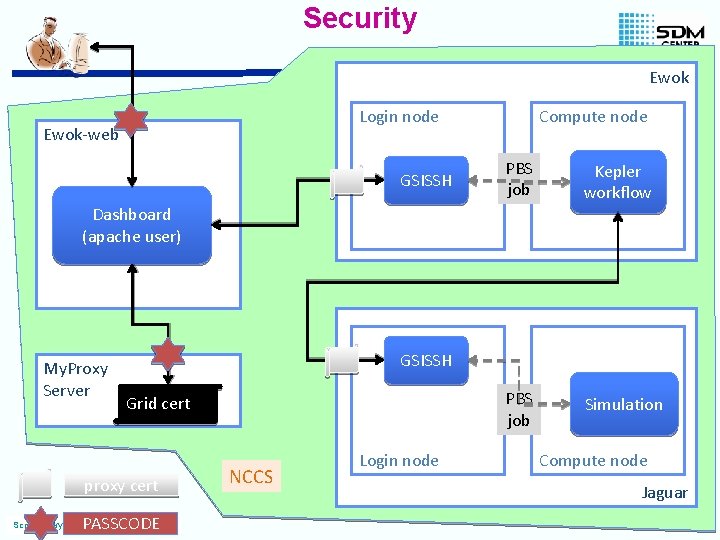

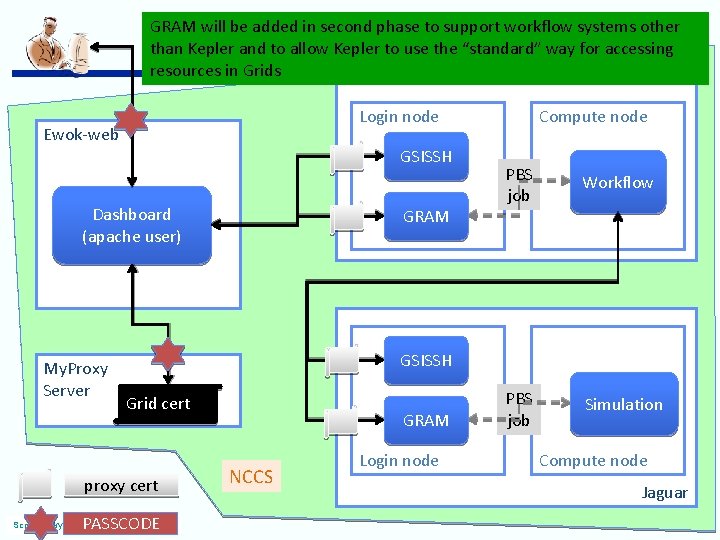

Security
[Diagram. Labels: Ewok (login node, Ewok-web, compute node); Jaguar (NCCS login node, compute node); Dashboard (apache user); MyProxy Server; GSISSH; grid cert; proxy cert; PASSCODE; PBS job: Kepler workflow; PBS job: Simulation]

GRAM will be added in a second phase to support workflow systems other than Kepler and to allow Kepler to use the "standard" way of accessing resources in Grids.
[Diagram. Labels: Ewok (login node, Ewok-web, compute node); Jaguar (NCCS login node, compute node); Dashboard (apache user); MyProxy Server; GSISSH; GRAM; grid cert; proxy cert; PASSCODE; PBS job: Workflow; PBS job: Simulation]

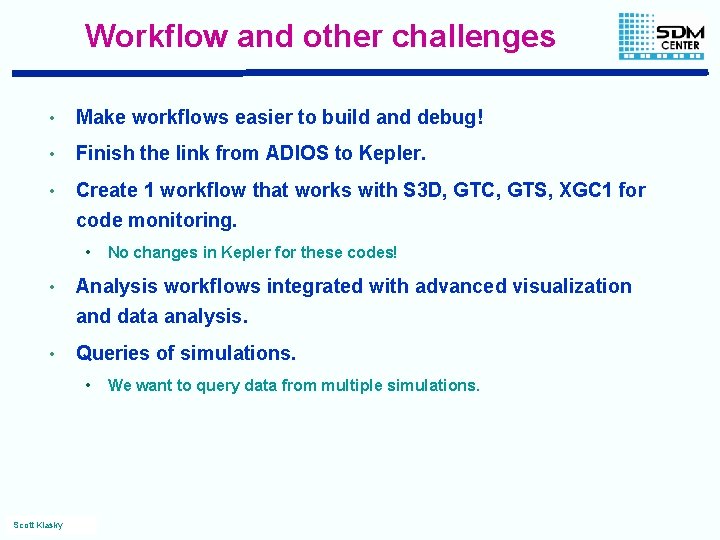

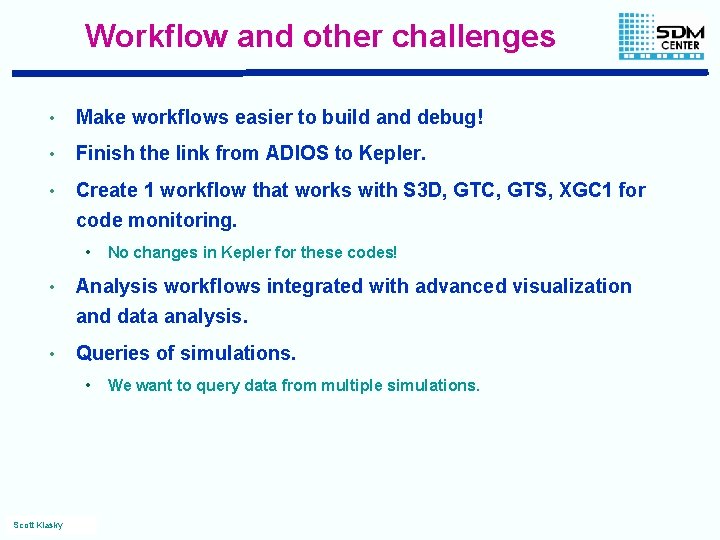

Workflow and other challenges
• Make workflows easier to build and debug!
• Finish the link from ADIOS to Kepler.
• Create 1 workflow that works with S3D, GTC, GTS, XGC1 for code monitoring.
  - No changes in Kepler for these codes!
• Analysis workflows integrated with advanced visualization and data analysis.
• Queries of simulations.
  - We want to query data from multiple simulations.

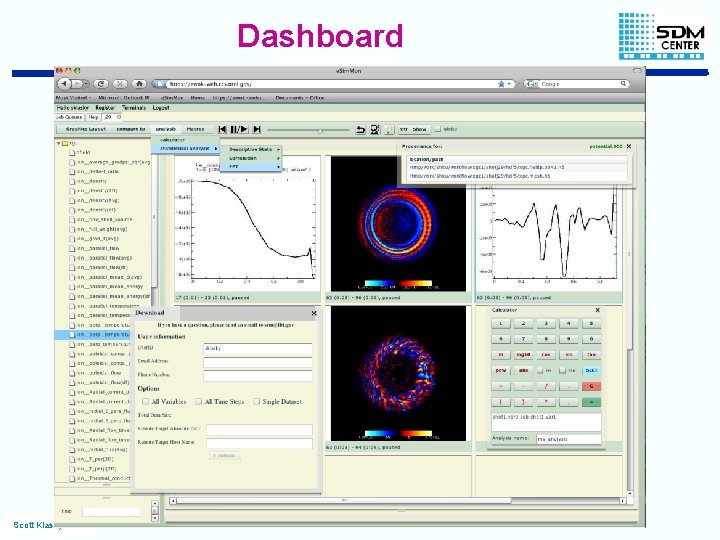

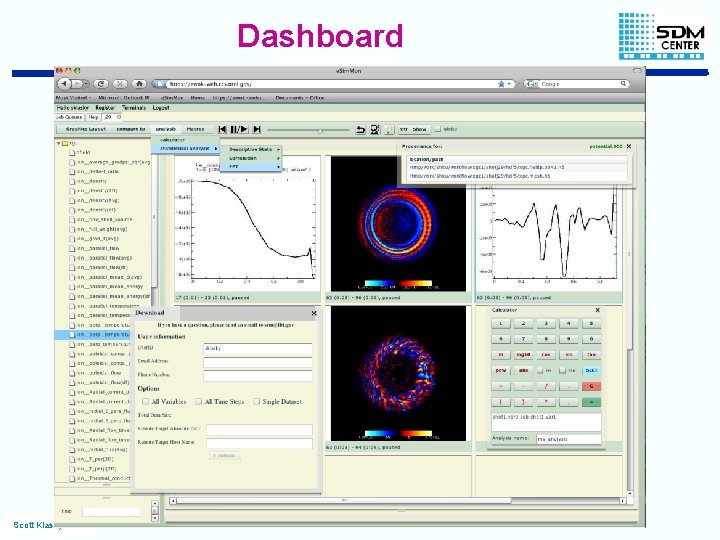

Dashboard

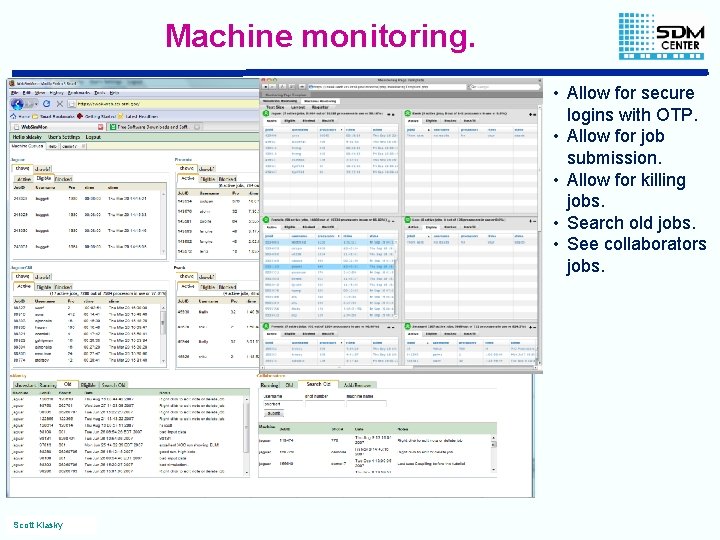

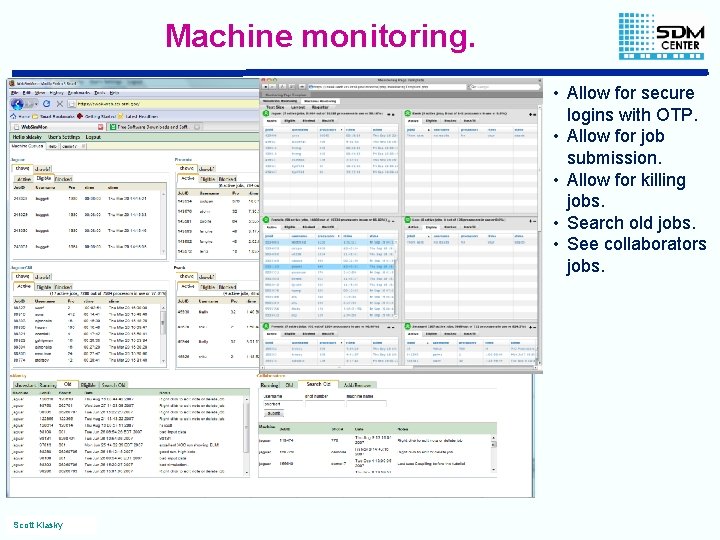

Machine monitoring.
• Allow for secure logins with OTP.
• Allow for job submission.
• Allow for killing jobs.
• Search old jobs.
• See collaborators' jobs.

Dashboard challenges
• Run simulations through the dashboard.
• Allow interaction with data from HPSS.
• Run advanced analysis and visualization on the dashboard.
  - Access to more plug-ins for data analysis.
  - 3D visualization.
  - More interactive 2D visualization.
• Query multiple simulations/experimental data through the dashboard for comparative analysis.
• Collaboration.

SEIF Movie

Vision for the future.
• Tiger teams
  - Work on 1 code with several experts in all areas of DM.
  - Replace their IO with high performance IO.
  - Integrate analysis routines in their analysis workflows.
  - Create monitoring and analysis workflows.
  - Track codes.
  - Integrate into SEIF.
• 1 code at a time, 4 months per code.
• Rest of team works on core technologies.
[Diagram. Labels: Team leader; IO; workflow; analysis; provenance; Dashboard]

Long term approach for SDM
• Grow the core technologies for exascale computing.
• Grow the core by working with more applications.
  - Don't build infrastructure if we can't see 'core applications' benefiting from this after 1.5 years of development. (1 at a time.)
• Team work!
  - Create our R&D team, which works with codes.
• Build mature tools.
  - Better software testing before we release our software.
  - Need framework to live with just a few support people.
  - Need to componentize everything together.
  - Allow separate pieces to live without SEIF.
• Make sure software scales to yottabytes!
  - But first make it work on MBs.
• Need to look into better searching of data across multiple simulations.