
SDIMS: A Scalable Distributed Information Management System

Praveen Yalagandula, Mike Dahlin
Laboratory for Advanced Systems Research (LASR), University of Texas at Austin
Department of Computer Sciences, UT Austin

Goal

- A Distributed Operating System Backbone
  - Information collection and management
- Core functionality of large distributed systems
  - Monitor, query, and react to changes in the system
  - Examples:
    - System administration and management
    - Service placement and location
    - Sensor monitoring and control
    - Distributed Denial-of-Service attack detection
    - File location service
    - Multicast tree construction
    - Naming and request routing
    - …
- Benefits
  - Ease development of new services
  - Facilitate deployment
  - Avoid repetition of the same task by different services
  - Optimize system performance

Contributions – SDIMS

- Provides an important basic building block
  - Information collection and management
- Satisfies key requirements
  - Scalability
    - With both nodes and attributes
    - Leverage Distributed Hash Tables (DHTs)
  - Flexibility
    - Enable applications to control the aggregation
    - Provide a flexible API: install, update, and probe
  - Autonomy
    - Enable administrators to control the flow of information
    - Build Autonomous DHTs
  - Robustness
    - Handle failures gracefully
    - Perform re-aggregation upon failures
      - Lazy (by default) and on-demand (optional)

Outline

- SDIMS: a distributed operating system backbone
- Aggregation abstraction
- Our approach
  - Design
  - Prototype
  - Simulation and experimental results
- Conclusions

![Aggregation Abstraction: aggregation tree computing f(f(a, b), f(c, d))](http://slidetodoc.com/presentation_image/01e54628e28d0f3d05c1599a063500e2/image-5.jpg)

Aggregation Abstraction

Astrolabe [Van Renesse et al., TOCS '03]

[Figure: aggregation tree with leaves a, b, c, d at level A0, virtual nodes f(a, b) and f(c, d) at level A1, and root f(f(a, b), f(c, d)) at level A2; in the example the two level-1 values are 5 and 4 and the root value is 9]

- Attributes
  - Information at machines
- Aggregation tree
  - Physical nodes are leaves
  - Each virtual node represents a logical group of nodes
    - Administrative domains, groups within domains, etc.
- Aggregation function, f, for attribute A
  - Computes the aggregated value $A_i$ for a level-$i$ subtree
    - $A_0$ = locally stored value at the physical node, or NULL
    - $A_i = f(A_{i-1}^{0}, A_{i-1}^{1}, \ldots, A_{i-1}^{k})$ for a virtual node with $k$ children
  - Each virtual node is simulated by one or more machines
- Example: total users logged in to the system
  - Attribute: numUsers
  - Aggregation function: summation
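The aggregation abstraction can be illustrated with a small recursive sketch. This is not the SDIMS implementation: the `Node` class, the `aggregate` function, the choice to skip NULL children, and the four leaf values are all assumptions made for illustration. The leaf values are picked so that the two level-1 aggregates are 5 and 4 and the root is 9 under summation.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Node:
    """A node in the aggregation tree; leaves stand for physical machines."""
    local_value: Optional[int] = None            # A_0 at a leaf; None models NULL
    children: List["Node"] = field(default_factory=list)


def aggregate(node: Node, f: Callable[[List[int]], int]) -> Optional[int]:
    """Return the aggregated value for the subtree rooted at `node`."""
    if not node.children:
        # Physical node: the aggregate is the locally stored value (or NULL).
        return node.local_value
    # Virtual node: apply f to the children's aggregates.
    # Skipping NULL children is an assumption of this sketch.
    values = [v for v in (aggregate(c, f) for c in node.children) if v is not None]
    return f(values) if values else None


# Hypothetical numUsers values for four machines a, b, c, d.
a, b, c, d = Node(1), Node(4), Node(2), Node(2)
left = Node(children=[a, b])     # level-1 virtual node, f(a, b)
right = Node(children=[c, d])    # level-1 virtual node, f(c, d)
root = Node(children=[left, right])

total_users = aggregate(root, sum)   # summation as the aggregation function
```

Swapping `sum` for `max` or `min` changes the aggregate without touching the tree, which is the point of keeping the aggregation function separate from the tree structure.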


Scalability with nodes and attributes

- To be a basic building block, SDIMS should support
  - A large number of machines
    - Enterprise and global-scale services
    - Trend: large number of small devices
  - Applications with a large number of attributes
    - Example: file location system
      - Each file is an attribute
      - Large number of attributes
- Challenge: build aggregation trees in a scalable way
  - Build multiple trees
    - Single tree for all attributes → load imbalance
  - Ensure a small number of children per node in the tree
    - Reduces maximum node stress

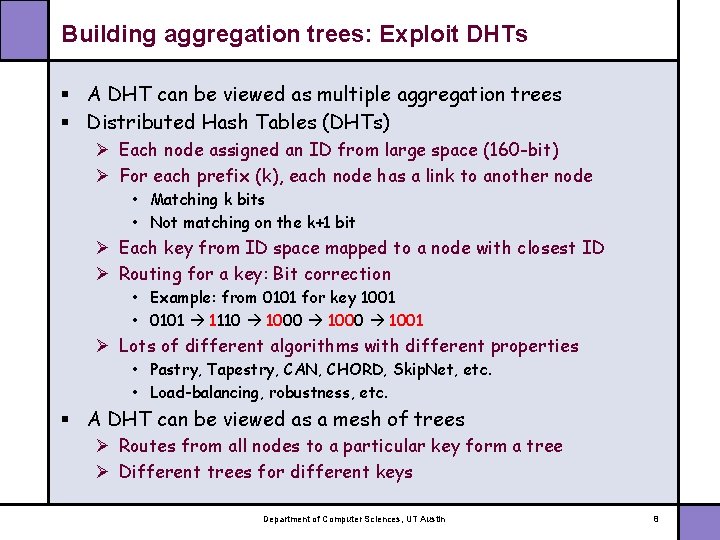

Building aggregation trees: Exploit DHTs

- A DHT can be viewed as multiple aggregation trees
- Distributed Hash Tables (DHTs)
  - Each node is assigned an ID from a large space (160-bit)
  - For each prefix length k, each node has a link to another node
    - Matching the first k bits
    - Not matching on bit k+1
  - Each key from the ID space is mapped to the node with the closest ID
  - Routing for a key: bit correction
    - Example: from 0101 for key 1001
    - 0101 → 1110 → 1000 → 1001
  - Many different algorithms with different properties
    - Pastry, Tapestry, CAN, Chord, SkipNet, etc.
    - Load balancing, robustness, etc.
- A DHT can be viewed as a mesh of trees
  - Routes from all nodes to a particular key form a tree
  - Different trees for different keys
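The bit-correction example on this slide (from 0101 for key 1001) can be traced with a short sketch. The routing table below is hypothetical and holds only the links the example needs. A real DHT such as Pastry keeps a fuller table and maps a key to the closest existing ID, whereas this sketch assumes the key is itself a node ID.

```python
from typing import Dict, List


def shared_prefix_len(a: str, b: str) -> int:
    """Number of leading bits on which two IDs agree."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n


# Hypothetical routing tables: ROUTING_TABLE[node][k] is a node that matches
# `node` on the first k bits and differs from it on bit k+1.
ROUTING_TABLE: Dict[str, Dict[int, str]] = {
    "0101": {0: "1110"},
    "1110": {1: "1000"},
    "1000": {3: "1001"},
    "1001": {},
}


def route(start: str, key: str) -> List[str]:
    """Follow links that each correct at least one more leading bit of the key."""
    path = [start]
    current = start
    while current != key:
        k = shared_prefix_len(current, key)
        next_hop = ROUTING_TABLE[current].get(k)
        if next_hop is None:
            break                      # no closer node known; stop here
        path.append(next_hop)
        current = next_hop
    return path


path = route("0101", "1001")
```

Because IDs are binary, a neighbor that differs from the current node on bit k+1 necessarily agrees with the key on that bit, so every hop lengthens the matched prefix and the loop terminates.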

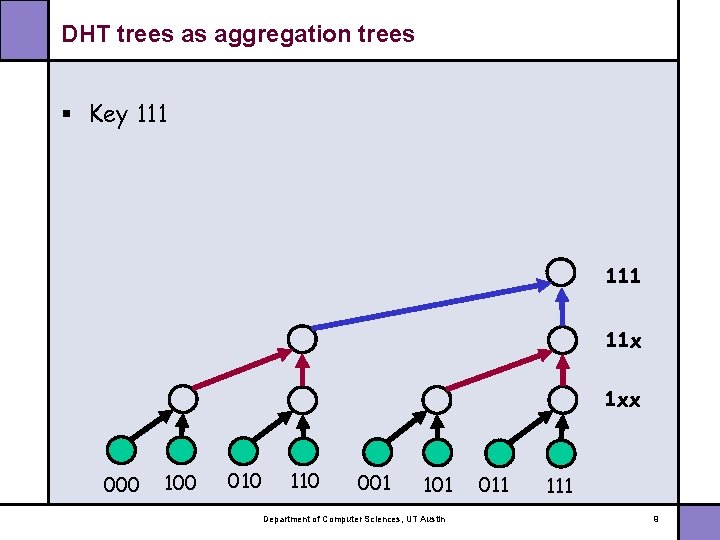

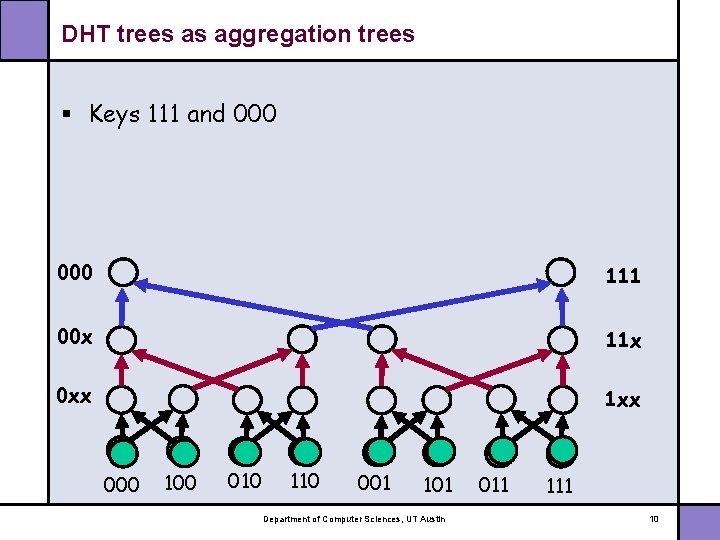

DHT trees as aggregation trees

- Key 111

[Figure: tree for key 111 over leaf nodes 000, 100, 010, 110, 001, 101, 011, 111, with virtual nodes labeled 1xx, 11x, and 111]

DHT trees as aggregation trees

- Keys 111 and 000

[Figure: two trees over the same leaf nodes, one for key 111 (virtual nodes labeled 1xx, 11x, 111) and one for key 000 (virtual nodes labeled 0xx, 00x)]
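The observation that routes toward a key form a tree, and that different keys yield different trees, can be checked with a toy model. The sketch assumes a fully populated 3-bit ID space and a simplified next-hop rule (correct the first differing bit). Both are illustrative assumptions, not the routing rule of any particular DHT.

```python
from itertools import product
from typing import Dict, Optional

# All eight 3-bit node IDs; a fully populated ID space is assumed.
NODES = ["".join(bits) for bits in product("01", repeat=3)]


def next_hop(node: str, key: str) -> Optional[str]:
    """Correct the first bit on which `node` differs from `key`."""
    for i, (n, k) in enumerate(zip(node, key)):
        if n != k:
            return node[:i] + k + node[i + 1:]
    return None                        # node is the root of the tree for this key


def build_tree(key: str) -> Dict[str, Optional[str]]:
    """Map every node to its parent on the route toward `key`."""
    return {node: next_hop(node, key) for node in NODES}


tree_111 = build_tree("111")   # rooted at node 111
tree_000 = build_tree("000")   # rooted at node 000
```

Each key has a different root and different interior nodes, so hashing attributes to keys spreads the aggregation load across machines instead of concentrating it on one root.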

API – Design Goals

- Expose scalable aggregation trees from the DHT
- Flexibility: expose several aggregation mechanisms
  - Attributes with different read-to-write ratios
    - "CPU load" changes often: a write-dominated attribute
      - Aggregate on every write → too much communication cost
    - "NumCPUs" changes rarely: a read-dominated attribute
      - Aggregate on reads → unnecessary latency
  - Spatial and temporal heterogeneity
    - Non-uniform and changing read-to-write rates across the tree
    - Example: a multicast session with changing membership
- Support sparse attributes of the same functionality efficiently
  - Examples: file location, multicast, etc.
  - Not all nodes are interested in all attributes

Design of Flexible API

- New abstraction: separate attribute type from attribute name
  - Attribute = (attribute type, attribute name)
  - Example: type="fileLocation", name="fileFoo"
- Install: an aggregation function for a type
  - Amortize installation cost across attributes of the same type
  - Arguments up and down control aggregation on update
- Update: the value of a particular attribute
  - Aggregation performed according to up and down
  - Aggregation along the tree with key = hash(Attribute)
- Probe: for an aggregated value at some level
  - If required, aggregation is done to produce this result
  - Two modes: one-shot and continuous
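The shape of the install, update, and probe calls can be sketched with a single-process toy. The class name `ToySDIMS`, the method signatures, and the "counter" attribute type are hypothetical and are not the SDIMS API. The sketch ignores the tree, the up and down arguments, and the probe modes, and simply aggregates on probe.

```python
from typing import Callable, Dict, List, Optional, Tuple

Attribute = Tuple[str, str]          # (attribute type, attribute name)


class ToySDIMS:
    """In-memory stand-in that aggregates lazily, at probe time."""

    def __init__(self) -> None:
        self.functions: Dict[str, Callable[[List[int]], int]] = {}
        self.values: Dict[Attribute, Dict[str, int]] = {}

    def install(self, attr_type: str, func: Callable[[List[int]], int]) -> None:
        # One function per type, shared by every attribute name of that type.
        self.functions[attr_type] = func

    def update(self, node_id: str, attr_type: str, attr_name: str, value: int) -> None:
        # Record the local value reported by one node for one attribute.
        self.values.setdefault((attr_type, attr_name), {})[node_id] = value

    def probe(self, attr_type: str, attr_name: str) -> Optional[int]:
        # Aggregate on demand over all reported values for this attribute.
        reported = self.values.get((attr_type, attr_name), {})
        if not reported:
            return None
        return self.functions[attr_type](list(reported.values()))


sdims = ToySDIMS()
sdims.install("counter", sum)                       # installed once for the type
sdims.update("nodeA", "counter", "numUsers", 3)
sdims.update("nodeB", "counter", "numUsers", 5)
sdims.update("nodeA", "counter", "numCPUs", 2)      # same type, different name
users = sdims.probe("counter", "numUsers")
```

Installing `sum` once and reusing it for both `numUsers` and `numCPUs` mirrors the idea of amortizing installation cost across attributes of the same type.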

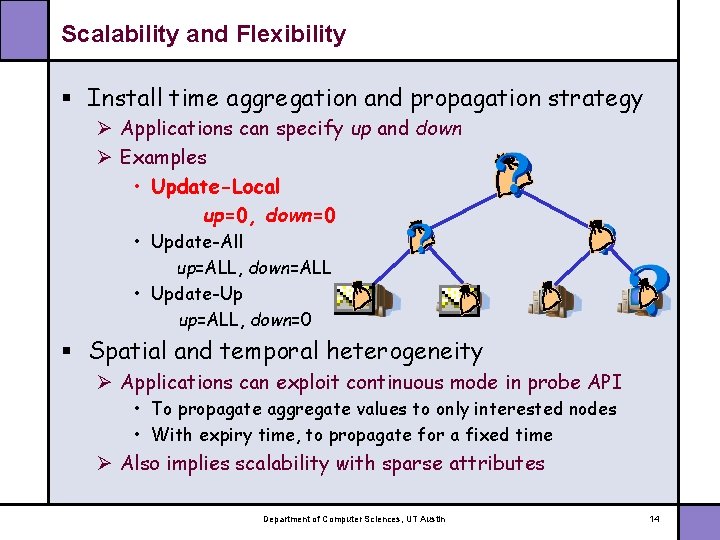

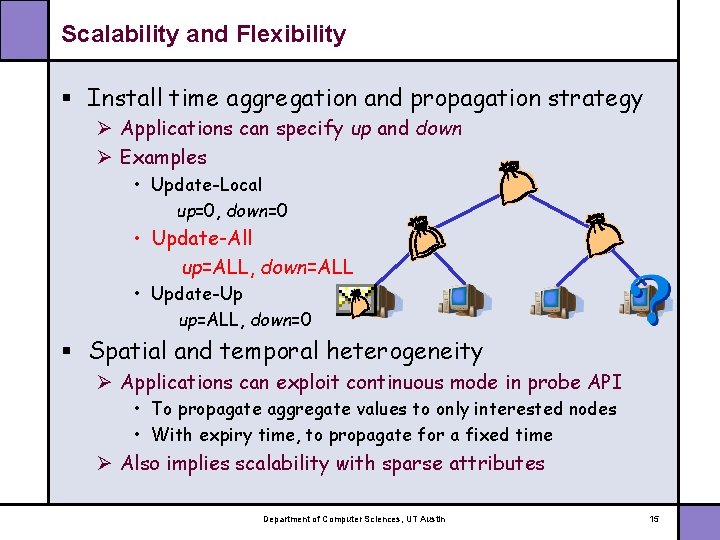

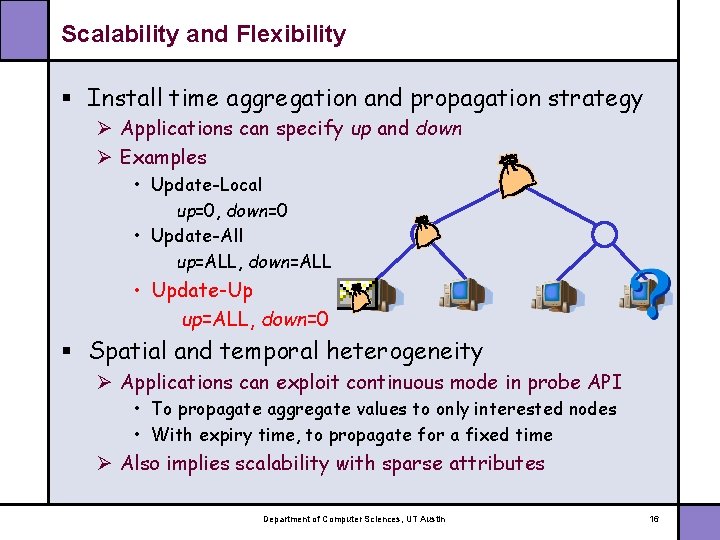

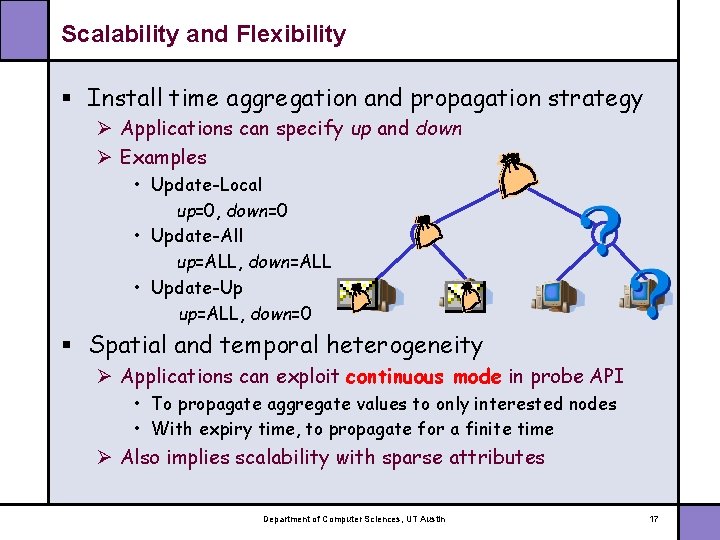

Scalability and Flexibility

- Install-time aggregation and propagation strategy
  - Applications can specify up and down
  - Examples
    - Update-Local: up=0, down=0
    - Update-All: up=ALL, down=ALL
    - Update-Up: up=ALL, down=0
- Spatial and temporal heterogeneity
  - Applications can exploit continuous mode in the probe API
    - To propagate aggregate values to only interested nodes
    - With an expiry time, to propagate for a fixed time
  - Also implies scalability with sparse attributes
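To get a feel for how up and down trade update cost against read latency, the following toy cost model counts messages triggered by a single leaf update. The model is an assumption made for illustration and does not come from the slides: it assumes a complete tree with a fixed fanout, one message per level climbed, and one message per node reached when an aggregate is pushed down. The function name and parameters are hypothetical.

```python
import math

ALL = math.inf

# The three named strategies from the slide, as (up, down) pairs.
STRATEGIES = {
    "Update-Local": (0, 0),
    "Update-All": (ALL, ALL),
    "Update-Up": (ALL, 0),
}


def messages_per_update(up: float, down: float, height: int, fanout: int = 2) -> int:
    """Count messages caused by one leaf update in a complete tree (toy model)."""
    levels_up = int(min(up, height))       # cannot climb past the root
    upward = levels_up                     # one message per level climbed
    downward = 0
    for level in range(1, levels_up + 1):
        depth = int(min(down, level))      # cannot push below the leaves
        # Nodes reached within `depth` levels below the level-`level` node.
        downward += sum(fanout ** j for j in range(1, depth + 1))
    return upward + downward


costs = {name: messages_per_update(up, down, height=3)
         for name, (up, down) in STRATEGIES.items()}
```

Under these assumptions Update-Local sends nothing on a write and pays at probe time, Update-Up pays one message per level, and Update-All pays the most on writes so that reads can be answered locally.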


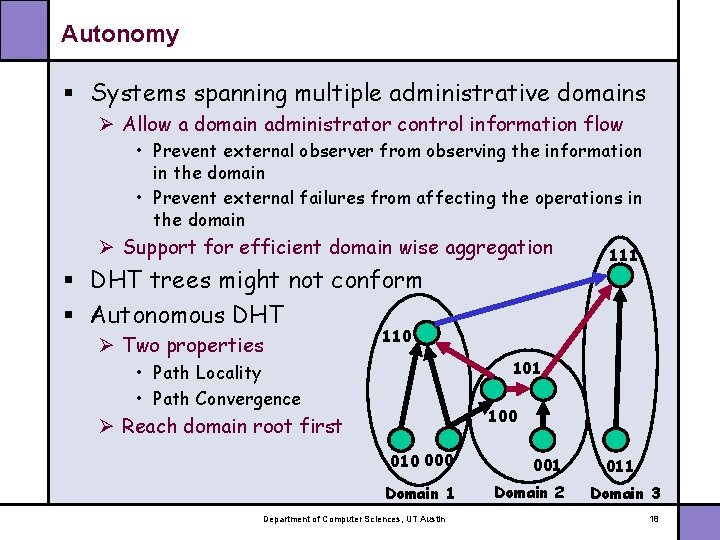

Autonomy

- Systems spanning multiple administrative domains
  - Allow a domain administrator to control information flow
    - Prevent external observers from observing information in the domain
    - Prevent external failures from affecting operations in the domain
  - Support efficient domain-wise aggregation
- DHT trees might not conform to domain boundaries
- Autonomous DHT
  - Two properties
    - Path Locality
    - Path Convergence
  - Reach domain root first

[Figure: nodes with 3-bit IDs grouped into administrative domains; the labels Domain 2 and Domain 3 are visible]

Outline

- SDIMS: a distributed operating system backbone
- Aggregation abstraction
- Our approach
  - Leverage Distributed Hash Tables
  - Separate attribute type from name
  - Flexible API
  - Prototype and evaluation
- Conclusions

Prototype and Evaluation

- SDIMS prototype
  - Built on top of FreePastry [Druschel et al., Rice U.]
  - Two layers
    - Bottom: Autonomous DHT
    - Top: Aggregation Management Layer
- Methodology
  - Simulation
    - Scalability
    - Flexibility
  - Prototype
    - Micro-benchmarks on real networks
      - PlanetLab
      - CS Department

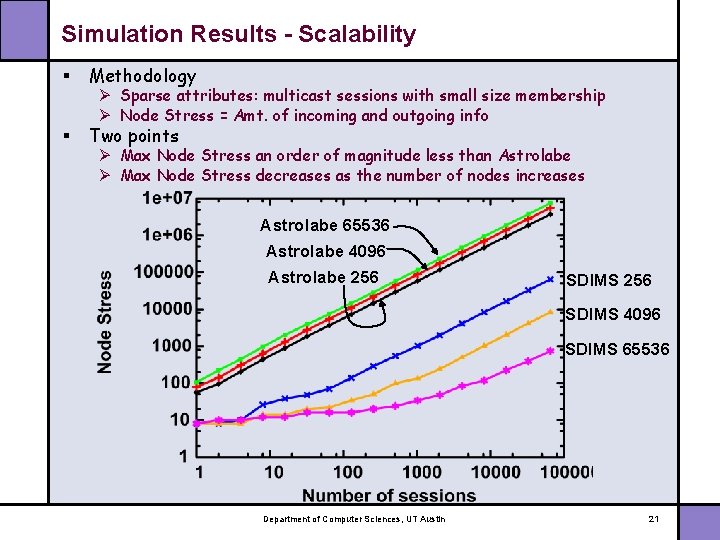

Simulation Results - Scalability

- Methodology
  - Sparse attributes: multicast sessions with small membership
  - Node stress = amount of incoming and outgoing information
- Two points
  - Maximum node stress is an order of magnitude lower than Astrolabe's
  - Maximum node stress decreases as the number of nodes increases

[Figure: node stress comparison; legend: Astrolabe 65536, Astrolabe 4096, Astrolabe 256, SDIMS 4096, SDIMS 65536]

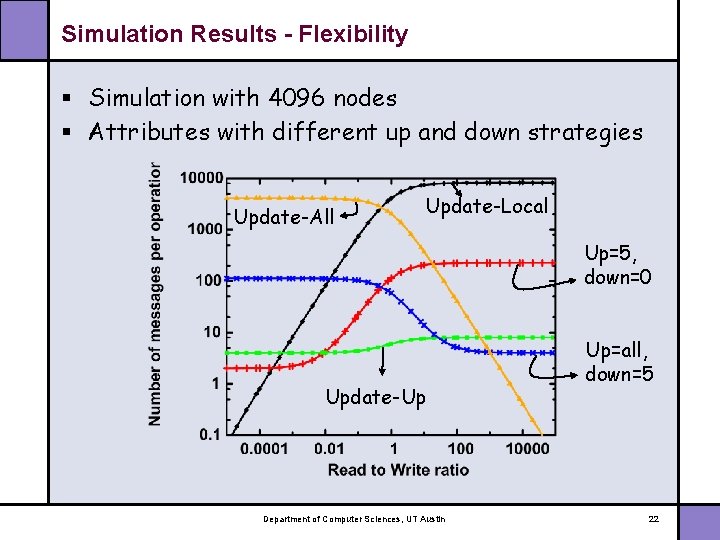

Simulation Results - Flexibility

- Simulation with 4096 nodes
- Attributes with different up and down strategies

[Figure: comparison of strategies; legend: Update-All, Update-Local, Update-Up, up=5 down=0, up=ALL down=5]

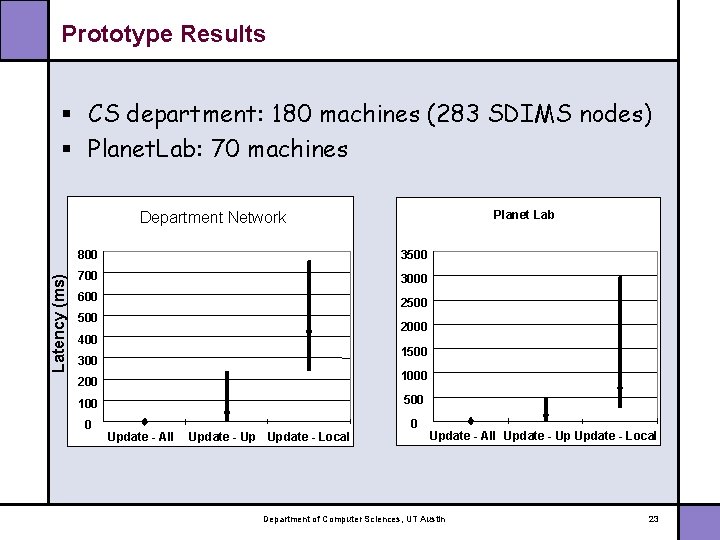

Prototype Results

- CS department: 180 machines (283 SDIMS nodes)
- PlanetLab: 70 machines

[Figure: latency (ms) of Update-All, Update-Up, and Update-Local on the department network and on PlanetLab]

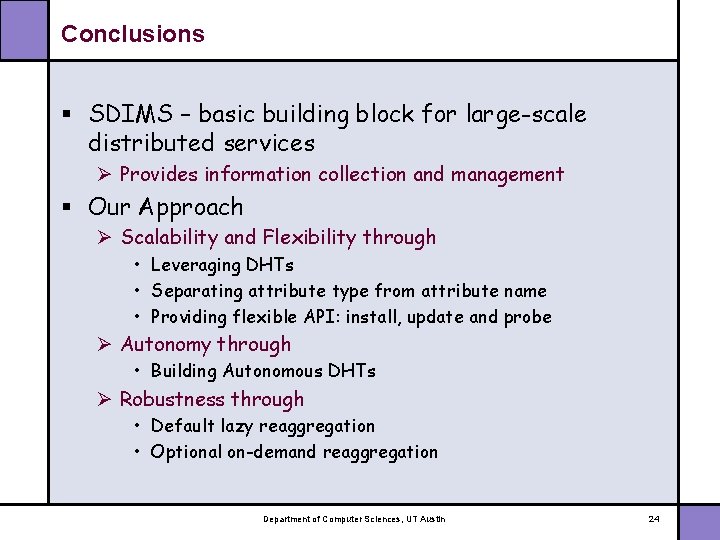

Conclusions

- SDIMS: a basic building block for large-scale distributed services
  - Provides information collection and management
- Our approach
  - Scalability and flexibility through
    - Leveraging DHTs
    - Separating attribute type from attribute name
    - Providing a flexible API: install, update, and probe
  - Autonomy through
    - Building Autonomous DHTs
  - Robustness through
    - Default lazy re-aggregation
    - Optional on-demand re-aggregation

For more information: http://www.cs.utexas.edu/users/ypraveen/sdims
