
SCRAMBLE: Secure, Real-Time Decision-Making for the Autonomous Battlefield
Saurabh Bagchi
Director, Center for Resilient Infrastructures, Systems and Processes (CRISP), Purdue University
PI: Army Research Lab (ARL) 5-year project in its AI Assured Autonomy Institute (A2I2)
Co-PIs: David Inouye, Somali Chaterji, Mung Chiang (Purdue); Prateek Mittal (Princeton)
NGC Tech Champions: Larry Deatrick, Jim MacDonald, Paul Conoval

Problem Context
• Many security- and mission-critical applications for civilian relief and rescue involve autonomous operations among multiple cyber and physical assets, together with interactions with humans
• Such autonomous operation will rely on:
  – A pipeline of machine learning (ML) algorithms
  – Executing in real time on
  – A distributed set of heterogeneous platforms
• Conditions will be adversarial, and operation must be guaranteed to be secure while maintaining timeliness guarantees
  – Security guarantees need to be carefully analyzed and proven under rigorously quantified adversary models
  – Move away from one-off solutions for specific attack types
• Different degrees of autonomy
  – Some require humans in the loop; in such cases, the cognitive load of any software solution must be analyzed for feasibility
  – Some require humans on the loop
  – Some are fully autonomous agents

Goals and Deliverables
• Fundamental research contributions in
  – Theory of secure autonomous operations
  – Theory of distributed execution of autonomous algorithms
  – Interpretability and other human-machine interactions of autonomous algorithms
• Practical demonstrations on real-world testbeds at Purdue and at ARL
  – Ground-based static sensors, ground-based and aerial mobile nodes, distributed, more powerful edge computing nodes, and back-end servers
• A robust, scalable, usable software suite that can execute on today's standard and custom hardware platforms:
  – Evaluated with respect to security, timing, and resource usage properties
  – Adversarial models conceptualized and instantiated
SCRAMBLE (SeCure Real-time decision-Making for the AutonoMous BattLEfield)

Sample Autonomy Pipelines
• Multiple ML algorithms in a pipeline
  – Different resource requirements, different input-output patterns
  – Can be required to execute on vastly different platforms
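
The placement problem such a pipeline creates can be sketched, under heavily simplified assumptions, as a greedy assignment: each stage, in order, goes to the platform that can hold its model and minimizes transfer time (from the previous stage's platform) plus compute time. The `Platform`/`Stage` fields, all numbers, the link bandwidths, and the greedy rule itself are hypothetical illustrations, not the project's scheduler; the rule is myopic and ignores queuing, pipelining, and contention.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Platform:          # hypothetical platform description
    name: str
    mem_gb: float        # memory available to one stage
    gflops: float        # sustained compute throughput (GFLOP/s)

@dataclass(frozen=True)
class Stage:             # hypothetical pipeline stage description
    name: str
    mem_gb: float        # model memory footprint
    gflop: float         # compute per input item
    out_mb: float        # output size handed to the next stage

def place_pipeline(stages, platforms, source, raw_mb, bandwidth):
    """Greedily place stages in order; bandwidth(a, b) returns MB/s between platforms."""
    placement, latency = [], 0.0
    prev, in_mb = source, raw_mb
    for st in stages:
        best = None
        for p in platforms:
            if p.mem_gb < st.mem_gb:
                continue  # the stage's model does not fit on this platform
            transfer = 0.0 if p == prev else in_mb / bandwidth(prev, p)
            cost = transfer + st.gflop / p.gflops
            if best is None or cost < best[0]:
                best = (cost, p)
        if best is None:
            raise ValueError(f"no platform can host stage {st.name!r}")
        latency += best[0]
        prev, in_mb = best[1], st.out_mb
        placement.append((st.name, prev.name))
    return placement, latency

# Hypothetical heterogeneous platforms and symmetric link bandwidths (MB/s).
sensor = Platform("sensor", 0.5, 2.0)
edge = Platform("edge", 4.0, 50.0)
server = Platform("server", 64.0, 500.0)
links = {("edge", "sensor"): 10.0, ("edge", "server"): 5.0, ("sensor", "server"): 1.0}

def bandwidth(a, b):
    return links[tuple(sorted((a.name, b.name)))]

stages = [
    Stage("denoise", 0.1, 0.2, 0.5),
    Stage("detector", 2.0, 20.0, 0.01),
    Stage("fusion", 16.0, 100.0, 0.001),
]
placement, latency = place_pipeline(stages, [sensor, edge, server], sensor, 5.0, bandwidth)
print(placement, f"{latency:.3f} s")
```

With these made-up numbers the lightweight stage stays on the sensor, the detector moves to the edge node (the slow sensor-to-server link makes the server more expensive), and only the memory-hungry fusion stage reaches the server.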

Requirements
1. ML algorithms must be capable of
  – Executing on a distributed set of execution platforms (mobile nodes, ground-based or aerial sensors, edge computing nodes, private cloud nodes, etc.)
  – Being trained both offline and in the field
  – Tolerating varying amounts of noise, whether from naturally occurring causes or from maliciously injected errors
2. Autonomous algorithms must interface well with humans who may need to act on their decisions
  – Interpretable and explainable at the tactical level, in real time
  – Interpretable and explainable at the strategic level, so that a commander may make modifications for future missions

Requirements
3. Probabilistic guarantees
  – On accuracy and latency
  – Guarantees must hold under adversarial actions
  – Guarantees must hold under both batch-mode and incremental training
4. Algorithms must be able to ingest heterogeneous sources of data
  – Data sources will vary in their fidelity, rate, and characteristics
  – These data sources will be intermixed, coming from white, blue, and red networks
  – In the process of inferencing, the algorithms also tag data sources with their trust level, so that future decision making becomes more accurate
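
For the latency half of requirement 3, one standard baseline (not necessarily the project's method) turns n measured end-to-end latencies into a guarantee with a one-sided Hoeffding bound: if p̂ is the fraction of runs that met the deadline, then with confidence at least 1 - δ the true on-time probability is at least p̂ - √(ln(1/δ) / (2n)). The bound assumes independent, identically distributed measurements, which an adversary able to influence timing may violate, so it does not by itself satisfy the "under adversarial actions" clause. The function name, the 80 ms deadline, and the log-normal synthetic latencies are hypothetical.

```python
import math
import random

def certify_deadline(latencies_ms, deadline_ms, target, delta):
    """One-sided Hoeffding lower bound on P(latency <= deadline).

    Returns (certified, lower_bound); certified is True when, with confidence
    at least 1 - delta, the on-time probability is at least `target`.
    Assumes i.i.d. latency samples (a strong assumption under attack).
    """
    n = len(latencies_ms)
    p_hat = sum(1 for t in latencies_ms if t <= deadline_ms) / n
    eps = math.sqrt(math.log(1.0 / delta) / (2.0 * n))
    lower = p_hat - eps
    return lower >= target, lower

# Hypothetical measurements: median 40 ms, log-normal spread.
rng = random.Random(7)
latencies = [rng.lognormvariate(math.log(40.0), 0.25) for _ in range(5000)]
ok95, lb = certify_deadline(latencies, 80.0, target=0.95, delta=0.01)
ok99, _ = certify_deadline(latencies, 80.0, target=0.99, delta=0.01)
print(f"lower bound {lb:.4f}; certified 95%: {ok95}; certified 99%: {ok99}")
```

The additive margin shrinks only as 1/√n, so certifying a tighter target (e.g., 99% rather than 95%) requires many more measurements even when the observed on-time rate already exceeds the target.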

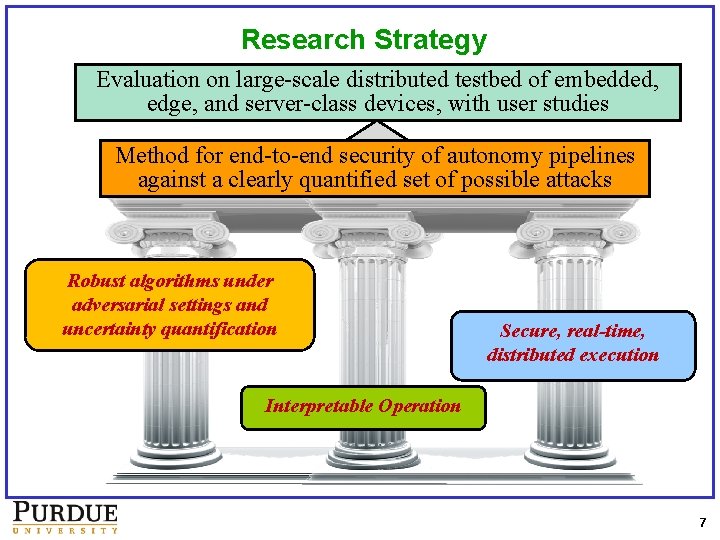

Research Strategy
• Evaluation on a large-scale distributed testbed of embedded, edge, and server-class devices, with user studies
• Method for end-to-end security of autonomy pipelines against a clearly quantified set of possible attacks
• Robust algorithms under adversarial settings, and uncertainty quantification
• Secure, real-time, distributed execution
• Interpretable operation

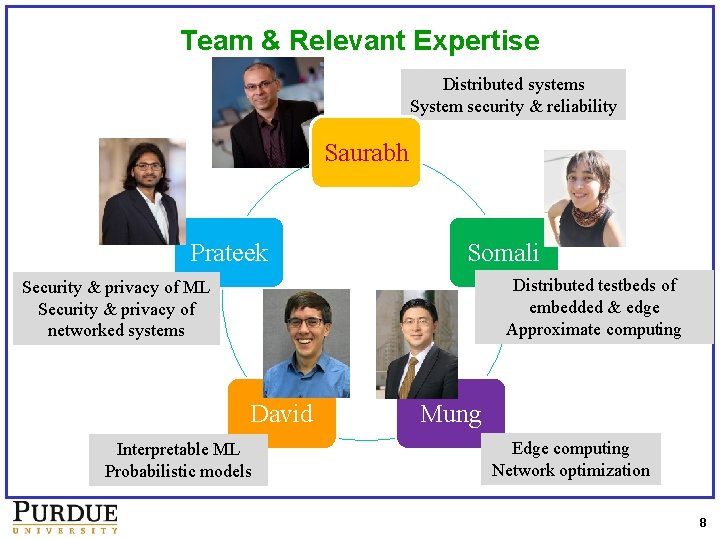

Team & Relevant Expertise
• Saurabh: Distributed systems; system security & reliability
• Prateek: Security & privacy of ML; security & privacy of networked systems
• Somali: Distributed testbeds of embedded & edge; approximate computing
• David: Interpretable ML; probabilistic models
• Mung: Edge computing; network optimization

Sample Result: NeurIPS 2020 Paper
• "Feature Shift Detection: Localizing Which Features Have Shifted via Conditional Distribution Tests"
• Sean M. Kulinski, Saurabh Bagchi, and David I. Inouye. Accepted to appear in NeurIPS 2020 (acceptance rate: 1900/9454 = 20.1%)
• Work directly supported by the NGCRC funding
1. Detect whether a distribution shift has occurred in a time series
2. Detect whether the shift is due to a conditional distribution change
3. Perform this through a test statistic based on the density model's score function (i.e., the gradient with respect to the input)
4. Perform this efficiently, with the test statistics for all dimensions calculated in a single forward and backward pass
5. Perform localization to determine which sensor(s) are compromised
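
Steps 3 to 5 can be illustrated with a minimal sketch under a deliberately simple assumption: Gaussian density models fit separately to a reference window and a query window. Their score function ∇ₓ log N(x; μ, Σ) = -Σ⁻¹(x - μ) is available in closed form, so every per-feature component comes out of one matrix-vector product (the analogue of computing all dimensions in one pass). The key identity is ∂/∂xⱼ log p(x) = ∂/∂xⱼ log p(xⱼ | x₋ⱼ), since log p(x₋ⱼ) does not depend on xⱼ; a score difference in component j therefore reflects a change in feature j's conditional distribution. The per-feature statistic used here (mean squared score difference over the pooled samples), the function names, and the synthetic three-sensor data are illustrative assumptions, not the paper's implementation.

```python
import random

def mean_vector(X):
    n, d = len(X), len(X[0])
    return [sum(row[j] for row in X) / n for j in range(d)]

def precision_matrix(X, mu, ridge=1e-6):
    """Inverse of the ridge-regularized sample covariance, via Gauss-Jordan elimination."""
    n, d = len(X), len(X[0])
    cov = [[sum((r[i] - mu[i]) * (r[k] - mu[k]) for r in X) / n + (ridge if i == k else 0.0)
            for k in range(d)] for i in range(d)]
    # Row-reduce [cov | I] to [I | cov^-1] with partial pivoting.
    aug = [row[:] + [1.0 if i == k else 0.0 for k in range(d)] for i, row in enumerate(cov)]
    for c in range(d):
        pivot = max(range(c, d), key=lambda r: abs(aug[r][c]))
        aug[c], aug[pivot] = aug[pivot], aug[c]
        p = aug[c][c]
        aug[c] = [v / p for v in aug[c]]
        for r in range(d):
            if r != c:
                f = aug[r][c]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[c])]
    return [row[d:] for row in aug]

def gaussian_score(x, mu, prec):
    """Score grad_x log N(x; mu, Sigma) = -Sigma^{-1} (x - mu)."""
    diff = [xi - mi for xi, mi in zip(x, mu)]
    return [-sum(pij * dj for pij, dj in zip(row, diff)) for row in prec]

def feature_shift_statistics(reference, query):
    """Hypothetical per-feature statistic: mean squared score difference on pooled data."""
    mu_p, mu_q = mean_vector(reference), mean_vector(query)
    prec_p, prec_q = precision_matrix(reference, mu_p), precision_matrix(query, mu_q)
    pooled = reference + query
    stats = [0.0] * len(mu_p)
    for x in pooled:
        sp = gaussian_score(x, mu_p, prec_p)
        sq = gaussian_score(x, mu_q, prec_q)
        for j, (a, b) in enumerate(zip(sp, sq)):
            stats[j] += (a - b) ** 2
    return [s / len(pooled) for s in stats]

def sample(n, x2_offset, rng):
    """Synthetic sensors: x1 is coupled to x0; x2 is independent of both."""
    rows = []
    for _ in range(n):
        x0 = rng.gauss(0.0, 1.0)
        x1 = x0 + rng.gauss(0.0, 0.5)
        x2 = rng.gauss(x2_offset, 1.0)
        rows.append([x0, x1, x2])
    return rows

rng = random.Random(0)
ref = sample(2000, 0.0, rng)
qry = sample(2000, 3.0, rng)   # hypothetical compromise: sensor 2 reports an offset
stats = feature_shift_statistics(ref, qry)
suspect = max(range(len(stats)), key=stats.__getitem__)
print([round(s, 3) for s in stats], "-> localized feature:", suspect)
```

In this Gaussian sketch, a change in one feature's conditional can also raise the statistic of features strongly coupled to it, because their conditionals change too; the example therefore shifts a sensor that is independent of the others, where localization is unambiguous.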

Sample Result: AAAI 21 Submission
• "Exclusion-Inclusion Generative Adversarial Nets"
• Sheikh Shams Azam, Taejin Kim (CMU), Seyyedali Hosseinalipour, Christopher Brinton, Carlee Joe-Wong (CMU), and Saurabh Bagchi. Submitted to AAAI 2021, submission date: September 2020.
• Work directly supported by the NGCRC funding
1. Encode data so that the adversary's objective is thwarted while the ally's objective is enabled: EIGAN
2. Encoding is done in a distributed manner, such as at each sensor, without inter-node communication: D-EIGAN
3. Key idea: use game theory with multiple players on top of a GAN framework (which naturally includes encoders and decoders)
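
The exclusion-inclusion idea in item 1 can be illustrated with a deliberately tiny toy game that is not EIGAN itself. The encoder is restricted to projecting a two-feature record x = (x₀, x₁) onto one direction at angle θ; the ally wants to recover x₀, the adversary wants to recover the private feature x₁, and both best-respond with closed-form linear least squares instead of trained networks. The encoder then chooses θ by grid search to minimize (ally error) - λ·(adversary error). The weight λ, the function names, and the synthetic data are hypothetical.

```python
import math
import random

def best_linear_mse(z, y):
    """MSE of the best affine predictor of y from scalar z (closed-form least squares)."""
    n = len(z)
    mz, my = sum(z) / n, sum(y) / n
    vz = sum((a - mz) ** 2 for a in z) / n
    vy = sum((b - my) ** 2 for b in y) / n
    czy = sum((a - mz) * (b - my) for a, b in zip(z, y)) / n
    return vy - czy ** 2 / vz

def encoder_objective(theta, data, lam):
    """Hypothetical encoder cost: ally error minus lam * adversary error, after both best-respond."""
    z = [math.cos(theta) * x0 + math.sin(theta) * x1 for x0, x1 in data]
    ally_mse = best_linear_mse(z, [x0 for x0, _ in data])   # ally's target: x0
    adv_mse = best_linear_mse(z, [x1 for _, x1 in data])    # adversary's target: x1
    return ally_mse - lam * adv_mse

rng = random.Random(1)
data = [(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(2000)]
thetas = [k * math.pi / 90 for k in range(90)]   # 2-degree grid over [0, pi)
best = min(thetas, key=lambda t: encoder_objective(t, data, lam=1.0))
print(f"best encoder angle: {math.degrees(best):.1f} degrees")
```

With independent features and λ = 1, the search settles near θ = 0 (equivalently θ = π): the encoder keeps x₀ for the ally and discards x₁. EIGAN instead builds the multi-player game on top of a GAN framework with learned encoders, and D-EIGAN additionally performs the encoding at each sensor without inter-node communication.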

Takeaways
• Two NGCRC projects led to fundamental results in:
  1. Anomaly detection and localization in time-series sensor data
  2. Privacy-preserving adversarial representations that reduce the effectiveness of adversary inference while increasing inference quality for allies
• New ARL project, as part of its Institute for Assured Autonomy, to lead toward secure multi-domain operations
• Project will extend the state of the art in:
  – Theory of secure autonomous operations
  – Theory of distributed execution of autonomous algorithms
  – Interpretability and other human-machine interactions of autonomous algorithms
• Usable distributed software prototype
• Software capable of executing on sensor, edge, and server nodes
• Quantified adversary models and instantiations
