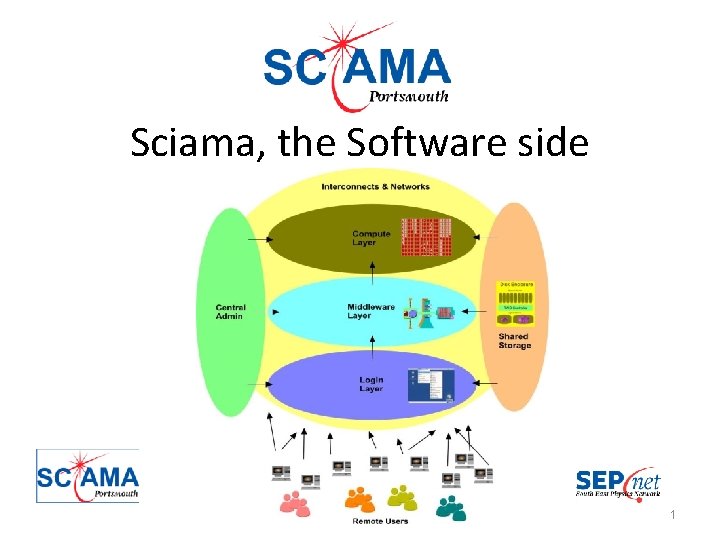


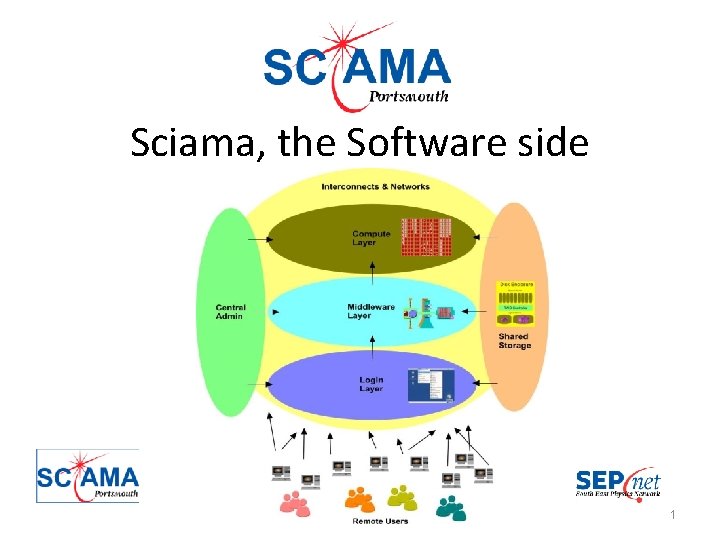

Sciama, the Software Side

Agenda

- Memory Models
- Running Jobs, Selecting Packages and Compiling
- OpenMP
- MPI
- OpenMP / MPI mixed mode
- Sciama Information

Memory Models

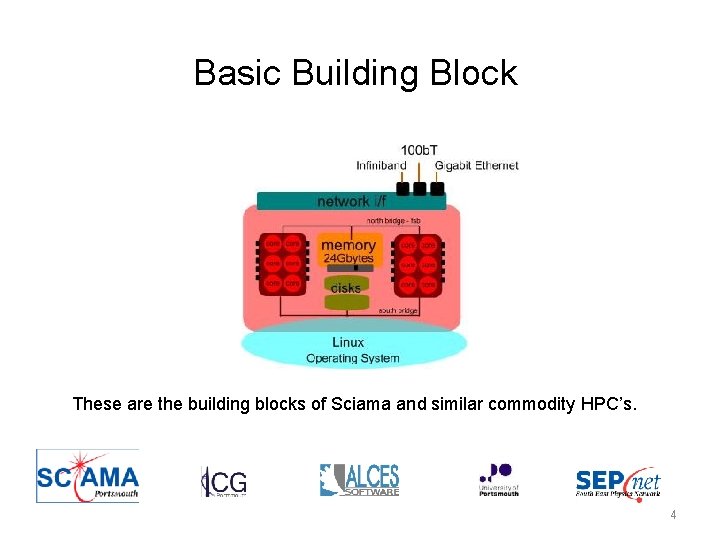

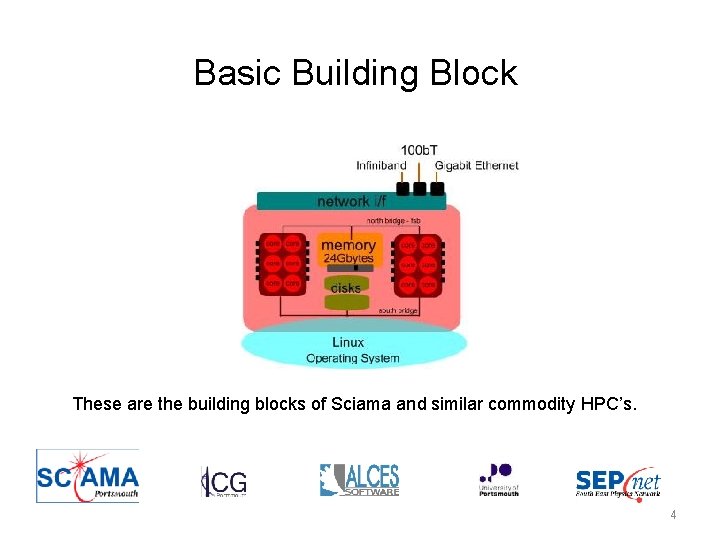

Basic Building Block

These are the building blocks of Sciama and similar commodity HPC systems.

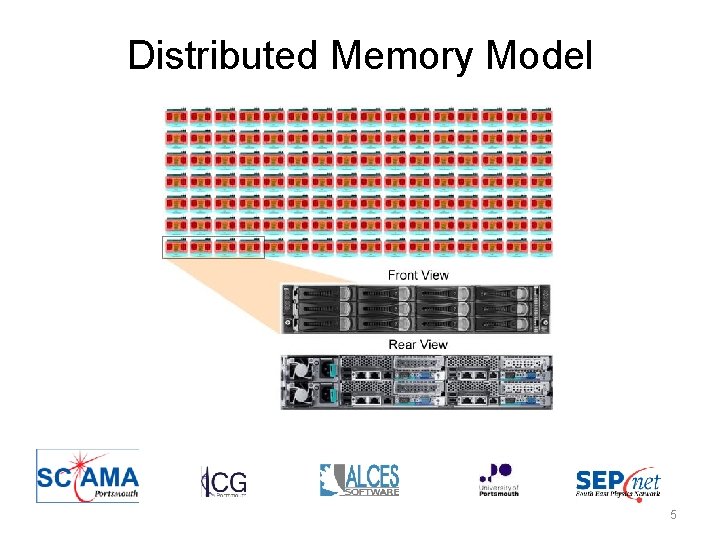

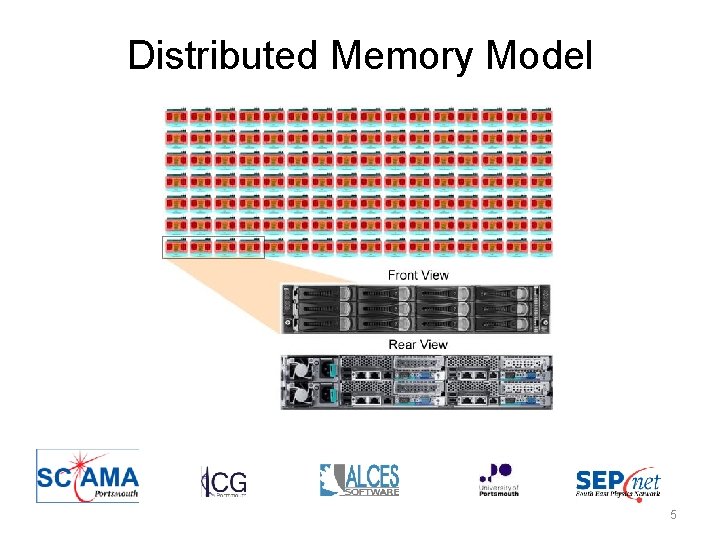

Distributed Memory Model

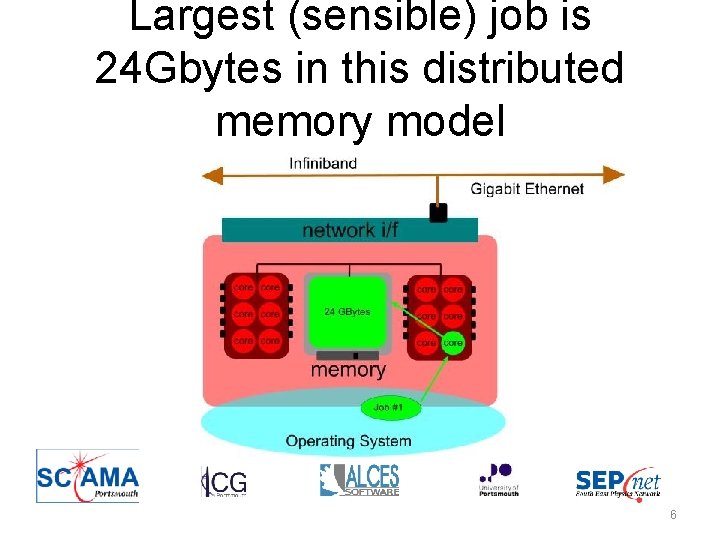

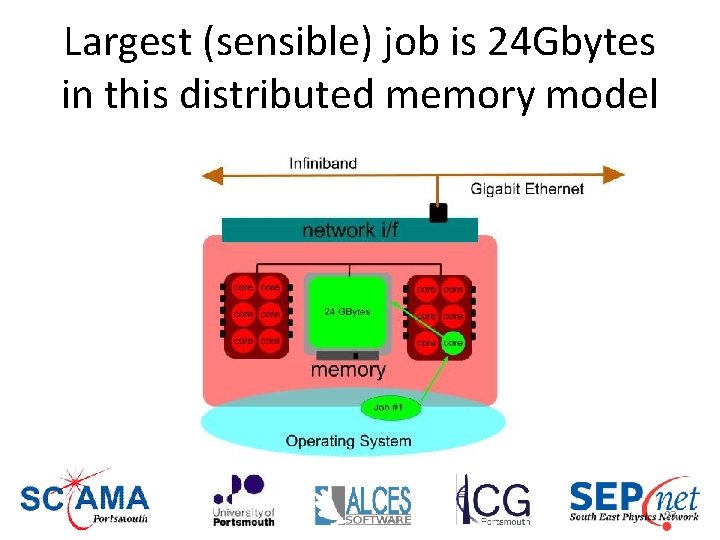

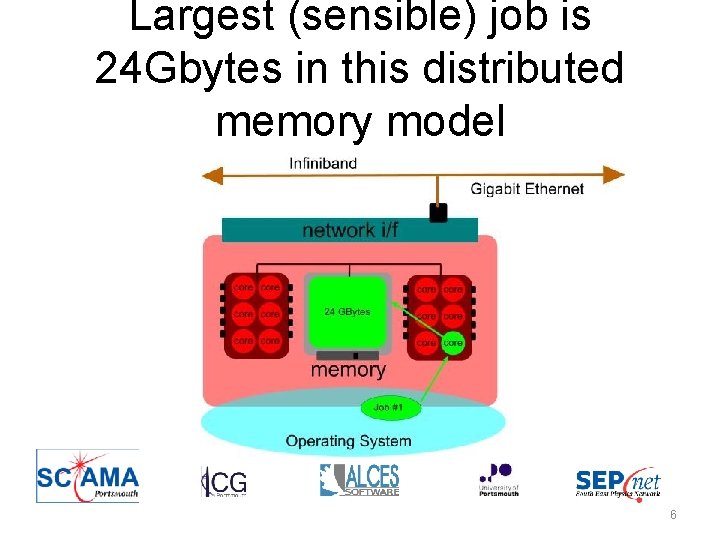

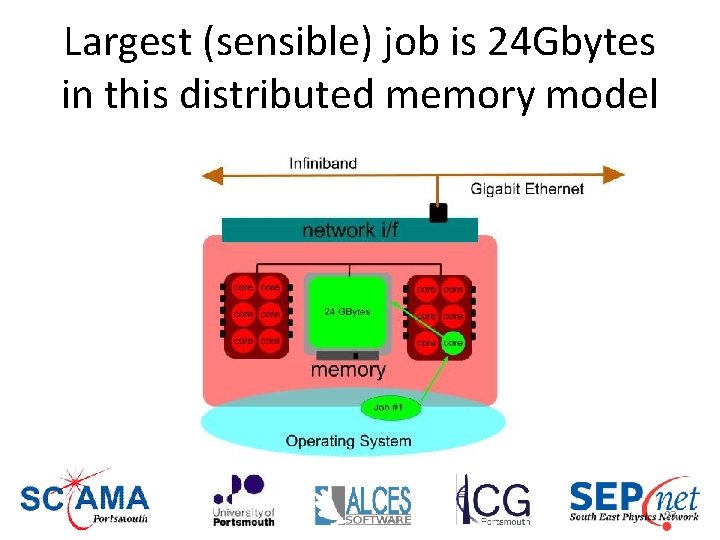

The largest (sensible) job is 24 GB in this distributed memory model

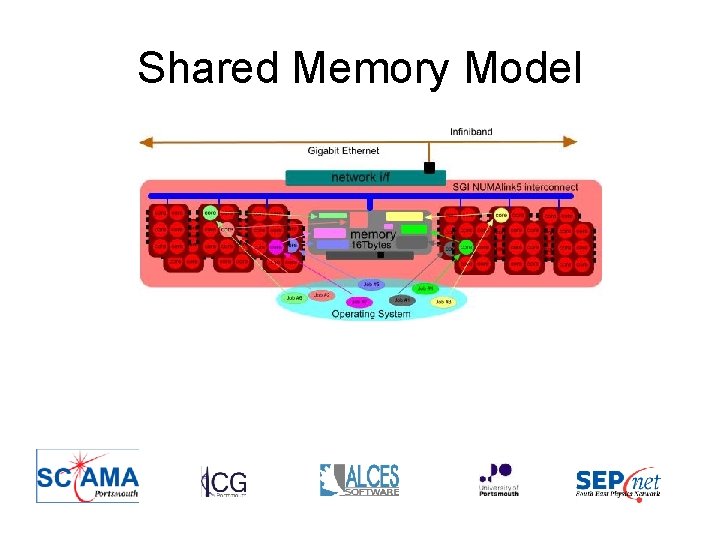

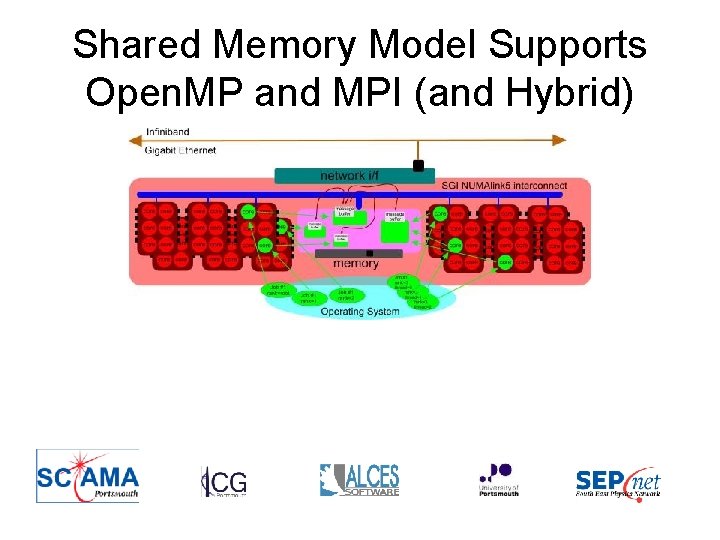

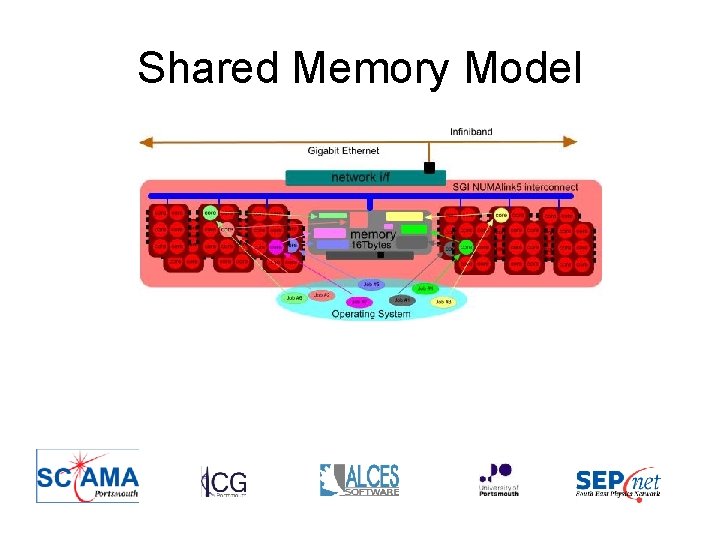

Shared Memory Model

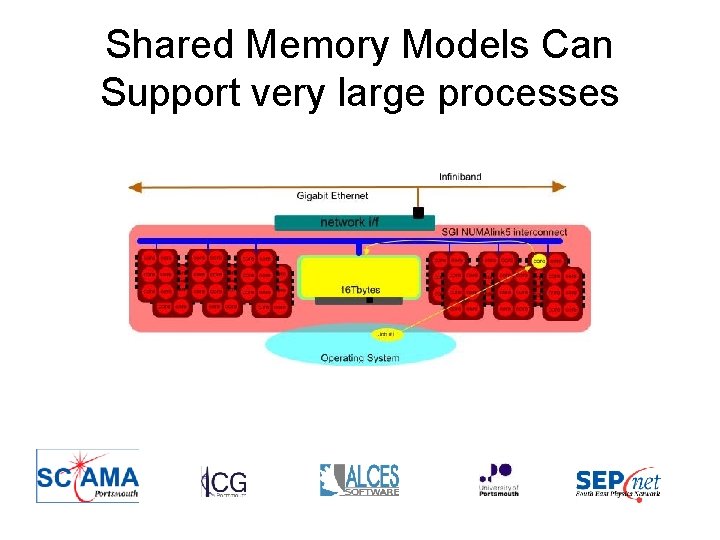

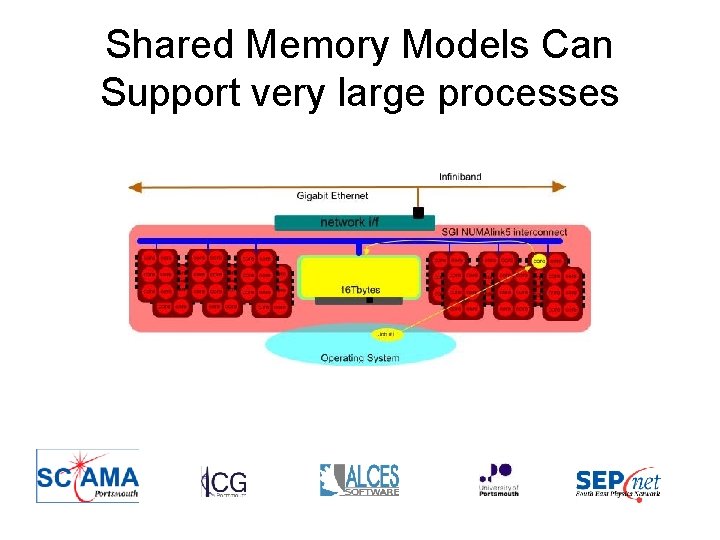

Shared memory models can support very large processes

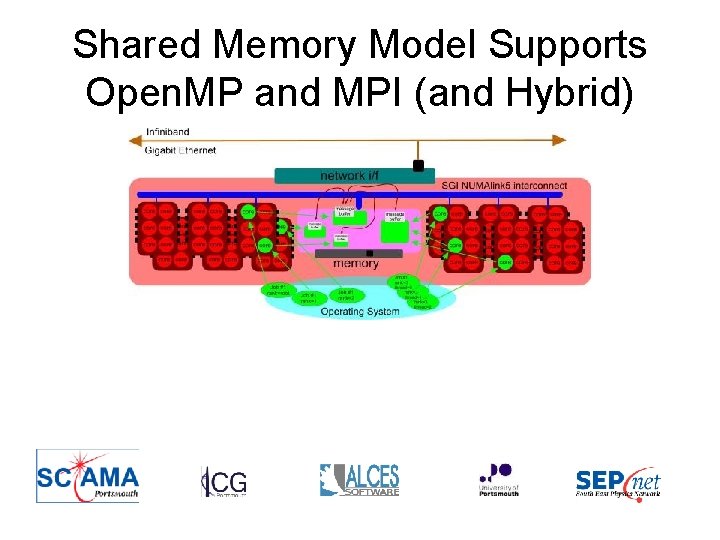

The shared memory model supports OpenMP and MPI (and hybrid)

Running Jobs, Selecting Packages and Compiling

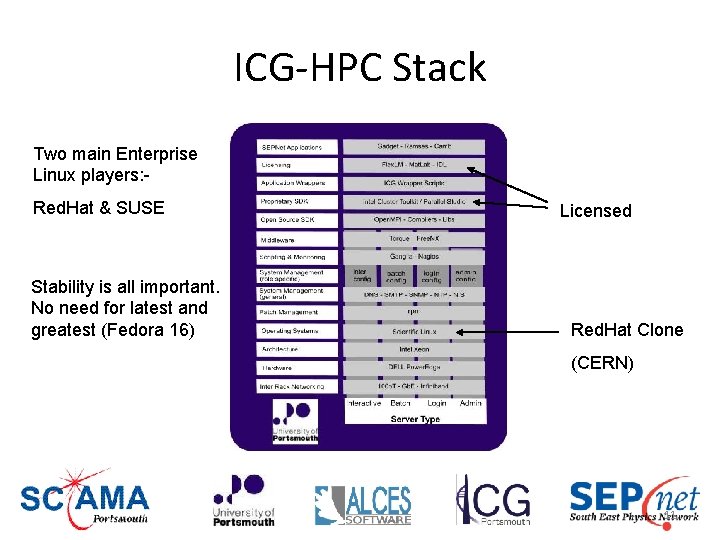

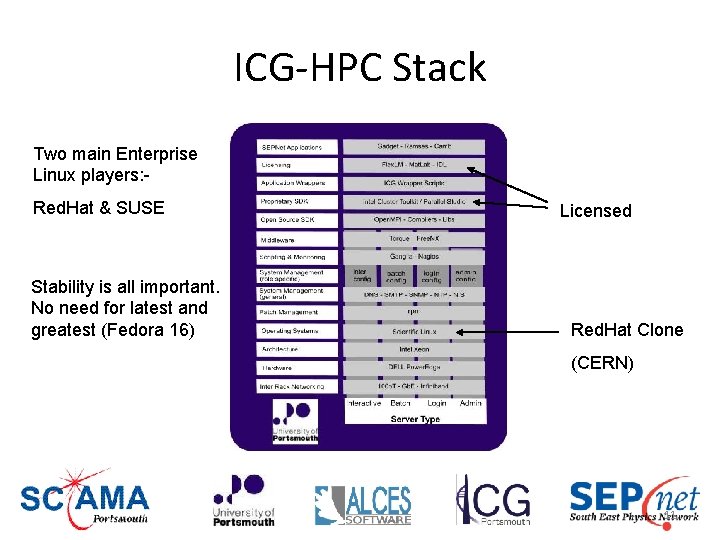

ICG-HPC Stack

- Two main Enterprise Linux players: Red Hat and SUSE
- Stability is all-important. No need for the latest and greatest (Fedora 16)
- Licensed Red Hat clone (CERN)

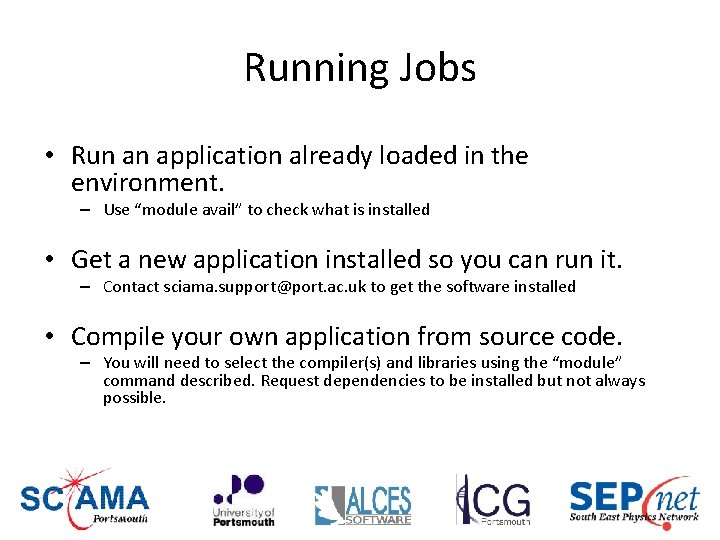

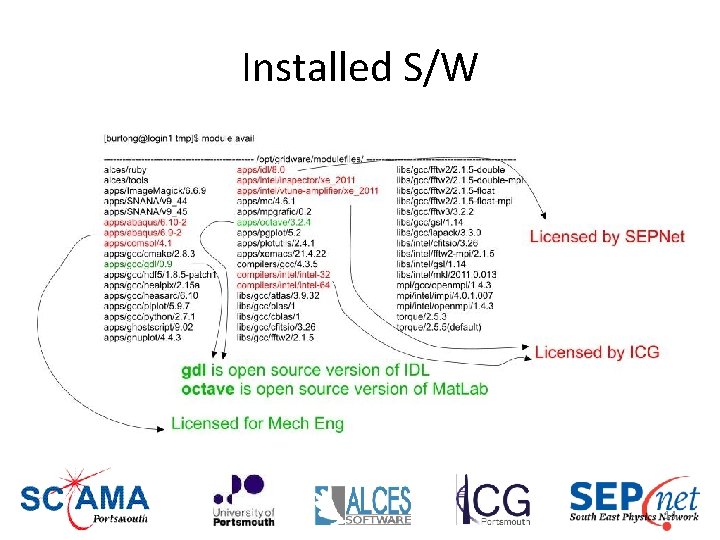

Running Jobs

- Run an application already loaded in the environment.
  - Use “module avail” to check what is installed.
- Get a new application installed so you can run it.
  - Contact sciama.support@port.ac.uk to get the software installed.
- Compile your own application from source code.
  - You will need to select the compiler(s) and libraries using the “module” command. You can request that dependencies be installed, but this is not always possible.

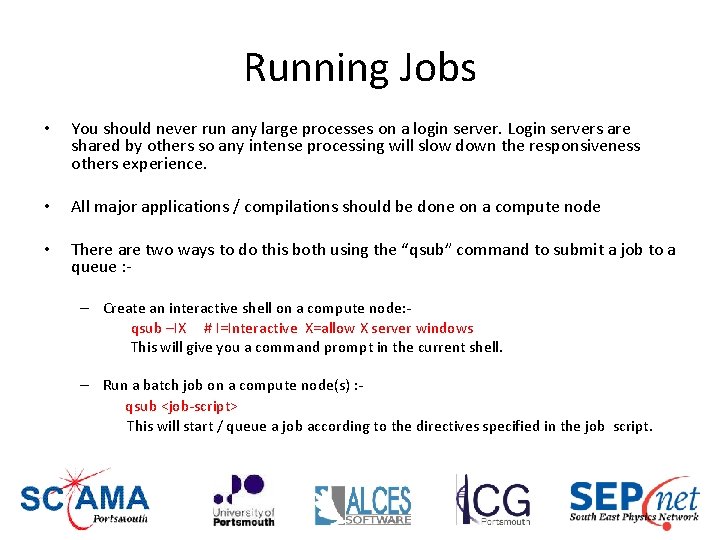

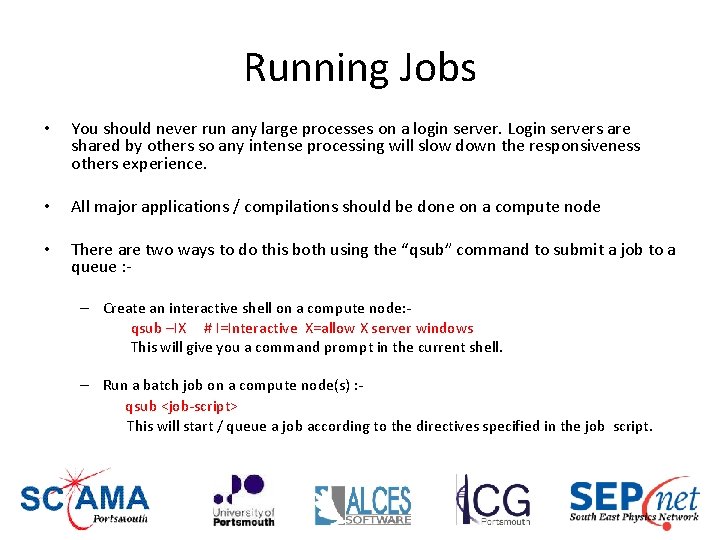

Running Jobs

- You should never run any large processes on a login server. Login servers are shared, so any intense processing slows down the responsiveness that other users experience.
- All major applications and compilations should be run on a compute node.
- There are two ways to do this, both using the “qsub” command to submit a job to a queue:
  - Create an interactive shell on a compute node: “qsub -IX” (I = interactive, X = allow X server windows). This gives you a command prompt in the current shell.
  - Run a batch job on one or more compute nodes: “qsub <job-script>”. This starts or queues a job according to the directives specified in the job script.

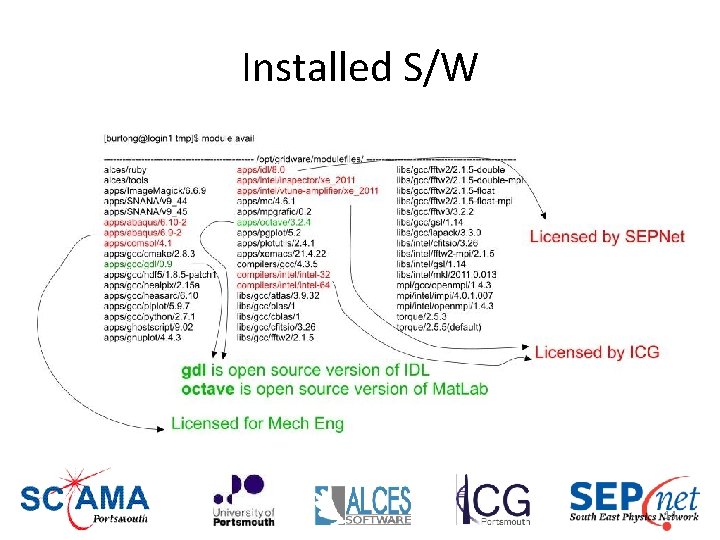

Installed S/W

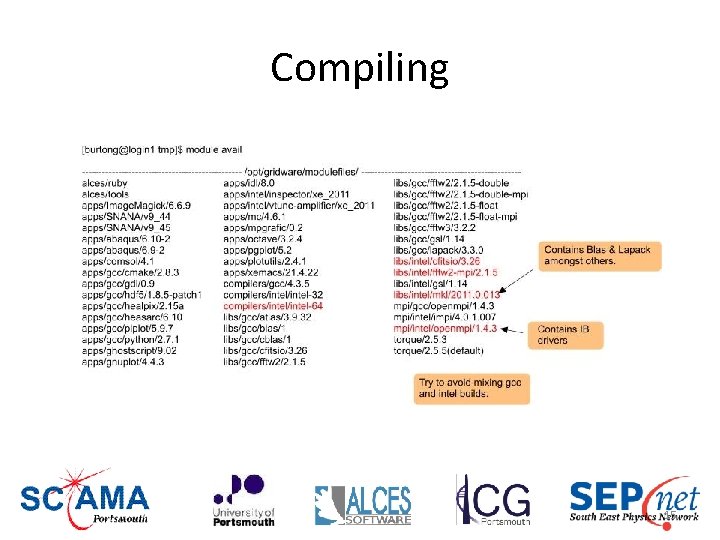

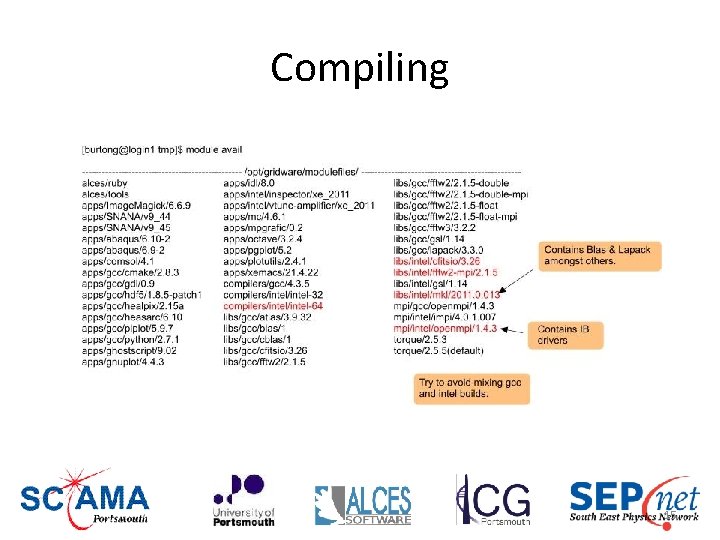

Compiling

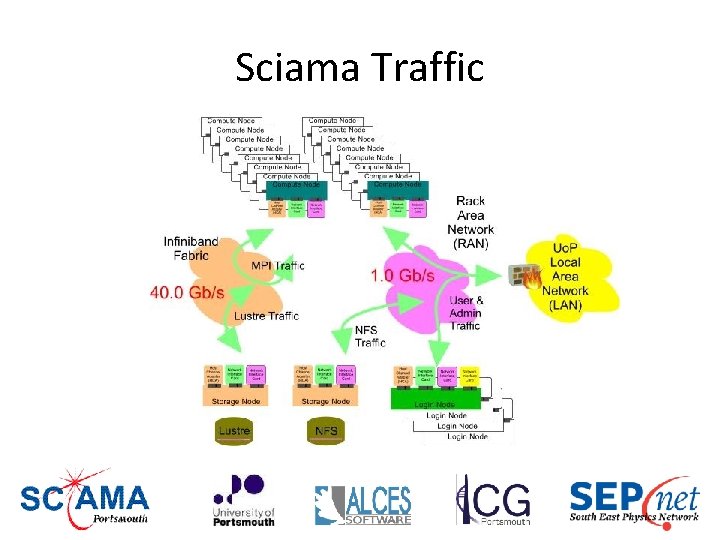

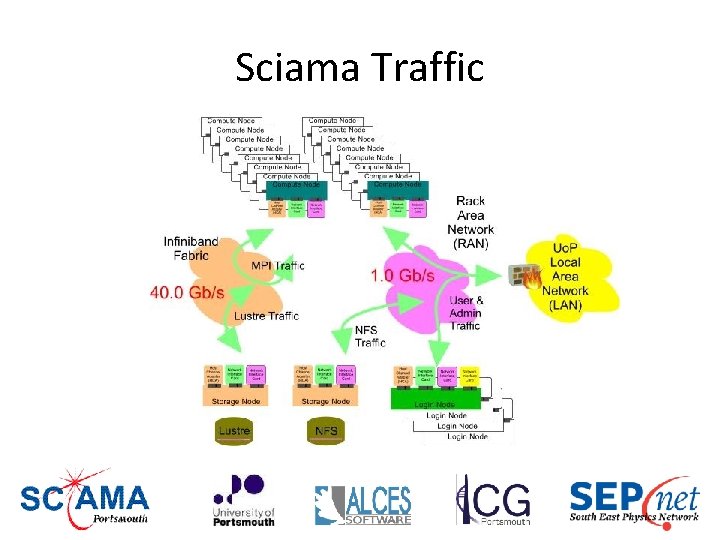

Sciama Traffic

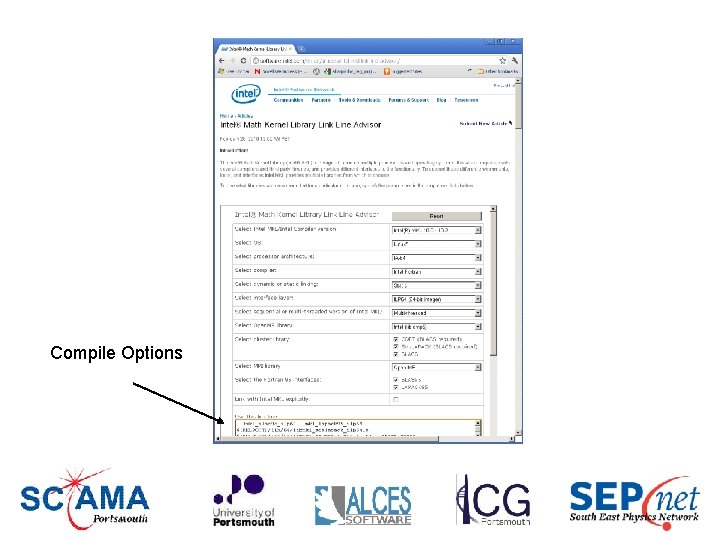

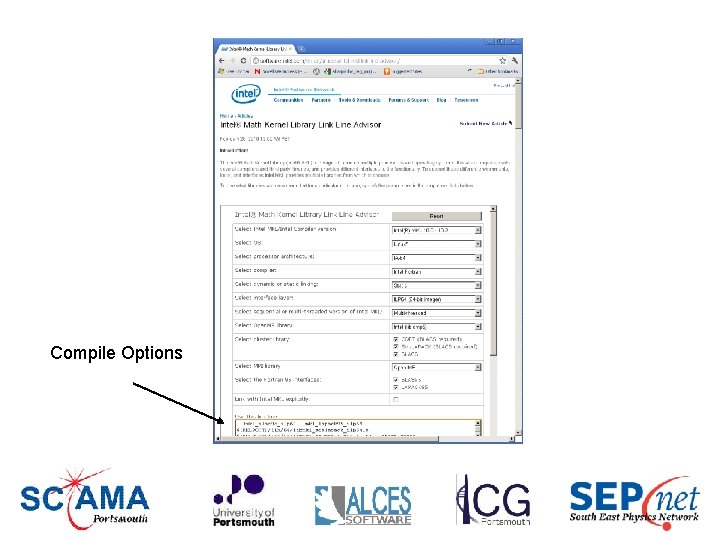

Compile Options

![“gdb” GNU Debugger](https://slidetodoc.com/presentation_image_h2/a68bb7dfb1f3a285666932b575886a36/image-18.jpg)

“gdb” GNU Debugger

[burtong@login1 ~]$ gdb
GNU gdb (GDB) Red Hat Enterprise Linux (7.0.1-23.el5_5.1)
Copyright (C) 2009 Free Software Foundation, Inc.
License GPLv3+: GNU GPL version 3 or later <http://gnu.org/licenses/gpl.html>
This is free software: you are free to change and redistribute it.
There is NO WARRANTY, to the extent permitted by law. Type "show copying"
and "show warranty" for details.
This GDB was configured as "x86_64-redhat-linux-gnu".
For bug reporting instructions, please see:
<http://www.gnu.org/software/gdb/bugs/>.
(gdb)

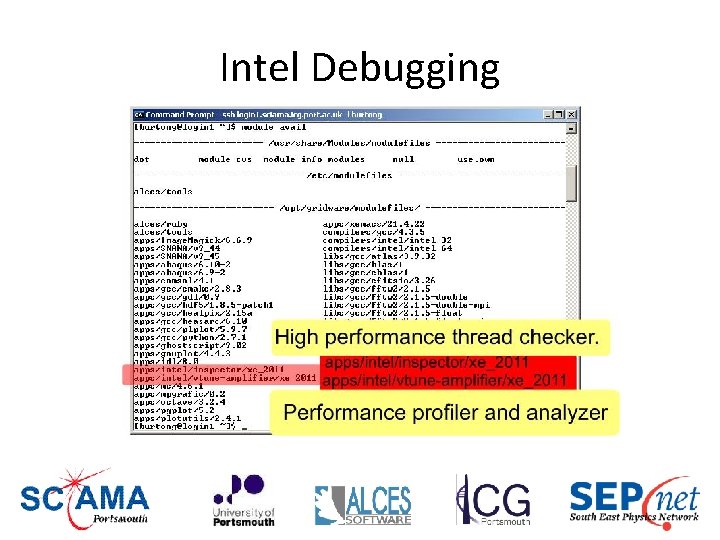

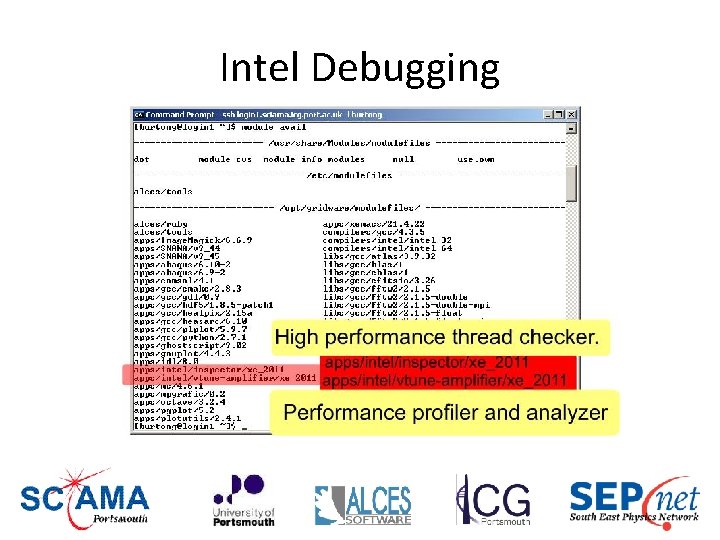

Intel Debugging

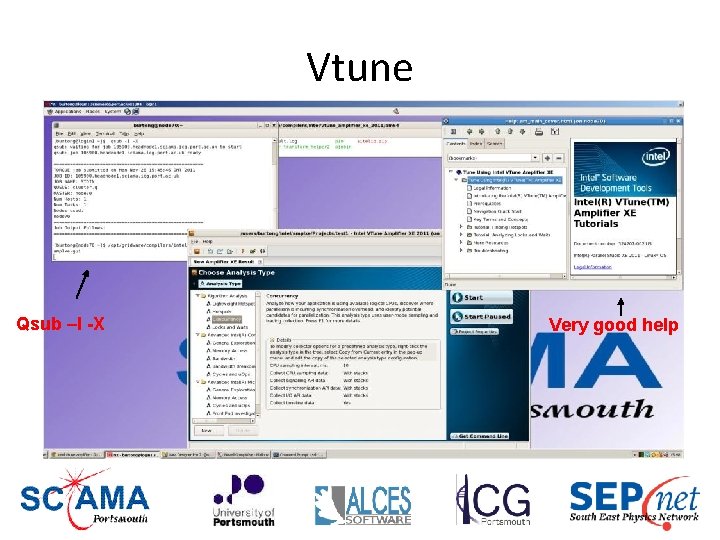

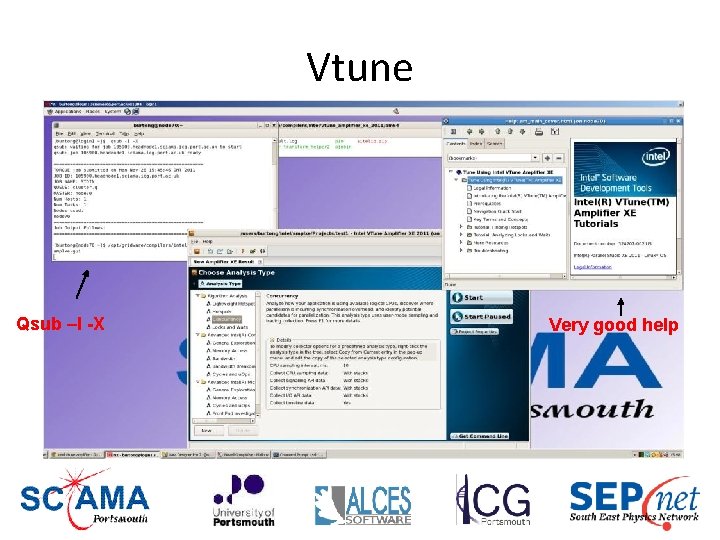

VTune: run it from an interactive session with X forwarding (qsub -I -X). The built-in help is very good.

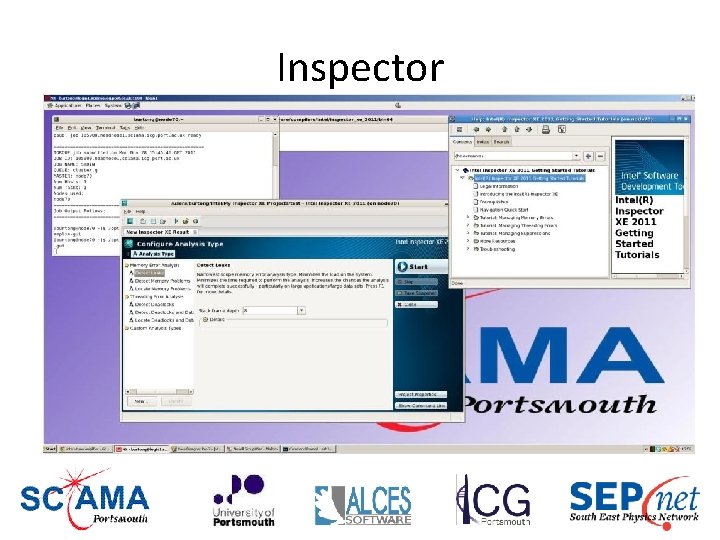

Inspector

OpenMP (Open Multi-Processing)

The largest (sensible) job is 24 GB in this distributed memory model

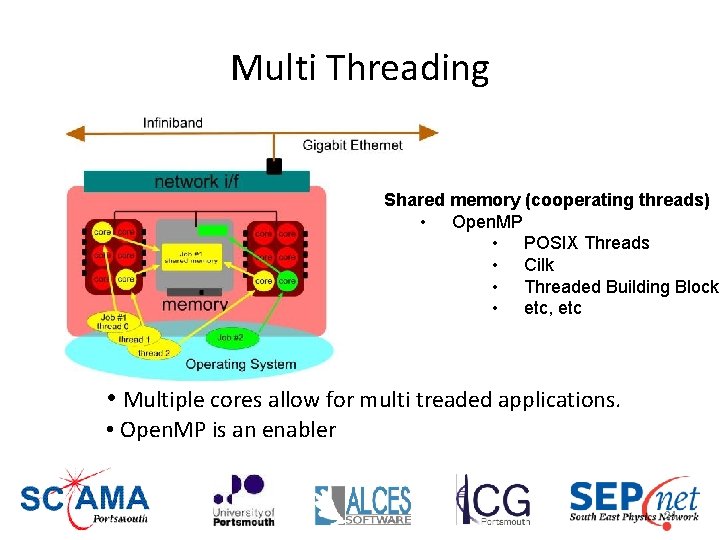

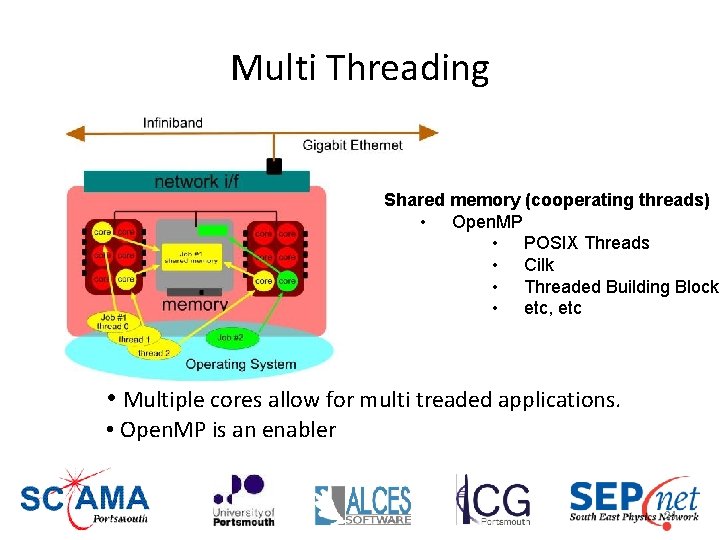

Multi-Threading

Shared memory (cooperating threads):

- OpenMP
- POSIX Threads
- Cilk
- Threading Building Blocks
- etc.
- Multiple cores allow for multi-threaded applications.
- OpenMP is an enabler.

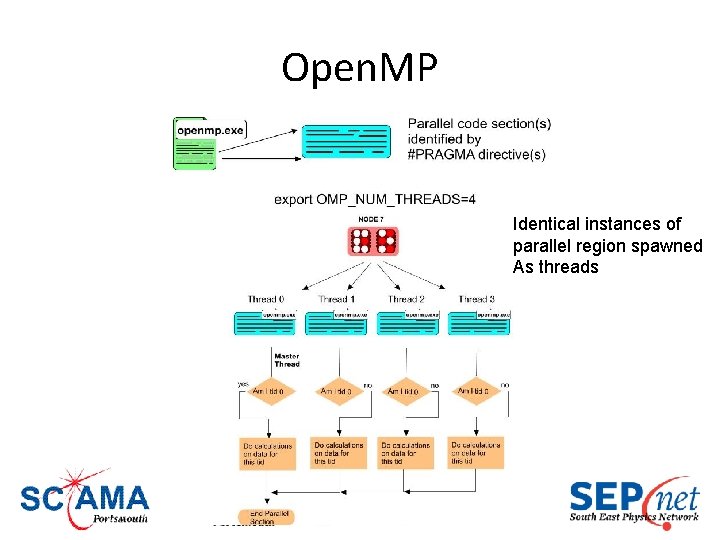

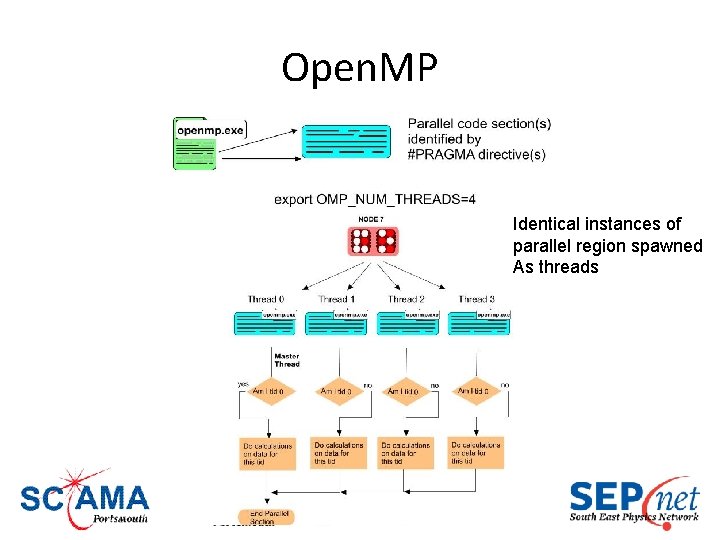

OpenMP: identical instances of the parallel region are spawned as threads
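To make the idea of "identical instances of a parallel region" concrete, here is a minimal C sketch. It is not taken from the slides: the function name `count_region_instances` is hypothetical, and the `#ifdef _OPENMP` guards are an added assumption so that the same file also builds (and runs as a single instance) when OpenMP is not enabled.

```c
#include <assert.h>
#include <stdio.h>
#ifdef _OPENMP
#include <omp.h>   /* OpenMP runtime API, present only when OpenMP is enabled */
#endif

/* Hypothetical helper: runs one parallel region and returns how many
   instances of that region were executed (one per thread in the team). */
int count_region_instances(void)
{
    int instances = 0;   /* declared outside the region, so shared by all threads */

    #pragma omp parallel
    {
        int id = 0;      /* declared inside the region, so private to each thread */
#ifdef _OPENMP
        id = omp_get_thread_num();
#endif
        printf("instance of the region running on thread %d\n", id);

        /* Every thread executes the same block; the atomic update keeps the
           shared counter consistent. */
        #pragma omp atomic
        instances++;
    }

    /* At least the initial thread always executes the region. */
    assert(instances >= 1);
    return instances;
}
```

Calling `count_region_instances()` from `main` prints one line per thread. With OpenMP enabled, the size of the team can be set through the standard `OMP_NUM_THREADS` environment variable; without it, the pragmas are ignored and the block runs once.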

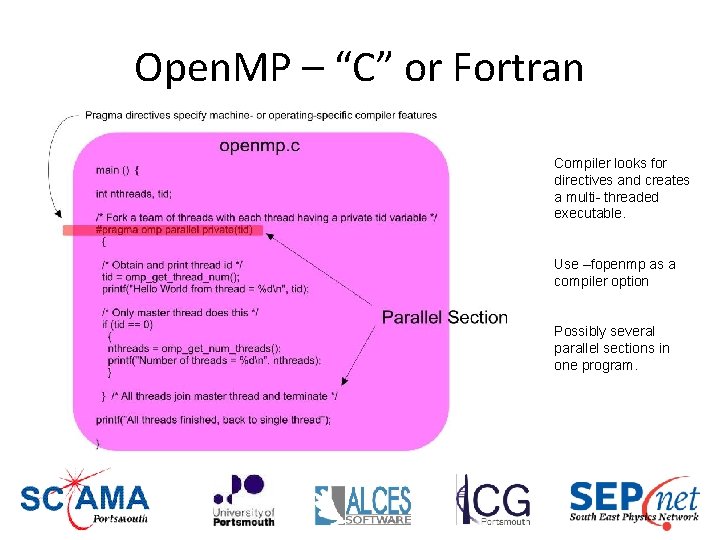

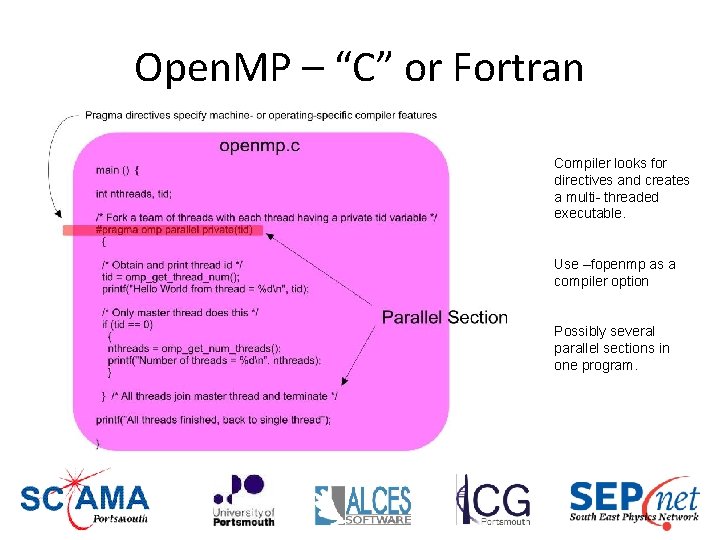

OpenMP – “C” or Fortran

- The compiler looks for directives and creates a multi-threaded executable.
- Use -fopenmp as a compiler option.
- A program may contain several parallel sections.
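As an illustration of a directive that the compiler turns into multi-threaded code, the following C sketch sums the integers 1..n with a work-shared loop. The function name `sum_to` is hypothetical and the example is an assumption about typical usage, not the program shown on the slides.

```c
#include <assert.h>

/* Hypothetical example: sums the integers 1..n.
   With OpenMP enabled, the loop iterations are divided among the threads and
   the reduction clause combines each thread's partial sum into `total`.
   Without OpenMP, the directive is ignored and the loop runs serially. */
long sum_to(long n)
{
    assert(n >= 0);

    long total = 0;

    #pragma omp parallel for reduction(+:total)
    for (long i = 1; i <= n; i++) {
        total += i;
    }
    return total;
}
```

With GCC, building with `-fopenmp` (for example `gcc -fopenmp sum.c`, where `sum.c` is a placeholder file name) activates the directive. The result is the same either way, which is a convenient check that the parallel version is correct.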

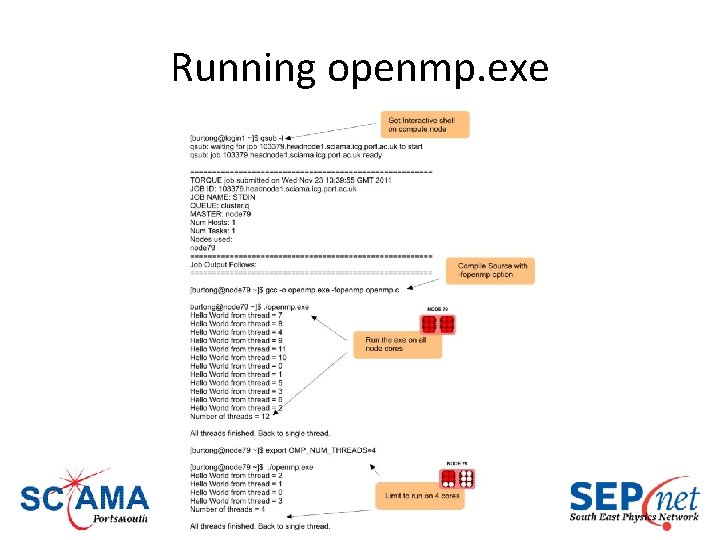

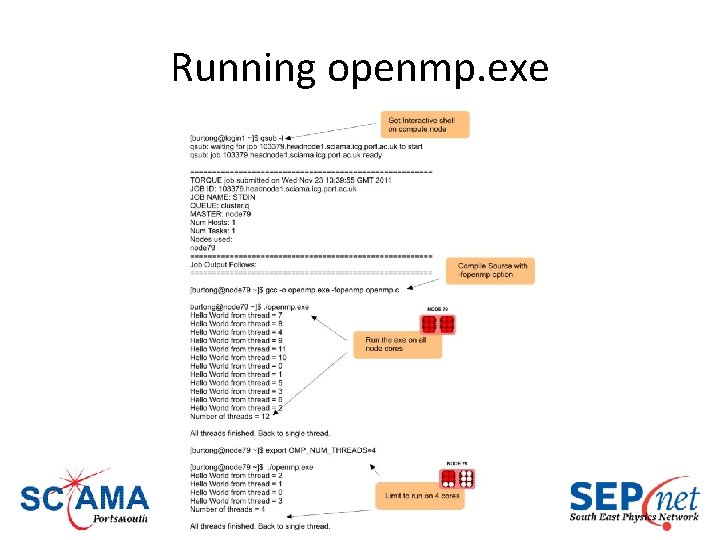

Running openmp.exe

MPI (Message Passing Interface)

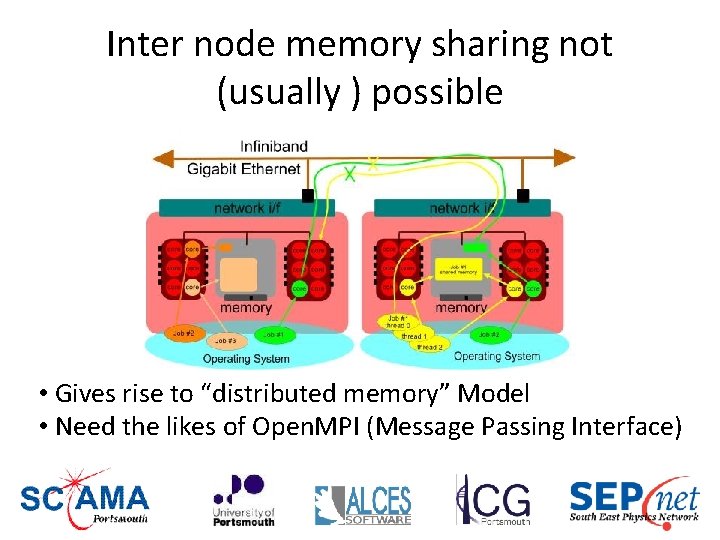

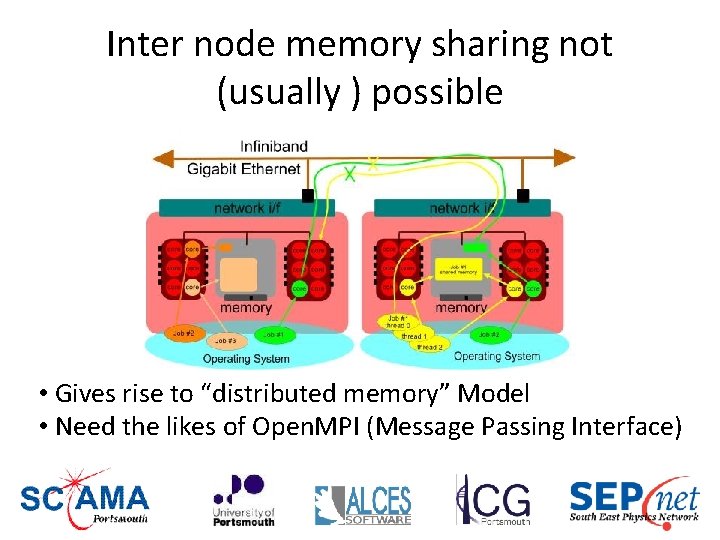

Inter-node memory sharing is not (usually) possible

- Gives rise to the “distributed memory” model
- Needs the likes of Open MPI (Message Passing Interface)

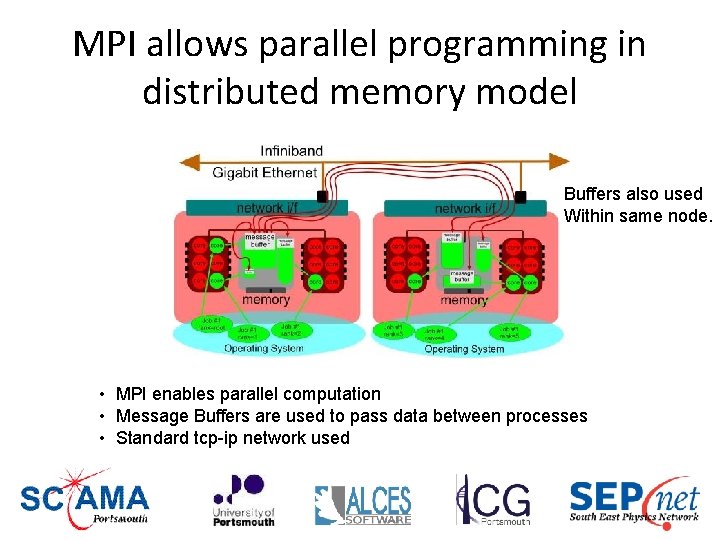

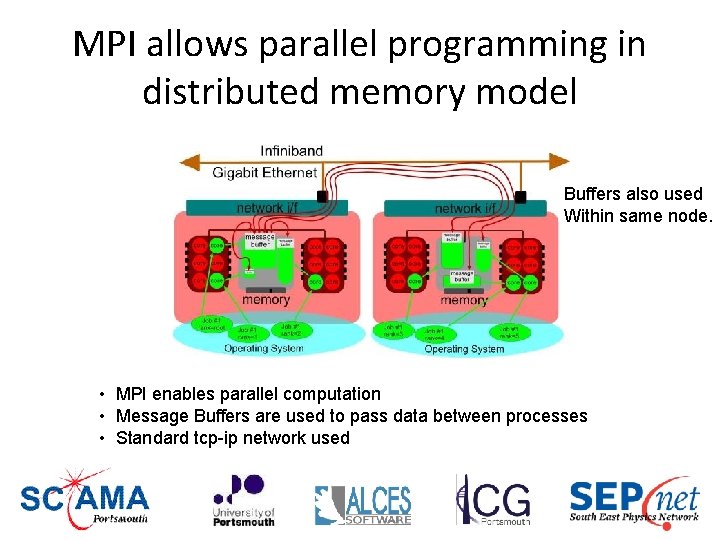

MPI allows parallel programming in the distributed memory model. Buffers are also used within the same node.

- MPI enables parallel computation
- Message buffers are used to pass data between processes
- Standard TCP/IP network used

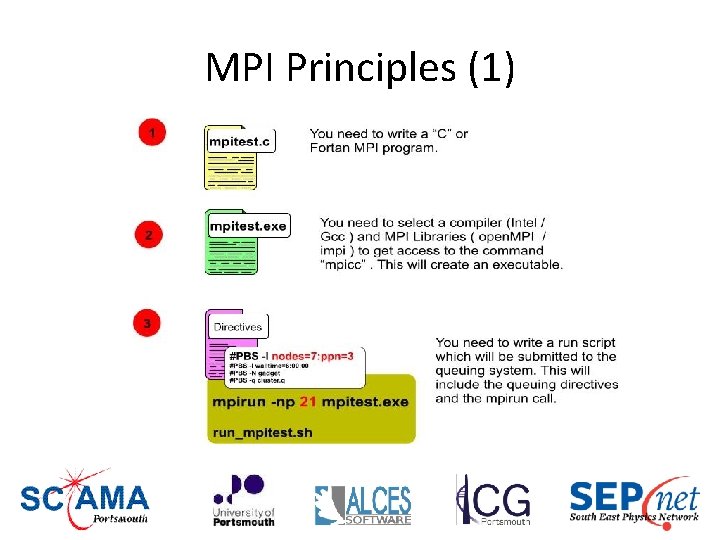

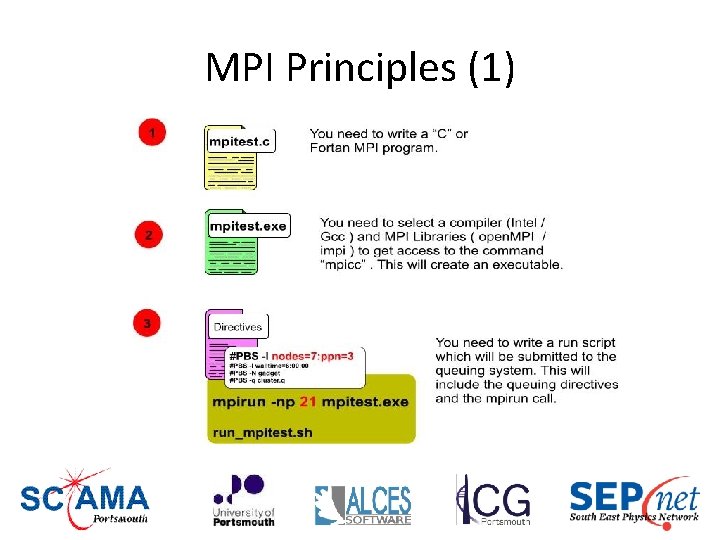

MPI Principles (1)

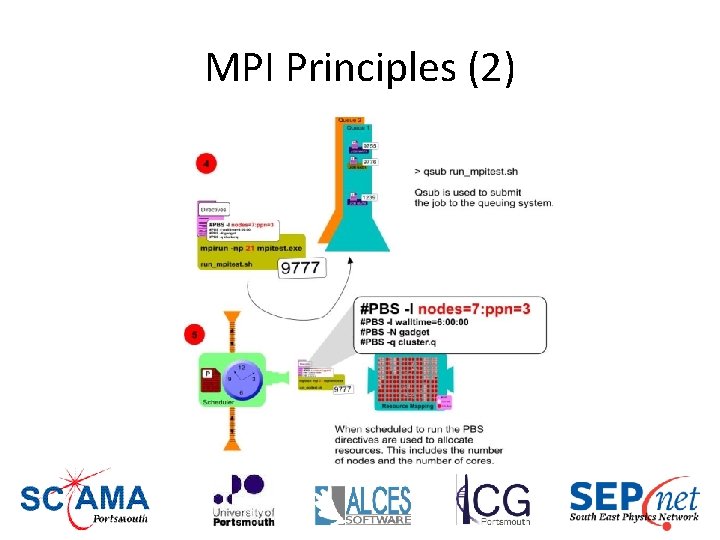

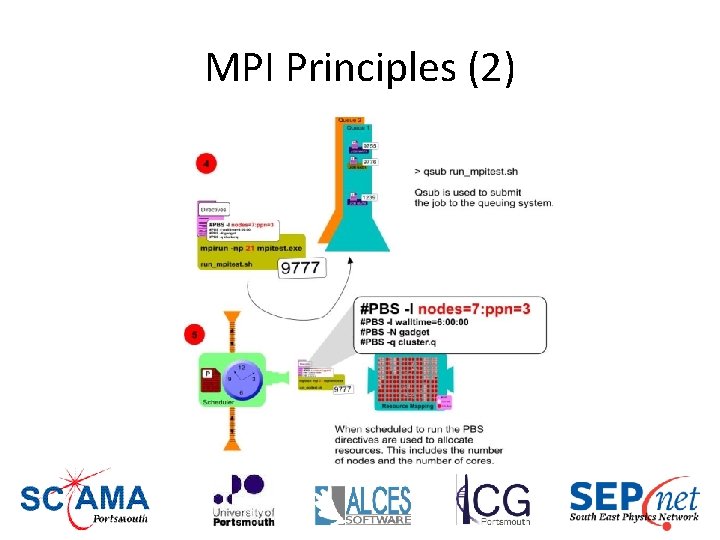

MPI Principles (2)

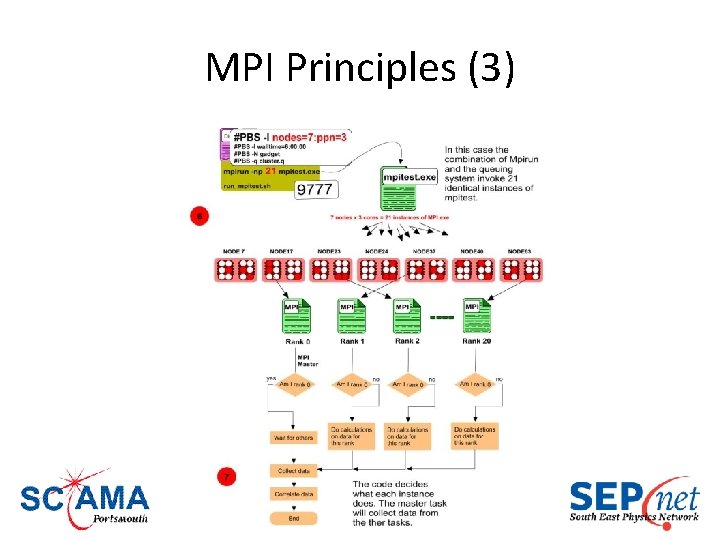

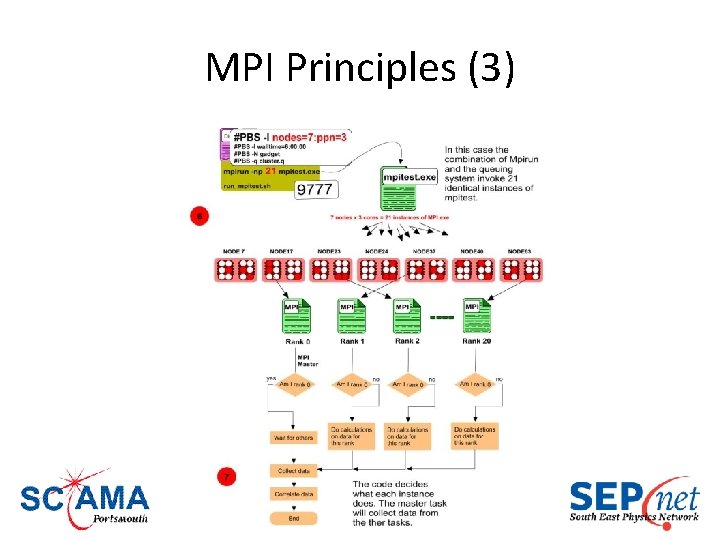

MPI Principles (3)

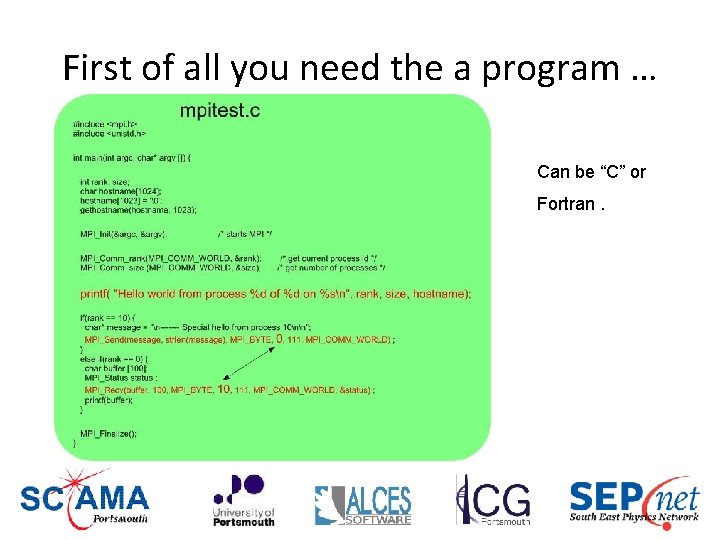

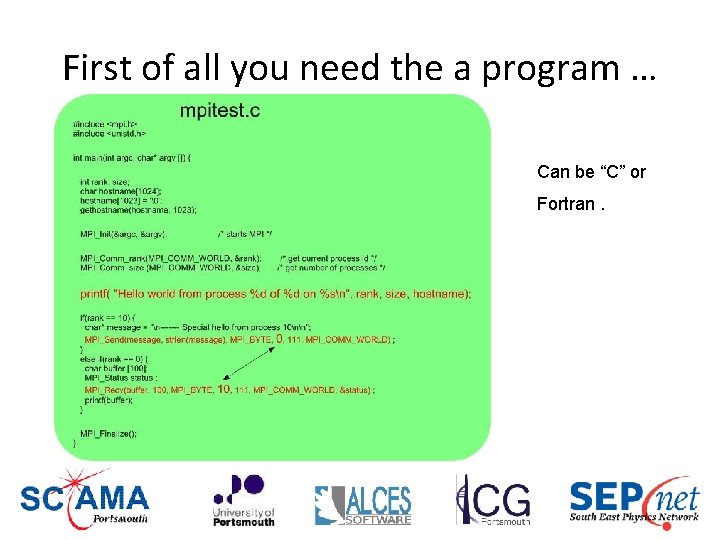

First of all you need a program … It can be “C” or Fortran.

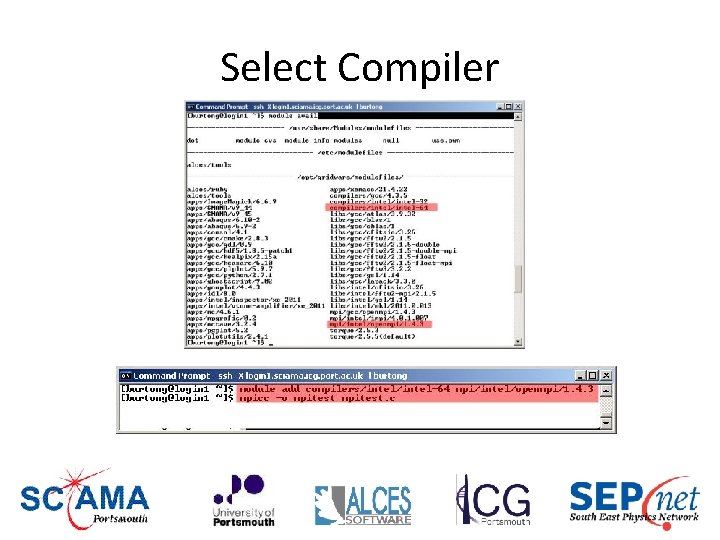

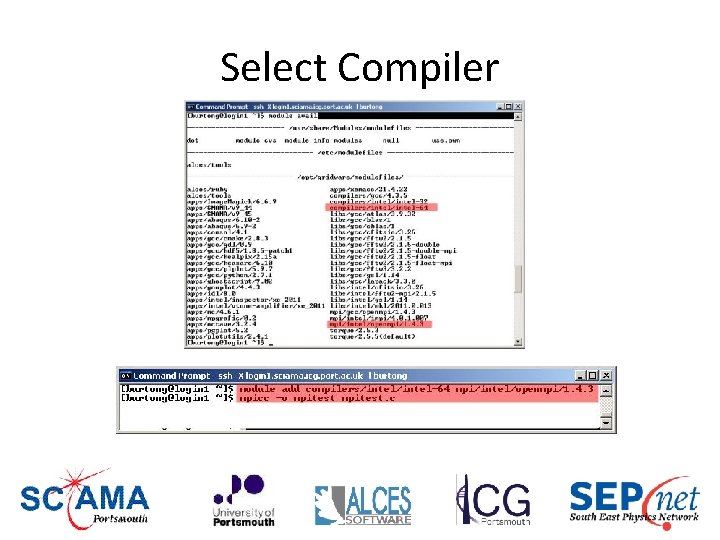

Select Compiler

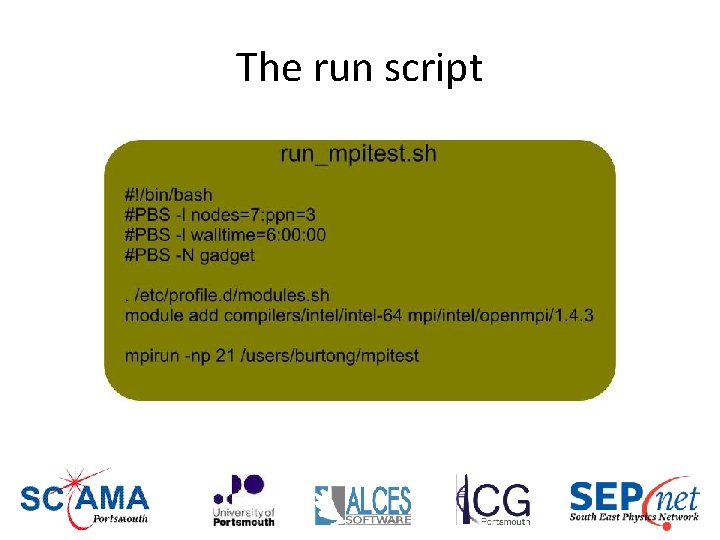

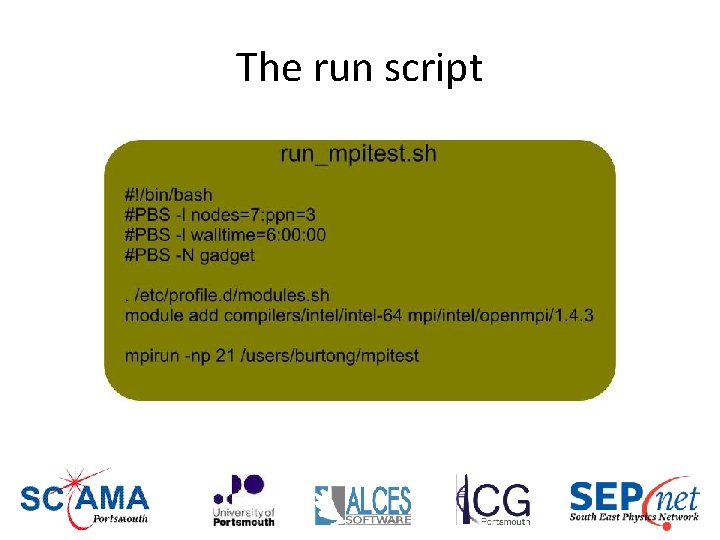

The run script

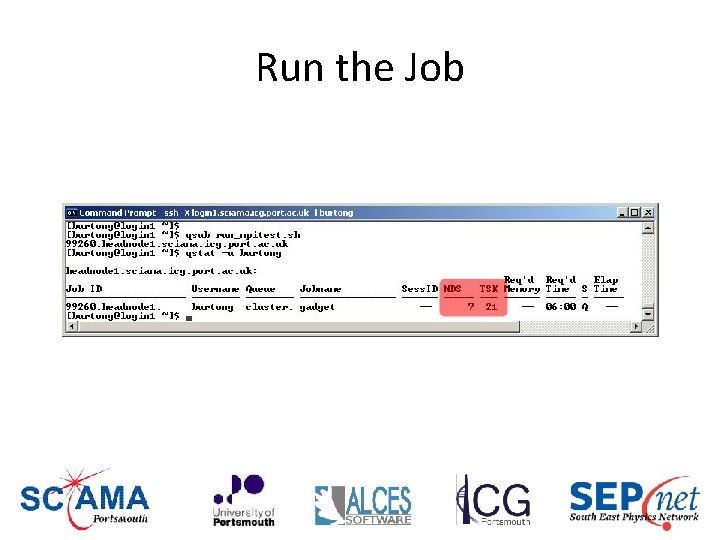

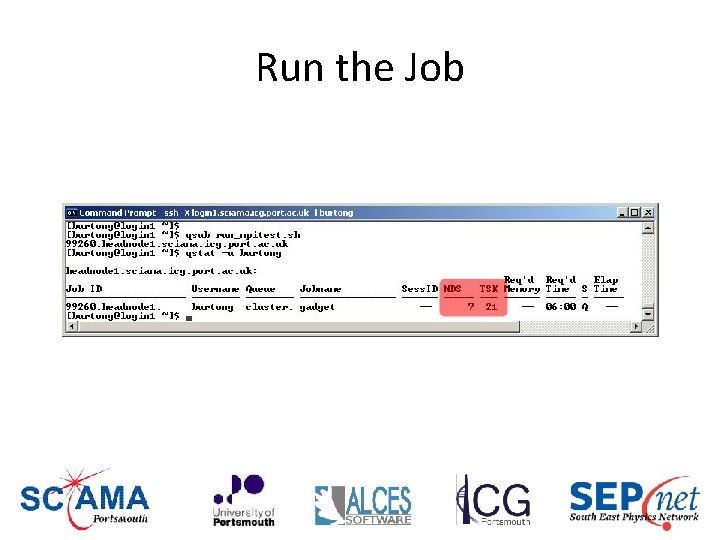

Run the Job

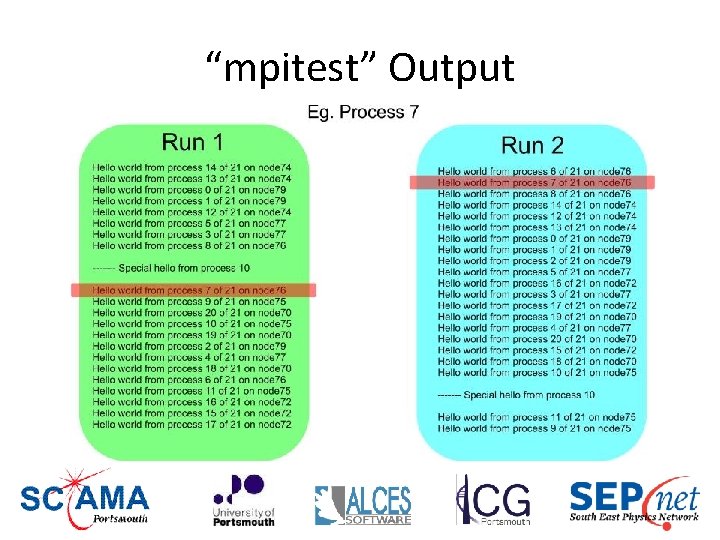

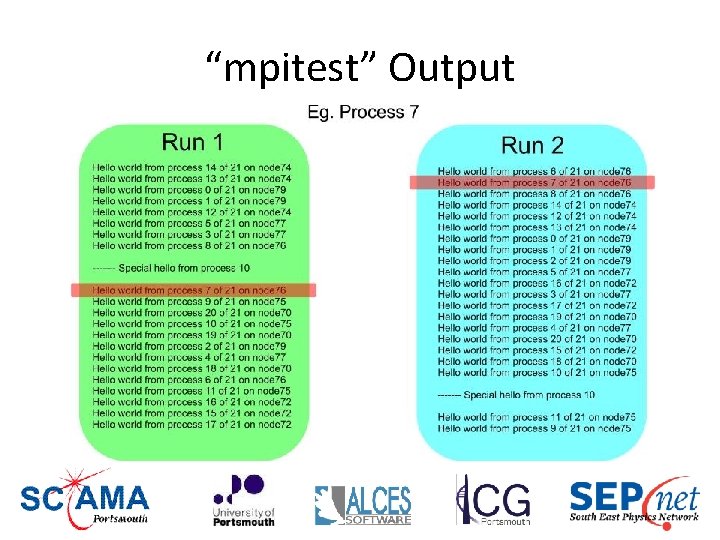

“mpitest” Output

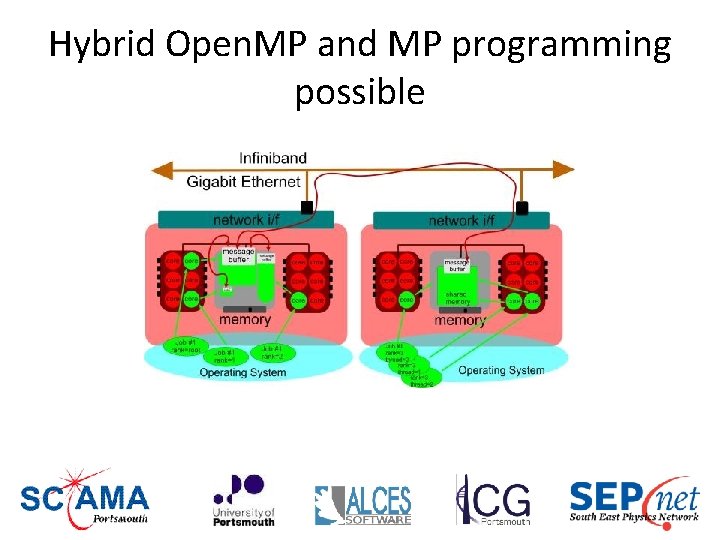

OpenMP / MPI mixed mode and memory models

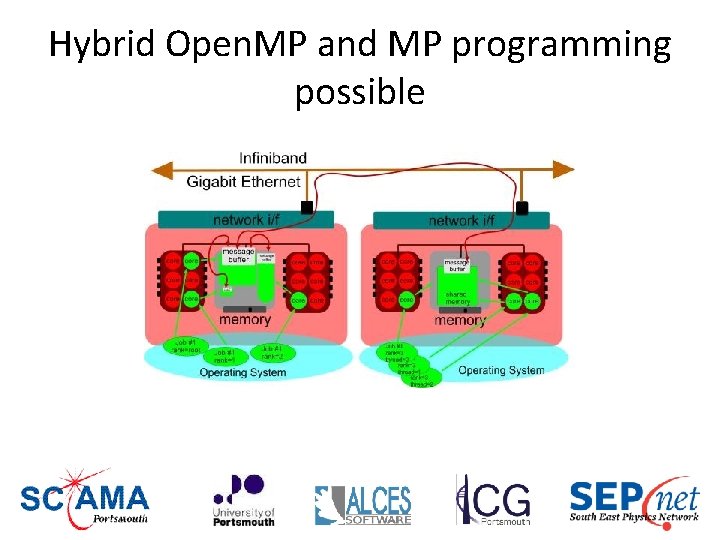

Hybrid OpenMP and MPI programming is possible

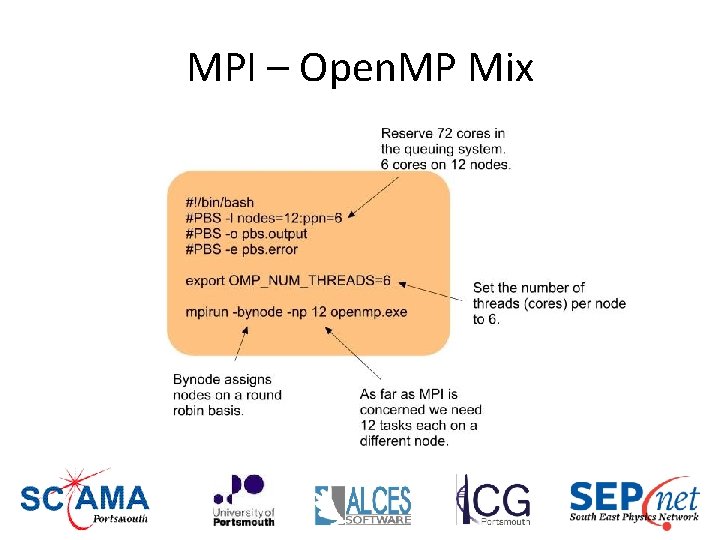

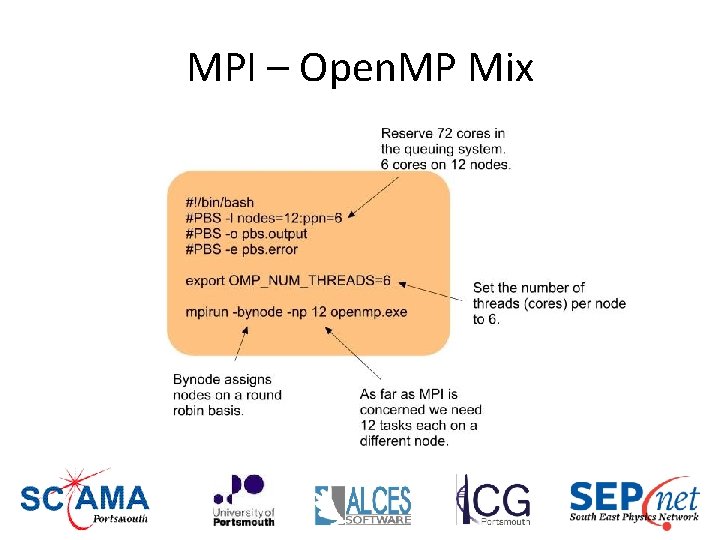

MPI – OpenMP Mix

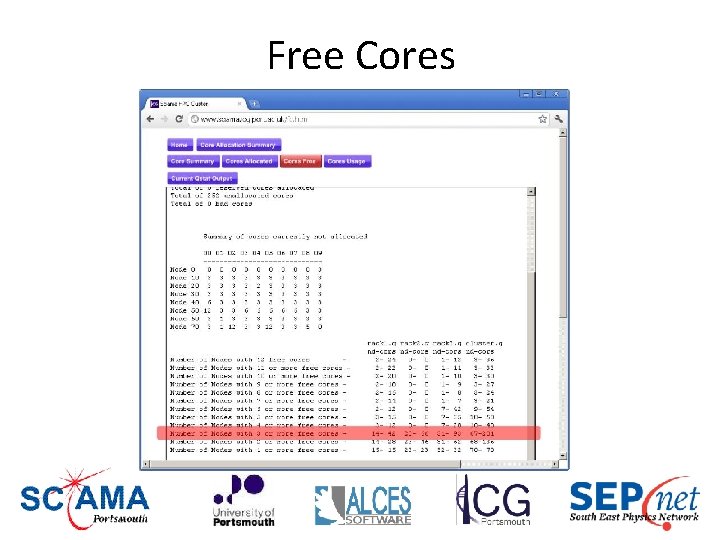

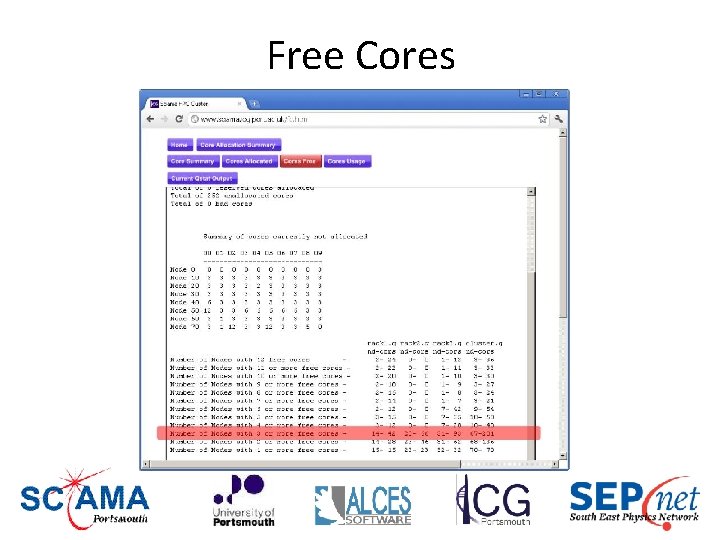

Free Cores

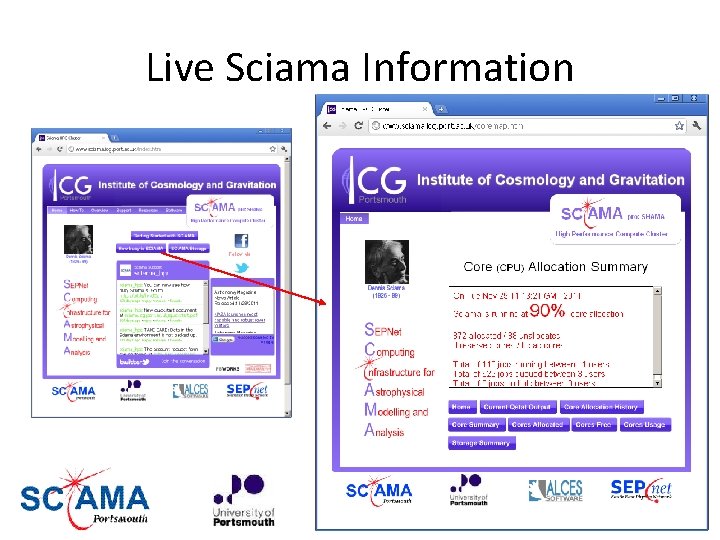

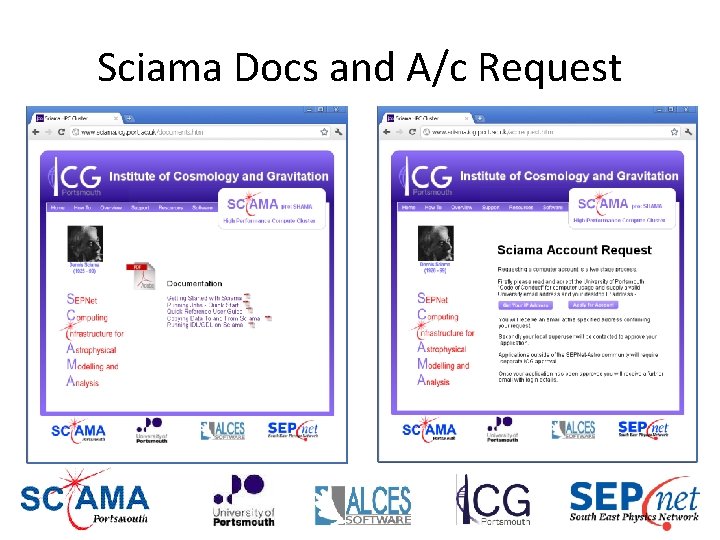

Sciama Information

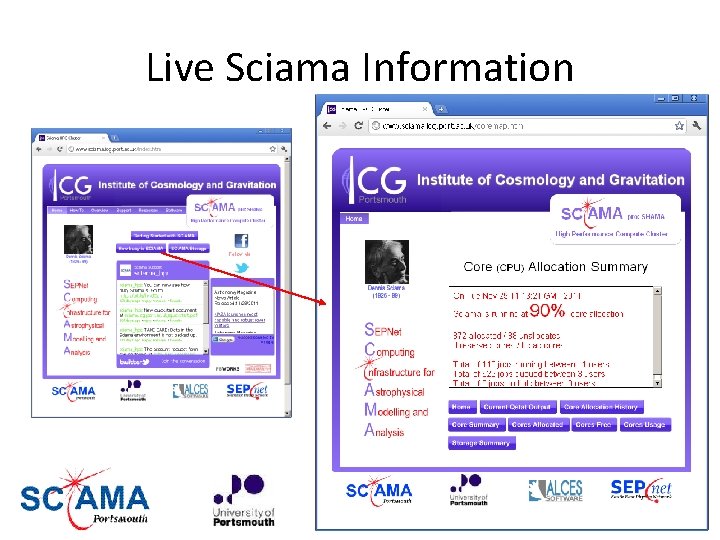

Live Sciama Information

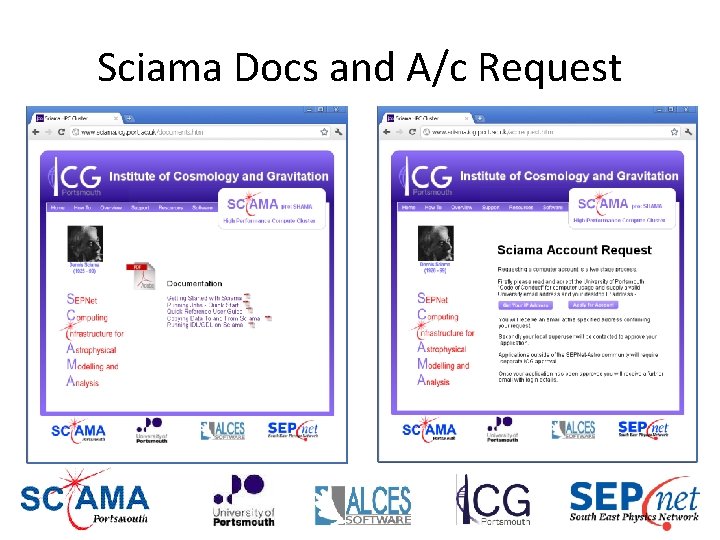

Sciama Docs and Account Request