Scheduling Strategies Operating Systems Spring 2004 Class 10

- Slides: 16


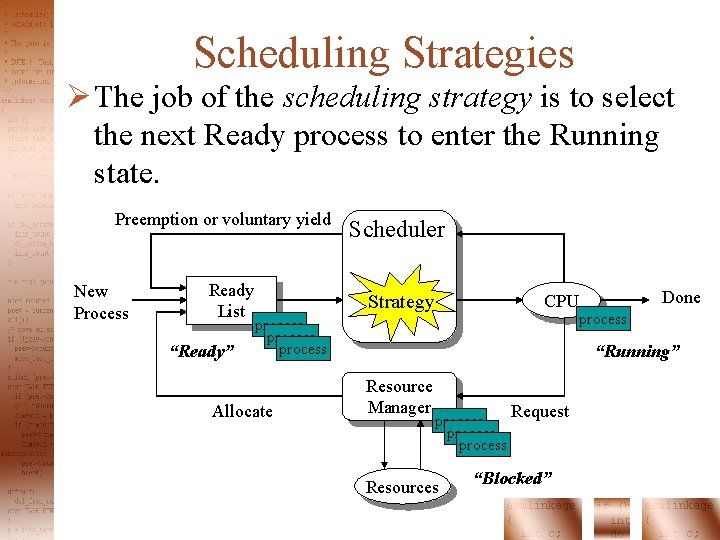

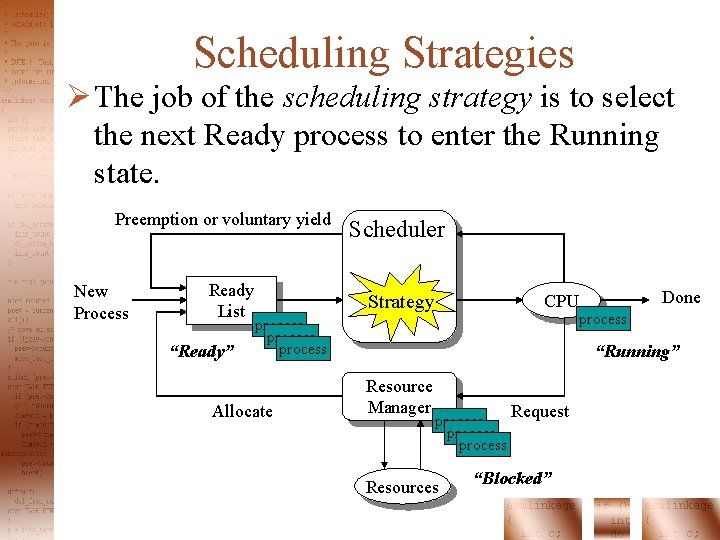

Scheduling Strategies

- The job of the scheduling strategy is to select the next Ready process to enter the Running state.

[Figure: process state diagram with "Ready", "Running", and "Blocked" states; labels: New Process, Ready List, Scheduler Strategy, CPU, Allocate, Done, Preemption or voluntary yield, Resource Manager, Resources, Request.]

Some Scheduling Strategies

- Non-Preemptive Strategies:
  - First-Come-First-Served (FCFS)
  - Priority*
  - Shortest Job Next (SJN)*
  - *These also come in a preemptive flavor.
- Preemptive Strategies:
  - Round Robin
  - Multi-level Queue
  - Multi-level Feedback Queue

Evaluating Scheduling Strategies

- Some metrics that are useful in evaluating scheduling strategies:
  - CPU Utilization: The percentage of time that the CPU spends executing code on behalf of a user.
  - Throughput: The average number of processes completed per time unit.
  - Turnaround Time*: The total time from when a process first enters the Ready state to the last time it leaves the Running state.
  - Wait Time*: The time a process spends in the Ready state before its first transition to the Running state.
  - Waiting Time*: The total time that a process spends in the Ready state.
  - Response Time*: The average amount of time that a process spends in the Ready state.
- *These metrics are typically averaged across a collection of processes.
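The turnaround, wait, and waiting-time definitions can be computed mechanically from a Gantt chart. The following Python sketch is illustrative only: the function names and the `(pid, start, end)` slice format are hypothetical, and it assumes no I/O, so a process that is not Running is Ready. The sample data is the FCFS example's schedule, with all five processes assumed to be Ready at time 0.

```python
from collections import defaultdict

def schedule_metrics(gantt, arrival):
    """Per-process metrics from a Gantt chart.

    gantt:   list of (pid, start, end) CPU slices in time order.
    arrival: dict mapping pid -> time the process first enters the Ready state.
    Assumes a process is Ready whenever it is not Running (no I/O, no blocking).
    """
    first_start, last_end = {}, {}
    running = defaultdict(int)  # total time each process spends Running
    for pid, start, end in gantt:
        first_start.setdefault(pid, start)
        last_end[pid] = end
        running[pid] += end - start
    metrics = {}
    for pid, t0 in arrival.items():
        turnaround = last_end[pid] - t0
        metrics[pid] = {
            "turnaround": turnaround,
            "wait": first_start[pid] - t0,         # Ready time before the first run
            "waiting": turnaround - running[pid],  # total Ready time
        }
    return metrics

def average(metrics, key):
    return sum(m[key] for m in metrics.values()) / len(metrics)

def throughput(gantt):
    """Completed processes per time unit, measured from time 0 to the last completion."""
    return len({pid for pid, _, _ in gantt}) / max(end for _, _, end in gantt)

# Gantt chart of the FCFS example, with every process Ready at time 0.
fcfs_chart = [("p0", 0, 350), ("p1", 350, 475), ("p2", 475, 950),
              ("p3", 950, 1200), ("p4", 1200, 1275)]
m = schedule_metrics(fcfs_chart, {pid: 0 for pid, _, _ in fcfs_chart})
print(average(m, "turnaround"), average(m, "wait"), average(m, "waiting"))  # 850.0 595.0 595.0
```

Under a non-preemptive strategy a process never returns to the Ready state after it first runs, so wait time and waiting time coincide. They diverge under preemptive strategies such as Round Robin.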

Comparing Scheduling Strategies

- There are many techniques for comparing scheduling strategies:
  - Deterministic Modeling
  - Queuing Models
  - Simulation
  - Implementation

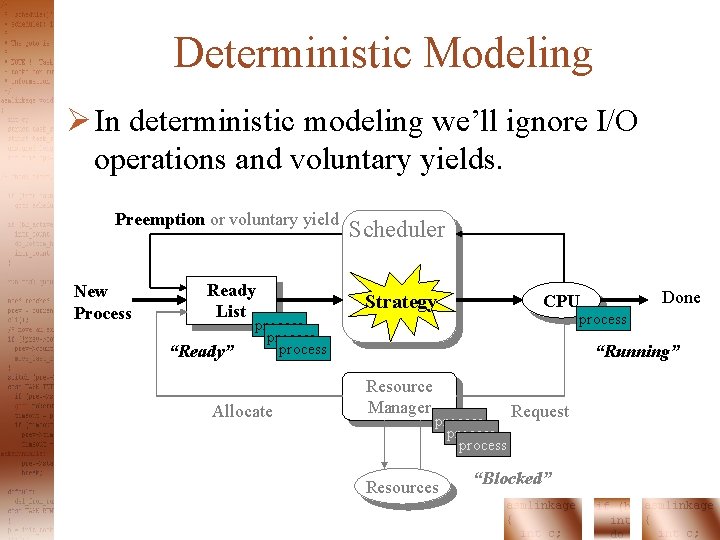

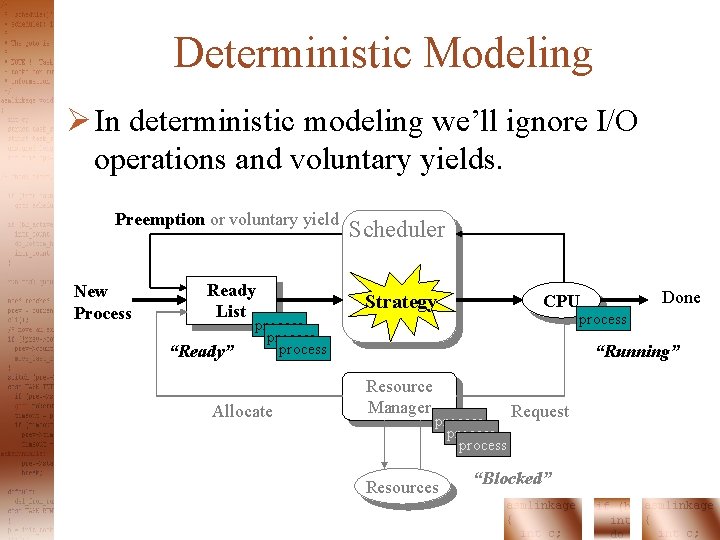

Deterministic Modeling

- In deterministic modeling we'll ignore I/O operations and voluntary yields.

[Figure: process state diagram with "Ready", "Running", and "Blocked" states; labels: New Process, Ready List, Scheduler Strategy, CPU, Allocate, Done, Preemption or voluntary yield, Resource Manager, Resources, Request.]

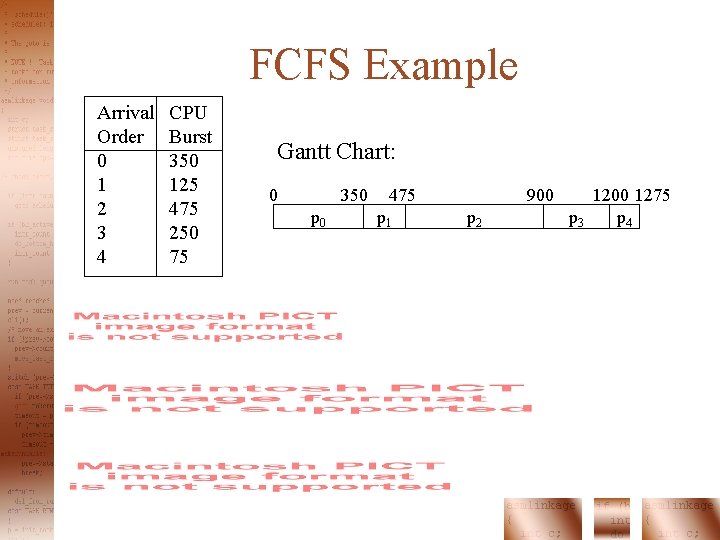

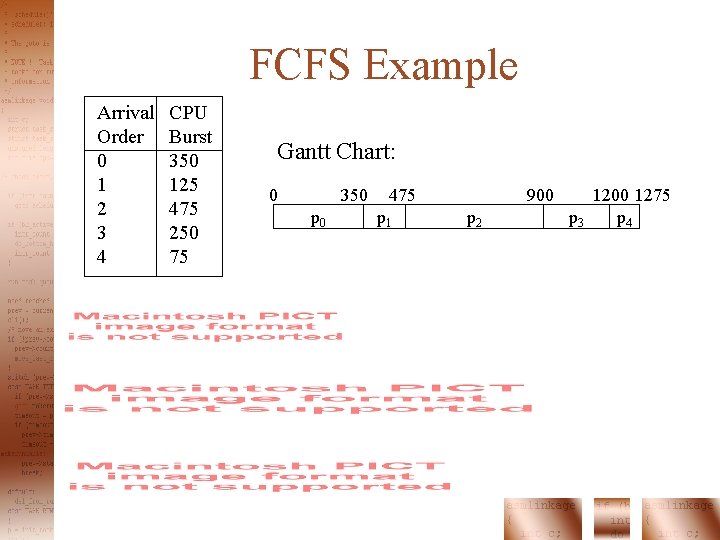

FCFS Example

| Arrival Order | 0 | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|
| CPU Burst | 350 | 125 | 475 | 250 | 75 |

Gantt chart: p0 (0–350), p1 (350–475), p2 (475–950), p3 (950–1200), p4 (1200–1275)
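The FCFS schedule can be reproduced with a short Python sketch. It assumes, as the Gantt chart suggests, that all five processes are in the Ready list at time 0 in arrival order; the function name `fcfs` is hypothetical. Because every arrival is at 0, a process's wait time equals its start time and its turnaround time equals its end time.

```python
def fcfs(bursts):
    """First-Come-First-Served: run each process to completion in arrival order.

    bursts: list of (pid, cpu_burst) in arrival order, all assumed Ready at time 0.
    Returns the Gantt chart as a list of (pid, start, end).
    """
    gantt, clock = [], 0
    for pid, burst in bursts:
        gantt.append((pid, clock, clock + burst))
        clock += burst
    return gantt

procs = [("p0", 350), ("p1", 125), ("p2", 475), ("p3", 250), ("p4", 75)]
chart = fcfs(procs)
avg_wait = sum(start for _, start, _ in chart) / len(chart)
avg_turnaround = sum(end for _, _, end in chart) / len(chart)
print(chart)  # [('p0', 0, 350), ('p1', 350, 475), ('p2', 475, 950), ('p3', 950, 1200), ('p4', 1200, 1275)]
print(avg_wait, avg_turnaround)  # 595.0 850.0
```

p2 starts at 475 and needs 475 tu, so it finishes at 950, which is when p3 starts.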

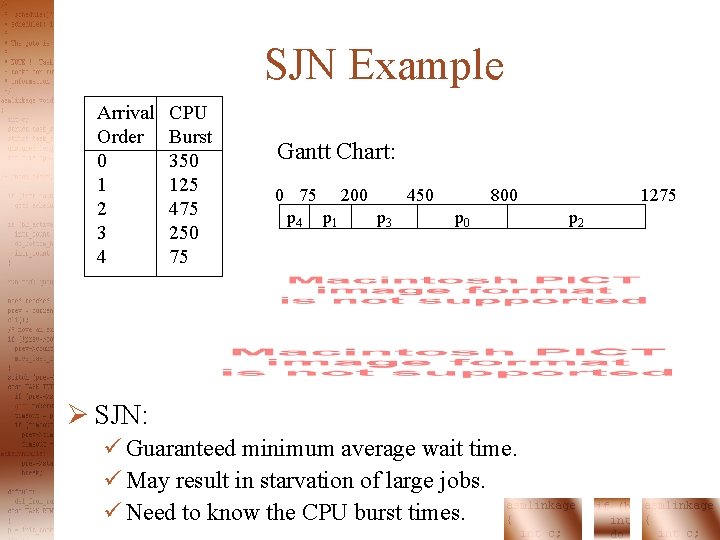

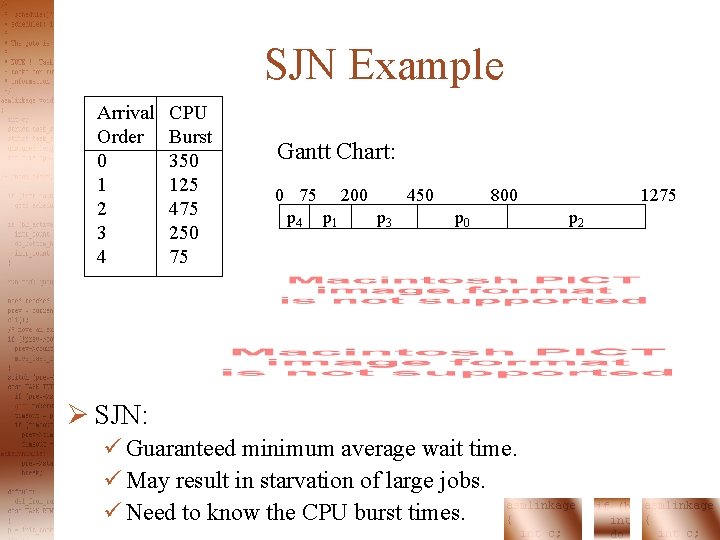

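SJN Example

| Arrival Order | 0 | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|
| CPU Burst | 350 | 125 | 475 | 250 | 75 |

Gantt chart: p4 (0–75), p1 (75–200), p3 (200–450), p0 (450–800), p2 (800–1275)

- SJN:
  - Guarantees the minimum average wait time (when all processes are in the Ready list at the same time).
  - May result in starvation of large jobs.
  - Requires knowing the CPU burst times in advance.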
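Under the same assumption (all processes Ready at time 0), non-preemptive SJN is FCFS applied to the processes sorted by CPU burst. This hypothetical `sjn` sketch breaks ties by arrival order because Python's `sorted` is stable.

```python
def sjn(bursts):
    """Shortest Job Next (non-preemptive), assuming every process is Ready at time 0.

    bursts: list of (pid, cpu_burst) in arrival order.
    Returns the Gantt chart as a list of (pid, start, end).
    """
    gantt, clock = [], 0
    for pid, burst in sorted(bursts, key=lambda p: p[1]):  # shortest burst first
        gantt.append((pid, clock, clock + burst))
        clock += burst
    return gantt

procs = [("p0", 350), ("p1", 125), ("p2", 475), ("p3", 250), ("p4", 75)]
chart = sjn(procs)
avg_wait = sum(start for _, start, _ in chart) / len(chart)
avg_turnaround = sum(end for _, _, end in chart) / len(chart)
print([pid for pid, _, _ in chart])  # ['p4', 'p1', 'p3', 'p0', 'p2']
print(avg_wait, avg_turnaround)      # 305.0 560.0
```

The average wait time drops from 595 tu under FCFS to 305 tu, while p2, the longest job, now waits 800 tu.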

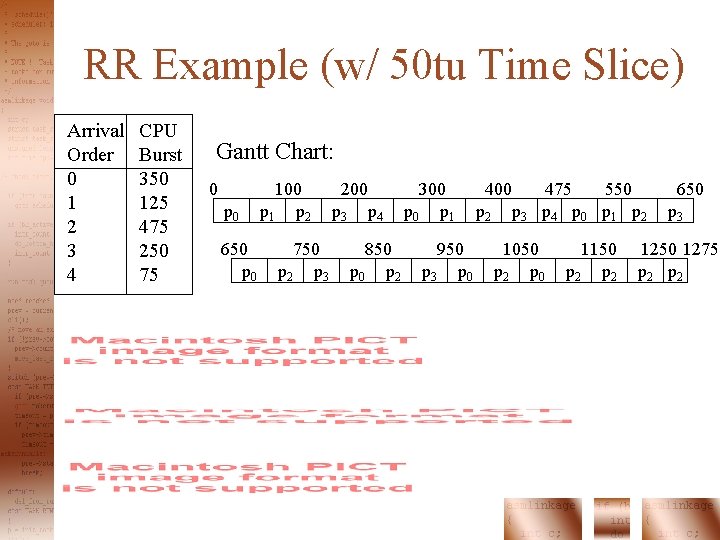

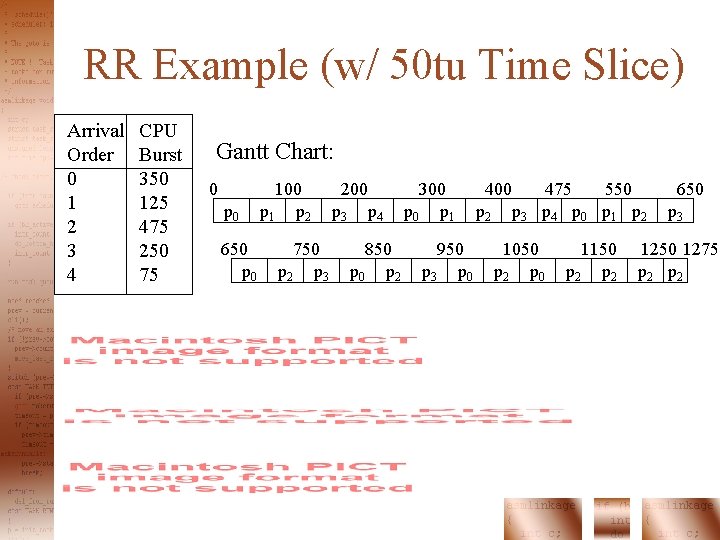

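RR Example (w/ 50 tu Time Slice)

| Arrival Order | 0 | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|
| CPU Burst | 350 | 125 | 475 | 250 | 75 |

Gantt chart, by round (each process runs for at most one 50 tu slice per round):

| Round | Time | Slices, in order |
|---|---|---|
| 1 | 0–250 | p0, p1, p2, p3, p4 |
| 2 | 250–475 | p0, p1, p2, p3, p4 (p4 finishes at 475) |
| 3 | 475–650 | p0, p1 (finishes at 550), p2, p3 |
| 4 | 650–800 | p0, p2, p3 |
| 5 | 800–950 | p0, p2, p3 (finishes at 950) |
| 6 | 950–1050 | p0, p2 |
| 7 | 1050–1150 | p0 (finishes at 1100), p2 |
| 8–10 | 1150–1275 | p2 (three slices; finishes at 1275) |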
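A minimal Round Robin sketch reproduces this chart. It assumes all processes are Ready at time 0 in arrival order and that a preempted process goes to the back of the Ready list. As in the deterministic model, context-switch cost is ignored. The name `round_robin` is hypothetical.

```python
from collections import deque

def round_robin(bursts, quantum):
    """Round Robin, assuming every process is in the Ready list at time 0 in arrival order.

    A process runs for min(quantum, remaining) tu; if work remains it is preempted
    and moved to the back of the Ready list. Returns (gantt, completion).
    """
    ready = deque(bursts)
    gantt, completion, clock = [], {}, 0
    while ready:
        pid, remaining = ready.popleft()
        run = min(quantum, remaining)
        gantt.append((pid, clock, clock + run))
        clock += run
        if remaining > run:
            ready.append((pid, remaining - run))  # preempted: back of the Ready list
        else:
            completion[pid] = clock
    return gantt, completion

procs = [("p0", 350), ("p1", 125), ("p2", 475), ("p3", 250), ("p4", 75)]
gantt, completion = round_robin(procs, quantum=50)
print(completion)  # {'p4': 475, 'p1': 550, 'p3': 950, 'p0': 1100, 'p2': 1275}
bursts = dict(procs)
avg_turnaround = sum(completion.values()) / len(completion)
avg_waiting = sum(completion[p] - bursts[p] for p in completion) / len(completion)
print(avg_turnaround, avg_waiting)  # 870.0 615.0
```

Compared with FCFS on the same bursts, the average wait before first running falls to 100 tu, but the average turnaround time rises from 850 tu to 870 tu.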

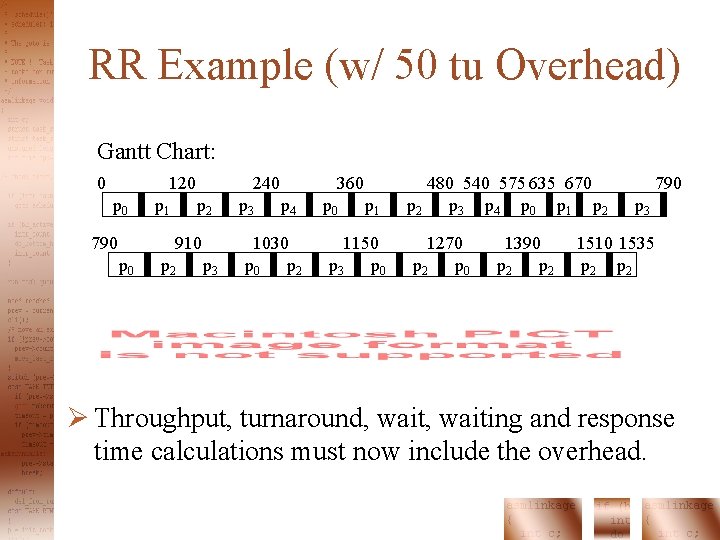

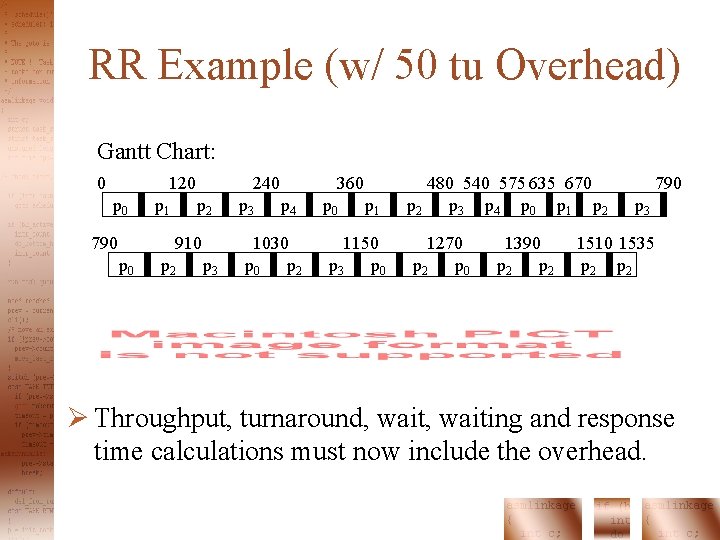

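RR Example (w/ 50 tu Time Slice and 10 tu Context-Switch Overhead)

Gantt chart, by round (each slice of at most 50 tu is followed by a 10 tu context switch, except the final slice; the time ranges include the switches):

| Round | Time | Slices, in order |
|---|---|---|
| 1 | 0–300 | p0, p1, p2, p3, p4 |
| 2 | 300–575 | p0, p1, p2, p3, p4 (p4 finishes) |
| 3 | 575–790 | p0, p1 (finishes), p2, p3 |
| 4 | 790–970 | p0, p2, p3 |
| 5 | 970–1150 | p0, p2, p3 (finishes) |
| 6 | 1150–1270 | p0, p2 |
| 7 | 1270–1390 | p0 (finishes), p2 |
| 8–10 | 1390–1535 | p2 (three slices; finishes at 1535) |

- Throughput, turnaround, waiting, and response time calculations must now include the overhead.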
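The tick marks in this chart fit a 50 tu time slice followed by a 10 tu context switch (for example, 120 = 2 × (50 + 10)). The sketch below assumes the switch is charged after every slice except the last, even when the same process is dispatched again, and counts a process as finished at the end of its last slice. Both are assumptions, chosen because they reproduce the chart's final times of 1510 and 1535. The function name is hypothetical.

```python
from collections import deque

def round_robin_with_overhead(bursts, quantum, switch_cost):
    """Round Robin in which a context switch of switch_cost tu follows every slice
    except the last one, even when the same process is dispatched again.
    All processes are assumed Ready at time 0 in arrival order.
    Returns (completion, total_time, busy_time)."""
    ready = deque(bursts)
    completion, clock, busy = {}, 0, 0
    while ready:
        pid, remaining = ready.popleft()
        run = min(quantum, remaining)
        clock += run
        busy += run  # time spent executing process code
        if remaining > run:
            ready.append((pid, remaining - run))
        else:
            completion[pid] = clock
        if ready:
            clock += switch_cost  # dispatcher overhead before the next slice
    return completion, clock, busy

procs = [("p0", 350), ("p1", 125), ("p2", 475), ("p3", 250), ("p4", 75)]
completion, total, busy = round_robin_with_overhead(procs, quantum=50, switch_cost=10)
print(completion)  # {'p4': 565, 'p1': 660, 'p3': 1140, 'p0': 1320, 'p2': 1535}
print(total, round(busy / total, 3))  # 1535 0.831
print(len(completion) / total)  # throughput in processes per tu
```

The 27 slices need 26 context switches, adding 260 tu to the 1275 tu of useful work, so CPU utilization falls to about 83%.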

Multi-Level Queues

[Figure: N ready lists (Ready List 0 through Ready List N-1) feeding a single scheduler and CPU; labels: New Process, Preemption or voluntary yield, Done.]

- For i < j, all processes at level i run before any process at level j.
- Each list may use its own strategy.
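A multi-level queue scheduler only needs to scan its ready lists in order and let the first non-empty list choose a process. This Python sketch is hypothetical: `MultiLevelQueue`, the per-level strategy functions, and the sample processes `a`, `b`, `c` are illustrations. Preemption of a running lower-level process is not modeled.

```python
class MultiLevelQueue:
    """Ready lists 0..N-1; a process at level i always runs before one at level j when i < j.

    strategies[i] is a function that, given ready list i, returns the index of the
    entry to run next, so each list can use its own policy.
    """
    def __init__(self, strategies):
        self.strategies = strategies
        self.lists = [[] for _ in strategies]

    def add(self, level, pid, burst):
        self.lists[level].append((pid, burst))

    def select(self):
        """Remove and return (level, pid) for the next process, or None if all lists are empty."""
        for level, (ready, pick) in enumerate(zip(self.lists, self.strategies)):
            if ready:
                pid, _ = ready.pop(pick(ready))
                return level, pid
        return None

def fifo(ready):
    return 0  # oldest entry first

def shortest_first(ready):
    return min(range(len(ready)), key=lambda i: ready[i][1])  # smallest burst first

mlq = MultiLevelQueue([fifo, shortest_first])
mlq.add(1, "a", 300)
mlq.add(1, "b", 100)
mlq.add(0, "c", 500)
print([mlq.select() for _ in range(4)])  # [(0, 'c'), (1, 'b'), (1, 'a'), None]
```

Process `c` runs first despite its long burst because level 0 has precedence. Within level 1, the shortest-first strategy picks `b` ahead of the earlier-arriving `a`.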

Linux Scheduling

- Linux uses a multi-level queue scheduling strategy:
  - All threads in FIFO run before any in RR, which run before any in OTHER.
  - Within each queue, a priority scheme is used, with higher-priority threads running first.
  - User threads run in the OTHER queue.
  - All queues are preemptive.

[Figure: three ready queues, FIFO, RR, and OTHER, feeding the scheduler and CPU; labels: New Process, Preemption or voluntary yield, Done.]

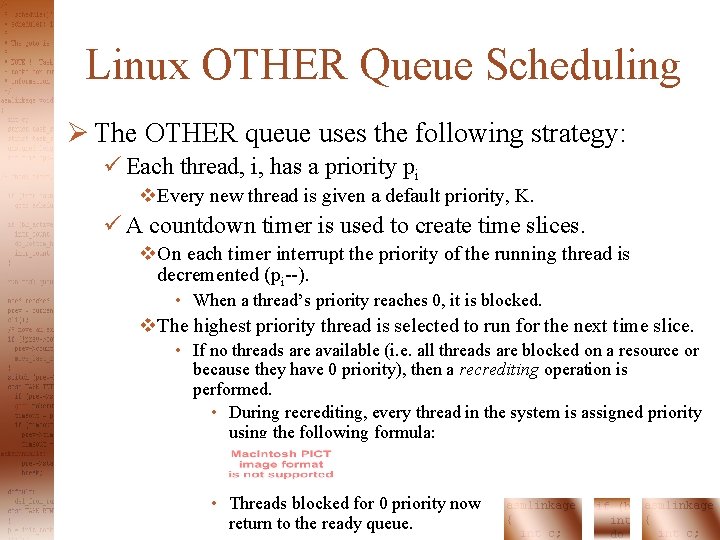

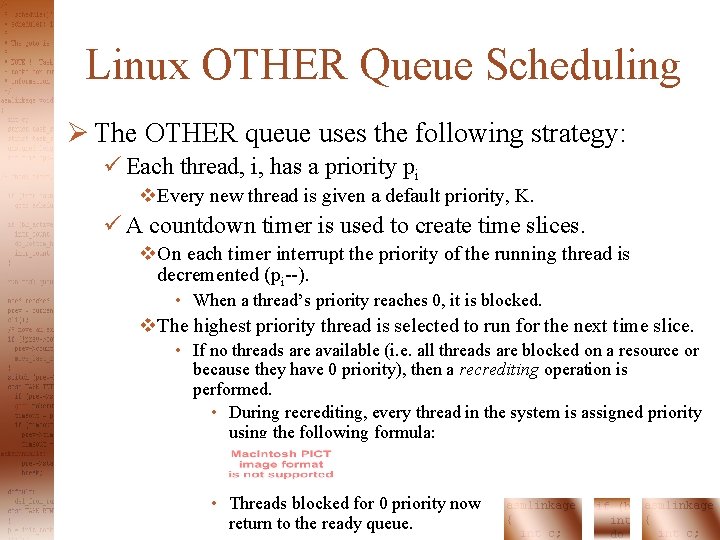

Linux OTHER Queue Scheduling

- The OTHER queue uses the following strategy:
  - Each thread, i, has a priority p_i.
    - Every new thread is given a default priority, K.
  - A countdown timer is used to create time slices.
    - On each timer interrupt, the priority of the running thread is decremented (p_i--).
      - When a thread's priority reaches 0, it is blocked.
    - The highest-priority thread is selected to run for the next time slice.
      - If no threads are available (i.e., all threads are blocked on a resource or because they have 0 priority), then a recrediting operation is performed.
      - During recrediting, every thread in the system is assigned a new priority using the following formula: [formula missing]
      - Threads blocked for 0 priority now return to the ready queue.
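The OTHER-queue rules can be traced tick by tick. This sketch is hypothetical and simplified. Every thread is CPU bound, so no thread blocks on a resource, and one timer tick counts as one time slice. Ties go to the earlier thread. Because the slide's recrediting formula is missing, the code assumes p_i = p_i // 2 + K; that formula is an assumption, not the slide's.

```python
def linux_other(threads, K):
    """Sketch of the OTHER-queue rules for CPU-bound threads (no I/O is modeled).

    threads: dict name -> CPU ticks still needed. Each timer tick is one time slice.
    K: default priority given to every new thread.
    Returns (trace, recredits): which thread ran on each tick and how many
    recrediting operations occurred.
    """
    priority = {name: K for name in threads}
    remaining = dict(threads)
    trace, recredits = [], 0
    while any(remaining.values()):
        alive = [t for t in remaining if remaining[t] > 0]
        ready = [t for t in alive if priority[t] > 0]  # priority 0 means blocked
        if not ready:
            # Recrediting. ASSUMED formula p_i = p_i // 2 + K (the slide's formula is missing).
            for t in priority:
                priority[t] = priority[t] // 2 + K
            recredits += 1
            ready = alive
        running = max(ready, key=lambda t: priority[t])  # ties go to the earlier thread
        trace.append(running)
        remaining[running] -= 1
        priority[running] -= 1  # timer interrupt: decrement the running thread's priority
    return trace, recredits

print(linux_other({"A": 4, "B": 4}, K=3))  # (['A', 'B', 'A', 'B', 'A', 'B', 'A', 'B'], 1)
```

With no I/O, every thread has priority 0 when recrediting happens, so the assumed halving term has no effect here. It would only matter for threads that were blocked on a resource while still holding priority, giving them extra credit.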

Multi-Level Feedback Queues

[Figure: N ready lists (Ready List 0 through Ready List N-1) feeding a single scheduler and CPU; labels: New Process, Preemption or voluntary yield, Done.]

- For i < j, all processes at level i run before any process at level j.
- Processes are inserted into queues by priority.
  - Process priorities are updated dynamically.
  - Each queue typically uses an RR strategy.
- Popular in modern operating systems:
  - Windows
  - BSD
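Here is a small MLFQ sketch with an RR slice per level. The slide says only that priorities are updated dynamically, so the feedback rule here is an assumption: new processes enter level 0, and a process that uses its whole slice is demoted one level. The function name and the sample workload are hypothetical.

```python
from collections import deque

def mlfq(bursts, quanta):
    """Multi-level feedback queue sketch; all processes are Ready at time 0.

    quanta[i] is the RR time slice at level i (level 0 has the highest priority).
    ASSUMED feedback rule: new processes start at level 0, and a process that
    uses its entire slice is demoted one level (staying at the lowest level).
    Returns the Gantt chart as (pid, level, start, end) tuples.
    """
    queues = [deque() for _ in quanta]
    queues[0].extend(bursts)
    clock, gantt = 0, []
    while any(queues):
        level = next(i for i, q in enumerate(queues) if q)  # highest non-empty level
        pid, remaining = queues[level].popleft()
        run = min(quanta[level], remaining)
        gantt.append((pid, level, clock, clock + run))
        clock += run
        if remaining > run:  # used the whole slice: demote
            queues[min(level + 1, len(quanta) - 1)].append((pid, remaining - run))
    return gantt

for entry in mlfq([("A", 30), ("B", 5), ("C", 12)], quanta=[4, 8, 16]):
    print(entry)
```

The short jobs B and C finish at 21 and 29, while the CPU-bound A sinks to the lowest level and finishes at 47. Because no I/O is modeled, nothing is ever promoted. A fuller version might raise the priority of processes that block before their slice expires.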

Scheduling Simulations

- Scheduling simulations account for several important factors that are frequently ignored by deterministic modeling:
  - Scheduling Overhead
  - I/O Operations
  - Process Profiles:
    - CPU Bound
    - I/O Bound
  - Variable process arrival times
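Simulations need workloads that capture these factors. This sketch generates processes with alternating CPU and I/O bursts and random arrival times. The `Process` type, the burst ranges in `PROFILES`, and the exponential inter-arrival gaps are all hypothetical choices, not values from the course.

```python
import random
from dataclasses import dataclass

@dataclass
class Process:
    pid: str
    kind: str      # "cpu" (CPU bound) or "io" (I/O bound)
    arrival: int   # time the process first enters the Ready state
    bursts: list   # alternating CPU and I/O burst lengths (tu), starting and ending with CPU

# HYPOTHETICAL burst ranges (tu), chosen only so the two profiles differ clearly.
PROFILES = {
    "cpu": {"cpu": (100, 400), "io": (5, 20)},
    "io": {"cpu": (5, 30), "io": (50, 200)},
}

def make_workload(n, cpu_fraction, mean_gap, seed=0):
    """Generate n processes with a mix of profiles and random (exponential) arrival gaps."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    clock, procs = 0, []
    for i in range(n):
        kind = "cpu" if rng.random() < cpu_fraction else "io"
        ranges = PROFILES[kind]
        count = 2 * rng.randint(2, 5) - 1  # odd number of bursts: CPU, I/O, ..., CPU
        bursts = [rng.randint(*ranges["cpu" if j % 2 == 0 else "io"]) for j in range(count)]
        procs.append(Process(f"p{i}", kind, clock, bursts))
        clock += int(rng.expovariate(1 / mean_gap))  # variable arrival times
    return procs

for p in make_workload(5, cpu_fraction=0.5, mean_gap=100, seed=42):
    print(p.pid, p.kind, p.arrival, p.bursts)
```

Fixing the seed makes a workload reproducible, so different strategies can be compared on identical inputs. Setting `cpu_fraction` to 1.0, 0.0, or a value in between yields CPU-bound, I/O-bound, or mixed workloads.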

Project

- Implement the RR and Linux OTHER scheduling strategies and compare their performance on:
  - I/O Bound Processes
  - CPU Bound Processes
  - A mix of CPU and I/O Bound Processes