- Slides: 7

Schedules of Reinforcement

These schedules predict how quickly operant acquisition will occur, and how difficult it will be to extinguish the conditioned behaviour.

Continuous Reinforcement

- Basically, the term defines itself: there’s no gap between behaviour and reinforcer, and reinforcement occurs every time.
- Extinction happens quickly… why?
- Example: tame animals will cease to hunt food. This is why zoos don’t want you feeding them.

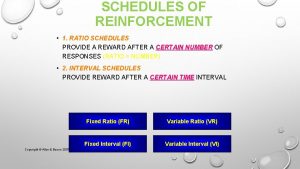

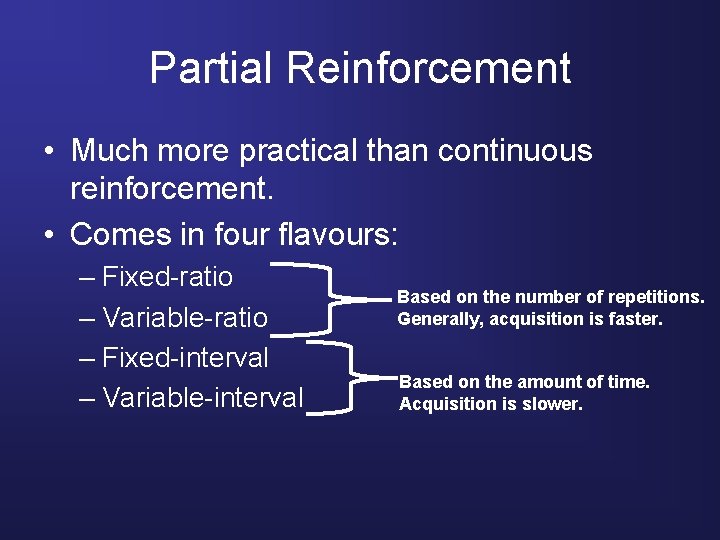

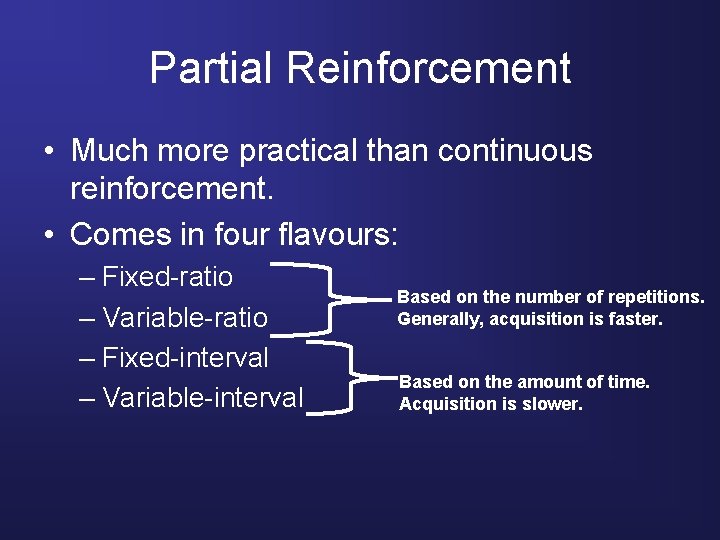

Partial Reinforcement

- Much more practical than continuous reinforcement.
- Comes in four flavours:
  - Fixed-ratio
  - Variable-ratio
  - Fixed-interval
  - Variable-interval
- Ratio schedules are based on the number of repetitions. Generally, acquisition is faster.
- Interval schedules are based on the amount of time. Acquisition is slower.

Fixed-ratio

- Reinforcement based on an unchanging number of responses.
  - Typically, the learner already knows in advance how many repetitions are needed.
- The most reliable schedule of reinforcement… why?
  - Predictability makes us happy.
- Examples:
  - Loyalty cards.
  - Frequent-flyer miles.
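The fixed-ratio rule is simple enough to write down as a short Python sketch. The function name `fixed_ratio` and the "every 5th purchase" loyalty card are hypothetical illustrations, not part of the slides.

```python
def fixed_ratio(responses: int, ratio: int) -> list[bool]:
    """For each response, report whether it is reinforced under a fixed-ratio schedule."""
    if ratio < 1:
        raise ValueError("ratio must be at least 1")
    # Every `ratio`-th response earns the reinforcer; all other responses earn nothing.
    return [n % ratio == 0 for n in range(1, responses + 1)]


# Hypothetical loyalty card: every 5th purchase earns the reward.
print(fixed_ratio(responses=10, ratio=5))
# [False, False, False, False, True, False, False, False, False, True]
```

With `ratio=1` every response is reinforced, which is the continuous schedule described earlier.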

Variable-ratio

- Reinforcement based on a changing number of responses.
  - Typically, the learner will “keep trying” because they know their behaviour will eventually pay off.
- This is among the longest-lasting schedules of reinforcement… why?
  - Extinction depends on the learner losing hope… depending on the reinforcer, that doesn’t happen easily.
- Examples:
  - Drawing Uno cards (hoping for the “Draw Four” card).
  - Playing the same lottery numbers.
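A variable-ratio schedule can be sketched the same way, as a rough simulation. It assumes the number of responses required is redrawn uniformly after each reinforcer; real schedules may vary the requirement differently. The name `variable_ratio` and its parameters are hypothetical.

```python
import random


def variable_ratio(responses: int, mean_ratio: int, seed: int = 0) -> list[bool]:
    """For each response, report whether it is reinforced under a variable-ratio schedule."""
    if mean_ratio < 1:
        raise ValueError("mean_ratio must be at least 1")
    rng = random.Random(seed)  # seeded so that a run can be repeated

    def next_requirement() -> int:
        # Assumption: uniform on 1 .. 2 * mean_ratio - 1, so the average is mean_ratio.
        return rng.randint(1, 2 * mean_ratio - 1)

    outcomes = []
    remaining = next_requirement()
    for _ in range(responses):
        remaining -= 1
        if remaining == 0:
            outcomes.append(True)           # this response pays off
            remaining = next_requirement()  # the learner cannot predict the next one
        else:
            outcomes.append(False)
    return outcomes


outcomes = variable_ratio(responses=20, mean_ratio=5)
print([i + 1 for i, hit in enumerate(outcomes) if hit])  # which responses paid off
```

Because the requirement changes after every reinforcer, a run of unrewarded responses gives the learner no clear signal that reinforcement has stopped.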

Fixed-interval

- Reinforcement based on an unchanging period of time.
  - Typically, the learner already knows in advance how much time will pass. If not, they quickly figure it out.
- Behaviours increase as the predictable reinforcing time approaches.
- Examples:
  - “A watched pot never boils…” People often force themselves to wait to check for boiling water.
  - Looking at the clock during Block 5 (increases in frequency as 3:00 approaches).

Variable-interval

- Reinforcement based on a changing amount of time.
  - Often, especially after a particularly long interval, extinction occurs swiftly.
- This also tends to produce long-lasting behaviours, though less reliably than variable-ratio.
- Examples:
  - Shooting soccer balls during a game (at the goal, not with a gun).
  - Arrival of text messages.
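The variable-interval case can be simulated roughly as follows. It assumes the waiting time is redrawn uniformly after each reinforcer, and that the first response after the wait has elapsed is reinforced. The name `variable_interval`, its parameters, and the once-a-minute checking pattern are hypothetical.

```python
import random


def variable_interval(response_times: list[float], mean_interval: float, seed: int = 0) -> list[bool]:
    """For each response time (assumed ascending), report whether it is reinforced."""
    rng = random.Random(seed)  # seeded so that a run can be repeated

    def next_wait() -> float:
        # Assumption: uniform between 0 and 2 * mean_interval, so the average is mean_interval.
        return rng.uniform(0, 2 * mean_interval)

    outcomes = []
    available_at = next_wait()
    for t in response_times:
        if t >= available_at:
            outcomes.append(True)
            available_at = t + next_wait()  # the next wait is unpredictable
        else:
            outcomes.append(False)
    return outcomes


# Hypothetical: checking a phone once a minute for an hour, messages about every 10 minutes.
checks = [float(minute) for minute in range(1, 61)]
outcomes = variable_interval(checks, mean_interval=10)
print([int(t) for t, hit in zip(checks, outcomes) if hit])  # minutes when a check paid off
```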