

Scales & Indices

Measurement Overview

- Using multiple indicators to create variables
- Two-step process:
  1. Determine which items go together to measure which variables: factor analysis
  2. Evaluate the reliability of the multi-item scales: Cronbach’s alpha

Factor Analysis

- Starts with a group of similar indicators (e.g., survey items)
- Sorts the items based on patterns of inter-item similarity, i.e., which items are correlated (which ones group together)
- Items that group together share a common underlying factor
- The procedure is based on inter-item correlations
- Correlation: a measure of similarity between two variables; it ranges from -1 to +1
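As a rough illustration of the inter-item correlations that factor analysis starts from, here is a minimal Python sketch that builds a Pearson correlation matrix. The item names and the five respondents' scores are hypothetical, invented for illustration; statistical packages such as SPSS compute this matrix for you.

```python
from math import sqrt

def pearson(x, y):
    """Pearson correlation between two equal-length lists of scores (-1 to +1)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(ss_x * ss_y)

def correlation_matrix(items):
    """items maps item name -> list of responses, one per respondent."""
    names = list(items)
    return {a: {b: pearson(items[a], items[b]) for b in names} for a in names}

# Hypothetical responses from five respondents on an assumed 1-5 agreement scale.
items = {
    "out_of_line":      [5, 4, 2, 1, 3],
    "excessive_force":  [5, 5, 2, 1, 3],
    "right_to_protest": [2, 3, 5, 4, 4],
}
r = correlation_matrix(items)
print(round(r["out_of_line"]["excessive_force"], 2))  # 0.97
```

The two police items correlate strongly, while `right_to_protest` correlates negatively with them, a pattern suggesting they do not belong to the same factor.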

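Stages in Factor Analysis

1. Extraction: how the computer searches for patterns among the items
2. Rotation: a mathematical manipulation of those patterns that makes them easier to interpret; the type of rotation determines whether the computer produces correlated (oblique) or uncorrelated (orthogonal) factors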
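A minimal sketch of the extraction stage, assuming principal-components extraction (the SPSS default) and showing only the first factor: power iteration finds the largest eigenvalue of the correlation matrix and its eigenvector, and scaling the eigenvector by the square root of the eigenvalue gives each item's loading. A real analysis extracts several factors and then rotates them (e.g., varimax for uncorrelated factors); rotation is not shown here. The correlation matrix is hypothetical.

```python
from math import sqrt

def first_factor(R, iterations=200):
    """Principal-components extraction of the first factor from correlation
    matrix R (list of lists). Returns (eigenvalue, loadings)."""
    n = len(R)
    v = [1.0] * n  # start vector; assumes items are mostly positively correlated
    for _ in range(iterations):
        # Power iteration: repeatedly multiply by R and renormalize.
        w = [sum(R[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Rayleigh quotient: the eigenvalue belonging to the converged unit vector v.
    Rv = [sum(R[i][j] * v[j] for j in range(n)) for i in range(n)]
    eigenvalue = sum(a * b for a, b in zip(v, Rv))
    # Loading = eigenvector element * sqrt(eigenvalue).
    return eigenvalue, [x * sqrt(eigenvalue) for x in v]

# Hypothetical correlation matrix: three items that all correlate .60.
R = [[1.0, 0.6, 0.6],
     [0.6, 1.0, 0.6],
     [0.6, 0.6, 1.0]]
eigenvalue, loadings = first_factor(R)
print(round(eigenvalue, 2), [round(x, 2) for x in loadings])  # 2.2 [0.86, 0.86, 0.86]
```

With three equally intercorrelated items, each item loads equally (about .86) on the single common factor.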

Concept measurement example: research on the effects of TV news coverage of social protest

- Subjects were shown one of three TV news stories about an anarchist protest:
  1. Extremely critical
  2. Highly critical
  3. Moderately critical
- Subjects then responded to a questionnaire
- Researchers examined differences between the exposure groups

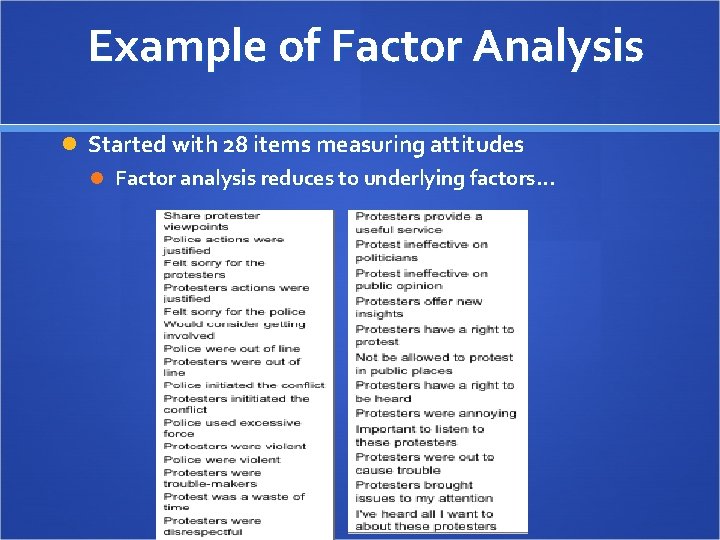

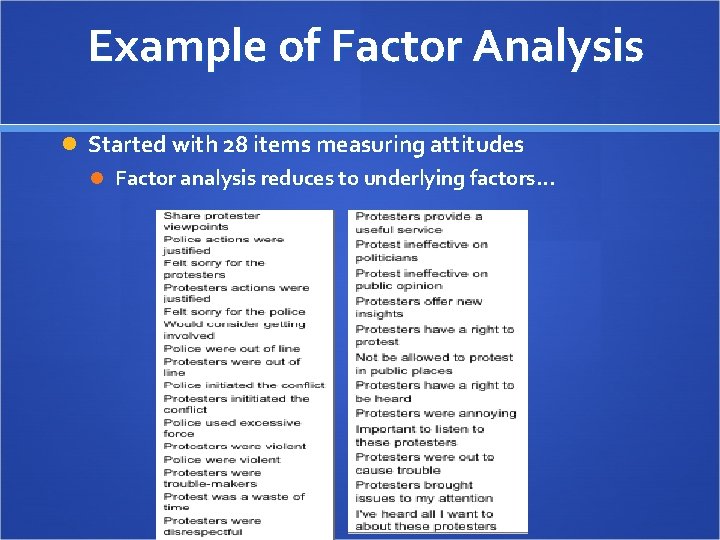

Example of Factor Analysis

- Started with 28 items measuring attitudes
- Factor analysis reduces these items to a smaller set of underlying factors…

(Slide annotations: three items are marked “Remove”.)

Five Factors

1. Protest rights
2. Police hostility
3. Protest utility
4. Blame the protesters
5. Anti-violence

1. Support for Protest Rights
   - A. Protesters have a right to protest
   - B. Protesters should not be allowed to protest in public places (reverse coded)
   - C. Protesters have a right to be heard

2. Hostility toward the Police
   - A. Police were out of line
   - B. Police used excessive force
   - C. Police were violent

3. Utility of Protest
   - A. Protesters offer new insights
   - B. It’s important to listen to protesters
   - C. Protesters brought issues to my attention

4. Blame the Protesters
   - A. Protesters initiated the conflict
   - B. The protesters were disrespectful
   - C. Protest was ineffective on politicians

5. Opposition to Protest Violence
   - A. I feel sorry for the police because of the way they were treated by the protesters
   - B. The protesters were violent
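Item B under Support for Protest Rights is reverse coded: agreeing with it signals less support, so its scores have to be flipped before it is combined with items A and C. A minimal sketch, assuming a 1-5 response scale (the slides do not state the scale range, so the defaults here are hypothetical):

```python
def reverse_code(score, scale_min=1, scale_max=5):
    """Flip a response on a scale_min..scale_max scale (assumed 1-5 by default)
    so that a high score means the same thing as on the other items."""
    return scale_min + scale_max - score

# Hypothetical answers to item B ("should not be allowed to protest in public places").
item_b = [1, 2, 5]
print([reverse_code(x) for x in item_b])  # [5, 4, 1]
```

The same formula works for any scale by passing its endpoints, e.g. `reverse_code(2, 1, 7)` for a 1-7 scale.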

Combining items into a scale

1. Summative scale
2. Factor scores

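Summative scales

- Add the items together or take their mean, e.g., in SPSS syntax:
  - `COMPUTE scale = SUM.1(var1, var2, var3).`
  - `COMPUTE scale = MEAN.1(var1, var2, var3).`
- The `.1` suffix sets the minimum number of non-missing items a case needs to receive a score (here, one)
- Weights each item equally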
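To show what the `.1` suffix does, here is a sketch that mimics `SUM.1` and `MEAN.1` in Python. Missing responses are represented as `None` (an assumption for illustration; SPSS uses system- or user-missing values), and the function names are hypothetical.

```python
def scale_sum(values, min_valid=1):
    """Sum of non-missing item scores (None = missing), mimicking SPSS SUM.1.
    Returns None if fewer than min_valid items were answered."""
    valid = [v for v in values if v is not None]
    return sum(valid) if valid and len(valid) >= min_valid else None

def scale_mean(values, min_valid=1):
    """Mean of non-missing item scores, mimicking SPSS MEAN.1."""
    valid = [v for v in values if v is not None]
    return sum(valid) / len(valid) if valid and len(valid) >= min_valid else None

# One hypothetical respondent who skipped the third item.
person = [4, 5, None]
print(scale_sum(person))   # 9
print(scale_mean(person))  # 4.5
```

The respondent who skipped an item gets a lower sum (two items instead of three), while the mean stays on the original response scale, one practical argument for the mean version when some answers are missing.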

Factor scores

- Use the factor solution to weight the items: the weights (factor score coefficients) are derived from the factor loadings
- Items that are more central to the factor receive heavier weights
- Use the save option when running the factor analysis (under “Scores”, select “Save as variables”)
- A new variable is saved in the data file for each factor, with a score for each case
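To show how weighting differs from a simple sum, here is a sketch of a factor score computed as a weighted sum of standardized (z-scored) items, the general form of regression-method factor scores. The coefficient values are hypothetical; in practice SPSS estimates them from the factor solution and saves the resulting scores when “Save as variables” is selected.

```python
from statistics import mean, stdev

def zscores(column):
    """Standardize one item: subtract its mean, divide by its standard deviation."""
    m, s = mean(column), stdev(column)
    return [(x - m) / s for x in column]

def factor_scores(items, coefficients):
    """items: list of item columns (one list of responses per item);
    coefficients: one factor score coefficient per item.
    Returns each respondent's weighted sum of standardized item scores."""
    z = [zscores(col) for col in items]
    n_cases = len(items[0])
    return [sum(w * col[i] for w, col in zip(coefficients, z)) for i in range(n_cases)]

# Hypothetical police-hostility items for five respondents.
items = [
    [5, 4, 2, 1, 3],  # "Police were out of line"
    [5, 5, 2, 1, 3],  # "Police used excessive force"
    [4, 4, 1, 2, 3],  # "Police were violent"
]
# Hypothetical coefficients: the most central item gets the largest weight.
coefficients = [0.40, 0.45, 0.25]
scores = factor_scores(items, coefficients)
print([round(s, 2) for s in scores])
```

Because the items are standardized, the scores are centered on zero: a positive score means above-average hostility toward the police in this sample.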

Cronbach’s Alpha

- Assesses the reliability of a multi-item scale
- Based on the average inter-item correlation, weighted by the number of items in the scale
- Measures internal consistency (unidimensionality): are all the items measuring the same thing?
- If so, they should all be highly intercorrelated

![Cronbach’s alpha formula](https://slidetodoc.com/presentation_image_h/22c6583f210b4a9d128a06e4ed37f256/image-21.jpg)

Cronbach’s Alpha Formula

$$\alpha = \frac{N r}{1 + (N - 1) r}$$

- N = number of items in the scale
- r = average inter-item correlation
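The formula can be checked directly in a short Python sketch: `alpha_from_average_r` applies it to a given N and average correlation, and `standardized_alpha` first averages all pairwise correlations among hypothetical item columns. Because it works from correlations rather than item variances, this is the standardized form of alpha; SPSS reports it as “Cronbach's Alpha Based on Standardized Items”, next to the variance-based default, and the two can differ slightly.

```python
from itertools import combinations
from math import sqrt

def pearson(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    return cov / sqrt(sum((a - mean_x) ** 2 for a in x) * sum((b - mean_y) ** 2 for b in y))

def alpha_from_average_r(n_items, r):
    """Alpha = N*r / (1 + (N - 1)*r), with r the average inter-item correlation."""
    return n_items * r / (1 + (n_items - 1) * r)

def standardized_alpha(items):
    """Average all pairwise inter-item correlations, then apply the formula."""
    pairs = list(combinations(items, 2))
    r_avg = sum(pearson(a, b) for a, b in pairs) / len(pairs)
    return alpha_from_average_r(len(items), r_avg)

print(round(alpha_from_average_r(3, 0.5), 2))   # 0.75
print(round(alpha_from_average_r(10, 0.5), 2))  # 0.91

# Hypothetical scores on the three police-hostility items (five respondents).
police = [[5, 4, 2, 1, 3], [5, 5, 2, 1, 3], [4, 4, 1, 2, 3]]
print(round(standardized_alpha(police), 2))
```

With the same average correlation of .50, going from three items to ten raises alpha from .75 to about .91, which is what “weighted by the number of items” means in practice.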

Acceptable alpha for a scale

- Ideally, alpha > .80
- Some journals accept alpha > .70
- A low alpha means either:
  1. The scale is not reliable (the items contain a lot of measurement error), or
  2. The items may be measuring two different things
- “Alpha if item deleted” can help identify a bad item
- More than one bad item may indicate that some items measure a different concept
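“Alpha if item deleted” recomputes alpha with each item left out in turn. A minimal sketch using the standardized (correlation-based) formula, so the values may differ somewhat from SPSS's variance-based output; the data, including the ill-fitting fourth item, are hypothetical.

```python
from itertools import combinations
from math import sqrt

def pearson(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    return cov / sqrt(sum((a - mean_x) ** 2 for a in x) * sum((b - mean_y) ** 2 for b in y))

def standardized_alpha(items):
    """Alpha = N*r / (1 + (N - 1)*r), r = average pairwise inter-item correlation."""
    pairs = list(combinations(items, 2))
    r = sum(pearson(a, b) for a, b in pairs) / len(pairs)
    n = len(items)
    return n * r / (1 + (n - 1) * r)

def alpha_if_item_deleted(items, names):
    """Recompute alpha with each item left out in turn (needs 3+ items)."""
    return {
        name: standardized_alpha([col for j, col in enumerate(items) if j != k])
        for k, name in enumerate(names)
    }

# Hypothetical data: three police items plus one item that does not fit.
names = ["out_of_line", "excessive_force", "violent", "bad_item"]
items = [[5, 4, 2, 1, 3], [5, 5, 2, 1, 3], [4, 4, 1, 2, 3], [3, 1, 4, 2, 5]]
print(round(standardized_alpha(items), 2))
for name, alpha in alpha_if_item_deleted(items, names).items():
    print(name, round(alpha, 2))
```

Alpha for all four items is about .66, but dropping `bad_item` raises it above .90, while dropping any of the police items lowers it: the item whose removal raises alpha most is the one that does not belong.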