Scala/Spark Review 6/27/2014 Small Scale Scala Spark Demo

- Slides: 15

Scala/Spark Review 6/27/2014 Small Scale Scala Spark Demo, DataRepos, Spark Executors

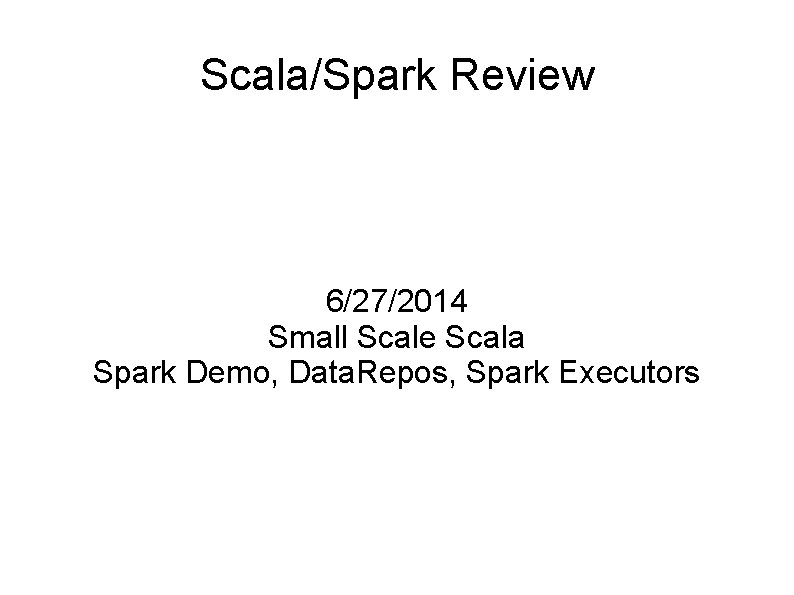

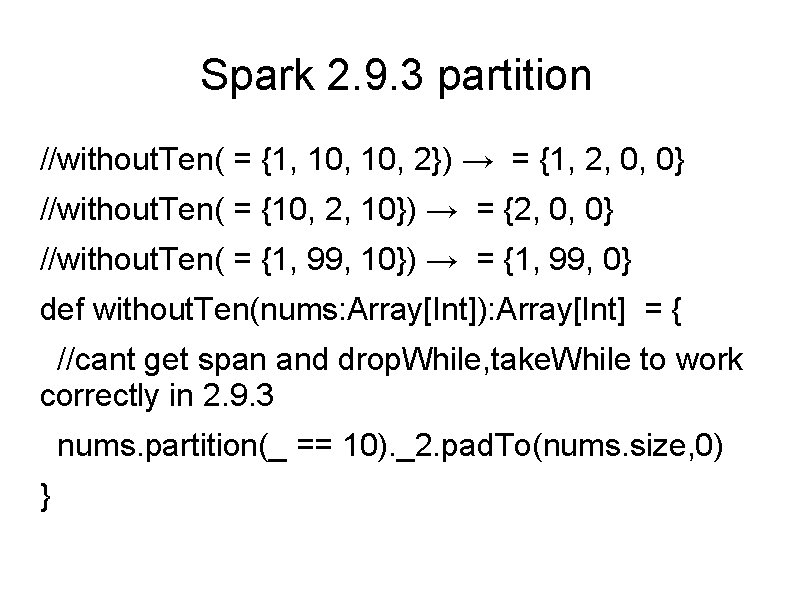

Scala 2.9.3 partition

    // withoutTen({1, 10, 10, 2}) → {1, 2, 0, 0}
    // withoutTen({10, 2, 10}) → {2, 0, 0}
    // withoutTen({1, 99, 10}) → {1, 99, 0}
    def withoutTen(nums: Array[Int]): Array[Int] = {
      // can't get span and dropWhile, takeWhile to work correctly in 2.9.3
      nums.partition(_ == 10)._2.padTo(nums.size, 0)
    }
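The withoutTen exercise above can be sketched as a small self-contained object. This is only an illustration: the object name `WithoutTen` and the `filterNot` variant are assumptions added here, not part of the slides; the partition variant mirrors the slide's one-liner.

```scala
object WithoutTen {
  // Swapped-in variant: drop every 10, keep the remaining order,
  // then right-pad with zeros back to the original length.
  def withoutTen(nums: Array[Int]): Array[Int] =
    nums.filterNot(_ == 10).padTo(nums.length, 0)

  // Slide variant: partition returns (matching, nonMatching);
  // _2 is the non-matching half, i.e. everything that is not 10.
  def withoutTenPartition(nums: Array[Int]): Array[Int] =
    nums.partition(_ == 10)._2.padTo(nums.length, 0)
}
```

Both versions should agree on any input, since partition keeps the relative order of the elements in each half.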

![Partition to split into 2 arrays/lists](https://slidetodoc.com/presentation_image/cef09676361f29c124f9b98fed7c541c/image-3.jpg)

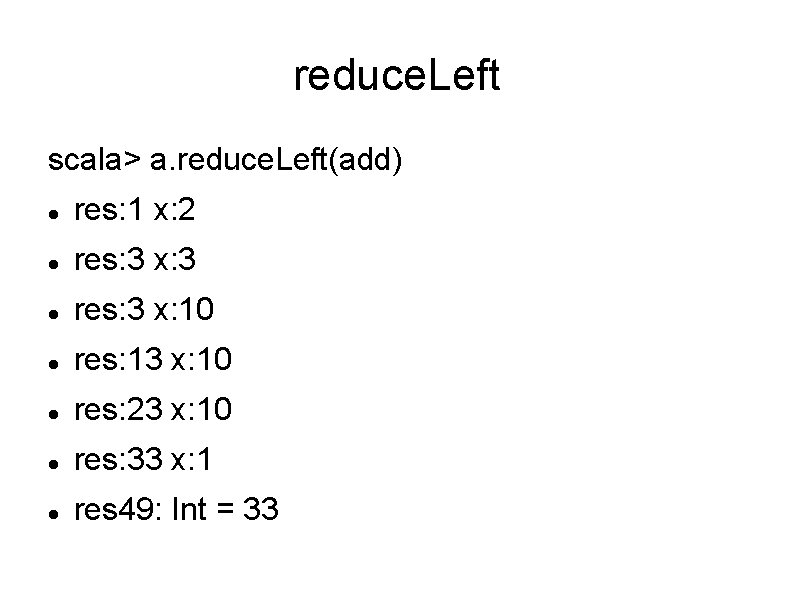

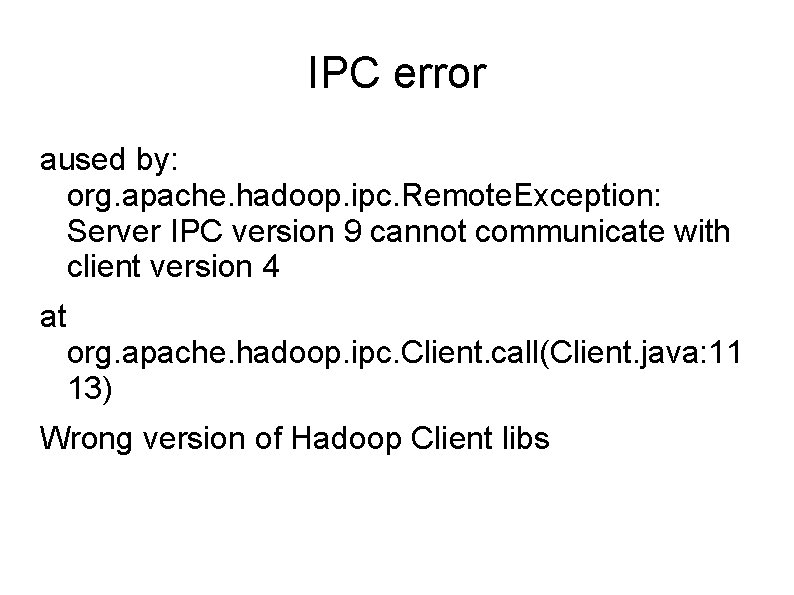

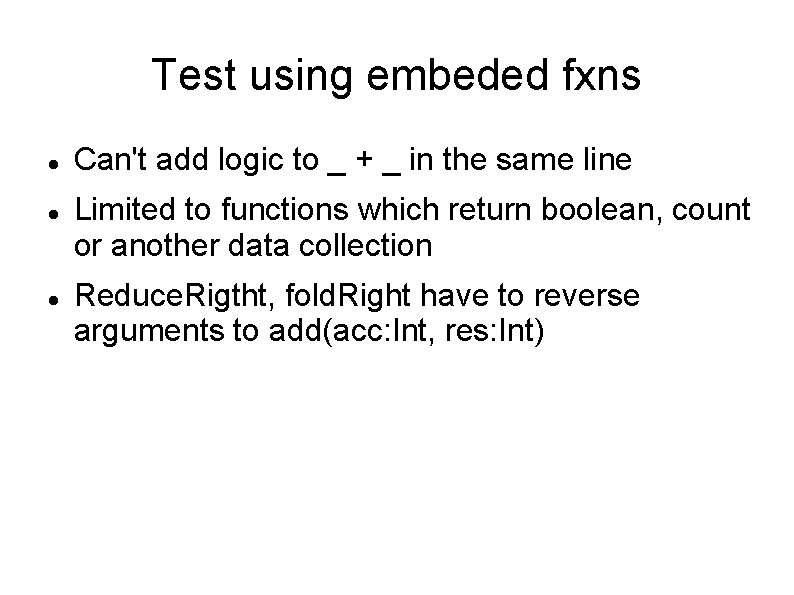

Partition to split into 2 arrays/lists

    scala> a
    res37: Array[Int] = Array(1, 2, 3, 10, 10, 1)
    scala> a.partition(_ == 10)
    res38: (Array[Int], Array[Int]) = (Array(10, 10), Array(1, 2, 3, 1))

![Span should do the same thing](https://slidetodoc.com/presentation_image/cef09676361f29c124f9b98fed7c541c/image-4.jpg)

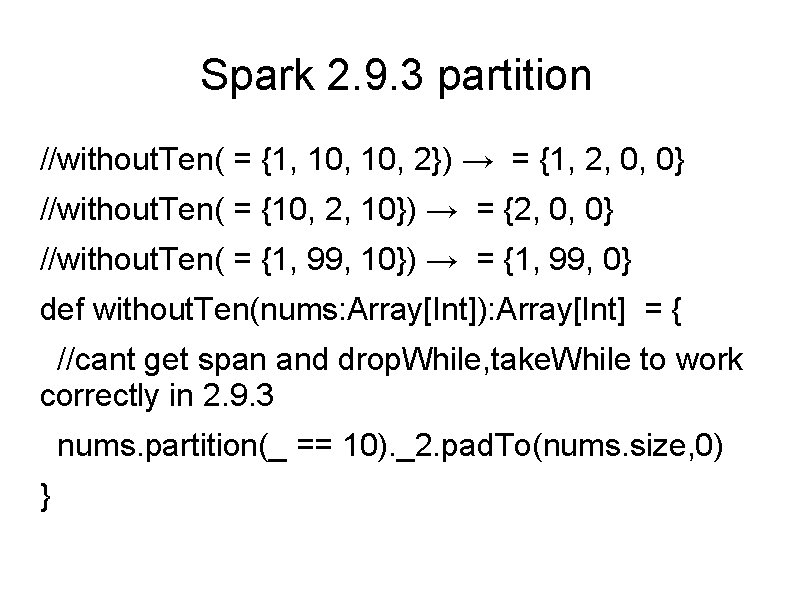

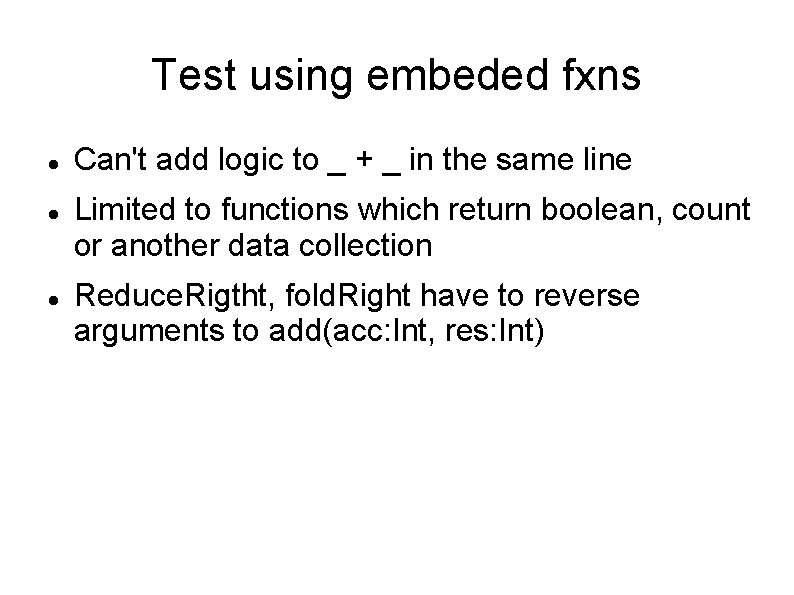

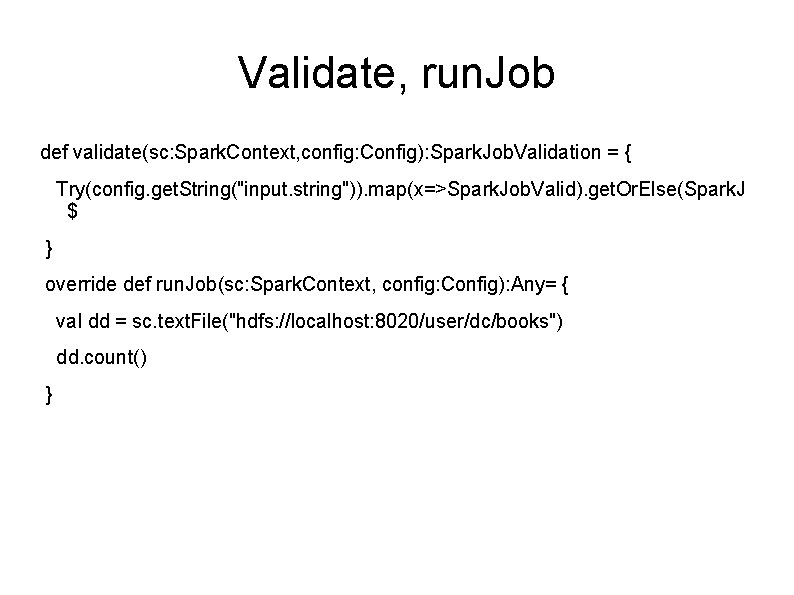

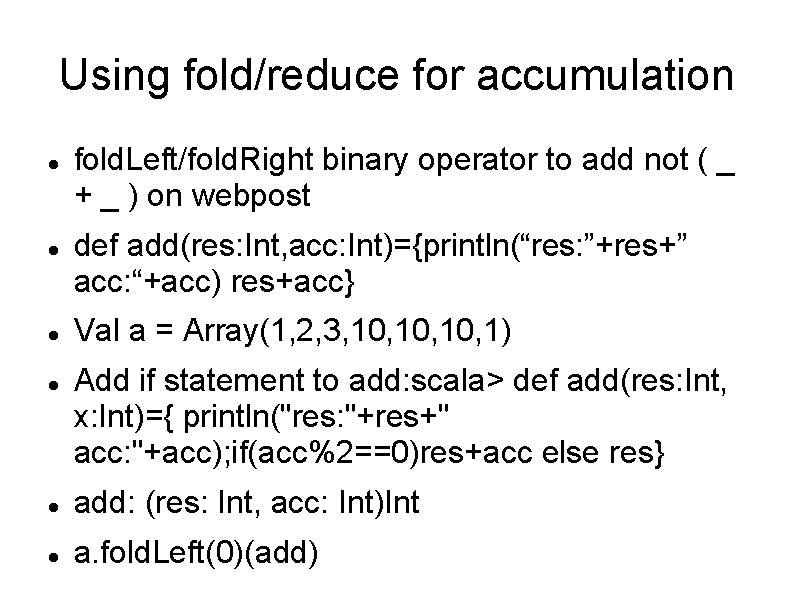

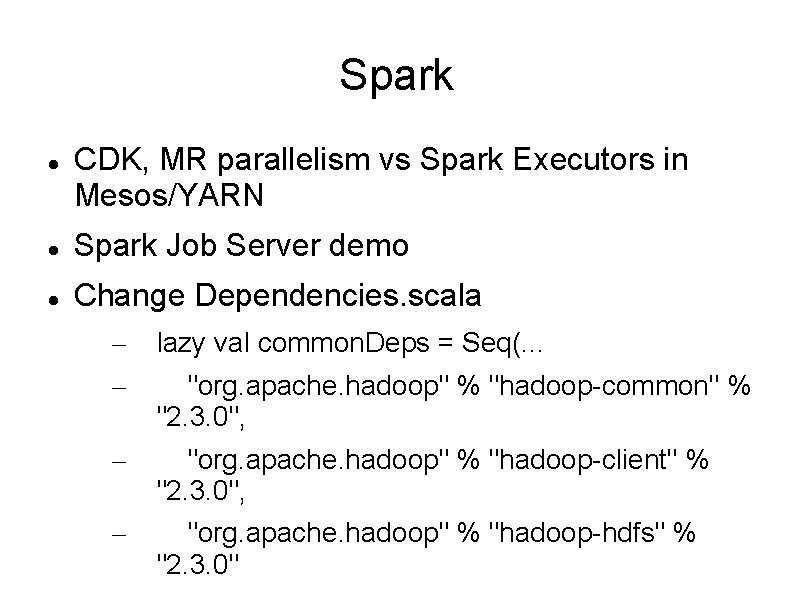

Span should do the same thing, but it only splits off the leading run of elements that match

    scala> a.span(_ == 10)
    res44: (Array[Int], Array[Int]) = (Array(), Array(1, 2, 3, 10, 10, 1))

Works for first element only

    scala> a.span(_ == 1)
    res45: (Array[Int], Array[Int]) = (Array(1), Array(2, 3, 10, 10, 1))

takeWhile/dropWhile stop at the first non-matching element in the same way
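The difference between partition and span shown above can be reproduced in a few lines. This is a minimal sketch: the object and value names are made up for illustration, and it uses a List rather than the slide's Array so that results can be compared with `==`.

```scala
object SpanVsPartition {
  val a: List[Int] = List(1, 2, 3, 10, 10, 1)

  // partition tests every element, so all the 10s end up in the first half
  val byPartition: (List[Int], List[Int]) = a.partition(_ == 10)

  // span stops at the first element that fails the predicate;
  // the list starts with 1, so the matching prefix is empty
  val bySpan10: (List[Int], List[Int]) = a.span(_ == 10)

  // with a predicate that matches the first element, span peels off that prefix
  val bySpan1: (List[Int], List[Int]) = a.span(_ == 1)

  // span(p) is the pair (takeWhile(p), dropWhile(p))
  val byWhile1: (List[Int], List[Int]) = (a.takeWhile(_ == 1), a.dropWhile(_ == 1))
}
```

So span behaves as documented rather than incorrectly: it splits at a position, while partition splits by membership.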

Using fold/reduce for accumulation

foldLeft/foldRight: pass a named binary operator add, not the ( _ + _ ) shown in web posts

    def add(res: Int, acc: Int) = { println("res: " + res + " acc: " + acc); res + acc }
    val a = Array(1, 2, 3, 10, 10, 10, 1)

Add if statement to add:

    scala> def add(res: Int, x: Int) = { println("res: " + res + " x: " + x); if (x % 2 == 0) res + x else res }
    add: (res: Int, x: Int)Int
    a.foldLeft(0)(add)

foldLeft

    scala> a.foldLeft(0)(add)
    res: 0 x: 1
    res: 0 x: 2
    res: 2 x: 3
    res: 2 x: 10
    res: 12 x: 10
    res: 22 x: 10
    res: 32 x: 1
    res51: Int = 32

reduceLeft

    scala> a.reduceLeft(add)
    res: 1 x: 2
    res: 3 x: 3
    res: 3 x: 10
    res: 13 x: 10
    res: 23 x: 10
    res: 33 x: 1
    res49: Int = 33
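The two traces differ by one because reduceLeft uses the first element as its starting value, so the leading 1 is never passed through the even check. A minimal sketch of that comparison follows; `FoldVsReduce` and `addEven` are illustrative names, and the seven-element array is the one implied by the traced output.

```scala
object FoldVsReduce {
  // Adds x to the running result only when x is even (no tracing println here)
  def addEven(res: Int, x: Int): Int = if (x % 2 == 0) res + x else res

  val a: Array[Int] = Array(1, 2, 3, 10, 10, 10, 1)

  // foldLeft starts from the explicit seed 0: 2 + 10 + 10 + 10 = 32
  val folded: Int = a.foldLeft(0)(addEven)

  // reduceLeft seeds with a(0) = 1, which bypasses the even check: 1 + 32 = 33
  val reduced: Int = a.reduceLeft(addEven)
}
```

When the operator is not a plain sum, foldLeft with an explicit seed is usually the safer choice, since every element goes through the same logic.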

Test using embedded functions

- Can't add logic to the _ + _ placeholder form on the same line
- Limited to functions which return a Boolean, a count, or another data collection
- reduceRight and foldRight have to reverse the arguments to add(acc: Int, res: Int)
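The argument reversal for foldRight can be sketched as below. The names are illustrative, and the array is the seven-element one from the fold traces. The sketch also shows one possible workaround for the placeholder limitation: an explicit lambda can carry the if logic inline, even though the `_ + _` shorthand cannot.

```scala
object FoldRightDemo {
  val a: Array[Int] = Array(1, 2, 3, 10, 10, 10, 1)

  // foldRight calls the operator as (element, runningResult),
  // the reverse of foldLeft's (runningResult, element)
  def addEvenRight(x: Int, res: Int): Int = if (x % 2 == 0) x + res else res

  // walks the array from the right, starting from the seed 0
  val fromRight: Int = a.foldRight(0)(addEvenRight)

  // explicit lambda with the same logic written inline for foldLeft
  val inline: Int = a.foldLeft(0)((res, x) => if (x % 2 == 0) res + x else res)
}
```

Both values come out to 32 here because summing the even elements does not depend on traversal direction.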

Spark

- CDK, MR parallelism vs Spark Executors in Mesos/YARN
- Spark Job Server demo

Change Dependencies.scala:

    lazy val commonDeps = Seq(
      ...
      "org.apache.hadoop" % "hadoop-client" % "2.3.0",
      "org.apache.hadoop" % "hadoop-hdfs" % "2.3.0",
      "org.apache.hadoop" % "hadoop-common" % "2.3.0",

Test HDFS access: HelloWorld.scala

    object HelloWorld extends SparkJob {
      def main(args: Array[String]) {
        println("asdf") // won't see this
        val sc = new SparkContext("local[2]", "HelloWorld")
        val config = ConfigFactory.parseString("")
        val results = runJob(sc, config)
        println("results: " + results)
      }

IPC error

    Caused by: org.apache.hadoop.ipc.RemoteException: Server IPC version 9 cannot communicate with client version 4
        at org.apache.hadoop.ipc.Client.call(Client.java:1113)

Wrong version of Hadoop client libs: the job was built against Hadoop 1.x client jars (IPC version 4) while the HDFS server runs Hadoop 2.x (IPC version 9).

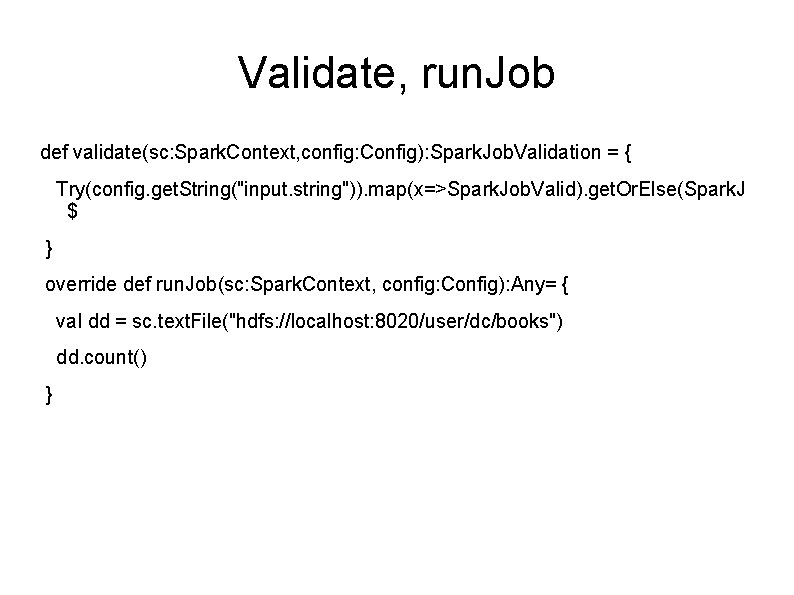

Validate, runJob

    def validate(sc: SparkContext, config: Config): SparkJobValidation = {
      Try(config.getString("input.string")).map(x => SparkJobValid).getOrElse(SparkJ $
    }

    override def runJob(sc: SparkContext, config: Config): Any = {
      val dd = sc.textFile("hdfs://localhost:8020/user/dc/books")
      dd.count()
    }
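The Try(...).map(...).getOrElse(...) shape used in validate can be tried without Spark or the job server on the classpath. The sketch below is an assumption-heavy stand-in: `Validation`, `Valid`, `Invalid`, the error message, and the use of a plain Map in place of a Typesafe Config object are all hypothetical and are not the job server's own types.

```scala
import scala.util.Try

object ValidateSketch {
  // Hypothetical stand-ins for the job server's validation result types
  sealed trait Validation
  case object Valid extends Validation
  final case class Invalid(reason: String) extends Validation

  // A plain Map stands in for the config object; looking up a missing key
  // throws, and Try turns that exception into a Failure
  def validate(config: Map[String, String]): Validation =
    Try(config("input.string"))
      .map(_ => Valid)                                  // key present: job may run
      .getOrElse(Invalid("missing input.string"))      // key absent: reject the job
}
```

For example, `ValidateSketch.validate(Map("input.string" -> "a a a a b b"))` yields `Valid`, while an empty map yields `Invalid`.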

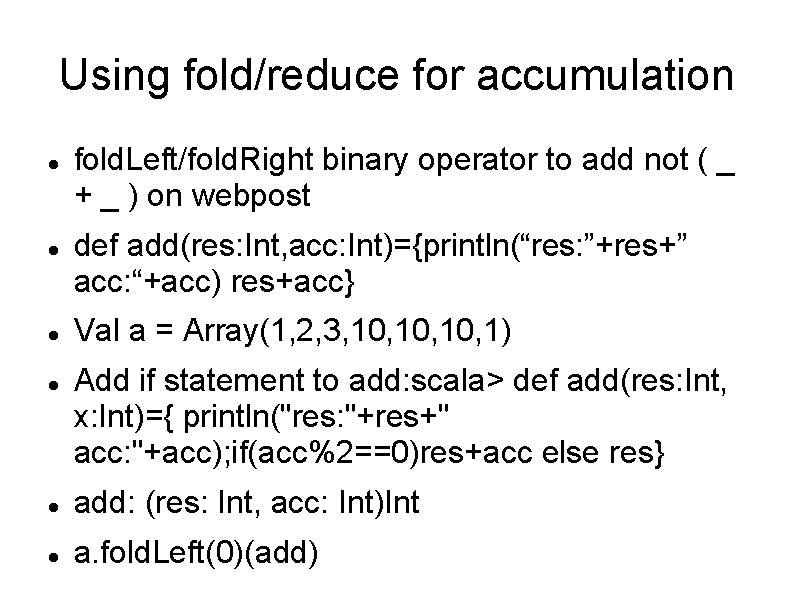

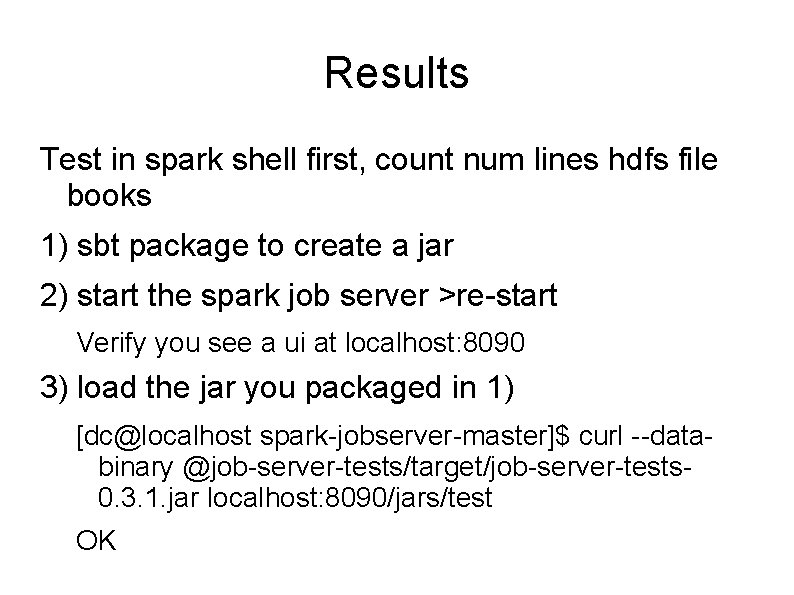

Results

Test in spark shell first: count the number of lines in the HDFS file books.

1) sbt package to create a jar
2) start the spark job server (>re-start) and verify you see a UI at localhost:8090
3) load the jar you packaged in 1)

        [dc@localhost spark-jobserver-master]$ curl --data-binary @job-server-tests/target/job-server-tests-0.3.1.jar localhost:8090/jars/test
        OK

![Jobserver Hadoop HelloWorld](https://slidetodoc.com/presentation_image/cef09676361f29c124f9b98fed7c541c/image-14.jpg)

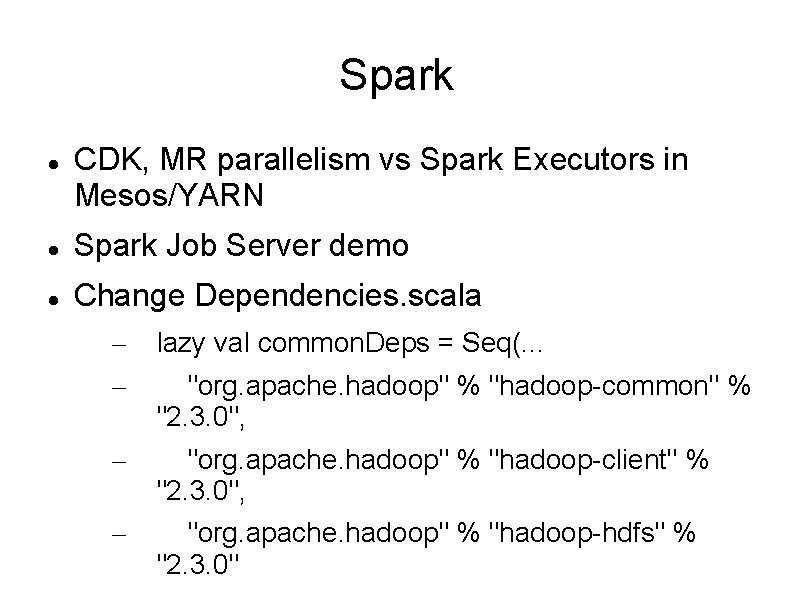

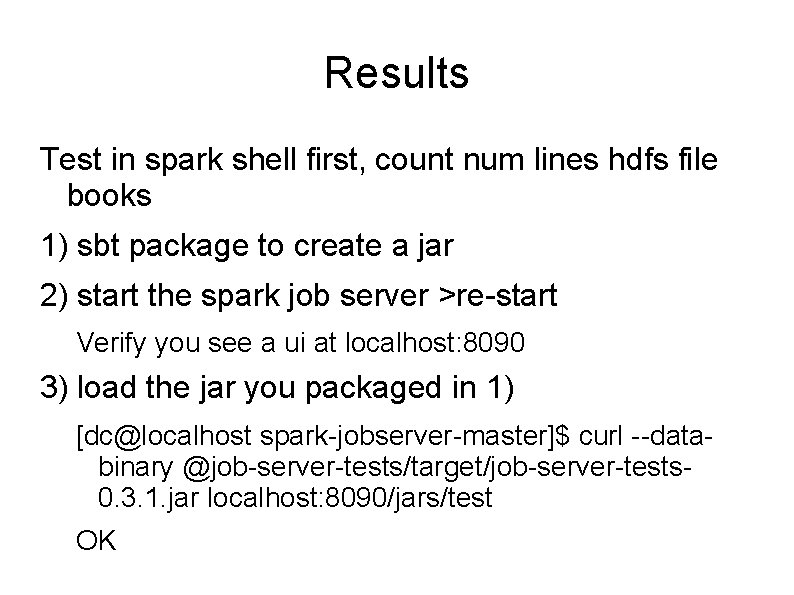

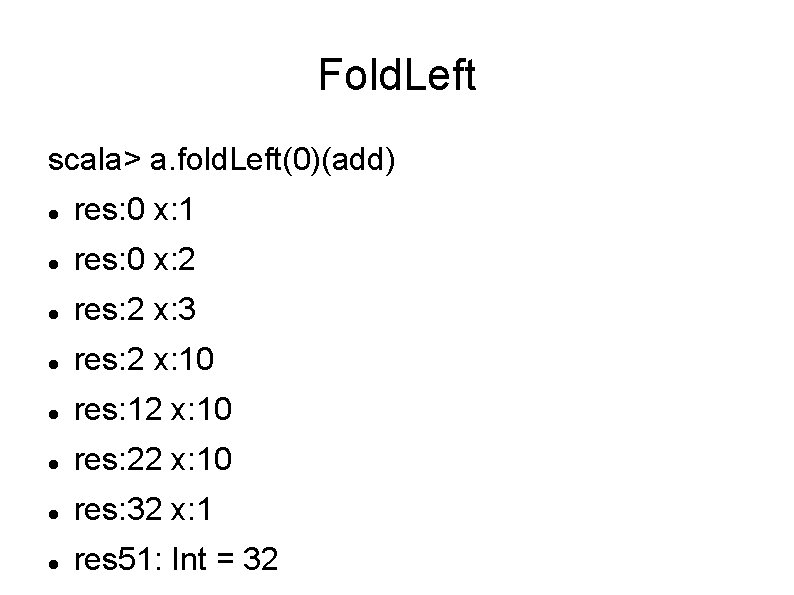

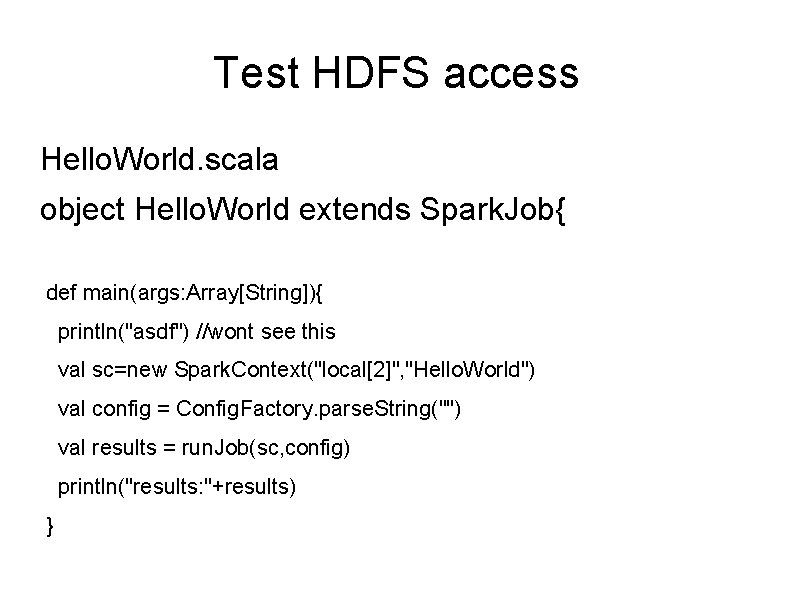

Jobserver Hadoop HelloWorld

4) run jar in Spark

        [dc@localhost spark-jobserver-master]$ curl -d "input.string = a a a a b b" 'localhost:8090/jobs?appName=test&classPath=spark.jobserver.HelloWorld'
        {
          "status": "STARTED",
          "result": {
            "jobId": "ce208815-f445-4a77-866c-0be46fdd5df9",
            "context": "70b92cb1-spark.jobserver.HelloWorld"
          }
        }

![Query JobServer for results](https://slidetodoc.com/presentation_image/cef09676361f29c124f9b98fed7c541c/image-15.jpg)

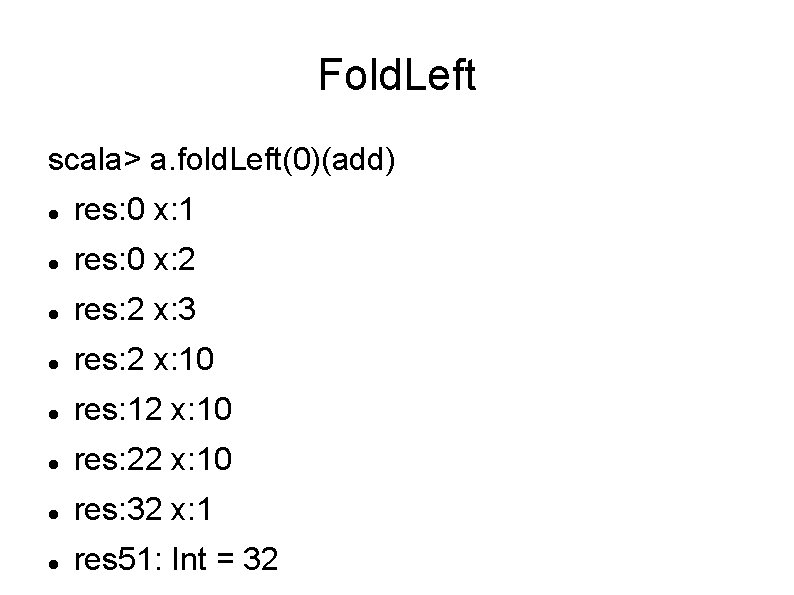

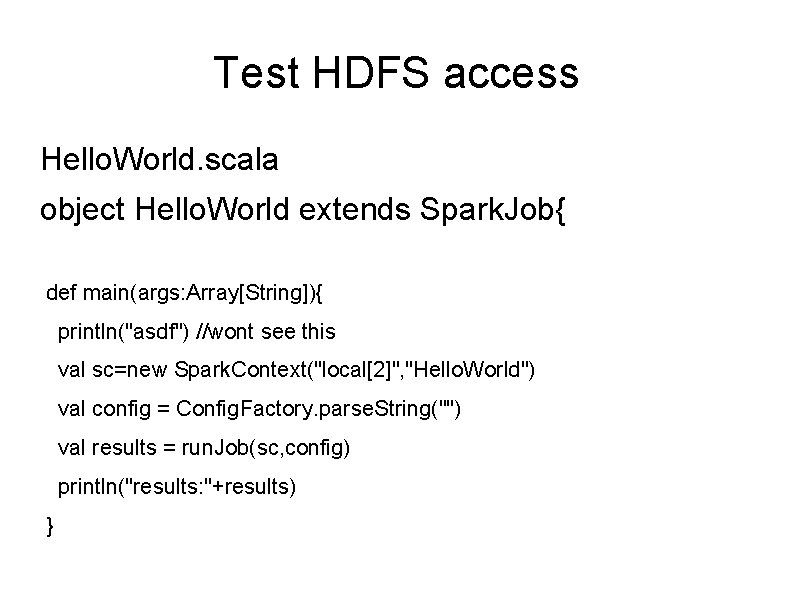

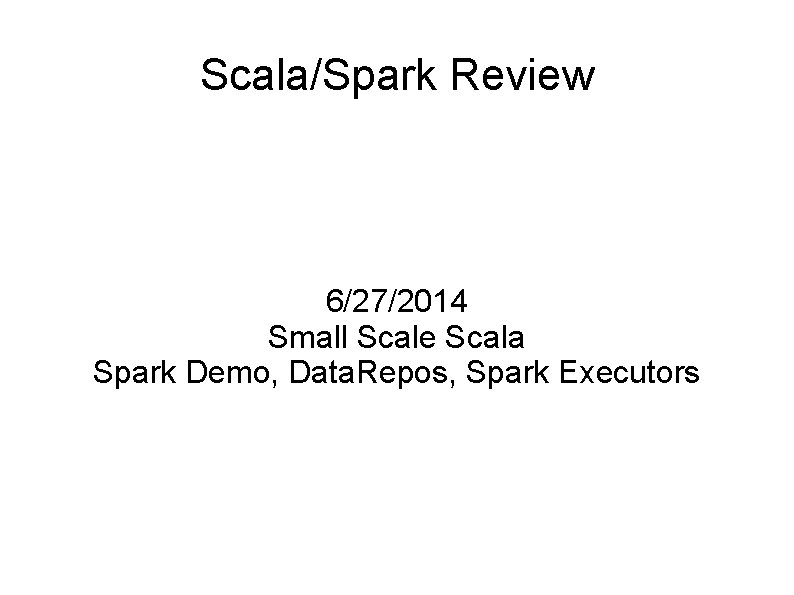

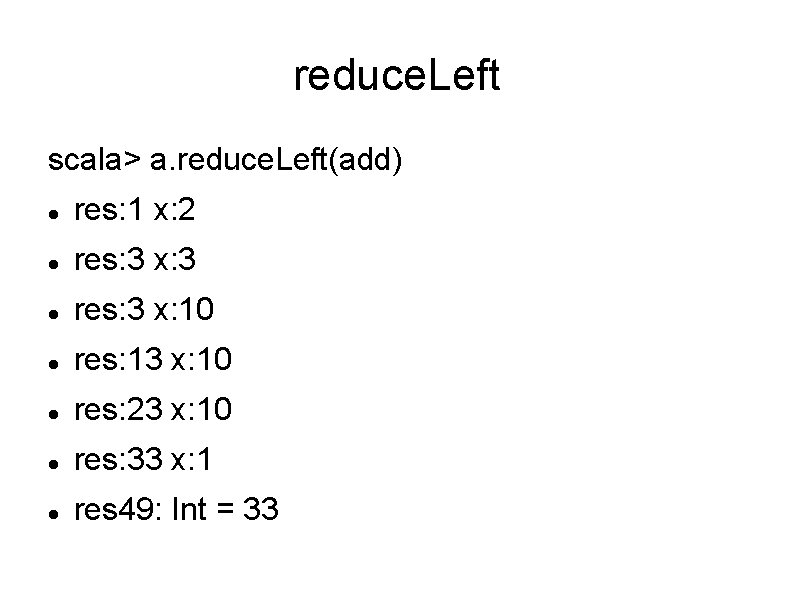

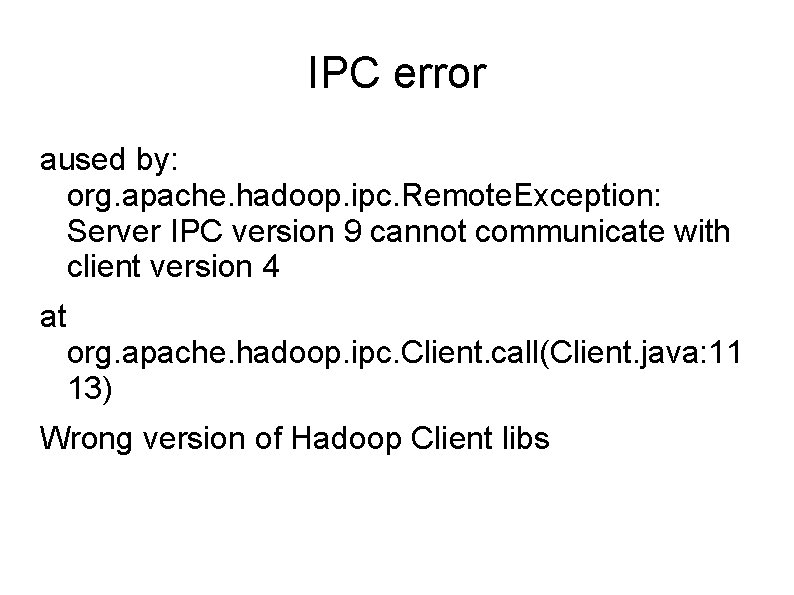

Query JobServer for results

    [dc@localhost spark-jobserver-master]$ curl localhost:8090/jobs/ce208815-f445-4a77-866c-0be46fdd5df9
    {
      "status": "OK",
      "result": 5
    }