Scalable and innovative high-performance HPC solution: BeeGFS

Scalable and innovative high-performance HPC solution: BeeGFS and NetApp E-Series storage
Jean-François Le Fillâtre, Senior Systems Architect, ThinkParQ GmbH
CS3, January 2020
www.beegfs.io

Why Use a Parallel File System?
Better Performance
▪ Simultaneous Read/Writes
▪ Separate Metadata from Data
Scalable
▪ Easy Scale-up and Scale-out
▪ Decouple Storage and Compute
ThinkParQ Confidential

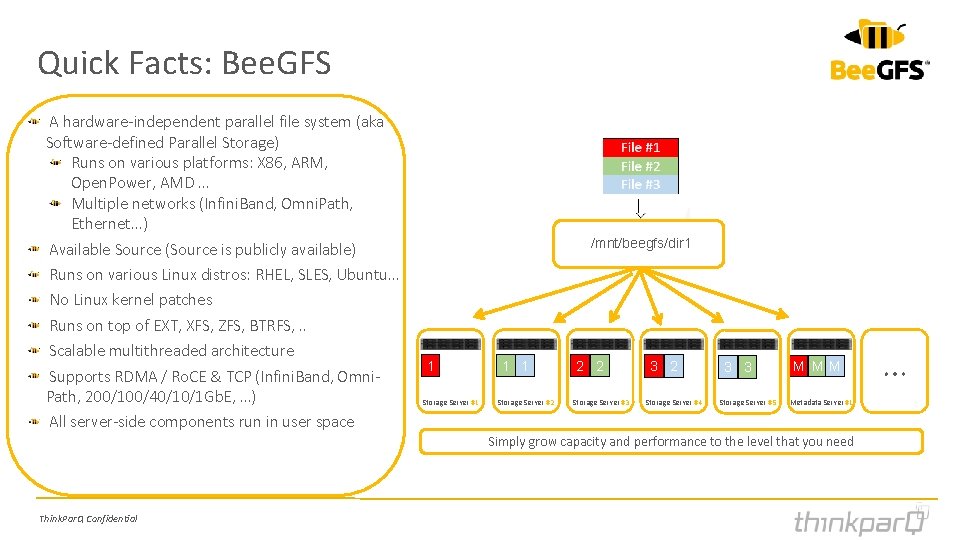

Quick Facts: BeeGFS
• A hardware-independent parallel file system (aka Software-defined Parallel Storage)
• Runs on various platforms: x86, ARM, OpenPower, AMD, …
• Multiple networks (InfiniBand, OmniPath, Ethernet, ...)
• Available Source (source is publicly available)
• Runs on various Linux distros: RHEL, SLES, Ubuntu, …
• No Linux kernel patches
• Runs on top of EXT, XFS, ZFS, BTRFS, ...
• Scalable multithreaded architecture
• Supports RDMA / RoCE & TCP (InfiniBand, OmniPath, 200/100/40/10/1 GbE, …)
• All server-side components run in user space
• Simply grow capacity and performance to the level that you need
[Figure: files 1, 2 and 3 under /mnt/beegfs/dir1 are distributed across Storage Servers #1 to #5; their metadata (M) is held on Metadata Server #1]

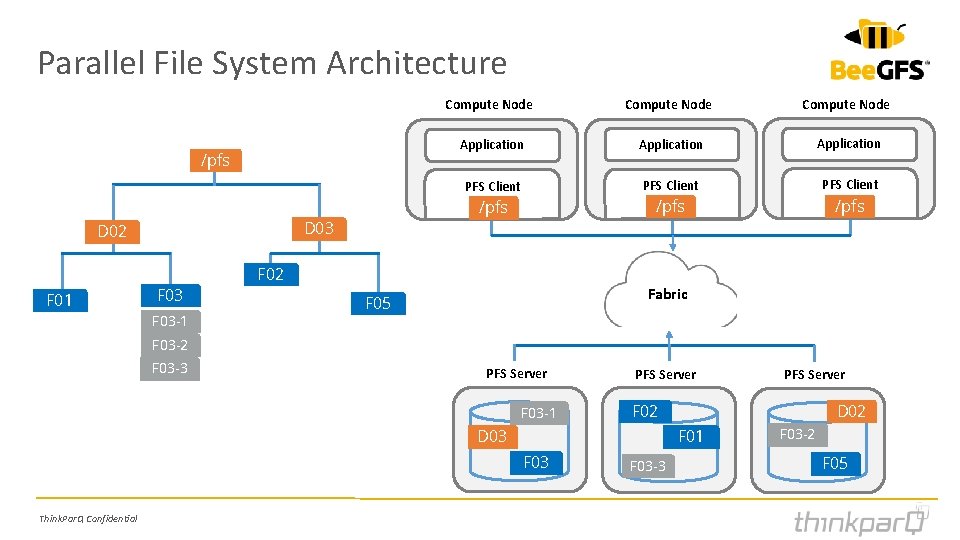

Parallel File System Architecture
[Figure: an application on a compute node accesses the /pfs namespace (directories D02, D03; files F01, F02, F03, F05) through the PFS client. The client connects over the fabric to several PFS servers, and file F03 is split into chunks F03-1, F03-2 and F03-3 held on different servers.]

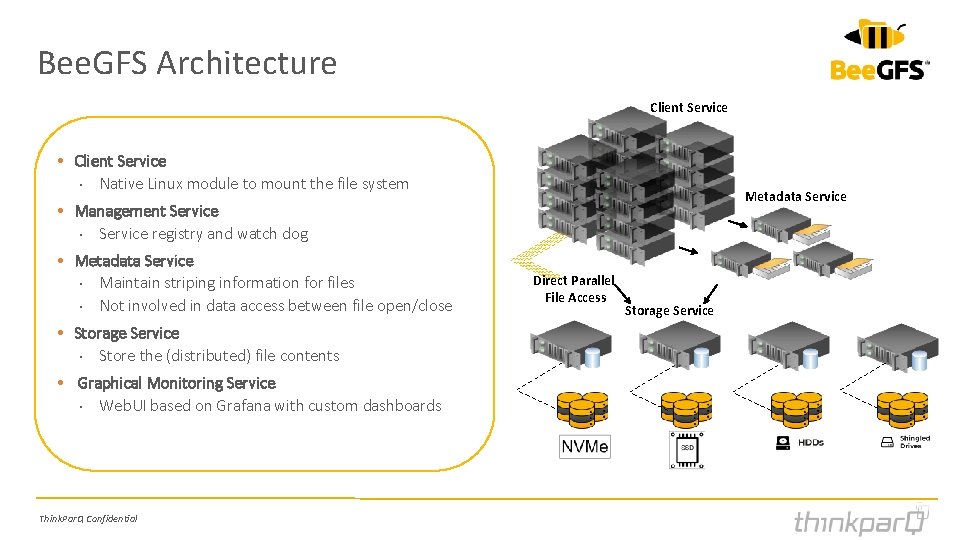
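The chunking of F03 into F03-1, F03-2 and F03-3 can be illustrated with a minimal sketch. It assumes simple round-robin striping with a fixed chunk size, which is a common scheme but is not spelled out on the slide. The names `StripePattern`, `locate` and `split_request` are hypothetical and belong to no real PFS API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StripePattern:
    """Hypothetical stripe description: fixed chunk size, ordered target list."""
    chunk_size: int   # bytes per chunk
    targets: tuple    # storage targets the file is striped over, in order


def locate(pattern, offset):
    """Map a byte offset to (target, chunk index, offset inside that chunk).

    Assumes round-robin placement: chunk i lives on targets[i % len(targets)].
    """
    if offset < 0:
        raise ValueError("offset must be non-negative")
    chunk_index = offset // pattern.chunk_size
    target = pattern.targets[chunk_index % len(pattern.targets)]
    return target, chunk_index, offset % pattern.chunk_size


def split_request(pattern, offset, length):
    """Split one contiguous request into per-chunk pieces.

    Returns a list of (target, file_offset, piece_length) tuples, one per
    chunk touched, so that each piece can be sent to its server in parallel.
    """
    pieces = []
    end = offset + length
    while offset < end:
        target, _, within = locate(pattern, offset)
        piece = min(pattern.chunk_size - within, end - offset)
        pieces.append((target, offset, piece))
        offset += piece
    return pieces
```

With a 4-byte chunk size and three targets, an 8-byte request starting at offset 2 touches all three servers, which is where the simultaneous read/write benefit comes from.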

BeeGFS Architecture
• Client Service
  • Native Linux module to mount the file system
• Management Service
  • Service registry and watchdog
• Metadata Service
  • Maintain striping information for files
  • Not involved in data access between file open/close
• Storage Service
  • Store the (distributed) file contents
• Graphical Monitoring Service
  • WebUI based on Grafana with custom dashboards
[Figure: Client Service, Metadata Service and Storage Service, with direct parallel file access between clients and storage]

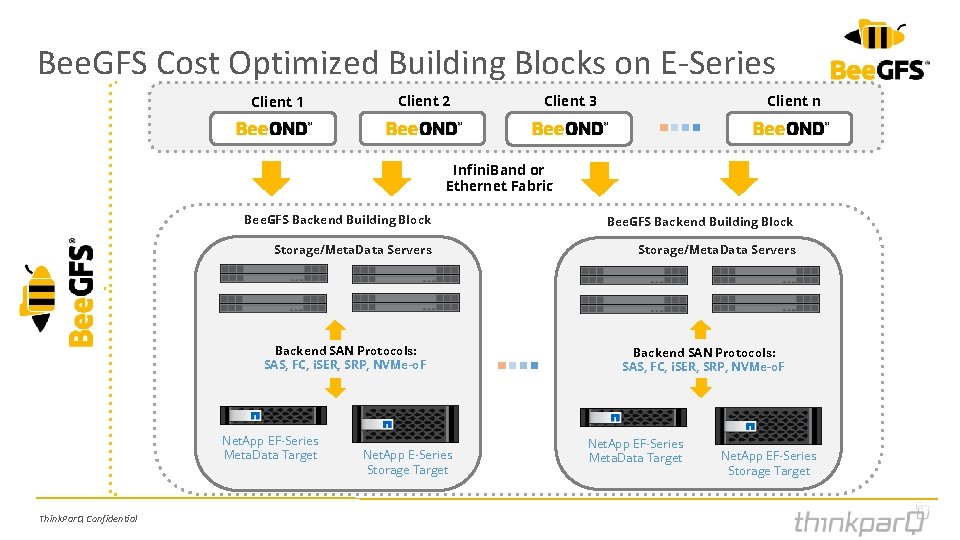
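The division of labour between the metadata and storage services can be sketched as a toy in-memory model. This is not BeeGFS code or its API: the classes `MetadataService`, `StorageService` and `Client`, and the round-robin placement, are assumptions made for illustration. The point it shows is that the metadata service is contacted once at open, and all subsequent chunk traffic goes directly to the storage services.

```python
class MetadataService:
    """Toy metadata service: keeps the striping information per file."""

    def __init__(self):
        self.patterns = {}   # path -> (chunk_size, [target ids])
        self.lookups = 0     # how often clients asked for striping info

    def create(self, path, chunk_size, targets):
        self.patterns[path] = (chunk_size, list(targets))

    def open(self, path):
        self.lookups += 1
        return self.patterns[path]


class StorageService:
    """Toy storage service: stores file chunks, knows nothing about layout."""

    def __init__(self):
        self.chunks = {}     # (path, chunk index) -> bytes

    def write_chunk(self, path, index, data):
        self.chunks[(path, index)] = data

    def read_chunk(self, path, index):
        return self.chunks.get((path, index), b"")


class Client:
    """Toy client: one metadata lookup at open, then direct storage access."""

    def __init__(self, metadata, storage):
        self.metadata = metadata
        self.storage = storage   # dict: target id -> StorageService

    def open(self, path):
        chunk_size, targets = self.metadata.open(path)
        return {"path": path, "chunk_size": chunk_size, "targets": targets}

    def _target(self, handle, index):
        # Assumed round-robin placement of chunks over the targets.
        targets = handle["targets"]
        return self.storage[targets[index % len(targets)]]

    def write(self, handle, data):
        size = handle["chunk_size"]
        n_chunks = (len(data) + size - 1) // size
        for index in range(n_chunks):
            piece = data[index * size:(index + 1) * size]
            self._target(handle, index).write_chunk(handle["path"], index, piece)

    def read(self, handle, n_chunks):
        return b"".join(
            self._target(handle, index).read_chunk(handle["path"], index)
            for index in range(n_chunks)
        )
```

After one `open`, any number of `write` and `read` calls leave `MetadataService.lookups` at 1, mirroring the slide's "not involved in data access between file open/close".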

BeeGFS Cost Optimized Building Blocks on E-Series
[Figure: Clients 1 to n connect over an InfiniBand or Ethernet fabric to BeeGFS backend building blocks. Each building block consists of storage/metadata servers attached through backend SAN protocols (SAS, FC, iSER, SRP, NVMe-oF) to a NetApp EF-Series metadata target and a NetApp E-Series or EF-Series storage target.]

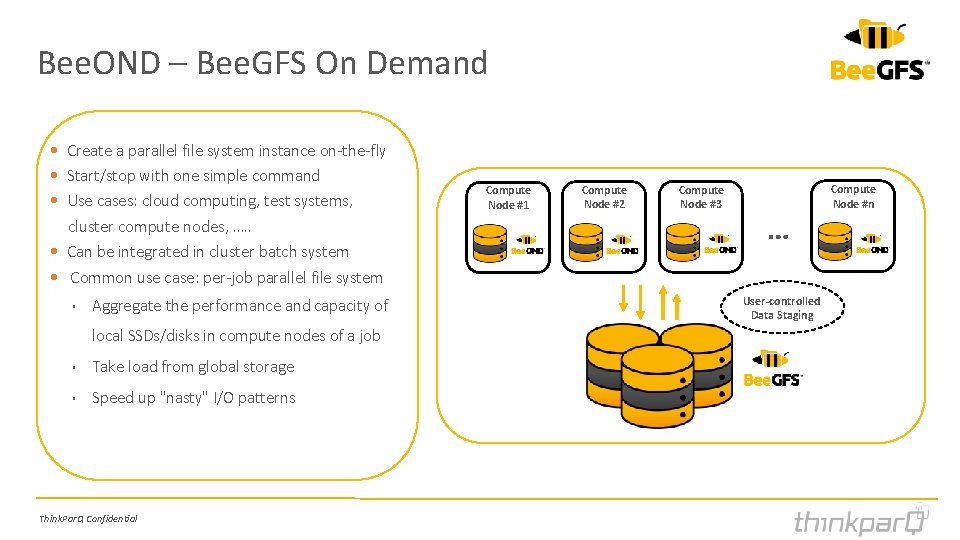

BeeOND: BeeGFS On Demand
• Create a parallel file system instance on-the-fly
• Start/stop with one simple command
• Use cases: cloud computing, test systems, cluster compute nodes, …
• Can be integrated in cluster batch system
• Common use case: per-job parallel file system
  • Aggregate the performance and capacity of local SSDs/disks in compute nodes of a job
  • Take load from global storage
  • Speed up "nasty" I/O patterns
[Figure: Compute Nodes #1 to #n, with user-controlled data staging]
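A batch-system integration could look roughly like the following sketch, which only builds the command lines a job prolog and epilog would run. The `beeond start` and `beeond stop` flags shown (`-n` node file, `-d` data directory, `-c` client mount point, `-L` and `-d` for cleanup on stop) follow the BeeOND documentation as recalled here and should be checked against the help output of the installed version. The helper names and all paths are hypothetical.

```python
def beeond_start_cmd(nodefile, data_dir, mount_point):
    """Command a job prolog might run to create the per-job file system.

    nodefile:    file listing the compute nodes of the job (one per line)
    data_dir:    local SSD/disk directory on each node used for storage
    mount_point: where the on-demand file system is mounted on each node
    """
    return ["beeond", "start", "-n", nodefile, "-d", data_dir, "-c", mount_point]


def beeond_stop_cmd(nodefile, cleanup=True):
    """Command a job epilog might run to tear the instance down.

    With cleanup=True the log files (-L) and stored data (-d) are deleted
    as well (flag meanings assumed from the BeeOND documentation).
    """
    cmd = ["beeond", "stop", "-n", nodefile]
    if cleanup:
        cmd += ["-L", "-d"]
    return cmd
```

A prolog would pass the result to something like `subprocess.run(cmd, check=True)`, for example `beeond_start_cmd("/tmp/job.nodes", "/data/beeond", "/mnt/beeond")`. Data staging between global storage and the per-job instance remains under user control, as the slide notes.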

HPC SUCCESS STORY: Simula Research Lab in Norway
• High-performance CPUs: AMD Epyc 7601, Cavium ThunderX2 CN9980 and Intel Scalable Platinum 8176M
• GPU nodes: DGX-2 system with 16 NVIDIA V100 processors internally connected via a 300 GB/s NVLink fabric
• Fabric: Mellanox HDR InfiniBand capable of 200 Gb/s
• Parallel file system: BeeGFS
• Parallel file system storage: 500 TB of enterprise-level hybrid storage (E5760) from NetApp
• Parallel file system scratch space: 85 TB NVMe/SSD scratch space at the node level, globally shared using BeeGFS's BeeOND feature
More information at: https://www.simula.no/news/simula-signs-contract-nextron-delivery-first-procurement-ex3-infrastructure

