SC 19 Denver Swift Machine Learning Model Serving

- Slides: 34

SC 19 Denver. Swift Machine Learning Model Serving Scheduling: A Region Based Reinforcement Learning Approach

Heyang Qin¹, Syed Zawad¹, Yanqi Zhou², Lei Yang¹, Dongfang Zhao¹, Feng Yan¹

¹ University of Nevada, Reno; ² Google Brain

Resource Acknowledgement

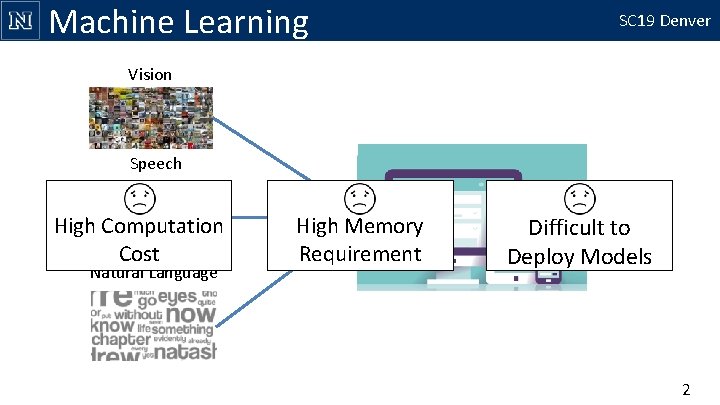

Machine Learning. Application areas: vision, speech, natural language. Challenges: high computation cost, high memory requirement, models that are difficult to deploy.

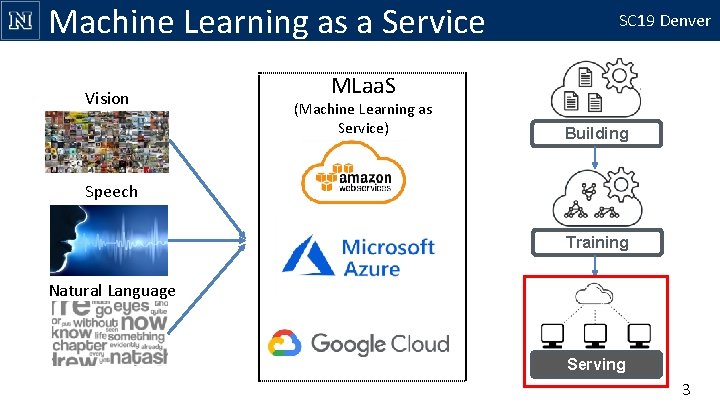

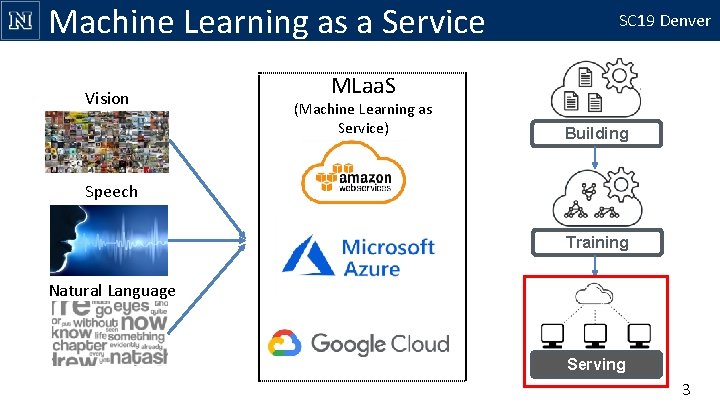

Machine Learning as a Service. MLaaS (Machine Learning as a Service) covers building, training, and serving models for vision, speech, and natural language.

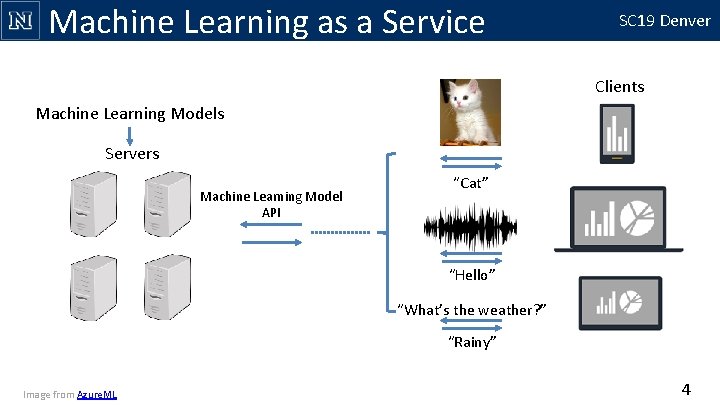

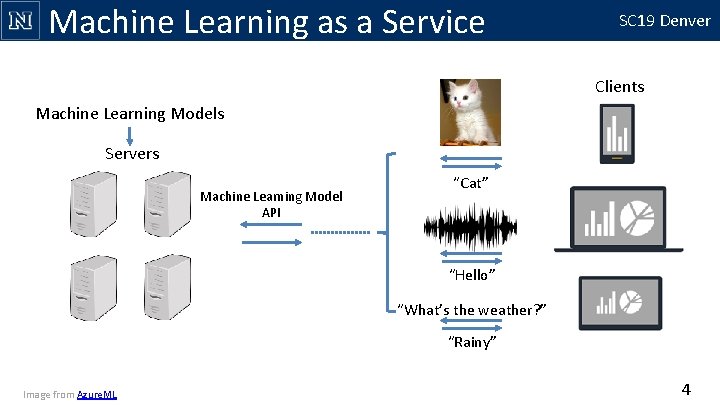

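Machine Learning as a Service. Clients send requests through a machine learning model API to servers that host the machine learning models, and receive predictions in return: an image is answered with “Cat”, speech with “Hello”, and “What’s the weather?” with “Rainy”. Image from AzureML.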

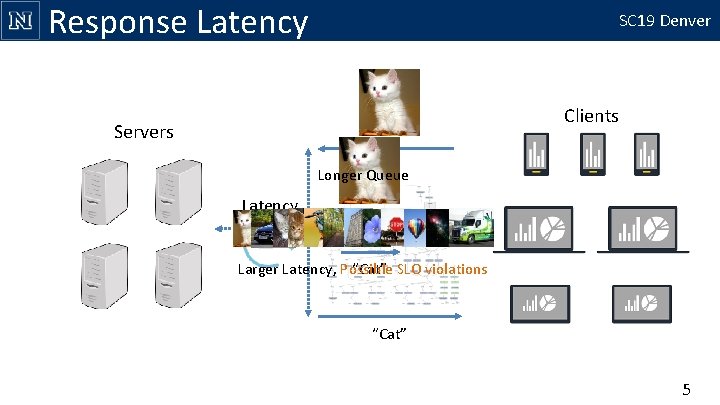

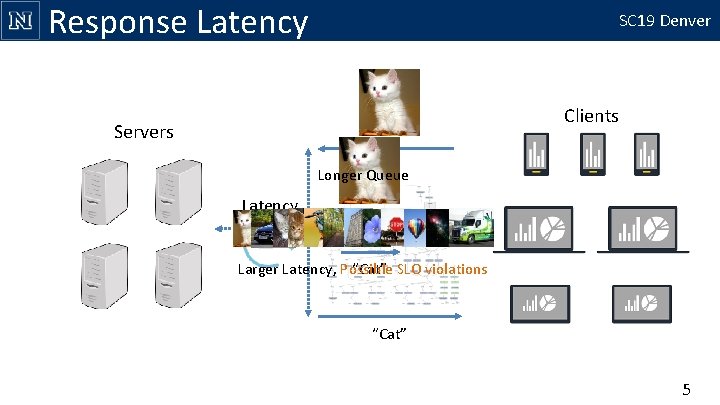

Response Latency. As more clients send requests, the queue at the servers grows longer. The result is larger latency for each response (such as “Cat”) and possible SLO violations.

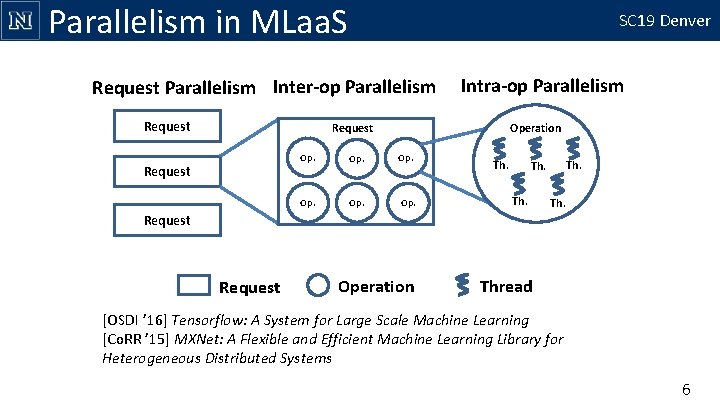

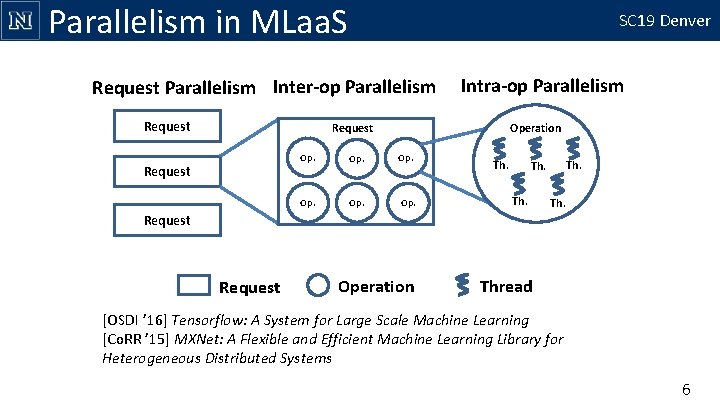

Parallelism in MLaaS. Three levels: request parallelism (across requests), inter-op parallelism (across operations within a request), and intra-op parallelism (across threads within an operation).

- [OSDI ’16] TensorFlow: A System for Large-Scale Machine Learning
- [CoRR ’15] MXNet: A Flexible and Efficient Machine Learning Library for Heterogeneous Distributed Systems

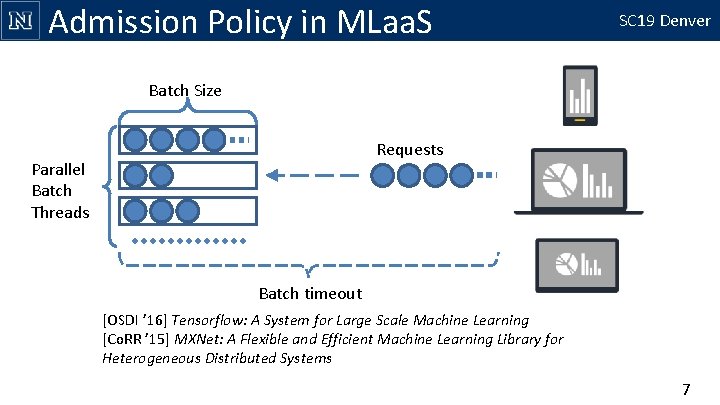

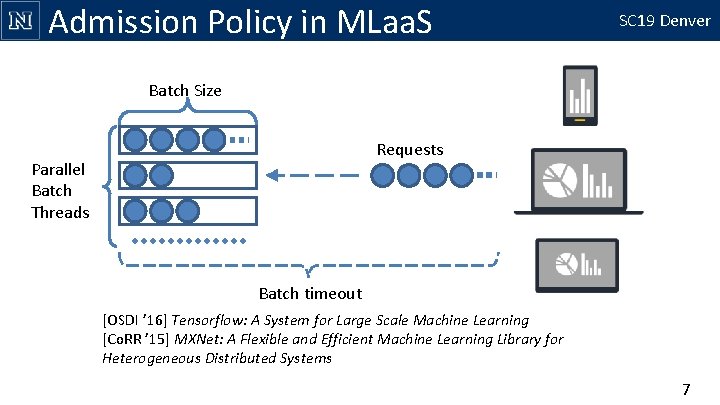

Admission Policy in MLaaS. Requests are grouped into batches; the control knobs are batch size, parallel batch threads, and batch timeout.

- [OSDI ’16] TensorFlow: A System for Large-Scale Machine Learning
- [CoRR ’15] MXNet: A Flexible and Efficient Machine Learning Library for Heterogeneous Distributed Systems

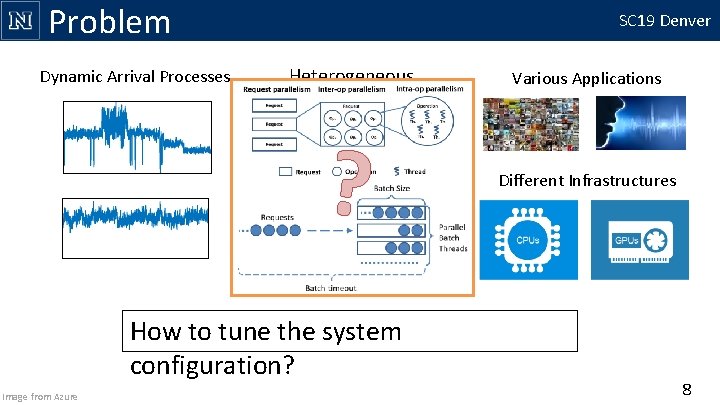

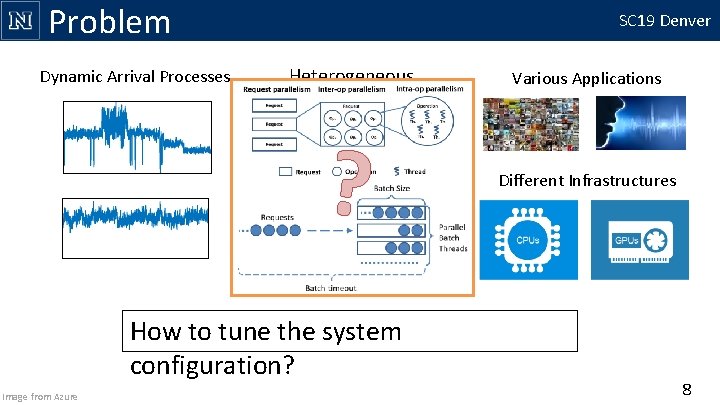
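
The interaction between batch size and batch timeout can be sketched in a few lines. This is a minimal illustration, not TensorFlow Serving's batching code: the function name `form_batches` and its parameters are hypothetical, and it assumes a batch is dispatched either when it is full or when the timeout has elapsed since its first request arrived.

```python
from typing import List


def form_batches(arrivals: List[float], max_batch_size: int,
                 batch_timeout: float) -> List[List[float]]:
    """Group request arrival times (seconds) into batches.

    Assumption: a batch closes when it reaches max_batch_size, or when a new
    request arrives more than batch_timeout after the batch's first request.
    """
    batches: List[List[float]] = []
    current: List[float] = []
    for t in sorted(arrivals):
        # Timeout: the open batch has waited too long, dispatch it first.
        if current and t - current[0] > batch_timeout:
            batches.append(current)
            current = []
        current.append(t)
        # Size limit: dispatch as soon as the batch is full.
        if len(current) == max_batch_size:
            batches.append(current)
            current = []
    if current:
        batches.append(current)
    return batches


if __name__ == "__main__":
    print(form_batches([0.0, 0.01, 0.02, 0.5, 0.51], max_batch_size=2,
                       batch_timeout=0.1))
```

A larger batch size improves throughput but makes early requests wait; a shorter timeout bounds that wait at the cost of smaller batches. This trade-off is why the knobs have to be tuned together.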

Problem. Given dynamic arrival processes and heterogeneous environments (various applications, different infrastructures), how to tune the system configuration? Image from Azure.

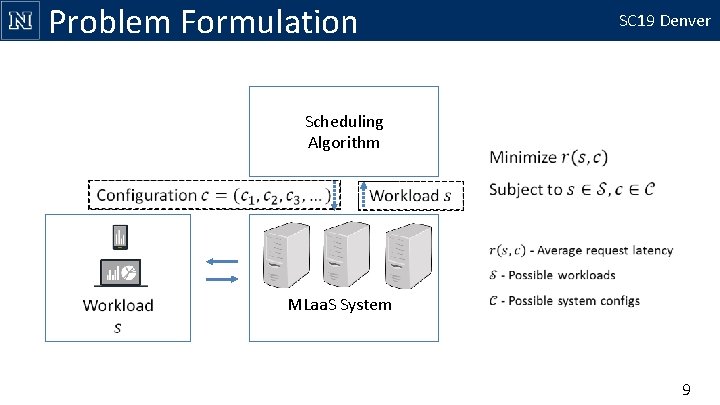

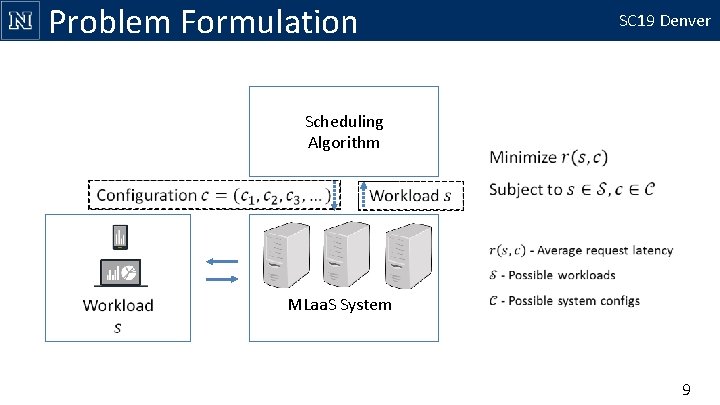

Problem Formulation. Components: scheduling algorithm, MLaaS system.

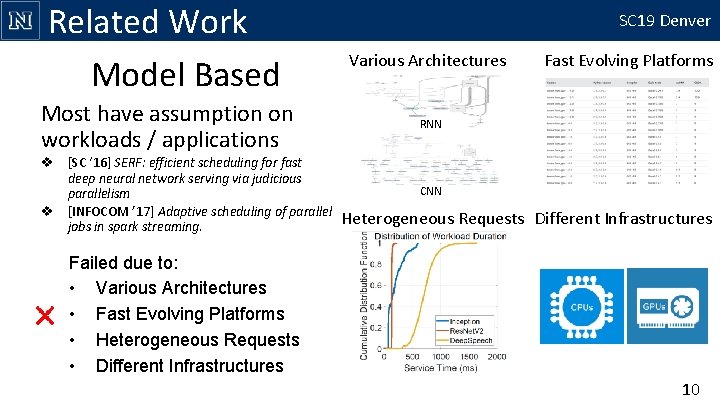

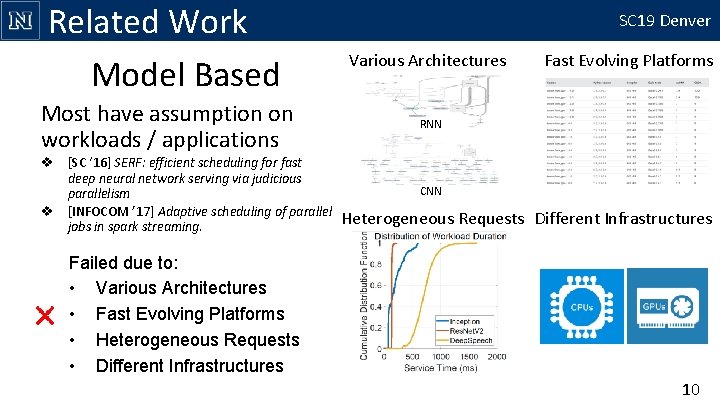

Related Work: Model Based. Most make assumptions about workloads or applications.

- [SC ’16] SERF: efficient scheduling for fast deep neural network serving via judicious parallelism.
- [INFOCOM ’17] Adaptive scheduling of parallel jobs in Spark Streaming.

Failed due to:

- Various architectures (e.g. RNN, CNN)
- Fast evolving platforms
- Heterogeneous requests
- Different infrastructures

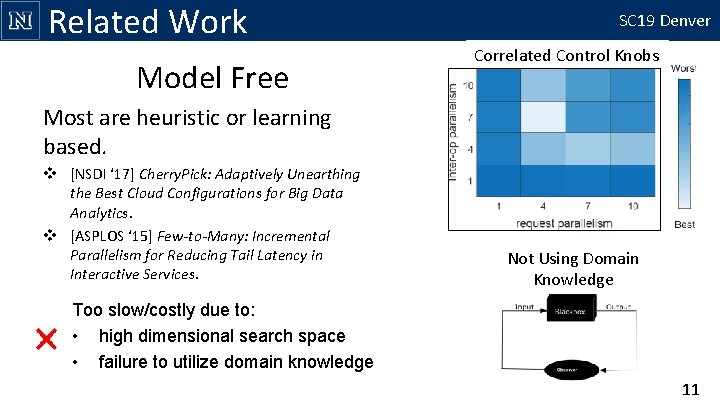

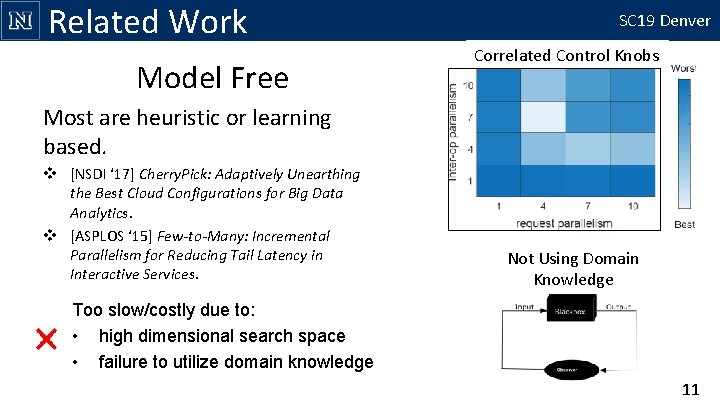

Related Work: Model Free. Most are heuristic or learning based.

- [NSDI ’17] CherryPick: Adaptively Unearthing the Best Cloud Configurations for Big Data Analytics.
- [ASPLOS ’15] Few-to-Many: Incremental Parallelism for Reducing Tail Latency in Interactive Services.

Too slow/costly due to:

- High dimensional search space (correlated control knobs)
- Failure to utilize domain knowledge

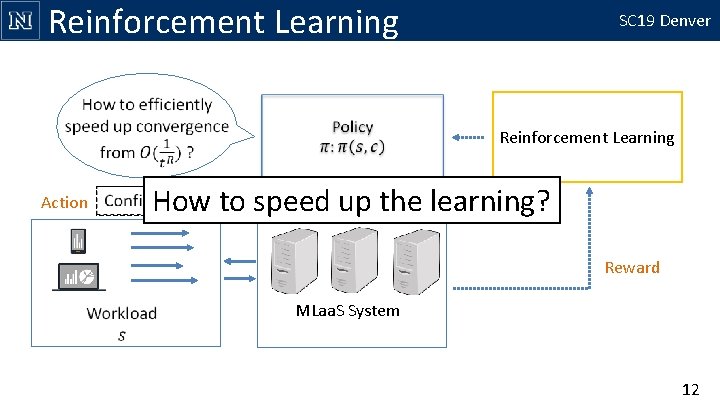

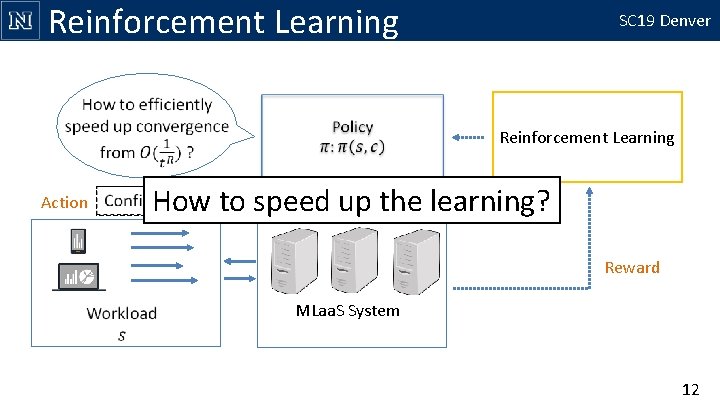

Reinforcement Learning. The reinforcement learning agent applies an action to the MLaaS system and receives a state and a reward. How to speed up the learning?

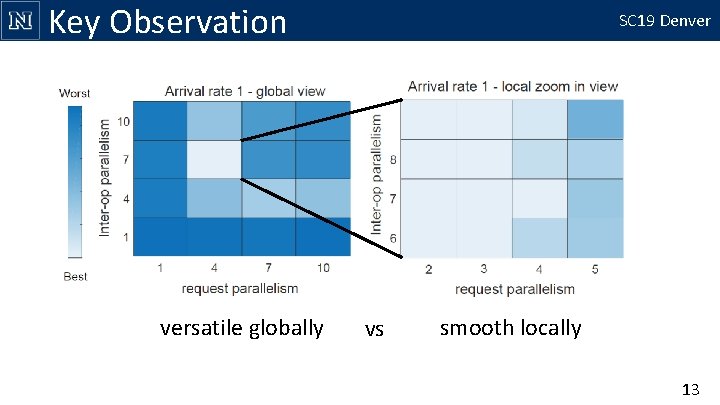

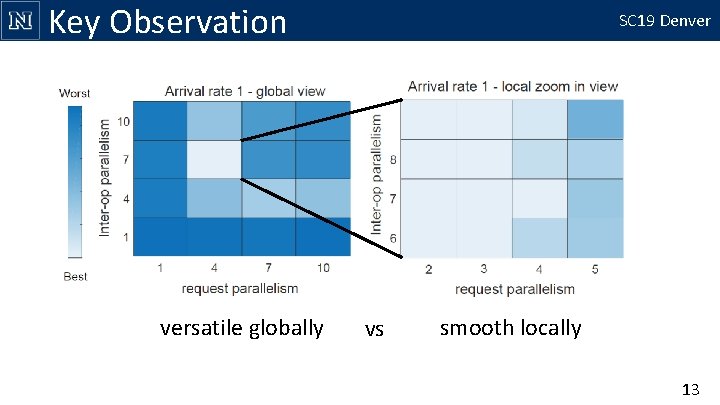

Key Observation. Versatile globally vs. smooth locally.

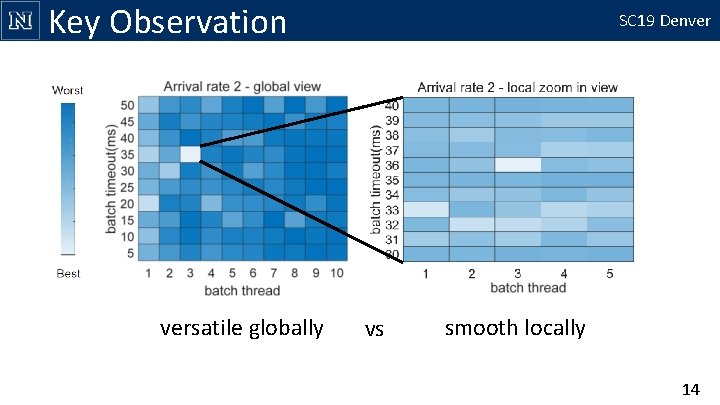

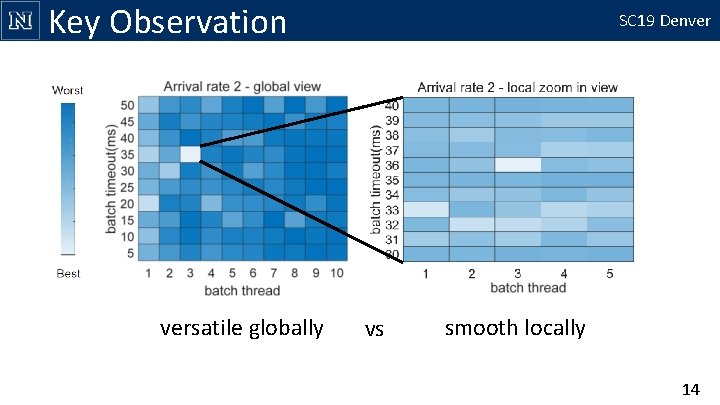

Key Observation (continued). Versatile globally vs. smooth locally.

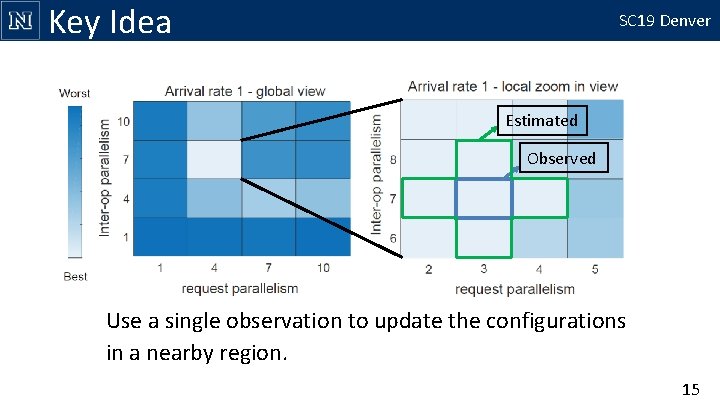

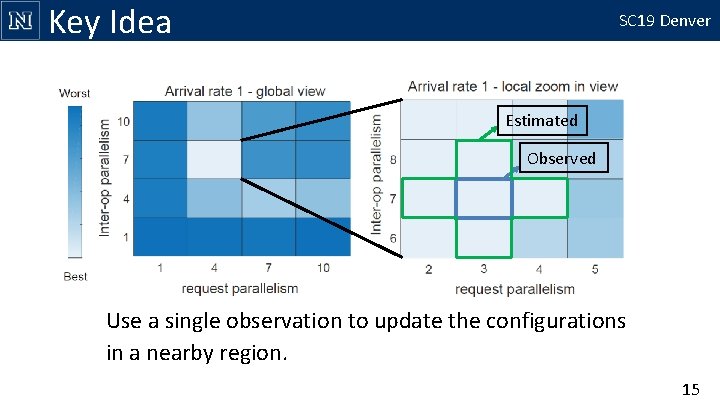

Key Idea. Use a single observation to update the configurations in a nearby region. Figure labels: Estimated, Observed.

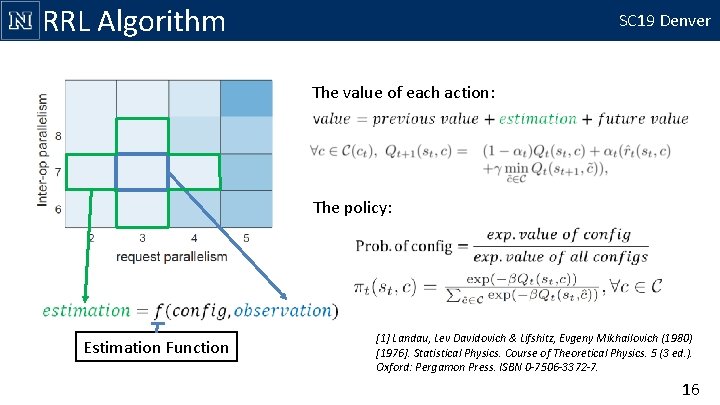

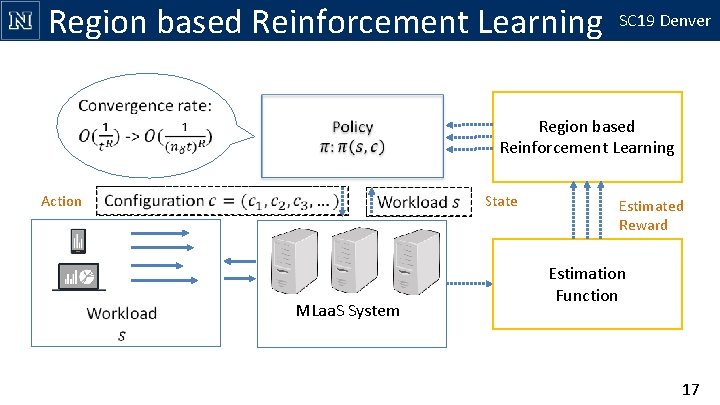

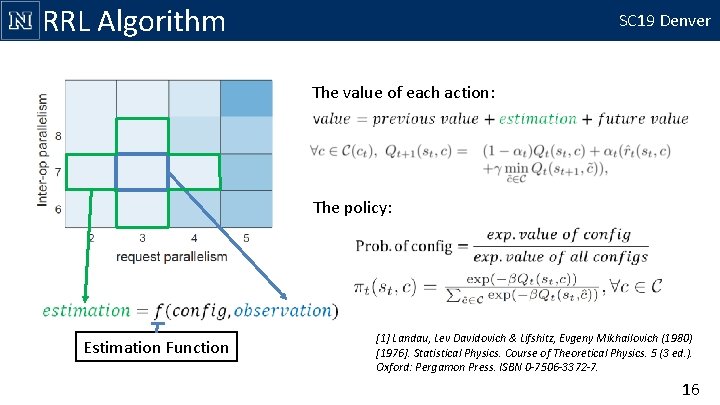
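
The key idea can be illustrated with a small value table over a grid of configurations. This is only a sketch under stated assumptions: the two-dimensional configuration `(inter_op_threads, intra_op_threads)`, the Chebyshev-distance region, and the distance-damped step size are all hypothetical choices, and the observed reward is reused directly for neighbours instead of going through the talk's estimation function.

```python
from typing import Dict, Tuple

# Hypothetical configuration: (inter_op_threads, intra_op_threads).
Config = Tuple[int, int]


def region_update(values: Dict[Config, float], observed: Config,
                  reward: float, radius: int = 1, lr: float = 0.5) -> None:
    """Update the observed configuration and every configuration near it.

    Assumption: performance is smooth locally, so the reward observed at one
    configuration is also informative about its neighbours. Neighbours take a
    smaller step the farther they are from the observation.
    """
    ox, oy = observed
    for cfg in values:
        dist = max(abs(cfg[0] - ox), abs(cfg[1] - oy))  # Chebyshev distance
        if dist <= radius:
            step = lr / (1 + dist)
            values[cfg] += step * (reward - values[cfg])


if __name__ == "__main__":
    grid = {(x, y): 0.0 for x in range(1, 5) for y in range(1, 5)}
    region_update(grid, observed=(2, 2), reward=1.0)
    print(grid[(2, 2)], grid[(3, 3)], grid[(4, 4)])
```

A conventional tabular update would touch only `(2, 2)`; here one observation moves nine entries, which is the source of the speedup the talk argues for.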

RRL Algorithm. The slide defines the value of each action, the policy, and the estimation function.

[1] Landau, Lev Davidovich & Lifshitz, Evgeny Mikhailovich (1980) [1976]. Statistical Physics. Course of Theoretical Physics. 5 (3rd ed.). Oxford: Pergamon Press. ISBN 0-7506-3372-7.

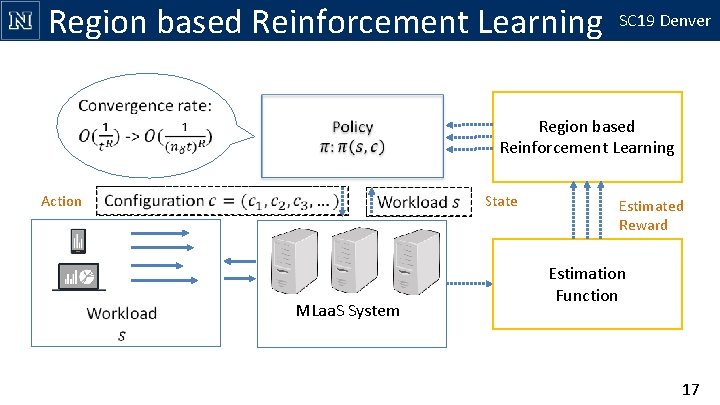
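
The text of this slide does not include the formulas themselves. The reference to a statistical physics textbook suggests a Boltzmann-style (softmax) policy over action values, but that reading is an assumption. Under that assumption, a policy could be sketched as follows; `boltzmann_policy` and `temperature` are illustrative names, not taken from the talk.

```python
import math
from typing import Dict, Hashable


def boltzmann_policy(values: Dict[Hashable, float],
                     temperature: float = 1.0) -> Dict[Hashable, float]:
    """Turn action values into selection probabilities.

    Assumed form: P(a) is proportional to exp(value(a) / temperature).
    The maximum value is subtracted first for numerical stability; this does
    not change the resulting probabilities.
    """
    top = max(values.values())
    weights = {a: math.exp((v - top) / temperature) for a, v in values.items()}
    total = sum(weights.values())
    return {a: w / total for a, w in weights.items()}


if __name__ == "__main__":
    print(boltzmann_policy({"batch=8": 1.0, "batch=16": 2.0, "batch=32": 0.5}))
```

A high temperature makes the choice nearly uniform (more exploration); a low temperature concentrates probability on the best-valued action.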

Region based Reinforcement Learning. The agent exchanges action, state, and reward with the MLaaS system as before; in addition, an estimation function supplies estimated rewards.

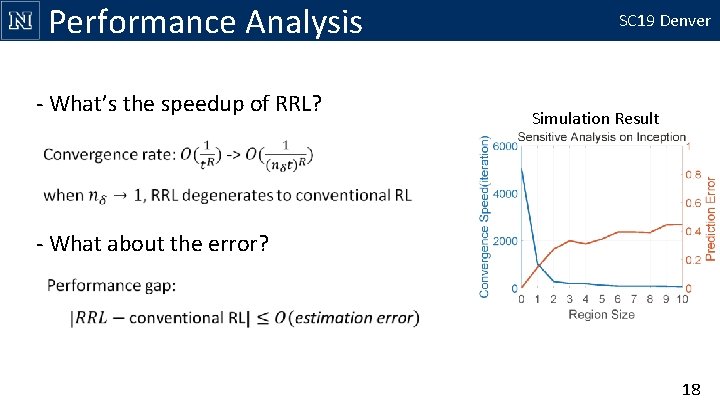

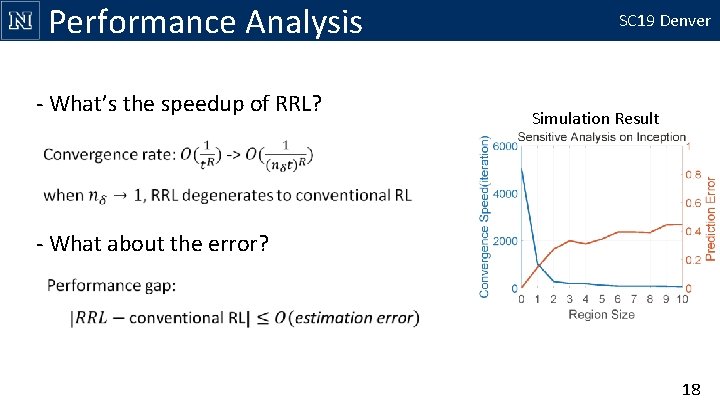

Performance Analysis (simulation result). What’s the speedup of RRL? What about the error?

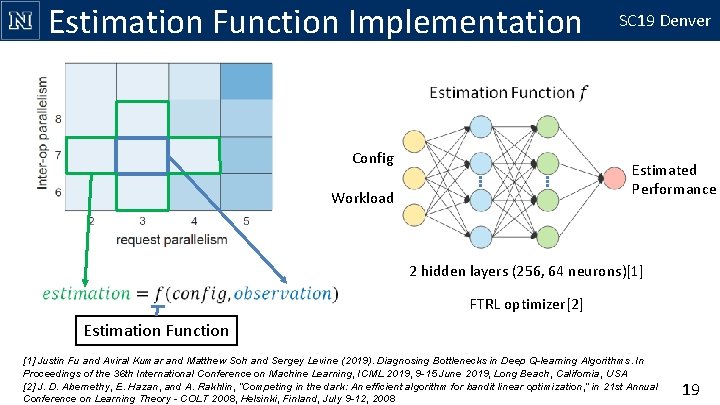

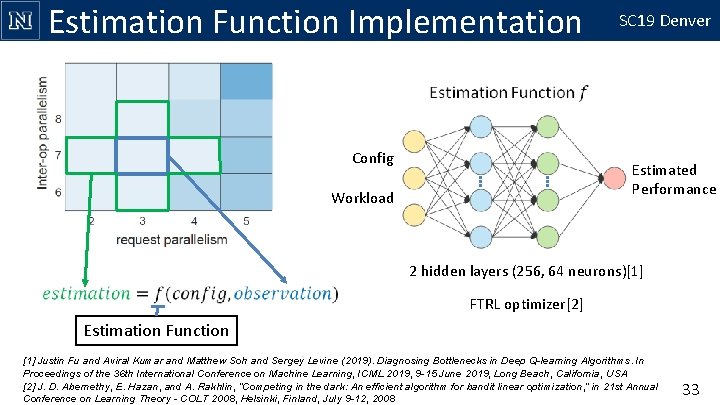

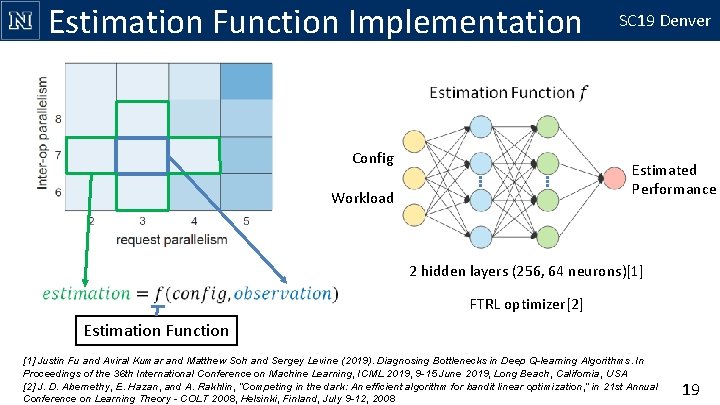

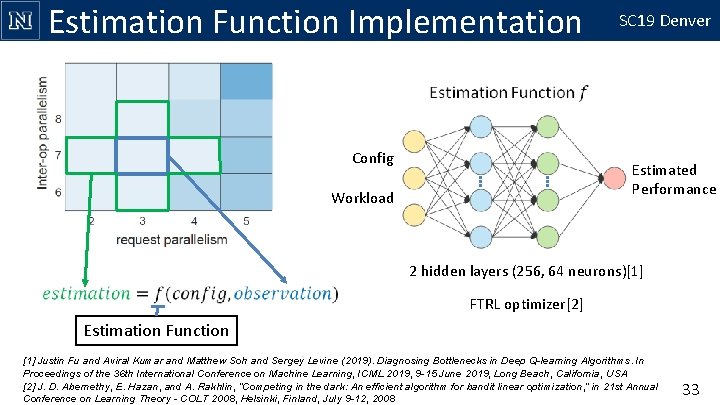

Estimation Function Implementation. Inputs: config and workload. Output: estimated performance. The estimation function is a neural network with 2 hidden layers (256, 64 neurons) [1], trained with the FTRL optimizer [2].

[1] Justin Fu, Aviral Kumar, Matthew Soh, and Sergey Levine (2019). Diagnosing Bottlenecks in Deep Q-learning Algorithms. In Proceedings of the 36th International Conference on Machine Learning, ICML 2019, 9-15 June 2019, Long Beach, California, USA.

[2] J. D. Abernethy, E. Hazan, and A. Rakhlin, “Competing in the dark: An efficient algorithm for bandit linear optimization,” in 21st Annual Conference on Learning Theory, COLT 2008, Helsinki, Finland, July 9-12, 2008.

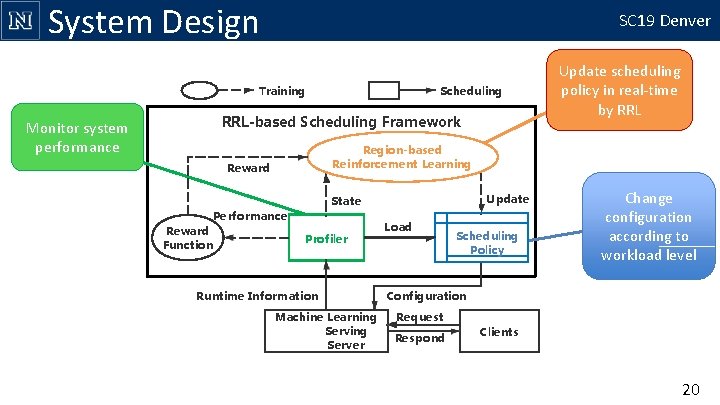

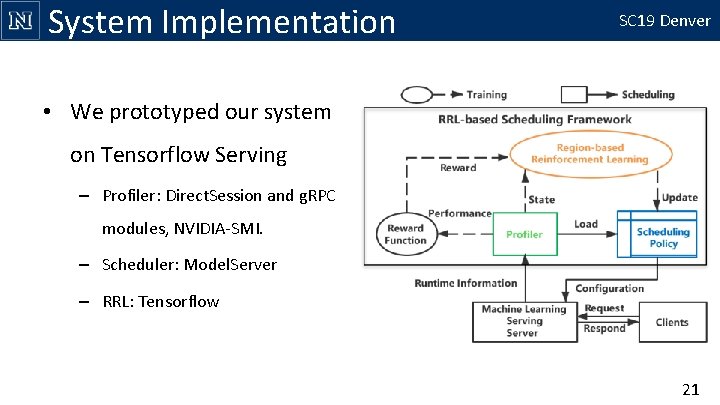

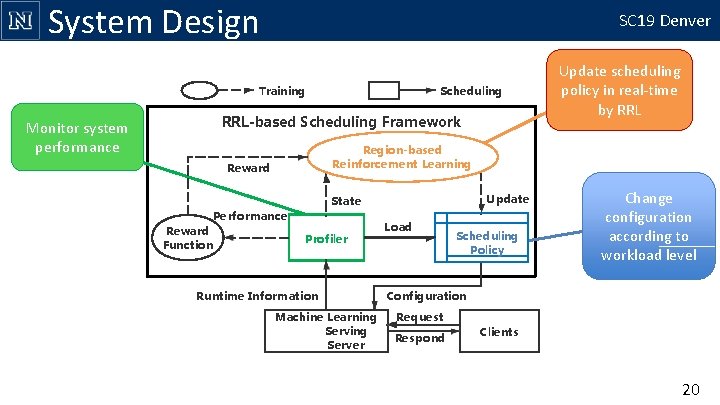

System Design: RRL-based Scheduling Framework, with a training path and a scheduling path.

- Profiler: monitors system performance by collecting runtime information and load from the machine learning server.
- Region-based Reinforcement Learning: updates the scheduling policy in real time by RRL, using the state from the profiler and the reward computed from performance by the reward function.
- Scheduling Policy: changes the configuration according to workload level.

Clients send requests to the machine learning server and receive responses.

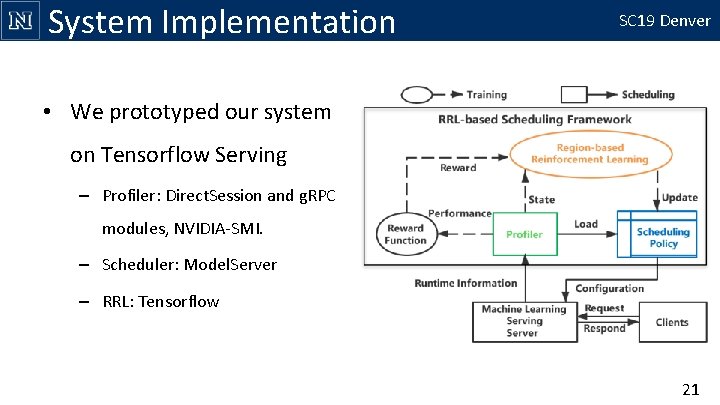

System Implementation. We prototyped our system on TensorFlow Serving.

- Profiler: DirectSession and gRPC modules, NVIDIA-SMI.
- Scheduler: ModelServer.
- RRL: TensorFlow.

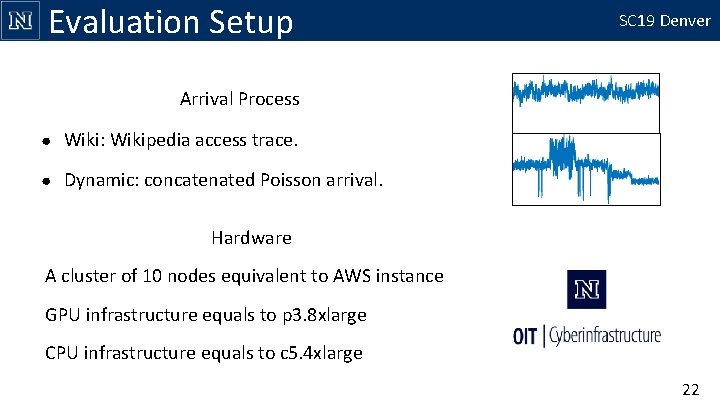

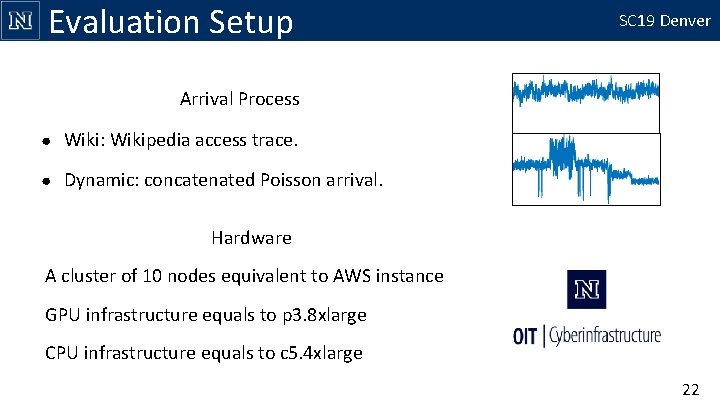

Evaluation Setup.

Arrival process:

- Wiki: Wikipedia access trace.
- Dynamic: concatenated Poisson arrival.

Hardware: a cluster of 10 nodes equivalent to AWS instances. The GPU infrastructure is equivalent to p3.8xlarge; the CPU infrastructure is equivalent to c5.4xlarge.

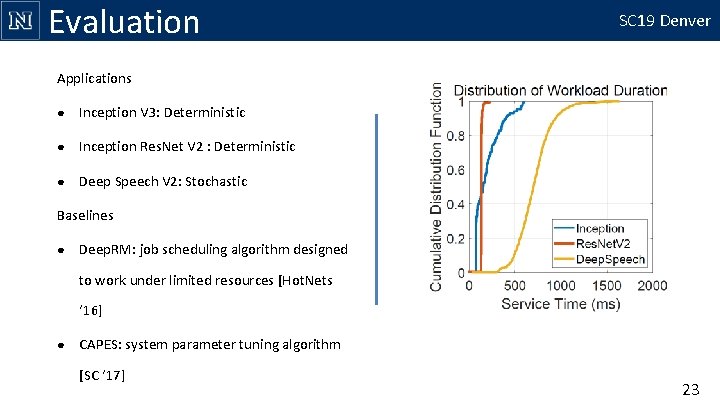

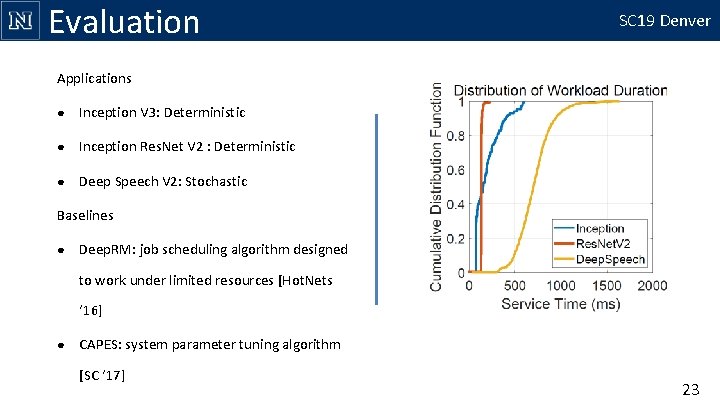
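
A "concatenated Poisson arrival" workload can be read as several Poisson segments with different rates played back to back. The sketch below generates such a trace; the segment rates and durations are made-up illustration values, and `concatenated_poisson` is a hypothetical helper, not the generator used in the evaluation.

```python
import random
from typing import List, Tuple


def concatenated_poisson(segments: List[Tuple[float, float]],
                         seed: int = 0) -> List[float]:
    """Generate arrival times from back-to-back Poisson segments.

    segments: list of (rate_per_second, duration_seconds).
    Inter-arrival times inside a segment are exponential with the segment's
    rate. Restarting the clock at each boundary is valid because the
    exponential distribution is memoryless.
    """
    rng = random.Random(seed)
    arrivals: List[float] = []
    start = 0.0
    for rate, duration in segments:
        t = start + rng.expovariate(rate)
        while t < start + duration:
            arrivals.append(t)
            t += rng.expovariate(rate)
        start += duration
    return arrivals


if __name__ == "__main__":
    trace = concatenated_poisson([(5.0, 10.0), (50.0, 10.0), (5.0, 10.0)])
    print(len(trace), "requests over 30 seconds")
```

Abrupt rate changes at the segment boundaries are what make this workload "dynamic": a configuration tuned for 5 requests per second is suddenly facing 50.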

Evaluation.

Applications:

- Inception V3: deterministic
- Inception ResNet V2: deterministic
- Deep Speech V2: stochastic

Baselines:

- DeepRM: job scheduling algorithm designed to work under limited resources [HotNets ’16]
- CAPES: system parameter tuning algorithm [SC ’17]

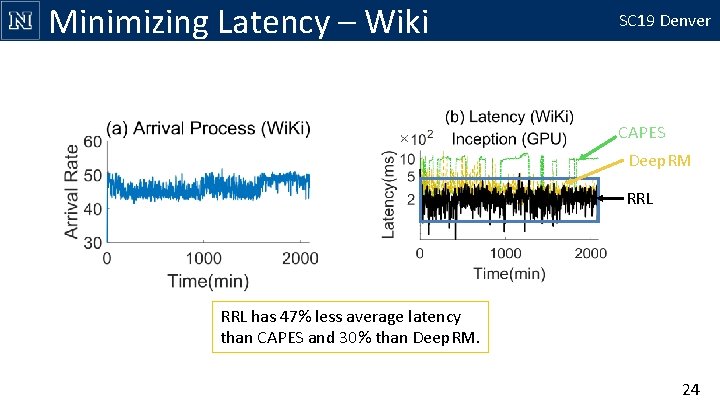

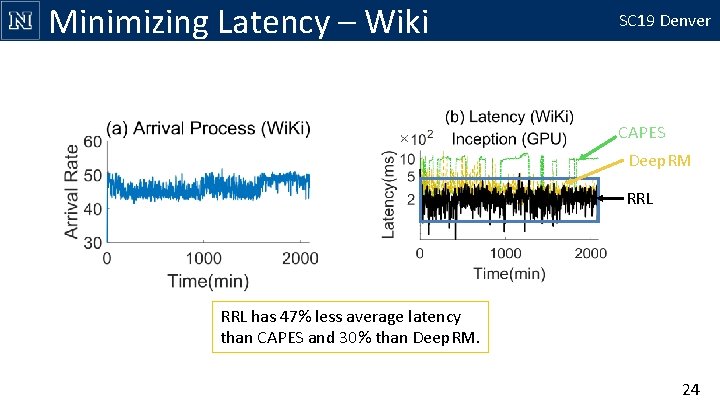

Minimizing Latency: Wiki. RRL has 47% less average latency than CAPES and 30% less than DeepRM.

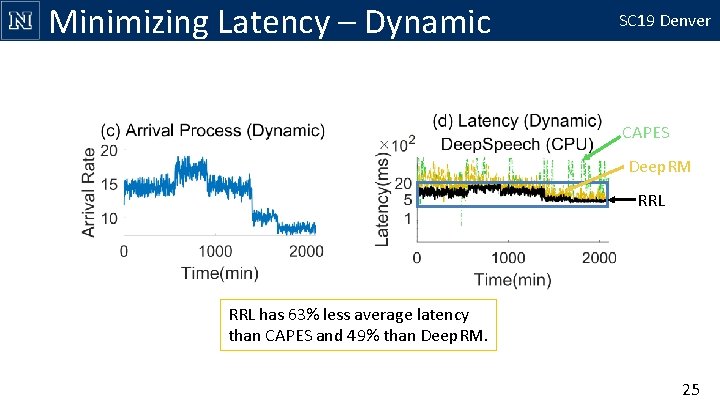

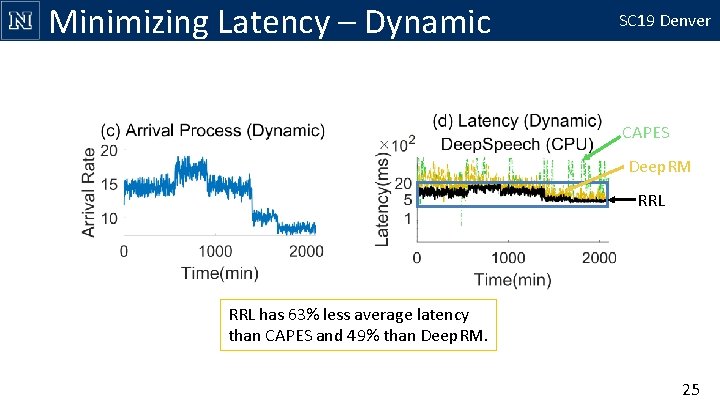

Minimizing Latency: Dynamic. RRL has 63% less average latency than CAPES and 49% less than DeepRM.

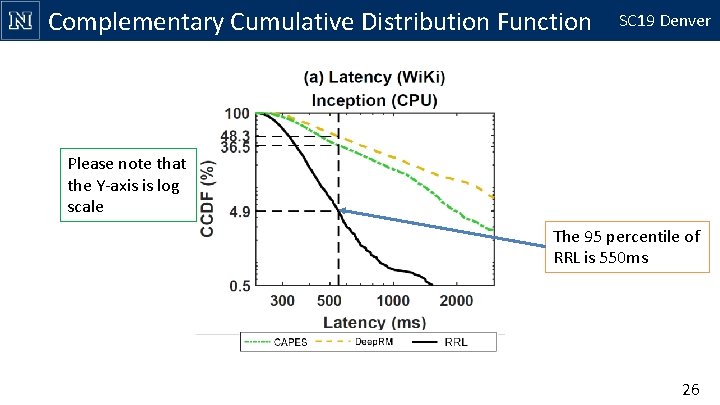

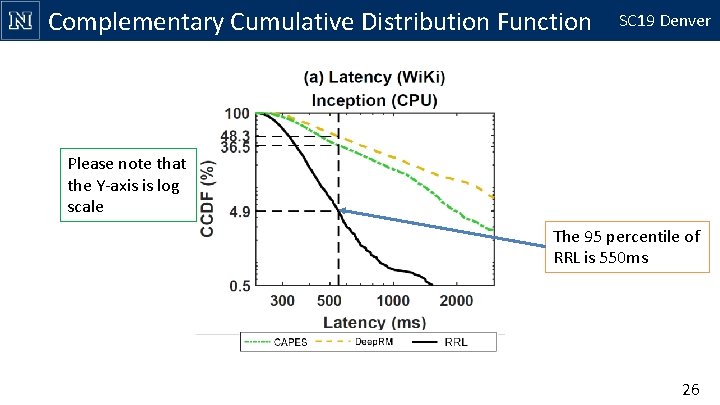

Complementary Cumulative Distribution Function. Note that the Y-axis is in log scale. The 95th percentile latency of RRL is 550 ms.

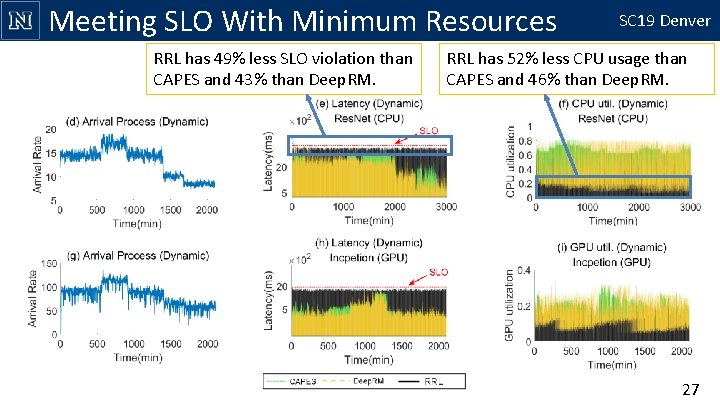

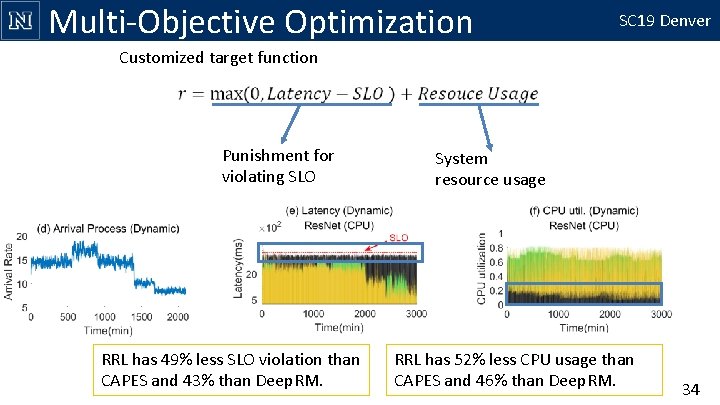

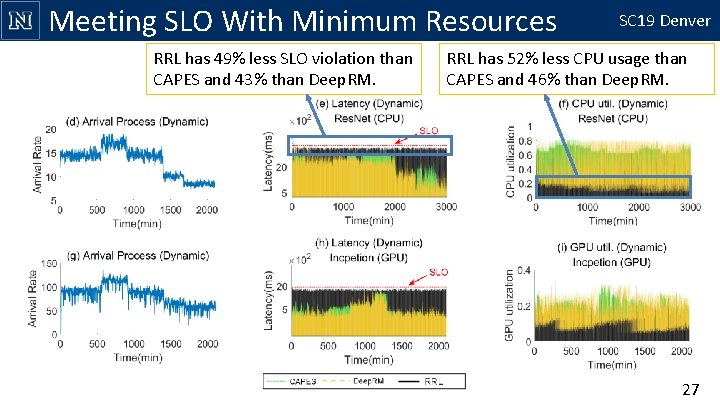

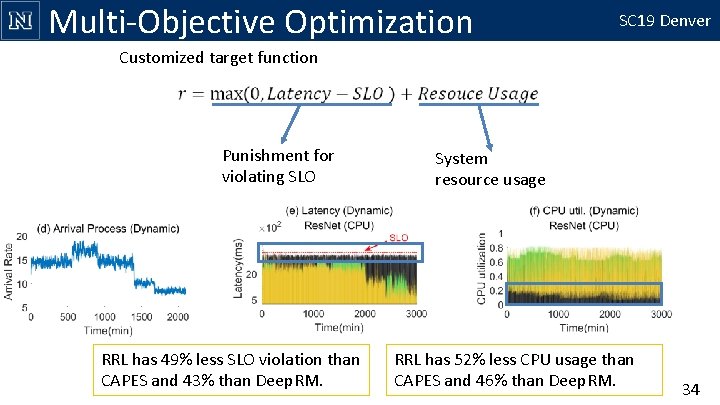
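
For readers less familiar with the plot type: the CCDF at latency x is the fraction of requests slower than x, so the 95th percentile is the latency at which the CCDF drops to 0.05. A minimal sketch with synthetic data follows; the helper names are hypothetical and the nearest-rank percentile definition is one of several in common use.

```python
import math
from typing import List


def ccdf(latencies_ms: List[float], threshold_ms: float) -> float:
    """Fraction of requests whose latency exceeds threshold_ms."""
    return sum(1 for x in latencies_ms if x > threshold_ms) / len(latencies_ms)


def percentile(latencies_ms: List[float], p: float) -> float:
    """Nearest-rank percentile: smallest value with at least p% at or below."""
    ordered = sorted(latencies_ms)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


if __name__ == "__main__":
    # Synthetic latencies 1..100 ms, for illustration only.
    data = [float(x) for x in range(1, 101)]
    p95 = percentile(data, 95)
    print(p95, ccdf(data, p95))
```

The log-scale Y-axis matters because the interesting part of the curve is the tail, where the CCDF takes values such as 0.05 or 0.01 that would be invisible on a linear axis.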

Meeting SLO With Minimum Resources. RRL has 49% fewer SLO violations than CAPES and 43% fewer than DeepRM. RRL has 52% less CPU usage than CAPES and 46% less than DeepRM.

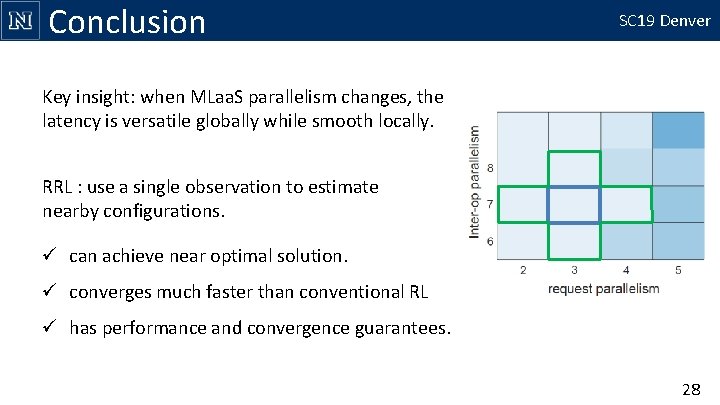

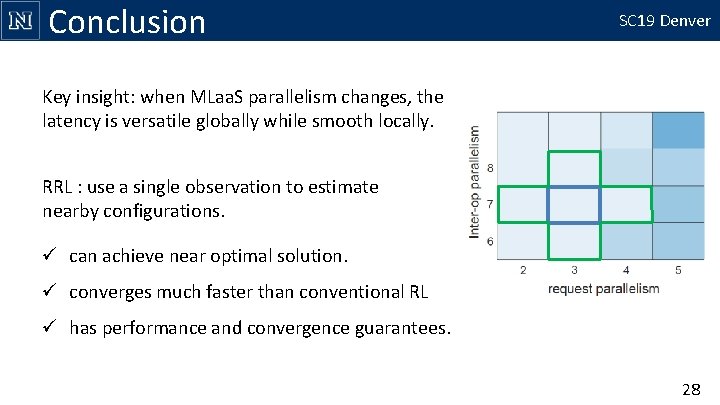

Conclusion. Key insight: when MLaaS parallelism changes, the latency is versatile globally while smooth locally. RRL uses a single observation to estimate nearby configurations. RRL:

- can achieve a near-optimal solution;
- converges much faster than conventional RL;
- has performance and convergence guarantees.

Thank you! Questions? Heyang Qin, looking for internships. heyang_qin@nevada.unr.edu, https://www.cse.unr.edu/~heyangq/ This work is supported by the National Science Foundation, Amazon Web Services, and the University of Nevada, Reno.

Auxiliary Slides

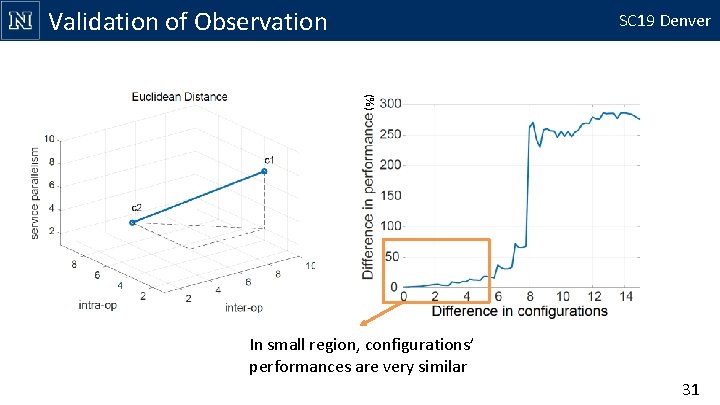

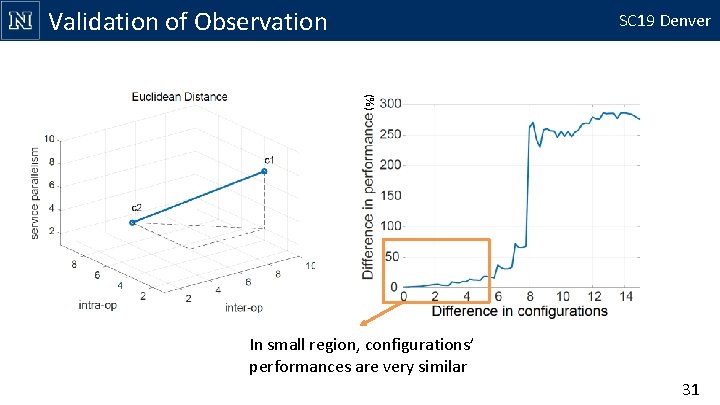

Validation of Observation. Within a small region, the configurations' performance is very similar.

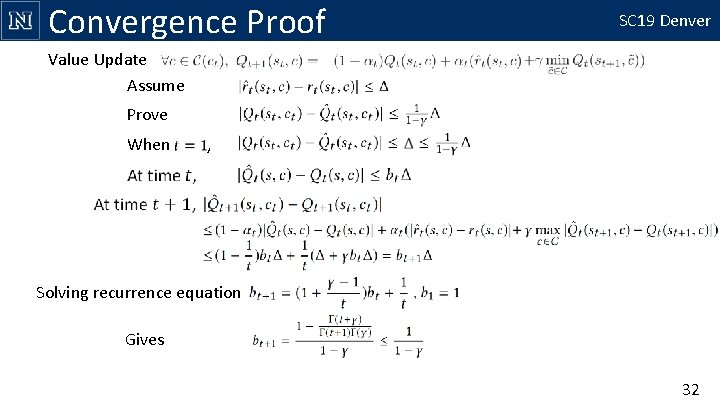

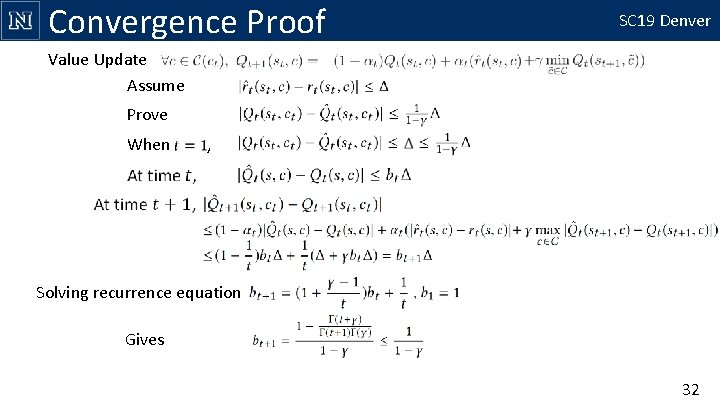

Convergence Proof. Outline: state the value update and the assumption, then prove the claim by solving the resulting recurrence equation.


Multi-Objective Optimization. A customized target function combines a punishment for violating the SLO with system resource usage. Punishment for violating SLO: RRL has 49% fewer SLO violations than CAPES and 43% fewer than DeepRM. System resource usage: RRL has 52% less CPU usage than CAPES and 46% less than DeepRM.
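
One way to combine the two objectives is a weighted sum. The sketch below is an assumption, not the target function from the talk: the linear form, the name `target`, and the weights `penalty_weight` and `resource_weight` are all illustrative.

```python
from typing import List


def target(latencies_ms: List[float], slo_ms: float, cpu_utilization: float,
           penalty_weight: float = 10.0, resource_weight: float = 1.0) -> float:
    """Reward to maximize: penalize SLO violations and resource usage.

    Assumed form: -(penalty_weight * violation_fraction
                    + resource_weight * cpu_utilization),
    where cpu_utilization is a fraction in [0, 1].
    """
    violations = sum(1 for x in latencies_ms if x > slo_ms) / len(latencies_ms)
    return -(penalty_weight * violations + resource_weight * cpu_utilization)


if __name__ == "__main__":
    window = [100.0, 200.0, 300.0, 900.0]  # made-up latencies in ms
    print(target(window, slo_ms=500.0, cpu_utilization=0.5))
```

Setting `penalty_weight` much larger than `resource_weight` tells the scheduler to meet the SLO first and save CPU only when doing so does not cause violations.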