Runahead Execution An Alternative to Very Large Instruction

- Slides: 21

Runahead Execution: An Alternative to Very Large Instruction Windows for Out-of-order Processors

- Onur Mutlu, The University of Texas at Austin
- Jared Stark, Microprocessor Research, Intel Labs
- Chris Wilkerson, Desktop Platforms Group, Intel Corp
- Yale N. Patt, The University of Texas at Austin

Presented by: Mark Teper

Outline

- The Problem
- Related Work
- The Idea: Runahead Execution
- Details
- Results
- Issues

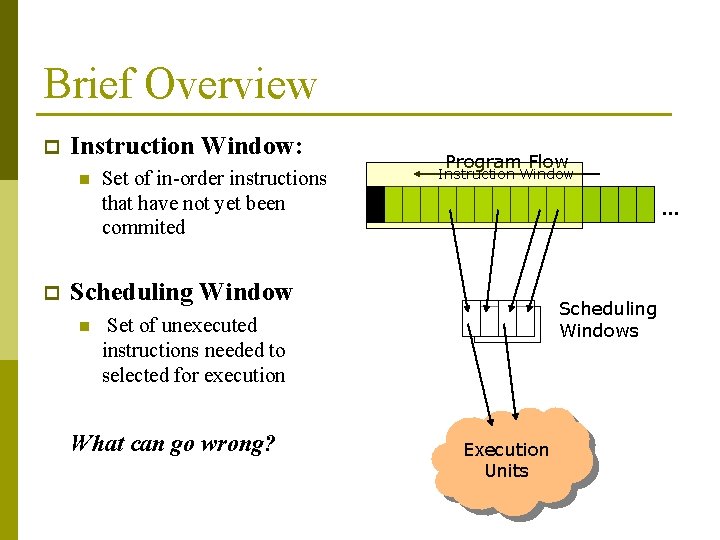

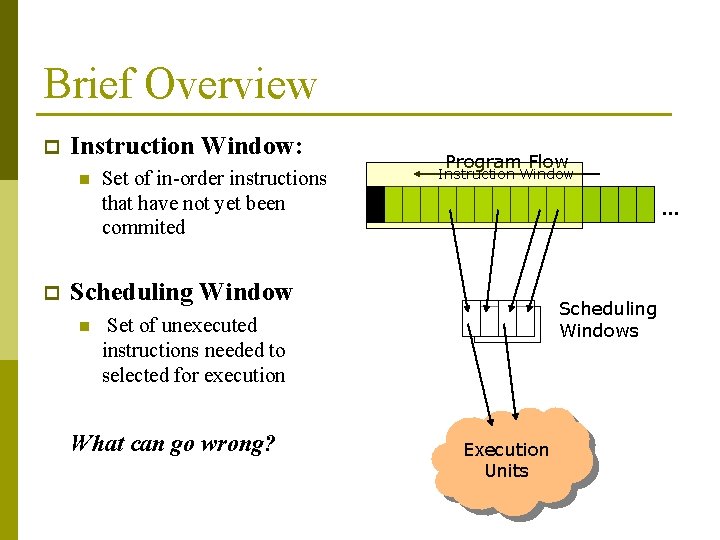

Brief Overview

- Instruction Window: set of in-order instructions that have not yet been committed
- Scheduling Window: set of unexecuted instructions waiting to be selected for execution
- What can go wrong?

(Diagram: Program Flow, Instruction Window, Scheduling Window, Execution Units)

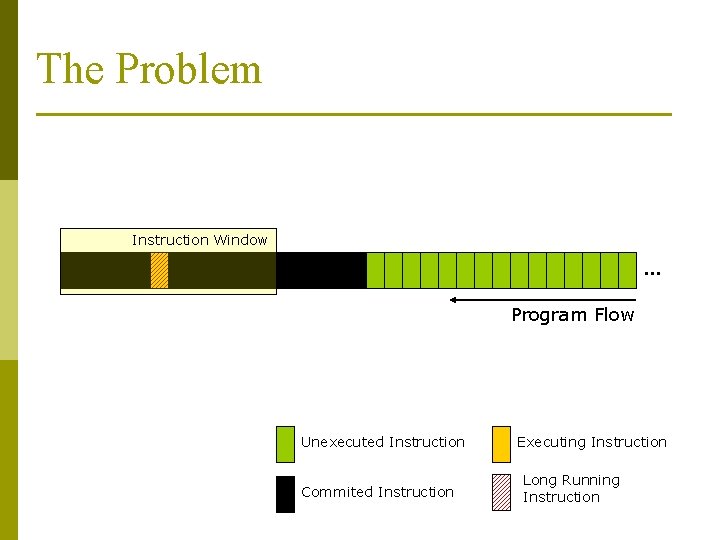

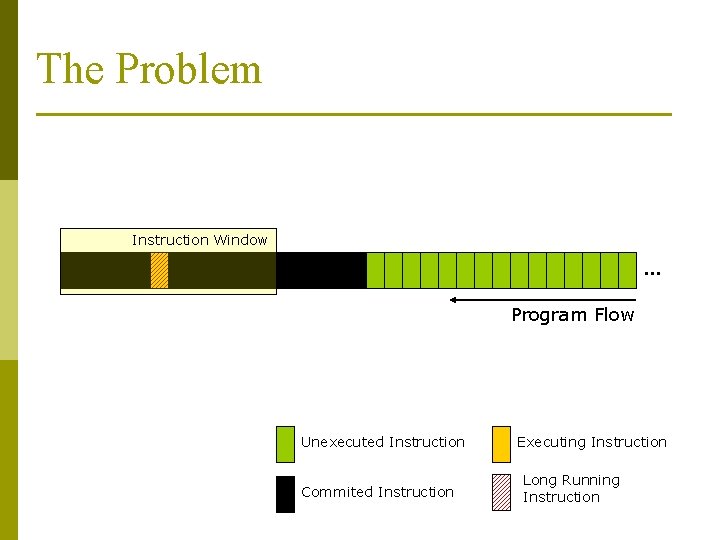

The Problem

(Diagram: instruction window along the program flow. Legend: Unexecuted Instruction, Committed Instruction, Executing Instruction, Long Running Instruction)

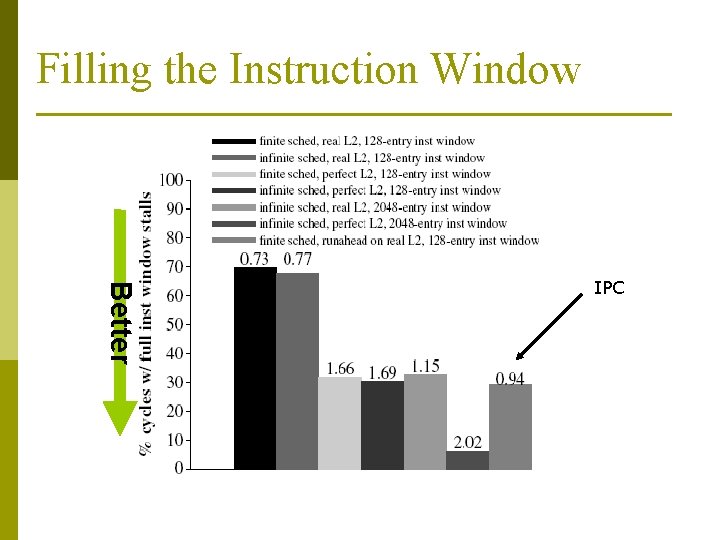

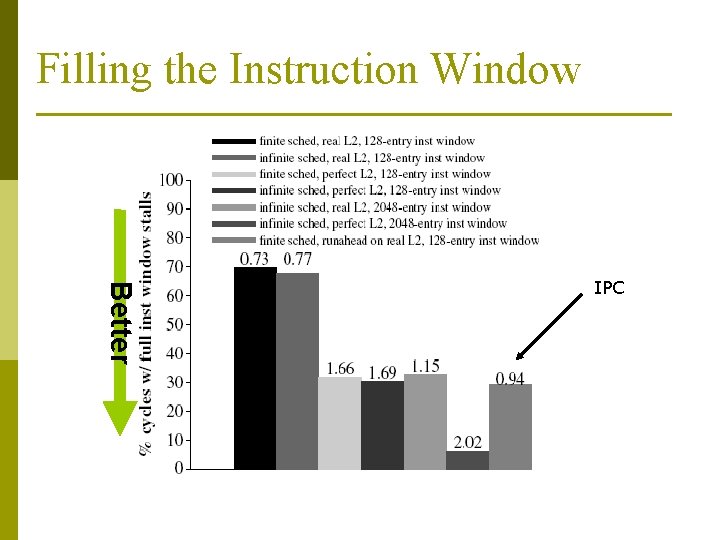

Filling the Instruction Window

(Chart: IPC; "Better" marks the direction of improvement)

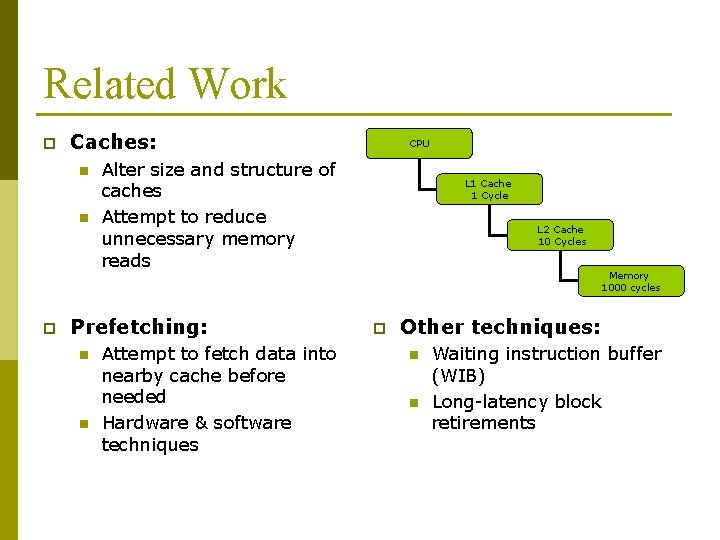

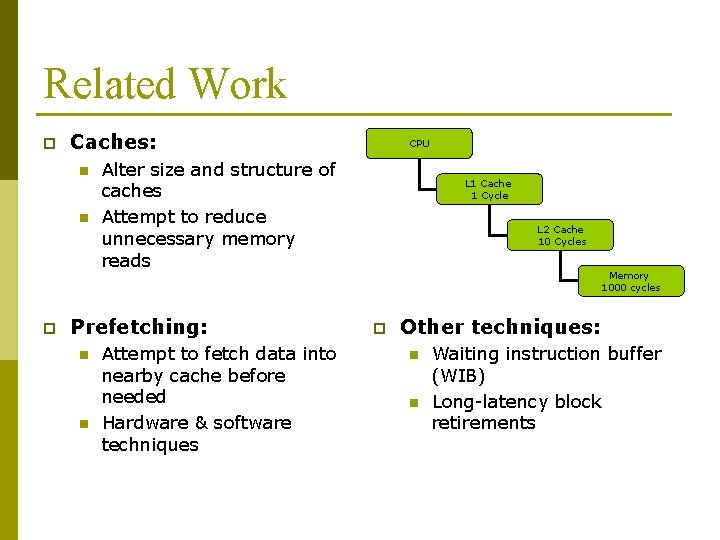

Related Work

- Caches:
  - Alter size and structure of caches
  - Attempt to reduce unnecessary memory reads
- Prefetching:
  - Attempt to fetch data into nearby cache before needed
  - Hardware & software techniques
- Other techniques:
  - Waiting instruction buffer (WIB)
  - Long-latency block retirements

(Diagram: CPU; L1 Cache, 1 cycle; L2 Cache, 10 cycles; Memory, 1000 cycles)

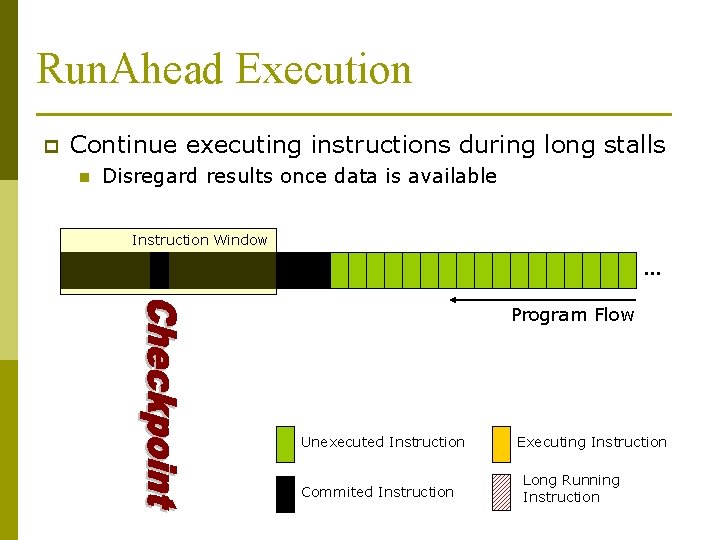

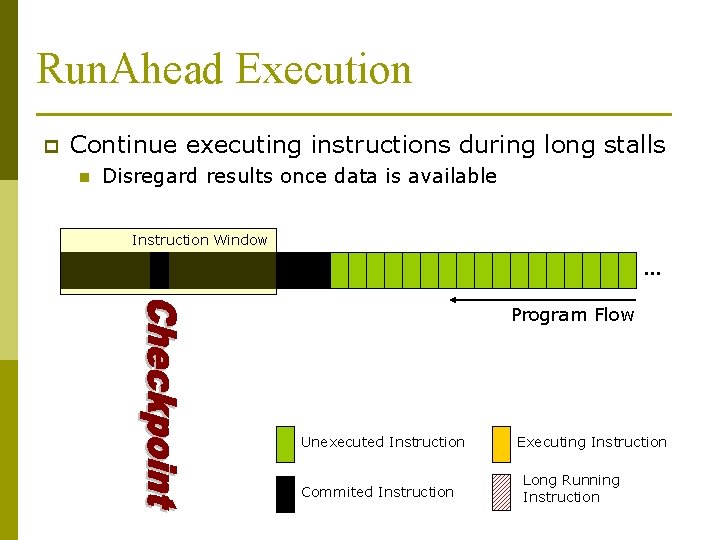

RunAhead Execution

- Continue executing instructions during long stalls
  - Disregard results once data is available

(Diagram: instruction window along the program flow. Legend: Unexecuted Instruction, Committed Instruction, Executing Instruction, Long Running Instruction)

Benefits

- Acts as a high accuracy prefetcher
  - Software prefetchers have less information
  - Hardware prefetchers can’t analyze code as well
- Biases predictors
- Makes use of cycles that are otherwise wasted

Entering RunAhead

- Processors can enter run-ahead mode at any point
  - L2 cache misses used in paper
- Architecture needs to be able to checkpoint and restore register state
  - Including branch-history register and return address stack

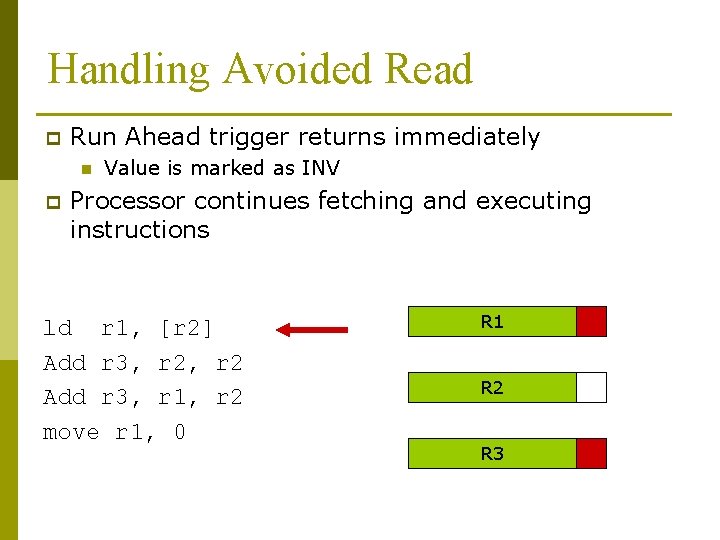

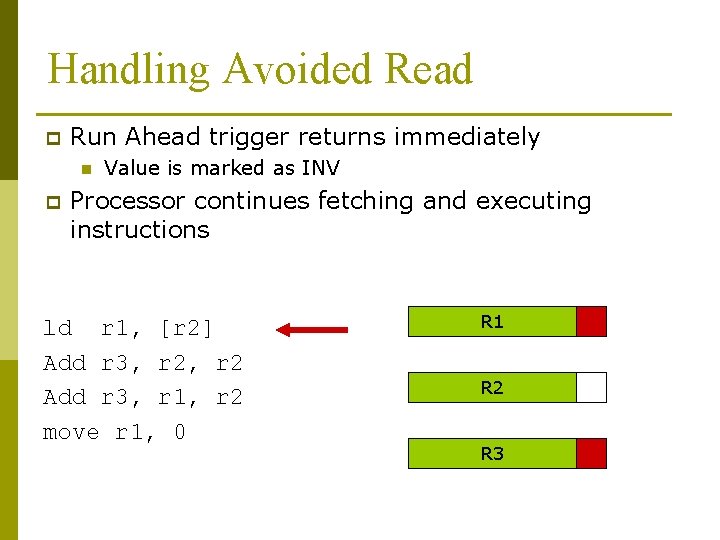

Handling Avoided Read

- RunAhead trigger returns immediately
  - Value is marked as INV
- Processor continues fetching and executing instructions

(Example on slide, tracking registers R1, R2, R3: ld r1, [r2]; add r3, r2; add r3, r1, r2; move r1, 0)
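The INV marking can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the paper's hardware: the `INV` sentinel object, the `execute` helper, and the initial register values are hypothetical, and only the `add r3, r1, r2` and `move r1, 0` steps from the slide's example are modeled.

```python
# Hypothetical sentinel standing in for the INV bit attached to a register.
INV = object()


def execute(op, dst, srcs, regs):
    """Run one register instruction, propagating INV from sources to dst.

    srcs holds register names (str) or immediate values (anything else).
    """
    values = [regs[s] if isinstance(s, str) else s for s in srcs]
    if any(v is INV for v in values):
        regs[dst] = INV            # any INV source poisons the destination
    else:
        regs[dst] = op(*values)    # all sources valid: compute normally


def add(a, b):
    return a + b


def move(a):
    return a


# Assumed starting values; the slide does not give any.
regs = {"r1": 0, "r2": 8, "r3": 0}

regs["r1"] = INV                          # ld r1, [r2] misses: result marked INV
execute(add, "r3", ["r1", "r2"], regs)    # add r3, r1, r2 -> r3 becomes INV
r3_after_add = regs["r3"]
execute(move, "r1", [0], regs)            # move r1, 0 -> r1 is valid again
execute(add, "r3", ["r1", "r2"], regs)    # same add now has valid sources
```

A register stops being INV as soon as a later instruction overwrites it with a value that does not depend on the missing load, which is why the second `add` produces a usable result.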

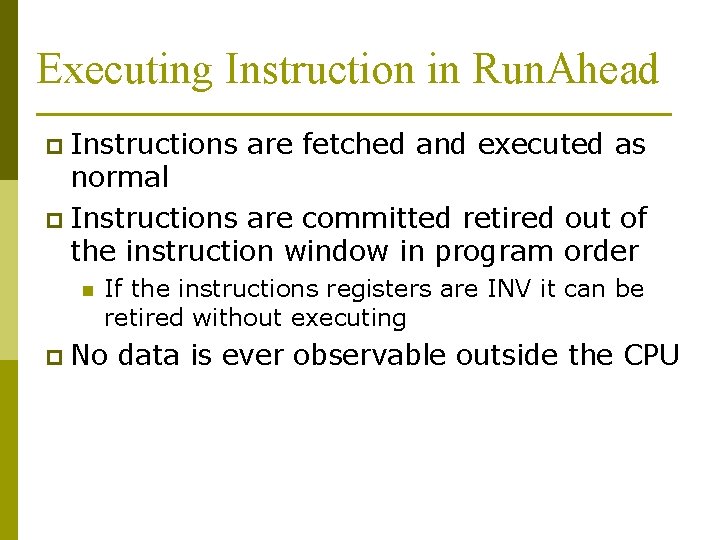

Executing Instruction in RunAhead

- Instructions are fetched and executed as normal
- Instructions are "committed" (retired) out of the instruction window in program order
  - If an instruction's registers are INV, it can be retired without executing
- No data is ever observable outside the CPU

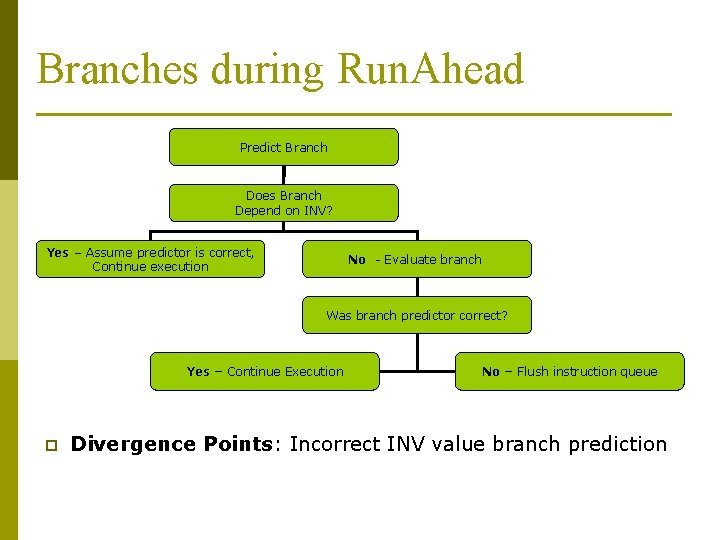

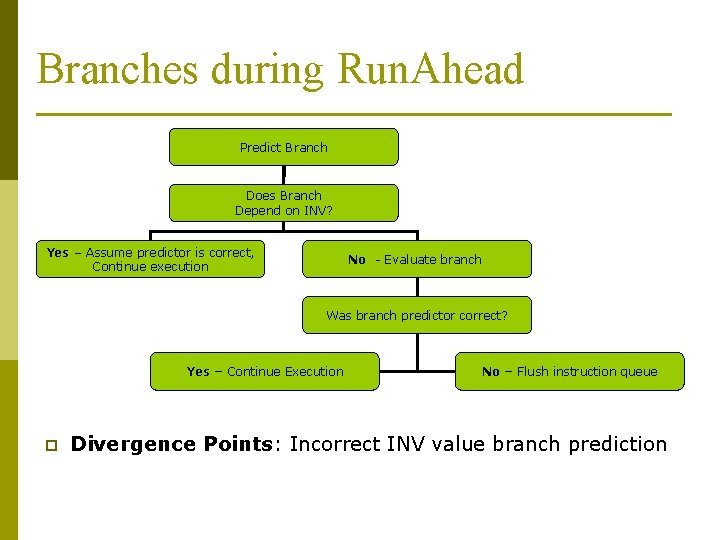

Branches during RunAhead

- Predict branch
- Does branch depend on INV?
  - Yes: assume predictor is correct, continue execution
  - No: evaluate branch. Was branch predictor correct?
    - Yes: continue execution
    - No: flush instruction queue
- Divergence points: a mispredicted branch that depends on an INV value
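The decision flow on this slide can be written as a small function. This is a minimal sketch; the function name, its parameters, and the string return values are hypothetical and chosen only for illustration.

```python
def resolve_branch(predicted_taken, condition_is_inv, actual_taken=None):
    """Decide what to do with a branch encountered in run-ahead mode.

    Returns "continue" or "flush". actual_taken is only meaningful when
    the branch condition does not depend on an INV value.
    """
    if condition_is_inv:
        # The outcome cannot be computed, so the prediction is trusted.
        # If it is wrong, this is a divergence point that goes undetected.
        return "continue"
    if predicted_taken == actual_taken:
        return "continue"   # prediction verified
    return "flush"          # misprediction detected: flush and refetch
```

For example, `resolve_branch(True, False, actual_taken=False)` models a detected misprediction, while `resolve_branch(True, True)` models a branch whose condition is INV and therefore cannot be checked.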

Exiting RunAhead

- Occurs when stalling memory access finally returns
  - Checkpointed architecture is restored
  - All instructions in the machine are flushed
- Processor starts fetching again at instruction which caused RunAhead execution
  - Paper presented optimization where fetching started slightly before stalled instruction returned
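Entering and exiting run-ahead mode amounts to a checkpoint and a restore. The sketch below assumes a software model in which the architectural state is a small Python object; `ArchState`, `Core`, and all field names are hypothetical, and the early-fetch optimization mentioned above is not modeled.

```python
import copy
from dataclasses import dataclass, field


@dataclass
class ArchState:
    """State the slides say must be checkpointed (field names assumed)."""
    regs: dict
    branch_history: int
    return_stack: list
    pc: int                 # address of the instruction that triggers run-ahead


@dataclass
class Core:
    state: ArchState
    pipeline: list = field(default_factory=list)   # in-flight instructions
    checkpoint: ArchState = None
    in_runahead: bool = False

    def enter_runahead(self):
        # Snapshot everything that run-ahead execution may overwrite.
        self.checkpoint = copy.deepcopy(self.state)
        self.in_runahead = True

    def exit_runahead(self):
        # The stalling access has returned: discard all run-ahead work.
        self.pipeline.clear()
        self.state = self.checkpoint     # fetch resumes at the saved pc
        self.checkpoint = None
        self.in_runahead = False


core = Core(ArchState(regs={"r1": 5}, branch_history=0b1011,
                      return_stack=[0x40], pc=0x100))
core.enter_runahead()
core.state.regs["r1"] = 99               # speculative run-ahead updates
core.state.return_stack.append(0x80)
core.state.pc = 0x140
core.pipeline.append("add r3, r1, r2")
core.exit_runahead()
```

After `exit_runahead`, the register file, branch history, return address stack, and program counter all hold their pre-run-ahead values, so nothing computed during run-ahead is architecturally visible.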

Biasing Branch Predictors

- RunAhead can cause branch predictors to be biased twice on the same branch
- Several alternatives:
  1. Always train branch predictors
  2. Never train branch predictors
  3. Create list of predicted branches
  4. Create separate branch predictor
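To see what "biased twice" means, consider a 2-bit saturating counter. The counter is an assumption made for this sketch, since the slides do not say which predictor is used, and `TwoBitCounter` and `run_branch` are hypothetical names. The same dynamic branch is seen once in run-ahead mode and once more after normal execution resumes.

```python
class TwoBitCounter:
    """Assumed predictor: 2-bit saturating counter, states 0..3."""

    def __init__(self, state=1):
        self.state = state

    def predict(self):
        return self.state >= 2          # states 2 and 3 predict taken

    def train(self, taken):
        if taken:
            self.state = min(3, self.state + 1)
        else:
            self.state = max(0, self.state - 1)


def run_branch(train_in_runahead):
    """Return the counter state after one taken branch is executed twice."""
    counter = TwoBitCounter(state=1)
    if train_in_runahead:
        counter.train(True)   # first encounter, during run-ahead
    counter.train(True)       # same branch again after run-ahead exits
    return counter.state


always_train = run_branch(train_in_runahead=True)    # alternative (1)
never_train = run_branch(train_in_runahead=False)    # alternative (2)
```

Under alternative (1) the counter moves two steps for a single program-order branch, while under alternative (2) it moves one step, as it would without run-ahead.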

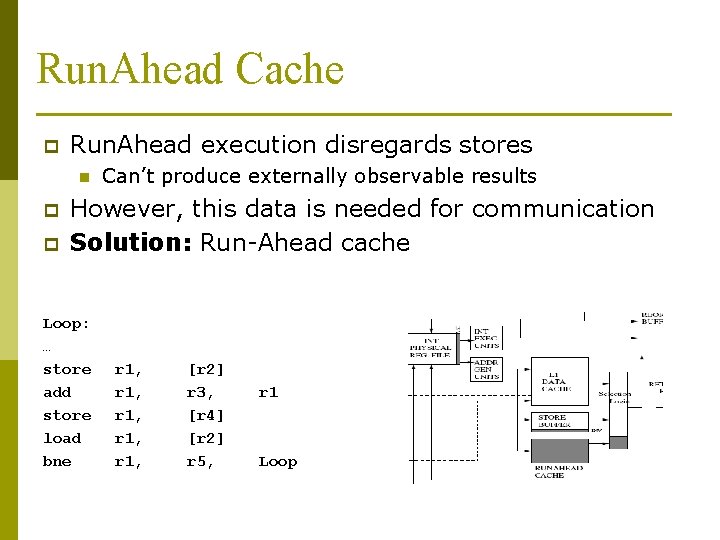

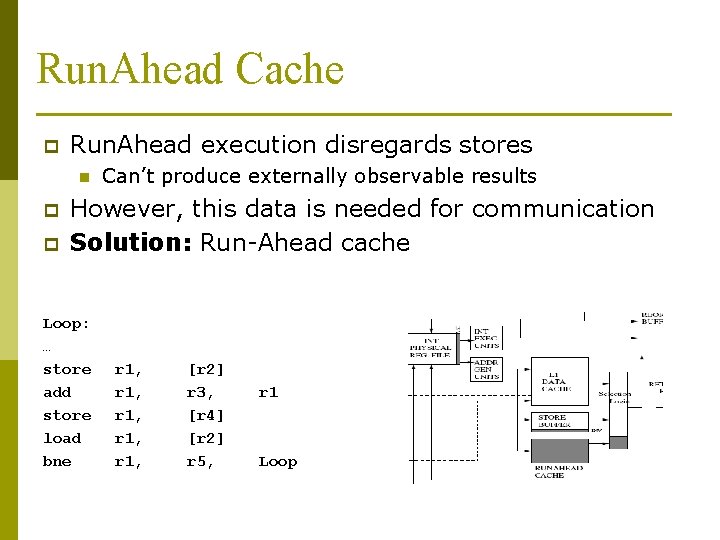

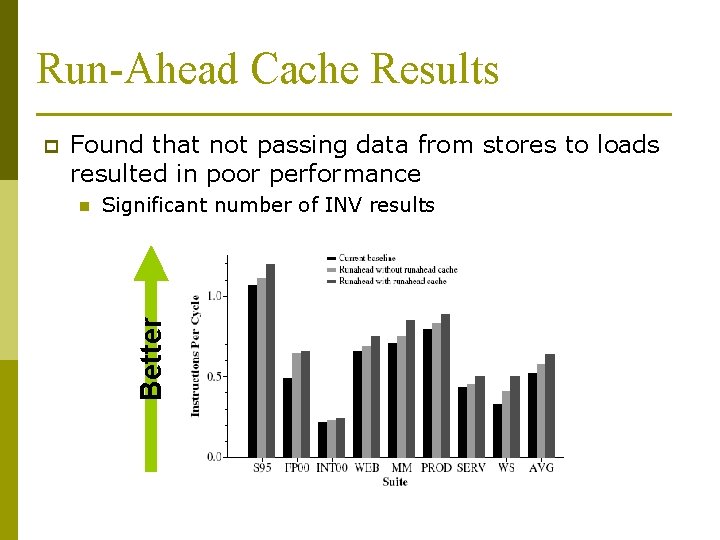

RunAhead Cache

- RunAhead execution disregards stores
  - Can’t produce externally observable results
- However, this data is needed for communication
- Solution: Run-Ahead cache

(Example loop on slide; the operand columns were flattened in extraction. Instructions: Loop: …, store, add, store, load, bne. Operands as extracted: r1, r1, [r2] r3, [r4] [r2] r5, r1 Loop)

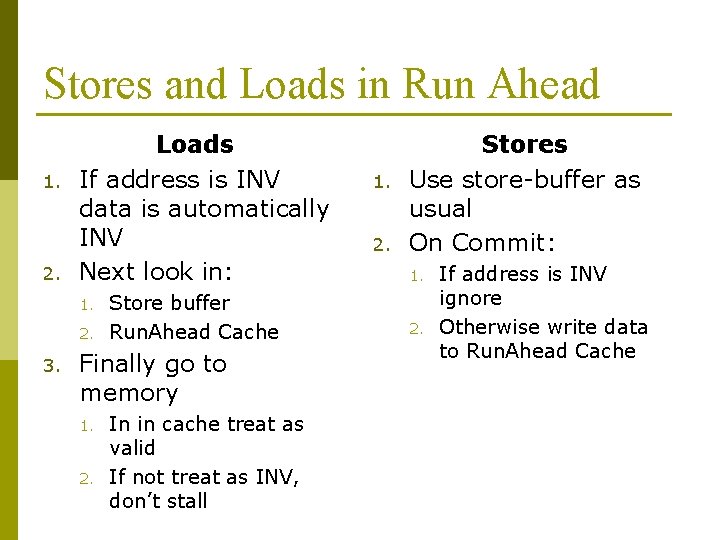

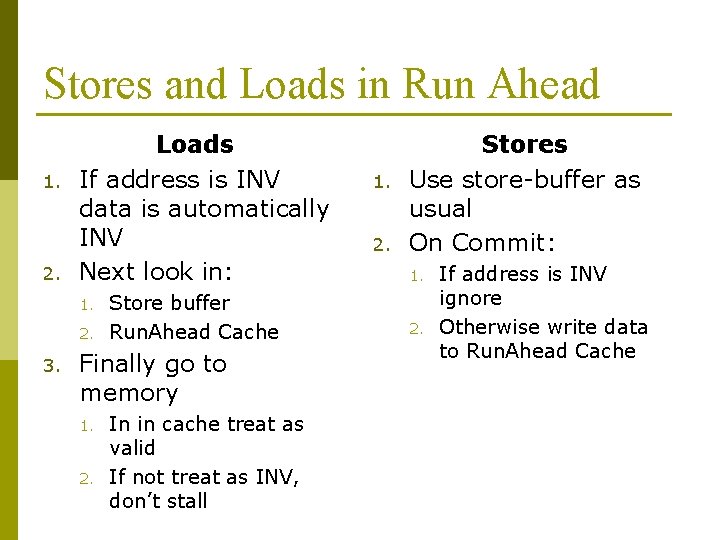

Stores and Loads in Run Ahead

Loads
1. If address is INV, data is automatically INV
2. Next look in:
   1. Store buffer
   2. RunAhead Cache
   3. Finally go to memory
      1. If in cache, treat as valid
      2. If not, treat as INV; don’t stall

Stores
1. Use store-buffer as usual
2. On commit:
   1. If address is INV, ignore
   2. Otherwise write data to RunAhead Cache
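The lookup order for loads and the commit rule for stores can be sketched as follows. This is an illustrative model only: the class `RunaheadMemory`, its method names, and the use of dictionaries for the caches are assumptions, not details from the paper.

```python
from collections import deque

# Hypothetical sentinel standing in for an INV address or value.
INV = object()


class RunaheadMemory:
    def __init__(self, data_cache):
        self.store_buffer = deque()         # (addr, value), oldest first
        self.runahead_cache = {}            # written only in run-ahead mode
        self.data_cache = dict(data_cache)  # lines currently present in cache

    def store(self, addr, value):
        # Stores enter the store buffer as usual.
        self.store_buffer.append((addr, value))

    def commit_oldest_store(self):
        addr, value = self.store_buffer.popleft()
        if addr is INV:
            return                          # INV address: ignore the store
        self.runahead_cache[addr] = value   # never written to real memory

    def load(self, addr):
        if addr is INV:
            return INV                      # INV address gives INV data
        # Youngest matching store in the store buffer wins.
        for st_addr, st_value in reversed(self.store_buffer):
            if st_addr is not INV and st_addr == addr:
                return st_value
        if addr in self.runahead_cache:
            return self.runahead_cache[addr]
        if addr in self.data_cache:
            return self.data_cache[addr]    # cache hit: treat as valid
        return INV                          # miss: mark INV, do not stall


mem = RunaheadMemory({0x100: 7})
mem.store(0x200, 42)
from_store_buffer = mem.load(0x200)
mem.commit_oldest_store()
from_runahead_cache = mem.load(0x200)
from_data_cache = mem.load(0x100)
missed = mem.load(0x300)
mem.store(INV, 5)
mem.commit_oldest_store()
```

The data cache in this model is never written, which mirrors the requirement that run-ahead stores produce no externally observable results.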

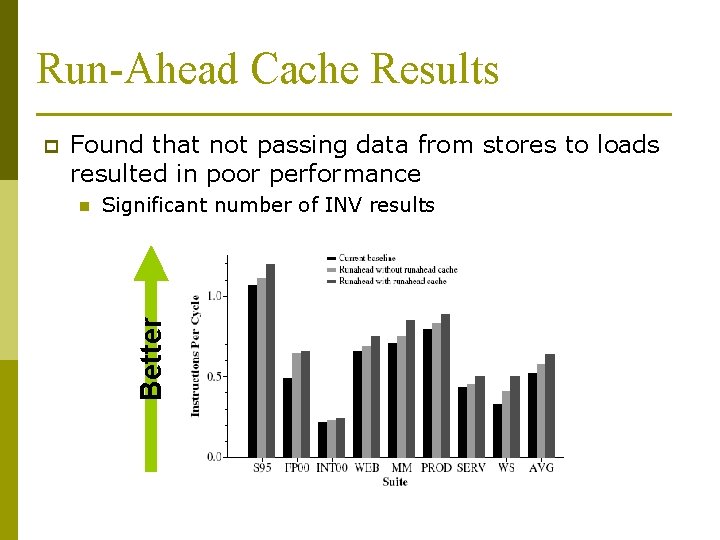

Run-Ahead Cache Results

- Found that not passing data from stores to loads resulted in poor performance
  - Significant number of INV results

(Chart; "Better" marks the direction of improvement)

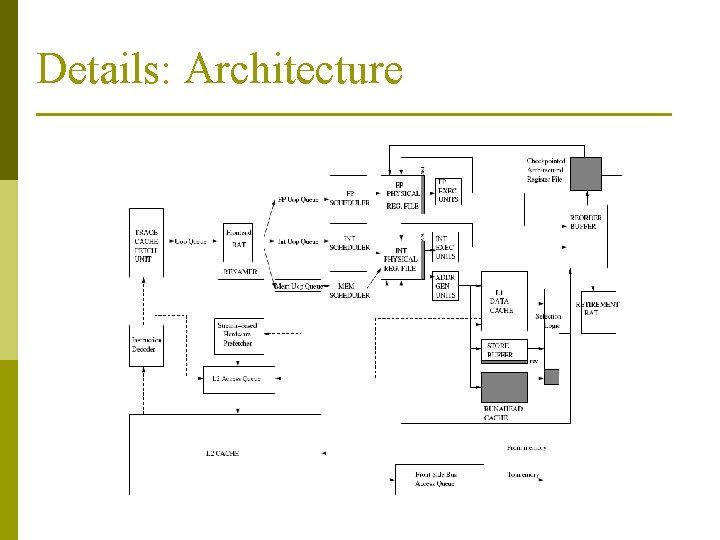

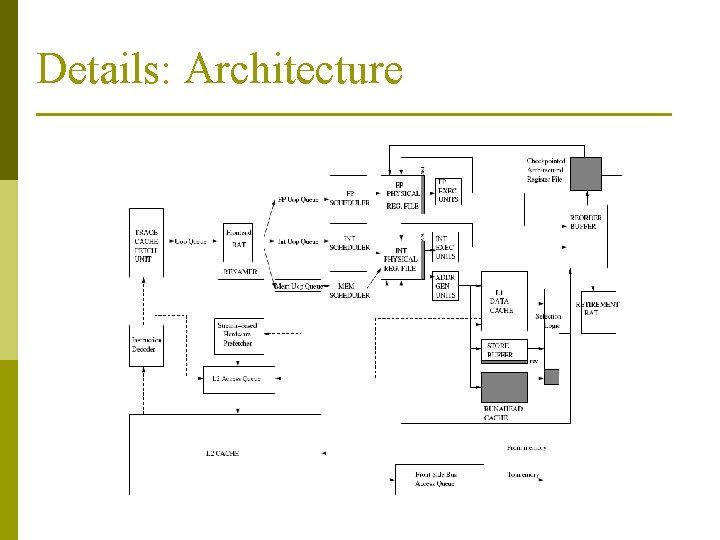

Details: Architecture

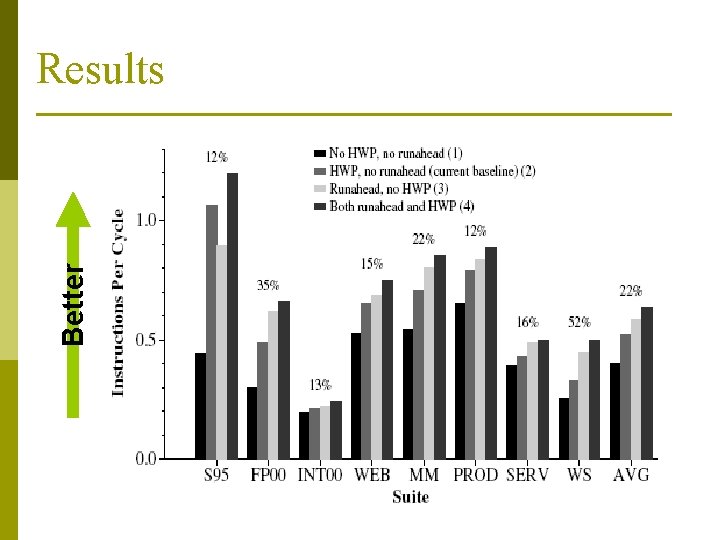

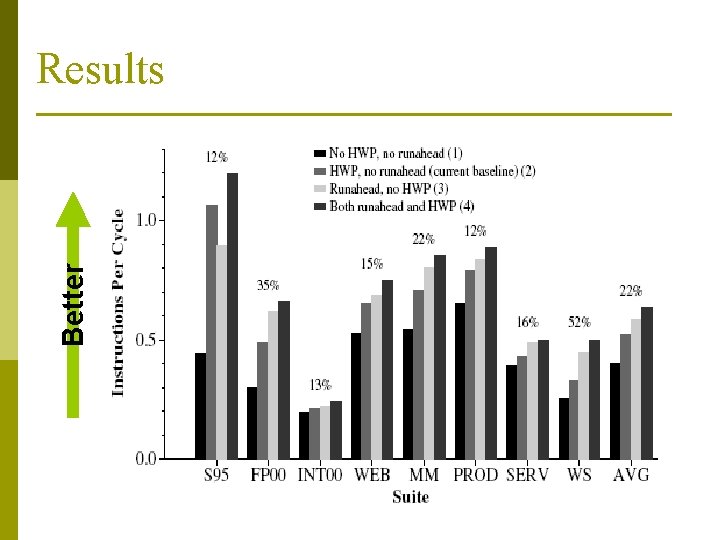

Results

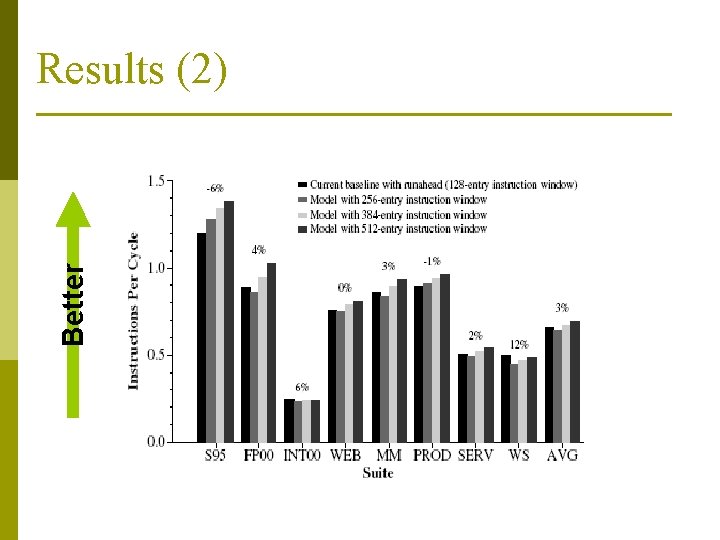

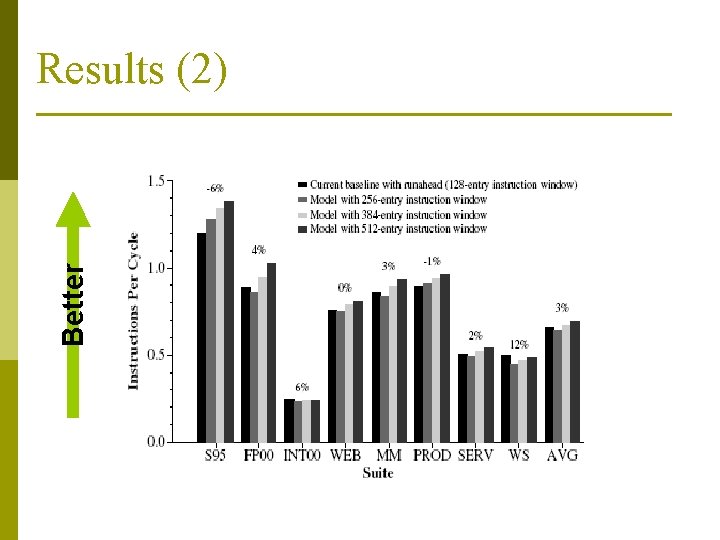

Results (2)

Issues

- Some wrong assumptions about future machines
  - Future baseline corresponds poorly to modern architectures
- Not a lot of detail on the architectural requirements of this technique
  - Increased architecture size
  - Increased power requirements