
Rubrics, and Validity, and Reliability: Oh My!
Pre-Conference Session
The Committee on Preparation and Professional Accountability
AACTE Annual Meeting 2016

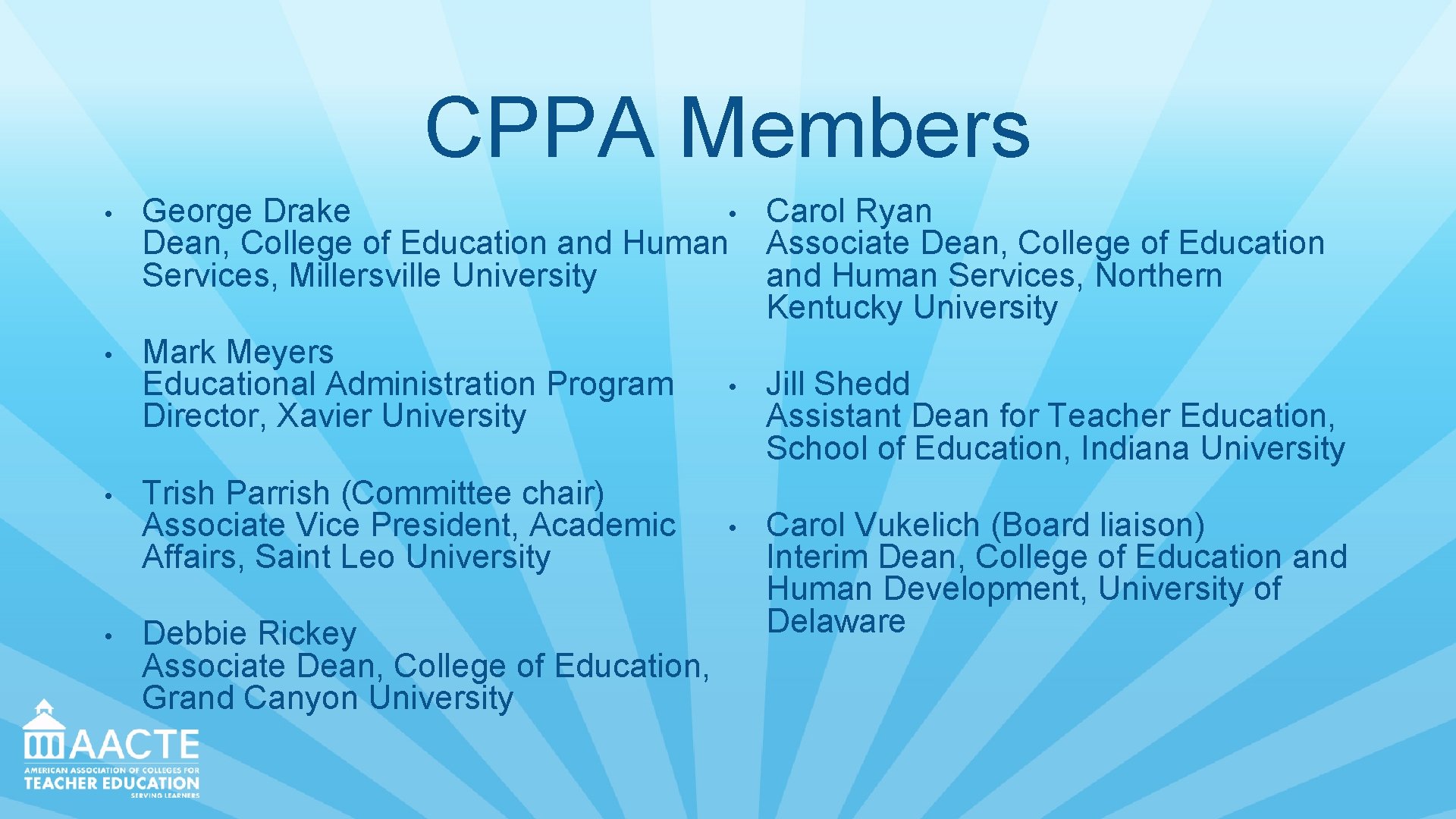

CPPA Members • Carol Ryan, Dean, College of Education and Human Services, Northern Kentucky University • George Drake, Associate Dean, College of Education and Human Services, Millersville University • Mark Meyers, Educational Administration Program Director, Xavier University • Jill Shedd, Assistant Dean for Teacher Education, School of Education, Indiana University • Trish Parrish (Committee chair), Associate Vice President, Academic Affairs, Saint Leo University • Carol Vukelich (Board liaison), Interim Dean, College of Education and Human Development, University of Delaware • Debbie Rickey, Associate Dean, College of Education, Grand Canyon University

Agenda • Welcome and Introductions • Rubric Design • BREAK • Table Work: Improving Rubrics • Application: Rubrics for Field Experiences • Debrief and Wrap Up

Table Discussion • What type of assessment system does your EPP use? • Do you use locally developed instruments as part of your key assessments of teacher candidates? • Have you followed a formal process to establish the reliability and validity of these instruments?

Rubric Design • Role of rubrics in assessment • Formative and Summative Feedback • Transparency of Expectations • Illustrative Value

Rubric Design • Criteria for Sound Rubric Development • Appropriate • Definable • Observable • Diagnostic • Complete • Retrievable

Rubric Design • Steps in Writing Rubrics • Select Criteria • Set the Scale • Label the Ratings • Identify Basic Meaning • Describe Performance
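The five steps above can be mirrored in a small data structure, which also makes the "Complete" criterion checkable: every criterion should have a performance description at every rating label. This is a minimal Python sketch; the class and method names (`Criterion`, `Rubric`, `missing_descriptors`) are hypothetical, and the three-level scale is borrowed from the tagging example used in this session.

```python
from dataclasses import dataclass, field


@dataclass
class Criterion:
    """One row of an analytic rubric (step 1: select criteria)."""

    name: str
    basic_meaning: str  # step 4: what the criterion means in plain terms
    # step 5: performance description keyed by rating label
    descriptors: dict[str, str] = field(default_factory=dict)


@dataclass
class Rubric:
    """Steps 2 and 3: scale points mapped to rating labels, lowest first."""

    scale: dict[int, str]
    criteria: list[Criterion] = field(default_factory=list)

    def missing_descriptors(self) -> list[tuple[str, str]]:
        """Return (criterion, label) pairs that lack a performance description."""
        gaps = []
        for criterion in self.criteria:
            for label in self.scale.values():
                if not criterion.descriptors.get(label, "").strip():
                    gaps.append((criterion.name, label))
        return gaps


# Usage: the "Emerging" description has deliberately been left out.
rubric = Rubric(
    scale={1: "Ineffective", 2: "Emerging", 3: "Target"},
    criteria=[
        Criterion(
            name="Developing objectives",
            basic_meaning="Learning objectives reflect key disciplinary concepts.",
            descriptors={
                "Ineffective": "Lists learning objectives that do not reflect "
                "key concepts of the discipline.",
                "Target": "Lists learning objectives that reflect key concepts "
                "of the discipline and are aligned with state and national standards.",
            },
        )
    ],
)
print(rubric.missing_descriptors())  # [('Developing objectives', 'Emerging')]
```

Keeping the scale as a single mapping shared by all criteria means a label change (step 3) propagates to every row, and the gap check catches any row left incomplete.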

Resources for Rubric Design • National Postsecondary Education Cooperative: http://nces.ed.gov/pubs2005/2005832.pdf • Rubric Bank at the University of Hawaii: http://www.manoa.hawaii.edu/assessment/resources/rubricbank.htm • University of Minnesota: http://www.carla.umn.edu/assessment/vac/improvement/p_4.html • Penn State Rubric Basics: http://www.schreyerinstitute.psu.edu/pdf/RubricBasics.pdf • AACU VALUE Project: http://www.aacu.org/value • VALUE Rubrics: http://www.aacu.org/value-rubrics • iRubric: http://www.rcampus.com/indexrubric.cfm

Introduction to Validity • Construct Validity: How well a rubric measures what it claims to measure • Content Validity: An estimate of how well the rubric aligns with all elements of a construct • Criterion Validity: Correlation with standards • Face Validity: A measure of how representative a rubric appears "at face value"

Table Discussion Which of the types of validity would be most helpful for locally developed rubrics? • Construct • Content • Criterion • Face

Approaches to Establishing Validity • Locally established methodology (e.g., tagging), developed by the EPP, with the EPP providing the rationale • Research-based methodology (e.g., the Lawshe method), which removes the need for the EPP to develop its own rationale

Tagging Example
CAEP 1.2: Providers ensure that candidates use research and evidence to develop an understanding of the teaching profession and use both to measure their P-12 students' progress and their own professional practice.

| Component Descriptor | Ineffective | Emerging | Target |
|---|---|---|---|
| Developing objectives | Lists learning objectives that do not reflect key concepts of the discipline. | Lists learning objectives that reflect key concepts of the discipline but are not aligned with relevant state or national standards. | Lists learning objectives that reflect key concepts of the discipline and are aligned with state and national standards. |
| Uses pre-assessments | Fails to use pre-assessment data when planning instruction. | Considers baseline data from pre-assessments; however, pre-assessments do not align with stated learning targets/objectives. | Uses student baseline data from pre-assessments that are aligned with stated learning targets/objectives when planning instruction. |
| Planning assessments | Plans methods of assessment that do not measure student performance on the stated objectives. | Plans methods of assessment that measure student performance on some of the stated objectives. | Plans methods of assessment that measure student performance on each objective. |

Table Discussion Why is reliability important in locally developed rubrics? Which types of reliability are most important for locally developed rubrics?

Introduction to Reliability • Inter-Rater: Extent to which different assessors have consistent results • Test-Retest: Consistency of a measure from one time to another • Parallel Forms: Consistency of results of two rubrics designed to measure the same content • Internal Consistency: Consistency of results across items in a rubric
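Two of the reliability types above reduce to short calculations once ratings are in a spreadsheet. The sketch below, in Python with only the standard library, computes a Pearson correlation for test-retest consistency and Cronbach's alpha for internal consistency. These are common choices of statistic, not ones the session prescribes, and the score data are invented purely for illustration.

```python
from statistics import mean, variance


def pearson_r(first: list[float], second: list[float]) -> float:
    """Test-retest: correlation between the same candidates' scores at two times."""
    m1, m2 = mean(first), mean(second)
    cov = sum((a - m1) * (b - m2) for a, b in zip(first, second))
    ss1 = sum((a - m1) ** 2 for a in first)
    ss2 = sum((b - m2) ** 2 for b in second)
    return cov / (ss1 * ss2) ** 0.5  # undefined if either set of scores is constant


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Internal consistency; scores[c][i] is candidate c's rating on rubric item i."""
    k = len(scores[0])  # number of rubric items
    item_vars = [variance([row[i] for row in scores]) for i in range(k)]
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical ratings (1 = Ineffective, 2 = Emerging, 3 = Target).
time_1 = [1, 2, 3, 2, 3]
time_2 = [1, 2, 3, 3, 3]
print(round(pearson_r(time_1, time_2), 2))  # 0.87

ratings = [[3, 2, 3], [2, 2, 1], [3, 3, 3], [1, 1, 2]]  # 4 candidates x 3 items
print(round(cronbach_alpha(ratings), 2))  # 0.84
```

Both functions use sample variance consistently, so the ratio inside alpha is unaffected by the choice of sample versus population formulas.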

Approaches to Establishing Reliability • Reliability can be established through a research-based approach or a locally based approach • More on this in the second half of our presentation!

Time to Practice • Opportunities to practice two methods of validity: Criterion (correlation with standards) and Content (estimate of how the rubric aligns with all elements of a construct) • Opportunity to practice inter-rater reliability (extent to which different assessors have consistent results)

Criterion Validity • Correlation with Standards • As an individual: review the Learning Environment section on the blue document and "tag" each of its elements to the appropriate InTASC and CAEP standards • As a table: come to a consensus on the most appropriate "tags" for each element of the Learning Environment section
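If each participant records tags electronically, the individual and table steps of the tagging activity can be checked mechanically. The Python sketch below treats consensus as a simple majority vote, which is our assumption; at the table, consensus comes from discussion. It also flags elements left untagged and standards that no element covers. Element names and standard codes are hypothetical placeholders, not an actual mapping of the Learning Environment section.

```python
from collections import Counter

Tags = dict[str, set[str]]  # rubric element -> standards it is tagged to


def majority_tags(individual: list[Tags]) -> Tags:
    """Keep a tag for an element only if more than half of the raters chose it."""
    n_raters = len(individual)
    elements = sorted({element for tags in individual for element in tags})
    result: Tags = {}
    for element in elements:
        votes = Counter(t for tags in individual for t in tags.get(element, set()))
        result[element] = {t for t, count in votes.items() if count > n_raters / 2}
    return result


def coverage_gaps(tags: Tags, standards: set[str]) -> tuple[list[str], set[str]]:
    """Return (elements with no tag, standards that no element is tagged to)."""
    untagged = [element for element, chosen in tags.items() if not chosen]
    uncovered = standards - set().union(*tags.values())
    return untagged, uncovered


raters = [
    {"Element A": {"InTASC 3"}, "Element B": {"InTASC 2", "InTASC 3"}, "Element C": set()},
    {"Element A": {"InTASC 3"}, "Element B": {"InTASC 2"}, "Element C": {"InTASC 8"}},
    {"Element A": {"InTASC 3", "CAEP 1.1"}, "Element B": {"InTASC 2"}, "Element C": set()},
]
table = majority_tags(raters)
print(table)  # {'Element A': {'InTASC 3'}, 'Element B': {'InTASC 2'}, 'Element C': set()}
print(coverage_gaps(table, {"InTASC 2", "InTASC 3", "CAEP 1.1"}))
# (['Element C'], {'CAEP 1.1'})
```

An untagged element or an uncovered standard is a prompt for table discussion, not an automatic verdict.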

Content Validity • Using the Lawshe Method • As an individual, review and rate each element of the Designing and Planning Instruction section on the yellow document • Choose a table facilitator • Tally the individual ratings • Calculate the content validity ratio (CVR) of each element: $\mathrm{CVR} = \frac{n_e - N/2}{N/2}$, where $n_e$ = number of experts who rated the element essential and $N$ = total number of experts • The closer the CVR is to +1.0, the more essential the element • What minimum CVR value does your group suggest for keeping an element?
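The tally-and-calculate steps can be scripted once the facilitator has counted the "essential" ratings. The Python sketch below applies Lawshe's CVR formula and lists the elements that fall below a minimum; the element names, the panel size of eight, and the 0.5 cutoff are hypothetical stand-ins for whatever minimum your table agrees on.

```python
def content_validity_ratio(n_essential: int, n_experts: int) -> float:
    """Lawshe's CVR = (n_e - N/2) / (N/2); ranges from -1.0 to +1.0."""
    if n_experts <= 0 or not 0 <= n_essential <= n_experts:
        raise ValueError("need n_experts > 0 and 0 <= n_essential <= n_experts")
    half = n_experts / 2
    return (n_essential - half) / half


def below_minimum(tallies: dict[str, int], n_experts: int, minimum: float) -> dict[str, float]:
    """Return {element: CVR} for every element whose CVR is under the cutoff."""
    cvrs = {element: content_validity_ratio(n, n_experts) for element, n in tallies.items()}
    return {element: cvr for element, cvr in cvrs.items() if cvr < minimum}


# Hypothetical tally: how many of 8 table members rated each element "essential".
tallies = {"Element 1": 8, "Element 2": 6, "Element 3": 3}
print({e: content_validity_ratio(n, 8) for e, n in tallies.items()})
# {'Element 1': 1.0, 'Element 2': 0.5, 'Element 3': -0.25}
print(below_minimum(tallies, n_experts=8, minimum=0.5))  # {'Element 3': -0.25}
```

A CVR of 0 means exactly half the panel rated the element essential; negative values mean fewer than half did. The elements returned are the ones to bring to the rewording discussion.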

Content Validity • Discuss the elements that would be cut • Why was each element rated as less than essential? • Can or should it be reworded to earn a higher CVR value? • If so, how would you reword it?

Inter-Rater Reliability (Extent to which different assessors have consistent results) • Two raters observe the same lesson, in person or via a recorded lesson • Raters can be two university clinical educators, two P-12 clinical educators, or one university and one P-12 clinical educator • Each rater independently completes the observation form • Agreement statistics (e.g., percent agreement or Cohen's kappa) are computed in SPSS or Excel to determine how closely the two raters agree
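To show what an agreement statistic involves outside SPSS or Excel, here is a minimal Python sketch of two common ones: simple percent agreement and Cohen's kappa, which discounts the agreement two raters would reach by chance. The choice of these two statistics is our assumption, and the ratings below are invented.

```python
from collections import Counter


def percent_agreement(rater_1: list[str], rater_2: list[str]) -> float:
    """Share of criteria on which both raters chose the same level."""
    return sum(a == b for a, b in zip(rater_1, rater_2)) / len(rater_1)


def cohens_kappa(rater_1: list[str], rater_2: list[str]) -> float:
    """Unweighted Cohen's kappa: (p_o - p_e) / (1 - p_e)."""
    n = len(rater_1)
    p_o = percent_agreement(rater_1, rater_2)
    counts_1, counts_2 = Counter(rater_1), Counter(rater_2)
    # Chance agreement: product of each rater's marginal proportions, summed over levels.
    p_e = sum(counts_1[k] * counts_2[k] for k in counts_1.keys() | counts_2.keys()) / n**2
    return (p_o - p_e) / (1 - p_e)  # undefined when p_e == 1


# Hypothetical ratings from two observers on ten criteria of the same lesson.
TGT, EMG, INE = "Target", "Emerging", "Ineffective"
university = [TGT, TGT, EMG, TGT, INE, EMG, TGT, EMG, TGT, TGT]
p12 = [TGT, EMG, EMG, TGT, INE, EMG, TGT, TGT, TGT, TGT]
print(percent_agreement(university, p12))  # 0.8
print(round(cohens_kappa(university, p12), 2))  # 0.63
```

Because rubric levels are ordered, a weighted kappa, which gives partial credit for near-misses, is often preferred; the unweighted version is shown here for brevity.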

Inter-Rater Reliability • Watch the Elementary Math Teaching video: https://www.youtube.com/watch?v=fZMbCENzaws&feature=youtu.be • Rate the teacher using the Learning Environment criteria on the blue sheet • Identify a partner at the table and compare your ratings • On which criteria were your ratings the same? Different?

Inter-Rater Reliability • Watch the High School Science Teaching video: https://youtu.be/tOWYMCmx_0c • Rate the teacher using the Instruction criteria on the pink sheet • Identify a partner at the table and compare your ratings • On which criteria were your ratings the same? Different?
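The partner comparison in these two video activities amounts to a per-criterion diff. A minimal Python sketch follows; the criterion names are hypothetical, and coding the rating levels as 1 to 3 is our assumption.

```python
def compare_ratings(
    mine: dict[str, int], partner: dict[str, int]
) -> tuple[list[str], dict[str, int]]:
    """Split shared criteria into agreements and disagreements (partner minus mine)."""
    shared = [c for c in mine if c in partner]  # criteria both of you rated
    same = [c for c in shared if mine[c] == partner[c]]
    different = {c: partner[c] - mine[c] for c in shared if mine[c] != partner[c]}
    return same, different


mine = {"Criterion 1": 3, "Criterion 2": 2, "Criterion 3": 1}
partner = {"Criterion 1": 3, "Criterion 2": 3, "Criterion 3": 1}
same, different = compare_ratings(mine, partner)
print(same)  # ['Criterion 1', 'Criterion 3']
print(different)  # {'Criterion 2': 1}
```

A positive difference means the partner rated higher; the disagreements are the natural starting point for discussing how to increase inter-rater reliability.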

Table Discussion What would you do to increase inter-rater reliability?

Summary • Validity and reliability address two basic questions about assessments: • Is the assessment useful? Does it provide constructive feedback to both candidates and faculty? • Is the assessment fair? Does it provide feedback consistently and as intended?

What will you attempt on your own campus? What additional information do you need? What questions do you still have?

- Slides: 28