Routing
Murat Demirbas, SUNY Buffalo

Routing patterns in WSN
Model: static large-scale WSN
• Convergecast: nodes forward their data to the base station over multiple hops. Scenario: monitoring application
• Broadcast: the base station pushes data to all nodes in the WSN. Scenario: reprogramming
• Data driven: nodes subscribe to data of interest to them. Scenario: an operator queries the nearby nodes for some data (similar to querying)

Outline
• Convergecast
  – Routing tree
  – Grid routing
  – Reliable bursty convergecast
• Broadcast
  – Flood, Flood-Gossip-Flood
  – Trickle
  – Polygonal broadcast, Fire-cracker
• Data driven
  – Directed diffusion
  – Rumor routing

Routing tree
• The most commonly used approach is to induce a spanning tree over the network
  – The root is the base station
  – Each node forwards data to its parent
  – In-network aggregation is possible at intermediate nodes
• Initial construction of the tree is problematic
  – Broadcast storm (recall "Complex behavior at scale")
• Link status changes non-deterministically
  – Snooping on nearby traffic to choose high-quality neighbors pays off
    – See "Taming the Underlying Challenges of Reliable Multihop Routing"
• Trees are problematic since a change somewhere in the tree might lead to escalating changes in the rest (or a deformed structure)
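
A minimal sketch of the routing-tree idea, assuming the connectivity graph is known up front. A real deployment would build the tree with beacons and link-quality estimates; the breadth-first search here is only a centralized stand-in, and the names `build_tree` and `aggregate` are hypothetical. It shows parent pointers toward the base station and a sum aggregated in-network on the way up.

```python
from collections import deque

def build_tree(graph, root):
    """Return {node: parent} for a BFS spanning tree rooted at the base station."""
    parent = {root: None}
    queue = deque([root])
    while queue:
        u = queue.popleft()
        for v in graph[u]:
            if v not in parent:      # first time v is reached: u becomes its parent
                parent[v] = u
                queue.append(v)
    return parent

def aggregate(parent, readings):
    """Sum readings up the tree; each non-root node sends one partial sum to its parent."""
    partial = dict(readings)
    # Keys of `parent` are in BFS order, so the reverse order visits children first.
    for node in reversed(list(parent)):
        p = parent[node]
        if p is not None:
            partial[p] += partial[node]
    root = next(iter(parent))
    return partial[root]

graph = {0: [1, 2], 1: [0, 3], 2: [0, 3], 3: [1, 2]}
parent = build_tree(graph, 0)
total = aggregate(parent, {0: 1, 1: 2, 2: 3, 3: 4})
```

With aggregation each non-root node transmits once per round, instead of relaying every descendant's reading separately.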

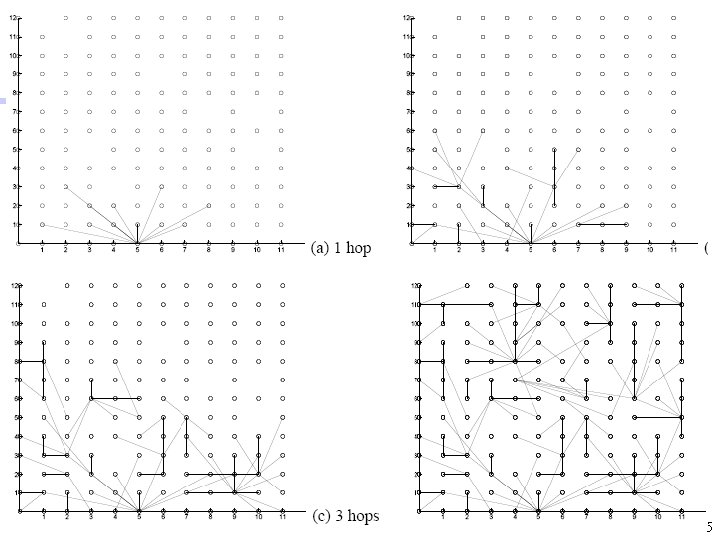

Grid Routing Protocol
• The protocol is simple: it requires each mote to send only one three-byte msg every T seconds
• The protocol is reliable: it can overcome random msg loss and mote failure
• Routing on a grid is stateless: the region perturbed by the failure of nodes is bounded by their local neighbors

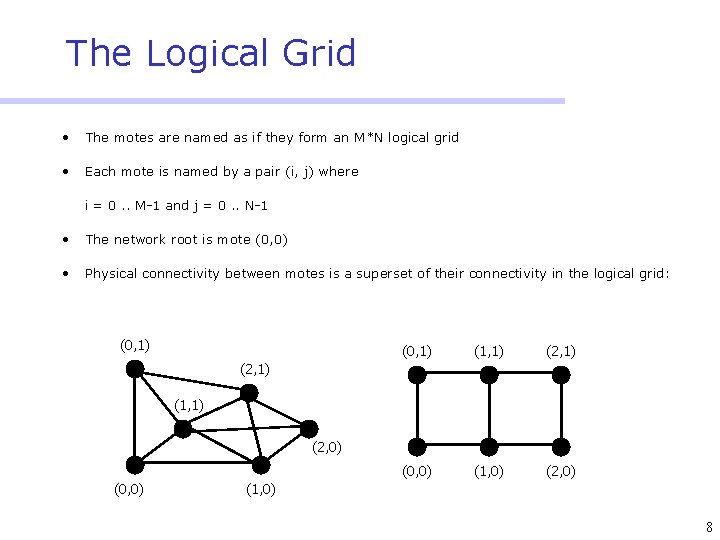

The Logical Grid
• The motes are named as if they form an M*N logical grid
• Each mote is named by a pair (i, j) where i = 0..M-1 and j = 0..N-1
• The network root is mote (0, 0)
• Physical connectivity between motes is a superset of their connectivity in the logical grid
[Figure: example motes (0, 0), (1, 0), (2, 0), (0, 1), (1, 1), (2, 1)]

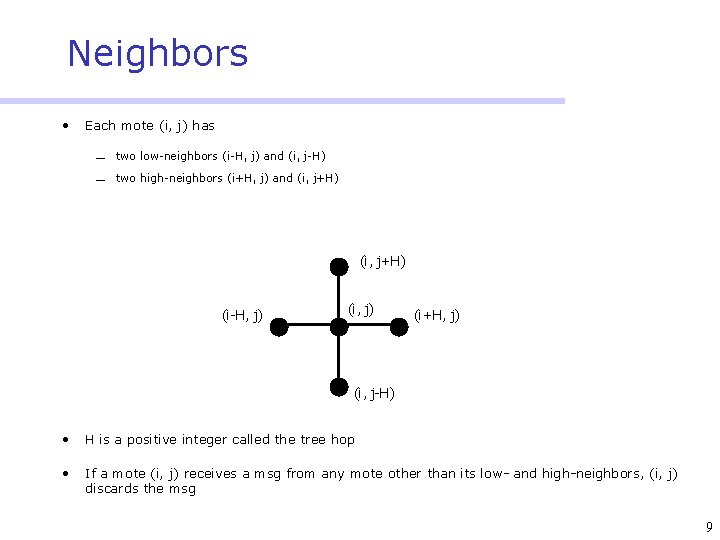

Neighbors
• Each mote (i, j) has
  – two low-neighbors (i-H, j) and (i, j-H)
  – two high-neighbors (i+H, j) and (i, j+H)
• H is a positive integer called the tree hop
• If a mote (i, j) receives a msg from any mote other than its low- and high-neighbors, (i, j) discards the msg
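
The neighbor definition can be sketched as follows. The function names and the clipping of neighbors that fall outside the M*N grid are assumptions made for illustration; the slide only gives the four coordinate formulas and the discard rule.

```python
def neighbors(i, j, H, M, N):
    """Return (low, high) neighbor lists of mote (i, j) for tree hop H."""
    def in_grid(p):
        return 0 <= p[0] < M and 0 <= p[1] < N
    low = [p for p in [(i - H, j), (i, j - H)] if in_grid(p)]
    high = [p for p in [(i + H, j), (i, j + H)] if in_grid(p)]
    return low, high

def accepts(i, j, sender, H, M, N):
    """A mote keeps a msg only if it comes from a low- or high-neighbor."""
    low, high = neighbors(i, j, H, M, N)
    return sender in low or sender in high

low, high = neighbors(2, 2, H=2, M=7, N=7)
```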

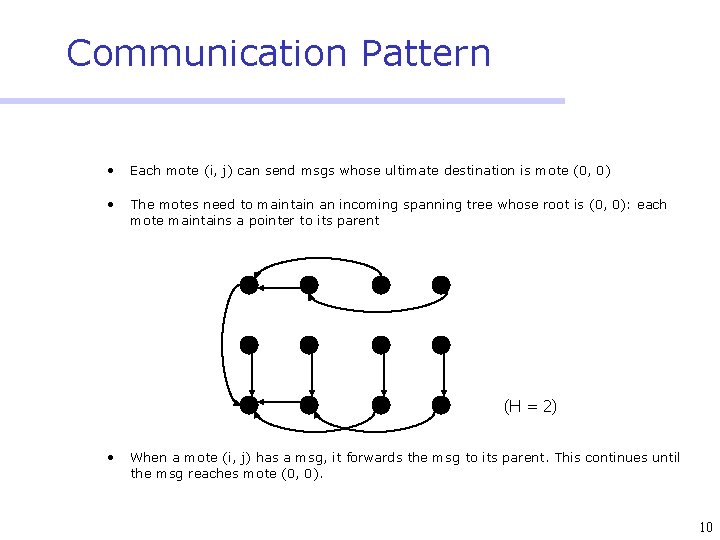

Communication Pattern
• Each mote (i, j) can send msgs whose ultimate destination is mote (0, 0)
• The motes need to maintain an incoming spanning tree whose root is (0, 0): each mote maintains a pointer to its parent
• When a mote (i, j) has a msg, it forwards the msg to its parent. This continues until the msg reaches mote (0, 0)
[Figure: example tree with H = 2]

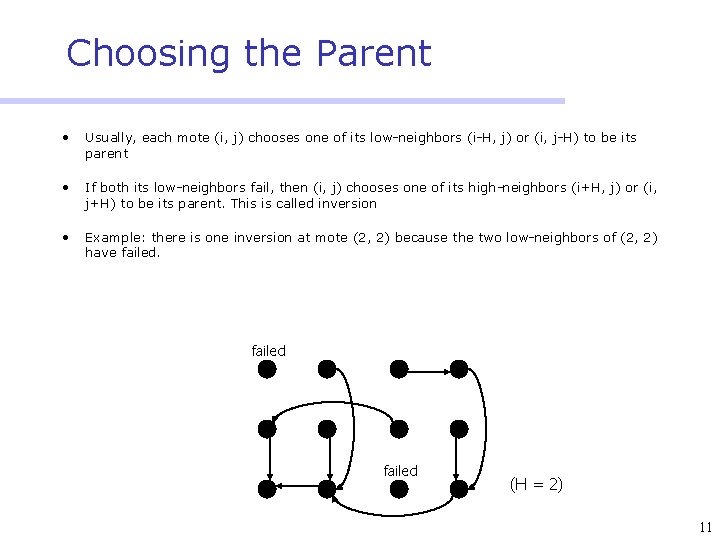

Choosing the Parent
• Usually, each mote (i, j) chooses one of its low-neighbors (i-H, j) or (i, j-H) to be its parent
• If both its low-neighbors fail, then (i, j) chooses one of its high-neighbors (i+H, j) or (i, j+H) to be its parent. This is called an inversion
• Example (H = 2): there is one inversion at mote (2, 2) because the two low-neighbors of (2, 2) have failed

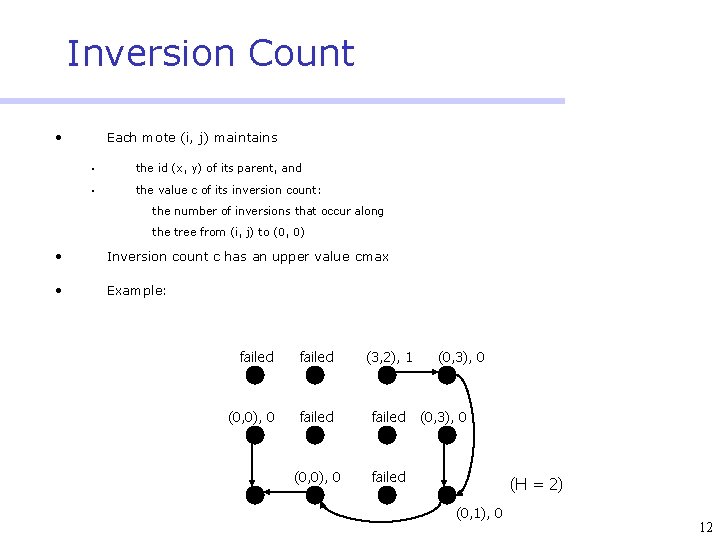

Inversion Count
• Each mote (i, j) maintains
  – the id (x, y) of its parent, and
  – the value c of its inversion count: the number of inversions that occur along the tree from (i, j) to (0, 0)
• Inversion count c has an upper bound cmax
[Figure: example with H = 2 and failed motes; labels give parent id and inversion count, e.g. "(3, 2), 1", "(0, 0), 0", "(0, 3), 0", "(0, 1), 0"]

Protocol Message
• If a mote (i, j) has a parent, then every T seconds it sends a msg with three fields: connected(i, j, c), where c is the inversion count of mote (i, j)
• Otherwise, mote (i, j) does nothing
• Every 3 seconds, mote (0, 0) sends a msg with three fields: connected(0, 0, 0)

Acquiring a Parent
• Initially, every mote (i, j) has no parent
• When mote (i, j) has no parent and receives connected(x, y, e), (i, j) chooses (x, y) as its parent
  – if (x, y) is its low-neighbor, or
  – if (x, y) is its high-neighbor and e < cmax
• When mote (i, j) receives a connected(x, y, e) and chooses (x, y) to be its parent, (i, j) computes its inversion count c as:
  – if (x, y) is a low-neighbor, c := e
  – if (x, y) is a high-neighbor, c := e + 1

Keeping the Parent
• If mote (i, j) has a parent (x, y) and receives any connected(x, y, e), then (i, j) updates its inversion count c as:
  – if (x, y) is a low-neighbor, c := e
  – if (x, y) is a high-neighbor and e < cmax, c := e + 1
  – if (x, y) is a high-neighbor and e = cmax, then (i, j) loses its parent

Losing the Parent
• There are two scenarios that cause mote (i, j) to lose its parent (x, y)
  – (i, j) receives a connected(x, y, cmax) msg and (x, y) happens to be a high-neighbor of (i, j)
  – (i, j) does not receive any connected(x, y, e) msg for k*T seconds

Replacing the Parent
• If mote (i, j) has a parent (x, y), and
  – receives a connected(u, v, f) msg where (u, v) is a neighbor of (i, j), and
  – detects that adopting (u, v) as its parent and using f to compute its inversion count c would reduce the value of c,
  then (i, j) adopts (u, v) as its parent and recomputes its inversion count

Allowing Long Links
• Add the following rule to the previous rules for acquiring and replacing a parent:
  – If any mote (i, j) ever receives a message connected(0, 0, 0), then mote (i, j) makes mote (0, 0) its parent
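
The rules for acquiring, keeping, losing, and replacing a parent can be collected into one small state machine for a non-root mote. This is a sketch, not the authors' code: the class and method names are hypothetical, time is passed in explicitly as `now`, and setting c to 0 when the long-link rule fires is an assumption (the slides do not say which inversion count a mote takes in that case).

```python
class Mote:
    """Parent-selection state of a non-root mote (i, j) in the logical grid."""

    def __init__(self, i, j, H, cmax, k, T):
        self.id = (i, j)
        self.H, self.cmax = H, cmax
        self.timeout = k * T          # silence period after which the parent is lost
        self.parent = None            # id (x, y) of the parent, or None
        self.c = None                 # inversion count
        self.last_heard = None        # time of the last msg from the parent

    def _kind(self, sender):
        i, j = self.id
        if sender in [(i - self.H, j), (i, j - self.H)]:
            return "low"
        if sender in [(i + self.H, j), (i, j + self.H)]:
            return "high"
        return None

    def _adopt(self, sender, c, now):
        self.parent, self.c, self.last_heard = sender, c, now

    def _drop(self):
        self.parent = self.c = self.last_heard = None

    def on_connected(self, x, y, e, now):
        sender = (x, y)
        if sender == (0, 0) and e == 0:
            self._adopt(sender, 0, now)       # long-link rule (c = 0 is assumed)
            return
        kind = self._kind(sender)
        if kind is None:
            return                            # not a neighbor: discard the msg
        if kind == "high" and e >= self.cmax:
            if sender == self.parent:
                self._drop()                  # lose a high parent that reports cmax
            return
        new_c = e if kind == "low" else e + 1
        # Acquire (no parent), keep (same parent), or replace (strictly smaller c).
        if self.parent is None or sender == self.parent or new_c < self.c:
            self._adopt(sender, new_c, now)

    def on_tick(self, now):
        """Call every T seconds; returns the msg to send, or None."""
        if self.parent is not None and now - self.last_heard >= self.timeout:
            self._drop()                      # parent silent for k*T seconds
        if self.parent is None:
            return None
        return ("connected", self.id[0], self.id[1], self.c)
```

For example, a mote at (2, 2) with H = 2 that first hears its high-neighbor (4, 2) adopts it with c = 1, then switches to low-neighbor (0, 2) as soon as that neighbor reports a count giving c = 0.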

Application context
• A Line in the Sand (Lites)
  – field sensor network experiment for real-time target detection, classification, and tracking
• A target can be detected by tens of nodes
  – Traffic burst
• Bursty convergecast
  – Deliver traffic bursts to a base station nearby

Problem statement
• Only 33.7% of packets are delivered with the default TinyOS messaging stack
  – Unable to support precise event classification
• Objectives
  – Close to 100% reliability
  – Close to optimal event goodput (real-time)
• Experimental study for high fidelity

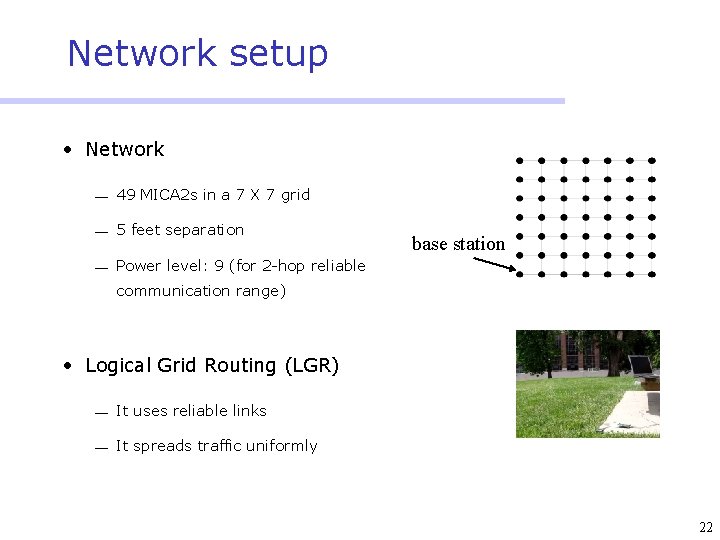

Network setup
• Network
  – 49 MICA2s in a 7 x 7 grid
  – 5 feet separation
  – Power level: 9 (for 2-hop reliable base station communication range)
• Logical Grid Routing (LGR)
  – It uses reliable links
  – It spreads traffic uniformly

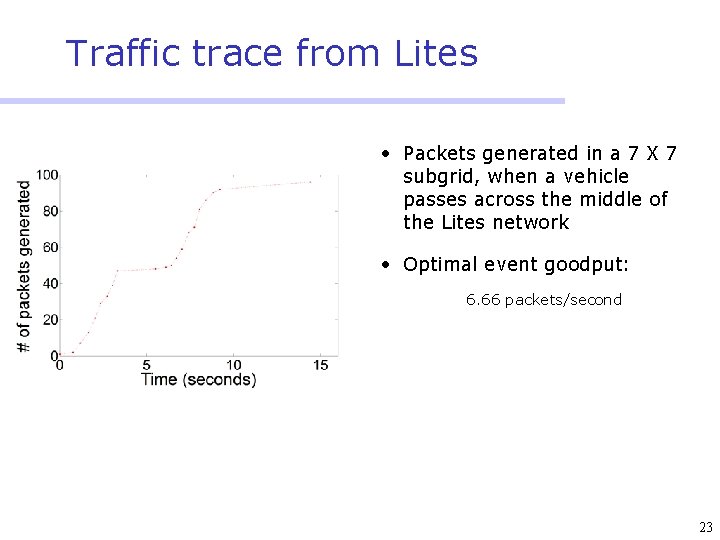

Traffic trace from Lites
• Packets generated in a 7 x 7 subgrid when a vehicle passes across the middle of the Lites network
• Optimal event goodput: 6.66 packets/second

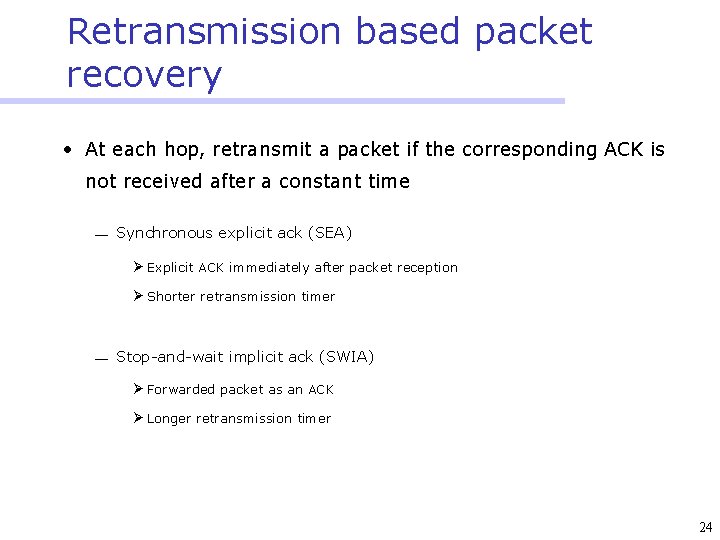

Retransmission based packet recovery
• At each hop, retransmit a packet if the corresponding ACK is not received after a constant time
  – Synchronous explicit ack (SEA)
    – Explicit ACK immediately after packet reception
    – Shorter retransmission timer
  – Stop-and-wait implicit ack (SWIA)
    – Forwarded packet as an ACK
    – Longer retransmission timer

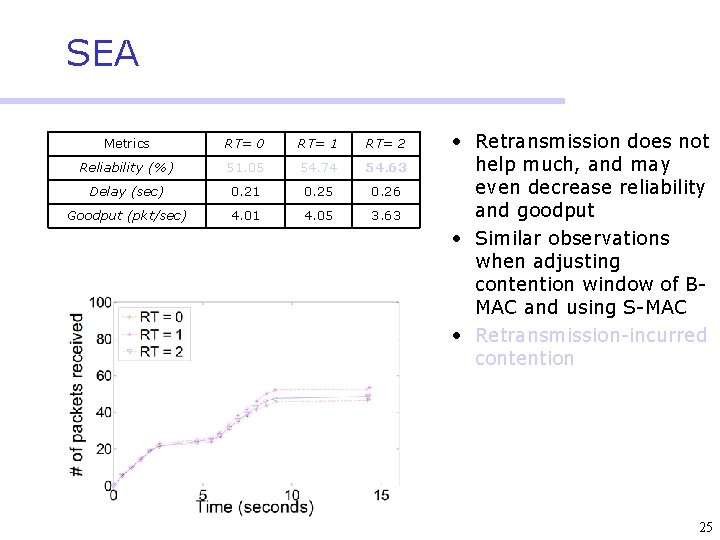

SEA

| Metrics | RT = 0 | RT = 1 | RT = 2 |
|---|---|---|---|
| Reliability (%) | 51.05 | 54.74 | 54.63 |
| Delay (sec) | 0.21 | 0.25 | 0.26 |
| Goodput (pkt/sec) | 4.01 | 4.05 | 3.63 |

• Retransmission does not help much, and may even decrease reliability and goodput
  – Similar observations when adjusting the contention window of B-MAC and when using S-MAC
  – Retransmission-incurred contention

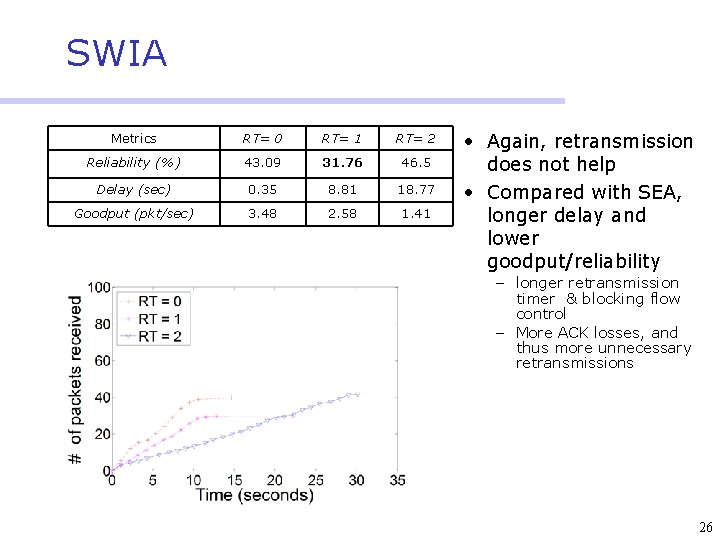

SWIA

| Metrics | RT = 0 | RT = 1 | RT = 2 |
|---|---|---|---|
| Reliability (%) | 43.09 | 31.76 | 46.5 |
| Delay (sec) | 0.35 | 8.81 | 18.77 |
| Goodput (pkt/sec) | 3.48 | 2.58 | 1.41 |

• Again, retransmission does not help
• Compared with SEA, longer delay and lower goodput/reliability
  – Longer retransmission timer and blocking flow control
  – More ACK losses, and thus more unnecessary retransmissions

Protocol RBC
• Differentiated contention control
  – Reduce channel contention caused by packet retransmissions
• Window-less block ACK
  – Non-blocking flow control
  – Reduce ACK loss
• Fine-grained tuning of retransmission timers

Window-less block ACK
• Non-blocking window-less queue management
  – Unlike sliding-window based block ACK, in-order packet delivery is not considered
    – Packets have been timestamped
  – For block ACK, sender and receiver maintain the "order" in which packets have been transmitted
    – "order" is identified without using a sliding window, thus there is no upper bound on the number of un-ACKed packet transmissions

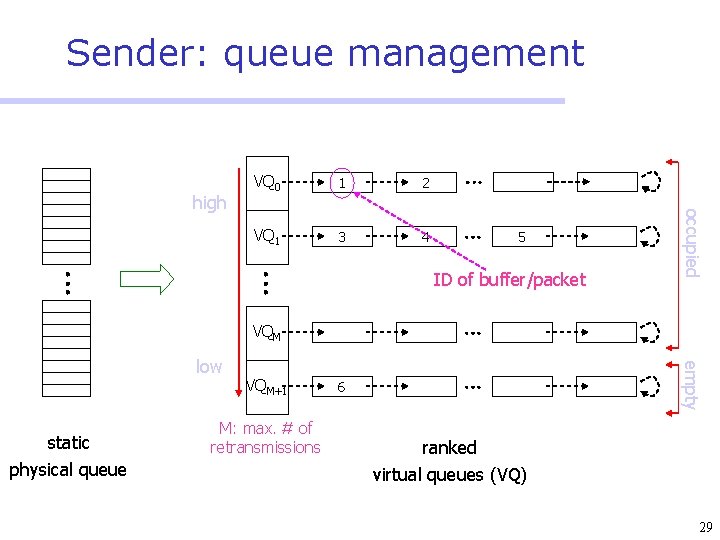

Sender: queue management
[Figure: a static physical queue organized into ranked virtual queues (VQ), from VQ0 (high) through VQ1, ..., VQM to VQM+1 (low); M: max. # of retransmissions; buffers are marked occupied or empty and labeled with the ID of the buffer/packet (1 to 6)]

Differentiated contention control
• Schedule channel access across nodes
• Higher priority in channel access is given to
  – nodes having fresher packets
  – nodes having more queued packets

Implementation of contention control
• The rank of a node j is the tuple rank(j) = <M - k, |VQk|, ID(j)>, where
  – M: maximum number of retransmissions per hop
  – VQk: the highest-ranked non-empty virtual queue at j
  – ID(j): the ID of node j
• A node with a larger rank value has higher priority
• Neighboring nodes exchange their ranks
  – Lower-ranked nodes leave the floor to higher-ranked ones
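
A short sketch of how such a rank might be computed and compared. It assumes ranks are compared lexicographically (first M - k, then queue length, then node ID as a tie-breaker), which the slide does not state outright, and it takes the sizes of VQ0..VQM as a plain list; the function names are hypothetical.

```python
def rank(M, vq_sizes, node_id):
    """Return (M - k, |VQk|, ID) for the highest-ranked non-empty VQk, or None.

    vq_sizes[k] is the number of packets in VQk for k = 0..M, where VQ0 ranks highest.
    """
    for k in range(M + 1):
        if vq_sizes[k] > 0:
            return (M - k, vq_sizes[k], node_id)
    return None                      # nothing queued: the node does not contend

def winner(ranks):
    """ID of the neighbor that gets the floor; Python tuples compare lexicographically."""
    contenders = [r for r in ranks if r is not None]
    return max(contenders)[2] if contenders else None

a = rank(2, [0, 3, 1], node_id=7)    # freshest packets are in VQ1
b = rank(2, [1, 0, 0], node_id=3)    # has a packet in VQ0 (never retransmitted)
c = rank(2, [0, 5, 0], node_id=9)    # VQ1 as well, but with more queued packets
```

Node 3 wins against both others because it holds the freshest packet; between nodes 7 and 9, node 9 wins on queue length.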

Fine tuning retransmission timer
• Timeout value: tradeoff between
  – delay in necessary retransmissions
  – probability of unnecessary retransmissions
• In RBC
  – Dynamically estimate ACK delay
  – Conservatively choose timeout value; also reset timers upon packet and ACK loss
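
The slide does not say how RBC estimates ACK delay. One common way to do this kind of estimation, borrowed here from TCP's retransmission timer and not taken from the slides, is an exponentially weighted mean plus a multiple of the deviation. The class name, the constants, and the fallback timeout below are all assumptions for illustration.

```python
class AckTimer:
    """Conservative timeout = smoothed ACK delay + k * smoothed deviation."""

    def __init__(self, alpha=0.125, beta=0.25, k=4.0, default=1.0):
        self.alpha, self.beta, self.k = alpha, beta, k
        self.default = default       # timeout used before any ACK delay is observed
        self.mean = None
        self.dev = 0.0

    def observe(self, delay):
        """Feed one measured ACK delay (seconds)."""
        if self.mean is None:
            self.mean, self.dev = delay, delay / 2
        else:
            self.dev = (1 - self.beta) * self.dev + self.beta * abs(delay - self.mean)
            self.mean = (1 - self.alpha) * self.mean + self.alpha * delay

    def timeout(self):
        if self.mean is None:
            return self.default
        return self.mean + self.k * self.dev
```

The deviation term is what makes the choice conservative: when ACK delays vary a lot, the timeout grows and fewer unnecessary retransmissions are triggered.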

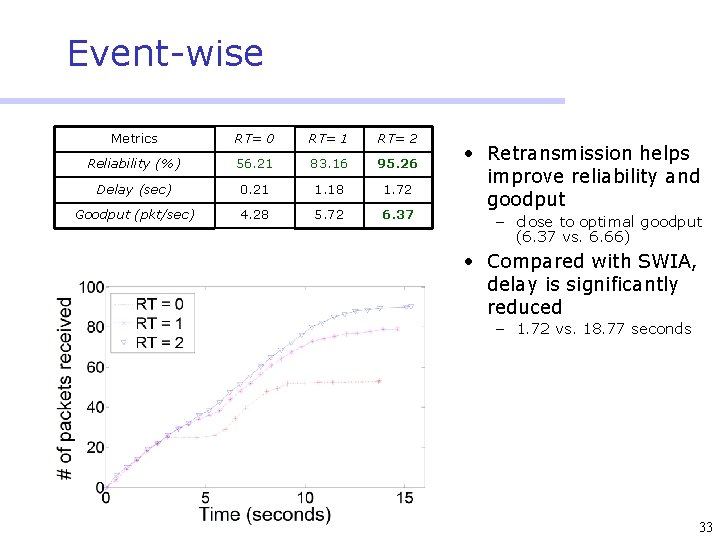

Event-wise

| Metrics | RT = 0 | RT = 1 | RT = 2 |
|---|---|---|---|
| Reliability (%) | 56.21 | 83.16 | 95.26 |
| Delay (sec) | 0.21 | 1.18 | 1.72 |
| Goodput (pkt/sec) | 4.28 | 5.72 | 6.37 |

• Retransmission helps improve reliability and goodput
  – Close to optimal goodput (6.37 vs. 6.66)
• Compared with SWIA, delay is significantly reduced
  – 1.72 vs. 18.77 seconds

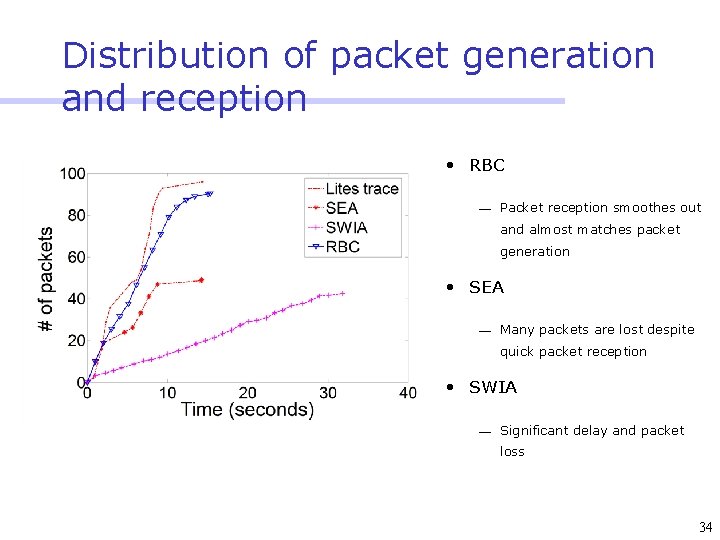

Distribution of packet generation and reception
• RBC
  – Packet reception smooths out and almost matches packet generation
• SEA
  – Many packets are lost despite quick packet reception
• SWIA
  – Significant delay and packet loss

Field deployment (http://www.cse.ohio-state.edu/exscal)
• A Line in the Sand (Lites)
  – ~100 MICA2s
  – 10 x 20 m² field
  – Sensors: magnetometer, micro impulse radar (MIR)
• ExScal
  – ~1,000 XSMs, ~200 Stargates
  – 288 x 1260 m² field
  – Sensors: passive infrared radar (PIR), acoustic sensor, magnetometer

Flooding
• Forward the message upon hearing it for the first time
• Leads to broadcast storm and loss of messages
• Obvious optimizations are possible
  – The node sets a timer upon receiving the message for the first time
    – The timer might be based on RSSI
  – If, before the timer expires, the node hears the message broadcast T times, then the node decides not to broadcast
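
The timer-and-counter optimization can be sketched as below. The radio and the timer are left out: the caller is expected to start a timer when `on_receive` returns True and to call `on_timer` when it fires. Whether the first reception counts toward T is not specified on the slide; this sketch assumes only copies heard after the first one count. The class and method names are hypothetical.

```python
class FloodNode:
    """Counter-based suppression of redundant rebroadcasts."""

    def __init__(self, threshold):
        self.threshold = threshold   # T: copies overheard that cancel a rebroadcast
        self.heard = {}              # msg_id -> copies received while the timer runs
        self.done = set()            # msg_ids already decided

    def on_receive(self, msg_id):
        """Record one copy; return True if this is the first copy (start a timer)."""
        if msg_id in self.done:
            return False
        first = msg_id not in self.heard
        self.heard[msg_id] = self.heard.get(msg_id, 0) + 1
        return first

    def on_timer(self, msg_id):
        """Timer expired; return True if the node should rebroadcast."""
        copies = self.heard.pop(msg_id)
        self.done.add(msg_id)
        return copies - 1 < self.threshold   # exclude the first reception

quiet = FloodNode(threshold=2)
quiet.on_receive("m1")               # first copy: timer starts
quiet.on_receive("m1")               # one more copy overheard
```

In a dense neighborhood most nodes overhear T copies before their timer fires and stay silent, which is what dampens the broadcast storm.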

Flooding, gossiping, flooding, …
• Flood a message upon first hearing it
• Gossip periodically (less frequently) to ensure that there are no missed messages
  – Upon detecting a missed message, disseminate by flooding again
• Best-effort flooding (fast), followed by guaranteed-coverage gossiping (slow), followed by best-effort flooding
• The algorithm takes care of delivery to loosely connected sections of the WSN
Livadas and Lynch, 2003

Trickle
• See Phil Levis’s talk

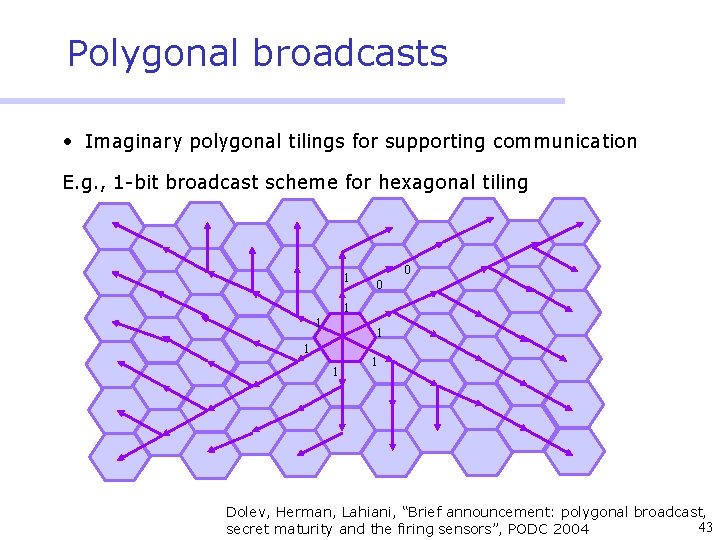

Polygonal broadcasts
• Imaginary polygonal tilings for supporting communication
  – E.g., 1-bit broadcast scheme for hexagonal tiling
[Figure: hexagonal tiling with cells labeled 0 or 1]
Dolev, Herman, Lahiani, “Brief announcement: polygonal broadcast, secret maturity and the firing sensors”, PODC 2004

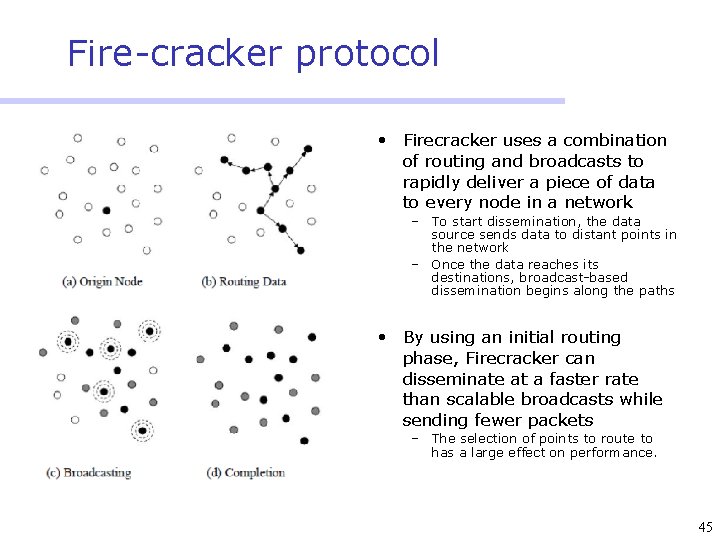

Fire-cracker protocol
• Firecracker uses a combination of routing and broadcasts to rapidly deliver a piece of data to every node in a network
  – To start dissemination, the data source sends data to distant points in the network
  – Once the data reaches its destinations, broadcast-based dissemination begins along the paths
• By using an initial routing phase, Firecracker can disseminate at a faster rate than scalable broadcasts while sending fewer packets
  – The selection of points to route to has a large effect on performance

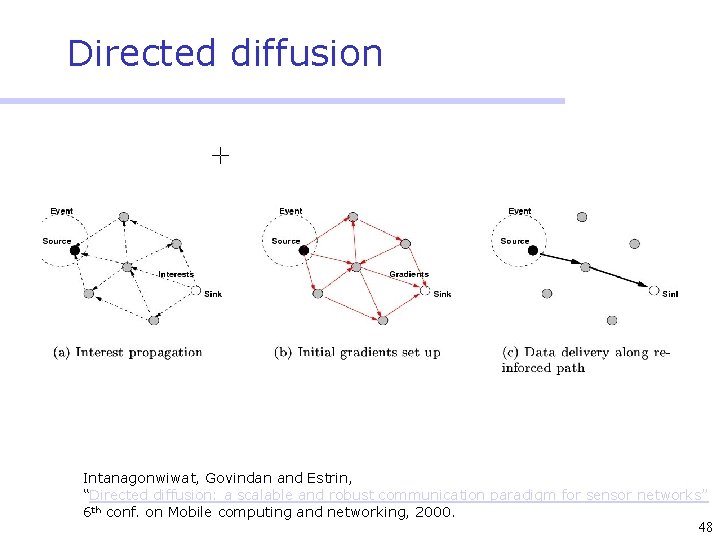

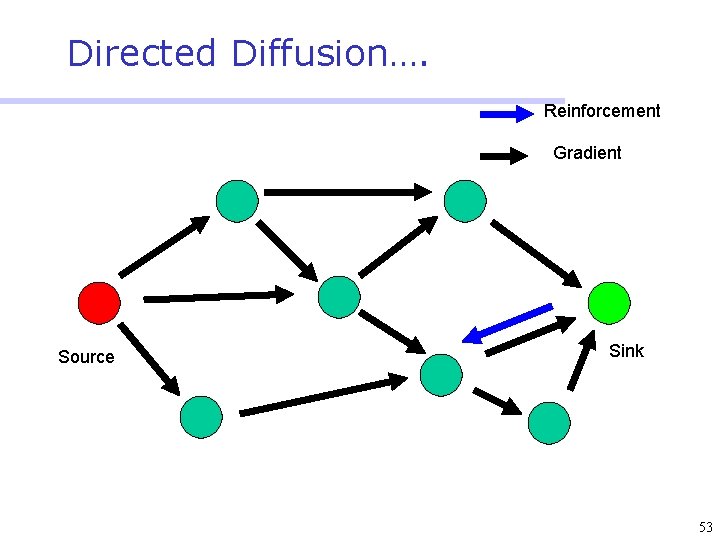

Directed Diffusion
• Protocol initiated by the destination (through a query)
• Data has attributes; the sink broadcasts interests
• Nodes diffuse the interest towards producers via a sequence of local interactions
• Nodes receiving the broadcast set up a gradient (leading towards the sink)
• Intermediate nodes opportunistically fuse interests, and aggregate, correlate, or cache data
• Reinforcement and negative reinforcement are used to converge to an efficient distribution

Directed diffusion
Intanagonwiwat, Govindan and Estrin, “Directed diffusion: a scalable and robust communication paradigm for sensor networks”, 6th conf. on Mobile computing and networking, 2000.

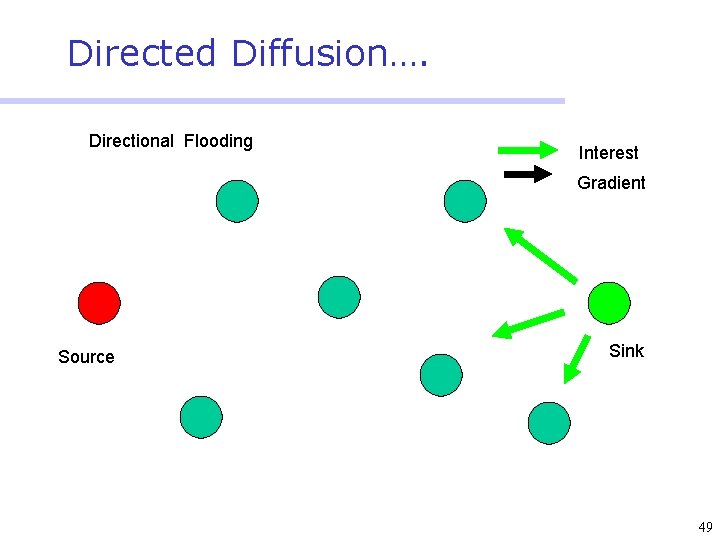

Directed Diffusion…. Directional Flooding Interest Gradient Source Sink 49

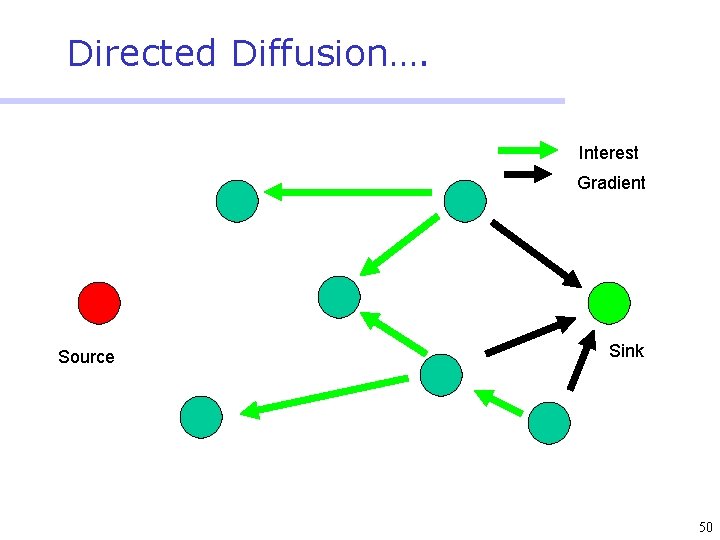

Directed Diffusion…. Interest Gradient Source Sink 50

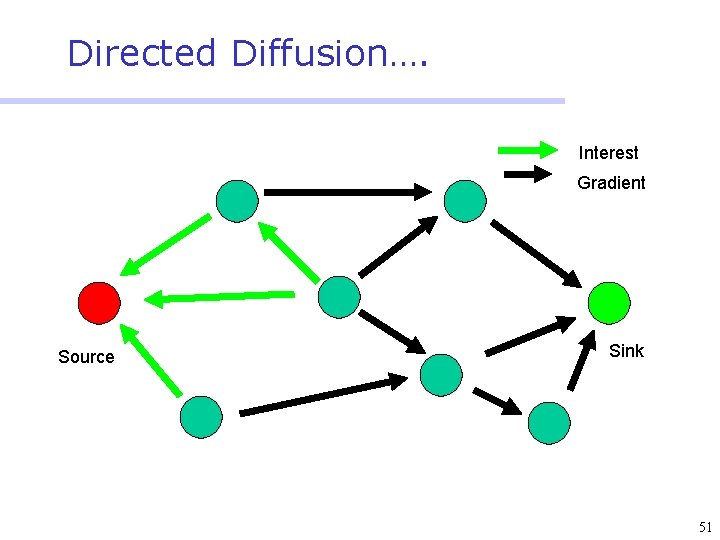

Directed Diffusion…. Interest Gradient Source Sink 51

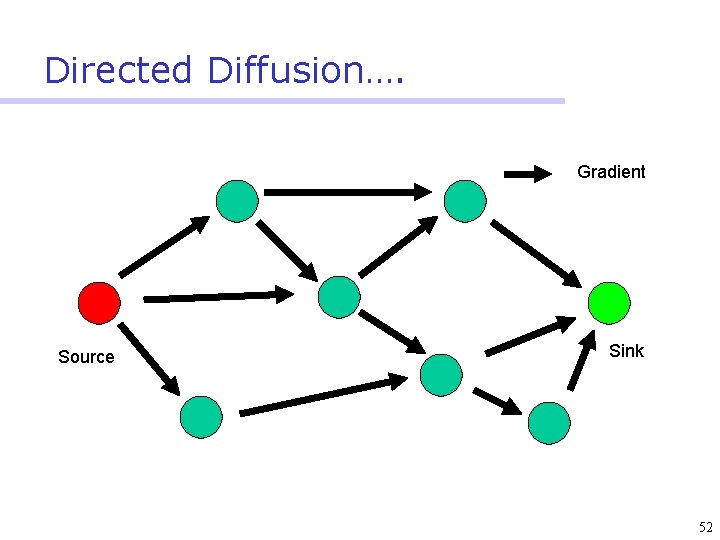

Directed Diffusion…. Gradient Source Sink 52

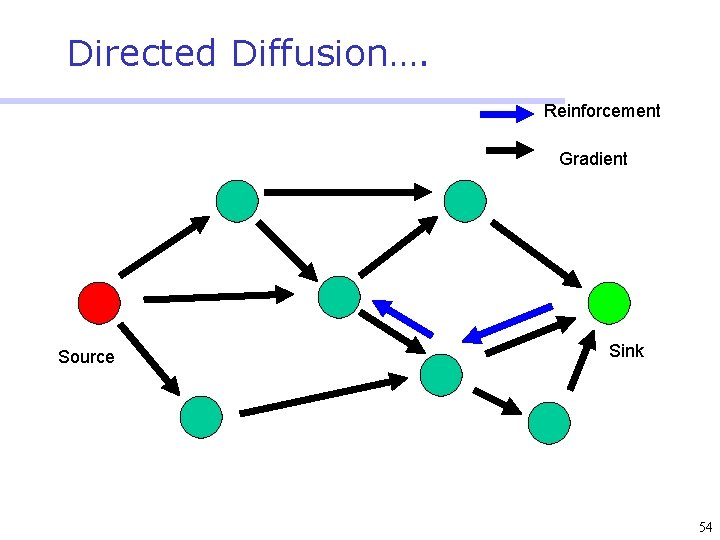

Directed Diffusion…. Reinforcement Gradient Source Sink 53

Directed Diffusion…. Reinforcement Gradient Source Sink 54

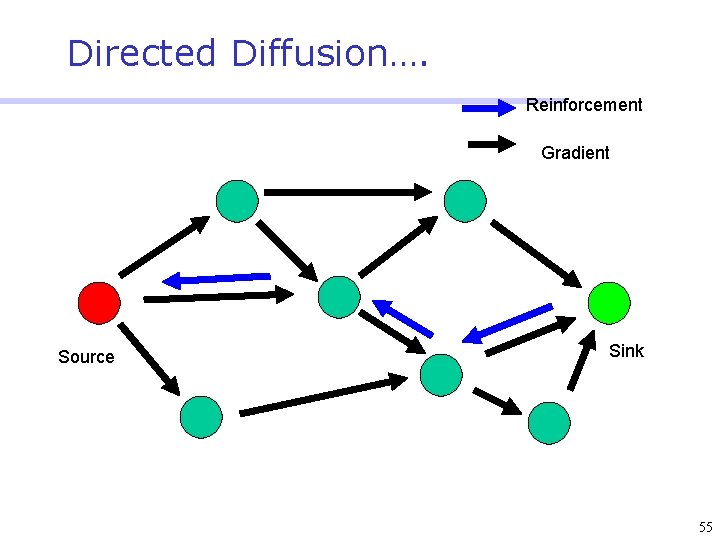

Directed Diffusion…. Reinforcement Gradient Source Sink 55

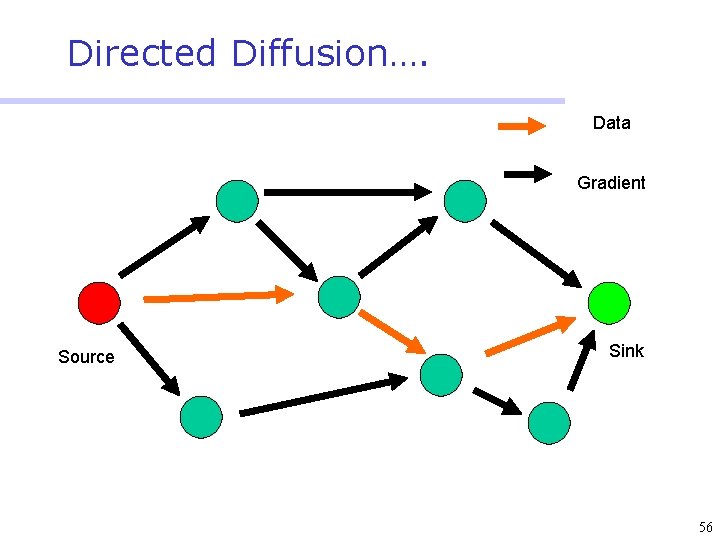

Directed Diffusion…. Data Gradient Source Sink 56

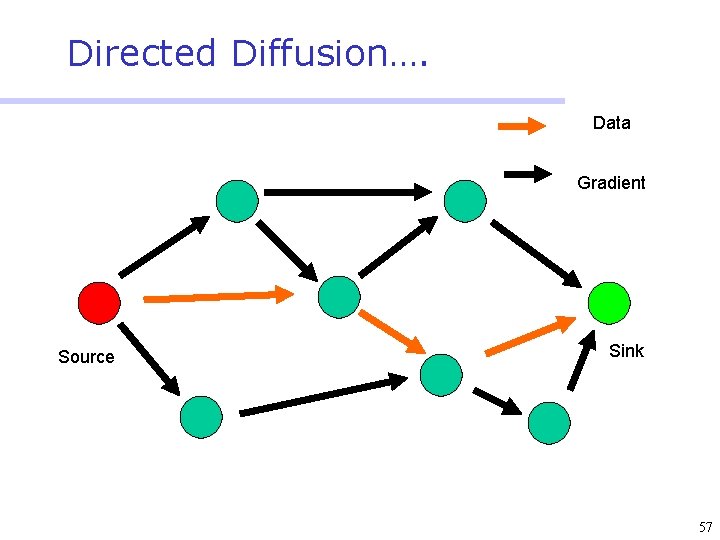

Directed Diffusion…. Data Gradient Source Sink 57

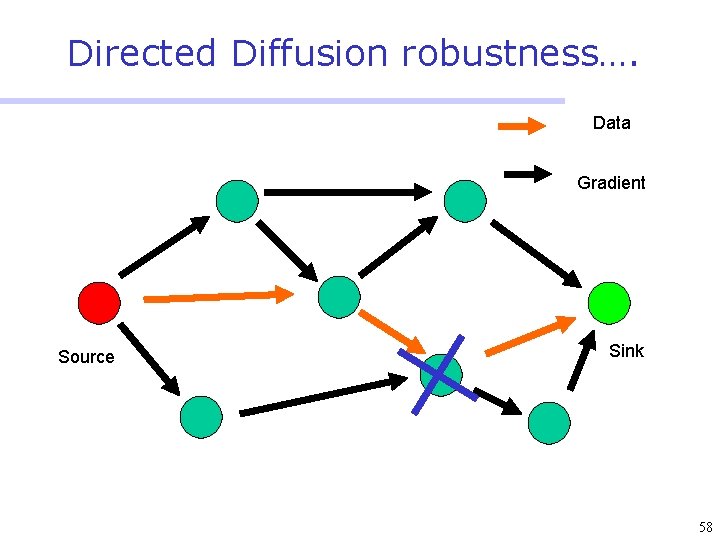

Directed Diffusion robustness…. Data Gradient Source Sink 58

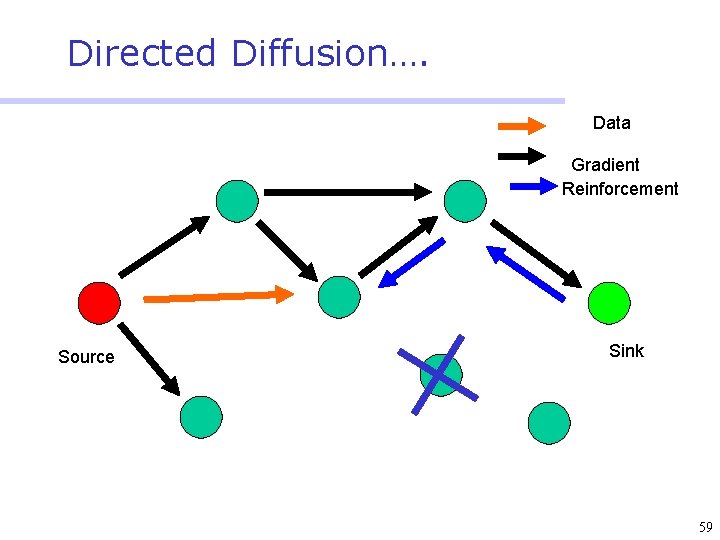

Directed Diffusion…. Data Gradient Reinforcement Source Sink 59

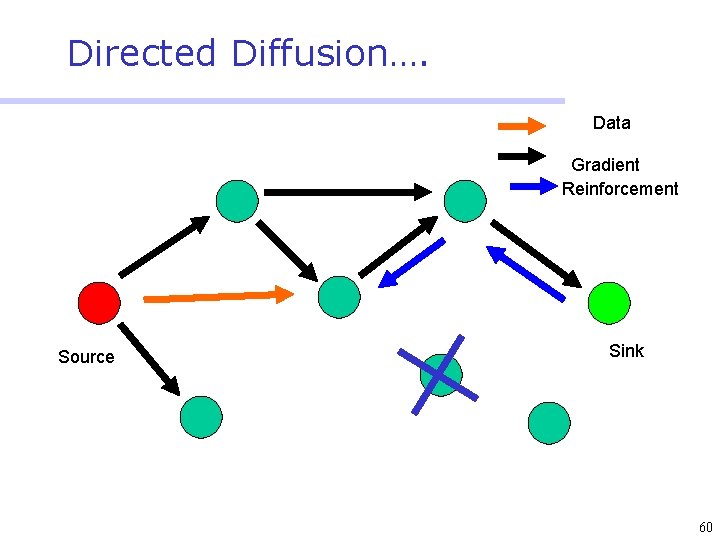

Directed Diffusion…. Data Gradient Reinforcement Source Sink 60

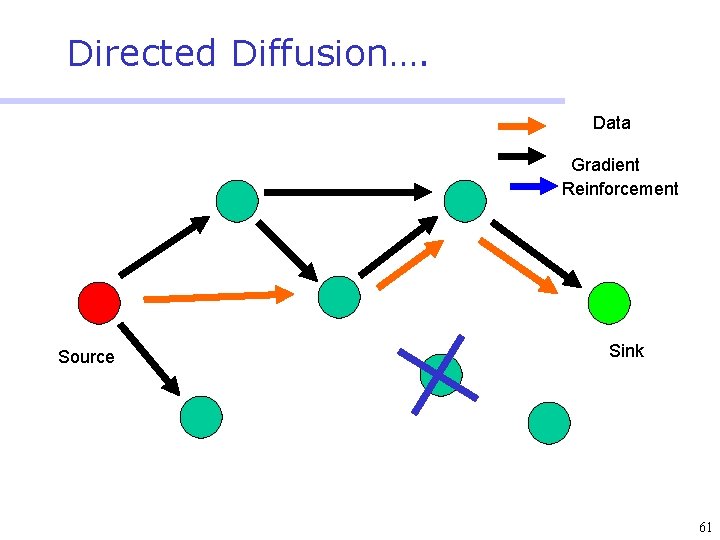

Directed Diffusion…. Data Gradient Reinforcement Source Sink 61

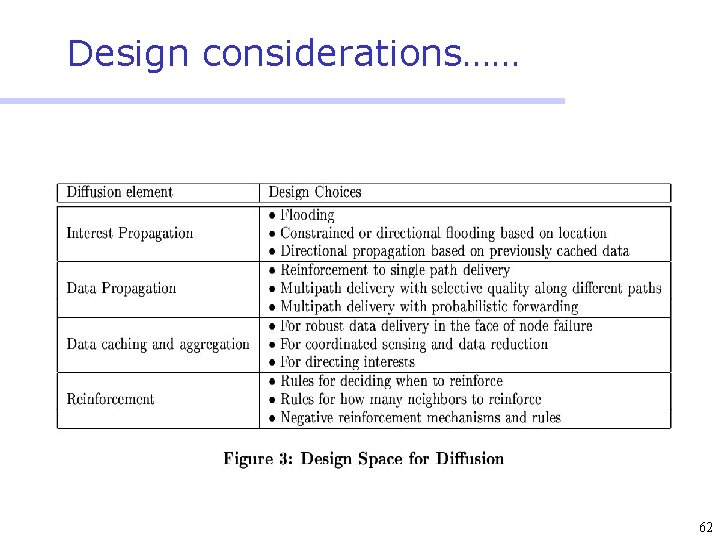

Design considerations

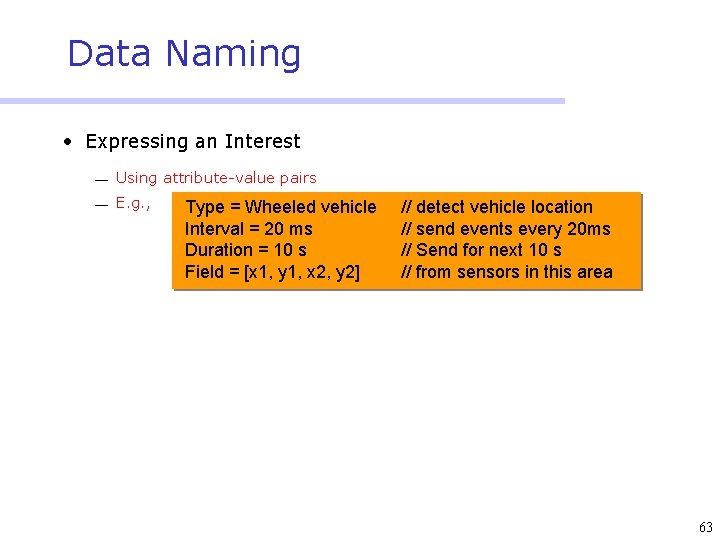

Data Naming
• Expressing an Interest
  – Using attribute-value pairs
  – E.g.,
      Type = Wheeled vehicle         // detect vehicle location
      Interval = 20 ms               // send events every 20 ms
      Duration = 10 s                // send for next 10 s
      Field = [x1, y1, x2, y2]       // from sensors in this area
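
As an illustration of attribute-value naming, the interest above could be held as a plain dictionary and matched against locally detected events. The key names, the concrete rectangle, and the `matches` function are hypothetical; the slide only lists the four attributes.

```python
# The interest from the slide, with an example rectangle filled in for Field.
interest = {
    "type": "wheeled vehicle",       # detect vehicle location
    "interval_ms": 20,               # send events every 20 ms
    "duration_s": 10,                # send for the next 10 s
    "field": (0, 0, 100, 100),       # x1, y1, x2, y2 of the area of interest
}

def matches(interest, event):
    """True if a sensed event has the requested type and lies inside the field."""
    x1, y1, x2, y2 = interest["field"]
    x, y = event["location"]
    return (event["type"] == interest["type"]
            and x1 <= x <= x2 and y1 <= y <= y2)

inside = {"type": "wheeled vehicle", "location": (40, 60)}
outside = {"type": "wheeled vehicle", "location": (140, 60)}
```

A node would report `inside` toward the sink along its gradient and ignore `outside`.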

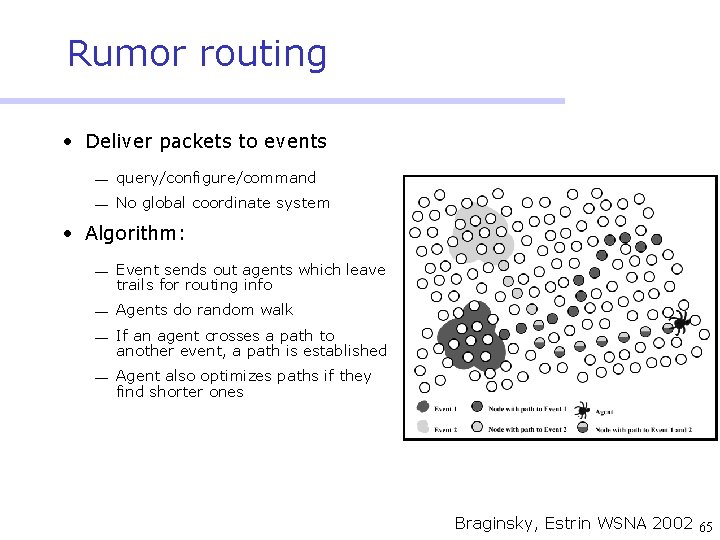

Rumor routing
• Deliver packets to events
  – query/configure/command
  – No global coordinate system
• Algorithm:
  – An event sends out agents, which leave trails of routing info
  – Agents do a random walk
  – If an agent crosses a path to another event, a path is established
  – Agents also optimize paths if they find shorter ones
Braginsky, Estrin, WSNA 2002
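
A simplified sketch of the agent and query walks, assuming a known adjacency list and a single event. Each node's table maps an event name to (next hop toward the event, distance); agents keep the shorter distance when they revisit a node, which is a reduced form of the path optimization on the slide. All function names, the `ttl` parameter, and the table layout are assumptions for illustration.

```python
import random

def spread_event(graph, tables, source, event, ttl, rng):
    """Random-walk one agent from the event source, leaving trails behind it."""
    tables[source][event] = (None, 0)
    node, dist = source, 0
    for _ in range(ttl):
        nxt = rng.choice(graph[node])
        dist += 1
        known = tables[nxt].get(event)
        if known is None or dist < known[1]:
            tables[nxt][event] = (node, dist)   # new or shorter trail via `node`
        else:
            dist = known[1]                     # agent adopts the shorter distance
        node = nxt

def route(tables, start, event):
    """Follow the trail from a node that already knows the event."""
    path = [start]
    while tables[path[-1]][event][0] is not None:
        path.append(tables[path[-1]][event][0])
    return path

def query(graph, tables, start, event, ttl, rng):
    """Random-walk a query until it crosses a trail, then follow the trail."""
    node, walked = start, [start]
    for _ in range(ttl + 1):
        if event in tables[node]:
            return walked + route(tables, node, event)[1:]
        node = rng.choice(graph[node])
        walked.append(node)
    return None                                 # gave up: no trail found

graph = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3]}   # a 5-node line
tables = {n: {} for n in graph}
rng = random.Random(0)
spread_event(graph, tables, source=0, event="fire", ttl=20, rng=rng)
```

Because both walks are random, a query can fail within its ttl; no global coordinates or flooding are needed when it succeeds.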
