Root Cause Analysis in the Department of Defense

- Slides: 28

David J. Nicholls (David.Nicholls@osd.mil)
February 1st, 2011

PARCA Introduction
- Institutional role of PARCA (Performance Assessments and Root Cause Analyses)
  - Provides USD(AT&L) with execution-phase situational awareness of major defense acquisition programs
  - Performs forensics for troubled programs
  - Reports its activities annually to the four congressional defense committees
- Three functions of the PARCA office
  - "Performance Assessment": function in statute
  - "Root Cause Analysis": function in statute
  - "EVMS" (Earned Value Management Systems): function not in statute

PARCA Does NOT...
- Forecast program requirements
  - Funding requirements
  - Total life-cycle cost
- Evaluate alternative means to execute
  - Acquisition strategies
  - Contracting terms and incentives
- Compare alternative means to achieve an end
  - Evaluations of alternate approaches
  - Cost-effectiveness

Necessary for independence

Overview
- PARCA root cause analysis
- Progress so far
- Areas to pursue

Root Cause Analysis Functions
- Statutory duties defined in WSARA 09 (Weapon Systems Acquisition Reform Act of 2009)
  - Conduct root cause analyses for major defense acquisition programs
    - As part of the Nunn-McCurdy breach certification process
    - When requested by designated officials
  - Issue policies, procedures, and guidance governing the conduct of root cause analyses
- Identify lessons learned for the benefit of the acquisition community

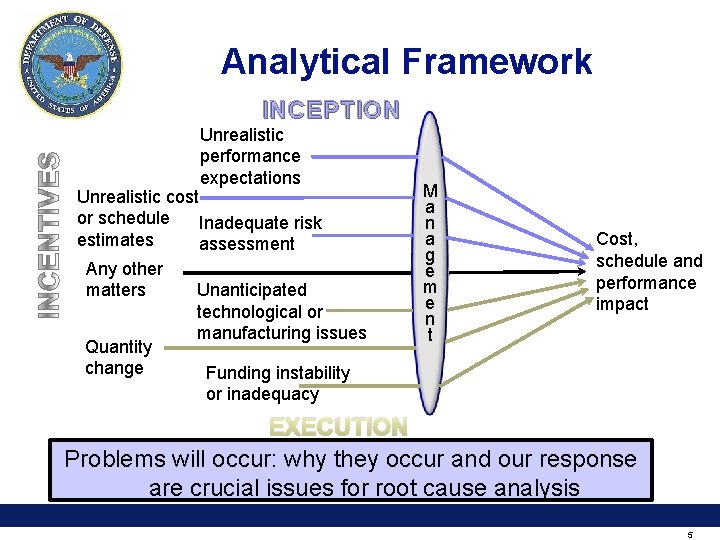

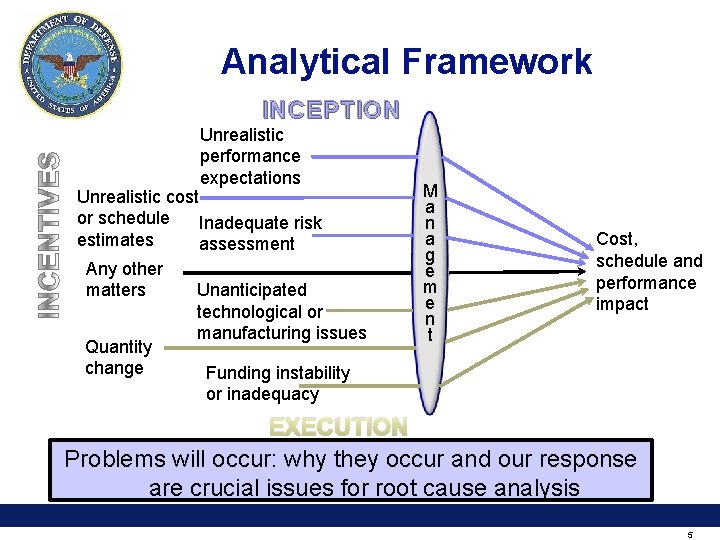

Analytical Framework
- Inception
  - Unrealistic performance expectations
  - Unrealistic cost or schedule estimates
  - Inadequate risk assessment
- Execution
  - Unanticipated technological or manufacturing issues
  - Quantity change
  - Funding instability or inadequacy
  - Any other matters
- Management
- Outcome: cost, schedule, and performance impact

Problems will occur: why they occur and how we respond are the crucial questions for root cause analysis.

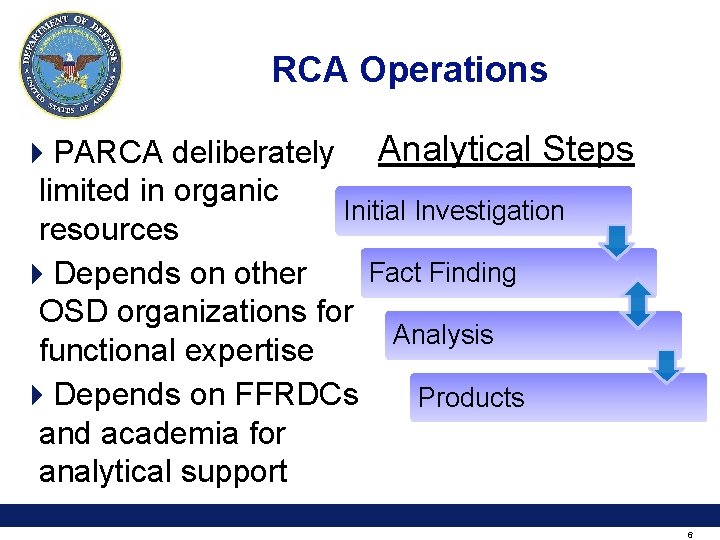

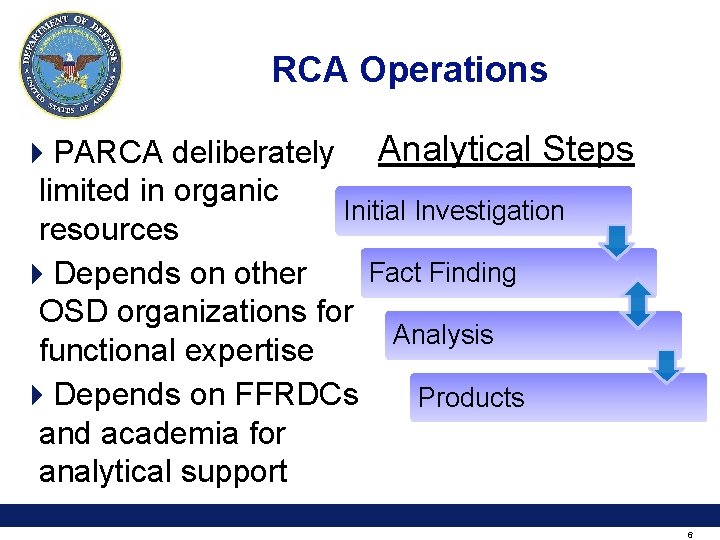

RCA Operations
- PARCA is deliberately limited in organic resources
- Depends on other OSD organizations for functional expertise
- Depends on FFRDCs (Federally Funded Research and Development Centers) and academia for analytical support

Analytical steps: Initial Investigation → Fact Finding → Analysis → Products

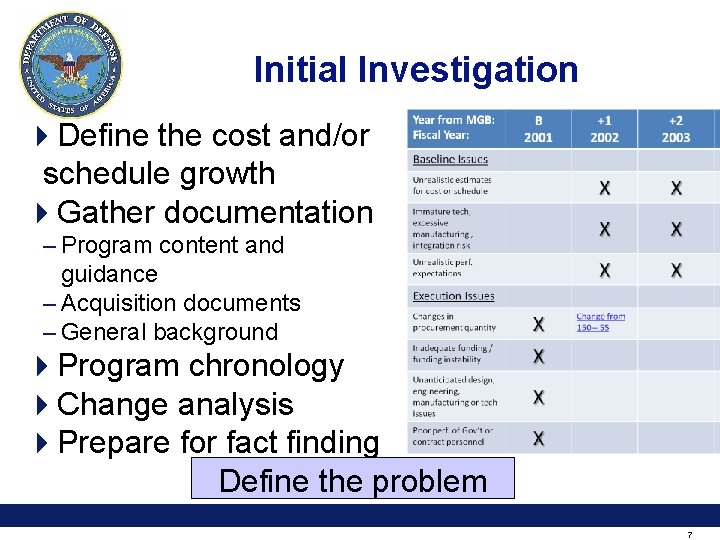

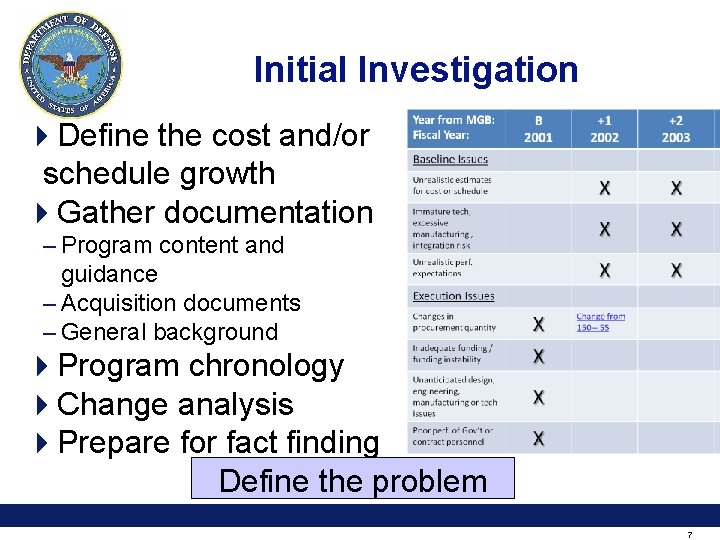

Initial Investigation
- Define the cost and/or schedule growth
- Gather documentation
  - Program content and guidance
  - Acquisition documents
  - General background
- Program chronology
- Change analysis
- Prepare for fact finding

Define the problem
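
For a breach-driven analysis, the growth being defined is measured against the Nunn-McCurdy unit-cost thresholds. A significant breach is at least 15% over the current baseline or at least 30% over the original baseline. A critical breach is at least 25% over the current baseline or at least 50% over the original baseline. The sketch below applies those thresholds. It assumes that each unit-cost measure (PAUC or APUC) is already in the same base-year dollars as its baselines. It ignores every other provision of the statute. The names `UnitCost` and `classify_breach` are hypothetical and do not represent PARCA tooling.

```python
from dataclasses import dataclass

# Simplified Nunn-McCurdy unit-cost thresholds, in percent growth.
# Assumption: all values are already normalized to the same base-year dollars.
SIGNIFICANT = {"current": 15.0, "original": 30.0}
CRITICAL = {"current": 25.0, "original": 50.0}


@dataclass
class UnitCost:
    """Hypothetical container for one unit-cost measure (e.g. PAUC or APUC)."""
    current_baseline: float
    original_baseline: float
    estimate: float


def growth_pct(baseline: float, estimate: float) -> float:
    """Percent growth of the estimate over a baseline."""
    return (estimate - baseline) / baseline * 100.0


def classify_breach(cost: UnitCost) -> str:
    """Return 'critical', 'significant', or 'none' for one unit-cost measure."""
    vs_current = growth_pct(cost.current_baseline, cost.estimate)
    vs_original = growth_pct(cost.original_baseline, cost.estimate)
    # Check the critical thresholds first; either baseline can trigger a breach.
    if vs_current >= CRITICAL["current"] or vs_original >= CRITICAL["original"]:
        return "critical"
    if vs_current >= SIGNIFICANT["current"] or vs_original >= SIGNIFICANT["original"]:
        return "significant"
    return "none"
```

Consider `classify_breach(UnitCost(current_baseline=100.0, original_baseline=90.0, estimate=118.0))`. The estimate is 18% over the current baseline and about 31% over the original baseline, so the call returns `"significant"`. The classification only confirms that a breach occurred. The root cause analysis then explains why the growth happened.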

Fact Finding
- Interviews
  - Program office and contractors
  - Organizations providing functional support to the program
- Analysis of performance reporting data
  - EVMS
  - Cost reporting
  - Performance metrics
- Program documentation, studies, and reports

Comprehend perspectives
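
EVMS data gathered during fact finding are commonly summarized with the standard earned value indicators. The sketch below assumes cumulative planned value (PV), earned value (EV), and actual cost (AC) taken from contractor performance reports:

- Cost variance is EV − AC.
- Schedule variance is EV − PV.
- The cost performance index (CPI) is EV/AC.
- The schedule performance index (SPI) is EV/PV.

The CPI-based estimate at completion (BAC/CPI) is only one of several EAC formulas. The class and function names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class EarnedValueSnapshot:
    """Cumulative EVM data at one reporting date, all in the same currency units."""
    planned_value: float  # PV (BCWS): budgeted cost of work scheduled
    earned_value: float   # EV (BCWP): budgeted cost of work performed
    actual_cost: float    # AC (ACWP): actual cost of work performed


def evm_indicators(s: EarnedValueSnapshot, budget_at_completion: float) -> dict:
    """Compute standard variances and indices from one cumulative snapshot."""
    cv = s.earned_value - s.actual_cost      # negative means over cost
    sv = s.earned_value - s.planned_value    # negative means behind schedule
    cpi = s.earned_value / s.actual_cost     # below 1.0 means over cost
    spi = s.earned_value / s.planned_value   # below 1.0 means behind schedule
    # One common estimate at completion: assumes past cost efficiency continues.
    eac = budget_at_completion / cpi
    return {"CV": cv, "SV": sv, "CPI": cpi, "SPI": spi, "EAC": eac}
```

Indices like these show where a program is drifting. They do not show why, which is what the interviews and documentation reviews in fact finding are for.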

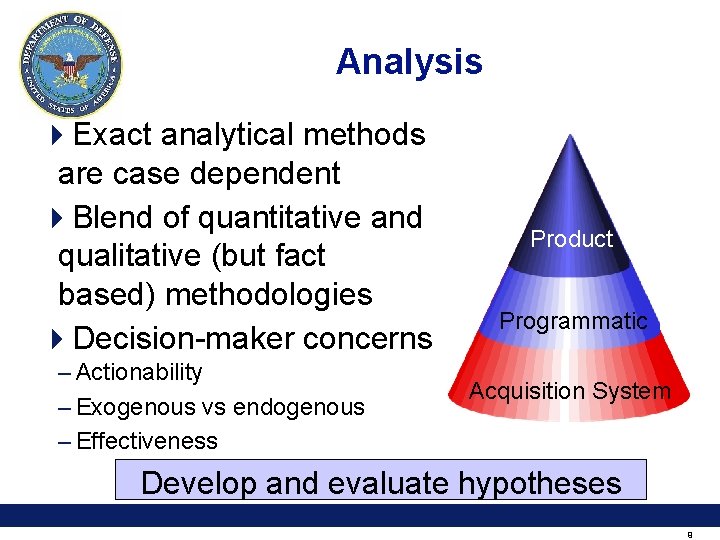

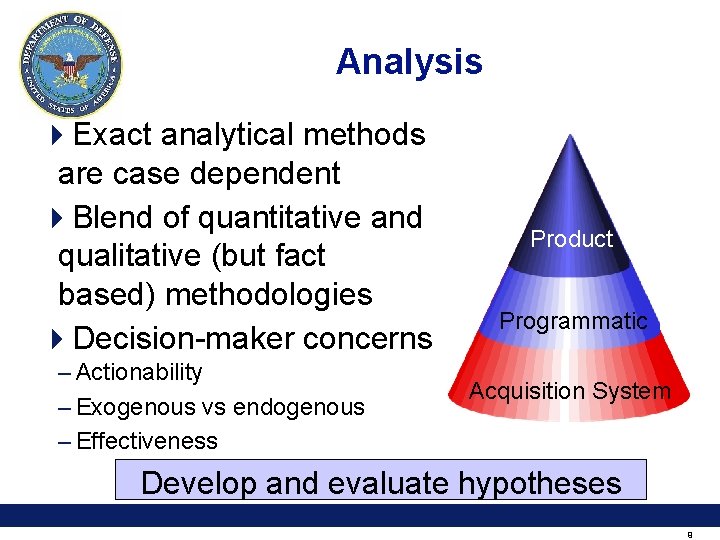

Analysis
- Exact analytical methods are case-dependent
- Blend of quantitative and qualitative (but fact-based) methodologies
- Decision-maker concerns
  - Actionability
  - Exogenous vs. endogenous
  - Effectiveness

Diagram labels: Product; Programmatic; Acquisition System

Develop and evaluate hypotheses

Products
- One-page memorandum for the Nunn-McCurdy certification process
  - Describes the breach and its root causes
  - Supporting document in the program certification package sent to Congress
  - Briefing summarizing results
- Lessons for the acquisition community
  - Reports
  - Analytical tools
- Contribution to PARCA's annual report to Congress

Overview
- PARCA root cause analysis
- Progress so far
- Areas to pursue

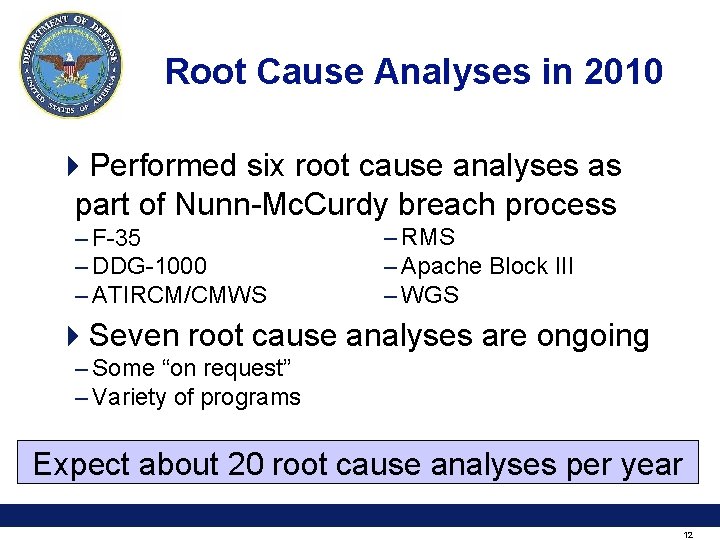

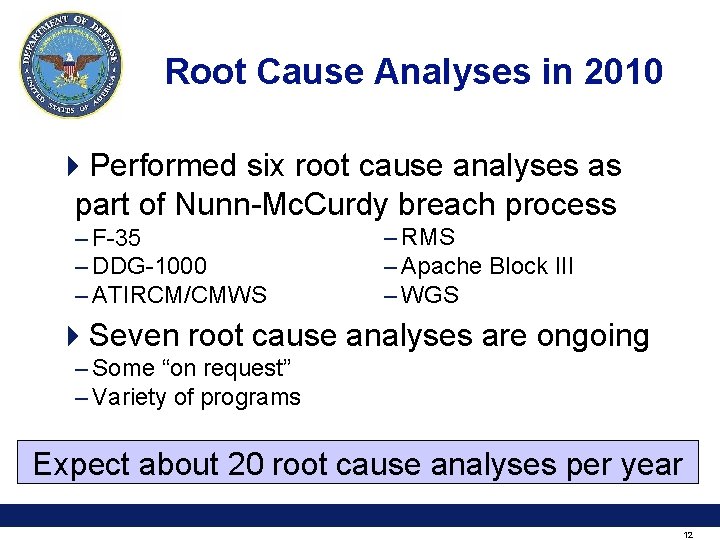

Root Cause Analyses in 2010
- Performed six root cause analyses as part of the Nunn-McCurdy breach process
  - F-35
  - DDG-1000
  - ATIRCM/CMWS
  - RMS
  - Apache Block III
  - WGS
- Seven root cause analyses are ongoing
  - Some "on request"
  - Variety of programs

Expect about 20 root cause analyses per year

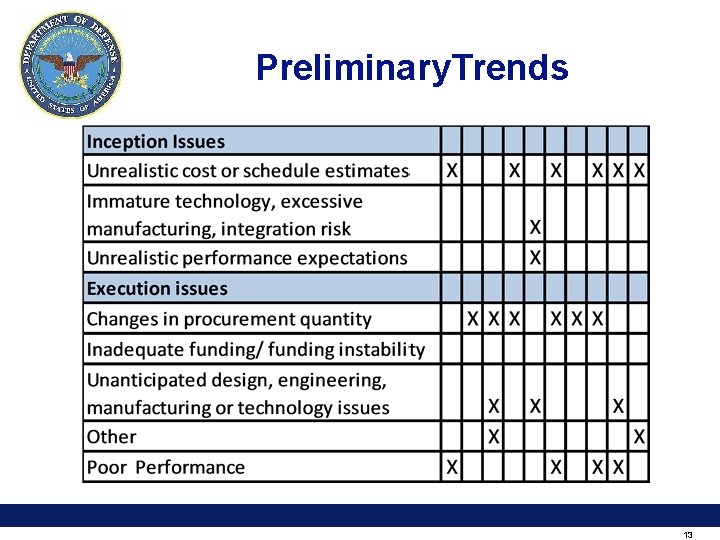

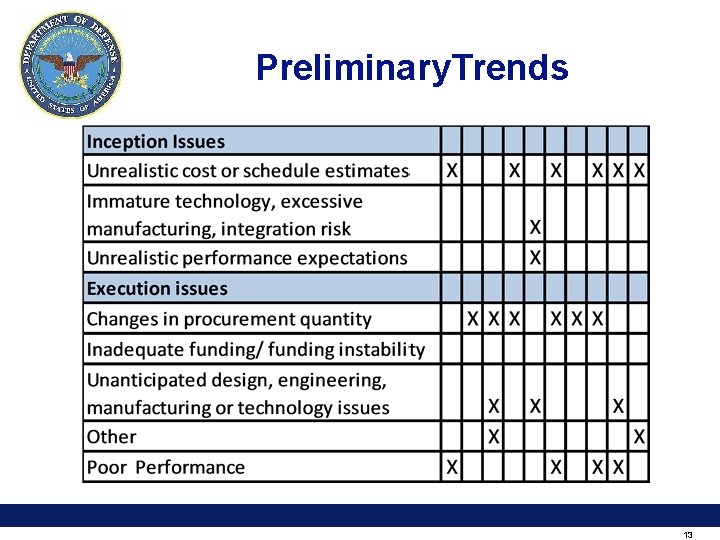

Preliminary Trends

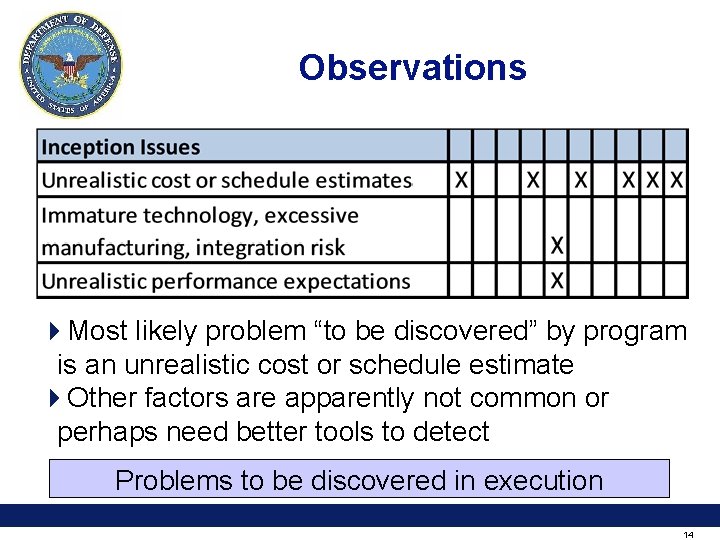

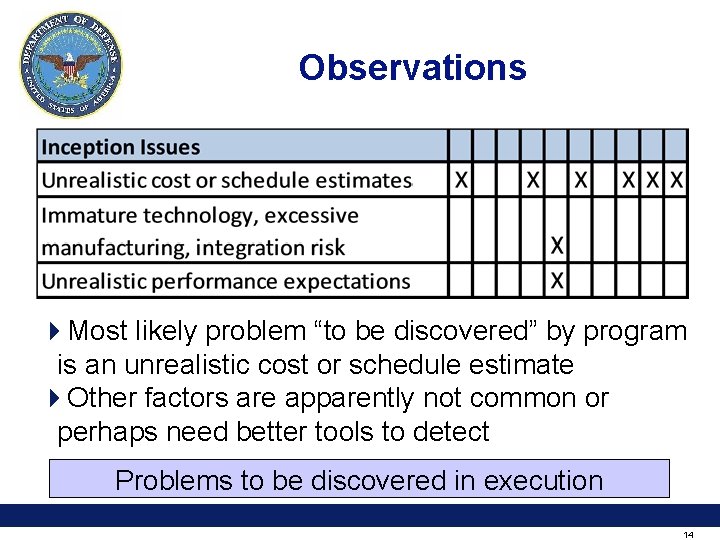

Observations
- The problem a program is most likely "to discover" is an unrealistic cost or schedule estimate
- Other factors are apparently uncommon, or better tools may be needed to detect them

Problems to be discovered in execution

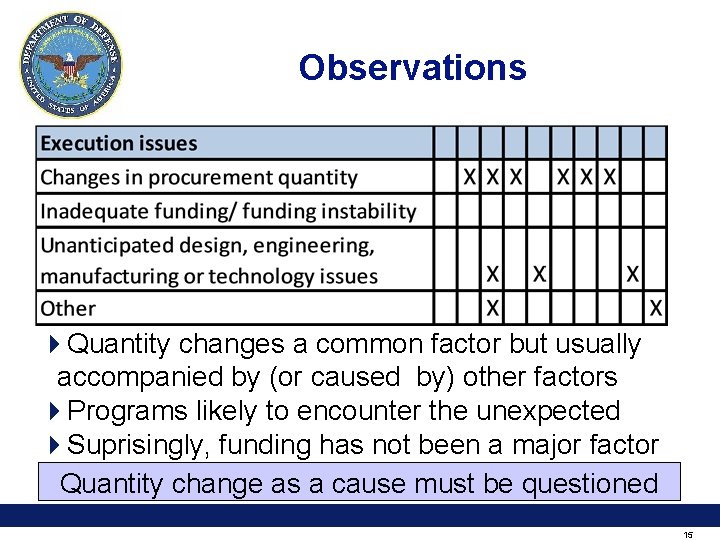

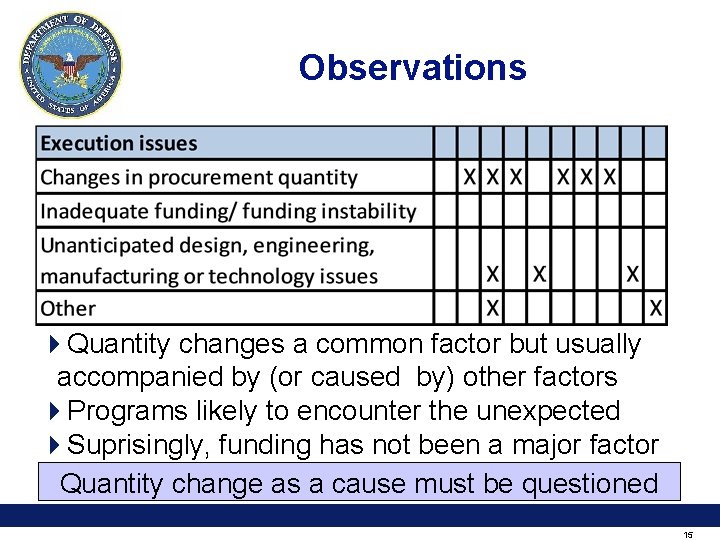

Observations
- Quantity changes are a common factor but are usually accompanied (or caused) by other factors
- Programs are likely to encounter the unexpected
- Surprisingly, funding has not been a major factor

Quantity change as a cause must be questioned

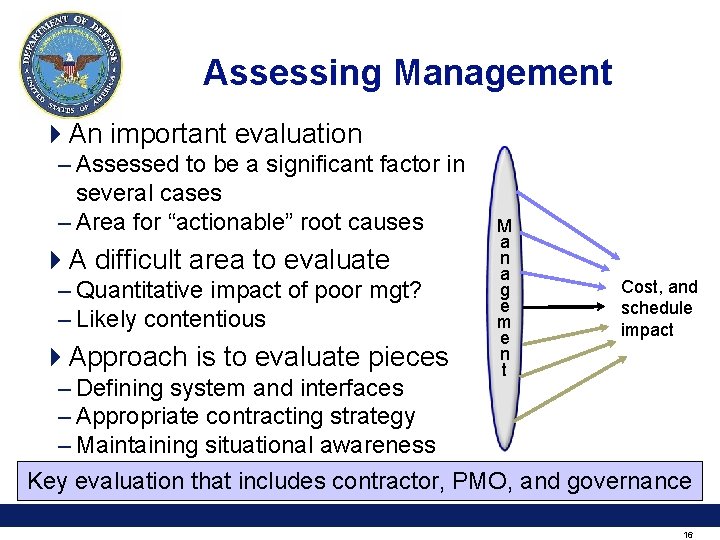

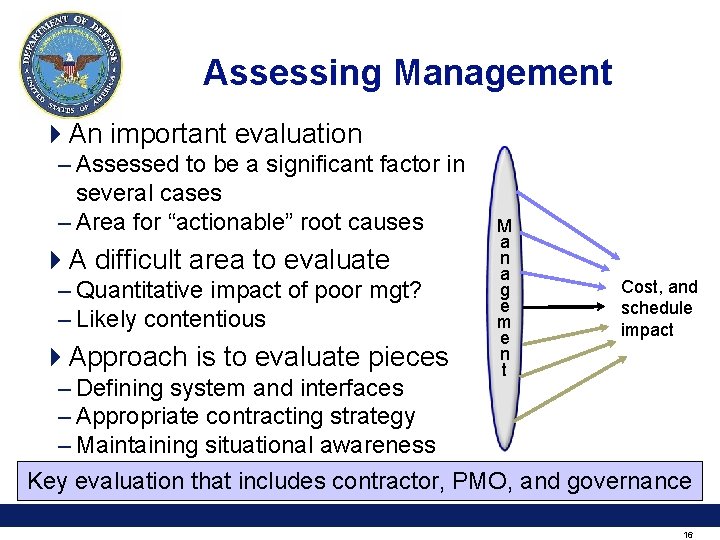

Assessing Management
- An important evaluation
  - Assessed to be a significant factor in several cases
  - An area for "actionable" root causes
- A difficult area to evaluate
  - What is the quantitative impact of poor management?
  - Likely contentious
- Approach is to evaluate the pieces
  - Defining the system and interfaces
  - Appropriate contracting strategy
  - Maintaining situational awareness

Diagram labels: Management; Cost and schedule impact

Key evaluation that includes the contractor, the program management office (PMO), and governance

Defining Systems & Interfaces
- Complex process that demands subject matter expert (SME) input
  - Poorly developed or defined requirements
  - Undefined dependencies
  - Insufficient interoperability planning
  - Requirements instability
  - Lack of stakeholder involvement
  - Poorly developed or underspecified milestone (MS) exit/entrance criteria
  - Insufficient or incomplete Systems Engineering Plan (SEP)
  - Lack of a well-developed Integrated Master Schedule (IMS)
  - Program office defaults control/responsibility to the contractor
  - Reliance on schedule over performance
  - Poor integration test planning
  - Lack of integrated planning

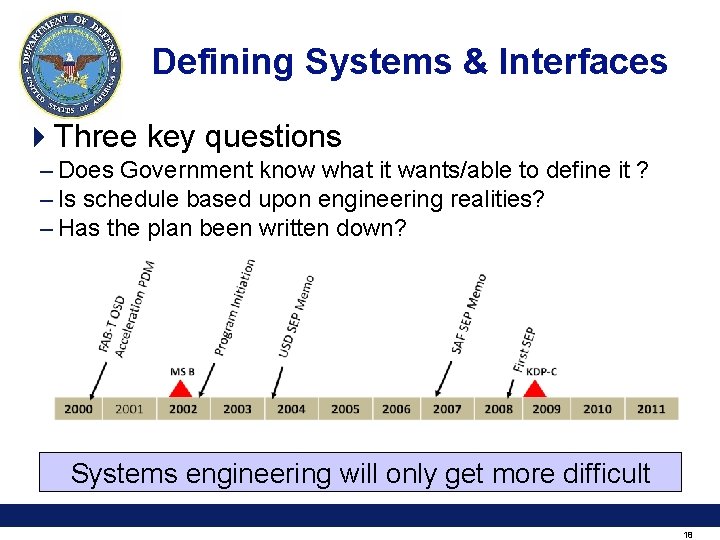

Defining Systems & Interfaces
- Three key questions
  - Does the Government know what it wants, and is it able to define it?
  - Is the schedule based on engineering realities?
  - Has the plan been written down?

Systems engineering will only get more difficult

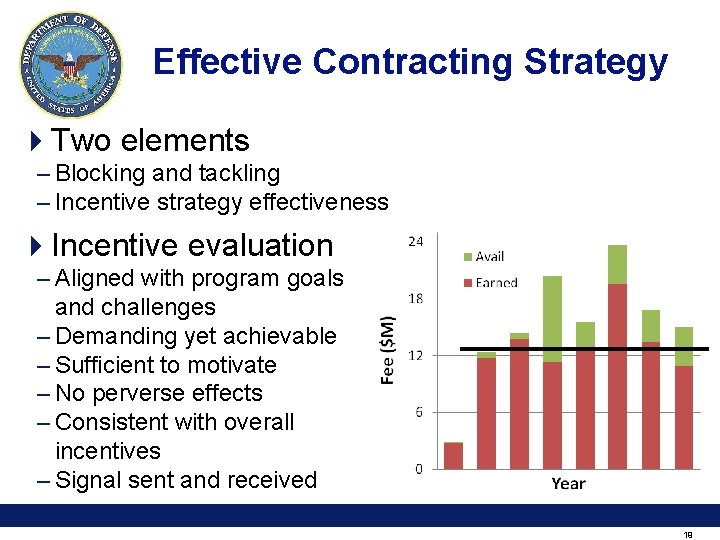

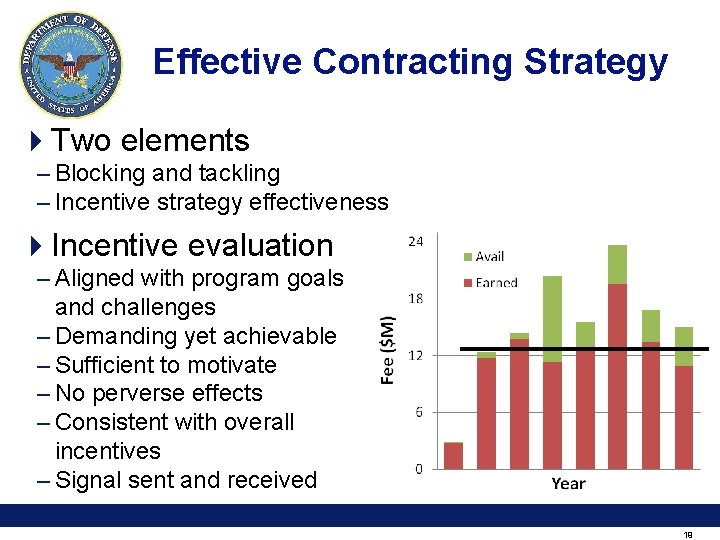

Effective Contracting Strategy
- Two elements
  - Blocking and tackling
  - Incentive strategy effectiveness
- Incentive evaluation
  - Aligned with program goals and challenges
  - Demanding yet achievable
  - Sufficient to motivate
  - No perverse effects
  - Consistent with overall incentives
  - Signal sent and received

Situational Awareness
- Tailored metrics
  - Relevant metrics, e.g., identification AND tracking of "bets"
  - Effective metrics, e.g., avoiding integration effects
- Earned Value Management
  - Compliance
  - Effectiveness
- Integrated with PARCA's program assessments
  - Semi-annual statutory reviews after a critical Nunn-McCurdy breach
  - As part of the DAES process

Diagram labels: PA; Situational awareness; Tailored concerns; RCA

Overview
- PARCA root cause analysis
- Progress so far
- Areas to pursue

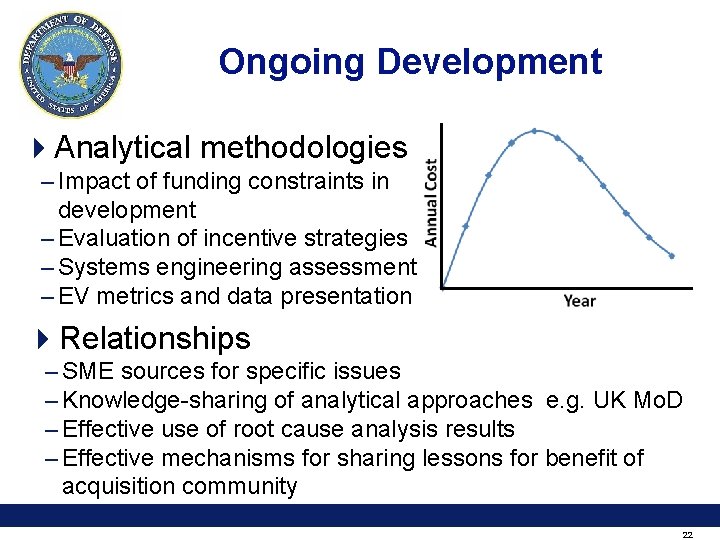

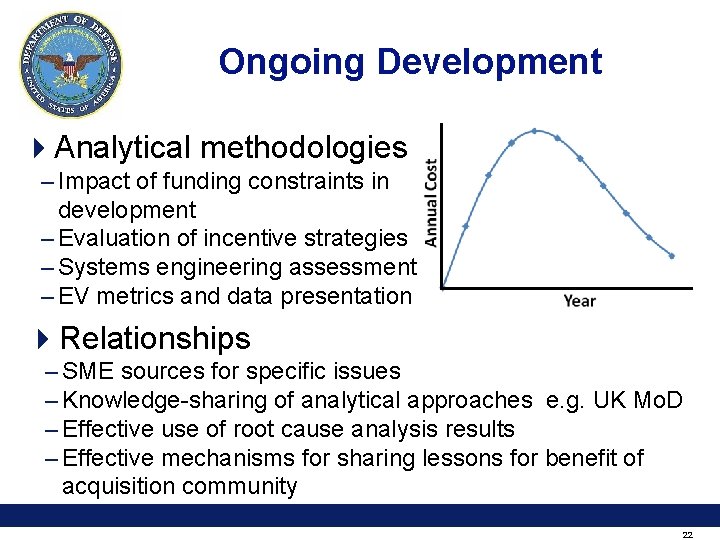

Ongoing Development
- Analytical methodologies
  - Impact of funding constraints in development
  - Evaluation of incentive strategies
  - Systems engineering assessment
  - EV metrics and data presentation
- Relationships
  - SME sources for specific issues
  - Knowledge-sharing of analytical approaches, e.g., UK MoD
  - Effective use of root cause analysis results
  - Effective mechanisms for sharing lessons for the benefit of the acquisition community

Policies, Procedures, and Guidance
- State of play
  - WSARA 09 requires PARCA to issue this guidance
  - Virtually no current guidance exists for root cause analysis
  - Other organizations have done some root cause analysis
  - Key questions remain, e.g., is it enough to say that an estimate was unrealistic (a WSARA 09 category), or should we ask why?
- Three different purposes
  - Documentation of PARCA processes
  - Service conduct of root cause analyses
  - Program office conduct of root cause analyses

Documentation of organizational lessons

Concluding Thoughts
- Independence
- Analysis transparently based on facts
- Clear and accurate definition of the predominant issues
  - Actionable
  - Relevant trends
- Timely results

A commitment to not repeating mistakes

Discussion Points
1. Poorly developed or defined requirements
   a. What is the needed capability?
   b. "What exactly am I trying to provide to the warfighter?"
   c. Must put bounds on the needed capability to understand the task
   d. Must define the requirements specifically (not generally)
2. Undefined dependencies
   a. Which systems must I depend on, and which systems must depend on me?
   b. Lay it out on a chart; identify intersections in the time domain
   c. Build in "hooks" for known and potentially unknown dependencies
3. Insufficient interoperability planning
   a. Must be explained in the SEP
   b. Missing test and certification coordination (DISA, JITC, etc.)
   c. Must be coordinated with dependencies

4. Requirements instability
   a. Requirements for a program are initially authorized by the JROC
   b. Incidental changes and "fixes" quickly escalate into instability
   c. Do not tolerate requirements changes (the biggest threat to a program)
5. Lack of stakeholder involvement
   a. Every participant in a program, top to bottom, must be involved
   b. Every participant (including the user) has a voice in the outcome
   c. This inclusion is needed to understand problems and issues preemptively
   d. IPTs, OIPTs, IIPTs, and WIPTs are opportunities for inclusion
6. Poorly developed or underspecified MS exit/entrance criteria
   a. Exit/entrance criteria are an indicator of progress (or lack of it)
   b. Not meeting the criteria means the program cannot go forward
   c. Criteria must be specific, measurable, and quantifiable

7. Insufficient or incomplete SEP
   a. All programs, regardless of ACAT designation, require a SEP
   b. The initial SEP, required at MS A, defines the scope and effort of the program
   c. Subsequent SEPs must be specific and detailed
   d. Programs below ACAT I ignore this at their peril
8. Lack of a well-developed Integrated Master Schedule (IMS)
   a. Sometimes not even available
   b. Illustrates the details of a program end-to-end across the timeline
   c. Needs a critical path to show the key points in execution
9. Program office defaults control/responsibility to the contractor
   a. The contractor does not write the SEP or plan the program
   b. The program office (PO) is always the party responsible for program execution
   c. The PO's responsibility is to direct and manage the contractor
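
Item 8 calls for an IMS with a critical path, and item 2 calls for laying out dependencies in time. The critical path is the longest-duration chain of dependent tasks, so any slip on it slips the whole program. The sketch below is a minimal version under stated assumptions. Dependencies are finish-to-start only, with no lags, calendars, or resource limits. The task network must be acyclic. The function name and the task names in the example are hypothetical.

```python
from graphlib import TopologicalSorter


def critical_path(durations: dict[str, float],
                  predecessors: dict[str, list[str]]) -> tuple[float, list[str]]:
    """Return (project_duration, tasks_on_critical_path) for a task network.

    durations: task -> duration (e.g. working days)
    predecessors: task -> tasks that must finish before it can start
    """
    graph = {t: predecessors.get(t, []) for t in durations}
    finish = {}  # earliest finish time of each task
    via = {}     # predecessor that drives each task's earliest start
    for task in TopologicalSorter(graph).static_order():
        start, via[task] = 0.0, None
        for p in graph[task]:
            if finish[p] > start:  # the latest-finishing predecessor sets the start
                start, via[task] = finish[p], p
        finish[task] = start + durations[task]
    # Walk back from the task that finishes last to recover the path.
    node = max(finish, key=finish.get)
    total = finish[node]
    path = []
    while node is not None:
        path.append(node)
        node = via[node]
    return total, path[::-1]


if __name__ == "__main__":
    # Hypothetical five-task schedule with two parallel branches.
    durations = {"requirements": 20, "design": 30, "software": 45,
                 "hardware": 60, "integration_test": 25}
    predecessors = {"design": ["requirements"], "software": ["design"],
                    "hardware": ["design"],
                    "integration_test": ["software", "hardware"]}
    print(critical_path(durations, predecessors))
```

In this example, hardware (60 days) outlasts software (45 days), so the path runs requirements → design → hardware → integration_test with a total of 135 days. The software branch has 15 days of float. Real IMS tools also handle other link types and constraint dates, which this sketch omits.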