ROD Activities at Dresden Andreas Glatte Andreas Meyer

- Slides: 12

ROD Activities at Dresden
Andreas Glatte, Andreas Meyer, Andy Kielburg-Jeka, Arno Straessner
LAr Electronics Upgrade Meeting – LAr Week September 2009

Outline
• ROD trigger L1 and L2/EF buffers
• Concept for ROD and ROB/ROS
• Test Setup in Dresden
• Outlook

ROD Trigger Buffers and Data Rates
• Expected data rates and buffer sizes for one ROD:
  • at input: ~100 Gbps/FEB × 14
  • reduction by L1 trigger sums: ~ factor 10 (?) → ~140 Gbps to L1 Calo (?)
  • L1 buffer size (latency 5 μs): ~7.2 Mbit (?)
    • assumption: 5-sample energy+time is calculated each 25 ns
    • per cell: 16 bit energy/gain/parity + 20% × 16 bit time/quality ≈ 20 bit
    • → 20 bit × 128 channels × 14 FEB × 40 MHz × 5 μs = 7.2 Mbit
  • L1 accept rate: ~200 kHz
  • L2/EF output rate = ROB input rate: 8.6 Gbps → over ATCA backplane
    • derived from the following expectation: 14 FEB × 128 channels × 16 bit × 1.2 (time/quality) × 1.25 (10/8 enc.) × 200 kHz = 8.6 Gbps
    • maximum rate could however be (based on today’s ROB max. input rate): (1.28 Gbps / 2 FEB) × 14 FEB × 200 kHz/100 kHz = 18 Gbps
  • L2 latency: ~40 ms
  • ROB buffer size: 4.1 Gbit (for 12 RODs = 1 crate)
    • 8.6 Gbps × 12 × 40 ms
  • ROB output rate (data requested by L2/EF/DAQ): ~4 Gbps
    • 0.3 Gbps / (12 links × 2 FEB) × 14 FEB × 12 ROD × 2 (occupancy)
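The estimates on this slide are simple products, so they are easy to re-check. The following is a minimal sketch that reproduces them; all input numbers are taken from the slide, while the function and parameter names (for example `febs_per_link` or `occupancy`) are illustrative and reflect one reading of the slide's shorthand.

```python
# Back-of-envelope check of the ROD/ROB numbers quoted on the slide.
# Inputs come from the slide; names are illustrative assumptions.

N_FEB = 14                 # FEBs read out by one ROD
N_CHANNELS = 128           # channels per FEB
BUNCH_CROSSING_HZ = 40e6   # one energy/time result every 25 ns
L1_LATENCY_S = 5e-6        # L1 latency
L1_ACCEPT_HZ = 200e3       # expected L1 accept rate
L2_LATENCY_S = 40e-3       # L2 latency
RODS_PER_CRATE = 12


def l1_buffer_bits(bits_per_cell=20):
    """Bits stored per ROD while waiting for the L1 decision."""
    return bits_per_cell * N_CHANNELS * N_FEB * BUNCH_CROSSING_HZ * L1_LATENCY_S


def rob_input_rate_bps(bits_energy=16, time_quality_factor=1.2, encoding_factor=1.25):
    """Expected ROD output rate (= ROB input rate) after an L1 accept."""
    return (N_FEB * N_CHANNELS * bits_energy
            * time_quality_factor * encoding_factor * L1_ACCEPT_HZ)


def rob_input_rate_max_bps(link_bps=1.28e9, febs_per_link=2, today_l1_hz=100e3):
    """Upper estimate scaled from today's ROB maximum input rate."""
    return link_bps / febs_per_link * N_FEB * (L1_ACCEPT_HZ / today_l1_hz)


def rob_buffer_bits():
    """ROB buffer needed for one crate of RODs during the L2 latency."""
    return rob_input_rate_bps() * RODS_PER_CRATE * L2_LATENCY_S


def rob_output_rate_bps(today_bps=0.3e9, links=12, febs_per_link=2, occupancy=2):
    """ROB output rate for one crate, scaled from today's request rate."""
    return today_bps / (links * febs_per_link) * N_FEB * RODS_PER_CRATE * occupancy


if __name__ == "__main__":
    print(f"L1 buffer:      {l1_buffer_bits() / 1e6:.1f} Mbit")
    print(f"ROB input:      {rob_input_rate_bps() / 1e9:.1f} Gbps")
    print(f"ROB input max:  {rob_input_rate_max_bps() / 1e9:.1f} Gbps")
    print(f"ROB buffer:     {rob_buffer_bits() / 1e9:.1f} Gbit")
    print(f"ROB output:     {rob_output_rate_bps() / 1e9:.1f} Gbps")
```

Keeping the inputs as named parameters makes it easy to vary the items the slide marks with "(?)", such as the bits per cell or the L1 accept rate.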

Communication between ROD and ROB/ROS: different options

ROB/ROS

Test Setup

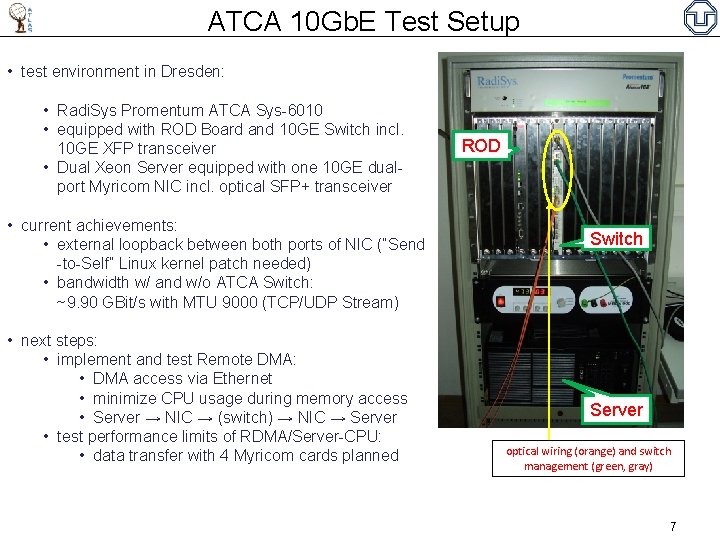

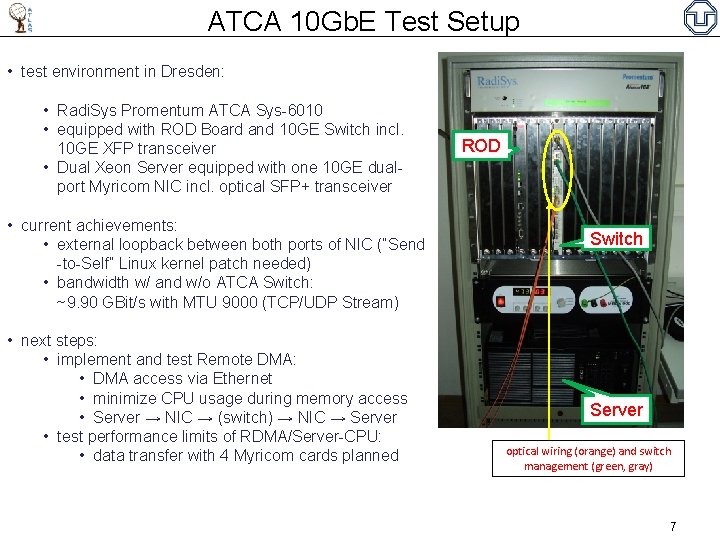

ATCA 10 GbE Test Setup
• test environment in Dresden:
  • RadiSys Promentum ATCA Sys-6010
  • equipped with ROD board and 10 GE switch incl. 10 GE XFP transceiver
  • dual Xeon server equipped with one dual-port 10 GE Myricom NIC incl. optical SFP+ transceiver
• current achievements:
  • external loopback between both ports of the NIC (“Send-to-Self” Linux kernel patch needed)
  • bandwidth with and without ATCA switch: ~9.90 Gbit/s with MTU 9000 (TCP/UDP stream)
• next steps:
  • implement and test Remote DMA (RDMA):
    • DMA access via Ethernet
    • minimize CPU usage during memory access
    • Server → NIC → (switch) → NIC → Server
  • test performance limits of RDMA/server CPU:
    • data transfer with 4 Myricom cards planned
Figure labels: ROD, Switch, Server; optical wiring (orange) and switch management (green, gray)
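To put the measured ~9.90 Gbit/s into context, it helps to estimate how much of the 10 Gbit/s line rate is available to payload once framing and protocol headers are subtracted. The sketch below does this for a given MTU. It is a theoretical bound under stated assumptions (untagged Ethernet frames, IPv4, no IP options, optional TCP timestamps), not a description of how the Dresden measurement was configured.

```python
# Upper bound on TCP/UDP payload throughput over 10 GbE for a given MTU.
# Assumptions: untagged Ethernet II frames, IPv4 without options.

ETH_OVERHEAD = 8 + 14 + 4 + 12   # preamble+SFD, MAC header, FCS, inter-frame gap (bytes)
IPV4_HEADER = 20
TCP_HEADER = 20
TCP_TIMESTAMP_OPTION = 12        # 10-byte option padded to 12 bytes, if negotiated
UDP_HEADER = 8


def max_goodput_gbps(mtu, l4_header, line_rate_gbps=10.0):
    """Payload bandwidth when every frame carries a full MTU."""
    payload = mtu - IPV4_HEADER - l4_header   # application bytes per frame
    on_wire = mtu + ETH_OVERHEAD              # bytes the frame occupies on the link
    return line_rate_gbps * payload / on_wire


if __name__ == "__main__":
    for mtu in (1500, 9000):
        tcp = max_goodput_gbps(mtu, TCP_HEADER + TCP_TIMESTAMP_OPTION)
        udp = max_goodput_gbps(mtu, UDP_HEADER)
        print(f"MTU {mtu}: TCP <= {tcp:.2f} Gbit/s, UDP <= {udp:.2f} Gbit/s")
```

Under these assumptions the bound for MTU 9000 is about 9.9 Gbit/s, which suggests the measured value is close to the limit set by the protocol overhead rather than by the server or the switch.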

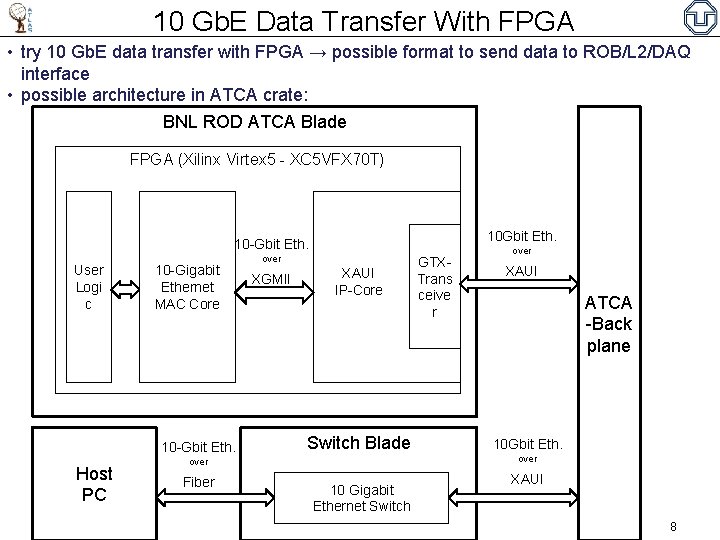

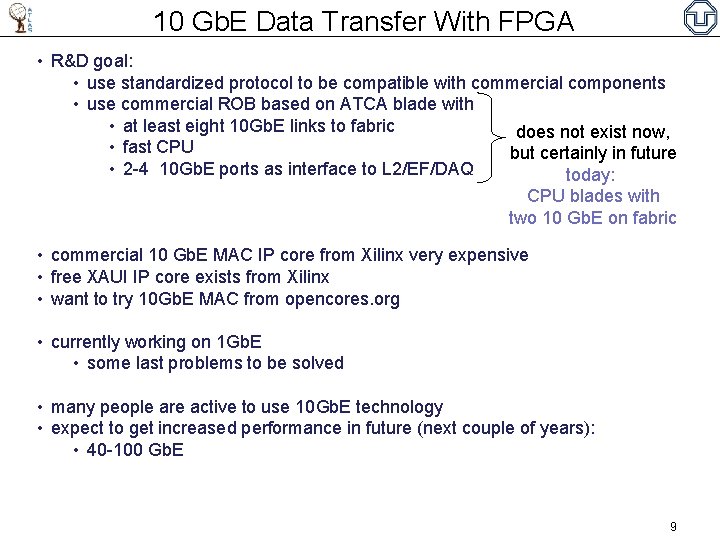

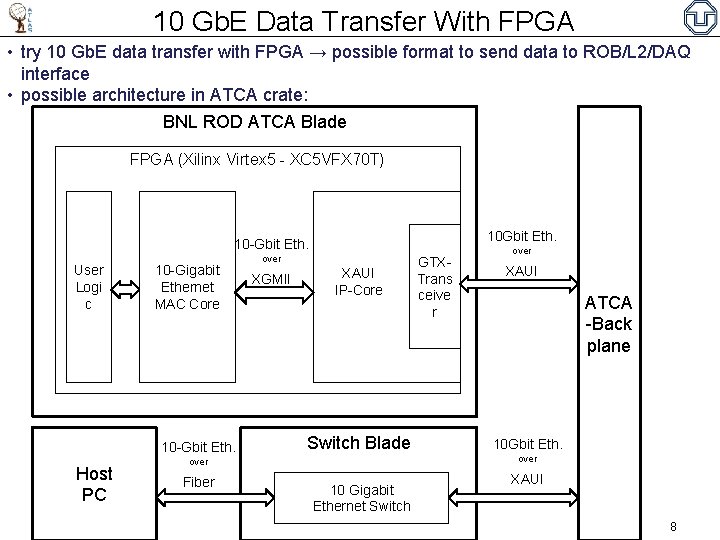

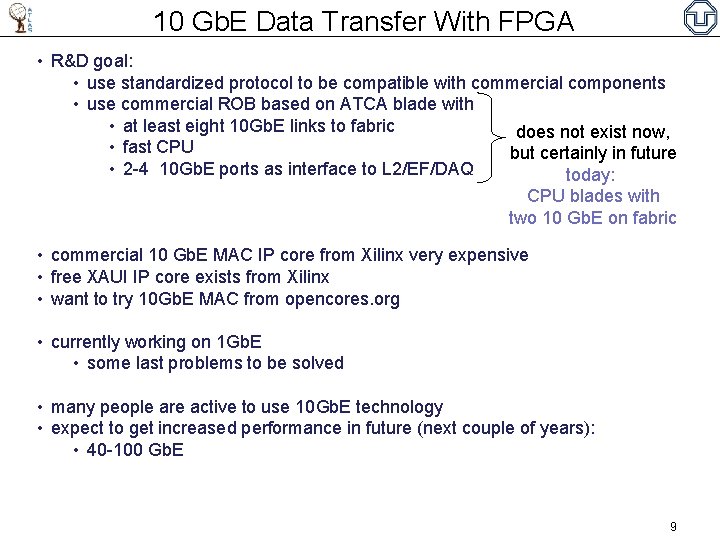

10 GbE Data Transfer With FPGA
• try 10 GbE data transfer with FPGA → possible format to send data to ROB/L2/DAQ interface
• possible architecture in ATCA crate:
Diagram: BNL ROD ATCA blade with FPGA (Xilinx Virtex 5, XC5VFX70T): User Logic → 10-Gigabit Ethernet MAC Core → (10-Gbit Eth. over XGMII) → XAUI IP-Core → GTX Transceiver → (10-Gbit Eth. over XAUI, ATCA backplane) → Switch Blade (10 Gigabit Ethernet Switch) → (10 Gbit Eth. over fiber) → Host PC

10 GbE Data Transfer With FPGA
• R&D goal:
  • use standardized protocol to be compatible with commercial components
  • use commercial ROB based on ATCA blade with
    • at least eight 10 GbE links to fabric
    • fast CPU
    • 2-4 10 GbE ports as interface to L2/EF/DAQ
  • such a blade does not exist now, but certainly will in future; today: CPU blades with two 10 GbE on fabric
• commercial 10 GbE MAC IP core from Xilinx very expensive
  • free XAUI IP core exists from Xilinx
  • want to try 10 GbE MAC from opencores.org
  • currently working on 1 GbE
  • some last problems to be solved
• many people are actively working with 10 GbE technology
  • expect to get increased performance in future (next couple of years):
    • 40-100 GbE
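Using a standardized protocol means that whatever the FPGA sends must be a valid Ethernet frame. As an illustration of what the MAC layer has to assemble per frame, here is a minimal sketch in Python that builds an Ethernet II frame with padding and frame check sequence (FCS). The payload layout (`pack_cells`, one 16-bit word per cell), the MAC addresses and the choice of the local-experimental EtherType are hypothetical and are not taken from the slides.

```python
import struct
import zlib

ETHERTYPE_EXPERIMENTAL = 0x88B5  # IEEE "local experimental" EtherType (hypothetical choice)
MIN_PAYLOAD = 46                 # shorter payloads are padded so the frame reaches 64 bytes


def pack_cells(energies):
    """Hypothetical payload: one unsigned 16-bit word per cell, big-endian."""
    return struct.pack(f"!{len(energies)}H", *energies)


def build_frame(dst_mac, src_mac, payload, ethertype=ETHERTYPE_EXPERIMENTAL):
    """Return destination, source, EtherType, padded payload and FCS as bytes."""
    if len(dst_mac) != 6 or len(src_mac) != 6:
        raise ValueError("MAC addresses must be 6 bytes")
    padded = payload.ljust(MIN_PAYLOAD, b"\x00")
    body = dst_mac + src_mac + struct.pack("!H", ethertype) + padded
    # Ethernet uses the same CRC-32 as zlib; the FCS is sent least significant byte first.
    fcs = struct.pack("<I", zlib.crc32(body) & 0xFFFFFFFF)
    return body + fcs


def fcs_ok(frame):
    """Recompute the CRC over the frame body and compare it with the trailing FCS."""
    body, fcs = frame[:-4], frame[-4:]
    return struct.pack("<I", zlib.crc32(body) & 0xFFFFFFFF) == fcs


if __name__ == "__main__":
    dst = bytes.fromhex("020000000001")   # locally administered example addresses
    src = bytes.fromhex("020000000002")
    frame = build_frame(dst, src, pack_cells(range(128)))  # one FEB: 128 cells
    print(len(frame), fcs_ok(frame))
```

Preamble, start-of-frame delimiter and inter-frame gap are added on the wire and are therefore not part of the byte string built here. In hardware the CRC would be computed on the fly while the frame streams through the MAC.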

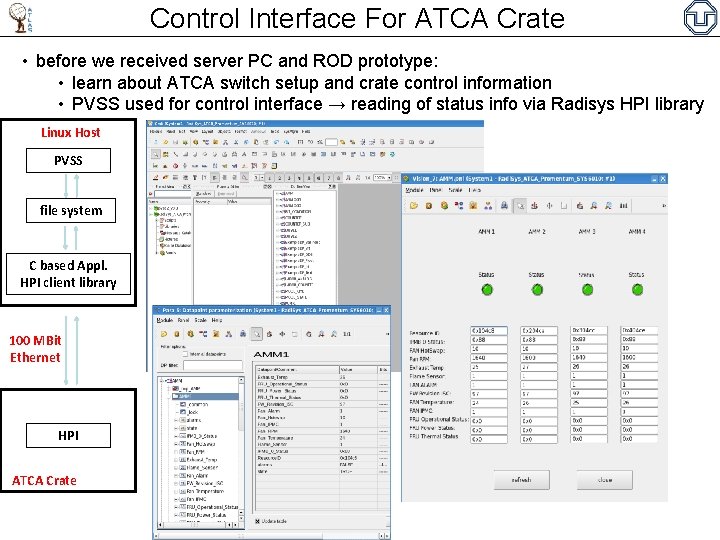

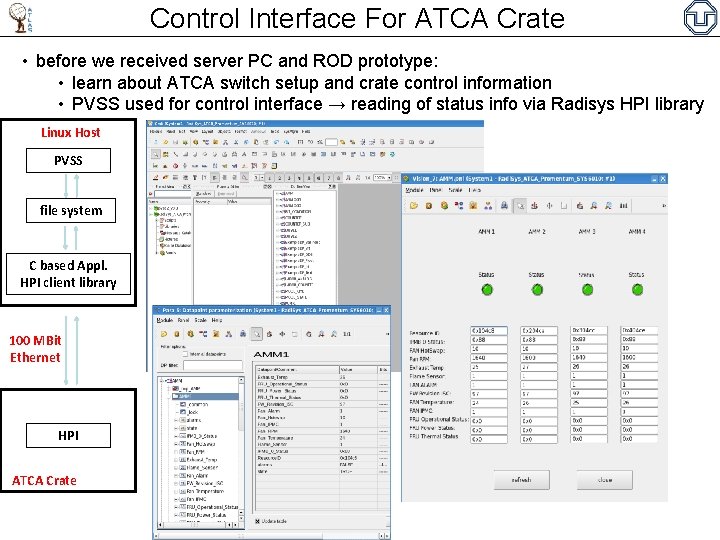

Control Interface For ATCA Crate
• before we received server PC and ROD prototype:
  • learn about ATCA switch setup and crate control information
  • PVSS used for control interface → reading of status info via Radisys HPI library
Diagram labels: Linux host (PVSS, file system, C-based application, HPI client library); 100 Mbit Ethernet (HPI); ATCA crate

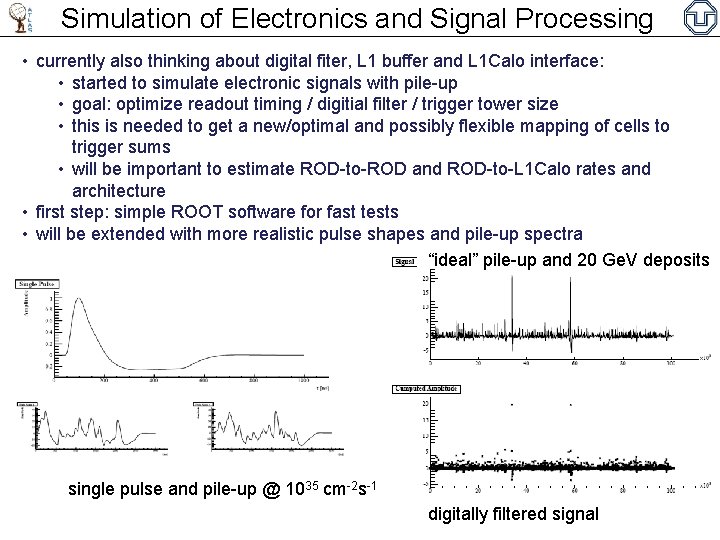

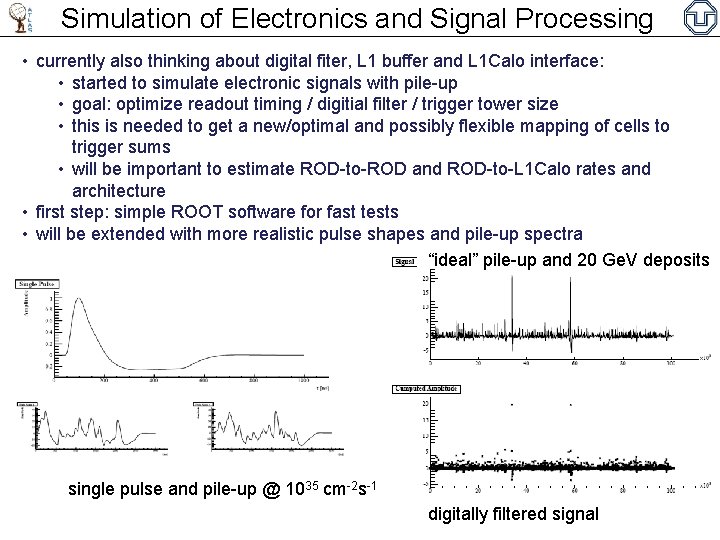

Simulation of Electronics and Signal Processing
• currently also thinking about digital filter, L1 buffer and L1 Calo interface:
  • started to simulate electronic signals with pile-up
  • goal: optimize readout timing / digital filter / trigger tower size
  • this is needed to get a new/optimal and possibly flexible mapping of cells to trigger sums
  • will be important to estimate ROD-to-ROD and ROD-to-L1 Calo rates and architecture
• first step: simple ROOT software for fast tests
  • will be extended with more realistic pulse shapes and pile-up spectra
Plot captions: “ideal” pile-up and 20 GeV deposits; single pulse and pile-up @ 10^35 cm^-2 s^-1; digitally filtered signal
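The kind of fast test described on this slide can be sketched in a few lines. The following toy model is written in Python rather than ROOT and is not the authors' code: the pulse shape `PULSE`, the pile-up model (random deposits with exponentially distributed energy in each 25 ns sample) and the 5-sample filter (a plain least-squares amplitude fit that ignores noise correlations) are all assumptions chosen for illustration.

```python
import random

# Hypothetical bipolar pulse shape sampled every 25 ns, peak normalised to 1.
PULSE = [0.0, 0.55, 1.0, 0.75, 0.35, 0.05, -0.15, -0.2, -0.2, -0.15, -0.1, -0.05]
N_FILTER = 5  # the filter uses the first five samples of the pulse


def add_pulse(trace, start, amplitude):
    """Add one pulse of the given amplitude (GeV) starting at sample `start`."""
    for i, g in enumerate(PULSE):
        if 0 <= start + i < len(trace):
            trace[start + i] += amplitude * g


def filter_coefficients():
    """Least-squares amplitude weights a_i = g_i / sum(g_j^2) for uncorrelated noise."""
    window = PULSE[:N_FILTER]
    norm = sum(g * g for g in window)
    return [g / norm for g in window]


def filtered_energy(trace, start):
    """Apply the 5-sample filter to the samples beginning at `start`."""
    coeffs = filter_coefficients()
    return sum(a * s for a, s in zip(coeffs, trace[start:start + N_FILTER]))


def simulate(n_samples=200, signal_at=100, signal_gev=20.0,
             pileup_prob=0.5, pileup_mean_gev=0.5, seed=1):
    """Return a trace with random pile-up pulses plus one signal deposit."""
    rng = random.Random(seed)
    trace = [0.0] * n_samples
    for bc in range(n_samples):
        if rng.random() < pileup_prob:
            add_pulse(trace, bc, rng.expovariate(1.0 / pileup_mean_gev))
    add_pulse(trace, signal_at, signal_gev)
    return trace


if __name__ == "__main__":
    clean = simulate(pileup_prob=0.0)
    noisy = simulate()
    print("without pile-up:", round(filtered_energy(clean, 100), 3))
    print("with pile-up:   ", round(filtered_energy(noisy, 100), 3))
```

Without pile-up the filter returns the injected amplitude; with pile-up the reconstructed energy is shifted and smeared. Optimizing the filter coefficients and readout timing to reduce this effect is the purpose of the simulation described above, which would use realistic pulse shapes and pile-up spectra.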

Summary and Outlook
• we are learning to use ATCA and 10 GbE
• performance with server PC and ATCA switch reaches 9.9 Gbps as expected
• next big projects:
  • Remote DMA
  • 10 GbE in FPGA
  • continue electronics simulation → ROD/L1 Calo interface