
Robust speaking rate estimation using broad phonetic class recognition
Jiahong Yuan and Mark Liberman
University of Pennsylvania
Mar. 16, 2010

Introduction
• Speaking rate has been found to be related to many factors (Yuan et al. 2006, Jacewicz et al. 2009):
  - young people > old people
  - northern speakers > southern speakers (American English)
  - male speakers > female speakers
  - long utterances > short utterances
  - emotion, style, conversation topics, foreign accent, etc.
• Listeners ‘normalize’ speaking rate in speech perception (Miller and Liberman 1979), and speaking rate affects listeners’ attitudes to the speaker and the message (Megehee et al. 2003).
• Speaking rate also affects the performance of automatic speech recognition (ASR): both fast and slow speech lead to a higher word error rate (Siegler and Stern 1995, Mirghafori et al. 1996).
Yuan and Liberman: ICASSP 2010

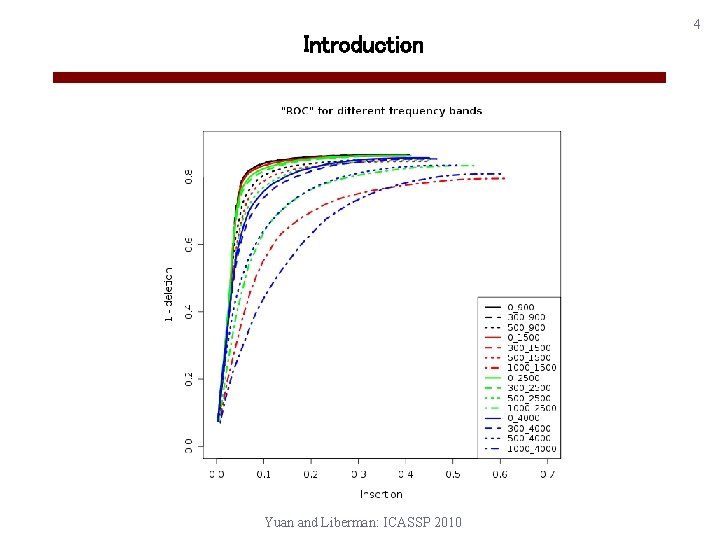

Introduction
• The conventional method for building a robust speaking rate estimator is syllable detection based on energy measurements and peak-picking algorithms (Mermelstein 1975, Morgan and Fosler-Lussier 1998, Xie and Niyogi 2006, Wang and Narayanan 2007, Zhang and Glass 2009).
• These studies have used full-band energy, sub-band energy, and sub-band energy correlation for syllable detection.
• Howitt (2000) demonstrated that energy in a fixed frequency band (300-900 Hz) was as good for finding vowel landmarks as the energy at the first formant.
• Our study on syllable detection using the convex-hull algorithm (Mermelstein 1975) also shows that this frequency band gives the best results.


Introduction
• Using automatic speech recognition (ASR) for speaking rate estimation would be a natural approach. However:
  - The performance of ASR is strongly affected by speaking rate.
  - ASR only works well when the training and test data are from the same speech genre, dialect, or language.
• For speaking rate estimation, what matters is not the word error rate (WER) or phone error rate of the recognizer. A recognizer that can robustly distinguish between vowels and consonants would be sufficient.
• Hence: broad phonetic class recognition for speaking rate estimation.

Introduction
• Broad phonetic classes differ from one another in their spectral characteristics more clearly than the phones within a single broad phonetic class do.
• It has been found that almost 80% of misclassified phonemes were within the same broad phonetic class (Halberstadt and Glass 1997).
• Broad phonetic classes have been applied to improve phone recognition, and have been shown to be more robust in noise (Scanlon et al. 2007, Sainath and Zue 2008).
• Broad phonetic classes have also been used in large vocabulary ASR to address data sparsity and improve robustness, e.g., decision tree-based clustering with broad phonetic classes.

Data and Method
• A broad phonetic class recognizer was built using 34,656 speaker turns from the SCOTUS corpus (~66 hours).
• The speaker turns were first force-aligned using the Penn Phonetics Lab Forced Aligner, and the aligned phones were then mapped to broad phonetic classes for training.
• The acoustic models are mono broad-class three-state HMMs. Each HMM has 64 Gaussian mixture components on 39 PLP coefficients. The language model consists of broad-class bigram probabilities.
• For comparison, a general monophone recognizer was also built using the same data.
• Training was done with HTK, and the HVite tool in HTK was used for testing.

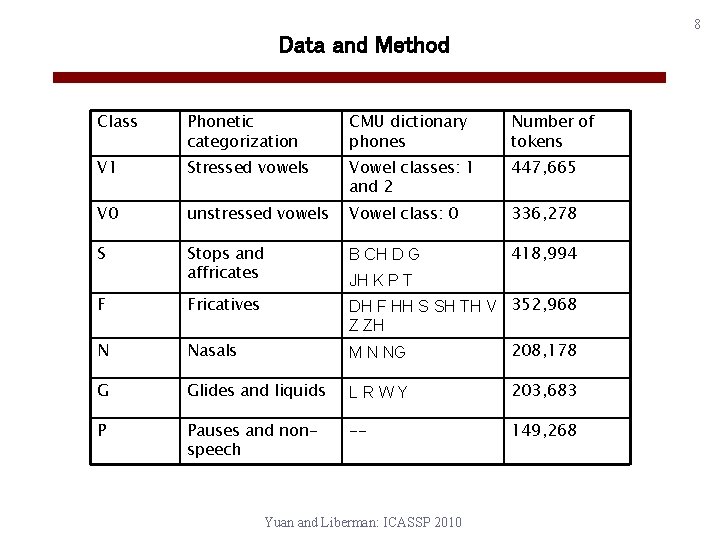

Data and Method

| Class | Phonetic categorization | CMU dictionary phones | Number of tokens |
|---|---|---|---|
| V1 | Stressed vowels | Vowel classes: 1 and 2 | 447,665 |
| V0 | Unstressed vowels | Vowel class: 0 | 336,278 |
| S | Stops and affricates | B CH D G JH K P T | 418,994 |
| F | Fricatives | DH F HH S SH TH V Z ZH | 352,968 |
| N | Nasals | M N NG | 208,178 |
| G | Glides and liquids | L R W Y | 203,683 |
| P | Pauses and nonspeech | -- | 149,268 |
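The class table above can be read as a lookup from CMU dictionary phones to broad phonetic classes. The following is a minimal sketch of such a mapping, not the authors' code. It assumes that vowel labels carry a trailing stress digit (0, 1, or 2) as in the CMU dictionary, and that any label not listed in the table (for example a hypothetical `sp` pause label) falls into class P. The names `PHONE_TO_CLASS` and `broad_class` are illustrative only.

```python
# Consonant classes as listed in the table (CMU dictionary phone labels).
CONSONANT_CLASSES = {
    "S": "B CH D G JH K P T".split(),        # stops and affricates
    "F": "DH F HH S SH TH V Z ZH".split(),   # fricatives
    "N": "M N NG".split(),                   # nasals
    "G": "L R W Y".split(),                  # glides and liquids
}

# Invert to a phone -> class lookup.
PHONE_TO_CLASS = {
    phone: cls for cls, phones in CONSONANT_CLASSES.items() for phone in phones
}


def broad_class(phone: str) -> str:
    """Map one phone label to a broad phonetic class.

    Assumption: vowels end in a stress digit; 0 -> V0, 1 or 2 -> V1.
    Assumption: anything not in the table is treated as pause/nonspeech (P).
    """
    if phone and phone[-1] in "012":
        return "V0" if phone[-1] == "0" else "V1"
    return PHONE_TO_CLASS.get(phone, "P")


# "speaking" in CMU dictionary notation: S P IY1 K IH0 NG
print([broad_class(p) for p in "S P IY1 K IH0 NG".split()])
```

Note that the phone S (a fricative) maps to class F, while the class named S holds the stops and affricates.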

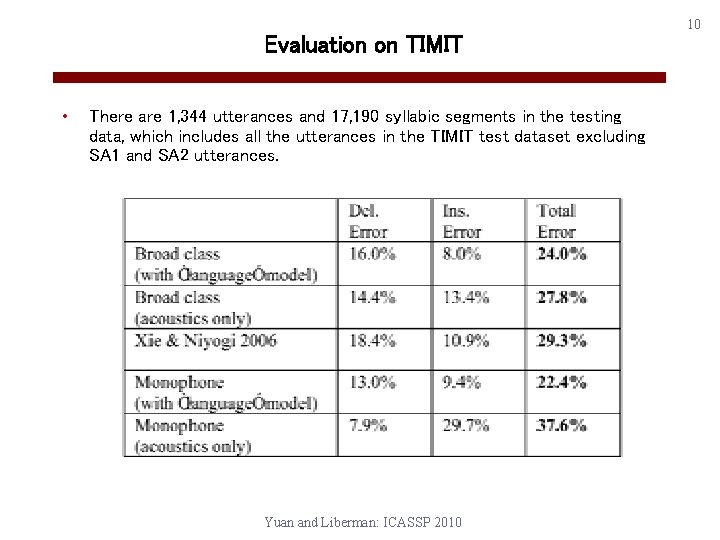

Evaluation on TIMIT
• There is no standard scoring toolkit for syllable detection evaluation. We follow the evaluation method in Xie and Niyogi (2006):
  - Find the middle points of the vowel segments from the recognition output.
  - A point is counted as correct if it is located within a syllabic segment; otherwise, it is counted as incorrect.
  - If two or more points are located within a syllabic segment, only one of them is counted as correct and the others as incorrect.
  - The incorrect points are insertion errors, and the syllabic segments that don’t have any correct points are deletion errors.
  - Deletion and insertion error rates are both calculated against the number of syllabic segments in the testing data.
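The scoring steps above can be sketched as follows. This is an illustrative reading of the procedure, not the authors' scoring script. It assumes that segments are given as (start, end) times in seconds, that reference syllabic segments are sorted and non-overlapping, and that segment boundaries count as inside the segment. The function names are hypothetical.

```python
from bisect import bisect_right


def score_syllable_detection(vowel_segments, syllabic_segments):
    """Return (correct, insertions, deletions) for one test set.

    vowel_segments: detected vowels as (start, end) pairs.
    syllabic_segments: reference segments as sorted, non-overlapping
    (start, end) pairs (an assumption of this sketch).
    """
    starts = [start for start, _ in syllabic_segments]
    hit = [False] * len(syllabic_segments)
    insertions = 0
    for v_start, v_end in vowel_segments:
        mid = (v_start + v_end) / 2.0
        # Index of the last reference segment starting at or before mid.
        i = bisect_right(starts, mid) - 1
        if i >= 0 and mid <= syllabic_segments[i][1] and not hit[i]:
            hit[i] = True      # first midpoint inside this segment: correct
        else:
            insertions += 1    # outside all segments, or an extra point inside one
    correct = sum(hit)
    deletions = len(hit) - correct  # segments with no correct point
    return correct, insertions, deletions


def error_rates(insertions, deletions, n_reference):
    """Both rates are taken against the number of reference segments."""
    return insertions / n_reference, deletions / n_reference


reference = [(0.0, 0.2), (0.5, 0.8), (1.0, 1.3)]
detected = [(0.05, 0.15), (0.3, 0.4), (0.5, 0.6), (0.65, 0.75)]
correct, ins, dele = score_syllable_detection(detected, reference)
print(correct, ins, dele)
```

In this toy example, one detected vowel falls between reference segments and one is a second point inside an already matched segment, so both count as insertions, and the last reference segment is a deletion.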

Evaluation on TIMIT
• There are 1,344 utterances and 17,190 syllabic segments in the testing data, which includes all the utterances in the TIMIT test dataset excluding the SA1 and SA2 utterances.

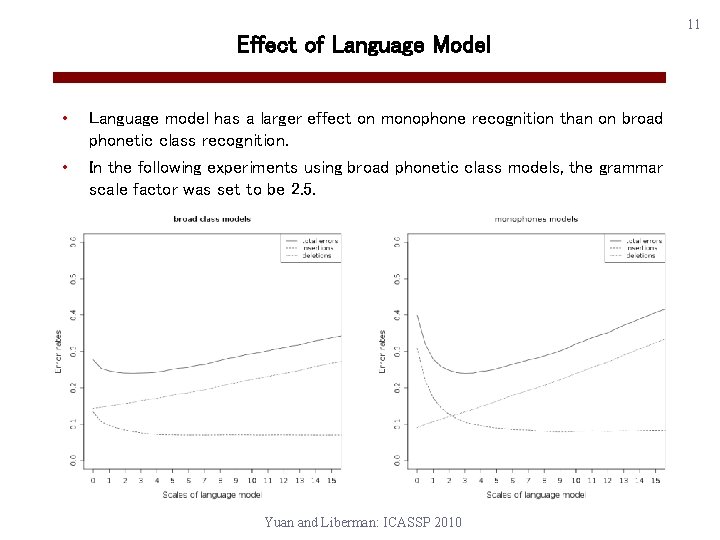

Effect of Language Model
• The language model has a larger effect on monophone recognition than on broad phonetic class recognition.
• In the following experiments using broad phonetic class models, the grammar scale factor was set to 2.5.

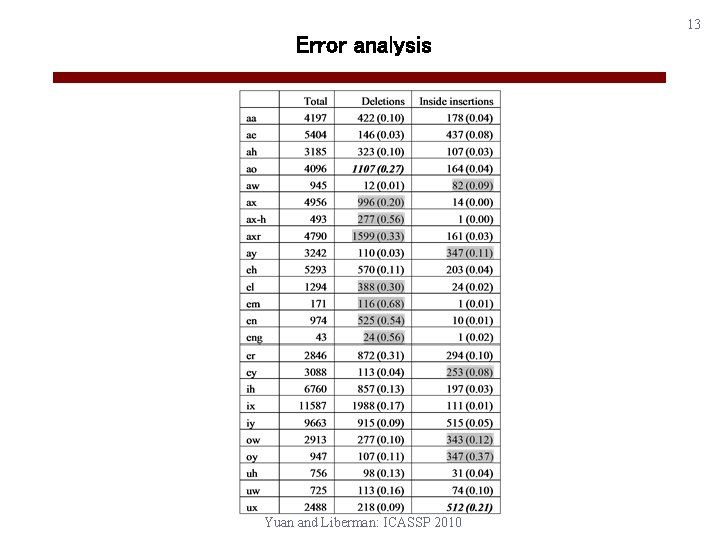

Error analysis
• There were 7,448 outside insertions in total, among which:
  - /r, l, y, w/: 3,635 (48.8%)
  - /q/: 1,411 (18.9%), a glottal stop that “may be an allophone of t, or may mark an initial vowel or a vowel-vowel boundary”.
• The syllabic nasals and laterals, /el, em, eng/, and the schwa vowels, /ax, ax-h, ax-r/, are more likely to be deleted.
• The diphthongs, /aw, ay, ey, ow, oy/, are more likely to have inside insertions.


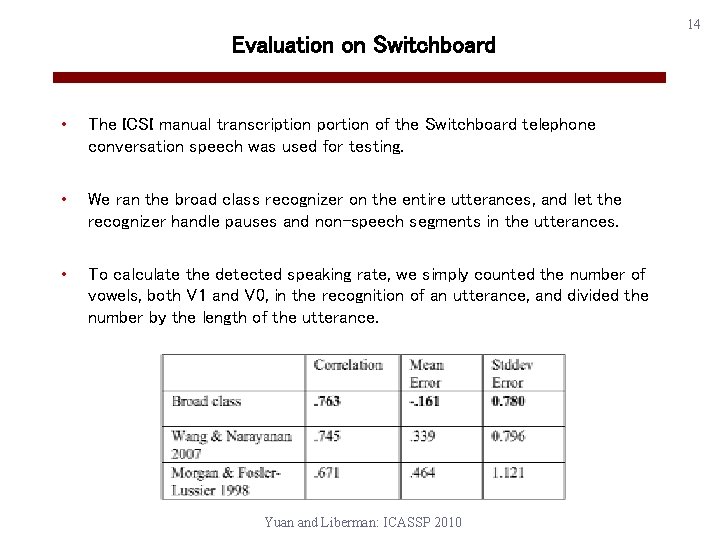

Evaluation on Switchboard
• The ICSI manual transcription portion of the Switchboard telephone conversation speech was used for testing.
• We ran the broad class recognizer on entire utterances, and let the recognizer handle pauses and non-speech segments in the utterances.
• To calculate the detected speaking rate, we simply counted the number of vowels, both V1 and V0, in the recognition output for an utterance, and divided that number by the length of the utterance.
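The speaking rate calculation described above amounts to a vowel count divided by a duration. A minimal sketch, assuming the recognition output is available as a list of broad class labels and the utterance length is given in seconds (the function name and input format are hypothetical):

```python
def speaking_rate(recognized_labels, utterance_duration_s):
    """Detected speaking rate in vowels (syllable nuclei) per second.

    Counts both stressed (V1) and unstressed (V0) vowels and divides by the
    full utterance length, pauses and non-speech included.
    """
    if utterance_duration_s <= 0:
        raise ValueError("utterance duration must be positive")
    n_vowels = sum(1 for label in recognized_labels if label in ("V1", "V0"))
    return n_vowels / utterance_duration_s


# A hypothetical 0.5-second recognition result with two vowels.
labels = ["P", "F", "S", "V1", "S", "V0", "N", "P"]
print(speaking_rate(labels, 0.5))  # 4.0
```

Because the divisor is the whole utterance length, long pauses lower the estimate. Whether pause time should be excluded is a separate design choice that this sketch does not address.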

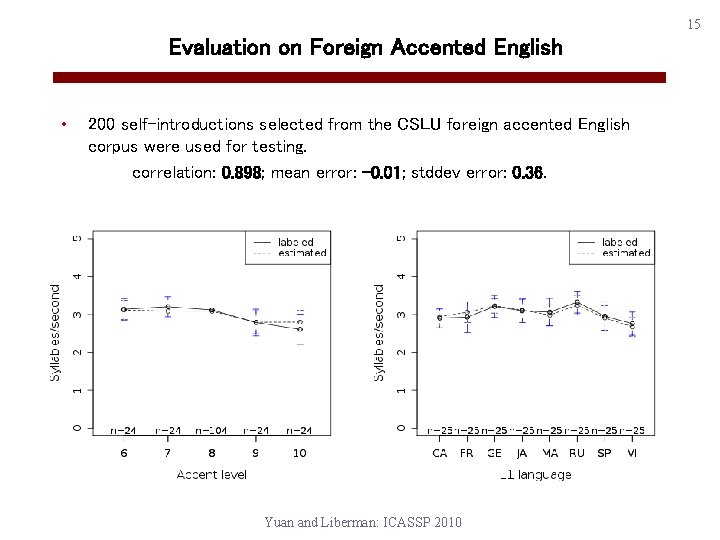

Evaluation on Foreign Accented English
• 200 self-introductions selected from the CSLU foreign accented English corpus were used for testing.
correlation: 0.898; mean error: -0.01; stddev error: 0.36.

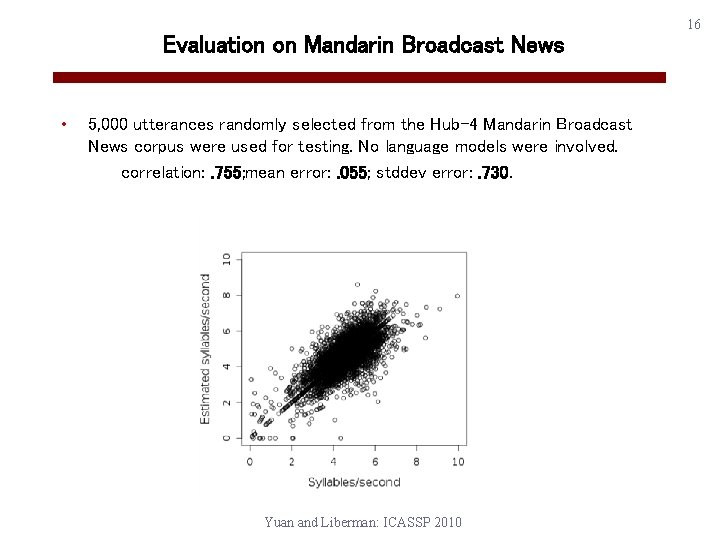

Evaluation on Mandarin Broadcast News
• 5,000 utterances randomly selected from the Hub-4 Mandarin Broadcast News corpus were used for testing. No language models were involved.
correlation: 0.755; mean error: 0.055; stddev error: 0.730.
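The three summary figures reported for these evaluations (correlation, mean error, and standard deviation of the error) can be computed from paired per-utterance rates roughly as follows. This is a sketch under assumptions the slides do not state: the error is taken as detected minus reference rate, the correlation is Pearson's r, and the standard deviation is the sample standard deviation. The function name is hypothetical.

```python
import math
import statistics


def agreement_stats(detected, reference):
    """Return (pearson_r, mean_error, stddev_error) for paired rates.

    Assumption: error = detected - reference for each utterance.
    Requires at least two utterances and non-constant inputs.
    """
    errors = [d - r for d, r in zip(detected, reference)]
    mean_d = statistics.fmean(detected)
    mean_r = statistics.fmean(reference)
    cov = sum((d - mean_d) * (r - mean_r) for d, r in zip(detected, reference))
    var_d = sum((d - mean_d) ** 2 for d in detected)
    var_r = sum((r - mean_r) ** 2 for r in reference)
    pearson_r = cov / math.sqrt(var_d * var_r)
    return pearson_r, statistics.fmean(errors), statistics.stdev(errors)


# Hypothetical rates (syllables per second) for three utterances.
detected = [4.0, 5.0, 6.0]
reference = [3.5, 4.5, 5.5]
print(agreement_stats(detected, reference))
```

A constant offset, as in this toy data, leaves the correlation at 1 while the mean error is non-zero, which is why the slides report both quantities.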

Conclusion
• We built a broad phonetic class recognizer, and applied it to syllable detection and speaking rate estimation. Its performance is comparable to state-of-the-art syllable detection and speaking rate estimation algorithms, and it is robust across different speech genres and different languages without tuning any parameters.
• Unlike previous algorithms, the broad phonetic class recognizer can automatically handle pauses and non-speech segments. This is a great advantage for estimating speaking rate in natural speech.
• Even with no language model involved, the broad phonetic class recognizer still performs well on syllable detection and speaking rate estimation, which opens up many opportunities for application.
