
Montague Meets Markov: Deep Semantics with Probabilistic Logical Form

Islam Beltagy, Cuong Chau, Gemma Boleda, Dan Garrette, Katrin Erk, Raymond Mooney

The University of Texas at Austin

(Pictured: Richard Montague and Andrey Markov)

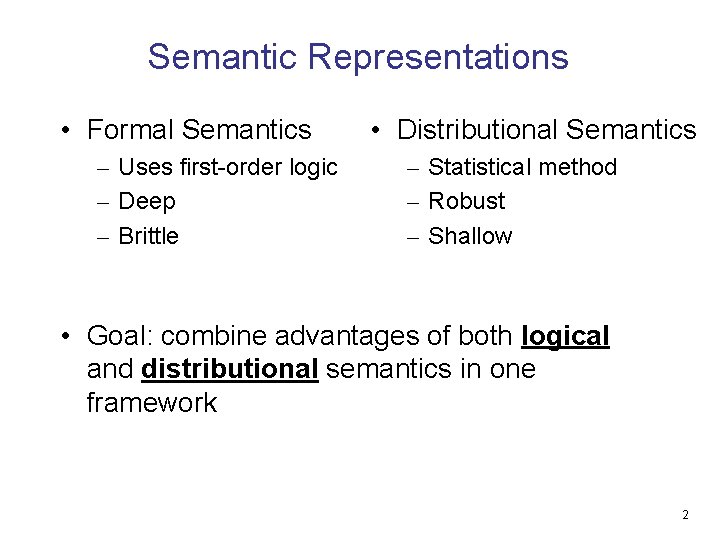

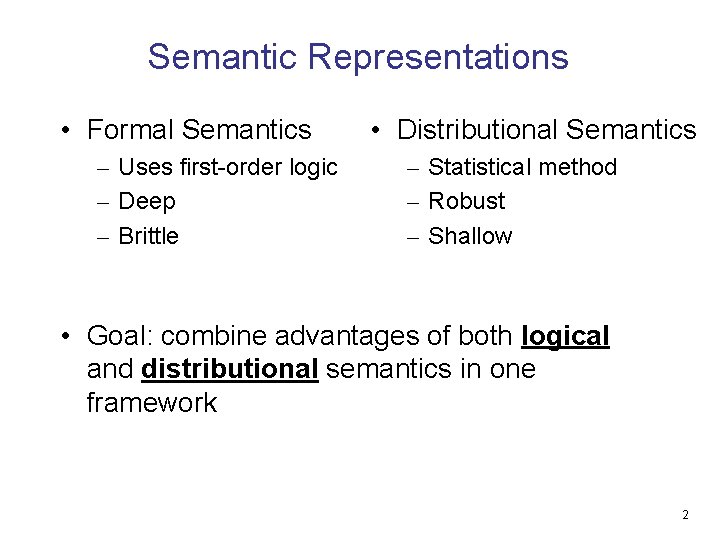

Semantic Representations

- Formal semantics
  - Uses first-order logic
  - Deep
  - Brittle
- Distributional semantics
  - Statistical method
  - Robust
  - Shallow
- Goal: combine the advantages of both logical and distributional semantics in one framework

Semantic Representations

- Combining both logical and distributional semantics
  - Represent meaning using a probabilistic logic (in contrast with standard first-order logic)
    - Markov Logic Network (MLN)
  - Generate soft inference rules
    - From distributional semantics
    - $\forall x\; hamster(x) \rightarrow gerbil(x) \mid f(w)$

Agenda

- Introduction
- Background: MLN
- RTE
- STS
- Future work and Conclusion


![Markov Logic Networks (Richardson & Domingos, 2006): soft FOL with weighted rules](https://slidetodoc.com/presentation_image_h2/a603fc7ad21cc2621fcb82d16099a9e8/image-6.jpg)

Markov Logic Networks [Richardson & Domingos, 2006]

- MLN: soft FOL
  - Weighted rules: each FOL rule is paired with a weight (shown in the slide figure)

![Markov Logic Networks (Richardson & Domingos, 2006): template for constructing Markov networks](https://slidetodoc.com/presentation_image_h2/a603fc7ad21cc2621fcb82d16099a9e8/image-7.jpg)

Markov Logic Networks [Richardson & Domingos, 2006]

- MLN: template for constructing Markov networks
- Two constants: Anna (A) and Bob (B)
- Ground Markov network (figure) with nodes such as Friends(A, A), Friends(B, A), Friends(B, B), Smokes(B), Cancer(A) and Cancer(B)
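To make the "template" idea concrete, here is a minimal sketch of grounding: every predicate is applied to every tuple of constants, and each ground atom becomes one node of the Markov network. The predicate arities and the example formula in the comments are assumptions based on the Friends/Smokes/Cancer example; `ground_atoms` and `groundings` are hypothetical helpers, not Alchemy functions.

```python
from itertools import product

# Toy domain from the slide: two constants, Anna (A) and Bob (B).
CONSTANTS = ["A", "B"]

# Predicate name -> arity (assumed from the Friends/Smokes/Cancer example).
PREDICATES = {"Friends": 2, "Smokes": 1, "Cancer": 1}


def ground_atoms(predicates, constants):
    """Apply each predicate to every tuple of constants of matching arity."""
    return [
        f"{name}({', '.join(args)})"
        for name, arity in predicates.items()
        for args in product(constants, repeat=arity)
    ]


def groundings(variables, constants):
    """Every substitution of constants for a formula's variables."""
    return [dict(zip(variables, values))
            for values in product(constants, repeat=len(variables))]


atoms = ground_atoms(PREDICATES, CONSTANTS)
print(len(atoms))  # 8 ground atoms, i.e. 8 nodes in the ground network
print(atoms)
# A two-variable formula such as Friends(x, y) -> (Smokes(x) <-> Smokes(y))
# has one grounding per (x, y) pair.
print(len(groundings(["x", "y"], CONSTANTS)))  # 4
```

Each grounding of a formula adds a clique over the ground atoms it mentions, which is why the network size grows quickly with the number of constants.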

![Markov Logic Networks (Richardson & Domingos, 2006): probability mass function over possible truth assignments](https://slidetodoc.com/presentation_image_h2/a603fc7ad21cc2621fcb82d16099a9e8/image-8.jpg)

Markov Logic Networks [Richardson & Domingos, 2006]

- Probability mass function (PMF) over possible truth assignments $x$:

$$P(X = x) = \frac{1}{Z} \exp\left(\sum_i w_i \, n_i(x)\right)$$

where $Z$ is the normalization constant, $w_i$ is the weight of formula $i$, and $n_i(x)$ is the number of true groundings of formula $i$ in $x$.

- Inference: calculate the probability of atoms
  - $P(Cancer(Anna) \mid Friends(Anna, Bob), Smokes(Bob))$
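For a domain this small, the PMF and the conditional query can be computed exactly by enumerating all worlds. The sketch below assumes two illustrative formulas and weights (smoking causes cancer, 1.5; friends have the same smoking habits, 1.1) that do not appear in the text above; real MLN systems such as Alchemy use approximate inference rather than enumeration.

```python
import math
from itertools import product

CONSTANTS = ["A", "B"]  # Anna and Bob

# All ground atoms, encoded as tuples: (predicate, arg1[, arg2]).
ATOMS = ([("Friends", x, y) for x in CONSTANTS for y in CONSTANTS]
         + [("Smokes", x) for x in CONSTANTS]
         + [("Cancer", x) for x in CONSTANTS])

# Illustrative weights (assumed for this sketch; not taken from the slides).
W_SMOKING = 1.5  # forall x: Smokes(x) -> Cancer(x)
W_FRIENDS = 1.1  # forall x, y: Friends(x, y) -> (Smokes(x) <-> Smokes(y))


def n_smoking(world):
    """Number of true groundings of Smokes(x) -> Cancer(x) in the world."""
    return sum(1 for c in CONSTANTS
               if not world[("Smokes", c)] or world[("Cancer", c)])


def n_friends(world):
    """Number of true groundings of Friends(x, y) -> (Smokes(x) <-> Smokes(y))."""
    return sum(1 for a in CONSTANTS for b in CONSTANTS
               if not world[("Friends", a, b)]
               or world[("Smokes", a)] == world[("Smokes", b)])


def weight_of(world):
    """Unnormalized PMF: exp(sum_i w_i * n_i(x))."""
    return math.exp(W_SMOKING * n_smoking(world) + W_FRIENDS * n_friends(world))


def worlds():
    """Enumerate all 2**8 = 256 truth assignments to the ground atoms."""
    for values in product([False, True], repeat=len(ATOMS)):
        yield dict(zip(ATOMS, values))


def prob(query, evidence):
    """P(query | evidence) by exact enumeration; Z cancels in the ratio."""
    consistent = [w for w in worlds()
                  if all(w[atom] == value for atom, value in evidence.items())]
    numerator = sum(weight_of(w) for w in consistent if w[query])
    return numerator / sum(weight_of(w) for w in consistent)


# Z itself is the sum of the unnormalized weights over every world.
Z = sum(weight_of(w) for w in worlds())

evidence = {("Friends", "A", "B"): True, ("Smokes", "B"): True}
print(prob(("Cancer", "A"), evidence))
```

Because $Z$ appears in both numerator and denominator of a conditional probability, the query never needs it explicitly; it is computed here only to mirror the formula.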


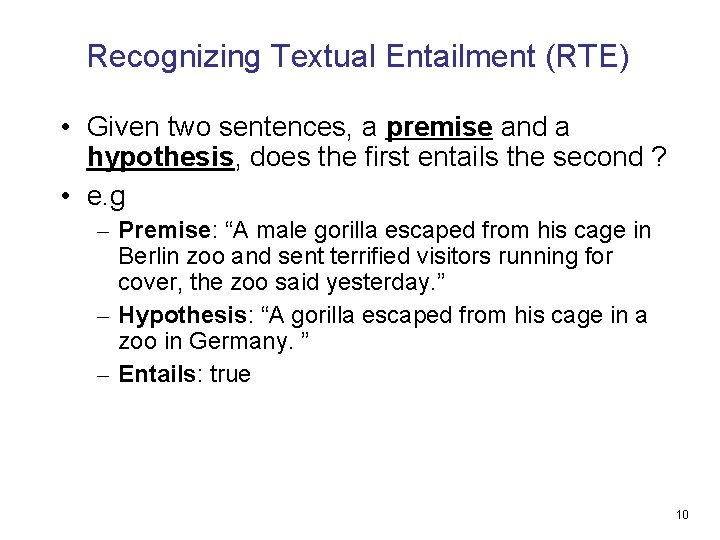

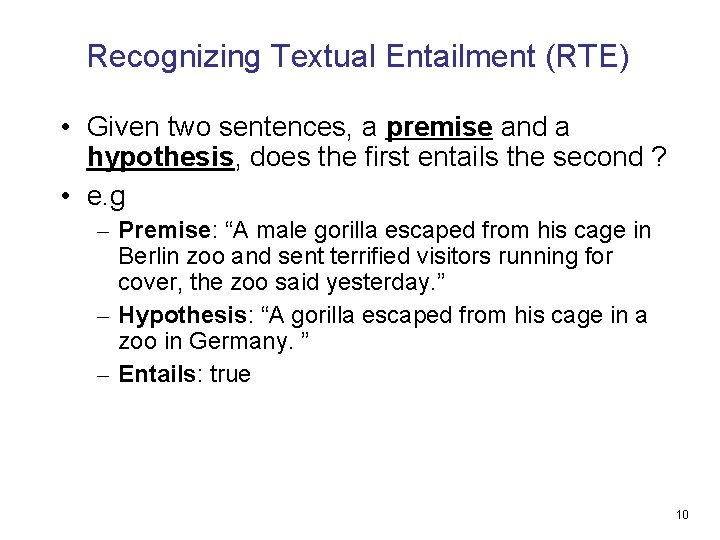

Recognizing Textual Entailment (RTE)

- Given two sentences, a premise and a hypothesis, does the first entail the second?
- Example:
  - Premise: “A male gorilla escaped from his cage in Berlin zoo and sent terrified visitors running for cover, the zoo said yesterday.”
  - Hypothesis: “A gorilla escaped from his cage in a zoo in Germany.”
  - Entails: true

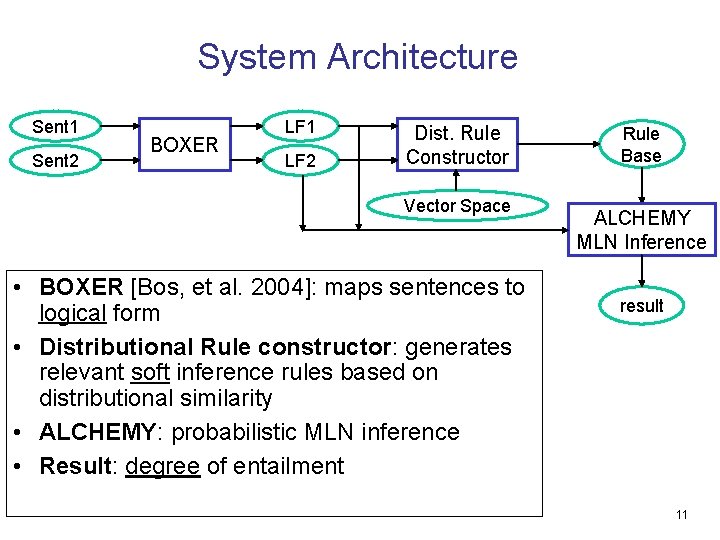

System Architecture

- Pipeline (diagram): Sent 1 and Sent 2 go through BOXER to produce LF 1 and LF 2; the Dist. Rule Constructor, drawing on a Vector Space, fills a Rule Base; LF 1, LF 2 and the Rule Base feed ALCHEMY MLN inference, which outputs the result
- BOXER [Bos et al., 2004]: maps sentences to logical form
- Distributional rule constructor: generates relevant soft inference rules based on distributional similarity
- ALCHEMY: probabilistic MLN inference
- Result: degree of entailment

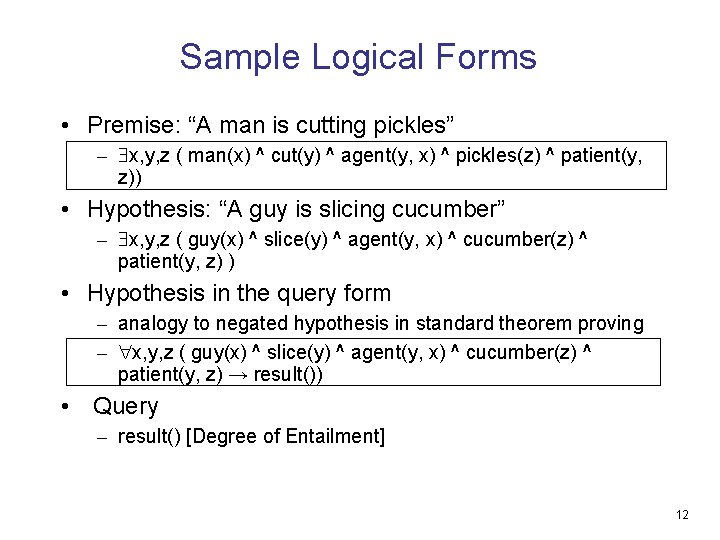

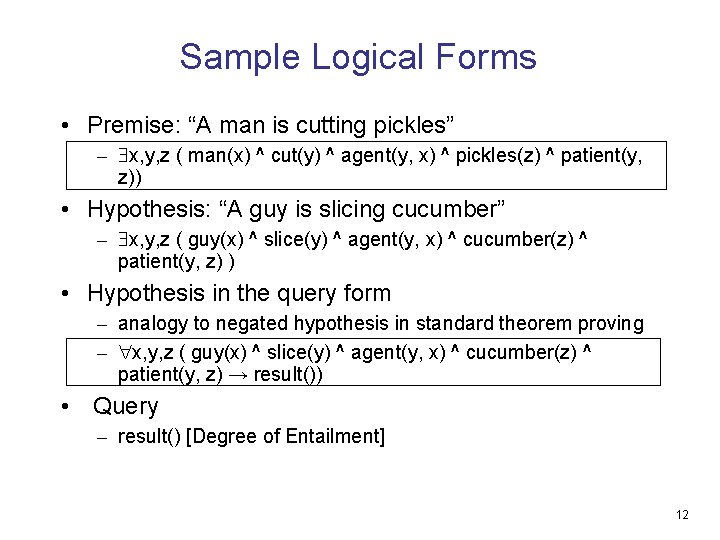

Sample Logical Forms

- Premise: “A man is cutting pickles”
  - $\exists x, y, z\; (man(x) \wedge cut(y) \wedge agent(y, x) \wedge pickles(z) \wedge patient(y, z))$
- Hypothesis: “A guy is slicing cucumber”
  - $\exists x, y, z\; (guy(x) \wedge slice(y) \wedge agent(y, x) \wedge cucumber(z) \wedge patient(y, z))$
- Hypothesis in query form (analogous to the negated hypothesis in standard theorem proving)
  - $\forall x, y, z\; (guy(x) \wedge slice(y) \wedge agent(y, x) \wedge cucumber(z) \wedge patient(y, z) \rightarrow result())$
- Query: $result()$ [degree of entailment]
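The step from the existential hypothesis to the query rule can be sketched as a plain string transformation. It is valid because $(\exists v\, \phi) \rightarrow result()$ is equivalent to $\forall v\, (\phi \rightarrow result())$ when $result()$ has no free variables. The ASCII notation (`forall`, `^`, `->`) and the helper `query_form` are assumptions for illustration, not BOXER or Alchemy syntax.

```python
def query_form(variables, conjuncts):
    """Rewrite an existential hypothesis as a universally quantified query rule.

    exists v (c1 ^ ... ^ cn)  becomes  forall v (c1 ^ ... ^ cn -> result())
    """
    body = " ^ ".join(conjuncts)
    return f"forall {', '.join(variables)} ({body} -> result())"


hypothesis = ["guy(x)", "slice(y)", "agent(y, x)", "cucumber(z)", "patient(y, z)"]
print(query_form(["x", "y", "z"], hypothesis))
# forall x, y, z (guy(x) ^ slice(y) ^ agent(y, x) ^ cucumber(z) ^ patient(y, z) -> result())
```

The probability that inference assigns to `result()` then serves as the degree of entailment.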

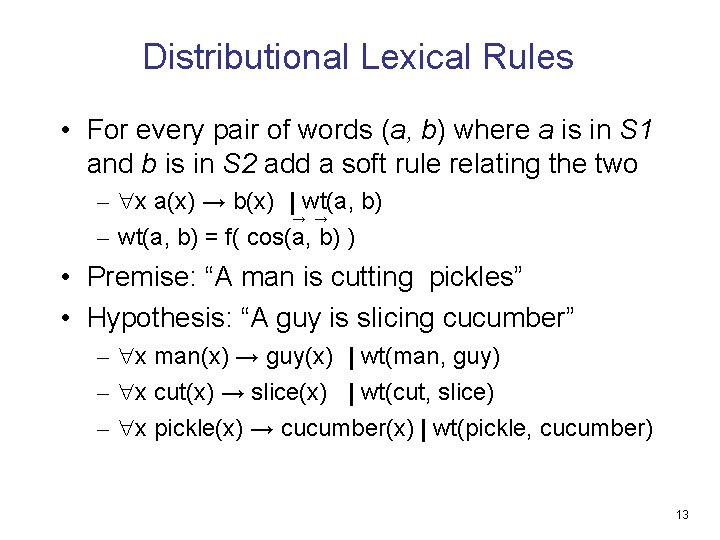

Distributional Lexical Rules

- For every pair of words $(a, b)$ where $a$ is in $S_1$ and $b$ is in $S_2$, add a soft rule relating the two:
  - $\forall x\; a(x) \rightarrow b(x) \mid wt(a, b)$
  - $wt(a, b) = f(\cos(\vec{a}, \vec{b}))$
- Premise: “A man is cutting pickles”
- Hypothesis: “A guy is slicing cucumber”
- Example rules:
  - $\forall x\; man(x) \rightarrow guy(x) \mid wt(man, guy)$
  - $\forall x\; cut(x) \rightarrow slice(x) \mid wt(cut, slice)$
  - $\forall x\; pickle(x) \rightarrow cucumber(x) \mid wt(pickle, cucumber)$
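A minimal sketch of the rule constructor for lexical rules: it pairs every word of $S_1$ with every word of $S_2$ and weights each rule by the cosine of their vectors. The 3-dimensional vectors are hypothetical toy values, and since the slide leaves $f$ unspecified, the identity function stands in for it here.

```python
import math
from itertools import product

# Hypothetical 3-d word vectors; a real system would use a corpus-derived vector space.
VECTORS = {
    "man": [0.9, 0.1, 0.0],
    "guy": [0.8, 0.2, 0.1],
    "cut": [0.1, 0.9, 0.2],
    "slice": [0.2, 0.8, 0.3],
    "pickle": [0.0, 0.3, 0.9],
    "cucumber": [0.1, 0.2, 0.9],
}


def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def f(sim):
    """Hypothetical similarity-to-weight mapping; the slide does not specify f."""
    return sim  # identity, purely as a placeholder


def lexical_rules(s1_words, s2_words, vectors):
    """One soft rule  forall x a(x) -> b(x) | wt(a, b)  per word pair (a in S1, b in S2)."""
    rules = {}
    for a, b in product(s1_words, s2_words):
        if a in vectors and b in vectors:  # skip words with no vector
            rules[f"forall x {a}(x) -> {b}(x)"] = f(cosine(vectors[a], vectors[b]))
    return rules


rules = lexical_rules(["man", "cut", "pickle"], ["guy", "slice", "cucumber"], VECTORS)
for rule, weight in sorted(rules.items(), key=lambda kv: -kv[1])[:3]:
    print(f"{rule} | {weight:.2f}")
```

All nine pairs receive a rule; dissimilar pairs such as man/cucumber simply get low weights, so they contribute little during inference.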

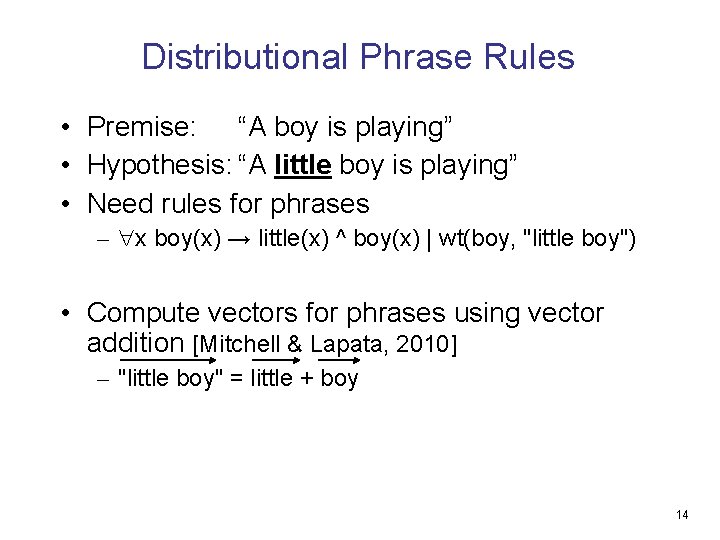

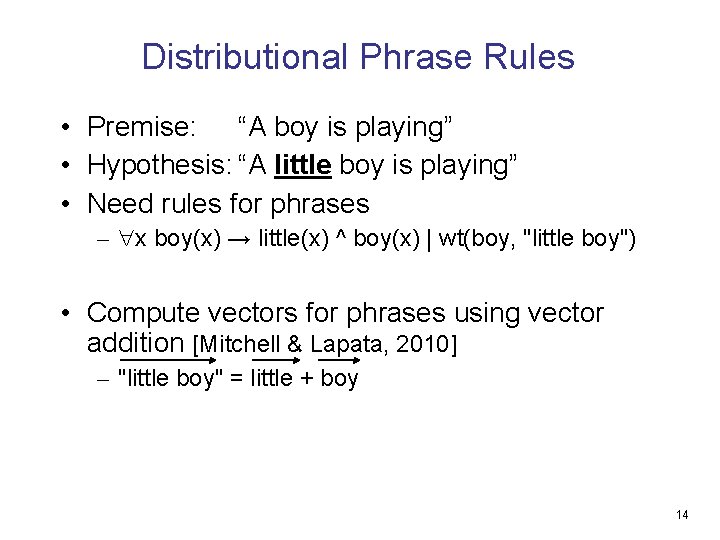

Distributional Phrase Rules

- Premise: “A boy is playing”
- Hypothesis: “A little boy is playing”
- Need rules for phrases
  - $\forall x\; boy(x) \rightarrow little(x) \wedge boy(x) \mid wt(boy, \text{"little boy"})$
- Compute vectors for phrases using vector addition [Mitchell & Lapata, 2010]
  - $\overrightarrow{\text{little boy}} = \overrightarrow{little} + \overrightarrow{boy}$
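Additive composition can be sketched in a few lines: the phrase vector is the element-wise sum of its word vectors, and the phrase rule is weighted by the cosine between the single word and the phrase. The toy vectors and the helpers `phrase_vector` and `phrase_rule` are hypothetical, and cosine again stands in for the unspecified mapping $f$.

```python
import math

# Hypothetical toy vectors chosen only to illustrate the arithmetic.
VECTORS = {"little": [0.0, 1.0, 0.5], "boy": [1.0, 0.0, 0.5]}


def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def phrase_vector(phrase, vectors):
    """Additive composition: element-wise sum of the phrase's word vectors."""
    word_vecs = [vectors[w] for w in phrase.split()]
    return [sum(components) for components in zip(*word_vecs)]


def phrase_rule(word, phrase, vectors):
    """Soft rule  forall x word(x) -> w1(x) ^ ... ^ wn(x), weighted by cosine (placeholder for f)."""
    rhs = " ^ ".join(f"{w}(x)" for w in phrase.split())
    weight = cosine(vectors[word], phrase_vector(phrase, vectors))
    return f"forall x {word}(x) -> {rhs}", weight


print(phrase_vector("little boy", VECTORS))  # [1.0, 1.0, 1.0]
print(phrase_rule("boy", "little boy", VECTORS))
```

Vector addition is order-insensitive, so "little boy" and "boy little" get the same vector; that is a known limitation of this composition method.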

![Preliminary results on RTE-1 (2005): system accuracy](https://slidetodoc.com/presentation_image_h2/a603fc7ad21cc2621fcb82d16099a9e8/image-15.jpg)

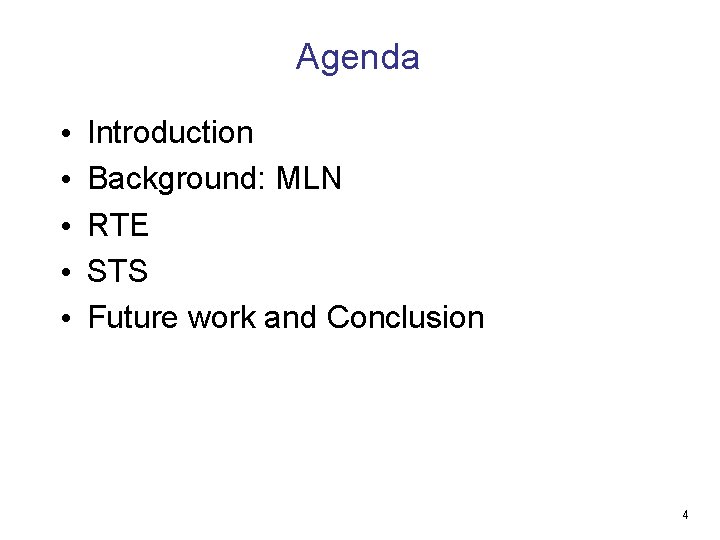

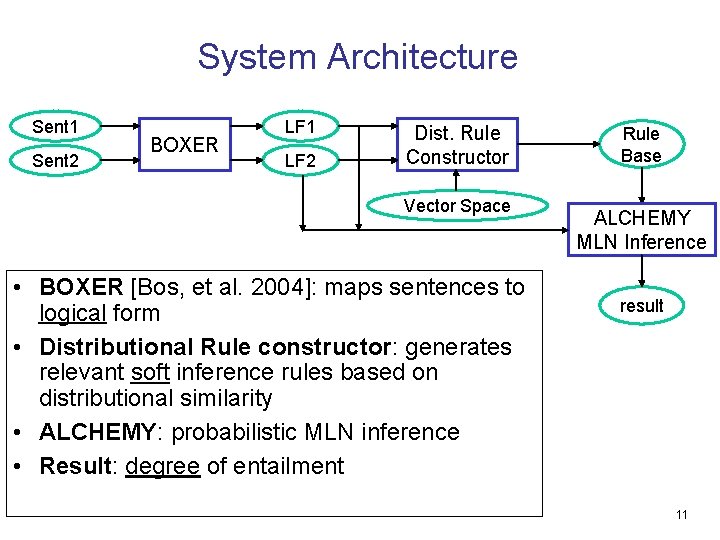

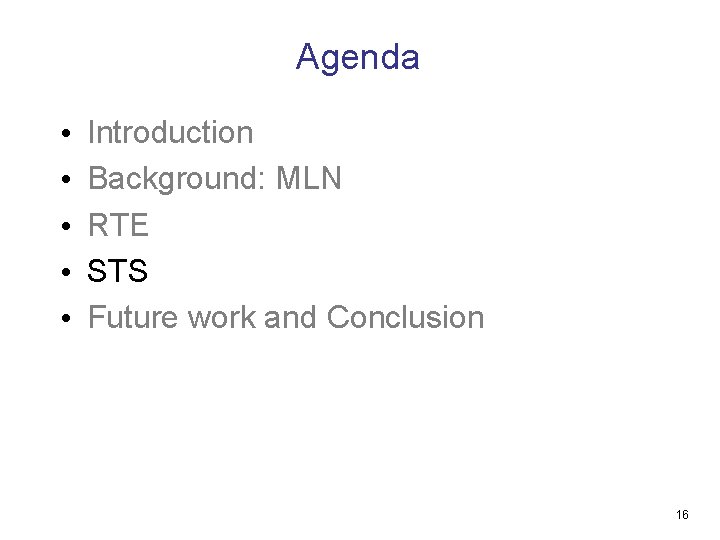

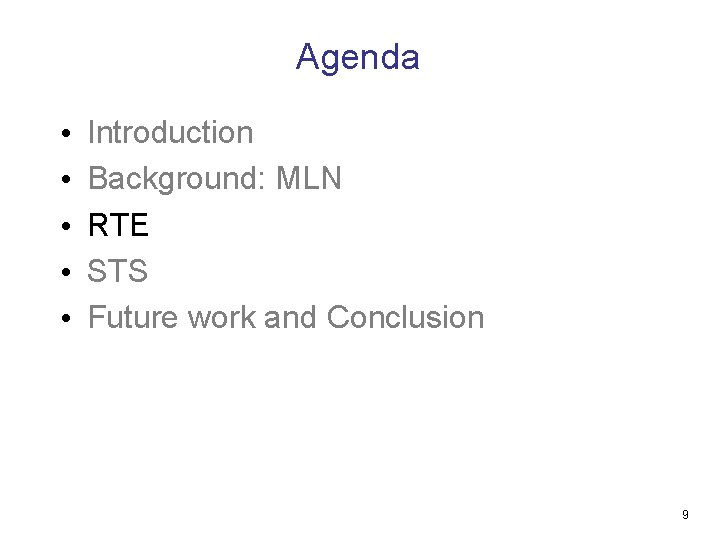

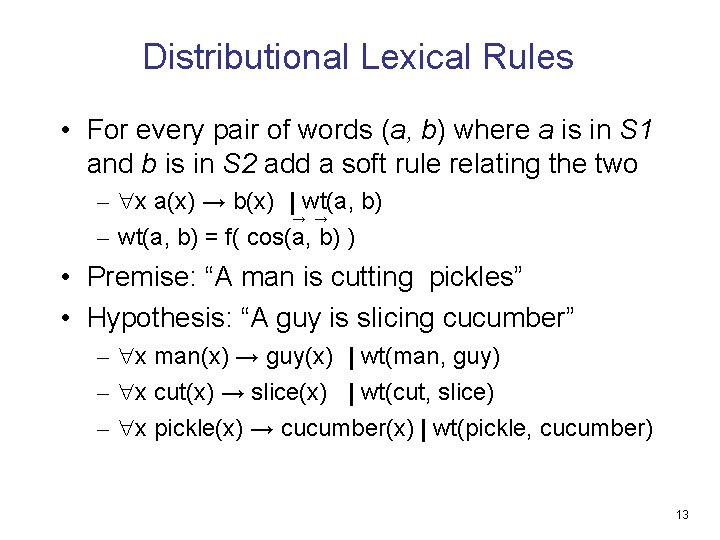

Preliminary Results: RTE-1 (2005)

| System | Accuracy |
|---|---|
| Logic only [Bos & Markert, 2005] | 52% |
| Our System | 57% |


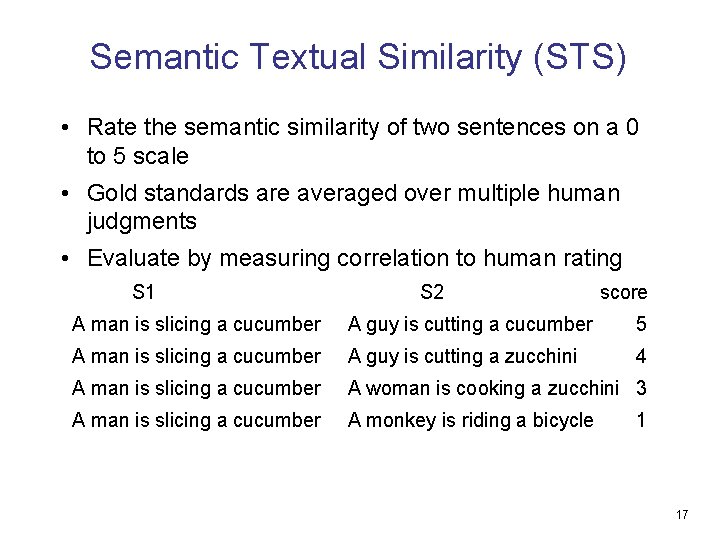

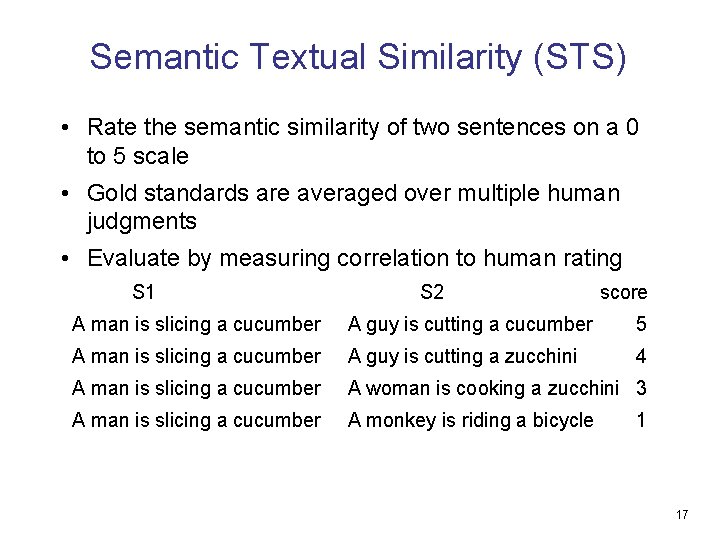

Semantic Textual Similarity (STS)

- Rate the semantic similarity of two sentences on a 0 to 5 scale
- Gold standards are averaged over multiple human judgments
- Evaluate by measuring correlation with human ratings

| S1 | S2 | Score |
|---|---|---|
| A man is slicing a cucumber | A guy is cutting a cucumber | 5 |
| A man is slicing a cucumber | A guy is cutting a zucchini | 4 |
| A man is slicing a cucumber | A woman is cooking a zucchini | 3 |
| A man is slicing a cucumber | A monkey is riding a bicycle | 1 |
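Since STS systems are evaluated by correlation with human ratings (Pearson r in the results reported here), a minimal implementation of the coefficient is sketched below. The gold scores come from the example table; the predicted scores are hypothetical. On Python 3.10+, `statistics.correlation` computes the same quantity.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length score lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


gold = [5, 4, 3, 1]               # gold scores of the four example pairs
predicted = [4.6, 4.1, 2.5, 1.2]  # hypothetical system outputs
print(round(pearson(gold, predicted), 3))
```

Pearson r rewards getting the relative ordering and spacing of scores right, so a system need not output values on exactly the 0 to 5 scale to score well.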

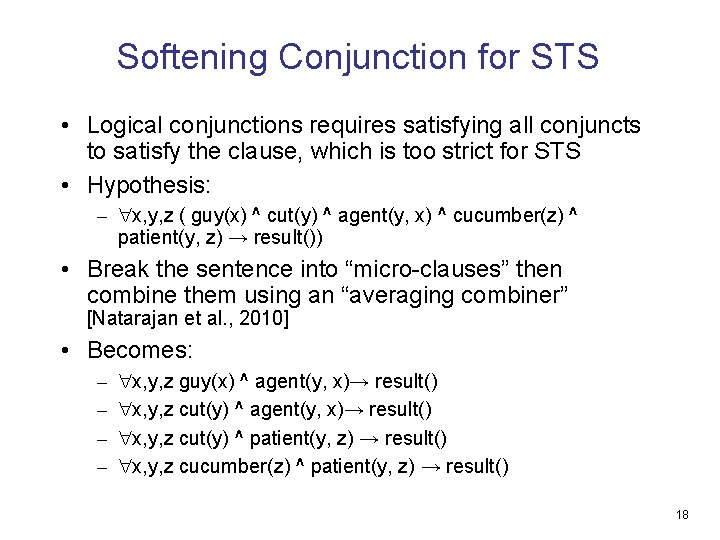

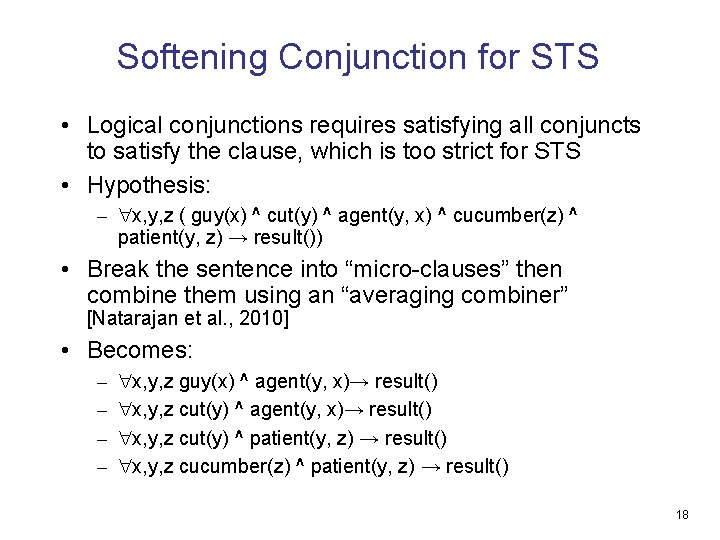

Softening Conjunction for STS

- Logical conjunction requires every conjunct to be satisfied for the clause to be satisfied, which is too strict for STS
- Hypothesis:
  - $\forall x, y, z\; (guy(x) \wedge cut(y) \wedge agent(y, x) \wedge cucumber(z) \wedge patient(y, z) \rightarrow result())$
- Break the sentence into “micro-clauses”, then combine them using an “averaging combiner” [Natarajan et al., 2010]
- Becomes:
  - $\forall x, y, z\; guy(x) \wedge agent(y, x) \rightarrow result()$
  - $\forall x, y, z\; cut(y) \wedge patient(y, z) \rightarrow result()$
  - $\forall x, y, z\; cucumber(z) \wedge patient(y, z) \rightarrow result()$
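The intuition behind averaging can be shown with a deliberately simplified sketch: each micro-clause either fires or not against the premise, and the score is the fraction that fire, instead of the all-or-nothing result of a hard conjunction. This replaces MLN inference with set lookups, assumes the premise entities are already aligned to `x`, `y`, `z`, and is not the combiner as implemented in the system.

```python
# Micro-clauses from the slide, each given as the atoms in its body.
MICRO_CLAUSES = [
    [("guy", "x"), ("agent", "y", "x")],
    [("cut", "y"), ("patient", "y", "z")],
    [("cucumber", "z"), ("patient", "y", "z")],
]


def satisfied(body, facts):
    """A micro-clause fires when every atom in its body holds in the premise."""
    return all(atom in facts for atom in body)


def conjunction_score(clauses, facts):
    """Hard conjunction: 1 only if all micro-clauses are satisfied, else 0."""
    return 1.0 if all(satisfied(c, facts) for c in clauses) else 0.0


def averaging_score(clauses, facts):
    """Averaging (hard-logic simplification): fraction of satisfied micro-clauses."""
    return sum(satisfied(c, facts) for c in clauses) / len(clauses)


# Premise "A guy is cutting a zucchini", entities assumed pre-aligned to x, y, z.
premise = {("guy", "x"), ("cut", "y"), ("agent", "y", "x"),
           ("zucchini", "z"), ("patient", "y", "z")}

print(conjunction_score(MICRO_CLAUSES, premise))  # 0.0
print(averaging_score(MICRO_CLAUSES, premise))    # 0.6666666666666666
```

A single mismatched word drops the hard conjunction to zero, while the averaged score degrades gracefully, which matches the graded judgments STS asks for.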

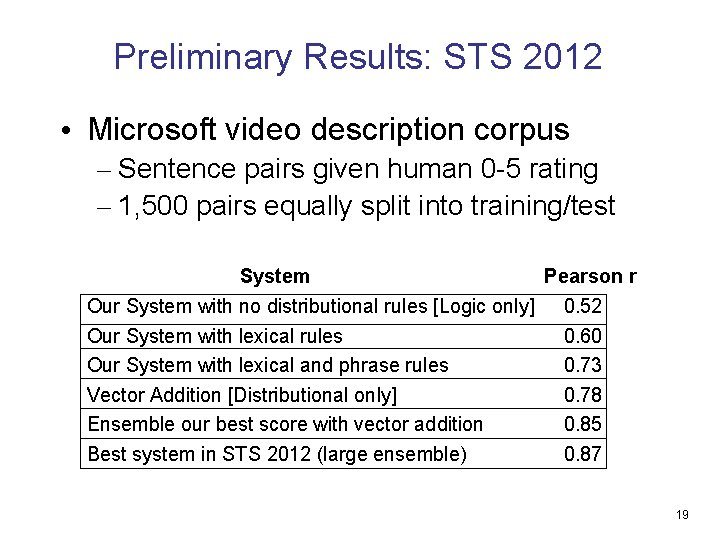

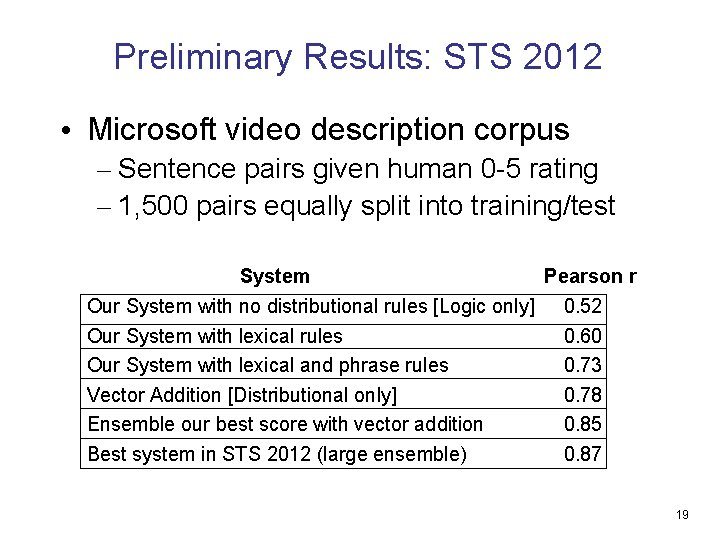

Preliminary Results: STS 2012

- Microsoft video description corpus
  - Sentence pairs given human 0-5 ratings
  - 1,500 pairs, split equally into training and test sets

| System | Pearson r |
|---|---|
| Our System with no distributional rules [Logic only] | 0.52 |
| Our System with lexical rules | 0.60 |
| Our System with lexical and phrase rules | 0.73 |
| Vector Addition [Distributional only] | 0.78 |
| Ensemble of our best score with vector addition | 0.85 |
| Best system in STS 2012 (large ensemble) | 0.87 |


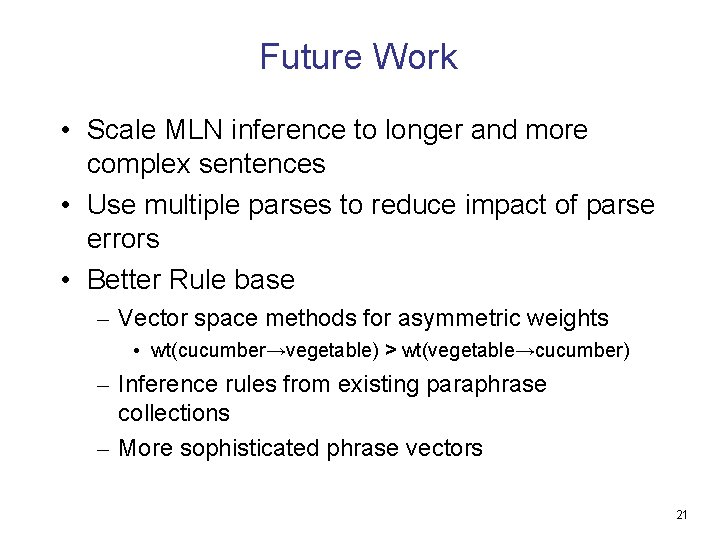

Future Work

- Scale MLN inference to longer and more complex sentences
- Use multiple parses to reduce the impact of parse errors
- Better rule base
  - Vector space methods for asymmetric weights
    - $wt(cucumber \rightarrow vegetable) > wt(vegetable \rightarrow cucumber)$
  - Inference rules from existing paraphrase collections
  - More sophisticated phrase vectors

Conclusion

- Using MLN to represent semantics
- Combining both logical and distributional approaches
  - Deep semantics: represent sentences using logic
  - Robust system:
    - Probabilistic logic and soft inference rules
    - Wide coverage of distributional semantics

Thank You