Retention Time Based Peak Clustering in Comprehensive Two-Dimensional Gas Chromatography

Retention Time Based Peak Clustering in Comprehensive Two-Dimensional Gas Chromatography

A presentation for the Multi-Dimensional Chromatography Systems, Informatics, and Applications Seminar

By Shilpa Deshpande, University of Nebraska-Lincoln

Outline of the Presentation

- Brief Introduction to GC×GC
- Motivation for Clustering in GC×GC
- Clustering Overview
- Implementation and Results
- Application
- Challenges
- Conclusion

10/21/2021

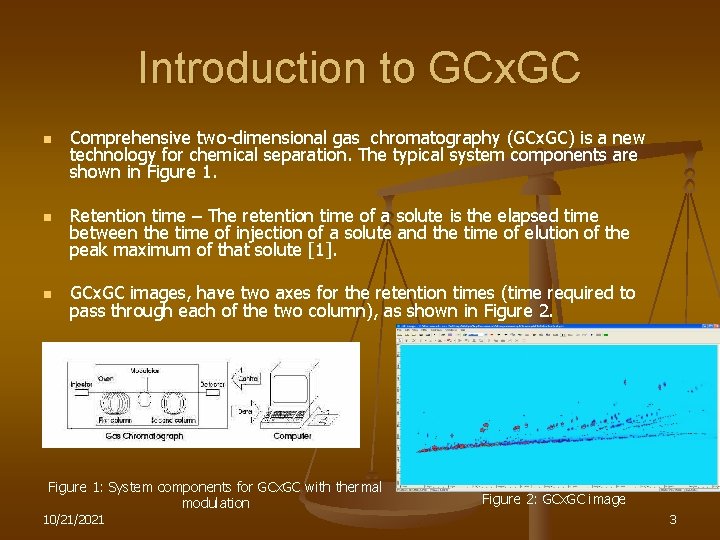

Introduction to GC×GC

- Comprehensive two-dimensional gas chromatography (GC×GC) is a new technology for chemical separation. The typical system components are shown in Figure 1.
- Retention time: the retention time of a solute is the elapsed time between the injection of the solute and the elution of that solute's peak maximum [1].
- GC×GC images have two retention-time axes, one for each of the two columns (the time required to pass through each column), as shown in Figure 2.

Figure 1: System components for GC×GC with thermal modulation
Figure 2: GC×GC image
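
How a single detector time maps onto the two retention-time axes can be illustrated with a minimal sketch, assuming a modulator with a fixed modulation period: the first-dimension time is taken as the start of the modulation cycle in which the peak eluted, and the second-dimension time is the time elapsed since that cycle began. The function names and the cycle-start convention are assumptions for illustration; instruments and software may report the first-dimension time differently.

```python
def fold_retention_time(t, modulation_period):
    """Split a detector time (s) into (first-dimension, second-dimension) retention times."""
    if modulation_period <= 0:
        raise ValueError("modulation_period must be positive")
    cycle = int(t // modulation_period)      # index of the modulation cycle
    t1 = cycle * modulation_period           # assumption: t1 = start time of that cycle
    t2 = t - t1                              # time spent in the second column
    return t1, t2


def fold_to_raster(signal, samples_per_modulation):
    """Reshape a 1-D detector signal into rows, one row per modulation cycle.

    The last row is shorter if the signal length is not a multiple of the row length.
    """
    n = samples_per_modulation
    return [signal[i:i + n] for i in range(0, len(signal), n)]


print(fold_retention_time(1234.5, 5.0))        # (1230.0, 4.5)
print(fold_to_raster([1, 2, 3, 4, 5, 6], 3))   # [[1, 2, 3], [4, 5, 6]]
```

Stacking the rows side by side is what turns the one-dimensional detector trace into the two-dimensional image.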

Motivation

- The relationship between chemical structure and position on the retention-time plane provides a basis for logical chemical interpretation of chromatograms and is an important benefit of GC×GC [2].
- Clustering, the process of grouping similar items, can expedite chemical quantification, which is of interest to chemists.

Clustering in GC Image™

- In GC Image™, templates are patterns of peaks and graphic objects observed in one or more images, used to recognize similar patterns of peaks in subsequent images.
- Template matching is the process of assigning the known information about peaks in one image to similar peaks in subsequent images.
- A peak template is a set of peaks whose metadata has been identified. A target peak set is a set of peaks whose metadata is to be determined.
- Retention time based peak clustering can be used to automate the construction of peak templates.
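
The idea of template matching can be sketched with a deliberately simplified rule: each template peak transfers its metadata (here just a name) to the nearest unused target peak within a retention-time tolerance. This greedy nearest-neighbour rule, the `tolerance` parameter, and the compound names are hypothetical; it is not GC Image™'s template-matching algorithm.

```python
import math


def match_template(template, targets, tolerance):
    """Greedy one-to-one matching of template peaks to target peaks.

    template: list of (name, t1, t2) for peaks whose metadata is known.
    targets:  list of (t1, t2) for the target peak set.
    Returns {target_index: name} for matches within `tolerance`.
    """
    assignments = {}
    used = set()
    for name, t1, t2 in template:
        best, best_d = None, tolerance
        for i, (u1, u2) in enumerate(targets):
            if i in used:
                continue
            d = math.hypot(t1 - u1, t2 - u2)   # Euclidean distance in retention-time space
            if d <= best_d:
                best, best_d = i, d
        if best is not None:
            assignments[best] = name          # transfer the known metadata
            used.add(best)
    return assignments


template = [("compound_A", 10.0, 2.0), ("compound_B", 12.0, 2.5)]
targets = [(12.1, 2.45), (10.05, 1.98), (30.0, 1.0)]
print(match_template(template, targets, tolerance=0.5))
# {1: 'compound_A', 0: 'compound_B'}
```

The third target peak stays unmatched because no template peak lies within the tolerance.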

Clustering Overview

- Clustering is the process of grouping data items into classes or clusters so that data items within a cluster are similar to each other but dissimilar to data items in other clusters [4].
- Clustering is a form of unsupervised learning in pattern recognition. Its objective is to "reveal" the structure of the data by organizing datapoints into "sensible" clusters (groups), which makes it possible to discover similarities and differences among datapoints and to derive useful conclusions about them [9].

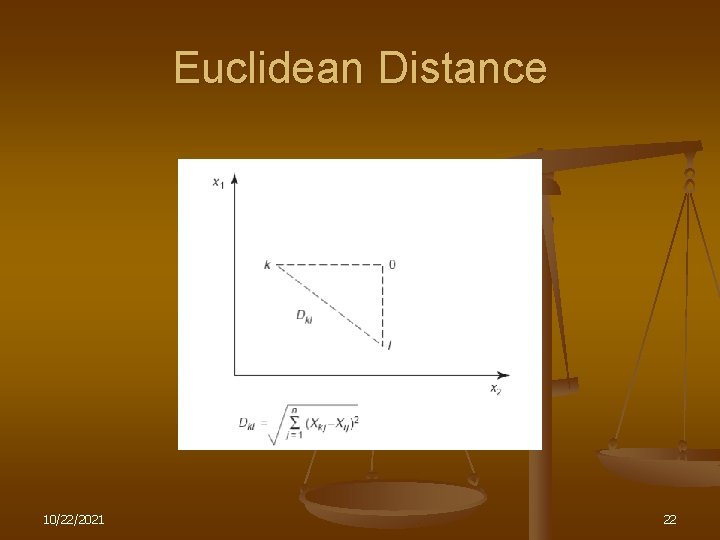

Clustering Tasks

- Feature selection: primary and secondary column retention times.
- Proximity measure definition: Euclidean distance.
- Clustering criterion selection: minimize or maximize the proximity measure.
- Clustering algorithm definition: reveal the structure of the data.
- Result validation: cophenetic correlation coefficient.
- Result interpretation: mass spectral analysis, CLIC.
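
The result-validation step can be sketched as follows. The cophenetic correlation coefficient is the Pearson correlation between the original pairwise distances and the cophenetic distances, that is, the dendrogram heights at which each pair of points is first joined. In this sketch the four peaks and their cophenetic distances are hypothetical and entered by hand; in practice the heights come from the hierarchical clustering run.

```python
import math
from itertools import combinations


def pearson(x, y):
    """Pearson correlation of two equal-length sequences (inputs must not be constant)."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def cophenetic_correlation(points, cophenetic):
    """points: list of (t1, t2); cophenetic: {(i, j): merge height} for every pair i < j."""
    pairs = list(combinations(range(len(points)), 2))
    original = [math.dist(points[i], points[j]) for i, j in pairs]   # Euclidean distances
    merged = [cophenetic[(i, j)] for i, j in pairs]
    return pearson(original, merged)


# Hypothetical peaks: two tight groups, joined by single linkage at heights 1 and 10.
points = [(0.0, 0.0), (0.0, 1.0), (10.0, 0.0), (10.0, 1.0)]
cophenetic = {(0, 1): 1.0, (2, 3): 1.0,
              (0, 2): 10.0, (0, 3): 10.0, (1, 2): 10.0, (1, 3): 10.0}
r = cophenetic_correlation(points, cophenetic)
print(r)
```

A value close to 1 indicates that the dendrogram preserves the original retention-time distances well.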

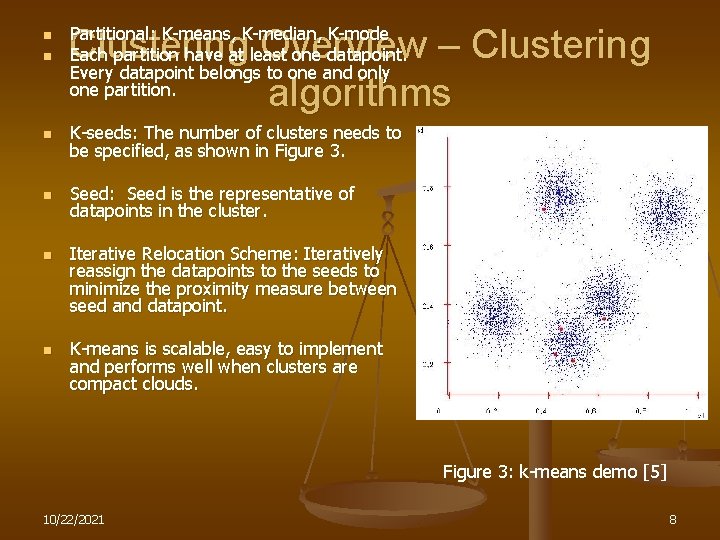

Clustering Overview: Clustering Algorithms

- Partitional: k-means, k-medians, k-modes. Each partition has at least one datapoint, and every datapoint belongs to exactly one partition.
- k seeds: the number of clusters, k, must be specified in advance, as shown in Figure 3.
- Seed: a seed is the representative of the datapoints in its cluster.
- Iterative relocation scheme: datapoints are iteratively reassigned to seeds so as to minimize the proximity measure between each seed and its datapoints.
- k-means is scalable, easy to implement, and performs well when clusters are compact clouds.

Figure 3: k-means demo [5]
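
The iterative relocation scheme can be sketched as Lloyd's k-means, assuming each peak is a (t1, t2) tuple and the k seeds are drawn at random from the data. The function names and the `max_iter` and `seed` parameters are illustrative assumptions, not GC Image™'s implementation.

```python
import random


def sq_dist(p, q):
    """Squared Euclidean distance between two (t1, t2) points."""
    return (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2


def kmeans(points, k, max_iter=100, seed=0):
    """Lloyd's k-means. Returns (labels, seeds); labels[i] is the cluster of points[i]."""
    rng = random.Random(seed)
    seeds = rng.sample(points, k)          # initial seeds: k datapoints drawn without replacement
    labels = None
    for _ in range(max_iter):
        # Assignment step: each datapoint joins its nearest seed.
        new_labels = [min(range(k), key=lambda c: sq_dist(p, seeds[c])) for p in points]
        if new_labels == labels:
            break                          # no datapoint moved: converged
        labels = new_labels
        # Update step: move each seed to the mean of its datapoints.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:                    # an empty cluster keeps its old seed
                seeds[c] = (sum(m[0] for m in members) / len(members),
                            sum(m[1] for m in members) / len(members))
    return labels, seeds


peaks = [(1.0, 1.0), (1.2, 0.9), (0.8, 1.1), (8.0, 8.0), (8.1, 7.9), (7.9, 8.2)]
labels, seeds = kmeans(peaks, k=2)
print(labels, seeds)
```

Because the result depends on the initial seeds, running with a few different `seed` values and keeping the tightest clustering is a common safeguard.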

Clustering Overview: Clustering Algorithms

- Hierarchical: hierarchical algorithms produce a hierarchy of nested clusterings [5], usually represented as a dendrogram.
- Approaches:
  - Agglomerative: the initial number of clusters equals the number of datapoints. Each step produces a new clustering from the previous one by merging two clusters into one.
  - Divisive: the initial number of clusters is one. Each step produces a new clustering from the previous one by splitting one cluster into two.
- Advantage: hierarchical clustering can handle any form of similarity or distance and can be applied to any feature type.
- Disadvantages:
  - No stopping criterion is defined.
  - Most hierarchical algorithms do not revisit clusters once they are constructed, so earlier merges or splits cannot be improved later [6].
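
The agglomerative approach can be sketched as follows, using single linkage as the cluster distance and a naive search over all cluster pairs, which is adequate for a few dozen peaks. Recording each merge with its height gives the dendrogram. The function name is hypothetical, and the divisive approach is not shown.

```python
import math
from itertools import combinations


def single_linkage_history(points):
    """Agglomerative clustering with single linkage.

    Starts with one cluster per point and merges the closest pair until one
    cluster remains. Returns the merge history as (members_a, members_b, height).
    """
    clusters = [[i] for i in range(len(points))]
    history = []
    while len(clusters) > 1:
        best = None
        for a, b in combinations(range(len(clusters)), 2):
            d = min(math.dist(points[i], points[j])
                    for i in clusters[a] for j in clusters[b])
            if best is None or d < best[0]:
                best = (d, a, b)
        height, a, b = best
        history.append((sorted(clusters[a]), sorted(clusters[b]), height))
        clusters[a] = clusters[a] + clusters[b]
        del clusters[b]                  # b > a, so position a is unaffected
    return history


for step in single_linkage_history([(0, 0), (0, 1), (10, 0), (10, 1)]):
    print(step)
# ([0], [1], 1.0)
# ([2], [3], 1.0)
# ([0, 1], [2, 3], 10.0)
```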

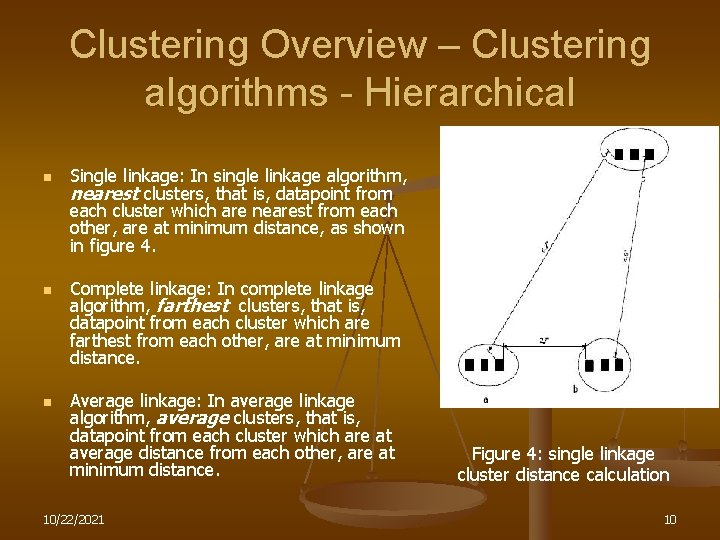

Clustering Overview: Clustering Algorithms (Hierarchical)

- Single linkage: the distance between two clusters is the distance between their nearest pair of datapoints (one from each cluster); at each step, the two clusters with the minimum such distance are merged, as shown in Figure 4.
- Complete linkage: the distance between two clusters is the distance between their farthest pair of datapoints; the two clusters with the minimum such distance are merged.
- Average linkage: the distance between two clusters is the average distance over all pairs of datapoints (one from each cluster); the two clusters with the minimum such distance are merged.

Figure 4: Single linkage cluster distance calculation
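
The three linkage criteria differ only in how they summarize the pairwise distances between two clusters. A minimal sketch with two small hypothetical clusters of (t1, t2) points:

```python
import math


def pairwise(A, B):
    """All Euclidean distances between a point of cluster A and a point of cluster B."""
    return [math.dist(a, b) for a in A for b in B]


def single_link(A, B):
    return min(pairwise(A, B))      # nearest pair


def complete_link(A, B):
    return max(pairwise(A, B))      # farthest pair


def average_link(A, B):
    d = pairwise(A, B)
    return sum(d) / len(d)          # mean over all pairs


A = [(0, 0), (0, 1)]
B = [(3, 0), (5, 0)]
print(single_link(A, B))    # 3.0
print(complete_link(A, B))  # sqrt(26), about 5.099
print(average_link(A, B))   # (3 + 5 + sqrt(10) + sqrt(26)) / 4, about 4.065
```

An agglomerative run then merges whichever pair of clusters minimizes the chosen criterion.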

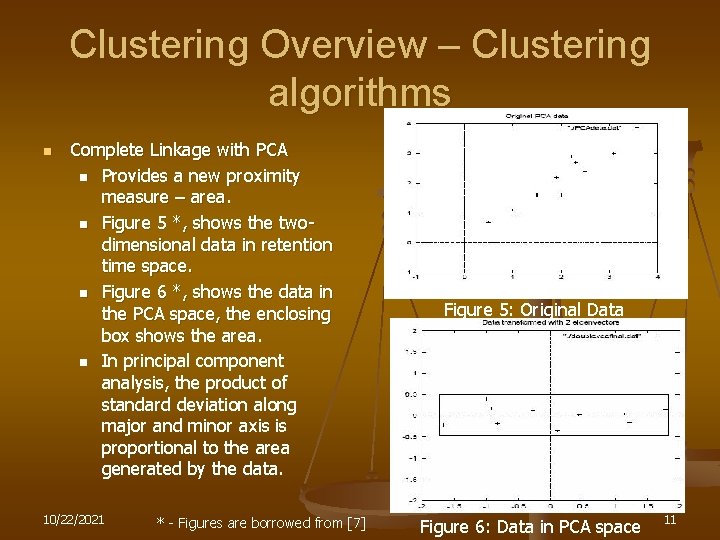

Clustering Overview: Clustering Algorithms

- Complete linkage with PCA
  - Provides a new proximity measure: area.
  - Figure 5 (*) shows the two-dimensional data in retention-time space.
  - Figure 6 (*) shows the data in PCA space; the enclosing box shows the area.
  - In principal component analysis, the product of the standard deviations along the major and minor axes is proportional to the area covered by the data.

(*) Figures are borrowed from [7].

Figure 5: Original data
Figure 6: Data in PCA space
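
The area measure can be sketched for a two-dimensional cluster: the standard deviations along the major and minor principal axes are the square roots of the eigenvalues of the 2×2 covariance matrix, so their product equals the square root of the covariance determinant. How the presentation's algorithm combines this area with complete linkage is not spelled out, so `closest_pair_by_area`, which merges the pair of clusters whose union has the smallest area, is a hypothetical illustration only.

```python
import math
from itertools import combinations


def pca_area(points):
    """Product of the standard deviations along the major and minor principal axes."""
    n = len(points)
    if n < 2:
        return 0.0
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    sxx = sum((p[0] - mx) ** 2 for p in points) / (n - 1)
    syy = sum((p[1] - my) ** 2 for p in points) / (n - 1)
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points) / (n - 1)
    # Eigenvalues of the symmetric covariance matrix [[sxx, sxy], [sxy, syy]].
    half_trace = (sxx + syy) / 2
    spread = math.sqrt(((sxx - syy) / 2) ** 2 + sxy ** 2)
    major = half_trace + spread
    minor = max(half_trace - spread, 0.0)      # clamp tiny negative rounding errors
    return math.sqrt(major) * math.sqrt(minor)


def closest_pair_by_area(clusters):
    """Hypothetical merge rule: indices of the two clusters whose union has the smallest area."""
    return min(combinations(range(len(clusters)), 2),
               key=lambda ab: pca_area(clusters[ab[0]] + clusters[ab[1]]))


print(pca_area([(0, 0), (1, 1), (2, 2)]))          # 0.0 (points on a line have no area)
print(pca_area([(0, 0), (2, 0), (0, 2), (2, 2)]))  # about 1.333
print(closest_pair_by_area([[(0, 0), (0, 1)], [(0, 2), (0, 3)], [(5, 0), (6, 1)]]))  # (0, 1)
```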

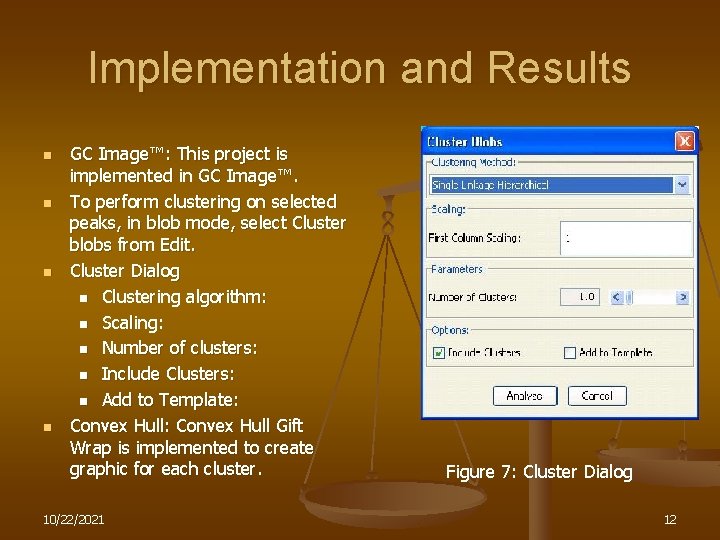

Implementation and Results

- GC Image™: this project is implemented in GC Image™. To perform clustering on selected peaks in blob mode, select Cluster blobs from the Edit menu.
- Cluster Dialog options:
  - Clustering algorithm
  - Scaling
  - Number of clusters
  - Include Clusters
  - Add to Template
- The gift-wrapping convex hull algorithm is implemented to create a graphic for each cluster.

Figure 7: Cluster Dialog
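
The gift-wrapping (Jarvis march) step can be sketched for the (t1, t2) points of one cluster. This is a generic textbook version under the assumptions that hull vertices are returned counter-clockwise and that collinear boundary points are skipped; it is not GC Image™'s code.

```python
def cross(o, a, b):
    """Z-component of (a - o) x (b - o); > 0 means b lies left of the ray o -> a."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def sq_dist(p, q):
    return (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2


def gift_wrap(points):
    """Convex hull by gift wrapping; vertices returned counter-clockwise."""
    pts = list(set(points))                  # drop duplicate peaks
    if len(pts) < 3:
        return sorted(pts)
    start = min(pts)                         # leftmost (then lowest) point is on the hull
    hull = [start]
    current = start
    while True:
        # Pick the next vertex so that no point lies to its right (clockwise side).
        candidate = pts[0] if pts[0] != current else pts[1]
        for p in pts:
            if p == current:
                continue
            turn = cross(current, candidate, p)
            if turn < 0 or (turn == 0 and sq_dist(current, p) > sq_dist(current, candidate)):
                candidate = p                # p is further clockwise, or collinear but farther
        current = candidate
        if current == start:
            break                            # wrapped all the way around
        hull.append(current)
    return hull


print(gift_wrap([(0, 0), (2, 0), (2, 2), (0, 2), (1, 1), (1, 0)]))
# [(0, 0), (2, 0), (2, 2), (0, 2)]
```

Gift wrapping costs O(nh) for n points and h hull vertices, which is cheap for the handful of peaks in a typical cluster.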

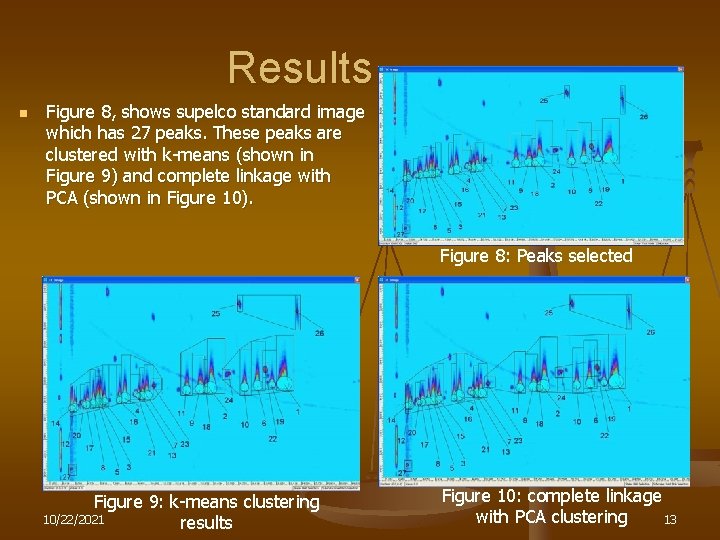

Results

- Figure 8 shows a Supelco standard image with 27 peaks. These peaks are clustered with k-means (Figure 9) and with complete linkage with PCA (Figure 10).

Figure 8: Peaks selected
Figure 9: k-means clustering results
Figure 10: Complete linkage with PCA clustering

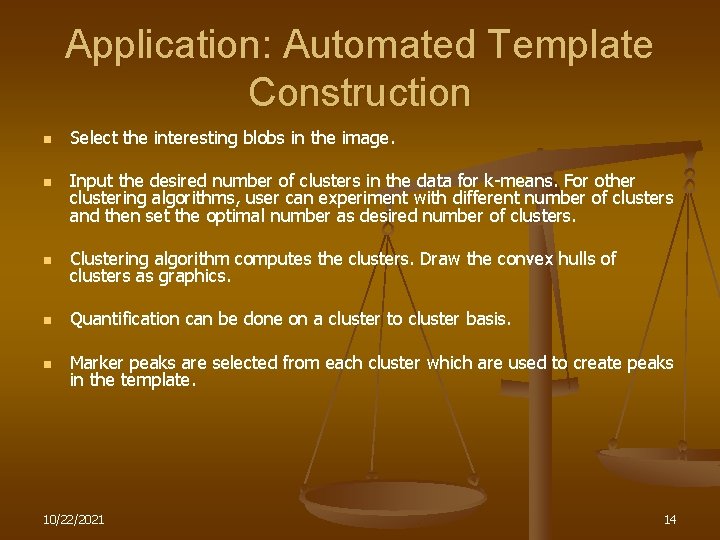

Application: Automated Template Construction

- Select the blobs of interest in the image.
- For k-means, input the desired number of clusters. For other clustering algorithms, the user can experiment with different numbers of clusters and then set the optimal number as the desired number of clusters.
- The clustering algorithm computes the clusters, and the convex hull of each cluster is drawn as a graphic.
- Quantification can be done on a cluster-by-cluster basis.
- Marker peaks are selected from each cluster and used to create peaks in the template.
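
The last two steps can be sketched together. The peak record layout (id, t1, t2, volume) is hypothetical, and because the presentation does not say how marker peaks are chosen, this sketch assumes the largest-volume peak in each cluster serves as its marker.

```python
from collections import defaultdict


def summarize_clusters(peaks, labels):
    """Per-cluster quantification and marker-peak choice.

    peaks:  list of (peak_id, t1, t2, volume) (hypothetical record layout)
    labels: cluster index for each peak, e.g. from k-means
    Returns {cluster: (total_volume, marker_peak_id)}.
    """
    groups = defaultdict(list)
    for peak, label in zip(peaks, labels):
        groups[label].append(peak)
    summary = {}
    for label, members in groups.items():
        total = sum(p[3] for p in members)                 # cluster-by-cluster quantification
        marker = max(members, key=lambda p: p[3])          # assumption: largest peak is the marker
        summary[label] = (total, marker[0])
    return summary


peaks = [("p1", 10.0, 2.0, 5.0), ("p2", 10.2, 2.1, 7.0), ("p3", 30.0, 1.0, 3.0)]
print(summarize_clusters(peaks, [0, 0, 1]))   # {0: (12.0, 'p2'), 1: (3.0, 'p3')}
```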

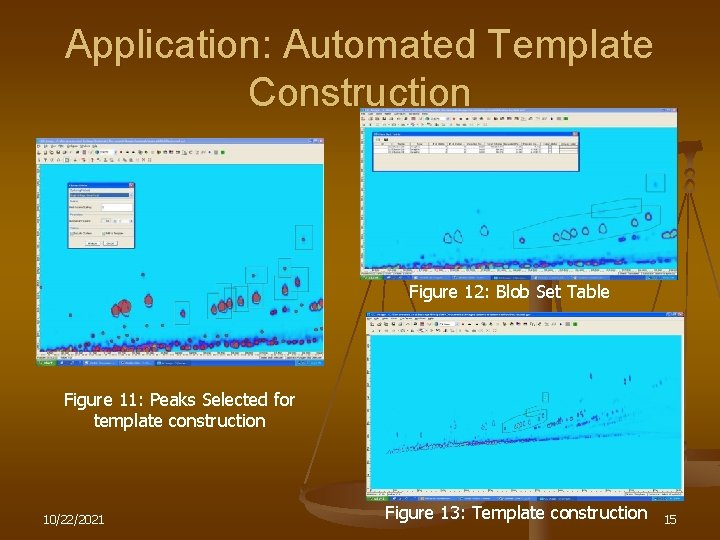

Application: Automated Template Construction

Figure 11: Peaks selected for template construction
Figure 12: Blob Set Table
Figure 13: Template construction

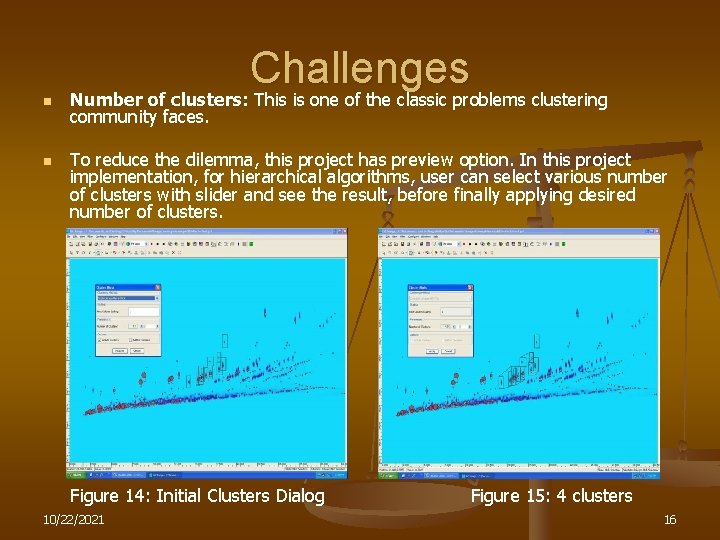

Challenges

- Number of clusters: this is one of the classic problems the clustering community faces. To ease the dilemma, this project provides a preview option: for hierarchical algorithms, the user can select various numbers of clusters with a slider and see the result before applying the desired number of clusters.

Figure 14: Initial Clusters Dialog
Figure 15: 4 clusters
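
A slider preview is cheap for hierarchical algorithms because a single agglomerative run already contains every coarser clustering; showing more clusters just means replaying fewer merges. A minimal sketch, assuming the run is recorded as an ordered list of (i, j) pairs, each naming one representative point from each of the two clusters merged at that step; `cut_dendrogram` is a hypothetical helper.

```python
def cut_dendrogram(n_points, merges, k):
    """Labels for k clusters obtained by replaying the first n_points - k merges.

    merges: ordered (i, j) point pairs from one agglomerative run.
    Labels are numbered 0..k-1 in order of first appearance.
    """
    if not 1 <= k <= n_points:
        raise ValueError("k must be between 1 and n_points")
    parent = list(range(n_points))           # union-find forest

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]    # path halving
            x = parent[x]
        return x

    for i, j in merges[: n_points - k]:
        parent[find(i)] = find(j)
    roots, labels = {}, []
    for p in range(n_points):
        labels.append(roots.setdefault(find(p), len(roots)))
    return labels


merges = [(0, 1), (2, 3), (0, 2), (0, 4)]    # recorded once from the clustering run
for k in range(1, 6):                        # what a slider from 1 to 5 clusters would show
    print(k, cut_dendrogram(5, merges, k))
```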

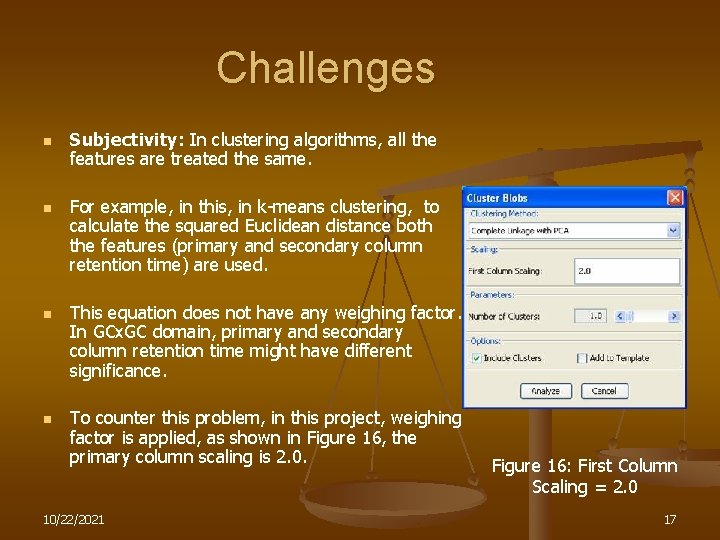

Challenges

- Subjectivity: clustering algorithms treat all features the same. For example, k-means clustering computes the squared Euclidean distance from both features (primary and secondary column retention times) with no weighting factor. In the GC×GC domain, however, primary and secondary column retention times may have different significance. To counter this problem, this project applies a weighting factor; in Figure 16, the primary column scaling is 2.0.

Figure 16: First Column Scaling = 2.0
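
The effect of a first-column scaling factor s can be sketched under one assumption: the scaling multiplies the primary-column retention-time difference before squaring, so d² = (s·Δt1)² + Δt2², and s = 1.0 gives the unweighted distance. Another plausible convention is to weight the squared term directly; the function names here are hypothetical.

```python
def weighted_sq_distance(p, q, first_column_scaling=1.0):
    """Squared Euclidean distance with the first-dimension difference scaled.

    Assumption: d^2 = (s * (p1 - q1))**2 + (p2 - q2)**2 with s = first_column_scaling.
    """
    d1 = first_column_scaling * (p[0] - q[0])
    d2 = p[1] - q[1]
    return d1 * d1 + d2 * d2


def nearest_seed(point, seeds, first_column_scaling=1.0):
    """Index of the seed closest to `point` under the weighted distance."""
    return min(range(len(seeds)),
               key=lambda i: weighted_sq_distance(point, seeds[i], first_column_scaling))


peak = (10.0, 2.0)
seeds = [(11.0, 2.0), (10.0, 3.5)]
print(nearest_seed(peak, seeds))                            # 0  (distances 1.0 vs 2.25)
print(nearest_seed(peak, seeds, first_column_scaling=2.0))  # 1  (distances 4.0 vs 2.25)
```

With s = 2.0 the same peak switches clusters, which is exactly the kind of domain-driven weighting the slide describes.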

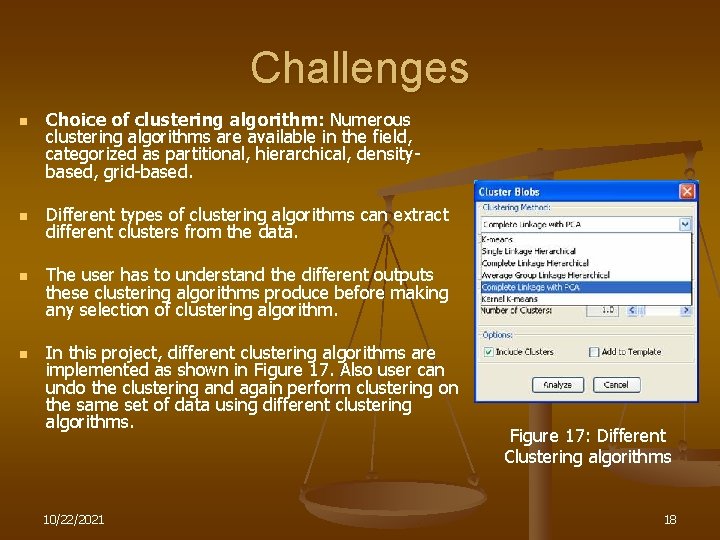

Challenges

- Choice of clustering algorithm: numerous clustering algorithms are available, categorized as partitional, hierarchical, density-based, and grid-based. Different types of clustering algorithms can extract different clusters from the same data, so the user has to understand the outputs these algorithms produce before selecting one. In this project, several clustering algorithms are implemented, as shown in Figure 17. The user can also undo a clustering and cluster the same set of data again with a different algorithm.

Figure 17: Different clustering algorithms

Conclusion

- Retention time based peak clustering in comprehensive two-dimensional gas chromatography is a new stream of research. Clustering in one- and two-dimensional gas chromatography has usually been done in the mass spectral domain [8]. Such approaches usually use principal component analysis to reduce the size of the data and then apply clustering algorithms in the reduced space.
- This project used an algorithm that performs clustering with principal component analysis to obtain natural clusters.
- This project proposes using these clusters to construct templates automatically.
- The choice of clustering algorithm and user parameters plays an important role in extracting clusters, which can be validated by experts or by other means such as a library search of the mass spectra.


References

- [1] "Retention time," http://www.chromatography-online.org/topics/retention/time.html.
- [2] R. B. Gaines, G. S. Frysinger, M. S. Hendrick-Smith, and J. D. Stuart, "Oil spill source identification by comprehensive two-dimensional gas chromatography," Environ. Sci. Technol., vol. 33, pp. 2106-2112, 1999.
- [3] GC Image™, http://www.gcimage.com.
- [4] J. Han and M. Kamber, Data Mining: Concepts and Techniques. San Francisco: Morgan Kaufmann Publishers, 2001.
- [5] A. W. Moore, "K-means and Hierarchical Clustering," tutorial slides, Carnegie Mellon University, http://www.cs.cmu.edu/~awm/tutorials.
- [6] P. Berkhin, Survey of Clustering Data Mining Techniques. Accrue Software, Inc.
- [7] L. I. Smith, "A tutorial on Principal Components Analysis," 2002.
- [8] K. Pierce, J. Hope, K. Johnson, B. Wright, and R. Synovec, "Classification of gasoline data obtained by gas chromatography using a piecewise alignment algorithm combined with feature selection and principal component analysis," Journal of Chromatography A, vol. 1096, pp. 101-110, 2005.
- [9] S. Theodoridis and K. Koutroumbas, Pattern Recognition. Academic Press, 1998.

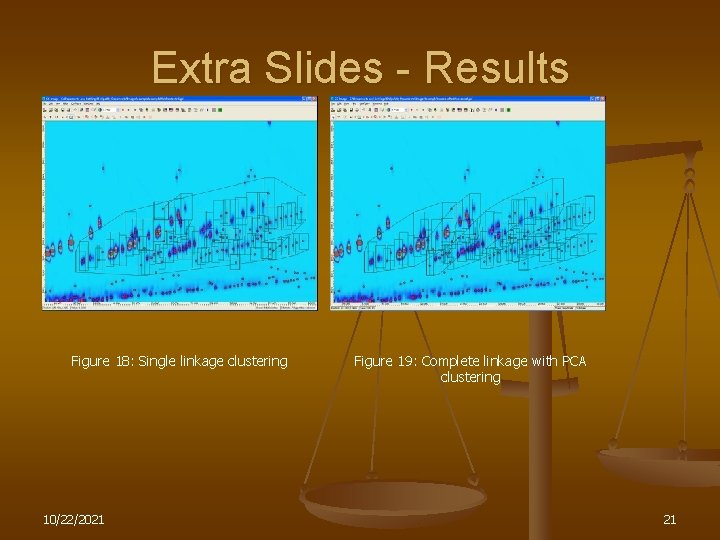

Extra Slides: Results

Figure 18: Single linkage clustering
Figure 19: Complete linkage with PCA clustering

Euclidean Distance
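
The formula on this slide did not survive extraction. Assuming it shows the standard definition, the Euclidean distance between two peaks x = (x_1, x_2) and y = (y_1, y_2), where the first coordinate is the primary column retention time and the second is the secondary column retention time, and its square (the form minimized by k-means) are:

```latex
d(\mathbf{x}, \mathbf{y}) = \sqrt{(x_1 - y_1)^2 + (x_2 - y_2)^2},
\qquad
d^2(\mathbf{x}, \mathbf{y}) = (x_1 - y_1)^2 + (x_2 - y_2)^2
```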
