Residual Attention Network for Wavelet Domain Super Resolution

- Slides: 18

Jing Liu, Yuan Xie, Haichuan Song, Wang Yuan, Lizhuang Ma

ICASSP 2020

- Introduction
- Method
- Experiments
- Conclusion

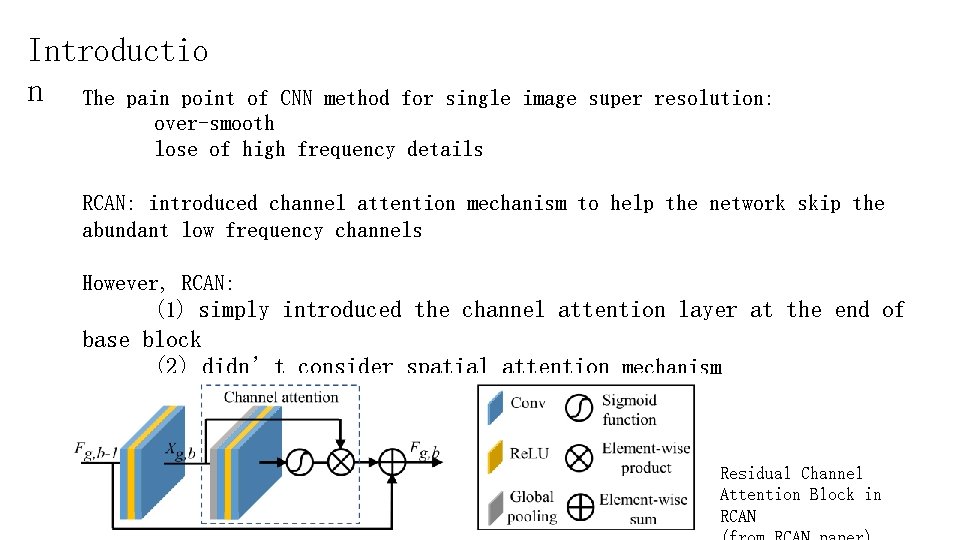

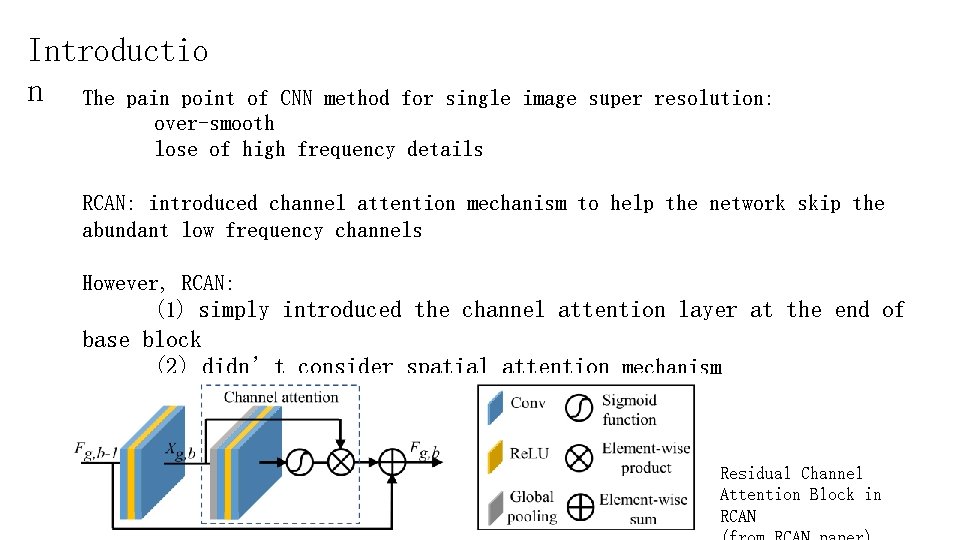

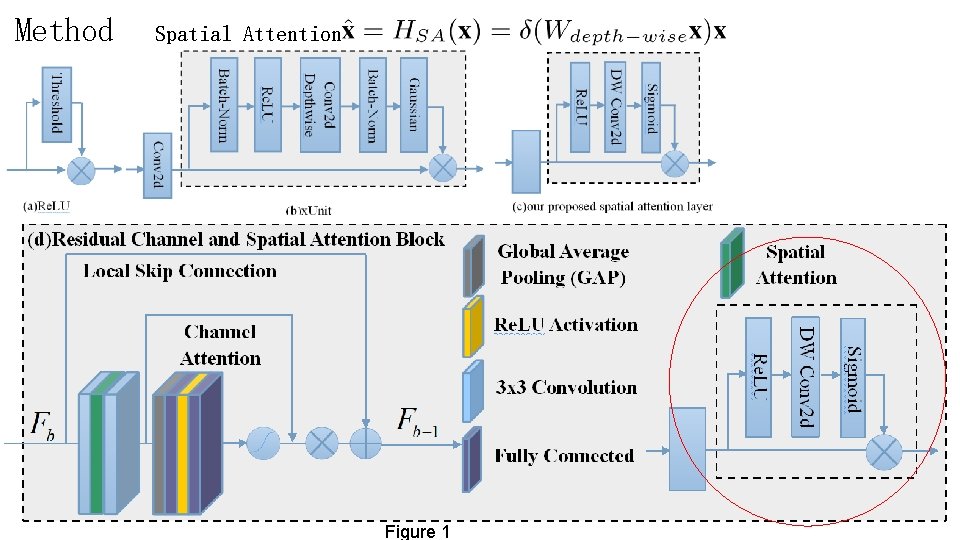

Introduction

The pain point of CNN methods for single image super resolution: over-smoothed results and loss of high-frequency details.

RCAN introduced a channel attention mechanism to help the network skip the abundant low-frequency channels.

However, RCAN:

- (1) simply introduced the channel attention layer at the end of the base block
- (2) didn't consider a spatial attention mechanism

Figure: Residual Channel Attention Block in RCAN

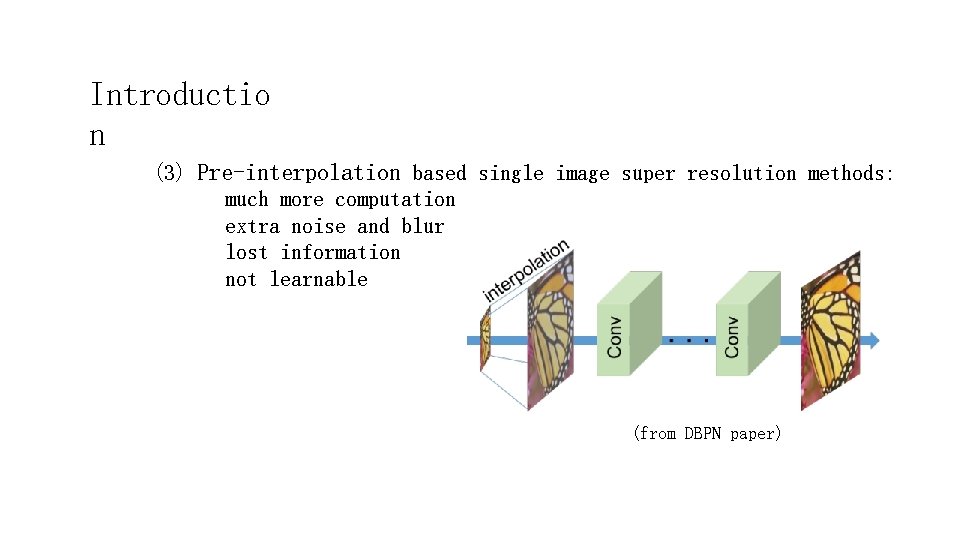

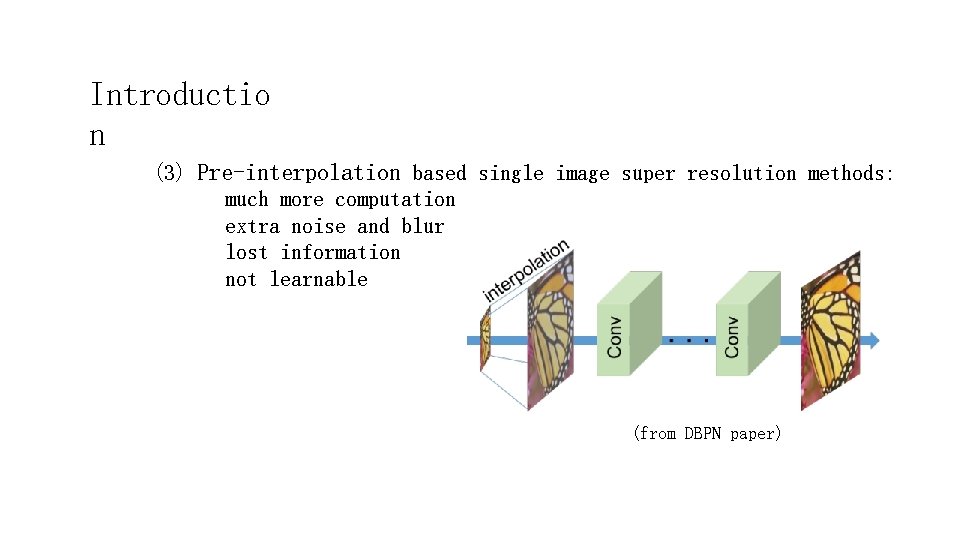

Introduction

(3) Pre-interpolation based single image super resolution methods:

- much more computation
- extra noise and blur
- lost information
- not learnable

(from DBPN paper)

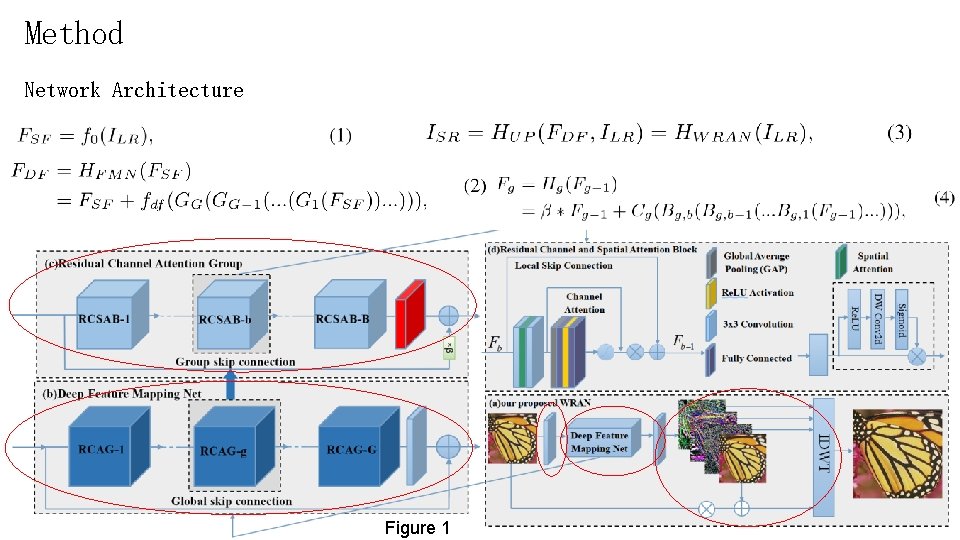

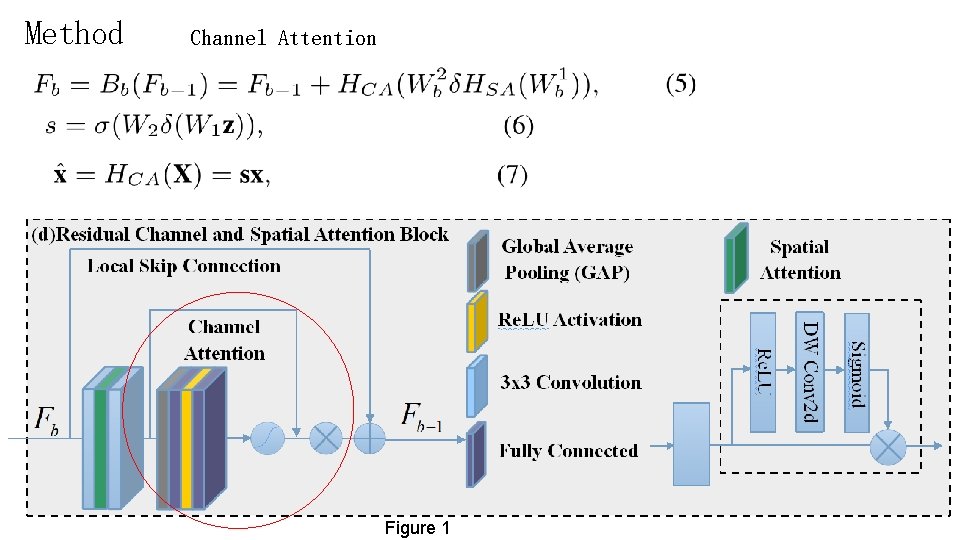

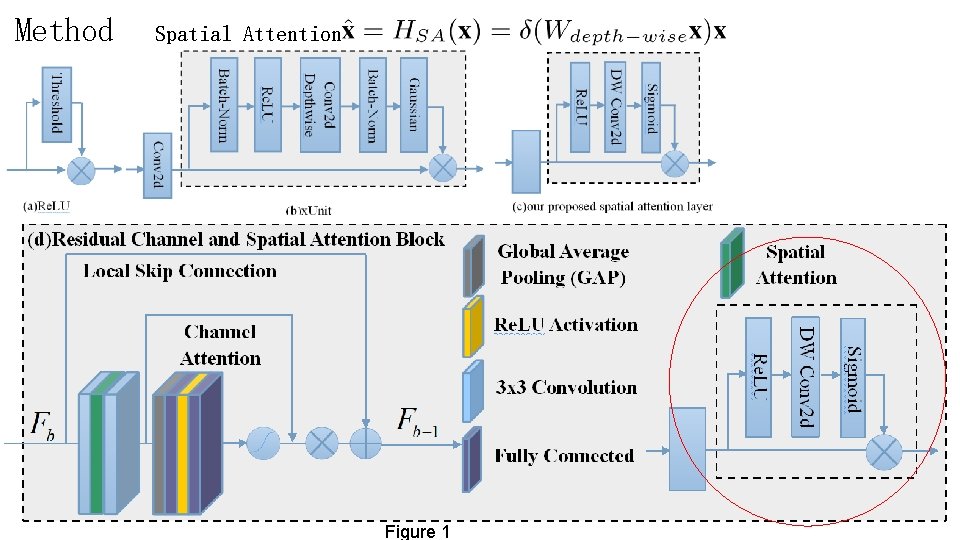

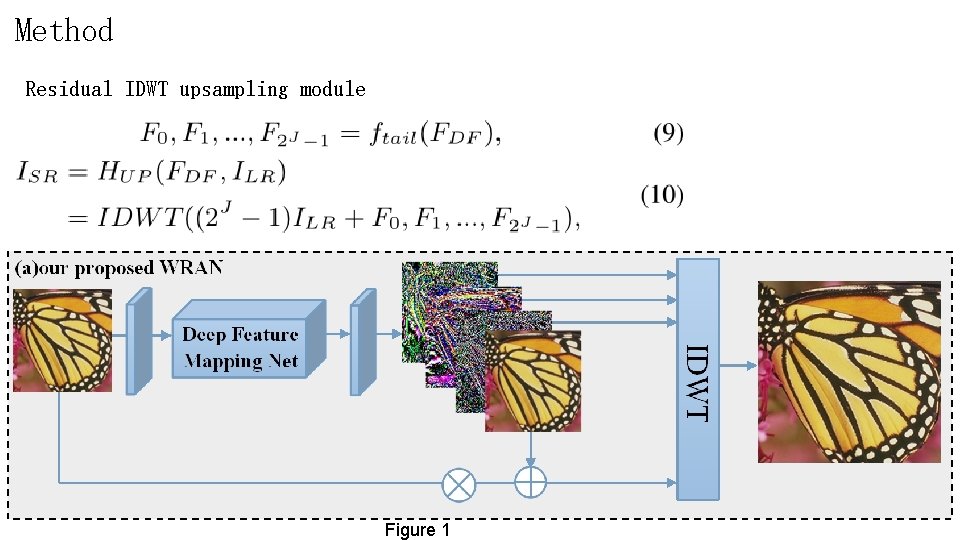

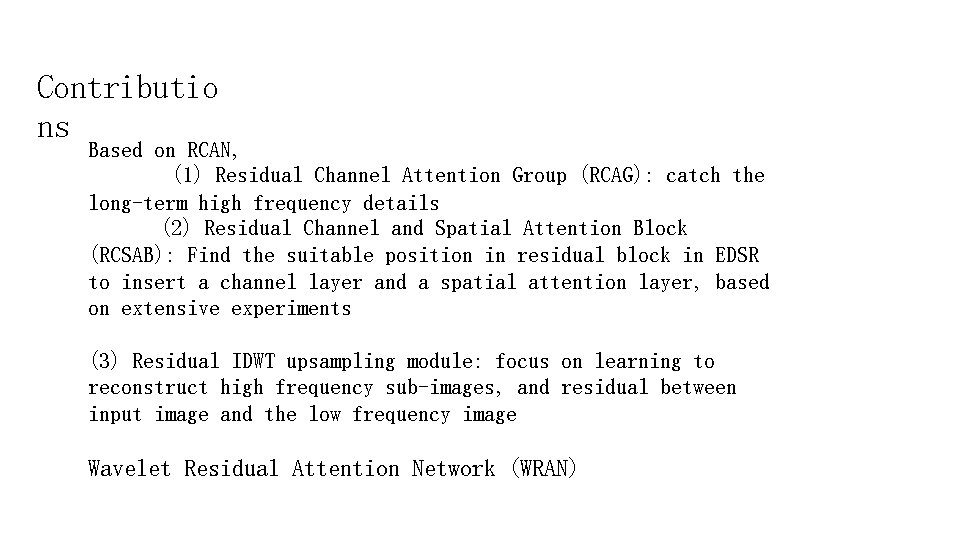

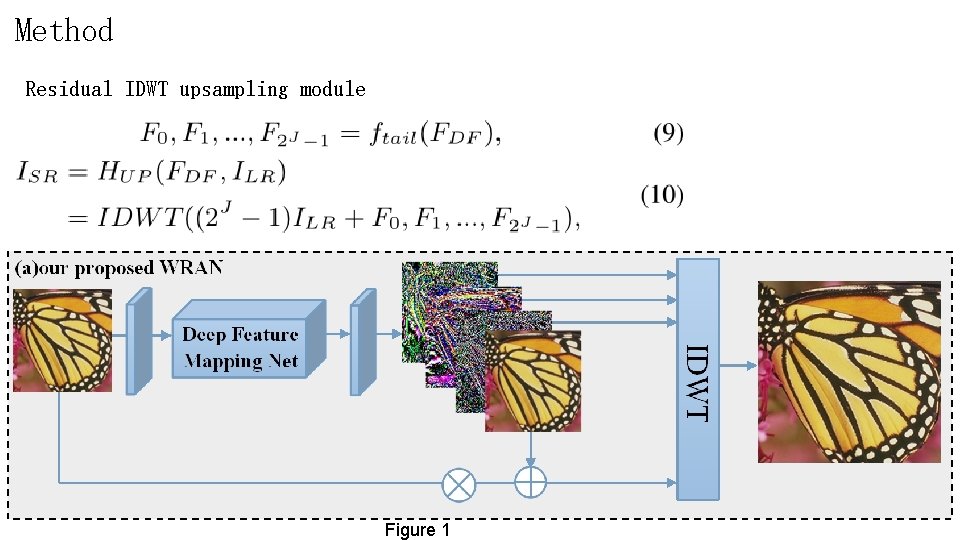

Contributions

Based on RCAN, we propose the Wavelet Residual Attention Network (WRAN):

- (1) Residual Channel Attention Group (RCAG): captures long-term high-frequency details
- (2) Residual Channel and Spatial Attention Block (RCSAB): based on extensive experiments, finds a suitable position in the EDSR residual block to insert a channel attention layer and a spatial attention layer
- (3) Residual IDWT upsampling module: focuses on learning to reconstruct the high-frequency sub-images and the residual between the input image and the low-frequency sub-image

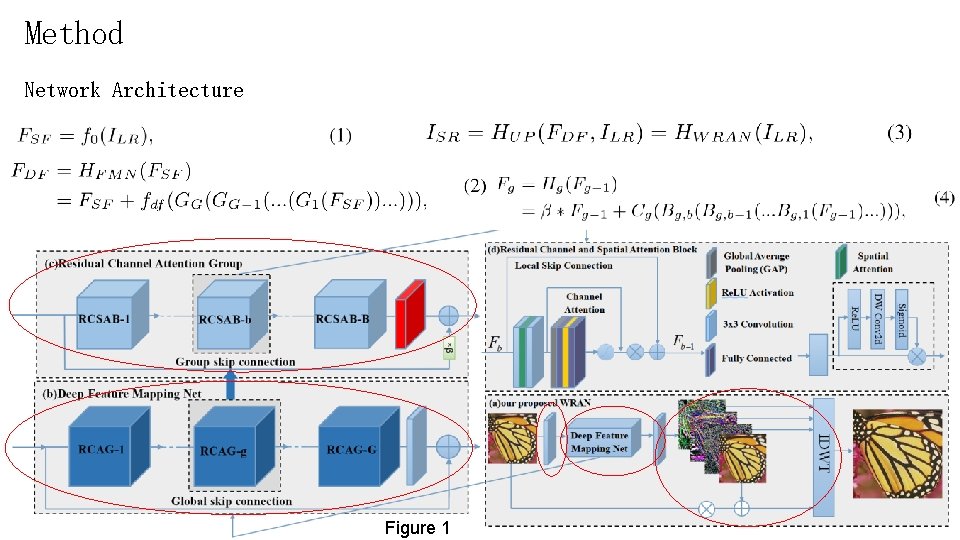

Method: Network Architecture (Figure 1)

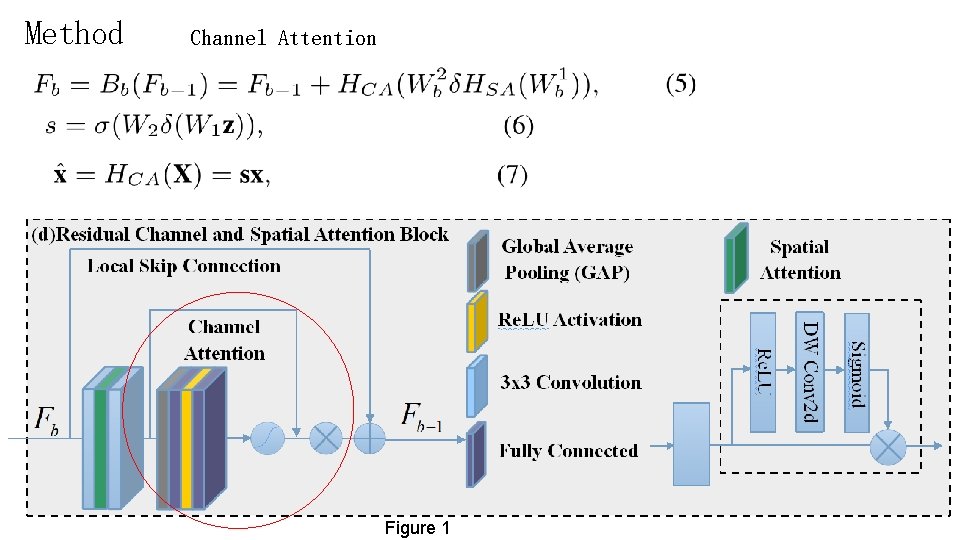

Method: Channel Attention (Figure 1)
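As a rough illustration of what a channel attention layer computes, here is a minimal sketch assuming the squeeze-and-excitation form used in RCAN: global average pooling, a two-layer bottleneck, and a sigmoid gate per channel. The weight matrices `w_down` and `w_up` are hypothetical stand-ins for learned parameters, biases are omitted, and the exact configuration used in WRAN is not given on the slide.

```python
import math


def channel_attention(feat, w_down, w_up):
    """Rescale each channel of feat (C x H x W nested lists) by a gate in (0, 1).

    w_down (C/r x C) and w_up (C x C/r) are hypothetical bottleneck weights;
    biases are left out to keep the sketch short.
    """
    # Squeeze: global average pooling gives one statistic per channel.
    pooled = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feat]
    # Excitation: channel reduction followed by ReLU ...
    hidden = [max(0.0, sum(w * p for w, p in zip(row, pooled))) for row in w_down]
    # ... then channel expansion followed by a sigmoid.
    gates = [
        1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
        for row in w_up
    ]
    # Scale: every pixel of channel c is multiplied by gate c.
    scaled = [[[g * v for v in row] for row in ch] for ch, g in zip(feat, gates)]
    return scaled, gates
```

Channels whose gate is close to 0 are suppressed and channels whose gate is close to 1 pass through almost unchanged, which is how the layer can down-weight low-frequency channels.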

Method: Spatial Attention (Figure 1)
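The slide does not spell out the spatial attention layer, so the following is only a sketch under a simple assumption: a 1 x 1 convolution collapses the channels into a single map, a sigmoid turns it into a mask, and every channel is multiplied by that mask position by position. The weight vector `w` and `bias` are hypothetical parameters, not values from the paper.

```python
import math


def spatial_attention(feat, w, bias=0.0):
    """Gate every spatial position of feat (C x H x W nested lists).

    Assumed form: 1x1 convolution over channels (weights w, one per channel),
    then a sigmoid, giving a single H x W mask shared by all channels.
    """
    channels, height, width = len(feat), len(feat[0]), len(feat[0][0])
    mask = [
        [
            1.0 / (1.0 + math.exp(-(bias + sum(w[c] * feat[c][i][j] for c in range(channels)))))
            for j in range(width)
        ]
        for i in range(height)
    ]
    # The same mask multiplies every channel, position by position.
    out = [
        [[feat[c][i][j] * mask[i][j] for j in range(width)] for i in range(height)]
        for c in range(channels)
    ]
    return out, mask
```

Unlike channel attention, which yields one gate per channel, this yields one gate per pixel, so the network can emphasize regions such as edges and textures.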

Method: Residual IDWT upsampling module (Figure 1)
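To make the idea of IDWT-based upsampling concrete, here is a minimal sketch for a x2 scale. It assumes an orthonormal Haar wavelet (the slide does not name the wavelet), and the sub-band naming (`lh`, `hl`, `hh`) and the scaling between the low-resolution input and the low-frequency band are also assumptions. The network is taken to predict three high-frequency sub-images plus a residual that is added to the input to form the low-frequency band; the inverse transform then assembles an image of twice the size.

```python
def haar_idwt2(ll, lh, hl, hh):
    """Inverse 2-D orthonormal Haar transform: four H x W bands -> 2H x 2W image."""
    height, width = len(ll), len(ll[0])
    out = [[0.0] * (2 * width) for _ in range(2 * height)]
    for i in range(height):
        for j in range(width):
            a, h, v, d = ll[i][j], lh[i][j], hl[i][j], hh[i][j]
            # Each coefficient quadruple expands into one 2 x 2 pixel block.
            out[2 * i][2 * j] = (a + h + v + d) / 2
            out[2 * i][2 * j + 1] = (a - h + v - d) / 2
            out[2 * i + 1][2 * j] = (a + h - v - d) / 2
            out[2 * i + 1][2 * j + 1] = (a - h - v + d) / 2
    return out


def residual_idwt_upsample(lr, ll_residual, lh, hl, hh):
    """x2 upsampling sketch: LL band = input + predicted residual, then inverse Haar.

    ll_residual, lh, hl and hh stand in for network outputs (hypothetical names).
    """
    ll = [[x + r for x, r in zip(row_x, row_r)] for row_x, row_r in zip(lr, ll_residual)]
    return haar_idwt2(ll, lh, hl, hh)
```

The transform itself has no learnable parameters; all learning happens in the layers that predict the sub-bands, which is what lets such a module stand in for a deconvolution or sub-pixel layer.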

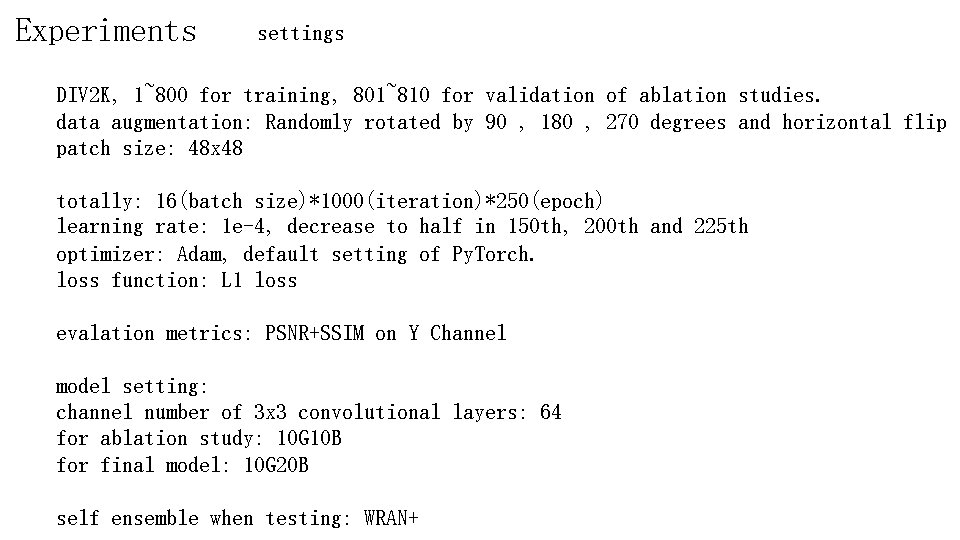

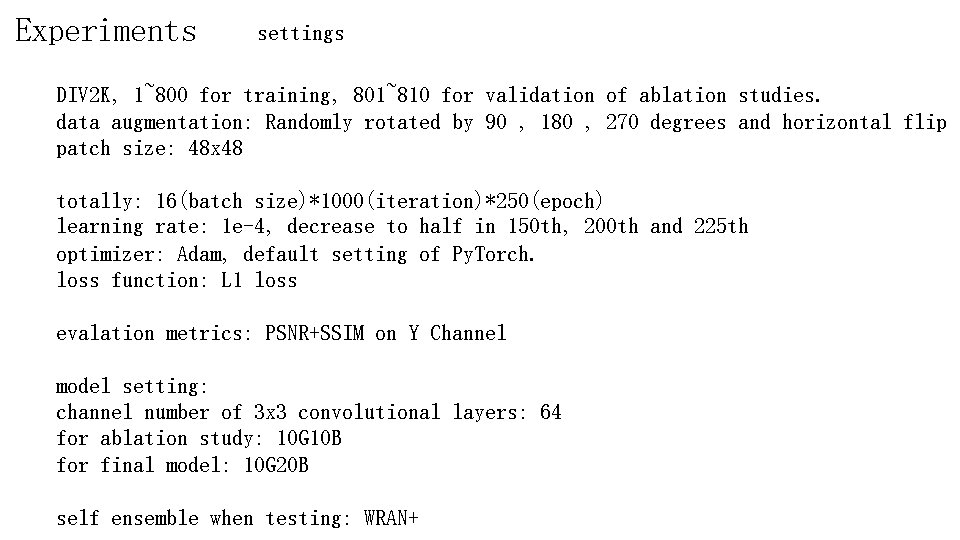

Experiments: settings

- Dataset: DIV2K, images 1~800 for training, 801~810 for validation in the ablation studies
- Data augmentation: random rotation by 90, 180, or 270 degrees and horizontal flip
- Patch size: 48 x 48
- Training length: batch size 16 x 1000 iterations per epoch x 250 epochs
- Learning rate: 1e-4, halved at the 150th, 200th, and 225th epochs
- Optimizer: Adam with the default PyTorch settings
- Loss function: L1 loss
- Evaluation metrics: PSNR and SSIM on the Y channel
- Model setting: 64 channels in the 3 x 3 convolutional layers
- Ablation study model: 10G10B (10 RCAGs, each with 10 RCSABs)
- Final model: 10G20B (10 RCAGs, each with 20 RCSABs)
- Self-ensemble at test time: WRAN+
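The training schedule above can be written down as a small step function. This is only a sketch: the function name `learning_rate` is made up for illustration, and it assumes the rate is halved as soon as the epoch counter reaches each milestone (whether epochs are counted from 0 or 1 is not stated on the slide).

```python
def learning_rate(epoch, base_lr=1e-4, milestones=(150, 200, 225), gamma=0.5):
    """Step schedule: multiply base_lr by gamma once for every milestone reached."""
    passed = sum(1 for m in milestones if epoch >= m)
    return base_lr * gamma ** passed


# Training budget implied by the settings.
ITERATIONS_PER_EPOCH = 1000
EPOCHS = 250
BATCH_SIZE = 16

total_updates = ITERATIONS_PER_EPOCH * EPOCHS  # optimizer steps
total_patches = BATCH_SIZE * total_updates     # 48 x 48 patches seen during training
```

In PyTorch the same behaviour is available through `torch.optim.lr_scheduler.MultiStepLR` with `milestones=[150, 200, 225]` and `gamma=0.5`.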

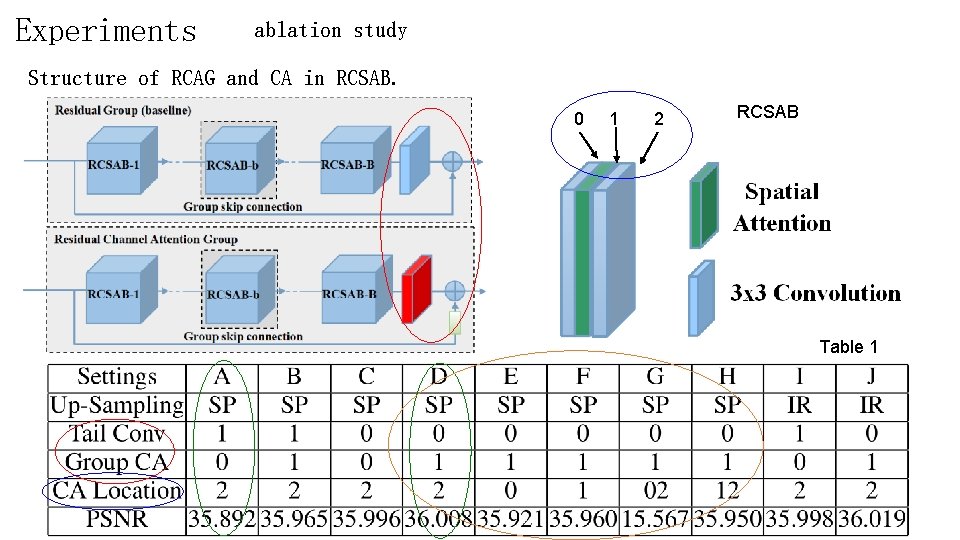

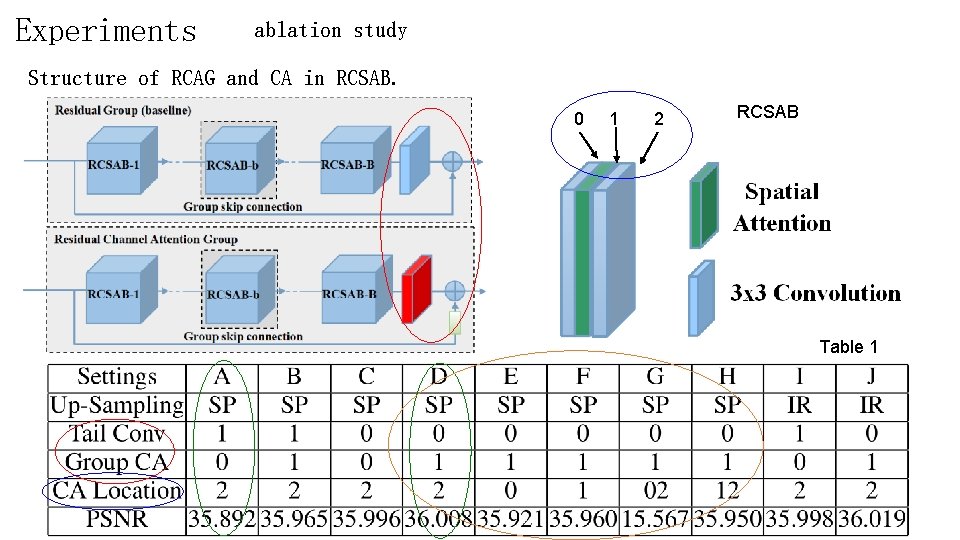

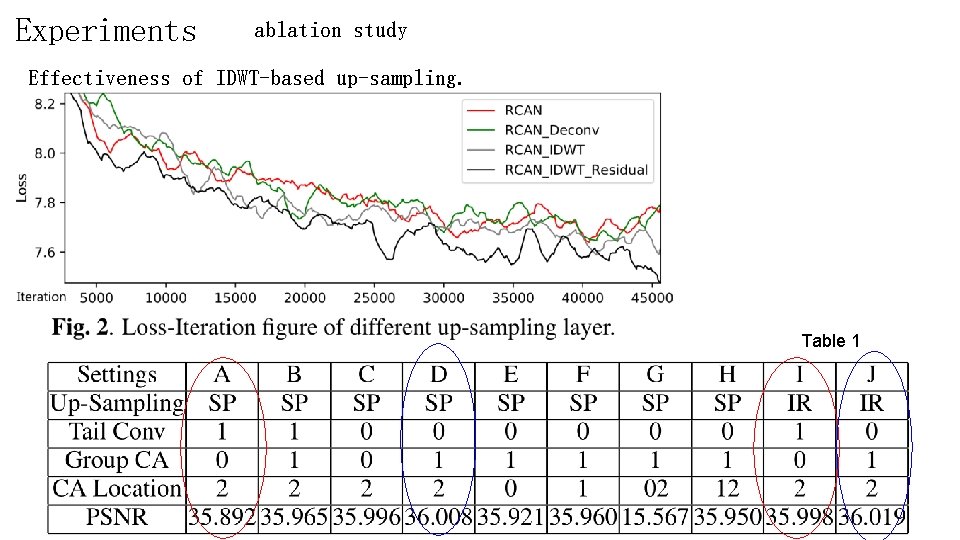

Experiments: ablation study

Structure of RCAG and CA in RCSAB (Table 1). Variants compared: 0, 1, 2, RCSAB.

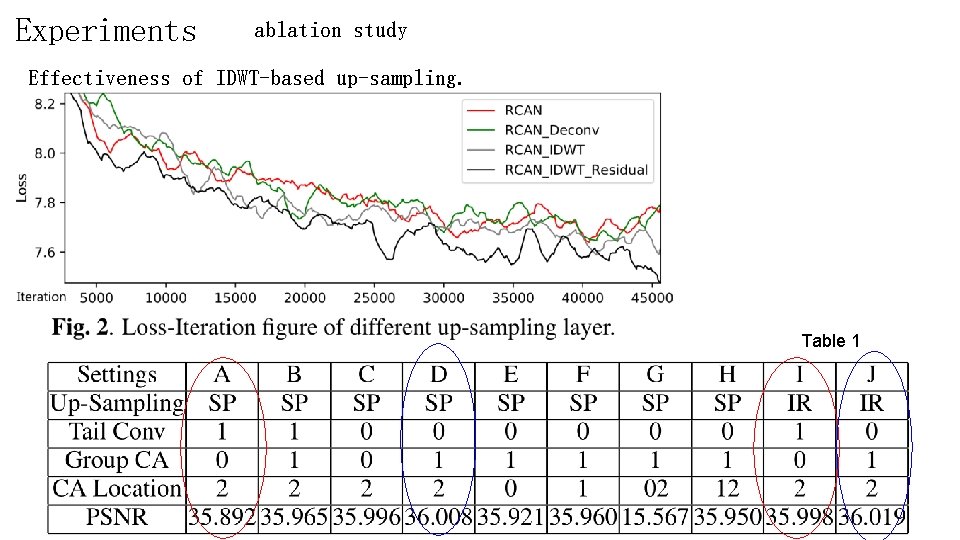

Experiments: ablation study

Effectiveness of IDWT-based up-sampling (Table 1).

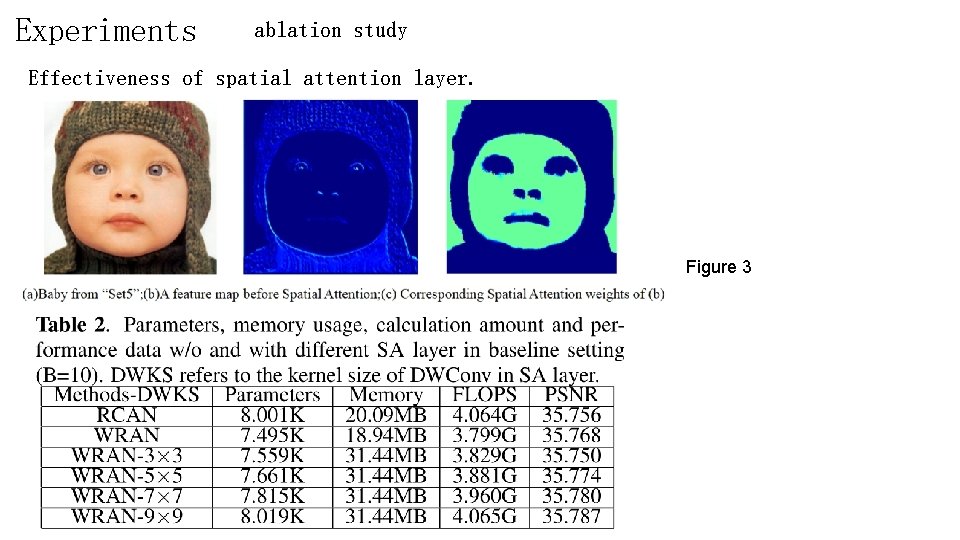

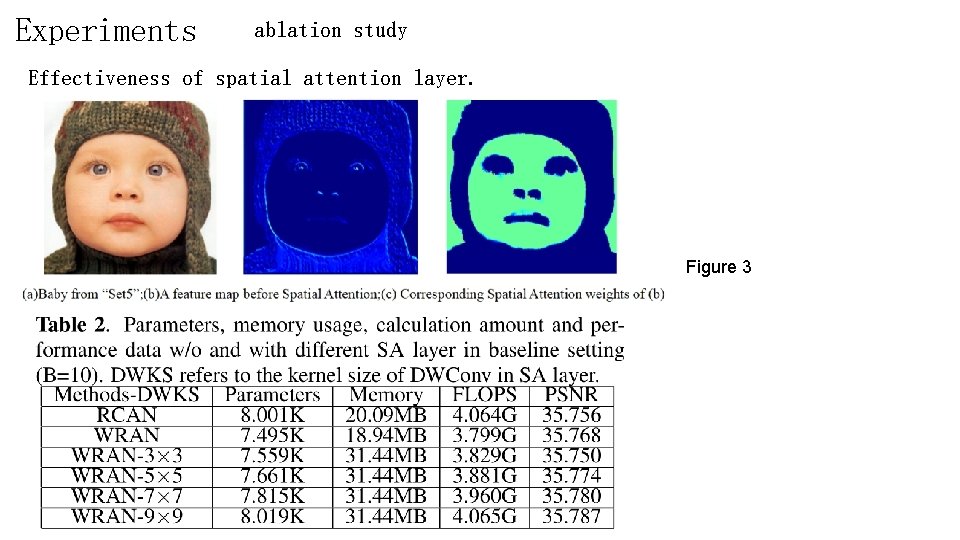

Experiments: ablation study

Effectiveness of the spatial attention layer (Figure 3).

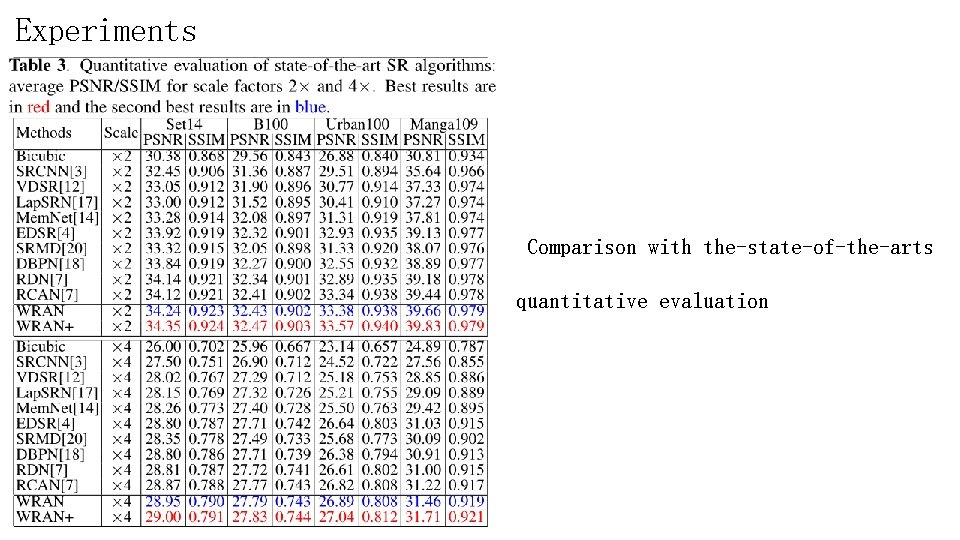

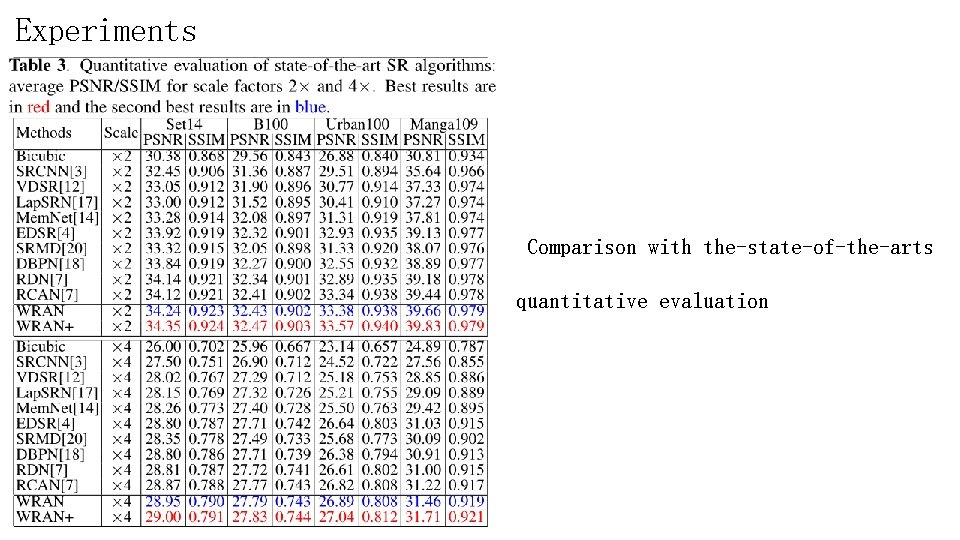

Experiments: comparison with the state of the art, quantitative evaluation
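Quantitative comparisons of this kind report PSNR on the Y channel. The sketch below shows one common way to compute it, assuming the ITU-R BT.601 luma conversion on 8-bit RGB values; the slides do not state which conversion or border cropping was used, so both are assumptions here, and border cropping is omitted.

```python
import math


def rgb_to_y(r, g, b):
    """Luma of one RGB pixel with components in [0, 255] (BT.601, range 16 to 235)."""
    return 16.0 + (65.481 * r + 128.553 * g + 24.966 * b) / 255.0


def psnr_y(sr, hr, peak=255.0):
    """PSNR in dB between two RGB images given as rows of (r, g, b) tuples.

    Only the luma channel is compared; border cropping is not applied.
    """
    squared_error, count = 0.0, 0
    for row_sr, row_hr in zip(sr, hr):
        for p, q in zip(row_sr, row_hr):
            diff = rgb_to_y(*p) - rgb_to_y(*q)
            squared_error += diff * diff
            count += 1
    mse = squared_error / count
    return float("inf") if mse == 0 else 10.0 * math.log10(peak ** 2 / mse)
```

Higher values mean the super-resolved image `sr` is closer to the ground truth `hr`; identical images give infinity.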

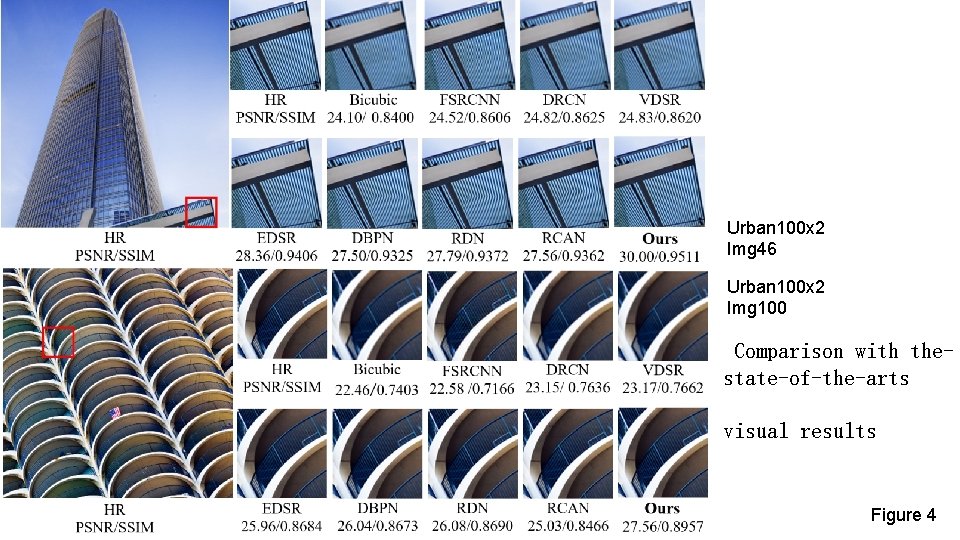

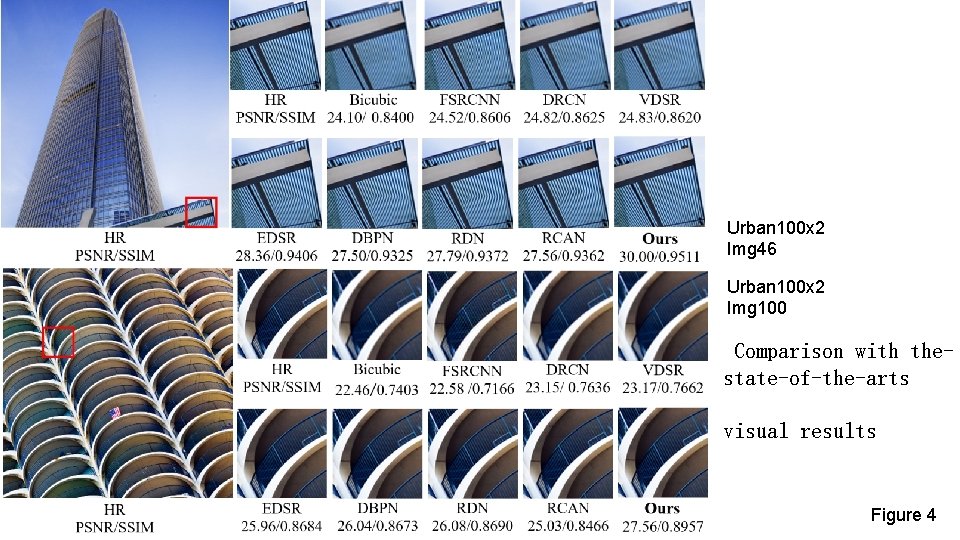

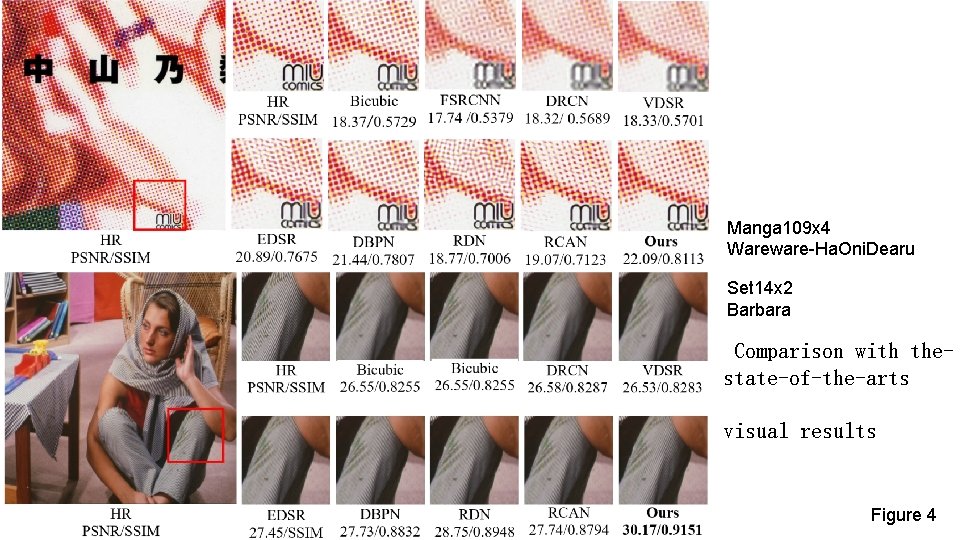

Comparison with the state of the art: visual results (Figure 4)

Urban100 x2, img 46; Urban100 x2, img 100

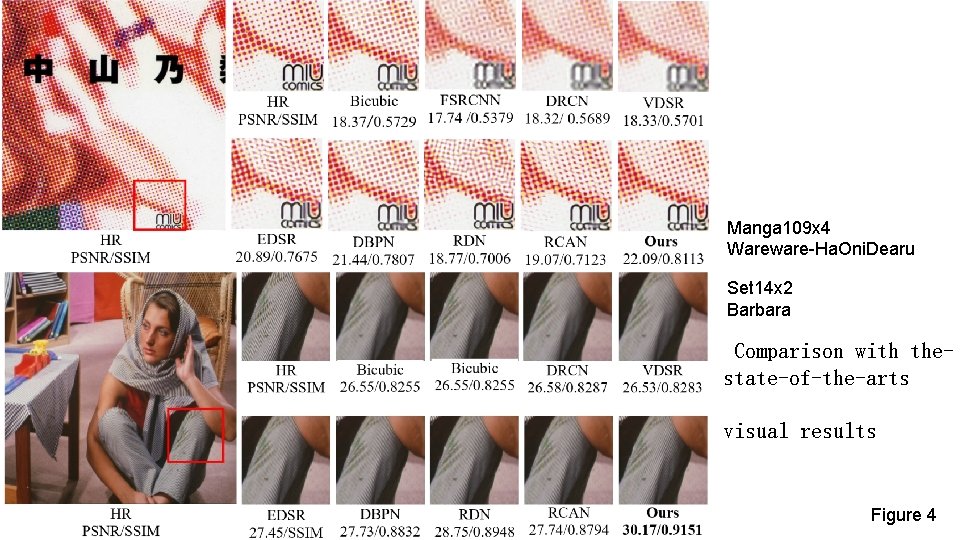

Comparison with the state of the art: visual results (Figure 4)

Manga109 x4, WarewareHaOniDearu; Set14 x2, Barbara

Conclusion

In this paper, we propose a residual attention network for wavelet domain super-resolution (WRAN).

- The Residual Channel Attention Group (RCAG) is proposed to make the training process stable and to capture long-term high-frequency information easily.
- Channel attention and spatial attention are integrated in our base block (RCSAB).
- Furthermore, we propose an IDWT-based upsampling module (Residual-IDWT), which can replace the deconvolution or sub-pixel layer for up-sampling in SR tasks.

Thanks!