Research Methods, Michael A. Dover, MSW, Ph.D.

- Slides: 99

Chapter 7 begins on Slide 43 and Chapter 8 on Slide 56.

PART TWO: PROBLEM FORMULATION AND MEASUREMENT

Problem Formulation
- Phase 1. Problem Formulation: a difficulty is recognized and a question is posed. Progressive sharpening for specificity, relevance, and meaningfulness. Review the literature.
- Phase 2. Designing the Study: alternative logical arrangements and data collection methods are constructed.
  - Consideration of feasibility, the purpose of the research, and the research design (its logical foundation and pragmatic issues, e.g., design, sampling, sources and procedures for data collection, measurement issues, and data analysis plans).
- Phase 3. Data Collection: record review, existing databases, surveys, observation, etc.
  - Deductive studies require accuracy and objectivity.
  - Qualitative studies focus on understanding meaning and generating hypotheses.

Problem Formulation: Overview of the Research Process
- Phase 4. Data Processing: important to consider when you structure your data coding, collection, and analysis plans.
- Phase 5. Data Analysis: structured around answering the research question, but it can yield additional, unanticipated results that further the research process.
- Phase 6. Interpreting the Findings: there is no way to ensure, nor should there be, that the outcome of a study will provide the correct answer.
- Phase 7. Writing the Research Report
  - Introduction, methodology, results, and discussion.
  - See Appendix C.

Phase 1: Problem Formulation
Phase 1. Problem Formulation: a difficulty is recognized and a question is posed. Review the literature. Progressive sharpening for specificity, relevance, and meaningfulness.
- Originating question (example): To what extent do social work students understand social justice?
- Specifying question (sharpening): To what extent do social work majors and major intents of varying class standings, compared to students in large sections of SWK 100 (excluding those who have signed major intents), understand the nature of social and economic justice and injustice, as well as the relationship of justice to human needs and human rights?

Originating, Specifying, Subsidiary Questions
(1) Is it possible that there is something about the growth of public and nonprofit institutions (the very institutions often entrusted with solving America’s urban social problems) which may have actually exacerbated these problems?
(2) Is it possible that the growth of the property-tax-exempt real property of public, nonprofit, and religious organizations had significant unanticipated negative consequences for urban schools, governments, and communities?
(3) Has the growth of property-tax-exempt public, nonprofit, and religious-sector property been characterized by the displacement of developed property from the tax rolls, or has it primarily involved the development of undeveloped land or underdeveloped or deteriorating property?

Originating, Specifying, Subsidiary Questions
The first question on the previous slide was my originating question. It addresses the larger issue or concern that first drew me to the problem. The second question was my specifying question. It concerned the unique way in which my study sought to address the problem. The third question is the subsidiary question, or research question. The use of originating and specifying questions evolved through the research of Robert Merton, his student Maurice Zeitlin, and Zeitlin’s student Howard Kimeldorf (private communication, Howard Kimeldorf 2002). Charles Tilly suggested the value of beginning research with a wide-ranging question, exploring theory relevant to such a question, and finally posing a more manageable subsidiary question that can be addressed within the framework of the larger question and its related theory (1990). It is often necessary to write and rewrite such questions many times during the preparation of a study. Ideally you never change them during the course of the study, but is that rule inviolable?

Phase 2: Designing the Study
- Designing the Study: alternative logical arrangements and data collection methods are considered.
  - Consideration of feasibility, the purpose of the research, and the research design (its logical foundation and pragmatic issues, e.g., design, sampling, sources and procedures for data collection, measurement issues, and data analysis plans).

Phase 3: Data Collection
- Phase 3. Data Collection: record review, existing databases, surveys, observation, etc.
  - Deductive studies require accuracy and objectivity.
  - Qualitative studies focus on understanding meaning and generating hypotheses.

Phase 4: Data Processing
- Phase 4. Data Processing: important to consider when you structure your data coding, collection, and analysis plans.

Phase 5: Data Analysis
- Phase 5. Data Analysis: structured around answering the research question, but it can yield additional, unanticipated results that further the research process.

Phase 6: Interpreting Findings
- Phase 6. Interpreting the Findings: there is no way to ensure, nor should there be, that the outcome of a study will provide the correct answer.

Phase 7: Research Report
- Phase 7. Writing the Research Report
  - Introduction, methodology, results, and discussion.
  - See Appendix C.

Problem Formulation: Research Proposal
A research proposal is generally submitted in order to obtain funding or approval.
- Problem or Objective
  - What do you want to study? Is it worth studying? What is its practical significance?
- Literature: What have others said about the topic?
  - Theories, findings, flaws, disagreement, agreement.
- Subjects for Study: who or what, identified in general theoretical terms; sample selection; assurance of no harm.
- Measurement: key variables; measures that duplicate those of prior studies.
- Data-Collection Methods: experiment or survey; forms ready for data entry.
- Analysis: which methods; the purpose and logic of the analysis.
- Schedule: a timeline, which allows you to measure how you are doing.
- Budget: the types and amounts of spending.

Problem Formulation: Problem Identification
- Topic Selection: decisions confronting social service agencies, or information needed to solve practical problems in social welfare or to guide policy, planning, or practice decisions.
  - Theory (from the literature) provides a framework that helps others comprehend the rationale for, and significance of, framing the question the way one does.
  - A topic is not a question.

Problem Formulation: Problem Identification
- "SO WHAT?" What difference will your proposed research make to others concerned about social work practice or social welfare?
- Relevant questions, specificity, observable evidence, a feasible design, and more than one possible acceptable answer. It is important to obtain critical feedback from peers.
- Narrowing (p. 116) and MD: originating, specifying, and subsidiary questions.

Attributes of a Question
- A topic is not a question.
- Be narrow and specific.
- Pose the question in a way that can be answered by observable evidence.
- Relevance for social work/social welfare or social science.

Problem Formulation: Problem Identification
- FEASIBILITY: it is easier to conceive of rigorous, valuable studies than to figure out how to implement them feasibly.
  - The larger the scope of the study, the more it will cost in time, money, and cooperation.
  - What are the ethical considerations?
  - Will the value and quality of the research outweigh any potential discomfort, inconvenience, or risk experienced by participants in the study?
  - The fiscal costs of a study are easily underestimated.
  - Time constraints also may turn out to be much worse than anticipated, especially in obtaining advance authorization for the study.
  - Cooperation is needed from agencies, human subjects, funders, and clients. Some agencies resist for legitimate reasons; others do so out of fear or mistrust.
  - Researchers must engage agency representatives in an honest dialogue about the proposed work.

Problem Formulation: Problem Identification
- LITERATURE REVIEW (Chapter 6)
  - Researchers should be thoroughly grounded in the relevant literature.
  - A review can ensure that the research question adequately addresses a burning issue in the field.
  - It builds on the work of others.
  - The instructor will provide references that outline useful steps in literature reviews.

Problem Formulation: Purposes of Research
- Exploration
  - Frequently used in social work research.
  - Used when the researcher is examining a new interest.
  - Used when the subject of study is relatively new.
  - Used to test the feasibility of undertaking a more careful study.
  - Provides new insights, but seldom provides complete answers. Often does not use representative samples. Provides a rough understanding of some phenomenon.

Problem Formulation: Purpose of Research
- Description: the precise measurement and reporting of characteristics of some population or phenomenon under study.
  - Emphasizes accuracy, precision, and generalizability.
  - Describes situations and events, e.g., a canvass of schools of social work. Repeated over time, it can reveal trends.

Problem Formulation: Purpose of Research
- Explanation: the discovery and reporting of relationships among different aspects of the phenomenon under study.
  - Often focuses on the question “Why?”
  - Seeks to test hypotheses.

Problem Formulation: Purpose of Research
- Evaluation: evaluating social policies, programs, and interventions.
  - Process evaluation
  - Outcome evaluation

Problem Formulation: The Time Dimension
- Cross-sectional studies
  - Observe things at one point in time.
  - Are limited in attempting to understand causal processes over time.
- Longitudinal studies
  - Conduct observations over an extended period of time.
  - Attempt to understand causal processes over time.

Problem Formulation: Constructing New Measurement Instruments
- This research is geared toward methodological improvements for use in subsequent substantive research.

Time Dimension (p. 127): Longitudinal Studies, Three Types
- Trend studies: observe changes within some general population over time.
- Cohort studies: examine how subpopulations change over time. The specific individuals may be different with each sampling, but the cohort remains the same.
- Panel studies: the same set of subjects is studied over time.

Problem Formulation: Units of Analysis
The unit of analysis is the major entity that you are analyzing in your study. For instance, any of the following could be a unit of analysis in a study:
- Individuals
- Groups
- Artifacts (books, photos, newspapers)
- Geographical units (town, census tract, state)
- Social interactions (dyadic relations, divorces, arrests)
- Organizations
- Societies
- Events

MD: Subject and Object of Research
Ragin and Zaret distinguished between the object of research (the observational units; MD: what you are observing) and the subject of research (MD: what you are studying; in nomothetic research, relationships among variables, or in idiographic research, the nature of cases) (1983: 740).
Ragin, Charles and Zaret, David. Theory and method in comparative research: two strategies. Social Forces. 1983; 61(3, March): 731-753.

Problem Formulation
- The ecological fallacy (p. 134) occurs when you make conclusions about individuals based only on analyses of group data. For instance, assume that you measured the math scores of a particular classroom and found that they had the highest average score in the district. Later (probably at the mall) you run into one of the kids from that class and you think to yourself, "She must be a math whiz." Aha! Fallacy! Just because she comes from the class with the highest average doesn't mean that she is automatically a high scorer in math. She could be the lowest math scorer in a class that otherwise consists of math geniuses!
- An exception fallacy is sort of the reverse of the ecological fallacy. It occurs when you reach a group conclusion on the basis of exceptional cases. This is the kind of fallacious reasoning that is at the core of a lot of sexism and racism. The stereotype is of the guy who sees a woman make a driving error and concludes that "women are terrible drivers." Wrong! Fallacy!
Copyright © 2001, William M. K. Trochim, All Rights Reserved
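To make the classroom example concrete, here is a minimal Python sketch with invented scores for two hypothetical classrooms (the class names and numbers are assumptions for illustration, not data from the slide). The class with the higher average also contains the single lowest individual score, so a group-level fact tells us nothing certain about any one member.

```python
from statistics import mean

# Invented math scores for two hypothetical classrooms.
class_scores = {
    "Class A": [98, 97, 99, 96, 41],  # high average, but one very low scorer
    "Class B": [80, 82, 79, 85, 81],
}

averages = {name: mean(scores) for name, scores in class_scores.items()}
top_class = max(averages, key=averages.get)
# Which class contains the single lowest individual score?
lowest_student_class = min(class_scores, key=lambda name: min(class_scores[name]))

print(f"Highest class average: {top_class} ({averages[top_class]:.1f})")  # Class A (86.2)
print(f"Lowest individual score is in: {lowest_student_class}")          # Class A
```

Inferring "she must be a math whiz" from `top_class` alone would be the ecological fallacy; the individual data show otherwise.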

Problem Formulation
Reductionism (p. 135): a fault of some researchers; a strict limitation (reduction) of the kinds of concepts to be considered relevant to the phenomenon under study.

Chapter 7: Conceptualization and Operationalization

Conceptualization and Operationalization: Conceptual Explication
- Variables: specific concepts or theoretical constructs under investigation.
- Independent variable: a variable that is postulated to explain another variable.
- Dependent variable: a variable being explained.
- Hypothesis: a statement that postulates the relationship between the independent variable and the dependent variable.
- Extraneous variable: a variable that represents an alternative explanation for the relationship between the independent variable and the dependent variable.
- Control variable: a variable that is held constant in an attempt to further clarify the relationship between two other variables.

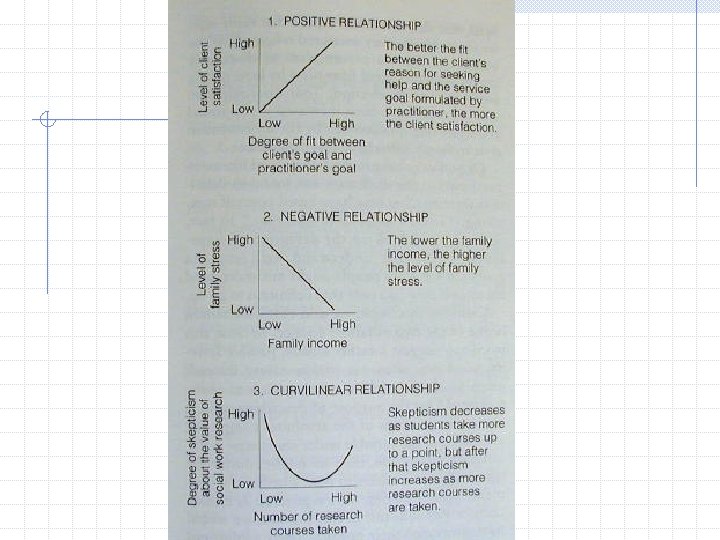

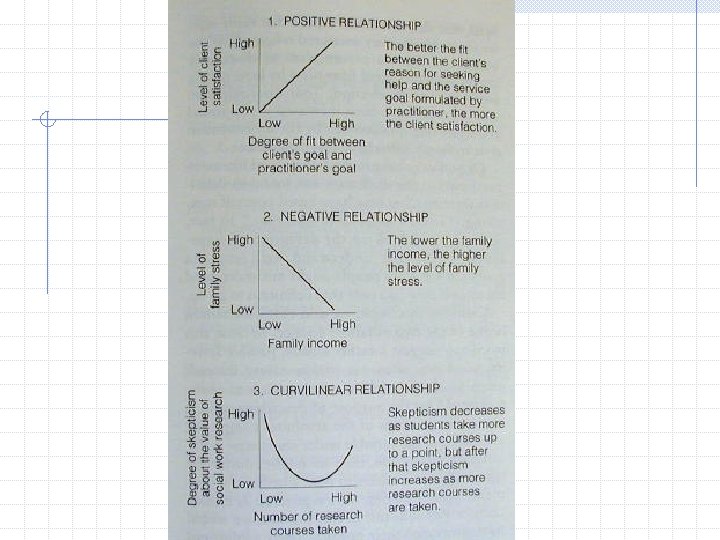

Conceptualization and Operationalization: Types of Relationships between Variables (Positive, Negative, or Curvilinear)
- Positive: the dependent variable increases as the independent variable increases.
- Negative (also referred to as inverse): the two variables move in opposite directions; as one increases, the other decreases.
- Curvilinear: the nature of the relationship changes at certain levels of the variables.
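The three types of relationship can be illustrated with Pearson's r, a common measure of linear association (chosen here for illustration; the slide does not name a statistic). The data are invented. Note the curvilinear case: the variables are perfectly related, yet r is zero, because r captures only straight-line relationships.

```python
import math

def pearson_r(x, y):
    """Pearson correlation coefficient for two equal-length numeric sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

x = [1, 2, 3, 4, 5]                      # independent variable (invented)
positive = [2 * v for v in x]            # rises as x rises
negative = [10 - v for v in x]           # falls as x rises (inverse)
curvilinear = [(v - 3) ** 2 for v in x]  # falls, then rises (U-shape)

print(round(pearson_r(x, positive), 2))     # 1.0
print(round(pearson_r(x, negative), 2))     # -1.0
print(round(pearson_r(x, curvilinear), 2))  # 0.0
```

A curvilinear relationship therefore calls for plotting the data or using a nonlinear model rather than relying on a single linear coefficient.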

Conceptualization and Operationalization: Operational Definitions
Operational definitions are the translation of variables into observable terms.
- Conceptions and concepts: a conception may be thought of as a mental image.
- Kaplan distinguished three classes of things that scientists measure:
  - 1. Direct observables: things we can observe rather simply and directly, for example the color of an apple.
  - 2. Indirect observables: things that require relatively more subtle, complex, or indirect observations, for example the minutes of a meeting, which may indicate past social actions.
  - 3. Constructs: theoretical creations based on observations but which themselves cannot be observed directly or indirectly, for example compassion.

Conceptualization and Operationalization
- Conceptualization: the process through which we precisely specify what we will mean when we use a particular term.
- Indicators and dimensions: the end product of conceptualization is a set of indicators, markers that indicate the presence or absence of the concept we are studying.
  - Dimension: a specific aspect or facet of a concept, for example the dimensions of group satisfaction.
- The interchangeability of indicators: if several different indicators all represent, to some degree, the same concept, then all of them will behave in the same way that the concept would behave if it were real and observable.

Conceptualization and Operationalization: The Confusion over Definitions and Reality
- Reification: the process of regarding unreal things as real, such as the use of a diagnostic label for a client.

Conceptualization and Operationalization: Choices
- Range of variation: the amount of variability in the variable being measured, and the need to capture the full range of variability necessary for your research purpose. It is important to consider the opposite of the concept under study, for instance “antireligiosity” when measuring religiosity.
- Operationalization continues throughout the research project, into the analysis of data.

Conceptualization and Operationalization
Existing self-report scales are a popular way to operationally define many social work variables. They have often been used successfully by others and provide cost advantages in terms of time and money. Scales should be selected carefully, and they are not always the best way to operationally define a variable. Additional ways to operationalize variables involve the use of direct behavioral observation, interviews, and available records. Qualitative studies eschew operational definitions so that researchers can immerse themselves in observations that are not predetermined and explore the deeper subjective meanings of the phenomenon.

Measurement (Chapter 7): Levels of Measurement Issues
- Attribute: a characteristic or quality of something.
- Variables have two important qualities:
  - 1. The attributes composing a variable should be exhaustive, e.g., for level of education, gender, or ethnic group you can always add "other."
  - 2. The attributes should be mutually exclusive: you must be able to classify every observation in terms of one and only one attribute. This requires clear classification decisions, e.g., diagnosis.
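Both requirements can be checked mechanically once the categories are written down. The following is a minimal sketch, assuming a hypothetical coding of "years of schooling completed" into half-open ranges; the category labels and cut-points are invented. A value that fits no category breaks exhaustiveness, and a value that fits more than one breaks mutual exclusivity.

```python
# Hypothetical coding schemes for "years of schooling completed".
# Each category is a half-open interval: low <= years < high.
GOOD = [
    ("Less than high school", 0, 12),
    ("High school graduate", 12, 13),
    ("Some college", 13, 16),
    ("College graduate or more", 16, 100),
]
FLAWED = [
    ("Less than high school", 0, 12),
    ("High school or some college", 12, 16),
    ("Some college or more", 14, 20),  # overlaps the previous category; tops out at 20
]

def matching(value, categories):
    """Labels of every category whose interval contains `value`."""
    return [label for label, low, high in categories if low <= value < high]

def check_scheme(values, categories):
    """Return (values fitting no category, values fitting more than one)."""
    unclassified = [v for v in values if not matching(v, categories)]
    overlapping = [v for v in values if len(matching(v, categories)) > 1]
    return unclassified, overlapping

sample = [8, 12, 14, 16, 20]
print(check_scheme(sample, GOOD))    # ([], [])
print(check_scheme(sample, FLAWED))  # ([20], [14])
```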

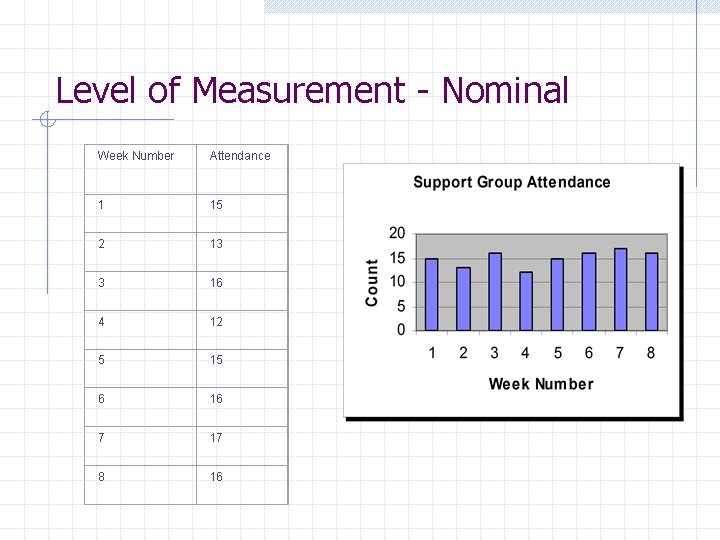

Measurement: Levels of Measurement
- Nominal measures specify differences in kind only. They are sometimes referred to as categorical or qualitative variables, e.g., gender, ethnicity, religious affiliation, birthplace.
  - In coding nominal measures, the number used has no quantitative meaning; it only denotes qualitative differences.
  - You cannot calculate a mean, median, standard deviation, etc.
  - You can compute the frequency of each attribute.
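A short sketch of what is and is not meaningful for a nominal variable. The numeric codes and the ten responses are hypothetical. Frequencies and the mode are valid summaries; the arithmetic mean of the codes can be computed, but it describes nothing real.

```python
from collections import Counter
from statistics import multimode

# Hypothetical coding scheme: the numbers are labels, not quantities.
RELIGION = {1: "Protestant", 2: "Catholic", 3: "Jewish", 4: "Muslim", 5: "Other", 6: "None"}
responses = [1, 2, 2, 6, 1, 2, 5, 3, 2, 6]  # invented data for ten respondents

labels = [RELIGION[code] for code in responses]
frequencies = Counter(labels)
print(frequencies["Catholic"], frequencies["Protestant"])  # 4 2
print(multimode(labels))                                   # ['Catholic']

# Arithmetic on the codes runs but means nothing: the "average" code is 3.0,
# which would decode to "Jewish" even though only one respondent chose it.
meaningless_mean = sum(responses) / len(responses)
print(meaningless_mean)  # 3.0
```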

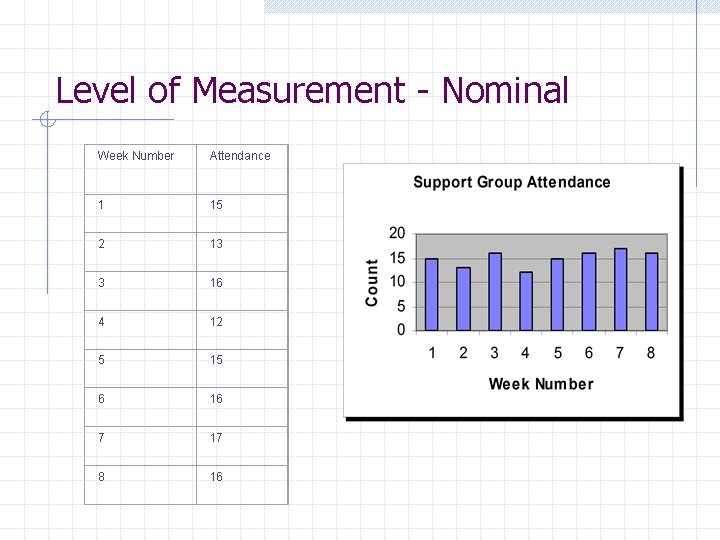

Level of Measurement - Nominal

| Week Number | Attendance |
|---|---|
| 1 | 15 |
| 2 | 13 |
| 3 | 16 |
| 4 | 12 |
| 5 | 15 |
| 6 | 16 |
| 7 | 17 |
| 8 | 16 |

Measurement: Level of Measurement
- Ordinal: variables that can be logically rank-ordered but that provide no quantitative measure by which to make comparisons. They provide information on relative position, but not absolute quantity.

Member’s Evaluation of Today’s Group Session (Reid, 1997, p. 238)
I feel:
1. _____ with the amount of time I had to share my personal issues.
2. _____ with the leader’s involvement in the group.
3. _____ with the comfort of the room.
4. _____ with the trust level in the group.
5. _____ with the other members’ respect for each other.
6. _____ with the honesty during the group.
7. _____ with the degree of sharing that goes on in the group.
8. _____ with the level of cohesion in the group.
9. I would rate the level of group cohesion in today’s group as ______.
Scale: 1 = Very dissatisfied, 5 = No feeling one way or another, 9 = Very satisfied
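Because ordinal ratings convey rank but not distance, the median and mode are the natural summaries. A minimal sketch with invented responses to item 8 of the scale above; `median_low` is used so the reported middle value is always an actual scale point rather than an average of two ranks (a design choice for this sketch, not a rule from the slide).

```python
from statistics import median_low, mode

# Invented responses to item 8 ("level of cohesion") on the 1-9 scale above.
ratings = [7, 9, 5, 8, 7, 3, 7, 6]

# Ranks support "middle" and "most common" answers, not distances between them.
print(median_low(ratings))  # 7
print(mode(ratings))        # 7

# Share of members at or above the neutral point (5): a rank-based summary.
at_or_above_neutral = sum(r >= 5 for r in ratings) / len(ratings)
print(at_or_above_neutral)  # 0.875
```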

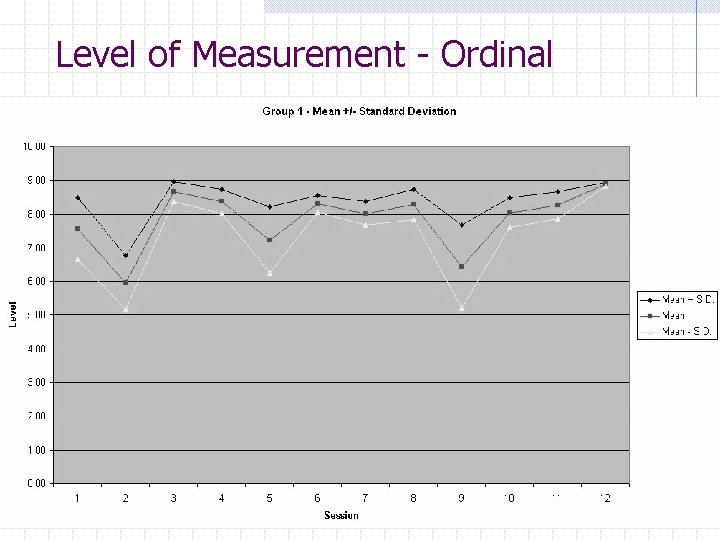

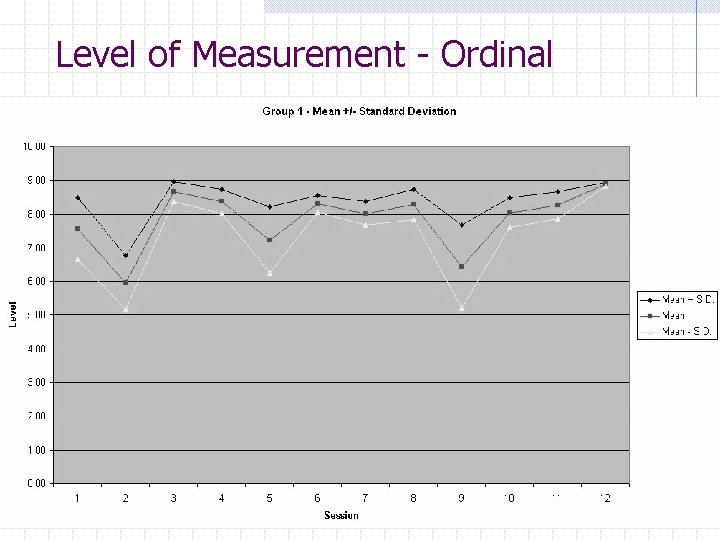

Level of Measurement - Ordinal

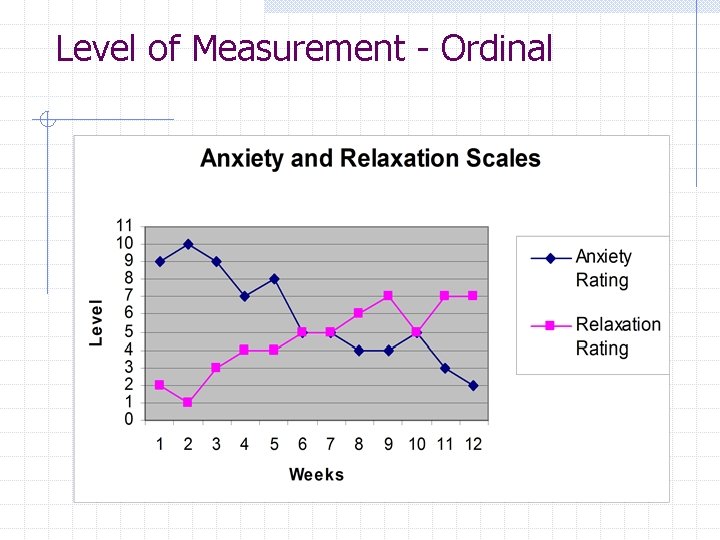

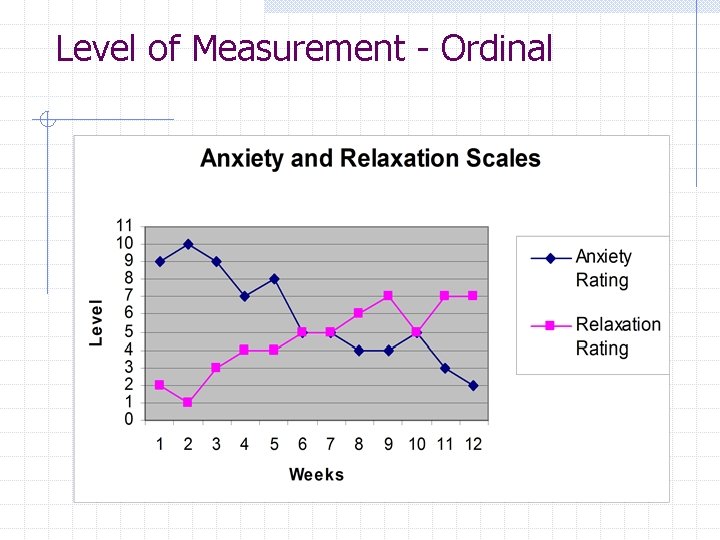

Level of Measurement - Ordinal

Level of Measurement - Interval
- Interval measures: one can determine the distance separating attributes, which are placed on an equally spaced continuum (meaningful standard intervals).
- Interval measures commonly used in social science research are constructed measures such as standardized intelligence tests.
- No true zero point is present.
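Why does the missing true zero matter? A standard illustration (temperature, which is not on the slide) is sketched below: re-expressing the same two readings on another interval scale preserves differences, up to the change of unit, but changes their ratio. The same logic applies to IQ scores: an IQ of 140 is not "twice" an IQ of 70.

```python
def celsius_to_fahrenheit(c):
    """Convert between two interval scales that have different arbitrary zeros."""
    return c * 9 / 5 + 32

a_c, b_c = 20.0, 10.0
a_f, b_f = celsius_to_fahrenheit(a_c), celsius_to_fahrenheit(b_c)

# Ratios are not preserved: 20 °C is not "twice as warm" as 10 °C.
print(a_c / b_c)  # 2.0
print(a_f / b_f)  # 1.36
# Differences are preserved up to the change of unit (10 °C -> 18 °F).
print(a_f - b_f)  # 18.0
```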

Level of Measurement - Interval

Level of Measurement - Ratio
Most of the social scientific variables meeting the minimum requirements for interval measures also meet the requirements for ratio measures. There is a true zero point, e.g., age, length of residence, number of children.

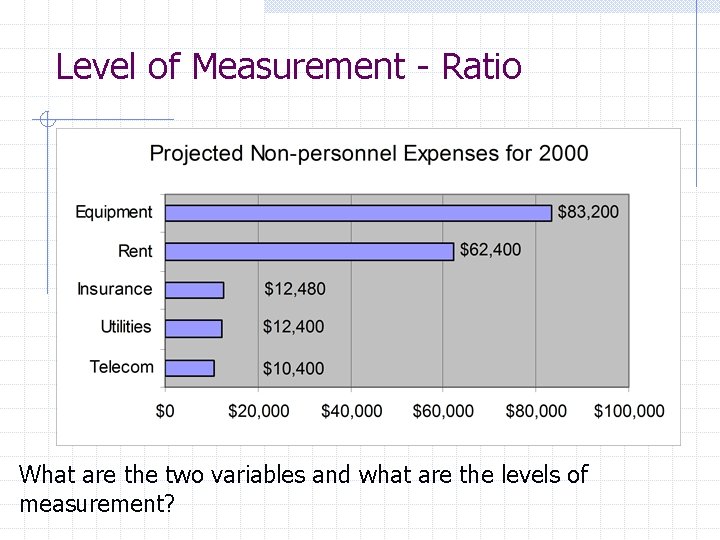

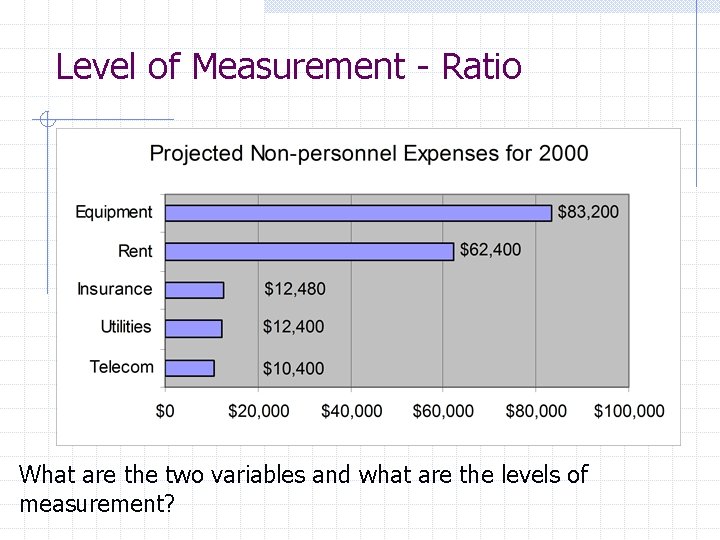

Level of Measurement - Ratio What are the two variables and what are the levels of measurement?

Level of Measurement - Ratio

Implications of Levels of Measurement
The level of measurement dramatically affects the analysis of data. You should anticipate drawing research conclusions appropriate to the levels of measurement used for your variables. Certain statistics require a certain minimum level of measurement:
- Parametric: interval and ratio
- Non-parametric: ordinal and nominal
For example, you don't report the mean religious affiliation, but the modal one.
Unidirectional data reduction: ratio and interval data can be reduced to ordinal data, but the reduction does not go the other way.
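The one-way nature of data reduction can be seen in a few lines. This sketch assumes hypothetical client ages and invented cut-points for three age groups. Once ages are collapsed to ordinal codes, ordinal statistics such as the median still work, but the original values cannot be recovered.

```python
from statistics import median_low

# Hypothetical client ages in years (ratio level: true zero, equal intervals).
ages = [19, 23, 35, 41, 58, 62, 67]

def to_age_group(age):
    """Collapse a ratio-level age into an ordinal code (hypothetical cut-points)."""
    if age < 30:
        return 1  # young adult
    if age < 60:
        return 2  # middle-aged
    return 3      # older adult

groups = [to_age_group(a) for a in ages]
print(groups)              # [1, 1, 2, 2, 2, 3, 3]
print(median_low(groups))  # 2

# The reduction is one-way: 35 and 58 both become 2, and nothing in the
# ordinal code lets us recover which original age produced it.
print(to_age_group(35) == to_age_group(58))  # True
```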

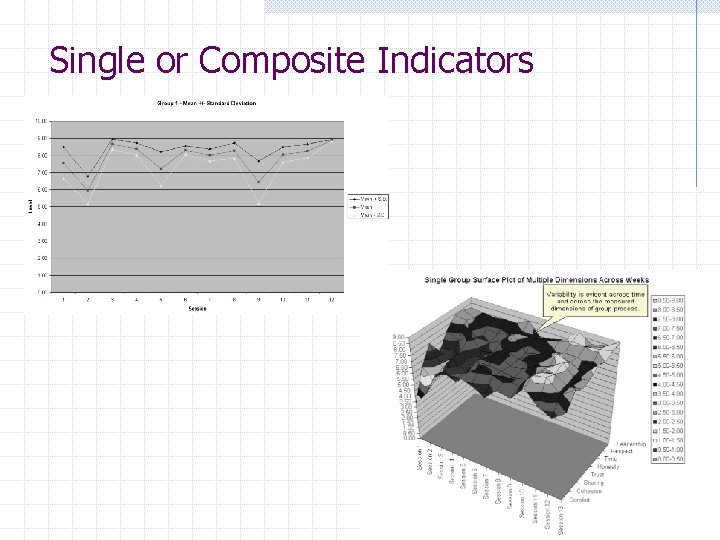

Single or Composite Indicators
Most variables are single indicators. Variables can be combined to form composite indicators, e.g.:
- GPA as an indicator of school performance
- Beck's Depression Scale
- Group Satisfaction
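GPA is a familiar composite: many single indicators (course grades) are combined into one number. A minimal sketch, assuming a 4.0 grade-point scale and a credit-weighted average (a common convention, though institutions differ); the transcript is invented.

```python
# Hypothetical transcript: (grade points on a 4.0 scale, credit hours).
courses = [(4.0, 3), (3.0, 4), (3.7, 3), (2.0, 2)]

def gpa(records):
    """Credit-weighted average of grade points: one composite built from several indicators."""
    total_points = sum(points * credits for points, credits in records)
    total_credits = sum(credits for _, credits in records)
    return total_points / total_credits

print(round(gpa(courses), 2))  # 3.26
```

Weighting by credits is a choice; an unweighted mean of the same grades would yield a different composite, which is why composite indicators should document how their parts are combined.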

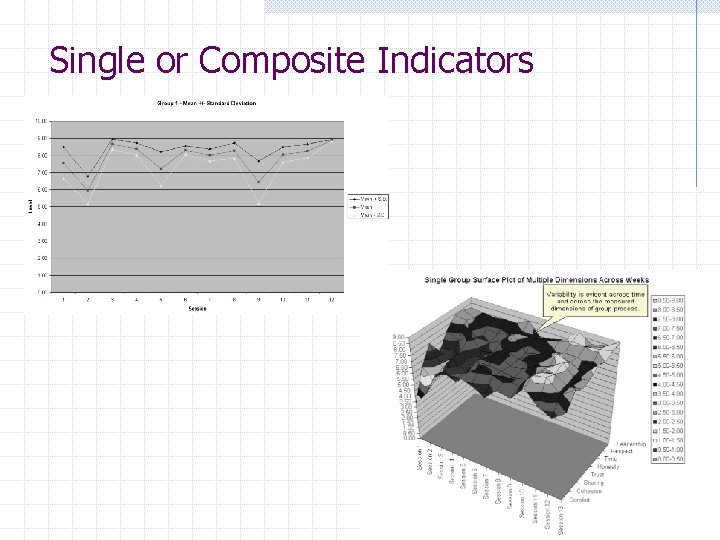

Single or Composite Indicators

Chapter 8: Measurement in Quantitative and Qualitative Inquiry
First, before going into this content from Chapter 8, let’s review what was discussed earlier about errors in research.

Errors in Personal Inquiry: Inaccurate Observation
All inquiry is based on observation.
- Inaccurate observation occurs when we are too casual in our observations, making no deliberate attempt to reduce errors.
- It is essential to know what is occurring before we can determine why it is occurring.
- General human observation is unsystematic and haphazard, whereas scientific observation is a conscious activity and thereby reduces error.

Errors in Personal Inquiry: Overgeneralization
Overgeneralization occurs when we generalize based on an insufficient number of observations. PB example of interviewing “rioters”: my research in Detroit in 1967. As long as you don’t overgeneralize, such observations can be a source of valuable hypotheses for further research, such as the role of exempt property in the deterioration of cities. Science guards against overgeneralization with large samples and replication (hopefully independent).
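Why do large samples guard against overgeneralization? The simulation below is a sketch under invented assumptions (a true population rate of 30%, 500 repeated samples, a fixed random seed). It draws many samples of each size and measures how much the sample estimates scatter: estimates from 10 observations vary far more than estimates from 1,000.

```python
import random
from statistics import pstdev

def estimate_spread(sample_size, true_rate=0.30, trials=500, seed=1):
    """Standard deviation of sample proportions across repeated hypothetical samples."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(trials):
        hits = sum(rng.random() < true_rate for _ in range(sample_size))
        estimates.append(hits / sample_size)
    return pstdev(estimates)

small = estimate_spread(10)
large = estimate_spread(1000)
print(f"n=10: {small:.3f}, n=1000: {large:.3f}")
```

With these settings the spread for n=10 should come out near 0.145 and for n=1000 near 0.0145, roughly the theoretical value sqrt(p(1 - p)/n).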

Errors in Personal Inquiry: Selective Observation
We attend to events that correspond to our predictions and overlook contradictions: “The world is as we see it.” Peer review helps scientists control for this source of error. Consider the value of using an eco-systems approach to organize research findings in a way that guides practice and avoids selective observation: “Nobody Loves You When You’re Down and Out: Knowledge of the Psychosocial Consequences of Unemployment, 1979 and 2004” (Dover 1979 and Dover in progress; see Blackboard).

Errors in Personal Inquiry: Made-Up Information
Made-up information refers to a process where we think up reasons to explain away inconsistencies between what we believe and what we observe.
- Straw person argument: simplify or distort a position in order to shoot it down.
- Ad hominem attack: attack the messenger rather than the message.

Errors in Personal Inquiry: Ex Post Facto Reasoning and Hypothesizing (RB 26)
Ex post facto reasoning occurs when we go fishing for reasons to explain away discrepancies between observations and beliefs.
- Battered women’s outreach example. Let’s look at that one.
- Freud and Fliess and the abandonment of the seduction theory.
- If research fails to support a favorite intervention, we might say the intervention was not implemented properly.

Errors in Personal Inquiry: Illogical Reasoning
Illogical reasoning is used to explain away inconsistencies between observations and beliefs.
- "The exception that proves the rule."
- "Gambler's fallacy": a good turn of luck is just around the corner. Related: a string of good or bad luck seen as a real trend.
- The "saved by not wearing a seatbelt" example: anomalies, a key concept.
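The gambler's fallacy can be checked directly by simulation. This sketch assumes a fair coin, a streak length of three tails, and a fixed seed (all illustrative choices). It looks only at flips that immediately follow three tails in a row: if a "turn of luck" were due, heads would come up more than half the time there, but the proportion stays near 0.5 because each flip is independent.

```python
import random

def heads_after_tail_streak(flips=200_000, streak=3, seed=7):
    """Share of heads on flips that immediately follow `streak` consecutive tails."""
    rng = random.Random(seed)
    seq = [rng.random() < 0.5 for _ in range(flips)]  # True = heads, fair coin
    after_streak = [
        seq[i] for i in range(streak, flips)
        if not any(seq[i - streak:i])  # the previous `streak` flips were all tails
    ]
    return sum(after_streak) / len(after_streak)

print(round(heads_after_tail_streak(), 2))  # a value near 0.5: the coin has no memory
```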

Errors in Personal Inquiry: Ego Involvement in Understanding
Ego involvement can create error when we resist accepting observations that make us look less desirable.
- Always a danger; partially controlled for in science by peer review.
- Commonly seen in program evaluation research.

Errors in Personal Inquiry: The Premature Closure of Inquiry
Premature closure occurs when we rule out certain lines of inquiry that might produce findings we would find undesirable.
- Related errors: overgeneralization, selective observation, made-up information, and defensive uses of illogical reasoning.
- A particular type of treatment working with one client does not mean it will work with all clients.

Errors in Personal Inquiry: Mystification
Mystification occurs when we attribute things we do not understand to supernatural or mystical causes.
- Assertion of the unknowable: a cherished belief about practice effectiveness must be true and is beyond the ability of researchers to test.
- An article of faith in science is that everything is potentially knowable.

To Err is Human
Science differs from casual, day-to-day inquiry in two ways:
- Scientific inquiry is a conscious activity; we decide what, how, and for how long we will observe the object or phenomenon of interest.
- Scientific inquiry is more careful than our casual efforts.

Common Sources of Measurement Error: Cultural Bias
Intelligence tests have been cited as biased against certain minority groups.
- Children growing up with different values, opportunities, and speaking patterns are at a disadvantage when they take IQ tests geared to a white, middle-class environment.
  - This issue remains controversial and has yet to be clearly demonstrated.

Common Sources of Measurement Error: Random Error (Problems with Reliability)
- Random error has no consistent pattern of effects. It does not create bias but instead makes measures inconsistent from one measurement to the next.
- It may be due to measures being so complex, boring, or fatiguing that subjects just say anything to get the measurement over with as quickly as possible.
- It may be due to poor rater training and interrater disagreement. Clients may answer randomly if they do not understand the question.

Common Sources of Measurement Error: Systematic Error (Problems with Validity)
- P. 99: “Occurs when the information we collect consistently reflects a false picture of the concept we seek to measure, either because of the way we collect the data or the dynamics of those who are providing the data…. our measures don’t measure what we think they do.”
- Examples: cultural bias, acquiescent response set, social desirability bias.

Common Sources of Measurement Error: Systematic Error
Systematic error occurs when data reflect a false or inaccurate picture of the concept we seek to measure.
- It is due to the data collection method or the dynamics of those providing the data.
- Measures may not measure what we think they are measuring; words don't always match deeds.
- Bias, Drew (1984): “any influence in (measurement) that distorts the experimental results and thereby causes error in the findings.”
  - Acquiescent response set: asking questions in a way that predisposes individuals to answer in the way we want them to, or providing cues and nonverbal behaviors that support a particular type of response.
  - Social desirability bias: answering questions in a way that distorts true behavior; negative as well as positive bias can occur. Review questions in light of how the subject would feel if asked such a question.
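The contrast between random and systematic error can be sketched with simulated scores. All numbers here are assumptions for illustration: a true score of 50, random noise with a standard deviation of 5, and a constant upward bias of 4 points standing in for something like social desirability. Random error scatters individual measurements but leaves the average near the truth; systematic error shifts every measurement the same way, so averaging more cases does not remove it.

```python
import random
from statistics import mean, pstdev

rng = random.Random(42)
TRUE_SCORE = 50.0
N = 10_000

# Random error only: unbiased noise around the true score.
random_error = [TRUE_SCORE + rng.gauss(0, 5) for _ in range(N)]
# Systematic error: the same kind of noise plus a constant upward bias
# (e.g., respondents over-reporting a socially desirable behavior).
systematic_error = [TRUE_SCORE + 4 + rng.gauss(0, 5) for _ in range(N)]

print(f"random:     mean={mean(random_error):.2f}, sd={pstdev(random_error):.2f}")
print(f"systematic: mean={mean(systematic_error):.2f}, sd={pstdev(systematic_error):.2f}")
```

Both lists have a standard deviation near 5, but only the first has a mean near 50; the second centers near 54.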

Avoiding Measurement Error
- In questionnaire construction, use unbiased wording and terms that the respondents will understand.
- Pretest your instruments.
- Arrange direct observations so that clients are not too keenly aware that the observations are occurring.
- If you are collecting data from existing records, ask about any possible bias in record keeping.
- Triangulation: the use of several different research methods to collect data.
  - You must recognize that different sources are also different potential sources of data error.
  - Do they tend to produce the same finding?

Reliability
Reliability is a matter of whether the same technique, applied repeatedly to the same object, will yield the same result each time. It has to do with the amount of random error in a measurement: the more reliable the measure, the less random error. Reliability does not ensure accuracy; for example, a bathroom scale that consistently reads five pounds heavy is reliable but not accurate.
How to create reliable measures:
- 1. Ask about things that are relevant.
- 2. Be clear about what you are asking.

Reliability
- Use measures with previously demonstrated reliability.
- If you use raters, focus on:
  - specificity,
  - training, and
  - practice.

Types of Reliability
- Interrater (interobserver) reliability, e.g., diagnostic agreement or rated level of empathy.
  - Calculate the correlation between two sets of ratings.
- Test-retest reliability: measures stability over time by administering the same instrument to the same individuals on two different occasions.
- Parallel-forms reliability: used with written instruments; requires the construction of two equivalent measures.
- Internal consistency reliability: assesses the homogeneity of the measure. One approach divides the instrument into two halves and measures the correlation between the halves (split-half reliability). Coefficient alpha extends this idea and is often described as the average of all possible split-half reliabilities.
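Coefficient (Cronbach's) alpha can be computed from a respondents-by-items table with the standard formula alpha = k/(k - 1) × (1 - sum of item variances / variance of total scores), where k is the number of items. The sketch below implements that formula; the five respondents and three items are invented, and real analyses usually rely on a statistics package rather than hand-rolled code.

```python
from statistics import variance

def cronbach_alpha(responses):
    """Coefficient alpha for a respondents-by-items table of scores.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    items = list(zip(*responses))  # one tuple of scores per item
    item_variances = sum(variance(item) for item in items)
    total_scores = [sum(row) for row in responses]
    return k / (k - 1) * (1 - item_variances / variance(total_scores))

# Hypothetical answers from five respondents to a three-item scale.
data = [
    [4, 5, 4],
    [2, 3, 3],
    [3, 3, 2],
    [5, 4, 5],
    [1, 2, 1],
]
print(round(cronbach_alpha(data), 4))  # 0.9375
```

Here the item variances sum to 6.3 and the total-score variance is 16.8, so alpha = 1.5 × (1 - 0.375) = 0.9375: respondents who score high on one item tend to score high on the others.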

Validity
Validity is the extent to which an empirical measure adequately reflects the real meaning of the concept under consideration.
- Face validity: the measure appears to measure what it is intended to measure.
- Content validity: the degree to which a measure covers the range of meanings included within a concept. It is established on the basis of judgments by experts.
  - Does the measure cover the universe of facts that make up the concept?

Validity
- Empirical validity: evidence about the degree to which a measure is correlated with other indicators of the concept it intends to measure and with related concepts. Two subtypes are criterion-related validity and construct validity.
- Criterion-related validity: based on external criteria, e.g., the MMPI paranoia scale related to a diagnosis of paranoia, or college boards and college success.
  - Two subtypes:
    - Predictive validity: the ability to predict the future occurrence of a criterion, e.g., the GRE’s relationship to success in graduate school.
    - Concurrent validity: correspondence to a criterion that is known concurrently, e.g., the relationship between a measure of interviewing skills and ratings of videotaped interviewing skills.
- Known groups validity: assesses an instrument’s ability to distinguish between groups that it should theoretically be able to distinguish between.

Validity
- Construct validity: based on the way a measure relates to other variables within a system of theoretical relationships.
  - It can also involve assessing whether the measure has both convergent validity and discriminant validity.
    - Convergent validity: the results from one instrument correspond to the results of other methods of measuring the same construct, e.g., clinician and scale assessments of marital satisfaction.
    - Discriminant validity: a measure’s results do not correspond as highly with measures of other constructs as they do with other measures of the same construct, and its results correspond more highly with the other measures of the same construct than do measures of alternative constructs.

Reliability and Validity
Reliability and validity are handled differently in qualitative research. Qualitative researchers differ on definitions and criteria for reliability and validity, and some suggest they are not applicable to qualitative research. This disagreement is related to differing epistemological assumptions about the nature of reality and objectivity.

Kronick’s Criteria for Evaluating the Validity of Qualitative Interpretation of Texts
1. The interpretation of parts of the text should be consistent with other parts of the whole text.
2. The interpretation should be complete, taking all the evidence into account.
3. Conviction: the interpretation should be the most compelling one in light of the evidence within the text.
4. It should be meaningful and extend our understanding.

Chapter 9: Measurement Instruments
- Three types of instruments:
  - Questionnaires
  - Interview schedules
  - Scales
- Interview schedule: the list of questions used by the interviewer.
  - Interview guide: used in qualitative studies. The interviewer has more freedom to explore issues in the interview. It is less structured than an interview schedule.
- Questionnaire, or self-administered questionnaire:
  - May combine questions with statements for which the respondent indicates a level of agreement.

Constructing Measurement Instruments: Open-Ended and Closed-Ended Questions
- Open-ended questions: the respondent is asked to provide his or her own answer to the question. In an interview schedule, the interviewer may be asked to probe for information as needed. Responses must be coded before they can be processed for computer analysis.
- Closed-ended questions: the respondent selects an answer from among the list provided by the researcher.
  - Can be used in self-administered questionnaires and interview schedules.
  - The chief limitation is that the researcher’s structuring of the responses may limit the range of responses captured.
  - Response categories should be exhaustive (include all possible responses that might be expected), and the answer categories must be mutually exclusive.
  - The respondent should not feel compelled to select more than one.
  - It is helpful to include in the instructions a request that respondents select the best answer.

Constructing Measurement Instruments
- Make Items Clear: questionnaire items should be precise so that the respondent knows exactly what question the researcher wants answered.
- Avoid Double-Barreled Questions: whenever the word "and" appears in a question or questionnaire statement, check whether it is in fact a double-barreled question.
- Respondents Must Be Competent to Answer (example: talking back to parents).
  - Does the respondent have the necessary information or experience to answer the question?
- Respondents Must Be Willing to Answer.
  - We often want to know what people are unwilling to share with us. Sometimes American respondents will say they are undecided when they think they are in the minority.

Constructing Measurement Instruments

Questions Should Be Relevant
- Have the respondents thought about the issue, and does it have implications for their lives? In Babbie's research, respondents were asked about a fictitious political candidate: 9% of the respondents claimed to be familiar with him, and half of those reported having seen him on TV and read about him in the paper.

Short Items Are Best
- Respondents are often unwilling to study an item in order to understand it. Assume they will read items quickly, and provide short, clear items that will not be misinterpreted.

Constructing Measurement Instruments

Avoid Negative Items
- The appearance of a negation in a questionnaire item paves the way for easy misinterpretation. For example, rather than "The community should not have a residential facility for the developmentally disabled," ask "Do you favor a residential facility for the developmentally disabled in this community?"

Avoid Biased Items and Terms
- Terms like "prejudice" have no ultimately correct definition, and terms of this type can lead to random measurement error.
- Questions that encourage respondents to answer in a particular way are called biased.

QUESTIONNAIRE CONSTRUCTION

General Questionnaire Format
- The questionnaire should be spread out and uncluttered. This reduces the chance of errors and confusion resulting from having to read crowded and abbreviated questions.

Formats for Respondents
- Use boxes or parentheses, but avoid slashes and underscores. Circling a number also works well and facilitates data entry.

Contingency Questions
- Some questions are relevant to some respondents and not to others.
  - If ______ is true for you, then answer item 6.B; if not, skip to item 10.
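When a contingency question is administered electronically or checked during data entry, the skip instruction becomes a simple routing rule. The Python sketch below mirrors the slide's example (answer 6.B if the condition is true, otherwise skip to item 10); the item order and the `SKIP_RULES` table are hypothetical.

```python
# Sketch: route respondents past contingency questions.
# Hypothetical rules: filter item -> (next item if "yes", next item if "no").
SKIP_RULES = {
    "6": ("6.B", "10"),
}

# Hypothetical questionnaire order; items 6.B through 9 apply only to "yes" answers.
ORDER = ["5", "6", "6.B", "7", "8", "9", "10"]


def next_item(item, answered_yes, order):
    """Return the next item to ask, applying a skip rule if the item is a filter."""
    if item in SKIP_RULES:
        yes_item, no_item = SKIP_RULES[item]
        return yes_item if answered_yes else no_item
    position = order.index(item)
    # Non-filter items simply continue in order; None marks the end.
    return order[position + 1] if position + 1 < len(order) else None


print(next_item("6", True, ORDER))    # 6.B
print(next_item("6", False, ORDER))   # 10
print(next_item("6.B", True, ORDER))  # 7
```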

QUESTIONNAIRE CONSTRUCTION

Matrix Questions
- Applicable when you have several questions that share the same set of answer categories.
- Uses space efficiently, and respondents are likely to complete the questions more quickly.
- May increase the comparability of responses given to different questions, for the respondents as well as the researcher.
- Disadvantages include:
  - (1) the researcher may feel forced to use the matrix format when a more idiosyncratic set of responses would work better;
  - (2) it may foster a response set among respondents (e.g., an acquiescent response set).
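One rough way to look for a response set in matrix data is to flag respondents who gave the identical answer on every row. The Python sketch below assumes hypothetical 1-5 agreement data; a flag only marks a case for review, since a respondent may genuinely hold uniform views.

```python
# Sketch: flag respondents whose answers to a matrix question are identical
# on every row, a simple screen for a possible response set.

def has_uniform_answers(row):
    """True if the respondent chose the same category for every matrix item."""
    return len(row) > 1 and len(set(row)) == 1


# Hypothetical matrix answers (1 = strongly disagree ... 5 = strongly agree).
matrix_answers = {
    "r1": [4, 4, 4, 4, 4],
    "r2": [4, 2, 5, 3, 4],
    "r3": [5, 5, 5, 5, 5],
}

flagged = [rid for rid, row in matrix_answers.items() if has_uniform_answers(row)]
print(flagged)  # ['r1', 'r3']
```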

QUESTIONNAIRE CONSTRUCTION

Ordering Questions in a Questionnaire
- The order of questions affects the answers given: answers to earlier questions can affect answers to later ones. It is important to recognize this possibility when designing the layout of the questionnaire.
- Questionnaires may benefit from putting the most interesting questions at the beginning in order to engage the respondent, though initial questions should not be threatening (e.g., questions about sexual behavior, drugs, and so forth).
- Demographics should be placed at the end of a self-administered questionnaire.

QUESTIONNAIRE CONSTRUCTION

Interview schedules, by contrast, should begin with less threatening questions (demographics) in order to allow rapport to develop.

Instructions
- Begin with basic instructions.
- If there are subsections, introduce each one with a short statement about its contents and purpose.
- Some questions require special instructions, e.g., "answer the primary reason…"

CONSTRUCTING COMPOSITE MEASURES

Some variables are too complex or multifaceted to be measured with just one item on a questionnaire, e.g., marital satisfaction, level of social functioning, or quality of life. Composite or cumulative measures of complex variables are called scales and indexes. They are typically ordinal measures of variables.

CONSTRUCTING COMPOSITE MEASURES

Item Selection
- Items should have face validity.
- Unidimensionality: restrict scale items to those that relate directly to the variable of interest.
- Items should have adequate variance. Consider the range of attributes: pretest and examine the range of responses to each item.
- Procedures for examining the influence each item has on the reliability and validity of the scale are reviewed later in the course.
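Checking for adequate variance in pretest data can be as simple as computing each item's variance and flagging items on which nearly everyone answered alike. The Python sketch below uses hypothetical pretest responses and an assumed cutoff of 0.5; in practice the cutoff is a judgment call.

```python
from statistics import pvariance

# Hypothetical pretest responses (1-5) for three candidate items.
pretest = {
    "item_a": [1, 3, 5, 2, 4, 3],
    "item_b": [4, 4, 4, 4, 4, 4],  # every respondent gave the same answer
    "item_c": [2, 5, 1, 4, 3, 5],
}


def low_variance_items(responses, threshold=0.5):
    """Return items whose pretest variance falls below an assumed threshold."""
    return [name for name, scores in responses.items() if pvariance(scores) < threshold]


print(low_variance_items(pretest))  # ['item_b']
```

An item like `item_b` cannot distinguish among respondents, so it adds nothing to a composite score.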

CONSTRUCTING COMPOSITE MEASURES

Handling Missing Data
- Missing data are an inescapable part of collecting data.
- If only a limited number of cases have missing data, one may simply exclude those cases. This may bias the sample if those cases share particular characteristics.
- One may treat missing data based on the available data. For example, if respondents check "yes" for some items but never mark "no," the blanks may be treated as "no."
- Another option is to randomly assign a value. This may prevent one from detecting the relationship one is seeking to find.
- Another option is to assign the mean value for the variable.
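The options listed above can be sketched as small functions. The Python example below assumes a hypothetical dataset in which `None` marks a missing answer; each function returns a new list and leaves the original data unchanged.

```python
import random
from statistics import mean

# Hypothetical data: None marks a missing answer.
cases = [
    {"id": 1, "score": 4},
    {"id": 2, "score": None},
    {"id": 3, "score": 2},
    {"id": 4, "score": 3},
]


def drop_missing(rows, var):
    """Exclude cases with a missing value (may bias the sample if they share traits)."""
    return [r for r in rows if r[var] is not None]


def impute_mean(rows, var):
    """Replace missing values with the mean of the observed values."""
    observed = mean(r[var] for r in rows if r[var] is not None)
    return [{**r, var: observed if r[var] is None else r[var]} for r in rows]


def impute_random(rows, var, choices, seed=0):
    """Replace missing values with a randomly chosen valid response."""
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    return [{**r, var: rng.choice(choices) if r[var] is None else r[var]} for r in rows]


def blanks_as_no(answers):
    """For checklists where respondents only mark "yes", treat blanks as "no"."""
    return ["no" if a is None else a for a in answers]


print(len(drop_missing(cases, "score")))          # 3
print(impute_mean(cases, "score")[1]["score"])    # 3
print(blanks_as_no(["yes", None, "yes"]))         # ['yes', 'no', 'yes']
```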

Some Prominent Scaling Procedures

Likert Scaling
- The respondent is presented with a statement in the questionnaire and then asked to respond using an ordinal range of possible responses.
- A straightforward method of scale or index construction.
- Based on this assumption: an overall score based on responses to many items that reflect a particular variable under consideration provides a reasonably good measure of the variable.
- Overall scores are not the final product of index or scale construction; rather, they are used in item analysis to select the best items.
- The uniform scoring of Likert-item response categories assumes that each item has about the same intensity as the rest.
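The summated score and its use in item analysis can be sketched in a few lines. The Python example below assumes hypothetical responses from five people to four items, scores every response category uniformly (1 through 5), and correlates each item with the total of the other items (a corrected item-total correlation, one common form of item analysis).

```python
from statistics import mean

# Hypothetical Likert responses: 1 = strongly disagree ... 5 = strongly agree.
# Rows are respondents, columns are items.
responses = [
    [5, 4, 5, 2],
    [4, 4, 4, 3],
    [2, 1, 2, 3],
    [1, 2, 1, 2],
    [3, 3, 4, 4],
]


def pearson(x, y):
    """Pearson correlation; assumes neither list is constant."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def total_scores(rows):
    """Summated score: each response category scored uniformly and added up."""
    return [sum(row) for row in rows]


def item_total_correlations(rows):
    """Correlate each item with the sum of the other items."""
    results = []
    for j in range(len(rows[0])):
        item = [row[j] for row in rows]
        rest = [sum(row) - row[j] for row in rows]
        results.append(pearson(item, rest))
    return results


print(total_scores(responses))  # [16, 15, 8, 6, 14]
print([round(r, 2) for r in item_total_correlations(responses)])
```

Here the fourth item correlates only weakly with the rest of the items, which would make it a candidate for removal when selecting the best items.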

Some Prominent Scaling Procedures

Semantic Differential
- This format asks respondents to choose between two opposite positions, e.g.:
  - interesting-boring, simple-complex, uncaring-caring, useful-useless
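In the example pairs, the favorable adjective is not always on the same side ("uncaring-caring" puts it on the right), so scores usually need recoding before they are combined. The Python sketch below assumes a hypothetical 7-point format (1 = next to the left adjective, 7 = next to the right) and an assumed favorable pole for each pair; "simple-complex" is left out because which pole is favorable depends on context.

```python
# Sketch: recode hypothetical 7-point semantic differential ratings so that a
# higher number always means closer to the (assumed) favorable pole.
SCALE_POINTS = 7
FAVORABLE_POLE = {
    "interesting-boring": "left",
    "uncaring-caring": "right",
    "useful-useless": "left",
}


def favorable_score(pair, position, points=SCALE_POINTS):
    """Reverse the scale for pairs whose favorable adjective is on the left."""
    if FAVORABLE_POLE[pair] == "left":
        return points + 1 - position
    return position


ratings = {"interesting-boring": 2, "uncaring-caring": 6, "useful-useless": 1}
scores = {pair: favorable_score(pair, pos) for pair, pos in ratings.items()}
print(scores)  # {'interesting-boring': 6, 'uncaring-caring': 6, 'useful-useless': 7}
```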

Constructing Culturally Sensitive Instruments

Researchers cannot assume that scales and indexes that appear reliable and valid when tested with one culture will be reliable and valid when used with other cultures.

Constructing Culturally Sensitive Instruments

Language Difficulties
- When subjects do not speak English well or at all, three steps should be taken:
  1. Use bilingual interviewers.
  2. Translate the measures into the language of the respondents.
  3. Pretest the instruments to see if they are understood as intended.
- Back translation:
  - A bilingual person translates the instrument and its instructions into the target language.
  - Another bilingual person, who has not seen the original version of the instrument, translates it from the target language back into the original language.
  - The original instrument is then compared with the back-translated version, and items with discrepancies are further modified.

Constructing Culturally Sensitive Instruments

Other Factors in Cultural Bias
- Are the items in a questionnaire applicable to the socioeconomic status of the respondents?
- Measurement error can result from cultural differences in the social desirability of particular scale responses (e.g., admitting to psychiatric symptoms in a Puerto Rican community).
- Rogler recommends:
  1. Direct immersion in the culture.
  2. Interviewing knowledgeable informants.

Constructing Culturally Sensitive Instruments

Measurement Equivalence: a hierarchy of three types of measurement equivalence in cross-ethnic-group research
1. Conceptual equivalence: ensuring that instruments and observed behaviors have the same meaning across cultures. Example: "I feel blue."
2. Metric equivalence: requires conceptual equivalence and, in addition, that across different cultures the observed indicators relate in the same way to their referent theoretical constructs. Example: "Time spent."
3. Structural equivalence: assumes the other two forms of equivalence and requires that causal linkages among constructs and their consequents be the same across two or more cultures. Example: "Caregiver consequences and value of elderly."

Constructing Qualitative Measures

Qualitative measures rely on interviews that are usually unstructured and mainly contain open-ended questions with in-depth probes. They can range from completely unstructured, informal conversational interviews that use no measurement instruments to highly structured, standardized interviews in which interviewers must ask questions in the exact order and with the exact wording in which they were written in advance.