

Research Infrastructure
Simon Hood, Research Infrastructure Coordinator
its-ri-team@manchester.ac.uk

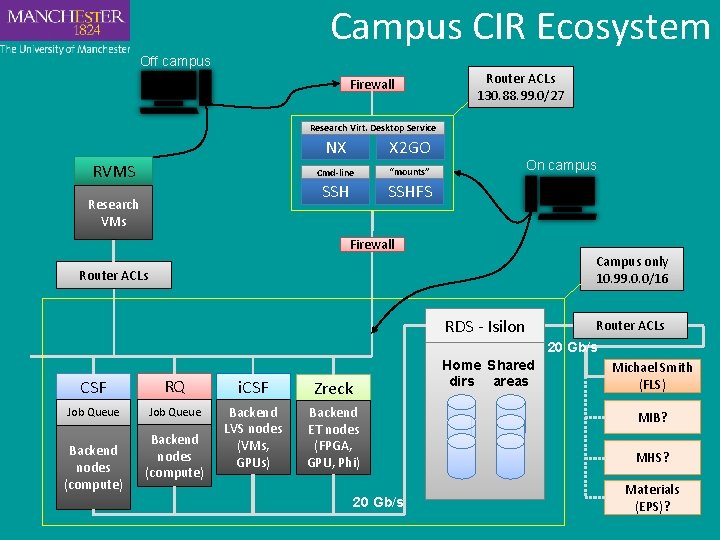

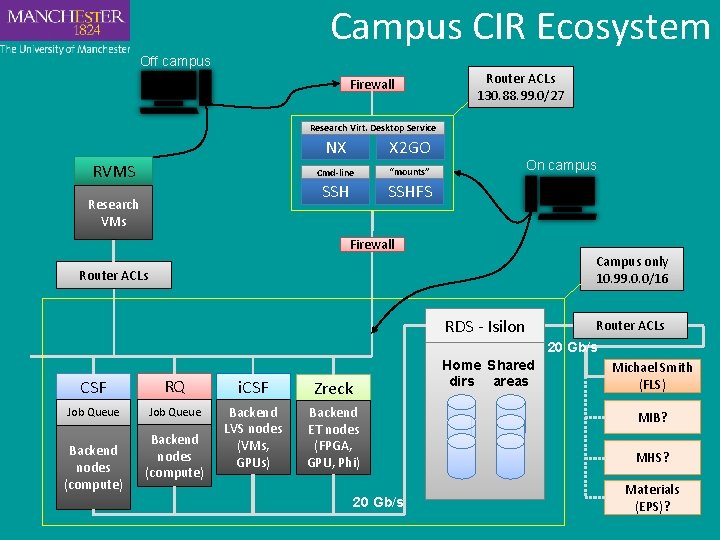

Campus CIR Ecosystem
• Off campus: firewall; router ACLs (130.88.99.0/27)
• Research Virtual Desktop Service (RVDS): NX, X2GO
• RVMS: Research VMs
• Command-line "mounts": SSHFS
• On campus: firewall; campus only (10.99.0.0/16); router ACLs
• RDS (Isilon): home dirs and shared areas; router ACLs; 20 Gb/s
• Clusters: CSF, RQ (Redqueen), iCSF, Zreck
  – Job queue
  – Backend LVS nodes (VMs, GPUs)
  – Backend ET nodes (FPGA, GPU, Phi)
  – Backend nodes (compute)
• 20 Gb/s links: Michael Smith (FLS); MIB? MHS? Materials (EPS)?
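The ecosystem diagram labels two address ranges: 130.88.99.0/27 beside the off-campus router ACLs and 10.99.0.0/16 as "campus only". The minimal Python sketch below uses the standard `ipaddress` module to show how large each range is and how an address would be matched against it. The constant names and the `classify` helper are hypothetical, and the slide does not say what traffic each ACL actually permits, so this only illustrates the CIDR arithmetic.

```python
import ipaddress

# Address ranges as labelled on the ecosystem diagram.
# The names are hypothetical; the slide does not give the ACL rules themselves.
OFF_CAMPUS_ACL = ipaddress.ip_network("130.88.99.0/27")
CAMPUS_ONLY = ipaddress.ip_network("10.99.0.0/16")


def classify(addr: str) -> str:
    """Report which labelled range, if any, contains the given IPv4 address."""
    ip = ipaddress.ip_address(addr)
    if ip in OFF_CAMPUS_ACL:
        return "130.88.99.0/27 (off-campus ACL range)"
    if ip in CAMPUS_ONLY:
        return "10.99.0.0/16 (campus only)"
    return "outside both ranges"


if __name__ == "__main__":
    print(OFF_CAMPUS_ACL.num_addresses)  # 32
    print(CAMPUS_ONLY.num_addresses)  # 65536
    # Example addresses chosen for illustration only.
    for a in ["130.88.99.17", "130.88.99.40", "10.99.3.7"]:
        print(a, "->", classify(a))
```

A /27 spans just 32 addresses (130.88.99.0 to 130.88.99.31), whereas a /16 spans 65,536. The 10.99.0.0/16 range also lies inside the RFC 1918 private block 10.0.0.0/8, which is not routed on the public internet, consistent with its "campus only" label.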

Ecosystem Workflow
1. Input preparation. E.g. upload data to RDS and set job parameters in the application GUI, in the office on campus. (SSHFS, RDS; RVDS, iCSF, RDS)
2. Submit a compute job. E.g. a long-running, parallel, high-memory heat/stress analysis, from home. (RVDS, iCSF, RDS)
3. Check on the compute job and submit other jobs. E.g. while away at a conference in Barcelona. (SSH, CSF, RDS)
4. Analyse results. E.g. in the application GUI on a laptop in a hotel, and back in the office. (RVDS, iCSF, RDS)
5. Publish results. E.g. a front-end web server running on RVMS accessing an Isilon share. (RVMS, RDS)

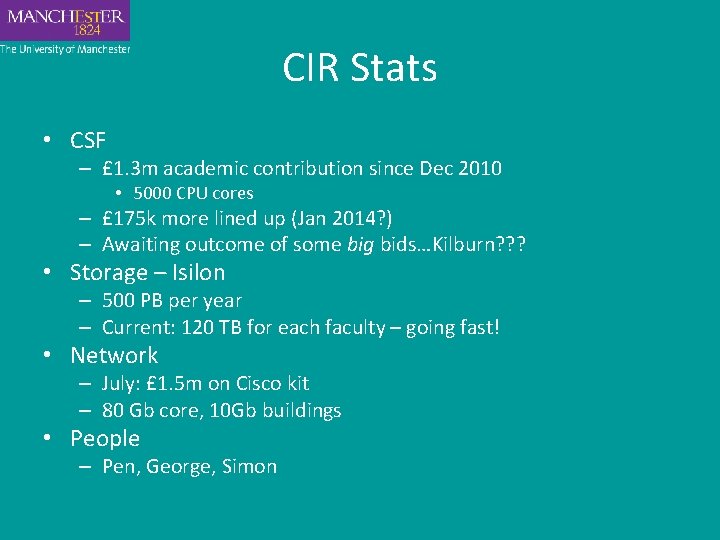

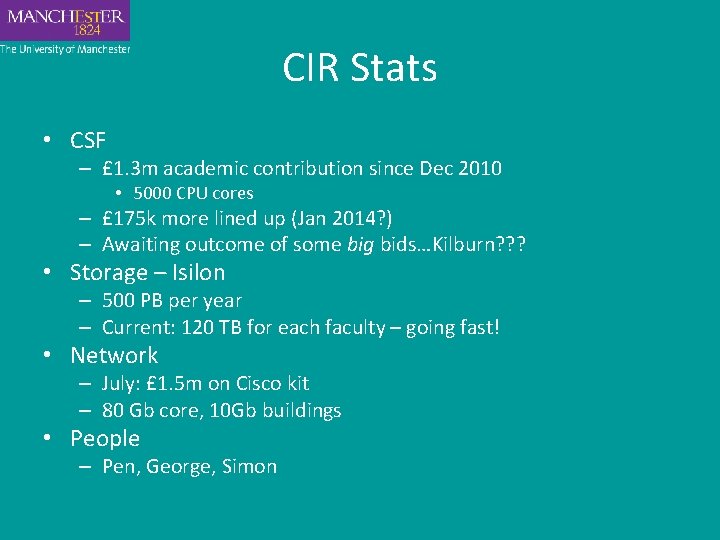

CIR Stats
• CSF
  – £1.3m academic contribution since Dec 2010
  – 5000 CPU cores
  – £175k more lined up (Jan 2014?)
  – Awaiting outcome of some big bids… Kilburn???
• Storage
  – Isilon: 500 PB per year
  – Current: 120 TB for each faculty, going fast!
• Network
  – July: £1.5m on Cisco kit
  – 80 Gb/s core, 10 Gb/s to buildings
• People
  – Pen, George, Simon

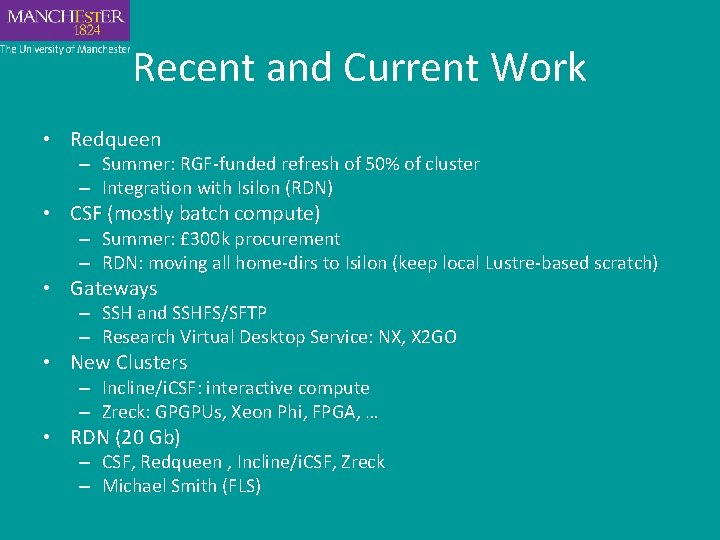

Recent and Current Work
• Redqueen
  – Summer: RGF-funded refresh of 50% of the cluster
  – Integration with Isilon (RDN)
• CSF (mostly batch compute)
  – Summer: £300k procurement
  – RDN: moving all home dirs to Isilon (keeping local Lustre-based scratch)
• Gateways
  – SSH and SSHFS/SFTP
  – Research Virtual Desktop Service: NX, X2GO
• New clusters
  – Incline/iCSF: interactive compute
  – Zreck: GPGPUs, Xeon Phi, FPGA, …
• RDN (20 Gb/s)
  – CSF, Redqueen, Incline/iCSF, Zreck
  – Michael Smith (FLS)

Thank you! Email questions to me: Simon.Hood@manchester.ac.uk