Research design I: Experimental design and quasi-experimental research



Today’s Agenda
• Types of studies
  – Meta-analysis
  – Experimental design

Meta-analysis • Combining the results from multiple studies. • Goal: identify patterns among studies, find disagreements, find other relationships that only appear when examining multiple studies.
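As an illustration of what "combining the results" can mean statistically, here is a minimal sketch of one common approach: a fixed-effect meta-analysis with inverse-variance weights, plus Cochran's Q as a rough index of how much the studies disagree. The slides do not specify a pooling method; the function name `fixed_effect_meta` and the three studies' numbers are hypothetical.

```python
import math

def fixed_effect_meta(effects, std_errors):
    """Fixed-effect (inverse-variance) pooling of per-study effect sizes.

    Returns the pooled effect, its standard error, and Cochran's Q,
    which grows when studies disagree more than sampling error predicts.
    """
    if not effects or len(effects) != len(std_errors):
        raise ValueError("need at least one study and one standard error per effect")
    weights = [1.0 / se ** 2 for se in std_errors]   # more precise studies count more
    total_w = sum(weights)
    pooled = sum(w * d for w, d in zip(weights, effects)) / total_w
    pooled_se = math.sqrt(1.0 / total_w)
    q = sum(w * (d - pooled) ** 2 for w, d in zip(weights, effects))
    return pooled, pooled_se, q

# Three hypothetical studies: standardized mean differences and their standard errors
pooled, se, q = fixed_effect_meta([0.30, 0.50, 0.10], [0.10, 0.20, 0.10])
print(f"pooled d = {pooled:.3f}, SE = {se:.3f}, Q = {q:.2f} on {3 - 1} df")
# → pooled d = 0.233, SE = 0.067, Q = 4.00 on 2 df
```

A random-effects model is the usual alternative when studies are expected to estimate genuinely different effects; the fixed-effect version is shown only because it is the simplest.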

Meta-analysis and the file drawer problem • Many studies are conducted but never reported. • Strong bias toward publishing significant findings. • Bias against publishing negative findings. • A 1979 study of 293 registered clinical trials found that significant findings were 12 times more likely to be published.
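One classic way to gauge how vulnerable a meta-analysis is to the file drawer problem is Rosenthal's fail-safe N: the number of unpublished studies averaging Z = 0 that would have to be sitting in file drawers to pull the combined (Stouffer) Z below the one-tailed .05 cutoff. This is a sketch under that definition; the published Z values are hypothetical.

```python
from statistics import NormalDist

def fail_safe_n(z_scores, alpha=0.05):
    """Rosenthal's fail-safe N for a set of one-tailed study Z scores.

    Solves sum(Z) / sqrt(k + N) = z_crit for N: how many unpublished
    studies averaging Z = 0 would make the combined (Stouffer) Z
    non-significant. A negative result means the combined evidence is
    already non-significant without any hidden studies.
    """
    k = len(z_scores)
    z_crit = NormalDist().inv_cdf(1 - alpha)   # about 1.645 for alpha = .05
    return sum(z_scores) ** 2 / z_crit ** 2 - k

published = [2.1, 1.8, 2.5, 1.2, 2.9]          # hypothetical published studies
print(round(fail_safe_n(published), 1))        # → 35.7
```

The assumption that file-drawer studies average exactly zero is the method's main weakness: if the hidden studies lean negative, far fewer of them would be needed.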

Experimental and quasi-experimental designs • Share many features • We’ll start with the common features and end with the differences. • Spoiler alert: the major difference is that quasi-experimental designs do not use random assignment. • …while we go through this, let’s discuss issues of random vs. non-random assignment

Variance • Variance has both conceptual and statistical meanings • We’re only talking about the conceptual piece here

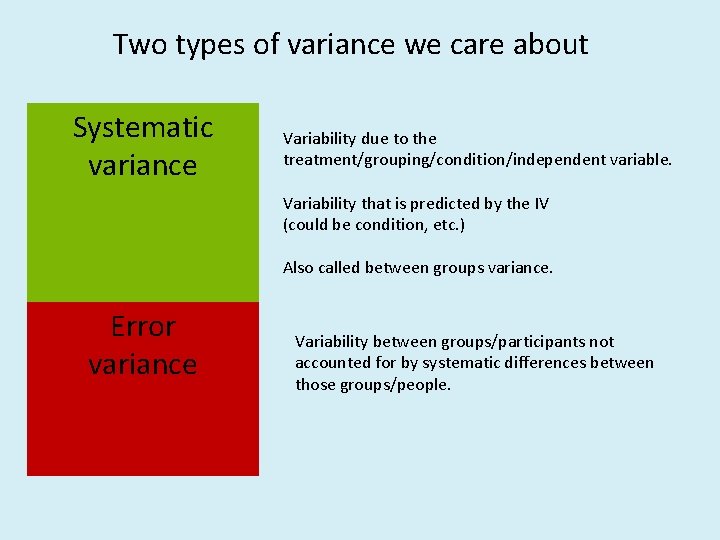

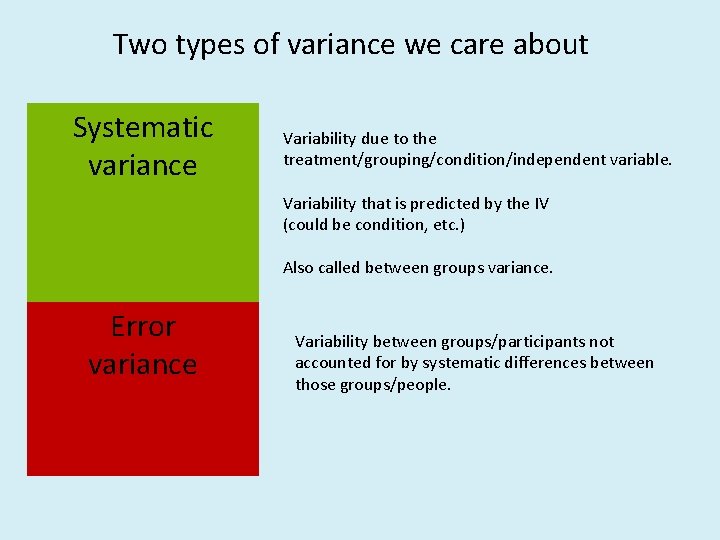

Two types of variance we care about
• Systematic variance: variability due to the treatment/grouping/condition/independent variable, i.e., variability that is predicted by the IV (e.g., the condition). Also called between-groups variance.
• Error variance: variability among groups/participants that is not accounted for by systematic differences between those groups/people. Also called within-groups variance.
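The conceptual split above has a direct statistical counterpart in a one-way ANOVA: the total sum of squares divides exactly into a between-groups (systematic) part and a within-groups (error) part. A minimal sketch with hypothetical scores; the function name is illustrative only.

```python
from statistics import fmean

def partition_variance(groups):
    """Split the total sum of squares into between-groups (systematic)
    and within-groups (error) components; the two always sum to the total."""
    scores = [x for g in groups for x in g]
    grand_mean = fmean(scores)
    group_means = [fmean(g) for g in groups]
    ss_total = sum((x - grand_mean) ** 2 for x in scores)
    # Systematic: how far each group mean sits from the grand mean, weighted by group size
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Error: how far each person sits from their own group's mean
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    return ss_total, ss_between, ss_within

control, treatment = [4, 5, 6], [7, 8, 9]        # hypothetical scores
print(partition_variance([control, treatment]))  # → (17.5, 13.5, 4.0)
```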

Where does error variance come from?
1. Individual differences – pre-existing differences between people; this is the most common source of error variance
2. Transient states – at the time of the experiment, participants differ in how they feel (mood, health, fatigue, interest, etc.)

Sources of Error Variance
3. Environmental factors – differences in the conditions under which the study is conducted (noise, time of day, temperature, etc.)
4. Differential treatment – treating different participants in slightly different ways
5. Measurement error – unreliable measures contribute to error variance

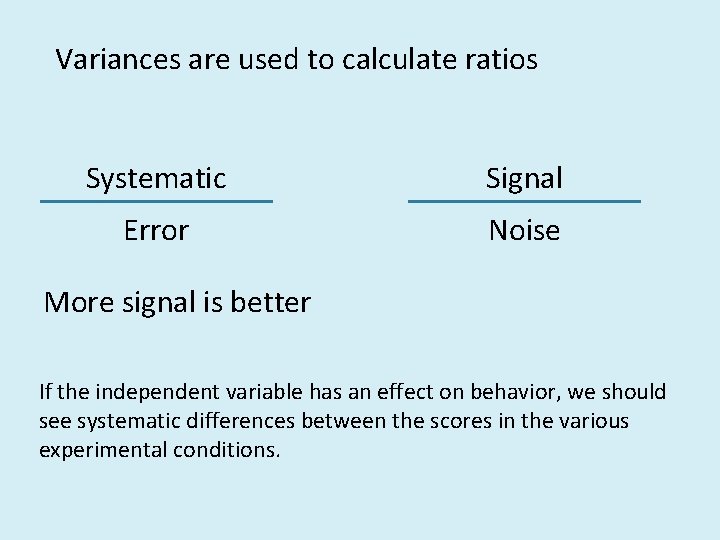

Variances are used to calculate ratios:

$$\frac{\text{Systematic variance}}{\text{Error variance}} = \frac{\text{Signal}}{\text{Noise}}$$

More signal is better. If the independent variable has an effect on behavior, we should see systematic differences between the scores in the various experimental conditions.
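In a one-way ANOVA, this signal-to-noise ratio is the F statistic: between-groups mean square divided by within-groups mean square. In this hypothetical sketch the two data sets have identical group means (the same "signal") and differ only in within-group spread, so the ratio drops as noise rises.

```python
from statistics import fmean

def f_ratio(groups):
    """Signal/noise for a one-way design: MS_between / MS_within (the ANOVA F statistic)."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand_mean = fmean([x for g in groups for x in g])
    means = [fmean(g) for g in groups]
    ms_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means)) / (k - 1)
    ms_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g) / (n - k)
    return ms_between / ms_within

low_noise = [[4, 5, 6], [7, 8, 9]]    # group means 5 and 8
high_noise = [[2, 5, 8], [5, 8, 11]]  # same means, more spread within each group
print(f_ratio(low_noise), f_ratio(high_noise))  # → 13.5 1.5
```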

How can we reduce error variance? • Error variance = unknowns • The more you know, the easier it is to reduce error variance • Control variables
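One way to turn an "unknown" into a known is to measure a nuisance variable and account for it statistically. In this hypothetical sketch, scores depend partly on `prior_experience` (an illustrative covariate, not from the slides); after a simple linear regression removes its influence, the variance left over as error shrinks sharply. Holding such a variable constant across participants is the design-based alternative; the code only shows the statistical route.

```python
from statistics import fmean

def error_variance(y, covariate=None):
    """Mean squared deviation of y that is left unexplained.

    Without a covariate this is just the variance of y around its mean.
    With a covariate, its linear effect (least-squares fit) is removed first.
    """
    my = fmean(y)
    if covariate is None:
        return fmean((v - my) ** 2 for v in y)
    mx = fmean(covariate)
    sxx = sum((x - mx) ** 2 for x in covariate)
    sxy = sum((x - mx) * (v - my) for x, v in zip(covariate, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    return fmean((v - (intercept + slope * x)) ** 2 for x, v in zip(covariate, y))

prior_experience = [1, 2, 3, 4, 5, 6]            # hypothetical measured nuisance variable
scores = [2.5, 3.5, 6.5, 7.5, 10.5, 11.5]        # hypothetical DV scores
print(round(error_variance(scores), 3),
      round(error_variance(scores, prior_experience), 3))  # → 10.917 0.229
```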

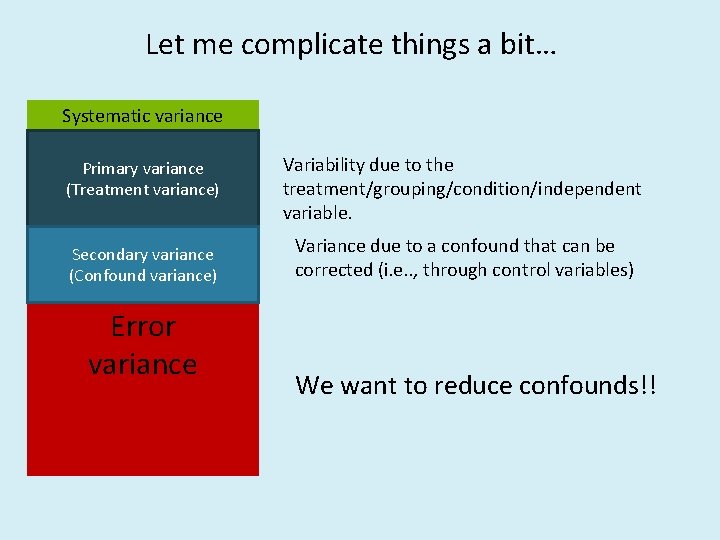

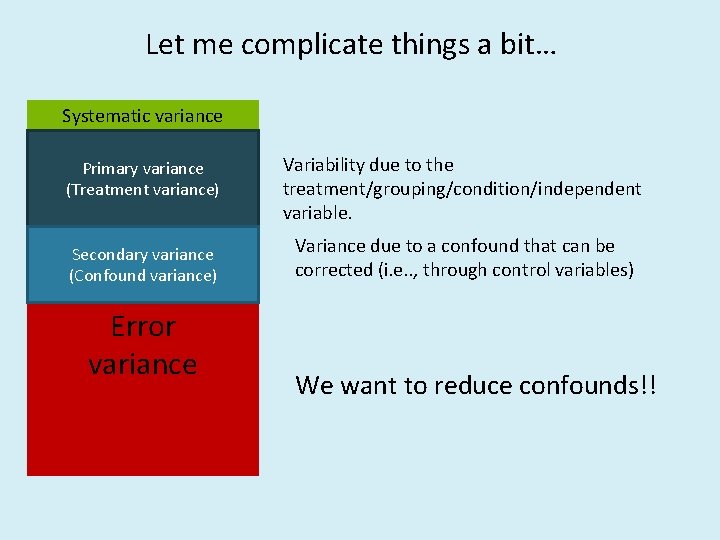

Let me complicate things a bit…
• Systematic variance
  – Primary variance (treatment variance): variability due to the treatment/grouping/condition/independent variable.
  – Secondary variance (confound variance): variability due to a confound that can be corrected (e.g., through control variables).
• Error variance
We want to reduce confounds!!
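A small worked example of why confound variance is a problem: when a nuisance variable lines up perfectly with condition, its effect is indistinguishable from the treatment effect. The model below is hypothetical (a 2-point treatment effect and a 3-point bonus for being tested in the morning), chosen only to make the arithmetic visible.

```python
def expected_score(treated, morning, baseline=10.0, treatment_effect=2.0, morning_effect=3.0):
    """Hypothetical true model: score depends on the IV (treated) and on a nuisance variable (time of day)."""
    return baseline + treatment_effect * treated + morning_effect * morning

def group_difference(sessions):
    """Mean treated score minus mean control score, given (treated, morning) pairs."""
    treated = [expected_score(t, m) for t, m in sessions if t]
    control = [expected_score(t, m) for t, m in sessions if not t]
    return sum(treated) / len(treated) - sum(control) / len(control)

# Confounded: every treated participant tested in the morning, every control in the afternoon
confounded = [(1, 1)] * 4 + [(0, 0)] * 4
# Controlled: time of day balanced within each condition
balanced = [(1, 1), (1, 0)] * 2 + [(0, 1), (0, 0)] * 2

print(group_difference(confounded))  # → 5.0 (treatment and time of day are inseparable)
print(group_difference(balanced))    # → 2.0 (time of day adds equally to both groups)
```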

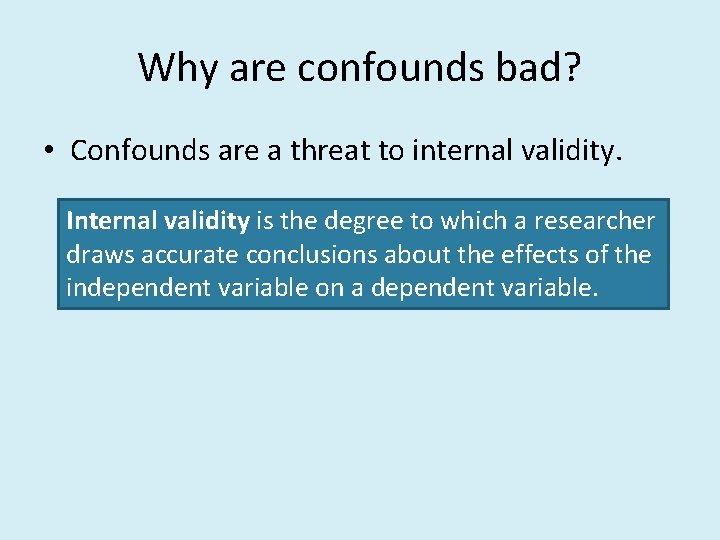

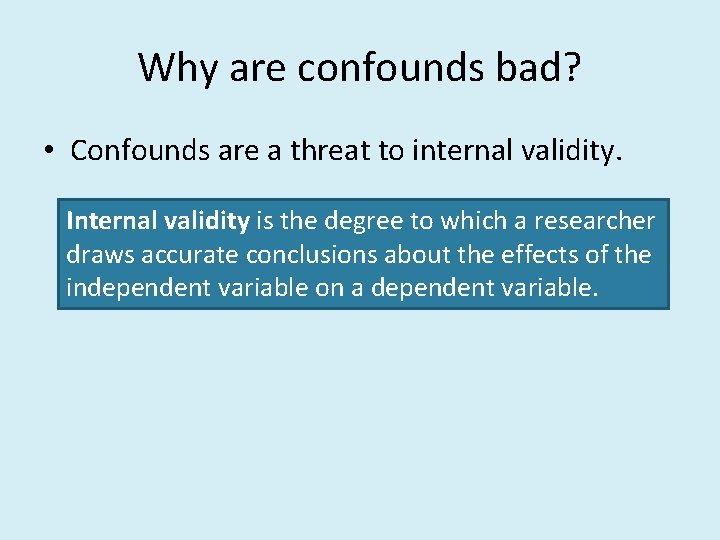

Why are confounds bad? • Confounds are a threat to internal validity. Internal validity is the degree to which a researcher draws accurate conclusions about the effects of the independent variable on a dependent variable.

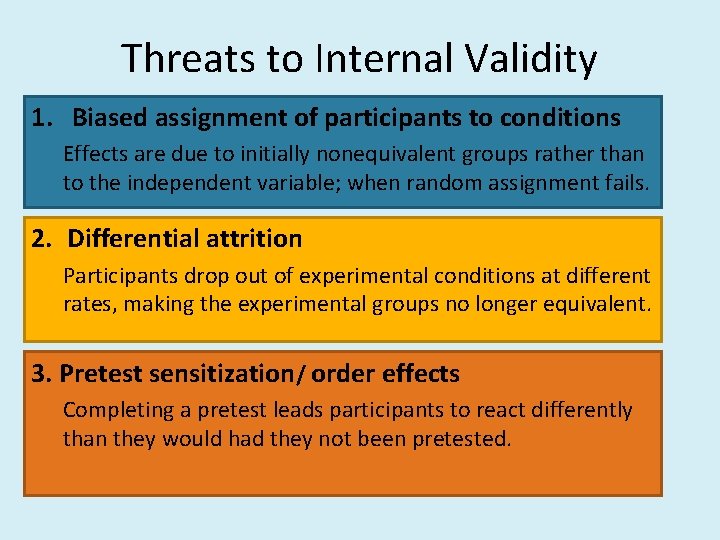

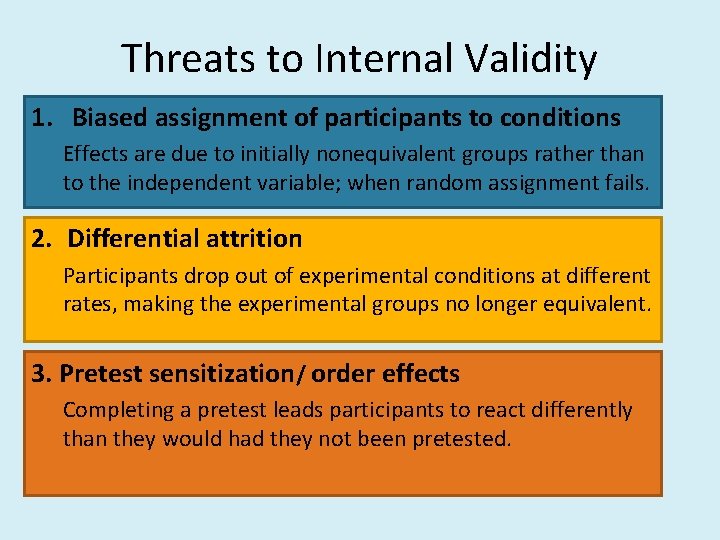

Threats to Internal Validity
1. Biased assignment of participants to conditions: effects are due to initially nonequivalent groups rather than to the independent variable; this happens when random assignment fails.
2. Differential attrition: participants drop out of experimental conditions at different rates, so the experimental groups are no longer equivalent.
3. Pretest sensitization/order effects: completing a pretest leads participants to react differently than they would have had they not been pretested.

How do you avoid threats to internal validity? Design a good study…

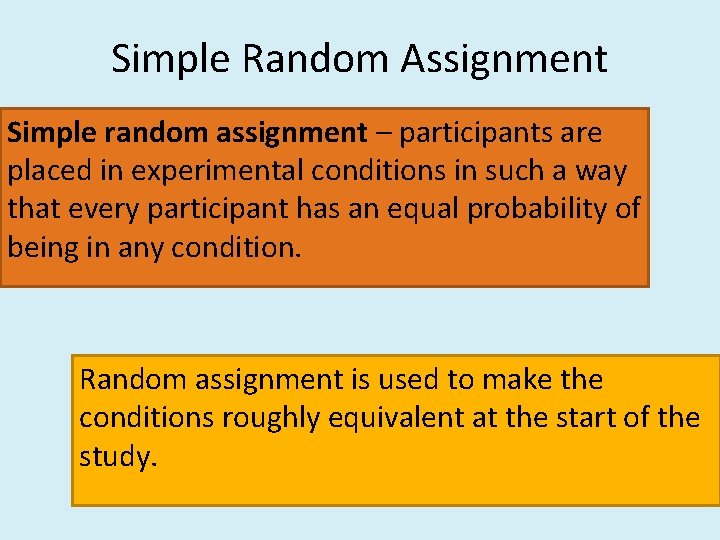

Simple Random Assignment Simple random assignment – participants are placed in experimental conditions in such a way that every participant has an equal probability of being in any condition. Random assignment is used to make the conditions roughly equivalent at the start of the study.
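A minimal sketch of simple random assignment, assuming the common "shuffle and deal" procedure: shuffle the participant list, then deal participants round-robin into conditions. The condition order is shuffled as well, so when the numbers don't divide evenly the extra participants don't always land in the same condition, which keeps every participant's chance of each condition equal. Participant IDs and the seed are hypothetical.

```python
import random

def simple_random_assignment(participants, conditions, seed=None):
    """Give every participant the same chance of ending up in each condition.

    Shuffling then dealing round-robin also keeps group sizes within one of
    each other; shuffling the condition order spreads any leftover slots.
    """
    rng = random.Random(seed)
    people = list(participants)
    slots = list(conditions)
    rng.shuffle(people)
    rng.shuffle(slots)
    groups = {c: [] for c in conditions}
    for i, person in enumerate(people):
        groups[slots[i % len(slots)]].append(person)
    return groups

participants = [f"P{i:02d}" for i in range(1, 13)]   # 12 hypothetical participants
groups = simple_random_assignment(participants, ["control", "treatment"], seed=2024)
for condition, members in groups.items():
    print(condition, members)
```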

Matched Random Assignment Matched random assignment – participants are matched into homogeneous blocks, and then participants within each block are assigned randomly to conditions. This helps ensure that the conditions will be similar along some specific dimension, such as age or intelligence. It is important to decide which characteristics participants should be matched on.
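The procedure can be sketched as: rank participants on the matching variable, cut the ranking into blocks with one participant per condition, then randomize conditions within each block. This assumes blocks of size equal to the number of conditions (one common choice); the names and scores below are hypothetical.

```python
import random

def matched_random_assignment(scores, conditions, seed=None):
    """scores maps participant -> value on the matching variable (e.g., a hypothetical IQ score).

    Participants with adjacent ranks form a block; conditions are shuffled
    within each block, so every condition gets one member of each block.
    """
    rng = random.Random(seed)
    k = len(conditions)
    ranked = sorted(scores, key=scores.get)
    groups = {c: [] for c in conditions}
    for start in range(0, len(ranked), k):
        block = ranked[start:start + k]
        order = list(conditions)
        rng.shuffle(order)                  # random assignment within the block
        for person, condition in zip(block, order):
            groups[condition].append(person)
    return groups

scores = {"A": 95, "B": 130, "C": 101, "D": 99, "E": 128, "F": 115}
print(matched_random_assignment(scores, ["control", "treatment"], seed=1))
```

If the number of participants is not a multiple of the number of conditions, the last block is partial and its members are still assigned at random.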

Repeated Measures Design Repeated measures design – an experimental design in which each participant serves in all conditions of the experiment; also called a within-subjects design. • Repeated measures designs eliminate the need for random assignment because every participant is tested at every level of the independent variable. • Designs in which each participant serves in only one experimental condition are called between-subjects designs.

Order Effects
Order effects occur when the effects of an experimental condition are contaminated by its position in the sequence of conditions in which participants are tested.
• Practice effects – participants’ responses are affected by completing the dependent variable many times
• Fatigue effects – participants become tired or bored as the experiment progresses
• Sensitization – participants gradually become suspicious of the hypothesis as the experiment progresses
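A small hypothetical calculation shows how a practice effect contaminates a within-subjects comparison when everyone receives the conditions in the same order. The true condition means and the 3-point gain per earlier session are made-up values.

```python
PRACTICE_GAIN = 3.0                    # hypothetical: scores rise 3 points per earlier session
TRUE_SCORE = {"A": 10.0, "B": 12.0}    # hypothetical true condition means (B - A = 2)

def observed_scores(order):
    """Score each condition given its position in the sequence (0 = first session)."""
    return {cond: TRUE_SCORE[cond] + PRACTICE_GAIN * position
            for position, cond in enumerate(order)}

ab = observed_scores(["A", "B"])
ba = observed_scores(["B", "A"])
print(ab["B"] - ab["A"])   # → 5.0 (practice inflates the difference)
print(ba["B"] - ba["A"])   # → -1.0 (practice reverses it)
print(((ab["B"] - ab["A"]) + (ba["B"] - ba["A"])) / 2)   # → 2.0 (averaging both orders)
```

Because the practice gain is the same size in both orders here, averaging over the two orders recovers the true 2-point difference; with asymmetric carryover effects it would not cancel so neatly.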

Counterbalancing To protect against order effects, use counterbalancing. Counterbalancing involves presenting the levels of the independent variables in different orders to different participants.
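Two common ways to generate counterbalanced orders are sketched below: full counterbalancing (every possible order, k! of them) and a cyclic Latin square (only k orders, with each condition appearing once in every serial position). The cyclic square balances position but not which condition immediately precedes which; balanced Latin squares address that and are not shown. The helper names are illustrative.

```python
from itertools import permutations

def full_counterbalance(conditions):
    """Every possible order of the conditions (k! orders)."""
    return [list(p) for p in permutations(conditions)]

def latin_square(conditions):
    """Cyclic Latin square: k orders; each condition appears once in every serial position."""
    k = len(conditions)
    return [[conditions[(row + col) % k] for col in range(k)] for row in range(k)]

def order_for(participant_index, orders):
    """Cycle through the orders so each one is used (nearly) equally often."""
    return orders[participant_index % len(orders)]

conditions = ["A", "B", "C"]
print(len(full_counterbalance(conditions)))   # → 6
square = latin_square(conditions)
for participant in range(6):
    print(participant, order_for(participant, square))
```

Full counterbalancing becomes impractical quickly (5 conditions already give 120 orders), which is why Latin squares are often used instead.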

See you on Thursday! • Next class: More research design • Next Tuesday: Developing proposals