RESEARCH AND PROGRAM EVALUATION METHODS IN APPLIED PSYCHOLOGY

Program Evaluation
Fall 2017, Alla Chavarga

WHY DO WE NEED PROGRAM EVALUATION?

WHAT IS PROGRAM EVALUATION?
• Alternative term: evaluation research
• Definitions:
  • “Investigations… designed to test the effectiveness or impact of a social program or intervention.” (Vogt, 1999, p. 100)
  • “Evaluation can be viewed as a structured process that creates and synthesizes information intended to reduce the level of uncertainty for decision makers and stakeholders about a given program or policy.” (Rossi, Freeman, & Lipsey, 2003)
• Systematic investigation of the effectiveness of intervention programs

GOALS OF PROGRAM EVALUATION
• A type of applied research (as opposed to basic research)
• Goal: evaluate effectiveness, mostly to assess whether the costs of the intervention/program are justified
• Not a goal: producing generalizable knowledge or testing theories!
• But: the methodology is the same as, or similar to, that of basic research

“Research seeks to prove (provide support for), evaluation seeks to improve…” M. Q. Patton

SURVEILLANCE & MONITORING VS. PROGRAM EVALUATION
• Surveillance: tracks disease or risk behaviors
• Monitoring: tracks changes in program outcomes over time
• Evaluation: seeks to understand specifically why these changes occur

WHAT IS A PROGRAM?
• “…a group of related activities that is intended to achieve one or several related objectives” (McDavid & Hawthorn, 2006, p. 15)
• A program is not a single intervention (think back to last class)
• Means-ends relationships (in contrast to cause-effect relationships):
  • Resources are “consumed” or “converted” into activities
  • Activities are intended as means to achieve objectives
• Programs vary in scale
• Programs vary in cost

EXAMPLES OF PROGRAMS
• New York:
  • Stop and Frisk or Broken Windows Theory
• US:
  • Health Care Reform
• Brooklyn College:
  • Team-Based Learning courses
• Alcoholics Anonymous:
  • 12-Step program
• Richmond, California:
  • Reducing homicide rates in the city
  • http://www.cnn.com/2016/05/19/health/cash-for-criminalsrichmond-california/index.html

WHY EVALUATE PROGRAMS?
• To gain insight into a program and its operations: to see where we are going and where we are coming from, and to find out what works and what doesn’t
• To improve practice: to modify or adapt practice to enhance the success of activities
• To assess effects: to see how well we are meeting objectives and goals and how the program benefits the community, and to provide evidence of effectiveness
• To build capacity: to increase funding, enhance skills, and strengthen accountability

PROGRAM EVALUATION AND PERFORMANCE MEASUREMENT
• Performance measurement is used by managers, not evaluators
• Challenge: finding performance measures that are valid
• Often attributed to Albert Einstein: “Everything that can be counted does not necessarily count; everything that counts cannot necessarily be counted.”

EXAMPLES OF PROGRAM EVALUATION (PE)
• Assessing literacy
• Occupational training programs
• Public health initiatives to reduce mortality
• Western Electric: Hawthorne studies (productivity)
• Evaluating propaganda techniques (election results? surveys?)
• Family planning programs (rate of unwanted pregnancies? abortions?)
(Rossi, Lipsey, & Freeman, 2003)

KEY QUESTIONS FOR PROGRAM EVALUATION
• Did the program achieve its intended objectives?
• Was the program responsible for (did it cause) the observed outcomes?
• Are the observed outcomes consistent with the intended outcomes?
(McDavid & Hawthorn, 2006)

KEY EVALUATION QUESTIONS
• Was the program efficient?
  • Technical efficiency: cost per unit of work done (output)
• Was the program cost-effective?
  • Cost per unit of outcome achieved
• Was the program appropriate?
  • What is the rationale for the program?
  • Is it still relevant?
• Was the program adequate?
  • Was it large enough to do the job?
• How well (if at all) was the program implemented?
(McDavid & Hawthorn, 2006)

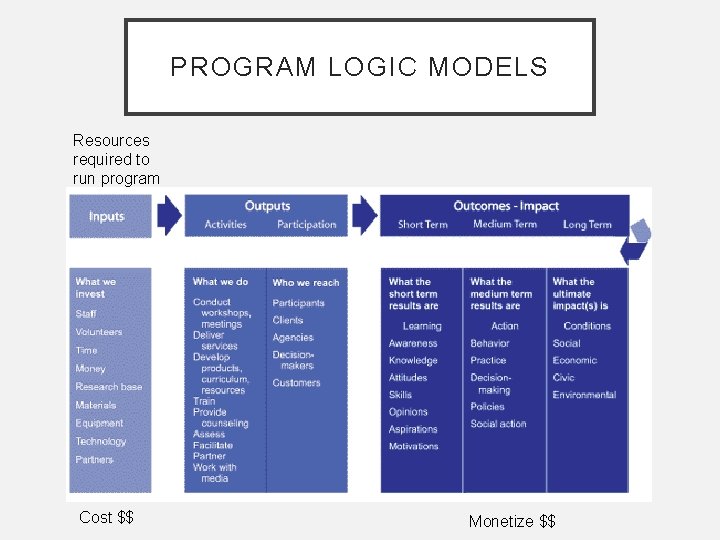

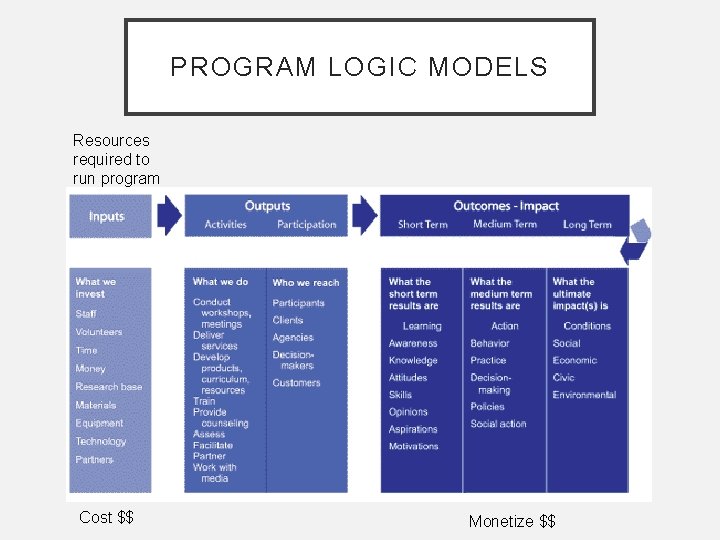

PROGRAM LOGIC MODELS
[Figure: program logic model; recoverable labels: “Resources required to run program,” “Cost $$,” “Monetize $$”]

PROGRAM INPUTS-OUTPUTS
• Compare program costs to outputs (technical efficiency)
• Compare program costs to outcomes (cost-effectiveness)
• Compare costs to the monetized values of outcomes (cost-benefit analysis)
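The three comparisons above share the same numerator (program cost) and differ in what it is compared against. A minimal Python sketch, using made-up figures loosely modeled on a neighborhood-rehabilitation program; the class `ProgramFigures`, its fields, and every number are hypothetical, and a real cost-benefit analysis would also discount future costs and benefits:

```python
from dataclasses import dataclass


@dataclass
class ProgramFigures:
    """Hypothetical summary figures for one program (illustrative only)."""
    cost: float               # total program cost in dollars (inputs)
    outputs: float            # units of work done, e.g. houses rehabilitated
    outcomes: float           # units of outcome achieved, e.g. households that stayed
    value_per_outcome: float  # assumed dollar value of one unit of outcome


def technical_efficiency(p: ProgramFigures) -> float:
    """Cost per unit of output: compares costs to outputs."""
    return p.cost / p.outputs


def cost_effectiveness(p: ProgramFigures) -> float:
    """Cost per unit of outcome: compares costs to outcomes."""
    return p.cost / p.outcomes


def benefit_cost_ratio(p: ProgramFigures) -> float:
    """Monetized outcomes divided by costs; above 1.0 means benefits exceed costs."""
    return (p.outcomes * p.value_per_outcome) / p.cost


if __name__ == "__main__":
    # All numbers are invented for illustration.
    program = ProgramFigures(cost=500_000, outputs=100, outcomes=40, value_per_outcome=20_000)
    print(technical_efficiency(program))  # 5000.0 dollars per house rehabilitated
    print(cost_effectiveness(program))    # 12500.0 dollars per household retained
    print(benefit_cost_ratio(program))    # 1.6
```

Note that the same program can look cheap per output and expensive per outcome; the gap between the first two numbers is exactly the distinction between outputs and outcomes drawn in the logic model.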

EXAMPLE
• Problem: residents are leaving the neighborhood because it is deteriorating
• Program: offer property tax breaks to homeowners if they upgrade the physical appearance of their homes
• Outcomes:
  • Short-term: number of houses rehabilitated
  • Long-term: people no longer leave the neighborhood

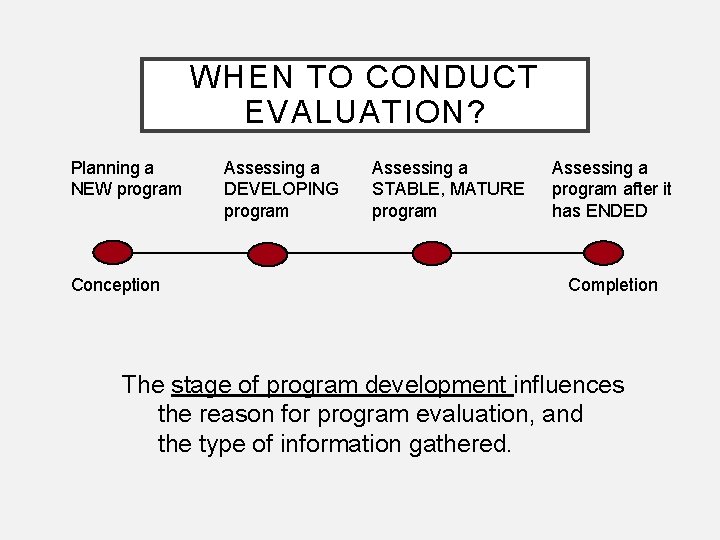

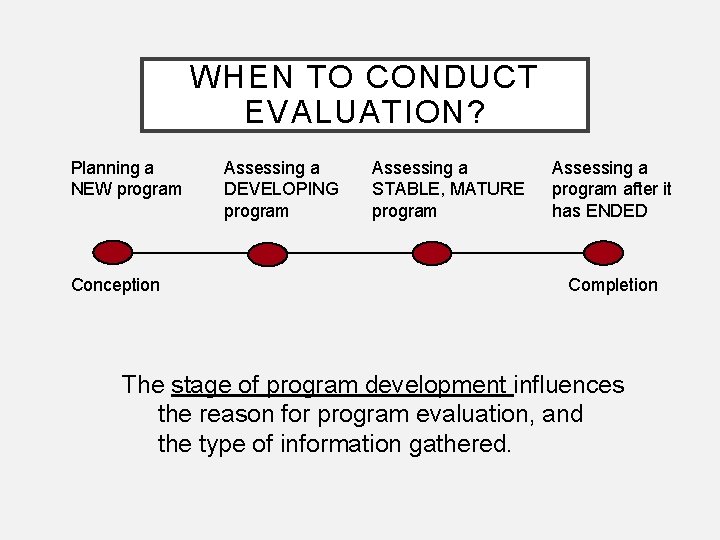

WHEN TO CONDUCT EVALUATION?
• Planning a NEW program (conception)
• Assessing a DEVELOPING program
• Assessing a STABLE, MATURE program
• Assessing a program after it has ENDED (completion)
The stage of program development influences the reason for program evaluation and the type of information gathered.

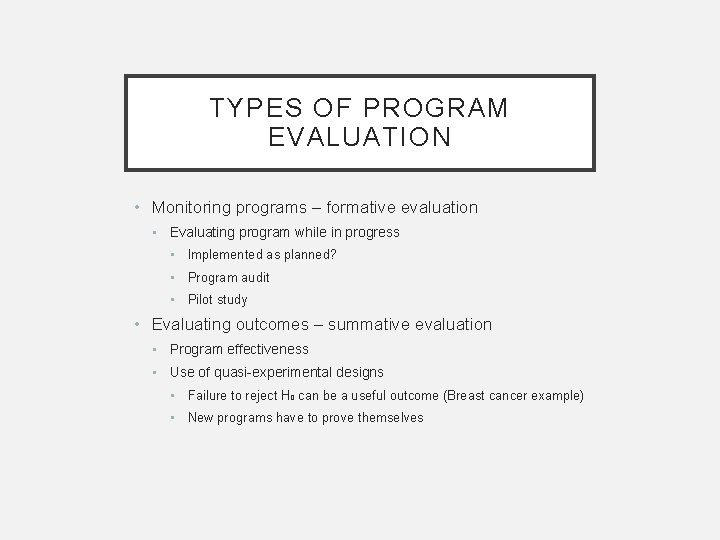

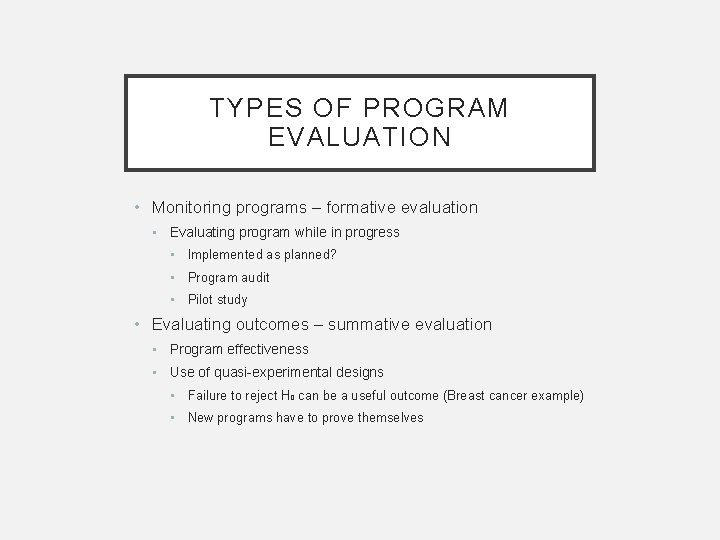

TYPES OF PROGRAM EVALUATION
• Monitoring programs: formative evaluation
  • Evaluating the program while it is in progress
  • Is it implemented as planned?
  • Program audit
  • Pilot study
• Evaluating outcomes: summative evaluation
  • Program effectiveness
  • Use of quasi-experimental designs
  • Failure to reject H0 can be a useful outcome (breast cancer example)
  • New programs have to prove themselves

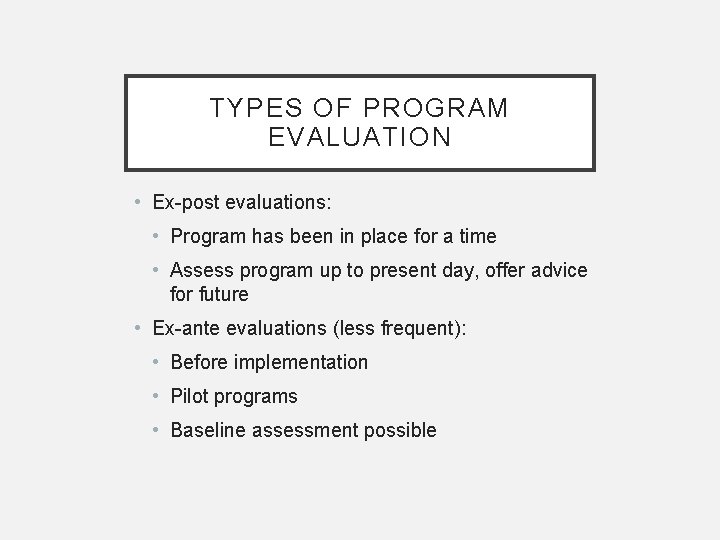

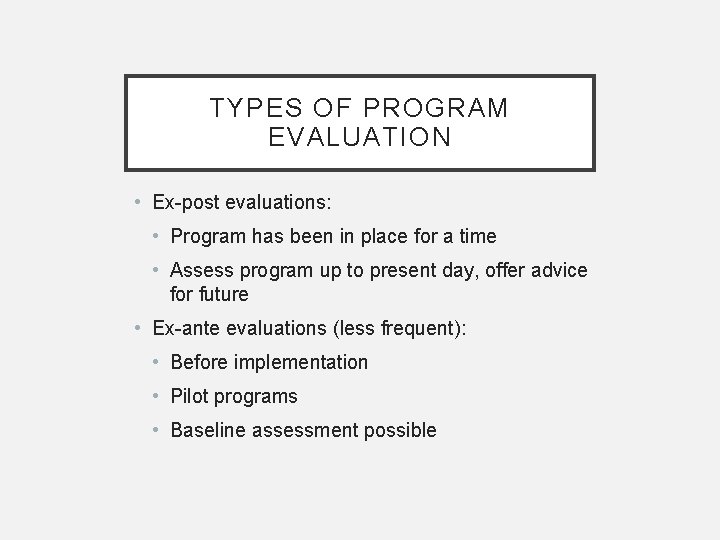

TYPES OF PROGRAM EVALUATION
• Ex-post evaluations:
  • Program has been in place for some time
  • Assess the program up to the present day and offer advice for the future
• Ex-ante evaluations (less frequent):
  • Before implementation
  • Pilot programs
  • Baseline assessment possible

IMPACT EVALUATION
• Impact: more than just outcomes
• Long-term outcomes
• Deeper outcomes
• Which other factors may have influenced results?
• Unintended outcomes?
• Accidental outcomes?

RESEARCH DESIGNS FOR PROGRAM EVALUATION
• Standard experimental designs
  • Two-group comparison
  • Two-by-two factorial design
  • Pretest-posttest design
  • Posttest-only design
  • Solomon four-group design
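For a two-group (posttest-only) comparison, the summative question usually becomes a significance test on the difference between the group means. A minimal sketch of Welch's t statistic using only Python's standard `statistics` module; the scores are invented, the function name `welch_t` is hypothetical, and Welch's unequal-variance test is one reasonable choice rather than necessarily the test used in the course:

```python
import math
import statistics


def welch_t(program: list[float], control: list[float]) -> tuple[float, float]:
    """Return Welch's t statistic and its approximate degrees of freedom."""
    n1, n2 = len(program), len(control)
    # Squared standard error of each group mean (sample variance / n)
    se1 = statistics.variance(program) / n1
    se2 = statistics.variance(control) / n2
    t = (statistics.mean(program) - statistics.mean(control)) / math.sqrt(se1 + se2)
    # Welch-Satterthwaite approximation for the degrees of freedom
    df = (se1 + se2) ** 2 / (se1 ** 2 / (n1 - 1) + se2 ** 2 / (n2 - 1))
    return t, df


# Hypothetical posttest scores for a program group and a control group
program_scores = [12, 14, 15, 13, 16]
control_scores = [10, 11, 12, 9, 13]
t, df = welch_t(program_scores, control_scores)
print(t, df)  # 3.0 8.0
```

To obtain a p-value, SciPy's `scipy.stats.ttest_ind(program_scores, control_scores, equal_var=False)` runs the same Welch test. In evaluation, a result that fails to reject H0 is still informative, since it suggests the program has not yet demonstrated an effect.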

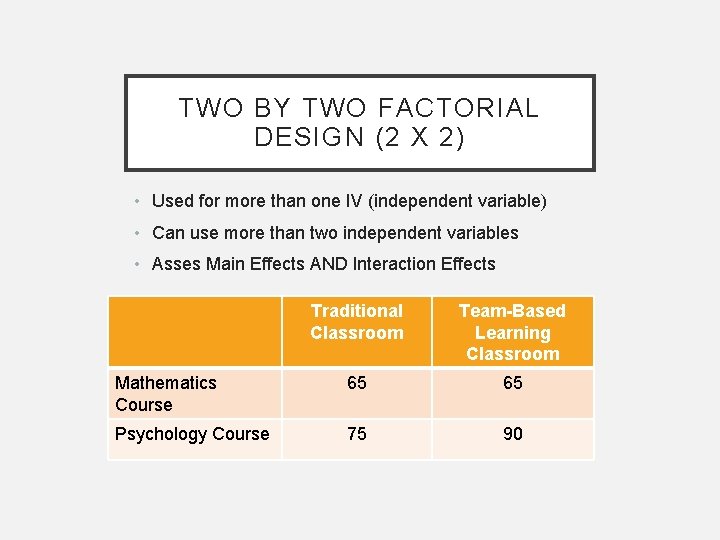

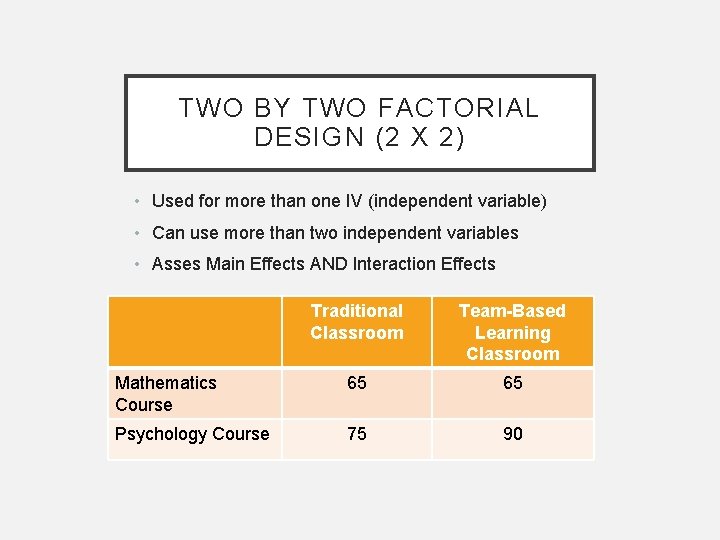

TWO BY TWO FACTORIAL DESIGN (2 X 2)
• Used when there is more than one IV (independent variable)
• Can include more than two independent variables
• Assess main effects AND interaction effects

| | Traditional Classroom | Team-Based Learning Classroom |
|---|---|---|
| Mathematics Course | 65 | 65 |
| Psychology Course | 75 | 90 |
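The main effects and the interaction can be read directly from the four cell values in the 2 × 2 example. A minimal sketch, assuming the numbers are mean course scores (the slide does not say) and that the groups are of equal size; with individual scores, a two-way ANOVA would test whether these differences are statistically reliable:

```python
# Cell values from the 2 x 2 example: (course, classroom) -> mean score
CELL_MEANS = {
    ("Mathematics", "Traditional"): 65,
    ("Mathematics", "Team-Based Learning"): 65,
    ("Psychology", "Traditional"): 75,
    ("Psychology", "Team-Based Learning"): 90,
}


def marginal_mean(position: int, level: str) -> float:
    """Average the cell means over the other factor (position 0 = course, 1 = classroom)."""
    values = [m for key, m in CELL_MEANS.items() if key[position] == level]
    return sum(values) / len(values)


# Main effect of classroom type: (65 + 90) / 2 - (65 + 75) / 2
classroom_effect = marginal_mean(1, "Team-Based Learning") - marginal_mean(1, "Traditional")

# Main effect of course: (75 + 90) / 2 - (65 + 65) / 2
course_effect = marginal_mean(0, "Psychology") - marginal_mean(0, "Mathematics")

# Interaction: does the effect of team-based learning depend on the course?
tbl_gain_math = CELL_MEANS[("Mathematics", "Team-Based Learning")] - CELL_MEANS[("Mathematics", "Traditional")]
tbl_gain_psych = CELL_MEANS[("Psychology", "Team-Based Learning")] - CELL_MEANS[("Psychology", "Traditional")]
interaction = tbl_gain_psych - tbl_gain_math

print(classroom_effect, course_effect, interaction)  # 7.5 17.5 15
```

The interaction of 15 points shows that team-based learning made no difference in the mathematics course but a 15-point difference in the psychology course. Reporting only the 7.5-point classroom main effect would hide that pattern.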

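SOLOMON FOUR GROUP DESIGN

| | Pretest | Program | Posttest |
|---|---|---|---|
| Group 1 | Pre | Program | Post |
| Group 2 | Pre | | Post |
| Group 3 | | Program | Post |
| Group 4 | | | Post |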
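One common way to analyze the Solomon design is to treat the four posttest means as a 2 × 2 factorial (pretested vs. not, program vs. not). This separates the program effect from the effect of taking the pretest. A minimal sketch with invented posttest means; the group numbers follow the design above, and the interaction term is read as pretest sensitization:

```python
# Hypothetical posttest means for each Solomon group (invented numbers)
posttest = {
    1: 80.0,  # pretest + program
    2: 62.0,  # pretest, no program
    3: 76.0,  # no pretest + program
    4: 60.0,  # no pretest, no program
}

# Program effect: groups that received the program vs. groups that did not
program_effect = (posttest[1] + posttest[3]) / 2 - (posttest[2] + posttest[4]) / 2

# Pretest effect: groups that were pretested vs. groups that were not
pretest_effect = (posttest[1] + posttest[2]) / 2 - (posttest[3] + posttest[4]) / 2

# Pretest sensitization: does the program effect differ when a pretest was given?
sensitization = (posttest[1] - posttest[2]) - (posttest[3] - posttest[4])

print(program_effect, pretest_effect, sensitization)  # 17.0 3.0 2.0
```

Groups 3 and 4 on their own also show whether the program works when no pretest is given. That comparison matches real-world rollouts that have no baseline test.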

ASSESSING NEEDS FOR A PROGRAM
• Become familiar with the political/organizational context
• Identify the users and uses of the needs assessment
• Identify the target population(s) who will be or are currently being served
• Inventory existing services available to the target population and identify potential gaps
• Identify needs, using complementary strategies for collecting and recording data

PROGRAM EVALUATION
• Needs analysis resources:
  • Census data
  • Surveys of potential users
  • Key informants, focus groups, community forums
• Research example 33: healthy behaviors in the workplace (DuPont)
  • Examined employee health data
  • Surveyed existing company programs
  • Surveyed employee knowledge of healthy behavior

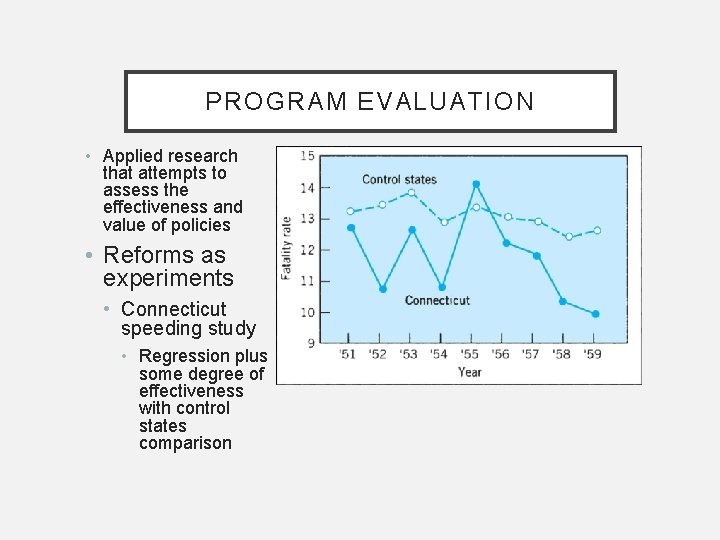

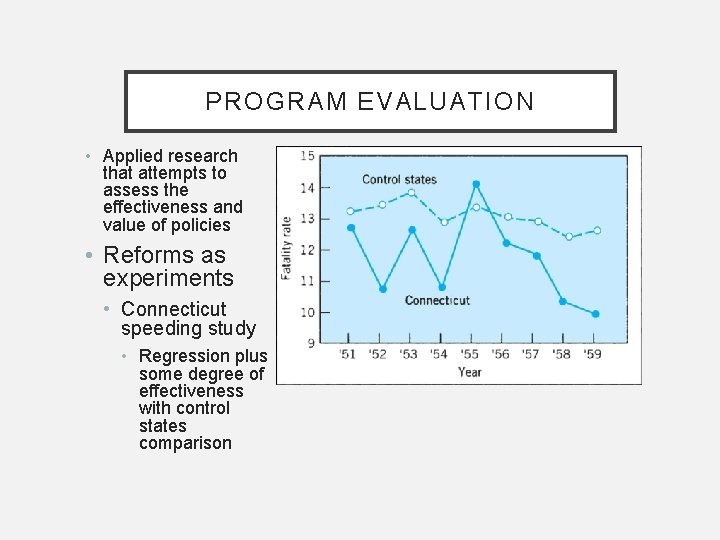

PROGRAM EVALUATION
• Applied research that attempts to assess the effectiveness and value of policies
• Reforms as experiments
  • Connecticut speeding study
  • Regression to the mean explains part of the drop in fatalities; comparison with control states suggests some degree of effectiveness
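The logic of comparing Connecticut with control states can be sketched as a two-period difference-in-differences: subtract the control states' change from the treated state's change, so that changes common to both (such as region-wide trends) are removed. This is a simplified stand-in for the time-series comparison used in the original study. All fatality counts below are invented, and the names `Period` and `difference_in_differences` are hypothetical:

```python
from dataclasses import dataclass


@dataclass
class Period:
    before: float  # outcome before the reform (e.g. annual traffic deaths)
    after: float   # outcome after the reform

    @property
    def change(self) -> float:
        return self.after - self.before


def difference_in_differences(treated: Period, control: Period) -> float:
    """Change in the treated unit minus change in the comparison unit."""
    return treated.change - control.change


# Invented numbers, not the historical data; "control_states" stands for
# an average over several comparison states.
connecticut = Period(before=100.0, after=80.0)
control_states = Period(before=100.0, after=92.0)

naive_effect = connecticut.change  # -20.0: ignores what happened elsewhere
did_effect = difference_in_differences(connecticut, control_states)  # -12.0
print(naive_effect, did_effect)
```

A two-point comparison like this does not by itself remove regression to the mean when the reform was launched right after an unusually bad year in the treated state. That is one reason a longer series of years before and after the reform is preferred.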

PROGRAM EVALUATION
• A note on qualitative analysis
  • Common in program evaluation
  • Needs analysis: interview information
  • Formative and summative evaluations: observations
  • E.g., an energy conservation program in Bath, England included both quantitative (energy usage) and qualitative (focus group interviews) information

PROGRAM EVALUATION
• Ethics and program evaluation
  • Consent issues (coercion, fear of losing services; participants are often vulnerable populations receiving social services)
  • Confidentiality issues: anonymity is not compatible with evaluation measures, longitudinal data gathering, reports to stakeholders, etc.
  • Perceived injustice
  • Participant crosstalk: the control group perceives itself to be at a disadvantage

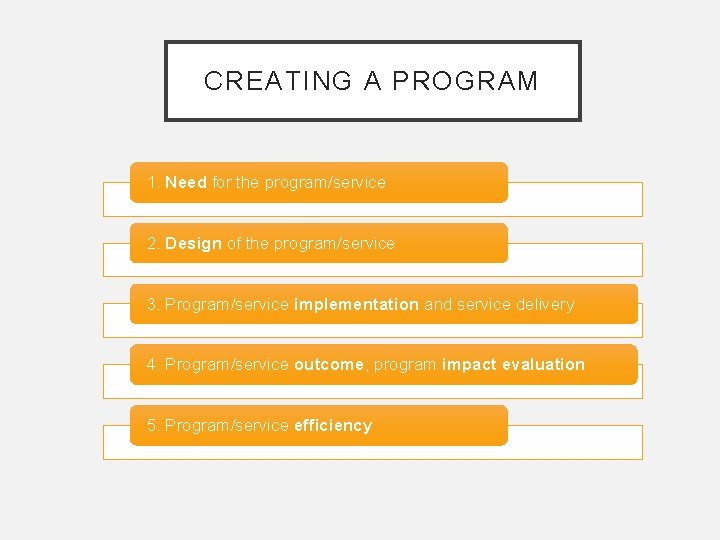

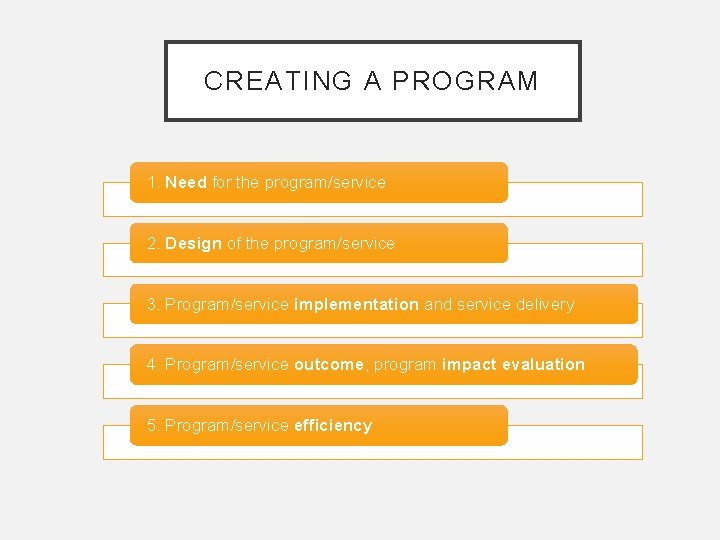

CREATING A PROGRAM
1. Need for the program/service
2. Design of the program/service
3. Program/service implementation and service delivery
4. Program/service outcome; program impact evaluation
5. Program/service efficiency

THE FOUR STANDARDS
• Utility: Who needs the information, and what information do they need? (stakeholders!) Typically this kind of research is not experimenter-motivated
• Feasibility: How much money, time, and effort can we put into this?
• Propriety: What steps need to be taken for the evaluation to be ethical?
• Accuracy: What design will lead to accurate information?