Reproducibility: On computational processes, dynamic data, and why we should bother
Andreas Rauber
Vienna University of Technology
rauber@ifs.tuwien.ac.at
http://www.ifs.tuwien.ac.at/~andi

Outline
- What are the challenges in reproducibility?
- What do we gain from reproducibility? (and: why is non-reproducibility interesting?)
- How to address the challenges of complex processes?
- How to deal with “Big Data”?
- Summary

Challenges in Reproducibility
http://www.plosone.org/article/info%3Adoi%2F10.1371%2Fjournal.pone.0038234

Challenges in Reproducibility Excursion: Scientific Processes

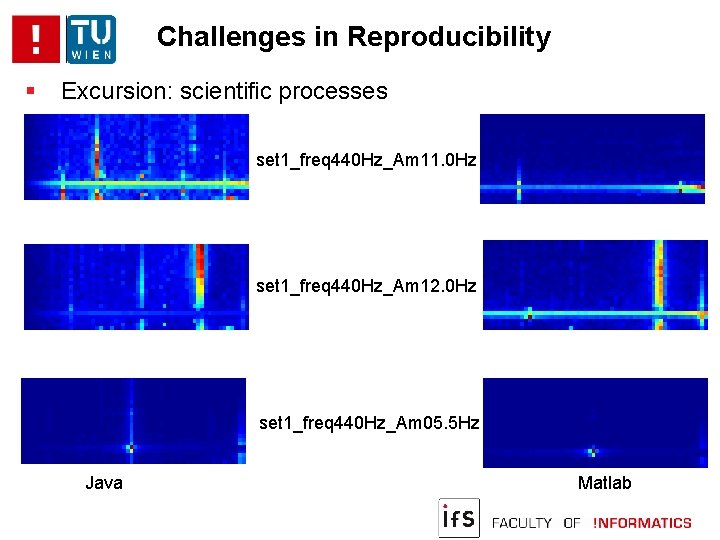

Challenges in Reproducibility Excursion: Scientific Processes
Test signals: set1_freq440Hz_Am11.0Hz, set1_freq440Hz_Am12.0Hz, set1_freq440Hz_Am05.5Hz
Implementations compared: Java, Matlab

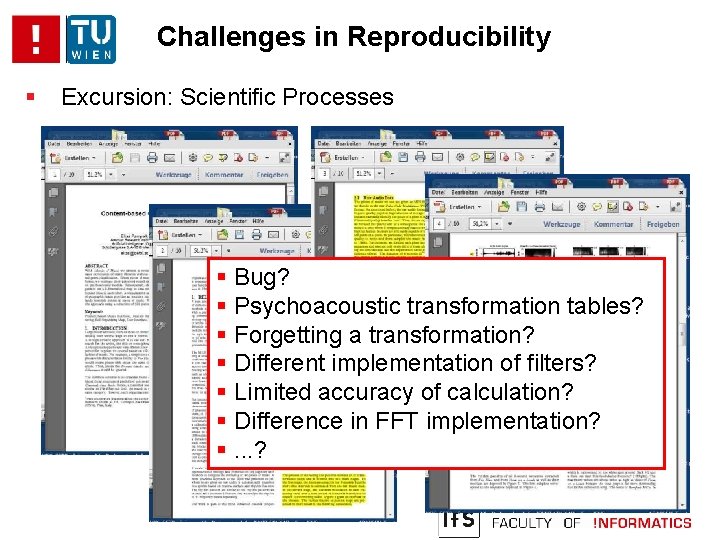

Challenges in Reproducibility Excursion: Scientific Processes
- Bug?
- Psychoacoustic transformation tables?
- Forgetting a transformation?
- Different implementation of filters?
- Limited accuracy of calculation?
- Difference in FFT implementation?
- ...?
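One of the candidate causes listed above, limited accuracy of calculation, can be shown in a few lines. This is a minimal sketch that is not taken from the talk: the numbers and the tolerance `rel_tol=1e-9` are illustrative choices. It shows that two mathematically equivalent computations need not be bitwise identical, while a tolerance-based comparison still finds them consistent.

```python
import math

# Floating-point addition is not associative: the same three numbers,
# summed in a different order, give results that differ in the last bit.
left_to_right = (0.1 + 0.2) + 0.3
right_to_left = 0.1 + (0.2 + 0.3)

# Bitwise identity fails ...
identical = left_to_right == right_to_left

# ... while a tolerance-based check (rel_tol is an illustrative choice)
# still treats the two results as consistent.
consistent = math.isclose(left_to_right, right_to_left, rel_tol=1e-9)

print(identical, consistent)
```

Two implementations that accumulate values in a different order, for example inside a filter or an FFT, can diverge in this way without either of them containing a bug.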

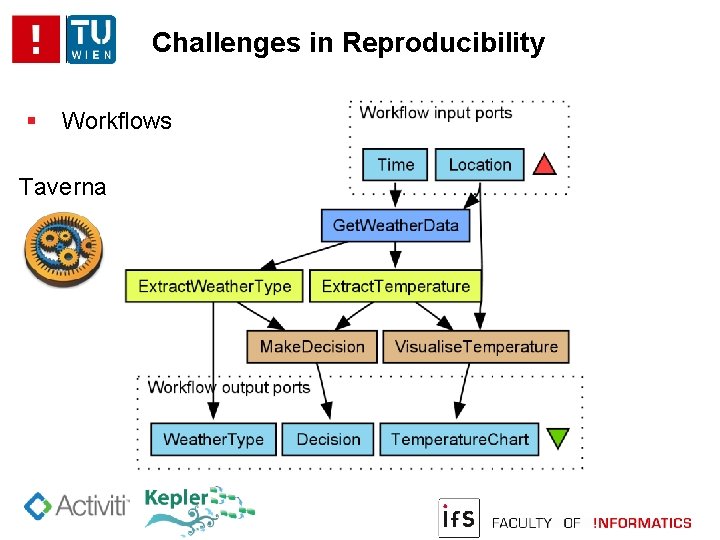

Challenges in Reproducibility Workflows Taverna

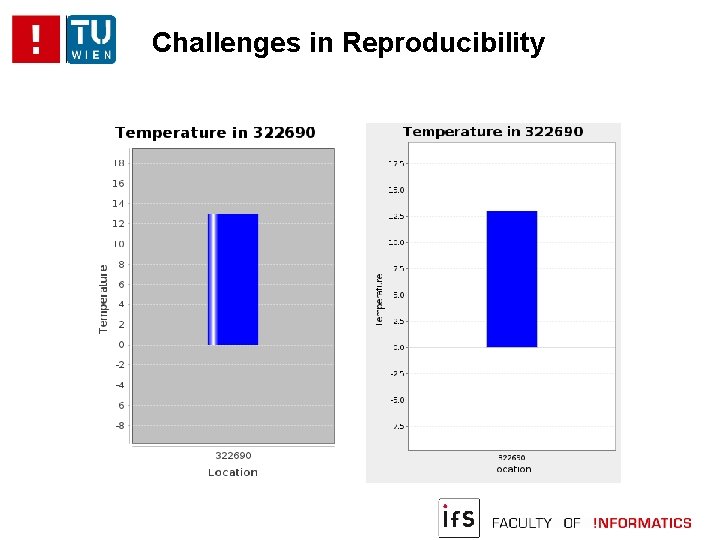

Challenges in Reproducibility

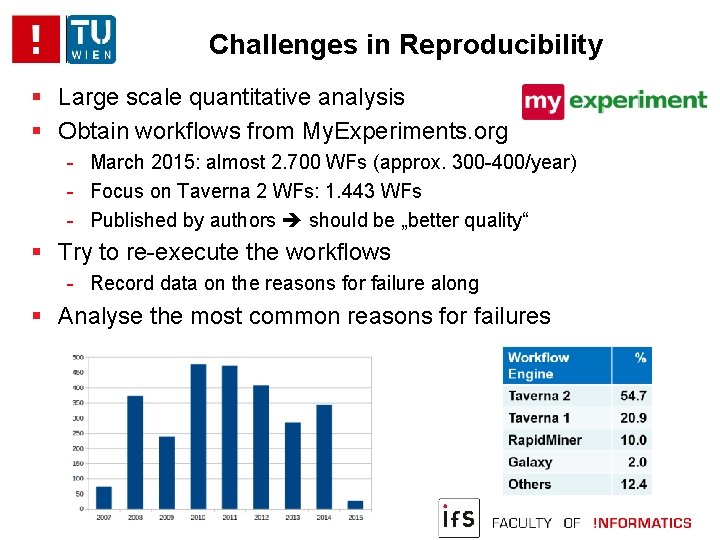

Challenges in Reproducibility
Large-scale quantitative analysis
Obtain workflows from myExperiment.org
- March 2015: almost 2,700 workflows (approx. 300-400 per year)
- Focus on Taverna 2 workflows: 1,443 workflows
- Published by their authors, so they should be of “better quality”
Try to re-execute the workflows
- Record data on the reasons for failure along the way
Analyse the most common reasons for failures

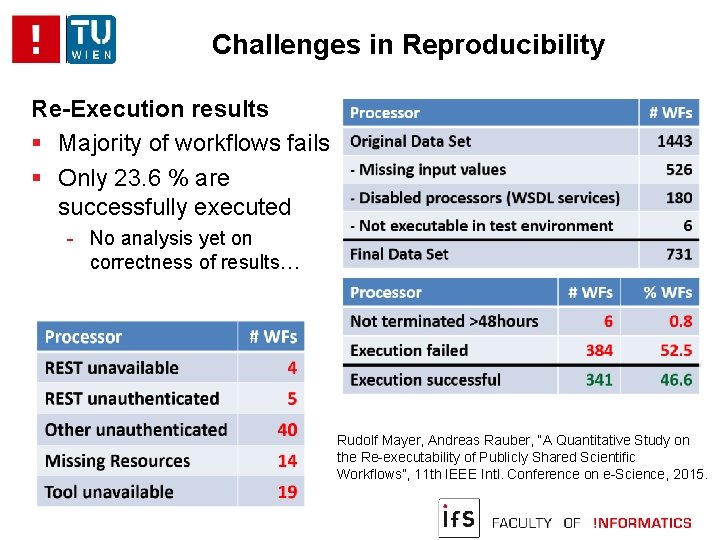

Challenges in Reproducibility
Re-execution results
The majority of workflows fail
Only 23.6% are successfully executed
- No analysis yet on the correctness of results…
Rudolf Mayer, Andreas Rauber, “A Quantitative Study on the Re-executability of Publicly Shared Scientific Workflows”, 11th IEEE Intl. Conference on e-Science, 2015.

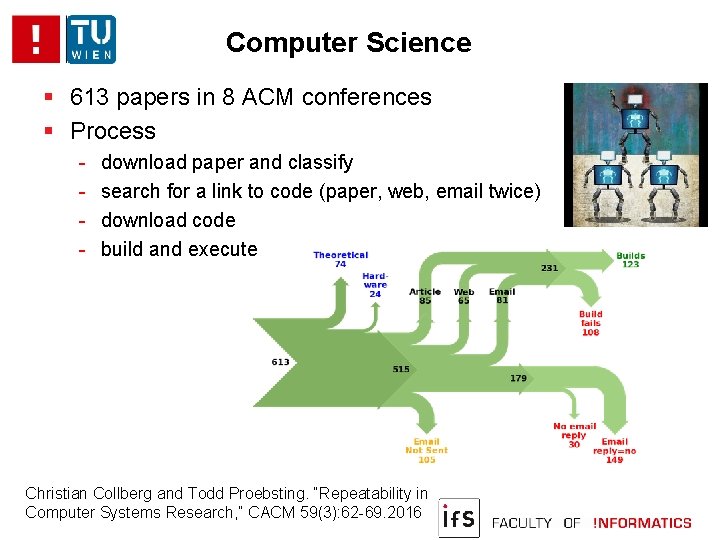

Computer Science
613 papers in 8 ACM conferences
Process
- download paper and classify
- search for a link to code (paper, web, email twice)
- download code
- build and execute
Christian Collberg and Todd Proebsting. “Repeatability in Computer Systems Research,” CACM 59(3): 62-69, 2016.

Challenges in Reproducibility In a nutshell – and another aspect of reproducibility: Source: xkcd

Reproducibility – solved! (?)
Provide source code, parameters, data, …
Wrap it up in a container/virtual machine (LXC, …)
Why do we want reproducibility?
Which levels of reproducibility are there?
What do we gain by different levels of reproducibility?

Reproducibility – solved! (? ) Dagstuhl Seminar: Reproducibility of Data-Oriented Experiments in e-Science January 2016, Dagstuhl, Germany

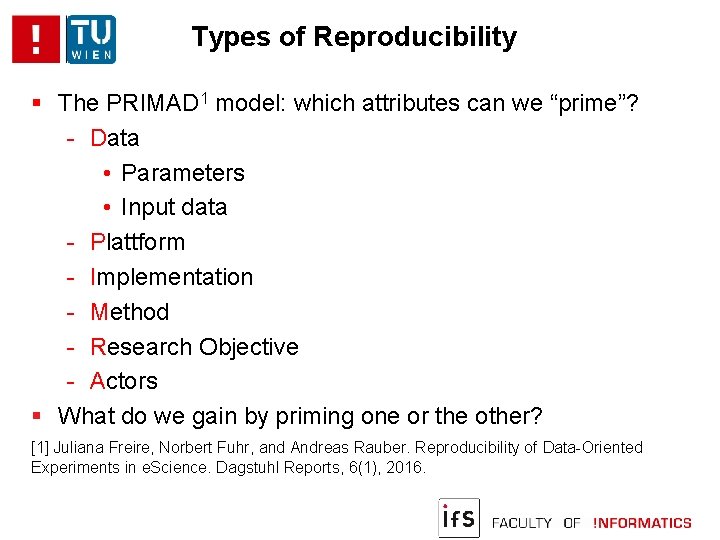

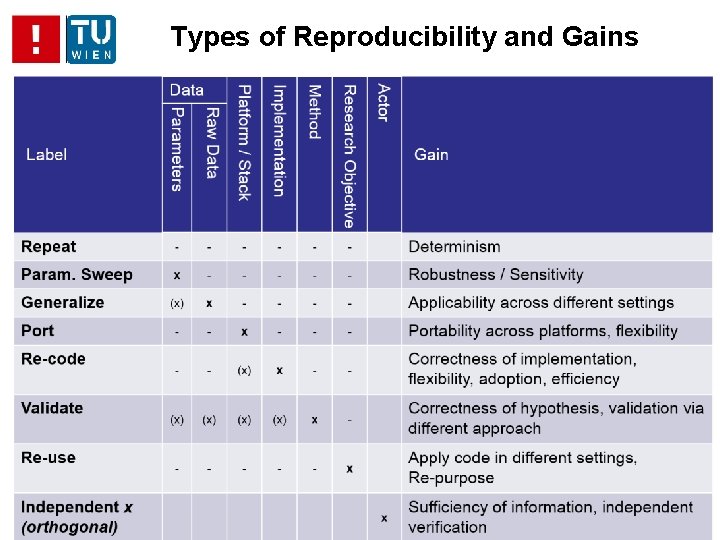

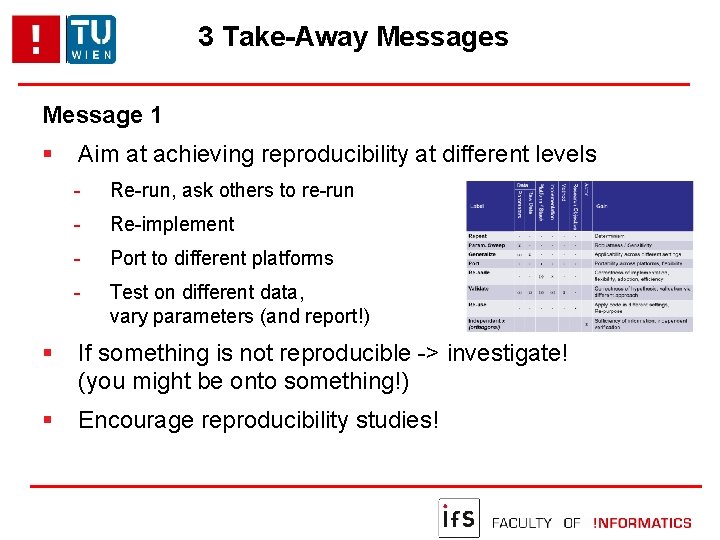

Types of Reproducibility
The PRIMAD model [1]: which attributes can we “prime”?
- Data
  • Parameters
  • Input data
- Platform
- Implementation
- Method
- Research Objective
- Actors
What do we gain by priming one or the other?
[1] Juliana Freire, Norbert Fuhr, and Andreas Rauber. Reproducibility of Data-Oriented Experiments in e-Science. Dagstuhl Reports, 6(1), 2016.
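The question of which attributes were “primed” between two runs can be made concrete with a small record type. The sketch below is an illustration under stated assumptions: the class `Experiment`, the function `primed`, and all field values are hypothetical and are not part of the PRIMAD report; only the attribute names follow the list above.

```python
from dataclasses import dataclass, fields, replace


@dataclass(frozen=True)
class Experiment:
    """One field per PRIMAD attribute; all values are free-text labels."""
    platform: str
    research_objective: str
    implementation: str
    method: str
    actor: str
    parameters: str   # Data: parameters
    input_data: str   # Data: input data


def primed(original: Experiment, rerun: Experiment) -> list[str]:
    """Return the names of the attributes that differ between two runs."""
    return [f.name for f in fields(Experiment)
            if getattr(original, f.name) != getattr(rerun, f.name)]


# Hypothetical description of an original study.
original = Experiment(
    platform="Linux workstation",
    research_objective="genre classification",
    implementation="Java feature extractor",
    method="rhythm features + SVM",
    actor="original authors",
    parameters="default",
    input_data="benchmark collection A",
)

# A port to another language on another machine primes two attributes.
ported = replace(original, platform="Windows laptop",
                 implementation="Matlab feature extractor")

print(primed(original, ported))
```

Recording a run in this form makes it explicit what kind of reproducibility study was performed, for instance a port (platform and implementation changed) as opposed to a test on new data (input data changed).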

Types of Reproducibility and Gains

Reproducibility Papers
Aim for reproducibility: for one’s own sake – and as chairs of conference tracks, editors, reviewers, supervisors, …
- Review of reproducibility of submitted work (material provided)
- Encouraging reproducibility studies
- (Messages to stakeholders in Dagstuhl Report)
Consistency of results, not identity!
Reproducibility studies and papers
- Not just re-running code / a virtual machine
- When is a reproducibility paper worth the effort / worth being published?

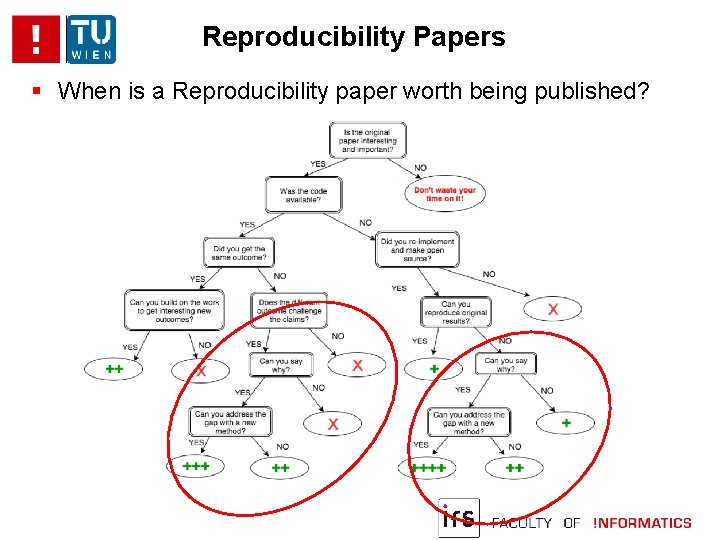

Reproducibility Papers When is a Reproducibility paper worth being published?

Learning from Non-Reproducibility
Do we always want reproducibility?
- Scientifically speaking: yes!
Research is addressing challenges:
- Looking for and learning from non-reproducibility!
Non-reproducibility arises if
- Some (unknown) aspect of a study influences results
- Technical: parameter sweep, bug in code, OS, … -> fix it!
- Non-technical: input data! (specifically: “the user”)

Learning from Non-Reproducibility
Challenges in MIR – “things don’t seem to work”
VirtualBox, GitHub, <your favourite tool> are starting points
Same features, same algorithm, different data ->
Same data, different listeners ->
Understanding “the rest”:
- Isolating unknown influence factors
- Generating hypotheses
- Verifying these to understand the “entire system”, cultural and other biases, …
Benchmarks and Meta-Studies

Déjà vu…
http://www.plosone.org/article/info%3Adoi%2F10.1371%2Fjournal.pone.0038234

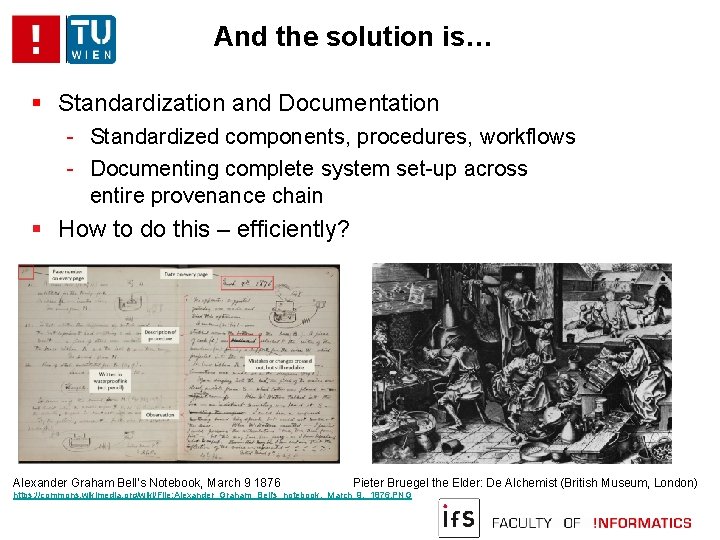

And the solution is…
Standardization and Documentation
- Standardized components, procedures, workflows
- Documenting complete system set-up across entire provenance chain
How to do this – efficiently?
Alexander Graham Bell’s Notebook, March 9 1876
Pieter Bruegel the Elder: De Alchemist (British Museum, London)
https://commons.wikimedia.org/wiki/File:Alexander_Graham_Bell's_notebook,_March_9,_1876.PNG

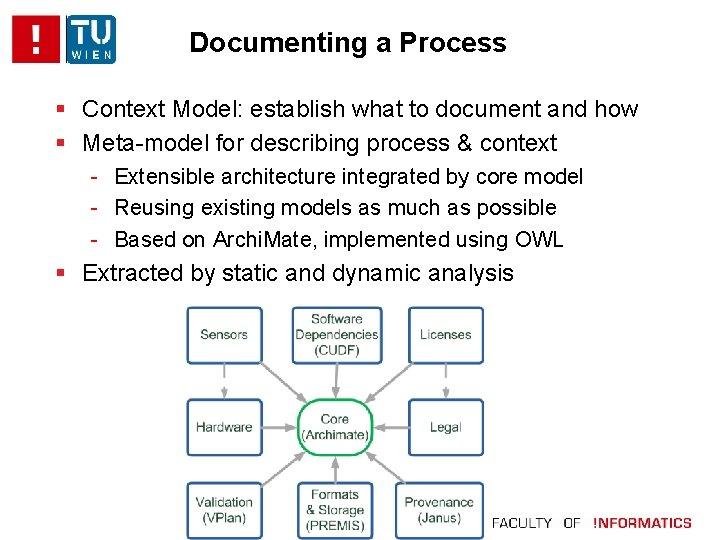

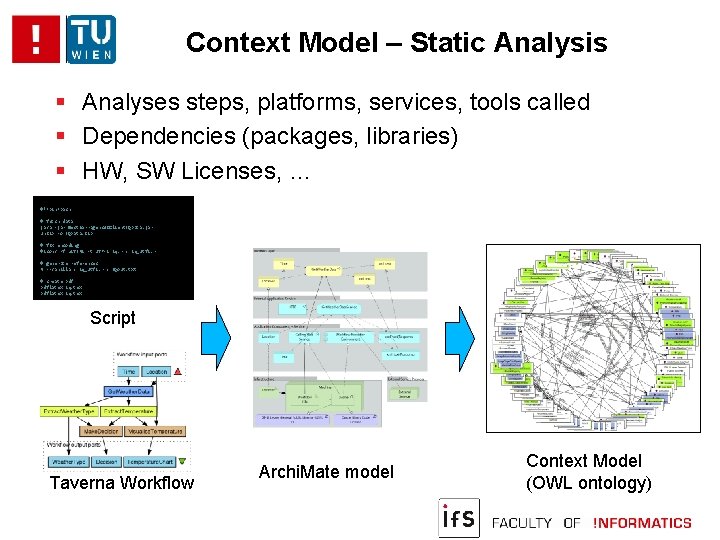

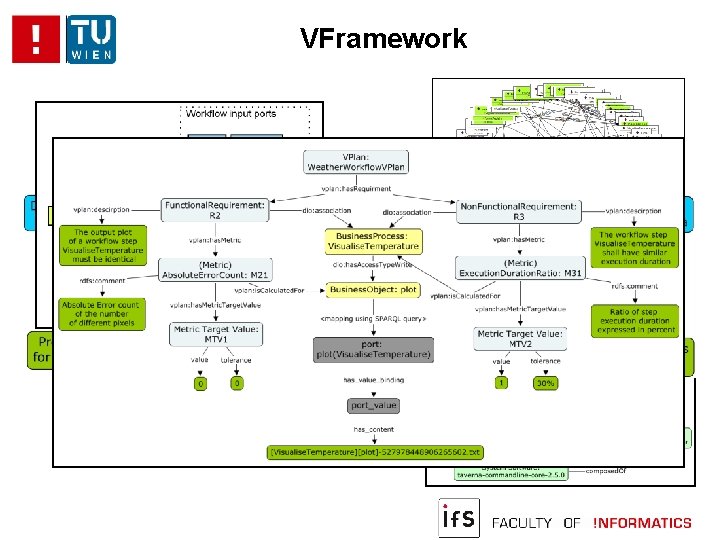

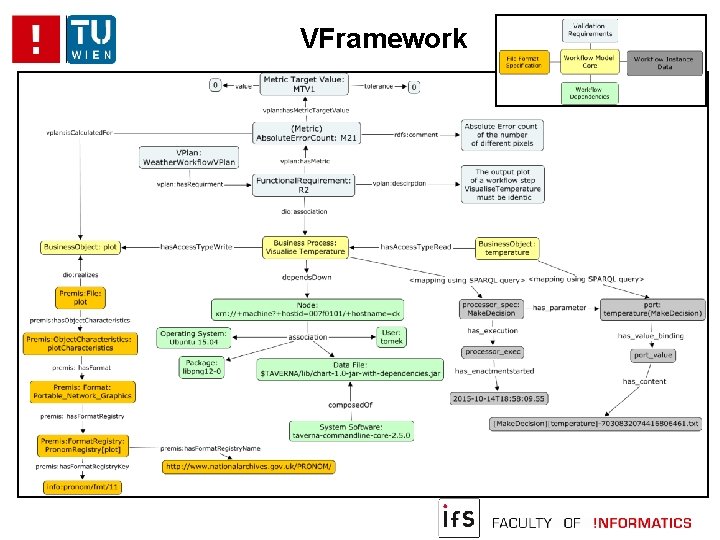

Documenting a Process
Context Model: establish what to document and how
Meta-model for describing process & context
- Extensible architecture integrated by core model
- Reusing existing models as much as possible
- Based on ArchiMate, implemented using OWL
Extracted by static and dynamic analysis

Context Model – Static Analysis
Analyses steps, platforms, services, tools called
Dependencies (packages, libraries)
HW, SW
Licenses, …
Script:
    #!/bin/bash
    # fetch data
    java -jar GestBarragensWSClientIQData.jar
    unzip -o IQData.zip
    # fix encoding
    #iconv -f LATIN1 -t UTF-8 iq.r > iq_utf8.r
    # generate references
    R --vanilla < iq_utf8.r > IQout.txt
    # create
    pdflatex iq.tex
Figure labels: Script, Taverna Workflow, ArchiMate model, Context Model (OWL ontology)

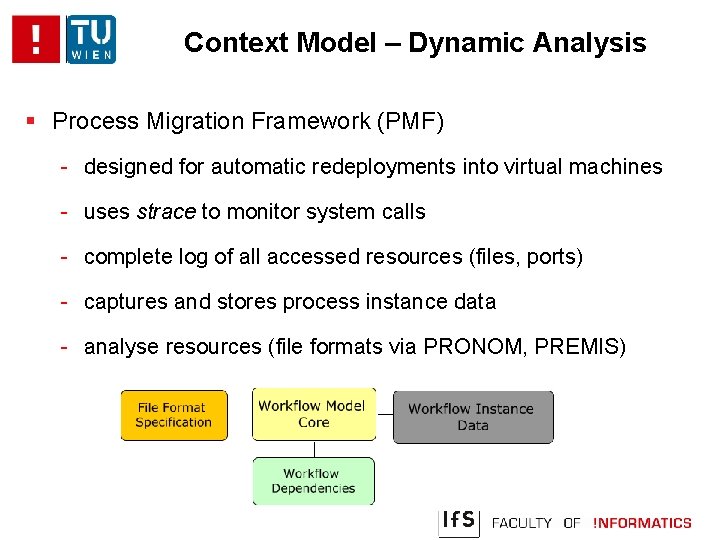

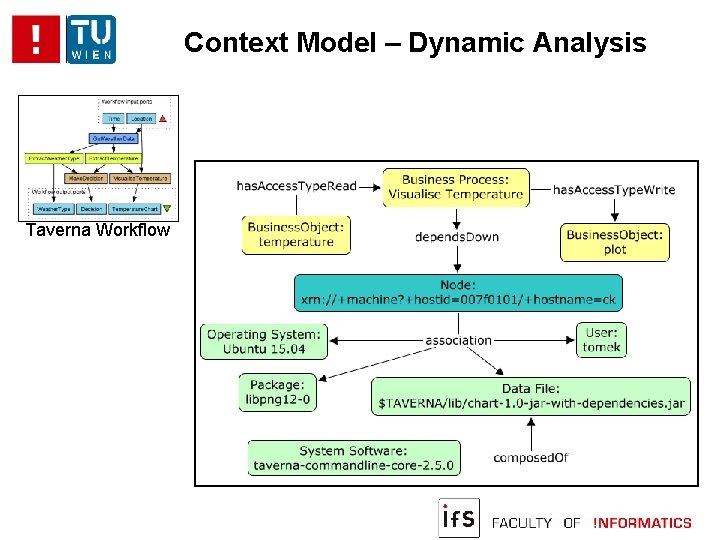

Context Model – Dynamic Analysis
Process Migration Framework (PMF)
- designed for automatic redeployments into virtual machines
- uses strace to monitor system calls
- complete log of all accessed resources (files, ports)
- captures and stores process instance data
- analyse resources (file formats via PRONOM, PREMIS)
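The idea of deriving a list of accessed resources from monitored system calls can be sketched as follows. This is not the PMF implementation: the function `accessed_files`, the regular expression, and the sample log lines are assumptions made for illustration, and only successful `open`/`openat` calls are considered (ports and other resources are left out).

```python
import re

# Matches open/openat calls in strace output and captures the quoted path
# and the return value, e.g.
#   openat(AT_FDCWD, "/etc/hosts", O_RDONLY) = 3
# An optional leading number covers logs that prefix each line with a PID.
OPEN_CALL = re.compile(
    r'^(?:\d+\s+)?open(?:at)?\(.*?"([^"]+)".*\)\s+=\s+(-?\d+)'
)


def accessed_files(strace_lines):
    """Return the sorted list of paths that were opened successfully."""
    paths = set()
    for line in strace_lines:
        match = OPEN_CALL.match(line)
        # A negative return value means the call failed (e.g. ENOENT).
        if match and int(match.group(2)) >= 0:
            paths.add(match.group(1))
    return sorted(paths)


# Hypothetical excerpt of a system call log for the example script.
sample_log = [
    'openat(AT_FDCWD, "/etc/ld.so.cache", O_RDONLY|O_CLOEXEC) = 3',
    'openat(AT_FDCWD, "IQData.zip", O_RDONLY) = 4',
    'openat(AT_FDCWD, "missing.cfg", O_RDONLY) = -1 ENOENT (No such file or directory)',
    'read(4, "PK\\3\\4"..., 4096) = 4096',
]

print(accessed_files(sample_log))
```

A list obtained this way complements static analysis, because it records what a concrete run actually touched, not only what the script mentions.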

Context Model – Dynamic Analysis Taverna Workflow

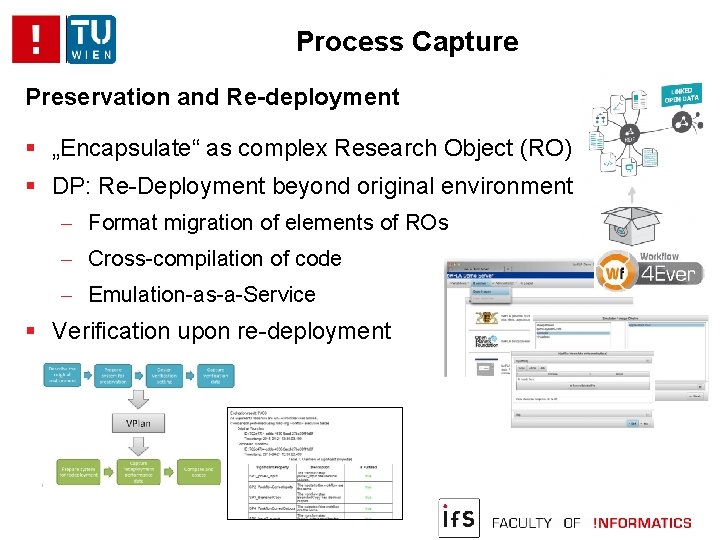

Process Capture Preservation and Re-deployment
“Encapsulate” as complex Research Object (RO)
DP: Re-Deployment beyond original environment
- Format migration of elements of ROs
- Cross-compilation of code
- Emulation-as-a-Service
Verification upon re-deployment

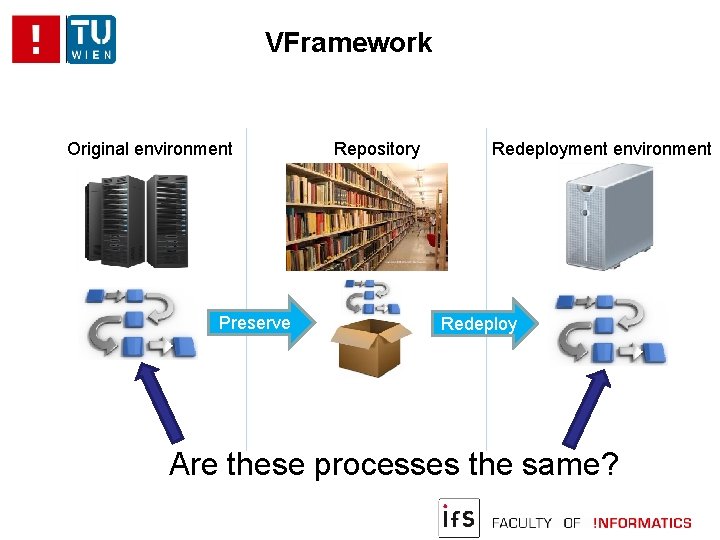

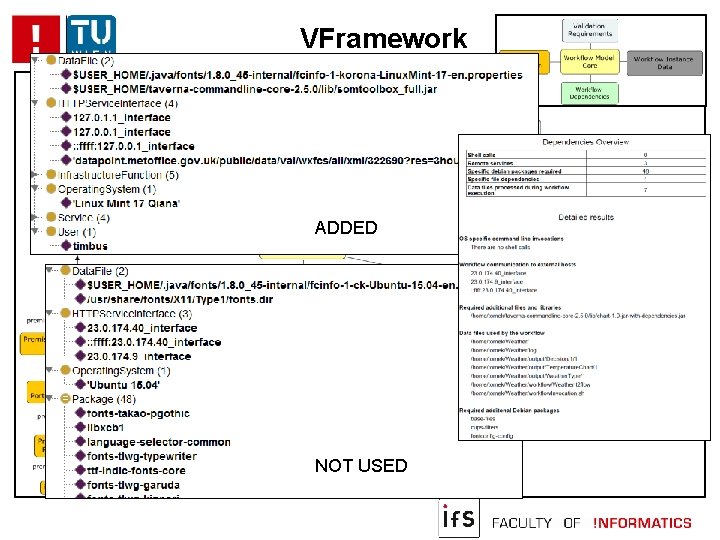

VFramework
Original environment -> Preserve -> Repository -> Redeploy -> Redeployment environment
Are these processes the same?

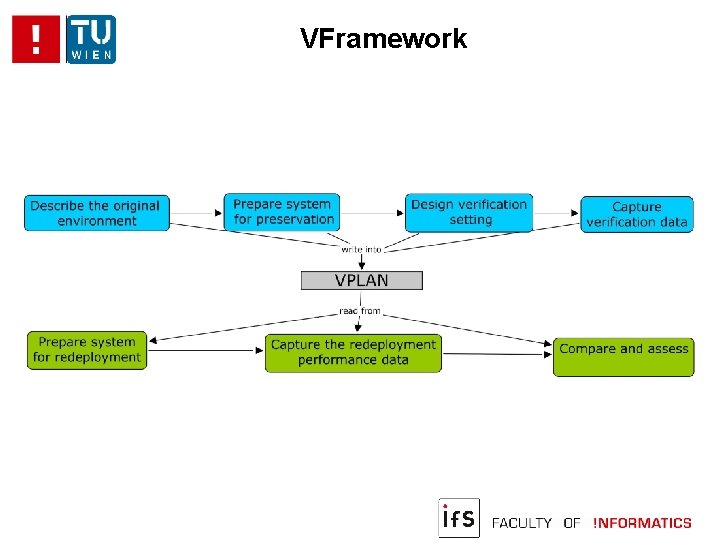

VFramework

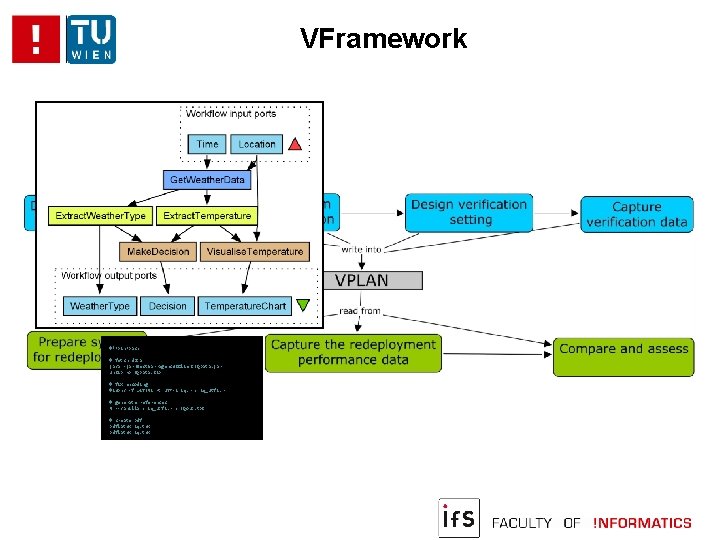

VFramework #!/bin/bash # fetch data java -jar Gest. Barragens. WSClient. IQData. jar unzip -o IQData. zip # fix encoding #iconv -f LATIN 1 -t UTF-8 iq. r > iq_utf 8. r # generate references R --vanilla < iq_utf 8. r > IQout. txt # create pdflatex iq. tex

VFramework #!/bin/bash # fetch data java -jar Gest. Barragens. WSClient. IQData. jar unzip -o IQData. zip # fix encoding #iconv -f LATIN 1 -t UTF-8 iq. r > iq_utf 8. r # generate references R --vanilla < iq_utf 8. r > IQout. txt # create pdflatex iq. tex

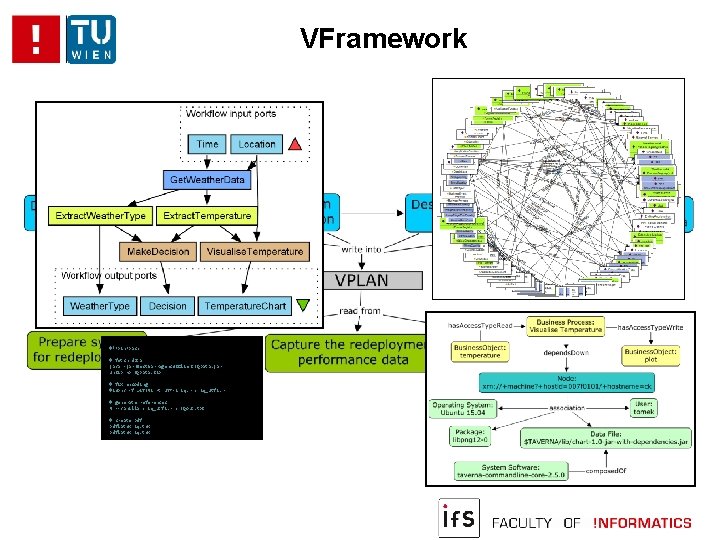

VFramework #!/bin/bash # fetch data java -jar Gest. Barragens. WSClient. IQData. jar unzip -o IQData. zip # fix encoding #iconv -f LATIN 1 -t UTF-8 iq. r > iq_utf 8. r # generate references R --vanilla < iq_utf 8. r > IQout. txt # create pdflatex iq. tex

VFramework #!/bin/bash # fetch data java -jar Gest. Barragens. WSClient. IQData. jar unzip -o IQData. zip # fix encoding #iconv -f LATIN 1 -t UTF-8 iq. r > iq_utf 8. r # generate references R --vanilla < iq_utf 8. r > IQout. txt # create pdflatex iq. tex

VFramework ADDED #!/bin/bash # fetch data java -jar Gest. Barragens. WSClient. IQData. jar unzip -o IQData. zip # fix encoding #iconv -f LATIN 1 -t UTF-8 iq. r > iq_utf 8. r # generate references R --vanilla < iq_utf 8. r > IQout. txt # create pdflatex iq. tex NOT USED
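The ADDED and NOT USED labels suggest a comparison between what the process used in the original environment and what it used after redeployment. The following sketch shows one simple way to compute such a comparison; it is an assumption about the general idea, not the VFramework's actual procedure, and the function `compare_runs` and both resource lists are hypothetical.

```python
def compare_runs(original, redeployed):
    """Compare the resources used by two executions of the same process."""
    original, redeployed = set(original), set(redeployed)
    return {
        "added": sorted(redeployed - original),     # only in the redeployment
        "not_used": sorted(original - redeployed),  # only in the original run
        "common": sorted(original & redeployed),
    }


# Hypothetical resource lists, e.g. as captured by monitoring each run.
original_run = ["IQData.zip", "iq.r", "iq.tex", "/usr/lib/R/bin/R"]
redeployed_run = ["IQData.zip", "iq.tex", "/usr/lib/R/bin/R", "/usr/lib/libfoo.so"]

report = compare_runs(original_run, redeployed_run)
print(report["added"], report["not_used"])
```

An empty "added" and "not_used" list would be one piece of evidence that the two processes are the same; non-empty lists point to the places that need investigation.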

Data and Data Citation
So far focus on the process
Processes work with data
Data as a “1st-class citizen” in science
We need to be able to
- preserve data and keep it accessible
- cite data to give credit and show which data was used
- identify precisely the data used in a study/process for reproducibility, evaluating progress, …
Why is this difficult? (after all, it’s being done…)

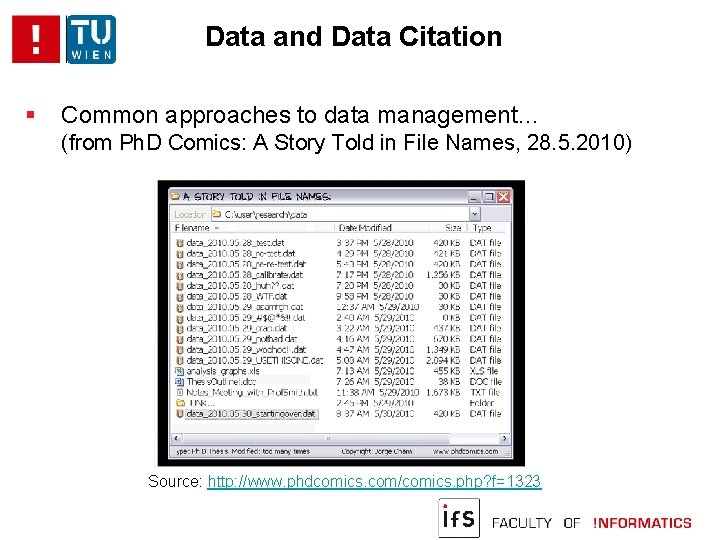

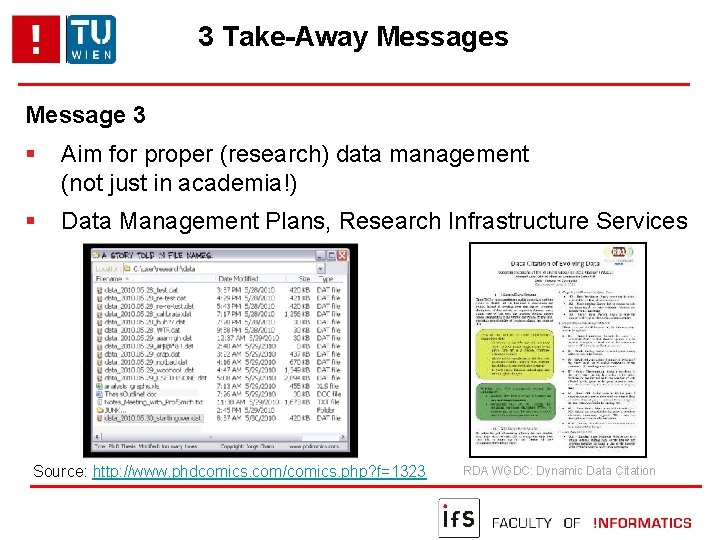

Data and Data Citation
Common approaches to data management…
(from PhD Comics: A Story Told in File Names, 28.5.2010)
Source: http://www.phdcomics.com/comics.php?f=1323

Identification of Dynamic Data
Citable datasets have to be static
- Fixed set of data, no changes: no corrections to errors, no new data being added
But: (research) data is dynamic
- Adding new data, correcting errors, enhancing data quality, …
- Changes sometimes highly dynamic, at irregular intervals
Current approaches
- Identifying entire data stream, without any versioning
- Using “accessed at” date
- “Artificial” versioning by identifying batches of data (e.g. annual), aggregating changes into releases (time-delayed!)
Would like to identify precisely the data as it existed at a specific point in time
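The wish stated above, identifying data as it existed at a specific point in time, can be met by never overwriting records and keeping a validity interval per record version. The sketch below uses an in-memory SQLite database; the table `measurements`, its columns, the function `as_of`, and all values are hypothetical and only illustrate the principle.

```python
import sqlite3

db = sqlite3.connect(":memory:")
# Each change closes the old row version and opens a new one, so nothing is
# ever overwritten. Table and column names are hypothetical.
db.execute("""CREATE TABLE measurements (
    record_id  INTEGER,
    value      REAL,
    valid_from TEXT NOT NULL,   -- ISO 8601 timestamp of insertion
    valid_to   TEXT             -- NULL while the version is current
)""")
db.executemany("INSERT INTO measurements VALUES (?, ?, ?, ?)", [
    (1, 10.0, "2015-01-01T00:00:00", "2015-06-01T00:00:00"),  # later corrected
    (1, 10.5, "2015-06-01T00:00:00", None),                   # the correction
    (2, 20.0, "2015-03-01T00:00:00", None),
    (3, 30.0, "2015-09-01T00:00:00", None),                   # added later
])


def as_of(timestamp):
    """Return the rows exactly as they existed at the given point in time."""
    return db.execute(
        """SELECT record_id, value FROM measurements
           WHERE valid_from <= ? AND (valid_to IS NULL OR valid_to > ?)
           ORDER BY record_id""",
        (timestamp, timestamp),
    ).fetchall()


print(as_of("2015-04-01T00:00:00"))  # state before the correction
print(as_of("2015-12-01T00:00:00"))  # current state
```

ISO 8601 timestamps are compared as strings here, which works because that format sorts chronologically. Unlike batch releases, this keeps every intermediate state addressable without delay.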

Granularity of Data Identification
What about the granularity of data to be identified?
- Databases collect enormous amounts of data over time
- Researchers use specific subsets of data
- Need to identify precisely the subset used
Current approaches
- Storing a copy of subset as used in study -> scalability
- Citing entire dataset, providing textual description of subset -> imprecise (ambiguity)
- Storing list of record identifiers in subset -> scalability, not for arbitrary subsets (e.g. when not entire record selected)
Would like to be able to identify precisely the subset of (dynamic) data used in a process

RDA WG Data Citation
Research Data Alliance WG on Data Citation: Making Dynamic Data Citeable
WG officially endorsed in March 2014
- Concentrating on the problems of large, dynamic (changing) datasets
- Focus! Identification of data! Not: PID systems, metadata, citation string, attribution, …
- Liaise with other WGs and initiatives on data citation (CODATA, DataCite, Force11, …)
- https://rd-alliance.org/working-groups/data-citation-wg.html

Making Dynamic Data Citeable
Data Citation: Data + Means-of-access
Data time-stamped & versioned (aka history)
Researcher creates working-set via some interface
Access: assign PID to QUERY, enhanced with
- Time-stamping for re-execution against versioned DB
- Re-writing for normalization, unique-sort, mapping to history
- Hashing result-set: verifying identity/correctness
leading to landing page
Andreas Rauber, Ari Asmi, Dieter van Uytvanck and Stefan Proell. Identification of Reproducible Subsets for Data Citation, Sharing and Re-Use. Bulletin of IEEE Technical Committee on Digital Libraries (TCDL), vol. 12, 2016. http://www.ieee-tcdl.org/Bulletin/current/papers/IEEE-TCDL-DC-2016_paper_1.pdf
Stefan Pröll and Andreas Rauber. Scalable Data Citation in Dynamic Large Databases: Model and Reference Implementation. In IEEE Intl. Conf. on Big Data 2013 (IEEE BigData 2013), 2013. http://www.ifs.tuwien.ac.at/~andi/publications/pdf/pro_ieeebigdata13.pdf
Prototype for CSV: http://datacitation.eu/
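The three enhancements listed above (time-stamping, re-writing with a unique sort, hashing the result set) can be illustrated with a small record that a query store might keep. This is a sketch under assumptions, not the reference implementation: the functions `result_hash` and `cite`, the record layout, the whitespace-only normalisation, and the identifier `pid:example-1` are all hypothetical.

```python
import hashlib
import json


def result_hash(rows):
    """Hash a result set after imposing a unique sort order on it."""
    # Sorting first makes the hash independent of the order in which the
    # database happened to return the rows.
    canonical = json.dumps(sorted(rows), separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def cite(query, executed_at, rows, pid):
    """Build the record a query store might keep for one citation."""
    return {
        "pid": pid,                            # placeholder identifier
        "query": " ".join(query.split()),      # crude whitespace normalisation
        "executed_at": executed_at,            # time-stamp for re-execution
        "result_hash": result_hash(rows),      # for later verification
    }


# The same subset, returned in two different row orders (hypothetical data).
rows_a = [(2, 20.0), (1, 10.0)]
rows_b = [(1, 10.0), (2, 20.0)]

record = cite("SELECT  record_id, value\n FROM measurements",
              "2015-04-01T00:00:00", rows_a, "pid:example-1")

print(record["query"])
print(record["result_hash"] == result_hash(rows_b))
```

When the stored query is later re-executed against the versioned data with the stored time-stamp, recomputing the hash and comparing it with `result_hash` verifies that the same subset was retrieved, without storing the subset itself.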

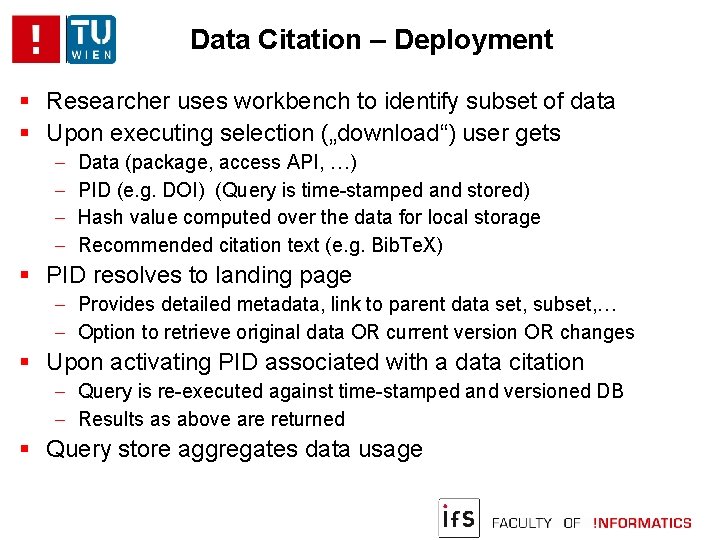

Data Citation – Deployment
Researcher uses workbench to identify subset of data
Upon executing selection (“download”) user gets
- Data (package, access API, …)
- PID (e.g. DOI) (Query is time-stamped and stored)
- Hash value computed over the data for local storage
- Recommended citation text (e.g. BibTeX)
PID resolves to landing page
- Provides detailed metadata, link to parent data set, subset, …
- Option to retrieve original data OR current version OR changes
Upon activating PID associated with a data citation
- Query is re-executed against time-stamped and versioned DB
- Results as above are returned
Query store aggregates data usage
Notes:
- The query string provides excellent provenance information on the data set!
- This is an important advantage over traditional approaches relying on, e.g., storing a list of identifiers or a DB dump!
- Identify which parts of the data are used. If data changes, identify which queries (studies) are affected.

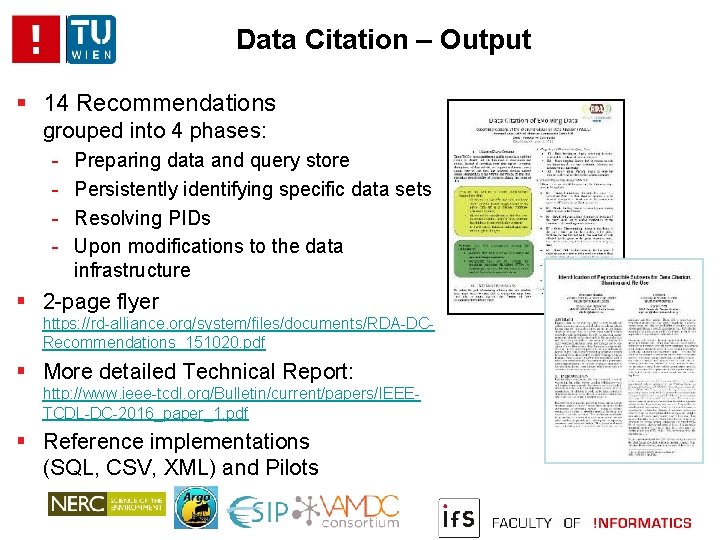

Data Citation – Output
14 Recommendations grouped into 4 phases:
- Preparing data and query store
- Persistently identifying specific data sets
- Resolving PIDs
- Upon modifications to the data infrastructure
2-page flyer: https://rd-alliance.org/system/files/documents/RDA-DCRecommendations_151020.pdf
More detailed Technical Report: http://www.ieee-tcdl.org/Bulletin/current/papers/IEEE-TCDL-DC-2016_paper_1.pdf
Reference implementations (SQL, CSV, XML) and Pilots

Join RDA and Working Group
If you are interested in joining the discussion, contributing a pilot, wish to establish a data citation solution, …
Register for the RDA WG on Data Citation:
- Website: https://rd-alliance.org/working-groups/data-citation-wg.html
- Mailing list: https://rd-alliance.org/node/141/archive-post-mailinglist
- Web Conferences: https://rd-alliance.org/webconference-data-citation-wg.html
- List of pilots: https://rd-alliance.org/groups/data-citation-wg/wiki/collaboration-environments.html

3 Take-Away Messages
Message 1: Aim at achieving reproducibility at different levels
- Re-run, ask others to re-run
- Re-implement
- Port to different platforms
- Test on different data, vary parameters (and report!)
If something is not reproducible -> investigate! (you might be onto something!)
Encourage reproducibility studies!

3 Take-Away Messages
Message 2: Aim for better procedures and documentation
Document the research process, environment, interim results, … (preferably automatically, 80:20, …)
Source: xkcd
Pieter Bruegel the Elder: De Alchemist (British Museum, London)
Research Objects, Context Models, VFramework

3 Take-Away Messages
Message 3: Aim for proper (research) data management (not just in academia!)
Data Management Plans, Research Infrastructure Services
Source: http://www.phdcomics.com/comics.php?f=1323
RDA WGDC: Dynamic Data Citation

Summary
Trustworthy and efficient e-Science
Need to move beyond preserving code + data
Need to move beyond the focus on description
Capture process and entire execution context
Precisely identify data used in process
Verification of re-execution
Data and process re-use as basis for data-driven science
- evidence
- investment
- efficiency
Trust!!

Summary
Preaching and eating…
Do we do all this in our lab for our experiments? No!
Researchers (particularly in CS)
Institutions and Research Infrastructures (not yet?)

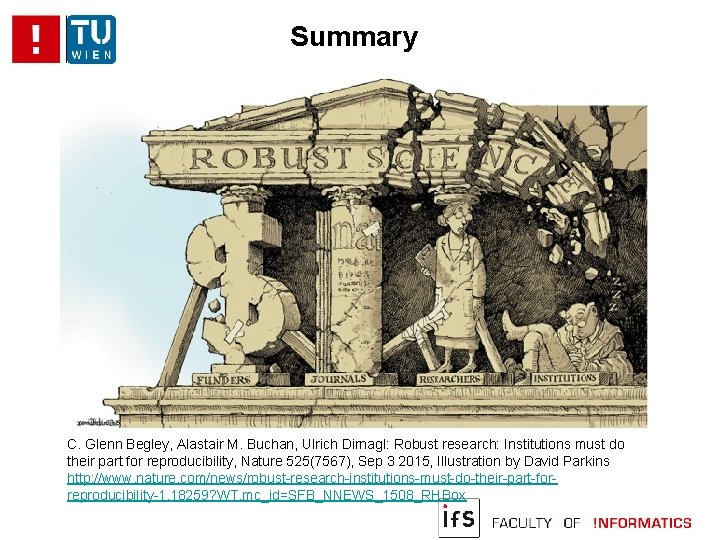

Summary
C. Glenn Begley, Alastair M. Buchan, Ulrich Dirnagl: Robust research: Institutions must do their part for reproducibility, Nature 525(7567), Sep 3 2015. Illustration by David Parkins.
http://www.nature.com/news/robust-research-institutions-must-do-their-part-for-reproducibility-1.18259?WT.mc_id=SFB_NNEWS_1508_RHBox

Acknowledgements
Johannes Binder, Rudolf Mayer, Tomasz Miksa, Stefan Pröll, Stephan Strodl, Marco Unterberger
TIMBUS
SBA: Secure Business Austria
RDA: Research Data Alliance WGDC

References
Juliana Freire, Norbert Fuhr, and Andreas Rauber. Reproducibility of Data-Oriented Experiments in e-Science. Dagstuhl Reports, 6(1), 2016.
Andreas Rauber, Ari Asmi, Dieter van Uytvanck and Stefan Proell. Identification of Reproducible Subsets for Data Citation, Sharing and Re-Use. Bulletin of IEEE Technical Committee on Digital Libraries (TCDL), vol. 12, 2016.
Andreas Rauber, Tomasz Miksa, Rudolf Mayer and Stefan Proell. Repeatability and Re-Usability in Scientific Processes: Process Context, Data Identification and Verification. In Proceedings of the 17th International Conference on Data Analytics and Management in Data Intensive Domains (DAMDID), 2015.
Tomasz Miksa, Rudolf Mayer and Andreas Rauber. Ensuring sustainability of web services dependent processes. International Journal of Computational Science and Engineering (IJCSE), Vol. 10, No. 1/2, pp. 70-81, 2015.
Rudolf Mayer and Andreas Rauber. A Quantitative Study on the Re-executability of Publicly Shared Scientific Workflows. 11th IEEE Intl. Conference on e-Science, 2015.
Rudolf Mayer, Tomasz Miksa and Andreas Rauber. Ontologies for describing the context of scientific experiment processes. 10th IEEE Intl. Conference on e-Science, 2014.
Tomasz Miksa, Stefan Proell, Rudolf Mayer, Stephan Strodl, Ricardo Vieira, Jose Barateiro and Andreas Rauber. Framework for verification of preserved and redeployed processes. 10th International Conference on Preservation of Digital Objects (IPRES 2013), 2013.
Tomasz Miksa, Stephan Strodl and Andreas Rauber. Process Management Plans. International Journal of Digital Curation, Vol. 9, No. 1, pp. 83-97, 2014.

Thank you!
http://www.ifs.tuwien.ac.at/imp
