Reliability and Validity: what is measured and how well

Reliability

- Consistency: Does the test agree with itself?
- Stability: Does the test agree with itself over time?
- Agreement: Do different raters agree with each other?

Consistency Reliability

- Equivalent forms reliability: correlation between the scores on two parallel forms of a test.
- Internal consistency reliability: correlation between half sections of the test (Split Half), or between all of the items (Internal Consistency).

Stability Reliability

- Test-Retest Reliability
  - The same test is given at two different administrations to the same group of respondents.
  - Correlation between time 1 and time 2.

Agreement Reliability

- Inter-Rater Reliability
  - Correlation between raters
  - Correlation between rater and expert
  - % agreement between raters
  - % agreement between rater and expert
  - Chance corrected methods (Kappa)
  - Variance partitioning methods
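
A small sketch contrasting raw percent agreement with a chance corrected index. It assumes two raters and nominal categories, and takes "Kappa" to mean Cohen's kappa. The ratings are invented, and `percent_agreement` and `cohens_kappa` are hypothetical helper names.

```python
from collections import Counter

def percent_agreement(a, b):
    """Proportion of cases on which the two raters give the same rating."""
    return sum(x == y for x, y in zip(a, b)) / len(a)

def cohens_kappa(a, b):
    """Agreement corrected for the agreement expected by chance."""
    n = len(a)
    p_observed = percent_agreement(a, b)
    counts_a, counts_b = Counter(a), Counter(b)
    # Chance agreement: product of each rater's marginal proportions,
    # summed over categories.
    p_chance = sum(counts_a[c] * counts_b[c] for c in counts_a) / n**2
    return (p_observed - p_chance) / (1 - p_chance)

# Hypothetical ratings of ten cases by two raters.
rater_a = ["yes", "yes", "no", "no", "yes", "no", "yes", "no", "yes", "yes"]
rater_b = ["yes", "no", "no", "no", "yes", "no", "yes", "yes", "yes", "yes"]

print(f"percent agreement: {percent_agreement(rater_a, rater_b):.2f}")
print(f"Cohen's kappa:     {cohens_kappa(rater_a, rater_b):.2f}")
```

Kappa comes out lower than percent agreement here because some of the raw agreement would be expected even if both raters guessed according to their own base rates.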

The Radio Signal Analogy

- Signal to noise ratio
- Total Signal Received = True Signal + Noise
- Signal / (Signal + Noise)

A Little Math About Reliability

- $X = T + E$
- Observed Score = True Score + Error
- $\sigma_X^2 = \sigma_T^2 + \sigma_E^2$
- The spread of Observed scores = The spread in True scores + The spread in Error scores.

A Little Math About Reliability

- $r_{xx'} = \sigma_T^2 / \sigma_X^2$
- $r_{xx'} = 1 - (\sigma_E^2 / \sigma_X^2)$
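
A toy numerical check of the two reliability formulas. The true and error scores are invented and deliberately chosen to be uncorrelated, which is the assumption under which the variances add. In practice true scores are not observable, so this only illustrates the algebra.

```python
from statistics import pvariance

# Hypothetical true scores and errors, constructed to be uncorrelated.
true_scores = [10, 10, 20, 20]
errors = [-1, 1, -1, 1]
observed = [t + e for t, e in zip(true_scores, errors)]  # X = T + E

var_t = pvariance(true_scores)
var_e = pvariance(errors)
var_x = pvariance(observed)

# Reliability computed both ways; the two forms should agree.
reliability_from_true = var_t / var_x
reliability_from_error = 1 - var_e / var_x

print(var_t, var_e, var_x)
print(reliability_from_true, reliability_from_error)
```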

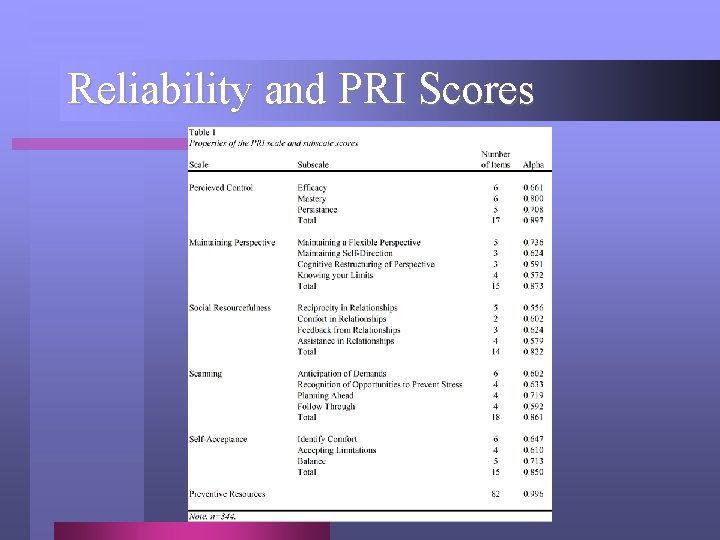

Reliability and PRI Scores

Validity

- Validity is the degree to which a test measures what it is intended to measure.
- Validity is the meaningfulness, appropriateness, and usefulness of the inferences made from the information a test provides.

Validity

- “Truth” and “Use”
- What is the test really measuring?
- For whom is the test appropriate?
- How should the information the test provides be used?

Constructs

- Assumptions we make when we use a test:
  - The subject possesses some true amount of the latent theoretical construct that the test is designed to measure.
  - Examples: Depression, Coping, Math Aptitude, etc.

Constructs

- The amount of the construct the subject possesses is not directly measurable.
- Observable behaviors can represent the latent construct (ability, trait, etc.) and can be measured.
- The goal is to measure as many of these observable behaviors as we can and to measure them accurately.

Types of Validity

- Content Validity
  - Does the test cover all of the intended content?
  - Measured by expert opinion.

Types of Validity

- Concurrent Validity
  - Does the test agree with other existing measures of the same construct?
  - Correlations between the test scores and scores from other measures.

Types of Validity

- Types of Concurrent Validity Evidence
  - Convergent Validity
  - Discriminant Validity

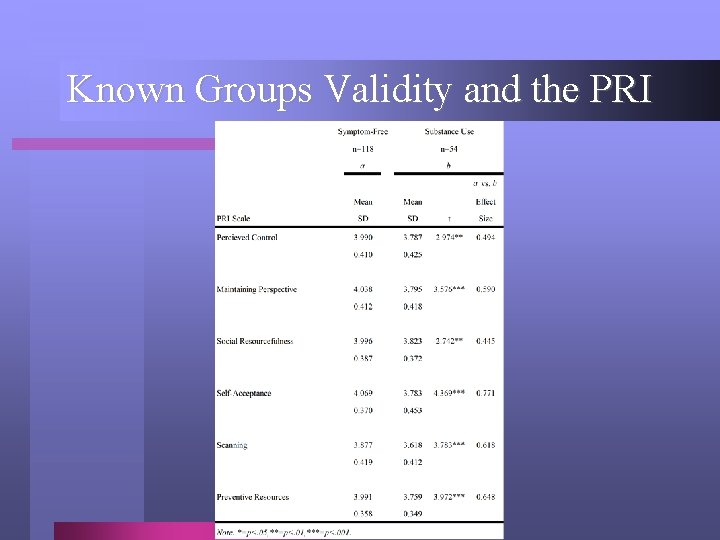

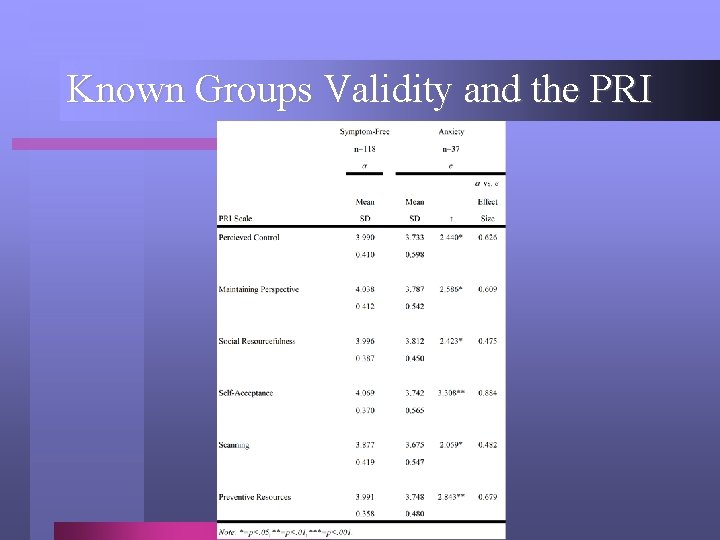

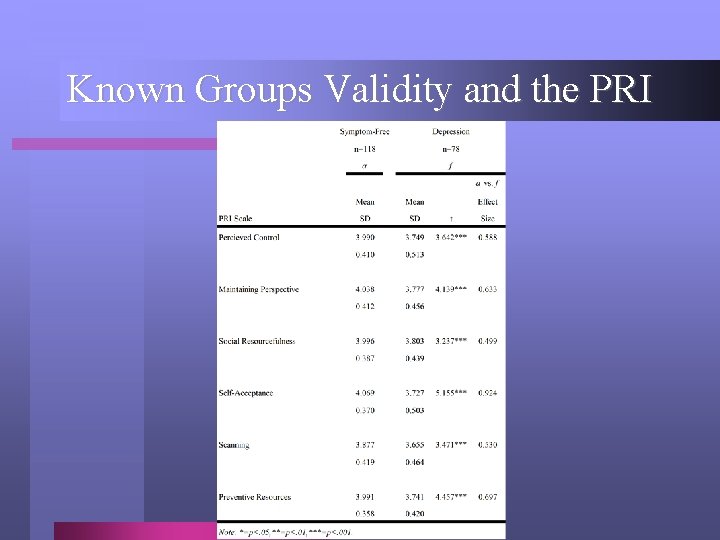

Types of Validity

- Known Groups Validity
  - Does the test distinguish between groups of subjects with known differences on the construct or related constructs?
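
One way to quantify whether a test separates two known groups is a standardized mean difference. The sketch below uses Cohen's d with a pooled standard deviation, a choice made here for illustration and not named on the slide. The group labels and scores are invented.

```python
from math import sqrt
from statistics import mean, variance

def cohens_d(group_a, group_b):
    """Standardized mean difference using the pooled sample variance."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)

# Hypothetical test scores for a group known to be high on the construct
# and a comparison group known to be low on it.
known_high = [22, 25, 19, 27, 24]
known_low = [10, 12, 9, 14, 11]

d = cohens_d(known_high, known_low)
print(f"Cohen's d = {d:.2f}")
```

A large difference in the expected direction supports known groups validity; a difference near zero would suggest the test does not distinguish the groups.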

Known Groups Validity and the PRI

Consequential Validity

- Is the test information useful for decision making?
- Does it have any unintended consequences?
- Can the information be misused?

Predictive Validity

- Can the test be used to predict future behavior?
- Like Concurrent Validity (both are Criterion Validity), but some time passes between the test and the criterion.
- Example: SAT and GPA.

Construct Validity

- All validity is really construct validity.
- Does it measure what it is intended to measure?
- Does the test agree with theory in the field?
- Does it reveal the true amount of the construct that a subject possesses?

Other Related Issues

- Tests should have Face Validity.
- Does the subject believe the test is measuring the intended construct?
- Some tests do not directly reveal what is being measured.

Other Related Issues

- Reliability and validity are properties of the information that a test provides, NOT of the test itself.
- The farther away you get from the original purpose for which a test was developed and validated, the weaker the inferences that can be made.

Other Related Issues

- No single indicator is sufficient for decision making. A battery of indicators, or sources of information, is always better.
- Reliability is a necessary condition for the correct use of a test, but not a sufficient one.

Other Related Issues

- Validity is the most important property of the information a test provides.
  - Consistent information.
  - Truthful information.
  - Useful information.

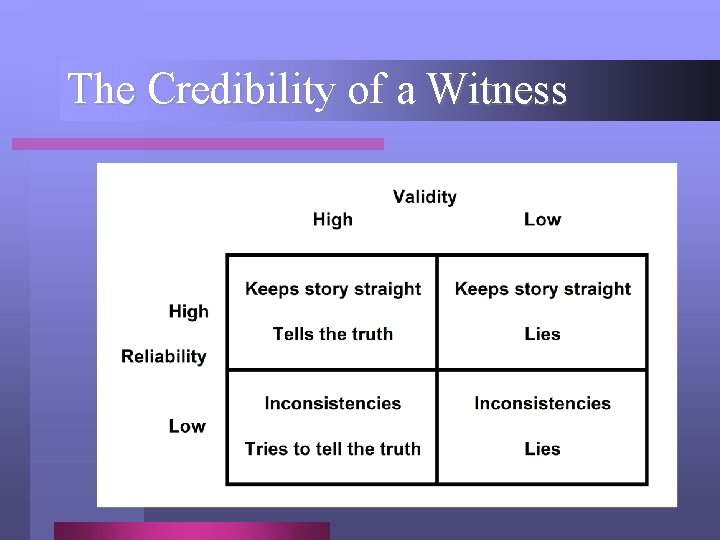

The Credibility of a Witness

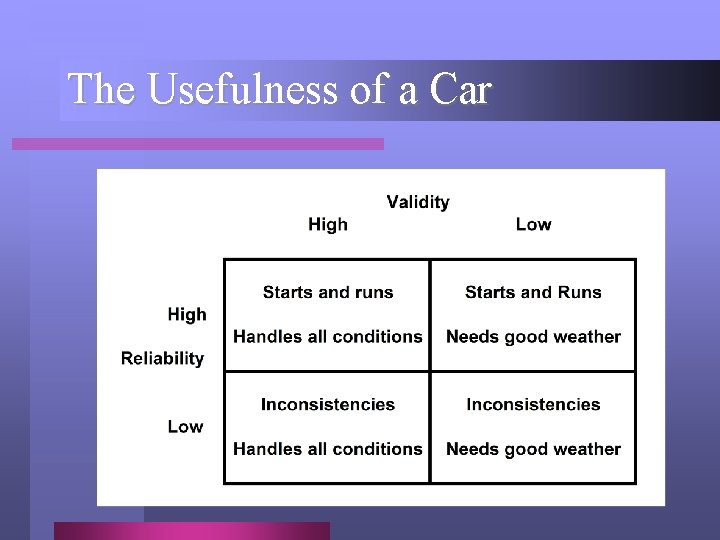

The Usefulness of a Car

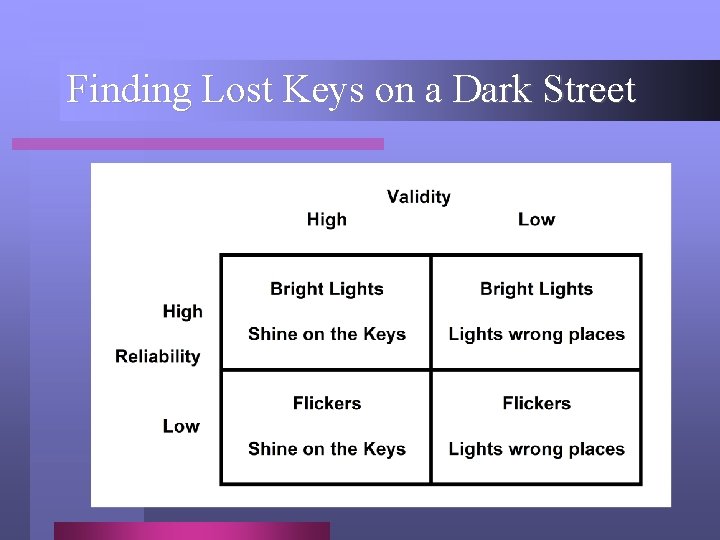

Finding Lost Keys on a Dark Street

- Slides: 30