
- Slides: 21

Relational Representations Daniel Lowd University of Oregon April 20, 2015

Caveats

- The purpose of this talk is to inspire meaningful discussion.
- I may be completely wrong.

My background: Markov logic networks, probabilistic graphical models

Q: Why relational representations? A: To model relational data.

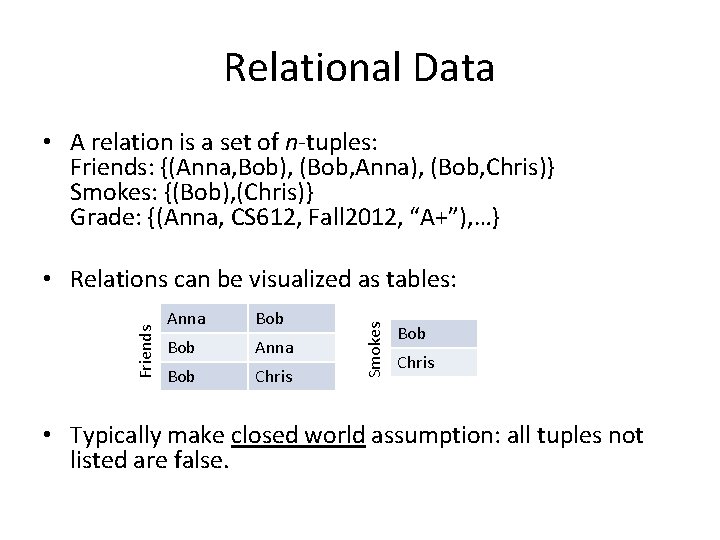

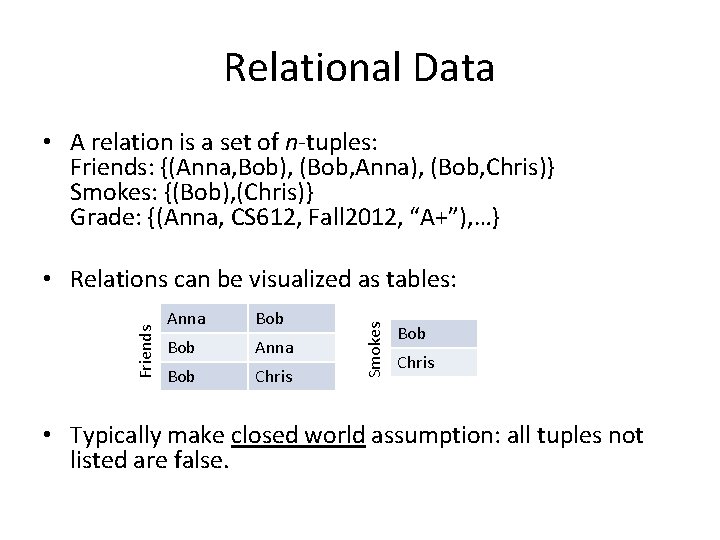

Relational Data

- A relation is a set of n-tuples:
  - Friends: {(Anna, Bob), (Bob, Anna), (Bob, Chris)}
  - Smokes: {(Bob), (Chris)}
  - Grade: {(Anna, CS 612, Fall 2012, “A+”), …}
- Relations can be visualized as tables. (Figure: the Friends and Smokes relations over Anna, Bob, and Chris; the Smokes table lists Bob and Chris.)
- Typically make closed world assumption: all tuples not listed are false.
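A minimal sketch of the set-of-tuples view, using the Friends and Smokes tuples from the slide. The helper name `holds` is an illustrative assumption, not something from the talk; it implements the closed world assumption by treating any unlisted tuple as false.

```python
# Relations as sets of tuples, copied from the slide.
friends = {("Anna", "Bob"), ("Bob", "Anna"), ("Bob", "Chris")}
smokes = {("Bob",), ("Chris",)}


def holds(relation, *args):
    """Closed-world lookup (hypothetical helper): a tuple is true only if listed."""
    return tuple(args) in relation


print(holds(friends, "Anna", "Bob"))   # listed, so true
print(holds(friends, "Chris", "Bob"))  # not listed, so false under closed world
print(holds(smokes, "Anna"))           # not listed, so false under closed world
```

Under an open world assumption, the last two lookups would instead be "unknown", which a plain set membership test cannot express.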

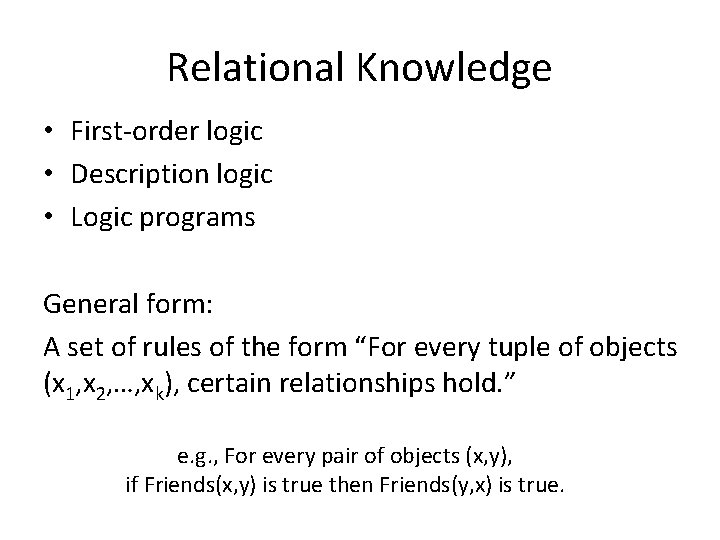

Relational Knowledge

- First-order logic
- Description logic
- Logic programs

General form: A set of rules of the form “For every tuple of objects (x1, x2, …, xk), certain relationships hold.”

E.g., for every pair of objects (x, y), if Friends(x, y) is true then Friends(y, x) is true.
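The symmetry rule can be checked against a concrete relation by enumerating every pair of objects. This is only a sketch: the three-person domain and the function name `symmetry_violations` are assumptions for illustration, and the Friends tuples are the ones from the Relational Data slide.

```python
from itertools import product

friends = {("Anna", "Bob"), ("Bob", "Anna"), ("Bob", "Chris")}
domain = ["Anna", "Bob", "Chris"]  # assumed set of objects


def symmetry_violations(relation, domain):
    """Return pairs (x, y) where Friends(x, y) holds but Friends(y, x) does not."""
    return sorted(
        (x, y)
        for x, y in product(domain, repeat=2)
        if (x, y) in relation and (y, x) not in relation
    )


violations = symmetry_violations(friends, domain)
print(violations)  # [('Bob', 'Chris')]
```

Read as a hard logical rule, the single violation makes this world inconsistent with the rule; the statistical version softens exactly this.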

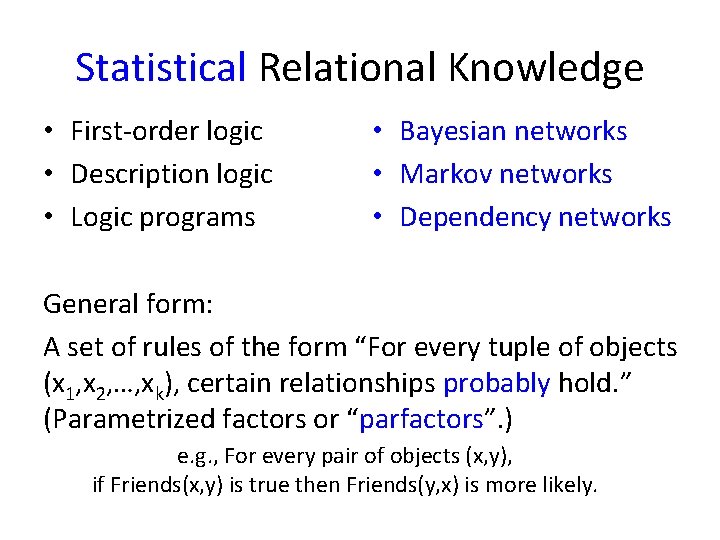

Statistical Relational Knowledge

- First-order logic
- Description logic
- Logic programs
- Bayesian networks
- Markov networks
- Dependency networks

General form: A set of rules of the form “For every tuple of objects (x1, x2, …, xk), certain relationships probably hold.” (Parametrized factors or “parfactors”.)

E.g., for every pair of objects (x, y), if Friends(x, y) is true then Friends(y, x) is more likely.
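One way to make "more likely" concrete is the log-linear form used by Markov logic networks, where a world's unnormalized score is exp(weight × number of satisfied groundings). The sketch below assumes that semantics; the weight of 1.5, the domain, and the function names are hypothetical choices for illustration.

```python
import math
from itertools import product


def satisfied_groundings(friends, domain):
    """Count pairs (x, y) for which Friends(x, y) => Friends(y, x) is satisfied."""
    return sum(
        1
        for x, y in product(domain, repeat=2)
        if (x, y) not in friends or (y, x) in friends
    )


def unnormalized_score(friends, domain, weight):
    """MLN-style score for a single weighted formula: exp(weight * count)."""
    return math.exp(weight * satisfied_groundings(friends, domain))


domain = ["Anna", "Bob", "Chris"]
world_a = {("Anna", "Bob"), ("Bob", "Anna"), ("Bob", "Chris")}
world_b = world_a | {("Chris", "Bob")}  # same world with the missing tuple added
weight = 1.5  # hypothetical weight, not taken from the talk

ratio = unnormalized_score(world_b, domain, weight) / unnormalized_score(
    world_a, domain, weight
)
print(satisfied_groundings(world_a, domain), satisfied_groundings(world_b, domain))
print(ratio)  # equals exp(weight), since world_b satisfies one more grounding
```

Because the normalizing constant cancels in the ratio, the symmetric world is exp(1.5) times as probable as the asymmetric one under this single formula, rather than the asymmetric world being impossible.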

Applications and Datasets

What are the “killer apps” of relational learning? They must be relational.

Graph or Network Data

- Many kinds of networks:
  - Social networks
  - Interaction networks
  - Citation networks
  - Road networks
  - Cellular pathways
  - Computer networks
  - Webgraph

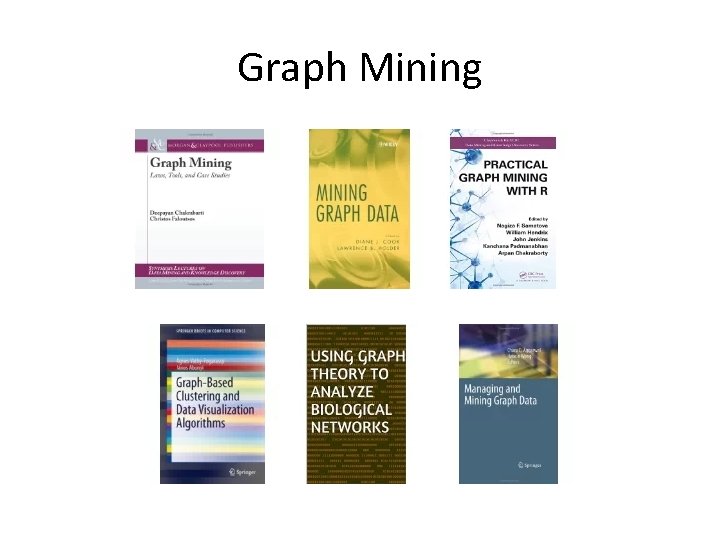

Graph Mining

Graph Mining

- Well-established field within data mining
- Representation: nodes are objects, edges are relations
- Many problems and methods
  - Frequent subgraph mining
  - Generative models to explain degree distribution and graph evolution over time
  - Community discovery
  - Collective classification
  - Link prediction
  - Clustering
- What’s the difference between graph mining and relational learning?
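As a small illustration of the "nodes are objects, edges are relations" representation, the sketch below scores candidate links with the common-neighbors heuristic, one simple baseline for link prediction. The heuristic is chosen here only as an example and is not named in the talk; the edge list and function names are hypothetical.

```python
from itertools import combinations

# Hypothetical undirected friendship graph.
edges = [
    ("Anna", "Bob"),
    ("Bob", "Chris"),
    ("Bob", "Dana"),
    ("Chris", "Eve"),
    ("Dana", "Eve"),
]


def build_adjacency(edges):
    """Map each node to the set of its neighbors."""
    adjacency = {}
    for u, v in edges:
        adjacency.setdefault(u, set()).add(v)
        adjacency.setdefault(v, set()).add(u)
    return adjacency


def common_neighbor_scores(adjacency):
    """Score each unlinked pair by the number of neighbors the two nodes share."""
    scores = {}
    for u, v in combinations(sorted(adjacency), 2):
        if v not in adjacency[u]:
            scores[(u, v)] = len(adjacency[u] & adjacency[v])
    return scores


scores = common_neighbor_scores(build_adjacency(edges))
for pair, score in sorted(scores.items(), key=lambda item: -item[1]):
    print(pair, score)
```

A specialized method like this works on one binary relation at a time; a general relational model would express the same intuition as a rule over Friends that can be combined with rules over other relations.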

Social Network Analysis

Specialized vs. General Representations

In many domains, the best results come from more restricted, “specialized” representations and algorithms.

- Specialized representations and algorithms
  - May represent key domain properties better
  - Typically much more efficient
  - E.g., stochastic block model, label propagation, HITS
- General representations
  - Can be applied to new and unusual domains
  - Easier to define complex models
  - Easier to modify and extend
  - E.g., MLNs, PRMs, HL-MRFs, ProbLog, RBNs, PRISM, etc.

Specializing and Unifying Representations

Many representations have been proposed over the years, each with its own advantages and disadvantages.

- How many do we need?
- Which comes first, representational power or algorithmic convenience?
- What are the right unifying frameworks?
- When should we resort to domain-specific representations?
- Which domain-specific ideas actually generalize to other domains?

Applications and Datasets

What are the “killer apps” of general relational learning? They must be relational. They should probably be complex.

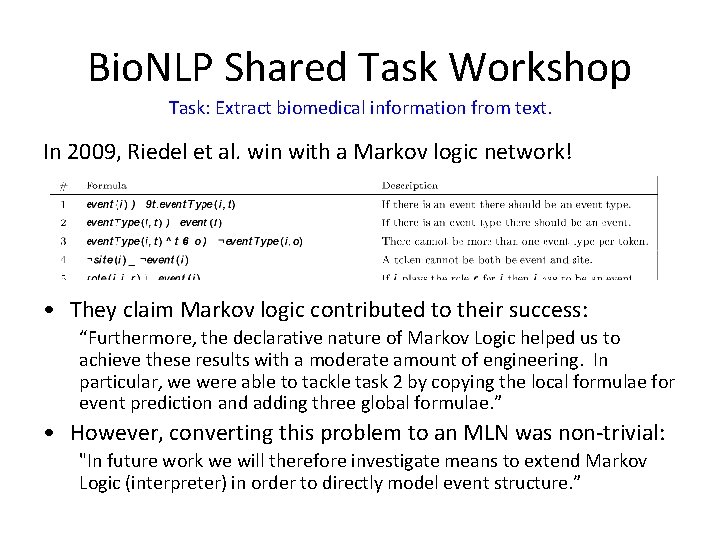

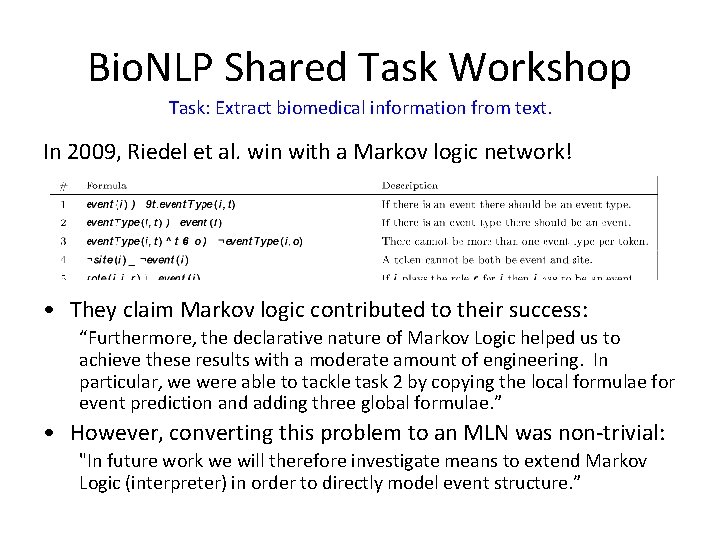

BioNLP Shared Task Workshop

Task: Extract biomedical information from text.

In 2009, Riedel et al. win with a Markov logic network!

- They claim Markov logic contributed to their success: “Furthermore, the declarative nature of Markov Logic helped us to achieve these results with a moderate amount of engineering. In particular, we were able to tackle task 2 by copying the local formulae for event prediction and adding three global formulae.”
- However, converting this problem to an MLN was non-trivial: “In future work we will therefore investigate means to extend Markov Logic (interpreter) in order to directly model event structure.”

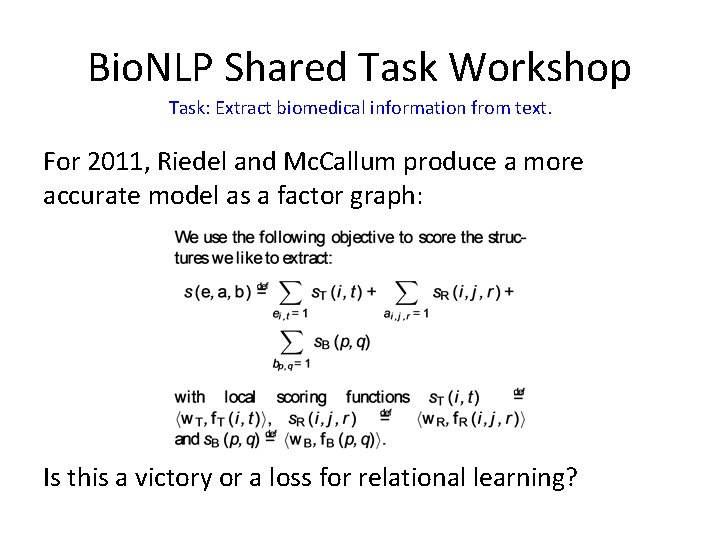

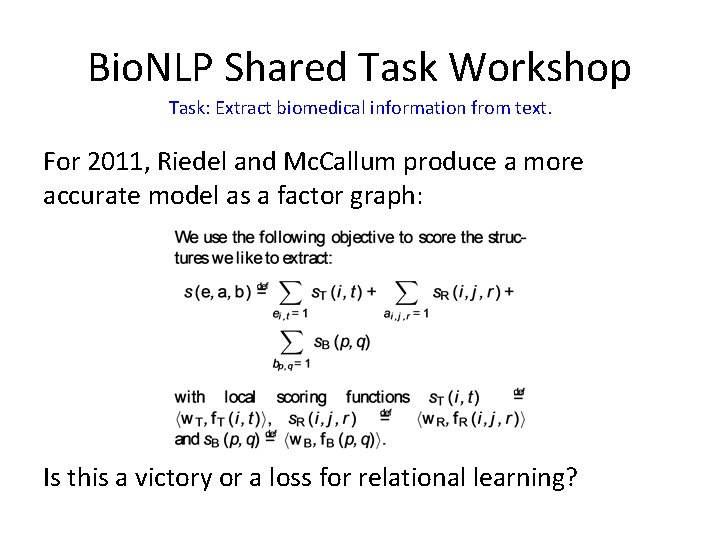

BioNLP Shared Task Workshop

Task: Extract biomedical information from text.

For 2011, Riedel and McCallum produce a more accurate model as a factor graph.

Is this a victory or a loss for relational learning?

Other NLP Tasks?

Hoifung Poon and Pedro Domingos obtained great NLP results with MLNs:

- “Joint Unsupervised Coreference Resolution with Markov Logic,” ACL 2008.
- “Unsupervised Semantic Parsing,” EMNLP 2009. Best Paper Award.
- “Unsupervised Ontology Induction from Text,” ACL 2010.

…but Hoifung hasn’t used Markov logic in any of his follow-up work:

- “Probabilistic Frame Induction,” NAACL 2013. (with Jackie Cheung and Lucy Vanderwende)
- “Grounded Unsupervised Semantic Parsing,” ACL 2013.
- “Grounded Semantic Parsing for Complex Knowledge Extraction,” NAACL 2015. (with Ankur P. Parikh and Kristina Toutanova)

MLNs were successfully used to obtain state-of-the-art results on several NLP tasks. Why were they abandoned? Because it was easier to hand-code a custom solution as a log-linear model.

Software

- There are many good machine learning toolkits
  - Classification: scikit-learn, Weka
  - SVMs: SVM-Light, LibSVM, LIBLINEAR
  - Graphical models: BNT, FACTORIE
  - Deep learning: Torch, Pylearn2, Theano
- What’s the state of software for relational learning and inference?
  - Frustrating.
  - Are the implementations too primitive?
  - Are the algorithms immature?
  - Are the problems just inherently harder?

Hopeful Analogy: Neural Networks

- In computer vision, specialized feature models (e.g., SIFT) outperformed general feature models (neural networks) for a long time.
- Recently, convolutional nets are best and are used everywhere for image recognition.
- What changed? More processing power and more data.

Specialized relational models are widely used. Is there a revolution in general relational learning waiting to happen?

Conclusion

- Many kinds of relational data and models
  - Specialized relational models are clearly effective.
  - General relational models have potential, but they haven’t taken off.
- Questions:
  - When can effective specialized representations become more general?
  - What advances do we need for general-purpose methods to succeed?
  - What “killer apps” should we be working on?