Relating Reinforcement Learning Performance to Classification Performance

Presenter: Hui Li, Sept. 11, 2006

Outline

- Motivation
- Reduction from reinforcement learning to classifier learning
- Results
- Conclusion

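Motivation

A simple relationship:

- The goal of reinforcement learning: find a policy that maximizes the expected sum of rewards.
- The goal of (binary) classifier learning: find a classifier that minimizes the expected error rate.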

Motivation

Question:

- The problem of classification has been intensively investigated.
- The problem of reinforcement learning is still under investigation.
- Is it possible to reduce reinforcement learning to classifier learning?

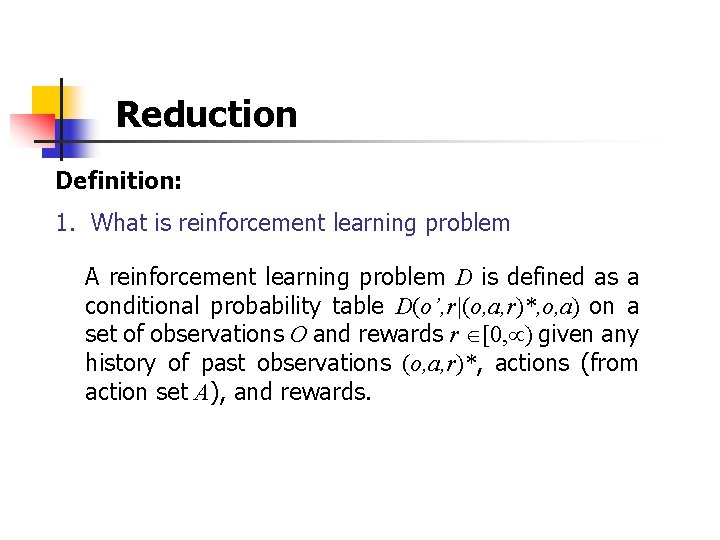

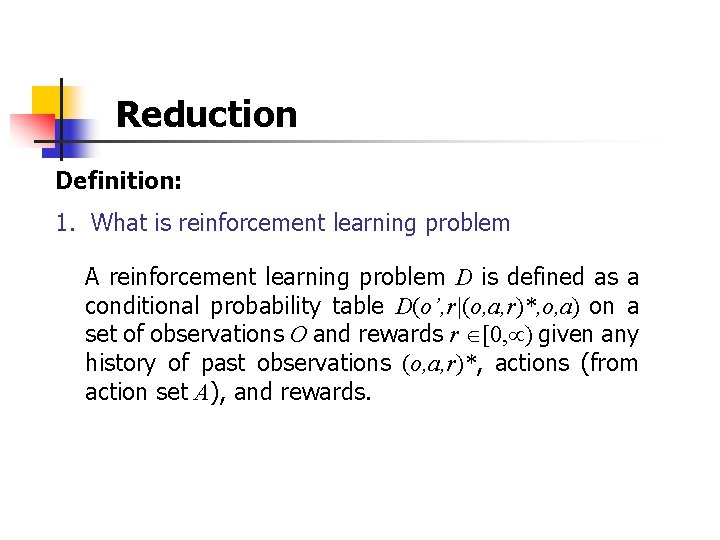

Reduction

Definition:

1. What is a reinforcement learning problem?

A reinforcement learning problem $D$ is defined as a conditional probability table $D(o', r \mid (o, a, r)^*, o, a)$ on a set of observations $O$ and rewards $r \in [0, \infty)$, given any history $(o, a, r)^*$ of past observations, actions (from the action set $A$), and rewards.

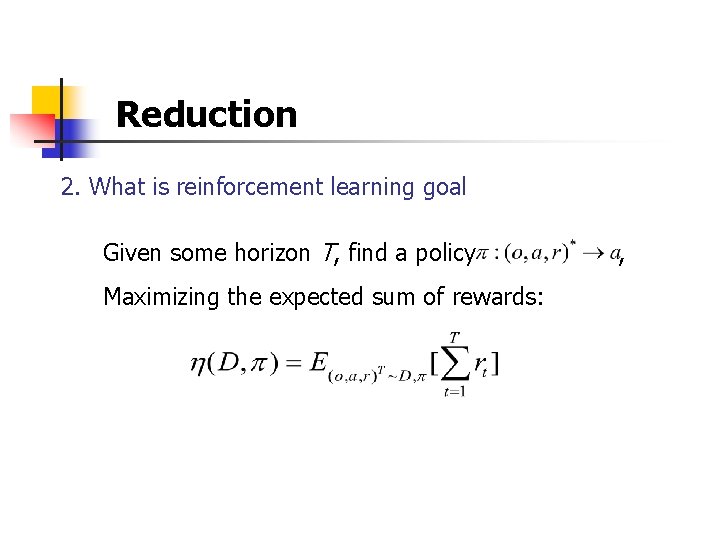

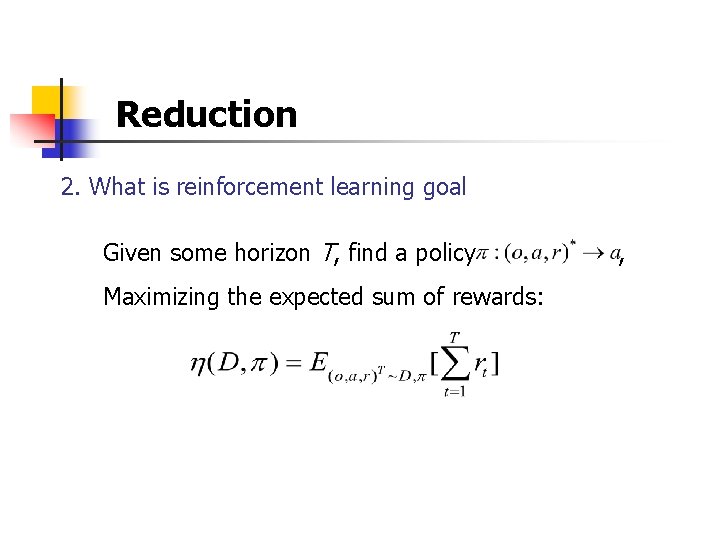

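Reduction

2. What is the reinforcement learning goal?

Given some horizon $T$, find a policy $\pi$ maximizing the expected sum of rewards, $\mathbb{E}\left[\sum_{t=1}^{T} r_t\right]$, where the expectation is taken over the observations, actions, and rewards generated by $D$ and $\pi$.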
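The objective above can be approximated by simulation. The following is a minimal Python sketch, not taken from the slides: `sample_step` stands in for the conditional distribution $D$, `policy` maps a history and current observation to an action, and `toy_step` is a made-up problem used only to exercise the code. Starting each rollout with an empty observation is a simplifying assumption.

```python
import random


def estimate_return(sample_step, policy, horizon, n_rollouts=1000, seed=0):
    """Monte Carlo estimate of the expected sum of rewards over `horizon` steps."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_rollouts):
        history = []  # past (observation, action, reward) triples
        obs = None    # assumption: no observation before the first step
        for _ in range(horizon):
            action = policy(history, obs)
            # sample_step plays the role of D(o', r | history, o, a)
            next_obs, reward = sample_step(history, obs, action, rng)
            history.append((obs, action, reward))
            obs = next_obs
            total += reward
    return total / n_rollouts


def toy_step(history, obs, action, rng):
    """Hypothetical problem: random observation, reward 1 when the action is 1."""
    return rng.random(), float(action == 1)


def always_one(history, obs):
    return 1


value = estimate_return(toy_step, always_one, horizon=3, n_rollouts=50)
print(value)
```

In the toy problem the policy earns a reward of 1 at every step, so the estimate equals the horizon.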

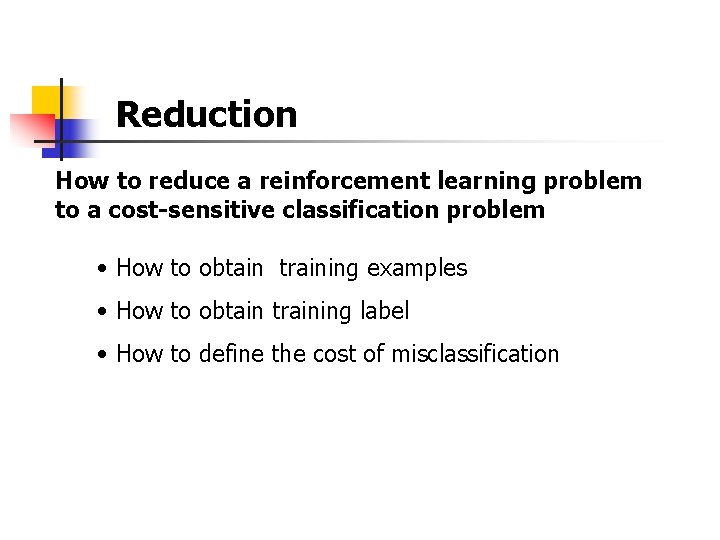

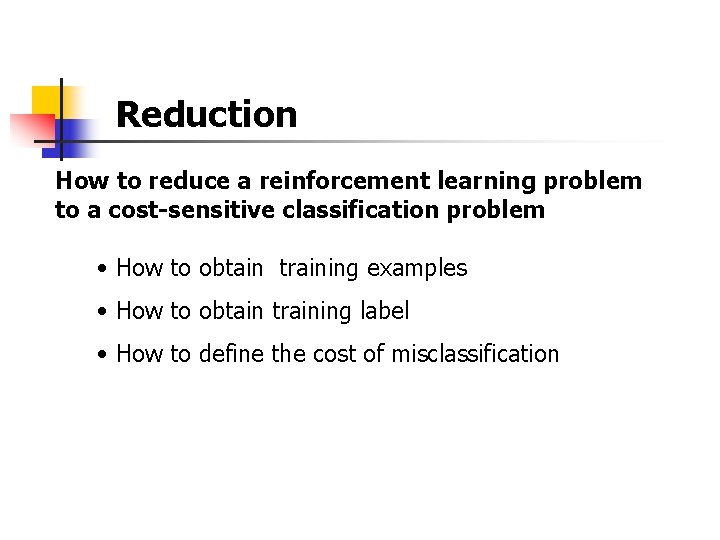

Reduction

How to reduce a reinforcement learning problem to a cost-sensitive classification problem:

- How to obtain training examples
- How to obtain training labels
- How to define the cost of misclassification

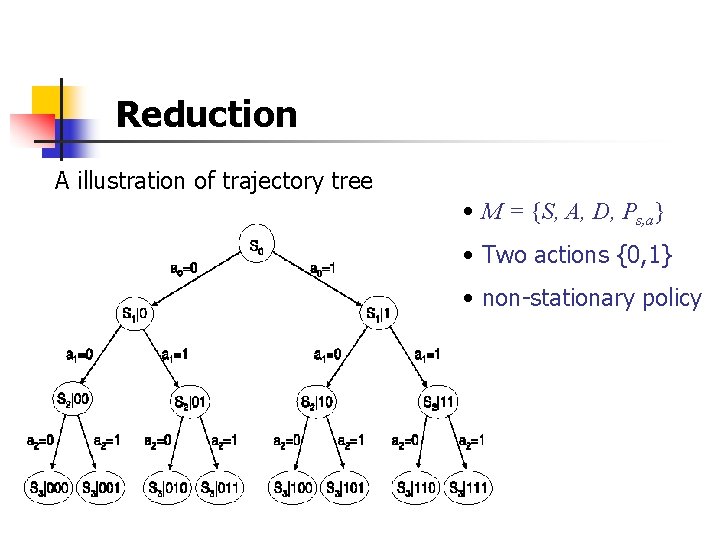

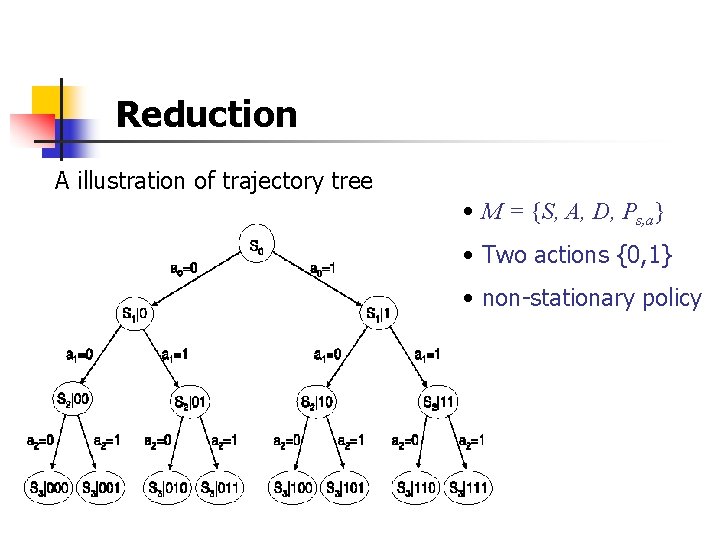

Reduction

An illustration of a trajectory tree:

- MDP $M = \{S, A, D, P_{s,a}\}$
- Two actions, $\{0, 1\}$
- Non-stationary policy

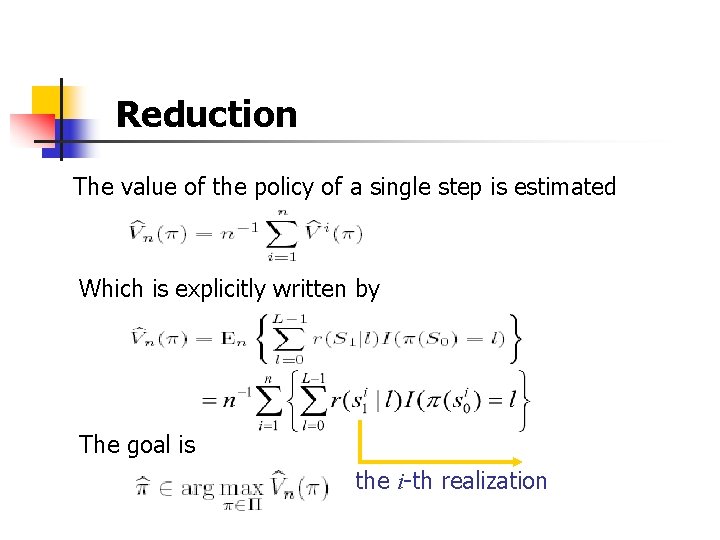

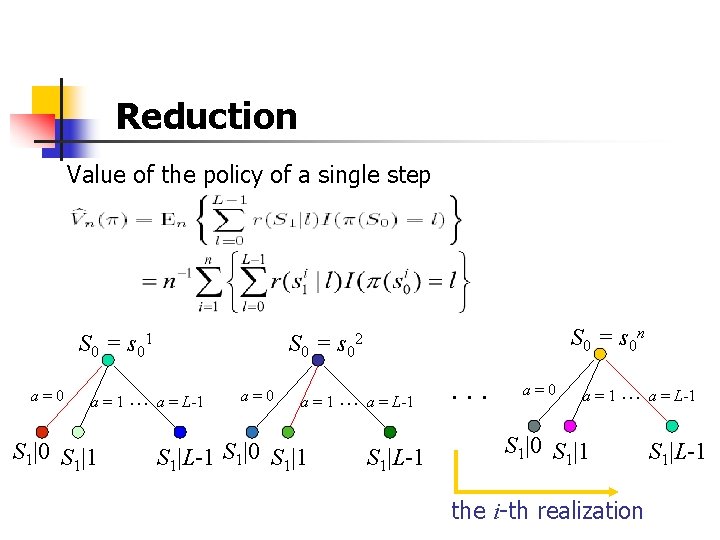

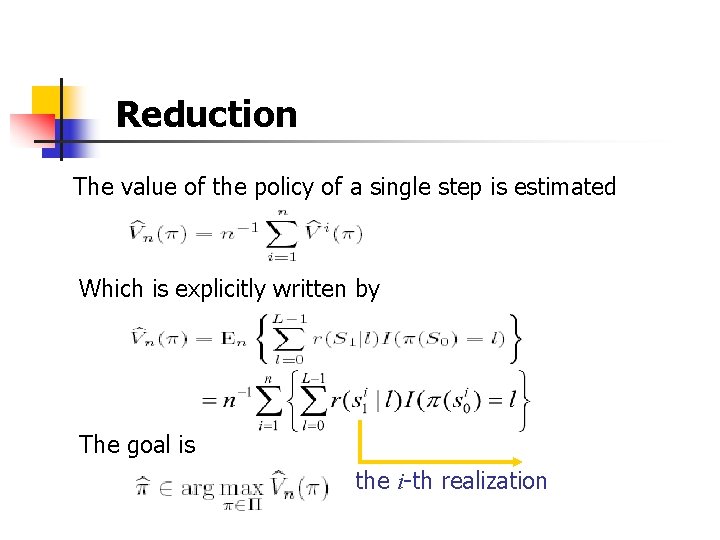

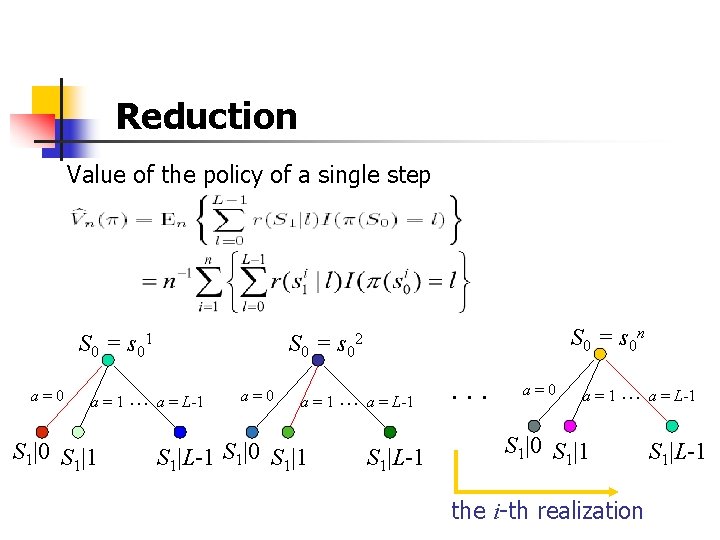

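Reduction

The value of a single-step policy is estimated from the sampled trajectory trees, and the estimate can be written out explicitly in terms of the i-th realization. The goal is to find the policy that maximizes this estimate.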

Reduction

Value of the policy of a single step

[Figure: $n$ one-step trajectory trees. The i-th tree (the i-th realization) is rooted at the initial state $S_0 = s_0^i$, $i = 1, \dots, n$, and branches on each action $a = 0, 1, \dots, L-1$ to the successor states $S_{1|0}, S_{1|1}, \dots, S_{1|L-1}$.]
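The single-step estimate can be sketched in Python as an average over the sampled trees. This is an illustrative reading of the slide, not the presenter's code: it assumes each tree is stored as a pair `(s0, rewards)`, where `rewards[a]` is the reward realized after taking action `a` from `s0`, and the sample values and `threshold` policy are invented for the example.

```python
def one_step_value(trees, policy):
    """Average, over the sampled trees, of the reward on the branch the policy picks."""
    return sum(rewards[policy(s0)] for s0, rewards in trees) / len(trees)


# Hypothetical sample: three initial states in [0, 1], two actions each.
trees = [
    (0.1, [1.0, 0.0]),
    (0.6, [0.25, 0.75]),
    (0.8, [0.0, 0.5]),
]


def threshold(s):
    """Hypothetical policy: take action 1 when the state exceeds 0.5."""
    return int(s > 0.5)


v = one_step_value(trees, threshold)
print(v)  # (1.0 + 0.75 + 0.5) / 3 = 0.75
```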

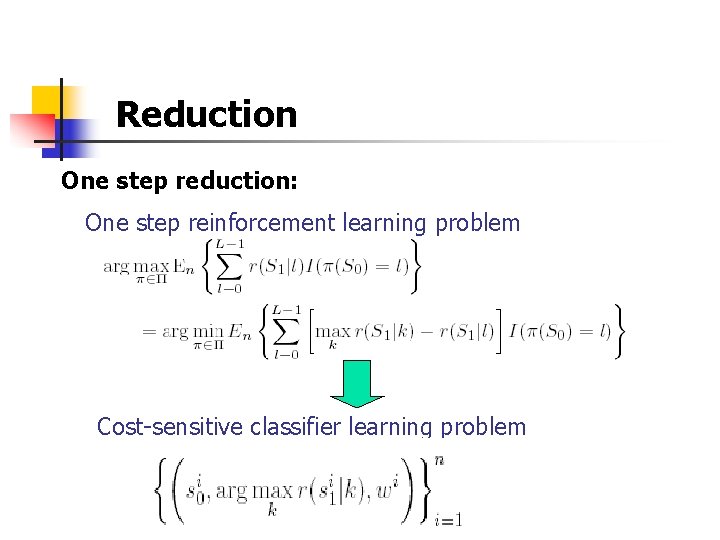

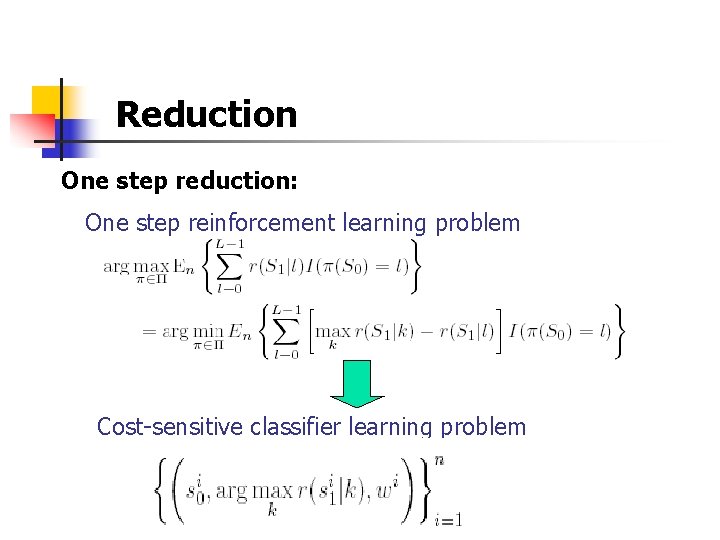

Reduction

One-step reduction: one-step reinforcement learning problem → cost-sensitive classifier learning problem

Reduction

where

- $s_0^i$: the i-th sample (data point)
- the label of the i-th sample
- $w_i$: the costs of classifying example i to each of the possible labels

Reduction

Properties of the cost:

- The cost of misclassification is always positive.
- The cost of correct classification is zero.
- The larger the difference between the possible actions in terms of future reward, the larger the cost (or weight).
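One construction that satisfies these properties is sketched below in Python. It is an assumption about how the reduction could be realized, not a formula recovered from the slides: the label is the action with the largest realized reward, and the cost of each label is its shortfall from that best reward. The `(s0, rewards)` tree layout and the sample values are hypothetical.

```python
def reduce_one_step(trees):
    """Turn one-step trees into cost-sensitive examples (state, label, costs)."""
    examples = []
    for s0, rewards in trees:
        best = max(rewards)
        label = rewards.index(best)            # best action is the correct class
        costs = [best - r for r in rewards]    # shortfall of each action
        examples.append((s0, label, costs))
    return examples


def mean_cost(examples, classifier):
    """Average cost paid by a classifier; lower cost means higher policy value."""
    return sum(costs[classifier(s0)] for s0, _, costs in examples) / len(examples)


# Hypothetical sample: rewards[a] is the reward realized after action a from s0.
trees = [
    (0.1, [1.0, 0.0]),
    (0.6, [0.25, 0.75]),
    (0.8, [0.0, 0.5]),
]
examples = reduce_one_step(trees)
for example in examples:
    print(example)
```

Under this construction the cost of the correct label is zero and the cost of a wrong label grows with the reward gap. The cost is strictly positive only when the actions' rewards differ; tied actions all receive zero cost.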

Reduction

T-step MDP reduction: how to find good policies for a T-step MDP by solving a sequence of weighted classification problems.

T-step policy $\pi = (\pi_0, \pi_1, \dots, \pi_{T-1})$:

- When updating $\pi_t$, hold the rest constant.
- When updating $\pi_t$, the trees are pruned from the root to stage $t$ by keeping only the branches that agree with the controls $\pi_0, \pi_1, \dots, \pi_{t-1}$.

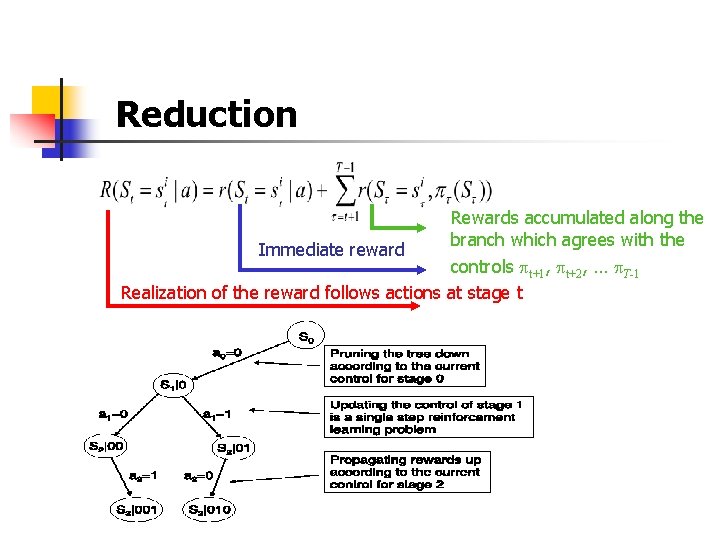

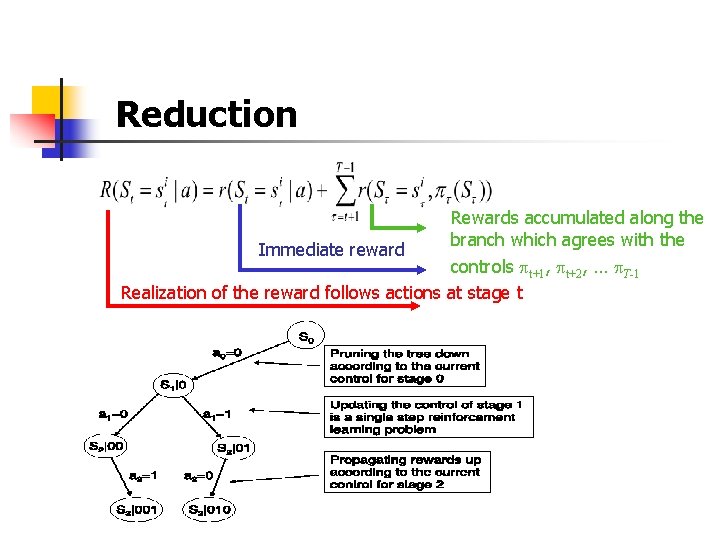

Reduction

The realization of the reward that follows each action at stage $t$ is the sum of:

- the immediate reward, and
- the rewards accumulated along the branch which agrees with the controls $\pi_{t+1}, \pi_{t+2}, \dots, \pi_{T-1}$.
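The pruning and reward accumulation for stage $t$ can be sketched in Python as follows. This is a hedged illustration under assumed conventions: a tree node is a dict with a `state` and a `children` list, `children[a]` holds the pair `(reward, child)` for action `a`, and rewards are attached to transitions. The function names and the two-step example tree are hypothetical.

```python
def rollout_reward(node, t, policies):
    """Rewards accumulated below `node` along the branch agreeing with policies[t:]."""
    total = 0.0
    while node["children"]:
        action = policies[t](node["state"])
        reward, node = node["children"][action]
        total += reward
        t += 1
    return total


def action_rewards(root, t, policies, n_actions):
    """State reached at stage t and the reward realization for each action there."""
    node = root
    for k in range(t):  # prune: keep only the branch agreeing with policies[0..t-1]
        _, node = node["children"][policies[k](node["state"])]
    realizations = []
    for a in range(n_actions):
        immediate, child = node["children"][a]
        realizations.append(immediate + rollout_reward(child, t + 1, policies))
    return node["state"], realizations


def leaf(state):
    return {"state": state, "children": []}


# Hypothetical two-step tree with binary actions.
node_a = {"state": 0.2, "children": [(2.0, leaf(0.3)), (0.0, leaf(0.1))]}
node_b = {"state": 0.9, "children": [(0.0, leaf(0.7)), (0.5, leaf(0.95))]}
root = {"state": 0.5, "children": [(0.0, node_a), (1.0, node_b)]}

policies = [lambda s: 1, lambda s: int(s > 0.5)]  # hypothetical pi_0, pi_1
print(action_rewards(root, 0, policies, 2))  # (0.5, [2.0, 1.5])
print(action_rewards(root, 1, policies, 2))  # (0.9, [0.0, 0.5])
```

The per-action realizations returned for stage $t$ are what a weighted classification step would consume when updating $\pi_t$ with the other stages held fixed.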

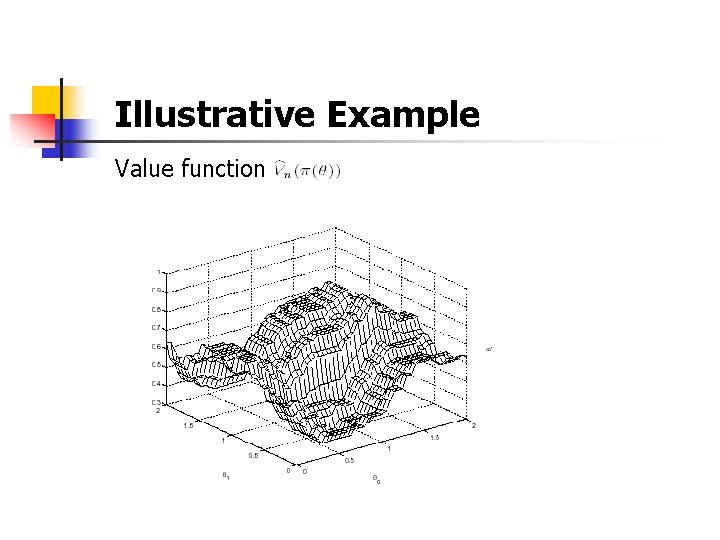

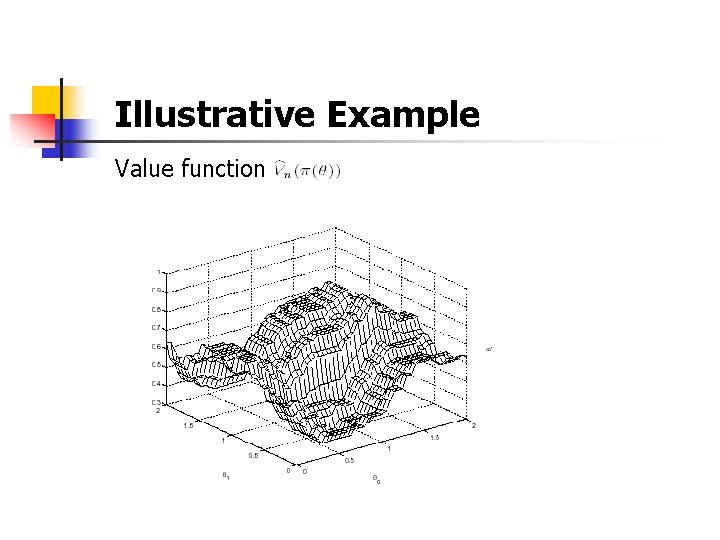

Illustrative Example

Two-step MDP problem:

- Continuous state space $S = [0, 1]$
- Binary action space $A = \{0, 1\}$
- Uniform distribution over the initial state

Illustrative Example

Value function

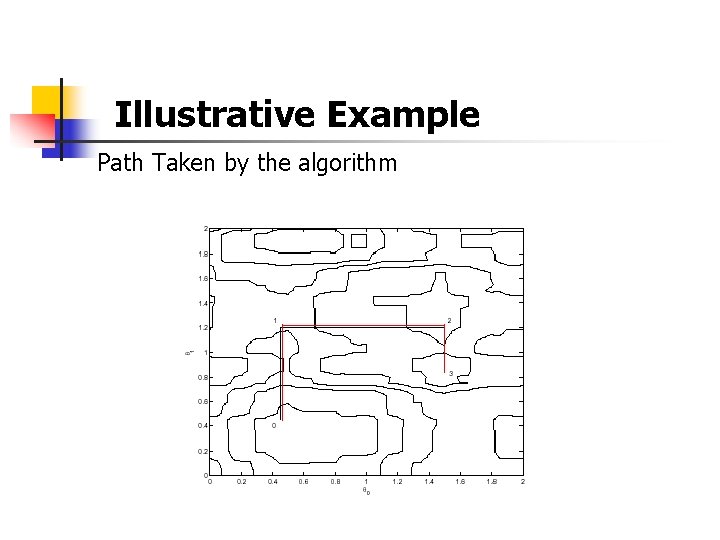

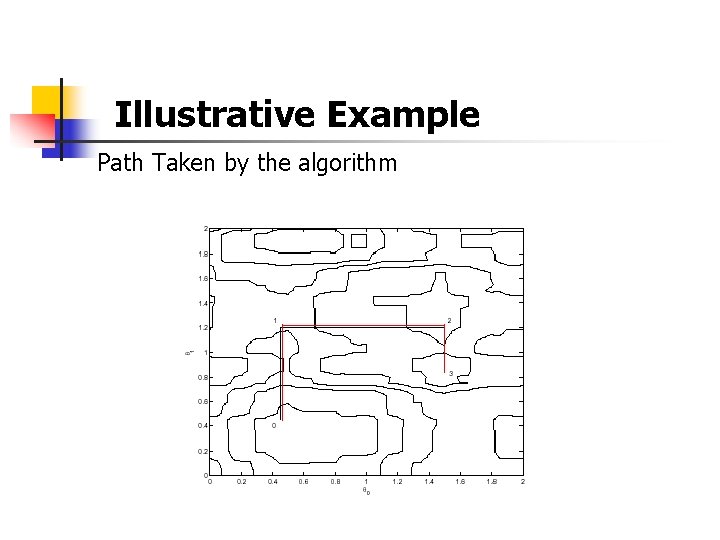

Illustrative Example

Path taken by the algorithm