Reinforcement Learning, Yishay Mansour, Tel-Aviv University


- Slides: 37

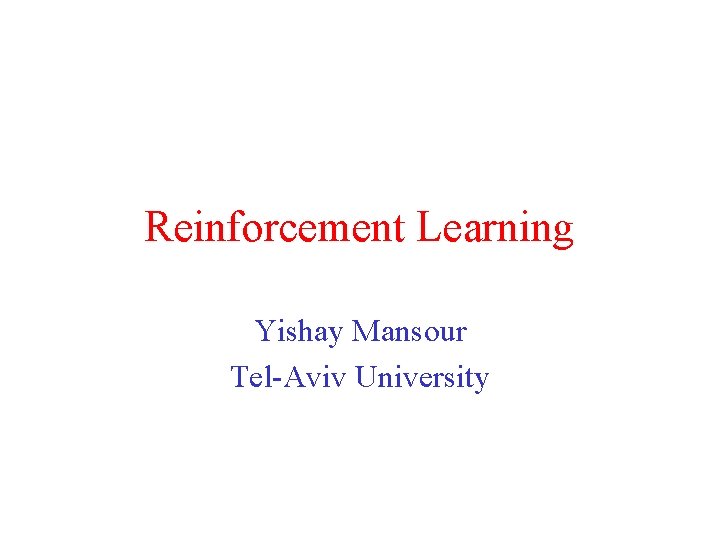

Reinforcement Learning Yishay Mansour Tel-Aviv University

Outline

- Goal of Reinforcement Learning
- Mathematical Model (MDP)
- Planning

Goal of Reinforcement Learning

- Goal-oriented learning through interaction.
- Control of large-scale stochastic environments with partial knowledge.
- Supervised / unsupervised learning: learn from labeled / unlabeled examples.

Reinforcement Learning - origins

- Artificial Intelligence
- Control Theory
- Operations Research
- Cognitive Science & Psychology

Solid foundations; well-established research.

![Typical Applications Robotics Elevator control CB Robosoccer SV Board games Typical Applications • Robotics – Elevator control [CB]. – Robo-soccer [SV]. • Board games](https://slidetodoc.com/presentation_image_h2/c7fde7ac2275256f9cc0b7ff044a1306/image-5.jpg)

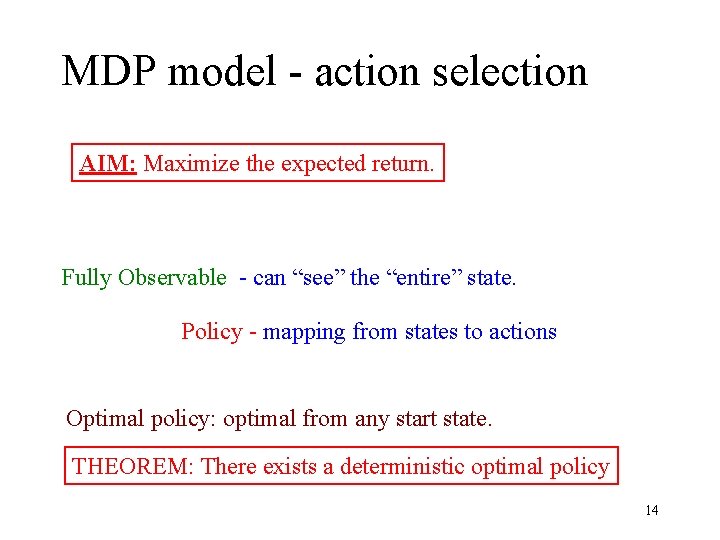

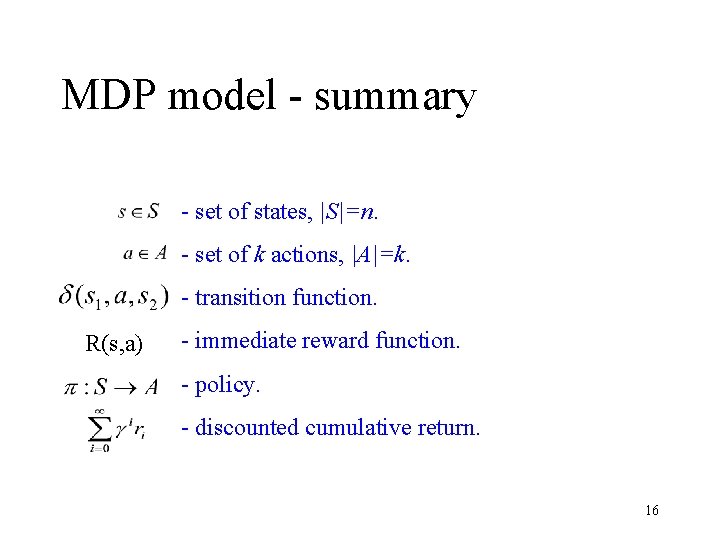

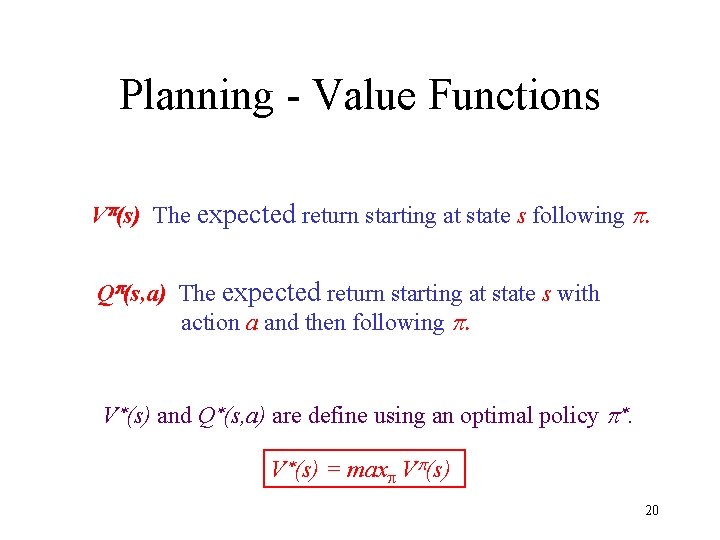

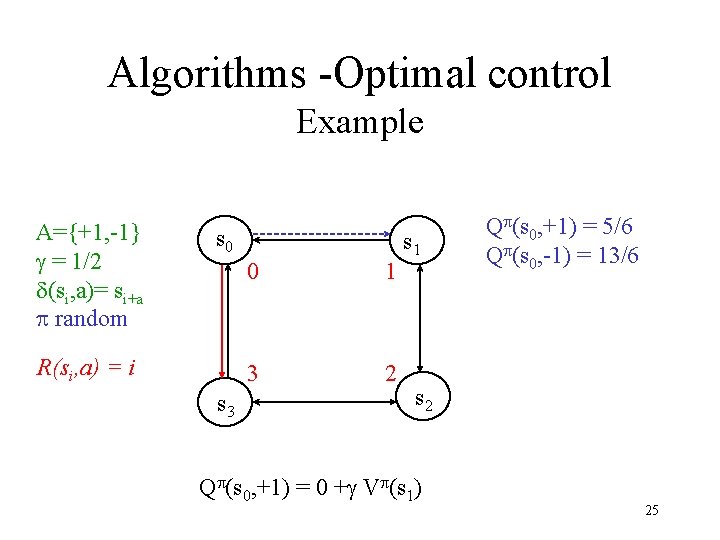

Typical Applications

- Robotics
  - Elevator control [CB]
  - Robo-soccer [SV]
- Board games
  - Backgammon [T]
  - Checkers [S]
  - Chess [B]
- Scheduling
  - Dynamic channel allocation [SB]
  - Inventory problems

Contrast with Supervised Learning

- The system has a “state”.
- The algorithm influences the state distribution.
- Inherent tradeoff: exploration versus exploitation.

Mathematical Model - Motivation

- Model of uncertainty: environment, actions, our knowledge.
- Focus on decision making.
- Maximize long-term reward.

Markov Decision Process (MDP)

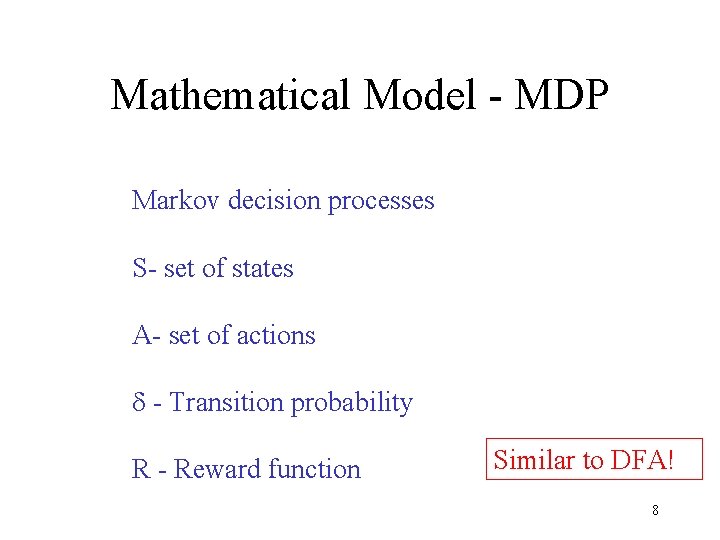

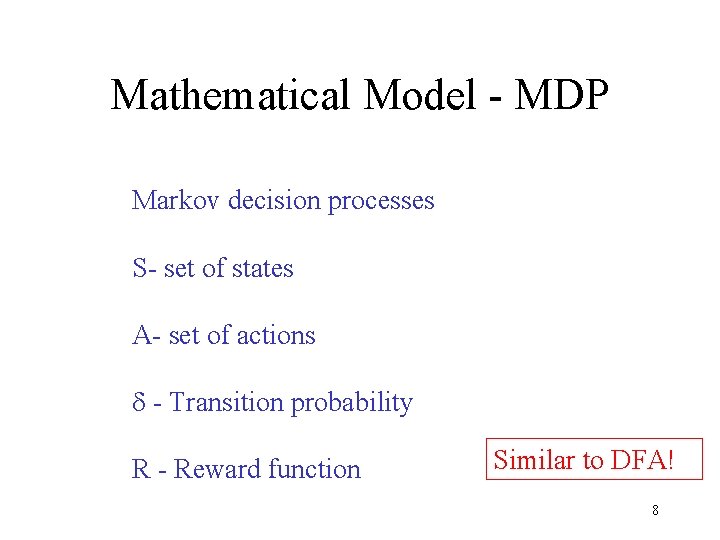

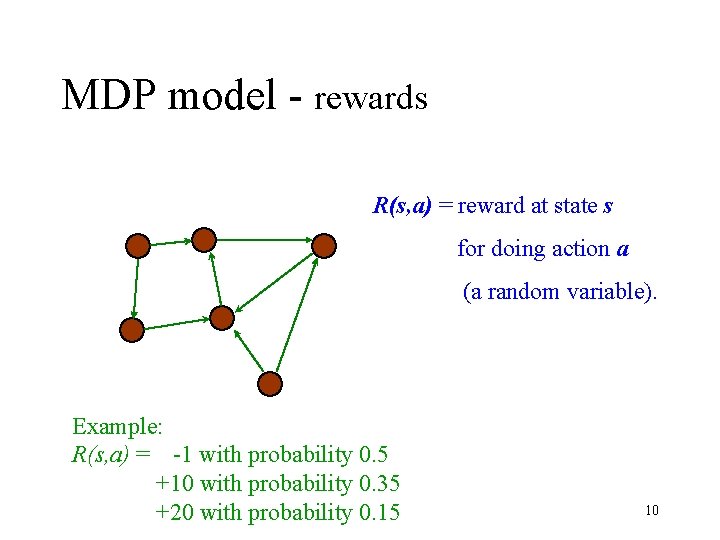

Mathematical Model - MDP

Markov decision processes:

- $S$ - set of states
- $A$ - set of actions
- $\delta$ - transition probability
- $R$ - reward function

Similar to a DFA!

MDP model - states and actions

- Environment = states
- Actions = transitions

Figure: action $a$ leads to two possible next states, with probabilities 0.7 and 0.3.

MDP model - rewards

$R(s, a)$ = reward at state $s$ for doing action $a$ (a random variable).

Example: $R(s, a)$ =

- $-1$ with probability 0.5
- $+10$ with probability 0.35
- $+20$ with probability 0.15
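As a small worked step (not on the slide), the expected immediate reward of the example distribution above can be computed directly:

$$E[R(s, a)] = 0.5 \cdot (-1) + 0.35 \cdot 10 + 0.15 \cdot 20 = -0.5 + 3.5 + 3 = 6$$

The three probabilities sum to 1, so this is a complete distribution over the immediate reward.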

MDP model - trajectories

Trajectory: $s_0, a_0, r_0, s_1, a_1, r_1, s_2, a_2, r_2$

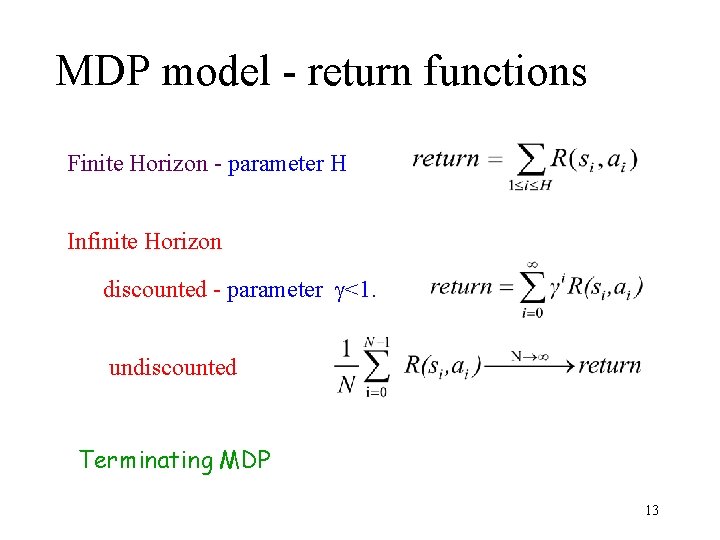

MDP - Return function

Combining all the immediate rewards into a single value.

Modeling issues:

- Are early rewards more valuable than later rewards?
- Is the system “terminating” or continuous?

Usually the return is linear in the immediate rewards.

MDP model - return functions

- Finite horizon - parameter $H$
- Infinite horizon
  - discounted - parameter $\gamma < 1$
  - undiscounted
- Terminating MDP
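The formulas for these return functions did not survive in the text, so the following is only a minimal sketch using the standard textbook definitions, which are assumed here rather than taken from the slide: a plain sum over the first $H$ rewards, a $\gamma$-discounted sum, and the average reward as one common reading of the undiscounted case. All function names are hypothetical.

```python
from typing import Sequence


def finite_horizon_return(rewards: Sequence[float], horizon: int) -> float:
    """Sum of the first `horizon` immediate rewards."""
    return sum(rewards[:horizon])


def discounted_return(rewards: Sequence[float], gamma: float) -> float:
    """Sum of gamma**t * r_t over the observed rewards.

    For an infinite trajectory this is a truncation of the infinite sum.
    """
    return sum((gamma ** t) * r for t, r in enumerate(rewards))


def average_return(rewards: Sequence[float]) -> float:
    """Average reward per step (assumed reading of the undiscounted case)."""
    return sum(rewards) / len(rewards)
```

For example, `discounted_return([1, 1, 1], 0.5)` adds 1 + 0.5 + 0.25, so early rewards count more than later ones.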

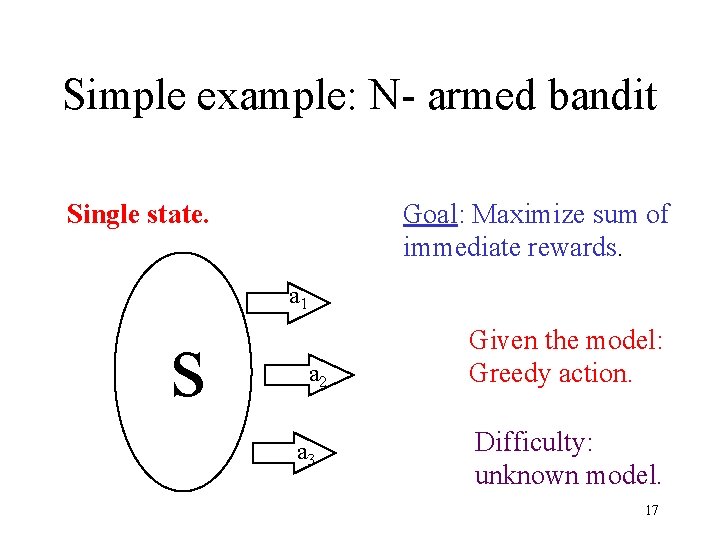

MDP model - action selection

- AIM: maximize the expected return.
- Fully observable: can “see” the “entire” state.
- Policy: mapping from states to actions.
- Optimal policy: optimal from any start state.

THEOREM: There exists a deterministic optimal policy.

Contrast with Supervised Learning

- Supervised Learning: fixed distribution on examples.
- Reinforcement Learning: the state distribution is policy dependent!
- A small local change in the policy can make a huge global change in the return.

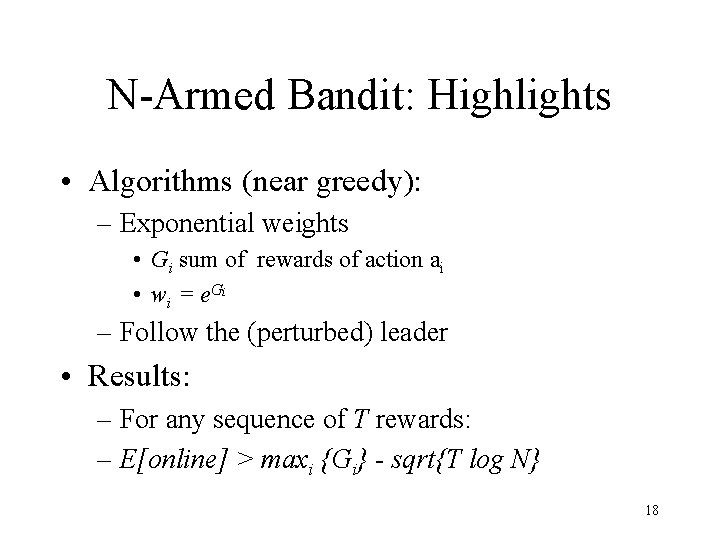

MDP model - summary

- $S$ - set of states, $|S| = n$.
- $A$ - set of $k$ actions, $|A| = k$.
- $\delta$ - transition function.
- $R(s, a)$ - immediate reward function.
- $\pi$ - policy.
- $\sum_{i=0}^{\infty} \gamma^i r_i$ - discounted cumulative return.

Simple example: N-armed bandit

- Goal: maximize the sum of immediate rewards.
- Single state.
- Given the model: greedy action.
- Difficulty: unknown model.

Figure: a single state $s$ with actions $a_1$, $a_2$, $a_3$.

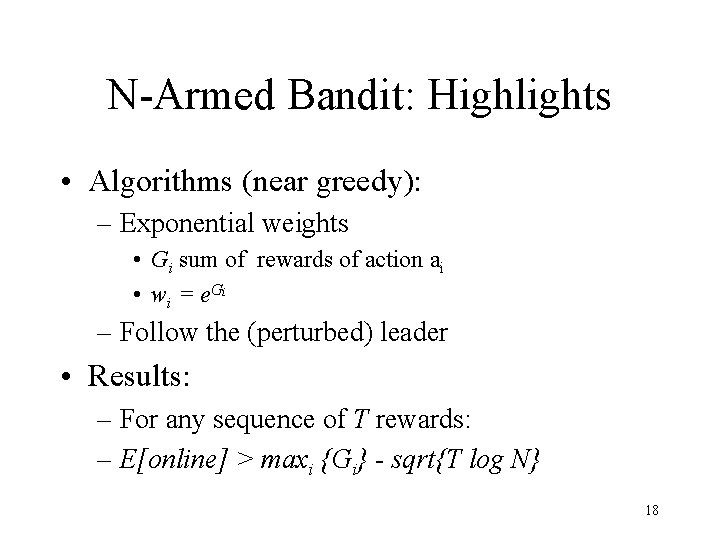

N-Armed Bandit: Highlights

- Algorithms (near greedy):
  - Exponential weights
    - $G_i$: sum of rewards of action $a_i$
    - $w_i = e^{G_i}$
  - Follow the (perturbed) leader
- Results:
  - For any sequence of $T$ rewards: $E[\text{online}] > \max_i \{G_i\} - \sqrt{T \log N}$
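The exponential-weights rule can be sketched as follows. This is an illustration under stated assumptions, not the exact algorithm behind the bound: it assumes the rewards of all actions are revealed after each round, and it adds a learning-rate parameter `eta` that is not on the slide (`eta = 1.0` reproduces $w_i = e^{G_i}$). The function names are hypothetical.

```python
import math
import random
from typing import List, Sequence


def exp_weights_probs(gains: Sequence[float], eta: float = 1.0) -> List[float]:
    """Action probabilities proportional to exp(eta * G_i)."""
    top = max(gains)  # subtracting the max avoids overflow; ratios are unchanged
    weights = [math.exp(eta * (g - top)) for g in gains]
    total = sum(weights)
    return [w / total for w in weights]


def run_exp_weights(reward_rows: Sequence[Sequence[float]],
                    eta: float = 1.0, seed: int = 0) -> float:
    """Play one action per round and return the total reward collected.

    Assumption: after each round the rewards of all actions are observed.
    """
    rng = random.Random(seed)
    n_actions = len(reward_rows[0])
    gains = [0.0] * n_actions  # G_i: cumulative reward of action i so far
    earned = 0.0
    for row in reward_rows:
        probs = exp_weights_probs(gains, eta)
        action = rng.choices(range(n_actions), weights=probs)[0]
        earned += row[action]
        gains = [g + r for g, r in zip(gains, row)]
    return earned
```

Actions with a larger cumulative reward get exponentially more probability, which is why the rule is described as near greedy.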

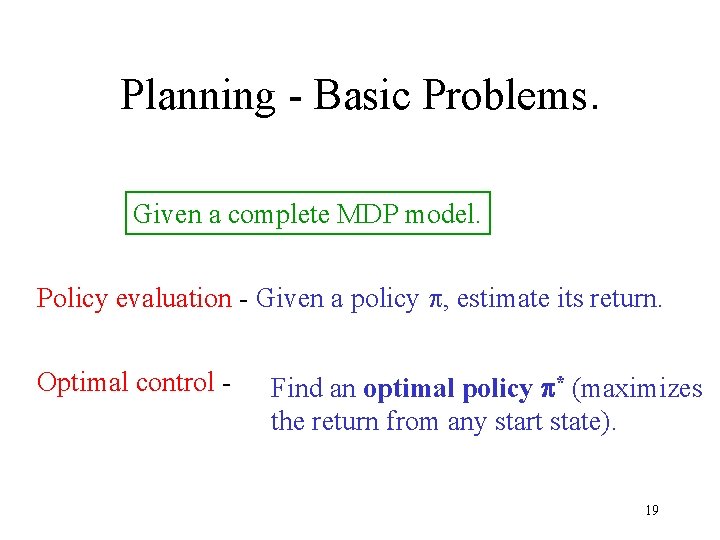

Planning - Basic Problems

Given a complete MDP model:

- Policy evaluation: given a policy $\pi$, estimate its return.
- Optimal control: find an optimal policy $\pi^*$ (one that maximizes the return from any start state).

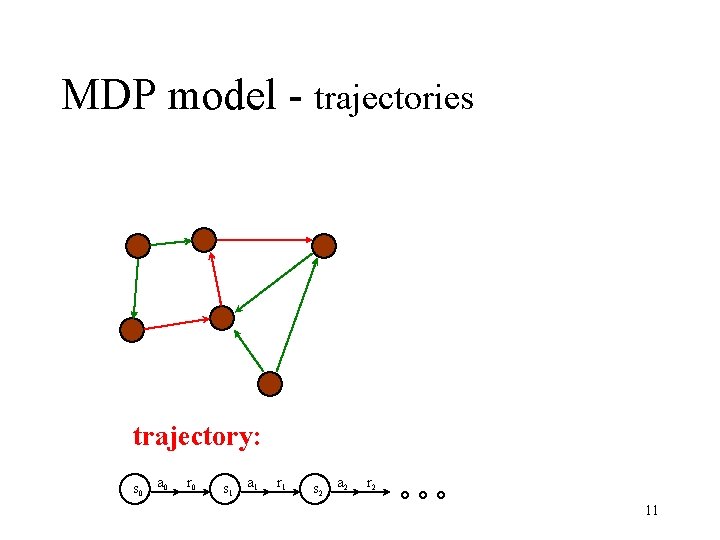

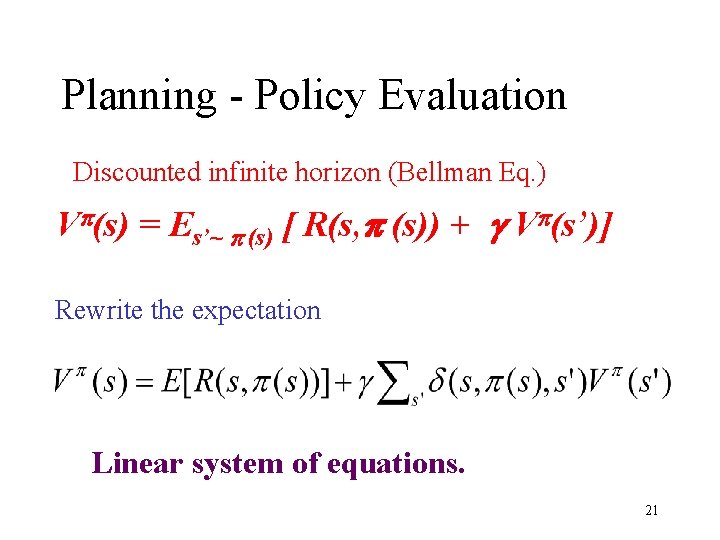

Planning - Value Functions

- $V^\pi(s)$: the expected return starting at state $s$ and following $\pi$.
- $Q^\pi(s, a)$: the expected return starting at state $s$ with action $a$ and then following $\pi$.
- $V^*(s)$ and $Q^*(s, a)$ are defined using an optimal policy $\pi^*$.

$V^*(s) = \max_\pi V^\pi(s)$

Planning - Policy Evaluation

Discounted infinite horizon (Bellman Eq.):

$V^\pi(s) = E_{s' \sim \delta(s, \pi(s))} [ R(s, \pi(s)) + \gamma V^\pi(s') ]$

Rewriting the expectation gives a linear system of equations.
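The rewritten form is not preserved in the text; a sketch of the standard expansion, writing $\delta(s, a, s')$ for the probability of moving from $s$ to $s'$ under action $a$ (an assumed notation), is:

$$V^\pi(s) = E[R(s, \pi(s))] + \gamma \sum_{s'} \delta(s, \pi(s), s') \, V^\pi(s')$$

This is one linear equation per state, so there are $n$ equations in the $n$ unknowns $V^\pi(s)$. In matrix form, with $P_\pi$ the transition matrix under $\pi$ and $R_\pi$ the vector of expected rewards:

$$(I - \gamma P_\pi) V^\pi = R_\pi$$

For $\gamma < 1$ the matrix $I - \gamma P_\pi$ is invertible, so the solution is unique.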

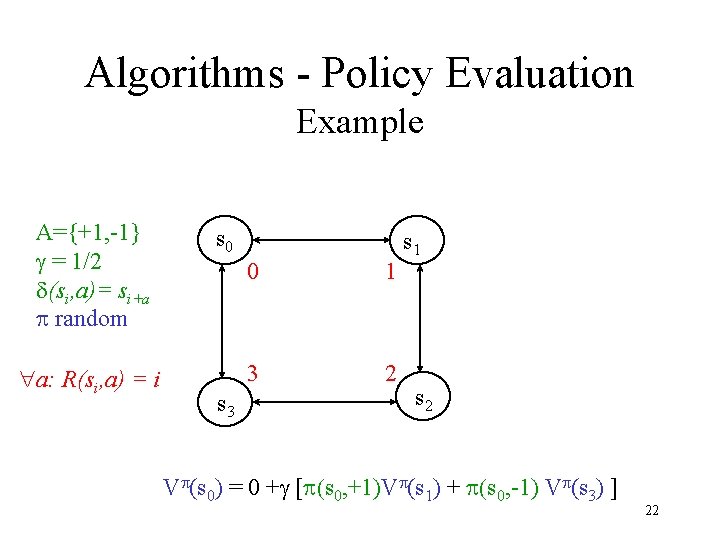

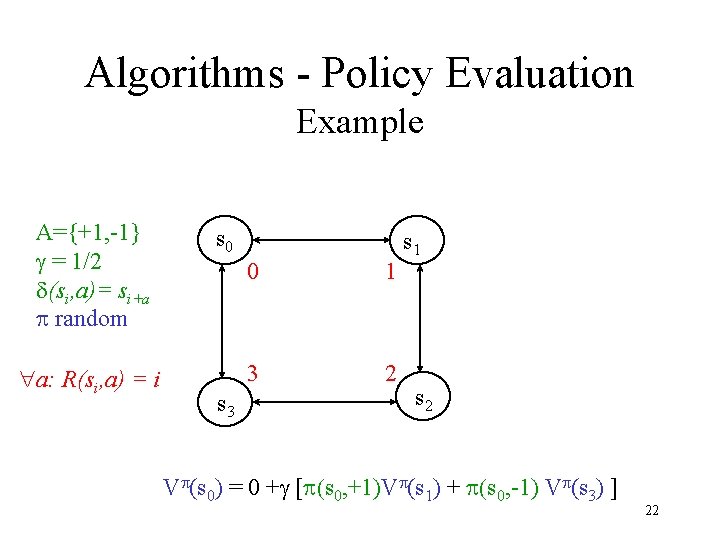

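Algorithms - Policy Evaluation Example

- $A = \{+1, -1\}$
- $\gamma = 1/2$
- $\delta(s_i, a) = s_{i+a}$
- $\pi$ random
- $\forall a: R(s_i, a) = i$

Figure: states $s_0, s_1, s_2, s_3$ arranged in a cycle, with rewards 0, 1, 2, 3.

$V^\pi(s_0) = 0 + \gamma [ \pi(s_0, +1) V^\pi(s_1) + \pi(s_0, -1) V^\pi(s_3) ]$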

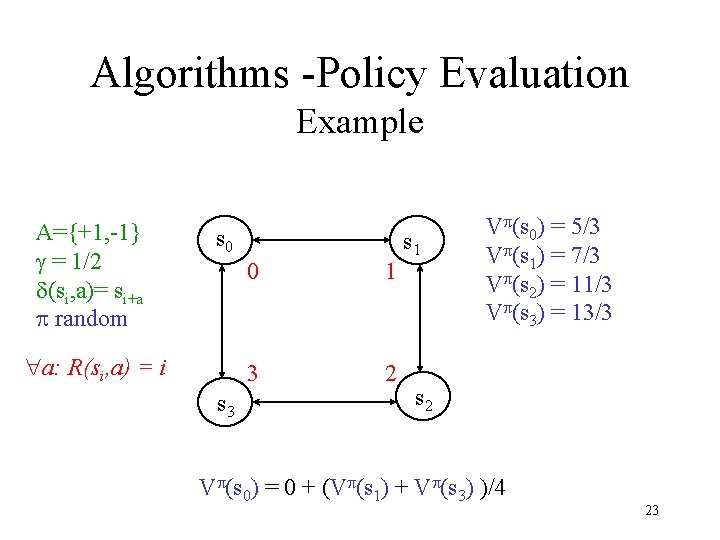

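Algorithms - Policy Evaluation Example

- $A = \{+1, -1\}$
- $\gamma = 1/2$
- $\delta(s_i, a) = s_{i+a}$
- $\pi$ random
- $\forall a: R(s_i, a) = i$

Figure: states $s_0, s_1, s_2, s_3$ arranged in a cycle, with rewards 0, 1, 2, 3.

$V^\pi(s_0) = 0 + (V^\pi(s_1) + V^\pi(s_3)) / 4$

Solution:

- $V^\pi(s_0) = 5/3$
- $V^\pi(s_1) = 7/3$
- $V^\pi(s_2) = 11/3$
- $V^\pi(s_3) = 13/3$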
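The values in this example can be checked numerically. The sketch below assumes the four states form a cycle (indices taken mod 4, as the equation for $s_0$ suggests) and that the random policy picks each action with probability 1/2. It applies the Bellman equation repeatedly instead of solving the linear system directly; the names are hypothetical.

```python
from typing import List

GAMMA = 0.5
N_STATES = 4
ACTIONS = (+1, -1)


def evaluate_random_policy(sweeps: int = 100) -> List[float]:
    """Iterate V(s_i) <- i + gamma * average of V over both neighbours."""
    values = [0.0] * N_STATES
    for _ in range(sweeps):
        values = [
            i + GAMMA * sum(0.5 * values[(i + a) % N_STATES] for a in ACTIONS)
            for i in range(N_STATES)
        ]
    return values
```

Each sweep shrinks the error by a factor of $\gamma = 1/2$, so 100 sweeps are far more than needed for the result to agree with 5/3, 7/3, 11/3 and 13/3 to floating-point precision.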

![Algorithms optimal control StateAction Value function Qps a E Rs a Algorithms - optimal control State-Action Value function: Qp(s, a) = E [ R(s, a)]](https://slidetodoc.com/presentation_image_h2/c7fde7ac2275256f9cc0b7ff044a1306/image-24.jpg)

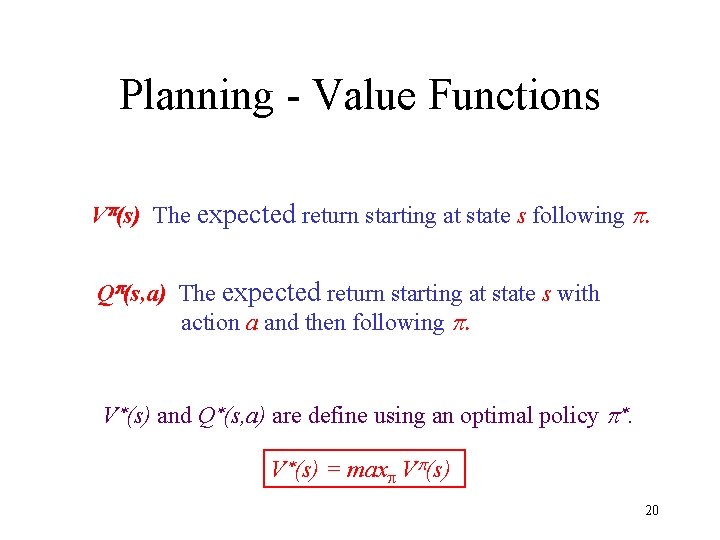

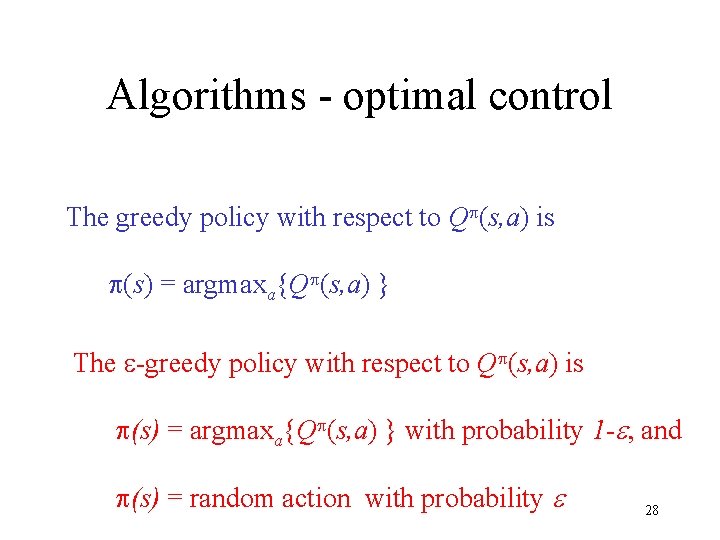

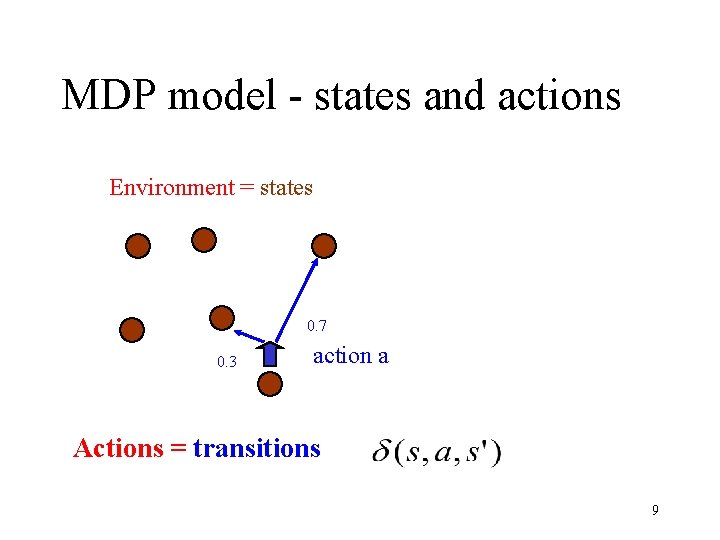

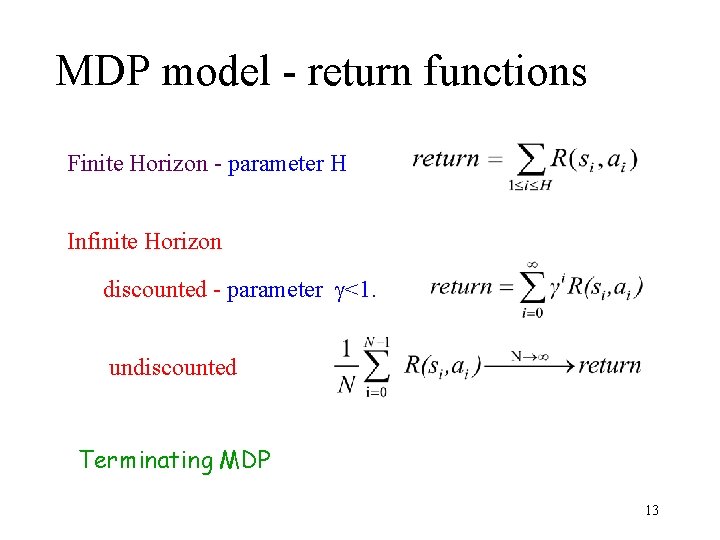

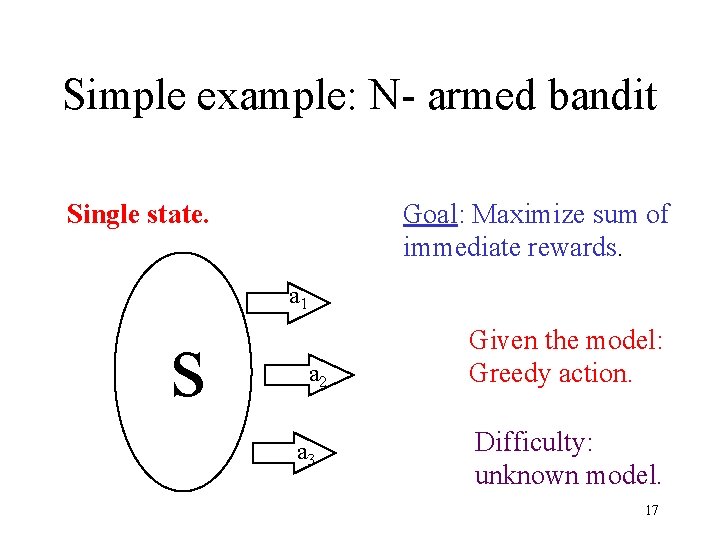

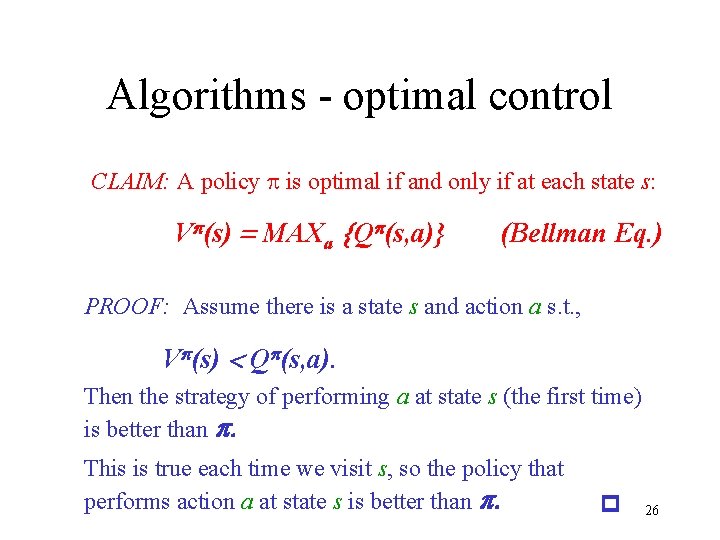

Algorithms - optimal control

State-action value function:

$Q^\pi(s, a) = E[R(s, a)] + \gamma E_{s' \sim \delta(s, a)} [ V^\pi(s') ]$

Note: for a deterministic policy $\pi$, $V^\pi(s) = Q^\pi(s, \pi(s))$.

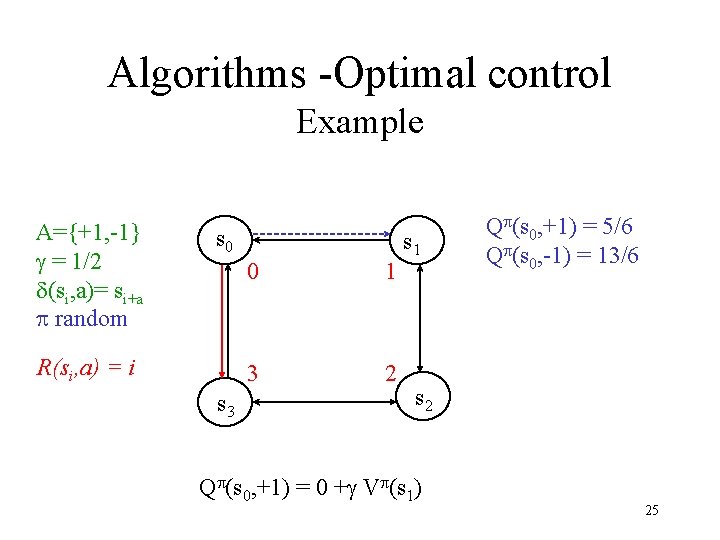

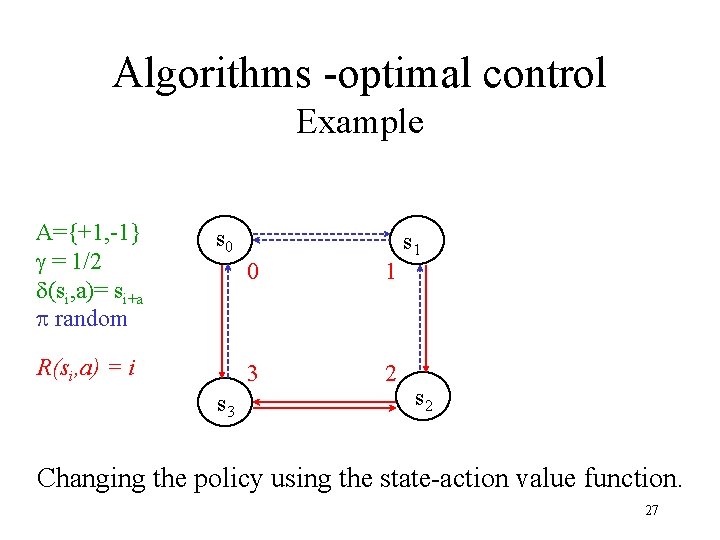

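Algorithms - optimal control Example

- $A = \{+1, -1\}$
- $\gamma = 1/2$
- $\delta(s_i, a) = s_{i+a}$
- $\pi$ random
- $R(s_i, a) = i$

Figure: states $s_0, s_1, s_2, s_3$ arranged in a cycle, with rewards 0, 1, 2, 3.

$Q^\pi(s_0, +1) = 0 + \gamma V^\pi(s_1)$

- $Q^\pi(s_0, +1) = 7/6$
- $Q^\pi(s_0, -1) = 13/6$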
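The state-action values follow from the policy-evaluation results $V^\pi(s_1) = 7/3$ and $V^\pi(s_3) = 13/3$. A minimal check with exact fractions, assuming the states form a cycle (indices mod 4) and using a hypothetical helper name:

```python
from fractions import Fraction

GAMMA = Fraction(1, 2)
# V under the random policy, taken from the policy-evaluation example.
V = [Fraction(5, 3), Fraction(7, 3), Fraction(11, 3), Fraction(13, 3)]


def q_value(state: int, action: int) -> Fraction:
    """Q(s_i, a) = R(s_i, a) + gamma * V(s_{i+a}), with R(s_i, a) = i."""
    next_state = (state + action) % len(V)
    return state + GAMMA * V[next_state]
```

Here `q_value(0, +1)` evaluates $0 + \frac{1}{2} \cdot \frac{7}{3}$ and `q_value(0, -1)` evaluates $0 + \frac{1}{2} \cdot \frac{13}{3}$. Their average equals $V^\pi(s_0) = 5/3$, as expected for a policy that picks each action with probability 1/2.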

Algorithms - optimal control

CLAIM: A policy $\pi$ is optimal if and only if at each state $s$:

$V^\pi(s) = \max_a \{ Q^\pi(s, a) \}$ (Bellman Eq.)

PROOF: Assume there is a state $s$ and an action $a$ such that $V^\pi(s) < Q^\pi(s, a)$. Then the strategy of performing $a$ at state $s$ (the first time) is better than $\pi$. This is true each time we visit $s$, so the policy that performs action $a$ at state $s$ is better than $\pi$.

Algorithms - optimal control Example

- $A = \{+1, -1\}$
- $\gamma = 1/2$
- $\delta(s_i, a) = s_{i+a}$
- $\pi$ random
- $R(s_i, a) = i$

Figure: states $s_0, s_1, s_2, s_3$ arranged in a cycle, with rewards 0, 1, 2, 3.

Changing the policy using the state-action value function.

Algorithms - optimal control

The greedy policy with respect to $Q^\pi(s, a)$ is

$\pi(s) = \arg\max_a \{ Q^\pi(s, a) \}$

The $\epsilon$-greedy policy with respect to $Q^\pi(s, a)$ is

- $\pi(s) = \arg\max_a \{ Q^\pi(s, a) \}$ with probability $1 - \epsilon$, and
- $\pi(s)$ = random action with probability $\epsilon$.
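Both selection rules can be sketched in a few lines. The representation below (a dictionary mapping each action to its Q-value at one state) and the function names are assumptions made for illustration.

```python
import random
from typing import Dict, Hashable, Optional


def greedy_action(q_row: Dict[Hashable, float]) -> Hashable:
    """Return the action with the largest Q-value at this state."""
    return max(q_row, key=q_row.get)


def epsilon_greedy_action(q_row: Dict[Hashable, float], epsilon: float,
                          rng: Optional[random.Random] = None) -> Hashable:
    """With probability epsilon pick a uniformly random action, else be greedy."""
    if rng is None:
        rng = random.Random()
    if rng.random() < epsilon:
        return rng.choice(list(q_row))
    return greedy_action(q_row)
```

With the Q-values at $s_0$ under the random policy, `greedy_action({+1: 7 / 6, -1: 13 / 6})` picks the action $-1$. Note that the random branch can also return the greedy action, so the greedy action is chosen with probability slightly above $1 - \epsilon$.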

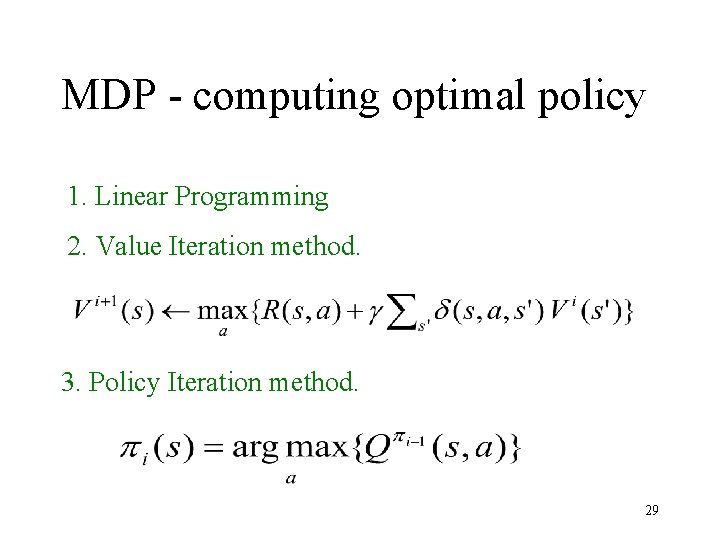

MDP - computing optimal policy

1. Linear Programming
2. Value Iteration method
3. Policy Iteration method
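As an illustration of the second method, here is a minimal value-iteration sketch on the four-state cycle example (deterministic moves $s_i \to s_{i+a}$ with indices mod 4, $R(s_i, a) = i$, $\gamma = 1/2$). The stopping tolerance and the function names are assumptions, not part of the slides.

```python
from typing import List, Tuple

GAMMA = 0.5
N_STATES = 4
ACTIONS = (+1, -1)


def q_from_values(values: List[float], state: int, action: int) -> float:
    """One-step lookahead: R(s_i, a) + gamma * V(s_{i+a}), with R(s_i, a) = i."""
    return state + GAMMA * values[(state + action) % N_STATES]


def value_iteration(tol: float = 1e-10) -> Tuple[List[float], List[int]]:
    """Repeat V(s) <- max_a Q(s, a) until the largest change is below tol."""
    values = [0.0] * N_STATES
    while True:
        new_values = [
            max(q_from_values(values, s, a) for a in ACTIONS)
            for s in range(N_STATES)
        ]
        gap = max(abs(n - o) for n, o in zip(new_values, values))
        values = new_values
        if gap < tol:
            break
    # Read off the greedy policy with respect to the final values.
    policy = [
        max(ACTIONS, key=lambda a, s=s: q_from_values(values, s, a))
        for s in range(N_STATES)
    ]
    return values, policy
```

On this example the optimal behaviour is to head for the high-reward states and then alternate between $s_2$ and $s_3$.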

Convergence

- Value Iteration
  - The distance from optimal, $\max_s \{ V^*(s) - V_t(s) \}$, drops in each iteration.
- Policy Iteration
  - The policy can only improve: $\forall s: V_{t+1}(s) \ge V_t(s)$.
  - Fewer iterations than Value Iteration, but more expensive iterations.
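The monotone improvement of Policy Iteration can be observed on the four-state cycle example (moves $s_i \to s_{i+a}$ with indices mod 4, $R(s_i, a) = i$, $\gamma = 1/2$). The sketch below is a simplified variant under stated assumptions: it evaluates each policy by repeated sweeps rather than by solving the linear system, starts from the arbitrary policy "always $+1$", and uses hypothetical names.

```python
from typing import List, Tuple

GAMMA = 0.5
N_STATES = 4
ACTIONS = (+1, -1)


def evaluate(policy: List[int], sweeps: int = 100) -> List[float]:
    """Approximate V under a deterministic policy by repeated Bellman sweeps."""
    values = [0.0] * N_STATES
    for _ in range(sweeps):
        values = [
            s + GAMMA * values[(s + policy[s]) % N_STATES]
            for s in range(N_STATES)
        ]
    return values


def policy_iteration() -> Tuple[List[int], List[List[float]]]:
    """Alternate evaluation and greedy improvement until the policy is stable."""
    policy = [+1] * N_STATES  # arbitrary starting policy (an assumption)
    history = []  # value function of every policy visited
    while True:
        values = evaluate(policy)
        history.append(values)
        improved = [
            max(ACTIONS,
                key=lambda a, s=s: s + GAMMA * values[(s + a) % N_STATES])
            for s in range(N_STATES)
        ]
        if improved == policy:
            return policy, history
        policy = improved
```

Each pass costs a full policy evaluation, which is the "more expensive iterations" part of the tradeoff; on this small example only two policies are evaluated before the policy stops changing.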

Relations to Board Games

- state = current board
- action = what we can play
- opponent action = part of the environment
- value function = probability of winning
- Q-function = modified policy

Hidden assumption: the game is Markovian.

Planning versus Learning

Tightly coupled in Reinforcement Learning.

Goal: maximize return while learning.

Example - Elevator Control

- Learning (alone): model the arrival process well.
- Planning (alone): given the arrival model, build a schedule.
- Real objective: construct a schedule while updating the model.

Partially Observable MDP

Rather than observing the state, we observe some function of the state.

- $Ob$ - observable function: a random variable for each state.
- Examples: (1) $Ob(s) = s + \text{noise}$; (2) $Ob(s)$ = first bit of $s$.
- Problem: different states may “look” similar.

The optimal strategy is history dependent!

POMDP - Belief State Algorithm

Given a history of actions and observed values, we compute a posterior distribution over the state we are in (the belief state).

The belief-state MDP:

- States: distributions over $S$ (the states of the POMDP).
- Actions: as in the POMDP.
- Transition: the posterior distribution (given the observation).

We can perform planning and learning on the belief-state MDP.
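The posterior computation can be sketched as a standard Bayes update: predict the next-state distribution under the chosen action, weight it by the likelihood of the observation, and renormalize. The data layout and the function name below are assumptions made for illustration.

```python
from typing import List


def belief_update(belief: List[float],
                  transition: List[List[float]],
                  obs_likelihood: List[float]) -> List[float]:
    """Return the posterior belief after one action and one observation.

    transition[s][s2] = P(s2 | s, a) for the action that was taken.
    obs_likelihood[s2] = P(observation | s2) for the value that was observed.
    """
    n = len(belief)
    # Prediction step: push the current belief through the transition model.
    predicted = [
        sum(belief[s] * transition[s][s2] for s in range(n))
        for s2 in range(n)
    ]
    # Correction step: weight by the observation likelihood and normalize.
    unnormalized = [obs_likelihood[s2] * predicted[s2] for s2 in range(n)]
    total = sum(unnormalized)
    if total == 0.0:
        raise ValueError("observation has zero probability under this belief")
    return [u / total for u in unnormalized]
```

For instance, with two states that never change, a uniform belief, and an observation that is four times as likely in the first state as in the second (0.8 versus 0.2), the updated belief puts 0.8 on the first state.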

POMDP - Hard computational problems

- Computing an infinite (polynomial) horizon undiscounted optimal strategy for a deterministic POMDP is PSPACE-hard (NP-complete) [PT, L].
- Computing an infinite (polynomial) horizon undiscounted optimal strategy for a stochastic POMDP is EXPTIME-hard (PSPACE-complete) [PT, L].
- Computing an infinite (polynomial) horizon undiscounted optimal policy for an MDP is P-complete [PT].

![Resources Reinforcement Learning an introduction Sutton Barto Markov Decision Processes Puterman Resources • Reinforcement Learning (an introduction) [Sutton & Barto] • Markov Decision Processes [Puterman]](https://slidetodoc.com/presentation_image_h2/c7fde7ac2275256f9cc0b7ff044a1306/image-37.jpg)

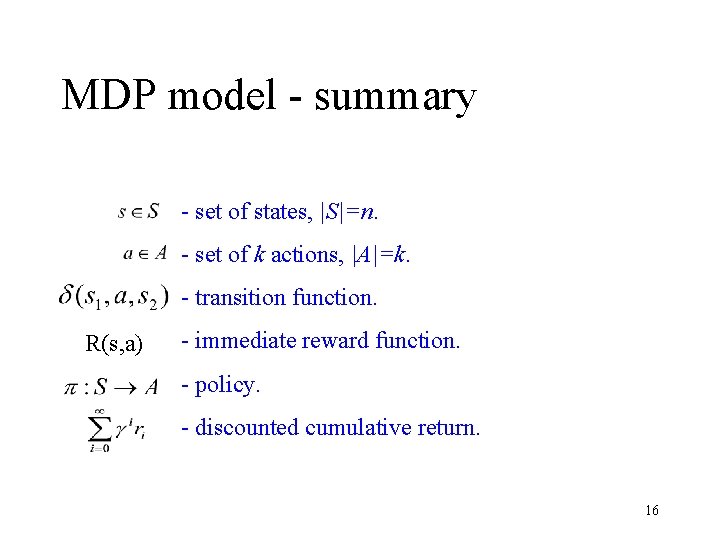

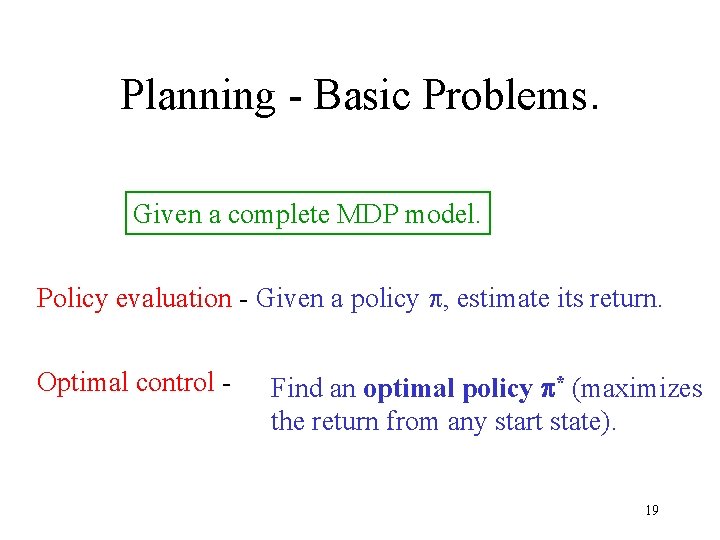

Resources

- Reinforcement Learning: An Introduction [Sutton & Barto]
- Markov Decision Processes [Puterman]
- Dynamic Programming and Optimal Control [Bertsekas]
- Neuro-Dynamic Programming [Bertsekas & Tsitsiklis]
- Ph.D. thesis - Michael Littman