Reinforcement Learning, Part I: Tabular Solution Methods Mini-Bootcamp

Reinforcement Learning | Part I: Tabular Solution Methods Mini-Bootcamp
Richard S. Sutton & Andrew G. Barto, 1st ed. (1998), 2nd ed. (2018)
Presented by Nicholas Roy, Pillow Lab Meeting, June 27, 2019

RL of the tabular variety
• What is special about RL?
– “The most important feature distinguishing reinforcement learning from other types of learning is that it uses training information that evaluates the actions taken rather than instructs by giving correct actions. This is what creates the need for active exploration, for an explicit search for good behavior. Purely evaluative feedback indicates how good the action taken was, but not whether it was the best or the worst action possible.”
• What is the point of Part I?
– “We describe almost all the core ideas of reinforcement learning algorithms in their simplest forms: that in which the state and action spaces are small enough for the approximate value functions to be represented as arrays, or tables. In this case, the methods can often find exact solutions…”
Reinforcement Learning Mini-Bootcamp Nicholas Roy Pillow Lab Meeting, 06/27/19

Part I: Tabular Solution Methods
• Ch 2: Multi-armed Bandits
• Ch 3: Finite Markov Decision Processes
• Ch 4: Dynamic Programming
• Ch 5: Monte Carlo Methods
• Ch 6: Temporal-Difference Learning
• Ch 7: n-step Bootstrapping
• Ch 8: Planning and Learning with Tabular Methods

Part I: Tabular Solution Methods | Ch 3: Finite Markov Decision Processes

Let’s get through the basics…
• Agent/Environment
• States
• Actions
• Rewards
• Markov
• MDP
• Dynamics p(s', r | s, a)
• Returns
• Discount factors
• Episodic/Continuing tasks
• Policies
• State-/Action-value function
• Bellman equation
• Optimal policies

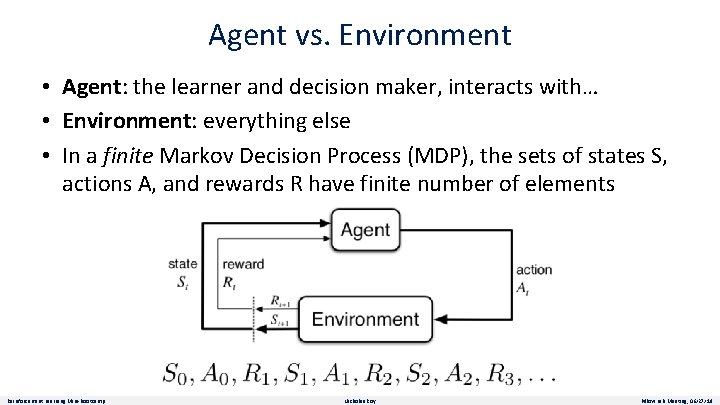

Agent vs. Environment
• Agent: the learner and decision maker, which interacts with…
• Environment: everything outside the agent
• In a finite Markov Decision Process (MDP), the sets of states S, actions A, and rewards R each have a finite number of elements

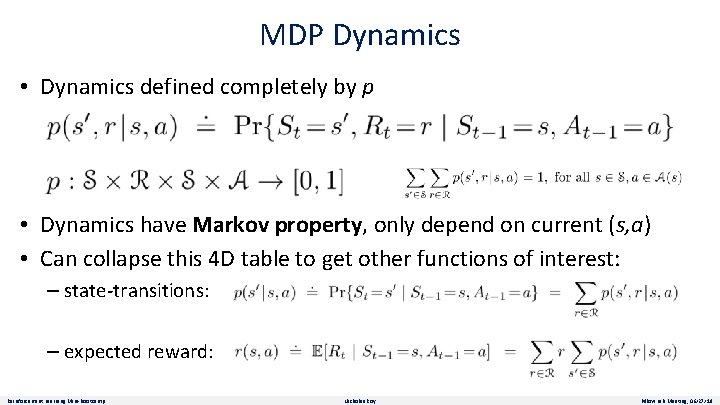

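MDP Dynamics
• Dynamics defined completely by $p(s', r \mid s, a) \doteq \Pr\{S_t = s', R_t = r \mid S_{t-1} = s, A_{t-1} = a\}$
• Dynamics have the Markov property: they depend only on the current (s, a)
• Can collapse this 4D table to get other functions of interest:
– state-transition probabilities: $p(s' \mid s, a) = \sum_{r \in \mathcal{R}} p(s', r \mid s, a)$
– expected reward: $r(s, a) = \mathbb{E}[R_t \mid S_{t-1} = s, A_{t-1} = a] = \sum_{r \in \mathcal{R}} r \sum_{s' \in \mathcal{S}} p(s', r \mid s, a)$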
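To make the collapse concrete, here is a minimal sketch that stores the four-argument dynamics as a Python dict keyed by (s, a) and sums out r (and s') to recover p(s' | s, a) and r(s, a). The two-state MDP and the helper names `state_transition` and `expected_reward` are hypothetical, chosen only for illustration.

```python
from collections import defaultdict

# Hypothetical two-state MDP. dynamics[(s, a)] maps (s_next, r) -> p(s_next, r | s, a);
# for each (s, a) the probabilities sum to 1.
dynamics = {
    ("A", "stay"): {("A", 0.0): 0.9, ("B", 1.0): 0.1},
    ("A", "go"):   {("B", 1.0): 0.8, ("A", -1.0): 0.2},
    ("B", "stay"): {("B", 0.0): 1.0},
    ("B", "go"):   {("A", 2.0): 0.5, ("A", 0.0): 0.5},
}

def state_transition(dynamics, s, a):
    """p(s' | s, a): sum the four-argument dynamics over the reward."""
    probs = defaultdict(float)
    for (s_next, _r), p in dynamics[(s, a)].items():
        probs[s_next] += p
    return dict(probs)

def expected_reward(dynamics, s, a):
    """r(s, a): sum of r * p(s', r | s, a) over all s' and r."""
    return sum(r * p for (_s_next, r), p in dynamics[(s, a)].items())

print(state_transition(dynamics, "B", "go"))  # {'A': 1.0}
print(expected_reward(dynamics, "A", "go"))   # approximately 0.6 (0.8 * 1.0 + 0.2 * -1.0)
```

Keeping the full p(s', r | s, a) table means both derived quantities stay consistent with a single source of truth.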

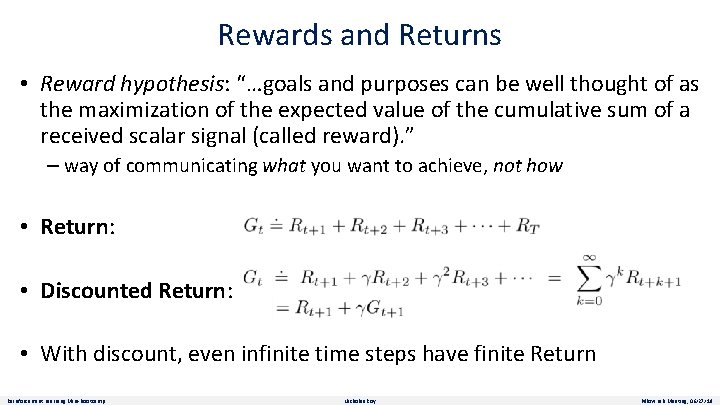

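Rewards and Returns
• Reward hypothesis: “…goals and purposes can be well thought of as the maximization of the expected value of the cumulative sum of a received scalar signal (called reward).”
– Reward is a way of communicating what you want the agent to achieve, not how to achieve it
• Return (episodic task with final time step T): $G_t \doteq R_{t+1} + R_{t+2} + \cdots + R_T$
• Discounted return, with discount rate $0 \le \gamma \le 1$: $G_t \doteq \sum_{k=0}^{\infty} \gamma^k R_{t+k+1} = R_{t+1} + \gamma G_{t+1}$
• With discounting ($\gamma < 1$) and bounded rewards, even an infinite number of time steps yields a finite return: $|G_t| \le R_{\max} / (1 - \gamma)$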
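The recursion $G_t = R_{t+1} + \gamma G_{t+1}$ gives a simple way to compute returns by sweeping backwards over an episode's rewards. A minimal sketch, assuming the rewards of an episode are given as a plain list [R_1, ..., R_T]; the function names are illustrative.

```python
def discounted_return(rewards, gamma):
    """G_0 for rewards = [R_1, ..., R_T], via the recursion G_t = R_{t+1} + gamma * G_{t+1}."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g

def all_returns(rewards, gamma):
    """[G_0, ..., G_{T-1}] computed in one backward pass."""
    returns = [0.0] * len(rewards)
    g = 0.0
    for t in reversed(range(len(rewards))):
        g = rewards[t] + gamma * g
        returns[t] = g
    return returns

print(discounted_return([1, 1, 1], gamma=1.0))  # 3.0 (undiscounted episodic return)
print(discounted_return([1, 1, 1], gamma=0.5))  # 1.75 = 1 + 0.5 + 0.25
print(all_returns([1, 1, 1], gamma=0.5))        # [1.75, 1.5, 1.0]
# Bounded rewards (R_max = 1) with gamma = 0.9: a very long episode stays within 1 / (1 - 0.9) = 10
print(discounted_return([1] * 10_000, gamma=0.9))
```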

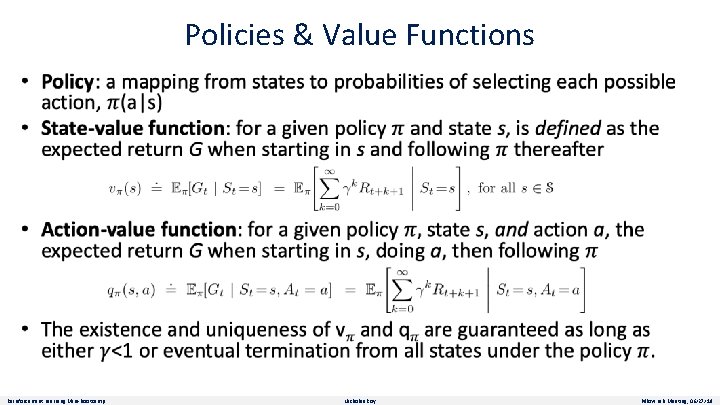

Policies & Value Functions

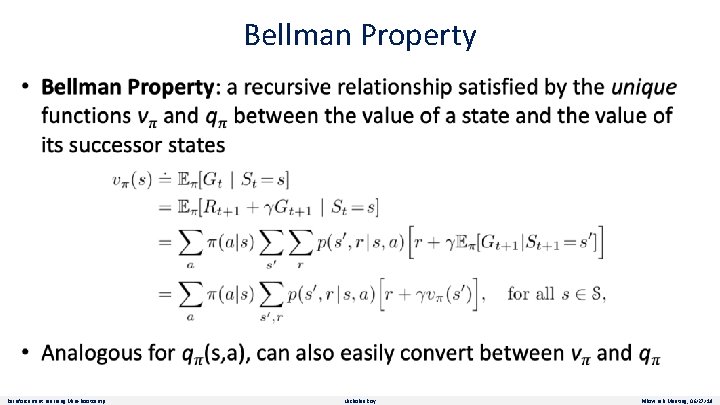

Bellman Property
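The Bellman equation for $v_\pi$ states that each state's value equals the expected immediate reward plus the discounted value of the successor, averaged over the policy and the dynamics: $v_\pi(s) = \sum_a \pi(a \mid s) \sum_{s', r} p(s', r \mid s, a)\,[r + \gamma v_\pi(s')]$. The sketch below checks this consistency condition on a hypothetical two-state MDP whose $v_\pi$ was solved by hand; the MDP, the policy, and `bellman_rhs` are illustrative assumptions, not the slide's example.

```python
GAMMA = 0.5

# Hypothetical two-state MDP with deterministic transitions:
# dynamics[(s, a)] maps (s_next, r) -> p(s_next, r | s, a)
dynamics = {
    ("A", "stay"):   {("A", 0.0): 1.0},
    ("A", "switch"): {("B", 1.0): 1.0},
    ("B", "stay"):   {("B", 2.0): 1.0},
    ("B", "switch"): {("A", 0.0): 1.0},
}
# Equiprobable random policy pi(a | s)
policy = {s: {"stay": 0.5, "switch": 0.5} for s in ("A", "B")}

def bellman_rhs(v, s):
    """sum_a pi(a|s) * sum_{s', r} p(s', r | s, a) * (r + gamma * v(s'))"""
    return sum(
        pi_a * p * (r + GAMMA * v[s_next])
        for a, pi_a in policy[s].items()
        for (s_next, r), p in dynamics[(s, a)].items()
    )

# By hand: v(A) = 0.5 * (0 + 0.5 v(A)) + 0.5 * (1 + 0.5 v(B))
#          v(B) = 0.5 * (2 + 0.5 v(B)) + 0.5 * (0 + 0.5 v(A))
# which solves to v_pi(A) = 1.25, v_pi(B) = 1.75
v_pi = {"A": 1.25, "B": 1.75}
for s in v_pi:
    print(s, v_pi[s], bellman_rhs(v_pi, s))   # A 1.25 1.25, then B 1.75 1.75

# A value function that is not v_pi fails the check
print(bellman_rhs({"A": 0.0, "B": 0.0}, "A"))  # 0.5, not 0.0
```

The same right-hand side, applied repeatedly as an update instead of a check, is what DP's iterative policy evaluation does.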

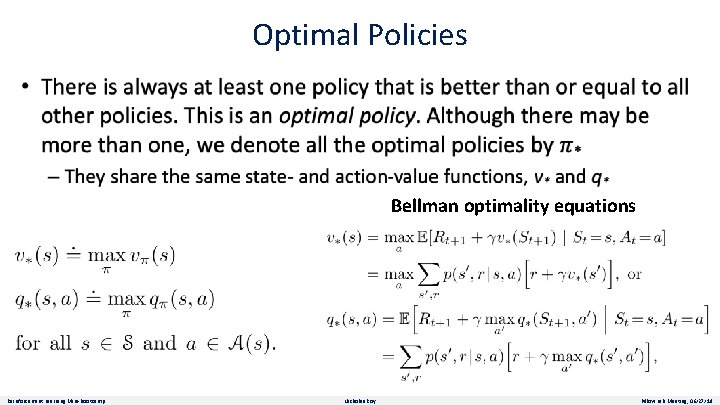

Optimal Policies
Bellman optimality equations
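The Bellman optimality equation replaces the policy average with a max over actions, $v_*(s) = \max_a \sum_{s', r} p(s', r \mid s, a)\,[r + \gamma v_*(s')]$, and any policy that is greedy with respect to $v_*$ is optimal. As a sketch, the code below uses the 4×4 gridworld of the book's Example 4.1 (two terminal corners, reward -1 per move, $\gamma = 1$), takes the candidate $v_*(s) = -$(steps to the nearest corner), checks the optimality equation, and reads off the greedy actions. The state numbering and helper names are our own illustrative choices.

```python
N = 4                                 # 4x4 grid, states 0..15 in row-major order
TERMINALS = {0, N * N - 1}            # top-left and bottom-right corners
ACTIONS = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}
GAMMA = 1.0                           # undiscounted episodic task

def step(s, a):
    """Deterministic move with reward -1; moves off the grid leave the state unchanged."""
    row, col = divmod(s, N)
    dr, dc = ACTIONS[a]
    nr, nc = row + dr, col + dc
    if 0 <= nr < N and 0 <= nc < N:
        return nr * N + nc, -1.0
    return s, -1.0

def q_from_v(v, s, a):
    """One-step lookahead r + gamma * v(s') (transitions here are deterministic)."""
    s_next, r = step(s, a)
    return r + GAMMA * v[s_next]

def distance_to_terminal(s):
    """Manhattan distance to the nearer terminal corner."""
    row, col = divmod(s, N)
    return min(row + col, (N - 1 - row) + (N - 1 - col))

# Candidate: every move costs 1, so v*(s) = -(shortest distance to a terminal)
v_star = {s: -float(distance_to_terminal(s)) for s in range(N * N)}

def satisfies_optimality(v):
    """Check v(s) == max_a [r + gamma * v(s')] at every non-terminal state."""
    return all(v[s] == max(q_from_v(v, s, a) for a in ACTIONS)
               for s in range(N * N) if s not in TERMINALS)

def greedy_actions(v, s):
    """Actions attaining the max; any policy choosing among them is optimal."""
    best = max(q_from_v(v, s, a) for a in ACTIONS)
    return [a for a in ACTIONS if q_from_v(v, s, a) == best]

print(satisfies_optimality(v_star))   # True
print(greedy_actions(v_star, 1))      # ['left']
print(greedy_actions(v_star, 6))      # ['up', 'down', 'left', 'right']
```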

Part I: Tabular Solution Methods | Ch 4: Dynamic Programming

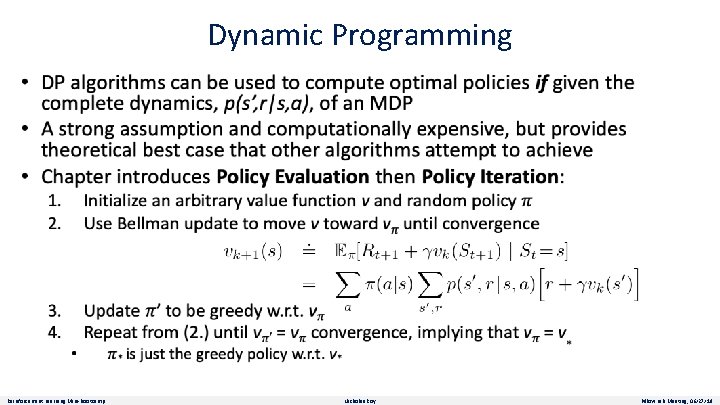

Dynamic Programming
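DP turns the Bellman equation into an update rule and sweeps it over the state space. Below is a minimal sketch of in-place iterative policy evaluation for the equiprobable random policy on the 4×4 gridworld of the book's Example 4.1 (two terminal corners, reward -1 per move, γ = 1); the state numbering, `step`, and `policy_evaluation` are illustrative choices.

```python
N = 4                                 # 4x4 grid, states 0..15 in row-major order
TERMINALS = {0, N * N - 1}            # top-left and bottom-right corners
ACTIONS = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}

def step(s, a):
    """Deterministic move with reward -1; moves off the grid leave the state unchanged."""
    row, col = divmod(s, N)
    dr, dc = ACTIONS[a]
    nr, nc = row + dr, col + dc
    if 0 <= nr < N and 0 <= nc < N:
        return nr * N + nc, -1.0
    return s, -1.0

def policy_evaluation(gamma=1.0, theta=1e-6):
    """In-place iterative policy evaluation of the equiprobable random policy."""
    v = [0.0] * (N * N)               # terminal states keep value 0
    pi = 1.0 / len(ACTIONS)           # pi(a | s) = 1/4 for every action
    while True:
        delta = 0.0
        for s in range(N * N):
            if s in TERMINALS:
                continue
            # Bellman expectation update (transitions are deterministic, so p = 1)
            new_v = sum(pi * (r + gamma * v[s_next])
                        for s_next, r in (step(s, a) for a in ACTIONS))
            delta = max(delta, abs(new_v - v[s]))
            v[s] = new_v              # in place: later states in this sweep see the new value
        if delta < theta:
            return v

v = policy_evaluation()
for row in range(N):
    print([round(x) for x in v[row * N:(row + 1) * N]])
# [0, -14, -20, -22]
# [-14, -18, -20, -20]
# [-20, -20, -18, -14]
# [-22, -20, -14, 0]
```

The rounded values match the converged random-policy values the book reports for this gridworld; each one is exactly -1 plus the average of its four neighbors' values.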

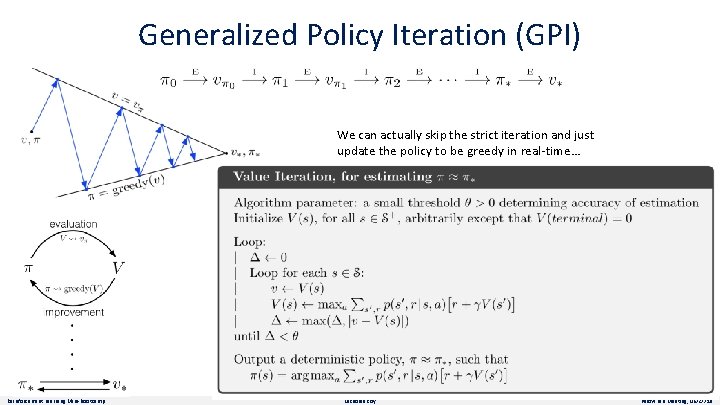

Generalized Policy Iteration (GPI)
We don't need to run policy evaluation to convergence before every improvement step: evaluation and improvement can be interleaved at a much finer grain, keeping the policy greedy with respect to the current value estimates as they are updated…
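Value iteration is one concrete instance of this idea: policy evaluation is cut to a single sweep and the greedy improvement is folded into the update, $v(s) \leftarrow \max_a \sum_{s', r} p(s', r \mid s, a)\,[r + \gamma v(s')]$. A minimal sketch on the 4×4 gridworld of the book's Example 4.1 (two terminal corners, reward -1 per move, γ = 1); the state numbering and helper names are illustrative.

```python
N = 4                                 # 4x4 grid, states 0..15 in row-major order
TERMINALS = {0, N * N - 1}            # top-left and bottom-right corners
ACTIONS = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}

def step(s, a):
    """Deterministic move with reward -1; moves off the grid leave the state unchanged."""
    row, col = divmod(s, N)
    dr, dc = ACTIONS[a]
    nr, nc = row + dr, col + dc
    if 0 <= nr < N and 0 <= nc < N:
        return nr * N + nc, -1.0
    return s, -1.0

def lookahead(v, s, a, gamma):
    """r + gamma * v(s') for the (deterministic) outcome of taking a in s."""
    s_next, r = step(s, a)
    return r + gamma * v[s_next]

def value_iteration(gamma=1.0, theta=1e-9):
    """Each sweep fuses one truncated evaluation step with greedy improvement."""
    v = [0.0] * (N * N)               # terminal states keep value 0
    while True:
        delta = 0.0
        for s in range(N * N):
            if s in TERMINALS:
                continue
            best = max(lookahead(v, s, a, gamma) for a in ACTIONS)
            delta = max(delta, abs(best - v[s]))
            v[s] = best
        if delta < theta:
            break
    # Deterministic greedy policy; max() keeps the first of any tied actions
    policy = {s: max(ACTIONS, key=lambda a: lookahead(v, s, a, gamma))
              for s in range(N * N) if s not in TERMINALS}
    return v, policy

v, policy = value_iteration()
for row in range(N):
    print(v[row * N:(row + 1) * N])   # first row: [0.0, -1.0, -2.0, -3.0]
print(policy[1], policy[14])          # left right
```

No explicit policy is stored during the sweeps; the policy is only read off at the end, which is the "skip the strict iteration" idea in its most extreme form.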

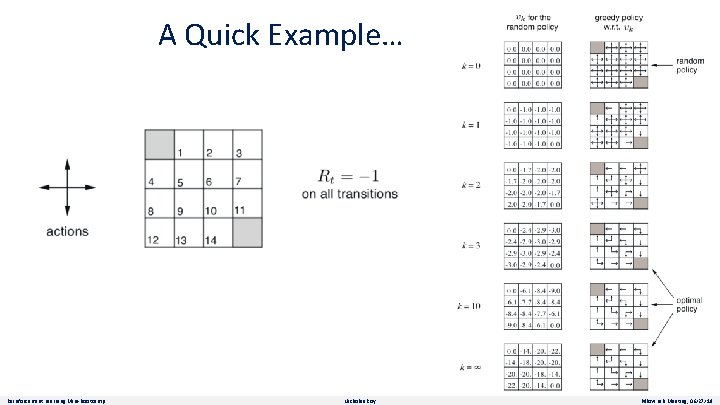

A Quick Example…

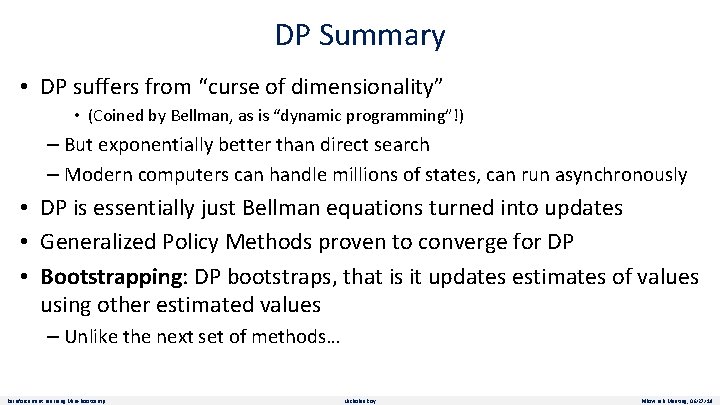

DP Summary
• DP suffers from the “curse of dimensionality”
– (Coined by Bellman, as is “dynamic programming”!)
– But DP is still exponentially faster than direct search over the space of policies
– On modern computers, DP can handle MDPs with millions of states, and asynchronous DP methods can help further
• DP is essentially just Bellman equations turned into update rules
• Generalized policy iteration (GPI) methods such as policy iteration and value iteration are proven to converge for DP
• Bootstrapping: DP bootstraps, that is, it updates estimates of values using other estimated values
– Unlike the next set of methods…

Part I: Tabular Solution Methods | Ch 5: Monte Carlo Methods

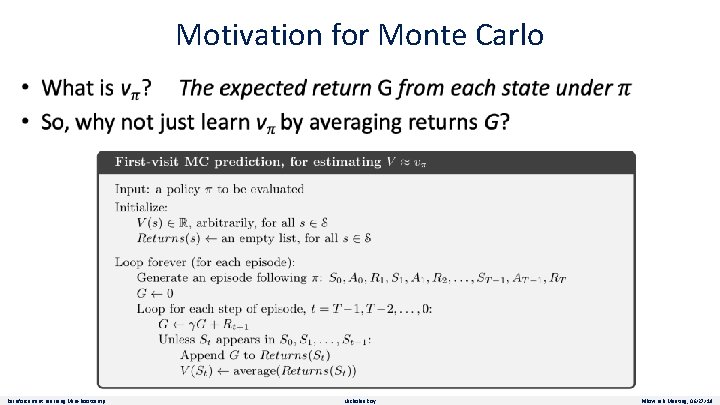

Motivation for Monte Carlo

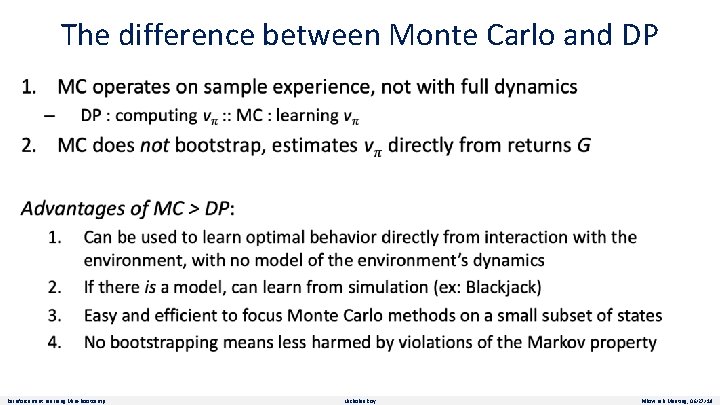

The difference between Monte Carlo and DP
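Unlike DP, Monte Carlo needs no model $p(s', r \mid s, a)$: it averages complete sampled returns, and it does not bootstrap. A minimal sketch of first-visit MC prediction on the five-state random walk from the book's Example 6.2 (start in C, +1 only for exiting on the right, true values 1/6 through 5/6); the episode format and function names are assumptions made for illustration.

```python
import random

STATES = ["A", "B", "C", "D", "E"]    # non-terminal states; every episode starts in C
TRUE_V = {s: (i + 1) / 6 for i, s in enumerate(STATES)}  # 1/6, 2/6, ..., 5/6

def generate_episode(rng):
    """Sample one episode as [(S_t, R_{t+1}), ...]: move left or right with equal
    probability; the reward is +1 only when exiting on the right, 0 otherwise."""
    pos, episode = 2, []
    while 0 <= pos < len(STATES):
        nxt = pos + rng.choice((-1, 1))
        episode.append((STATES[pos], 1.0 if nxt == len(STATES) else 0.0))
        pos = nxt
    return episode

def first_visit_mc(num_episodes, gamma=1.0, seed=0):
    """Estimate v_pi(s) as the average of the returns following the first visit to s."""
    rng = random.Random(seed)
    returns_sum = {s: 0.0 for s in STATES}
    returns_count = {s: 0 for s in STATES}
    for _ in range(num_episodes):
        g, first_return = 0.0, {}
        for state, reward in reversed(generate_episode(rng)):
            g = reward + gamma * g           # G_t = R_{t+1} + gamma * G_{t+1}
            first_return[state] = g          # earlier visits overwrite later ones
        for state, ret in first_return.items():
            returns_sum[state] += ret
            returns_count[state] += 1
    return {s: returns_sum[s] / returns_count[s] for s in STATES if returns_count[s]}

V = first_visit_mc(20_000)
for s in STATES:
    print(s, round(V[s], 3), "true:", round(TRUE_V[s], 3))
```

Nothing here touches transition probabilities: the environment is only ever sampled, which is exactly what separates this from the DP sweeps.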

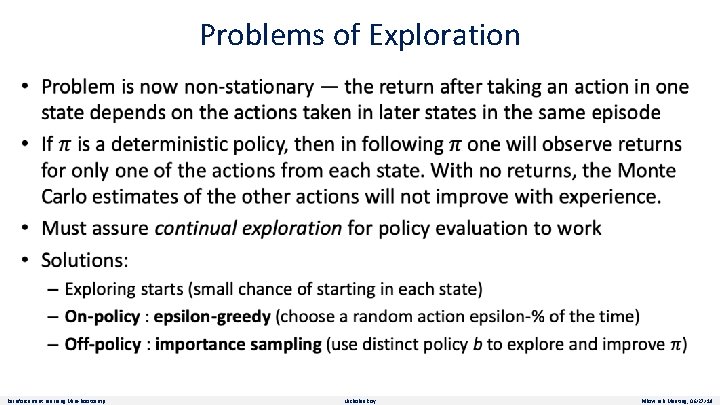

Problems of Exploration
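If the agent always acts greedily with respect to its current estimates, some actions may never be tried, so their estimates never improve. One standard remedy covered in the book is an ε-soft policy such as ε-greedy, sketched below; the helper names and the example action values are hypothetical.

```python
import random

def epsilon_greedy_probs(q_values, epsilon):
    """pi(a|s) for epsilon-greedy: every action gets epsilon/|A|, and the remaining
    1 - epsilon is split evenly among the greedy (tied-maximal) actions."""
    n = len(q_values)
    best = max(q_values)
    greedy = [a for a, q in enumerate(q_values) if q == best]
    probs = [epsilon / n] * n
    for a in greedy:
        probs[a] += (1.0 - epsilon) / len(greedy)
    return probs

def epsilon_greedy(q_values, epsilon, rng):
    """Sample an action index from the epsilon-greedy distribution."""
    probs = epsilon_greedy_probs(q_values, epsilon)
    return rng.choices(range(len(q_values)), weights=probs)[0]

q = [0.0, 1.0, 0.5]                                          # hypothetical action-value estimates
print([round(p, 3) for p in epsilon_greedy_probs(q, 0.1)])   # [0.033, 0.933, 0.033]

rng = random.Random(0)
picks = [epsilon_greedy(q, 0.1, rng) for _ in range(10_000)]
print(picks.count(1) / len(picks))   # close to 0.933; actions 0 and 2 are still tried sometimes
```

Because every action keeps probability at least ε/|A|, every state-action pair the agent reaches keeps being explored.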

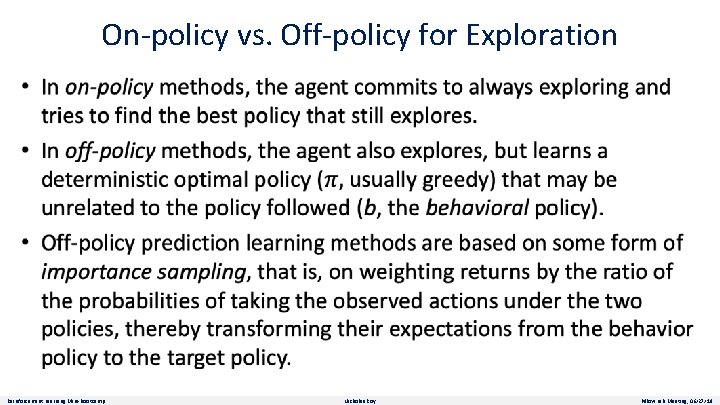

On-policy vs. Off-policy for Exploration
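Off-policy methods learn about a target policy π from episodes generated by a different behavior policy b, reweighting each return by the importance-sampling ratio $\rho = \prod_k \pi(A_k \mid S_k) / b(A_k \mid S_k)$; this requires coverage, i.e. $b(a \mid s) > 0$ wherever $\pi(a \mid s) > 0$. A minimal sketch of ordinary and weighted importance-sampling estimates on a hypothetical one-step problem; the names and data are illustrative.

```python
import random

def importance_ratio(episode, target, behavior):
    """rho = product over steps of pi(A_k | S_k) / b(A_k | S_k); episode is [(S_k, A_k, R_{k+1}), ...]."""
    rho = 1.0
    for state, action, _reward in episode:
        rho *= target[state][action] / behavior[state][action]
    return rho

def off_policy_mc_estimates(episodes, target, behavior, gamma=1.0):
    """Ordinary and weighted importance-sampling estimates of v_pi(start state)."""
    scaled_returns, ratios = [], []
    for episode in episodes:
        g = 0.0
        for _state, _action, reward in reversed(episode):
            g = reward + gamma * g
        rho = importance_ratio(episode, target, behavior)
        scaled_returns.append(rho * g)
        ratios.append(rho)
    ordinary = sum(scaled_returns) / len(episodes)
    total_weight = sum(ratios)
    weighted = sum(scaled_returns) / total_weight if total_weight > 0 else 0.0
    return ordinary, weighted

# Hypothetical one-step problem: in state "s", action "L" pays 1 and "R" pays 0.
behavior = {"s": {"L": 0.5, "R": 0.5}}   # exploratory behavior policy b (covers pi)
target = {"s": {"L": 1.0, "R": 0.0}}     # greedy target policy pi, so v_pi(s) = 1
rng = random.Random(0)
episodes = []
for _ in range(10_000):
    a = rng.choice(["L", "R"])           # actions come from b, not from pi
    episodes.append([("s", a, 1.0 if a == "L" else 0.0)])

ordinary, weighted = off_policy_mc_estimates(episodes, target, behavior)
print(ordinary, weighted)  # ordinary is close to 1 but noisy; weighted is exactly 1.0 in this example
```

Ordinary importance sampling is unbiased but can have high variance; weighted importance sampling is biased (the bias goes to zero as episodes accumulate) and usually has much lower variance.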

Part I: Tabular Solution Methods | Ch 6: Temporal-Difference Learning

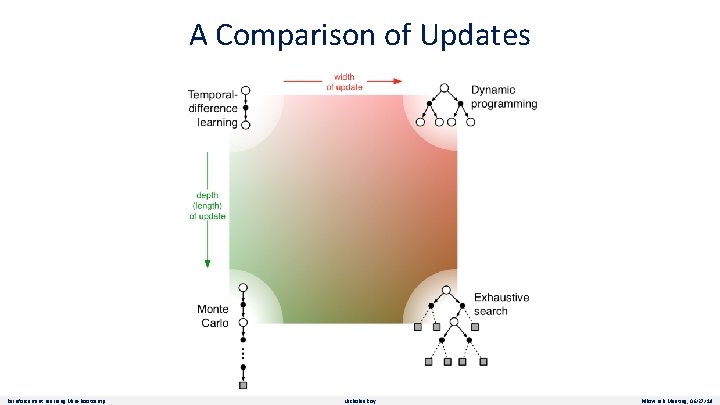

A Comparison of Updates

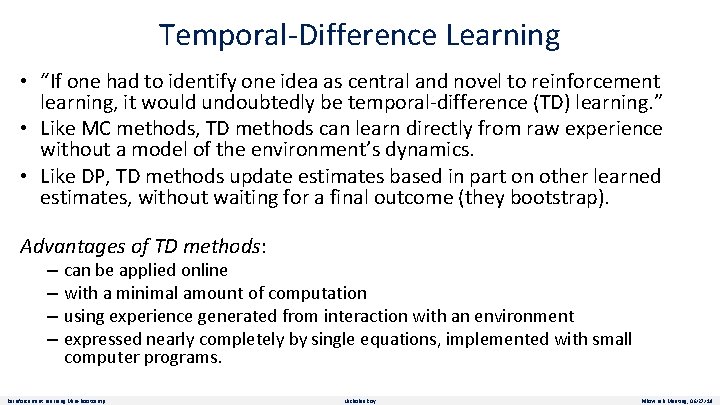

Temporal-Difference Learning
• “If one had to identify one idea as central and novel to reinforcement learning, it would undoubtedly be temporal-difference (TD) learning.”
• Like MC methods, TD methods can learn directly from raw experience without a model of the environment’s dynamics.
• Like DP, TD methods update estimates based in part on other learned estimates, without waiting for a final outcome (they bootstrap).
• Advantages of TD methods:
– they can be applied online, with a minimal amount of computation, to experience generated from interaction with an environment;
– they can be expressed nearly completely by single equations that can be implemented with small computer programs.

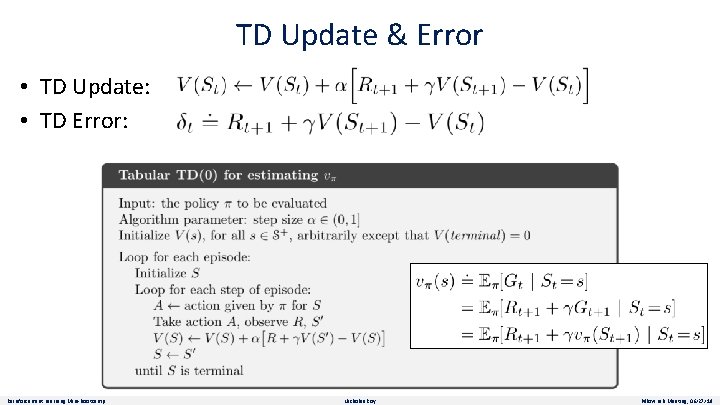

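TD Update & Error
• TD update (TD(0)): $V(S_t) \leftarrow V(S_t) + \alpha \left[ R_{t+1} + \gamma V(S_{t+1}) - V(S_t) \right]$
• TD error: $\delta_t \doteq R_{t+1} + \gamma V(S_{t+1}) - V(S_t)$, so the update is $V(S_t) \leftarrow V(S_t) + \alpha \delta_t$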
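A minimal sketch of a single TD(0) step, assuming a dict-based value table and taking the value of a terminal state to be 0; `td0_update` and the example values are illustrative.

```python
def td0_update(V, s, r, s_next, alpha, gamma, terminal=False):
    """One TD(0) step: compute delta_t = R_{t+1} + gamma * V(S_{t+1}) - V(S_t)
    (with V(terminal) = 0), then move V(S_t) a fraction alpha toward the target.
    Returns delta_t."""
    target = r if terminal else r + gamma * V[s_next]
    delta = target - V[s]
    V[s] += alpha * delta
    return delta

V = {"A": 0.5, "B": 0.5}   # hypothetical current estimates
delta = td0_update(V, "A", r=1.0, s_next="B", alpha=0.1, gamma=0.9)
print(delta, V["A"])       # delta is about 0.95; V["A"] moves from 0.5 to about 0.595
```

The update can be applied as soon as (S_t, R_{t+1}, S_{t+1}) is observed, without waiting for the end of the episode.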

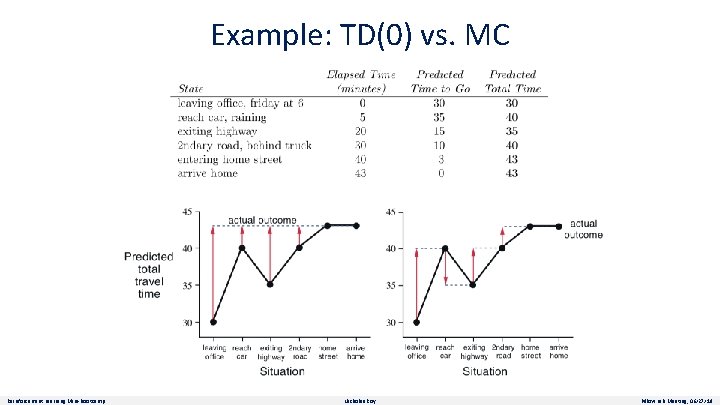

Example: TD(0) vs. MC
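A sketch of this kind of comparison, using the five-state random walk of the book's Example 6.2 with all estimates initialized to 0.5. Both learners see the same episodes: TD(0) updates after every step, while every-visit constant-α MC waits for the episode's outcome. The step size, episode count, and seed are arbitrary choices; this does not reproduce the slide's figure, and which method ends up with lower error on a given run depends on them.

```python
import math
import random

STATES = ["A", "B", "C", "D", "E"]
TRUE_V = {s: (i + 1) / 6 for i, s in enumerate(STATES)}

def generate_episode(rng):
    """Return [(S_t, R_{t+1}, S_{t+1}), ...]; S_{t+1} is None when the walk terminates."""
    pos, transitions = 2, []
    while True:
        nxt = pos + rng.choice((-1, 1))
        done = not 0 <= nxt < len(STATES)
        reward = 1.0 if nxt == len(STATES) else 0.0
        transitions.append((STATES[pos], reward, None if done else STATES[nxt]))
        if done:
            return transitions
        pos = nxt

def td0(V, episode, alpha, gamma=1.0):
    """TD(0): update after every step, bootstrapping from V(S_{t+1})."""
    for s, r, s_next in episode:
        target = r + (gamma * V[s_next] if s_next is not None else 0.0)
        V[s] += alpha * (target - V[s])

def constant_alpha_mc(V, episode, alpha, gamma=1.0):
    """Every-visit constant-alpha MC: after the episode ends, move each V(S_t) toward G_t."""
    g = 0.0
    for s, r, _ in reversed(episode):
        g = r + gamma * g
        V[s] += alpha * (g - V[s])

def rms_error(V):
    return math.sqrt(sum((V[s] - TRUE_V[s]) ** 2 for s in STATES) / len(STATES))

rng = random.Random(0)
V_td = {s: 0.5 for s in STATES}
V_mc = {s: 0.5 for s in STATES}
for _ in range(5000):
    episode = generate_episode(rng)   # both methods learn from the same experience
    td0(V_td, episode, alpha=0.005)
    constant_alpha_mc(V_mc, episode, alpha=0.005)
print("TD(0) RMS:", round(rms_error(V_td), 3), " MC RMS:", round(rms_error(V_mc), 3))
```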

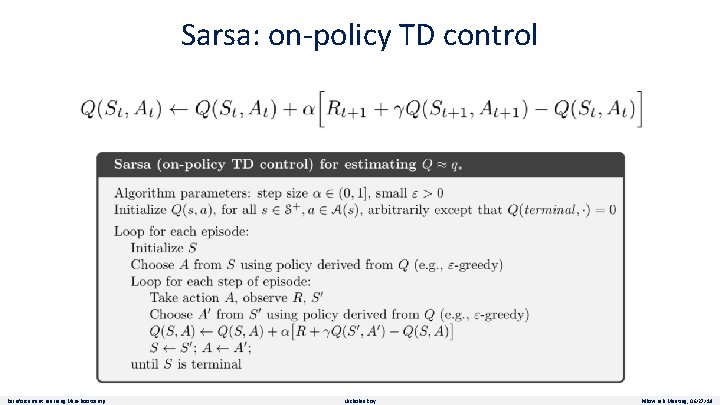

Sarsa: on-policy TD control
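A minimal sketch of Sarsa control on a hypothetical four-state corridor (reward -1 per step, goal to the right). It is on-policy because the next action A' used in the target $R + \gamma Q(S', A')$ is drawn from the same ε-greedy policy and is then actually executed. The environment, hyperparameters, and names are assumptions chosen for illustration.

```python
import random

GOAL = 4                                # hypothetical corridor: states 0..3, terminal goal at 4
ACTIONS = ("left", "right")

def step(s, a):
    """Deterministic corridor: reward -1 per step; 'left' at state 0 stays put."""
    s_next = s + 1 if a == "right" else max(s - 1, 0)
    return s_next, -1.0, s_next == GOAL

def epsilon_greedy(Q, s, epsilon, rng):
    """Random action with probability epsilon, else greedy (random tie-breaking)."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    best = max(Q[s].values())
    return rng.choice([a for a in ACTIONS if Q[s][a] == best])

def sarsa(episodes=500, alpha=0.1, gamma=1.0, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    Q = {s: {a: 0.0 for a in ACTIONS} for s in range(GOAL)}
    for _ in range(episodes):
        s = 0
        a = epsilon_greedy(Q, s, epsilon, rng)
        done = False
        while not done:
            s_next, r, done = step(s, a)
            if done:
                target = r                                        # V(terminal) = 0
            else:
                a_next = epsilon_greedy(Q, s_next, epsilon, rng)  # A' from the same policy (on-policy)
                target = r + gamma * Q[s_next][a_next]
            Q[s][a] += alpha * (target - Q[s][a])
            if not done:
                s, a = s_next, a_next                             # A' is the action actually taken next
    return Q

Q = sarsa()
print({s: max(Q[s], key=Q[s].get) for s in Q})   # greedy action per state
```

Because the target follows the exploring policy, Sarsa's values account for the occasional exploratory step, which is the property the cliff-walking case study highlights.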

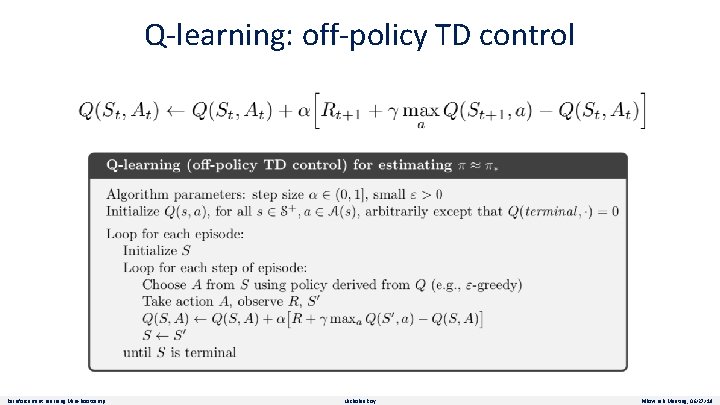

Q-learning: off-policy TD control
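A minimal sketch of Q-learning on a hypothetical four-state corridor (reward -1 per step, goal to the right). The agent behaves ε-greedily, but the target uses $\max_a Q(S', a)$, i.e. the greedy target policy, so the learned values approach $q_*$ regardless of how it explores. The environment, hyperparameters, and names are illustrative assumptions.

```python
import random

GOAL = 4                                # hypothetical corridor: states 0..3, terminal goal at 4
ACTIONS = ("left", "right")

def step(s, a):
    """Deterministic corridor: reward -1 per step; 'left' at state 0 stays put."""
    s_next = s + 1 if a == "right" else max(s - 1, 0)
    return s_next, -1.0, s_next == GOAL

def epsilon_greedy(Q, s, epsilon, rng):
    """Behavior policy: random action with probability epsilon, else greedy (random tie-breaking)."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    best = max(Q[s].values())
    return rng.choice([a for a in ACTIONS if Q[s][a] == best])

def q_learning(episodes=500, alpha=0.1, gamma=1.0, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    Q = {s: {a: 0.0 for a in ACTIONS} for s in range(GOAL)}
    for _ in range(episodes):
        s, done = 0, False
        while not done:
            a = epsilon_greedy(Q, s, epsilon, rng)   # act with the exploratory behavior policy
            s_next, r, done = step(s, a)
            # The target takes the max over next actions (the greedy target policy),
            # regardless of which action the behavior policy will actually take next
            target = r if done else r + gamma * max(Q[s_next].values())
            Q[s][a] += alpha * (target - Q[s][a])
            s = s_next
    return Q

Q = q_learning()
print({s: round(Q[s]["right"], 3) for s in Q})   # approaches the optimal values -(GOAL - s)
```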

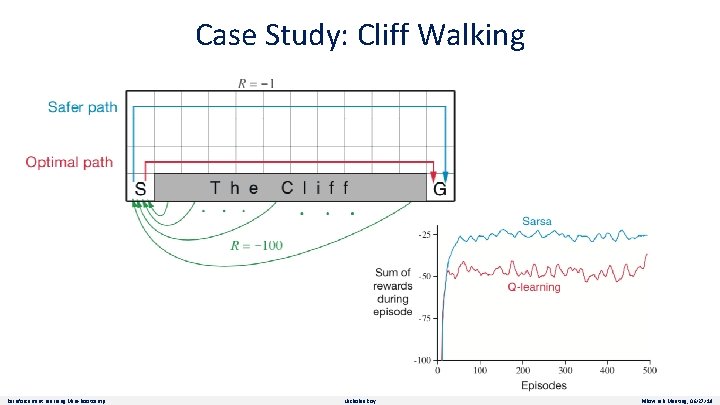

Case Study: Cliff Walking

Part I: Tabular Solution Methods | Ch 7: n-step Bootstrapping

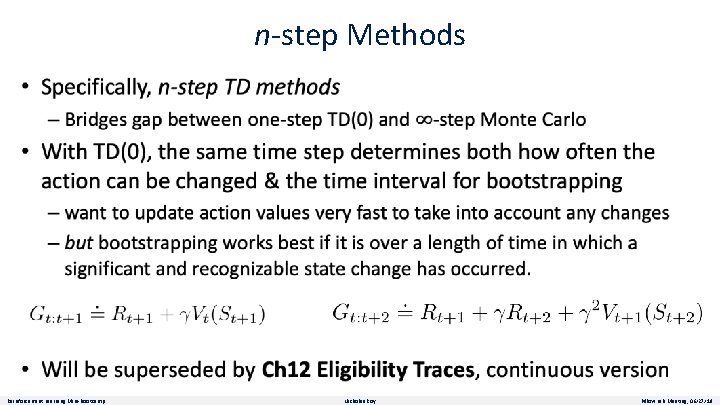

n-step Methods
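n-step methods sit between TD(0) and MC: the target uses n real rewards and then bootstraps, $G_{t:t+n} = R_{t+1} + \gamma R_{t+2} + \cdots + \gamma^{n-1} R_{t+n} + \gamma^n V(S_{t+n})$, falling back to the full return $G_t$ when $t + n \ge T$. A minimal sketch, assuming the indexing convention noted in the docstring; the function name and example episode are hypothetical.

```python
def n_step_return(rewards, states, V, t, n, gamma):
    """G_{t:t+n}: n discounted rewards plus gamma^n * V(S_{t+n}); if t + n >= T the
    bootstrap term is dropped and this is the full return G_t.
    Assumed convention: rewards[k] holds R_{k+1}, states[k] holds S_k, T = len(rewards)."""
    T = len(rewards)
    g = 0.0
    for k in range(t, min(t + n, T)):
        g += gamma ** (k - t) * rewards[k]
    if t + n < T:
        g += gamma ** n * V[states[t + n]]
    return g

# Hypothetical 3-step episode S_0, S_1, S_2 -> terminal, with R_1 = 0, R_2 = 0, R_3 = 1
states, rewards = ["s0", "s1", "s2"], [0.0, 0.0, 1.0]
V = {"s0": 0.5, "s1": 0.5, "s2": 0.5}
for n in (1, 2, 3):
    print(n, n_step_return(rewards, states, V, t=0, n=n, gamma=0.5))
# 1 0.25   (one-step TD target: R_1 + gamma * V(S_1))
# 2 0.125  (R_1 + gamma * R_2 + gamma^2 * V(S_2))
# 3 0.25   (reaches the end of the episode: the Monte Carlo return G_0)
```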

Part I: Tabular Solution Methods | Ch 8: Planning and Learning with Tabular Methods

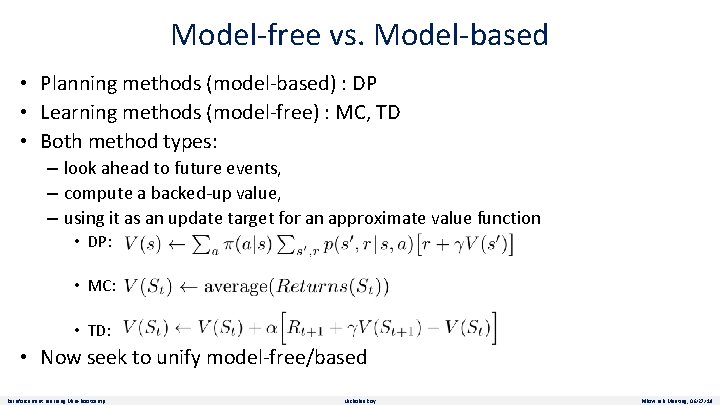

Model-free vs. Model-based
• Planning methods (model-based): DP
• Learning methods (model-free): MC, TD
• Both method types:
– look ahead to future events,
– compute a backed-up value,
– use it as an update target for an approximate value function
• Update targets for $V(S_t)$:
– DP: $\mathbb{E}_\pi[R_{t+1} + \gamma V(S_{t+1}) \mid S_t = s]$ (an expectation computed with the model)
– MC: $G_t$ (the sampled return of the episode)
– TD: $R_{t+1} + \gamma V(S_{t+1})$ (a sampled one-step target that bootstraps)
• Now seek to unify model-free and model-based methods

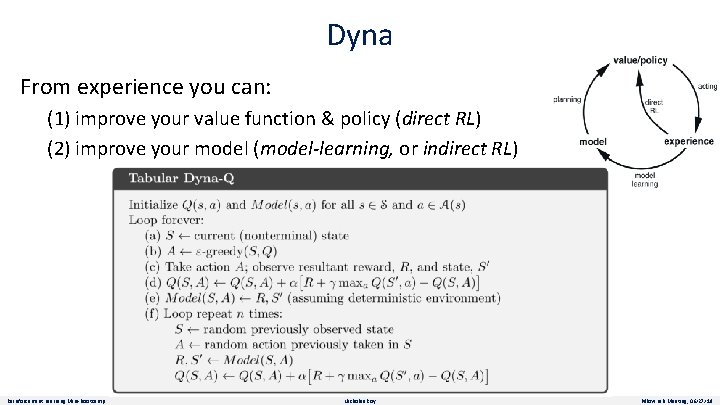

Dyna
From experience you can:
(1) improve your value function & policy directly (direct RL)
(2) improve your model (model learning), which in turn improves the value function & policy through planning (indirect RL)
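A minimal sketch of tabular Dyna-Q on a hypothetical six-state corridor: each real step does a direct Q-learning update, records the transition in a deterministic table model, and then performs a few planning updates from remembered transitions. For brevity, planning samples uniformly among previously observed (s, a) pairs, a slight simplification of the book's "random previously observed state, then a random action previously taken in it". Names and hyperparameters are illustrative.

```python
import random

GOAL = 6                                # hypothetical corridor: states 0..5, terminal goal at 6
ACTIONS = ("left", "right")

def step(s, a):
    """Deterministic environment: reward -1 per step; 'left' at state 0 stays put."""
    s_next = s + 1 if a == "right" else max(s - 1, 0)
    return s_next, -1.0, s_next == GOAL

def epsilon_greedy(Q, s, epsilon, rng):
    """Random action with probability epsilon, else greedy (random tie-breaking)."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    best = max(Q[s].values())
    return rng.choice([a for a in ACTIONS if Q[s][a] == best])

def q_update(Q, s, a, r, s_next, done, alpha, gamma):
    """One-step Q-learning update, used for both real and simulated experience."""
    target = r if done else r + gamma * max(Q[s_next].values())
    Q[s][a] += alpha * (target - Q[s][a])

def dyna_q(episodes=50, planning_steps=10, alpha=0.1, gamma=1.0, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    Q = {s: {a: 0.0 for a in ACTIONS} for s in range(GOAL)}
    model = {}                          # (s, a) -> (r, s_next, done); assumes a deterministic world
    for _ in range(episodes):
        s, done = 0, False
        while not done:
            a = epsilon_greedy(Q, s, epsilon, rng)
            s_next, r, done = step(s, a)                      # real experience
            q_update(Q, s, a, r, s_next, done, alpha, gamma)  # (1) direct RL
            model[(s, a)] = (r, s_next, done)                 # (2) model learning
            observed = list(model.items())
            for _ in range(planning_steps):                   # planning with simulated experience
                (ps, pa), (pr, ps_next, pdone) = rng.choice(observed)
                q_update(Q, ps, pa, pr, ps_next, pdone, alpha, gamma)
            s = s_next
    return Q

Q = dyna_q()
print({s: max(Q[s], key=Q[s].get) for s in Q})   # greedy action per state
```

Setting `planning_steps=0` reduces this to plain one-step Q-learning, which makes the contribution of the model easy to compare.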

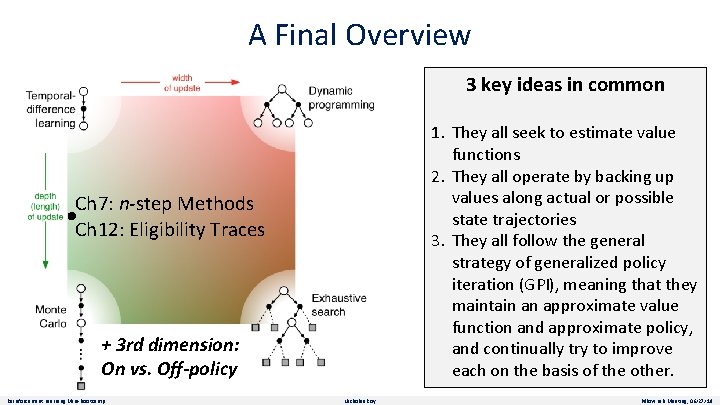

A Final Overview
3 key ideas in common:
1. They all seek to estimate value functions
2. They all operate by backing up values along actual or possible state trajectories
3. They all follow the general strategy of generalized policy iteration (GPI), meaning that they maintain an approximate value function and an approximate policy, and continually try to improve each on the basis of the other.
(Figure labels: Ch 7: n-step Methods; Ch 12: Eligibility Traces; + 3rd dimension: on- vs. off-policy)

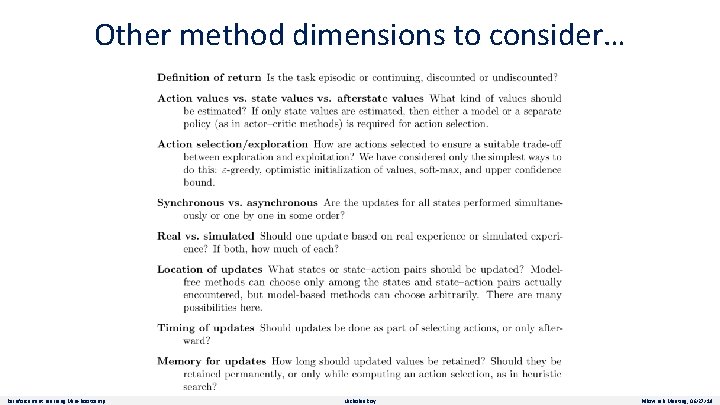

Other method dimensions to consider…

The Rest of the Book
• Part I: Tabular Solution Methods
• Part II: Approximate Solution Methods
– Ch 9: On-policy Prediction with Approximation
– Ch 10: On-policy Control with Approximation
– Ch 11: Off-policy Methods with Approximation
– Ch 12: Eligibility Traces
– Ch 13: Policy Gradient Methods
• Part III: Looking Deeper
– Neuroscience, Psychology, Applications and Case Studies, Frontiers
