Reinforcement Learning Notes by Pei-Chi (Peggy) Huang

Slide Sources
1. R. S. Sutton and A. G. Barto, “Reinforcement Learning: An Introduction”. MIT Press, 1998. Available on the web at RichSutton.com.
2. Kenji Doya, “Reinforcement learning in continuous time and space,” Neural Computation, vol. 12, pp. 219-245 (2000).
3. R. J. Williams, “Simple statistical gradient-following algorithms for connectionist reinforcement learning,” Machine Learning, vol. 8, pp. 229-256 (1992).
4. http://brain.cc.kogakuin.ac.jp/~kanamaru/NN/CPRL/
5. Gerry Tesauro, IBM T. J. Watson Research Center: http://www.research.ibm.com/infoecon and http://www.research.ibm.com/massdist
6. https://medium.com/@tuzzer/cart-pole-balancing-with-q-learning-b54c6068d947#.38r90wi7u
7. http://www.cmap.polytechnique.fr/~dimo.brockhoff/advancedcontrol/slides/20140110_introPlusFuzzy.pdf
8. S. Russell and P. Norvig (1995). Artificial Intelligence: A Modern Approach. Upper Saddle River, NJ: Prentice Hall.
9. Some images and slides are used from CS 188 (UC Berkeley) and Russell and Norvig, AIMA.
10. http://web.engr.oregonstate.edu/~xfern/classes/cs434/slides/RL-1.pdf
11. Andrew Ng, CS 229 Lecture Notes.
12. Harmon, Reinforcement Learning: A Tutorial.
13. Williams, Simple Statistical Gradient-Following Algorithms for Connectionist Reinforcement Learning.
14. Mnih et al., Human-level control through deep reinforcement learning.
15. http://www.iclr.cc/lib/exe/fetch.php?media=iclr2015:silver-iclr2015.pdf
16. http://uhaweb.hartford.edu/compsci/ccli/projects/QLearning.pdf
17. http://www2.econ.iastate.edu/tesfatsi/RLUsersGuide.ICAC2005.pdf
18. Additional information and a glossary of keywords: http://www.cpsc.ucalgary.ca/~paulme/533

Reinforcement Learning in a Nutshell

“Imagine playing a new game whose rules you don’t know; after a hundred or so moves, your opponent announces, ‘You lose.’” (Russell and Norvig, Introduction to Artificial Intelligence)

What do you do?
• Backtrack and try another move.
• Better still, formulate a strategy that incorporates lessons from past failures.

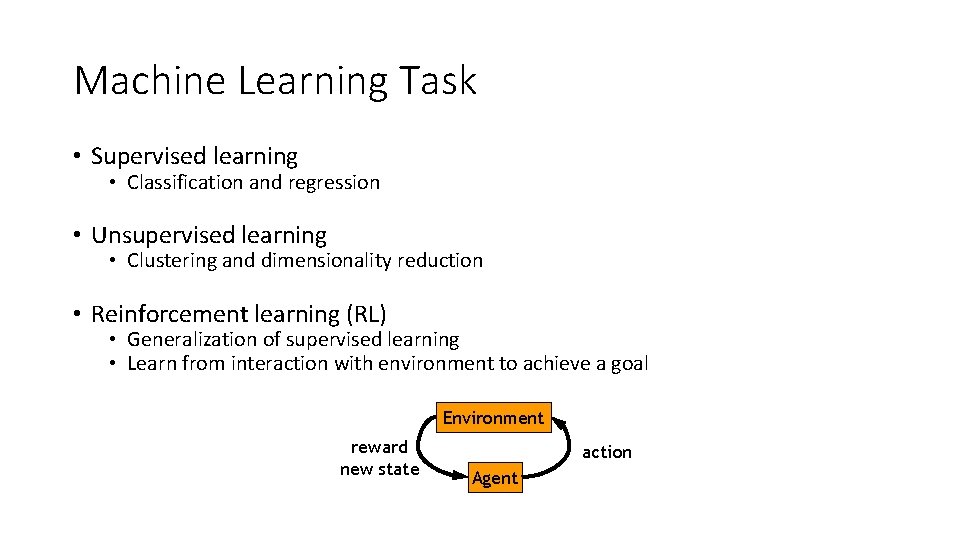

Machine Learning Tasks
• Supervised learning
  • Classification and regression
• Unsupervised learning
  • Clustering and dimensionality reduction
• Reinforcement learning (RL)
  • Generalization of supervised learning
  • Learn from interaction with an environment to achieve a goal

[Figure: agent-environment loop. The agent sends an action to the environment; the environment returns a reward and a new state.]
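A minimal sketch of the agent-environment loop in the figure: at each step the agent picks an action from the current state, and the environment answers with a reward and a new state. The `CorridorEnv` class, its five positions and its +1 reward at the right end are hypothetical, chosen only to make the loop runnable; `run_episode` is likewise an illustrative name, not a library API.

```python
import random
from typing import Callable, Tuple


class CorridorEnv:
    """Hypothetical toy environment: positions 0..n-1, goal at the right end."""

    def __init__(self, n: int = 5):
        self.n = n
        self.pos = 0

    def reset(self) -> int:
        self.pos = 0
        return self.pos

    def step(self, action: int) -> Tuple[int, float, bool]:
        # action is -1 (left) or +1 (right); the walls clip the position
        self.pos = min(max(self.pos + action, 0), self.n - 1)
        done = self.pos == self.n - 1
        reward = 1.0 if done else 0.0
        return self.pos, reward, done


def run_episode(env: CorridorEnv, policy: Callable[[int], int],
                max_steps: int = 100) -> Tuple[float, int]:
    """Run one episode; return (total reward, number of steps taken)."""
    state = env.reset()
    total_reward = 0.0
    for t in range(1, max_steps + 1):
        action = policy(state)                  # agent: state -> action
        state, reward, done = env.step(action)  # environment: reward, new state
        total_reward += reward
        if done:
            return total_reward, t
    return total_reward, max_steps


if __name__ == "__main__":
    rng = random.Random(0)
    print(run_episode(CorridorEnv(), lambda s: rng.choice([-1, 1])))  # random agent
    print(run_episode(CorridorEnv(), lambda s: 1))                    # always move right
```

A policy that always moves right reaches the goal in four steps, while one that always moves left never collects a reward before the step limit.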

Characteristics of Reinforcement Learning
What makes reinforcement learning different from other machine learning paradigms?
• There is no supervisor, only a reward signal.
• Feedback is delayed, not instantaneous.
• Time really matters (sequential, non-i.i.d. data).
• The agent’s actions affect the subsequent data it receives.
Source: http://www0.cs.ucl.ac.uk/staff/d.silver/web/Teaching_files/intro_RL.pdf

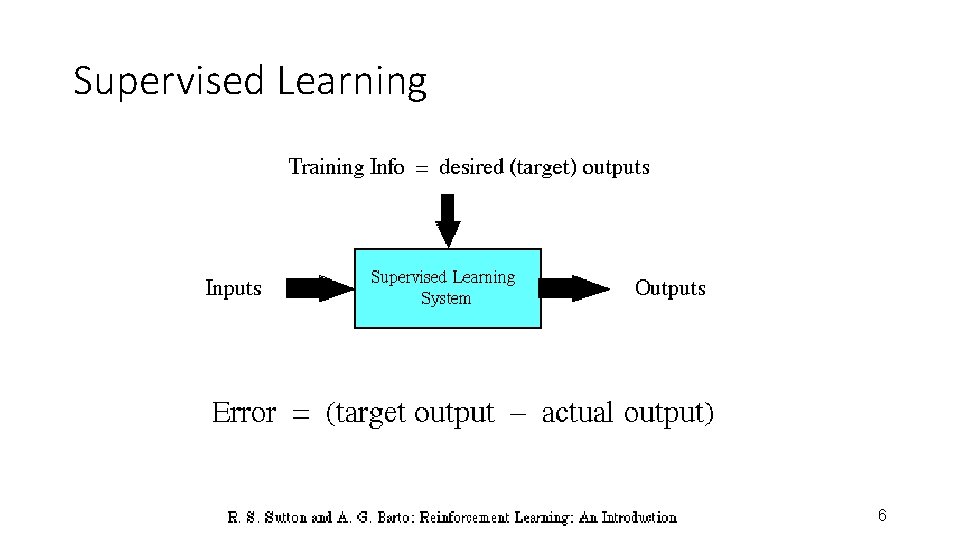

Supervised Learning

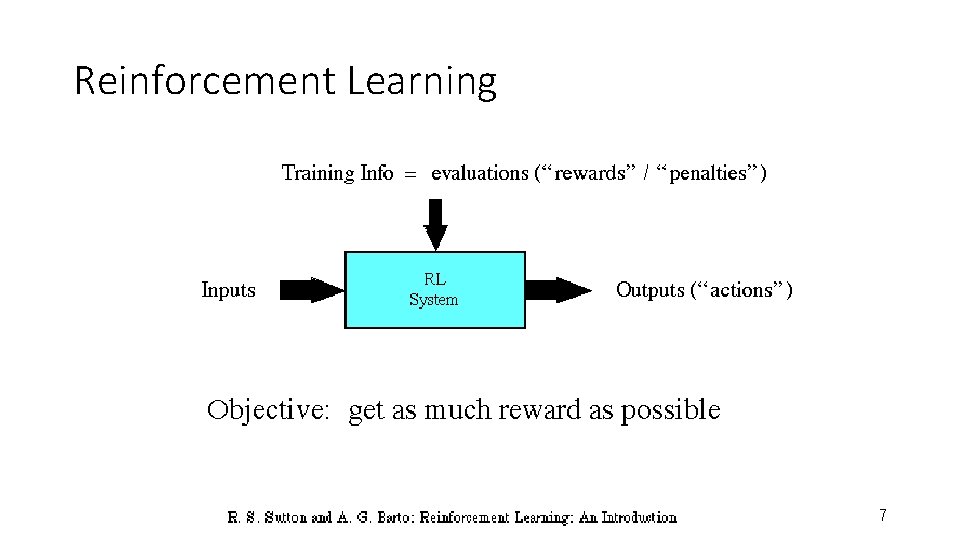

Reinforcement Learning

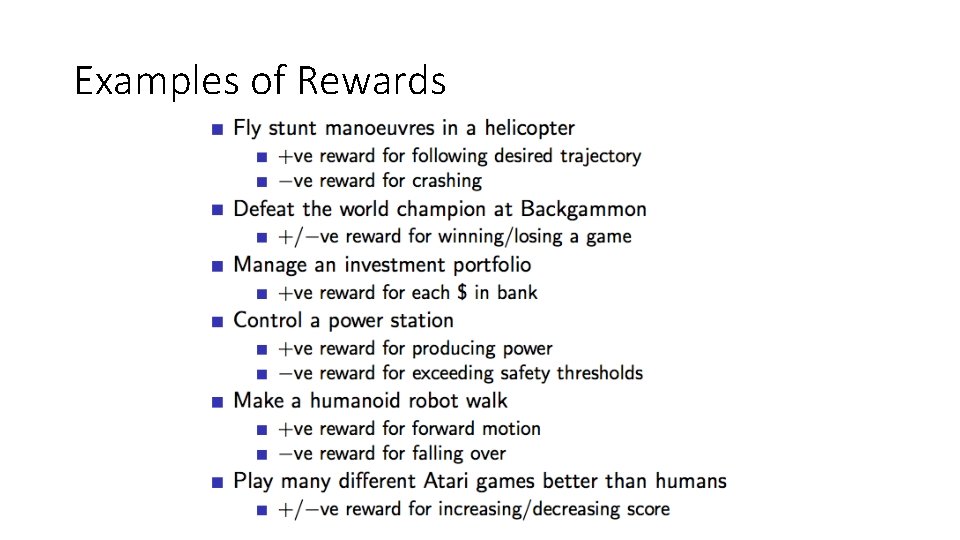

Examples of Reinforcement Learning
• Classic: inverted pendulum
• Fly stunt maneuvers in a helicopter
• Defeat the world champion at backgammon
• Manage an investment portfolio
• Control a power station
• Make a humanoid robot walk
• Play many different Atari games better than humans
• SpaceX landing (a modern inverted pendulum)

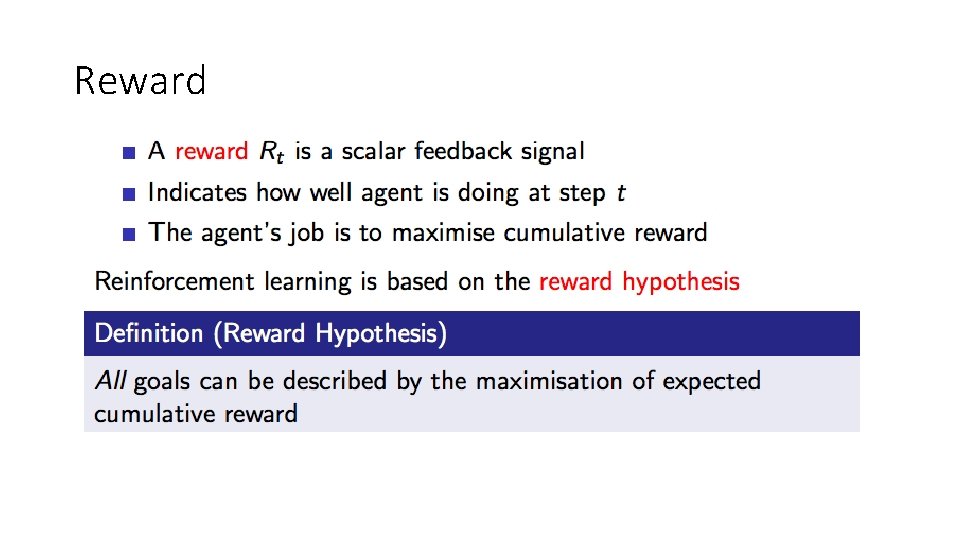

Reward

Examples of Rewards

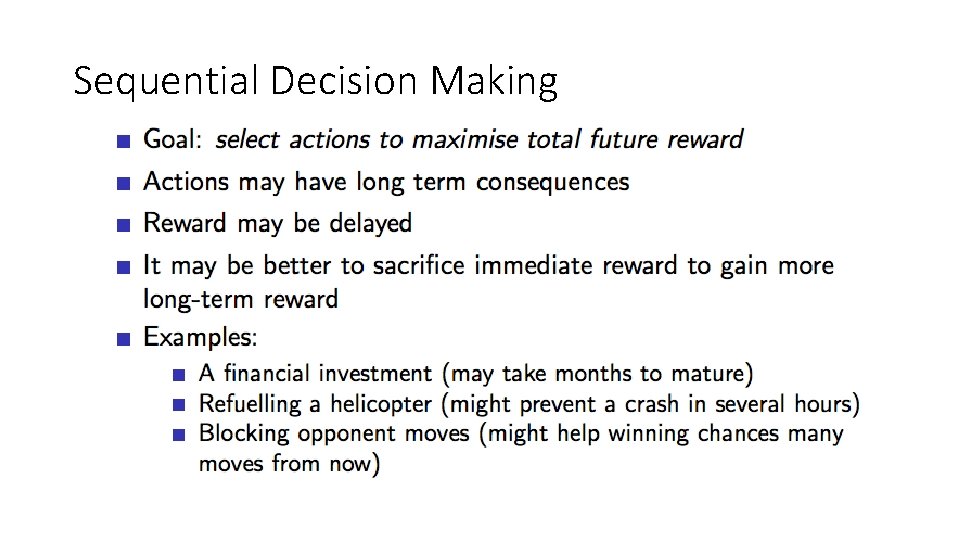

Sequential Decision Making

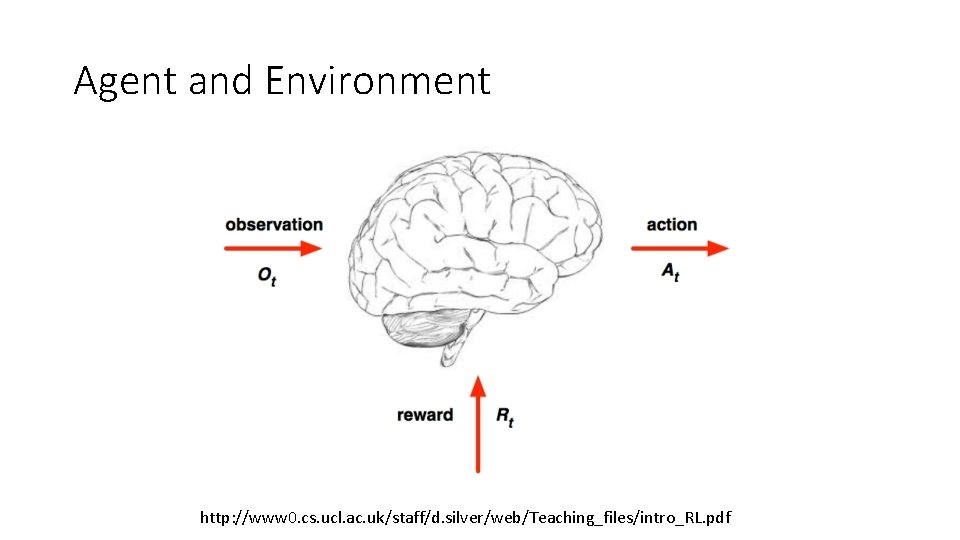

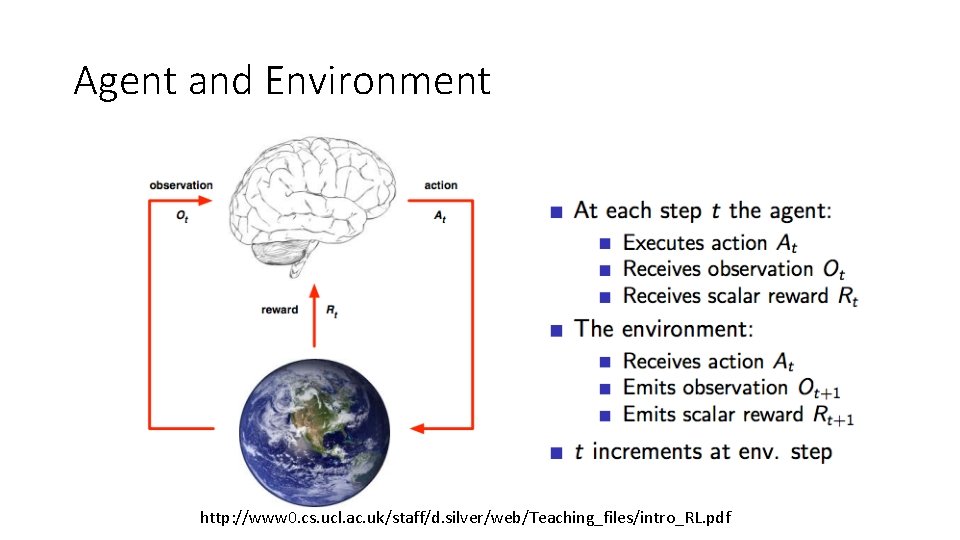

Agent and Environment
Source: http://www0.cs.ucl.ac.uk/staff/d.silver/web/Teaching_files/intro_RL.pdf


History and State

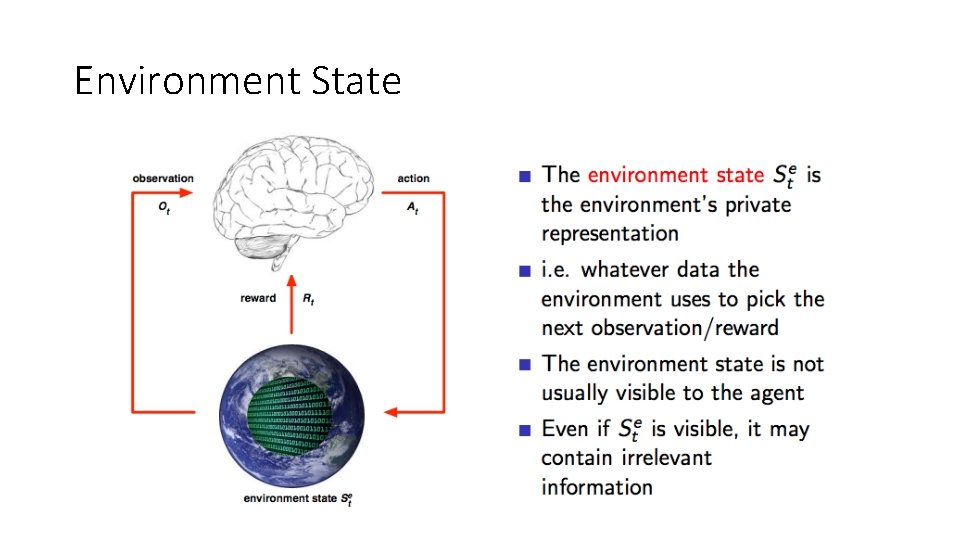

Environment State

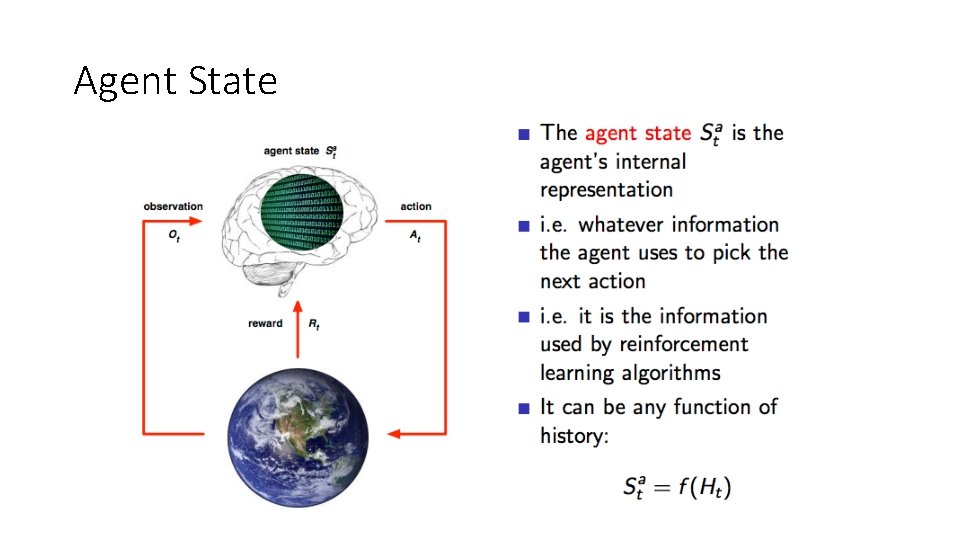

Agent State

Information State

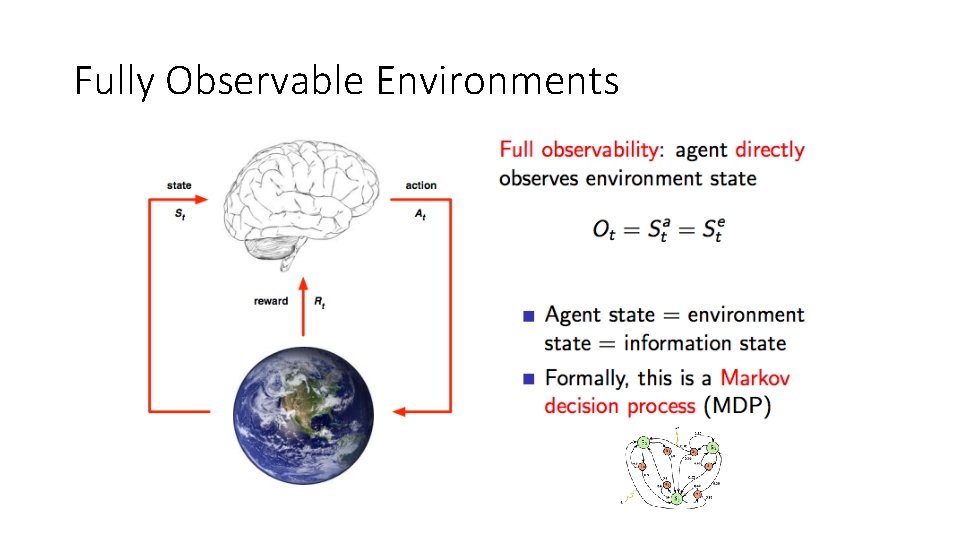

Fully Observable Environments

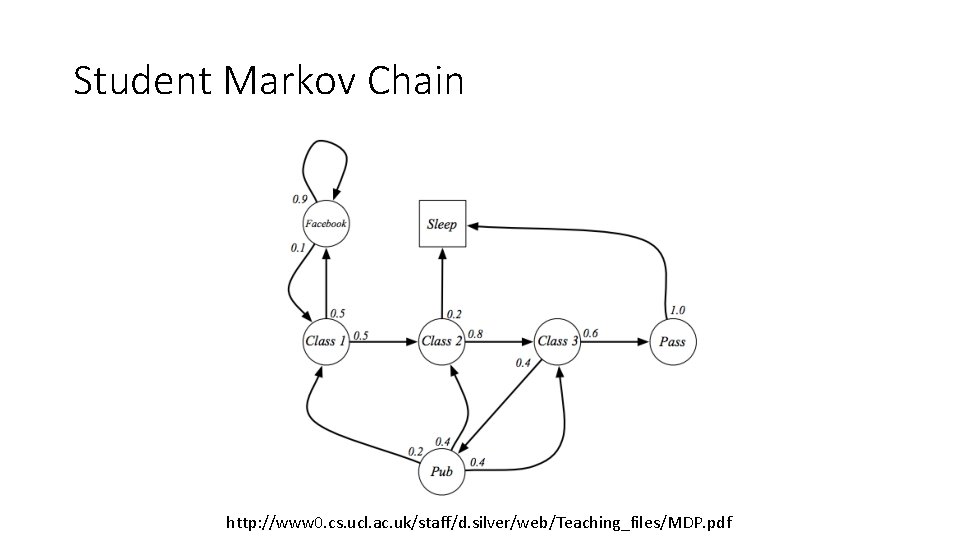

Student Markov Chain
Source: http://www0.cs.ucl.ac.uk/staff/d.silver/web/Teaching_files/MDP.pdf

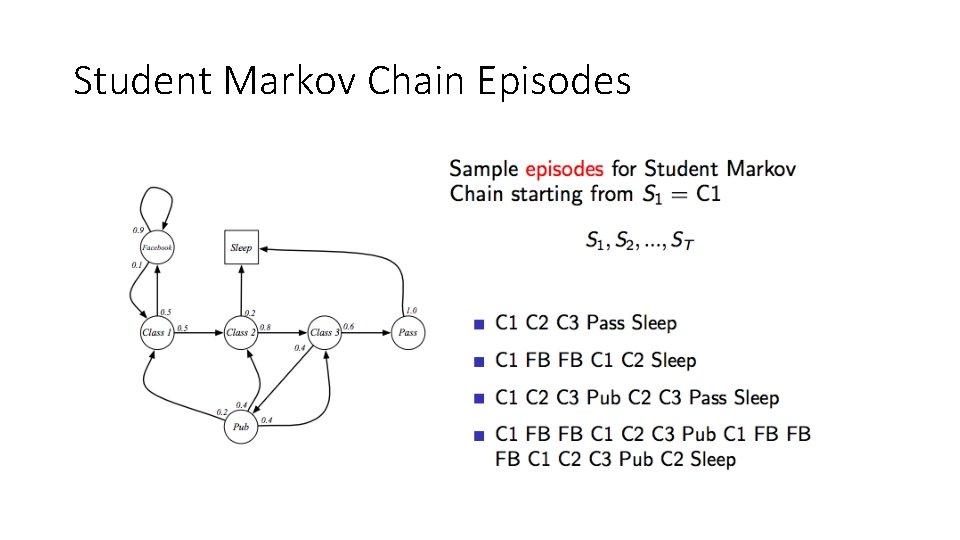

Student Markov Chain Episodes

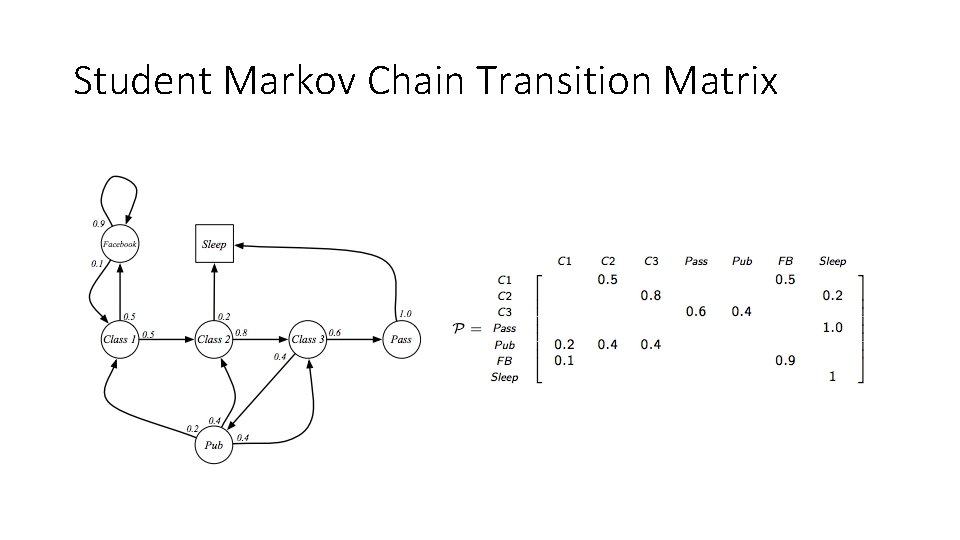

Student Markov Chain Transition Matrix

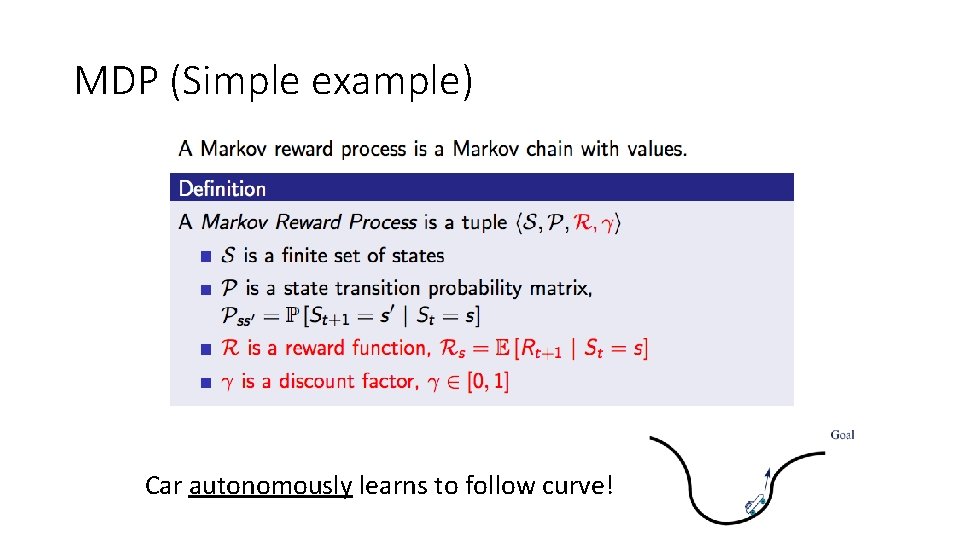
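The transition matrix is all that is needed to sample episodes from the chain. A sketch in Python, assuming the transition probabilities of the student Markov chain in David Silver's UCL slides cited above (Class 1/2/3, Pass, Pub, Facebook, Sleep, with Sleep as the terminal state); if the figure shows different numbers, substitute them. `sample_episode` is a hypothetical helper.

```python
import random
from typing import Dict, List, Optional

# Student Markov chain (probabilities assumed from David Silver's UCL slides).
# P[s][s2] = probability of moving from state s to state s2; each row sums to 1.
P: Dict[str, Dict[str, float]] = {
    "C1":    {"C2": 0.5, "FB": 0.5},
    "C2":    {"C3": 0.8, "Sleep": 0.2},
    "C3":    {"Pass": 0.6, "Pub": 0.4},
    "Pass":  {"Sleep": 1.0},
    "Pub":   {"C1": 0.2, "C2": 0.4, "C3": 0.4},
    "FB":    {"FB": 0.9, "C1": 0.1},
    "Sleep": {"Sleep": 1.0},  # terminal (absorbing) state
}


def sample_episode(start: str = "C1", terminal: str = "Sleep",
                   rng: Optional[random.Random] = None,
                   max_steps: int = 1000) -> List[str]:
    """Follow the transition probabilities from `start` until `terminal`."""
    if rng is None:
        rng = random.Random()
    state, episode = start, [start]
    while state != terminal and len(episode) < max_steps:
        successors = list(P[state])
        weights = [P[state][s] for s in successors]
        state = rng.choices(successors, weights=weights, k=1)[0]
        episode.append(state)
    return episode


if __name__ == "__main__":
    rng = random.Random(0)
    for _ in range(3):
        print(" ".join(sample_episode(rng=rng)))
```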

MDP (Simple Example)
A car autonomously learns to follow a curve.

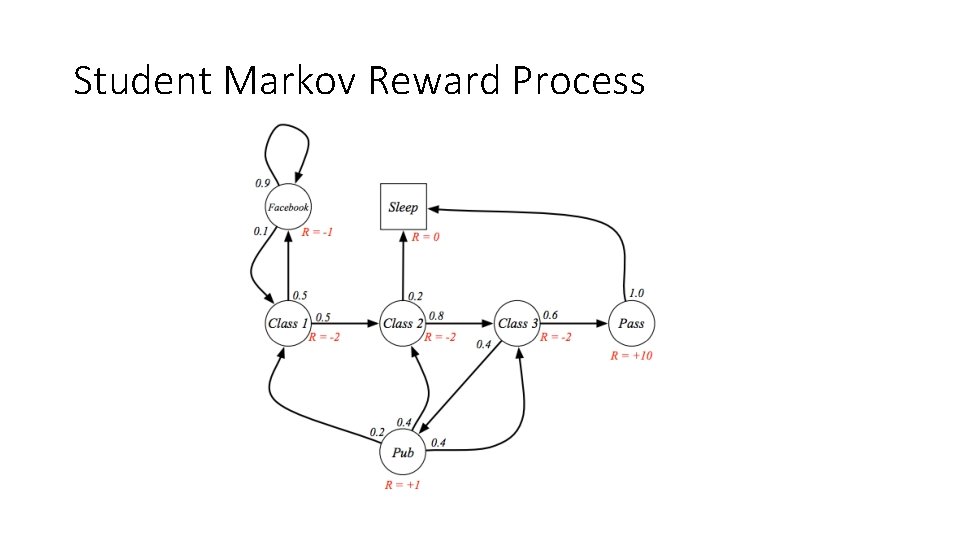

Student Markov Reward Process
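For a Markov reward process the value function satisfies the Bellman equation v = R + γPv, so for γ < 1 it can be found by solving the linear system (I - γP)v = R. A sketch with NumPy, assuming the transition probabilities and rewards of David Silver's student MRP (-2 per class, +10 for Pass, +1 for Pub, -1 for Facebook, 0 for Sleep) and γ = 0.9; these numbers come from his slides rather than from this document.

```python
import numpy as np

states = ["C1", "C2", "C3", "Pass", "Pub", "FB", "Sleep"]
# Transition matrix, rows/columns in the order of `states` (assumed values)
P = np.array([
    # C1   C2   C3   Pass Pub  FB   Sleep
    [0.0, 0.5, 0.0, 0.0, 0.0, 0.5, 0.0],  # C1
    [0.0, 0.0, 0.8, 0.0, 0.0, 0.0, 0.2],  # C2
    [0.0, 0.0, 0.0, 0.6, 0.4, 0.0, 0.0],  # C3
    [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 1.0],  # Pass
    [0.2, 0.4, 0.4, 0.0, 0.0, 0.0, 0.0],  # Pub
    [0.1, 0.0, 0.0, 0.0, 0.0, 0.9, 0.0],  # FB
    [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 1.0],  # Sleep (absorbing)
])
# Expected reward received on leaving each state (assumed values)
R = np.array([-2.0, -2.0, -2.0, 10.0, 1.0, -1.0, 0.0])


def mrp_values(P: np.ndarray, R: np.ndarray, gamma: float) -> np.ndarray:
    """Solve the Bellman equation v = R + gamma * P v, i.e. (I - gamma P) v = R."""
    n = len(R)
    return np.linalg.solve(np.eye(n) - gamma * P, R)


v = mrp_values(P, R, gamma=0.9)
for s, val in zip(states, v):
    print(f"{s:>5}: {val:6.2f}")
```

With these assumptions the solve gives values close to those in Silver's slides for γ = 0.9 (about -5.0 for Class 1, 0.9 for Class 2, 4.1 for Class 3, 1.9 for Pub, -7.6 for Facebook and 10 for Pass). A direct solve is fine for a handful of states; larger problems usually switch to iterative methods.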

Major Components of an RL Agent

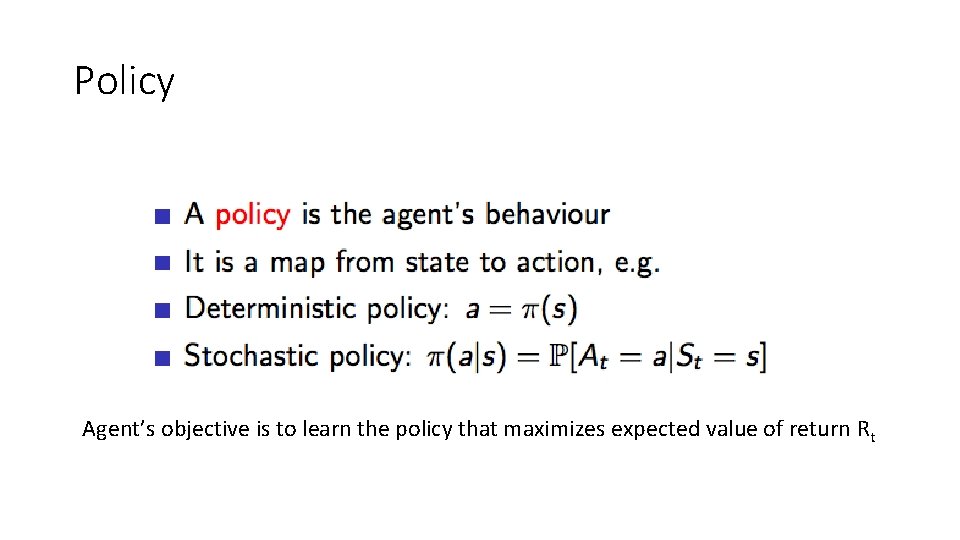

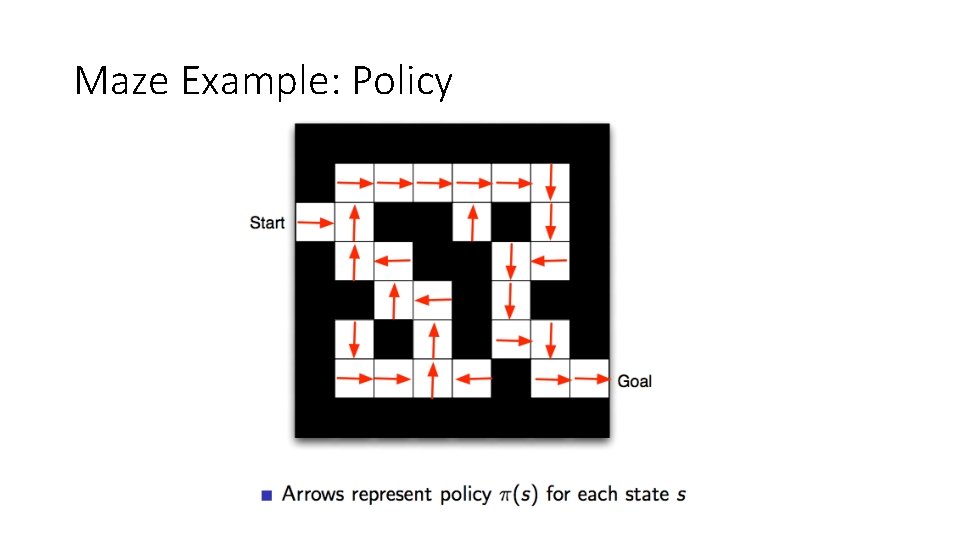

Policy
The agent’s objective is to learn the policy that maximizes the expected value of the return R_t.

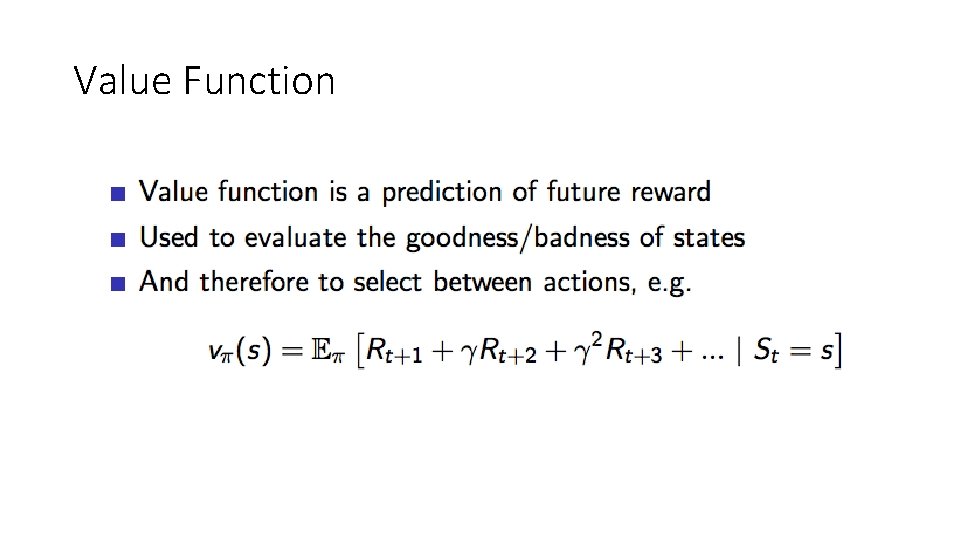
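The return R_t is the discounted sum of the rewards that follow time t, R_t = r_{t+1} + γ r_{t+2} + γ² r_{t+3} + ..., with discount factor 0 ≤ γ ≤ 1. A short Python sketch for a finite episode (the function name is illustrative):

```python
from typing import Sequence


def discounted_return(rewards: Sequence[float], gamma: float) -> float:
    """R_t = r_{t+1} + gamma*r_{t+2} + gamma^2*r_{t+3} + ... for a finite episode."""
    g = 0.0
    for r in reversed(rewards):  # accumulate backwards: G <- r + gamma * G
        g = r + gamma * g
    return g


print(discounted_return([-2, -2, -2, 10], gamma=0.5))  # -2.25
```

For example, the rewards -2, -2, -2, +10 (Class 1, Class 2, Class 3, Pass in Silver's student MRP, assumed here) with γ = 0.5 give R_t = -2 - 1 - 0.5 + 1.25 = -2.25. Accumulating backwards avoids computing powers of γ explicitly.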

Value Function

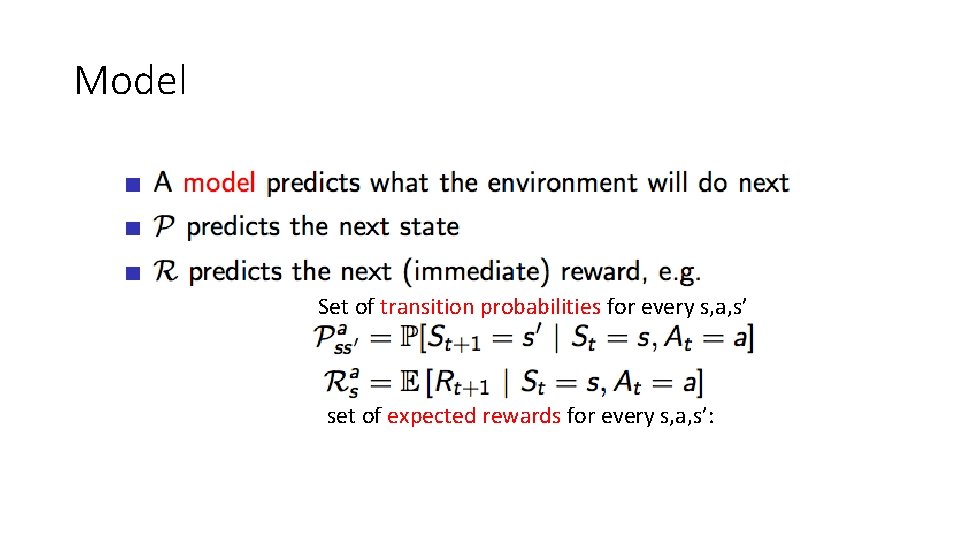

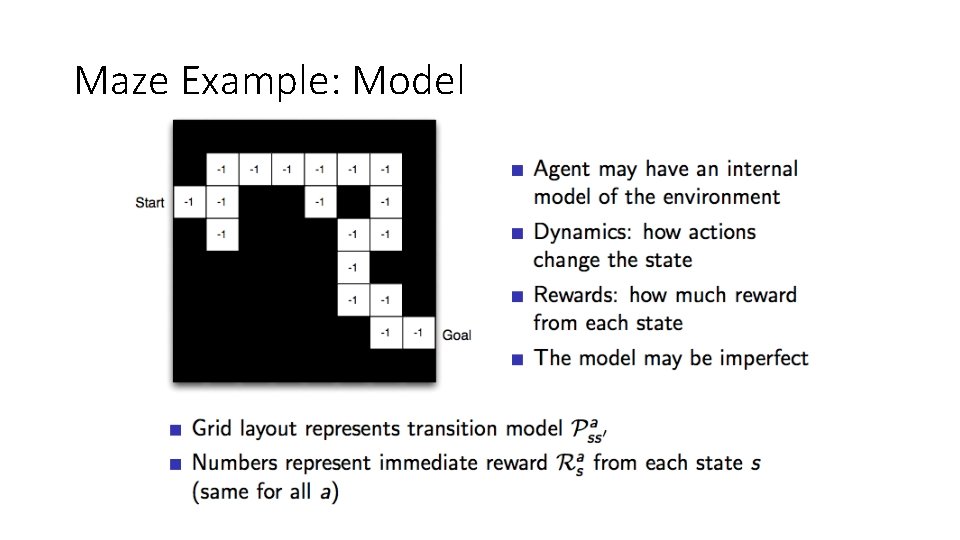

Model
• A set of transition probabilities, one for every (s, a, s’)
• A set of expected rewards, one for every (s, a, s’):

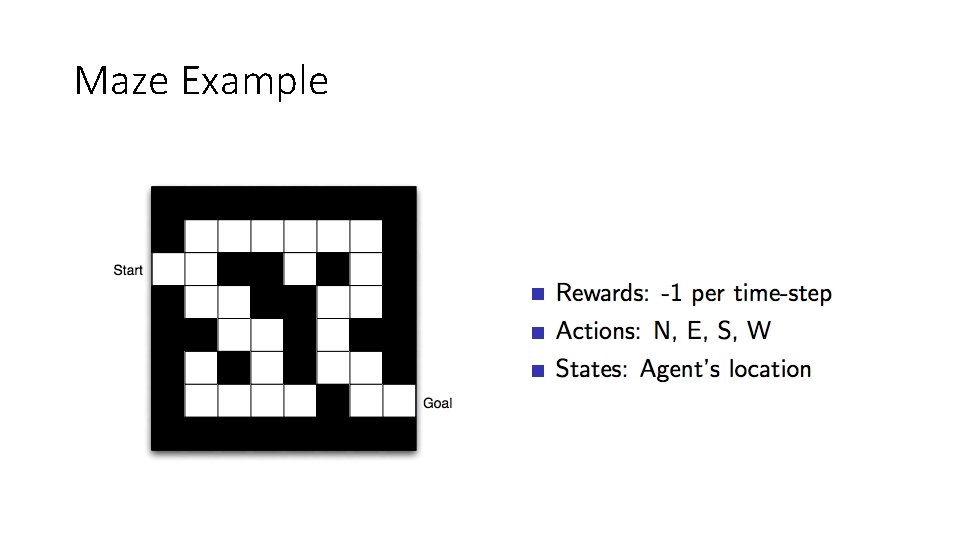
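A tabular model can be stored as two arrays indexed by (s, a, s'): P[s, a, s'] = Pr{s_{t+1} = s' | s_t = s, a_t = a} and R[s, a, s'] = E{r_{t+1} | s_t = s, a_t = a, s_{t+1} = s'}. The sketch below uses a hypothetical two-state, two-action model (the numbers are illustrative only) and shows two things a model allows without touching the environment: the expected immediate reward of each (s, a), and a one-step lookahead Q(s, a) = Σ_{s'} P[s, a, s'](R[s, a, s'] + γV(s')) for a given value estimate V.

```python
import numpy as np

# Hypothetical model with 2 states and 2 actions (illustrative numbers).
# P[s, a, s2] = Pr{next state = s2 | state s, action a}
P = np.array([
    [[0.8, 0.2], [0.1, 0.9]],  # from state 0 under actions 0, 1
    [[0.5, 0.5], [0.0, 1.0]],  # from state 1 under actions 0, 1
])
# R[s, a, s2] = expected reward for the transition s --a--> s2
R = np.array([
    [[0.0, 1.0], [0.0, 2.0]],
    [[-1.0, 0.0], [0.0, 0.5]],
])
assert np.allclose(P.sum(axis=2), 1.0)  # each (s, a) slice is a distribution


def expected_reward(P: np.ndarray, R: np.ndarray) -> np.ndarray:
    """r(s, a) = sum over s' of P[s, a, s'] * R[s, a, s']."""
    return (P * R).sum(axis=2)


def one_step_lookahead(P: np.ndarray, R: np.ndarray, V: np.ndarray,
                       gamma: float) -> np.ndarray:
    """Q(s, a) = sum over s' of P[s, a, s'] * (R[s, a, s'] + gamma * V[s'])."""
    return (P * (R + gamma * V[None, None, :])).sum(axis=2)


print(expected_reward(P, R))
print(one_step_lookahead(P, R, np.array([1.0, 2.0]), gamma=0.5))
```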

Maze Example

Maze Example: Policy

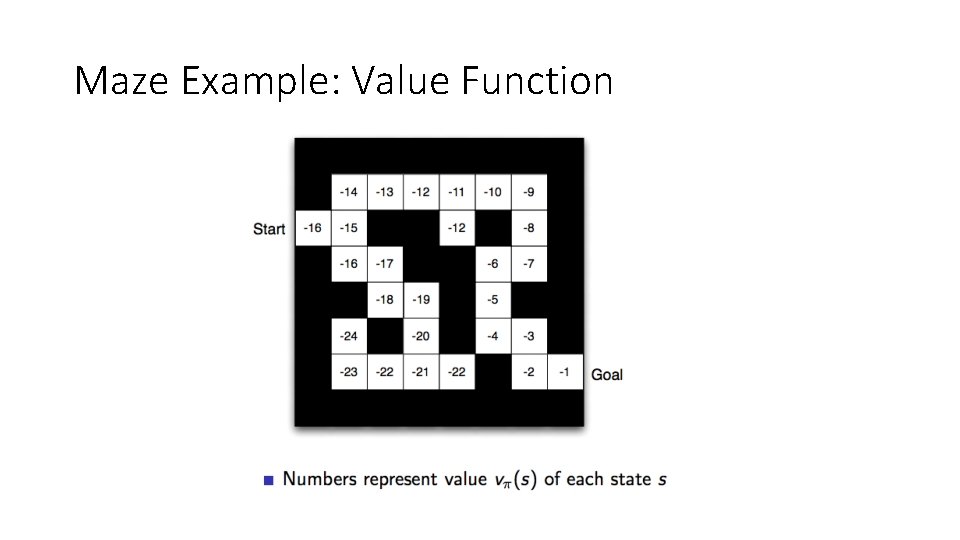

Maze Example: Value Function

Maze Example: Model

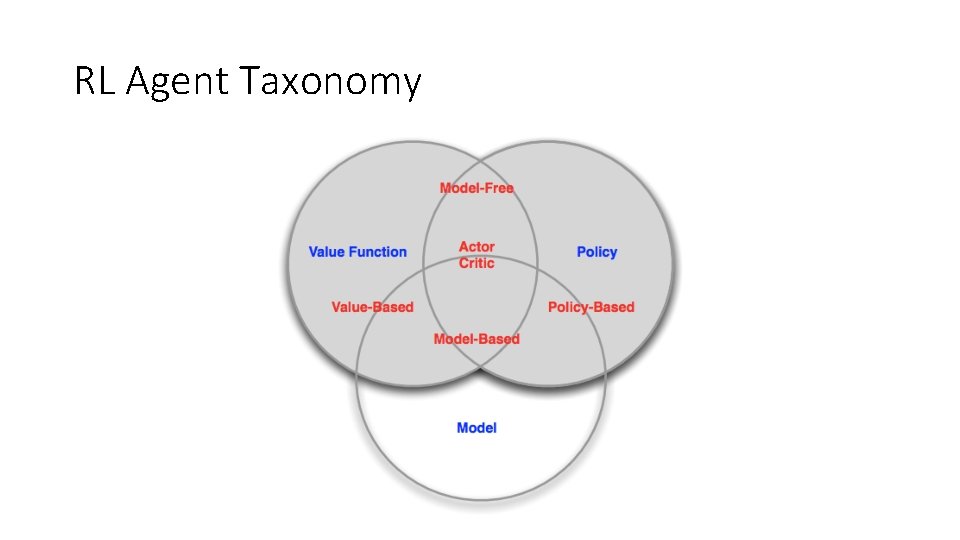

RL Agent Taxonomy

Advanced Control: Segway

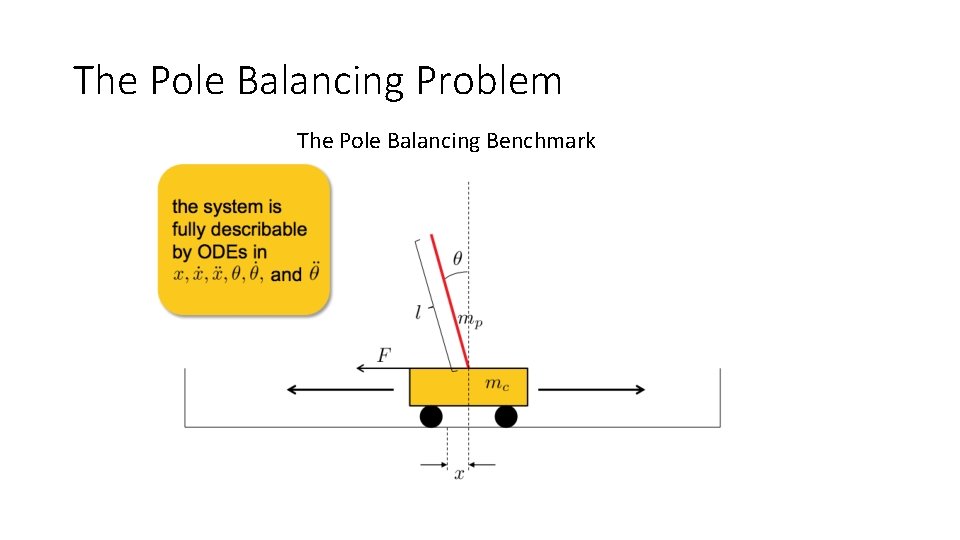

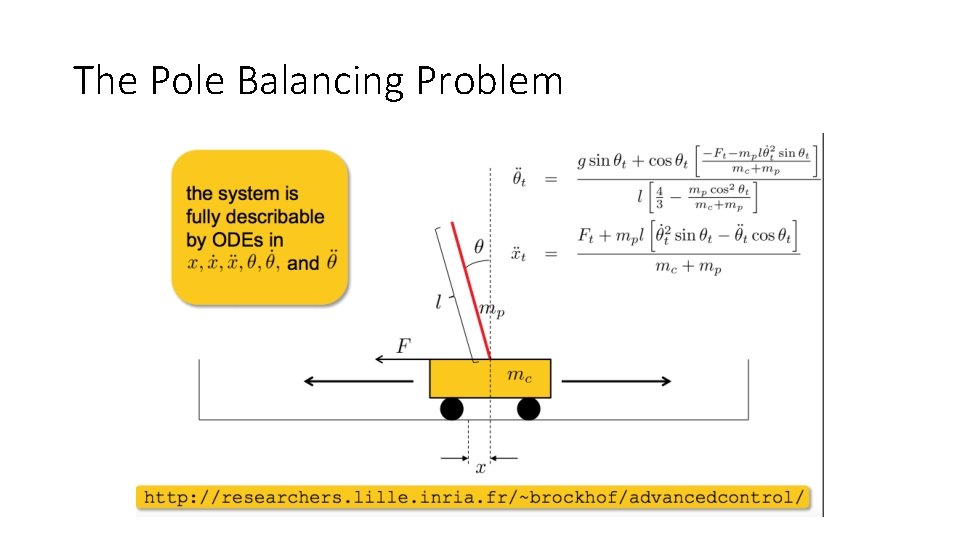

The Pole Balancing Problem: The Pole Balancing Benchmark

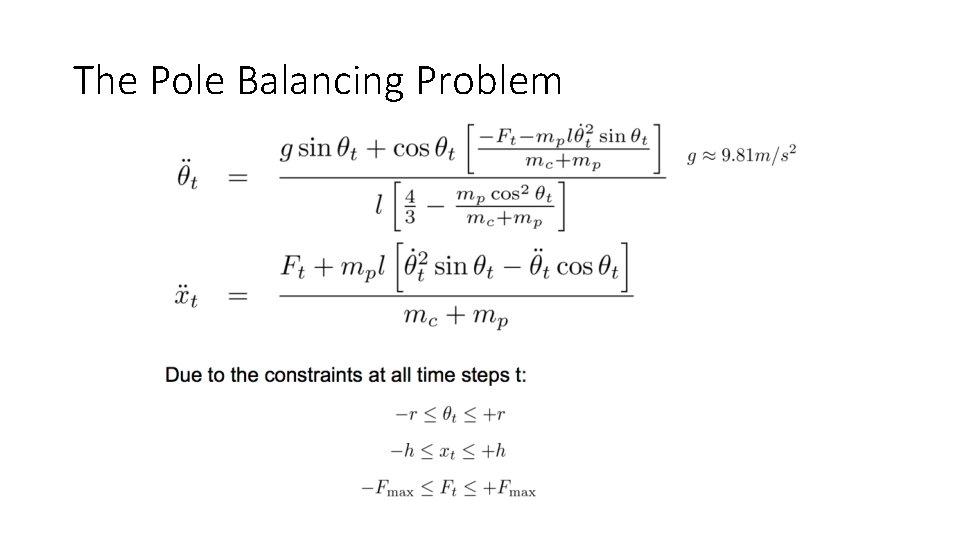

The Pole Balancing Problem


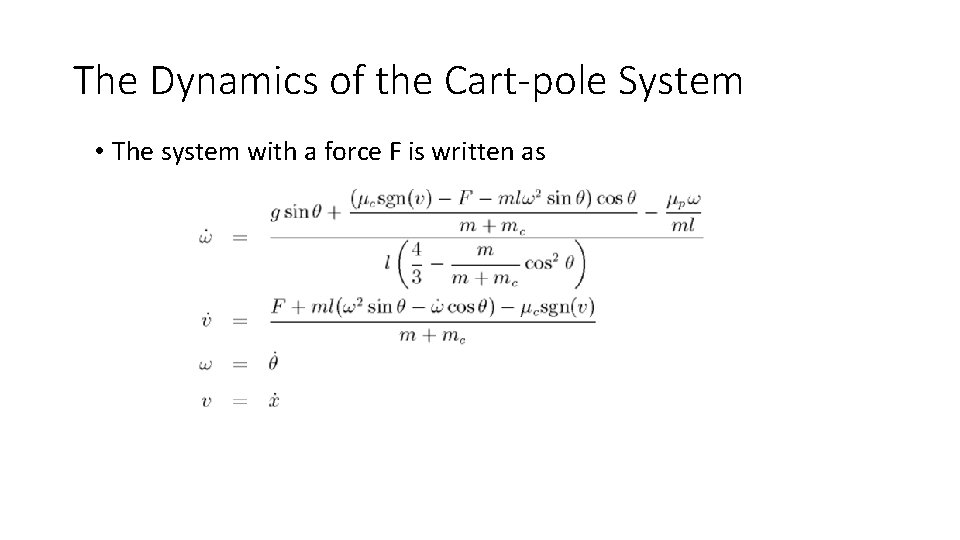

The Dynamics of the Cart-Pole System
• With an applied force F, the equations of motion of the system are written as:

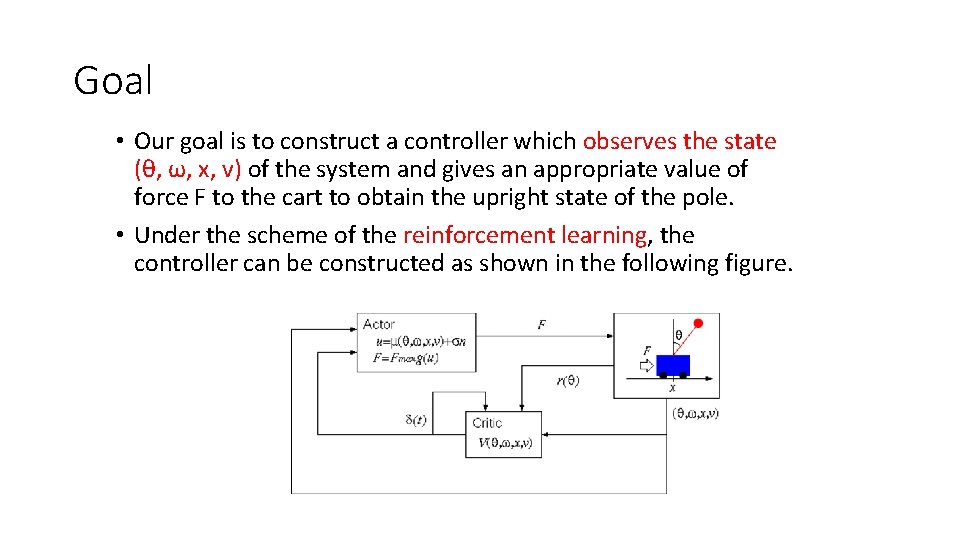
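A sketch of one standard form of these dynamics: the frictionless cart-pole used by OpenAI Gym's CartPole environment, advanced by one explicit Euler step. The constants (gravity, masses, pole half-length, time step) are assumptions, and this model may differ from the equations in the figure, for example by omitting friction. The state uses the (θ, ω, x, v) order of this section, with θ = 0 meaning upright.

```python
import math
from dataclasses import dataclass
from typing import Tuple

State = Tuple[float, float, float, float]  # (theta, omega, x, v); theta = 0 is upright


@dataclass(frozen=True)
class CartPoleParams:
    # Constants as in OpenAI Gym's CartPole (assumed, not taken from the slide)
    gravity: float = 9.8      # m/s^2
    mass_cart: float = 1.0    # kg
    mass_pole: float = 0.1    # kg
    half_length: float = 0.5  # m, pivot to the pole's centre of mass
    dt: float = 0.02          # s, Euler time step


def cartpole_step(state: State, F: float,
                  p: CartPoleParams = CartPoleParams()) -> State:
    """Advance the frictionless cart-pole by one Euler step under force F (N)."""
    theta, omega, x, v = state
    total_mass = p.mass_cart + p.mass_pole
    pole_ml = p.mass_pole * p.half_length
    sin_t, cos_t = math.sin(theta), math.cos(theta)
    temp = (F + pole_ml * omega ** 2 * sin_t) / total_mass
    # Angular acceleration of the pole
    alpha = (p.gravity * sin_t - cos_t * temp) / (
        p.half_length * (4.0 / 3.0 - p.mass_pole * cos_t ** 2 / total_mass))
    # Linear acceleration of the cart
    acc = temp - pole_ml * alpha * cos_t / total_mass
    return (theta + p.dt * omega, omega + p.dt * alpha,
            x + p.dt * v, v + p.dt * acc)


print(cartpole_step((0.1, 0.0, 0.0, 0.0), 0.0))   # slight tilt, no force
print(cartpole_step((0.0, 0.0, 0.0, 0.0), 10.0))  # push the cart to the right
```

Starting from a slight tilt with no force, ω becomes positive, so the pole keeps falling; pushing the cart to the right from rest accelerates the cart in +x and tips the pole toward negative θ.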

Goal
• Our goal is to construct a controller that observes the state (θ, ω, x, v) of the system (pole angle, pole angular velocity, cart position, cart velocity) and applies an appropriate force F to the cart to bring the pole to the upright state.
• Within the reinforcement learning framework, the controller can be constructed as shown in the following figure.

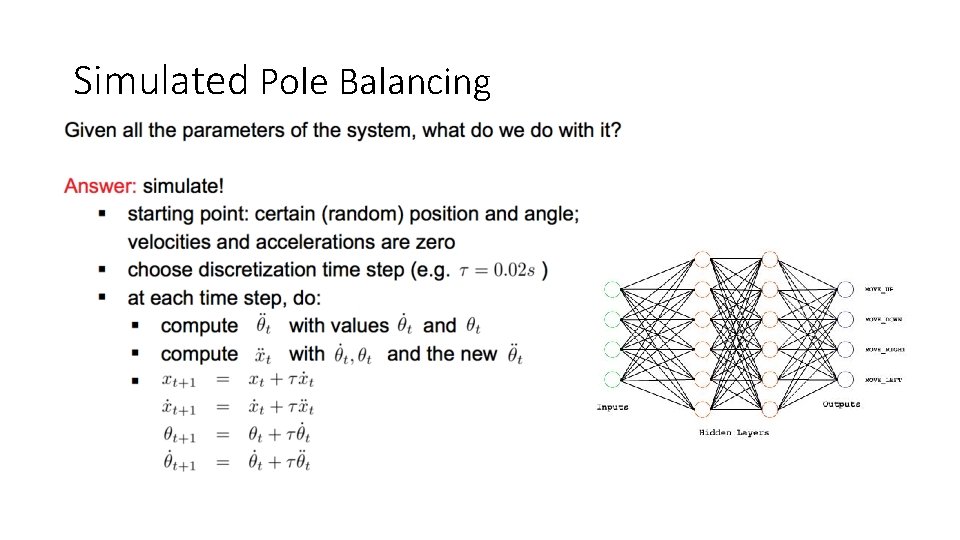

Simulated Pole Balancing

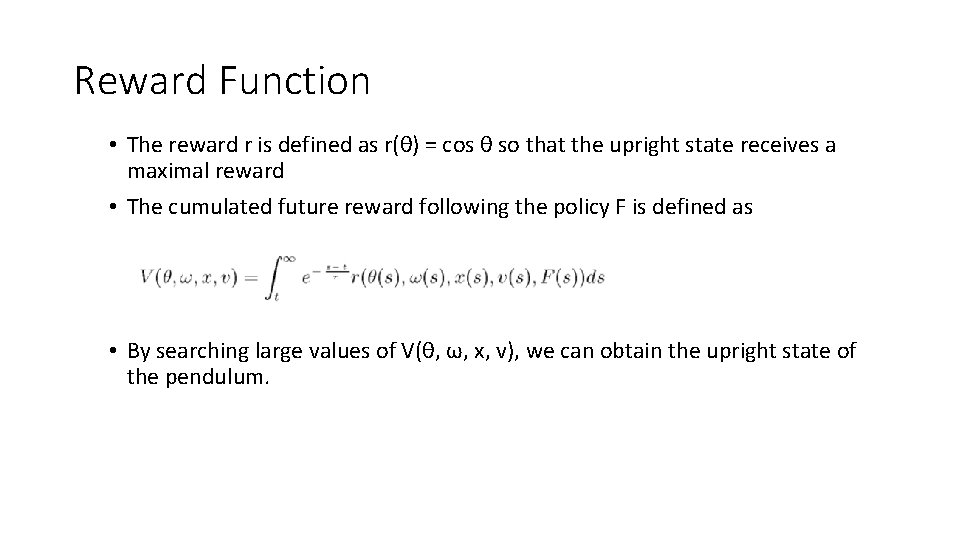

Reward Function
• The reward r is defined as r(θ) = cos θ, so that the upright state (θ = 0) receives the maximal reward r = 1.
• The cumulated future reward obtained by following the policy F from the state (θ, ω, x, v) is denoted V(θ, ω, x, v).
• By searching for large values of V(θ, ω, x, v), we can obtain the upright state of the pendulum.
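A sketch of the reward and of one common discrete-time form of the cumulated future reward, V ≈ Σ_k γ^k r(θ_{t+k}) with discount factor γ. The discounting scheme, γ = 0.99 and the sample trajectories are assumptions, not necessarily the definition used on the slide; the function names are illustrative.

```python
import math
from typing import Sequence


def reward(theta: float) -> float:
    """r(theta) = cos(theta): +1 upright (theta = 0), -1 hanging straight down."""
    return math.cos(theta)


def discounted_future_reward(thetas: Sequence[float], gamma: float = 0.99) -> float:
    """Discrete-time stand-in for V: sum over k of gamma**k * r(theta_{t+k})."""
    return sum(gamma ** k * reward(th) for k, th in enumerate(thetas))


upright = [0.0, 0.02, -0.01, 0.0]  # hypothetical angles of a balanced pole
falling = [0.0, 0.3, 0.8, 1.5]     # hypothetical angles of a falling pole
print(discounted_future_reward(upright) > discounted_future_reward(falling))  # True
```

A trajectory that stays near θ = 0 scores higher than one that falls away, which is why large values of V correspond to keeping the pendulum upright.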

A Simple Search Solution
• An episode is a run of the inverted pendulum balancer lasting some number of time steps. The reward of an episode is an increasing function of the time the pendulum stays up.
• At every time step of an episode, the input to the balancer is the state vector s = <x, x_dot, θ, θ_dot>, and the output is the action pair a = <direction, force>, where direction is either LEFT or RIGHT and force is an integer between -W and W.
• Both direction and force are computed by some function of the current state. For example, direction may be the sign of the dot product of the state vector with a given parameter vector, and force may be the dot product of the state vector with a given parameter vector, mapped to the range [-W, W].
• The next state of the balancer is computed from the equations of motion governing the balancer.
• Between episodes, the parameter vector is updated by some search algorithm whose goal is to maximize the reward of the next episode.
• The search is successful if the reward of an episode is above the system design goal.

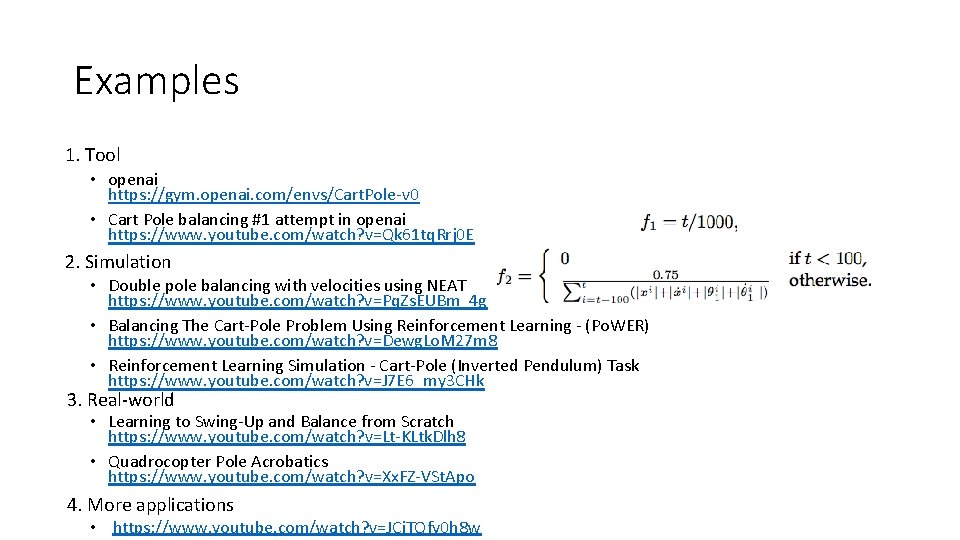
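The search procedure above can be sketched in Python under several assumptions that the slide leaves open: a frictionless cart-pole model with Gym-style constants, failure when |θ| exceeds 12° or |x| exceeds 2.4, an episode reward equal to the number of steps survived (capped at 500, which also serves as the design goal), an applied force of ±|force| with the sign taken from direction, and random-perturbation hill climbing as the search algorithm. `W = 10` and all function names are hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple

W = 10           # hypothetical force bound: force is an integer in [-W, W]
MAX_STEPS = 500  # hypothetical episode cap, also used as the design goal

State = Tuple[float, float, float, float]  # <x, x_dot, theta, theta_dot>


def dynamics(s: State, force: float, dt: float = 0.02) -> State:
    """Frictionless cart-pole, one explicit Euler step (assumed model/constants)."""
    g, m_cart, m_pole, half_len = 9.8, 1.0, 0.1, 0.5
    x, x_dot, th, th_dot = s
    m = m_cart + m_pole
    sin_t, cos_t = math.sin(th), math.cos(th)
    temp = (force + m_pole * half_len * th_dot ** 2 * sin_t) / m
    th_acc = (g * sin_t - cos_t * temp) / (
        half_len * (4.0 / 3.0 - m_pole * cos_t ** 2 / m))
    x_acc = temp - m_pole * half_len * th_acc * cos_t / m
    return (x + dt * x_dot, x_dot + dt * x_acc, th + dt * th_dot, th_dot + dt * th_acc)


def dot(w: Sequence[float], s: Sequence[float]) -> float:
    return sum(wi * si for wi, si in zip(w, s))


def act(s: State, w_dir: Sequence[float], w_force: Sequence[float]) -> Tuple[str, int]:
    """Action pair <direction, force> computed from two parameter vectors."""
    direction = "RIGHT" if dot(w_dir, s) >= 0 else "LEFT"
    force = max(-W, min(W, round(dot(w_force, s))))  # integer clipped to [-W, W]
    return direction, force


def run_episode(params: Sequence[float], start: State) -> int:
    """Episode reward = number of steps before the pole or cart leaves its bounds."""
    w_dir, w_force = params[:4], params[4:]
    s = start
    for t in range(MAX_STEPS):
        direction, force = act(s, w_dir, w_force)
        # Assumption: direction gives the sign, |force| gives the magnitude
        s = dynamics(s, abs(force) if direction == "RIGHT" else -abs(force))
        if abs(s[2]) > math.radians(12) or abs(s[0]) > 2.4:
            return t
    return MAX_STEPS


def search(episodes: int = 200, sigma: float = 0.5,
           seed: int = 0) -> Tuple[List[float], int, List[int]]:
    """Hill climbing over the 8 policy parameters, one candidate per episode."""
    rng = random.Random(seed)
    start: State = (0.0, 0.0, 0.05, 0.0)
    best = [rng.uniform(-1.0, 1.0) for _ in range(8)]
    best_reward = run_episode(best, start)
    history = [best_reward]
    for _ in range(episodes):
        if best_reward >= MAX_STEPS:  # design goal reached: stop searching
            break
        candidate = [w + rng.gauss(0.0, sigma) for w in best]
        reward = run_episode(candidate, start)
        if reward > best_reward:      # keep only improvements
            best, best_reward = candidate, reward
        history.append(best_reward)
    return best, best_reward, history


if __name__ == "__main__":
    params, reward, history = search()
    print("best episode reward:", reward, "after", len(history) - 1, "episodes")
```

Hill climbing keeps a candidate only when it beats the best episode so far, so the recorded best reward never decreases; whether it reaches the design goal depends on the seed, the noise scale `sigma` and the number of episodes.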

Examples
1. Tool
• OpenAI Gym: https://gym.openai.com/envs/CartPole-v0
• Cart-pole balancing, attempt #1 in OpenAI Gym: https://www.youtube.com/watch?v=Qk61tqRrj0E
2. Simulation
• Double pole balancing with velocities using NEAT: https://www.youtube.com/watch?v=PqZsEUBm_4g
• Balancing the Cart-Pole Problem Using Reinforcement Learning (PoWER): https://www.youtube.com/watch?v=DewgLoM27m8
• Reinforcement Learning Simulation: Cart-Pole (Inverted Pendulum) Task: https://www.youtube.com/watch?v=J7E6_my3CHk
3. Real-world
• Learning to Swing-Up and Balance from Scratch: https://www.youtube.com/watch?v=Lt-KLtkDlh8
• Quadrocopter Pole Acrobatics: https://www.youtube.com/watch?v=XxFZ-VStApo
4. More applications
• https://www.youtube.com/watch?v=JCjTQfy0h8w

Gradient Descent Tutorial by Andrew Ng, Stanford University (video links: Part 1, Part 2, Part 3)
