
Reinforcement Learning in Games
Colin Cherry (colinc@cs)
Oct 29/01

Outline
- Reinforcement Learning & TD Learning
- TD-Gammon
- TDLeaf
  - Chinook
- Conclusion

The ideas behind Reinforcement Learning
- Two broad categories for learning:
  - Supervised
  - Unsupervised
- Problem with unsupervised learning (our concern):
  - Delayed rewards (temporal credit assignment)
- Goal:
  - Create a good control policy based on delayed rewards

Evaluation Function: Developing a Control Policy
- Evaluation function:
  - A function that estimates the total reward the agent will receive if it follows the policy given by the function from this point onward
- We will assume the function evaluates states (good for deterministic games)
- The evaluation function could be:
  - A look-up table, a linear function, a neural network, or any other function approximator
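As a minimal sketch of the "linear function" option, assume each state has already been reduced to a vector of numeric features (the feature names in the comment are hypothetical). The evaluation is then a weighted sum of those features, and its gradient with respect to the weights is simply the feature vector. That property keeps TD-style weight updates cheap for linear evaluators.

```python
from typing import Sequence


def linear_eval(weights: Sequence[float], features: Sequence[float]) -> float:
    """Estimate the value of a state as a weighted sum of its features."""
    if len(weights) != len(features):
        raise ValueError("weights and features must have the same length")
    return sum(w * f for w, f in zip(weights, features))


def linear_eval_grad(features: Sequence[float]) -> list[float]:
    """Gradient of linear_eval with respect to the weights: just the features."""
    return list(features)


# Hypothetical features for a checkers-like position:
# [material difference, mobility difference, pieces on back rank]
weights = [1.0, 0.1, 0.05]
features = [2.0, -3.0, 4.0]
value = linear_eval(weights, features)  # 2.0 - 0.3 + 0.2, i.e. about 1.9
```

A look-up table is the special case where each state has its own one-hot feature, so each weight is that state's stored value.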

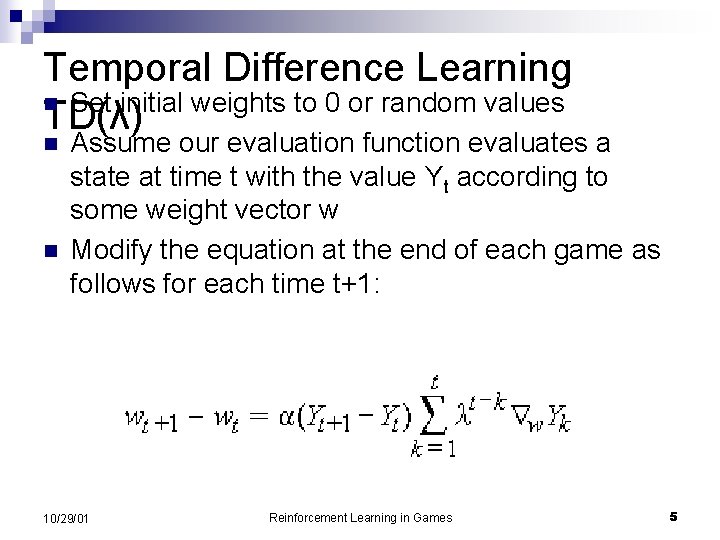

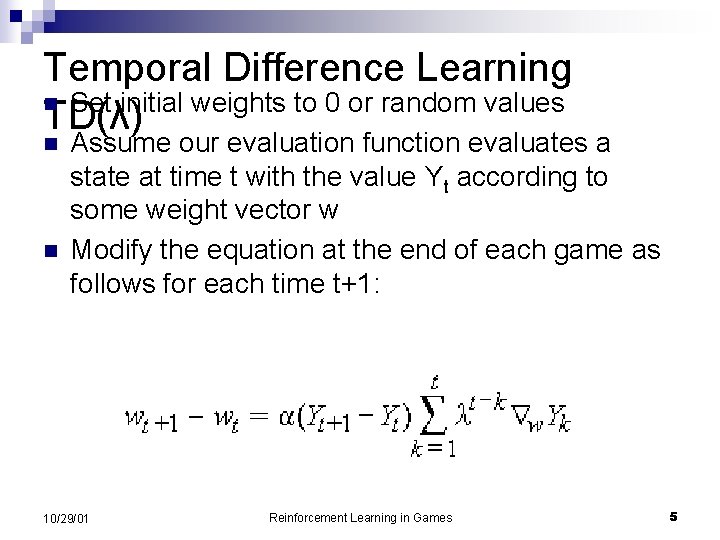

Temporal Difference Learning: TD(λ)
- Set initial weights to 0 or random values
- Assume our evaluation function evaluates a state at time t with the value Y_t according to some weight vector w
- At the end of each game, modify the weights as follows for each time t+1:

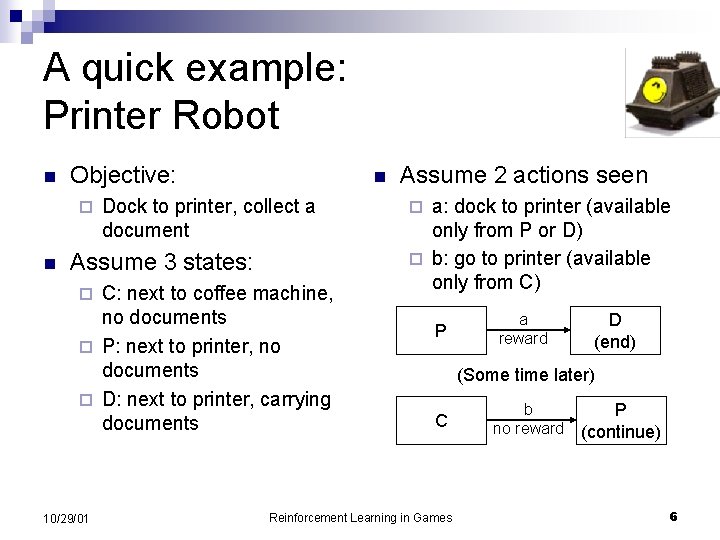

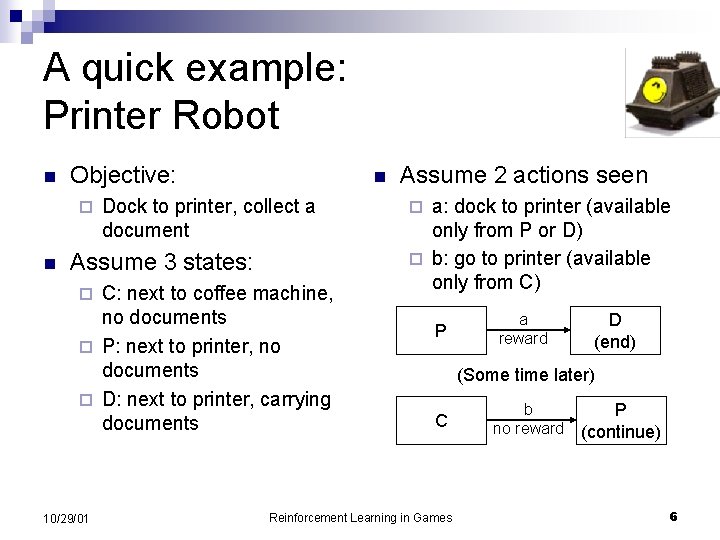
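For reference, here is the standard TD(λ) weight update in this slide's notation. This is Sutton's formulation, which is also the form TD-Gammon used:

$$\Delta w_t = \alpha\,(Y_{t+1} - Y_t)\sum_{k=1}^{t}\lambda^{t-k}\,\nabla_w Y_k$$

Here α is a learning rate, which is an assumption because the slide names none. After the last position N, Y_{N+1} is taken to be the actual game outcome. The sketch below sums these changes over one game for a linear evaluator, where ∇_w Y_k is just the feature vector of state k, and applies them only at the end of the game. The function name and its parameters are hypothetical.

```python
from typing import Sequence


def td_lambda_game_update(
    weights: list[float],
    game_features: Sequence[Sequence[float]],
    outcome: float,
    alpha: float = 0.1,
    lam: float = 0.7,
) -> list[float]:
    """Offline TD(lambda) for a linear evaluator Y_t = w . x_t.

    game_features[t] is the feature vector x_t of the state at time t;
    outcome is the final reward, used in place of Y_{N+1}. Weights are held
    fixed during the game and the summed change is applied at the end.
    """
    n_weights = len(weights)
    # Predictions Y_t, all made with the same (pre-game) weights.
    preds = [sum(w * x for w, x in zip(weights, feats)) for feats in game_features]
    preds.append(outcome)  # the actual outcome plays the role of Y_{N+1}

    trace = [0.0] * n_weights    # sum over k <= t of lambda^(t-k) * grad_w Y_k
    delta_w = [0.0] * n_weights  # accumulated weight change for the whole game
    for t, feats in enumerate(game_features):
        # Decay the old trace and add grad_w Y_t (the features, for a linear evaluator).
        trace = [lam * e + x for e, x in zip(trace, feats)]
        td_error = preds[t + 1] - preds[t]
        delta_w = [d + alpha * td_error * e for d, e in zip(delta_w, trace)]
    return [w + d for w, d in zip(weights, delta_w)]


# Usage: a two-step game C -> P -> end with reward 1, using one-hot features.
new_w = td_lambda_game_update(
    [0.0, 0.0], [[1.0, 0.0], [0.0, 1.0]], outcome=1.0, alpha=0.5, lam=0.5
)
print(new_w)  # [0.25, 0.5]
```

With `lam=0.0` the first weight would stay at 0 after this game, so λ controls how far back along the game the credit for the final reward flows.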

A quick example: Printer Robot
- Objective:
  - Dock to the printer and collect a document
- Assume 3 states:
  - C: next to coffee machine, no documents
  - P: next to printer, no documents
  - D: next to printer, carrying documents
- Assume 2 actions:
  - a: dock to printer (available only from P or D)
  - b: go to printer (available only from C)
- Transitions seen:
  - P, action a: reward, move to D (end)
  - (Some time later) C, action b: no reward, move to P (continue)
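The two transitions on this slide can be replayed as tabular TD(0) updates. This is a sketch under assumptions the slide does not state: a learning rate of 0.5, a reward of 1 for collecting the document, and no discounting.

```python
ALPHA = 0.5  # assumed learning rate; the slide does not specify one

# One table entry per state; D ends the episode, so its value is never used as a target.
values = {"C": 0.0, "P": 0.0, "D": 0.0}


def td0_update(values: dict, state: str, next_state: str,
               reward: float, terminal: bool) -> None:
    """Move V(state) toward reward + V(next_state), or just reward at the end of an episode."""
    target = reward if terminal else reward + values[next_state]
    values[state] += ALPHA * (target - values[state])


# From P, action a docks and collects the document: reward, episode ends.
td0_update(values, "P", "D", reward=1.0, terminal=True)
# Some time later, from C, action b goes to the printer: no reward, continue.
td0_update(values, "C", "P", reward=0.0, terminal=False)

print(values)  # {'C': 0.25, 'P': 0.5, 'D': 0.0}
```

C's value rises even though the C-to-P transition earned nothing, because P's value had already been raised by the earlier reward. That is temporal credit assignment in miniature.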

TD-Gammon
- Self-taught backgammon player
- Good enough to make the best human players sweat
- Huge success for reinforcement learning
- Far surpassed its supervised-learning cousin, Neurogammon

How does it work?
- Used an artificial neural network as its evaluation function approximator
- Excellent neural network design
- Used expert features developed for Neurogammon along with a basic board representation
- Hundreds of thousands of training games against itself
- Hard-coded doubling algorithm
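This slide does not give TD-Gammon's actual architecture. The code below is only a toy of the same general kind: a feed-forward network with sigmoid units that maps a feature vector to a value in (0, 1). The class name, the layer sizes, and the omission of bias terms are all assumptions made to keep the sketch short. For a nonlinear evaluator, the TD(λ) update needs ∇_w Y for every weight, and backpropagation supplies it.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


class TinyEvalNet:
    """Hypothetical one-hidden-layer sigmoid network: features -> value in (0, 1).

    No bias terms, to keep the sketch short.
    """

    def __init__(self, n_inputs: int, n_hidden: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        # Small random weights: with all-zero weights every hidden unit would
        # compute the same thing and receive the same gradient forever.
        self.w_hidden = [[rng.uniform(-0.1, 0.1) for _ in range(n_inputs)]
                         for _ in range(n_hidden)]
        self.w_out = [rng.uniform(-0.1, 0.1) for _ in range(n_hidden)]

    def evaluate(self, x: list[float]) -> tuple[float, list[float]]:
        """Forward pass; returns the output value and the hidden activations."""
        hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in self.w_hidden]
        y = sigmoid(sum(w * h for w, h in zip(self.w_out, hidden)))
        return y, hidden

    def gradient(self, x: list[float]) -> tuple[list[list[float]], list[float]]:
        """Backpropagation: dY/dw for every weight, as needed by a TD(lambda) update."""
        y, hidden = self.evaluate(x)
        dy = y * (1.0 - y)  # derivative of the output sigmoid
        grad_out = [dy * h for h in hidden]
        grad_hidden = [[dy * wo * h * (1.0 - h) * xi for xi in x]
                       for wo, h in zip(self.w_out, hidden)]
        return grad_hidden, grad_out


net = TinyEvalNet(n_inputs=3, n_hidden=4, seed=1)
value, _ = net.evaluate([1.0, 0.0, 0.5])
```

The random initialization is one reason the TD slide allows "0 or random values". Zero works for a linear evaluator, but a network needs random values to break the symmetry between hidden units.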

Why did it work so well?
- Stochastic domain: the dice rolls force exploration
- Linear (basic) concepts are learned first
- Shallow search is “good enough” against humans

Backgammon vs. Other Games: Shallow Search
- TD-Gammon followed a greedy approach
  - 1-ply look-ahead (later increased to 3-ply)
  - It’s hard to predict your opponent’s move without knowing his or her dice roll. What about your move after that?
- Doesn’t work so well for other games:
  - What features will tell me what move to take by looking only at the immediate results of the moves available to me?
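Greedy 1-ply look-ahead amounts to applying each legal move, evaluating the resulting state, and taking the best. The sketch below is generic and the helper names are hypothetical. In backgammon the legal moves depend on the dice roll already in hand. The toy game here has no dice.

```python
from typing import Callable, Iterable, TypeVar

State = TypeVar("State")
Move = TypeVar("Move")


def greedy_move(
    state: State,
    legal_moves: Callable[[State], Iterable[Move]],
    apply_move: Callable[[State, Move], State],
    evaluate: Callable[[State], float],
) -> Move:
    """1-ply look-ahead: pick the move whose immediate result evaluates best."""
    return max(legal_moves(state), key=lambda move: evaluate(apply_move(state, move)))


# Toy game (hypothetical): the state is a position on a number line, a move
# adds 1, 2, or 3, and positions closer to 10 evaluate higher.
best = greedy_move(
    8,
    legal_moves=lambda s: [1, 2, 3],
    apply_move=lambda s, m: s + m,
    evaluate=lambda s: -abs(10 - s),
)
print(best)  # 2
```

With no search past the immediate result, the evaluation function alone must capture everything about what happens next. The slide's closing question points at exactly this limitation.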

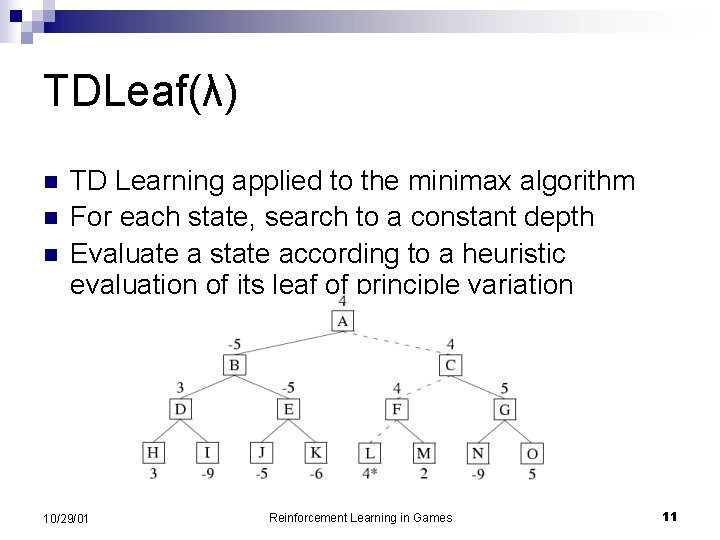

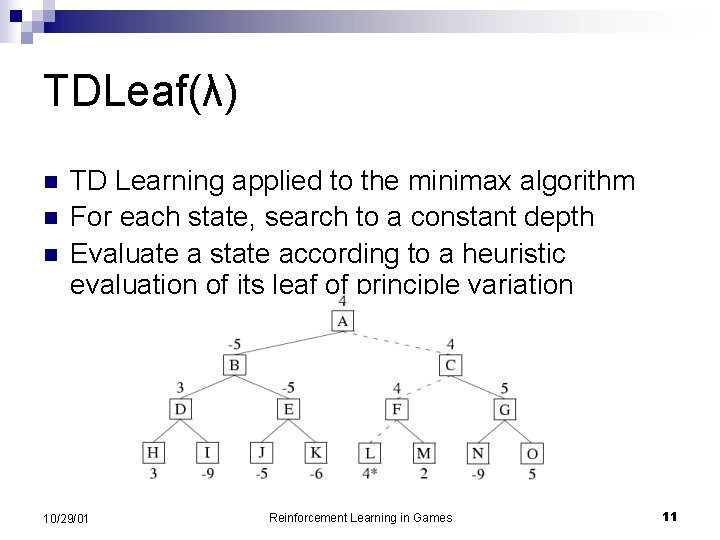

TDLeaf(λ)
- TD learning applied to the minimax algorithm
- For each state, search to a constant depth
- Evaluate a state according to the heuristic evaluation of the leaf of its principal variation
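A minimal sketch of TDLeaf(λ) appears below. It assumes a linear evaluation function, as with Chinook's tunable weights. It also assumes that every recorded position is one where the learning side is to move and is the maximizing player. Each position is searched to a fixed depth, and the evaluation of its principal-variation leaf stands in for the position's value. The TD(λ) gradient is then taken at that leaf. The helper names and the toy tree are hypothetical. A real program would use alpha-beta rather than plain minimax, and alpha-beta returns the same root value.

```python
from typing import Callable, Hashable, Sequence


def dot(w: Sequence[float], x: Sequence[float]) -> float:
    return sum(wi * xi for wi, xi in zip(w, x))


def minimax_pv_leaf(node, depth: int, maximizing: bool, children: Callable,
                    features: Callable, weights: Sequence[float]):
    """Depth-limited minimax; returns (value, leaf at the end of the principal variation)."""
    kids = children(node)
    if depth == 0 or not kids:
        return dot(weights, features(node)), node
    best_value, best_leaf = None, None
    for child in kids:
        value, leaf = minimax_pv_leaf(child, depth - 1, not maximizing,
                                      children, features, weights)
        if best_value is None or (value > best_value if maximizing else value < best_value):
            best_value, best_leaf = value, leaf
    return best_value, best_leaf


def tdleaf_game_update(weights: list[float], root_positions: Sequence[Hashable],
                       outcome: float, depth: int, children: Callable,
                       features: Callable, alpha: float = 0.1,
                       lam: float = 0.7) -> list[float]:
    """TDLeaf(lambda): TD(lambda) applied to the PV-leaf evaluations of a game's positions."""
    results = [minimax_pv_leaf(pos, depth, True, children, features, weights)
               for pos in root_positions]
    preds = [value for value, _ in results] + [outcome]  # outcome acts as the final Y
    trace = [0.0] * len(weights)
    delta_w = [0.0] * len(weights)
    for t, (_, leaf) in enumerate(results):
        # Gradient of a linear evaluator at the PV leaf is that leaf's feature vector.
        trace = [lam * e + x for e, x in zip(trace, features(leaf))]
        td_error = preds[t + 1] - preds[t]
        delta_w = [d + alpha * td_error * e for d, e in zip(delta_w, trace)]
    return [w + d for w, d in zip(weights, delta_w)]


# Toy game tree (hypothetical): internal nodes list their children; leaves carry features.
tree = {"root": ["a", "b"], "a": ["a1", "a2"], "b": ["b1", "b2"]}
leaf_features = {"a1": [1.0, 0.0], "a2": [0.0, 1.0], "b1": [1.0, 1.0], "b2": [0.0, 0.0]}


def children(node):
    return tree.get(node, [])


def features(node):
    return leaf_features[node]


value, leaf = minimax_pv_leaf("root", 2, True, children, features, [1.0, 2.0])
print(value, leaf)  # 1.0 a1
new_w = tdleaf_game_update([1.0, 2.0], ["root", "b"], outcome=0.0, depth=2,
                           children=children, features=features, alpha=0.5, lam=0.5)
print(new_w)  # [-0.25, 0.5]
```

The "train at the same depth you plan to play at" lesson follows from this design. The leaves that receive credit depend on `depth`, so weights tuned at one depth are tuned for a different set of positions than those seen at another depth.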

Chinook
- This program, at this school, in this class, should need no introduction
- 84 features (4 sets of 21) were tunable by weight
- Each feature consists of many hand-picked parameters
- Question: Can we learn the 84 weights as well as a human can set them?

The Test
- Trained using TDLeaf
- All weight values initially set to 0
- Variation introduced by using a book of opening moves (144 3-ply openings)
- Played no more than 10,000 games against itself before hitting a plateau
- Both programs use the same search depth

The results were very positive
- Chinook with all weights set to 1 vs. Tournament Chinook: 94.5-193.5
- Chinook after self-play training vs. Tournament Chinook: Even Steven
- Some lessons learned:
  - You have to train at the same depth you plan to play at
  - You have to play against real people too

Conclusions
- TD(λ) can be a powerful tool in the creation of game-playing evaluation functions
- The training must introduce variation
- Features need to be hand-picked (for now)
- TD and TDLeaf allow quick weight tuning
  - Takes a lot of the tedium out of player design
  - Gives designers more freedom to experiment with features