
Reinforcement Learning. Guest Lecturer: Chengxiang Zhai. 15-681 Machine Learning, December 6, 2001

Outline For Today • The Reinforcement Learning Problem • Markov Decision Process • Q-Learning • Summary

The Checkers Problem Revisited • Goal: to win every game! • What to learn: given any board position, choose a “good” move • But what is a “good” move? – A move that helps win the game – A move that leads to a “better” board position • So what is a “better” board position? – A position from which a “good” next move exists!

Structure of the Checkers Problem • You are interacting/experimenting with an environment (the board) • You see the state of the environment (the board position) • You take an action (a move), which will – change the state of the environment – result in an immediate reward • Immediate reward = 0 unless you win (+100) or lose (-100) the game • You want to learn to “control” the environment (the board) so as to maximize your long-term reward (win the game)

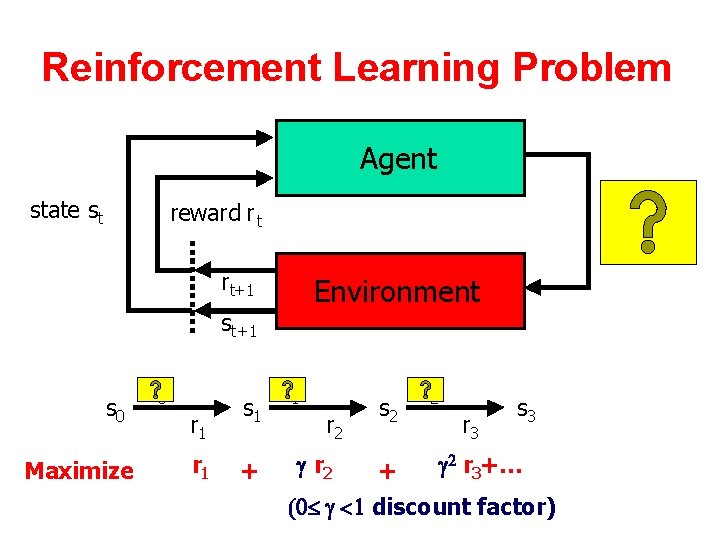

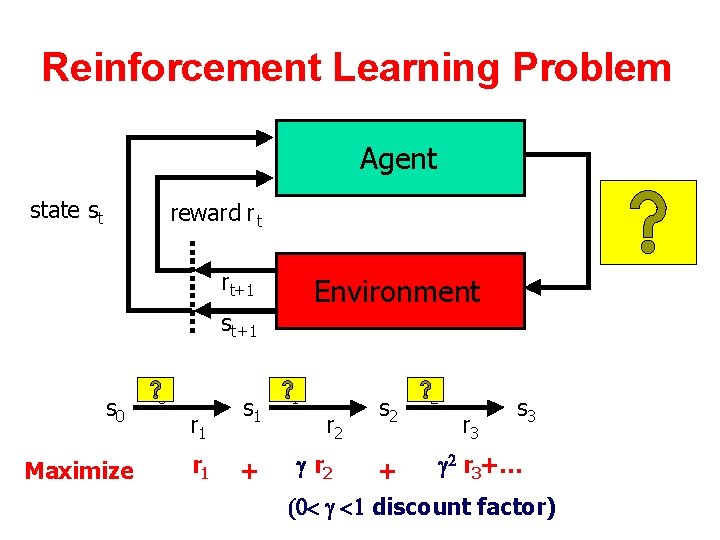

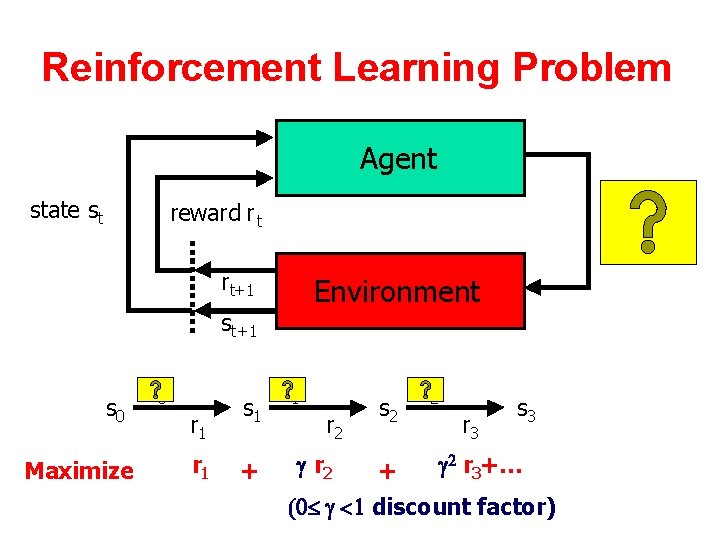

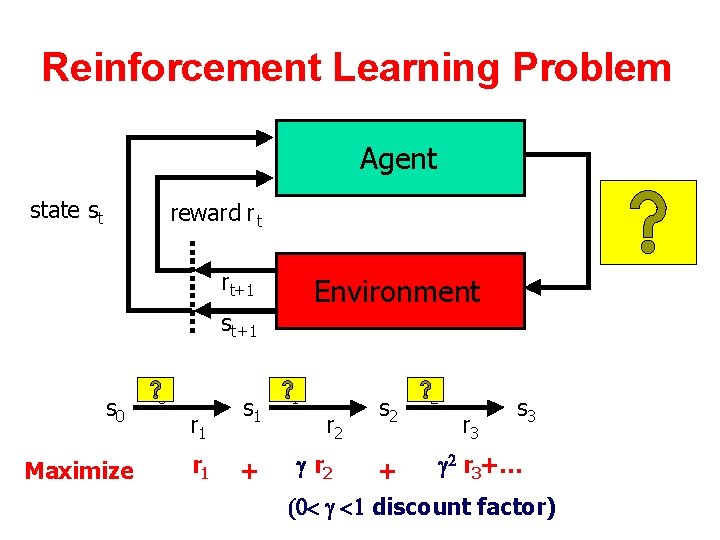

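Reinforcement Learning Problem • [Figure: agent-environment loop. The agent observes state $s_t$ and reward $r_t$ and takes action $a_t$; the environment responds with reward $r_{t+1}$ and next state $s_{t+1}$.] • Trajectory: $s_0 \xrightarrow{a_0,\ r_1} s_1 \xrightarrow{a_1,\ r_2} s_2 \xrightarrow{a_2,\ r_3} s_3 \cdots$ • Maximize $r_1 + \gamma r_2 + \gamma^2 r_3 + \cdots$ ($0 \le \gamma < 1$ is the discount factor)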
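The objective on this slide can be computed directly for a finite episode. This is a minimal Python sketch. It assumes the rewards arrive as a plain list `[r_1, r_2, ...]`; the function name `discounted_return` is hypothetical.

```python
def discounted_return(rewards, gamma):
    """Return r_1 + gamma*r_2 + gamma**2*r_3 + ... for a finite list of rewards."""
    if not 0 <= gamma < 1:
        raise ValueError("the discount factor must satisfy 0 <= gamma < 1")
    total = 0.0
    # Accumulate from the last reward backwards:
    # r_1 + gamma*(r_2 + gamma*(r_3 + ...)) equals the discounted sum.
    for r in reversed(rewards):
        total = r + gamma * total
    return total


# Checkers-style episodes: reward 0 until the final winning move (+100).
quick_win = [0, 100]
slow_win = [0, 0, 0, 100]
print(discounted_return(quick_win, 0.9), discounted_return(slow_win, 0.9))
```

With $\gamma < 1$, the same +100 is worth more when it arrives sooner: about 90 for the quick win versus about 72.9 for the slow one. That difference is what pushes the agent toward winning quickly.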

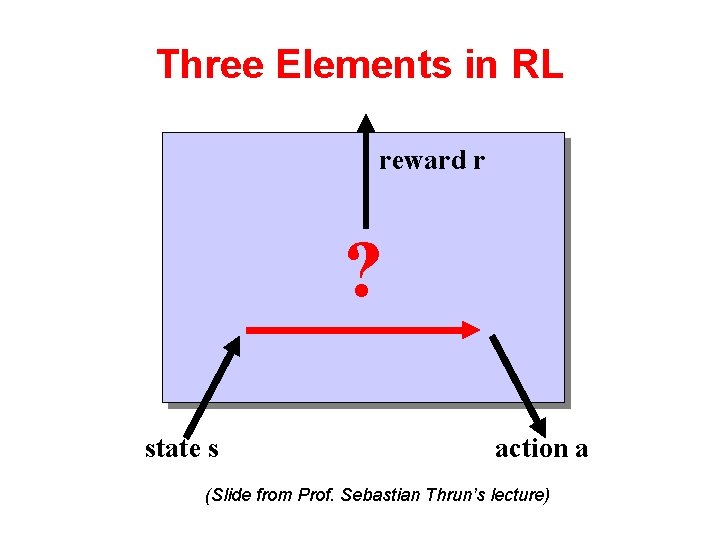

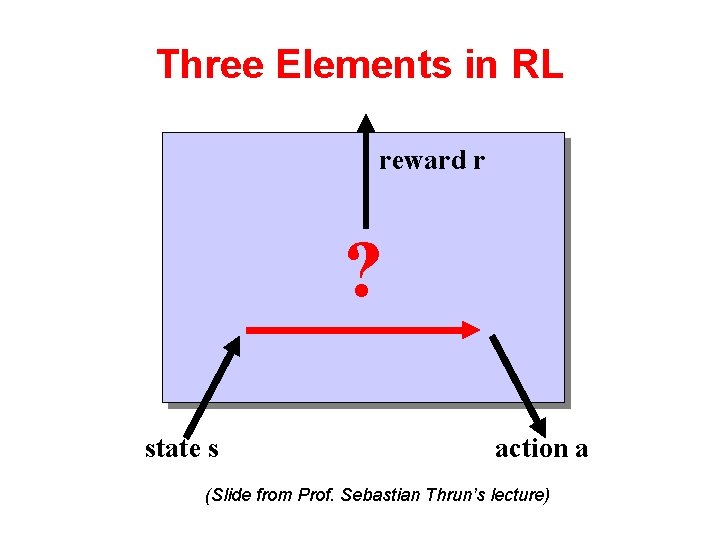

Three Elements in RL • State $s$ • Action $a$ • Reward $r$ (Slide from Prof. Sebastian Thrun’s lecture)

Example 1: Slot Machine • State: configuration of slots • Action: stopping time • Reward: $$$ (Slide from Prof. Sebastian Thrun’s lecture)

Example 2: Mobile Robot • State: location of robot, people, etc. • Action: motion • Reward: the number of happy faces (Slide from Prof. Sebastian Thrun’s lecture)

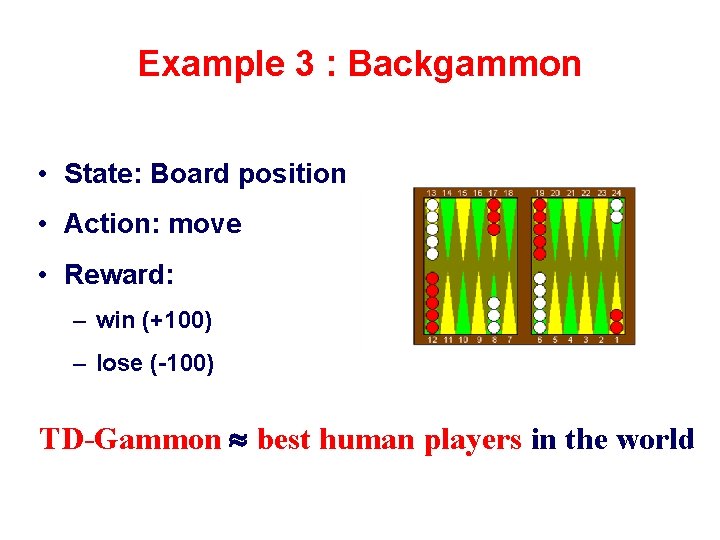

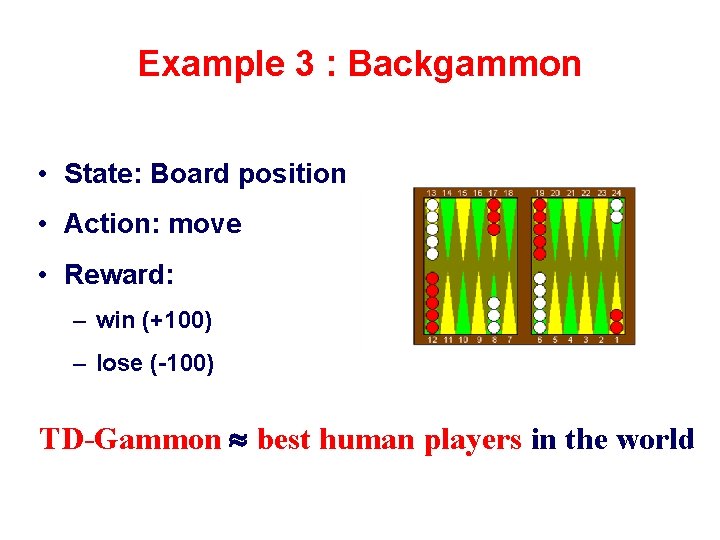

Example 3: Backgammon • State: board position • Action: move • Reward: – win (+100) – lose (-100) • TD-Gammon learned to play at a level close to the best human players in the world

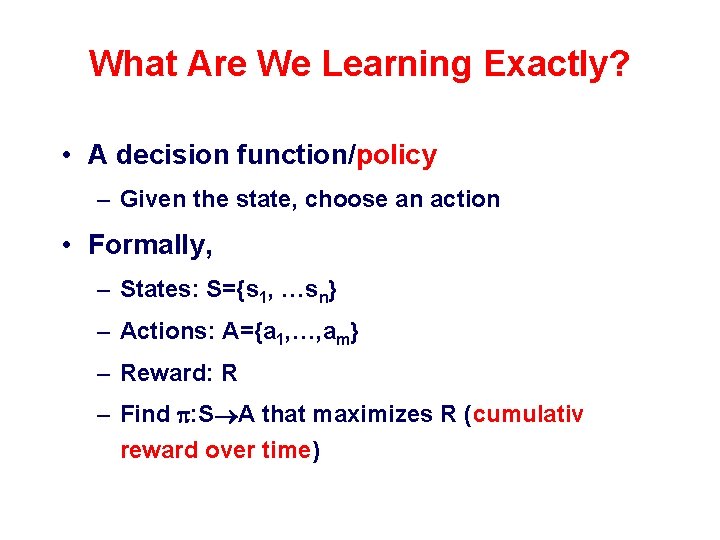

What Are We Learning Exactly? • A decision function/policy – Given the state, choose an action • Formally, – States: $S = \{s_1, \ldots, s_n\}$ – Actions: $A = \{a_1, \ldots, a_m\}$ – Reward: $R$ – Find $\pi: S \to A$ that maximizes $R$ (cumulative reward over time)

Find $\pi: S \to A$: on its face, this is just function approximation. So, what’s special about reinforcement learning?


What’s So Special About RL? (Answers from “the book”) • Delayed reward • Exploration • Partially observable states • Life-long learning

Now that we know the problem, how do we solve it? ==> Markov Decision Process (MDP)

Markov Decision Process (MDP) • Finite set of states $S$ • Finite set of actions $A$ • At each time step the agent observes state $s_t \in S$ and chooses action $a_t \in A(s_t)$ • It then receives immediate reward $r_{t+1} = r(s_t, a_t)$ • And the state changes to $s_{t+1} = \delta(s_t, a_t)$ • Markov assumption: $s_{t+1} = \delta(s_t, a_t)$ and $r_{t+1} = r(s_t, a_t)$ – The next reward and state depend only on the current state $s_t$ and action $a_t$ – The functions $\delta(s_t, a_t)$ and $r(s_t, a_t)$ may be nondeterministic – The functions $\delta(s_t, a_t)$ and $r(s_t, a_t)$ are not necessarily known to the agent
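To make the definitions concrete, here is a hypothetical deterministic MDP (not from the lecture) written in Python. It is a four-cell corridor whose rightmost cell is an absorbing goal. The names `delta`, `reward`, and `step` are illustrative. They mirror $\delta(s,a)$, $r(s,a)$, and one agent-environment interaction, respectively.

```python
# Hypothetical toy MDP (not from the lecture): a corridor of cells 0-1-2-3.
STATES = [0, 1, 2, 3]
ACTIONS = ["left", "right"]
GOAL = 3  # absorbing goal cell


def delta(s, a):
    """Deterministic transition function: next state s_{t+1} = delta(s_t, a_t)."""
    if s == GOAL:
        return s  # once in the goal, every action keeps the agent there
    return max(s - 1, 0) if a == "left" else min(s + 1, GOAL)


def reward(s, a):
    """Immediate reward r_{t+1} = r(s_t, a_t): +100 for entering the goal, else 0."""
    return 100 if s != GOAL and delta(s, a) == GOAL else 0


def step(s, a):
    """One agent-environment interaction; depends only on (s_t, a_t) (Markov)."""
    return reward(s, a), delta(s, a)


s = 0
for t in range(3):
    r, s_next = step(s, "right")
    print(f"s_{t}={s}, a_{t}=right -> r_{t + 1}={r}, s_{t + 1}={s_next}")
    s = s_next
```

Here the agent could call `step` without ever seeing how `delta` and `reward` are implemented. That situation matches the last bullet: the functions need not be known to the agent.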

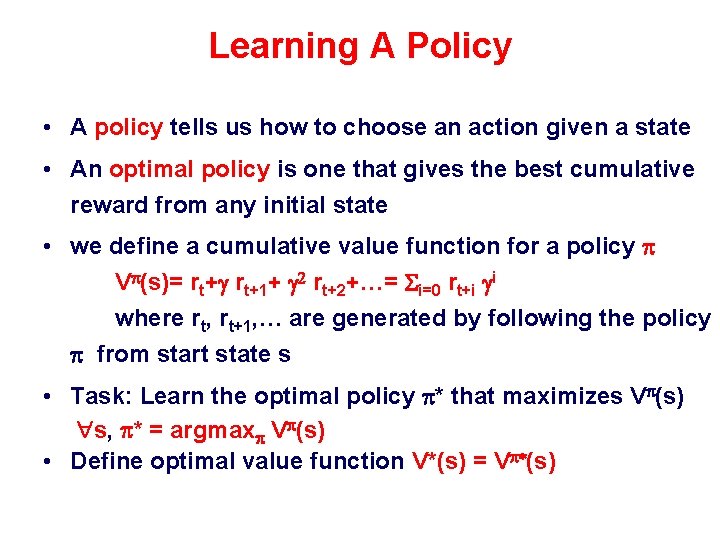

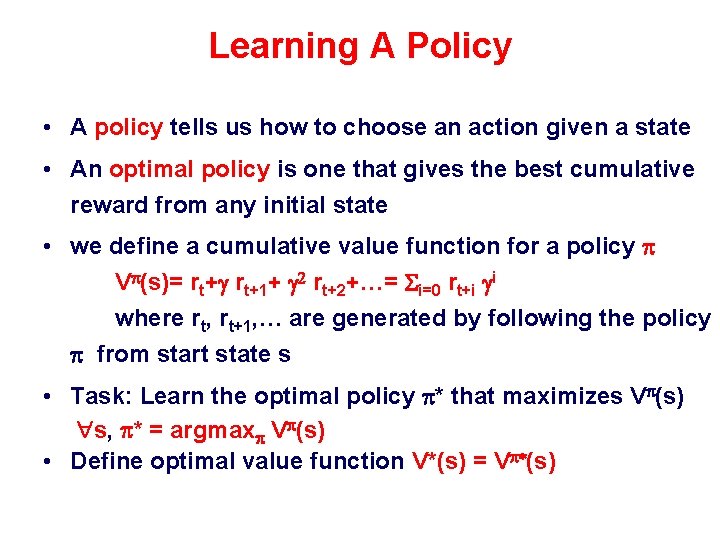

Learning a Policy • A policy tells us how to choose an action given a state • An optimal policy is one that gives the best cumulative reward from any initial state • We define a cumulative value function for a policy $\pi$: $V^\pi(s) = r_{t+1} + \gamma r_{t+2} + \gamma^2 r_{t+3} + \cdots = \sum_{i=0}^{\infty} \gamma^i r_{t+i+1}$, where $r_{t+1}, r_{t+2}, \ldots$ are generated by following $\pi$ from start state $s$ • Task: learn the optimal policy $\pi^*$ that maximizes $V^\pi(s)$ for all $s$: $\pi^* = \arg\max_\pi V^\pi(s),\ \forall s$ • Define the optimal value function $V^*(s) = V^{\pi^*}(s)$
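For a deterministic MDP, $V^\pi(s)$ can be approximated by following $\pi$ from $s$ and summing the discounted rewards. The Python sketch below assumes a hypothetical four-cell corridor that is not from the lecture:

- Cell 3 is an absorbing goal.
- Entering the goal pays +100.
- $\gamma = 0.9$.

A policy is represented as a dict from state to action. The name `policy_value` and the cut-off `horizon` are illustrative choices. Truncating the infinite sum is safe here because, with bounded rewards, the neglected tail is at most $\gamma^H r_{\max}/(1-\gamma)$.

```python
GAMMA = 0.9
GOAL = 3


def delta(s, a):
    """Deterministic corridor transition; the goal cell is absorbing."""
    if s == GOAL:
        return s
    return max(s - 1, 0) if a == "left" else min(s + 1, GOAL)


def reward(s, a):
    """+100 for stepping into the goal, 0 otherwise."""
    return 100 if s != GOAL and delta(s, a) == GOAL else 0


def policy_value(policy, s, gamma=GAMMA, horizon=200):
    """Approximate V^pi(s) = sum_i gamma**i * r_{t+i+1} by following policy from s."""
    value, discount = 0.0, 1.0
    for _ in range(horizon):
        a = policy[s]
        value += discount * reward(s, a)  # discount == gamma**i at step i
        discount *= gamma
        s = delta(s, a)
    return value


always_right = {s: "right" for s in range(4)}
always_left = {s: "left" for s in range(4)}
print([round(policy_value(always_right, s), 6) for s in range(4)])  # [81.0, 90.0, 100.0, 0.0]
```

`always_left` scores 0 in every state because it never reaches the goal, so `always_right` is at least as good everywhere.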

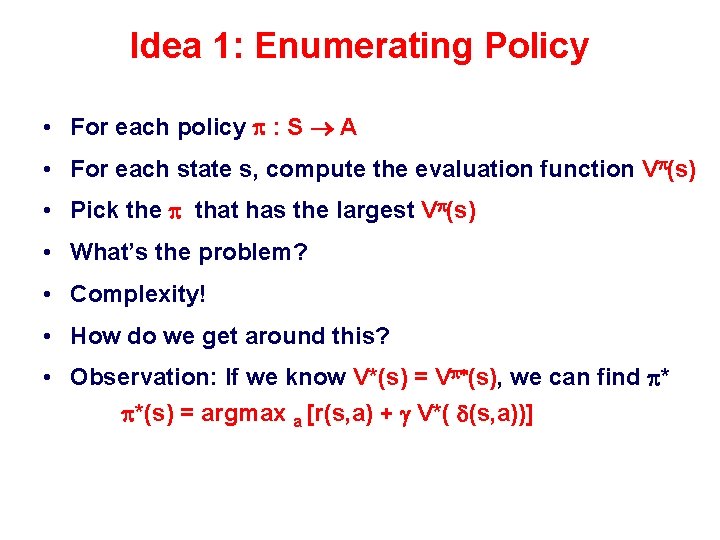

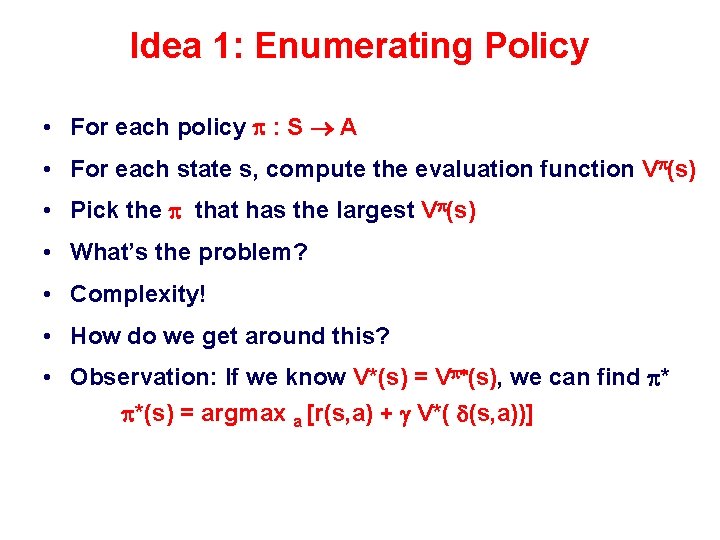

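Idea 1: Enumerating Policies • For each policy $\pi: S \to A$ • For each state $s$, compute the evaluation function $V^\pi(s)$ • Pick the $\pi$ that has the largest $V^\pi(s)$ • What’s the problem? • Complexity! There are $|A|^{|S|}$ deterministic policies, so enumeration grows exponentially with the number of states • How do we get around this? • Observation: if we know $V^*(s) = V^{\pi^*}(s)$, we can find $\pi^*$: $\pi^*(s) = \arg\max_a \left[ r(s,a) + \gamma V^*(\delta(s,a)) \right]$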
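The brute-force idea, and why it does not scale, fit in a few lines. This sketch assumes a hypothetical four-cell corridor (cell 3 is an absorbing goal worth +100 on entry, and $\gamma = 0.9$). It evaluates all $|A|^{|S|} = 2^4 = 16$ deterministic policies with `itertools.product`.

Scoring each policy by the sum of its values over start states is a convenience chosen here. It is safe because a finite MDP always has a policy that is optimal in every state simultaneously, so any policy with the largest sum must match it state by state.

```python
from itertools import product

GAMMA = 0.9
STATES = [0, 1, 2, 3]
ACTIONS = ["left", "right"]
GOAL = 3


def delta(s, a):
    if s == GOAL:
        return s
    return max(s - 1, 0) if a == "left" else min(s + 1, GOAL)


def reward(s, a):
    return 100 if s != GOAL and delta(s, a) == GOAL else 0


def policy_value(policy, s, horizon=200):
    """Truncated discounted sum of rewards obtained by following policy from s."""
    value, discount = 0.0, 1.0
    for _ in range(horizon):
        a = policy[s]
        value += discount * reward(s, a)
        discount *= GAMMA
        s = delta(s, a)
    return value


def best_policy_by_enumeration():
    """Evaluate every deterministic policy pi: S -> A and keep the best one."""
    best, best_values = None, None
    for choice in product(ACTIONS, repeat=len(STATES)):  # |A|**|S| candidates
        policy = dict(zip(STATES, choice))
        values = [policy_value(policy, s) for s in STATES]
        if best_values is None or sum(values) > sum(best_values):
            best, best_values = policy, values
    return best, best_values


n_policies = len(ACTIONS) ** len(STATES)
pi_star, v_star = best_policy_by_enumeration()
print(n_policies, pi_star)
```

An 8-cell corridor would already mean $2^8 = 256$ policies, each evaluated from every state. That exponential growth is the “Complexity!” problem the slide points to.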

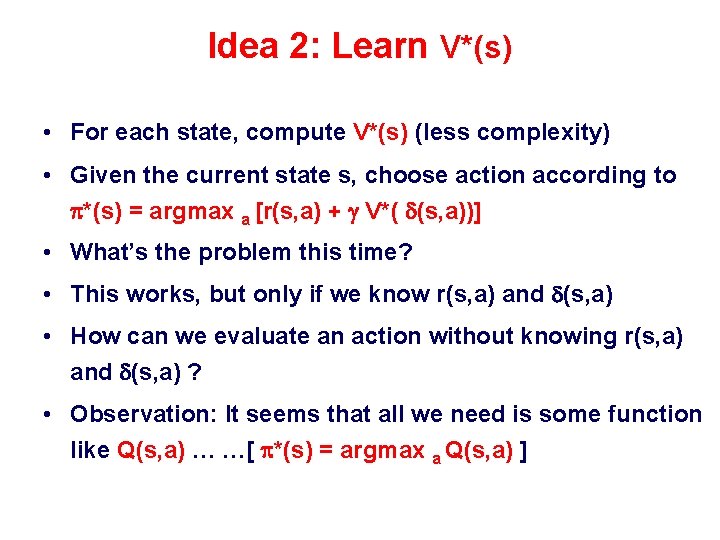

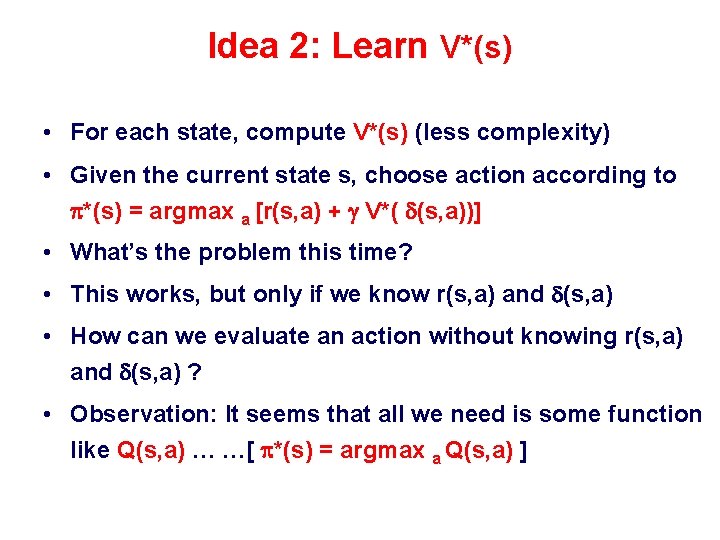

Idea 2: Learn $V^*(s)$ • For each state, compute $V^*(s)$ (less complexity) • Given the current state $s$, choose the action according to $\pi^*(s) = \arg\max_a \left[ r(s,a) + \gamma V^*(\delta(s,a)) \right]$ • What’s the problem this time? • This works, but only if we know $r(s,a)$ and $\delta(s,a)$ • How can we evaluate an action without knowing $r(s,a)$ and $\delta(s,a)$? • Observation: it seems that all we need is some function like $Q(s,a)$, so that $\pi^*(s) = \arg\max_a Q(s,a)$
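One standard dynamic-programming way to compute $V^*$ is value iteration; the slide does not say which method is intended. The sketch below uses a hypothetical four-cell corridor (cell 3 is an absorbing goal worth +100 on entry, and $\gamma = 0.9$). It works in two steps:

1. Iterate $V(s) \leftarrow \max_a [r(s,a) + \gamma V(\delta(s,a))]$ until the values stop changing.
2. Extract $\pi^*$ greedily from the result.

Both steps call `reward` and `delta`. That is exactly the dependence on a known model that the slide flags as the problem.

```python
GAMMA = 0.9
STATES = [0, 1, 2, 3]
ACTIONS = ["left", "right"]
GOAL = 3


def delta(s, a):
    if s == GOAL:
        return s
    return max(s - 1, 0) if a == "left" else min(s + 1, GOAL)


def reward(s, a):
    return 100 if s != GOAL and delta(s, a) == GOAL else 0


def value_iteration(tol=1e-9):
    """Repeat V(s) <- max_a [r(s,a) + gamma * V(delta(s,a))] until it stops changing."""
    V = {s: 0.0 for s in STATES}
    while True:
        new_V = {s: max(reward(s, a) + GAMMA * V[delta(s, a)] for a in ACTIONS)
                 for s in STATES}
        if max(abs(new_V[s] - V[s]) for s in STATES) < tol:
            return new_V
        V = new_V


def greedy_policy(V):
    """pi*(s) = argmax_a [r(s,a) + gamma * V*(delta(s,a))]; requires r and delta."""
    return {s: max(ACTIONS, key=lambda a: reward(s, a) + GAMMA * V[delta(s, a)])
            for s in STATES}


V_star = value_iteration()
pi_star = greedy_policy(V_star)
print(V_star, pi_star)
```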

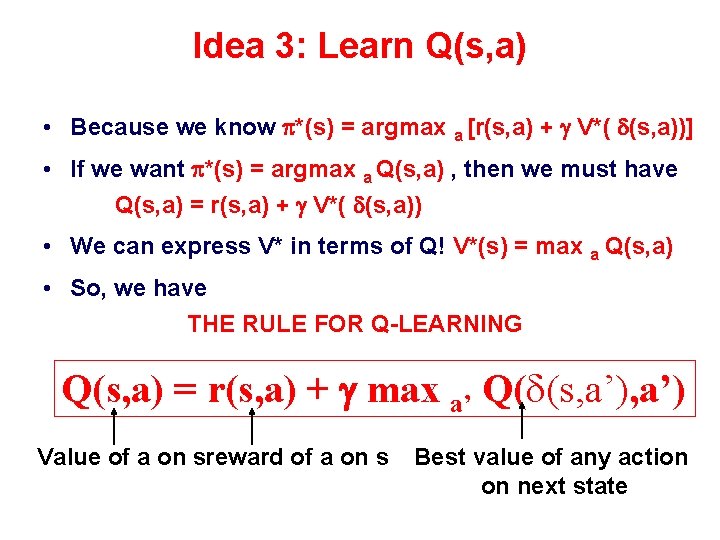

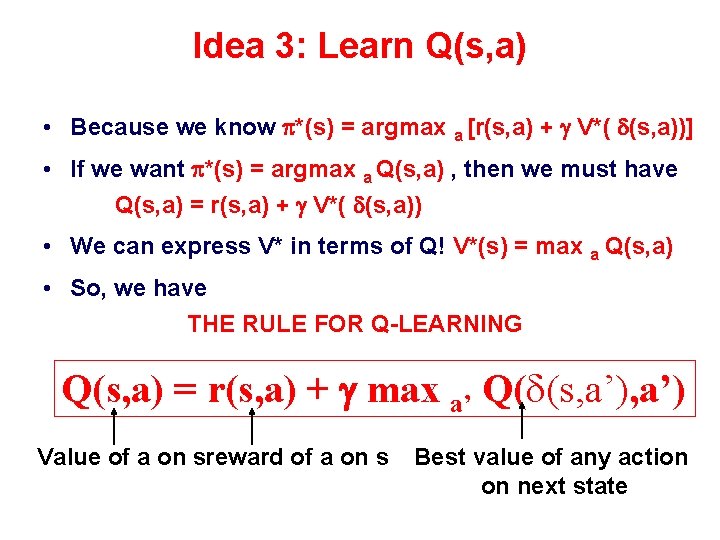

Idea 3: Learn $Q(s,a)$ • Because we know $\pi^*(s) = \arg\max_a \left[ r(s,a) + \gamma V^*(\delta(s,a)) \right]$ • If we want $\pi^*(s) = \arg\max_a Q(s,a)$, then we must have $Q(s,a) = r(s,a) + \gamma V^*(\delta(s,a))$ • We can express $V^*$ in terms of $Q$! $V^*(s) = \max_a Q(s,a)$ • So, we have THE RULE FOR Q-LEARNING: $Q(s,a) = r(s,a) + \gamma \max_{a'} Q(\delta(s,a), a')$, that is, value of $a$ in $s$ = reward of $a$ in $s$ + $\gamma$ × best value of any action in the next state
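The two identities on this slide can be checked numerically. This sketch assumes a hypothetical four-cell corridor MDP (cell 3 is an absorbing goal, entering it pays +100, and $\gamma = 0.9$). Its optimal values can be worked out by hand:

- $V^* = 100$ one step from the goal.
- $V^* = 0.9 \cdot 100 = 90$ two steps away.
- $V^* = 0.9^2 \cdot 100 = 81$ three steps away.
- $V^* = 0$ at the goal.

The code builds $Q(s,a) = r(s,a) + \gamma V^*(\delta(s,a))$ and asserts both $V^*(s) = \max_a Q(s,a)$ and the Q-learning rule.

```python
import math

GAMMA = 0.9
STATES = [0, 1, 2, 3]
ACTIONS = ["left", "right"]
GOAL = 3


def delta(s, a):
    if s == GOAL:
        return s
    return max(s - 1, 0) if a == "left" else min(s + 1, GOAL)


def reward(s, a):
    return 100 if s != GOAL and delta(s, a) == GOAL else 0


# Optimal values worked out by hand for this corridor.
V_STAR = {0: 81.0, 1: 90.0, 2: 100.0, 3: 0.0}

# Q(s, a) = r(s, a) + gamma * V*(delta(s, a))
Q = {(s, a): reward(s, a) + GAMMA * V_STAR[delta(s, a)]
     for s in STATES for a in ACTIONS}

# Check 1: V*(s) = max_a Q(s, a)
for s in STATES:
    assert math.isclose(max(Q[s, a] for a in ACTIONS), V_STAR[s])

# Check 2: Q(s, a) = r(s, a) + gamma * max_a' Q(delta(s, a), a')
for (s, a), q in Q.items():
    target = reward(s, a) + GAMMA * max(Q[delta(s, a), b] for b in ACTIONS)
    assert math.isclose(q, target)

print(Q[0, "left"], Q[0, "right"])
```

Check 2 mentions only $Q$, $r$, and $\delta$, with no $V^*$. This is why $Q$ can be learned directly from experienced transitions.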

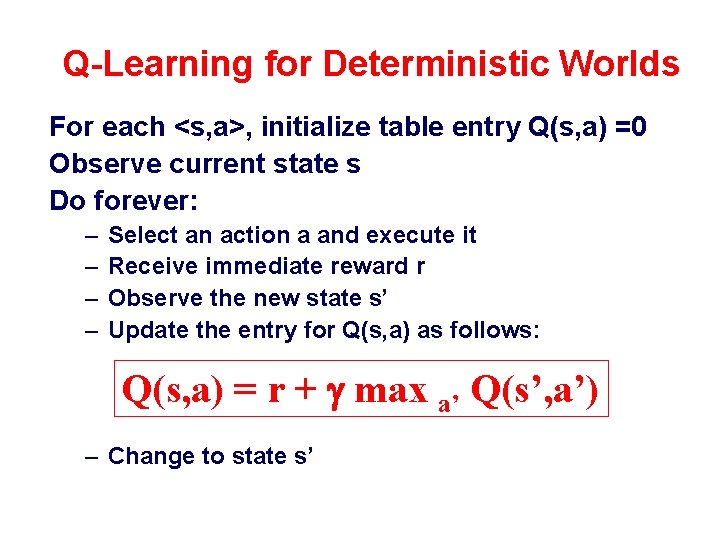

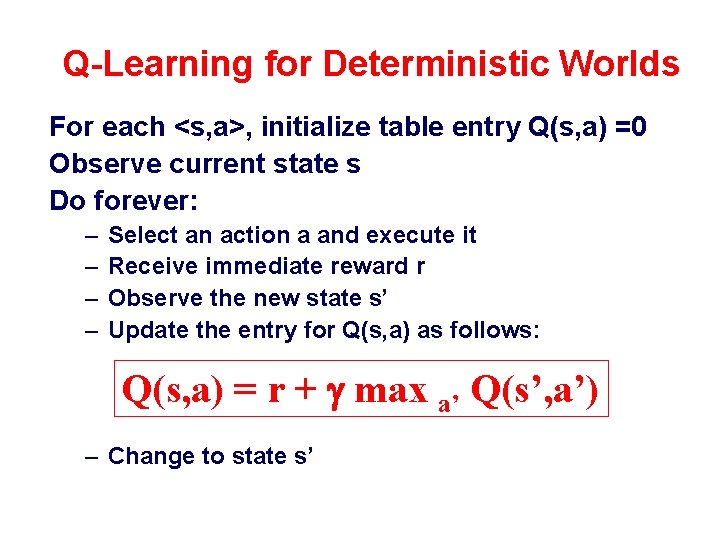

Q-Learning for Deterministic Worlds • For each $\langle s, a \rangle$, initialize the table entry $\hat{Q}(s,a) = 0$ • Observe the current state $s$ • Do forever: – Select an action $a$ and execute it – Receive immediate reward $r$ – Observe the new state $s'$ – Update the table entry for $\hat{Q}(s,a)$ as follows: $\hat{Q}(s,a) \leftarrow r + \gamma \max_{a'} \hat{Q}(s',a')$ – Change to state $s'$ ($s \leftarrow s'$)
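A direct Python transcription of the loop on this slide, under stated assumptions:

- The environment is a hypothetical four-cell corridor (cell 3 is an absorbing goal worth +100 on entry, and $\gamma = 0.9$).
- “Select an action” is implemented as a uniformly random choice.
- “Do forever” is cut off after `num_steps` steps.
- Reaching the goal restarts the agent in a random non-goal cell.

The learner treats `reward` and `delta` purely as a black-box environment. It only uses the `r` and `s'` they return.

```python
import random

GAMMA = 0.9
STATES = [0, 1, 2, 3]
ACTIONS = ["left", "right"]
GOAL = 3  # last cell, so STATES[:-1] are the non-goal cells


def delta(s, a):
    """Environment transition (the learner only sees its output)."""
    if s == GOAL:
        return s
    return max(s - 1, 0) if a == "left" else min(s + 1, GOAL)


def reward(s, a):
    """Environment reward: +100 for entering the goal, 0 otherwise."""
    return 100 if s != GOAL and delta(s, a) == GOAL else 0


def q_learning(num_steps=5000, seed=0):
    rng = random.Random(seed)
    # For each <s, a>, initialize the table entry Q(s, a) = 0.
    Q = {(s, a): 0.0 for s in STATES for a in ACTIONS}
    s = rng.choice(STATES[:-1])               # observe the current state
    for _ in range(num_steps):
        a = rng.choice(ACTIONS)                # select an action and execute it
        r, s_next = reward(s, a), delta(s, a)  # receive r, observe s'
        # Deterministic-world update: Q(s, a) <- r + gamma * max_a' Q(s', a')
        Q[s, a] = r + GAMMA * max(Q[s_next, b] for b in ACTIONS)
        # Change to state s' (restarting once the absorbing goal is reached).
        s = s_next if s_next != GOAL else rng.choice(STATES[:-1])
    return Q


Q = q_learning()
greedy = {s: max(ACTIONS, key=lambda a: Q[s, a]) for s in STATES[:-1]}
print(greedy)
```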

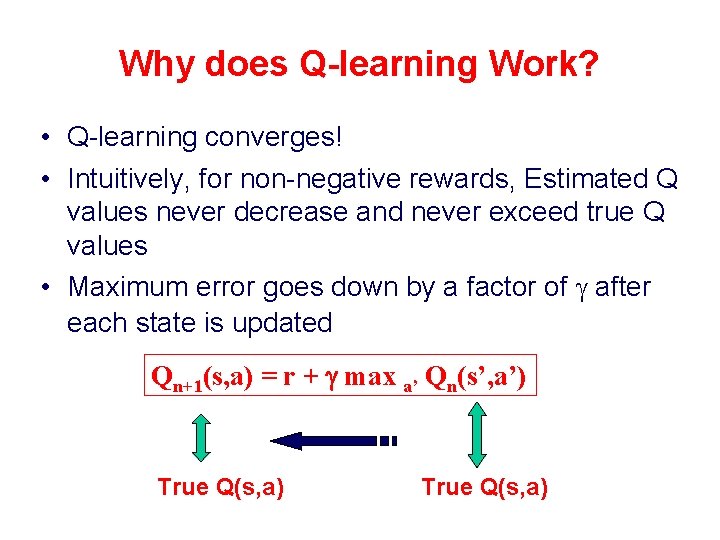

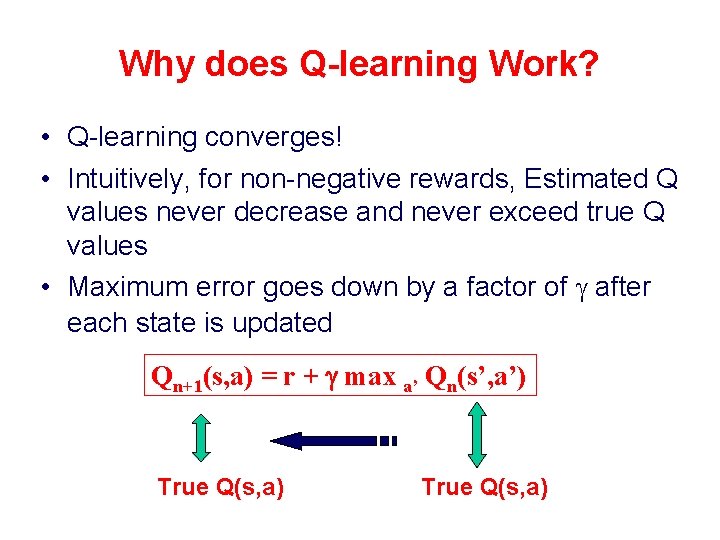

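Why Does Q-Learning Work? • Q-learning converges! (for a deterministic MDP with bounded rewards, provided every state-action pair is visited infinitely often) • Intuitively, for non-negative rewards and an all-zero initial table, the estimates $\hat{Q}_n$ never decrease and never exceed the true $Q$ values • The maximum error goes down by at least a factor of $\gamma$ over each interval in which every state-action pair has been updated at least once: compare the update $\hat{Q}_{n+1}(s,a) = r + \gamma \max_{a'} \hat{Q}_n(s',a')$ with the true $Q(s,a) = r + \gamma \max_{a'} Q(s',a')$, which gives $|\hat{Q}_{n+1}(s,a) - Q(s,a)| \le \gamma \max_{a'} |\hat{Q}_n(s',a') - Q(s',a')|$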
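The contraction argument can be observed on a small example. This sketch assumes a hypothetical four-cell corridor (cell 3 is an absorbing goal worth +100 on entry, and $\gamma = 0.9$) whose true $Q$ values are known in closed form.

The code updates every state-action pair once per sweep, which is one “interval” in the argument. After each sweep it records the maximum error $\max_{s,a} |\hat{Q}(s,a) - Q(s,a)|$. Each recorded error should be at most $\gamma$ times the previous one.

```python
GAMMA = 0.9
STATES = [0, 1, 2, 3]
ACTIONS = ["left", "right"]
GOAL = 3


def delta(s, a):
    if s == GOAL:
        return s
    return max(s - 1, 0) if a == "left" else min(s + 1, GOAL)


def reward(s, a):
    return 100 if s != GOAL and delta(s, a) == GOAL else 0


# Worked out by hand: V*(s) = 100 * gamma**(k - 1) for a cell k steps from the goal.
V_STAR = {0: 81.0, 1: 90.0, 2: 100.0, 3: 0.0}
Q_TRUE = {(s, a): reward(s, a) + GAMMA * V_STAR[delta(s, a)]
          for s in STATES for a in ACTIONS}


def max_error(Q_hat):
    return max(abs(Q_hat[k] - Q_TRUE[k]) for k in Q_TRUE)


Q_hat = {k: 0.0 for k in Q_TRUE}
errors = [max_error(Q_hat)]
for sweep in range(6):
    for s in STATES:
        for a in ACTIONS:
            # Q_{n+1}(s, a) = r + gamma * max_a' Q_n(s', a'), applied in place
            Q_hat[s, a] = reward(s, a) + GAMMA * max(Q_hat[delta(s, a), b] for b in ACTIONS)
    errors.append(max_error(Q_hat))
print([round(e, 3) for e in errors])  # [100.0, 90.0, 81.0, 72.9, 0.0, 0.0, 0.0]
```

In this run the bound holds with equality for the first three sweeps, and the table is exact after the fourth.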

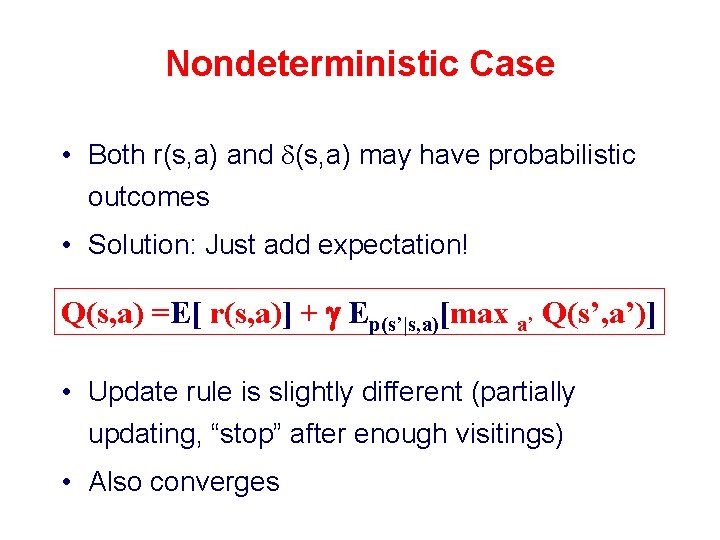

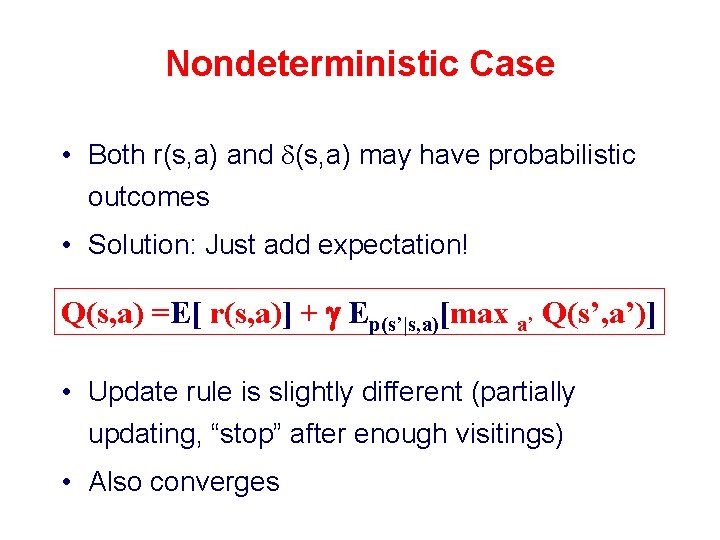

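Nondeterministic Case • Both $r(s,a)$ and $\delta(s,a)$ may have probabilistic outcomes • Solution: just add expectations! $Q(s,a) = E[r(s,a)] + \gamma\, E_{p(s' \mid s,a)}\left[\max_{a'} Q(s',a')\right]$ • The update rule is slightly different: each update moves the estimate only partway toward the new target, with a step size that shrinks so that updates effectively “stop” after enough visits • Also converges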
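This is a minimal sketch of the nondeterministic variant. It assumes the decaying step size $\alpha = 1/(1 + \text{visits}(s,a))$ used in Mitchell’s textbook, so each update moves $\hat{Q}(s,a)$ only part of the way toward the sampled target $r + \gamma \max_{a'} \hat{Q}(s',a')$.

The following are all hypothetical choices for this sketch:

- A slippery corridor in which each move fails with probability 0.2.
- $\gamma = 0.5$, smaller than elsewhere so the demo converges quickly.
- All function names.

`expected_q` uses the model only to compute reference values for comparison. The learner sees nothing but sampled transitions.

```python
import random

GAMMA = 0.5       # hypothetical; a smaller discount keeps this demo quick to converge
P_SUCCESS = 0.8   # hypothetical: with probability 0.2 an action "slips" and the agent stays put
STATES = [0, 1, 2, 3]
ACTIONS = ["left", "right"]
GOAL = 3          # absorbing goal; entering it pays +100


def intended(s, a):
    """Where the action would lead if it does not slip."""
    return max(s - 1, 0) if a == "left" else min(s + 1, GOAL)


def sample_step(s, a, rng):
    """Nondeterministic environment: sample (r, s') for taking a in s."""
    s_next = intended(s, a) if rng.random() < P_SUCCESS else s
    r = 100 if s_next == GOAL else 0
    return r, s_next


def q_learning_stochastic(num_steps=60000, seed=0):
    rng = random.Random(seed)
    Q = {(s, a): 0.0 for s in STATES for a in ACTIONS}
    visits = {key: 0 for key in Q}
    s = rng.choice(STATES[:-1])              # start in a random non-goal cell
    for _ in range(num_steps):
        a = rng.choice(ACTIONS)               # explore uniformly at random
        r, s_next = sample_step(s, a, rng)
        visits[s, a] += 1
        alpha = 1.0 / (1 + visits[s, a])      # shrinking step size
        target = r + GAMMA * max(Q[s_next, b] for b in ACTIONS)
        Q[s, a] = (1 - alpha) * Q[s, a] + alpha * target  # partial update
        s = s_next if s_next != GOAL else rng.choice(STATES[:-1])
    return Q


def expected_q(iterations=200):
    """Reference solution of Q(s,a) = E[r] + gamma * E[max_a' Q(s',a')] using the known model."""
    Q = {(s, a): 0.0 for s in STATES for a in ACTIONS}
    for _ in range(iterations):
        new_Q = {}
        for s in STATES:
            for a in ACTIONS:
                if s == GOAL:
                    new_Q[s, a] = 0.0        # nothing more to earn after the goal
                    continue
                value = 0.0
                for s_next, p in ((intended(s, a), P_SUCCESS), (s, 1 - P_SUCCESS)):
                    r = 100 if s_next == GOAL else 0
                    value += p * (r + GAMMA * max(Q[s_next, b] for b in ACTIONS))
                new_Q[s, a] = value
        Q = new_Q
    return Q


Q_hat, Q_ref = q_learning_stochastic(), expected_q()
for s in STATES[:-1]:
    print(s, {a: (round(Q_hat[s, a], 1), round(Q_ref[s, a], 1)) for a in ACTIONS})
```

Replacing the partial update with the deterministic overwrite `Q[s, a] = target` would make each entry jump to whatever the latest random sample happened to be. The shrinking step size instead averages the samples.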

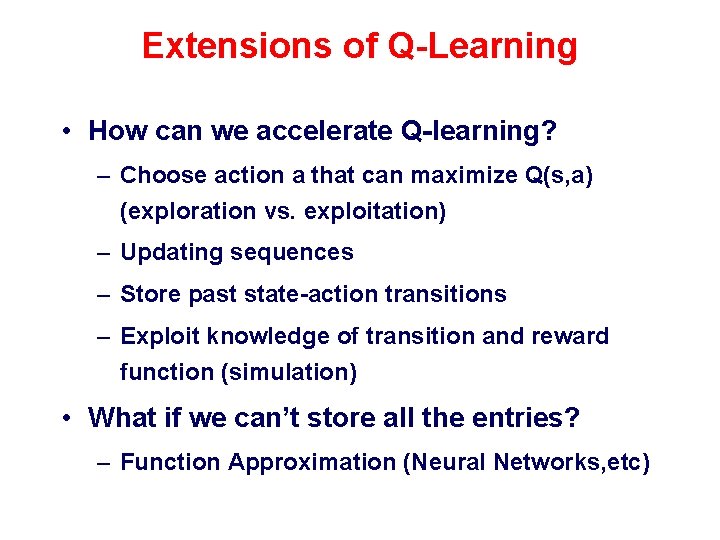

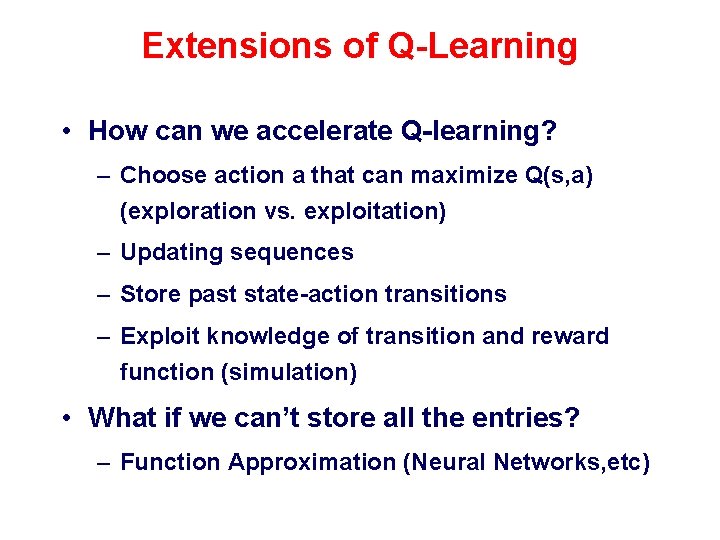

Extensions of Q-Learning • How can we accelerate Q-learning? – Choose actions that maximize $Q(s,a)$ while still exploring (exploration vs. exploitation) – Update along sequences of transitions – Store past state-action transitions and reuse them – Exploit knowledge of the transition and reward functions (simulation) • What if we can’t store all the entries? – Function approximation (neural networks, etc.)
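A common way to handle the exploration-versus-exploitation trade-off is ε-greedy action selection. It is a standard technique swapped in here, since the slide does not name a specific scheme. The function name `epsilon_greedy`, the `epsilon` parameter, and the two-entry Q table are illustrative.

```python
import random


def epsilon_greedy(Q, s, actions, epsilon, rng):
    """Explore with probability epsilon, otherwise exploit argmax_a Q(s, a)."""
    if rng.random() < epsilon:
        return rng.choice(actions)           # explore: any action, uniformly
    best = max(Q[s, a] for a in actions)
    # Exploit, breaking ties at random so equally valued actions all get tried.
    return rng.choice([a for a in actions if Q[s, a] == best])


Q = {(0, "left"): 72.9, (0, "right"): 81.0}
rng = random.Random(0)
picks = [epsilon_greedy(Q, 0, ["left", "right"], 0.1, rng) for _ in range(1000)]
share_right = picks.count("right") / len(picks)
print(share_right)
```

With `epsilon = 0.1` and two actions, the greedy action should be picked about 95% of the time: 90% from exploitation plus half of the 10% spent exploring. Decaying `epsilon` over time shifts the balance from exploration toward exploitation.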

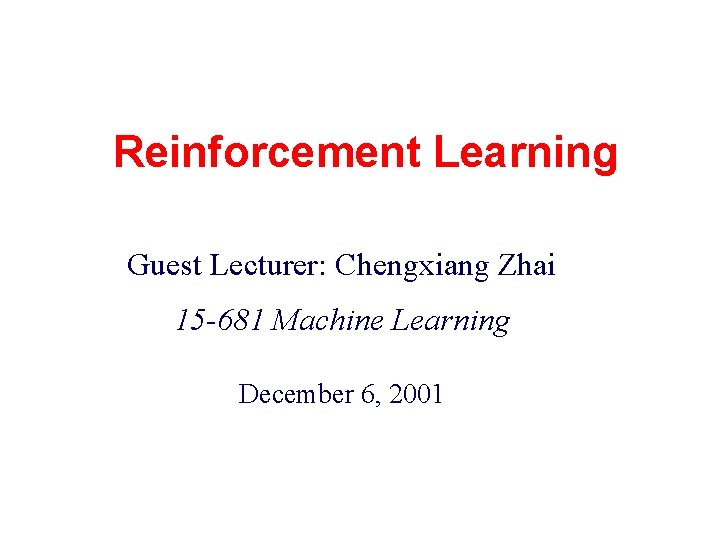

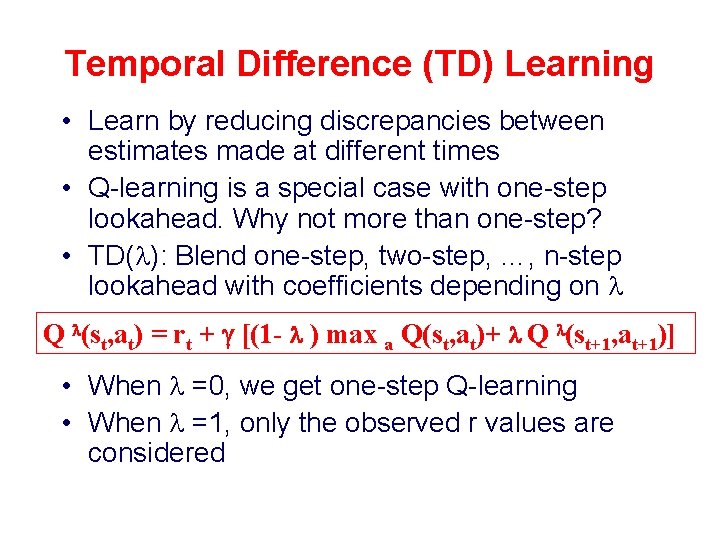

Temporal Difference (TD) Learning • Learn by reducing discrepancies between estimates made at different times • Q-learning is a special case with one-step lookahead. Why not more than one step? • TD($\lambda$): blend one-step, two-step, …, $n$-step lookahead with coefficients depending on $\lambda$: $Q^\lambda(s_t,a_t) = r_{t+1} + \gamma \left[ (1-\lambda) \max_a Q(s_{t+1},a) + \lambda\, Q^\lambda(s_{t+1},a_{t+1}) \right]$ • When $\lambda = 0$, we get one-step Q-learning • When $\lambda = 1$, only the observed $r$ values are considered
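The recursive definition can be evaluated backwards over a recorded episode. This sketch makes two assumptions:

- The episode is stored as `(s_t, a_t, r_{t+1})` triples plus the final state.
- After the last step, the recursion bootstraps with $\max_a Q(s_T, a)$. This is not on the slide.

The example is a hypothetical four-cell corridor episode 0 → 1 → 2 → 3 that ends at an absorbing goal worth +100, starting from a fresh all-zero table. The name `lambda_returns` is illustrative.

```python
def lambda_returns(transitions, final_state, Q, actions, gamma, lam):
    """Backward computation of Q^lambda(s_t, a_t) for each step of one episode.

    transitions: list of (s_t, a_t, r_{t+1}) triples; final_state: s_T.
    Q^lambda(s_t,a_t) = r_{t+1} + gamma * [(1 - lam) * max_a Q(s_{t+1}, a)
                                           + lam * Q^lambda(s_{t+1}, a_{t+1})]
    Assumption: past the last step, Q^lambda is replaced by max_a Q(s_T, a).
    """
    def best(s):
        return max(Q[s, a] for a in actions)

    returns = [0.0] * len(transitions)
    next_state, next_return = final_state, best(final_state)
    for t in reversed(range(len(transitions))):
        s, a, r = transitions[t]
        returns[t] = r + gamma * ((1 - lam) * best(next_state) + lam * next_return)
        next_state, next_return = s, returns[t]
    return returns


actions = ["left", "right"]
Q = {(s, a): 0.0 for s in range(4) for a in actions}  # fresh, all-zero table
episode = [(0, "right", 0), (1, "right", 0), (2, "right", 100)]
print(lambda_returns(episode, 3, Q, actions, 0.9, 0.0))  # [0.0, 0.0, 100.0]
print(lambda_returns(episode, 3, Q, actions, 0.9, 1.0))  # [81.0, 90.0, 100.0]
```

The two settings behave very differently:

- With $\lambda = 0$, only the last step sees the reward, as in one-step Q-learning.
- With $\lambda = 1$, the observed reward propagates back through the whole episode in a single pass, using only the observed $r$ values.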

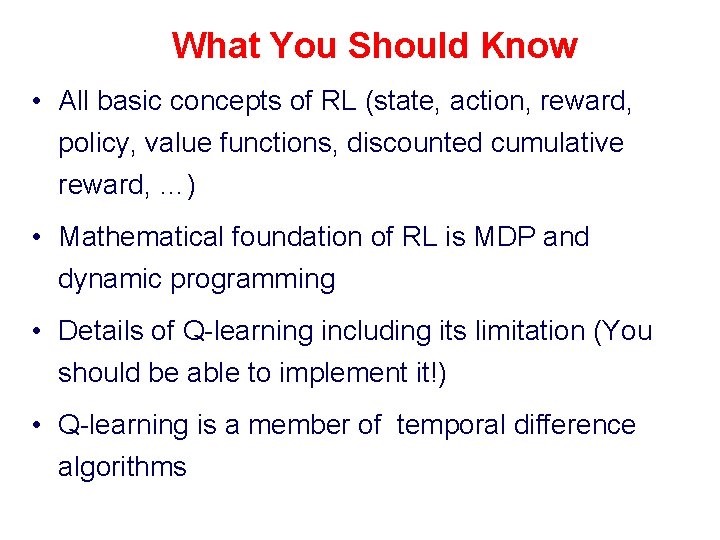

What You Should Know • All basic concepts of RL (state, action, reward, policy, value functions, discounted cumulative reward, …) • The mathematical foundation of RL is MDPs and dynamic programming • Details of Q-learning, including its limitations (you should be able to implement it!) • Q-learning is a member of the family of temporal difference algorithms
