Regression. Usman Roshan, CS 675 Machine Learning


Regression
• Same problem as classification, except that the target variable $y_i$ is continuous.
• Popular solutions
  – Linear regression (perceptron)
  – Support vector regression
  – Logistic regression (for regression)

Linear regression
• Suppose target values are generated by a function $y_i = f(x_i) + e_i$.
• We will estimate $f(x_i)$ by $g(x_i, \theta)$.
• Suppose each $e_i$ is generated by a Gaussian distribution with mean 0 and variance $\sigma^2$ (the same variance for all $e_i$).
• This implies that the probability of $y_i$ given the input $x_i$ and parameters $\theta$, denoted $p(y_i \mid x_i, \theta)$, is normally distributed with mean $g(x_i, \theta)$ and variance $\sigma^2$.

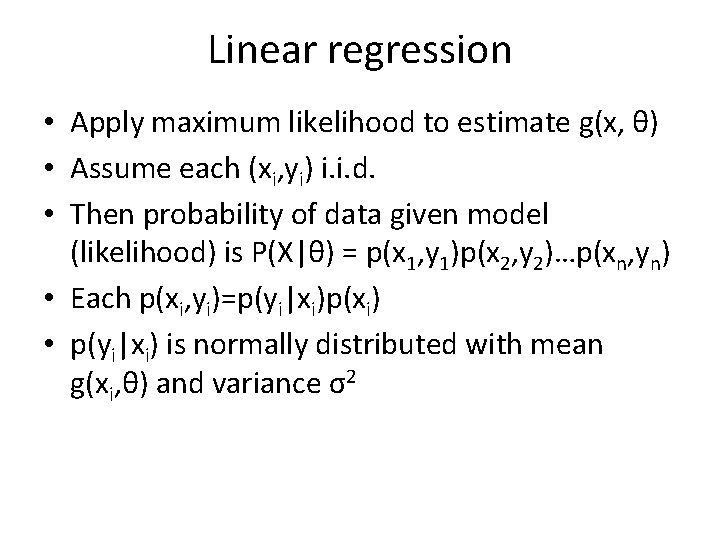

Linear regression
• Apply maximum likelihood to estimate $g(x, \theta)$.
• Assume the pairs $(x_i, y_i)$ are i.i.d.
• Then the probability of the data given the model (the likelihood) is $P(X \mid \theta) = p(x_1, y_1)\, p(x_2, y_2) \cdots p(x_n, y_n)$.
• Each $p(x_i, y_i) = p(y_i \mid x_i)\, p(x_i)$.
• $p(y_i \mid x_i)$ is normally distributed with mean $g(x_i, \theta)$ and variance $\sigma^2$.

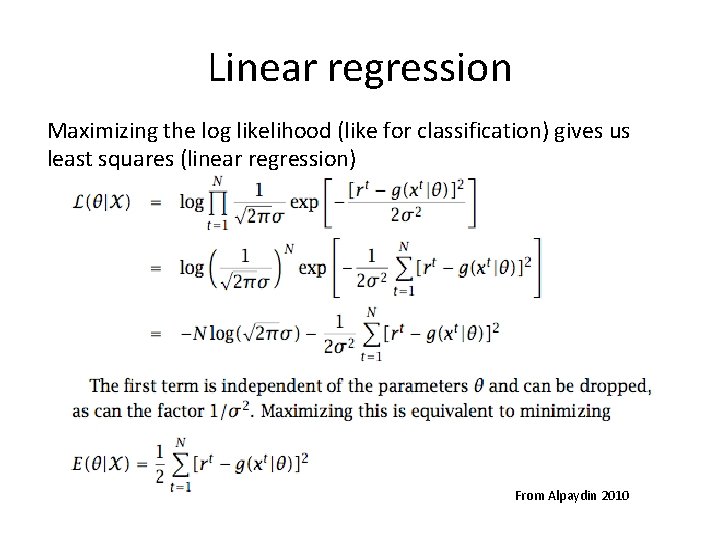

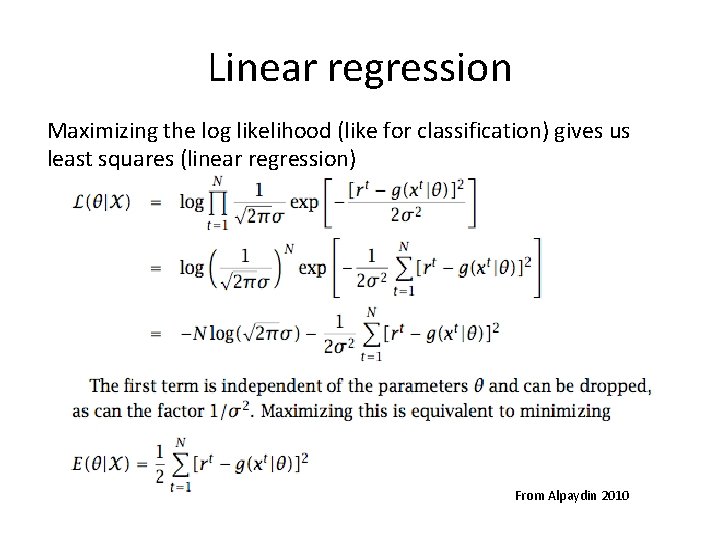

Linear regression
• Maximizing the log likelihood (as for classification) gives us least squares (linear regression).
• From Alpaydin 2010
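The step from maximum likelihood to least squares can be written out explicitly. The following is a sketch under the assumptions stated on the earlier slides (i.i.d. samples, Gaussian noise with a fixed variance $\sigma^2$); the symbol $\mathcal{L}(\theta)$ for the log likelihood is introduced here for illustration.

```latex
\begin{align*}
\mathcal{L}(\theta)
  &= \log \prod_{i=1}^{n} p(y_i \mid x_i, \theta)\, p(x_i) \\
  &= \sum_{i=1}^{n} \log\!\left[ \frac{1}{\sqrt{2\pi\sigma^2}}
       \exp\!\left( -\frac{(y_i - g(x_i, \theta))^2}{2\sigma^2} \right) \right]
     + \sum_{i=1}^{n} \log p(x_i) \\
  &= -\frac{n}{2} \log(2\pi\sigma^2)
     - \frac{1}{2\sigma^2} \sum_{i=1}^{n} (y_i - g(x_i, \theta))^2
     + \sum_{i=1}^{n} \log p(x_i)
\end{align*}
```

Only the middle term depends on $\theta$, so maximizing $\mathcal{L}(\theta)$ is equivalent to minimizing the sum of squared errors $\sum_i (y_i - g(x_i, \theta))^2$.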

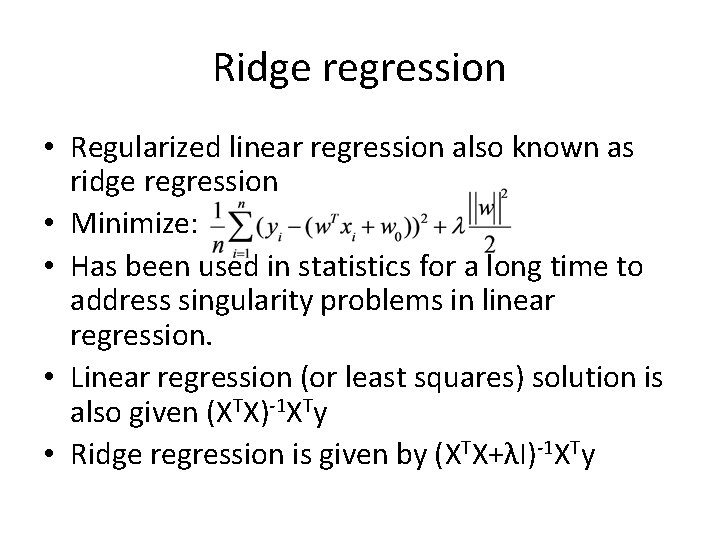

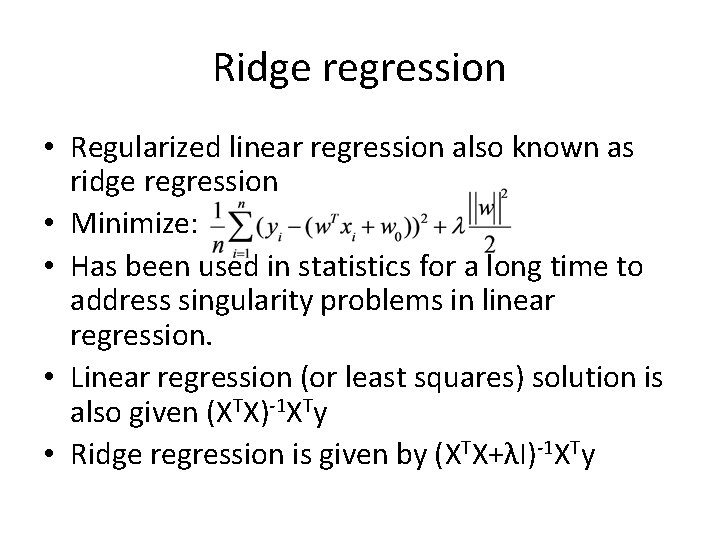

Ridge regression
• Regularized linear regression is also known as ridge regression.
• Minimize: $\sum_i (y_i - w^T x_i)^2 + \lambda \|w\|^2$
• Has long been used in statistics to address singularity problems in linear regression.
• The linear regression (least squares) solution is given by $(X^T X)^{-1} X^T y$.
• The ridge regression solution is given by $(X^T X + \lambda I)^{-1} X^T y$.
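The two closed-form solutions above can be sketched in NumPy. This is a minimal illustration, not a reference implementation: the function name `ridge_fit`, the synthetic data, and the omission of an intercept term (the data are assumed to be centered) are all assumptions made for the example.

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Return w = (X^T X + lam * I)^{-1} X^T y."""
    d = X.shape[1]
    A = X.T @ X + lam * np.eye(d)
    # Solve the linear system rather than forming the inverse explicitly.
    return np.linalg.solve(A, X.T @ y)

# Synthetic data (hypothetical): 50 samples, 3 features, small Gaussian noise.
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))
w_true = np.array([1.0, -2.0, 0.5])
y = X @ w_true + 0.1 * rng.normal(size=50)

w_ols = ridge_fit(X, y, lam=0.0)     # lam = 0 recovers ordinary least squares
w_ridge = ridge_fit(X, y, lam=10.0)  # lam > 0 shrinks the weights toward zero
```

With `lam > 0` the matrix `X.T @ X + lam * I` is always invertible, which is why ridge regression remains usable when `X.T @ X` is singular (for example, when there are more features than samples).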

Logistic regression
• Similar to the linear regression derivation.
• Here we predict with the sigmoid function instead of a linear function.
• We still minimize the sum of squares between the predicted and actual values.
• The output $y_i$ is constrained to the range [0, 1].
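A minimal sketch of this idea: fit a sigmoid of a linear function by minimizing the squared error. The slides do not specify an optimizer, so plain gradient descent is assumed here; the function names, learning rate, step count, and synthetic data are all hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fit_sigmoid_least_squares(X, y, lr=0.5, steps=2000):
    """Gradient descent on the mean of (y_i - sigmoid(w.x_i + w0))^2."""
    n, d = X.shape
    w = np.zeros(d)
    w0 = 0.0
    for _ in range(steps):
        p = sigmoid(X @ w + w0)
        # Derivative of (y - p)^2 with respect to z = w.x + w0,
        # using dp/dz = p * (1 - p).
        g = -2.0 * (y - p) * p * (1.0 - p)
        w -= lr * (X.T @ g) / n
        w0 -= lr * g.mean()
    return w, w0

def mse(X, y, w, w0):
    return np.mean((y - sigmoid(X @ w + w0)) ** 2)

# Synthetic targets in (0, 1), generated from a known sigmoid model.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 2))
y = sigmoid(X @ np.array([2.0, -1.0]) + 0.5)

w, w0 = fit_sigmoid_least_squares(X, y)
pred = sigmoid(X @ w + w0)  # every prediction lies in [0, 1]
```

Unlike the squared error of a linear model, this objective is not convex in `w`, so gradient descent is only guaranteed to find a local minimum.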

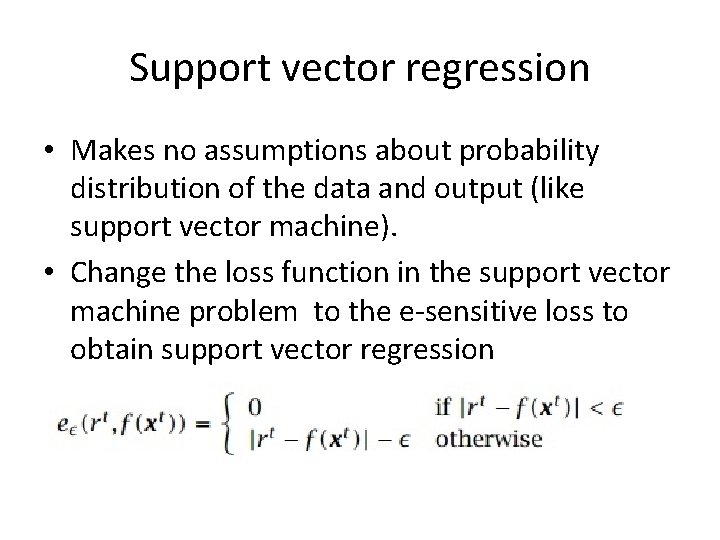

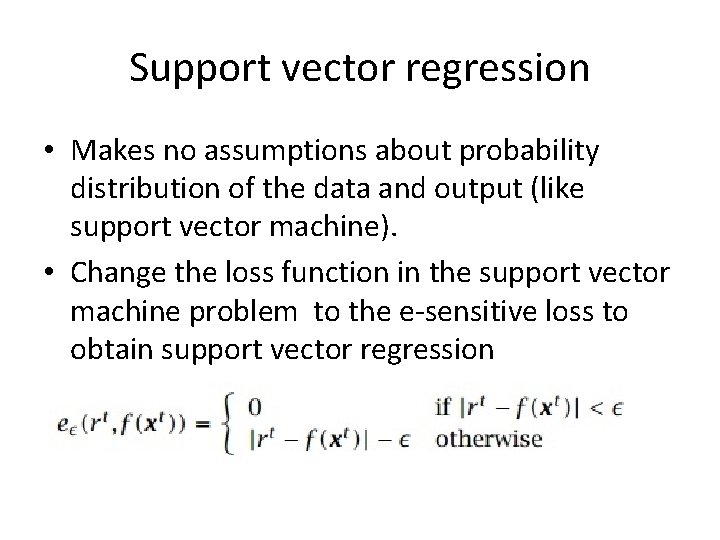

Support vector regression
• Makes no assumptions about the probability distribution of the data and output (like the support vector machine).
• Change the loss function in the support vector machine problem to the ε-insensitive loss to obtain support vector regression.

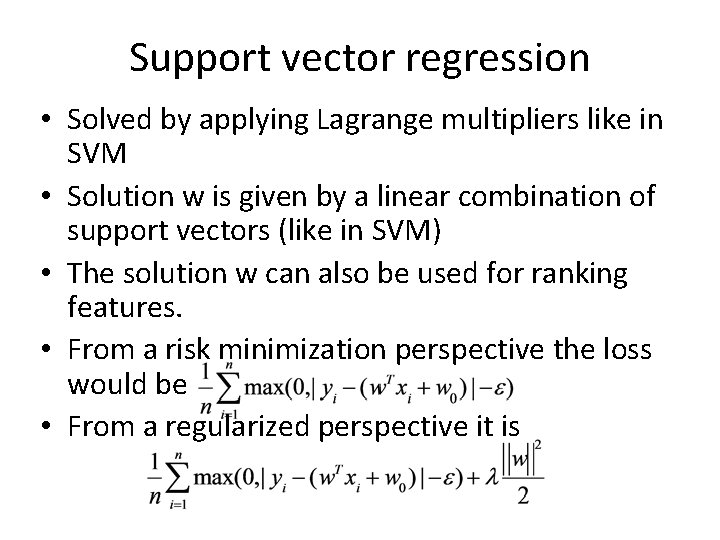

Support vector regression
• Solved by applying Lagrange multipliers, as in SVM.
• The solution w is given by a linear combination of support vectors (as in SVM).
• The solution w can also be used for ranking features.
• From a risk minimization perspective the loss would be:
• From a regularized perspective it is:
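The loss expressions themselves do not survive in the slide text, so the sketch below uses the standard textbook form of the ε-insensitive loss and of the regularized linear SVR objective; it may differ in detail from the slide's figures. The names `eps`, `C`, and both function names are assumptions for illustration.

```python
import numpy as np

def eps_insensitive_loss(y, y_pred, eps):
    """max(0, |y - y_pred| - eps): errors inside the eps-tube cost nothing."""
    return np.maximum(0.0, np.abs(y - y_pred) - eps)

def svr_objective(w, w0, X, y, C, eps):
    """Regularized view: 0.5 * ||w||^2 + C * sum of eps-insensitive losses."""
    losses = eps_insensitive_loss(y, X @ w + w0, eps)
    return 0.5 * (w @ w) + C * losses.sum()

y = np.array([1.0, 2.0, 3.0])
y_pred = np.array([1.05, 2.5, 2.0])
losses = eps_insensitive_loss(y, y_pred, eps=0.1)
# The first error (0.05) is inside the tube, so its loss is zero;
# the other two are penalized only by the amount they exceed eps.
```

Because errors smaller than `eps` contribute nothing, only the points on or outside the tube end up as support vectors in the solution.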

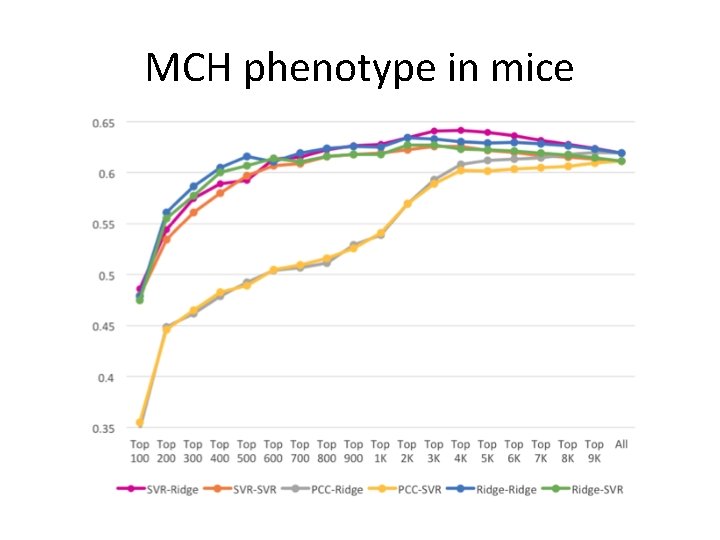

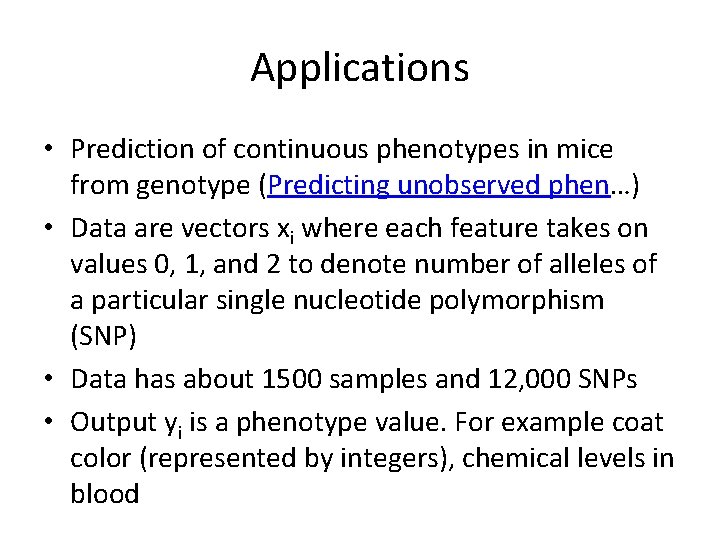

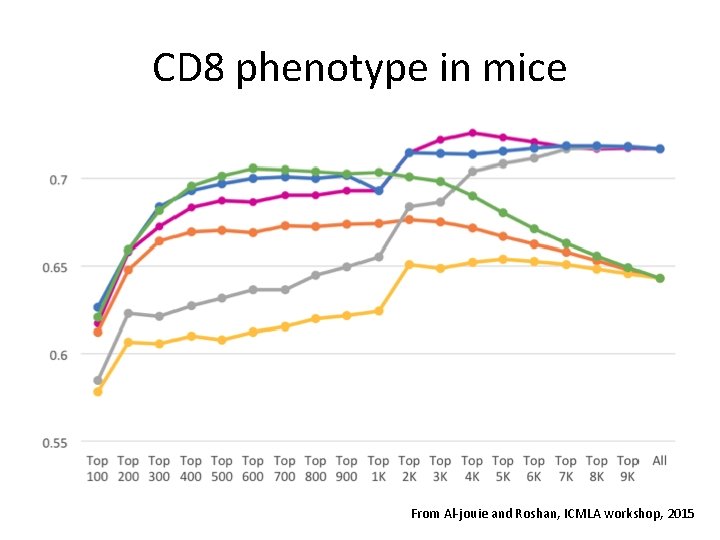

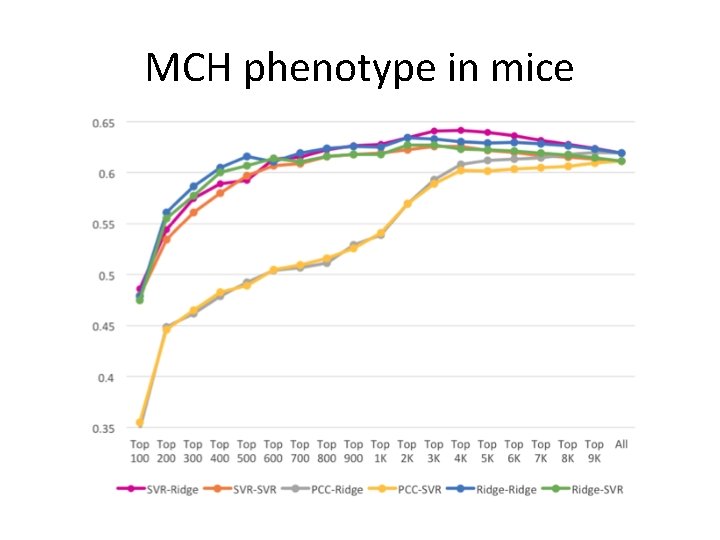

Applications
• Prediction of continuous phenotypes in mice from genotype (Predicting unobserved phen…)
• Data are vectors $x_i$ where each feature takes on the values 0, 1, and 2 to denote the number of alleles of a particular single nucleotide polymorphism (SNP).
• Data has about 1500 samples and 12,000 SNPs.
• Output $y_i$ is a phenotype value, for example coat color (represented by integers) or chemical levels in blood.

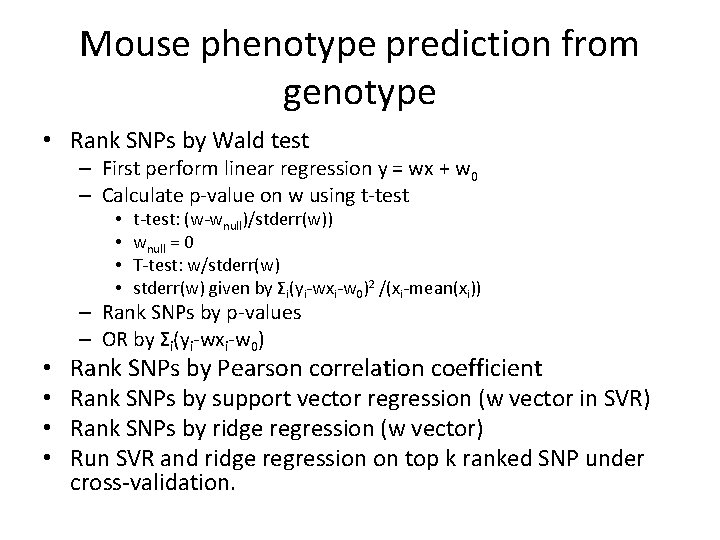

Mouse phenotype prediction from genotype
• Rank SNPs by Wald test
  – First perform linear regression $y = wx + w_0$
  – Calculate the p-value on $w$ using a t-test
    • t-statistic: $(w - w_{null}) / \mathrm{stderr}(w)$; with $w_{null} = 0$ this is $w / \mathrm{stderr}(w)$
    • For $n$ samples, $\mathrm{stderr}(w) = \sqrt{\dfrac{\sum_i (y_i - w x_i - w_0)^2 / (n - 2)}{\sum_i (x_i - \mathrm{mean}(x))^2}}$
  – Rank SNPs by p-values
  – OR by the residual sum of squares $\sum_i (y_i - w x_i - w_0)^2$
• Rank SNPs by Pearson correlation coefficient
• Rank SNPs by support vector regression (w vector in SVR)
• Rank SNPs by ridge regression (w vector)
• Run SVR and ridge regression on the top k ranked SNPs under cross-validation.
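The Wald-test ranking can be sketched as one simple regression per SNP, computed for all SNPs at once. This is an illustration on synthetic genotypes, not the pipeline used in the study: the function name, the data, and the planted causal SNP (index 7) are hypothetical. Every SNP is assumed to vary across samples; a constant column would cause a division by zero. SNPs are ranked by |t| rather than by p-value, which gives the same order because every SNP shares the same number of samples.

```python
import numpy as np

def wald_t_statistics(G, y):
    """Per-SNP simple regression y = w * x + w0.

    G: (n_samples, n_snps) genotype matrix with entries 0, 1, 2.
    y: (n_samples,) phenotype values.
    Returns the slopes w and the t-statistics w / stderr(w).
    """
    n = G.shape[0]
    Gc = G - G.mean(axis=0)        # center each SNP column
    yc = y - y.mean()              # center the phenotype
    sxx = (Gc ** 2).sum(axis=0)    # sum_i (x_i - mean(x))^2 per SNP
    w = (Gc.T @ yc) / sxx          # least squares slope per SNP
    # Residual sum of squares per SNP: sum (yc - w * xc)^2 = syy - w^2 * sxx
    rss = (yc ** 2).sum() - w ** 2 * sxx
    stderr = np.sqrt(rss / (n - 2) / sxx)
    return w, w / stderr

# Synthetic data: 200 samples, 50 SNPs, phenotype driven by SNP 7.
rng = np.random.default_rng(0)
G = rng.integers(0, 3, size=(200, 50)).astype(float)
y = 1.5 * G[:, 7] + rng.normal(size=200)

w, t = wald_t_statistics(G, y)
ranking = np.argsort(-np.abs(t))   # most significant SNP first
```

For a single feature, the t-statistic is a monotone function of the Pearson correlation $r$, namely $t = r\sqrt{n-2}/\sqrt{1-r^2}$, so ranking by $|t|$ and ranking by $|r|$ give the same order in this univariate setting.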

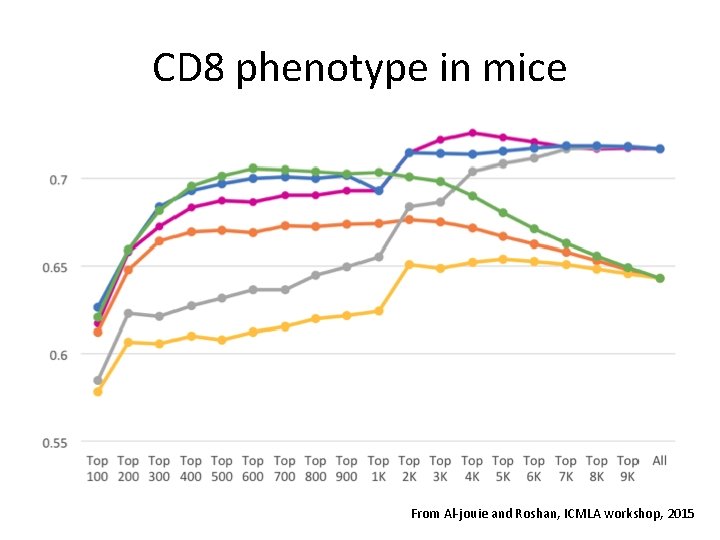

CD8 phenotype in mice (from Al-jouie and Roshan, ICMLA workshop, 2015)

MCH phenotype in mice

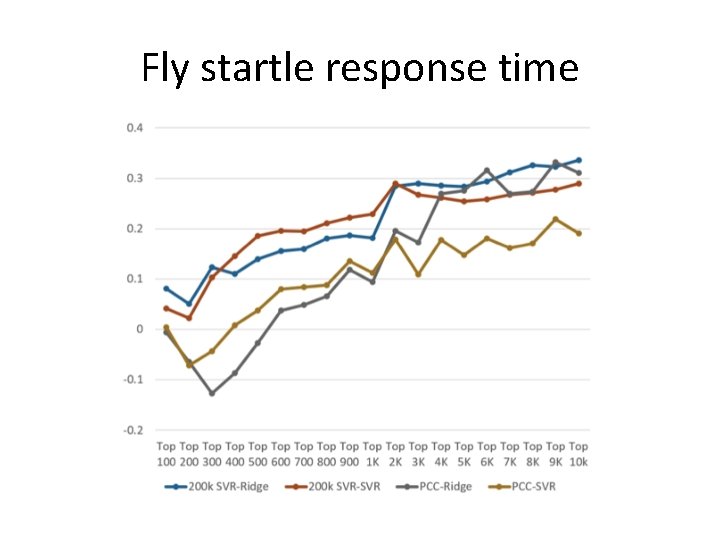

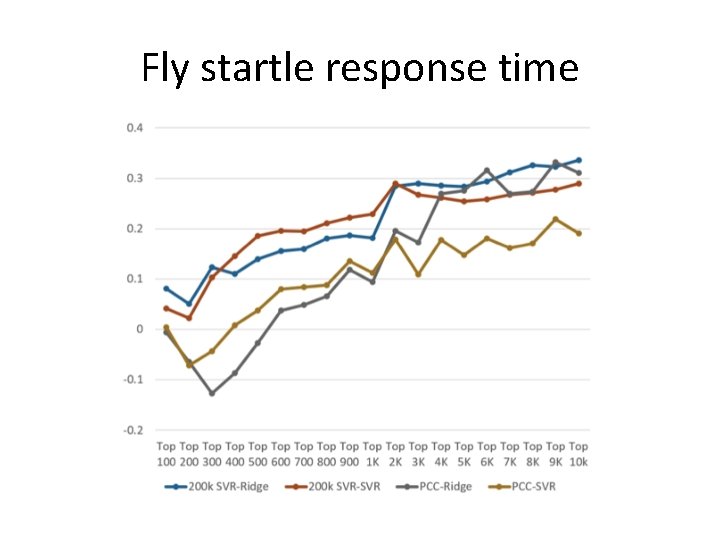

Fly startle response time prediction from genotype
• Same experimental study as previously
• Using whole genome sequence data to predict quantitative trait phenotypes in Drosophila melanogaster
• Data has 155 samples and 2 million SNPs (features)

Fly startle response time

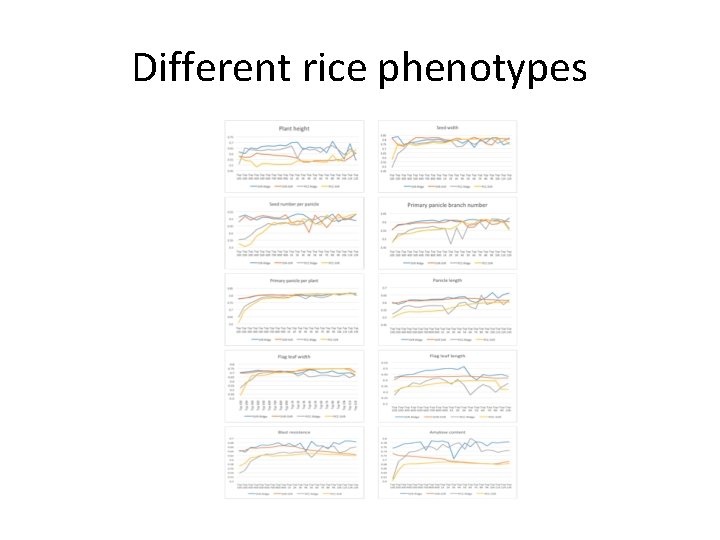

Rice phenotype prediction from genotype
• Same experimental study as previously
• Improving the Accuracy of Whole Genome Prediction for Complex Traits Using the Results of Genome Wide Association Studies
• Data has 413 samples and 36,901 SNPs (features)
• Best linear unbiased prediction (BLUP) method improved by prior SNP knowledge (given in genome-wide association studies)

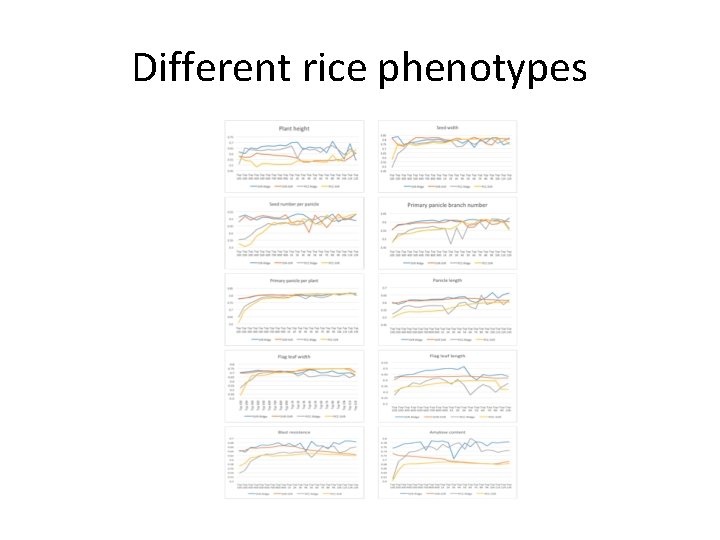

Different rice phenotypes