
- Slides: 15

Regression Methods

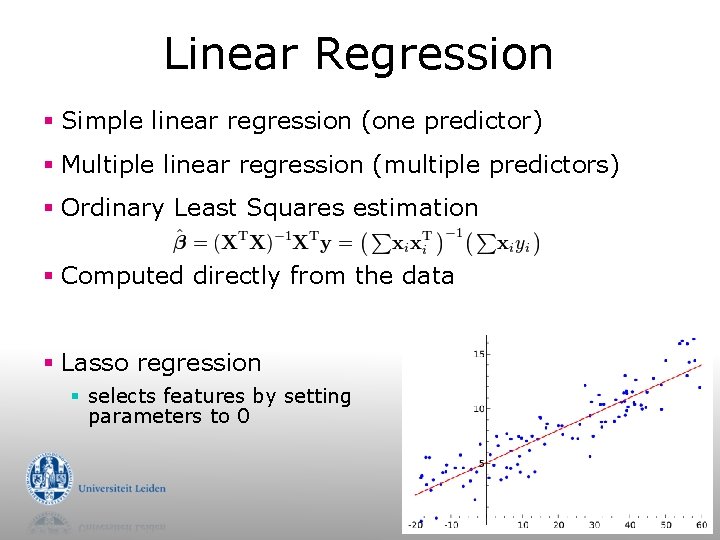

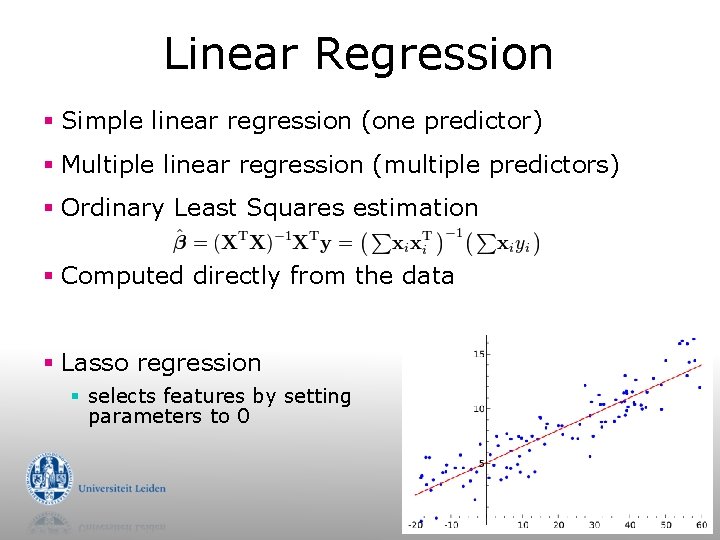

Linear Regression

- Simple linear regression (one predictor)
- Multiple linear regression (multiple predictors)
- Ordinary Least Squares estimation
  - Computed directly from the data
- Lasso regression
  - selects features by setting parameters to 0
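As an illustration of "computed directly from the data", the following minimal sketch fits a simple linear regression (one predictor) with the closed-form least squares solution. The function name `fit_simple_ols` and the toy data are assumptions made for this example; they do not come from the slides.

```python
def fit_simple_ols(xs, ys):
    """Return (slope, intercept) that minimise the sum of squared residuals."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance-like and variance-like sums (the common factor 1/n cancels).
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Toy data (made up for illustration) lying exactly on y = 2x + 1.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]
slope, intercept = fit_simple_ols(xs, ys)
print(slope, intercept)
```

No iterative optimisation is involved: the estimates follow from a handful of sums over the data.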

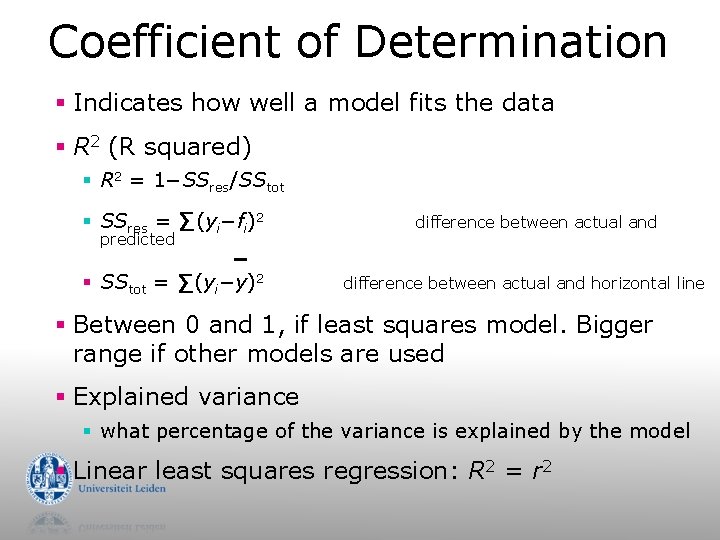

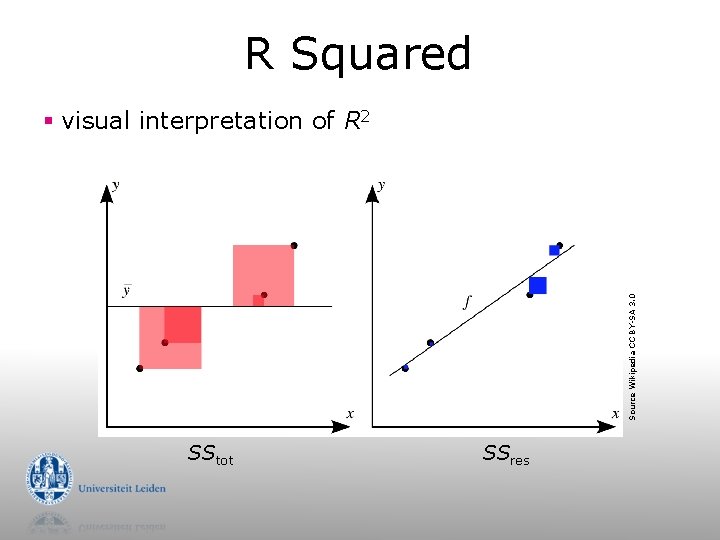

Coefficient of Determination

- Indicates how well a model fits the data
- $R^2$ (R squared)
  - $R^2 = 1 - SS_{res}/SS_{tot}$
  - $SS_{res} = \sum_i (y_i - f_i)^2$: difference between actual and predicted
  - $SS_{tot} = \sum_i (y_i - \bar{y})^2$: difference between actual and horizontal line (the mean $\bar{y}$)
- Between 0 and 1 for a least squares model; the range is larger if other models are used ($R^2$ can become negative)
- Explained variance
  - what percentage of the variance is explained by the model
- Linear least squares regression: $R^2 = r^2$
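The definition can be checked with a few lines of code. This is a minimal sketch; the function name `r_squared` and the toy values are assumptions for illustration. The last call shows why the range is larger for models that are not least squares fits: a model worse than the horizontal line gets a negative score.

```python
def r_squared(actual, predicted):
    """R^2 = 1 - SS_res / SS_tot for paired lists of actual and predicted values."""
    mean_y = sum(actual) / len(actual)
    ss_res = sum((y - f) ** 2 for y, f in zip(actual, predicted))
    ss_tot = sum((y - mean_y) ** 2 for y in actual)
    return 1.0 - ss_res / ss_tot


actual = [1.0, 2.0, 3.0, 4.0]                            # made-up target values
perfect = r_squared(actual, actual)                       # predictions equal the data
baseline = r_squared(actual, [2.5, 2.5, 2.5, 2.5])        # the horizontal line at the mean
reversed_fit = r_squared(actual, [4.0, 3.0, 2.0, 1.0])    # worse than the mean
print(perfect, baseline, reversed_fit)
```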

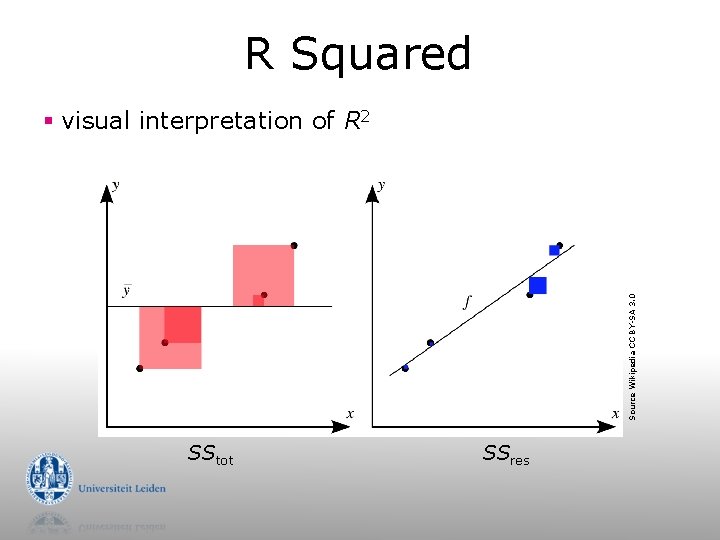

R Squared

- visual interpretation of $R^2$ (figure panels: $SS_{tot}$, $SS_{res}$)

Source: Wikipedia, CC BY-SA 3.0

Regression Trees

- Regression variant of decision tree
- Top-down induction
- 2 options:
  - Constant value in leaf (piecewise constant)
    - regression trees
  - Local linear model in leaf (piecewise linear)
    - model trees

M5 algorithm (Quinlan, Wang)

- M5’, M5P in Weka
  - (classifiers > trees > M5P)
- Offers both regression trees and model trees
- Model trees are default
  - -R option (buildRegressionTree) for piecewise constant

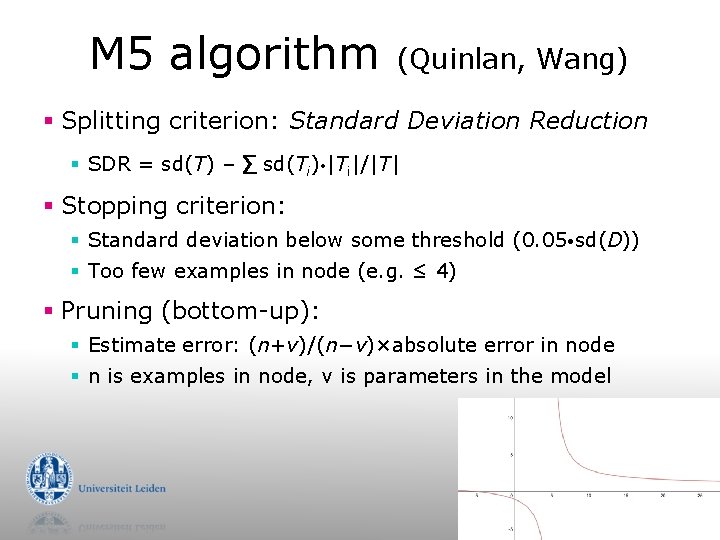

M5 algorithm (Quinlan, Wang)

- Splitting criterion: Standard Deviation Reduction
  - $SDR = sd(T) - \sum_i sd(T_i) \cdot |T_i|/|T|$
- Stopping criterion:
  - Standard deviation below some threshold ($0.05 \cdot sd(D)$)
  - Too few examples in node (e.g. $\le 4$)
- Pruning (bottom-up):
  - Estimate error: $(n+v)/(n-v) \times$ absolute error in node
  - $n$ is the number of examples in the node, $v$ is the number of parameters in the model
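The splitting criterion and the pruning estimate above can be sketched as follows. This is not Weka's M5P code: the function names are hypothetical, and using the population standard deviation (`statistics.pstdev`) is an assumption, since the slide does not say which variant is meant.

```python
from statistics import pstdev


def sdr(parent, subsets):
    """Standard Deviation Reduction: sd(T) - sum_i sd(T_i) * |T_i| / |T|."""
    n = len(parent)
    return pstdev(parent) - sum(pstdev(s) * len(s) / n for s in subsets)


def pruning_error(abs_error, n, v):
    """Error estimate (n + v) / (n - v) * absolute error, for n examples and v parameters."""
    return (n + v) / (n - v) * abs_error


# Made-up target values in one node, with two candidate binary splits.
targets = [1.0, 1.0, 1.0, 5.0, 5.0, 5.0]
clean_split = sdr(targets, [targets[:3], targets[3:]])   # separates the two levels
poor_split = sdr(targets, [targets[:4], targets[4:]])    # mixes them on one side
estimate = pruning_error(abs_error=1.0, n=10, v=2)
print(clean_split, poor_split, estimate)
```

The split with the larger SDR is preferred. The factor $(n+v)/(n-v)$ inflates the error of models that have many parameters relative to the number of examples in the node.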

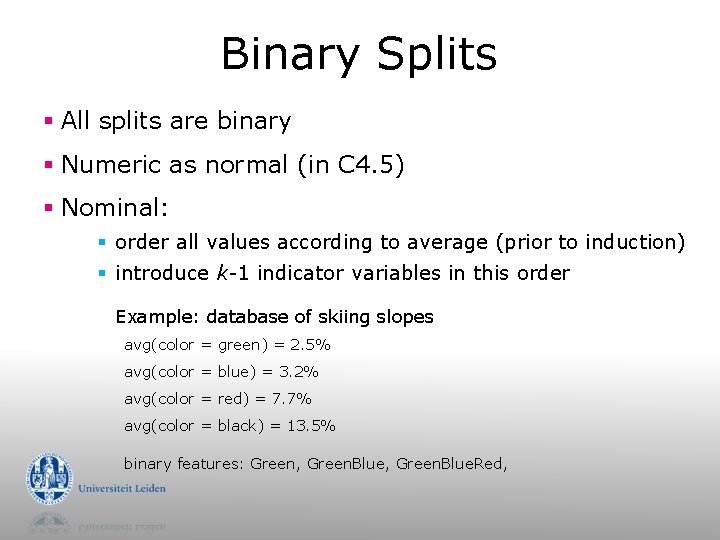

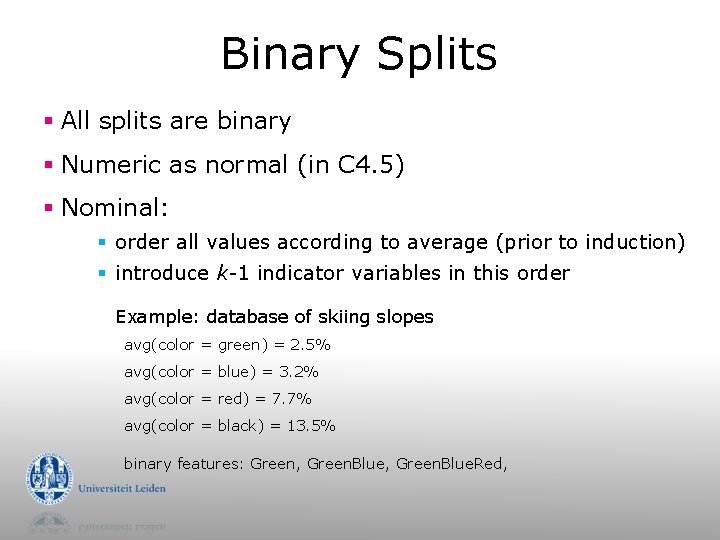

Binary Splits

- All splits are binary
- Numeric as normal (in C4.5)
- Nominal:
  - order all values according to the average target value (prior to induction)
  - introduce k-1 indicator variables in this order

Example: database of skiing slopes

- avg(color = green) = 2.5%
- avg(color = blue) = 3.2%
- avg(color = red) = 7.7%
- avg(color = black) = 13.5%
- binary features: Green, GreenBlueRed,
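One way to read the nominal-attribute recipe is sketched below. The function `ordered_indicators` is hypothetical, and defining indicator i as "value is among the first i values of the ordering" is an assumption; the slide does not spell out the exact definition. The toy data uses one row per colour with the averages from the skiing example as target.

```python
def ordered_indicators(values, targets):
    """Order nominal values by average target and build k-1 binary indicators."""
    sums, counts = {}, {}
    for v, t in zip(values, targets):
        sums[v] = sums.get(v, 0.0) + t
        counts[v] = counts.get(v, 0) + 1
    # Sort the k distinct values by their average target value.
    order = sorted(sums, key=lambda v: sums[v] / counts[v])
    # One indicator per proper prefix of the ordering: k-1 in total.
    prefixes = [set(order[:i]) for i in range(1, len(order))]
    rows = [[int(v in p) for p in prefixes] for v in values]
    return order, rows


colors = ["red", "green", "black", "blue"]
targets = [7.7, 2.5, 13.5, 3.2]
order, rows = ordered_indicators(colors, targets)
print(order)
print(rows)
```

With the values ordered this way, a binary split on any single indicator corresponds to cutting the ordered list of values in two.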

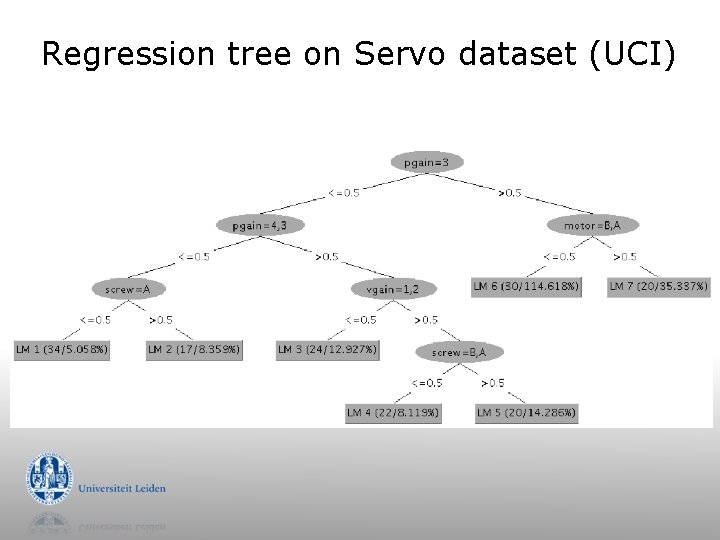

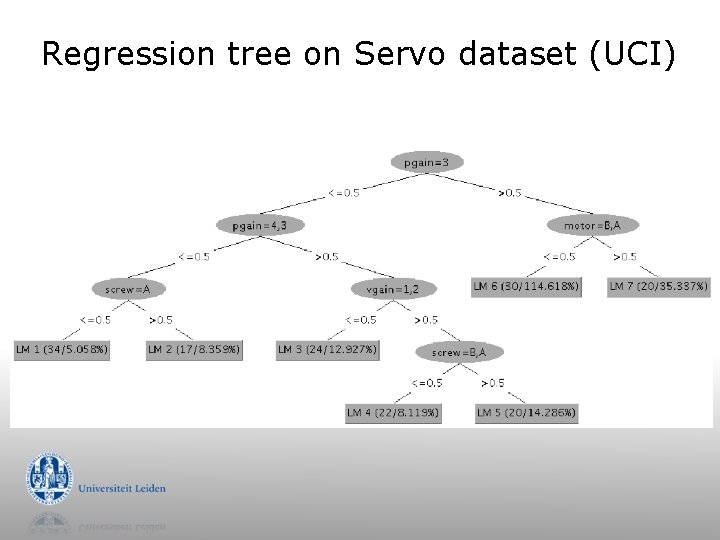

Regression tree on Servo dataset (UCI)

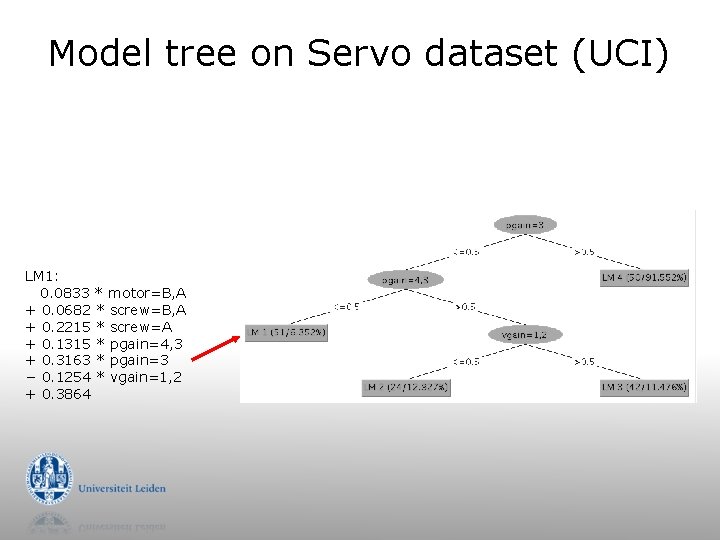

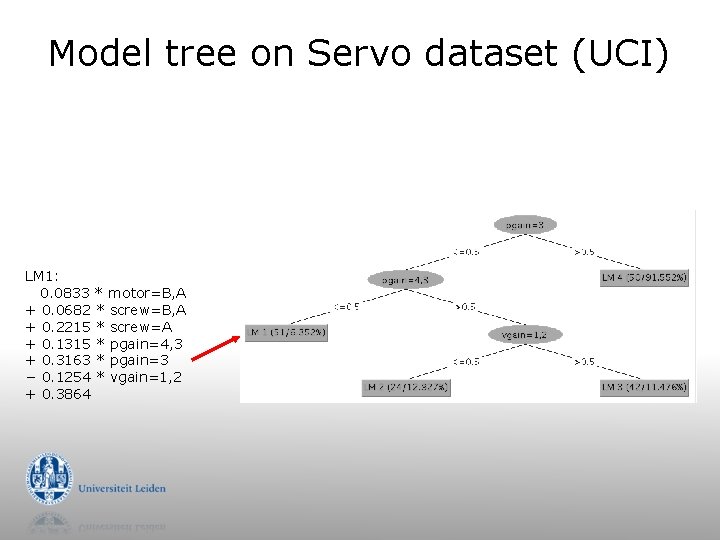

Model tree on Servo dataset (UCI)

LM1: 0.0833 * motor=B,A + 0.0682 * screw=B,A + 0.2215 * screw=A + 0.1315 * pgain=4,3 + 0.3163 * pgain=3 − 0.1254 * vgain=1,2 + 0.3864

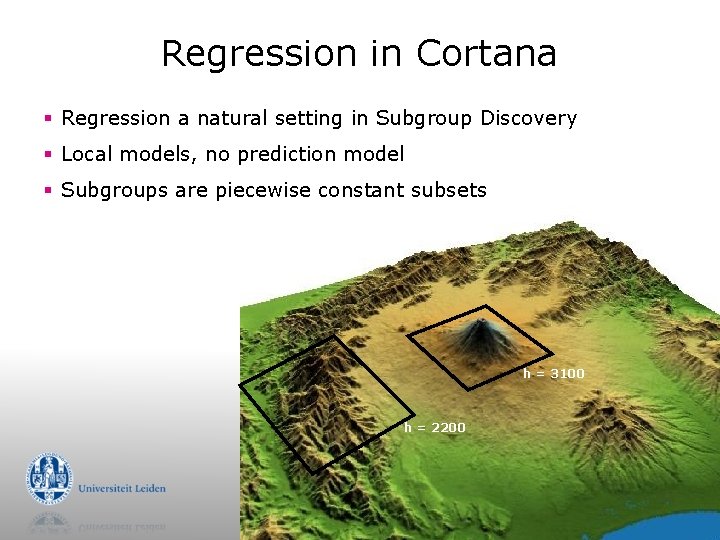

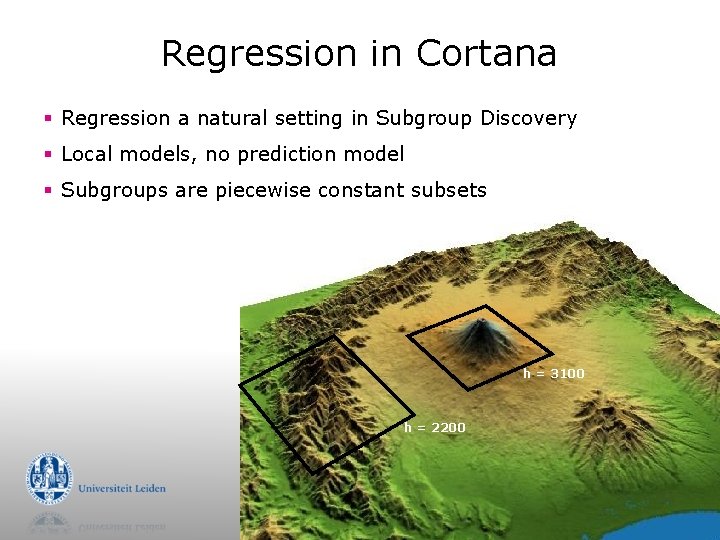

Regression in Cortana

- Regression is a natural setting in Subgroup Discovery
- Local models, no prediction model
- Subgroups are piecewise constant subsets (figure labels: h = 3100, h = 2200)

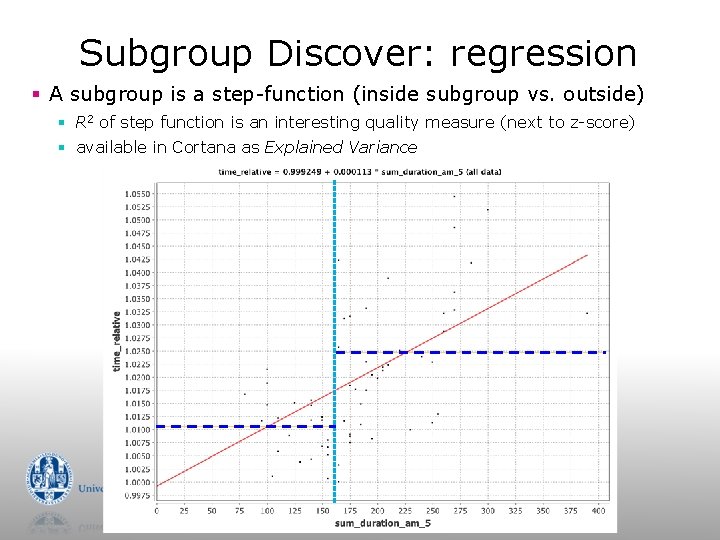

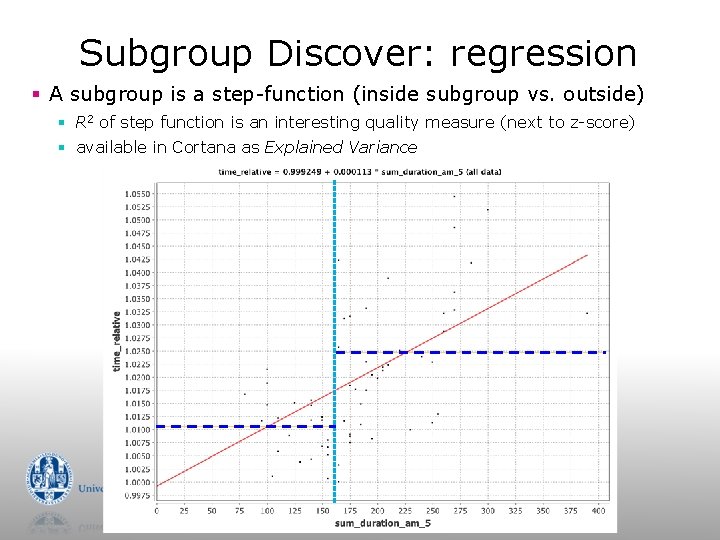

Subgroup Discovery: regression

- A subgroup is a step function (inside subgroup vs. outside)
- $R^2$ of the step function is an interesting quality measure (next to z-score)
  - available in Cortana as Explained Variance
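To make the step-function view concrete, the sketch below predicts the subgroup mean inside the subgroup and the complement mean outside it, then scores that two-level model with $R^2$. It is an illustration only, not Cortana's implementation; the function name and the toy values are made up.

```python
def step_function_r2(targets, in_subgroup):
    """R^2 of the two-level model: subgroup mean inside, complement mean outside."""
    inside = [y for y, m in zip(targets, in_subgroup) if m]
    outside = [y for y, m in zip(targets, in_subgroup) if not m]
    mean_all = sum(targets) / len(targets)
    mean_in = sum(inside) / len(inside)
    mean_out = sum(outside) / len(outside)
    ss_res = sum((y - (mean_in if m else mean_out)) ** 2
                 for y, m in zip(targets, in_subgroup))
    ss_tot = sum((y - mean_all) ** 2 for y in targets)
    return 1.0 - ss_res / ss_tot


# Toy target values; the first three records belong to the subgroup.
targets = [3000.0, 3200.0, 3100.0, 2100.0, 2300.0, 2200.0]
membership = [True, True, True, False, False, False]
quality = step_function_r2(targets, membership)
print(round(quality, 3))
```

A subgroup whose members differ strongly from the rest, while being homogeneous internally, scores close to 1.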

Other regression models

- Functions
  - LinearRegression
  - MultilayerPerceptron (artificial neural network)
  - SMOreg (Support Vector Machine)
- Lazy
  - IBk (k-Nearest Neighbors)
- Rules
  - M5Rules (decision list)

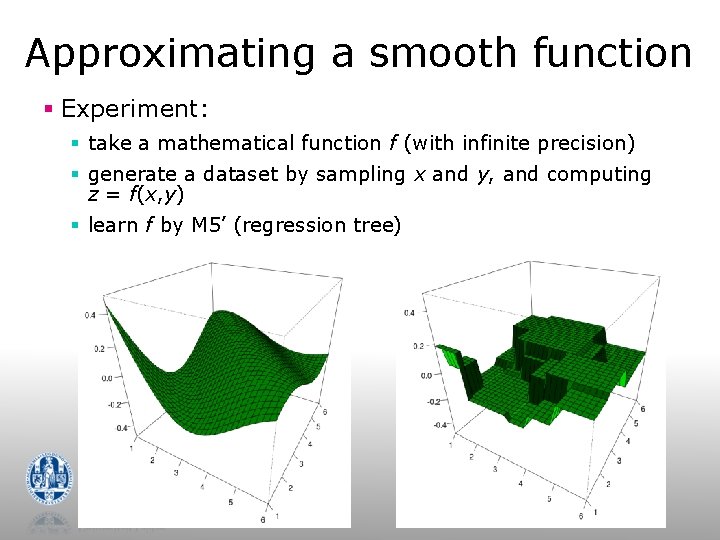

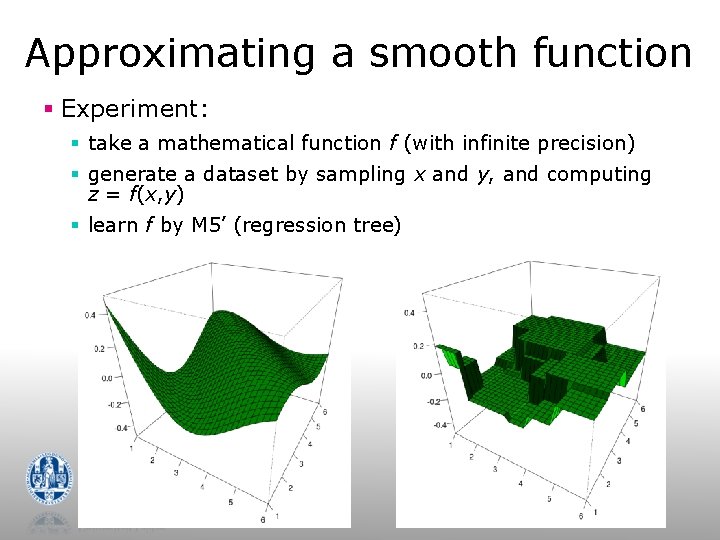

Approximating a smooth function

- Experiment:
  - take a mathematical function f (with infinite precision)
  - generate a dataset by sampling x and y, and computing z = f(x, y)
  - learn f by M5’ (regression tree)
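The experiment can be imitated in a few lines. The sketch below is a plain piecewise-constant regression tree written for this example, not M5’: it has no pruning or smoothing, and it only borrows the idea of choosing the split with the lowest weighted standard deviation. The target function, sampling range, sample sizes, and the `min_size` stopping rule are all assumptions.

```python
import math
import random


def f(x, y):
    # Hypothetical smooth target; the slides do not name the function used.
    return math.sin(x) * math.cos(y)


def sd(values):
    mean = sum(values) / len(values)
    return math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))


def build_tree(rows, min_size=4):
    """rows: list of (x, y, z). Returns a float leaf or (attr, threshold, left, right)."""
    zs = [r[2] for r in rows]
    best = None  # (weighted sd of the two children, attribute index, threshold)
    if len(rows) > min_size:
        for attr in (0, 1):
            values = sorted({r[attr] for r in rows})
            for thr in values[:-1]:  # "<= thr" vs "> thr": both sides are non-empty
                left = [r[2] for r in rows if r[attr] <= thr]
                right = [r[2] for r in rows if r[attr] > thr]
                score = (len(left) * sd(left) + len(right) * sd(right)) / len(rows)
                if best is None or score < best[0]:
                    best = (score, attr, thr)
    if best is None:
        return sum(zs) / len(zs)  # constant value in the leaf
    _, attr, thr = best
    left_rows = [r for r in rows if r[attr] <= thr]
    right_rows = [r for r in rows if r[attr] > thr]
    return (attr, thr, build_tree(left_rows, min_size), build_tree(right_rows, min_size))


def predict(tree, x, y):
    while isinstance(tree, tuple):
        attr, thr, left, right = tree
        tree = left if (x, y)[attr] <= thr else right
    return tree


def sample(n, rng):
    rows = []
    for _ in range(n):
        x, y = rng.uniform(0, 3), rng.uniform(0, 3)
        rows.append((x, y, f(x, y)))
    return rows


rng = random.Random(0)
train, test = sample(300, rng), sample(200, rng)
tree = build_tree(train)

mean_z = sum(r[2] for r in train) / len(train)
tree_mae = sum(abs(predict(tree, x, y) - z) for x, y, z in test) / len(test)
baseline_mae = sum(abs(mean_z - z) for x, y, z in test) / len(test)
print(f"tree MAE {tree_mae:.3f} vs. mean-only MAE {baseline_mae:.3f}")
```

Because every leaf predicts a constant, the learned surface is a staircase of axis-parallel rectangles, which is the characteristic look of a regression tree approximating a smooth function.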

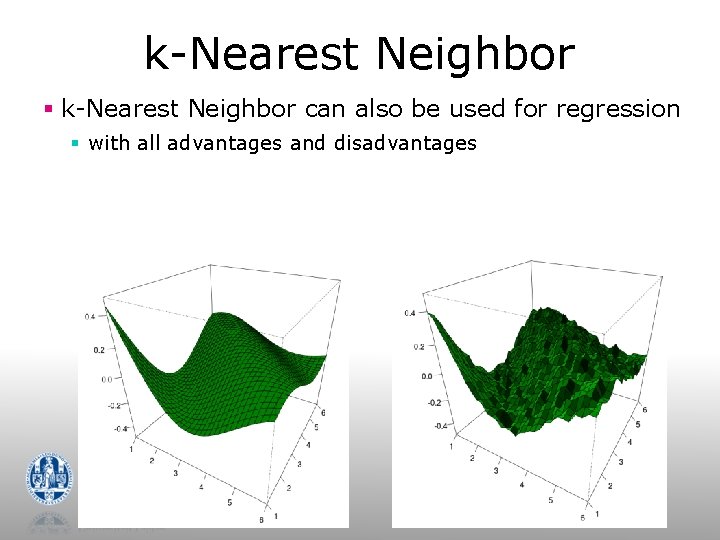

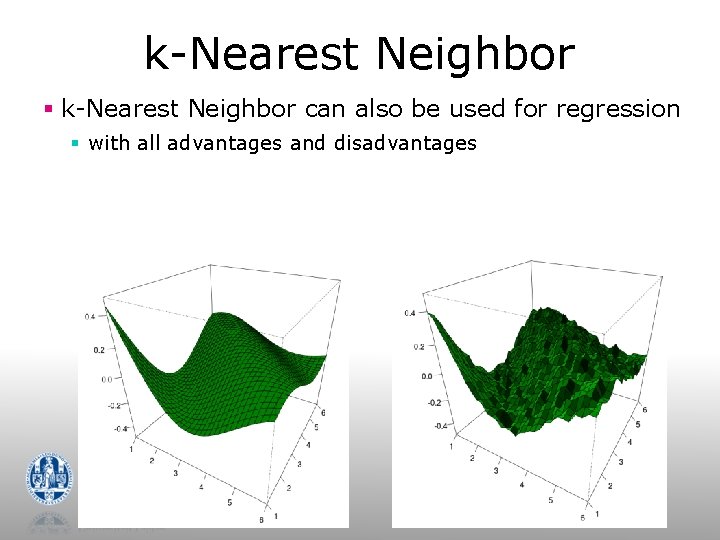

k-Nearest Neighbor

- k-Nearest Neighbor can also be used for regression
  - with all its advantages and disadvantages
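A minimal sketch of k-Nearest Neighbor regression: the prediction is the mean target of the k training examples closest to the query. Euclidean distance and an unweighted mean are assumptions; the function name and the toy data are made up for this example.

```python
import math


def knn_regress(train, query, k=3):
    """train: list of (features, target) pairs; returns the mean target of the k nearest."""
    by_distance = sorted(train, key=lambda row: math.dist(row[0], query))
    nearest = by_distance[:k]
    return sum(target for _, target in nearest) / len(nearest)


# Toy one-dimensional data following y = x^2.
train = [((0.0,), 0.0), ((1.0,), 1.0), ((2.0,), 4.0), ((3.0,), 9.0), ((4.0,), 16.0)]
pred_k3 = knn_regress(train, (2.1,), k=3)   # averages the targets at x = 1, 2, 3
pred_k1 = knn_regress(train, (2.1,), k=1)   # copies the target of the closest point
print(pred_k3, pred_k1)
```

As in classification, no model is built in advance: all work happens at query time, and the result depends on the choice of k and of the distance measure.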