Regression Analysis Simple linear regression Multiple regression Estimation

- Slides: 67

Regression Analysis -Simple linear regression -Multiple regression -Estimation and interpretation -Inference Tintner (1953) “The Study of the Application of Statistical Methods to the Analysis of Economic Phenomena”

Business analysts often need to be in position to: - Interpret the economic or financial landscape - Identify and assess the influence of several exogenous or predetermined factors on one or more endogenous variables - Provide ex-ante forecasts of one or more endogenous variables How does one achieve these objectives?

Why do Business Analysts Wish to Achieve These Objectives? -To improve decision-making! Example: Investigate key determinants of demand for Prego spaghetti sauce: Price of Prego; price of competitors (Ragu, Classico, Hunt’s, Newman’s Own); in-store displays; coupons; price of spaghetti Forecast sales of Prego spaghetti sauce one month, one quarter, or even one year into the future

Course of Action — Development of Formal Quantitative Models Regression Analysis

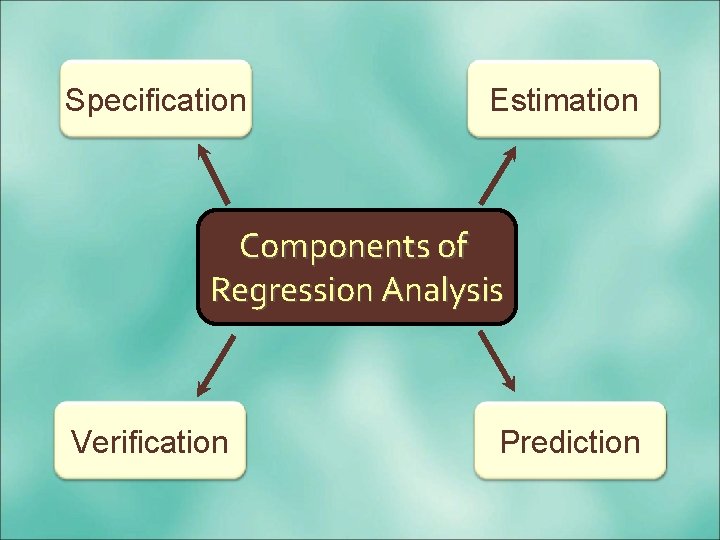

Components of Regression Analysis involves four phases: – – Specification – the model building activity Estimation – fitting the model to data Verification – testing the model Prediction – producing ex-ante forecasts and conducting ex-post forecast evaluations

Specification Estimation Components of Regression Analysis Verification Prediction

Getting Started

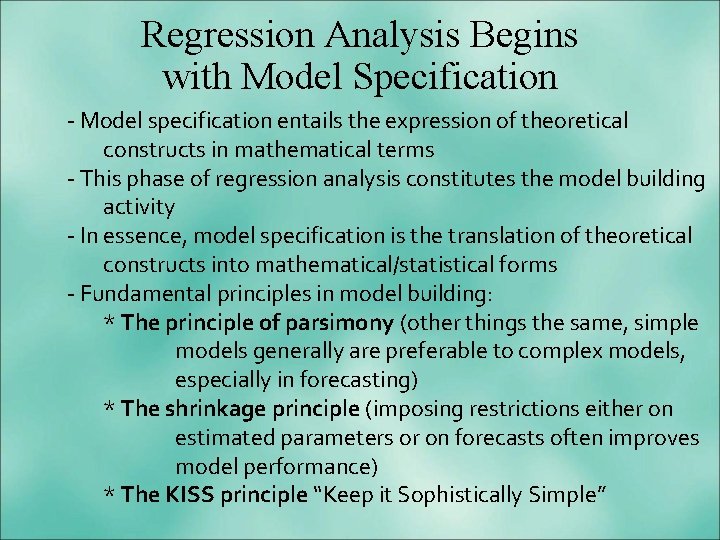

Regression Analysis Begins with Model Specification - Model specification entails the expression of theoretical constructs in mathematical terms - This phase of regression analysis constitutes the model building activity - In essence, model specification is the translation of theoretical constructs into mathematical/statistical forms - Fundamental principles in model building: * The principle of parsimony (other things the same, simple models generally are preferable to complex models, especially in forecasting) * The shrinkage principle (imposing restrictions either on estimated parameters or on forecasts often improves model performance) * The KISS principle “Keep it Sophistically Simple”

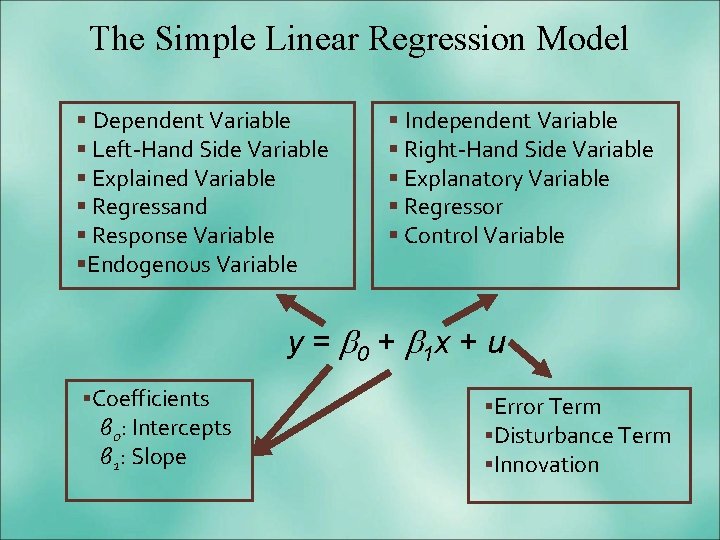

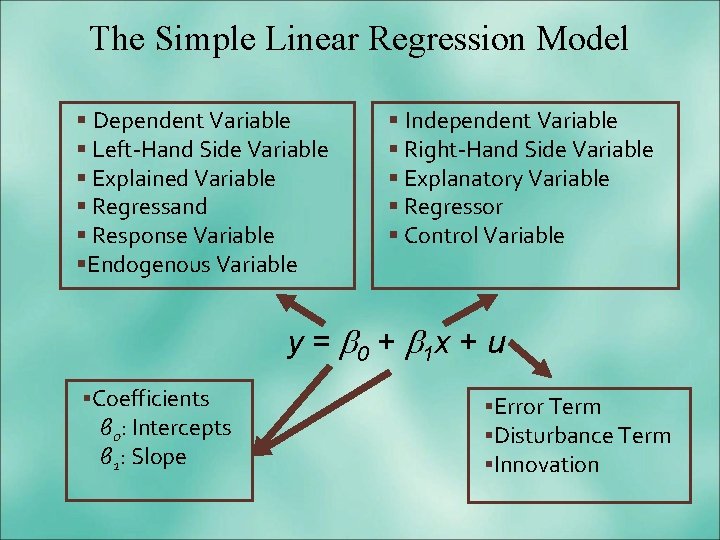

The Simple Linear Regression Model § Dependent Variable § Left-Hand Side Variable § Explained Variable § Regressand § Response Variable §Endogenous Variable § Independent Variable § Right-Hand Side Variable § Explanatory Variable § Regressor § Control Variable y = 0 + 1 x + u §Coefficients β 0: Intercepts β 1: Slope §Error Term §Disturbance Term §Innovation

The error term u explicitly relates that the relationship between y and x is not an identity; u arises for two reasons: (1) measurement error (2) the regression inadvertently omits the effects of other variables besides x that could impact y.

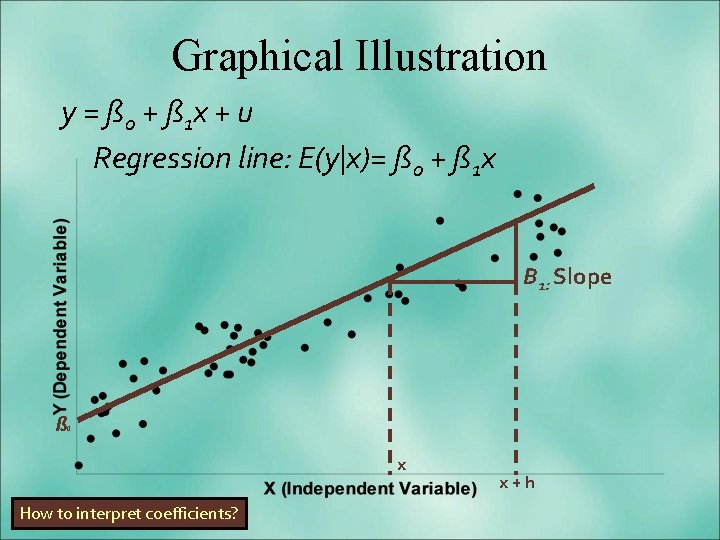

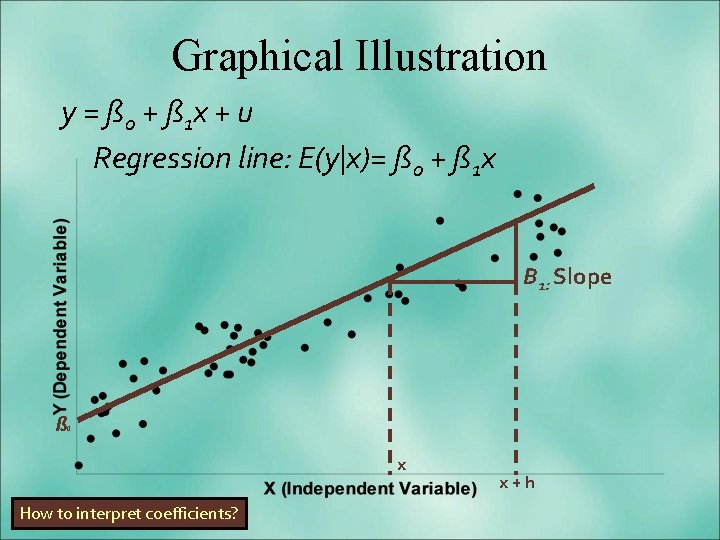

Graphical Illustration y = ß 0 + ß 1 x + u Regression line: E(y|x)= ß 0 + ß 1 x Β 1: Slope ß 0 x How to interpret coefficients? x+h

Example: Estimation of Demand Relationships - Often in regression analysis, analysts have interest in estimating demand relationships, particularly for commodities. - Analysts may wish to estimate the demand for cosmetic products, automobiles, various food products, or various beverages.

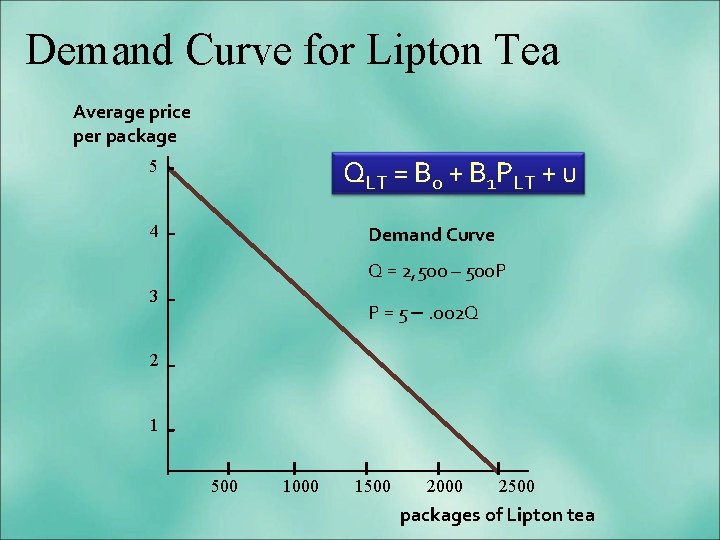

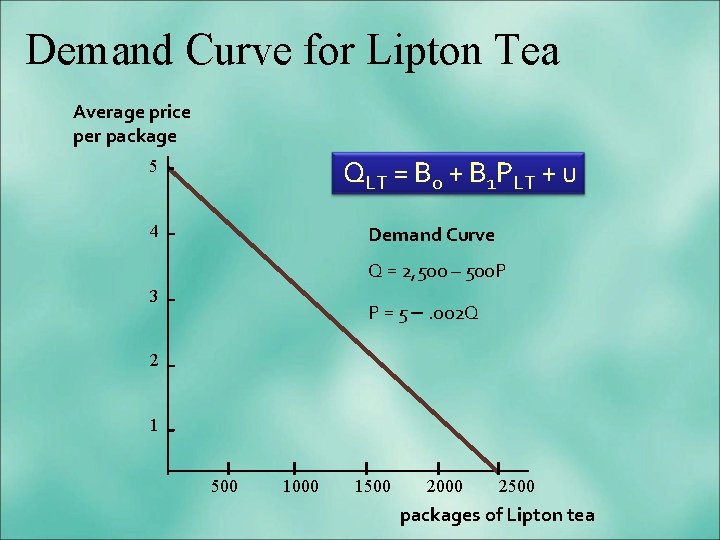

Demand Curve for Lipton Tea Average price per package QLT = B 0 + B 1 PLT + u 5 4 Demand Curve Q = 2, 500 – 500 P 3 P = 5 –. 002 Q 2 1 500 1000 1500 2000 2500 packages of Lipton tea

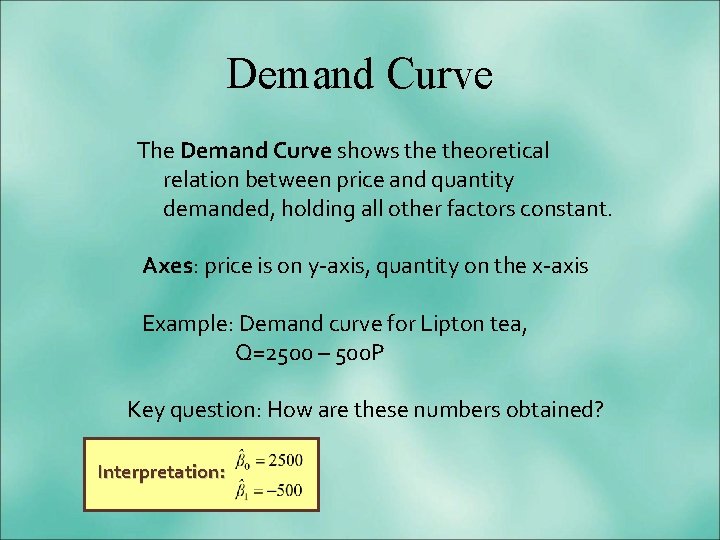

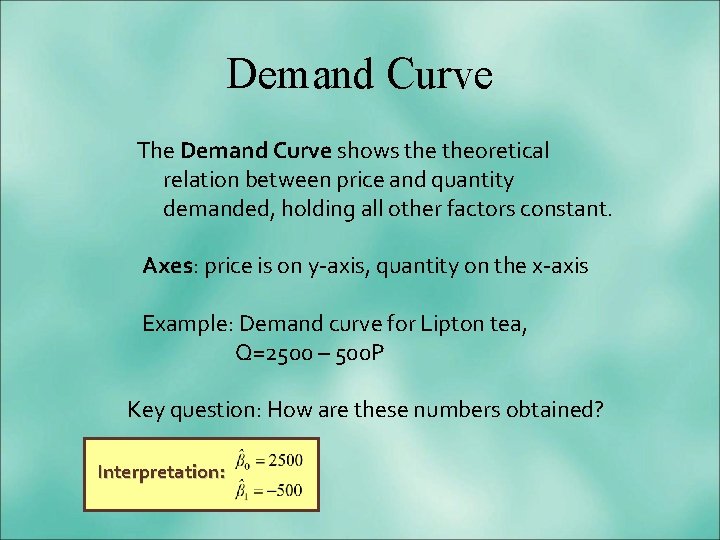

Demand Curve The Demand Curve shows theoretical relation between price and quantity demanded, holding all other factors constant. Axes: price is on y-axis, quantity on the x-axis Example: Demand curve for Lipton tea, Q=2500 – 500 P Key question: How are these numbers obtained? Interpretation:

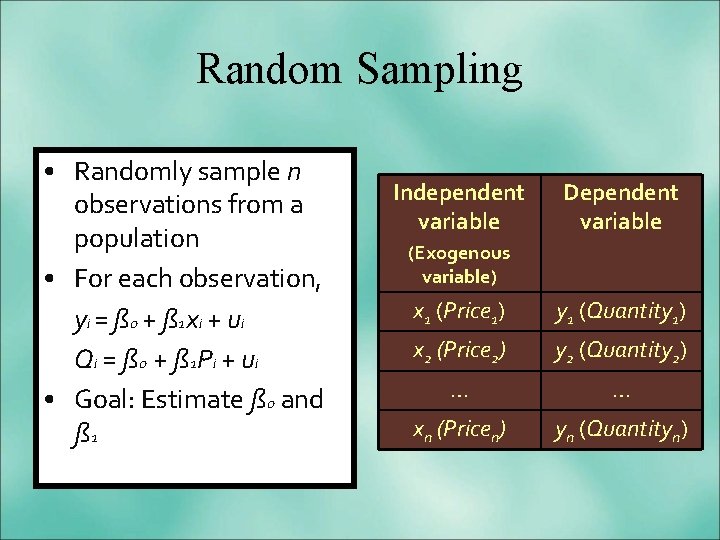

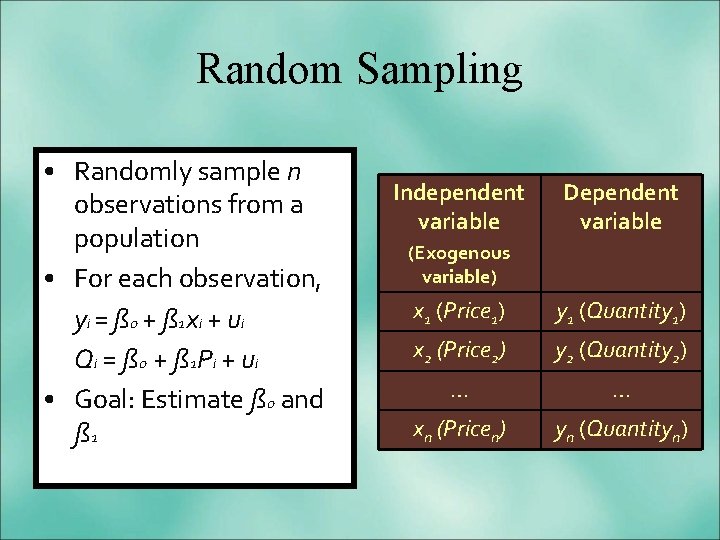

Random Sampling • Randomly sample n observations from a population • For each observation, y i = ß 0 + ß 1 x i + u i Q i = ß 0 + ß 1 Pi + u i • Goal: Estimate ß 0 and ß 1 Independent variable Dependent variable (Exogenous variable) x 1 (Price 1) y 1 (Quantity 1) x 2 (Price 2) y 2 (Quantity 2) … … xn (Pricen) yn (Quantityn)

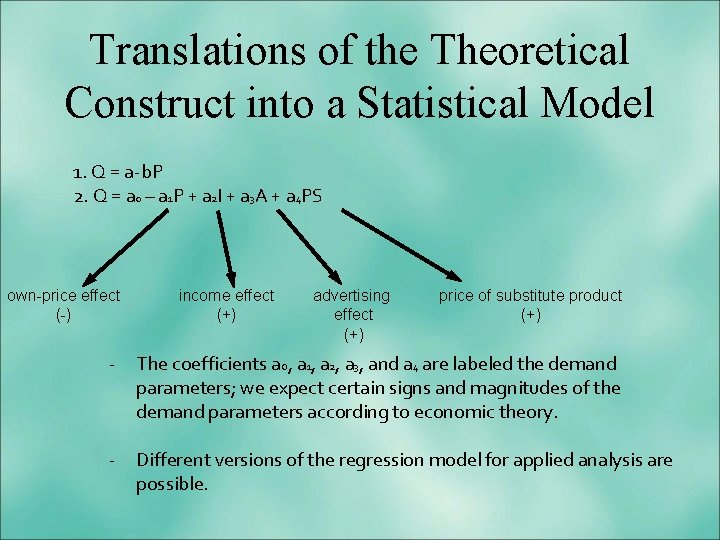

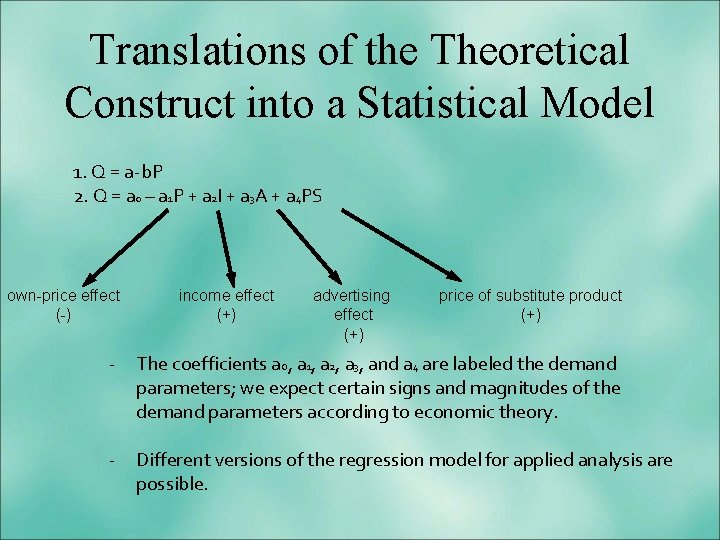

Translations of the Theoretical Construct into a Statistical Model 1. Q = a-b. P 2. Q = a 0 – a 1 P + a 2 I + a 3 A + a 4 PS own-price effect (-) income effect (+) advertising effect (+) price of substitute product (+) - The coefficients a 0, a 1, a 2, a 3, and a 4 are labeled the demand parameters; we expect certain signs and magnitudes of the demand parameters according to economic theory. - Different versions of the regression model for applied analysis are possible.

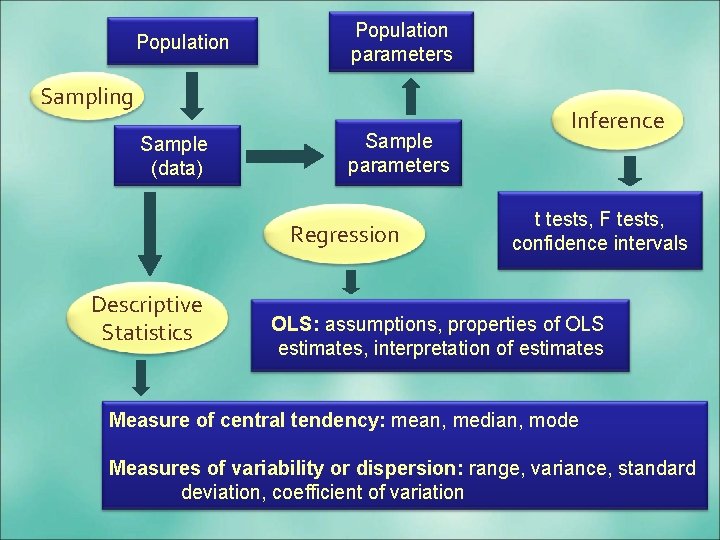

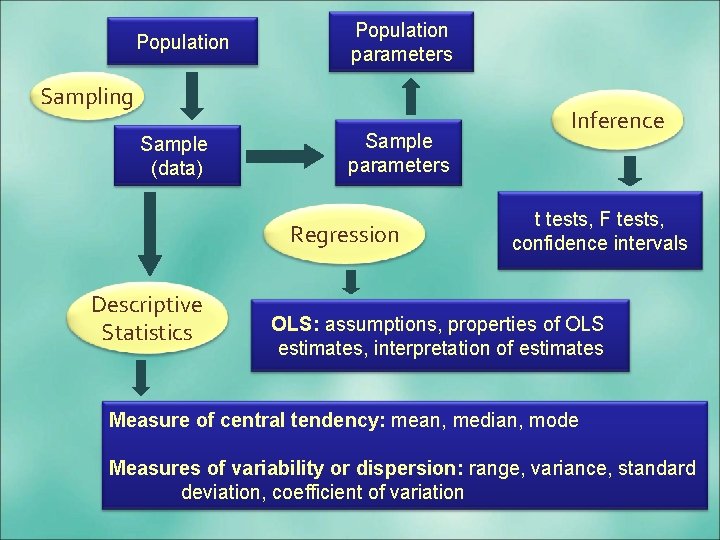

Population parameters Sampling Sample (data) Sample parameters Regression Descriptive Statistics Inference t tests, F tests, confidence intervals OLS: assumptions, properties of OLS estimates, interpretation of estimates Measure of central tendency: mean, median, mode Measures of variability or dispersion: range, variance, standard deviation, coefficient of variation

Descriptive Statistics Measures of central tendency: - mean - median - mode Measures of dispersion/variability: - range - variance -standard deviation - coefficient of variation

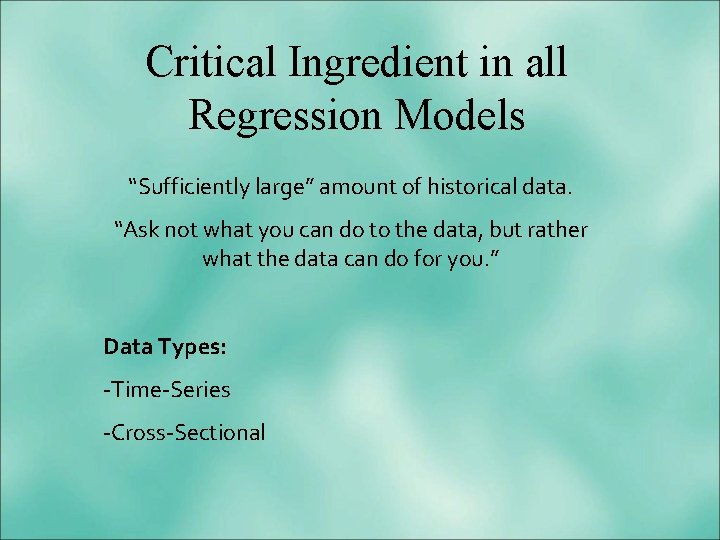

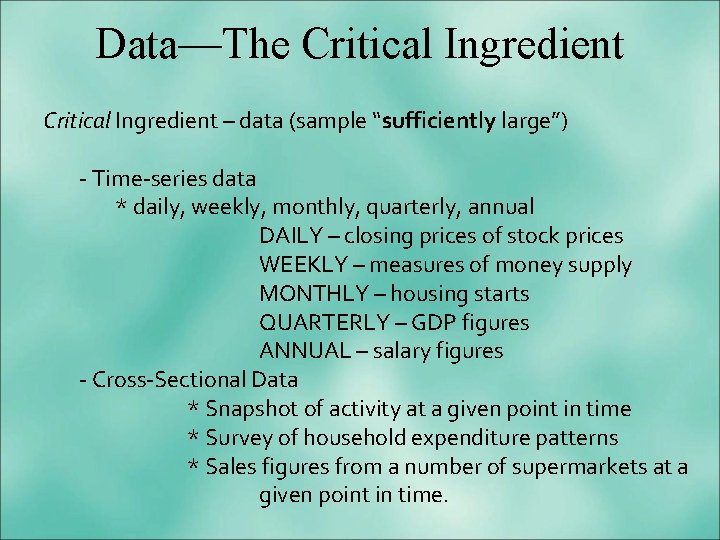

Critical Ingredient in all Regression Models “Sufficiently large” amount of historical data. “Ask not what you can do to the data, but rather what the data can do for you. ” Data Types: -Time-Series -Cross-Sectional

Data—The Critical Ingredient – data (sample “sufficiently large”) - Time-series data * daily, weekly, monthly, quarterly, annual DAILY – closing prices of stock prices WEEKLY – measures of money supply MONTHLY – housing starts QUARTERLY – GDP figures ANNUAL – salary figures - Cross-Sectional Data * Snapshot of activity at a given point in time * Survey of household expenditure patterns * Sales figures from a number of supermarkets at a given point in time.

Quote from Lord Kelvin “I often say that when you can measure what you are speaking about, and express it in numbers, you know something about it; but when you cannot measure it, when you cannot express it in numbers, your knowledge is of a meager and unsatisfactory kind. ”

Get a Feel for the Data -Plots of key variables -Scatter plots -Descriptive statistics • mean • median • minimum • maximum • standard deviation • skewness • kurtosis • distribution

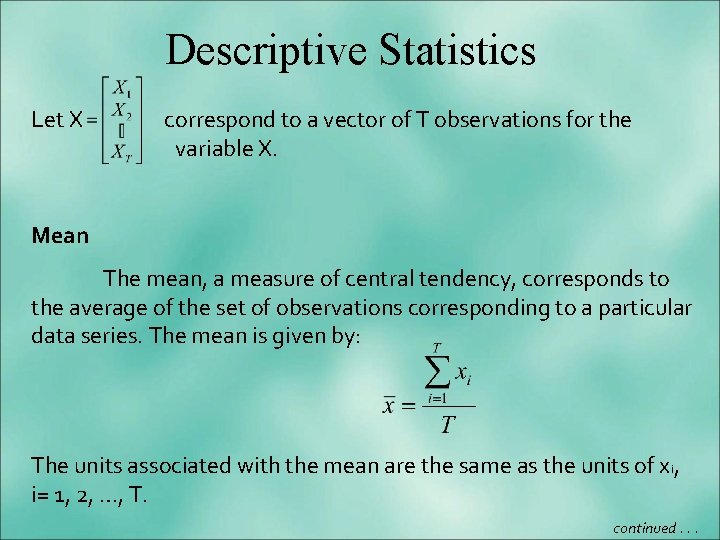

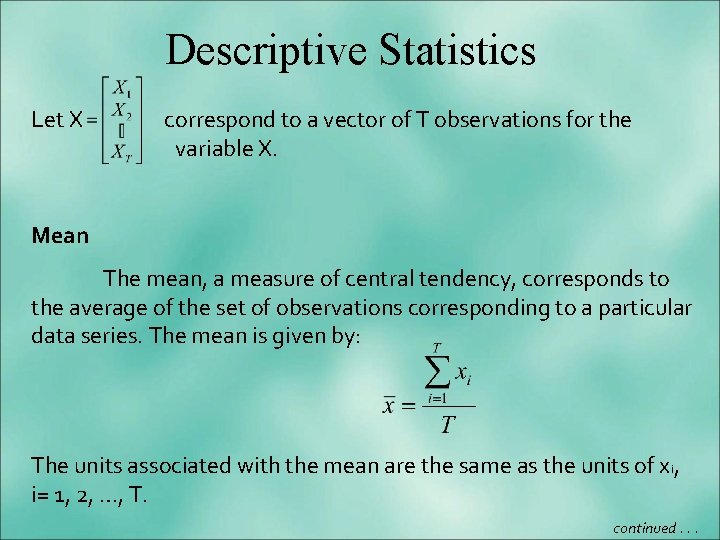

Descriptive Statistics Let X correspond to a vector of T observations for the variable X. Mean The mean, a measure of central tendency, corresponds to the average of the set of observations corresponding to a particular data series. The mean is given by: The units associated with the mean are the same as the units of xi, i= 1, 2, …, T. continued. . .

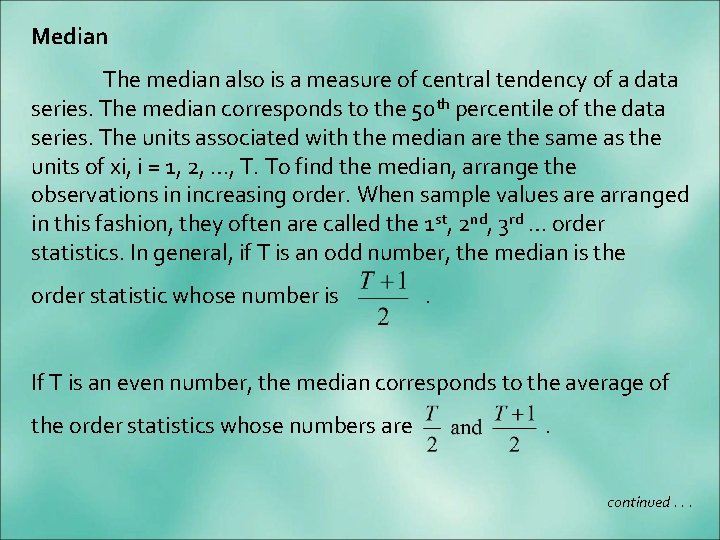

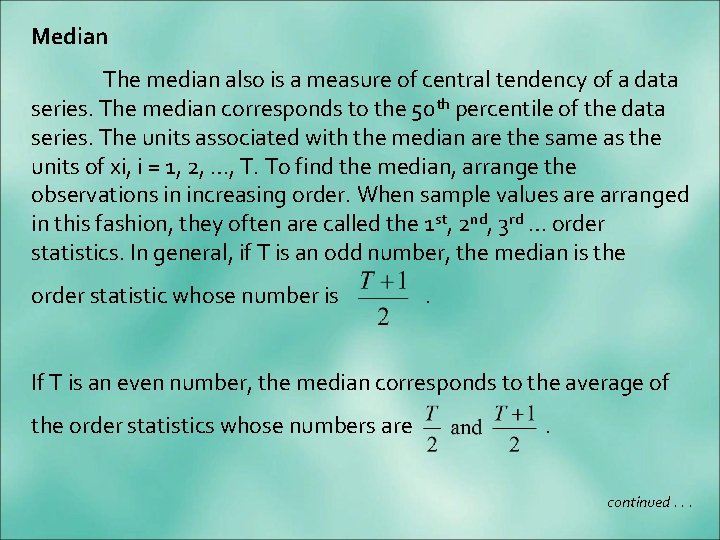

Median The median also is a measure of central tendency of a data series. The median corresponds to the 50 th percentile of the data series. The units associated with the median are the same as the units of xi, i = 1, 2, …, T. To find the median, arrange the observations in increasing order. When sample values are arranged in this fashion, they often are called the 1 st, 2 nd, 3 rd … order statistics. In general, if T is an odd number, the median is the order statistic whose number is . If T is an even number, the median corresponds to the average of the order statistics whose numbers are . continued. . .

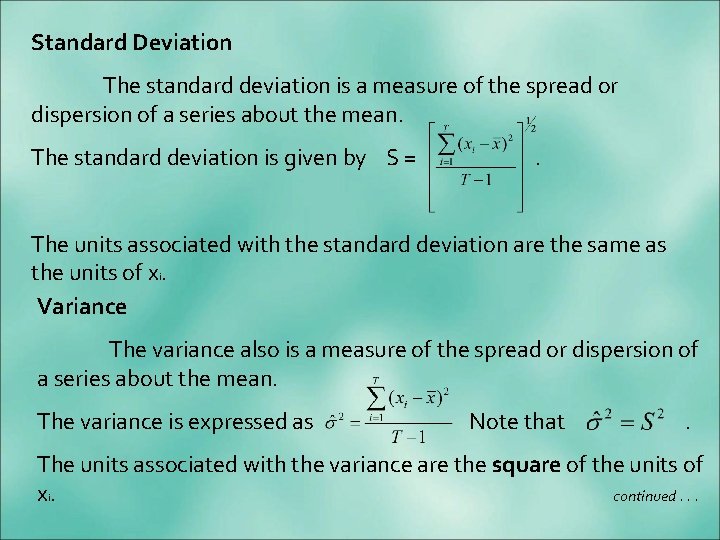

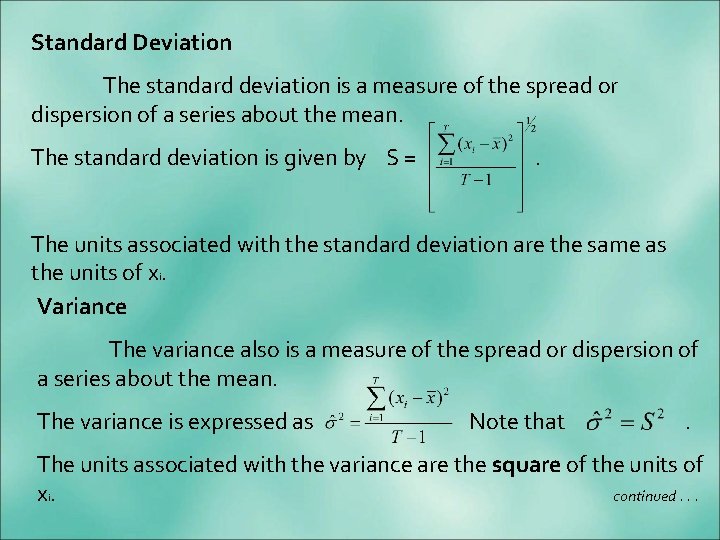

Standard Deviation The standard deviation is a measure of the spread or dispersion of a series about the mean. The standard deviation is given by S = . The units associated with the standard deviation are the same as the units of xi. Variance The variance also is a measure of the spread or dispersion of a series about the mean. The variance is expressed as Note that . The units associated with the variance are the square of the units of xi. continued. . .

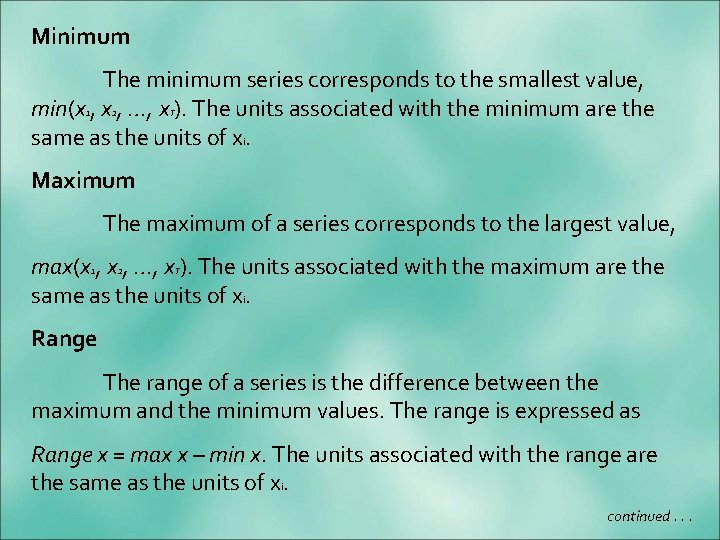

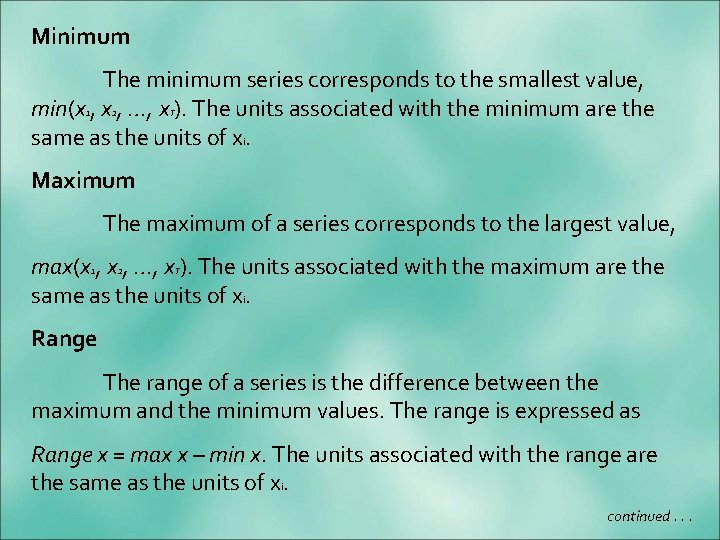

Minimum The minimum series corresponds to the smallest value, min(x 1, x 2, …, x ). The units associated with the minimum are the same as the units of xi. T Maximum The maximum of a series corresponds to the largest value, max(x 1, x 2, …, x ). The units associated with the maximum are the same as the units of xi. T Range The range of a series is the difference between the maximum and the minimum values. The range is expressed as Range x = max x – min x. The units associated with the range are the same as the units of xi. continued. . .

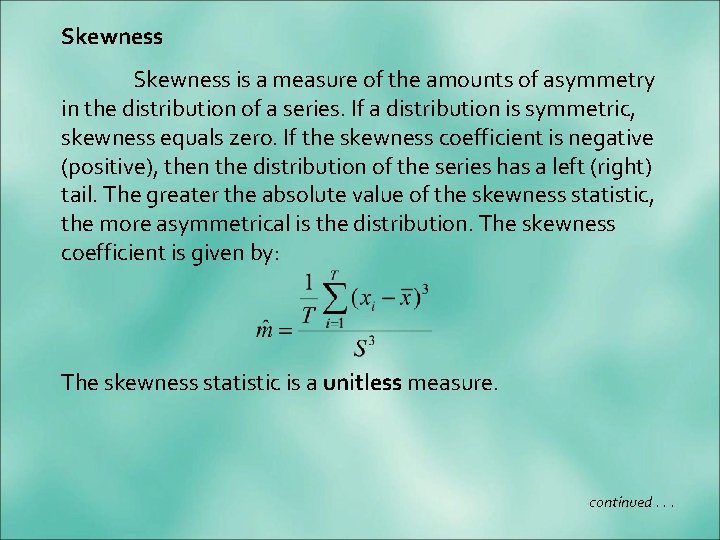

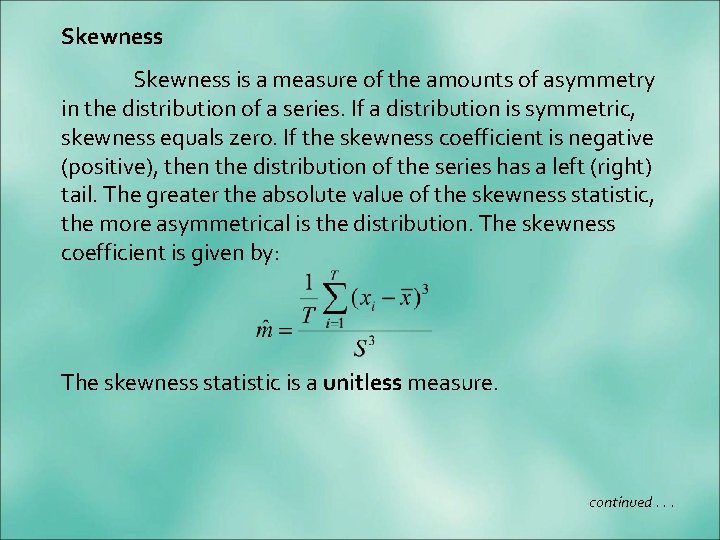

Skewness is a measure of the amounts of asymmetry in the distribution of a series. If a distribution is symmetric, skewness equals zero. If the skewness coefficient is negative (positive), then the distribution of the series has a left (right) tail. The greater the absolute value of the skewness statistic, the more asymmetrical is the distribution. The skewness coefficient is given by: The skewness statistic is a unitless measure. continued. . .

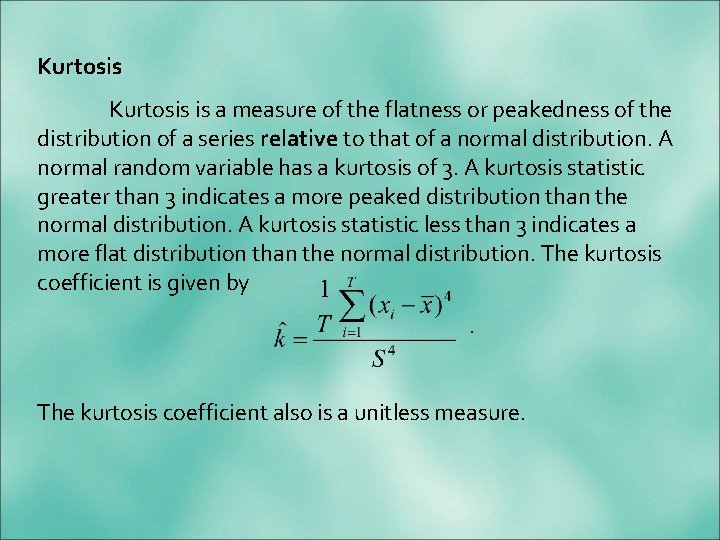

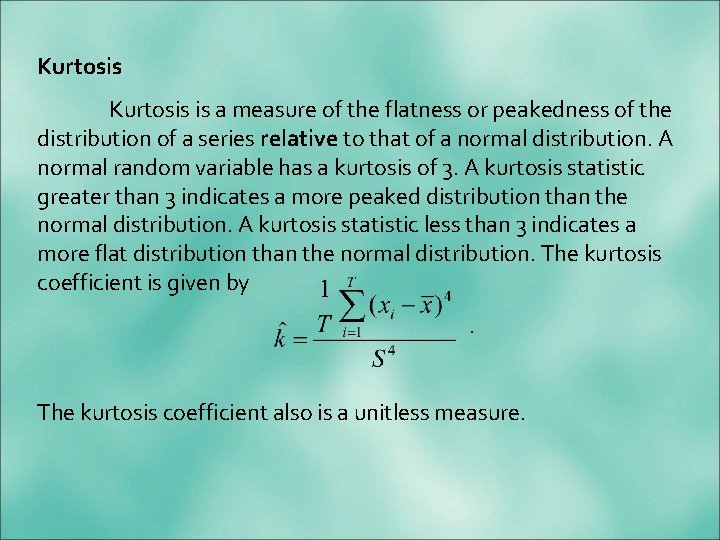

Kurtosis is a measure of the flatness or peakedness of the distribution of a series relative to that of a normal distribution. A normal random variable has a kurtosis of 3. A kurtosis statistic greater than 3 indicates a more peaked distribution than the normal distribution. A kurtosis statistic less than 3 indicates a more flat distribution than the normal distribution. The kurtosis coefficient is given by. The kurtosis coefficient also is a unitless measure.

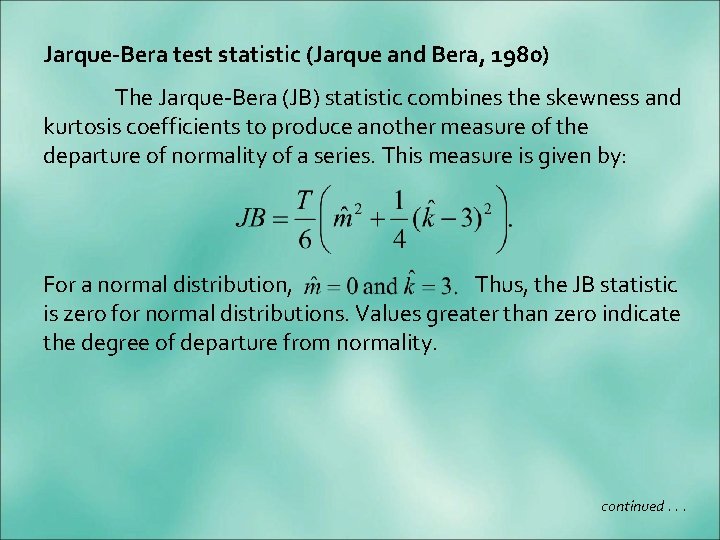

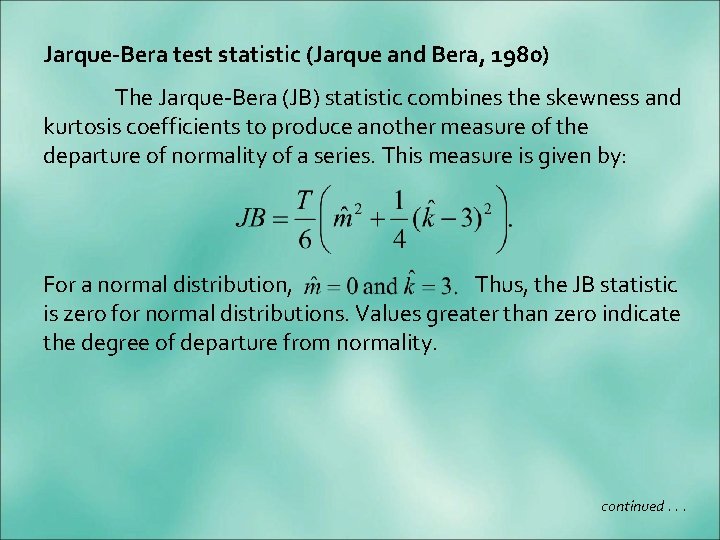

Jarque-Bera test statistic (Jarque and Bera, 1980) The Jarque-Bera (JB) statistic combines the skewness and kurtosis coefficients to produce another measure of the departure of normality of a series. This measure is given by: For a normal distribution, Thus, the JB statistic is zero for normal distributions. Values greater than zero indicate the degree of departure from normality. continued. . .

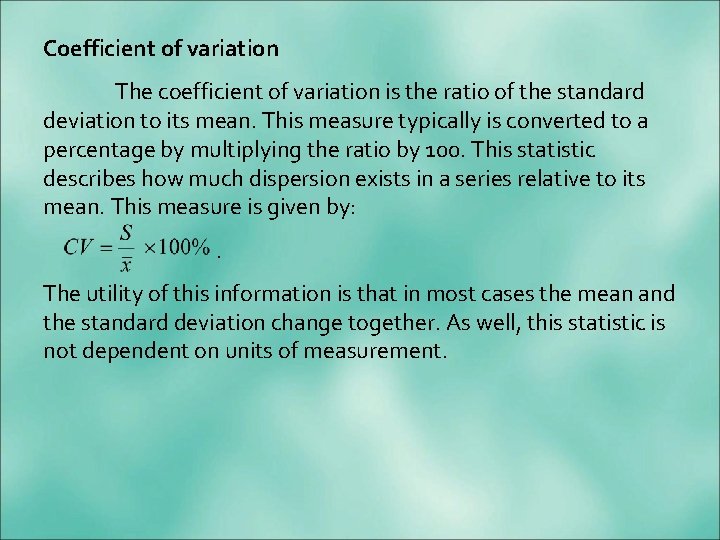

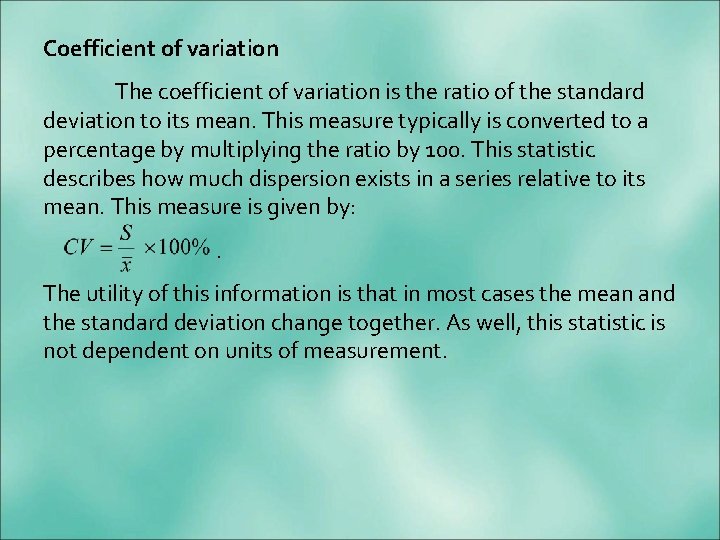

Coefficient of variation The coefficient of variation is the ratio of the standard deviation to its mean. This measure typically is converted to a percentage by multiplying the ratio by 100. This statistic describes how much dispersion exists in a series relative to its mean. This measure is given by: . The utility of this information is that in most cases the mean and the standard deviation change together. As well, this statistic is not dependent on units of measurement.

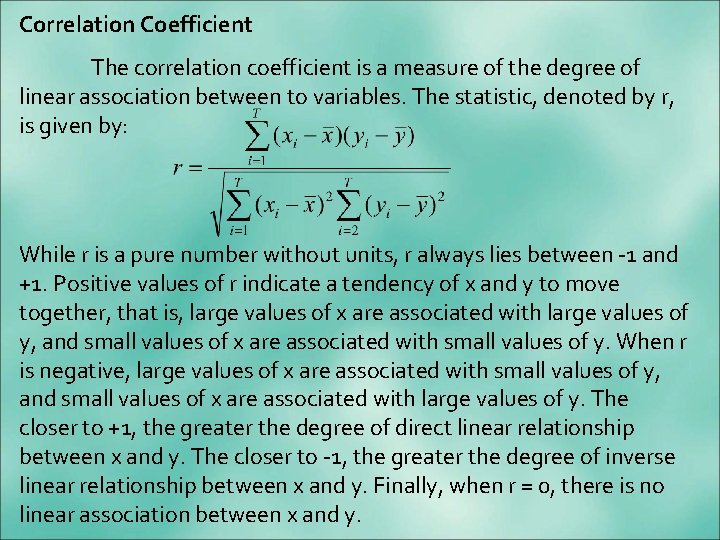

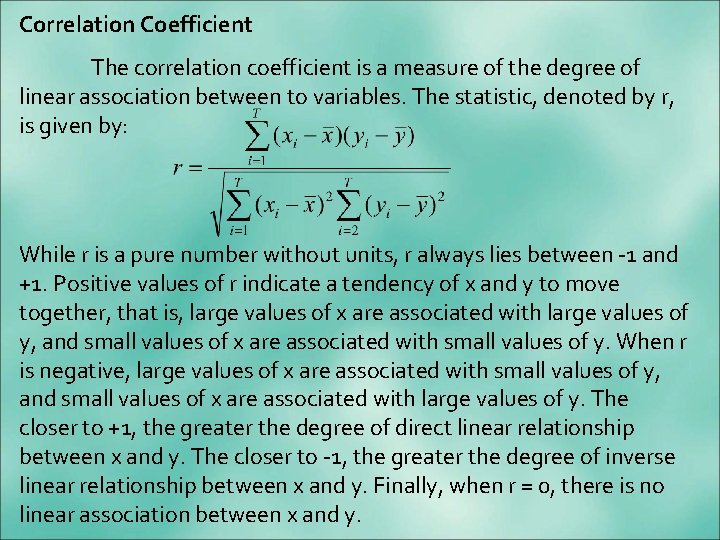

Correlation Coefficient The correlation coefficient is a measure of the degree of linear association between to variables. The statistic, denoted by r, is given by: While r is a pure number without units, r always lies between -1 and +1. Positive values of r indicate a tendency of x and y to move together, that is, large values of x are associated with large values of y, and small values of x are associated with small values of y. When r is negative, large values of x are associated with small values of y, and small values of x are associated with large values of y. The closer to +1, the greater the degree of direct linear relationship between x and y. The closer to -1, the greater the degree of inverse linear relationship between x and y. Finally, when r = 0, there is no linear association between x and y.

Mode The mode corresponds to the most frequent observation in the data series x 1, x 2, …, xr. The units associated with the mode are the same as the units of xi. In empirical applications, often the observations are non-repetitive. Hence, this measure often is of limited usefulness.

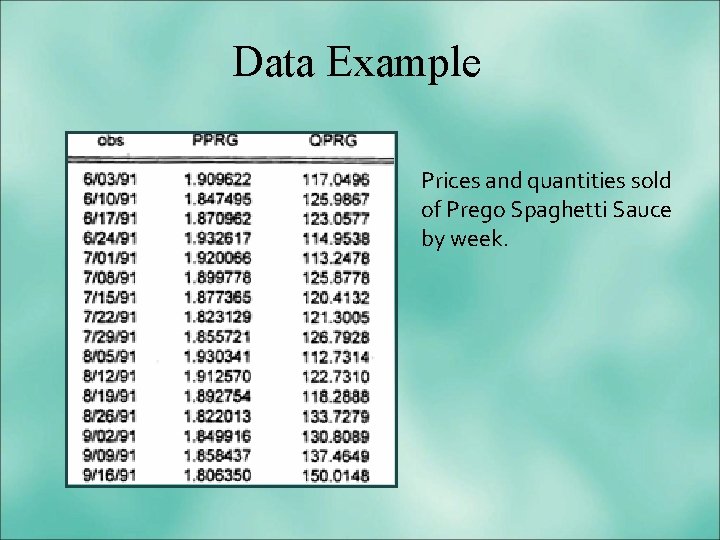

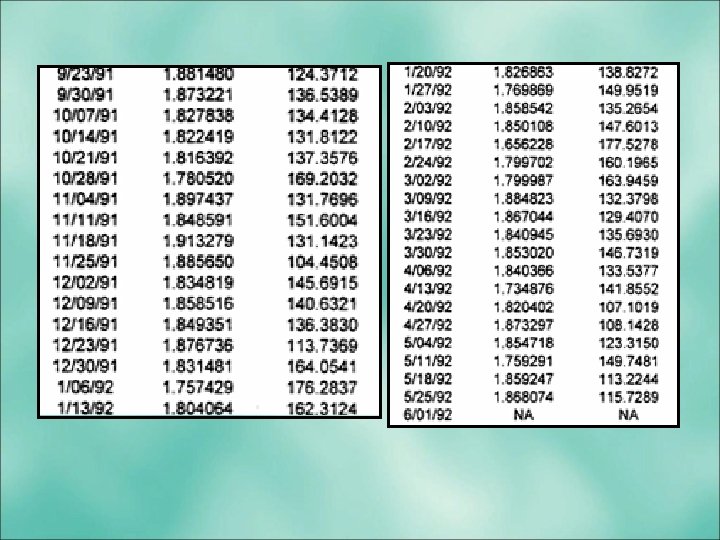

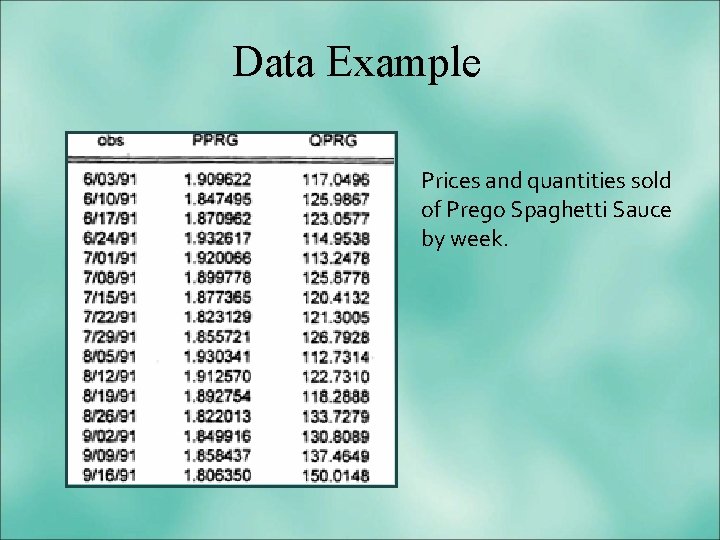

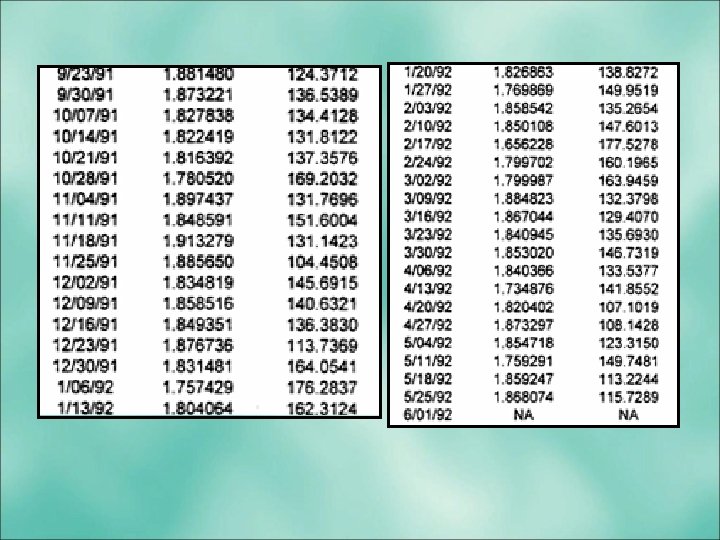

Data Example Prices and quantities sold of Prego Spaghetti Sauce by week.

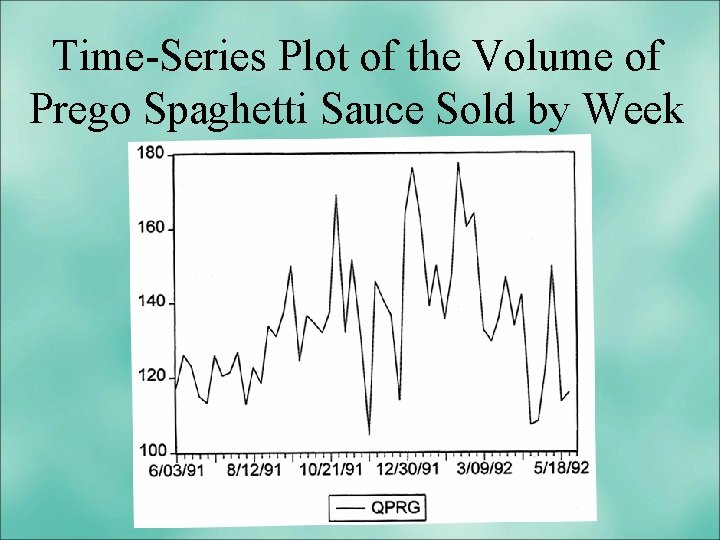

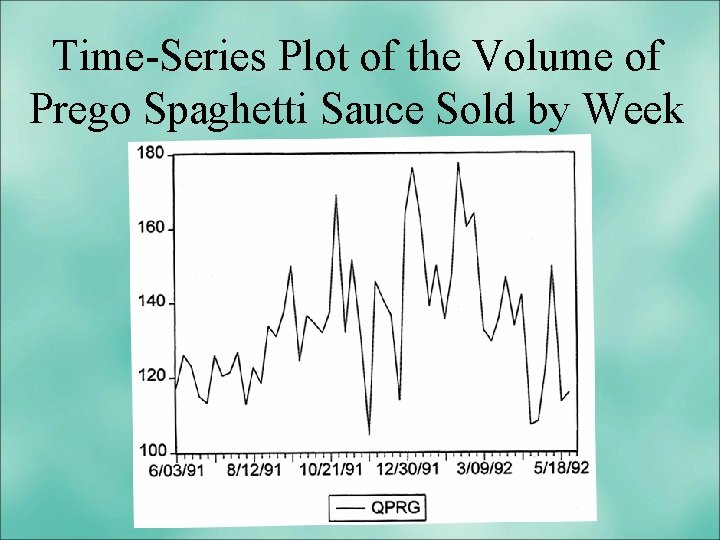

Time-Series Plot of the Volume of Prego Spaghetti Sauce Sold by Week

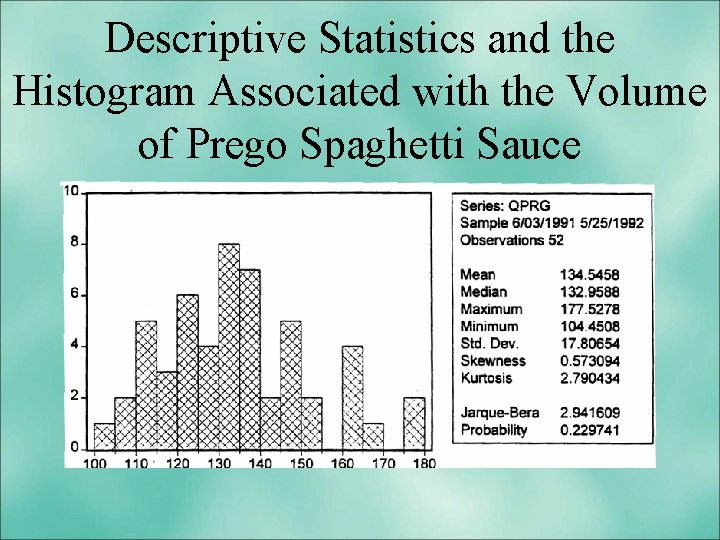

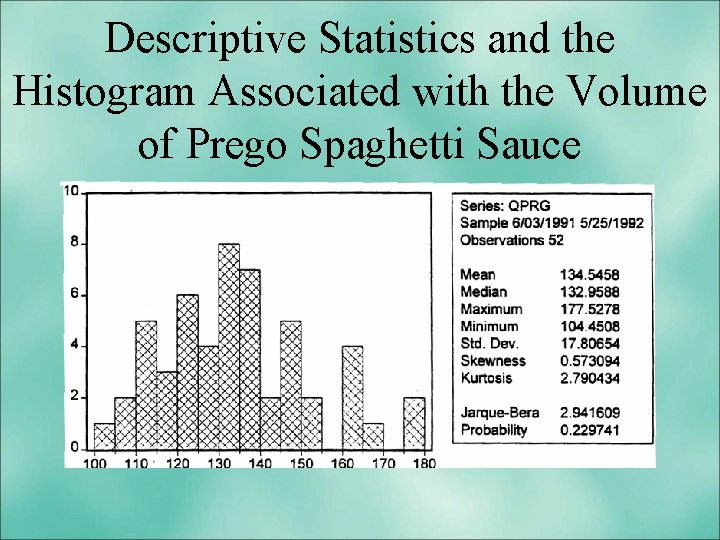

Descriptive Statistics and the Histogram Associated with the Volume of Prego Spaghetti Sauce

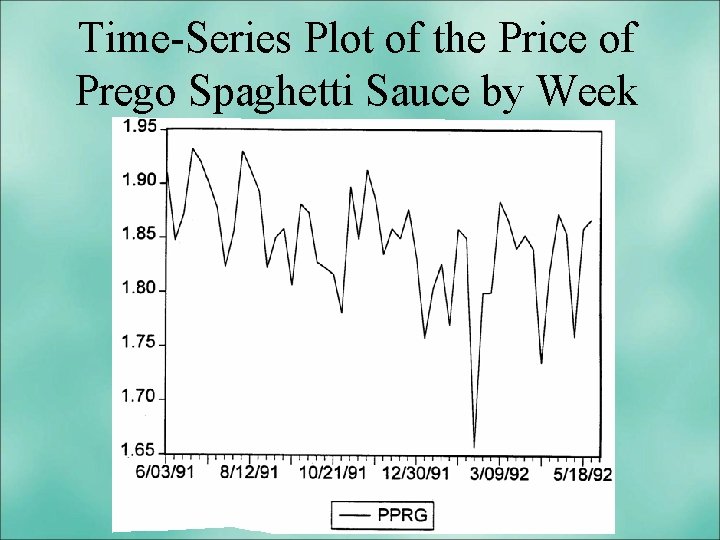

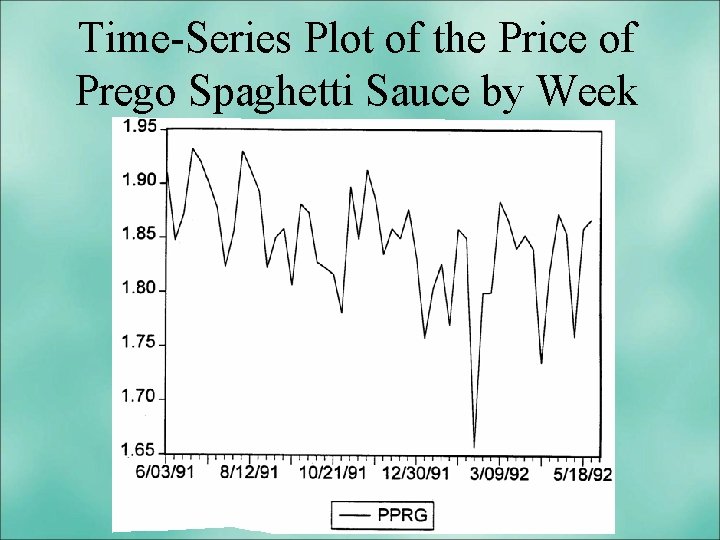

Time-Series Plot of the Price of Prego Spaghetti Sauce by Week

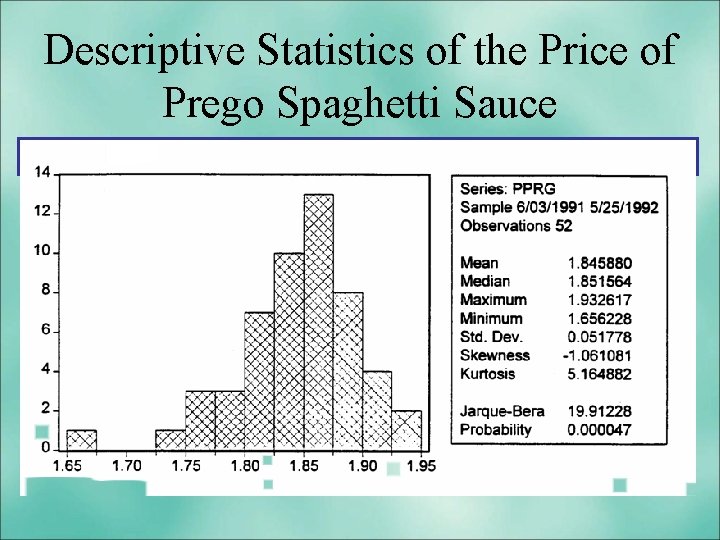

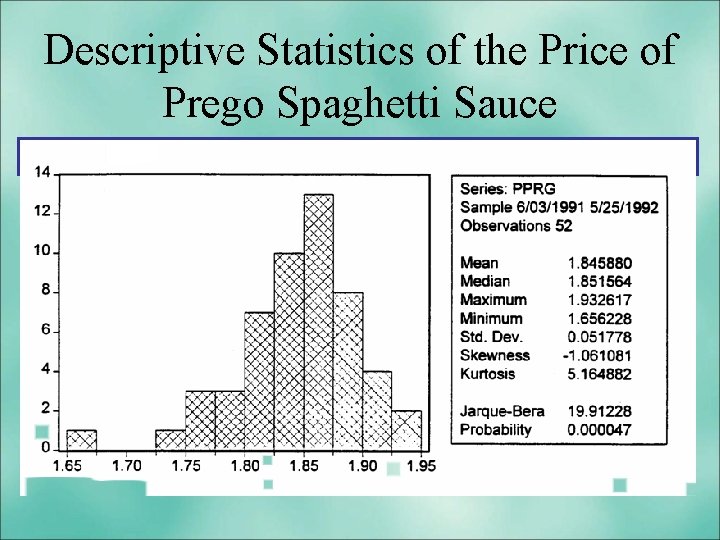

Descriptive Statistics of the Price of Prego Spaghetti Sauce

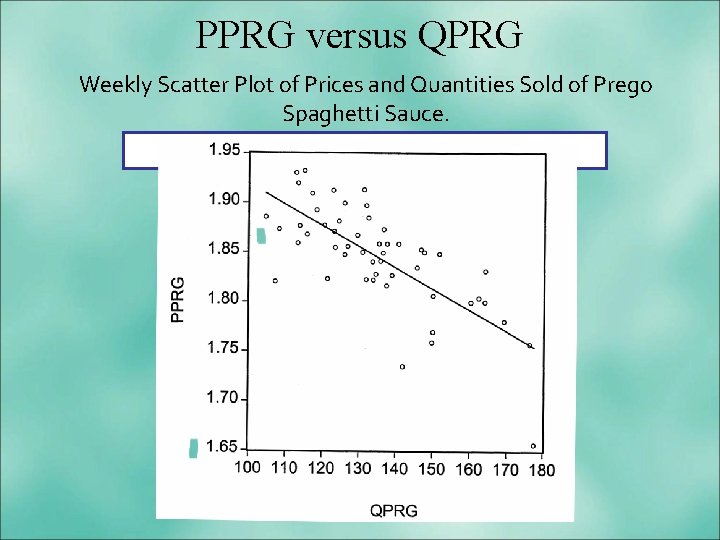

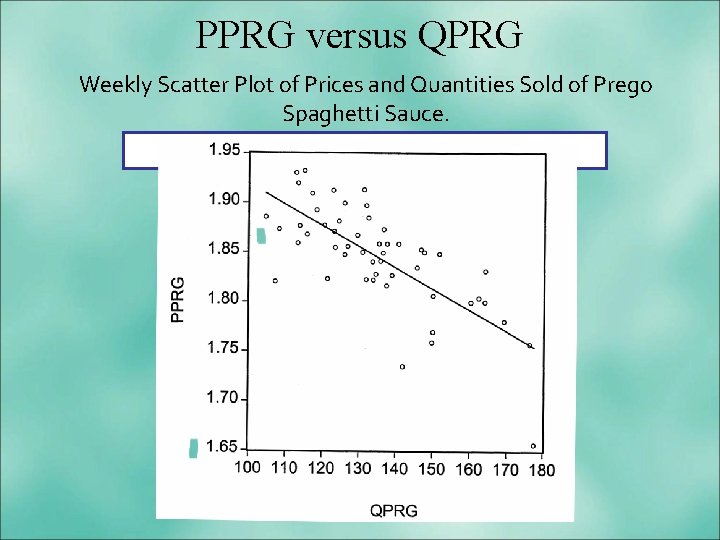

PPRG versus QPRG Weekly Scatter Plot of Prices and Quantities Sold of Prego Spaghetti Sauce.

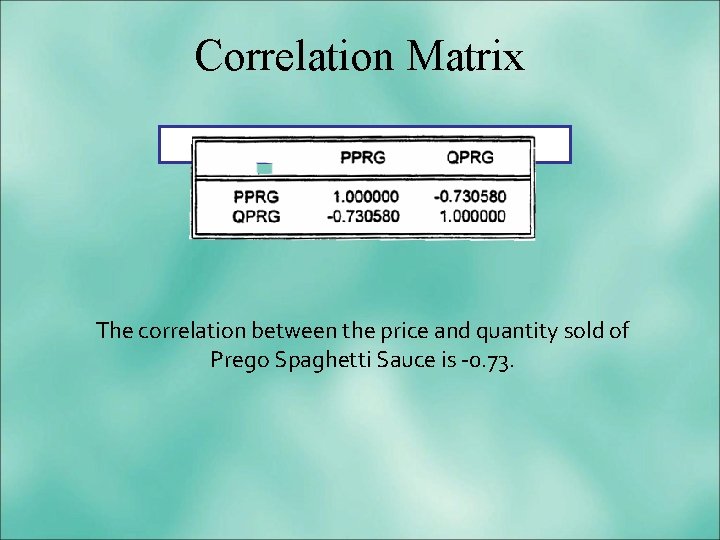

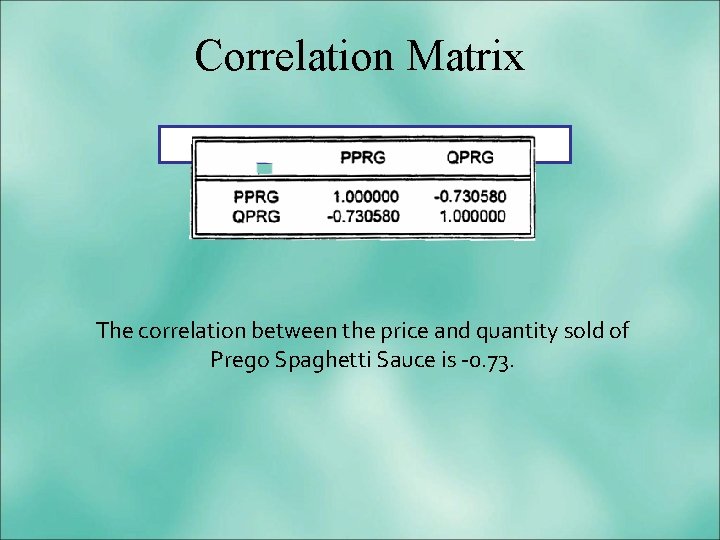

Correlation Matrix The correlation between the price and quantity sold of Prego Spaghetti Sauce is -0. 73.

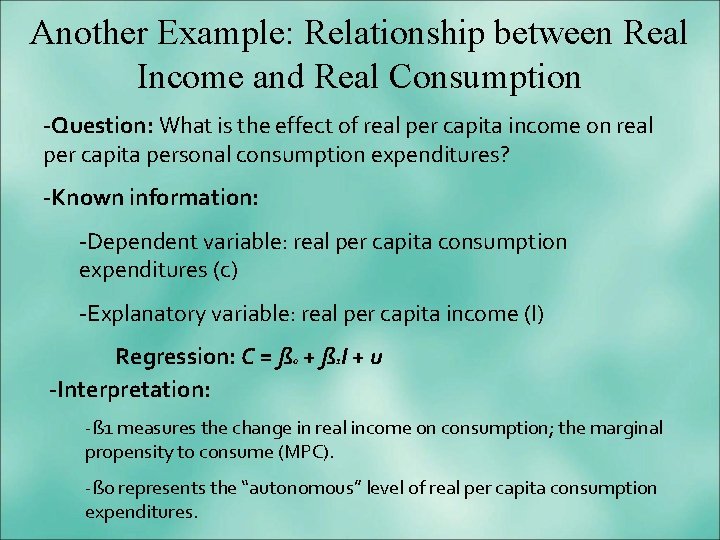

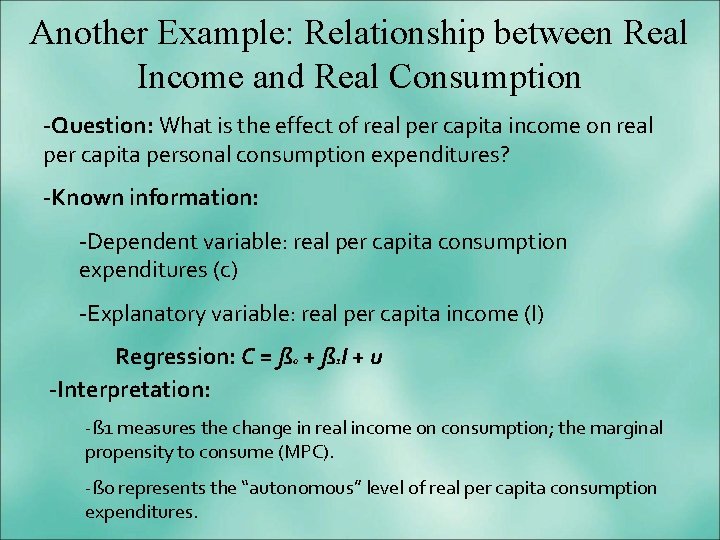

Another Example: Relationship between Real Income and Real Consumption -Question: What is the effect of real per capita income on real per capita personal consumption expenditures? -Known information: -Dependent variable: real per capita consumption expenditures (c) -Explanatory variable: real per capita income (I) Regression: C = ß 0 + ß 1 I + u -Interpretation: -ß 1 measures the change in real income on consumption; the marginal propensity to consume (MPC). -ß 0 represents the “autonomous” level of real per capita consumption expenditures.

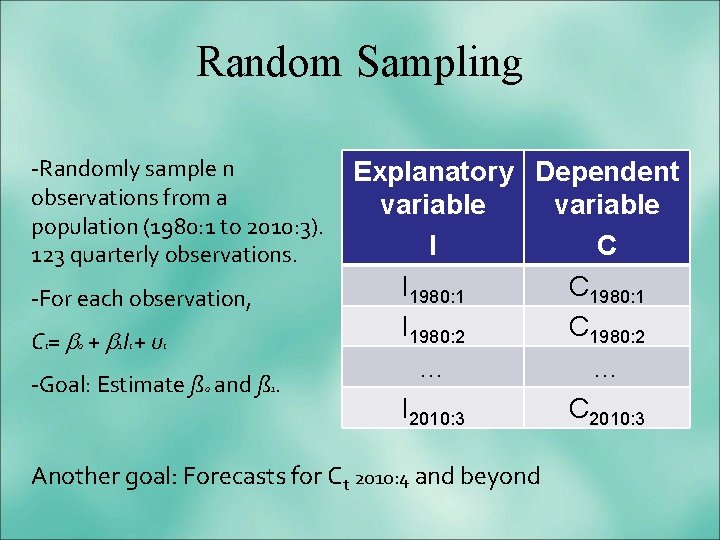

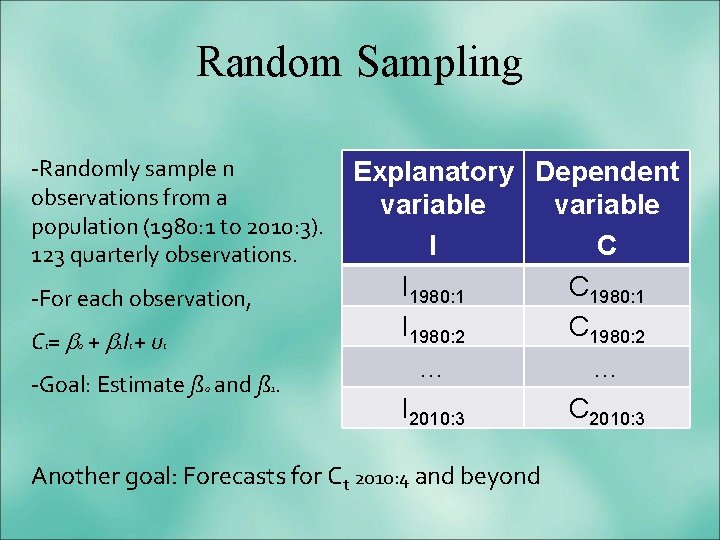

Random Sampling -Randomly sample n observations from a population (1980: 1 to 2010: 3). 123 quarterly observations. -For each observation, Ct= 0 + 1 It + ut -Goal: Estimate ß 0 and ß 1. Explanatory Dependent variable I C I 1980: 1 C 1980: 1 I 1980: 2 C 1980: 2 … … I 2010: 3 C 2010: 3 Another goal: Forecasts for Ct 2010: 4 and beyond

Estimation of the Simple Linear Regression Model

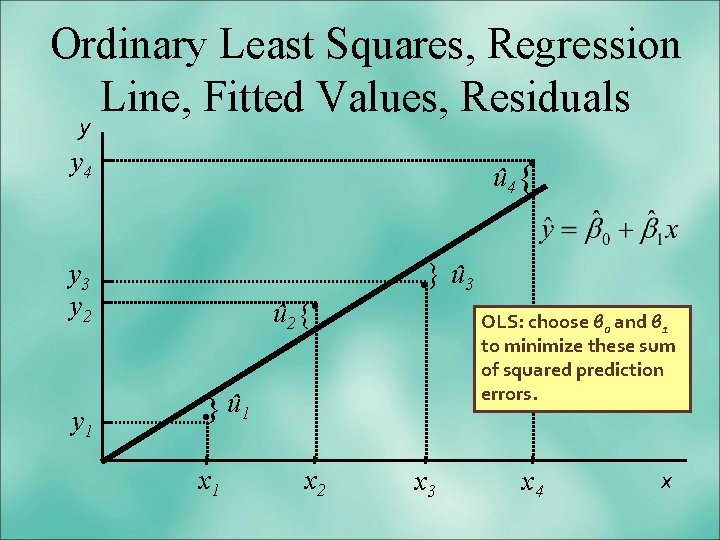

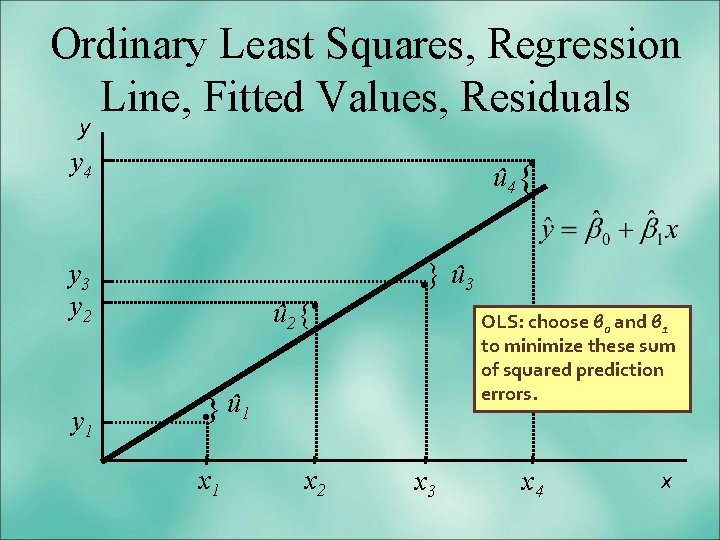

Ordinary Least Squares, Regression Line, Fitted Values, Residuals y . y 4 û 4 { y 3 y 2 y 1 û 2 {. . } û 3 OLS: choose β 0 and β 1 to minimize these sum of squared prediction errors. . } û 1 x 2 x 3 x 4 x

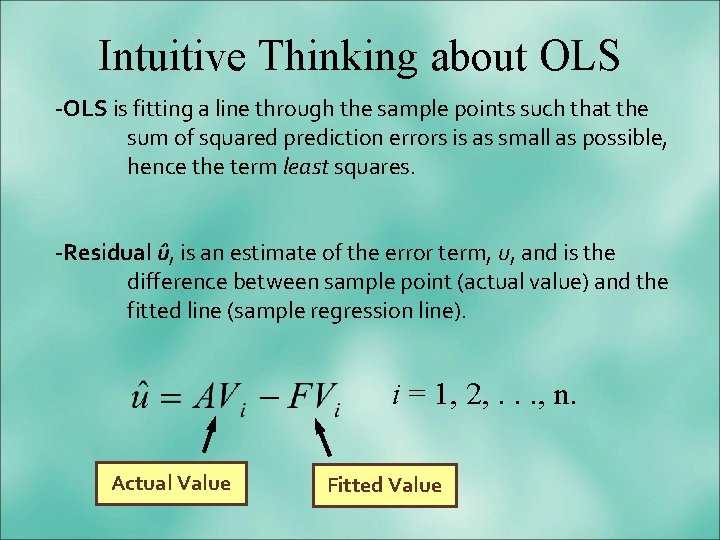

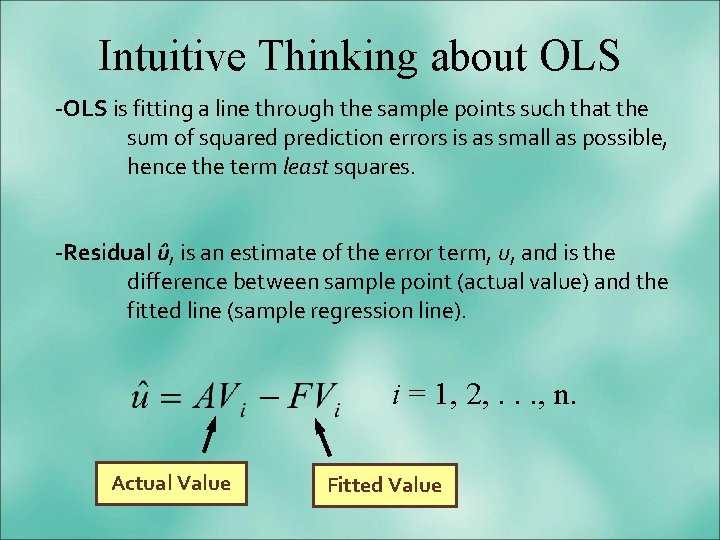

Intuitive Thinking about OLS -OLS is fitting a line through the sample points such that the sum of squared prediction errors is as small as possible, hence the term least squares. -Residual û, is an estimate of the error term, u, and is the difference between sample point (actual value) and the fitted line (sample regression line). i = 1, 2, . . . , n. Actual Value Fitted Value

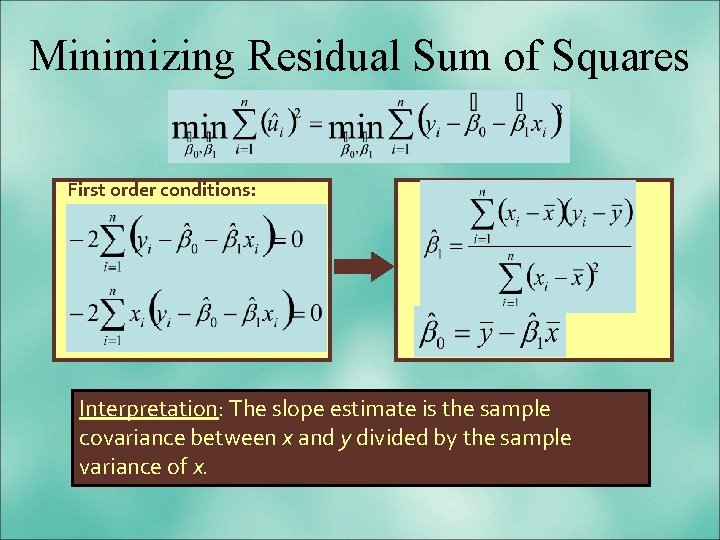

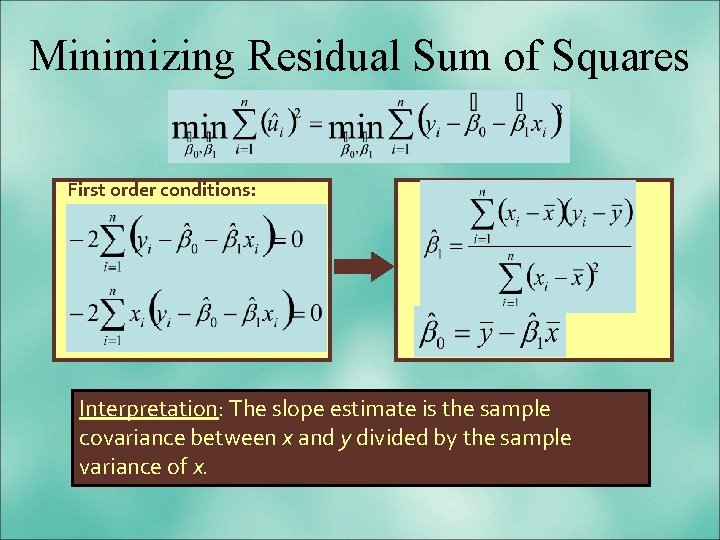

Minimizing Residual Sum of Squares First order conditions: Interpretation: The slope estimate is the sample covariance between x and y divided by the sample variance of x.

Assumptions Behind the Simple Linear Regression Model y i = ß 0 + ß 1 x i + u i

Assumption 1: Zero Mean of u E(u) = 0: The average value of u, the error term, is 0.

Assumption 2: Independent Error Terms Each observed ui is independent of all other uj, Corr(uiuj) = 0 for all i j

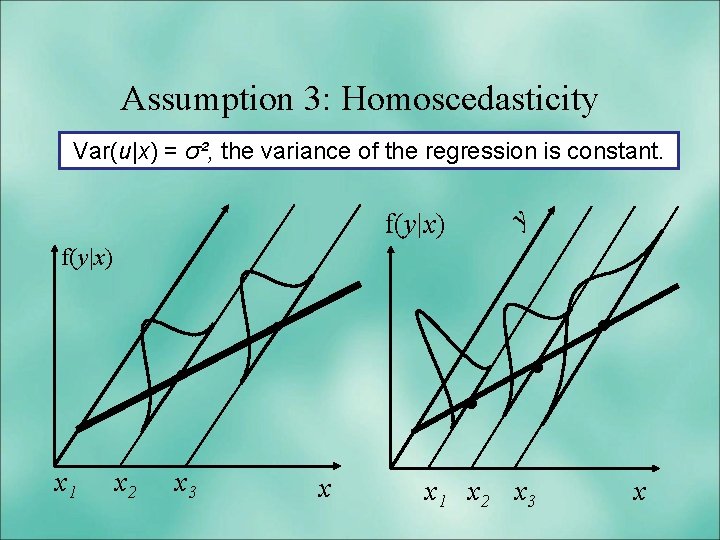

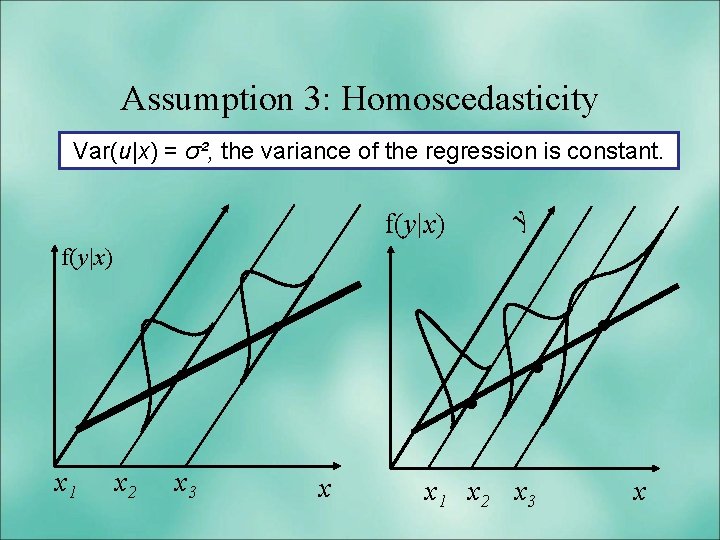

Assumption 3: Homoscedasticity Var(u|x) = σ², the variance of the regression is constant. f(y|x) y f(y|x) . x 1 x 2 x 3 . . . x x 1 x 2 x 3 . x

Assumption 4: Normality -The error term u is normally distributed with mean zero and variance σ². -This assumption is essential for inference and forecasting. -This assumption is not essential to estimate the parameters of the regression model. -We only need assumptions 1 -3 to derive the OLS estimators -OLS → ordinary least squares

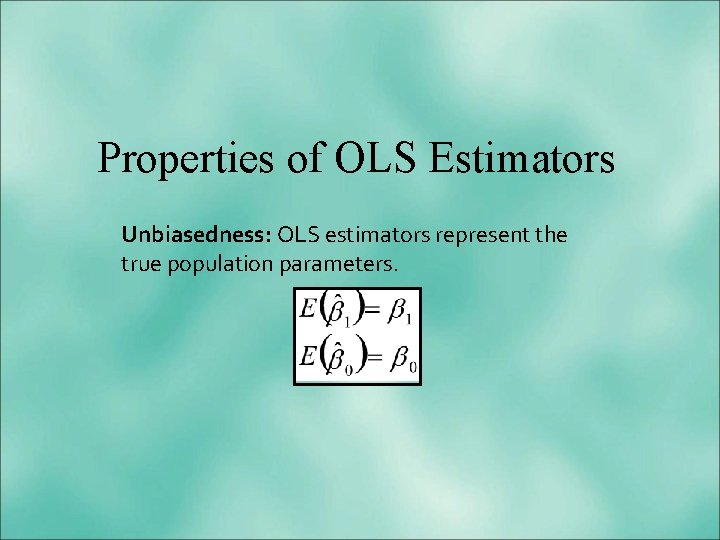

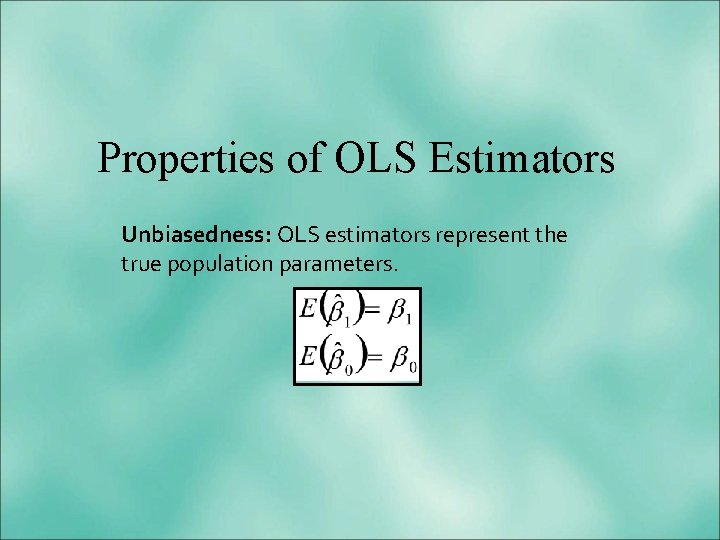

Properties of OLS Estimators Unbiasedness: OLS estimators represent the true population parameters.

Variance of OLS Estimators -We know that the sampling distribution of our estimate is centered around the true parameter (unbiasedness). -Unbiasedness is a description of the estimator—in a given sample we may be “near” or “far” from the true parameter. But on average, we will cover the population parameter. -Question: How spread out is the distribution of the OLS estimator? The answer to this question leads us to examining the variance of the OLS estimator.

Estimating the Error Variance -Variance of Population σ² vs. sample variance ². -The error variance, σ² , is unknown because we don’t observe the errors, ui. -What we observe are the residuals, ûi. -We can use the residuals to form an estimate of the error variance.

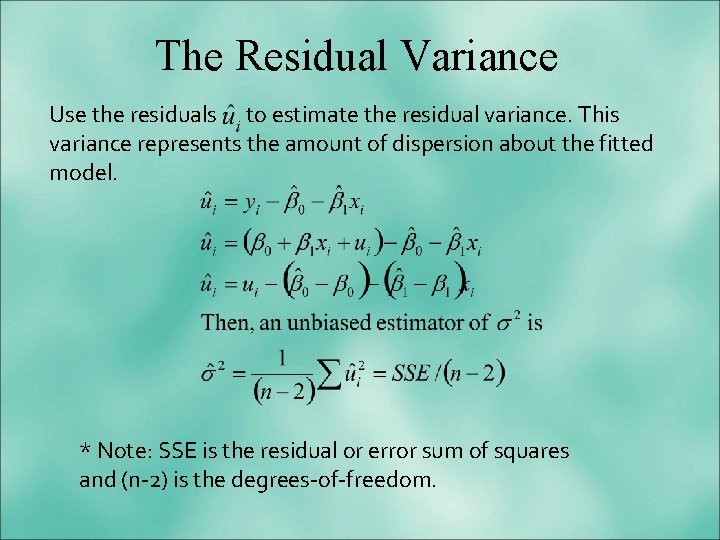

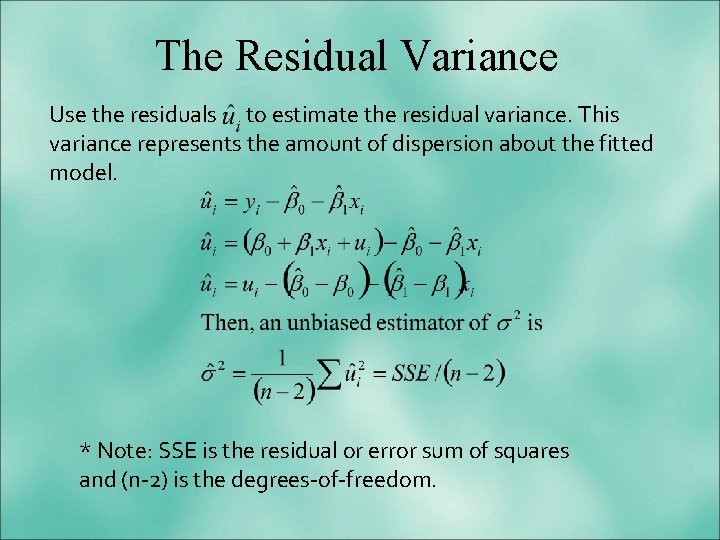

The Residual Variance Use the residuals to estimate the residual variance. This variance represents the amount of dispersion about the fitted model. * Note: SSE is the residual or error sum of squares and (n-2) is the degrees-of-freedom.

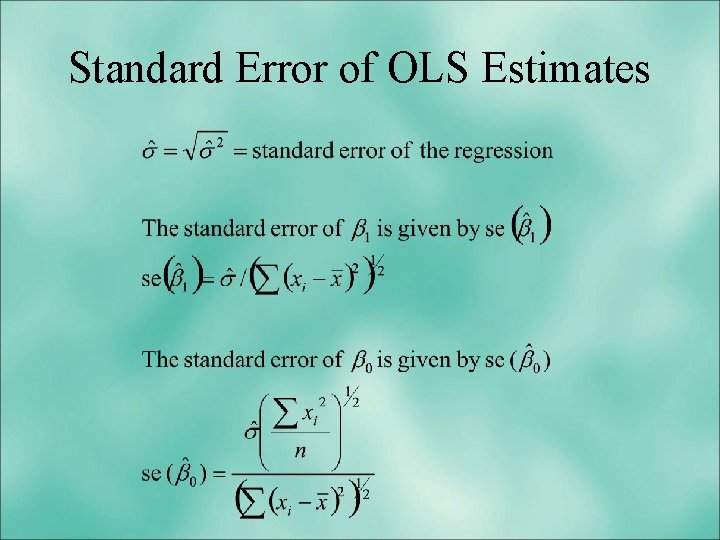

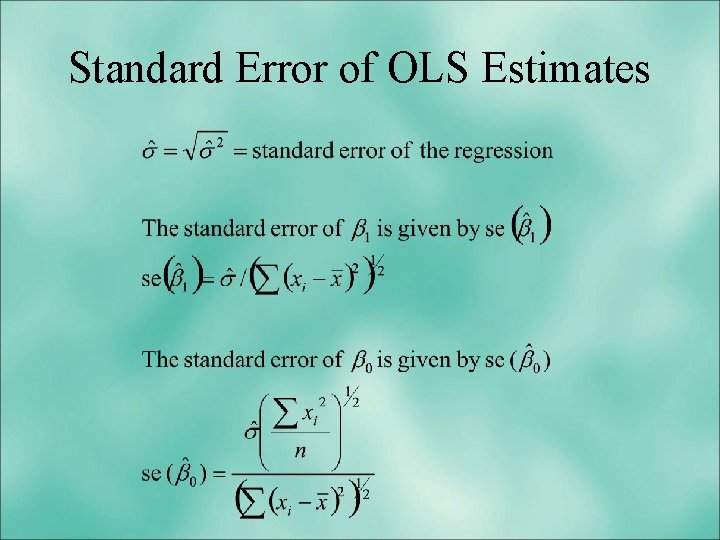

Standard Error of OLS Estimates

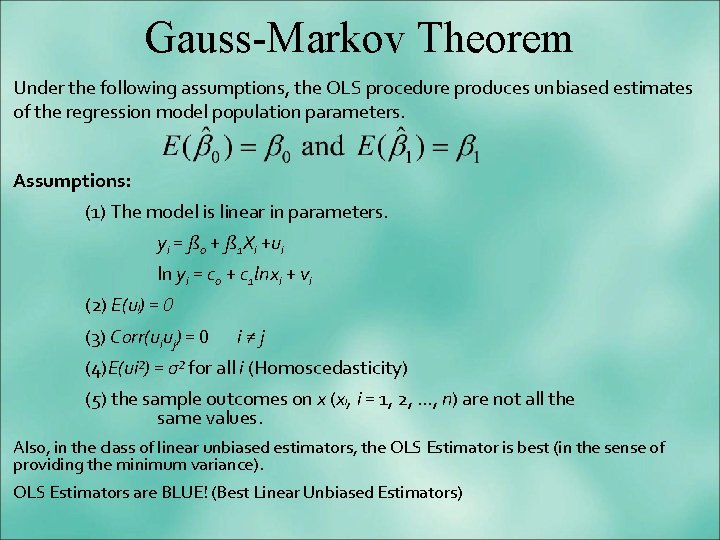

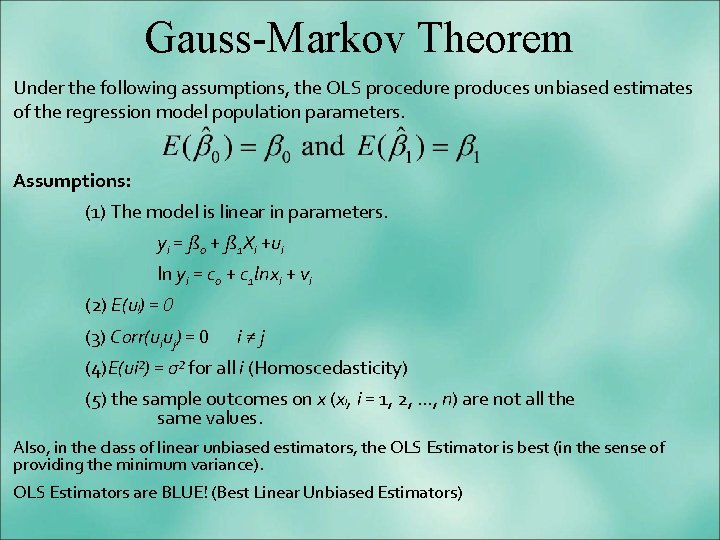

Gauss-Markov Theorem Under the following assumptions, the OLS procedure produces unbiased estimates of the regression model population parameters. Assumptions: (1) The model is linear in parameters. yi = ß 0 + ß 1 Xi +ui ln yi = c 0 + c 1 lnxi + vi (2) E(ui) = 0 (3) Corr(uiuj) = 0 i≠j (4)E(ui²) = σ² for all i (Homoscedasticity) (5) the sample outcomes on x (xi, i = 1, 2, …, n) are not all the same values. Also, in the class of linear unbiased estimators, the OLS Estimator is best (in the sense of providing the minimum variance). OLS Estimators are BLUE! (Best Linear Unbiased Estimators)

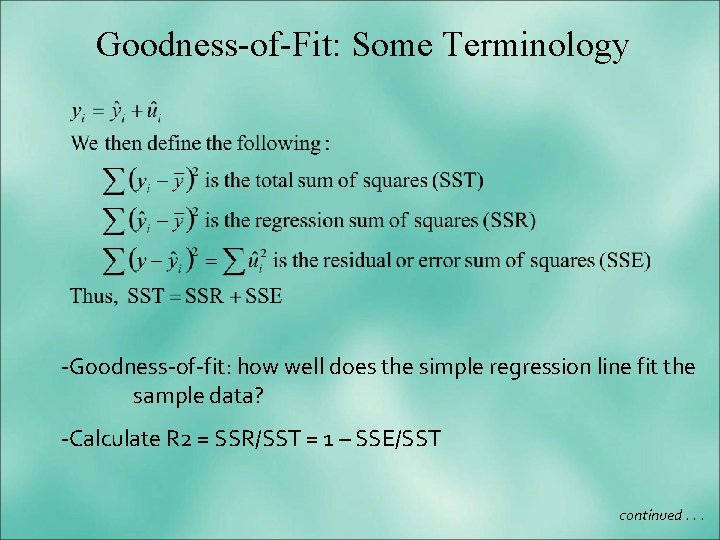

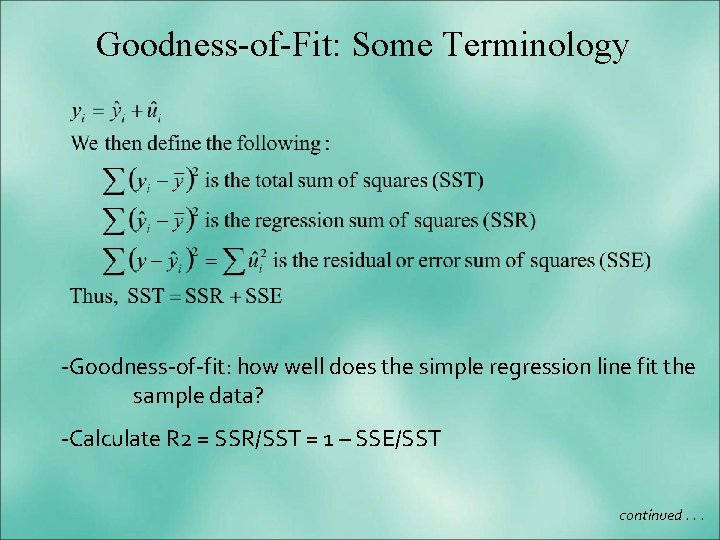

Goodness-of-Fit: Some Terminology -Goodness-of-fit: how well does the simple regression line fit the sample data? -Calculate R 2 = SSR/SST = 1 – SSE/SST continued. . .

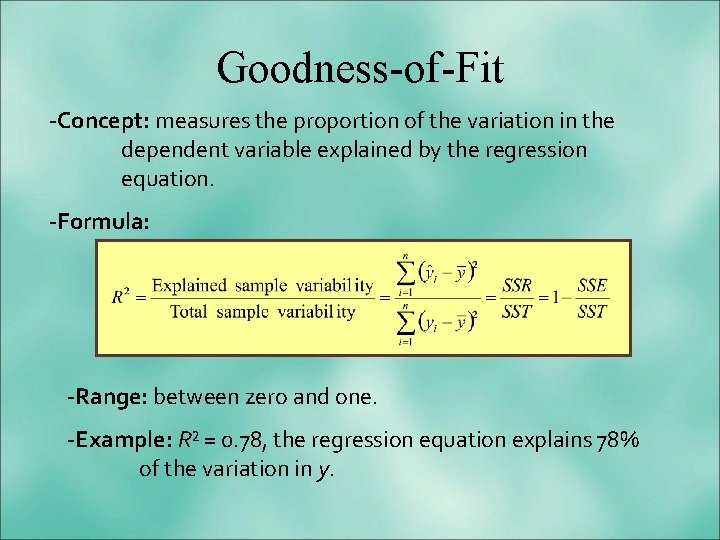

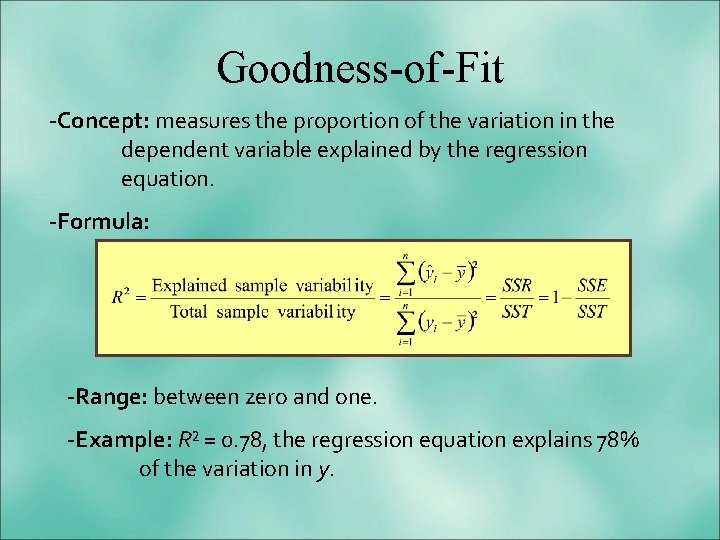

Goodness-of-Fit -Concept: measures the proportion of the variation in the dependent variable explained by the regression equation. -Formula: -Range: between zero and one. -Example: R² = 0. 78, the regression equation explains 78% of the variation in y.

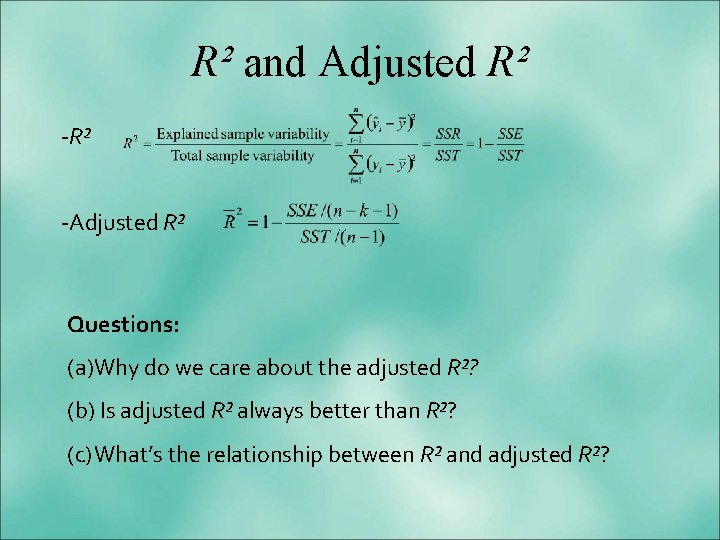

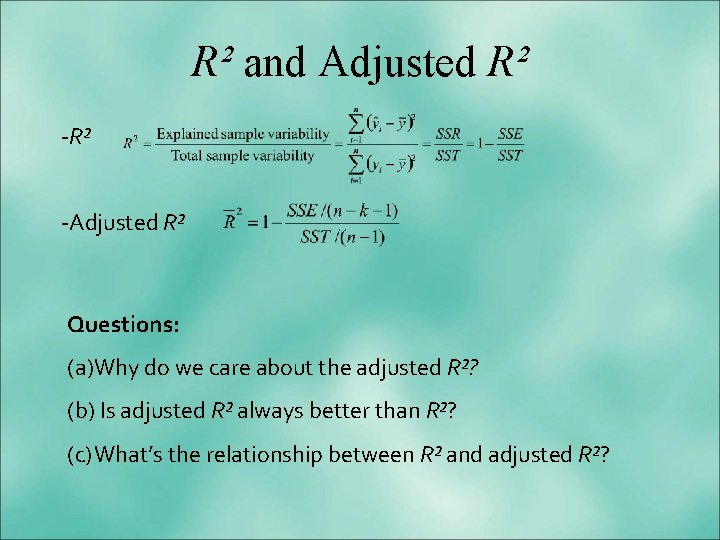

R² and Adjusted R² -Adjusted R² Questions: (a)Why do we care about the adjusted R²? (b) Is adjusted R² always better than R²? (c) What’s the relationship between R² and adjusted R²?

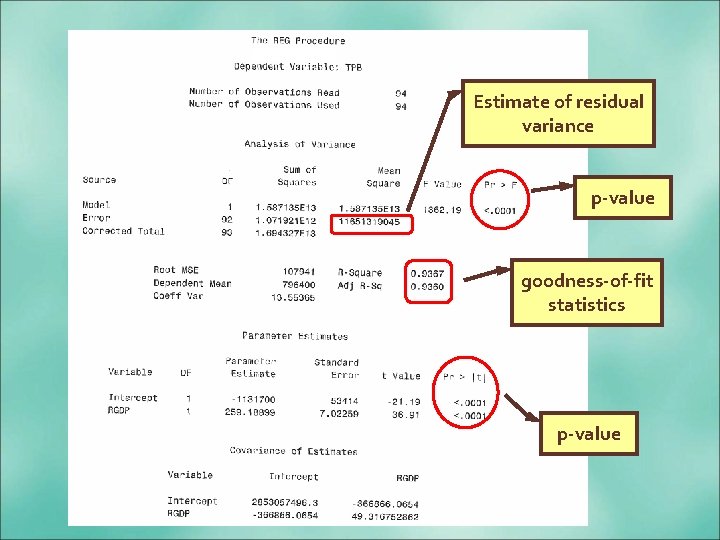

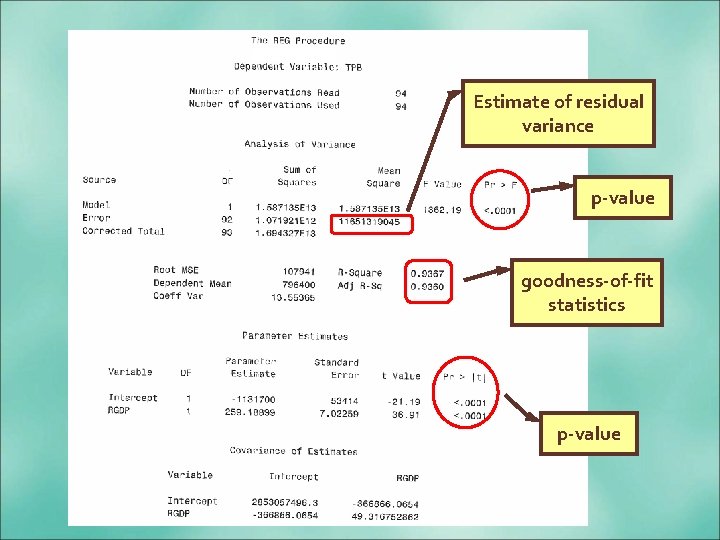

Estimate of residual variance p-value goodness-of-fit statistics p-value

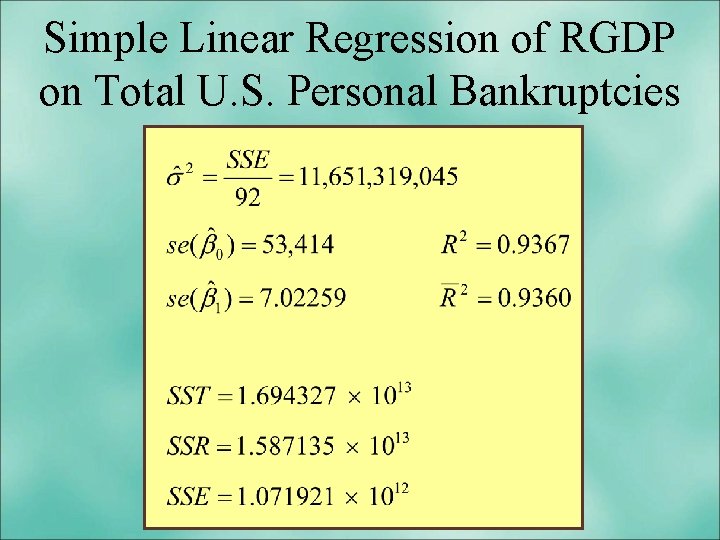

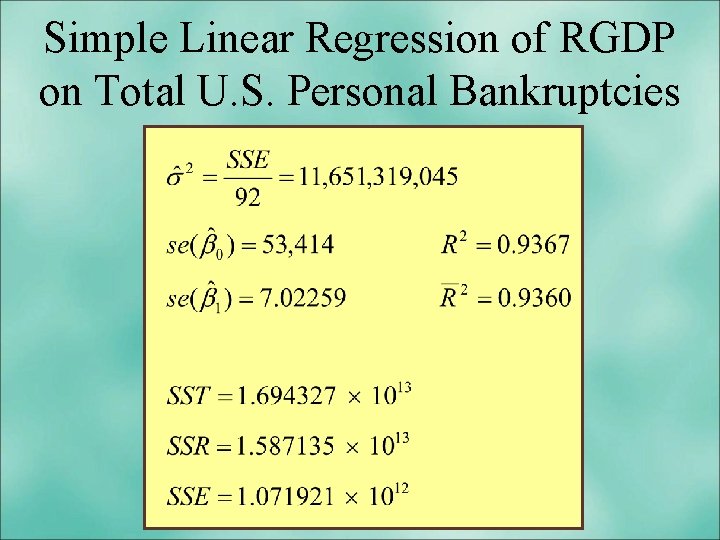

Simple Linear Regression of RGDP on Total U. S. Personal Bankruptcies

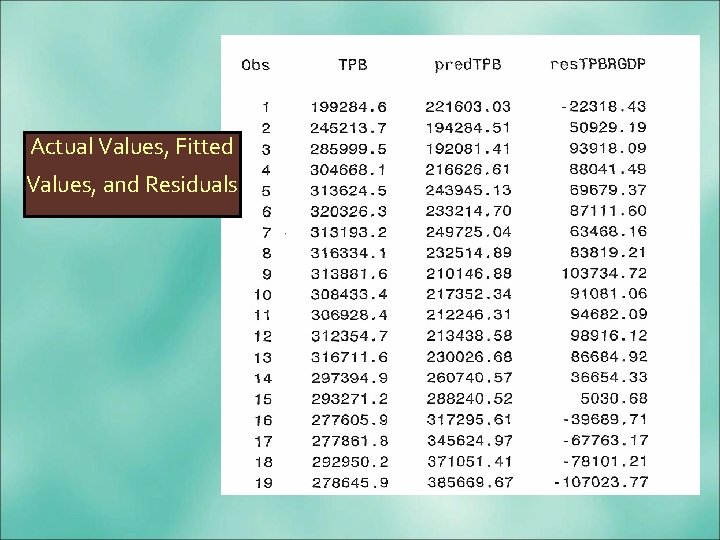

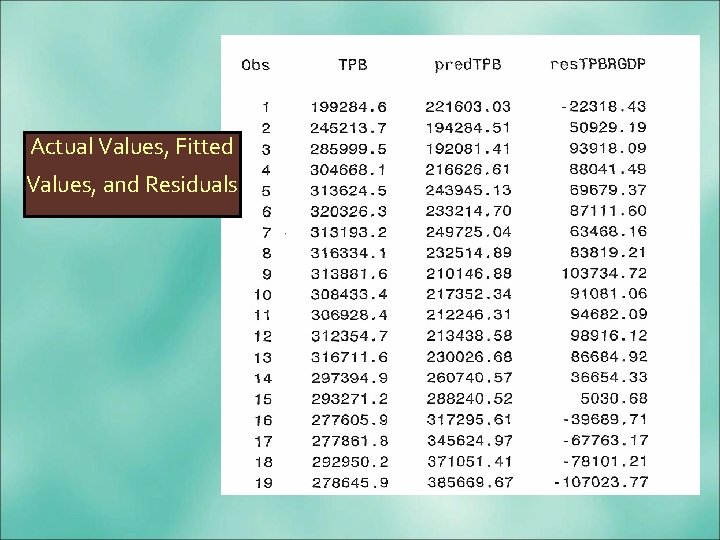

Actual Values, Fitted Values, and Residuals

What Have We Learned About Regression Analysis Thus Far? -Population parameters vs. sample parameters -Getting a feel for the data -Ordinary least squares (OLS) (a) Assumptions (b) Estimators (c) Unbiasedness (d) Interpretation of Estimated Parameters -Goodness-of-fit (a) R² (b) adjusted R²

Coming Attractions The Multiple Linear Regression Model Estimation and Inference Use of SAS to Conduct the Regression Analysis