Regexes vs Regular Expressions and Recursive Descent Parser

- Slides: 35

Regexes vs Regular Expressions; and Recursive Descent Parser Ras Bodik, Thibaud Hottelier, James Ide UC Berkeley CS 164: Introduction to Programming Languages and Compilers Fall 2010 1

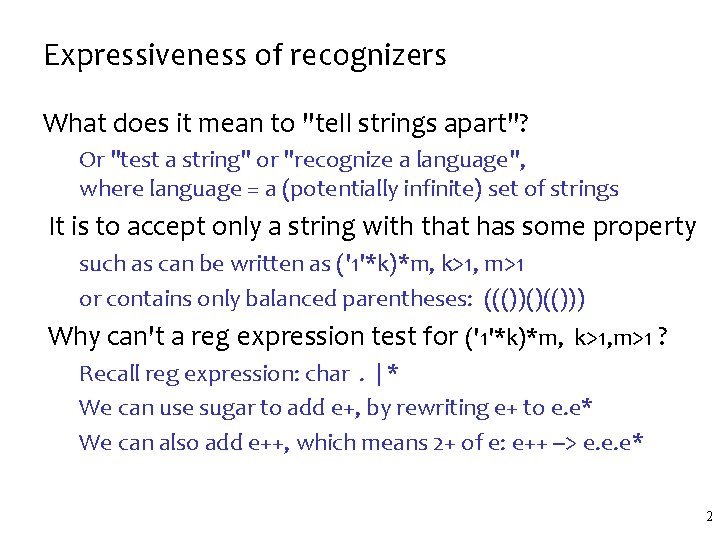

Expressiveness of recognizers What does it mean to "tell strings apart"? Or "test a string" or "recognize a language", where language = a (potentially infinite) set of strings It is to accept only a string with that has some property such as can be written as ('1'*k)*m, k>1, m>1 or contains only balanced parentheses: ((())()(())) Why can't a reg expression test for ('1'*k)*m, k>1, m>1 ? Recall reg expression: char. | * We can use sugar to add e+, by rewriting e+ to e. e* We can also add e++, which means 2+ of e: e++ --> e. e. e* 2

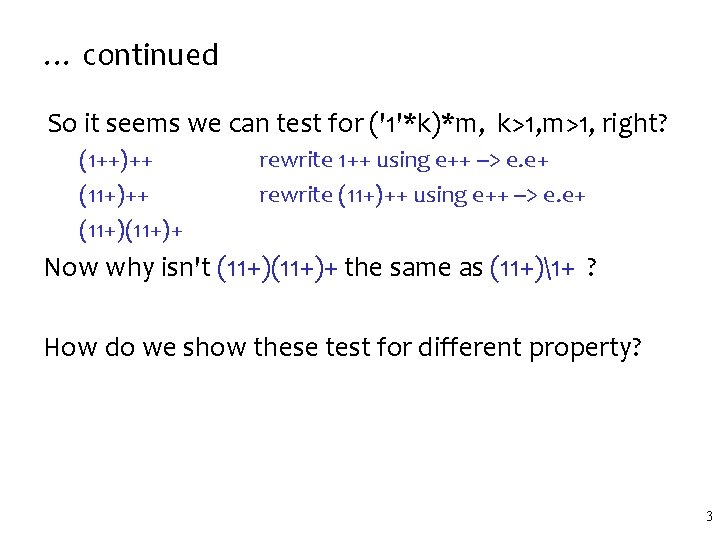

… continued So it seems we can test for ('1'*k)*m, k>1, m>1, right? (1++)++ (11+)(11+)+ rewrite 1++ using e++ --> e. e+ rewrite (11+)++ using e++ --> e. e+ Now why isn't (11+)+ the same as (11+)1+ ? How do we show these test for different property? 3

A refresher Regexes and regular expressions both support operators in this grammar R : : = char | R R | R* | R ‘|’ R Regexes suppot more operators, such as backreferences 1, 2, Capturing groups but let’s ignore this for now. 4

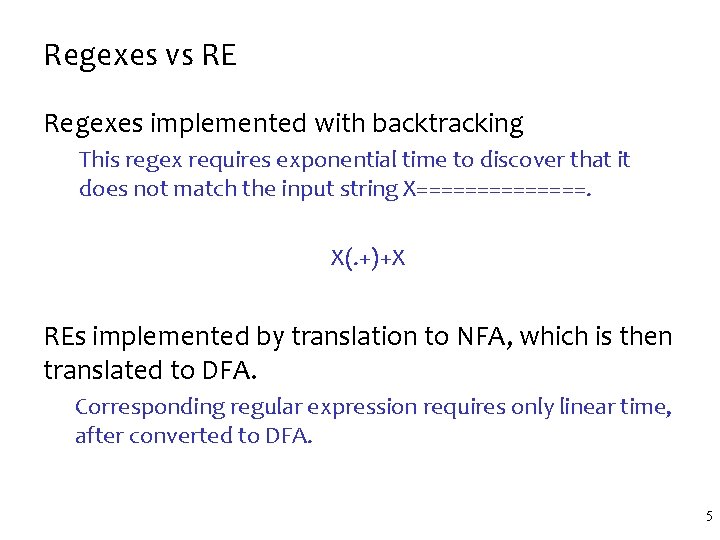

Regexes vs RE Regexes implemented with backtracking This regex requires exponential time to discover that it does not match the input string X=======. X(. +)+X REs implemented by translation to NFA, which is then translated to DFA. Corresponding regular expression requires only linear time, after converted to DFA. 5

Match. All On the problem of detecting whether a pattern (regex or RE) matches the entire string, both regex and RE interpretation of a patter agree – After all, to match the whole string, it is sufficient to find any number of times that a Kleene star matches 6

Let’s now focus on when regex and RE differ 7

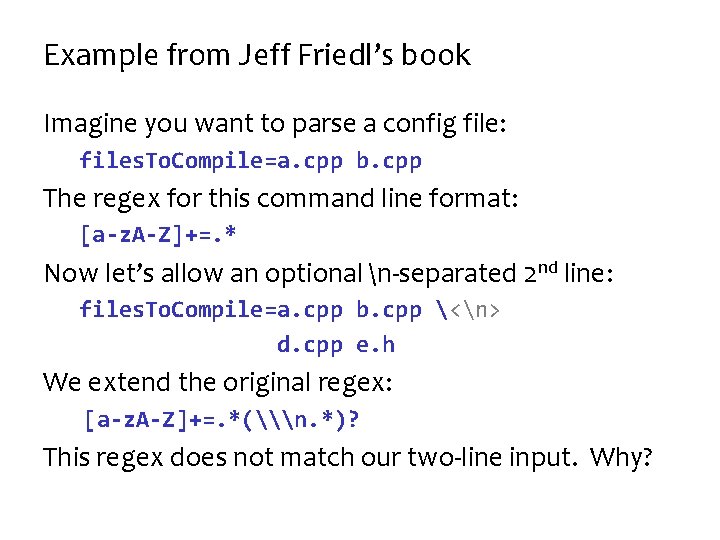

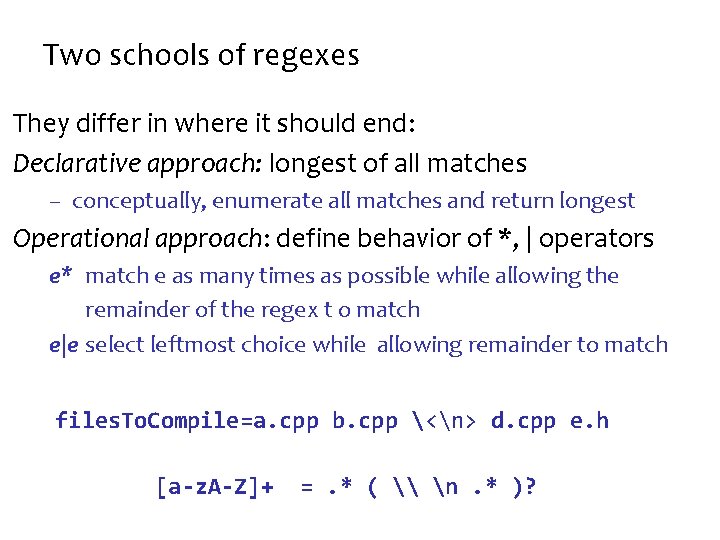

Example from Jeff Friedl’s book Imagine you want to parse a config file: files. To. Compile=a. cpp b. cpp The regex for this command line format: [a-z. A-Z]+=. * Now let’s allow an optional n-separated 2 nd line: files. To. Compile=a. cpp b. cpp <n> d. cpp e. h We extend the original regex: [a-z. A-Z]+=. *(\n. *)? This regex does not match our two-line input. Why?

What compiler textbooks don’t teach you The textbook string matching problem is simple: Does a regex r match the entire string s? – a clean statement and suitable for theoretical study – here is where regexes and FSMs are equivalent The matching problem in the Real World: Given a string s and a regex r, find a substring in s matching r. Do you see the language design issue here? – There may be many such substrings. – We need to decide which substring to find. It is easy to agree where the substring should start: – the matched substring should be the leftmost match

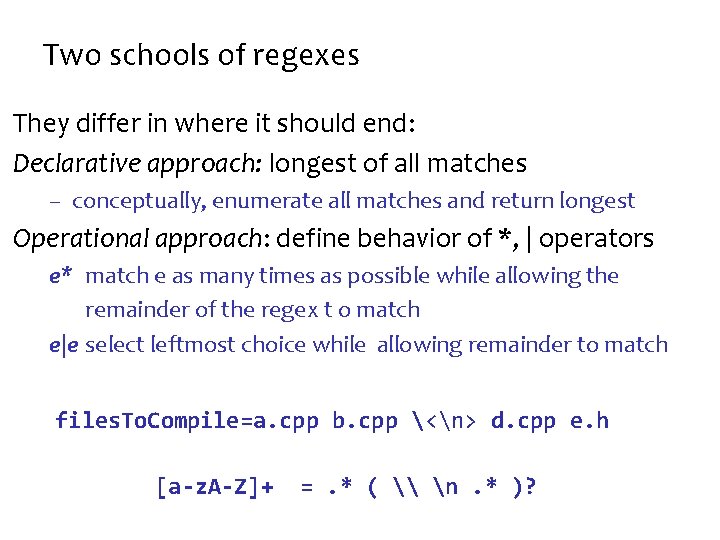

Two schools of regexes They differ in where it should end: Declarative approach: longest of all matches – conceptually, enumerate all matches and return longest Operational approach: define behavior of *, | operators e* match e as many times as possible while allowing the remainder of the regex t o match e|e select leftmost choice while allowing remainder to match files. To. Compile=a. cpp b. cpp <n> d. cpp e. h [a-z. A-Z]+ =. * ( \ n. * )?

These are important differences We saw a non-contrived regex can behave differently – personal story: I spent 3 hours debugging a similar regex – despite reading the manual carefully The (greedy) operational semantics of * – does not guarantee longest match (in case you need it) – forces the programmer to reason about backtracking It seems that backtracking is nice to reason about – because it’s local: no need to consider the entire regex – cognitive load is actually higher, as it breaks composition 11

Where in history of re did things go wrong? It’s tempting to blame perl – but the greedy regex semantics seems older – there are other reasons why backtracking is used Hypothesis 1: creators of re libs knew not that NFA can – can be the target language for compiling regexes – find all matches simultaneously (no backtracking) – be implemented efficiently (convert NFA to DFA) Hypothesis 2: their hands were tied – Ken Thompson’s algorithm for re-to-NFA was patented With backtracking came the greedy semantics – longest match would be expensive (must try all matches) – so semantics was defined greedily, and non-compositionally

Concepts • Syntax tree-directed translation (re to NFA) • recognizers: tell strings apart • NFA, DFA, regular expressions = equally powerful • but 1 (backreference) makes regexes more pwrful • Syntax sugar: e+ to e. e* • Compositionality: be weary of greedy semantics • Metacharacters: characters with special meaning 13

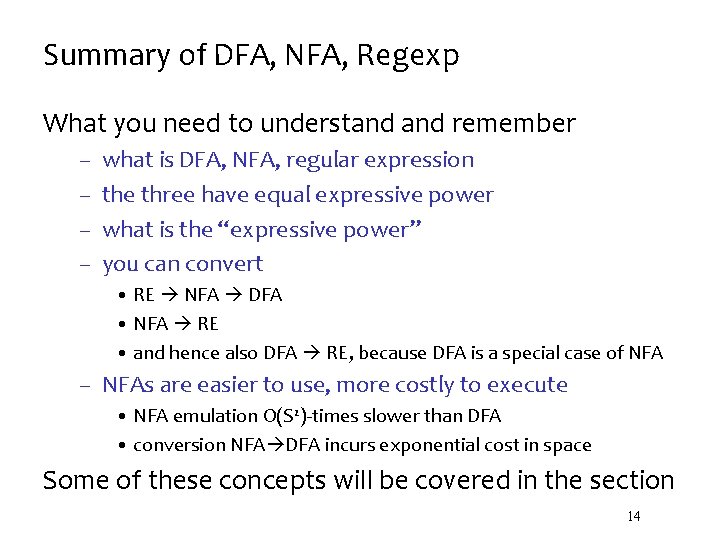

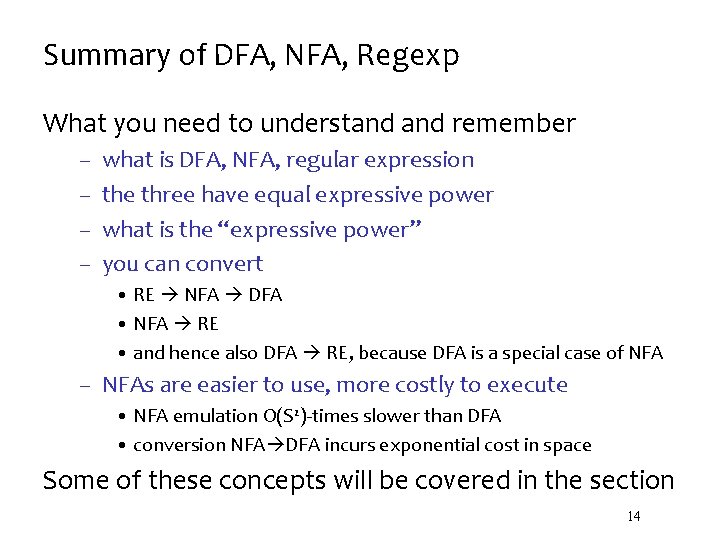

Summary of DFA, NFA, Regexp What you need to understand remember – – what is DFA, NFA, regular expression the three have equal expressive power what is the “expressive power” you can convert • RE NFA DFA • NFA RE • and hence also DFA RE, because DFA is a special case of NFA – NFAs are easier to use, more costly to execute • NFA emulation O(S 2)-times slower than DFA • conversion NFA DFA incurs exponential cost in space Some of these concepts will be covered in the section 14

Recursive descent parser 15

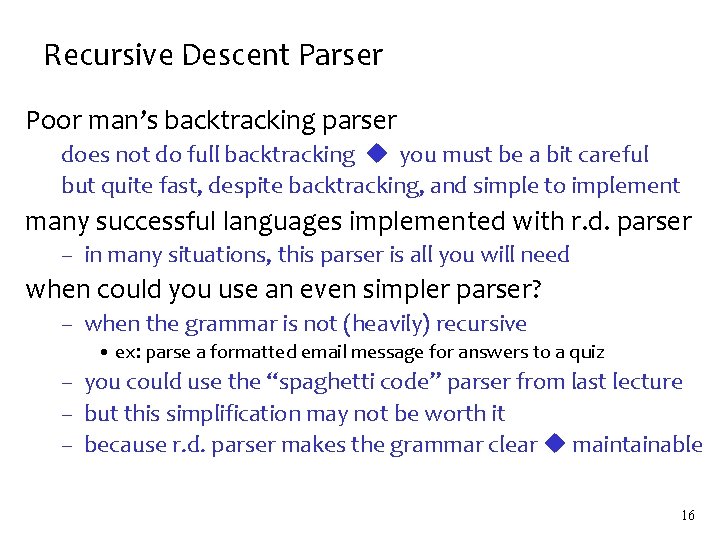

Recursive Descent Parser Poor man’s backtracking parser does not do full backtracking you must be a bit careful but quite fast, despite backtracking, and simple to implement many successful languages implemented with r. d. parser – in many situations, this parser is all you will need when could you use an even simpler parser? – when the grammar is not (heavily) recursive • ex: parse a formatted email message for answers to a quiz – you could use the “spaghetti code” parser from last lecture – but this simplification may not be worth it – because r. d. parser makes the grammar clear maintainable 16

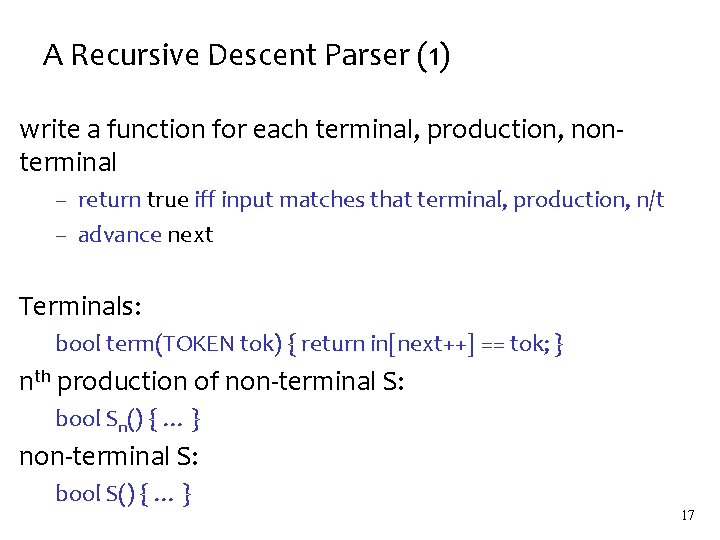

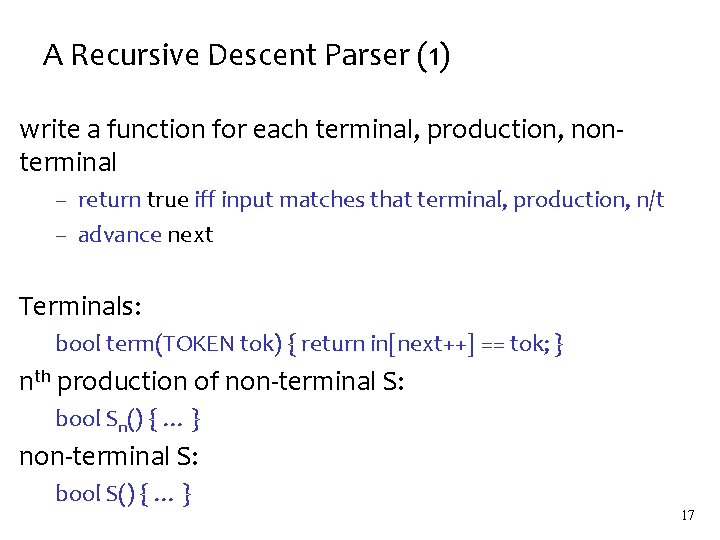

A Recursive Descent Parser (1) write a function for each terminal, production, nonterminal – return true iff input matches that terminal, production, n/t – advance next Terminals: bool term(TOKEN tok) { return in[next++] == tok; } nth production of non-terminal S: bool Sn() { … } non-terminal S: bool S() { … } 17

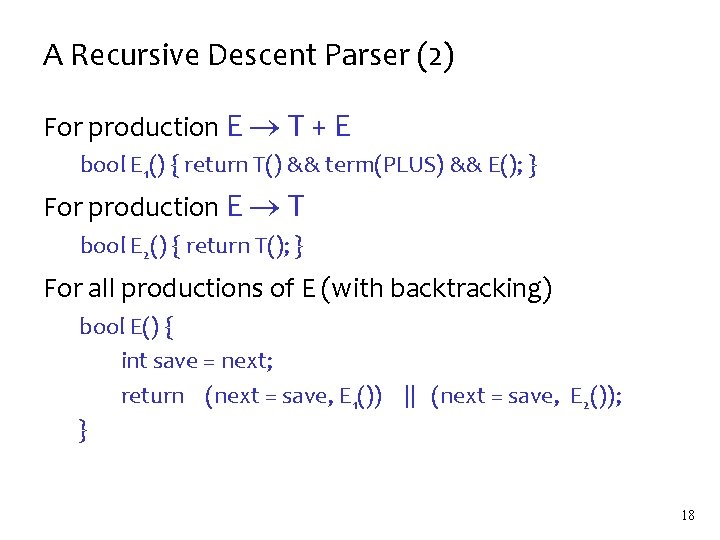

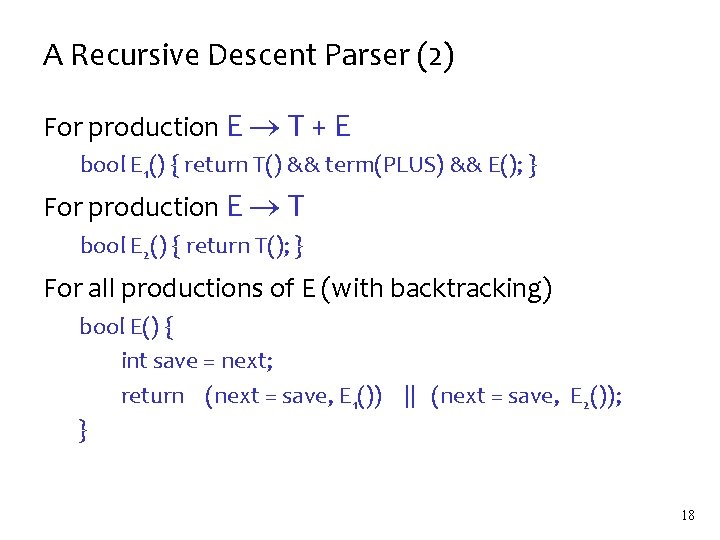

A Recursive Descent Parser (2) For production E T + E bool E 1() { return T() && term(PLUS) && E(); } For production E T bool E 2() { return T(); } For all productions of E (with backtracking) bool E() { int save = next; return (next = save, E 1()) || (next = save, E 2()); } 18

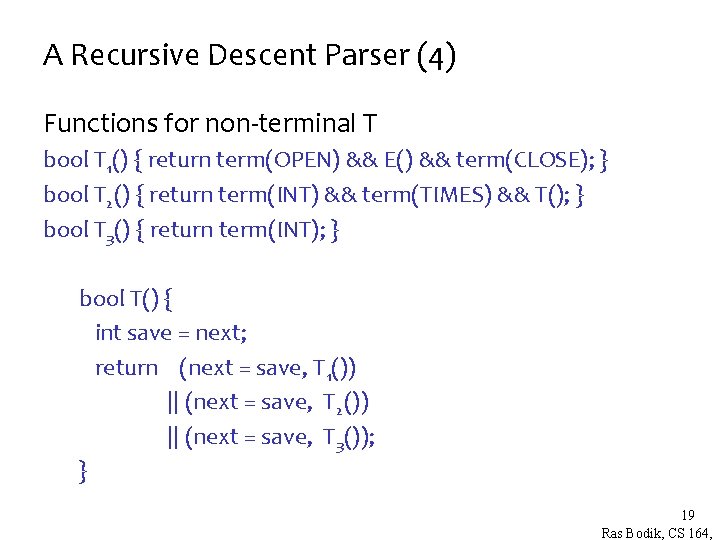

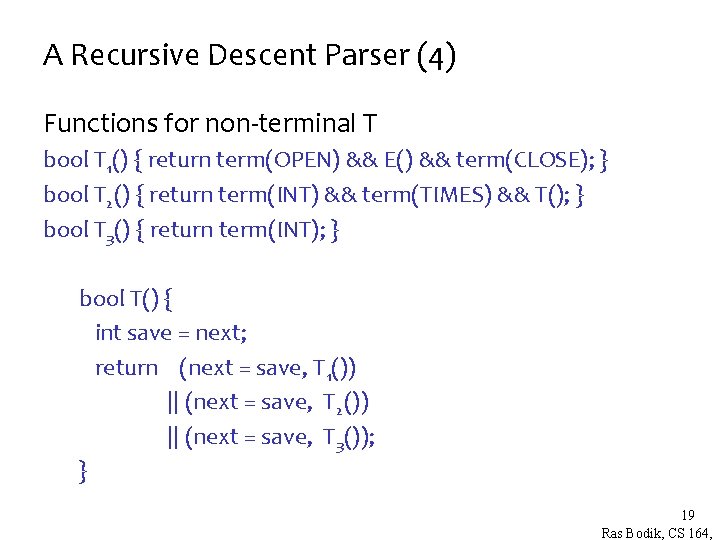

A Recursive Descent Parser (4) Functions for non-terminal T bool T 1() { return term(OPEN) && E() && term(CLOSE); } bool T 2() { return term(INT) && term(TIMES) && T(); } bool T 3() { return term(INT); } bool T() { int save = next; return (next = save, T 1()) || (next = save, T 2()) || (next = save, T 3()); } 19 Ras Bodik, CS 164,

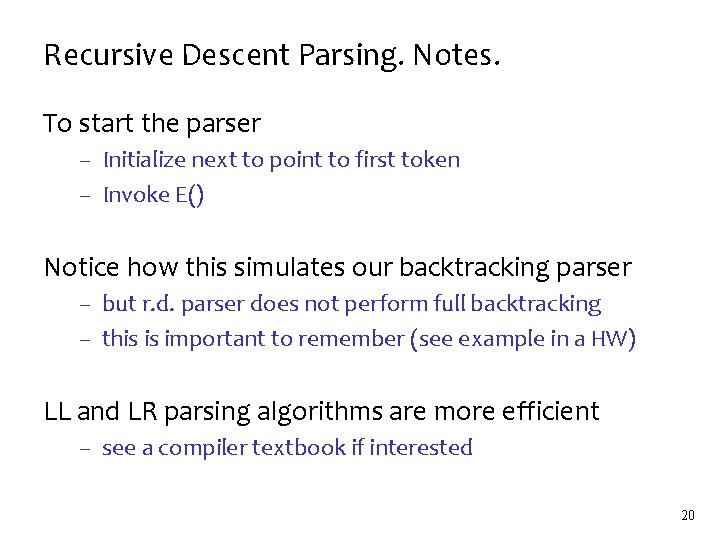

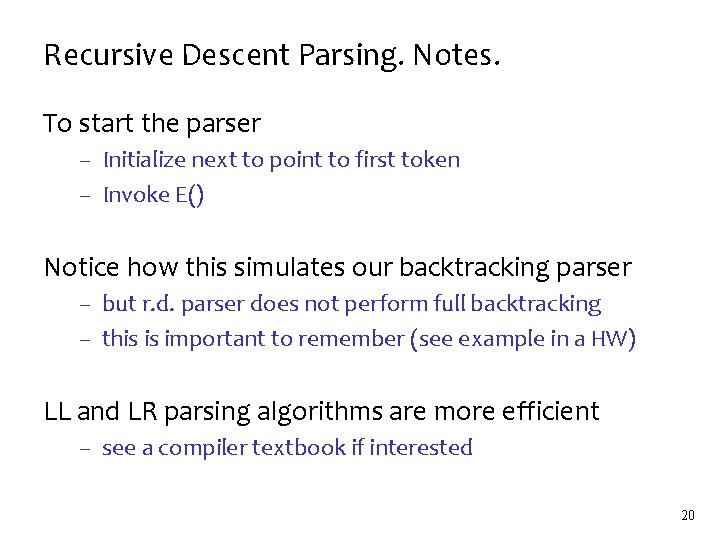

Recursive Descent Parsing. Notes. To start the parser – Initialize next to point to first token – Invoke E() Notice how this simulates our backtracking parser – but r. d. parser does not perform full backtracking – this is important to remember (see example in a HW) LL and LR parsing algorithms are more efficient – see a compiler textbook if interested 20

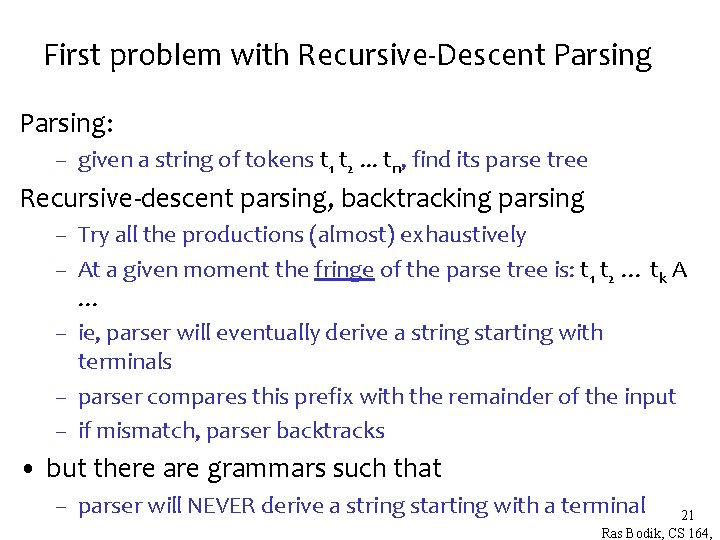

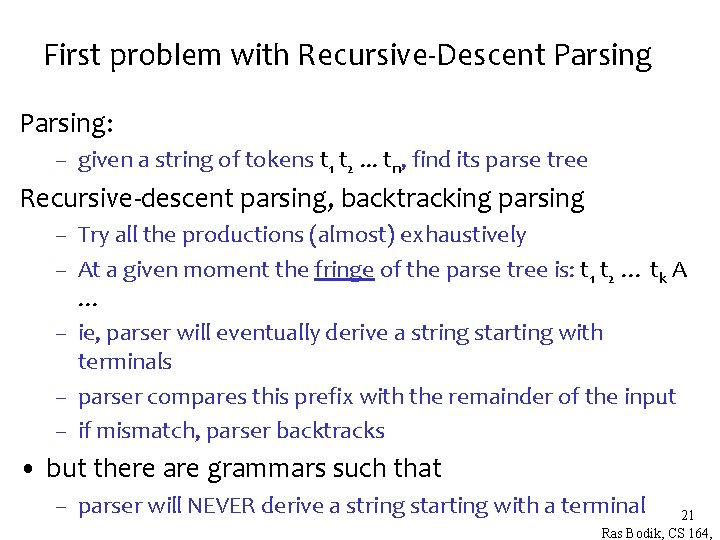

First problem with Recursive-Descent Parsing: – given a string of tokens t 1 t 2. . . tn, find its parse tree Recursive-descent parsing, backtracking parsing – Try all the productions (almost) exhaustively – At a given moment the fringe of the parse tree is: t 1 t 2 … tk A … – ie, parser will eventually derive a string starting with terminals – parser compares this prefix with the remainder of the input – if mismatch, parser backtracks • but there are grammars such that – parser will NEVER derive a string starting with a terminal 21 Ras Bodik, CS 164,

Eliminating left recursion

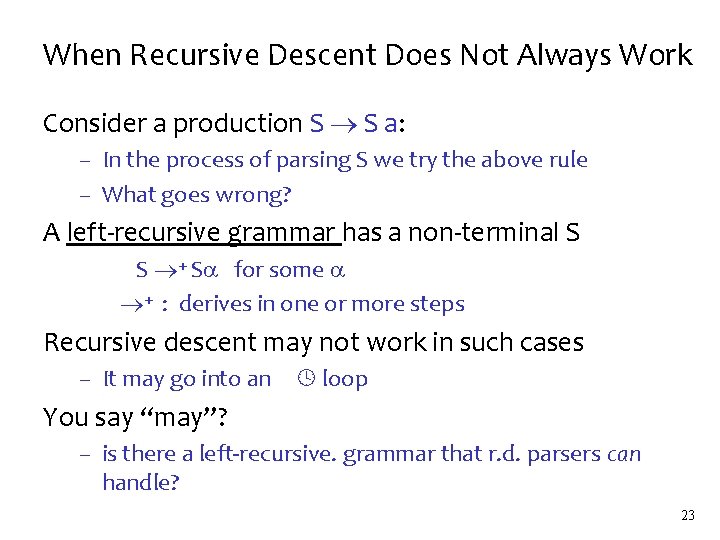

When Recursive Descent Does Not Always Work Consider a production S S a: – In the process of parsing S we try the above rule – What goes wrong? A left-recursive grammar has a non-terminal S S + S for some + : derives in one or more steps Recursive descent may not work in such cases – It may go into an loop You say “may”? – is there a left-recursive. grammar that r. d. parsers can handle? 23

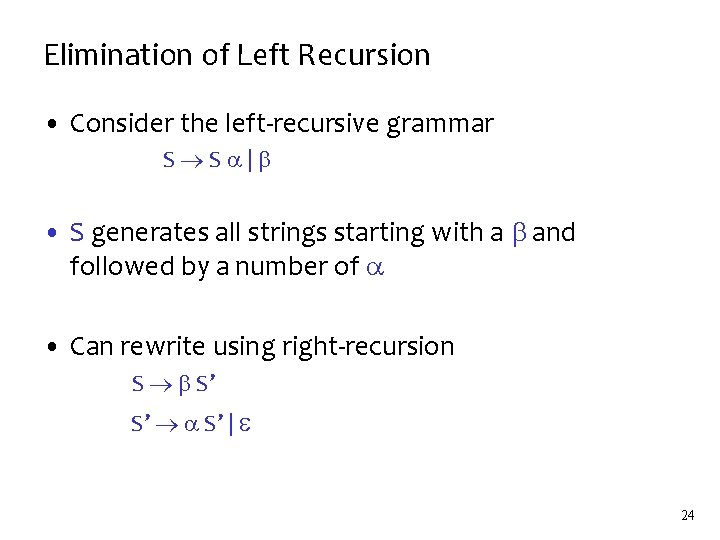

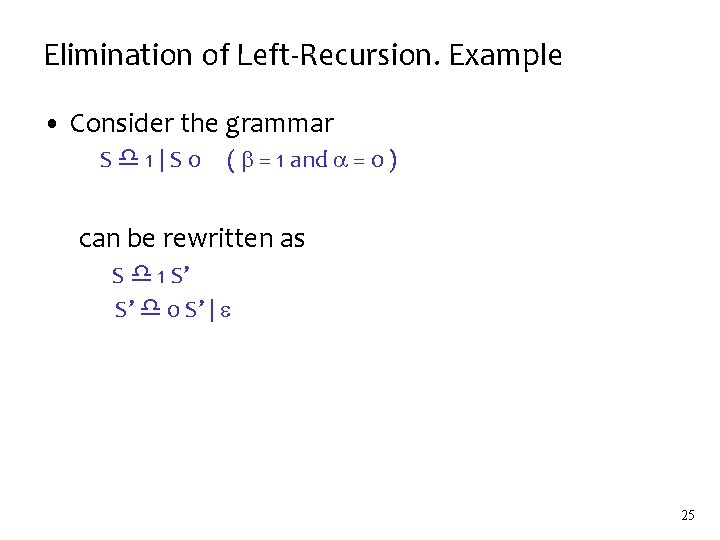

Elimination of Left Recursion • Consider the left-recursive grammar S S | • S generates all strings starting with a and followed by a number of • Can rewrite using right-recursion S S’ S’ S’ | 24

Elimination of Left-Recursion. Example • Consider the grammar S 1|S 0 ( = 1 and = 0 ) can be rewritten as S 1 S’ S’ 0 S’ | 25

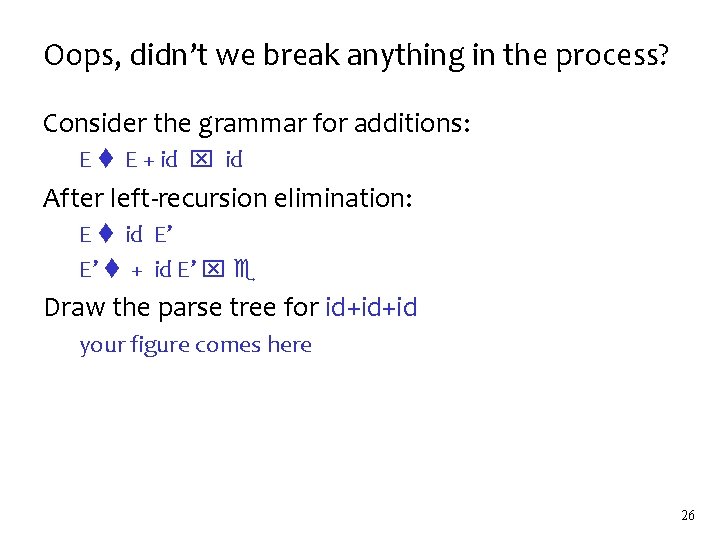

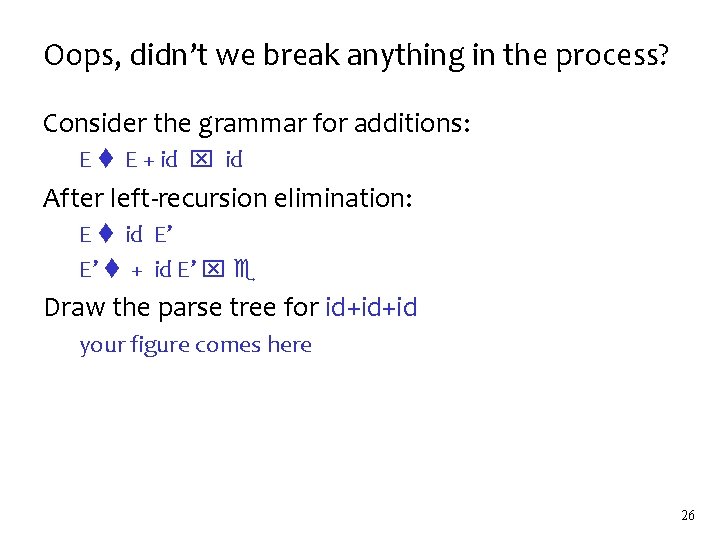

Oops, didn’t we break anything in the process? Consider the grammar for additions: E E + id After left-recursion elimination: E id E’ E’ + id E’ Draw the parse tree for id+id+id your figure comes here 26

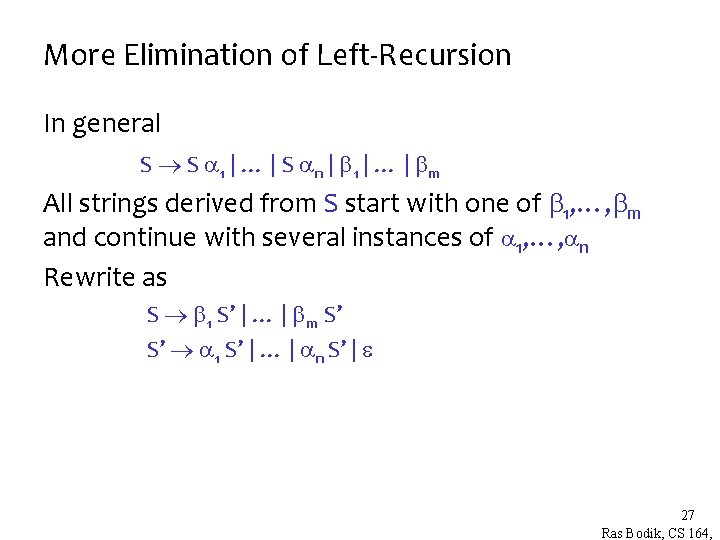

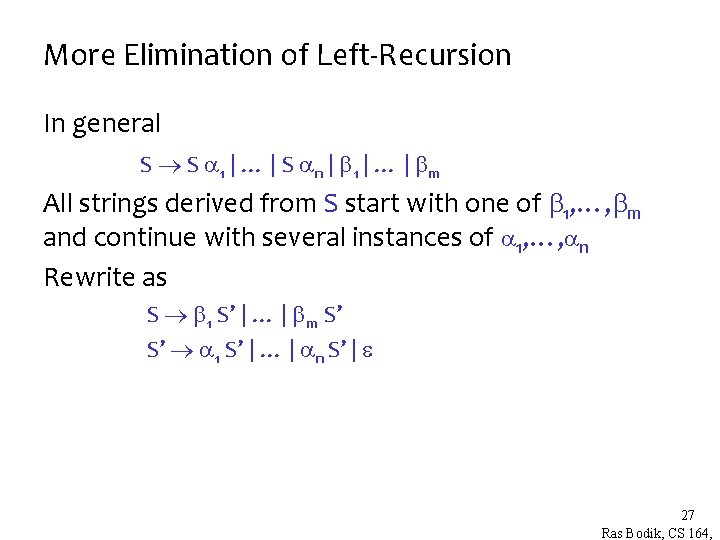

More Elimination of Left-Recursion In general S S 1 | … | S n | 1 | … | m All strings derived from S start with one of 1, …, m and continue with several instances of 1, …, n Rewrite as S 1 S’ | … | m S’ S’ 1 S’ | … | n S’ | 27 Ras Bodik, CS 164,

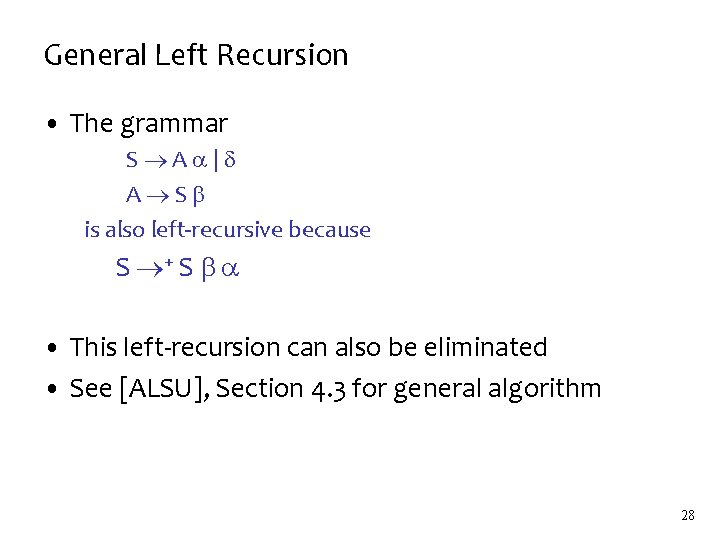

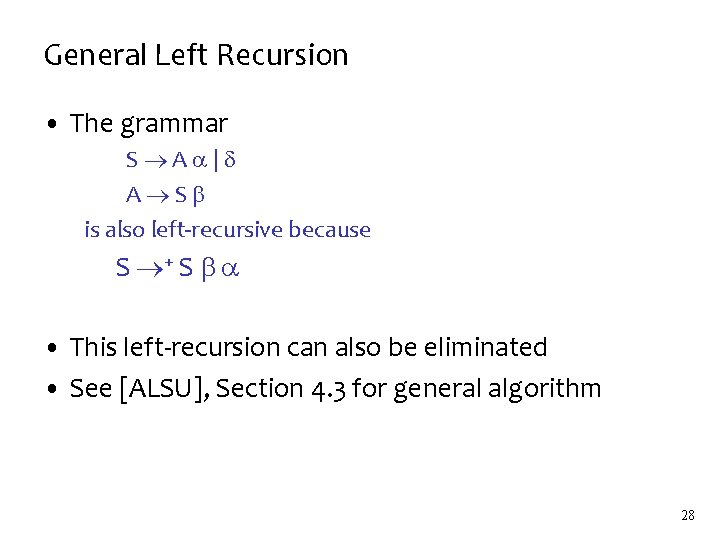

General Left Recursion • The grammar S A | A S is also left-recursive because S + S • This left-recursion can also be eliminated • See [ALSU], Section 4. 3 for general algorithm 28

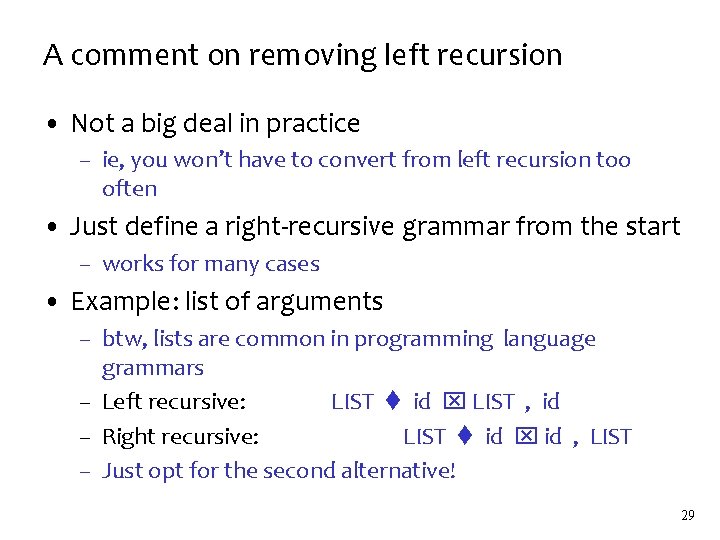

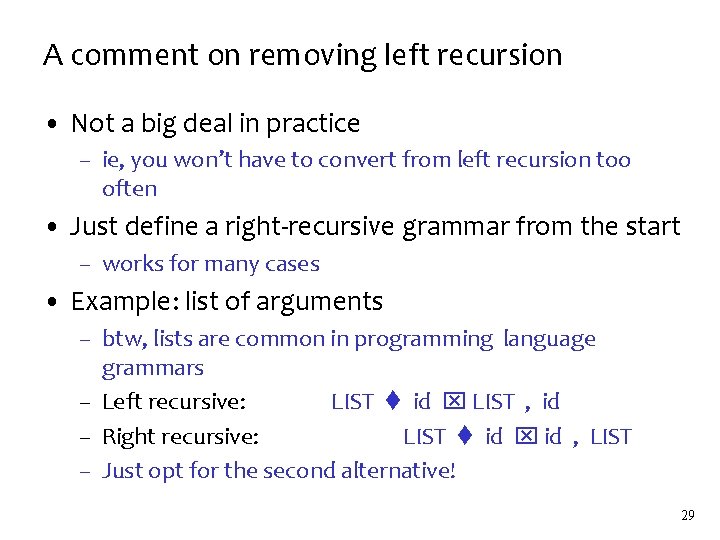

A comment on removing left recursion • Not a big deal in practice – ie, you won’t have to convert from left recursion too often • Just define a right-recursive grammar from the start – works for many cases • Example: list of arguments – btw, lists are common in programming language grammars – Left recursive: LIST id LIST , id – Right recursive: LIST id , LIST – Just opt for the second alternative! 29

Left Factoring

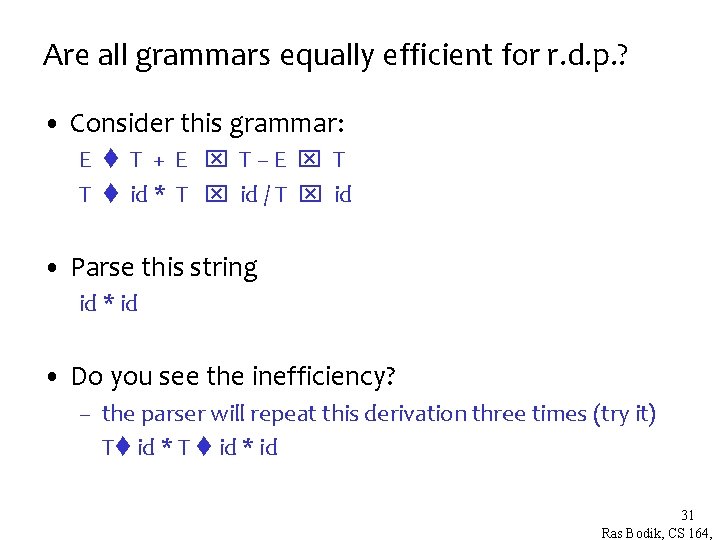

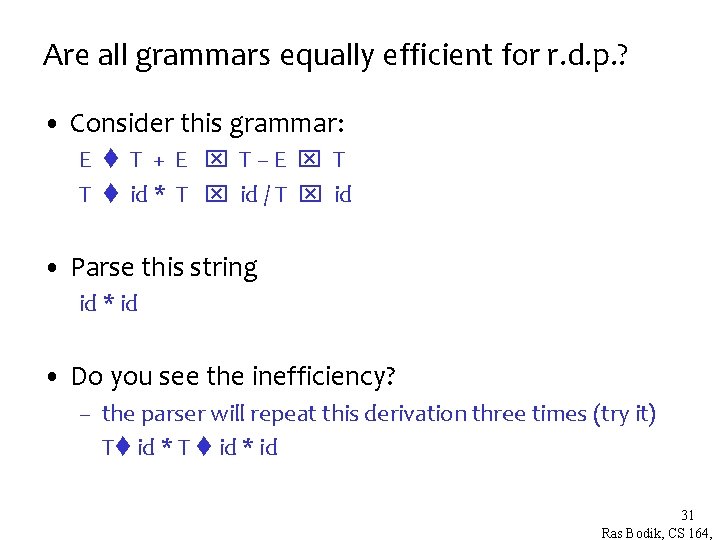

Are all grammars equally efficient for r. d. p. ? • Consider this grammar: E T + E T–E T T id * T id / T id • Parse this string id * id • Do you see the inefficiency? – the parser will repeat this derivation three times (try it) T id * T id * id 31 Ras Bodik, CS 164,

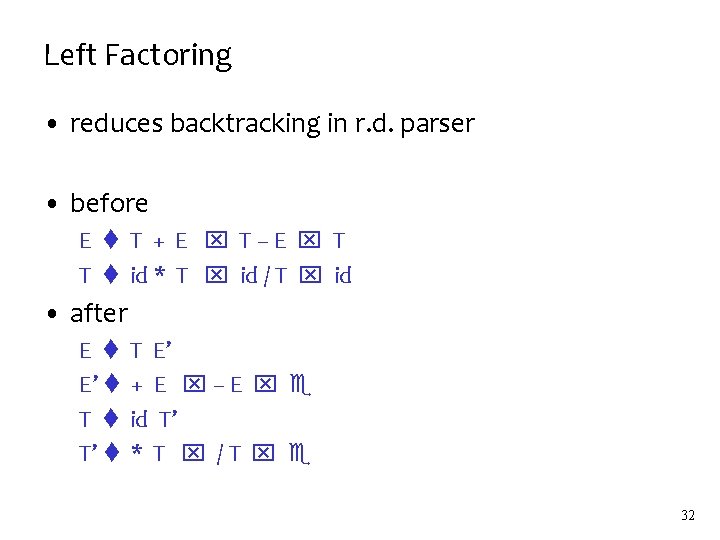

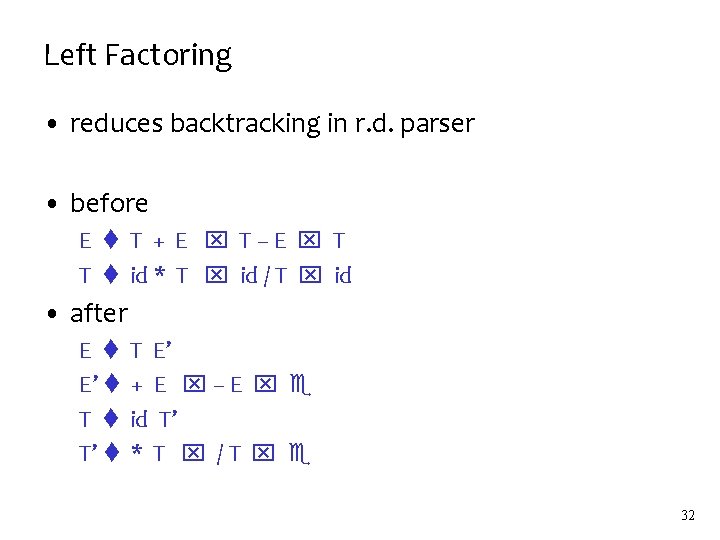

Left Factoring • reduces backtracking in r. d. parser • before E T + E T–E T T id * T id / T id • after E E’ T T’ T E’ + E –E id T’ * T /T 32

Limited Backtracking

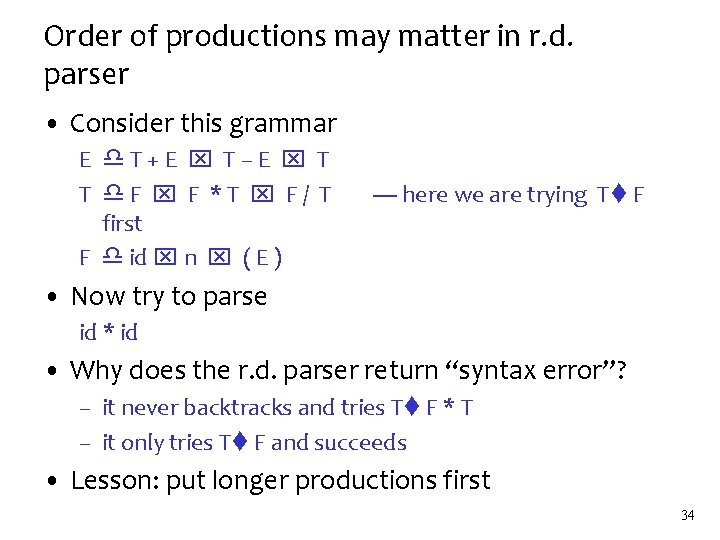

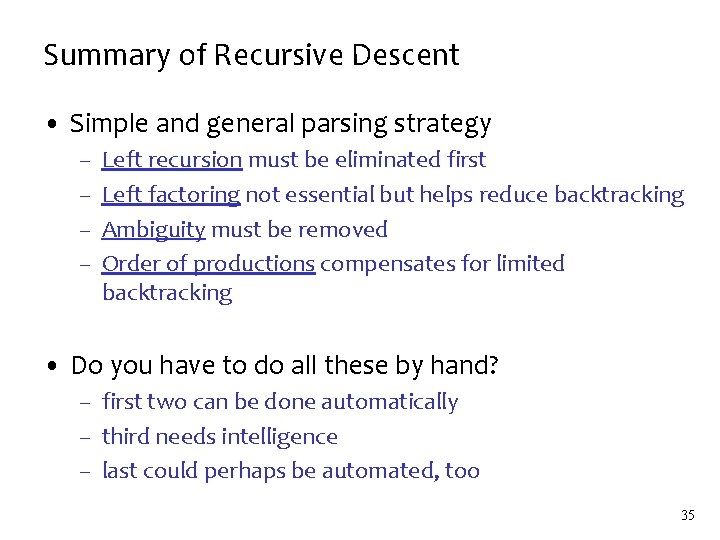

Order of productions may matter in r. d. parser • Consider this grammar E T+E T–E T T F F *T F/ T first F id n ( E ) ---- here we are trying T F • Now try to parse id * id • Why does the r. d. parser return “syntax error”? – it never backtracks and tries T F * T – it only tries T F and succeeds • Lesson: put longer productions first 34

Summary of Recursive Descent • Simple and general parsing strategy – – Left recursion must be eliminated first Left factoring not essential but helps reduce backtracking Ambiguity must be removed Order of productions compensates for limited backtracking • Do you have to do all these by hand? – first two can be done automatically – third needs intelligence – last could perhaps be automated, too 35