Reducing DRAM Latency at Low Cost by Exploiting Heterogeneity Donghyuk Lee Carnegie Mellon University

Problem: High DRAM Latency • Processor stalls while waiting for data from main memory (high latency) → Major bottleneck for system performance

Historical DRAM Trends [Figure: DRAM capacity (Gb) and latency (tRC, ns) by year, 2003 to 2011; capacity grew 16X while latency fell by only 20%] DRAM latency continues to be a critical bottleneck • Goal: Reduce DRAM latency at low cost

Why is DRAM slow?

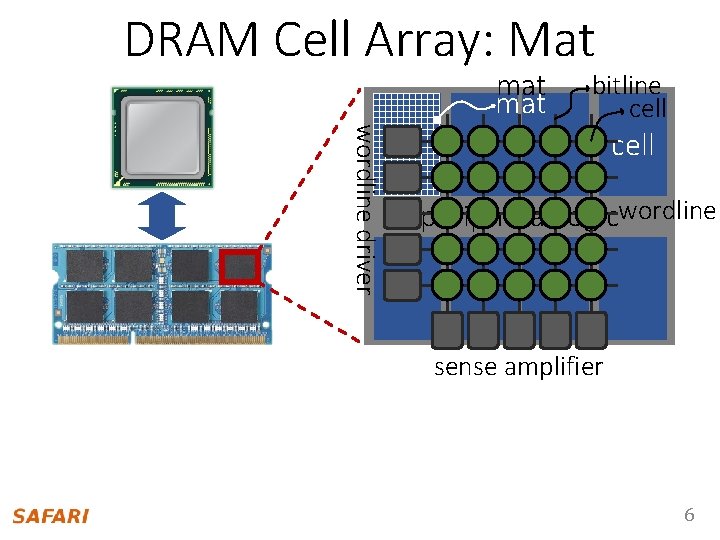

DRAM Organization [Figure labels: processor, main memory (high latency), bank, mat, peripheral logic]

DRAM Cell Array: Mat [Figure labels: mat, wordline driver, wordline, bitline, cell, sense amplifier, peripheral logic]

Cell Array (Mat): High Latency

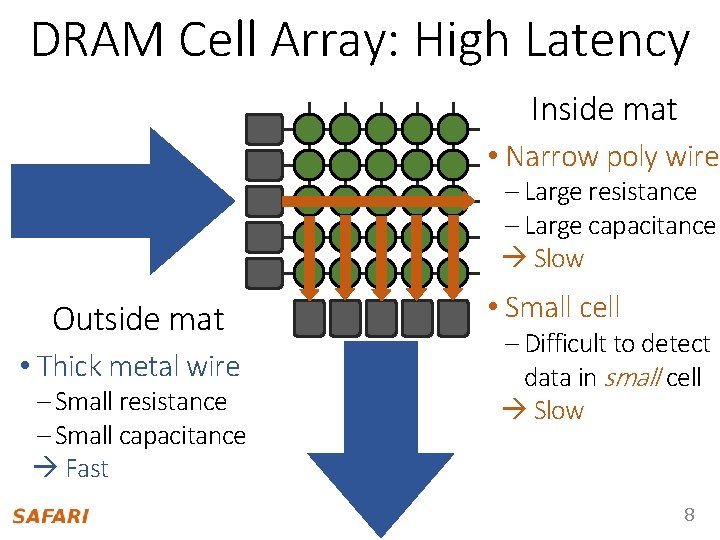

DRAM Cell Array: High Latency • Inside mat – Narrow poly wire: large resistance, large capacitance → Slow – Small cell: difficult to detect data in a small cell → Slow • Outside mat – Thick metal wire: small resistance, small capacitance → Fast

DRAM cell array (mat) is the dominant latency bottleneck, for three reasons

1. Long Narrow Wires: enable small area, but increase latency

2. Operating Conditions: latencies differ across conditions, but DRAM uses the same standard value optimized for the worst case (e.g., small cell vs. normal cell, hot vs. cool)

3. Distance from Peripheral Logic: latencies differ with distance, but DRAM uses the same standard value optimized for the farthest cell (e.g., near cell vs. far cell)

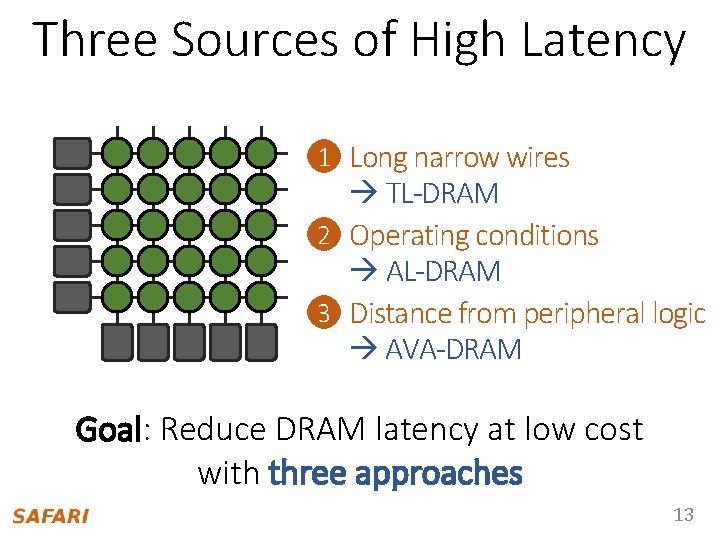

Three Sources of High Latency • 1. Long narrow wires → TL-DRAM • 2. Operating conditions → AL-DRAM • 3. Distance from peripheral logic → AVA-DRAM • Goal: Reduce DRAM latency at low cost with three approaches

Thesis Statement: DRAM latency can be reduced by enabling and exploiting latency heterogeneity in DRAM

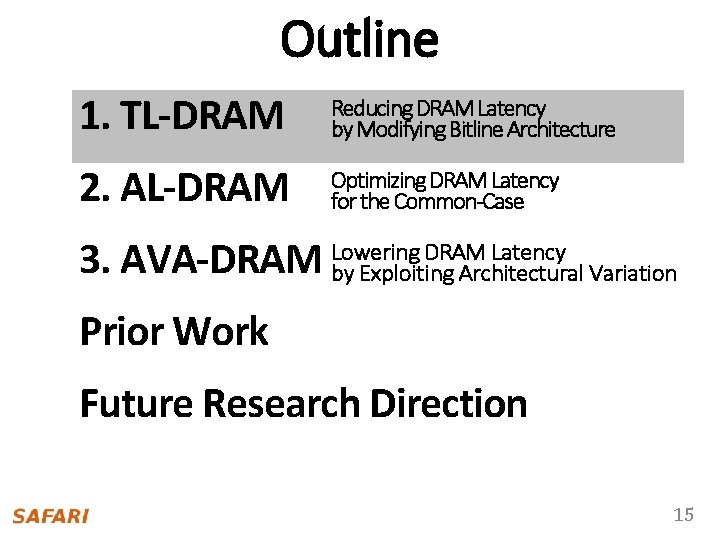

Approach 1 • Tiered-Latency DRAM: Lowering DRAM Latency by Modifying the Bitline Architecture • Outline: 1. TL-DRAM: Reducing DRAM Latency by Modifying Bitline Architecture 2. AL-DRAM: Optimizing DRAM Latency for the Common Case 3. AVA-DRAM: Lowering DRAM Latency by Exploiting Architectural Variation; Prior Work; Future Research Direction • Lee et al., Tiered-Latency DRAM: A Low Latency and Low Cost DRAM Architecture, HPCA 2013

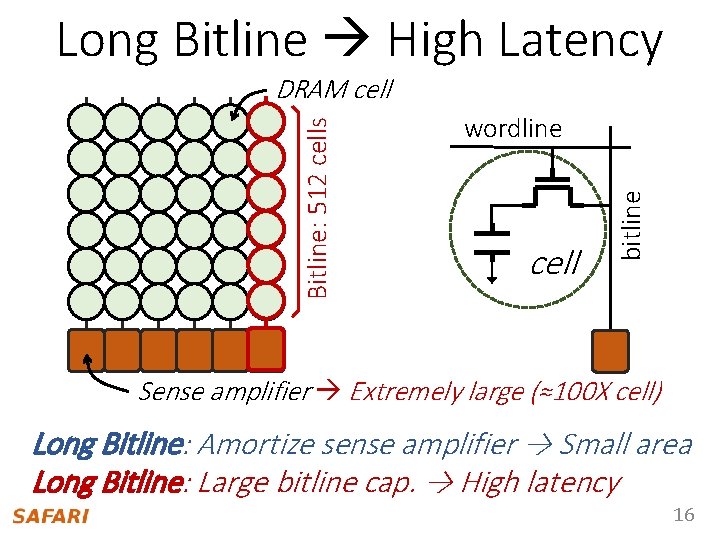

Long Bitline → High Latency • Bitline: 512 DRAM cells share one sense amplifier • Sense amplifier: extremely large (≈100X a cell) • Long bitline: amortizes the sense amplifier → Small area • Long bitline: large bitline capacitance → High latency
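The amortization argument can be made concrete with a rough area model. This is only a sketch under a strong simplification: each bitline is assumed to cost one sense amplifier of about 100 cell-areas (the slide's ≈100X figure) plus one cell-area per cell, and everything else in the chip is ignored. The function names are illustrative.

```python
# Rough area model (assumption): one sense amplifier per bitline, no other overheads.
SENSE_AMP_AREA_IN_CELLS = 100  # slide: a sense amplifier is roughly 100X a cell


def area_per_cell(cells_per_bitline, sense_amp_area=SENSE_AMP_AREA_IN_CELLS):
    """Area charged to each cell, in units of one cell's area."""
    return 1.0 + sense_amp_area / cells_per_bitline


def normalized_area(cells_per_bitline, baseline=512):
    """Area per cell relative to a bitline with `baseline` cells."""
    return area_per_cell(cells_per_bitline) / area_per_cell(baseline)


if __name__ == "__main__":
    for n in (32, 64, 128, 256, 512):
        print(n, "cells/bitline ->", round(normalized_area(n), 2), "x area")
```

Under this model the area penalty grows quickly as the bitline gets shorter, which is the trend the area vs. latency slides describe; the exact values on those slides come from the authors' own evaluation, not from this model.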

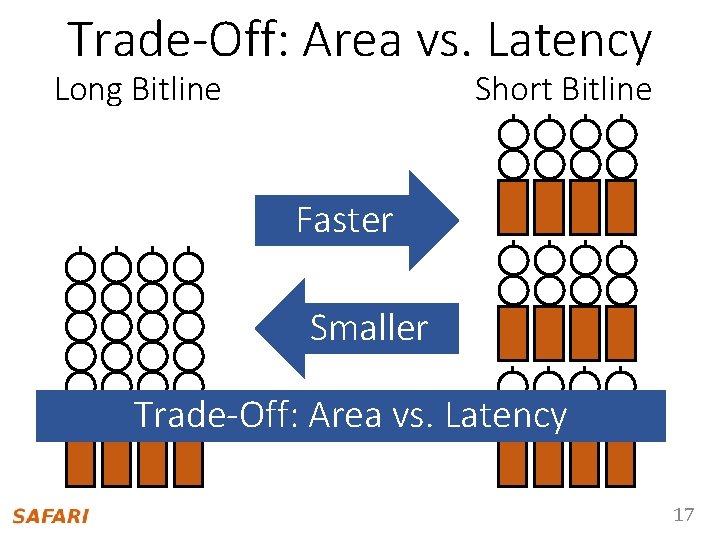

Trade-Off: Area vs. Latency • Long bitline: smaller • Short bitline: faster

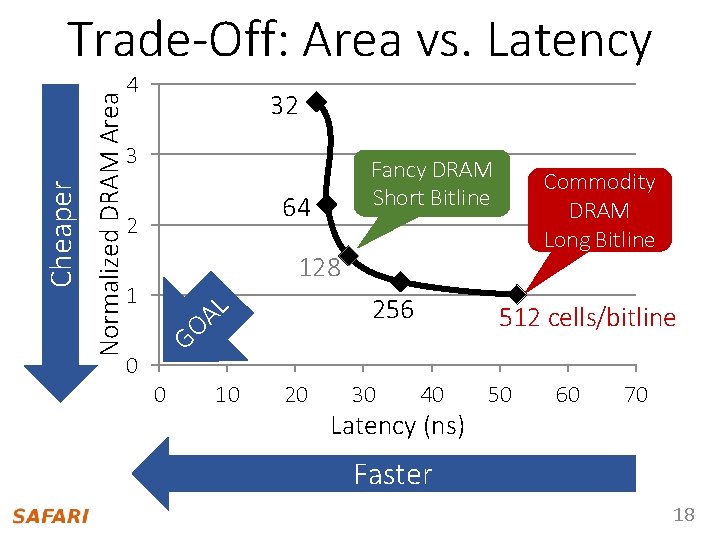

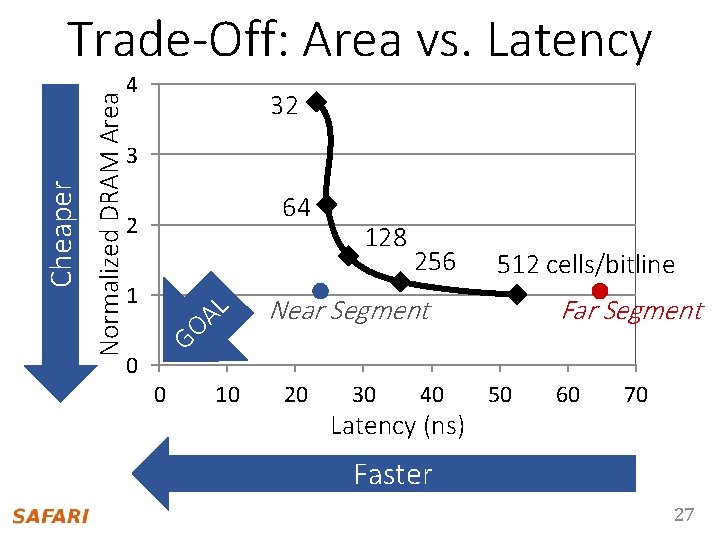

Trade-Off: Area vs. Latency [Figure: normalized DRAM area (lower is cheaper) vs. latency in ns (lower is faster) for 32, 64, 128, 256, and 512 cells/bitline; fancy DRAM uses short bitlines, commodity DRAM uses long bitlines; GOAL marks low area and low latency]

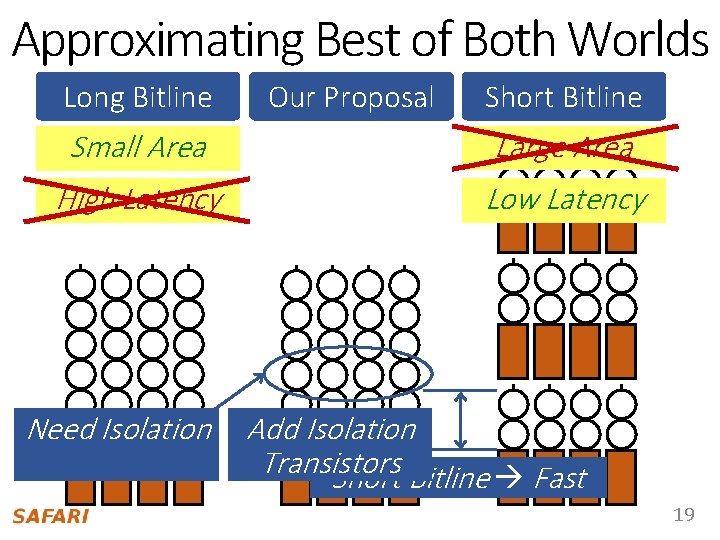

Approximating Best of Both Worlds • Long bitline: small area, high latency • Short bitline: large area, low latency • Our proposal: need isolation → add isolation transistors so that part of the long bitline behaves as a short bitline → Fast

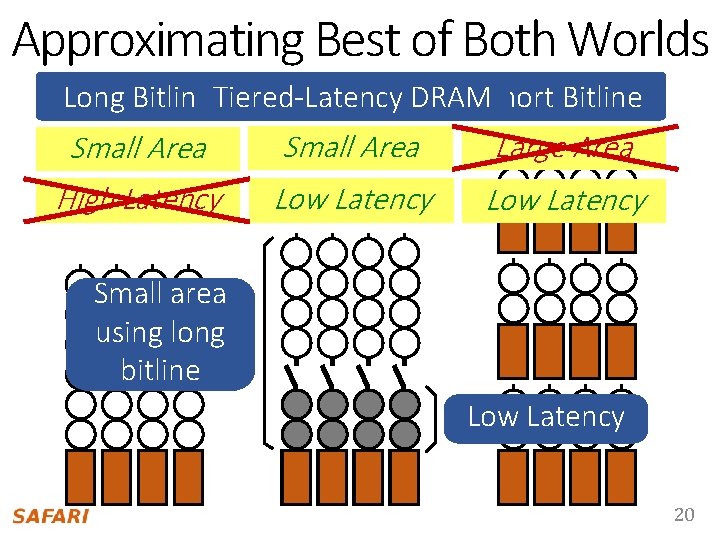

Approximating Best of Both Worlds • Long bitline: small area, high latency • Short bitline: large area, low latency • Our proposal, Tiered-Latency DRAM: small area (using a long bitline) and low latency

Tiered-Latency DRAM • Divide a bitline into two segments with an isolation transistor [Figure labels: far segment, isolation transistor, near segment, sense amplifier]

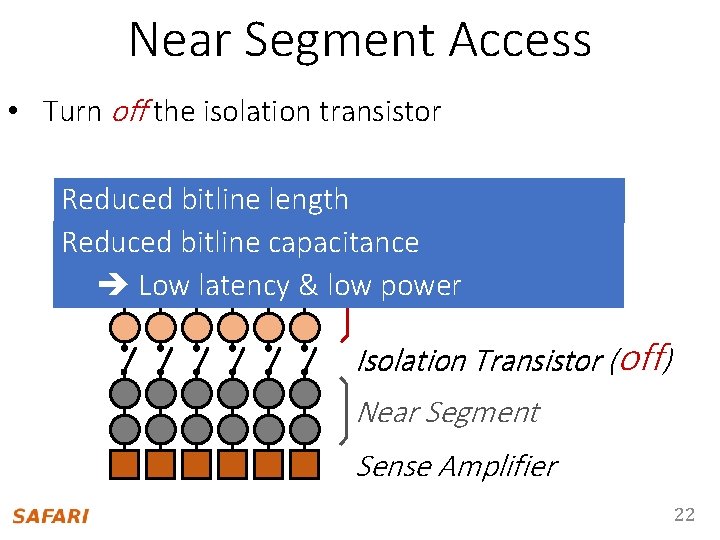

Near Segment Access • Turn off the isolation transistor → Reduced bitline length, reduced bitline capacitance → Low latency & low power [Figure labels: far segment, isolation transistor (off), near segment, sense amplifier]

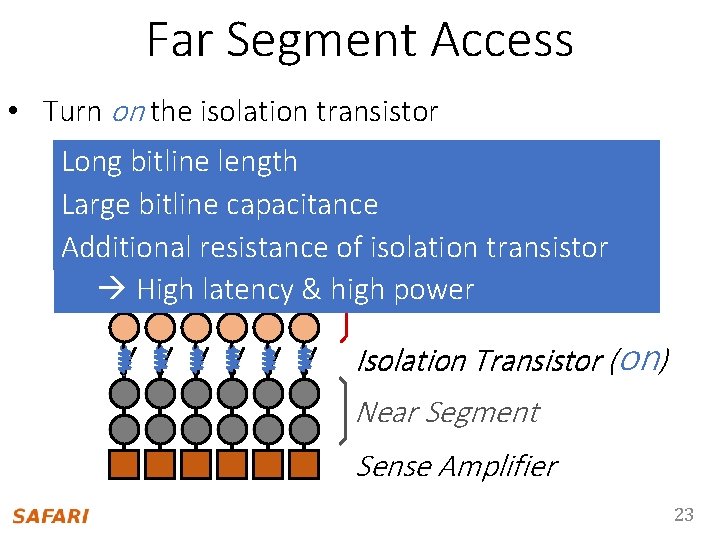

Far Segment Access • Turn on the isolation transistor → Long bitline length, large bitline capacitance, additional resistance of the isolation transistor → High latency & high power [Figure labels: far segment, isolation transistor (on), near segment, sense amplifier]

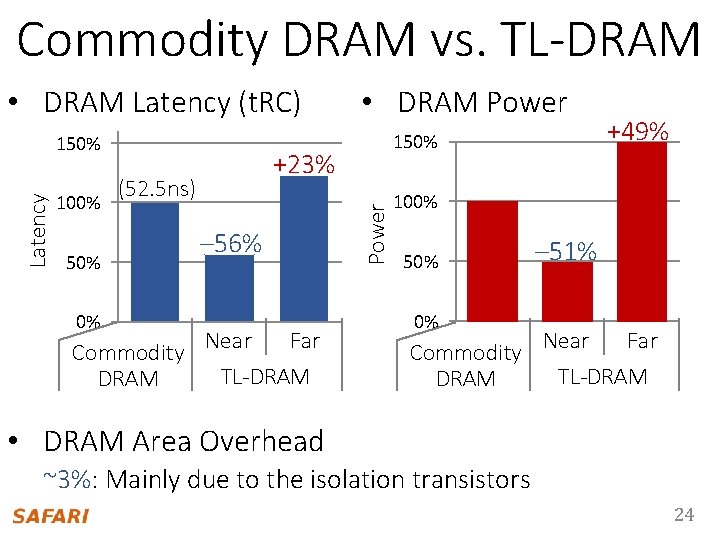

Commodity DRAM vs. TL-DRAM • DRAM Latency (tRC), relative to commodity DRAM (52.5 ns): near segment –56%, far segment +23% • DRAM Power, relative to commodity DRAM: near segment –51%, far segment +49% • DRAM Area Overhead ~3%: mainly due to the isolation transistors
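As a quick arithmetic check, the relative changes can be turned into absolute latencies. This sketch assumes the chart is read as near segment –56% and far segment +23% against the 52.5 ns commodity tRC; because the percentages are rounded, the results are approximate.

```python
COMMODITY_TRC_NS = 52.5      # commodity DRAM tRC, from the slide
NEAR_LATENCY_CHANGE = -0.56  # near segment, as read from the chart (assumption)
FAR_LATENCY_CHANGE = +0.23   # far segment, as read from the chart (assumption)


def scaled(baseline, relative_change):
    """Apply a relative change such as -0.56 (-56%) to a baseline value."""
    return baseline * (1.0 + relative_change)


near_trc_ns = scaled(COMMODITY_TRC_NS, NEAR_LATENCY_CHANGE)
far_trc_ns = scaled(COMMODITY_TRC_NS, FAR_LATENCY_CHANGE)
print(f"near segment: about {near_trc_ns:.1f} ns, far segment: about {far_trc_ns:.1f} ns")
```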

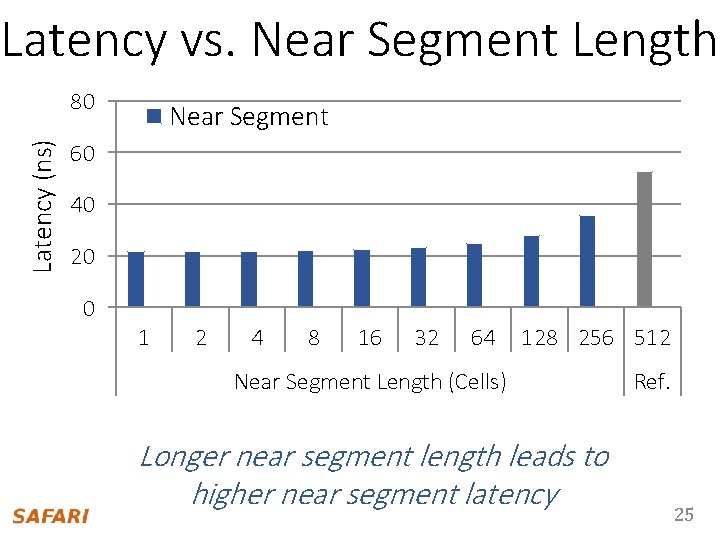

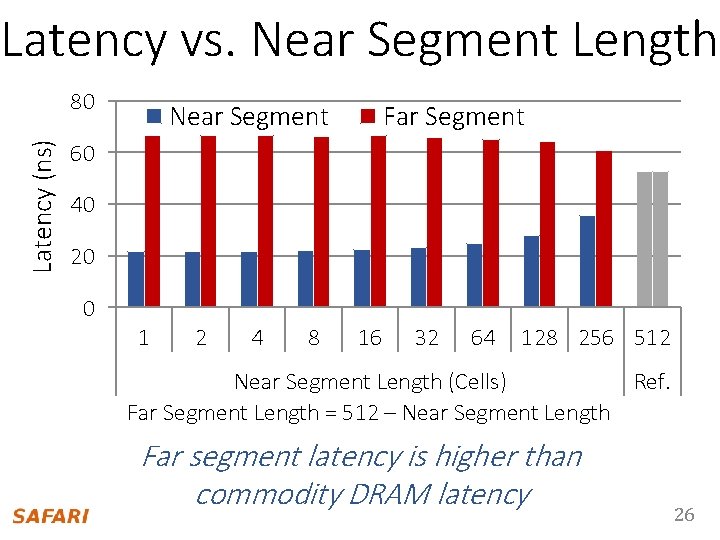

Latency vs. Near Segment Length [Figure: near segment and far segment latency (ns) vs. near segment length (1 to 512 cells), with a reference (Ref.) point] Longer near segment length leads to higher near segment latency

Latency vs. Near Segment Length [Figure: same axes as the previous slide] Far Segment Length = 512 – Near Segment Length • Far segment latency is higher than commodity DRAM latency

Trade-Off: Area vs. Latency [Figure: normalized DRAM area (lower is cheaper) vs. latency in ns (lower is faster) for 32 to 512 cells/bitline, with the TL-DRAM near segment and far segment added; GOAL marks low area and low latency]

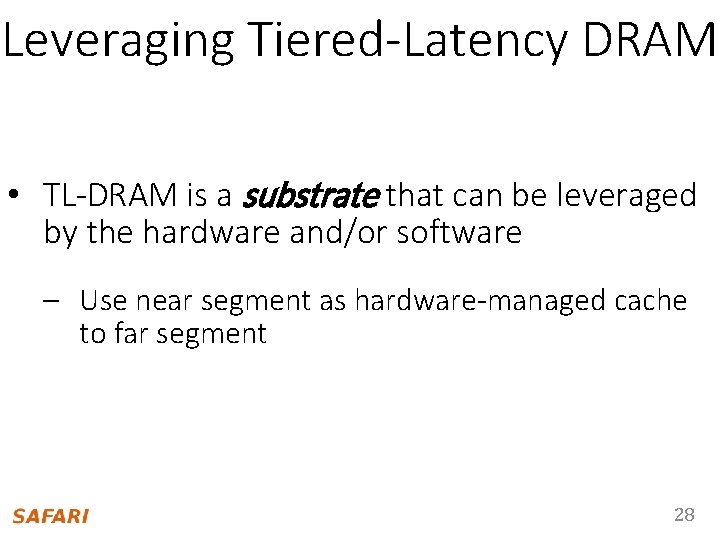

Leveraging Tiered-Latency DRAM • TL-DRAM is a substrate that can be leveraged by the hardware and/or software – Use the near segment as a hardware-managed cache to the far segment
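Whether caching in the near segment pays off depends on how often accesses hit it. The following is a minimal sketch of that reasoning, assuming a simple weighted-average latency model that ignores the cost of moving rows between segments; the function names and the example numbers are hypothetical, not values from the slides.

```python
def average_latency(hit_rate, near_ns, far_ns):
    """Expected access latency when a fraction `hit_rate` of accesses hit the near segment."""
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    return hit_rate * near_ns + (1.0 - hit_rate) * far_ns


def break_even_hit_rate(baseline_ns, near_ns, far_ns):
    """Near-segment hit rate at which the average latency equals the baseline latency."""
    return (far_ns - baseline_ns) / (far_ns - near_ns)


# Hypothetical latencies chosen only to keep the arithmetic easy to follow.
example_break_even = break_even_hit_rate(baseline_ns=50.0, near_ns=20.0, far_ns=60.0)
print("break-even hit rate:", example_break_even)
```

Any hit rate above the break-even point lowers the average latency below the baseline in this model; a real design would also have to account for migration overhead.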

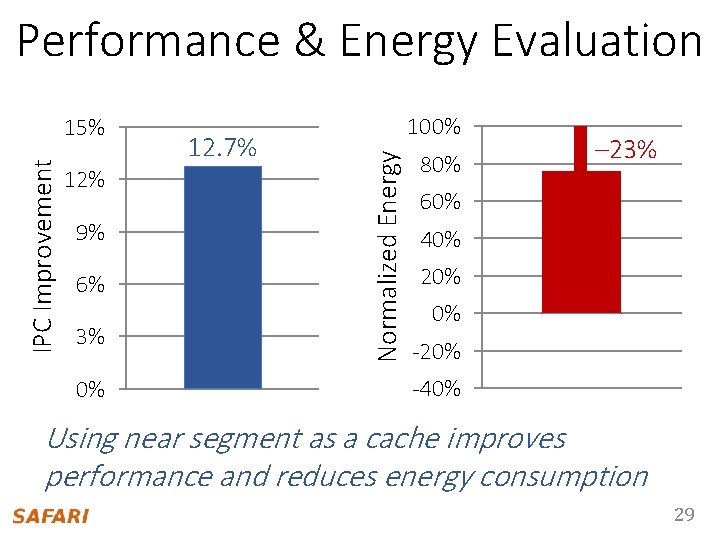

Performance & Energy Evaluation [Figure: IPC improvement of 12.7% and normalized energy reduction of 23%] Using the near segment as a cache improves performance and reduces energy consumption

Summary: TL-DRAM • Observation – Long bitlines are the dominant source of DRAM latency • Idea – Divide a long bitline into two shorter segments → Fast and slow segments • Tiered-Latency DRAM: Enables latency heterogeneity – Can leverage this in many ways to improve performance and reduce power consumption • Performance & Power Evaluation – When the fast segment is used as a cache to the slow segment → Significant performance improvement (>12%) and power reduction (>23%) at low area cost (3%)

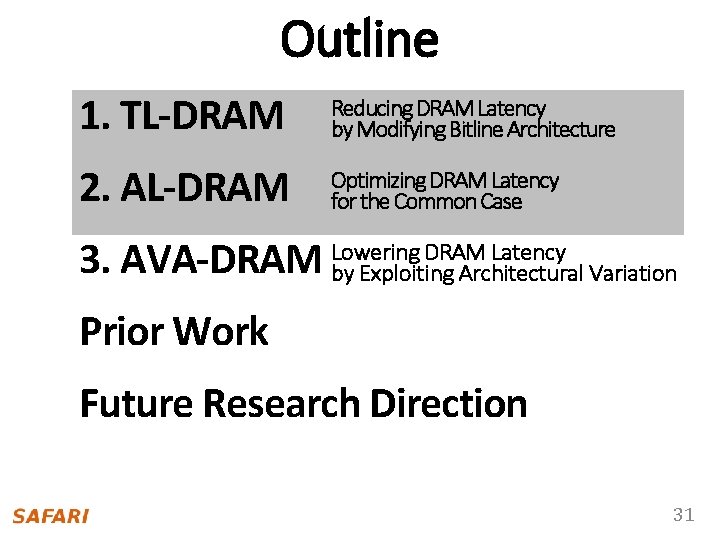

Approach 2 • Adaptive-Latency DRAM: Optimizing DRAM Latency for the Common Operating Conditions • Outline: 1. TL-DRAM: Reducing DRAM Latency by Modifying Bitline Architecture 2. AL-DRAM: Optimizing DRAM Latency for the Common Case 3. AVA-DRAM: Lowering DRAM Latency by Exploiting Architectural Variation; Prior Work; Future Research Direction • Lee et al., Adaptive-Latency DRAM: Optimizing DRAM Timing for the Common-Case, HPCA 2015

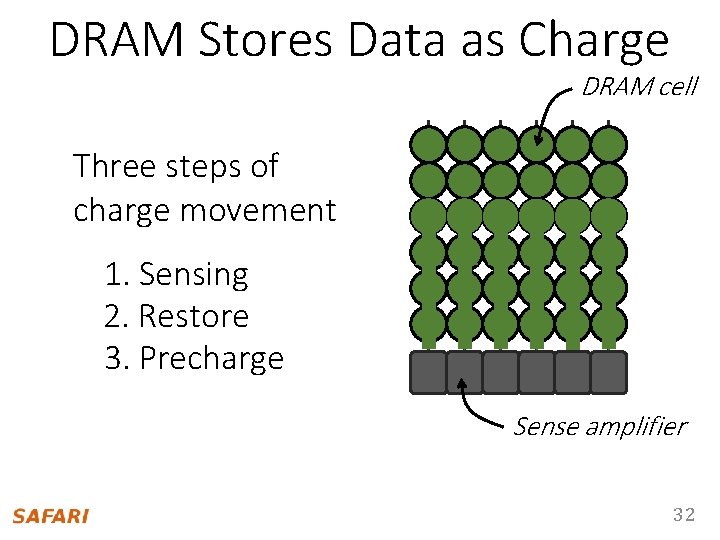

DRAM Stores Data as Charge [Figure labels: DRAM cell, sense amplifier] Three steps of charge movement: 1. Sensing 2. Restore 3. Precharge

DRAM Charge over Time [Figure: cell and sense amplifier charge over time for data 1 and data 0 during sensing and restore; timing parameters in theory vs. in practice, with the difference marked as margin] Why does DRAM need the extra timing margin?

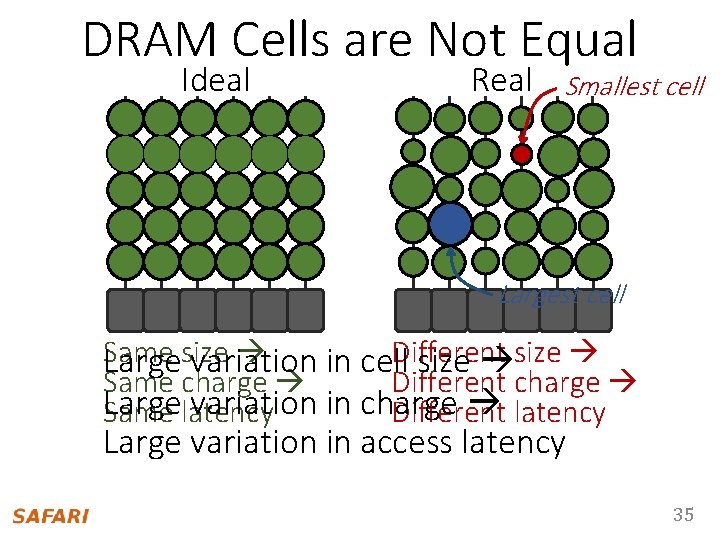

Two Reasons for Timing Margin • 1. Process Variation – DRAM cells are not equal – Leads to extra timing margin for cells that can store a large amount of charge • 2. Temperature Dependence – DRAM leaks more charge at higher temperature – Leads to extra timing margin when operating at low temperature

DRAM Cells are Not Equal • Ideal: same size, same charge, same latency • Real (smallest cell vs. largest cell): different size, different charge, different latency → Large variation in cell size, large variation in charge, large variation in access latency

Two Reasons for Timing Margin • 1. Process Variation – DRAM cells are not equal – Leads to extra timing margin for cells that can store a large amount of charge • 2. Temperature Dependence – DRAM leaks more charge at higher temperature – Leads to extra timing margin when operating at low temperature

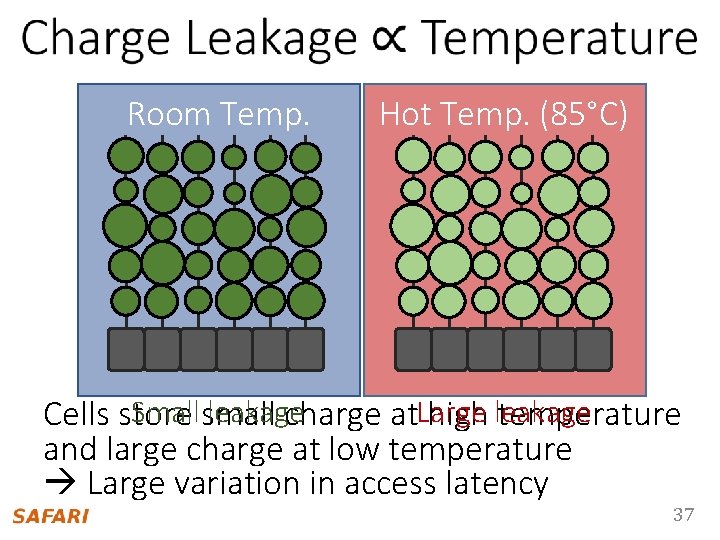

Room Temp.: small leakage • Hot Temp. (85°C): large leakage • Cells store small charge at high temperature and large charge at low temperature → Large variation in access latency

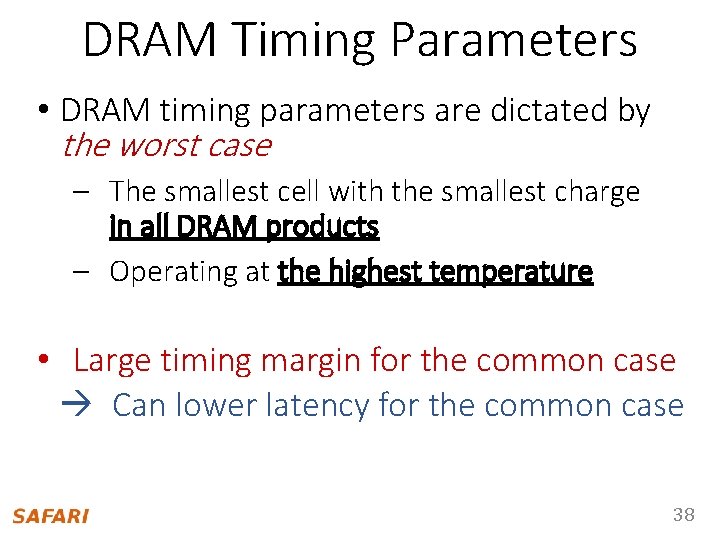

DRAM Timing Parameters • DRAM timing parameters are dictated by the worst case – The smallest cell with the smallest charge in all DRAM products – Operating at the highest temperature • Large timing margin for the common case → Can lower latency for the common case

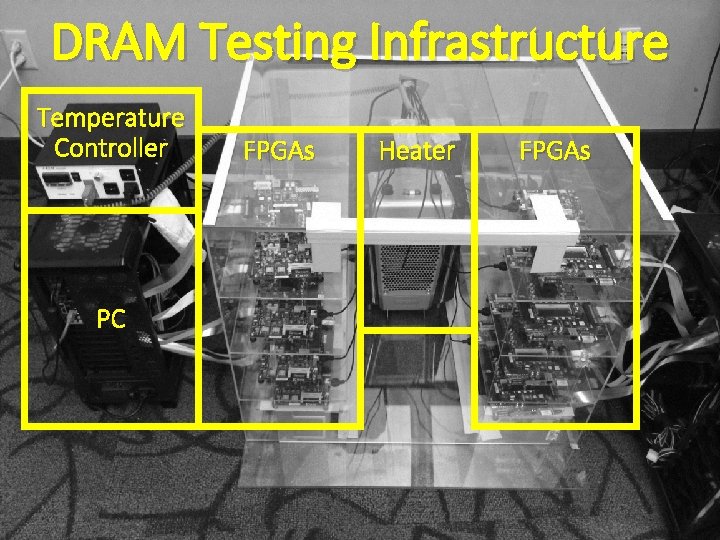

DRAM Testing Infrastructure [Figure labels: temperature controller, heater, FPGAs, PC]

Obs 1. Faster Sensing • Typical DIMM at low temperature: more charge → strong charge flow → faster sensing • 115-DIMM characterization: timing (tRCD) reduced by 17% with no errors

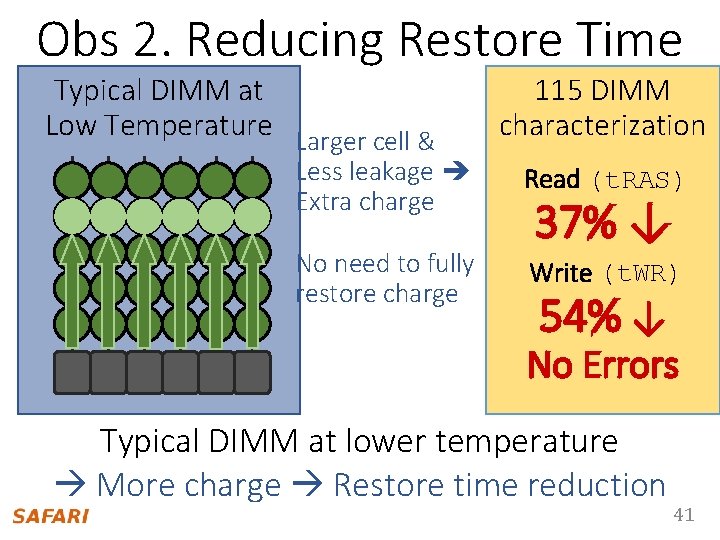

Obs 2. Reducing Restore Time • Typical DIMM at lower temperature: larger cell & less leakage → extra charge → no need to fully restore charge → restore time reduction • 115-DIMM characterization: read (tRAS) reduced by 37% and write (tWR) reduced by 54% with no errors

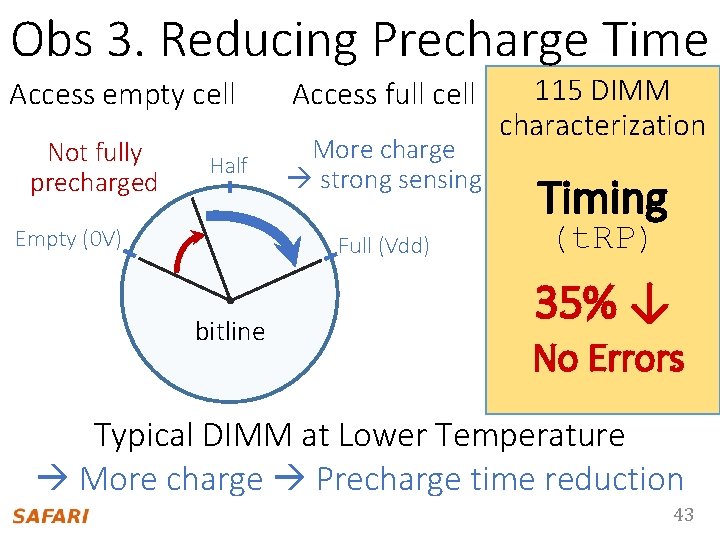

Obs 3. Reducing Precharge Time • Precharge: setting the bitline to half-full charge [Figure labels: sensing, precharge; bitline levels empty (0 V), half, full (Vdd); sense amplifier; typical DIMM at low temperature]

Obs 3. Reducing Precharge Time • Bitline not fully precharged [Figure labels: access empty cell (0 V), access full cell (Vdd), half, bitline]: more charge → strong sensing • Typical DIMM at lower temperature: more charge → precharge time reduction • 115-DIMM characterization: timing (tRP) reduced by 35% with no errors

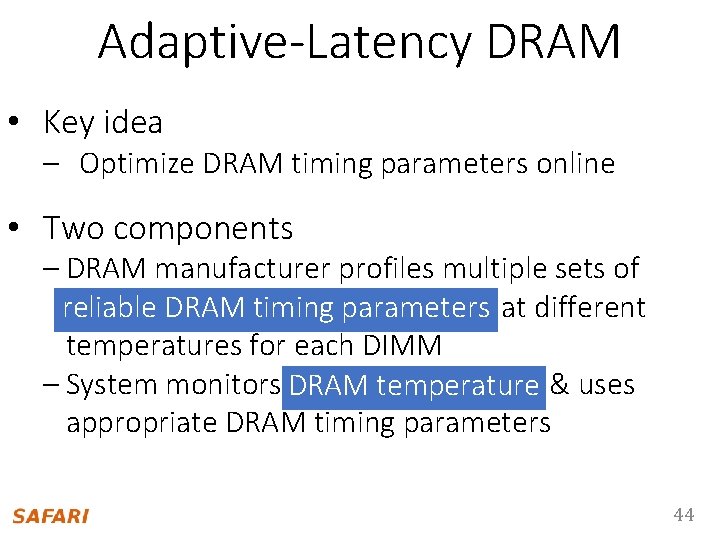

Adaptive-Latency DRAM • Key idea – Optimize DRAM timing parameters online • Two components – DRAM manufacturer profiles multiple sets of reliable DRAM timing parameters at different temperatures for each DIMM – System monitors DRAM temperature & uses appropriate DRAM timing parameters
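The second component, choosing timings from the monitored temperature, can be sketched as a table lookup. Everything concrete here is an assumption for illustration: the `PROFILE` table, its temperature limits, and the nanosecond values are hypothetical and are not measurements from the slides; only the parameter names (tRCD, tRAS, tWR, tRP) come from the talk.

```python
from bisect import bisect_left

# Hypothetical per-DIMM profile: (highest temperature in °C the set is valid for, timings in ns).
# Entries must be sorted by temperature limit. All values are illustrative only.
PROFILE = [
    (55, {"tRCD": 10.0, "tRAS": 24.0, "tWR": 8.0, "tRP": 10.0}),
    (85, {"tRCD": 13.75, "tRAS": 35.0, "tWR": 15.0, "tRP": 13.75}),
]


def select_timings(temperature_c, profile=PROFILE):
    """Return the timing set for the lowest temperature limit that covers `temperature_c`."""
    limits = [limit for limit, _ in profile]
    index = bisect_left(limits, temperature_c)
    if index == len(profile):
        raise ValueError("temperature exceeds the profiled range")
    return profile[index][1]


print(select_timings(40))  # cool DIMM: the more aggressive set
print(select_timings(70))  # warm DIMM: the conservative set
```

Falling back to an error above the profiled range is a deliberate choice in this sketch: outside the profiled temperatures there is no timing set known to be reliable.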

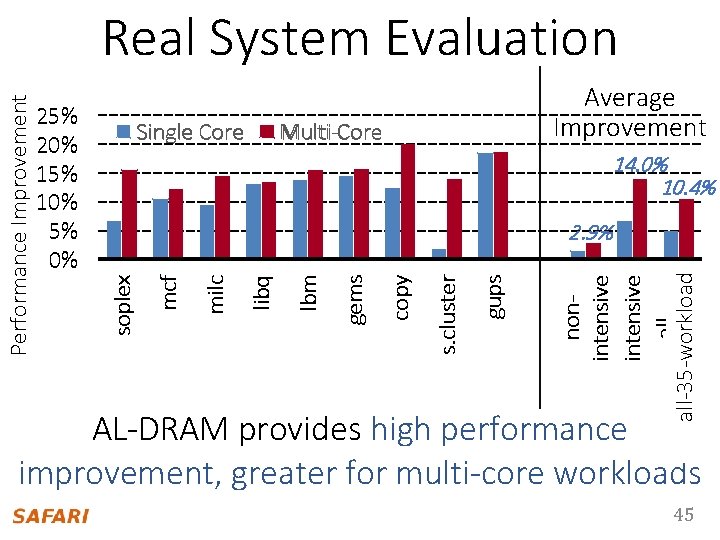

Real System Evaluation [Figure: performance improvement (0% to 25%) for single-core and multi-core runs of soplex, mcf, milc, libq, lbm, gems, copy, s.cluster, and gups, plus averages; average improvements shown: 14.0% (memory-intensive), 2.9% (non-intensive), 10.4% (all 35 workloads)] AL-DRAM provides high performance improvement, greater for multi-core workloads

Summary: AL-DRAM • Observation – DRAM timing parameters are dictated by the worst-case cell (smallest cell at highest temperature) • Our Approach: Adaptive-Latency DRAM (AL-DRAM) – Optimizes DRAM timing parameters for the common case (typical DIMM operating at low temperatures) • Analysis: Characterization of 115 DIMMs – Great potential to lower DRAM timing parameters (17–54%) without any errors • Real System Performance Evaluation – Significant performance improvement (14% for memory-intensive workloads) without errors (33 days)

Approach 3 • AVA-DRAM: Lowering DRAM Latency by Exploiting Architectural Variation • Outline: 1. TL-DRAM: Reducing DRAM Latency by Modifying Bitline Architecture 2. AL-DRAM: Optimizing DRAM Latency for the Common Case 3. AVA-DRAM: Lowering DRAM Latency by Exploiting Architectural Variation; Prior Work; Future Research Direction • Lee et al., AVA-DRAM: Reducing DRAM Latency by Exploiting Architectural Variation, under submission

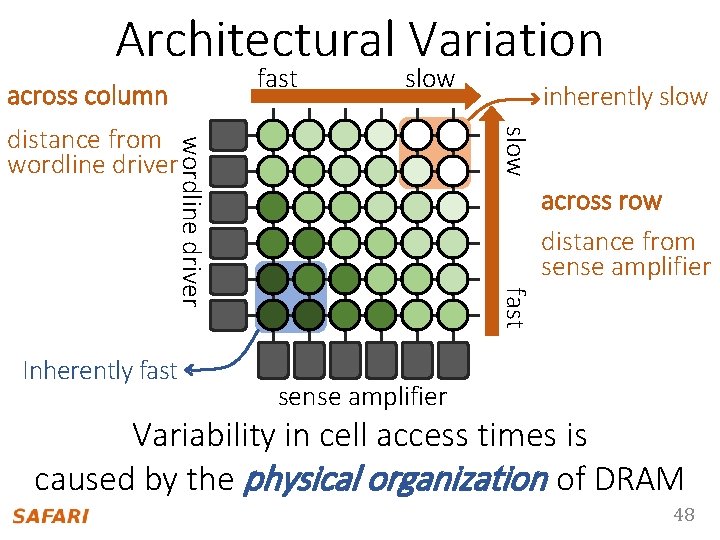

Architectural Variation • Across columns: cells differ in distance from the wordline driver (fast to slow) • Across rows: cells differ in distance from the sense amplifier (fast to slow) • Some cells are inherently fast, others inherently slow • Variability in cell access times is caused by the physical organization of DRAM

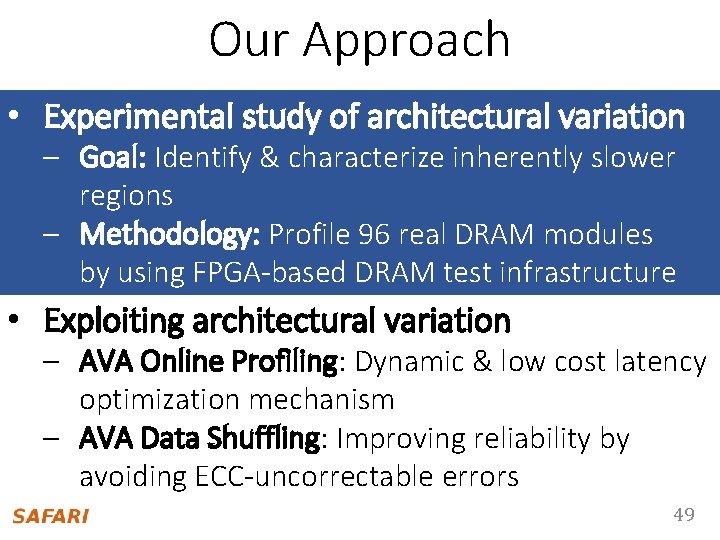

Our Approach • Experimental study of architectural variation – Goal: Identify & characterize inherently slower regions – Methodology: Profiling 96 real DRAM modules by using FPGA-based DRAM test infrastructure • Exploiting architectural variation – AVA Online Profiling: Dynamic & low cost latency optimization mechanism – AVA Data Shuffling: Improving reliability by avoiding ECC-uncorrectable errors

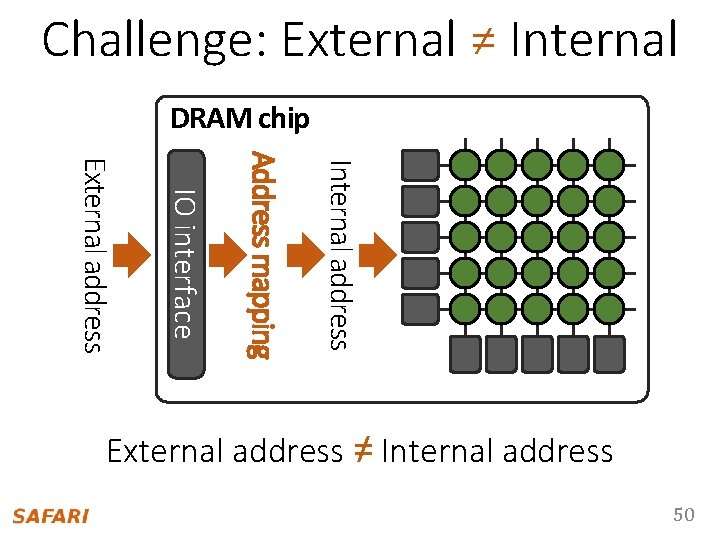

Challenge: External ≠ Internal [Figure labels: DRAM chip, IO interface, address mapping] External address ≠ Internal address

Expected Characteristics • Variation – Some regions are slower than others – Some regions are more vulnerable than others when accessed with reduced latency • Repeatability – Latency (error) characteristics repeat periodically, if the same component (e.g., mat) is duplicated • Similarity – Characteristics are similar across different organizations (e.g., chip/DIMM) if they share the same design

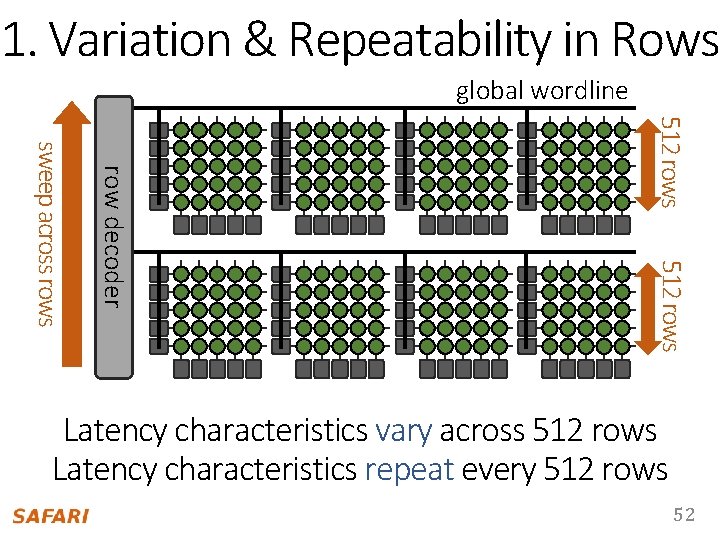

1. Variation & Repeatability in Rows [Figure labels: row decoder, global wordline, sweep across 512 rows] Latency characteristics vary across 512 rows • The same organization repeats every 512 rows → Latency characteristics repeat every 512 rows

1.1. Variation in Rows [Figure: erroneous request count vs. row address (mod 512) for three tRP values: 10.0 ns (random errors), 7.5 ns (periodic errors), 5.0 ns (mostly errors)]

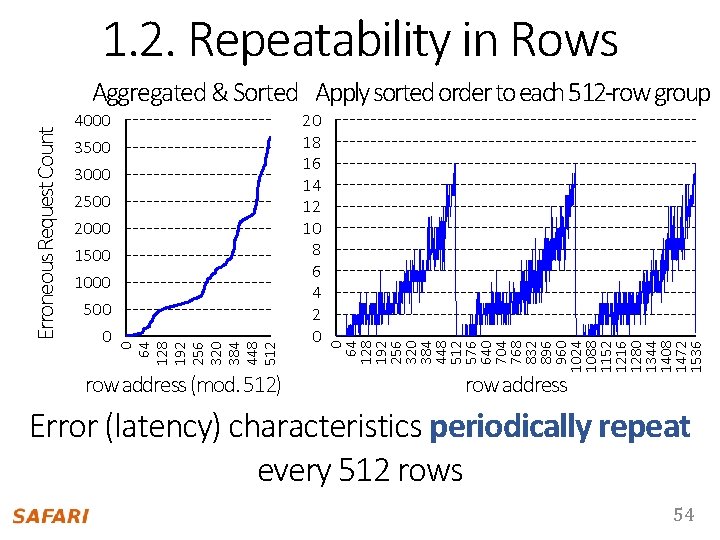

1.2. Repeatability in Rows [Figure: erroneous request count vs. row address (mod 512), aggregated & sorted; the sorted order is then applied to each 512-row group over row addresses 0 to 1536] Error (latency) characteristics periodically repeat every 512 rows
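The aggregation step behind these plots can be sketched as folding per-row error counts onto their offset within a 512-row group. This is an illustrative sketch, not the authors' tooling: the function names and the sample counts are made up, and only the 512-row period comes from the slides.

```python
from collections import defaultdict

ROWS_PER_GROUP = 512  # period reported on the slides


def fold_errors(errors_by_row, period=ROWS_PER_GROUP):
    """Sum error counts of all rows that share the same offset (row address mod period)."""
    folded = defaultdict(int)
    for row, count in errors_by_row.items():
        folded[row % period] += count
    return dict(folded)


def slowest_offsets(folded, top_n):
    """Offsets with the most errors first; ties are broken by the smaller offset."""
    return sorted(folded, key=lambda offset: (-folded[offset], offset))[:top_n]


# Made-up counts: rows 5, 517, and 1029 share offset 5; rows 100 and 612 share offset 100.
sample = {5: 3, 517: 4, 1029: 2, 100: 1, 612: 1}
folded_sample = fold_errors(sample)
print(folded_sample, slowest_offsets(folded_sample, 1))
```

If the error characteristics really repeat with the group period, the folded counts concentrate on a few offsets, and those offsets identify the inherently slow rows in every group.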

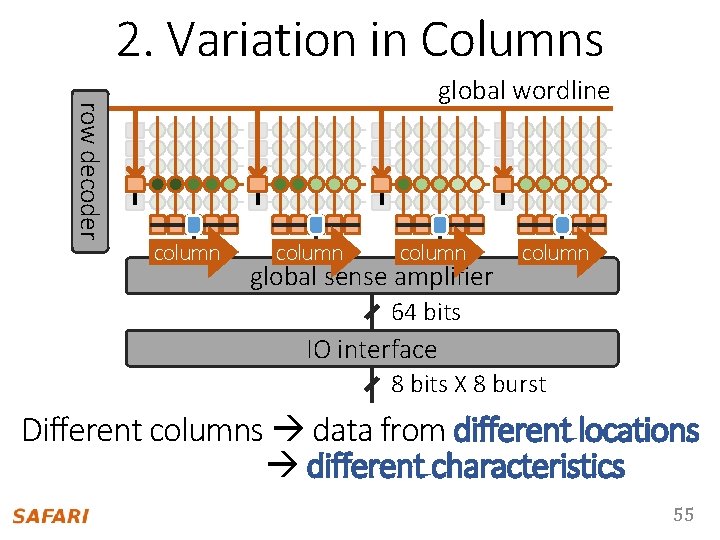

2. Variation in Columns [Figure labels: row decoder, global wordline, columns, global sense amplifier, IO interface; 64 bits transferred as 8 bits X 8 bursts] Different columns → data from different locations → different characteristics

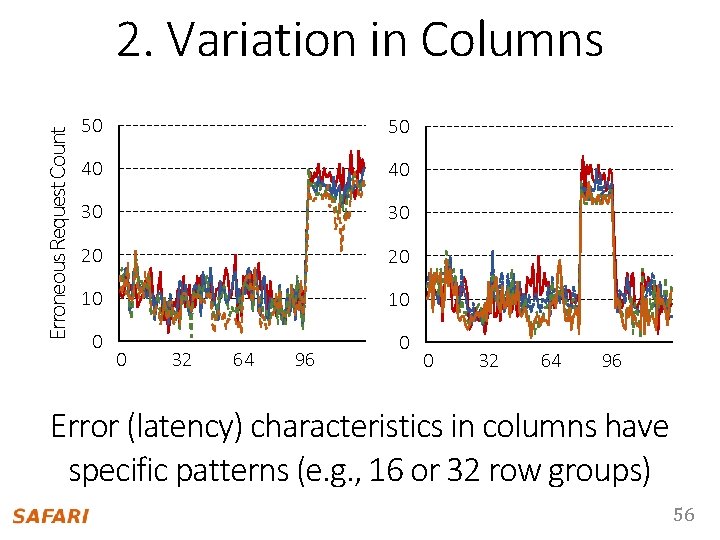

2. Variation in Columns [Figure: erroneous request count across columns] Error (latency) characteristics in columns have specific patterns (e.g., 16 or 32 row groups)

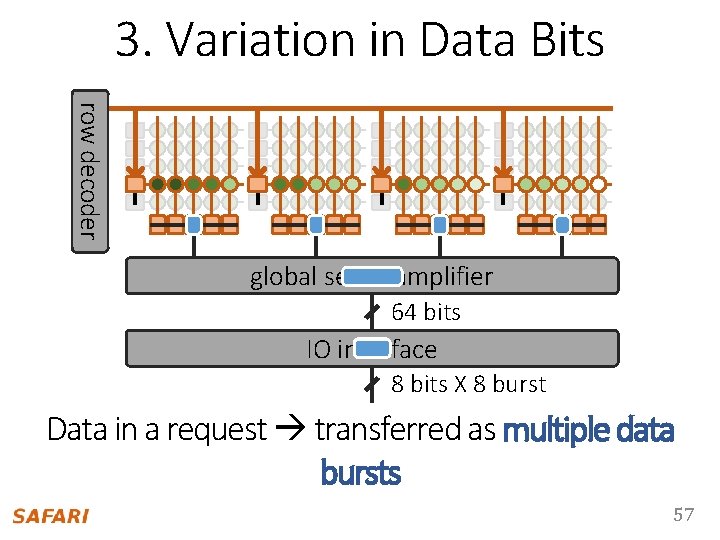

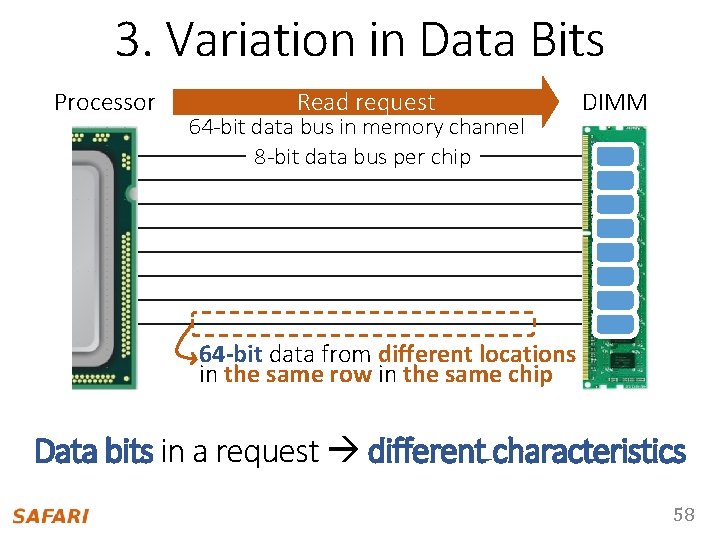

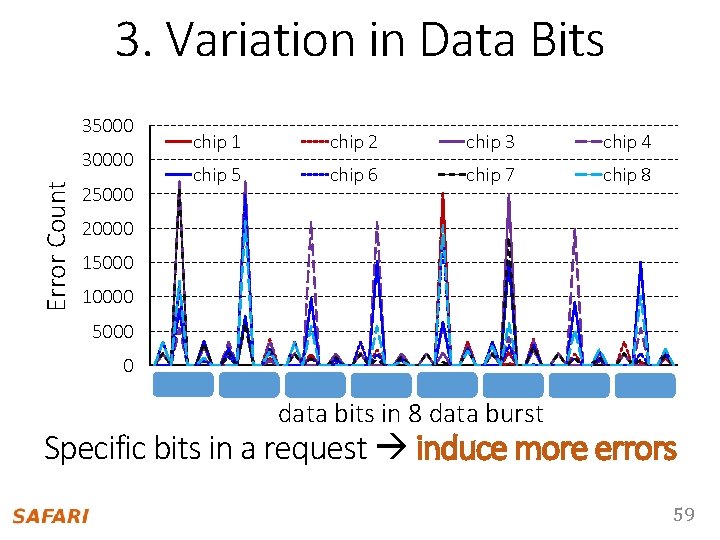

3. Variation in Data Bits [Figure labels: row decoder, global sense amplifier, IO interface; 64 bits transferred as 8 bits X 8 bursts] Data in a request is transferred as multiple data bursts

3. Variation in Data Bits • Processor read request → 64-bit data bus in memory channel → DIMM with an 8-bit data bus per chip • 64-bit data from different locations in the same row in the same chip • Data bits in a request → different characteristics

3. Variation in Data Bits [Figure: error count (0 to 35000) vs. data bit position in the 8 data bursts, for chips 1 to 8] Specific bits in a request induce more errors
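To relate a bit in a request to the chip and burst that carried it, one can index bits by beat, chip, and pin. The sketch below assumes a particular ordering (beat-major, then chip, then pin) for a request of 8 beats over 8 chips with 8 pins each; the real bit ordering on a given DIMM may differ, and the function names are illustrative.

```python
CHIPS = 8          # data chips on the DIMM (8-bit data bus per chip, from the slide)
PINS_PER_CHIP = 8  # bits each chip transfers per beat
BURST_LENGTH = 8   # beats per request (8 bursts, from the slide)


def locate_bit(bit_index):
    """Map a bit index within a 512-bit request to (beat, chip, pin). Ordering is assumed."""
    if not 0 <= bit_index < CHIPS * PINS_PER_CHIP * BURST_LENGTH:
        raise ValueError("bit_index out of range")
    beat, within_beat = divmod(bit_index, CHIPS * PINS_PER_CHIP)
    chip, pin = divmod(within_beat, PINS_PER_CHIP)
    return beat, chip, pin


def chip_bit_index(beat, pin):
    """Position of a bit among the 64 bits one chip contributes to a request."""
    return beat * PINS_PER_CHIP + pin


print(locate_bit(64))        # first bit of the second beat
print(chip_bit_index(7, 7))  # last of the 64 bits a chip supplies
```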

Our Approach • Experimental study of architectural variation – Goal: Identify & characterize inherently slower regions – Methodology: Profiling 96 real DRAM modules by using FPGA-based DRAM test infrastructure • Exploiting architectural variation – AVA Online Profiling: Dynamic & low cost latency optimization mechanism – AVA Data Shuffling: Improving reliability by avoiding ECC-uncorrectable errors

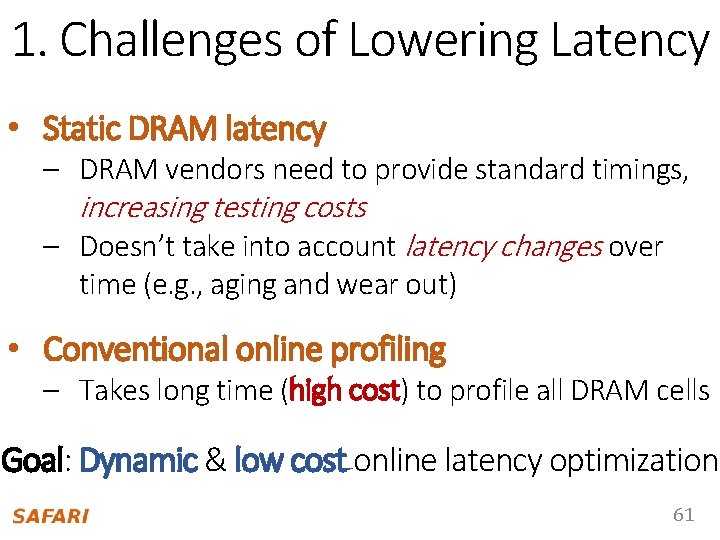

1. Challenges of Lowering Latency • Static DRAM latency – DRAM vendors need to provide standard timings, increasing testing costs – Doesn’t take into account latency changes over time (e.g., aging and wear out) • Conventional online profiling – Takes a long time (high cost) to profile all DRAM cells • Goal: Dynamic & low cost online latency optimization

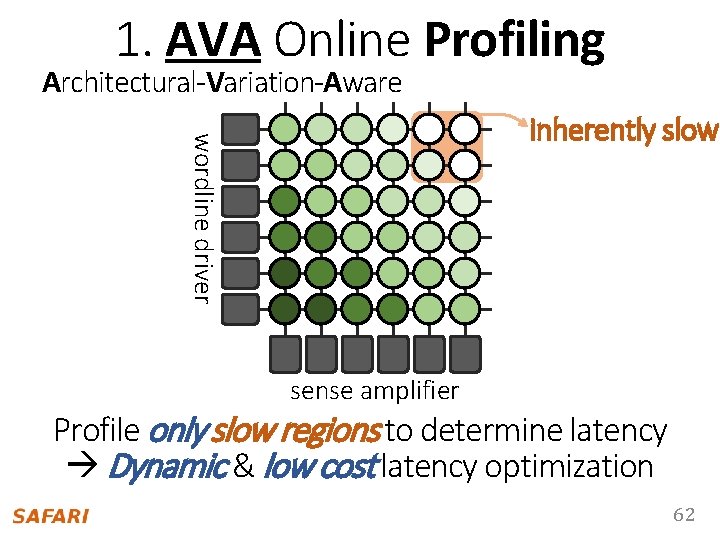

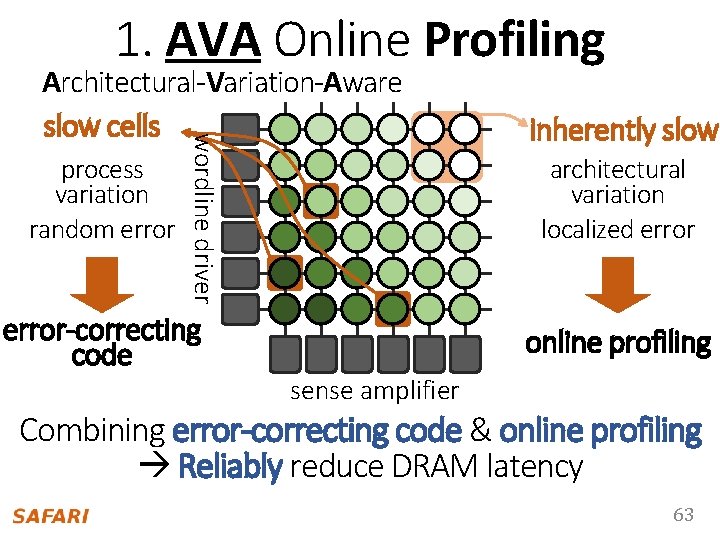

1. AVA Online Profiling (Architectural-Variation-Aware) [Figure labels: wordline driver, sense amplifier, inherently slow region] Profile only slow regions to determine latency → Dynamic & low cost latency optimization

1. AVA Online Profiling (Architectural-Variation-Aware) • Process variation → slow cells → random errors → error-correcting code • Architectural variation → inherently slow regions → localized errors → online profiling • Combining error-correcting code & online profiling → Reliably reduce DRAM latency
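The cost argument for profiling only the inherently slow regions can be sketched as follows. This is a toy model under stated assumptions: `toy_has_error` is a hypothetical stand-in for a real test on hardware, the slow rows are assumed to be offsets 500 to 511 of a 512-row group, and random errors (left to ECC in the slides) are not modeled.

```python
def pick_latency(candidates_ns, rows_to_test, has_error):
    """Return the lowest candidate latency with no errors on `rows_to_test`, or None."""
    for latency in sorted(candidates_ns):
        if not any(has_error(row, latency) for row in rows_to_test):
            return latency
    return None


def toy_has_error(row, latency_ns):
    """Hypothetical error model: offsets 500..511 need at least 10.0 ns, the rest 7.5 ns."""
    required_ns = 10.0 if row % 512 >= 500 else 7.5
    return latency_ns < required_ns


CANDIDATES_NS = [5.0, 7.5, 10.0, 12.5]
slow_rows = range(500, 512)  # assumed inherently slow region
all_rows = range(512)

print(pick_latency(CANDIDATES_NS, slow_rows, toy_has_error))  # tests 12 rows per candidate
print(pick_latency(CANDIDATES_NS, all_rows, toy_has_error))   # tests 512 rows per candidate
```

In this toy model both calls select the same latency, because the slow rows determine the result; profiling only those rows reaches it with far fewer tests.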

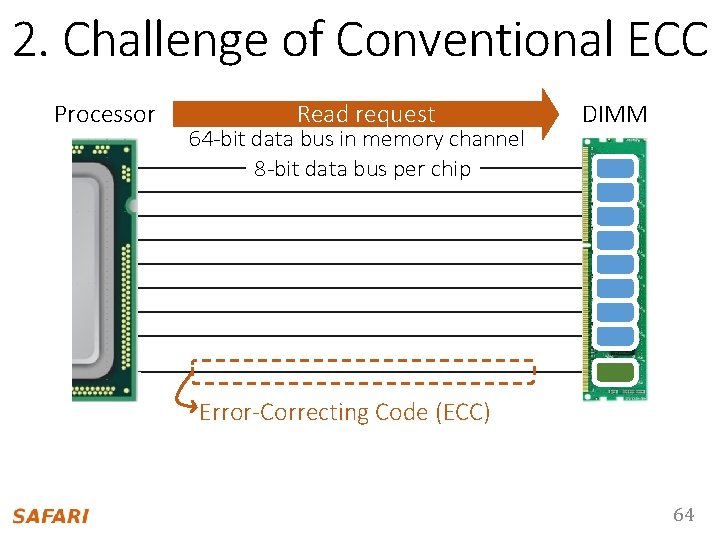

2. Challenge of Conventional ECC [Figure labels: processor read request, 64-bit data bus in memory channel, DIMM with an 8-bit data bus per chip, Error-Correcting Code (ECC)]

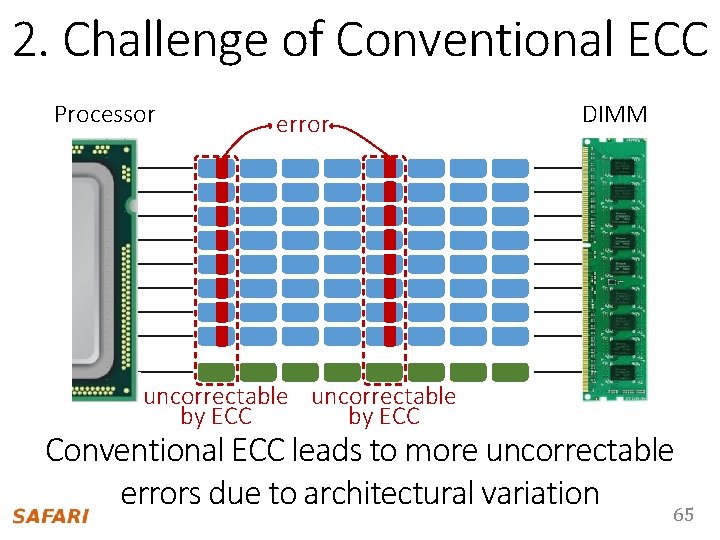

2. Challenge of Conventional ECC [Figure labels: processor, DIMM, 8-bit data bus per chip, errors uncorrectable by ECC] Conventional ECC leads to more uncorrectable errors due to architectural variation

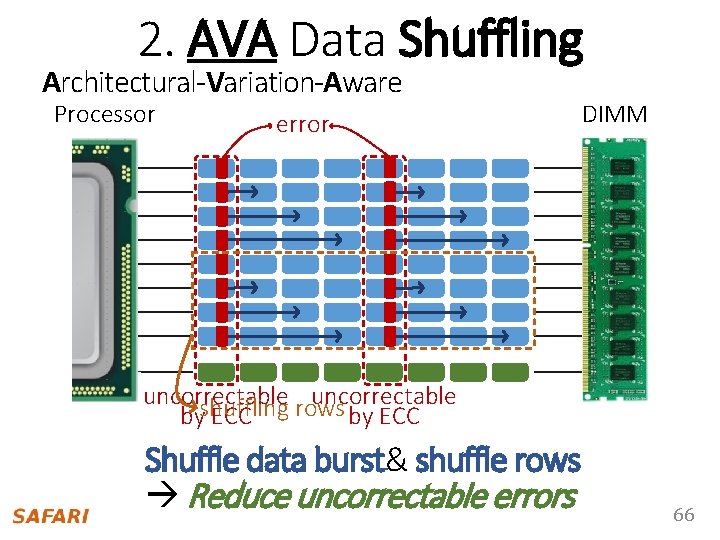

2. AVA Data Shuffling (Architectural-Variation-Aware) [Figure labels: processor, DIMM, 8-bit data bus per chip, errors uncorrectable by ECC] Shuffle data bursts & shuffle rows → Reduce uncorrectable errors
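Why shuffling helps can be seen in a small counting model. The assumptions are deliberately simple and are not taken from the slides: every chip has its error in the same burst position (because the chips share a design), each beat forms one ECC code word, the code corrects one error per code word, and the shuffle is a per-chip rotation of the burst order. The actual AVA shuffling scheme may differ.

```python
NUM_CHIPS = 8
BEATS_PER_BURST = 8


def errors_per_codeword(slow_beat, shuffle):
    """Count errors landing in each code word (one code word per beat, by assumption)."""
    counts = [0] * BEATS_PER_BURST
    for chip in range(NUM_CHIPS):
        # With shuffling, chip `chip` rotates its burst order by `chip` positions.
        beat = (slow_beat + chip) % BEATS_PER_BURST if shuffle else slow_beat
        counts[beat] += 1
    return counts


def uncorrectable_codewords(counts, correctable_errors=1):
    """Number of code words holding more errors than the code can correct."""
    return sum(1 for count in counts if count > correctable_errors)


without_shuffle = errors_per_codeword(slow_beat=3, shuffle=False)
with_shuffle = errors_per_codeword(slow_beat=3, shuffle=True)
print(without_shuffle, uncorrectable_codewords(without_shuffle))
print(with_shuffle, uncorrectable_codewords(with_shuffle))
```

Without shuffling, all eight errors pile into one code word; with the rotation, each code word receives a single error, which a single-error-correcting code can handle.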

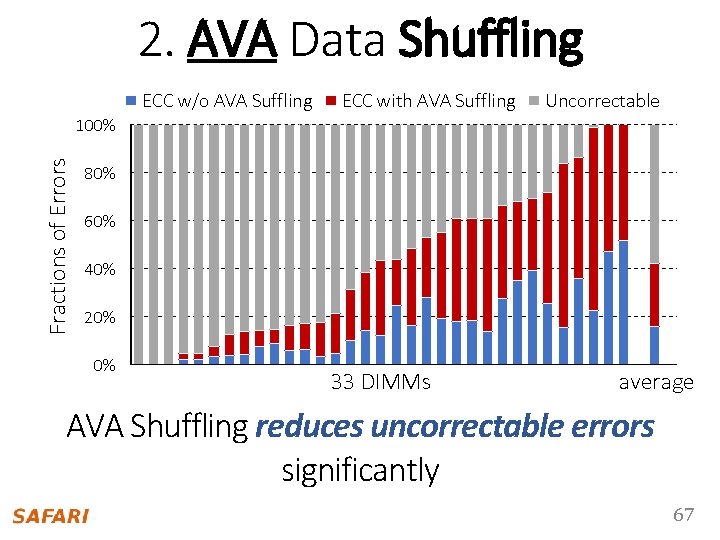

2. AVA Data Shuffling [Figure: fraction of uncorrectable errors (0% to 100%) per DIMM and averaged over 33 DIMMs, ECC without AVA Shuffling vs. ECC with AVA Shuffling] AVA Shuffling reduces uncorrectable errors significantly

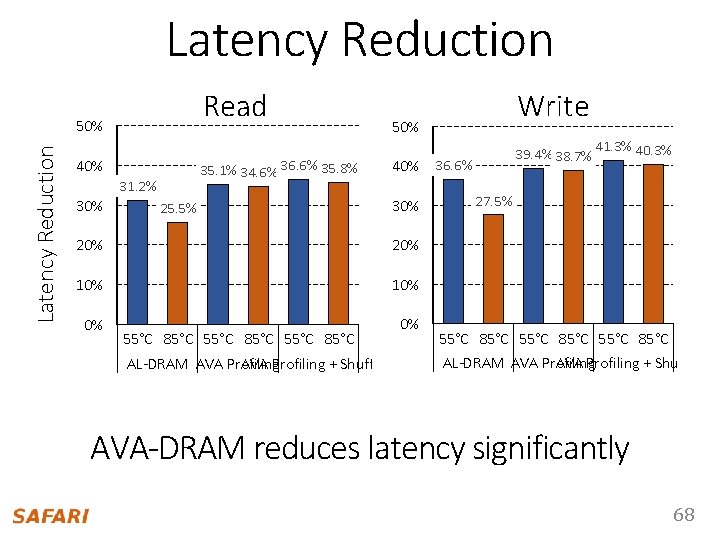

Latency Reduction [Figure: read and write latency reduction at 55°C and 85°C; legend: AL-DRAM, AVA Profiling + Shuffling; read values shown range from 25.5% to 36.6%, write values shown range from 27.5% to 41.3%] AVA-DRAM reduces latency significantly

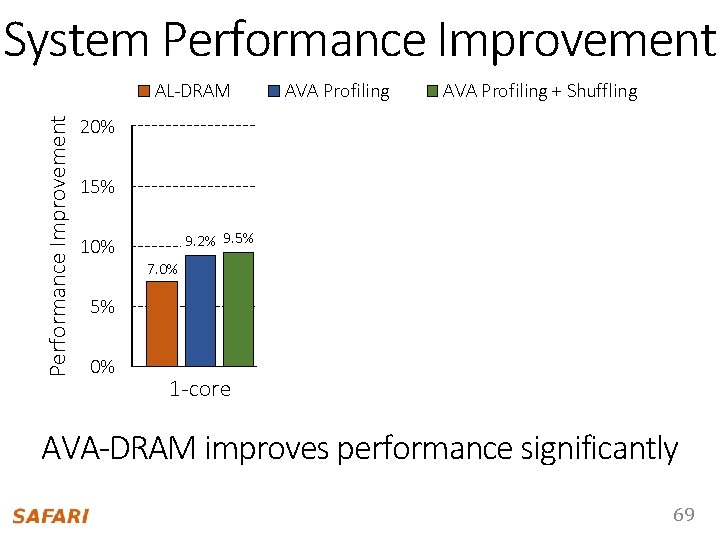

System Performance Improvement [Figure: performance improvement for 1-core, 2-core, 4-core, and 8-core systems; legend: AL-DRAM, AVA Profiling + Shuffling; values shown range from 7.0% to 15.1%] AVA-DRAM improves performance significantly

Summary: AVA-DRAM • Observation: Architectural Variation – DRAM has inherently slow regions due to its cell array organization, which leads to high DRAM latency • Our Approach – AVA Profiling: Profile only inherently slow regions to determine latency → Dynamic & low cost latency optimization – AVA Shuffling: Distribute data from slow regions to different ECC code words → Avoid uncorrectable errors • Analysis: Characterization of 96 DIMMs – Great potential to lower DRAM timing parameters • System Performance Evaluation – Significant performance improvement (15% for memory-intensive workloads)

Outline: 1. TL-DRAM: Reducing DRAM Latency by Modifying Bitline Architecture 2. AL-DRAM: Optimizing DRAM Latency for the Common Case 3. AVA-DRAM: Lowering DRAM Latency by Exploiting Architectural Variation; Prior Work; Future Research Direction

Prior Work • Low latency DRAM – Having short bitline – Heterogeneous bitline • Cached DRAM • DRAM with higher parallelism – Subarray level parallelism – Parallelizing refreshes with accesses • Memory scheduling – Memory scheduling for more parallelism – Application-Aware Memory Scheduling • Caching, Paging, and Prefetching

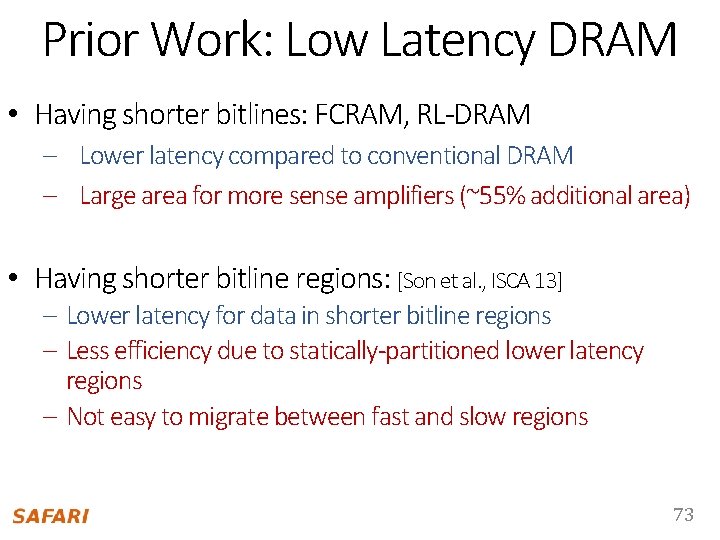

Prior Work: Low Latency DRAM • Having shorter bitlines: FCRAM, RL-DRAM – Lower latency compared to conventional DRAM – Large area for more sense amplifiers (~55% additional area) • Having shorter bitline regions: [Son et al., ISCA 13] – Lower latency for data in shorter bitline regions – Less efficiency due to statically-partitioned lower latency regions – Not easy to migrate between fast and slow regions

Prior Work: Cached DRAM • Implementing low-latency SRAM cache in DRAM – Lower latency for accessing recently-accessed requests – Large area for SRAM cache (~145% for integrating 6% capacity as SRAM cell) – Complex control for SRAM cache

Prior Work: More Parallelism • Subarray-Level Parallelism: [Kim+, ISCA 2012] – Enables independent accesses to different subarrays (a row of mats) – Does not reduce latency of a single access • Parallelizing refreshes with accesses: [Chang+, HPCA 14] – Mitigates latency penalty of DRAM refresh operations – Does not reduce latency of a single access

Outline: 1. TL-DRAM: Reducing DRAM Latency by Modifying Bitline Architecture 2. AL-DRAM: Optimizing DRAM Latency for the Common Case 3. AVA-DRAM: Lowering DRAM Latency by Exploiting Architectural Variation; Prior Work; Future Research Direction

Future Research Direction • Reducing Latency in 3D-stacked DRAM – Power is delivered from the bottom layer up to the top layer → New source of variation in latency – Evaluate & exploit power-network-related variation • Exploiting Variation in Retention Time – Cells have different retention times based on their contents (i.e., 0 vs. 1), but use the same refresh interval – Evaluate the relationship between the content in a cell and retention time & exploit the variation in retention time

Future Research Direction • System Design for Heterogeneous-Latency DRAM – Design a system that allocates frequently-used or more critical data to fast regions – Design a system that optimizes DRAM operating conditions for better performance (e.g., reducing DRAM temperature by spreading accesses out to different regions)
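The first idea, placing frequently used data in fast regions, could look like the following in its simplest form. This is a hypothetical sketch of one possible policy (rank pages by access count and fill the fast region first); the function name, the page identifiers, and the counts are made up, and a real system would also weigh criticality and migration cost.

```python
def place_pages(access_counts, fast_capacity):
    """Split pages into (fast, slow) sets, giving the fast region to the hottest pages."""
    # Rank by descending access count; break ties by page identifier for determinism.
    ranked = sorted(access_counts, key=lambda page: (-access_counts[page], page))
    fast = set(ranked[:fast_capacity])
    slow = set(ranked[fast_capacity:])
    return fast, slow


# Made-up access counts for four pages and a fast region that holds two of them.
sample_counts = {"a": 10, "b": 50, "c": 5, "d": 50}
fast_pages, slow_pages = place_pages(sample_counts, fast_capacity=2)
print("fast:", sorted(fast_pages), "slow:", sorted(slow_pages))
```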

Conclusion • Observation – DRAM cell array is the dominant source of high latency • DRAM latency can be reduced by enabling and exploiting latency heterogeneity • Our Three Approaches – TL-DRAM: Enabling latency heterogeneity by changing DRAM architecture – AL-DRAM: Exploiting latency heterogeneity from process variation and temperature dependency – AVA-DRAM: Exploiting latency heterogeneity from architectural variation • Evaluation & Result – Our mechanisms enable significant latency reduction at low cost and thus improve system performance

Contributions • Identified three major sources of high DRAM latency – Long narrow wires – Uniform latencies despite different operating conditions – Uniform latencies despite architectural variation • Evaluated the impact of varying DRAM latencies – Simulation with detailed DRAM model – Profiled real DRAM (96–115 DIMMs) with FPGA-based DRAM test infrastructure • Developed mechanisms to lower DRAM latency, leading to significant performance improvement
