Recursive Composition in Computer Vision Leo Zhu CSAIL


- Slides: 61

Recursive Composition in Computer Vision
Leo Zhu, CSAIL, MIT
Joint work with Chen, Yuille, Freeman and Torralba

Ideas behind Recursive Composition
- How to deal with image complexity
- A general framework for different vision tasks
- Rich representation and tractable computation

Pattern Theory (Grenander 94); Compositionality (Geman 02, 06); Stochastic Grammar (Zhu and Mumford 06)

Recursive Composition
- Representation
  - Recursive Compositional Models (RCMs)
- Inference
  - Recursive Optimization
- Learning
  - Supervised Parameter Estimation
  - Unsupervised Recursive Dictionary Learning
- RCM-1: Deformable Object
- RCM-2: Articulated Object
- RCM-3: Scene (Entire Image)

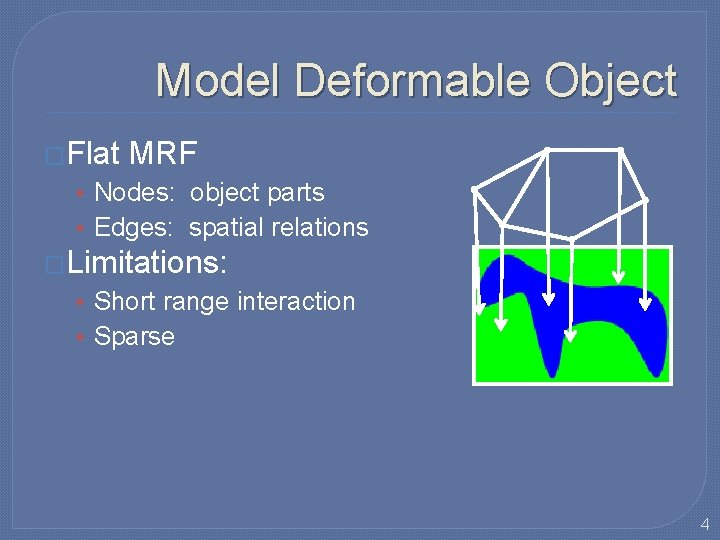

Modeling a Deformable Object
- Flat MRF
  - Nodes: object parts
  - Edges: spatial relations
- Limitations:
  - Short-range interactions only
  - Sparse

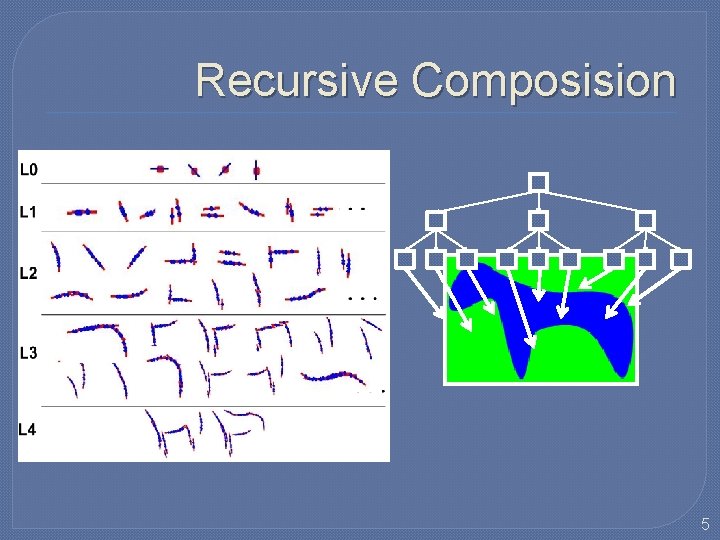

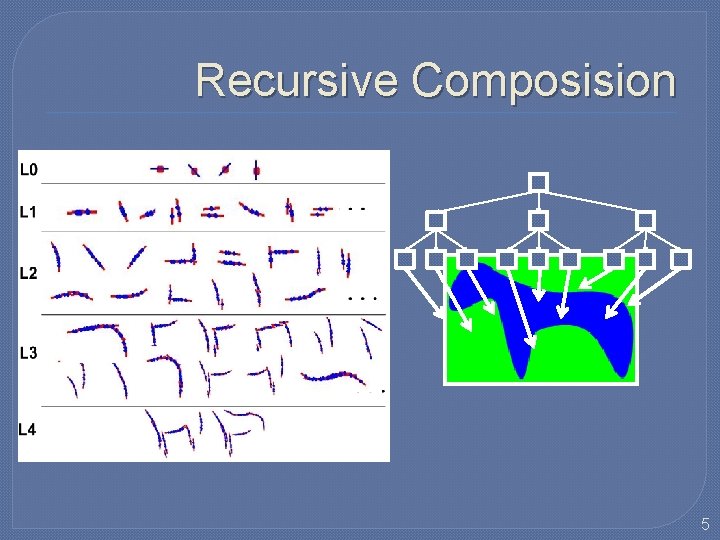

Recursive Composition

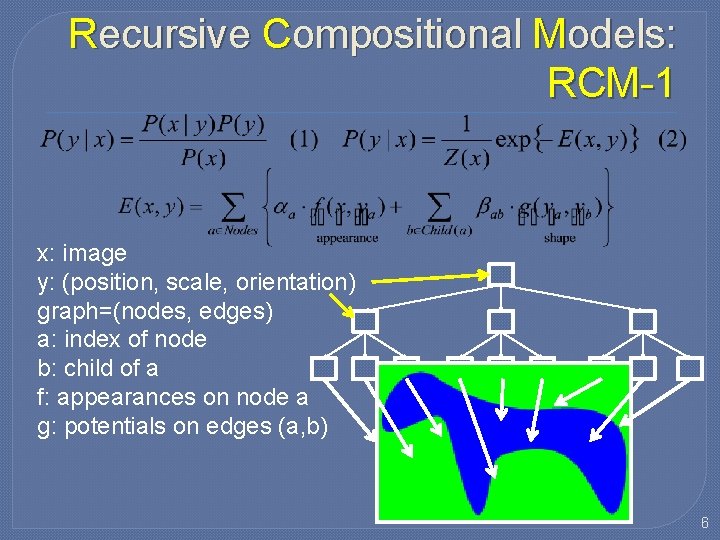

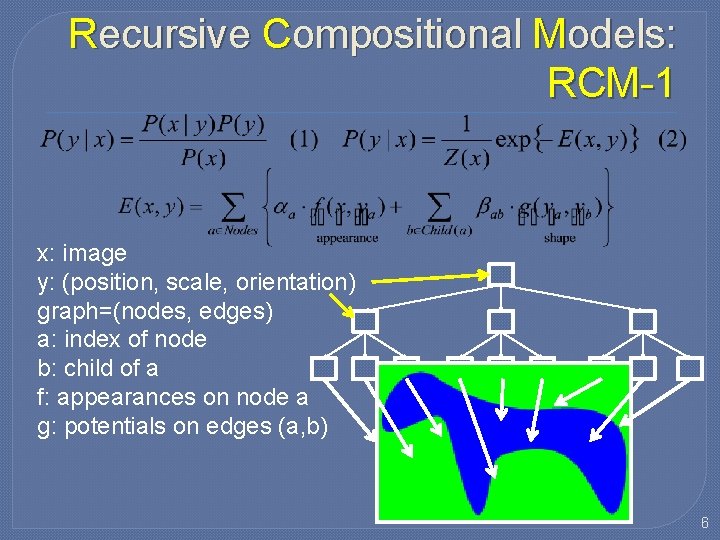

Recursive Compositional Models: RCM-1
- x: image
- y: (position, scale, orientation)
- graph = (nodes, edges)
- a: index of a node; b: a child of a
- f: appearance potentials on node a
- g: potentials on edges (a, b)
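To make the notation concrete, the sketch below scores one given parse: it sums the node potentials f over all nodes and the edge potentials g over all parent-child edges (a, b). The `Node` class, the tuple layout of the state, and the toy potentials are assumptions for illustration; the image x is assumed to be captured inside `f`.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Assumed layout of one node's state y_a: (x_position, y_position, scale, orientation)
State = Tuple[float, float, float, float]


@dataclass
class Node:
    """A node a of the hierarchy; `children` lists the nodes b with edges (a, b)."""
    name: str
    children: List["Node"] = field(default_factory=list)


def parse_score(node: Node,
                y: Dict[str, State],
                f: Callable[[str, State], float],
                g: Callable[[str, str, State, State], float]) -> float:
    """Sum f over every node and g over every edge of the subtree rooted at `node`.

    f(a, y_a) stands in for the appearance potential on node a (the image x is
    assumed to be captured inside f); g(a, b, y_a, y_b) stands in for the
    potential on edge (a, b). Both are hypothetical callables.
    """
    total = f(node.name, y[node.name])
    for child in node.children:
        total += g(node.name, child.name, y[node.name], y[child.name])
        total += parse_score(child, y, f, g)
    return total
```

With constant potentials f = 1 and g = -0.5, a root with two leaves scores 3 · 1 + 2 · (-0.5) = 2.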

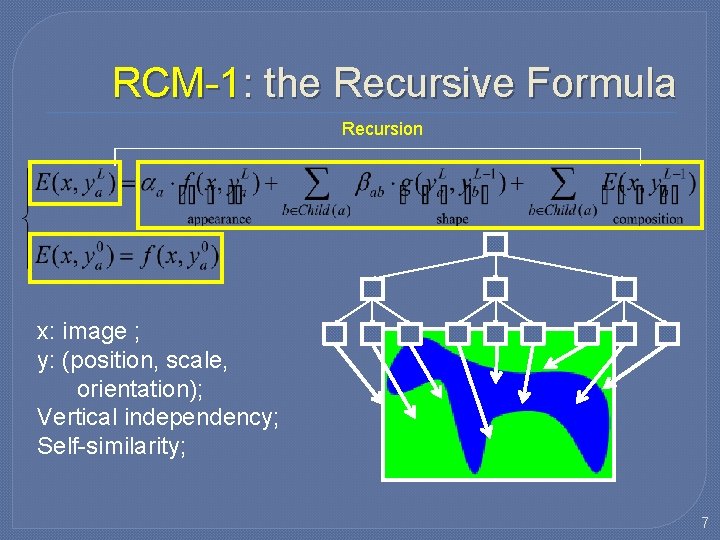

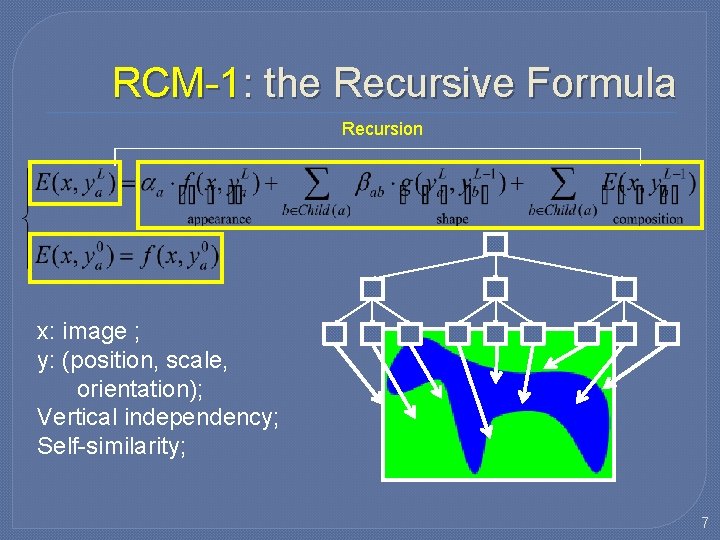

RCM-1: the Recursive Formula
- Recursion
- x: image; y: (position, scale, orientation)
- Vertical independence
- Self-similarity
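One way to write the recursion with the symbols defined for RCM-1 (f on nodes, g on edges (a, b), and ch(a) for the children of a) is shown below. This is an assumed form consistent with those definitions, not a transcription of the slide; E_a is a score to be maximized, and for a leaf the sum is empty so E_a = f_a.

$$E_a(x, y_a) = f_a(x, y_a) + \sum_{b \in \mathrm{ch}(a)} \Big[ g_{a,b}(y_a, y_b) + E_b(x, y_b) \Big]$$

$$E^{*}_a(x, y_a) = f_a(x, y_a) + \sum_{b \in \mathrm{ch}(a)} \max_{y_b} \Big[ g_{a,b}(y_a, y_b) + E^{*}_b(x, y_b) \Big]$$

Unrolling the first line from the root gives the sum of f over all nodes plus g over all edges. Vertical independence (the children are independent given their parent's state y_a) lets the max over each child's state be taken separately, which yields the second line; self-similarity means the same form applies at every level.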

Recursive Composition
- Representation
  - Recursive Compositional Models (RCMs)
- Inference
  - Recursive Optimization
- Learning
  - Supervised Parameter Estimation
  - Unsupervised Recursive Dictionary Learning

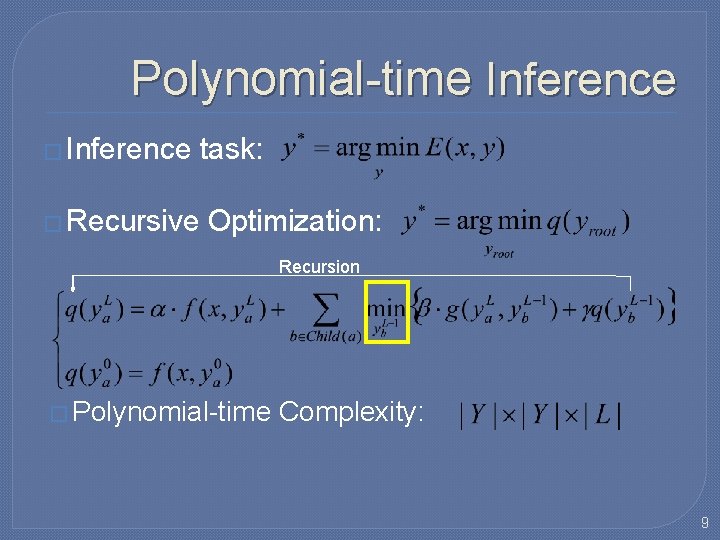

Polynomial-time Inference
- Inference task: find the best parse y for an image x
- Recursive optimization
- Polynomial-time complexity
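A minimal sketch of recursive optimization, assuming each node takes one of a finite set of discrete states (for example, a quantized grid of position, scale and orientation) and that the model is a tree with node potentials f and edge potentials g. It is standard max-sum dynamic programming; `best_parse` and the toy potentials are hypothetical. Each edge costs O(|S|^2) evaluations of g for |S| states, so the total is O(|E| · |S|^2), polynomial in both the number of nodes and the number of states.

```python
from typing import Callable, Dict, Hashable, List, Sequence, Tuple

Children = Dict[str, List[str]]


def best_parse(root: str,
               children: Children,
               states: Sequence[Hashable],
               f: Callable[[str, Hashable], float],
               g: Callable[[str, str, Hashable, Hashable], float]
               ) -> Tuple[float, Dict[str, Hashable]]:
    """Exact max-sum dynamic programming over a tree-structured model."""
    table: Dict[str, Dict[Hashable, float]] = {}
    back: Dict[str, Dict[Hashable, Dict[str, Hashable]]] = {}

    def upward(a: str) -> None:
        # Bottom-up pass: table[a][y] is the best score of the subtree at a with y_a = y.
        for b in children.get(a, []):
            upward(b)
        table[a], back[a] = {}, {}
        for y in states:
            score, choice = f(a, y), {}
            for b in children.get(a, []):
                # Maximize over the child's state: O(|states|) work per (a, b, y).
                yb = max(states, key=lambda s: g(a, b, y, s) + table[b][s])
                score += g(a, b, y, yb) + table[b][yb]
                choice[b] = yb
            table[a][y], back[a][y] = score, choice

    upward(root)
    y_root = max(states, key=lambda s: table[root][s])
    parse, stack = {root: y_root}, [root]
    while stack:
        # Top-down pass: read off each child's stored best state given its parent.
        a = stack.pop()
        for b in children.get(a, []):
            parse[b] = back[a][parse[a]][b]
            stack.append(b)
    return table[root][y_root], parse
```

The bottom-up pass mirrors the recursion (each node only needs its children's tables), and the top-down pass recovers the argmax without re-searching.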

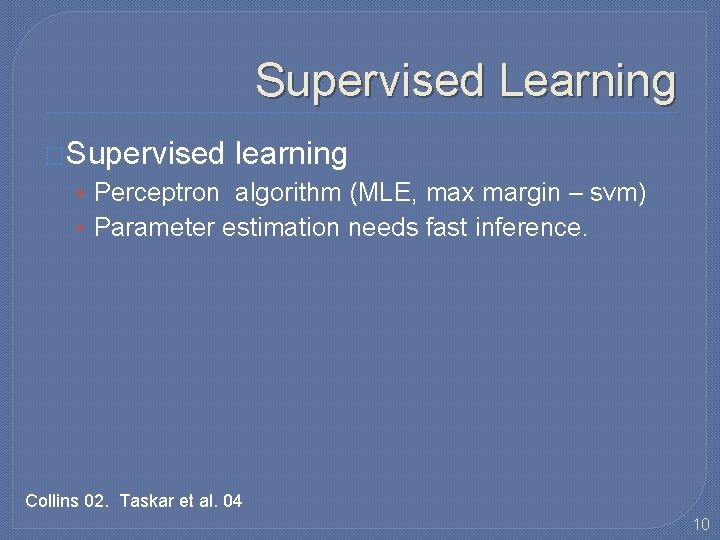

Supervised Learning
- Supervised learning
  - Perceptron algorithm (MLE, max margin/SVM)
  - Parameter estimation needs fast inference

(Collins 02; Taskar et al. 04)

Supervised Learning by the Perceptron Algorithm
- Goal: estimate the model parameters.
- Input: a set of training images with ground truth. Initialize the parameter vector.
- Training algorithm (Collins 02):
  - Loop over the training samples, i = 1 to N:
    - Step 1: find the best parse using inference.
    - Step 2: update the parameters.
  - End of loop.
- Inference is critical for learning.
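The training loop above can be sketched as a Collins-style structured perceptron. This is a minimal sketch assuming a linear score w · phi(x, y) with a hypothetical feature map `phi` and a small candidate set searched by brute force; in an RCM, Step 1 would instead call the recursive optimization.

```python
from typing import Callable, Hashable, Iterable, List, Sequence, Tuple

Vector = List[float]


def dot(w: Vector, v: Vector) -> float:
    return sum(wi * vi for wi, vi in zip(w, v))


def structured_perceptron(data: Sequence[Tuple[object, Hashable]],
                          candidates: Callable[[object], Iterable[Hashable]],
                          phi: Callable[[object, Hashable], Vector],
                          epochs: int = 10) -> Vector:
    """Collins-style perceptron for a linear score w . phi(x, y)."""
    x0, y0 = data[0]
    w = [0.0] * len(phi(x0, y0))  # initialize the parameter vector
    for _ in range(epochs):
        for x, y_true in data:  # loop over training samples i = 1..N
            # Step 1: inference with the current parameters (brute force here).
            y_hat = max(candidates(x), key=lambda y: dot(w, phi(x, y)))
            # Step 2: move w toward the ground truth and away from the mistake.
            if y_hat != y_true:
                w = [wi + a - b for wi, a, b in zip(w, phi(x, y_true), phi(x, y_hat))]
    return w
```

The update only fires on mistakes, so the cost of learning is dominated by how fast Step 1 can be solved, which is why fast inference matters here.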

Recursive Composition
- Representation
  - Recursive Compositional Models (RCMs)
- Inference
  - Recursive Optimization (polynomial-time)
- Learning
  - Supervised Parameter Estimation
- RCM-1: Deformable Object

![RCM-1: Multi-level Potentials. Potentials for appearance: Gabor, Edge, …](https://slidetodoc.com/presentation_image_h2/c9c484e88d2601456f751aedb6c3a431/image-13.jpg)

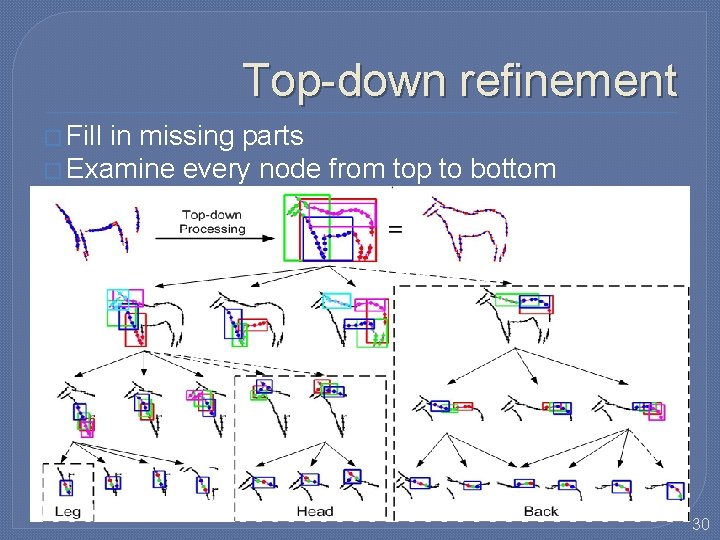

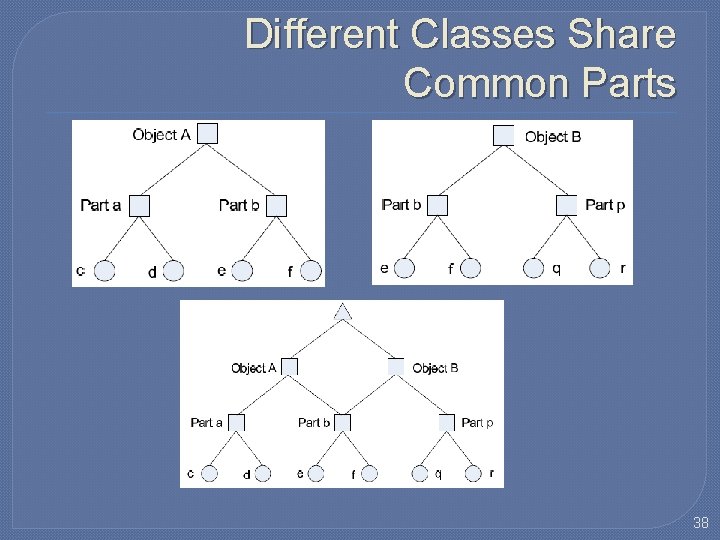

RCM-1: Multi-level Potentials
- Potentials for appearance: [Gabor, Edge, …]

RCM-1: Multi-level Potentials
- Potentials for shape: triplet descriptors of (position, scale, orientation)
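The slide names triplet descriptors but not their exact features. As a hedged sketch, assuming the descriptor should be unchanged when a triplet of parts is translated, rotated or uniformly scaled, one option is to use two interior angles of the triangle formed by the three part positions plus a log side-length ratio. `triplet_descriptor` is hypothetical and ignores the scale and orientation entries of y.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def interior_angle(a: Point, b: Point, c: Point) -> float:
    """Angle at vertex a between the rays a->b and a->c, in [0, pi]."""
    v1 = (b[0] - a[0], b[1] - a[1])
    v2 = (c[0] - a[0], c[1] - a[1])
    cross = v1[0] * v2[1] - v1[1] * v2[0]
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    return math.atan2(abs(cross), dot)


def triplet_descriptor(p1: Point, p2: Point, p3: Point) -> Tuple[float, float, float]:
    """Hypothetical shape descriptor of three (non-coincident) part positions.

    Two interior angles fix the triangle's shape; the log ratio of two side
    lengths is redundant but convenient. All three values are unchanged by
    translation, rotation and uniform scaling of the triplet.
    """
    return (interior_angle(p1, p2, p3),
            interior_angle(p2, p3, p1),
            math.log(math.dist(p1, p2) / math.dist(p1, p3)))
```

Because the descriptor is invariant to these transformations, a shape potential built on it would score the same configuration consistently wherever and at whatever size the object appears.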

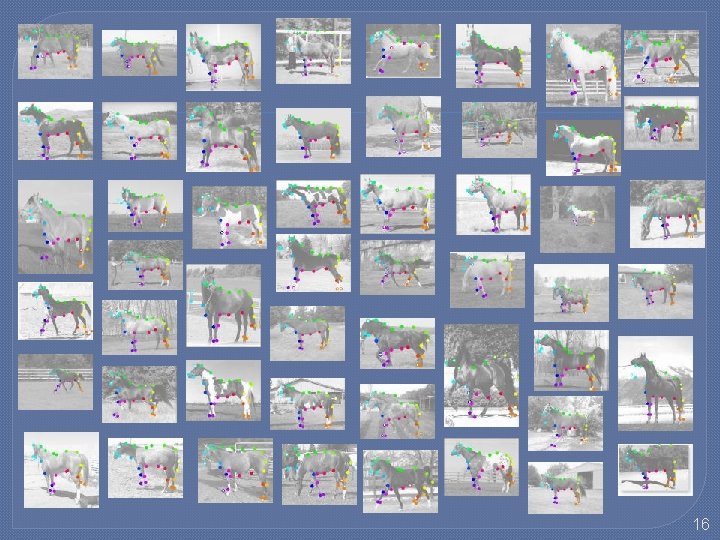

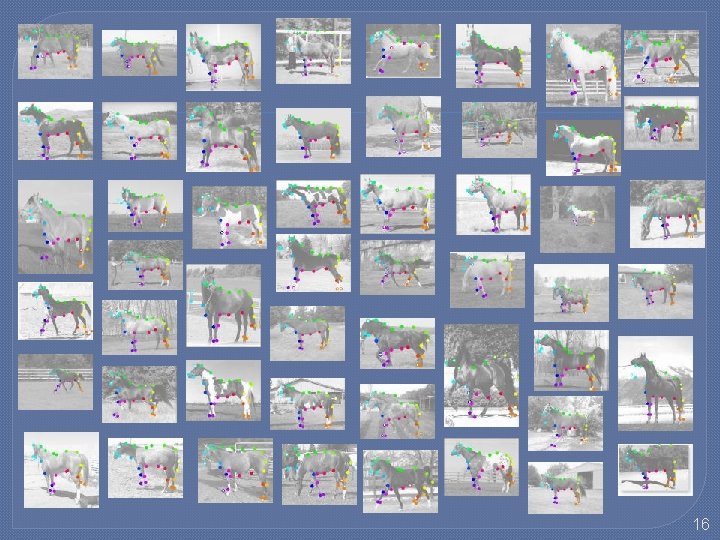

Inference Results after Supervised Learning


Segmentation Results

Evaluations: Segmentation and Parsing
- Segmentation (accuracy of pixel labeling): the proportion of correct pixel labels (object or non-object)
- Parsing (average position error of matching): the average distance between the positions of the leaf nodes in the ground truth and those estimated in the parse tree

| Method | Testing images | Segmentation (%) | Parsing | Speed |
|---|---|---|---|---|
| RCM-1 | 228 | 94.7 | 16 | 23 s |
| Ren (Berkeley) | 172 | 91 | | |
| Winn (LOCUS) | 200 | 93 | | |
| Levin and Weiss | N/A | 95 | | |
| Kumar (OBJ CUT) | 5 | 96 | | |
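Both metrics as defined above are simple to compute. A minimal sketch, assuming the masks are given as flat sequences of 0/1 labels and the leaf positions as (x, y) pairs listed in matching order; the function names are hypothetical, and the slide does not state the unit of the parsing error.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def segmentation_accuracy(pred: Sequence[int], truth: Sequence[int]) -> float:
    """Proportion of pixels whose object/non-object label matches the ground truth."""
    if len(pred) != len(truth) or len(truth) == 0:
        raise ValueError("masks must be non-empty and the same size")
    correct = sum(1 for p, t in zip(pred, truth) if p == t)
    return correct / len(truth)


def average_position_error(pred_leaves: Sequence[Point],
                           true_leaves: Sequence[Point]) -> float:
    """Mean Euclidean distance between corresponding leaf-node positions."""
    if len(pred_leaves) != len(true_leaves) or len(true_leaves) == 0:
        raise ValueError("leaf lists must be non-empty and the same length")
    total = sum(math.dist(p, t) for p, t in zip(pred_leaves, true_leaves))
    return total / len(true_leaves)
```

For example, one wrong label out of four pixels gives an accuracy of 0.75, and leaf errors of 0 and 5 average to 2.5.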

Recursive Composition
- Modeling (representation)
  - Recursive Compositional Models (RCMs)
- Inference (computing)
  - Recursive Optimization (polynomial-time)
- Learning
  - Supervised Parameter Estimation
  - Unsupervised Recursive Learning
- RCM-1: Deformable Object

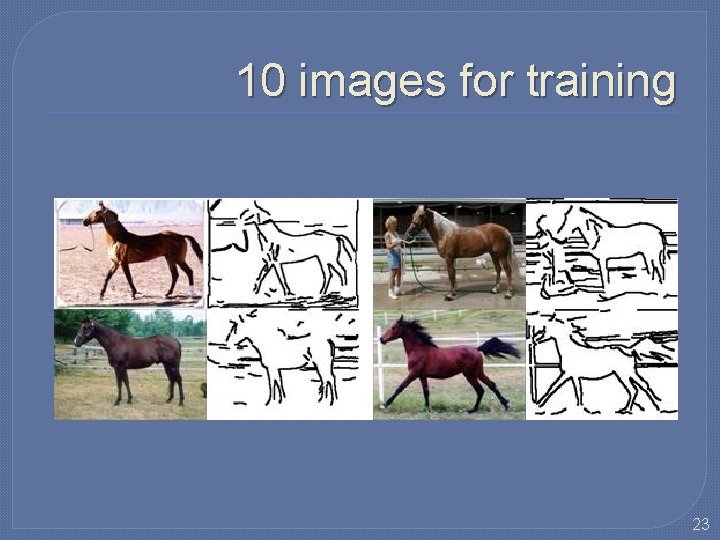

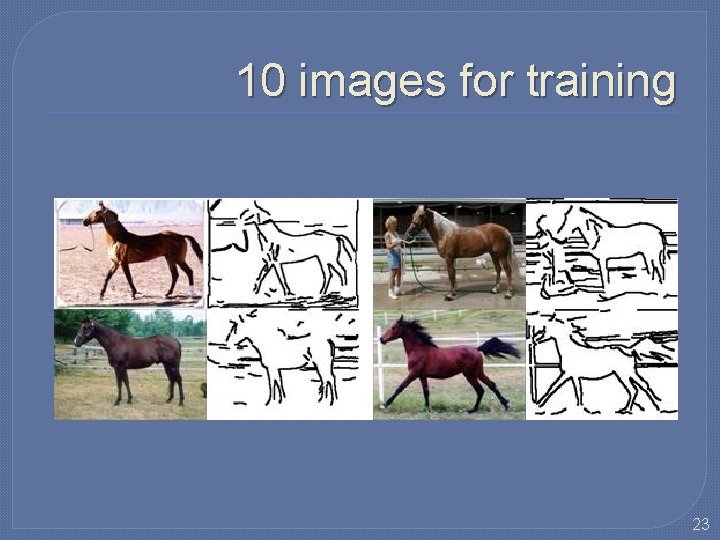

Unsupervised Learning
- Task: given 10 training images with no labeling, no alignment, and highly ambiguous features:
  - induce the structure (nodes and edges);
  - estimate the parameters.
- The correspondence between images is unknown.
- Combinatorial explosion problem

Recursive Dictionary Learning
- Multi-level dictionary (layer-wise greedy)
- Bottom-up and top-down recursive procedure
- Three principles:
  - Recursive composition
  - Suspicious coincidence (Barlow 94)
  - Competitive exclusion
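Suspicious coincidence asks whether two parts co-occur more often than they would if they were independent. A minimal sketch, assuming co-occurrence is counted per training image (a simplification: it ignores the spatial layout of the parts) and using the ratio P(a, b) / (P(a) P(b)) against a hypothetical threshold:

```python
from collections import Counter
from itertools import combinations
from typing import Dict, List, Set, Tuple


def suspicious_pairs(images: List[Set[str]],
                     threshold: float = 1.5) -> Dict[Tuple[str, str], float]:
    """Return part pairs whose ratio P(a, b) / (P(a) P(b)) is at least `threshold`.

    Each element of `images` is the set of part types detected in one image.
    Independent parts give a ratio near 1.
    """
    n = len(images)
    single = Counter(part for parts in images for part in parts)
    joint = Counter(pair for parts in images
                    for pair in combinations(sorted(parts), 2))
    result = {}
    for (a, b), count in joint.items():
        ratio = (count / n) / ((single[a] / n) * (single[b] / n))
        if ratio >= threshold:
            result[(a, b)] = ratio
    return result
```

Pairs that pass the test become candidate compositions for the next level; pairs near a ratio of 1 carry no evidence of belonging together.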

10 images for training

Bottom-up Learning
- Composition
- Clustering
- Suspicious coincidence
- Competitive exclusion
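Competitive exclusion keeps only one of several candidate compositions that explain the same image evidence. A hedged sketch, assuming each candidate comes with a score and the set of image elements it covers, and that overlap is measured as intersection over union (both assumptions); this greedy scheme resembles non-maximum suppression.

```python
from typing import List, Set, Tuple

Candidate = Tuple[str, float, Set[int]]  # (name, score, covered image elements)


def competitive_exclusion(candidates: List[Candidate],
                          max_overlap: float = 0.5) -> List[str]:
    """Greedily keep high-scoring candidates that do not explain the same evidence.

    Overlap is intersection over union of the covered sets, which are assumed
    to be non-empty.
    """
    kept: List[Candidate] = []
    for cand in sorted(candidates, key=lambda c: c[1], reverse=True):
        covered = cand[2]
        if all(len(covered & k[2]) / len(covered | k[2]) <= max_overlap for k in kept):
            kept.append(cand)
    return [name for name, _, _ in kept]
```

Lowering `max_overlap` prunes the dictionary more aggressively; raising it keeps more redundant compositions.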

The Dictionary: From Generic Parts to Object Structures
- Unified representation (RCMs) and learning
- Bridges the gap between generic features and specific object structures

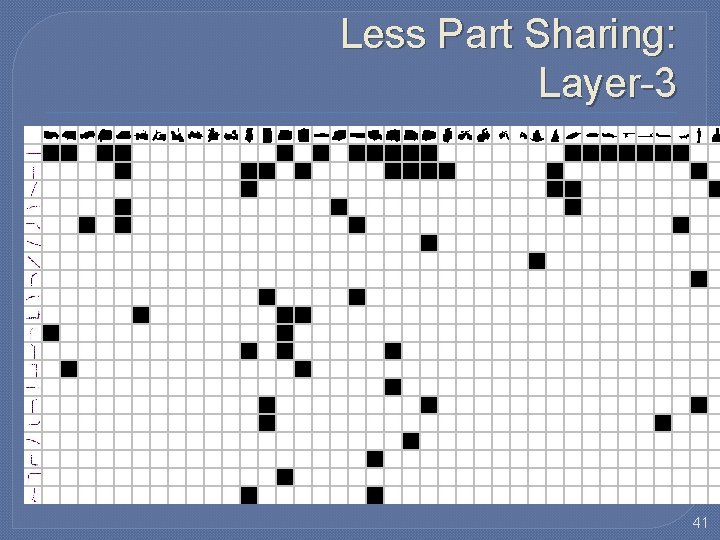

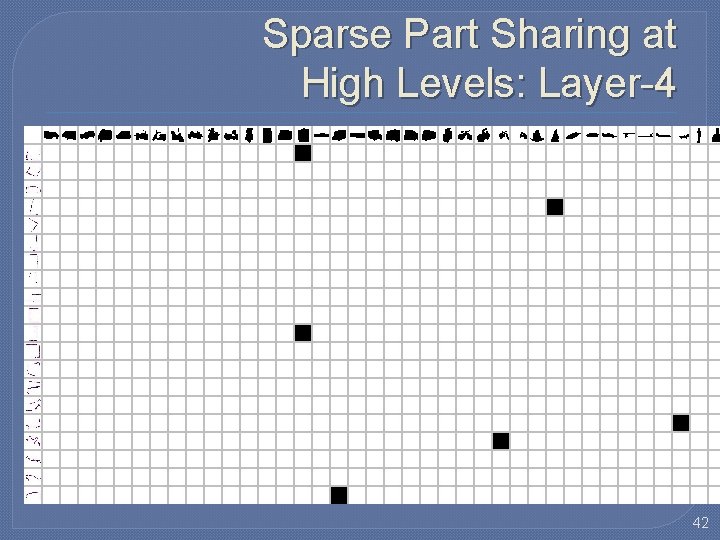

Dictionary Size, Part Sharing and Computational Complexity

| Level | Composition | Clusters | Suspicious Coincidence | Competitive Exclusion | Seconds |
|---|---|---|---|---|---|
| 0 | | | | 4 | 1 |
| 1 | 167,431 | 14,684 | 262 | 48 | 117 |
| 2 | 2,034,851 | 741,662 | 995 | 116 | 254 |
| 3 | 2,135,467 | 1,012,777 | 305 | 53 | 99 |
| 4 | 236,955 | 72,620 | 30 | 2 | 9 |

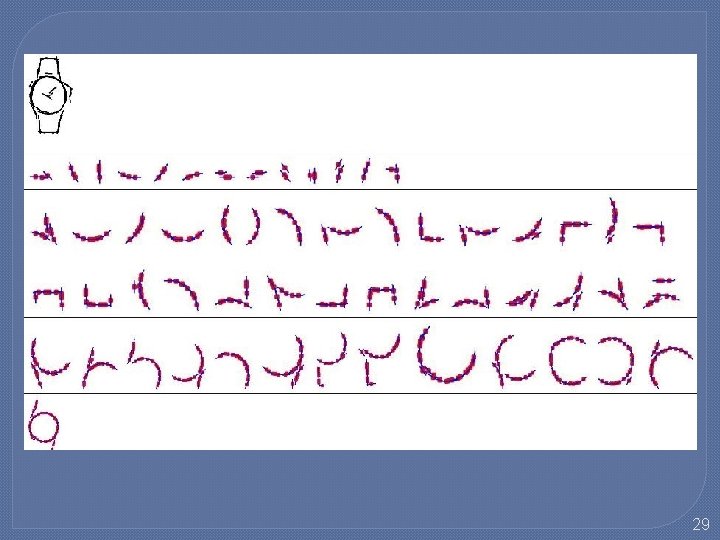


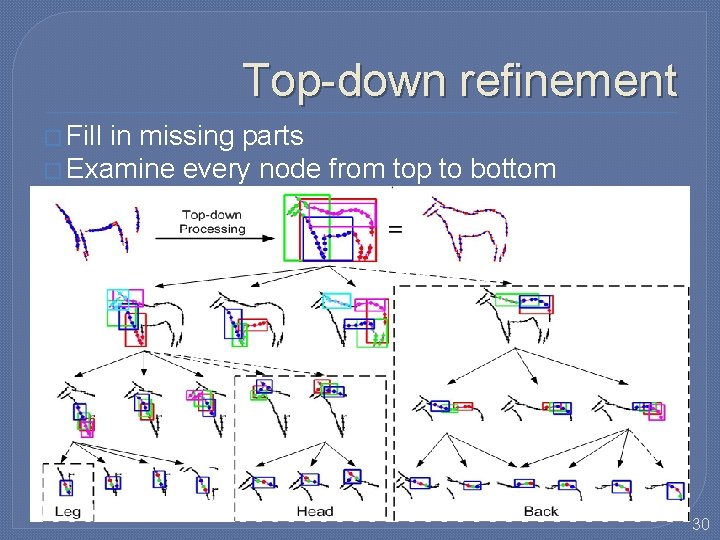

Top-down Refinement
- Fill in missing parts
- Examine every node from top to bottom

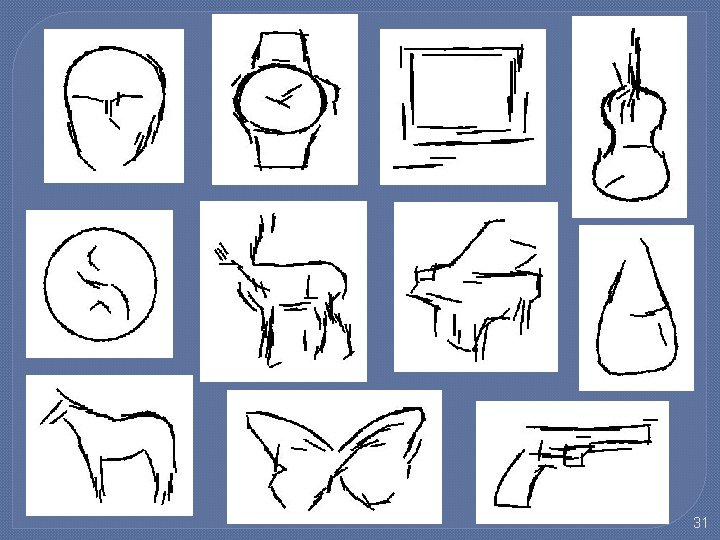

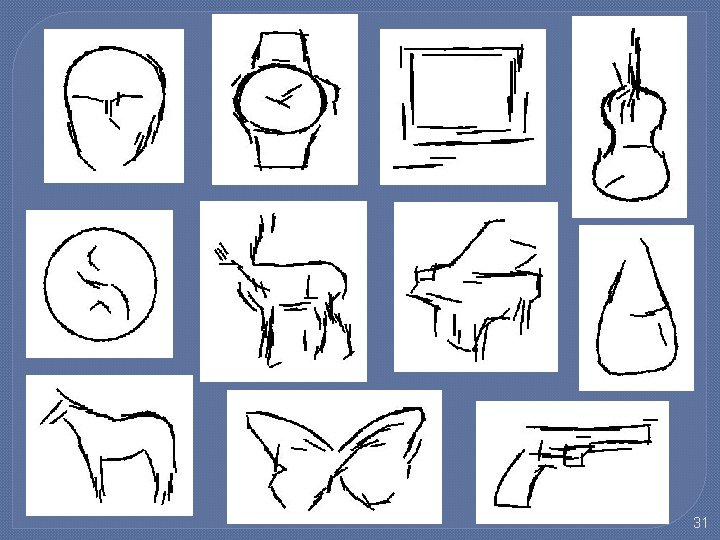


Evaluations of Unsupervised Learning

| Method | Testing images | Segmentation (%) | Parsing | Speed |
|---|---|---|---|---|
| Unsupervised | 316 | 93.3 | | 17 s |
| Supervised | 228 | 94.7 | 16 | 23 s |

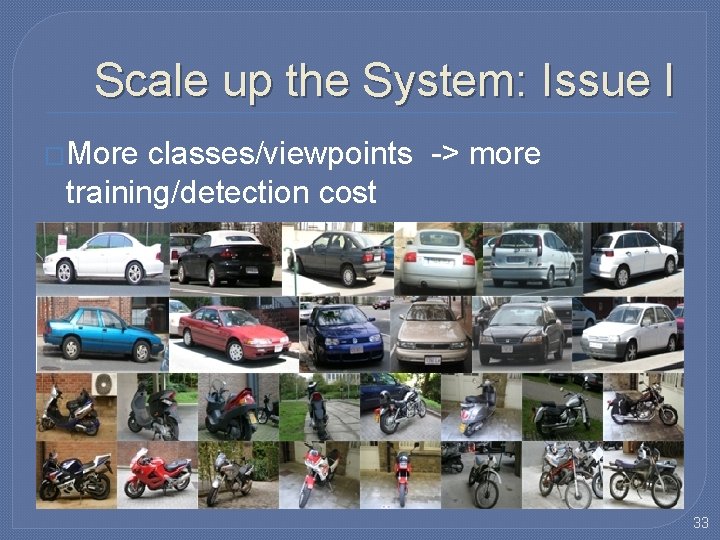

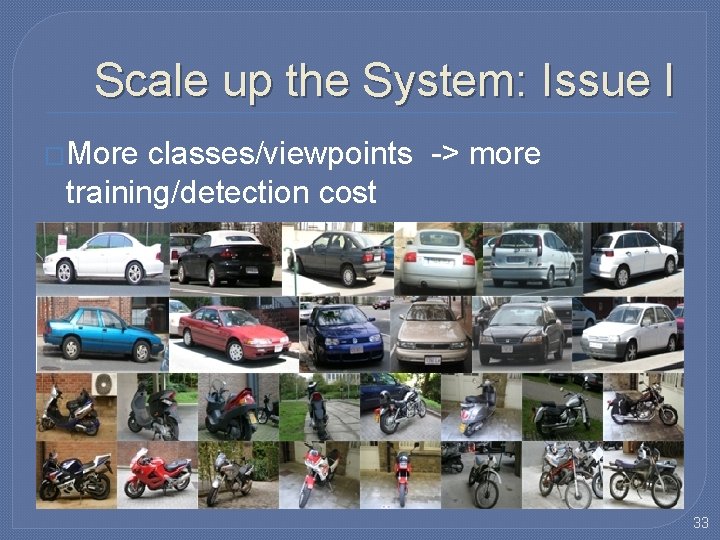

Scaling Up the System: Issue I
- More classes/viewpoints -> more training and detection cost

Scaling Up the System: Issue II
- Not enough data for rare viewpoints/classes

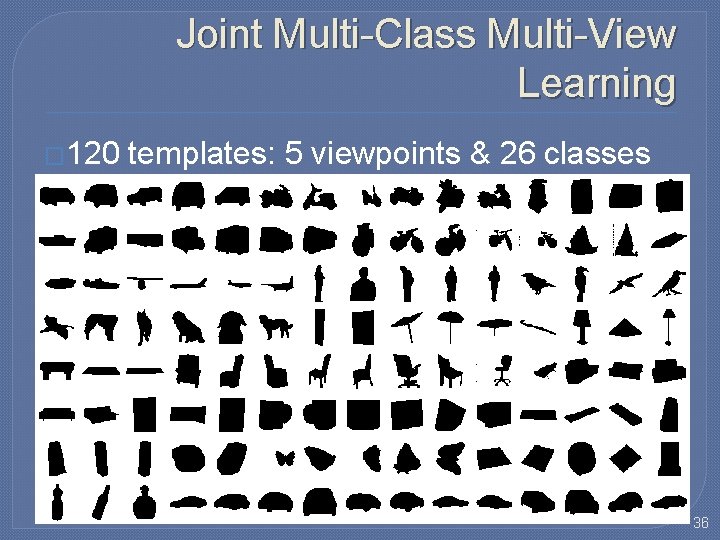

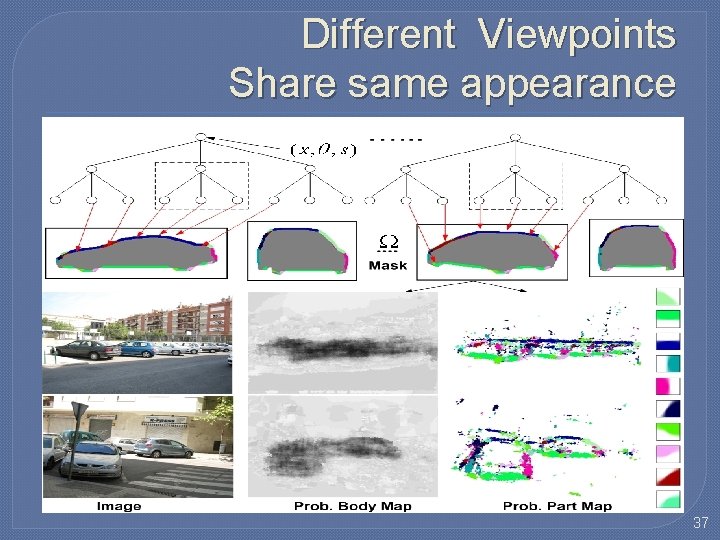

Our Strategy
- Joint multi-class multi-view learning
- Appearance sharing
- Part sharing

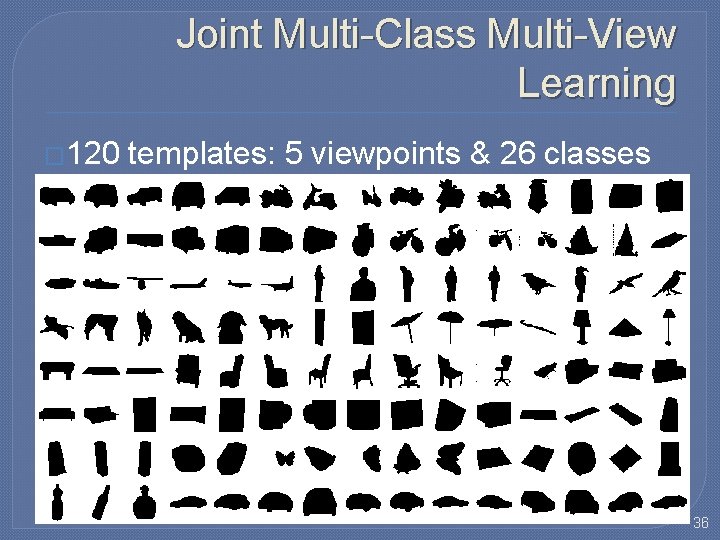

Joint Multi-Class Multi-View Learning
- 120 templates: 5 viewpoints and 26 classes

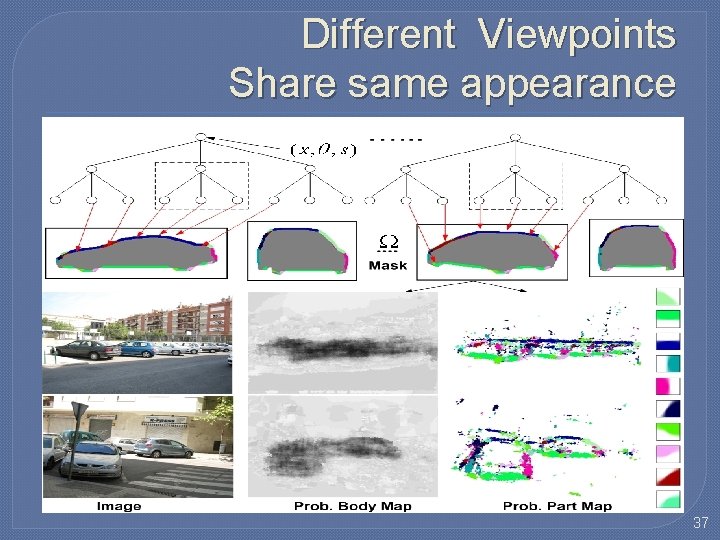

Different Viewpoints Share the Same Appearance

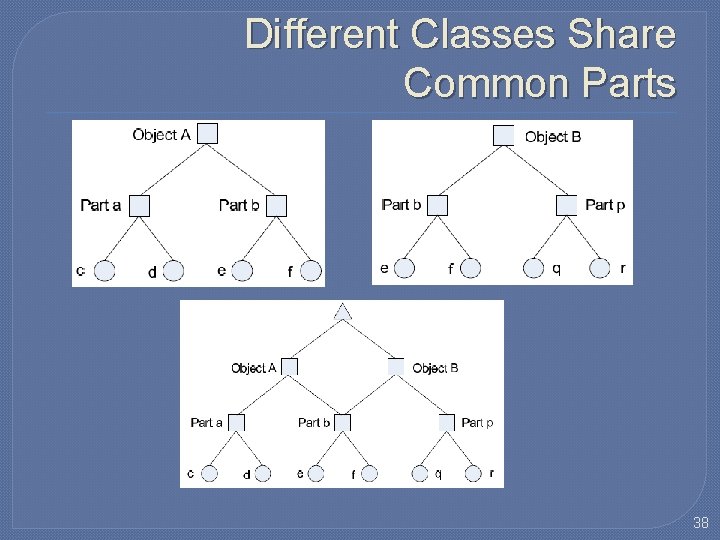

Different Classes Share Common Parts

Compact Hierarchical Dictionary

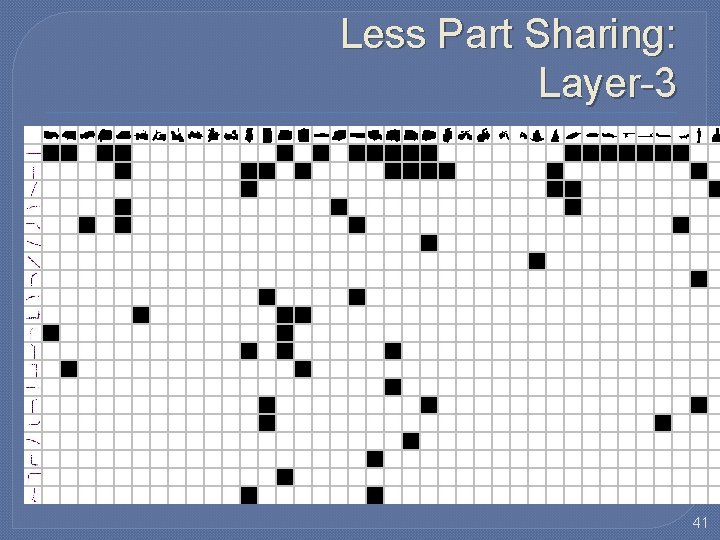

Dense Part Sharing at Low Levels: Layer-2

Less Part Sharing: Layer-3

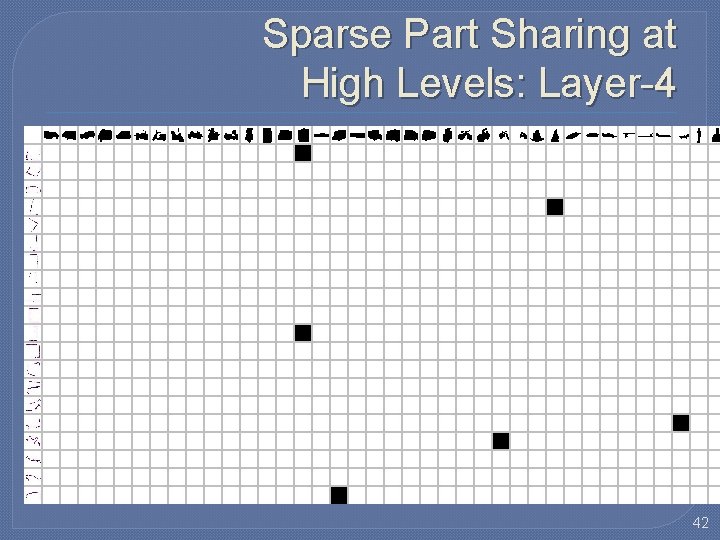

Sparse Part Sharing at High Levels: Layer-4

Reusable Parts: All Layers

The more classes/viewpoints, the more part sharing

Multi-View Single-Class Performance

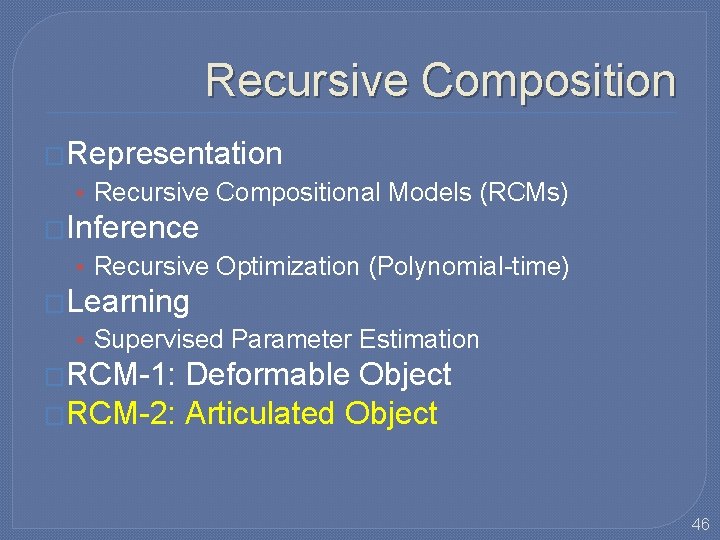

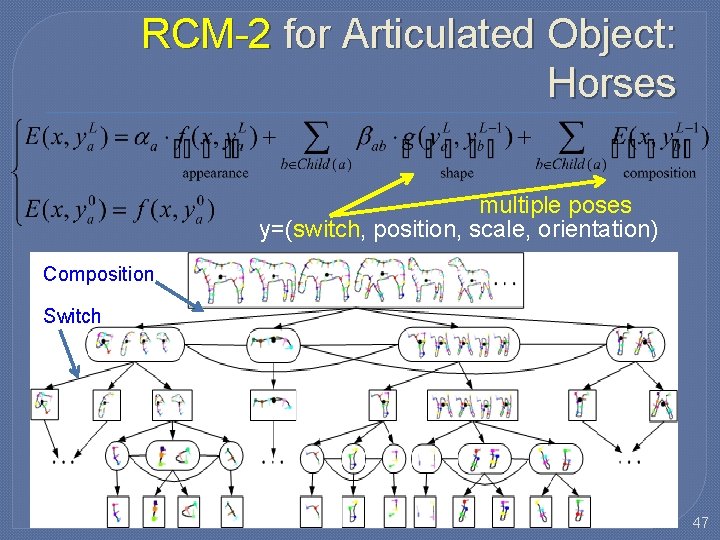

Recursive Composition
- Representation
  - Recursive Compositional Models (RCMs)
- Inference
  - Recursive Optimization (polynomial-time)
- Learning
  - Supervised Parameter Estimation
- RCM-1: Deformable Object
- RCM-2: Articulated Object

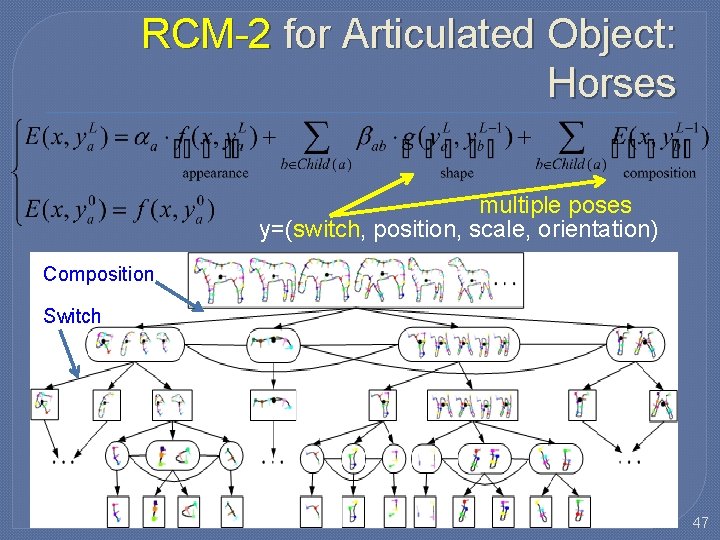

RCM-2 for Articulated Objects: Horses in Multiple Poses
- y = (switch, position, scale, orientation)
- Node types: composition and switch
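The switch variable turns the model into an AND/OR structure: a composition node combines all of its children, while a switch node chooses one alternative (for example, one horse pose). A minimal sketch of that choice, assuming each leaf already carries its best score (the position, scale and orientation entries of y are left out); the classes and the toy scores are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple, Union


@dataclass
class Leaf:
    name: str
    score: float  # assumed best score of this part, already maximized over its state


@dataclass
class And:
    """Composition node: all children are present."""
    name: str
    children: List["Node"]
    bonus: float = 0.0  # hypothetical potential of the composition itself


@dataclass
class Or:
    """Switch node: exactly one alternative is chosen."""
    name: str
    options: List["Node"]


Node = Union[Leaf, And, Or]


def best(node: Node) -> Tuple[float, Dict[str, int]]:
    """Return the best score and the switch settings that achieve it."""
    if isinstance(node, Leaf):
        return node.score, {}
    if isinstance(node, And):
        total, switches = node.bonus, {}
        for child in node.children:
            s, sw = best(child)
            total += s
            switches.update(sw)
        return total, switches
    # Or node: the switch selects the highest-scoring alternative.
    scored = [best(option) for option in node.options]
    k = max(range(len(scored)), key=lambda i: scored[i][0])
    return scored[k][0], {**scored[k][1], node.name: k}
```

Because a switch only adds a max over a few alternatives, it keeps inference polynomial while letting one model cover several poses.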

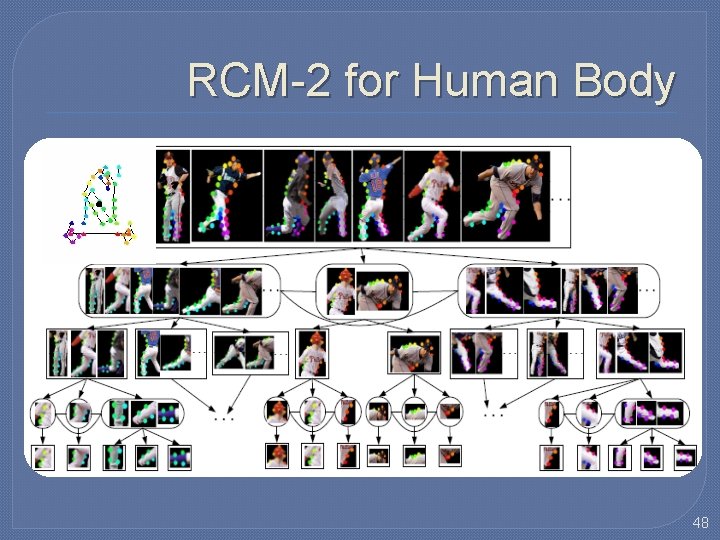

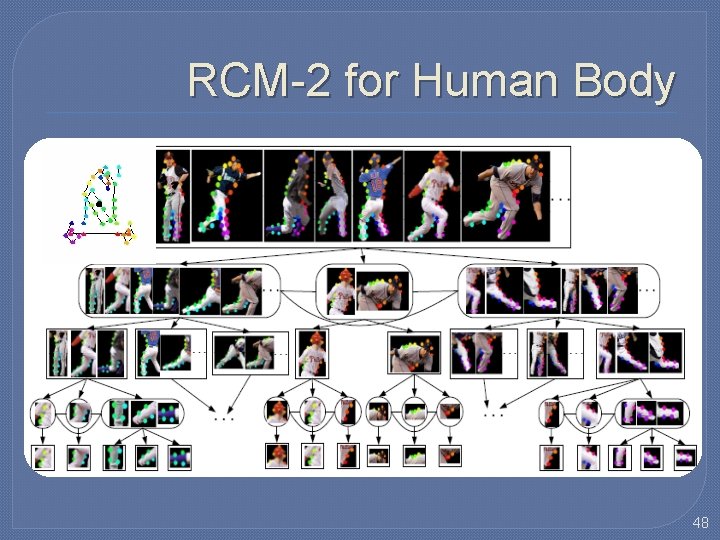

RCM-2 for the Human Body

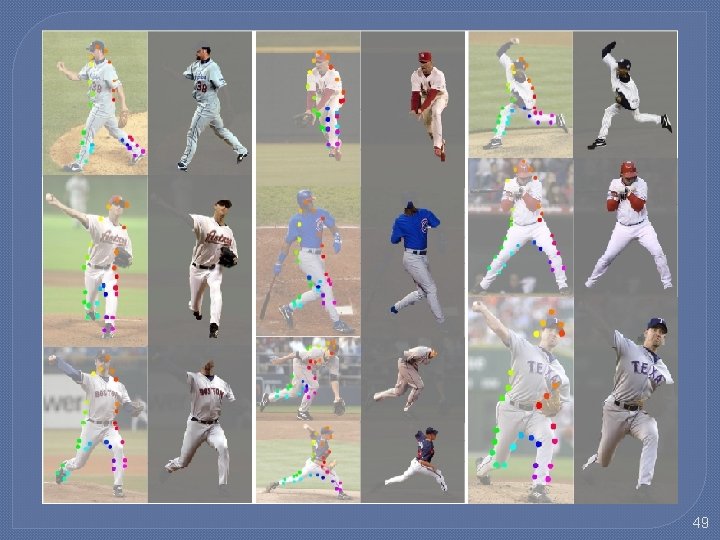

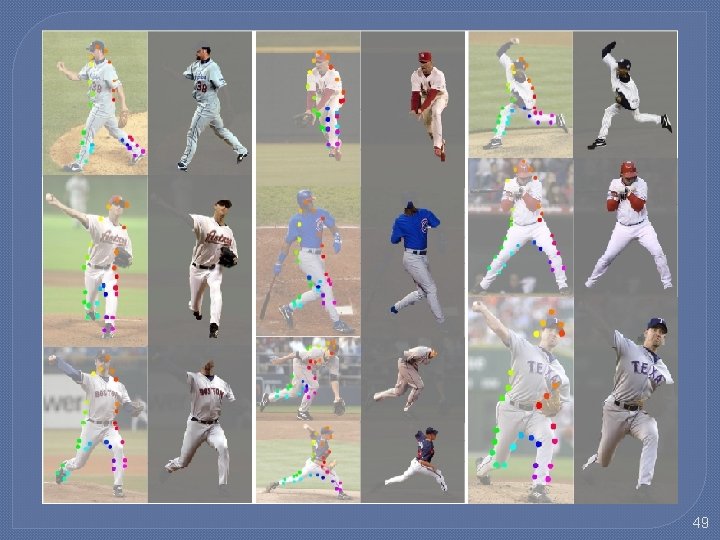


Recursive Composition
- Representation
  - Recursive Compositional Models (RCMs)
- Inference
  - Recursive Optimization (polynomial-time)
- Learning
  - Supervised Parameter Estimation
- RCM-1: Deformable Object
- RCM-2: Articulated Object
- RCM-3: Scene (Entire Image)

Image Scene Parsing
- Task: image segmentation and labeling

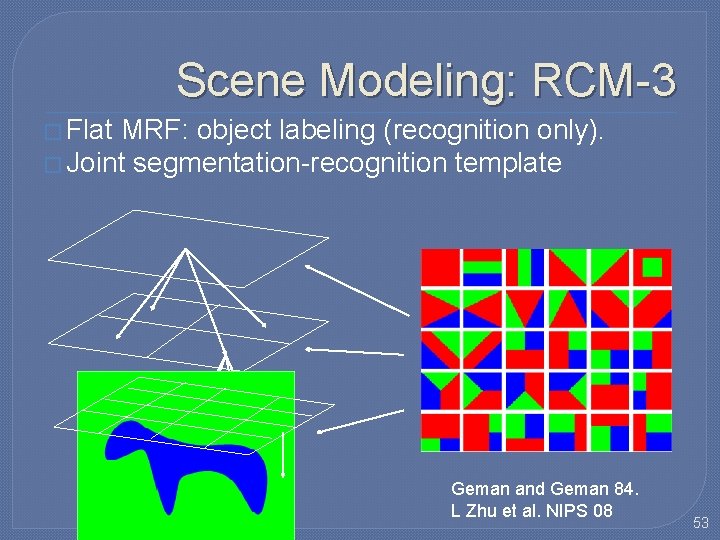

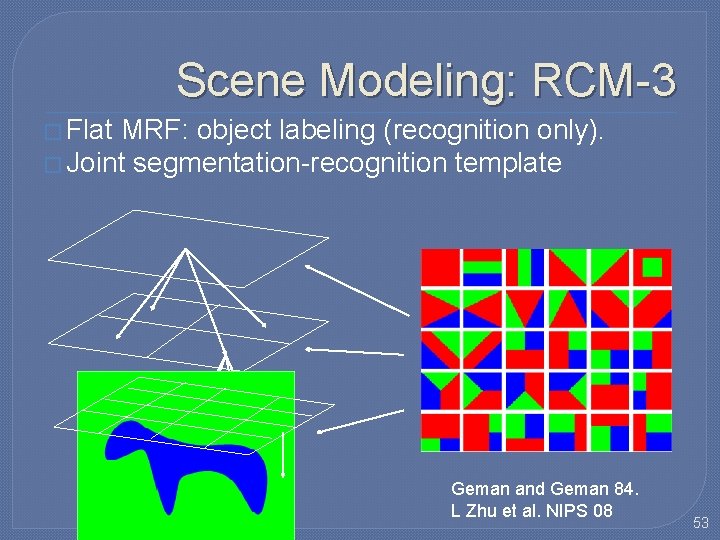

Scene Modeling: RCM-3
- Flat MRF: object labeling (recognition only)
- Lacks long-range interactions
- Lacks region-level properties
- High-order potentials -> heavy computation

(Geman and Geman 84; L. Zhu et al., NIPS 08)

Scene Modeling: RCM-3
- Flat MRF: object labeling (recognition only)
- Joint segmentation-recognition template

(Geman and Geman 84; L. Zhu et al., NIPS 08)

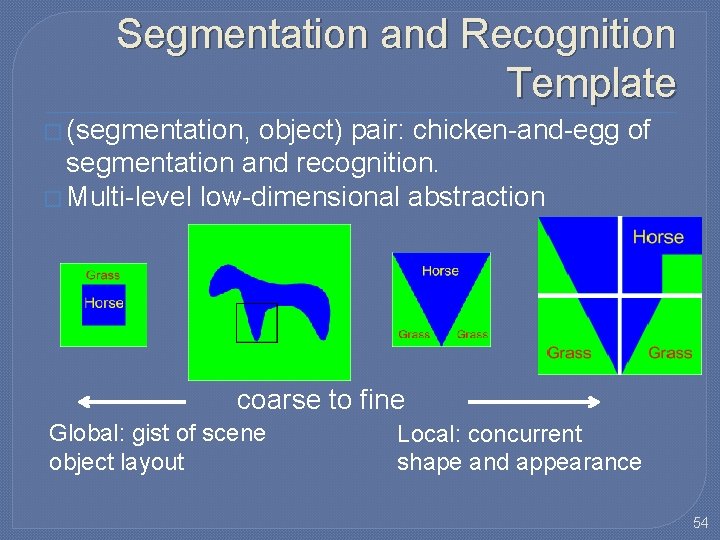

Segmentation and Recognition Template
- (segmentation, object) pair: addresses the chicken-and-egg problem of segmentation and recognition by representing both together
- Multi-level, low-dimensional abstraction, from coarse to fine:
  - Global: gist of the scene, object layout
  - Local: concurrent shape and appearance

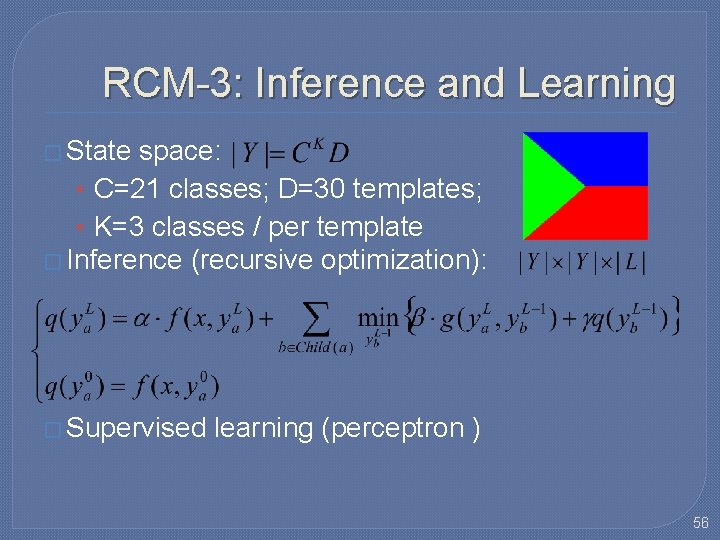

RCM-3 for Scene Parsing
- Recursion over y = (segmentation, object)
- f: appearance likelihood (object texture, color)
- g: object layout prior (homogeneity, layer-wise consistency, object co-occurrence, segmentation prior)

(Figure example labels: Horse, Grass.)

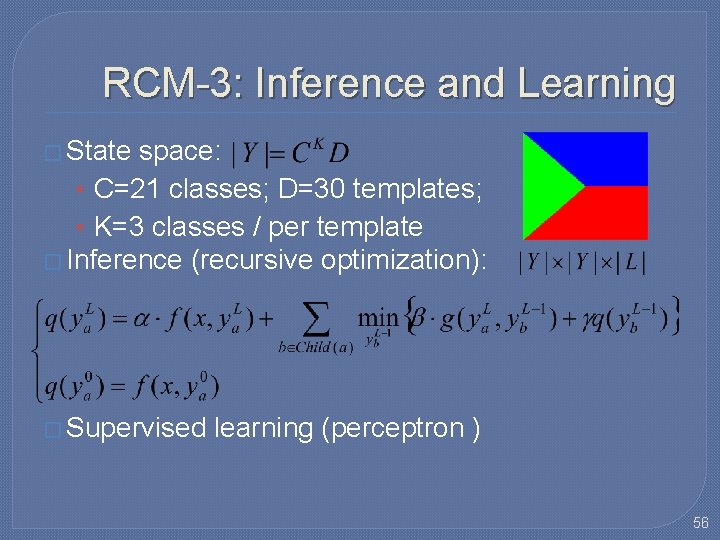

RCM-3: Inference and Learning
- State space:
  - C = 21 classes; D = 30 templates
  - K = 3 classes per template
- Inference: recursive optimization
- Supervised learning: perceptron
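To get a feel for the size of this state space, assume (an interpretation, not stated on the slide) that a node's state picks one of the D templates and assigns one of the C classes to each of the template's K regions. The number of states per node is then at most

$$|\mathcal{Y}_a| \le D \cdot C^{K} = 30 \cdot 21^{3} = 30 \cdot 9261 = 277{,}830$$

so, under that assumption, each per-node maximization in the recursive optimization ranges over a finite set of at most this size.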


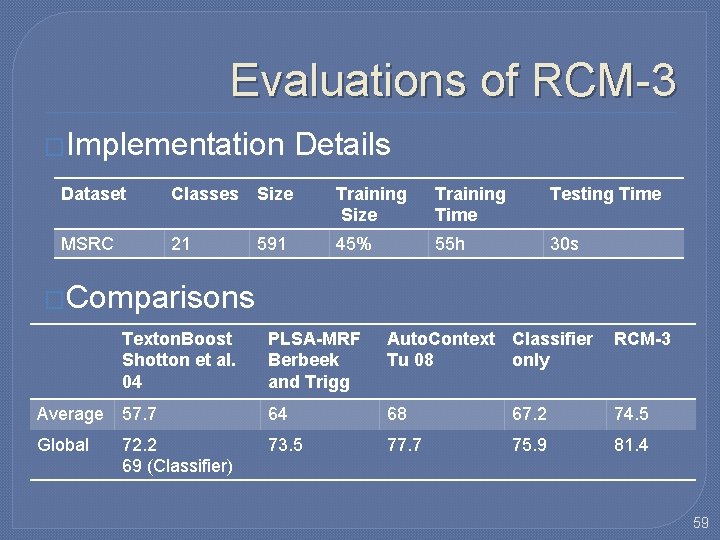

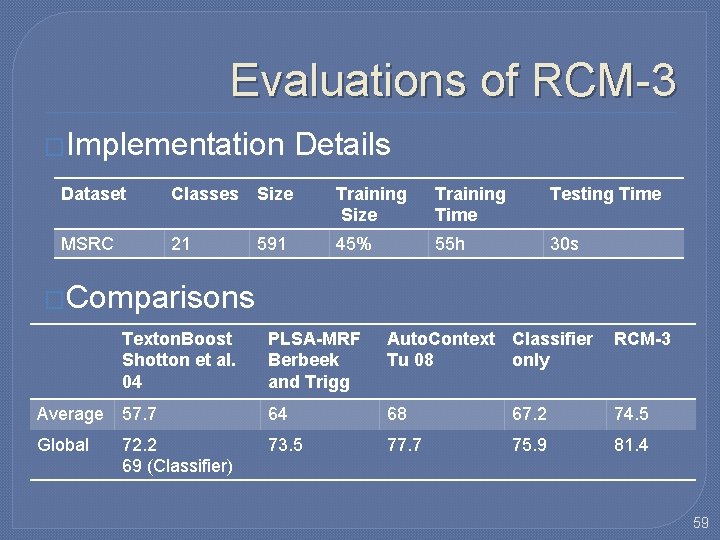

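Evaluations of RCM-3

Implementation details:

| Dataset | Classes | Size (images) | Training split | Training time | Testing time |
|---|---|---|---|---|---|
| MSRC | 21 | 591 | 45% | 55 h | 30 s |

Comparisons (accuracy, %):

| Method | Average | Global |
|---|---|---|
| TextonBoost (Shotton et al. 06) | 57.7 | 72.2 |
| PLSA-MRF (Verbeek and Triggs) | 64 | 73.5 |
| Auto-Context (Tu 08) | 68 | 77.7 |
| Classifier only | 67.2 | 75.9 |
| RCM-3 | 74.5 | 81.4 |

Also shown on the slide: 69 (Classifier).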

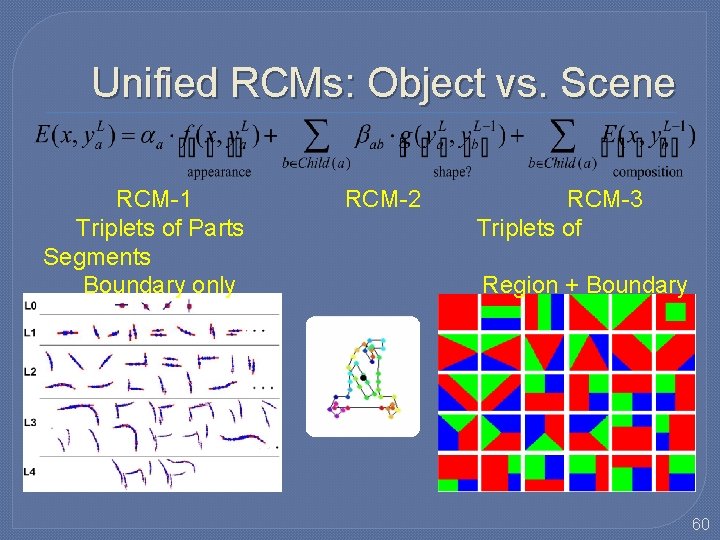

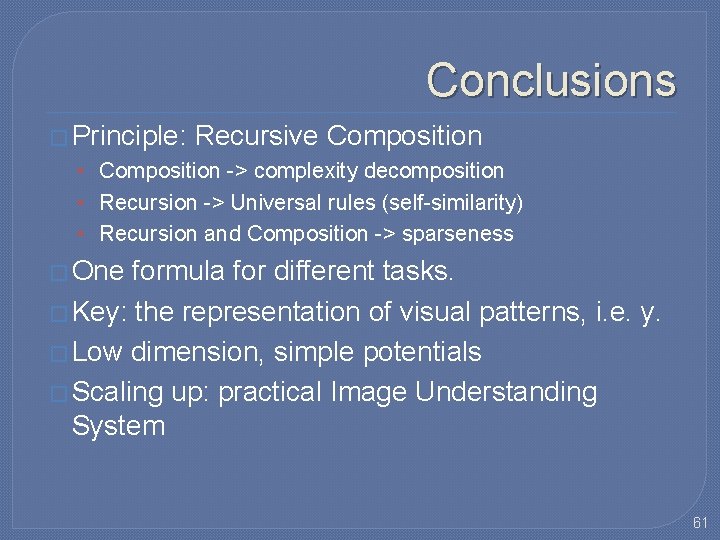

Unified RCMs: Object vs. Scene
- RCM-1 and RCM-2 (objects): triplets of parts; boundary only
- RCM-3 (scene): triplets of segments; region + boundary

Conclusions
- Principle: recursive composition
  - Composition -> complexity decomposition
  - Recursion -> universal rules (self-similarity)
  - Recursion and composition -> sparseness
- One formula for different tasks
- Key: the representation of visual patterns, i.e. y
- Low dimension, simple potentials
- Scaling up: a practical image understanding system

References
- Long Zhu, Yuanhao Chen, Antonio Torralba, William Freeman, Alan Yuille. Part and Appearance Sharing: Recursive Compositional Models for Multi-View Multi-Object Detection. CVPR 2010.
- Long Zhu, Yuanhao Chen, Yuan Lin, Chenxi Lin, Alan Yuille. Recursive Segmentation and Recognition Templates for 2D Parsing. NIPS 2008.
- Long Zhu, Chenxi Lin, Haoda Huang, Yuanhao Chen, Alan Yuille. Unsupervised Structure Learning: Hierarchical Recursive Composition, Suspicious Coincidence and Competitive Exclusion. ECCV 2008.
- Long Zhu, Yuanhao Chen, Yifei Lu, Chenxi Lin, Alan Yuille. Max Margin AND/OR Graph Learning for Parsing the Human Body. CVPR 2008.
- Long Zhu, Yuanhao Chen, Xingyao Ye, Alan Yuille. Structure-Perceptron Learning of a Hierarchical Log-Linear Model. CVPR 2008.
- Yuanhao Chen, Long Zhu, Chenxi Lin, Alan Yuille, Hongjiang Zhang. Rapid Inference on a Novel AND/OR Graph for Object Detection, Segmentation and Parsing. NIPS 2007.
- Long Zhu, Alan L. Yuille. A Hierarchical Compositional System for Rapid Object Detection. NIPS 2005.