RECURRENT NEURAL NETWORKS OR ASSOCIATIVE MEMORIES Ranga Rodrigo

- Slides: 11

February 24, 2014

INTRODUCTION

• In a recurrent network, the signal produced at the output is sent back to the network input.
• This circulation of the signal is called feedback.
• Neural networks with feedback are called recurrent neural networks.
• Examples of recurrent neural networks:
– Hopfield neural network
– Hamming neural network
– Real Time Recurrent Network (RTRN)
– Elman neural network
– Bidirectional Associative Memory (BAM)

HOPFIELD NEURAL NETWORK

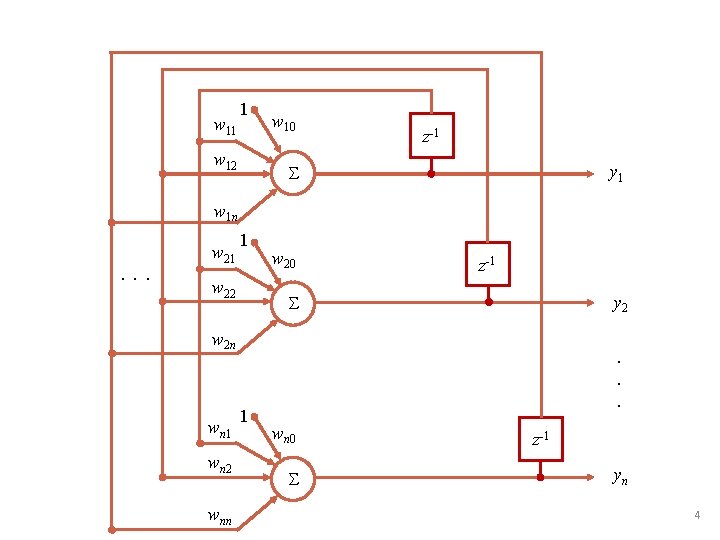

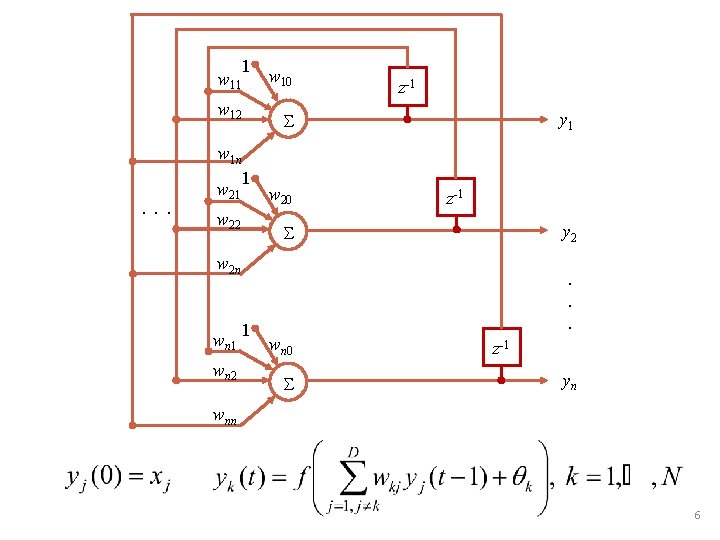

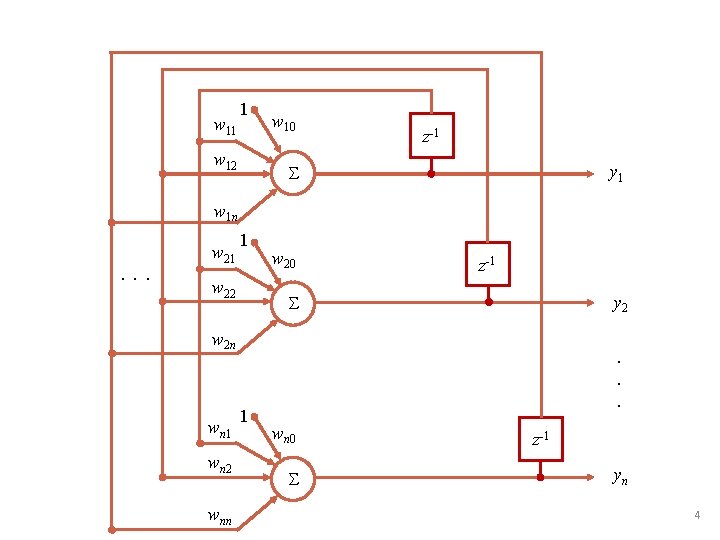

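[Figure: Hopfield network structure. Neuron k (k = 1, ..., n) has a bias weight w_k0 on a constant input 1 and weights w_k1, ..., w_kn on the fed-back outputs; each output y_k passes through a unit delay z^-1 before returning to the inputs.]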

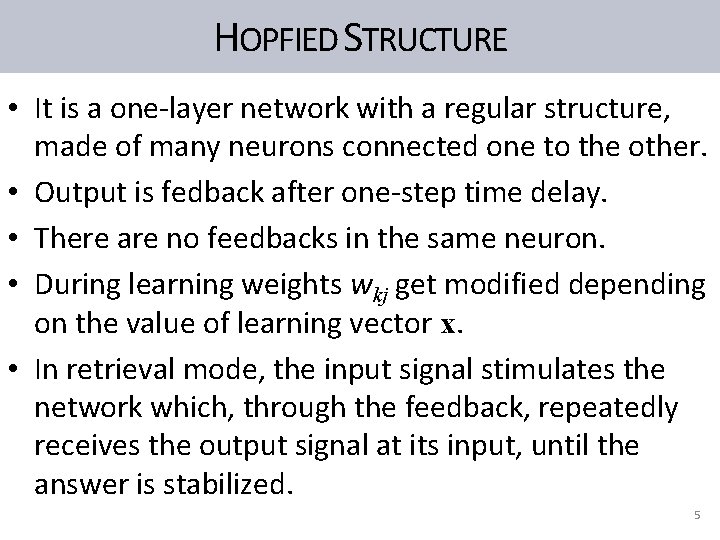

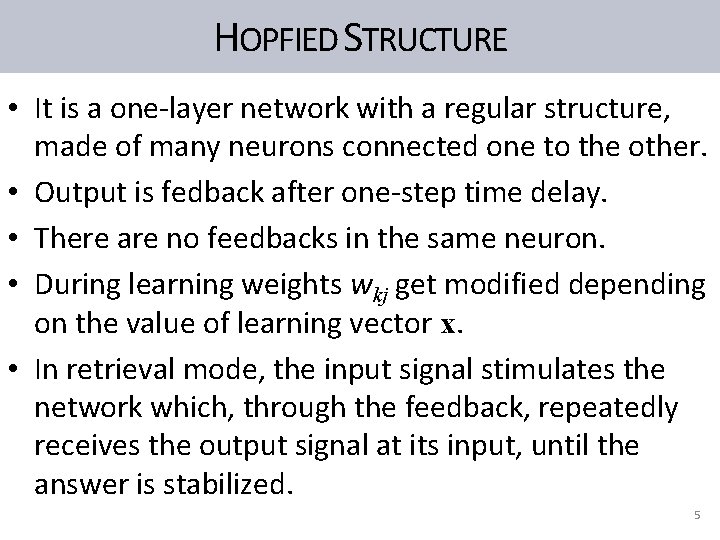

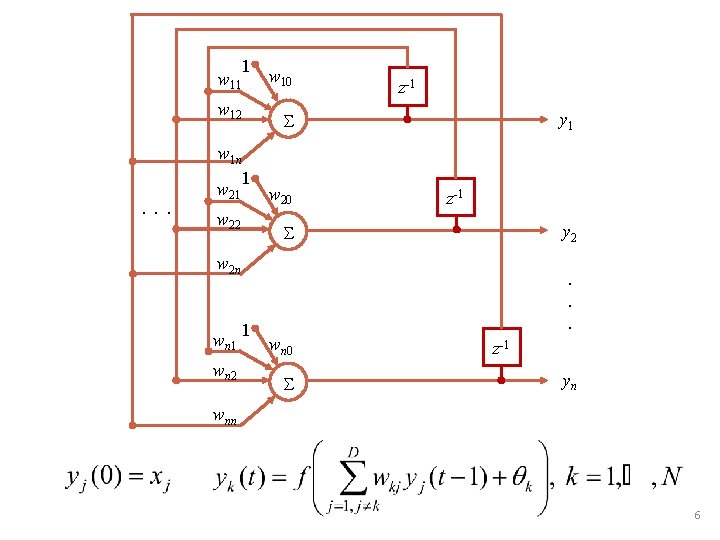

HOPFIELD STRUCTURE

• It is a one-layer network with a regular structure, made of many neurons, each connected to the others.
• The output is fed back to the input after a one-step time delay.
• There is no feedback from a neuron to itself.
• During learning, the weights w_kj are modified depending on the values of the learning vectors x.
• In retrieval mode, the input signal stimulates the network, which, through the feedback, repeatedly receives its own output at its input until the answer stabilizes.
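The retrieval loop described above can be sketched in a few lines of Python. This is a minimal illustration, not the slides' own implementation: it assumes bipolar states (+1/-1), a sign activation, synchronous updates (all neurons see the previous step's outputs, matching the one-step delay), and a hand-picked weight matrix with zero diagonal. The names `sign`, `retrieve`, `W`, `bias` and `noisy` are hypothetical.

```python
def sign(v, previous):
    """Bipolar activation; keep the previous state when the net input is 0."""
    if v > 0:
        return 1
    if v < 0:
        return -1
    return previous


def retrieve(W, bias, x, max_steps=50):
    """Feed the output back to the input until the state stops changing."""
    y = list(x)
    n = len(y)
    for step in range(1, max_steps + 1):
        # One-step delay: every neuron sees the outputs of the previous step.
        nets = [bias[k] + sum(W[k][j] * y[j] for j in range(n)) for k in range(n)]
        y_next = [sign(nets[k], y[k]) for k in range(n)]
        if y_next == y:          # the answer has stabilized
            return y, step
        y = y_next
    return y, max_steps          # no stable state within max_steps


# Hand-picked example weights (assumption): they store the pattern [1, -1, 1, -1],
# with no feedback from a neuron to itself (zero diagonal) and zero biases.
W = [[0, -1, 1, -1],
     [-1, 0, -1, 1],
     [1, -1, 0, -1],
     [-1, 1, -1, 0]]
bias = [0, 0, 0, 0]

noisy = [1, -1, 1, 1]            # stored pattern with the last element flipped
state, steps = retrieve(W, bias, noisy)
print(state, steps)
```

Synchronous updates can oscillate between two states for some weight matrices, which is why the sketch caps the number of steps instead of looping indefinitely.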

[Figure: Hopfield network structure with the same labels: constant inputs 1, bias weights w_10, ..., w_n0, feedback weights w_11, ..., w_nn, unit delays z^-1, and outputs y_1, ..., y_n.]

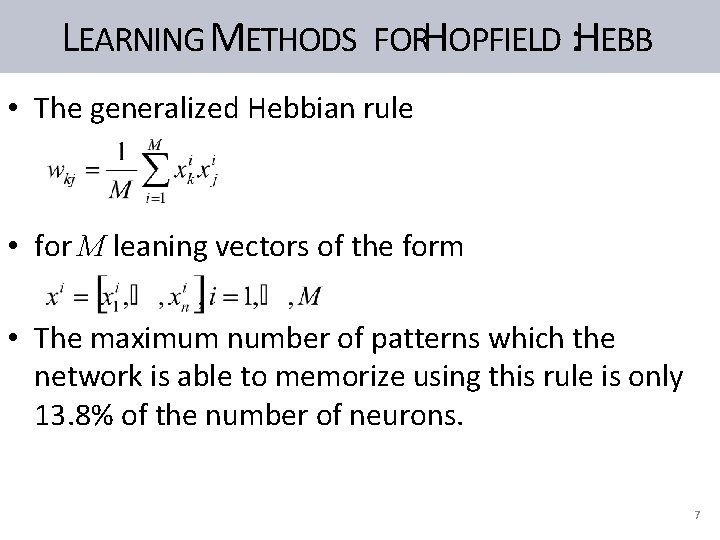

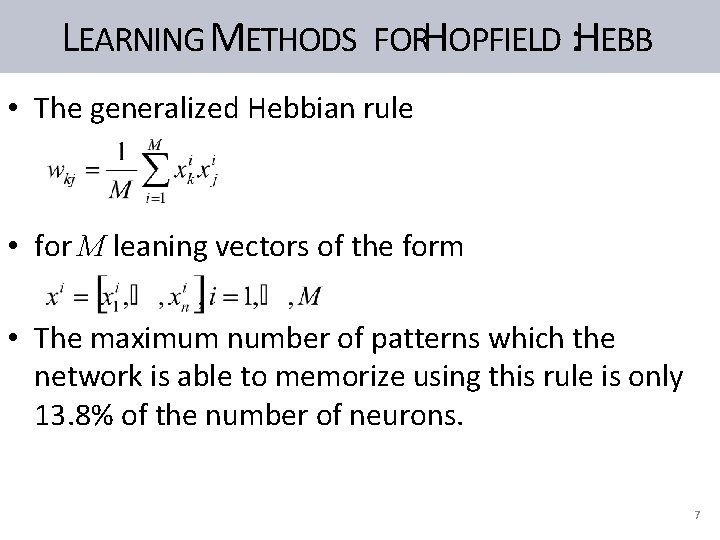

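LEARNING METHODS FOR HOPFIELD: HEBB

• The generalized Hebbian rule: $w_{kj} = \frac{1}{n} \sum_{i=1}^{M} x_k^{(i)} x_j^{(i)}$
• for M learning vectors of the form $\mathbf{x}^{(i)} = [x_1^{(i)}, \ldots, x_n^{(i)}]$, $i = 1, \ldots, M$.
• The maximum number of patterns that the network can memorize using this rule is only 13.8% of the number of neurons.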
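A small Python sketch of Hebbian learning for a Hopfield network follows. It assumes the commonly used normalized outer-product form of the rule (sum of products of pattern elements, divided by the number of neurons n), bipolar patterns, and a zero diagonal in line with the "no self-feedback" structure. The helper names `hebb_weights`, `is_stable` and `capacity` are hypothetical, and the two example patterns are made up for illustration.

```python
def hebb_weights(patterns):
    """Assumed rule: w_kj = (1/n) * sum_i x_k^(i) * x_j^(i), with w_kk = 0."""
    n = len(patterns[0])
    W = [[0.0] * n for _ in range(n)]
    for x in patterns:
        for k in range(n):
            for j in range(n):
                if k != j:                  # no feedback in the same neuron
                    W[k][j] += x[k] * x[j] / n
    return W


def is_stable(W, x):
    """True if every neuron's net input has the same sign as its state."""
    n = len(x)
    return all(sum(W[k][j] * x[j] for j in range(n)) * x[k] > 0 for k in range(n))


def capacity(n):
    """Largest whole number of patterns under the 13.8% estimate."""
    return int(0.138 * n)


a = [1, 1, 1, 1, -1, -1, -1, -1]
b = [1, -1, 1, -1, 1, -1, 1, -1]
W = hebb_weights([a, b])
print(is_stable(W, a), is_stable(W, b))   # both stored patterns are fixed points
print(capacity(100))                      # about 13 patterns for 100 neurons
```

The capacity line makes the 13.8% figure concrete: a network of 100 neurons trained this way is expected to store only about 13 patterns reliably.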

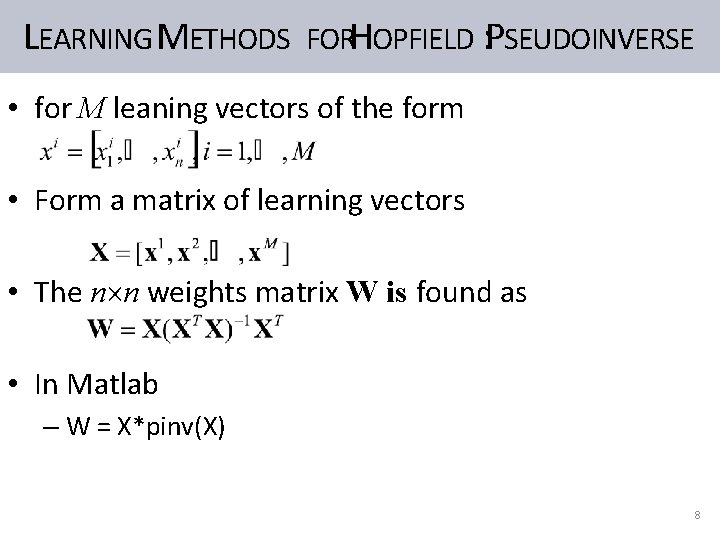

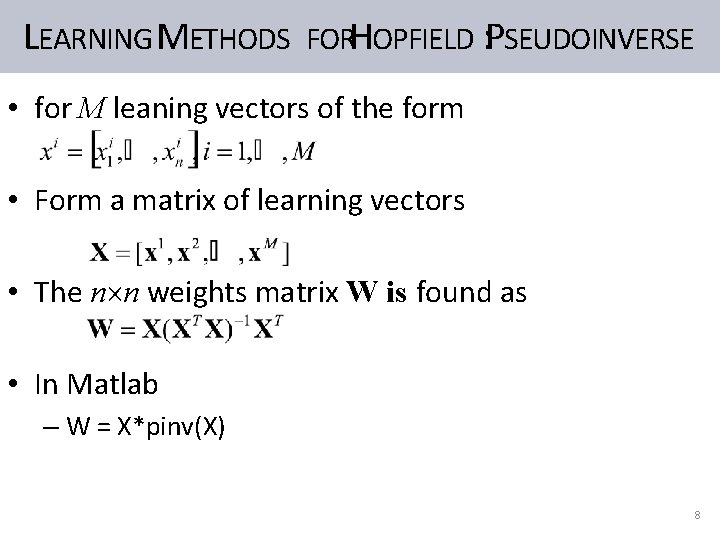

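LEARNING METHODS FOR HOPFIELD: PSEUDOINVERSE

• For M learning vectors of the form $\mathbf{x}^{(i)} = [x_1^{(i)}, \ldots, x_n^{(i)}]$, $i = 1, \ldots, M$:
• Form the n × M matrix X whose columns are the learning vectors.
• The n × n weight matrix W is found as $W = X X^{+}$, where $X^{+}$ is the pseudoinverse of X.
• In Matlab
– W = X*pinv(X)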
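The Matlab one-liner can be mirrored in plain Python for small examples. The sketch below assumes the learning vectors are linearly independent, in which case X*pinv(X) equals X (X^T X)^{-1} X^T, so only the small M × M Gram matrix has to be inverted. The helpers `invert` and `pseudoinverse_weights` and the example patterns are hypothetical; with NumPy available, `X @ numpy.linalg.pinv(X)` would be the direct counterpart.

```python
def invert(A):
    """Gauss-Jordan inverse of a small square matrix (partial pivoting)."""
    m = len(A)
    aug = [[float(v) for v in row] + [1.0 if r == c else 0.0 for c in range(m)]
           for r, row in enumerate(A)]
    for col in range(m):
        pivot = max(range(col, m), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("learning vectors are linearly dependent")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(m):
            if r != col:
                f = aug[r][col]
                aug[r] = [v - f * u for v, u in zip(aug[r], aug[col])]
    return [row[m:] for row in aug]


def pseudoinverse_weights(patterns):
    """W = X (X^T X)^{-1} X^T, where the columns of X are the patterns."""
    m, n = len(patterns), len(patterns[0])
    gram = [[sum(u * v for u, v in zip(patterns[i], patterns[j]))
             for j in range(m)] for i in range(m)]
    g_inv = invert(gram)
    # Row i of (X^T X)^{-1} X^T, i.e. row i of the pseudoinverse of X.
    x_pinv = [[sum(g_inv[i][j] * patterns[j][c] for j in range(m))
               for c in range(n)] for i in range(m)]
    return [[sum(patterns[i][k] * x_pinv[i][c] for i in range(m))
             for c in range(n)] for k in range(n)]


p1 = [1, 1, 1, 1, -1]
p2 = [1, 1, -1, 1, 1]            # not orthogonal to p1 (dot product is 1)
W = pseudoinverse_weights([p1, p2])

# W projects onto the span of the patterns, so W x = x for each stored x.
recalled = [sum(W[k][j] * p1[j] for j in range(5)) for k in range(5)]
print([round(v, 6) for v in recalled])
```

Unlike the Hebbian sketch, this weight matrix reproduces each stored vector exactly even when the vectors are correlated, which is the main reason to prefer it when patterns are not orthogonal.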

HAMMING NEURAL NETWORK


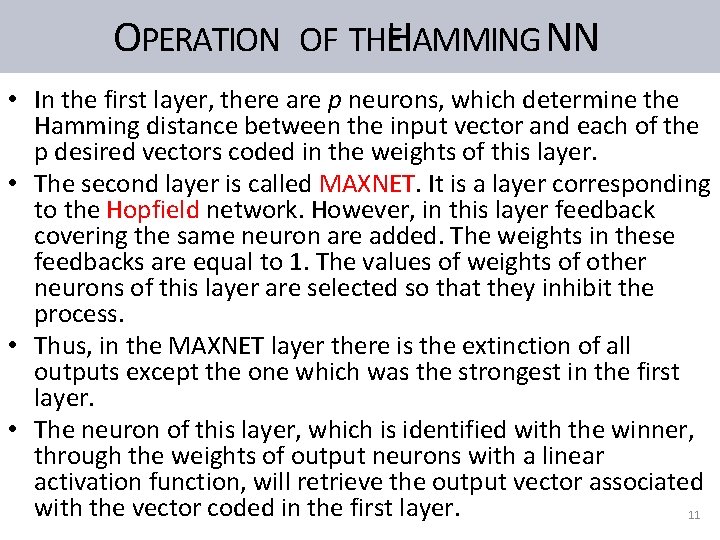

OPERATION OF THE HAMMING NN

• In the first layer, there are p neurons, which determine the Hamming distance between the input vector and each of the p desired vectors coded in the weights of this layer.
• The second layer is called MAXNET. It corresponds to a Hopfield network, except that each neuron also has feedback to itself. The weights of these self-feedback connections are equal to 1. The weights of the connections from the other neurons of this layer are chosen to be inhibitory.
• Thus, in the MAXNET layer all outputs are extinguished except the one that was the strongest in the first layer.
• The neuron of this layer that is identified as the winner retrieves, through the weights of output neurons with a linear activation function, the output vector associated with the vector coded in the first layer.
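The three stages above can be sketched in Python as follows. This is an illustration under stated assumptions rather than the slides' exact network: inputs are bipolar, the first layer reports the number of matching elements (n minus the Hamming distance) so that the closest stored vector gives the strongest signal, the inhibitory weight is set to an assumed value 1/(2p), and ties between equally strong neurons are not handled. All function names and the example data are hypothetical.

```python
def matching_scores(prototypes, x):
    """First layer: n minus the Hamming distance to each stored bipolar vector."""
    n = len(x)
    return [(n + sum(p_i * x_i for p_i, x_i in zip(p, x))) / 2 for p in prototypes]


def maxnet(scores, epsilon=None, max_steps=1000):
    """Second layer: self-feedback weight 1, inhibition -epsilon from the others."""
    p = len(scores)
    if epsilon is None:
        epsilon = 1.0 / (2 * p)          # assumed inhibitory strength
    y = [float(v) for v in scores]
    for _ in range(max_steps):
        if sum(1 for v in y if v > 0) <= 1:
            break                        # only the winner is still active
        total = sum(y)
        y = [max(0.0, v - epsilon * (total - v)) for v in y]
    return y


def recall(prototypes, outputs, x):
    """Output layer: linear neurons whose weights hold the associated vectors."""
    y = maxnet(matching_scores(prototypes, x))
    active = [1 if v > 0 else 0 for v in y]      # winner signal scaled to 1
    width = len(outputs[0])
    return [sum(outputs[i][c] * active[i] for i in range(len(outputs)))
            for c in range(width)]


prototypes = [[1, 1, 1, 1, -1, -1],
              [-1, -1, 1, 1, 1, 1],
              [1, -1, 1, -1, 1, -1]]
outputs = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]      # vector associated with each prototype

x = [1, 1, 1, -1, -1, -1]                        # first prototype with one element flipped
print(matching_scores(prototypes, x))
print(recall(prototypes, outputs, x))
```

For this input the first-layer scores are 5, 1 and 4, and the MAXNET iterations suppress the second and third neurons, so the output layer returns the vector associated with the first prototype.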
