Recovering Geometric, Photometric and Kinematic Properties from Images


Jitendra Malik, Computer Science Division, University of California at Berkeley. Work supported by ONR, Interval Research, Rockwell, MICRO, NSF and JSEP.

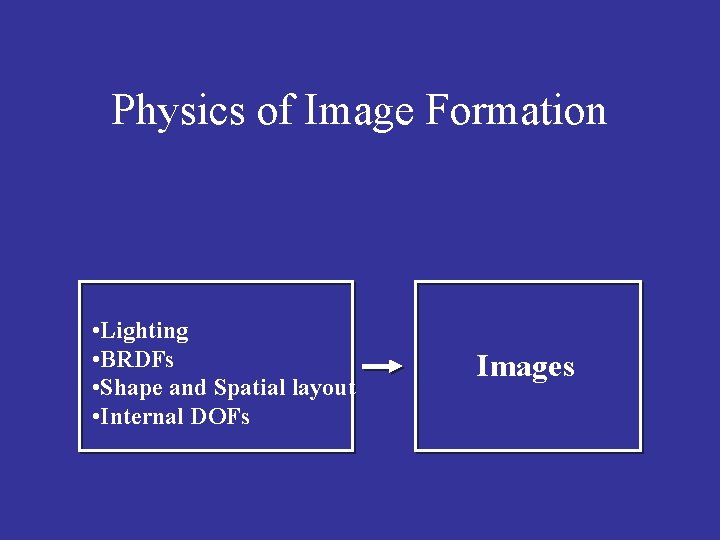

Physics of Image Formation

- Lighting
- BRDFs
- Shape and spatial layout
- Internal DOFs (degrees of freedom)

Together, these determine the images.
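As a minimal illustration of the forward direction, the sketch below computes the brightness of a few surface points. It makes the simplest possible choice for each factor: a single distant light, a Lambertian (constant) BRDF described by an albedo, and shape given only by surface normals. Internal DOFs are left out. The function name `lambertian_intensity` is hypothetical and does not come from the talk.

```python
import math


def normalize(v):
    """Scale a 3-vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def lambertian_intensity(albedo, normal, light_dir, light_strength=1.0):
    """Brightness of one surface point under a distant light (assumed Lambertian BRDF).

    lighting -> light_dir, light_strength
    BRDF     -> albedo (constant, i.e. Lambertian)
    shape    -> surface normal
    Points facing away from the light get zero (attached shadow).
    """
    n = normalize(normal)
    l = normalize(light_dir)
    cos_theta = sum(a * b for a, b in zip(n, l))
    return albedo * light_strength * max(0.0, cos_theta)


# A tiny "image": three surface points with different orientations, lit from +z.
normals = [(0, 0, 1), (1, 0, 1), (-1, 0, 0)]
image = [lambertian_intensity(0.8, n, (0, 0, 1)) for n in normals]
print(image)
```

Each inverse problem in the talk amounts to running a model like this backwards: given the image, recover some of the inputs.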

Solving inverse problems requires models:

- Define suitable parametric models for geometry, lighting, BRDFs and kinematics.
- Recover the parameters using optimization techniques.
- Humans are better at selecting models; computers are better at recovering parameters.
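A minimal sketch of the "recover parameters by optimization" step, under assumptions that are not from the talk. The shape (surface normals) is known, the BRDF is Lambertian, and the single unknown vector s = albedo × light strength × light direction is fitted by linear least squares over the lit points. Least squares is enough here only because this particular model is linear in s. The helpers `fit_light` and `solve3` are hypothetical.

```python
import math


def solve3(A, b):
    """Solve a 3x3 linear system A x = b (Gaussian elimination, partial pivoting)."""
    M = [list(row) + [bi] for row, bi in zip(A, b)]
    for col in range(3):
        piv = max(range(col, 3), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, 3):
            f = M[r][col] / M[col][col]
            for c in range(col, 4):
                M[r][c] -= f * M[col][c]
    x = [0.0] * 3
    for r in (2, 1, 0):
        x[r] = (M[r][3] - sum(M[r][c] * x[c] for c in range(r + 1, 3))) / M[r][r]
    return x


def fit_light(normals, intensities):
    """Least-squares fit of s = albedo * strength * light_dir to the model I = n . s.

    Shadowed points (I <= 0) are skipped: the max(0, .) clamp makes them
    uninformative for this linear model. Returns (albedo * strength, unit light_dir).
    """
    AtA = [[0.0] * 3 for _ in range(3)]
    Atb = [0.0] * 3
    for n, I in zip(normals, intensities):
        if I <= 0:
            continue
        for i in range(3):
            Atb[i] += n[i] * I
            for j in range(3):
                AtA[i][j] += n[i] * n[j]
    s = solve3(AtA, Atb)
    scale = math.sqrt(sum(c * c for c in s))
    return scale, [c / scale for c in s]


# Synthetic measurements from a known light direction and albedo 0.5 (unit normals).
true_light = (0.2, 0.1, 1.0)
norm = math.sqrt(sum(c * c for c in true_light))
l = [c / norm for c in true_light]
normals = [(0.0, 0.0, 1.0), (0.6, 0.0, 0.8), (0.0, 0.6, 0.8), (-0.6, 0.0, 0.8)]
intensities = [0.5 * max(0.0, sum(a * b for a, b in zip(n, l))) for n in normals]
scale, direction = fit_light(normals, intensities)
print(round(scale, 6), [round(c, 6) for c in direction])
```

With noise-free data the fit returns the generating albedo and light direction. With real images, the residual of this fit is one signal that the chosen model is too simple.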

But there will always be unmodeled detail:

- Models are always approximate.
- Adding more parameters doesn't help, because the data will be insufficient to recover them.

Hybrid approaches are best!

- ANALYSIS: use images to recover a subset of object parameters, chosen judiciously so that they can be recovered robustly.
- SYNTHESIS: render using appropriately selected images or subimages, transformed using the model.

Talk Outline

- Geometry: Debevec, Taylor and Malik, SIGGRAPH 96
- Photometry: Yu and Malik, SIGGRAPH 98; Debevec and Malik, SIGGRAPH 97
- Kinematics: Bregler and Malik, CVPR 98

Modeling and Rendering Architecture from Photographs

Paul Debevec, Camillo Taylor, Jitendra Malik, George Borshukov and Yizhou Yu. Computer Vision Group, Computer Science Division, University of California at Berkeley.

Overview

- Photogrammetric modeling: allows the user to construct a parametric model of the scene directly from photographs.
- Model-based stereo: recovers additional geometric detail through stereo correspondence.
- View-dependent texture mapping: renders each polygon of the recovered model using a linear combination of the three nearest views.

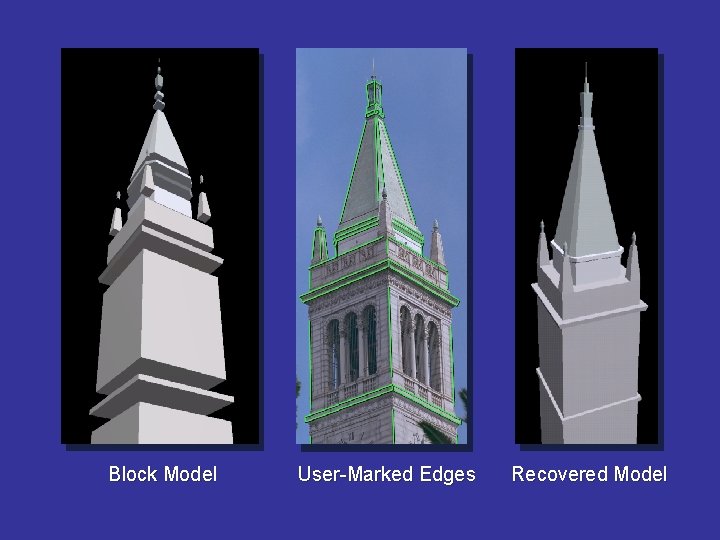

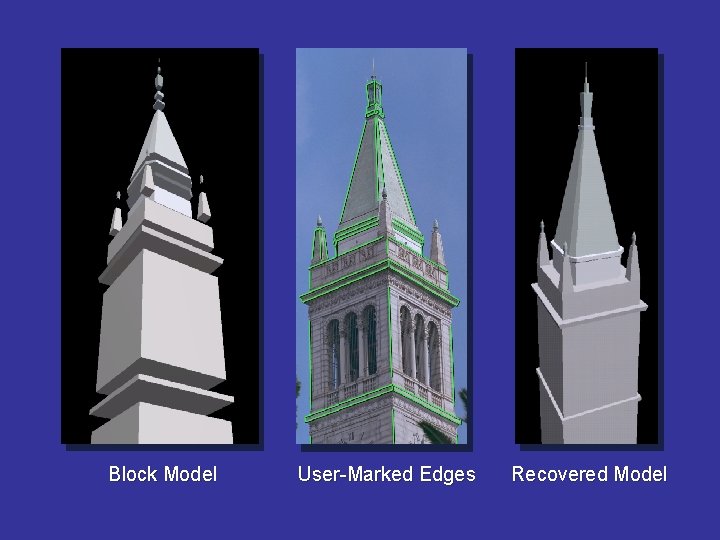

Our modeling method:

- The user represents the scene as a collection of blocks.
- The computer solves for the sizes and positions of the blocks according to user-supplied edge correspondences.
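The slides do not spell out the solver. The code below is only a rough sketch of the idea. It fits a single block seen by a known, fixed camera, and the unknowns are the block's width, height and depth. For each user-marked edge, the error is the signed distance of the projected model edge's endpoints from the marked image line, and the parameters are refined with Gauss-Newton. The method in the paper also solves for camera positions and uses its own edge error. `fit_block`, `BLOCK_ORIGIN` and the synthetic "marked" edges are all hypothetical.

```python
import math

# Hypothetical scene: pinhole camera at the origin looking down +z (focal length 1),
# and one block whose near-bottom-left corner is anchored at BLOCK_ORIGIN.
BLOCK_ORIGIN = (-1.0, -1.0, 5.0)

# Model edges, each given by two corner selectors (i, j, k) in {0, 1}^3.
EDGES = [((1, 0, 0), (1, 1, 0)),  # front face, right vertical edge
         ((0, 1, 0), (1, 1, 0)),  # front face, top edge
         ((0, 1, 1), (1, 1, 1)),  # back face, top edge
         ((1, 0, 1), (1, 1, 1))]  # back face, right vertical edge


def corner(params, i, j, k):
    """3D position of a block corner for parameters (width, height, depth)."""
    w, h, d = params
    x0, y0, z0 = BLOCK_ORIGIN
    return (x0 + i * w, y0 + j * h, z0 + k * d)


def project(p):
    """Pinhole projection with unit focal length."""
    return (p[0] / p[2], p[1] / p[2])


def line_distance(p, a, b):
    """Signed distance from 2D point p to the infinite line through a and b."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    return (dx * (p[1] - a[1]) - dy * (p[0] - a[0])) / math.hypot(dx, dy)


def residuals(params, marked):
    """Distances from each projected model edge's endpoints to its marked image edge."""
    r = []
    for (c0, c1), (a, b) in zip(EDGES, marked):
        for c in (c0, c1):
            r.append(line_distance(project(corner(params, *c)), a, b))
    return r


def solve3(A, b):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    M = [list(row) + [bi] for row, bi in zip(A, b)]
    for col in range(3):
        piv = max(range(col, 3), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, 3):
            f = M[r][col] / M[col][col]
            for c in range(col, 4):
                M[r][c] -= f * M[col][c]
    x = [0.0] * 3
    for r in (2, 1, 0):
        x[r] = (M[r][3] - sum(M[r][c] * x[c] for c in range(r + 1, 3))) / M[r][r]
    return x


def fit_block(params, marked, iters=20, eps=1e-7):
    """Gauss-Newton on the edge residuals, with a central-difference Jacobian."""
    params = list(params)
    for _ in range(iters):
        r = residuals(params, marked)
        cols = []  # cols[k][m] = d r_m / d params[k]
        for k in range(3):
            up, down = params[:], params[:]
            up[k] += eps
            down[k] -= eps
            cols.append([(a - b) / (2 * eps)
                         for a, b in zip(residuals(up, marked), residuals(down, marked))])
        # Normal equations: (J^T J) step = -J^T r.
        JtJ = [[sum(a * b for a, b in zip(cols[i], cols[j])) for j in range(3)] for i in range(3)]
        Jtr = [-sum(a * b for a, b in zip(cols[i], r)) for i in range(3)]
        step = solve3(JtJ, Jtr)
        params = [p + s for p, s in zip(params, step)]
        if max(abs(s) for s in step) < 1e-12:
            break
    return params


# Synthetic "user-marked" edges: the true block (2, 1.5, 3) projected into the image.
true_params = (2.0, 1.5, 3.0)
marked = [(project(corner(true_params, *c0)), project(corner(true_params, *c1)))
          for c0, c1 in EDGES]
estimate = fit_block([1.5, 1.2, 2.0], marked)
print([round(v, 4) for v in estimate])
```

The back-face edges are what pin down the depth. For this camera, an edge receding along the optical axis projects onto the same image line whatever the block's depth, so it carries no depth information.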

Panels: block model, user-marked edges, recovered model.

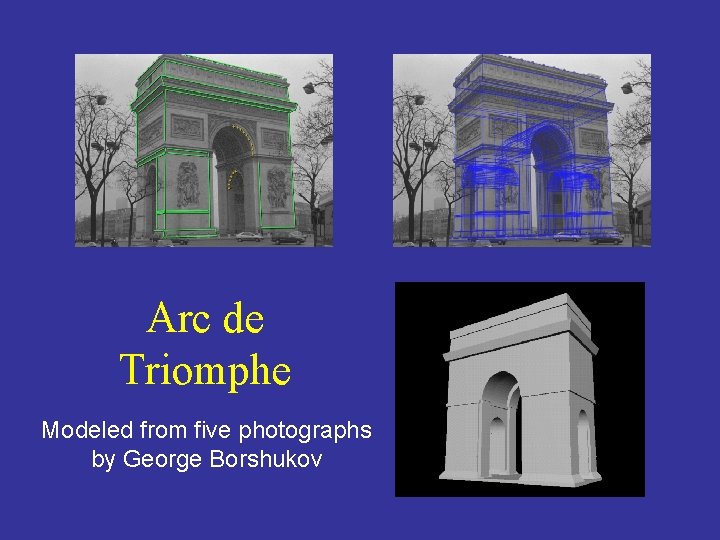

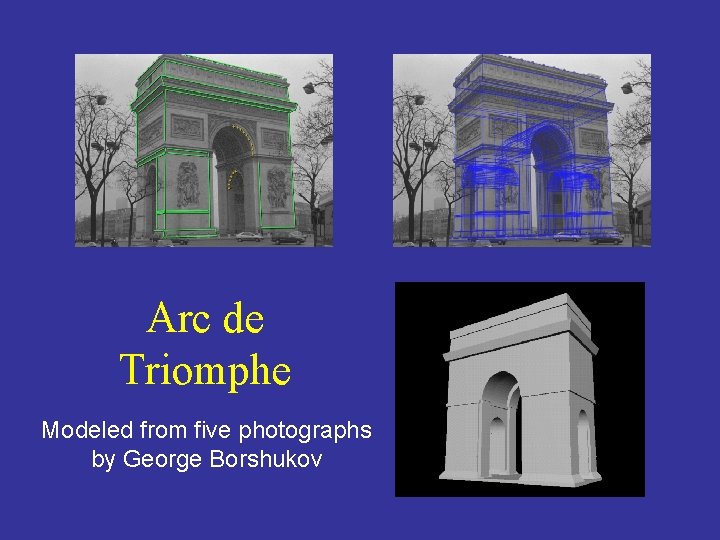

Arc de Triomphe, modeled from five photographs by George Borshukov.

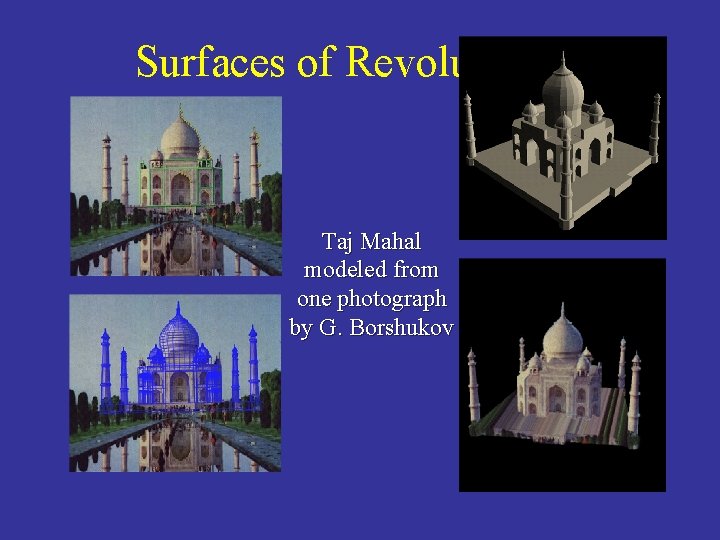

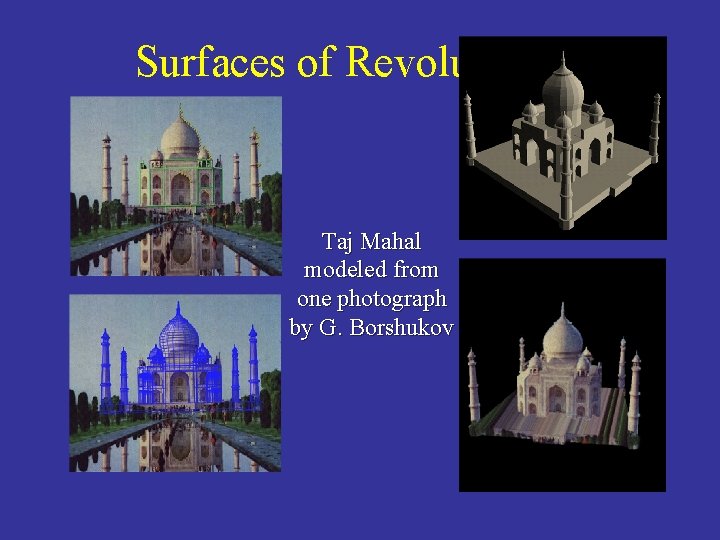

Surfaces of revolution: the Taj Mahal, modeled from one photograph by G. Borshukov.

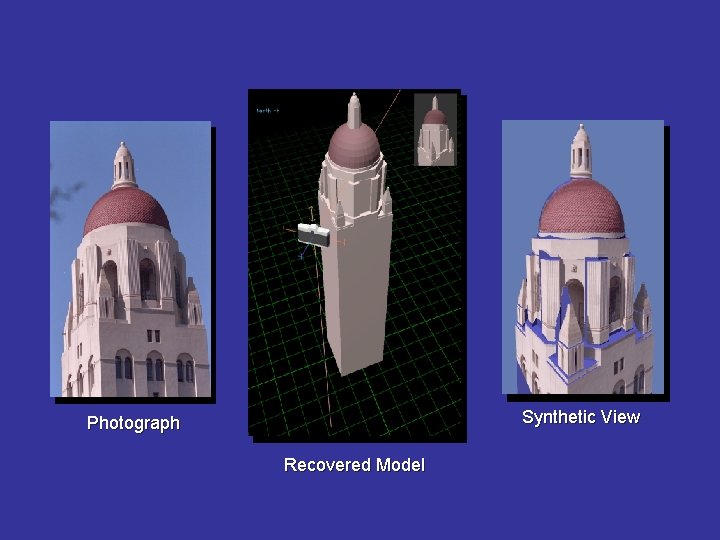

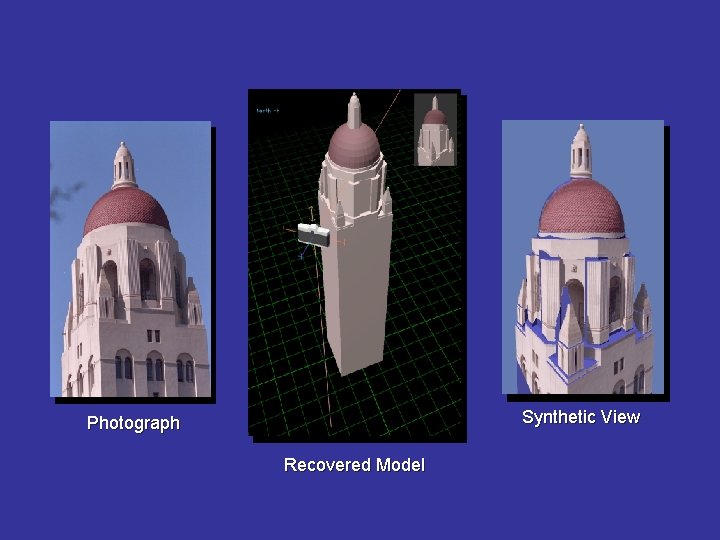

Panels: synthetic view, photograph, recovered model.

Recovering Additional Detail with Model-Based Stereo

- Scenes will have geometric detail not captured in the model.
- This detail can be recovered automatically through model-based stereo.

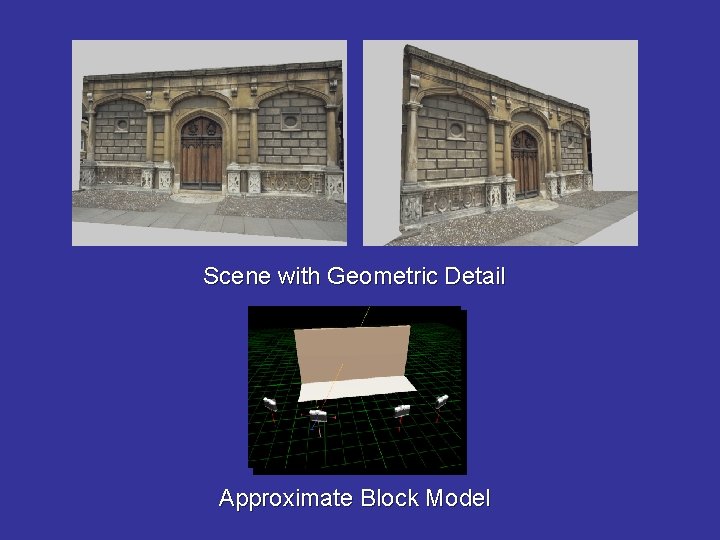

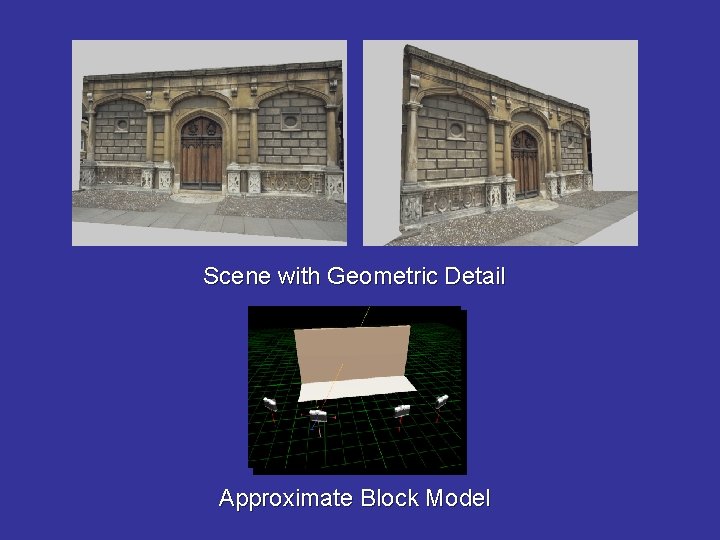

Panels: scene with geometric detail; approximate block model.

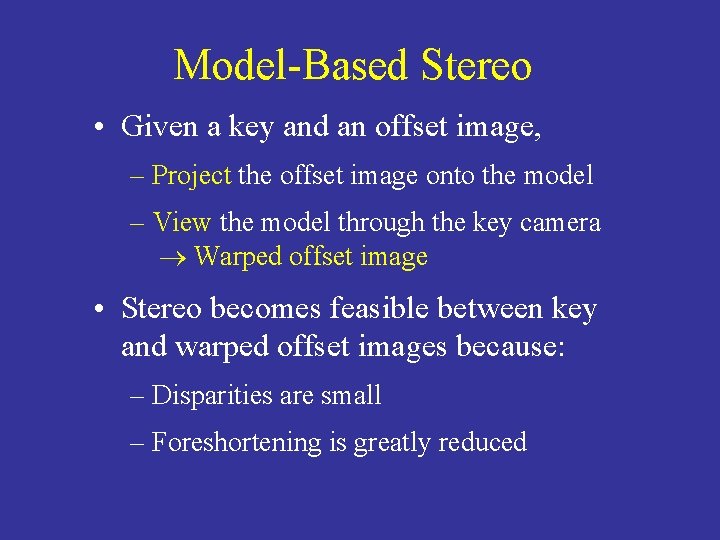

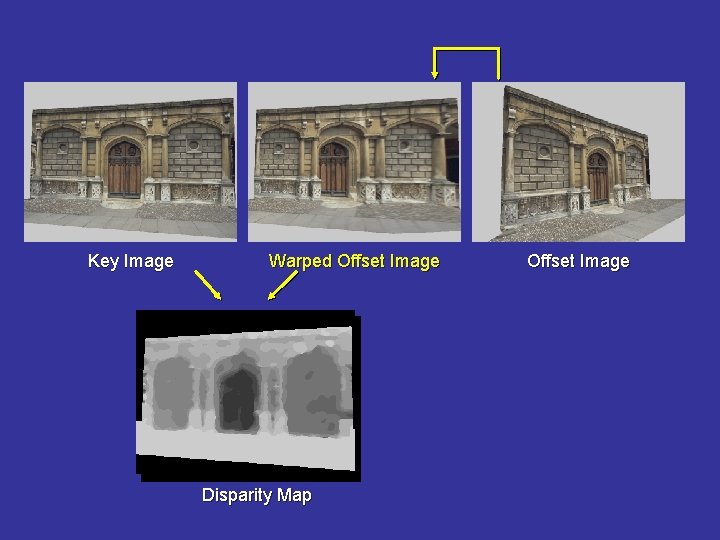

Model-Based Stereo

- Given a key image and an offset image:
  - Project the offset image onto the model.
  - View the model through the key camera, producing the warped offset image.
- Stereo becomes feasible between the key and warped offset images because:
  - Disparities are small.
  - Foreshortening is greatly reduced.
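For a model made of planar facets, "project the offset image onto the model, then view it through the key camera" reduces, facet by facet, to a plane-induced homography. Below is a minimal sketch. The coordinate conventions are assumptions chosen for the example and are stated in the docstring. Occlusion between facets is ignored, and all names are hypothetical.

```python
def mat_vec(M, v):
    """3x3 matrix times 3-vector."""
    return [sum(M[i][j] * v[j] for j in range(3)) for i in range(3)]


def plane_homography(f_key, c_key, f_off, c_off, R, t, n, d):
    """Return a function mapping key-image pixels to offset-image pixels for points
    on one planar model facet (the plane-induced homography).

    Assumed conventions, chosen for this sketch:
      * the facet is the plane n . X = d in key-camera coordinates;
      * X_off = R X_key + t converts key-camera to offset-camera coordinates;
      * each camera has focal length f and principal point c = (cx, cy).
    On the plane, n . X_key / d = 1, so X_off = (R + t n^T / d) X_key.
    """
    A = [[R[i][j] + t[i] * n[j] / d for j in range(3)] for i in range(3)]

    def to_offset_pixel(u, v):
        ray = [(u - c_key[0]) / f_key, (v - c_key[1]) / f_key, 1.0]  # K_key^-1 [u, v, 1]
        X = mat_vec(A, ray)  # facet point in offset-camera coords, up to scale
        return (f_off * X[0] / X[2] + c_off[0], f_off * X[1] / X[2] + c_off[1])

    return to_offset_pixel


def warp_offset_to_key(offset_img, to_offset_pixel, height, width):
    """Warped offset image: for each key pixel, sample the offset image (nearest
    neighbour) where the model facet projects; None marks samples outside it."""
    out = []
    for v in range(height):
        row = []
        for u in range(width):
            x, y = to_offset_pixel(u, v)
            xi, yi = round(x), round(y)
            inside = 0 <= yi < len(offset_img) and 0 <= xi < len(offset_img[0])
            row.append(offset_img[yi][xi] if inside else None)
        out.append(row)
    return out


I3 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
# Offset camera centred 0.5 units to the right of the key camera, so X_off = X_key - (0.5, 0, 0).
# The model facet is the plane z = 10.
H = plane_homography(100.0, (50.0, 50.0), 100.0, (50.0, 50.0),
                     I3, (-0.5, 0.0, 0.0), (0.0, 0.0, 1.0), 10.0)
offset_img = [[float(u) for u in range(100)] for _ in range(100)]
warped = warp_offset_to_key(offset_img, H, 100, 100)
print(H(60.0, 70.0), warped[70][60])
```

For this fronto-parallel facet the warp is a pure 5-pixel shift, so the warped offset image already lines up with the key image. Any disparity that remains comes from scene detail that departs from the facet, which is why those disparities are small.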

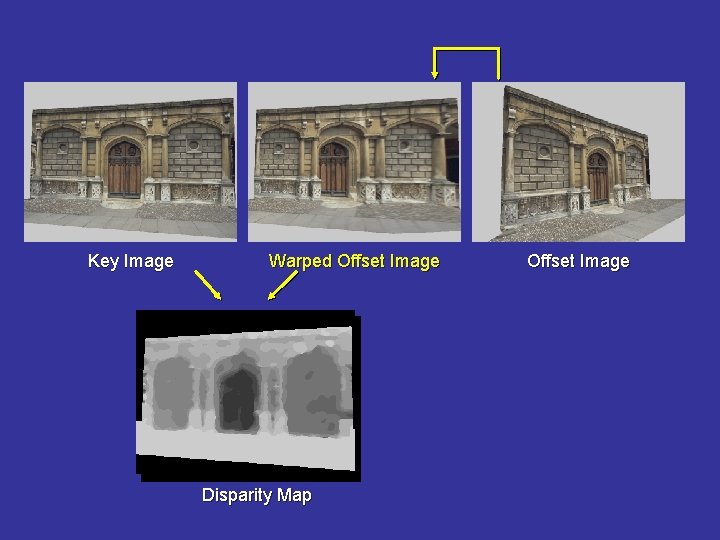

Panels: key image, warped offset image, disparity map, offset image.
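The disparity map in the figure comes from matching the key image against the warped offset image. Here is a minimal sketch of why small disparities help, assuming rectified images so that matches lie along the same row. A plain sum-of-squared-differences window matcher only needs to search a few pixels either way. This is a generic block matcher, not necessarily the one used in the original work, and `match_scanline` and its parameters are hypothetical.

```python
def match_scanline(key_row, warped_row, max_disp=3, radius=2):
    """Per-pixel disparity along one row: the shift d in [-max_disp, max_disp]
    minimizing the mean squared difference between the window around x in
    key_row and the window around x + d in warped_row."""
    n = len(key_row)
    disparities = []
    for x in range(n):
        best_d, best_cost = 0, float("inf")
        for d in range(-max_disp, max_disp + 1):
            total, count = 0.0, 0
            for k in range(-radius, radius + 1):
                xk, xw = x + k, x + k + d
                if 0 <= xk < n and 0 <= xw < len(warped_row):
                    total += (key_row[xk] - warped_row[xw]) ** 2
                    count += 1
            if count and total / count < best_cost:
                best_d, best_cost = d, total / count
        disparities.append(best_d)
    return disparities


# Synthetic rows: the warped offset row is the key row shifted by 2 pixels.
base = [(i * 7) % 11 for i in range(40)]
key_row = base[5:35]
warped_row = base[3:33]
print(match_scanline(key_row, warped_row))
```

Without the model-based warp, `max_disp` would have to span the full disparity range between key and offset images. That search is more expensive and gives more chances for false matches.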

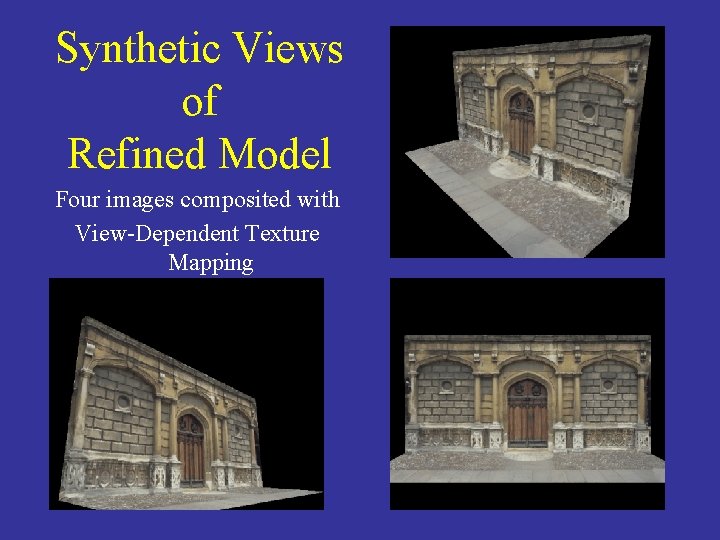

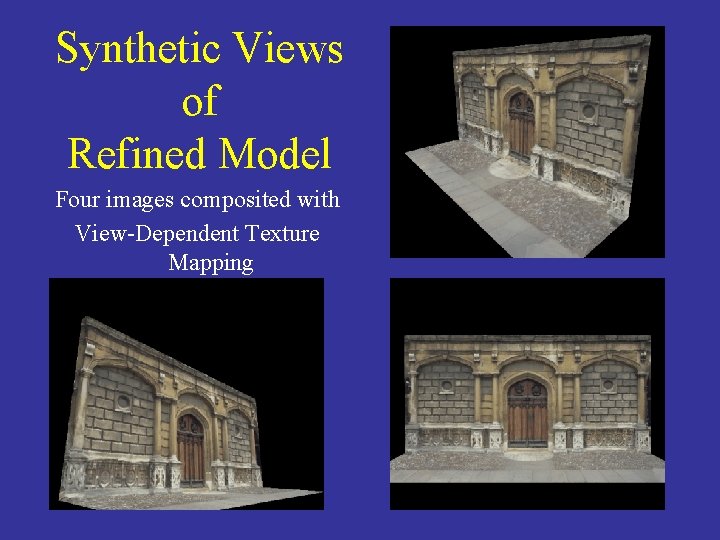

Synthetic views of the refined model: four images composited with view-dependent texture mapping.

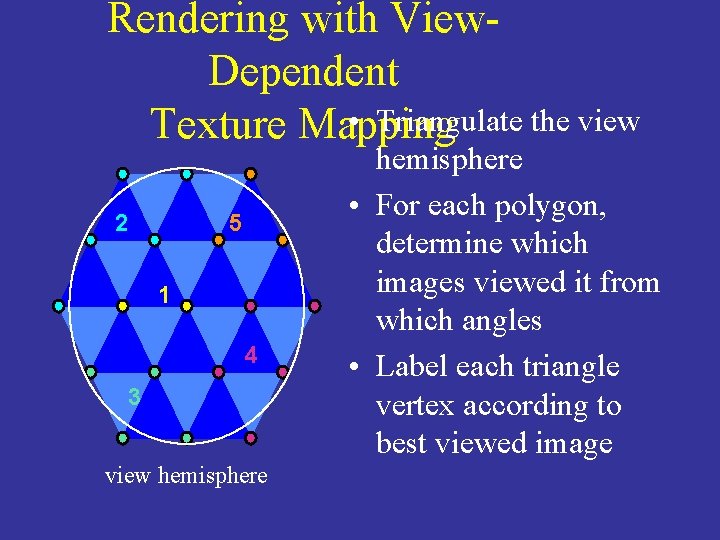

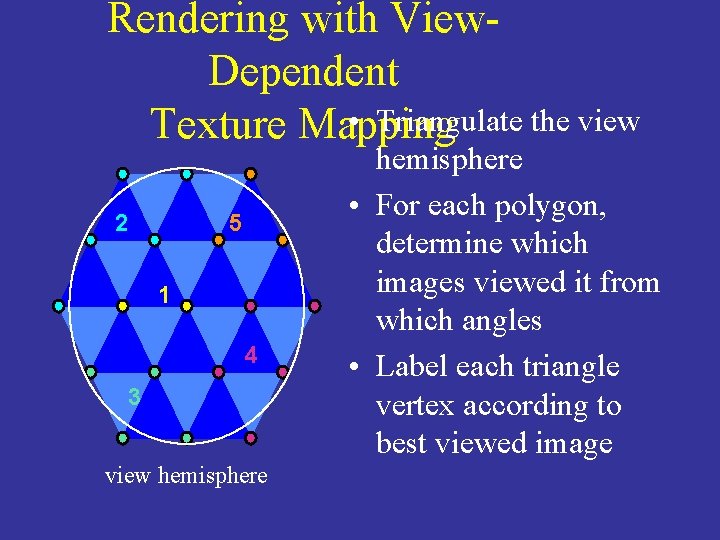

Rendering with View-Dependent Texture Mapping

- Triangulate the view hemisphere.
- For each polygon, determine which images viewed it from which angles.
- Label each triangle vertex according to the best-viewed image.

Figure: triangulated view hemisphere, with vertices labeled by image number.
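A minimal sketch of the two per-polygon steps, under stated assumptions. A polygon is described by its centroid and unit normal, each photograph by its camera center, and the hemisphere vertices by unit vectors. How the hemisphere is triangulated is left open. Occlusion by other geometry is ignored. The function names are hypothetical.

```python
import math


def unit(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def image_view_directions(centroid, normal, camera_centers):
    """For one polygon, return {image index: unit direction from polygon to camera},
    keeping only cameras on the polygon's front side (occlusion is ignored)."""
    dirs = {}
    for idx, center in enumerate(camera_centers):
        d = unit(tuple(c - p for c, p in zip(center, centroid)))
        if dot(d, normal) > 0:
            dirs[idx] = d
    return dirs


def label_view_map(vertex_dirs, image_dirs):
    """Label each hemisphere vertex with the image whose viewing direction is
    angularly closest (largest dot product)."""
    return [max(image_dirs, key=lambda i: dot(v, image_dirs[i])) for v in vertex_dirs]


centroid, normal = (0.0, 0.0, 0.0), (0.0, 0.0, 1.0)
cameras = [(10.0, 0.0, 10.0), (-10.0, 0.0, 10.0), (0.0, 10.0, 10.0), (0.0, 0.0, -10.0)]
dirs = image_view_directions(centroid, normal, cameras)  # camera 3 is behind the polygon
angles = {i: math.degrees(math.acos(dot(d, normal))) for i, d in dirs.items()}
vertices = [unit((1.0, 0.0, 1.0)), unit((-1.0, 0.0, 1.0)), unit((0.0, 1.0, 1.0))]
labels = label_view_map(vertices, dirs)
print(sorted(dirs), labels)
```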

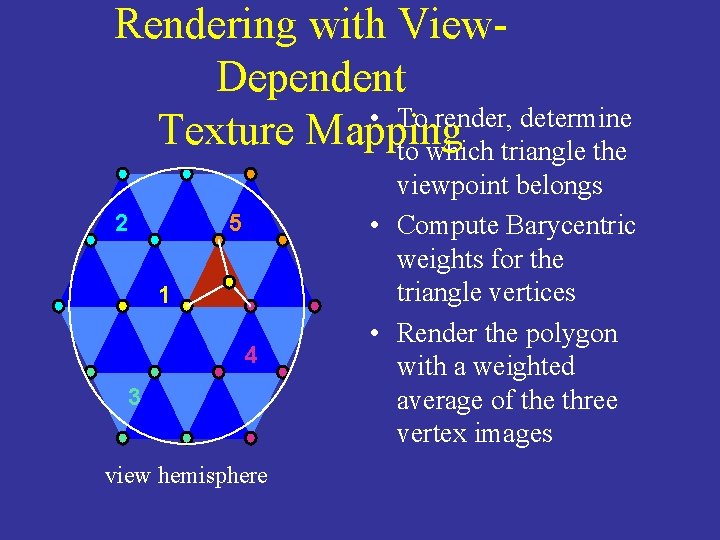

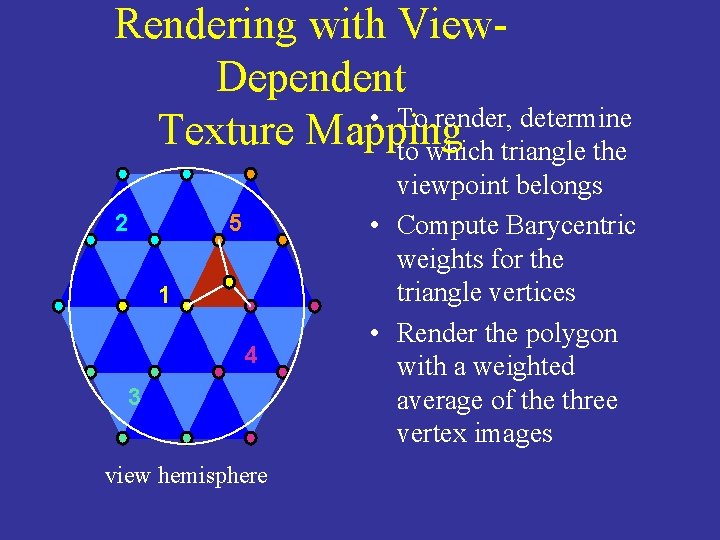

Rendering with View-Dependent Texture Mapping (continued)

- To render, determine which triangle of the view hemisphere the viewpoint belongs to.
- Compute barycentric weights for the triangle's vertices.
- Render the polygon with a weighted average of the three vertex images.
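A minimal sketch of the rendering step. It assumes the view hemisphere has already been flattened to 2D coordinates and triangulated, with each vertex labeled by an image number. The labels 2, 5, 1, 4 below echo the figure but are otherwise arbitrary. `view_dependent_blend` is hypothetical, and each "texture" is just a short list of pixel values.

```python
def barycentric(p, a, b, c):
    """Barycentric coordinates (wa, wb, wc) of 2D point p with respect to triangle abc."""
    det = (b[1] - c[1]) * (a[0] - c[0]) + (c[0] - b[0]) * (a[1] - c[1])
    wa = ((b[1] - c[1]) * (p[0] - c[0]) + (c[0] - b[0]) * (p[1] - c[1])) / det
    wb = ((c[1] - a[1]) * (p[0] - c[0]) + (a[0] - c[0]) * (p[1] - c[1])) / det
    return wa, wb, 1.0 - wa - wb


def view_dependent_blend(viewpoint, vertices, triangles, labels, textures, tol=1e-9):
    """Find the view-map triangle containing `viewpoint`, then return the weighted
    average of its three vertex images, using the barycentric weights."""
    for tri in triangles:
        weights = barycentric(viewpoint, *(vertices[i] for i in tri))
        if min(weights) >= -tol:  # viewpoint lies inside (or on) this triangle
            images = [textures[labels[i]] for i in tri]
            return [sum(w * img[k] for w, img in zip(weights, images))
                    for k in range(len(images[0]))]
    raise ValueError("viewpoint lies outside the triangulated view map")


vertices = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
triangles = [(0, 1, 2), (1, 3, 2)]
labels = [2, 5, 1, 4]  # image number attached to each view-map vertex
textures = {2: [0.0, 4.0], 5: [8.0, 4.0], 1: [0.0, 8.0], 4: [2.0, 2.0]}
pixels = view_dependent_blend((0.25, 0.25), vertices, triangles, labels, textures)
print(pixels)  # [2.0, 5.0]
```

Because the weights vary continuously and reach 1 at a vertex, the rendering reproduces an original photograph exactly when the viewpoint coincides with that photograph's direction, and blends smoothly in between.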

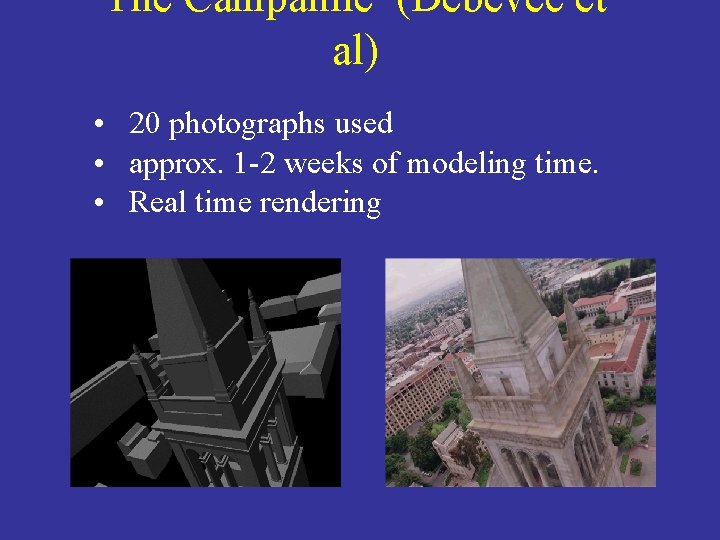

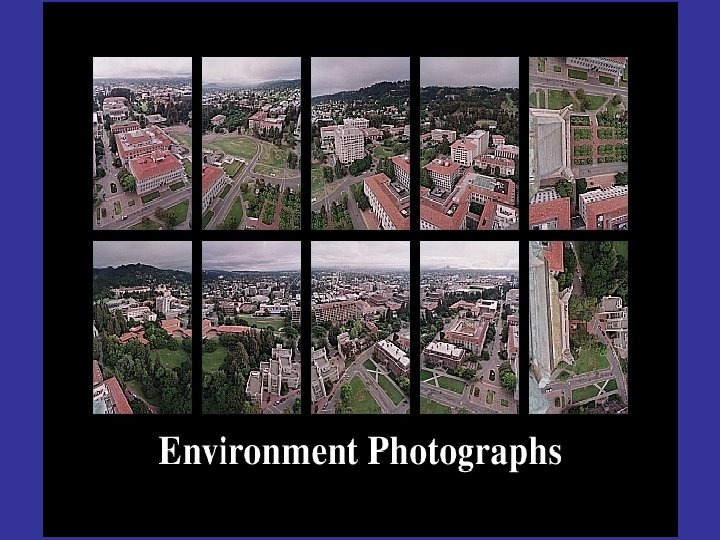

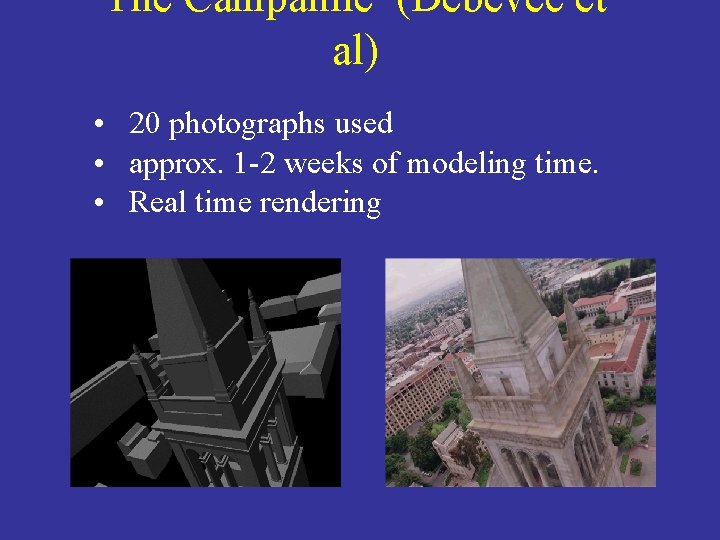

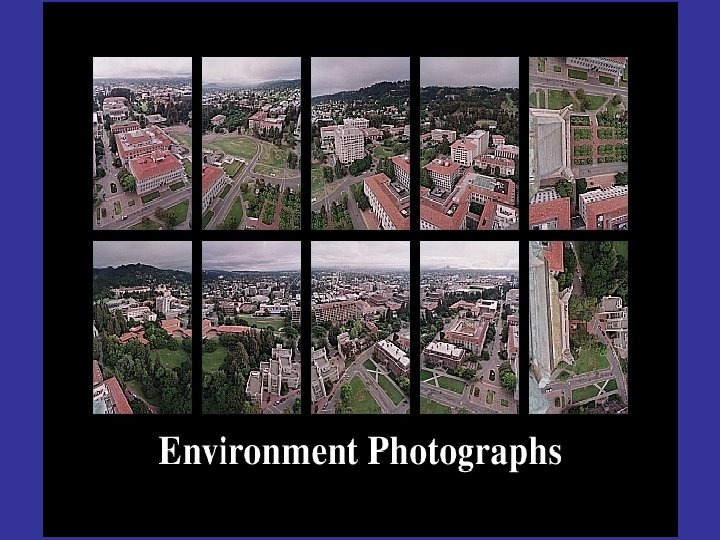

The Campanile (Debevec et al.)

- 20 photographs used
- Approximately 1 to 2 weeks of modeling time
- Real-time rendering

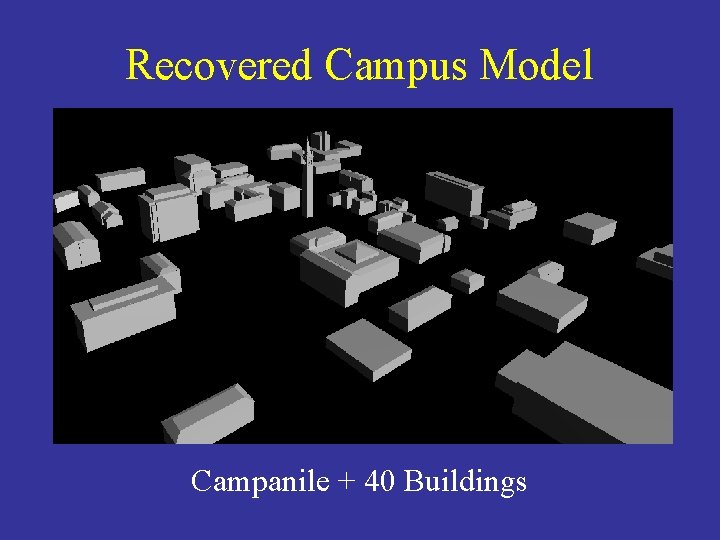

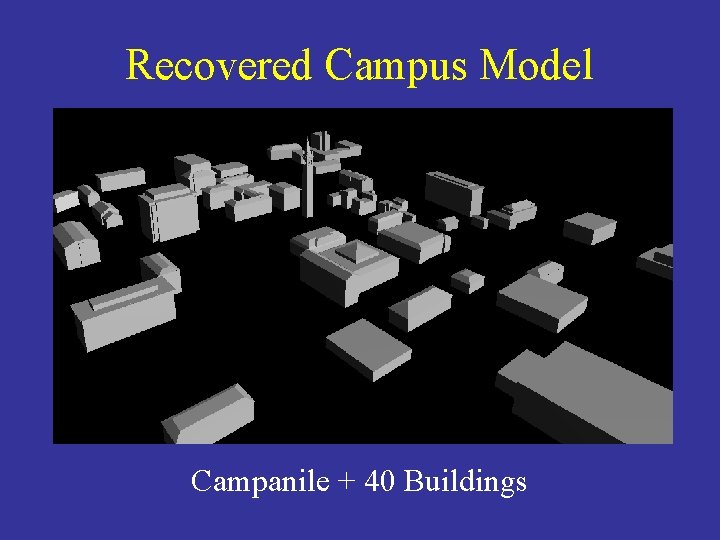

Recovered campus model: the Campanile plus 40 buildings.

Geometric and photometric image formation

Geometric and photometric image formation Geometry bootcamp answers

Geometry bootcamp answers Photometric reprojection error

Photometric reprojection error Photometric image formation

Photometric image formation Photometric stereo

Photometric stereo Ronen basri

Ronen basri Middlesbrough recovering together

Middlesbrough recovering together How to calculate volume

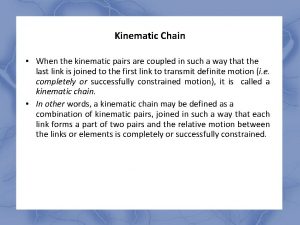

How to calculate volume Open and closed kinematic chain

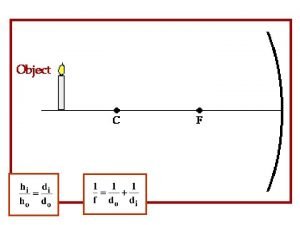

Open and closed kinematic chain Real vs virtual image

Real vs virtual image Do f

Do f Images.search.yahoo.com

Images.search.yahoo.com How to save images on google images

How to save images on google images Https://tw.images.search.yahoo.com/images/view

Https://tw.images.search.yahoo.com/images/view Extensive properties and intensive properties

Extensive properties and intensive properties Physical properties and chemical properties

Physical properties and chemical properties Properties of plane mirror image

Properties of plane mirror image Kinematics equations for uniformly accelerated motion

Kinematics equations for uniformly accelerated motion Timeless kinematic equation

Timeless kinematic equation Timeless kinematic equation

Timeless kinematic equation Big 4 kinematic equations

Big 4 kinematic equations Rotation kinematic equations

Rotation kinematic equations 4 kinematic equations

4 kinematic equations Big four kinematic equations

Big four kinematic equations Post kinematic metamorphic texture

Post kinematic metamorphic texture