Recommender Systems Twenty years of research Lior Rokach

- Slides: 52

Lior Rokach, Dept. of Software and Information Systems Eng., Ben-Gurion University of the Negev

Recommender Systems
• A recommender system (RS) helps users who lack the competence or time to evaluate the potentially overwhelming number of alternatives offered by a website.
– In their simplest form, RSs recommend personalized, ranked lists of items to their users.

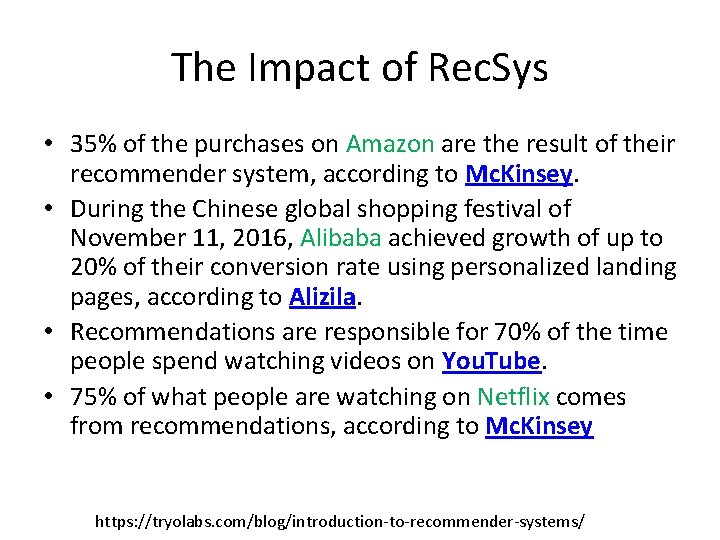

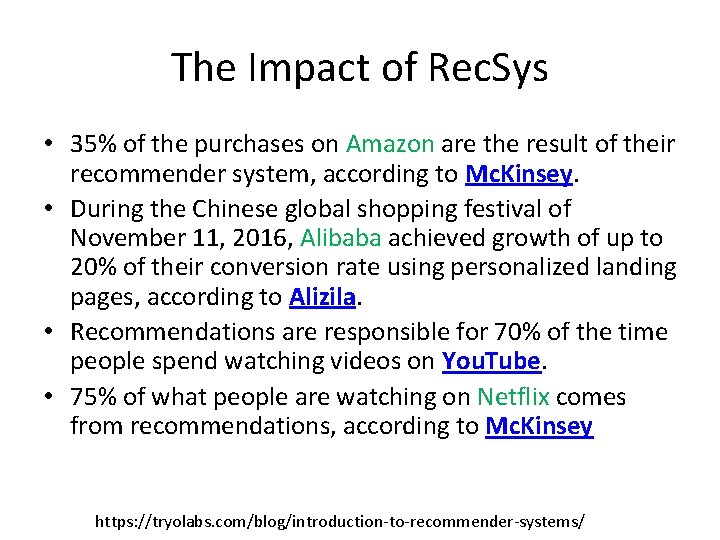

The Impact of RecSys
• 35% of the purchases on Amazon are the result of its recommender system, according to McKinsey.
• During the Chinese global shopping festival of November 11, 2016, Alibaba achieved up to a 20% increase in its conversion rate using personalized landing pages, according to Alizila.
• Recommendations are responsible for 70% of the time people spend watching videos on YouTube.
• 75% of what people watch on Netflix comes from recommendations, according to McKinsey.
Source: https://tryolabs.com/blog/introduction-to-recommender-systems/

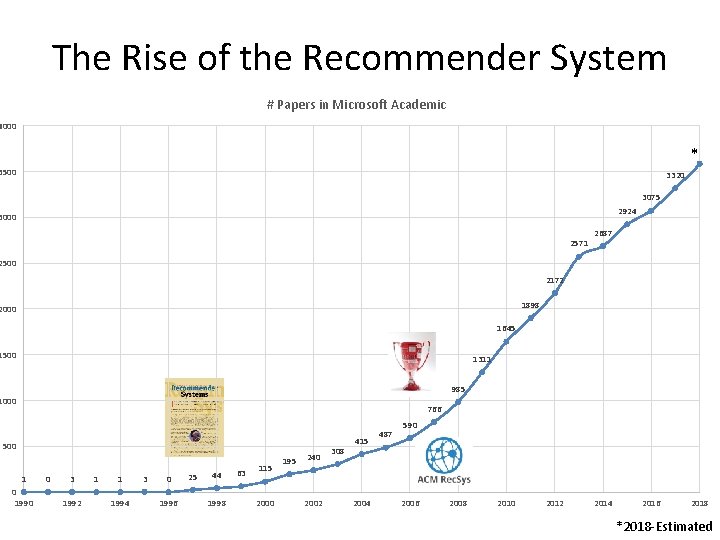

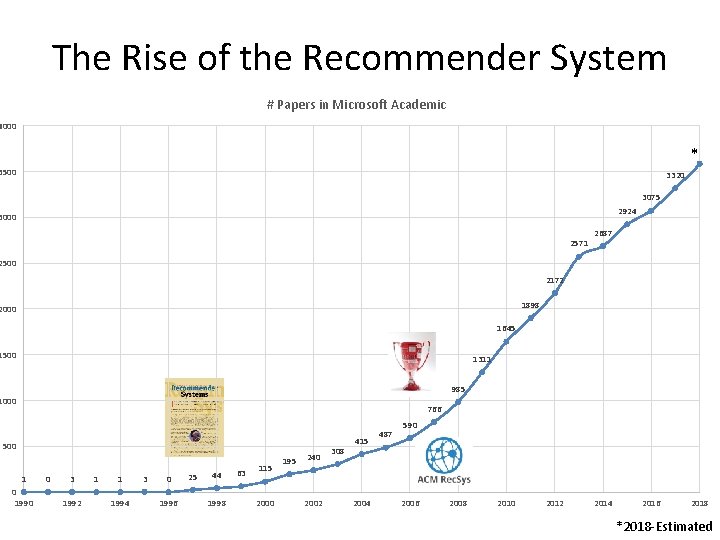

The Rise of the Recommender System
(Chart: number of recommender-system papers per year in Microsoft Academic, 1990–2018, growing from almost none in the early 1990s to more than 3,000 per year by the end of the period; the 2018 figure is an estimate.)

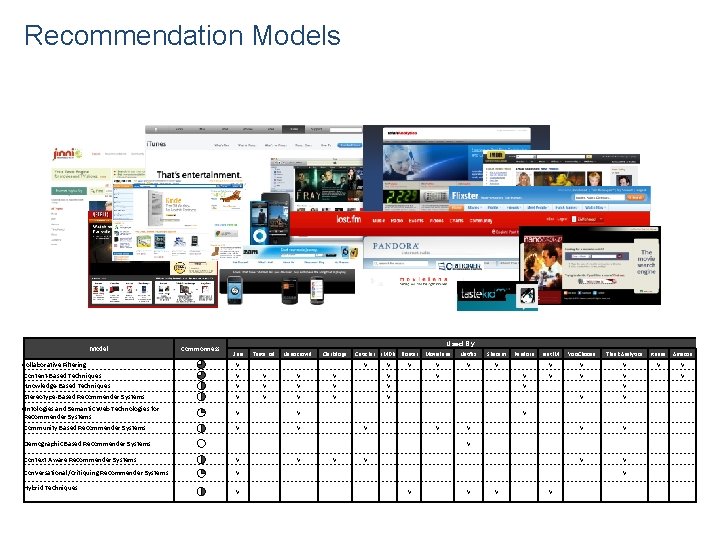

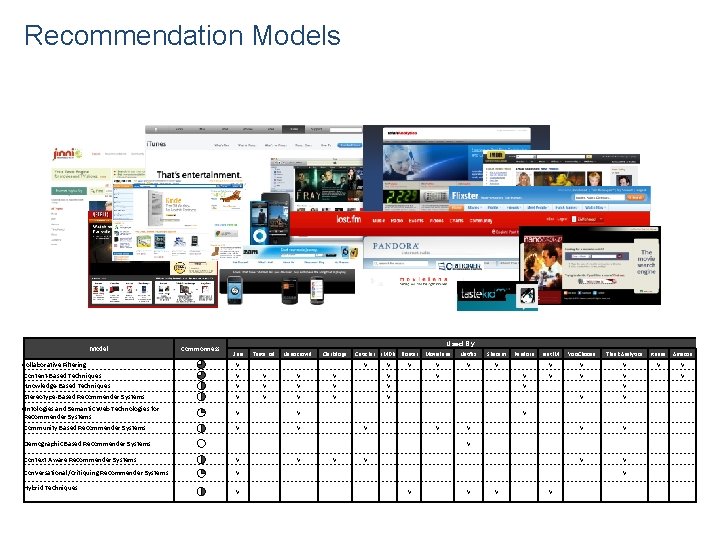

Recommendation Models
(Table: the commonness of each model and the systems that use it.)
Models: Collaborative Filtering; Content-Based Techniques; Knowledge-Based Techniques; Stereotype-Based Recommender Systems; Ontologies and Semantic Web Technologies for Recommender Systems; Community-Based Recommender Systems; Demographic-Based Recommender Systems; Context-Aware Recommender Systems; Conversational/Critiquing Recommender Systems; Hybrid Techniques.
Used by: Jinni, Taste Kid, Nanocrowd, Clerkdogs, Criticker, MovieLens, Netflix, Shazam, Pandora, Last.FM, YooChoose, Think Analytics, iTunes, Amazon, IMDb, Flixster.

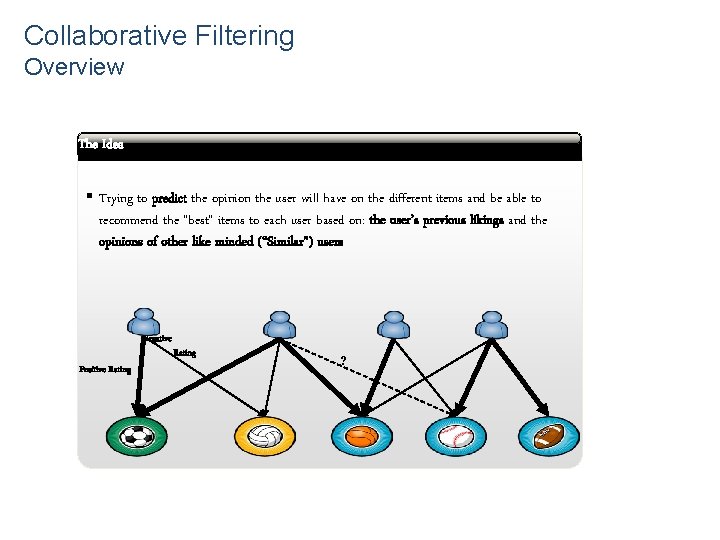

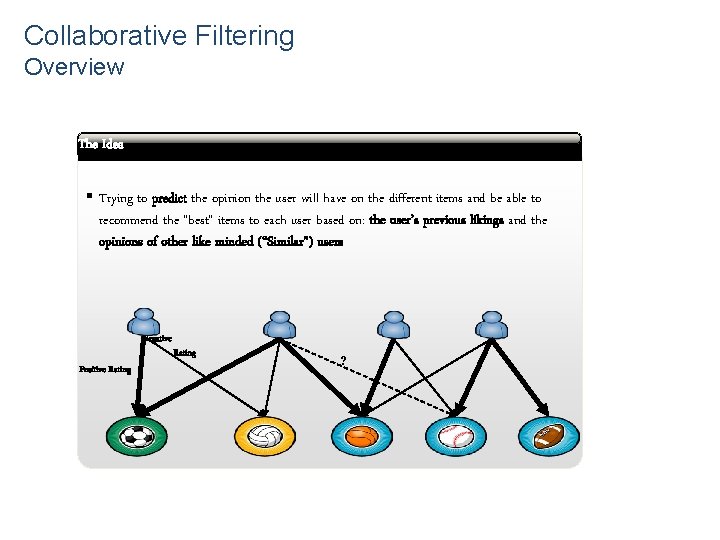

Collaborative Filtering: Overview
The Idea
§ Try to predict the opinion each user will have of the different items, and recommend the "best" items to each user based on the user's previous likings and the opinions of other like-minded ("similar") users.
(Figure labels: Negative, Positive, Rating ?)

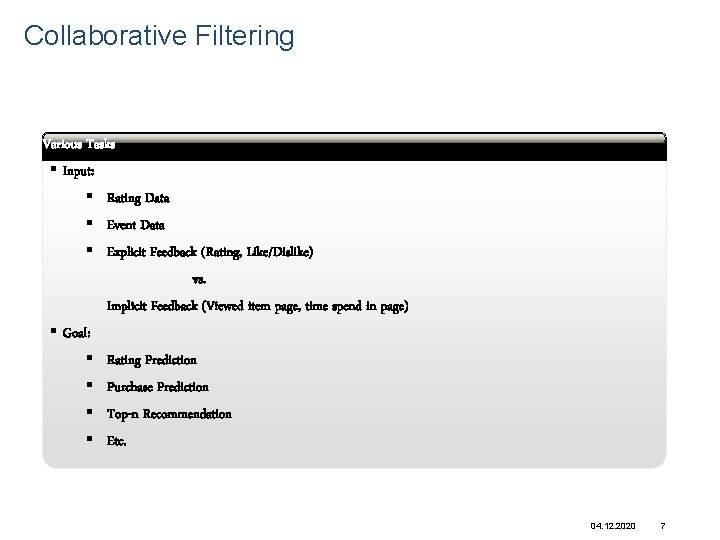

Collaborative Filtering: Various Tasks
§ Input:
  § Rating data
  § Event data
  § Explicit feedback (rating, like/dislike) vs. implicit feedback (viewed item page, time spent on page)
§ Goal:
  § Rating prediction
  § Purchase prediction
  § Top-n recommendation
  § Etc.
04.12.2020

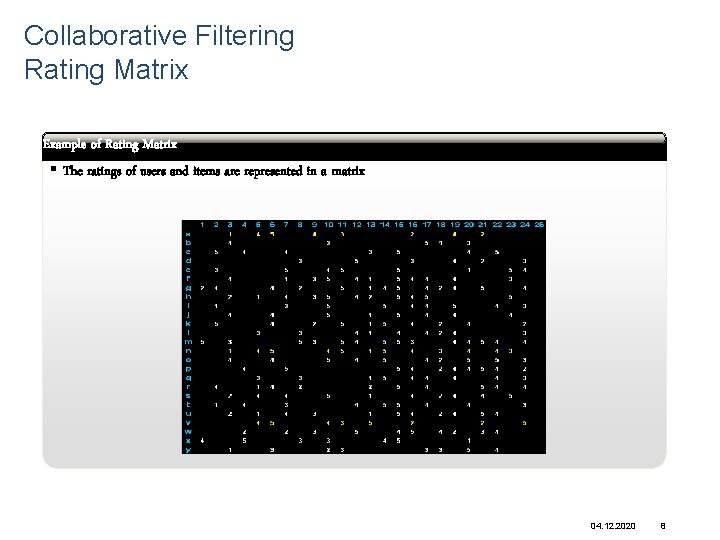

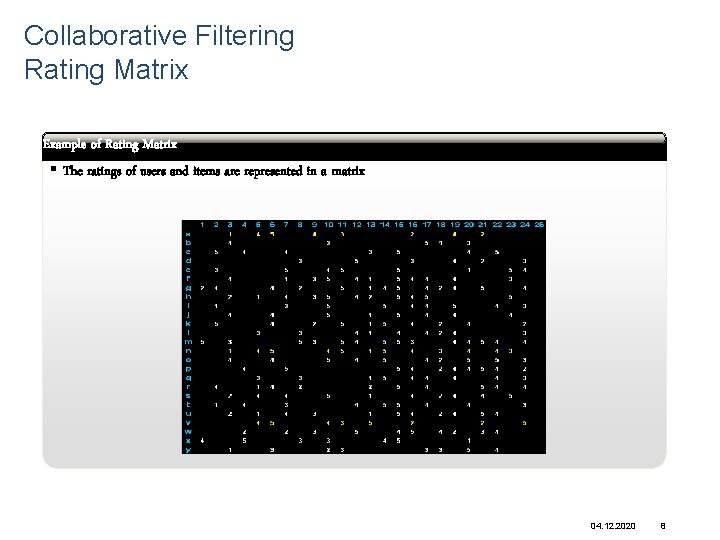

Collaborative Filtering: Rating Matrix
Example of a rating matrix
§ The ratings that users give to items are represented in a matrix.

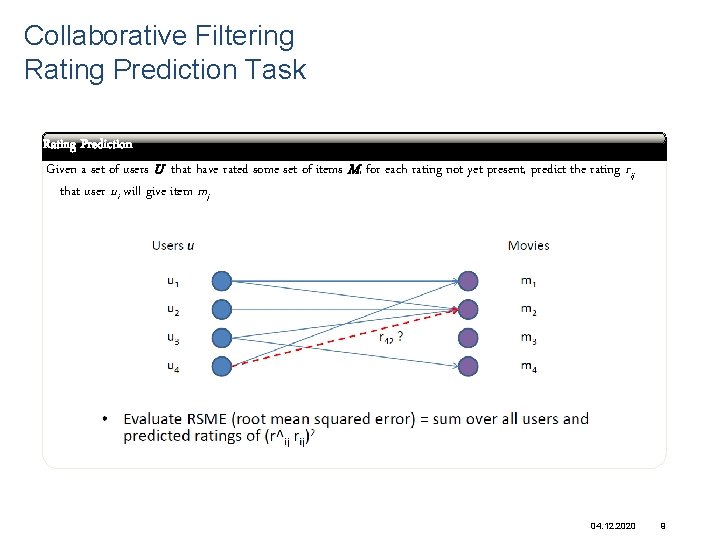

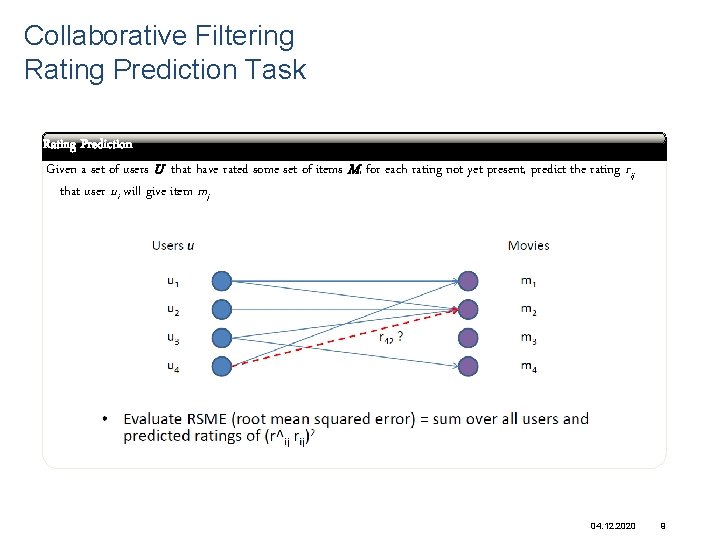

Collaborative Filtering: Rating Prediction Task
Given a set of users U who have rated some set of items M, for each rating not yet present, predict the rating r_ij that user u_i will give item m_j.
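A minimal sketch of the task, assuming a hypothetical toy set of (user, item, rating) triples: the rating matrix is stored with NaN for the ratings not yet present, and each missing cell is filled by a simple bias baseline (global mean plus a per-user and a per-item offset). The baseline is an illustrative stand-in chosen for brevity, not one of the techniques presented in these slides.

```python
import numpy as np

# Hypothetical toy data: (user index, item index, rating) triples.
triples = [(0, 0, 5), (0, 1, 3), (1, 0, 4), (1, 2, 1), (2, 1, 2), (2, 2, 5)]
n_users, n_items = 3, 3

# Rating matrix R; NaN marks a rating that is not yet present.
R = np.full((n_users, n_items), np.nan)
for u, i, r in triples:
    R[u, i] = r

# Bias baseline: global mean + user offset + item offset, all from observed cells.
mu = np.nanmean(R)
b_user = np.nanmean(R - mu, axis=1)                    # average deviation per user
b_item = np.nanmean(R - mu - b_user[:, None], axis=0)  # remaining deviation per item

def predict(u, i):
    return mu + b_user[u] + b_item[i]

# The rating prediction task: estimate every missing r_ij.
missing = [(u, i) for u in range(n_users) for i in range(n_items) if np.isnan(R[u, i])]
for u, i in missing:
    print(f"user {u}, item {i}: predicted rating {predict(u, i):.2f}")
```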

Collaborative Filtering Techniques
Popular techniques:
§ Nearest Neighbor
§ Matrix Factorization
§ Deep Learning

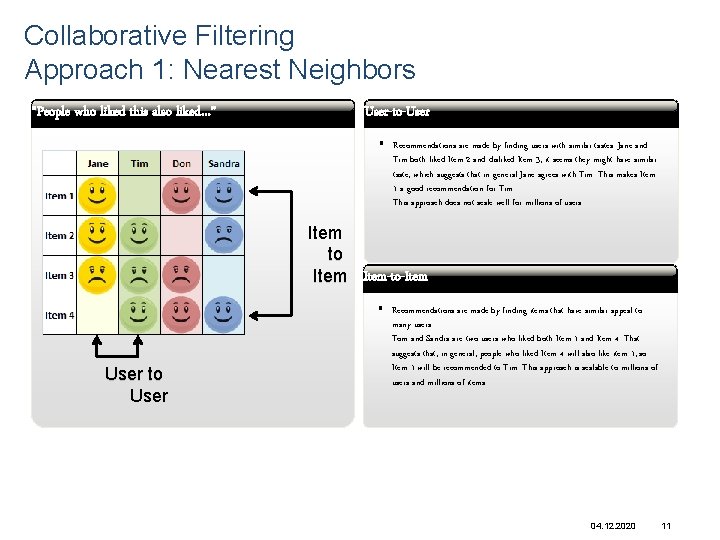

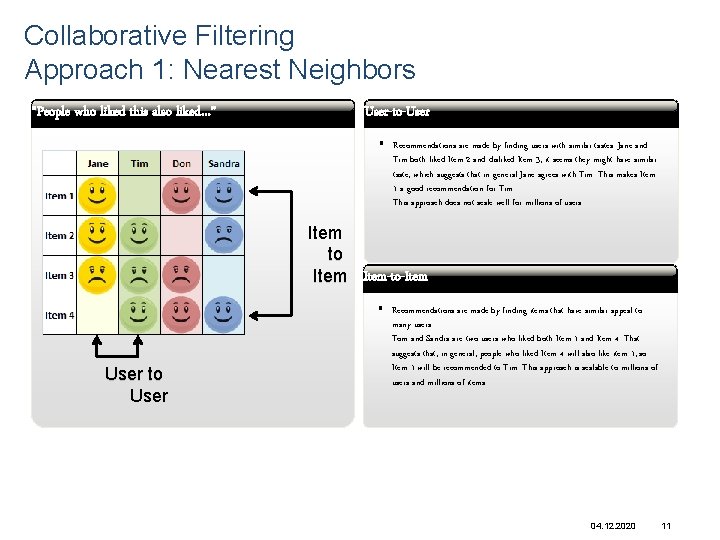

Collaborative Filtering Approach 1: Nearest Neighbors
"People who liked this also liked…"
User-to-User
§ Recommendations are made by finding users with similar tastes. Jane and Tim both liked Item 2 and disliked Item 3; they seem to have similar taste, which suggests that, in general, Jane agrees with Tim. This makes Item 1 (which Jane liked) a good recommendation for Tim. This approach does not scale well to millions of users.
Item-to-Item
§ Recommendations are made by finding items that have similar appeal to many users. Tom and Sandra are two users who liked both Item 1 and Item 4. That suggests that, in general, people who liked Item 4 will also like Item 1, so Item 1 will be recommended to Tim (who liked Item 4). This approach scales to millions of users and millions of items.
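The item-to-item example can be sketched in a few lines. This is a minimal illustration with hypothetical like data modelled on the slide (Tom and Sandra liked Items 1 and 4, Tim liked Item 4); item similarity is taken to be cosine similarity between item columns, which is one common choice among several.

```python
import numpy as np

items = ["Item 1", "Item 2", "Item 3", "Item 4"]
users = ["Tom", "Sandra", "Tim"]
# Hypothetical data: 1 = liked, 0 = no like recorded.
likes = np.array([
    [1, 0, 0, 1],   # Tom
    [1, 1, 0, 1],   # Sandra
    [0, 0, 0, 1],   # Tim
])

# Item-to-item cosine similarity over the users' like vectors (matrix columns).
norms = np.linalg.norm(likes, axis=0)
norms[norms == 0] = 1.0                 # avoid dividing by zero for never-liked items
sim = (likes.T @ likes) / np.outer(norms, norms)
np.fill_diagonal(sim, 0.0)              # an item should not recommend itself

def recommend(user_idx, top_n=1):
    liked = likes[user_idx]
    scores = sim @ liked                # sum of similarities to the items the user liked
    scores[liked == 1] = -np.inf        # never re-recommend an item already liked
    return [items[j] for j in np.argsort(-scores)[:top_n]]

print(recommend(users.index("Tim")))   # ['Item 1']
```

Because the item-item similarities depend only on the catalog, they can be precomputed offline, which is one reason this variant scales better than searching over millions of users at request time.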

Nearest Neighbor Technique
Popular methods:
§ Using predefined similarity measures (such as Pearson correlation or Hamming distance)
§ Learning the similarity (relation) weights via optimization
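The two predefined measures named above can be written as short helpers. This is a minimal sketch; the convention that Pearson correlation is computed only over co-rated items (NaN = unrated) and returns 0 when fewer than two items overlap is an assumption, and other conventions exist.

```python
import numpy as np

def pearson(a, b):
    """Pearson correlation between two users over the items both rated (NaN = unrated)."""
    a, b = np.asarray(a, float), np.asarray(b, float)
    both = ~np.isnan(a) & ~np.isnan(b)
    if both.sum() < 2:
        return 0.0                      # not enough overlap to correlate
    x = a[both] - a[both].mean()
    y = b[both] - b[both].mean()
    denom = np.sqrt((x ** 2).sum() * (y ** 2).sum())
    return float((x * y).sum() / denom) if denom > 0 else 0.0

def hamming(a, b):
    """Number of positions where two binary like/dislike vectors disagree."""
    return int(np.sum(np.asarray(a) != np.asarray(b)))

print(pearson([5, 3, np.nan, 1], [4, 2, 5, 1]))
print(hamming([1, 0, 1, 1], [1, 1, 1, 0]))      # 2
```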

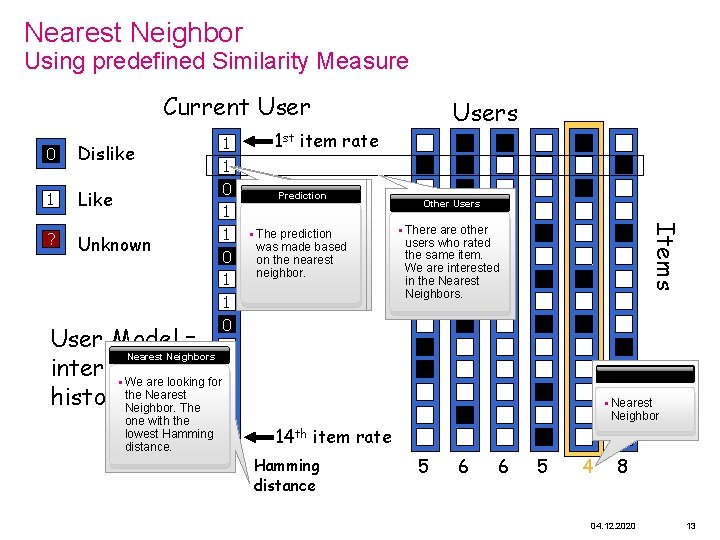

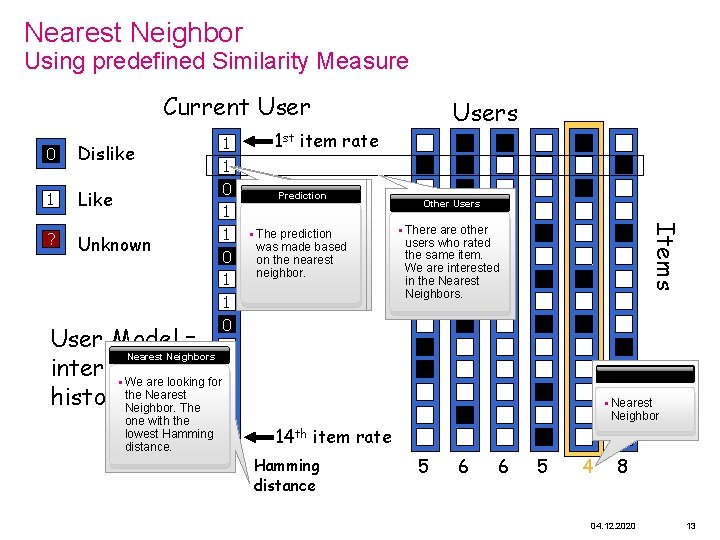

Nearest Neighbor Using a Predefined Similarity Measure
§ The current user did not rate the 14th item; we will try to predict the rating according to the user's neighbors.
§ The user model is the user's interaction history: 1 = like, 0 = dislike, ? = unknown.
§ Other users rated the same item, and we are interested in the nearest neighbors among them: the nearest neighbor is the one with the lowest Hamming distance to the current user.
§ In the example, the Hamming distances are 5, 6, 6, 5, 4 and 8, so the user at distance 4 is the nearest neighbor, and the prediction is made based on that neighbor's rating.
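The procedure on this slide reduces to an argmin over Hamming distances. The sketch below uses hypothetical like/dislike histories (not the slide's data) for three users who rated the target item; ties would go to the first neighbor, which is an arbitrary choice.

```python
import numpy as np

# Hypothetical like (1) / dislike (0) histories over the same 8 other items.
current_user = np.array([1, 0, 1, 1, 0, 1, 0, 1])
neighbours = np.array([
    [1, 1, 0, 1, 0, 0, 1, 1],
    [0, 0, 1, 1, 1, 1, 0, 1],
    [1, 0, 1, 0, 0, 1, 0, 1],
])
# Each neighbour's known rating of the target item (1 = like, 0 = dislike).
target_ratings = np.array([0, 0, 1])

distances = (neighbours != current_user).sum(axis=1)  # Hamming distance per neighbour
nearest = int(np.argmin(distances))                   # lowest distance wins (first on ties)
prediction = int(target_ratings[nearest])             # copy the nearest neighbour's rating
print(distances, nearest, prediction)                 # [4 2 1] 2 1
```

A k-nearest-neighbor variant would take a (possibly distance-weighted) vote over the k closest users instead of copying a single rating.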

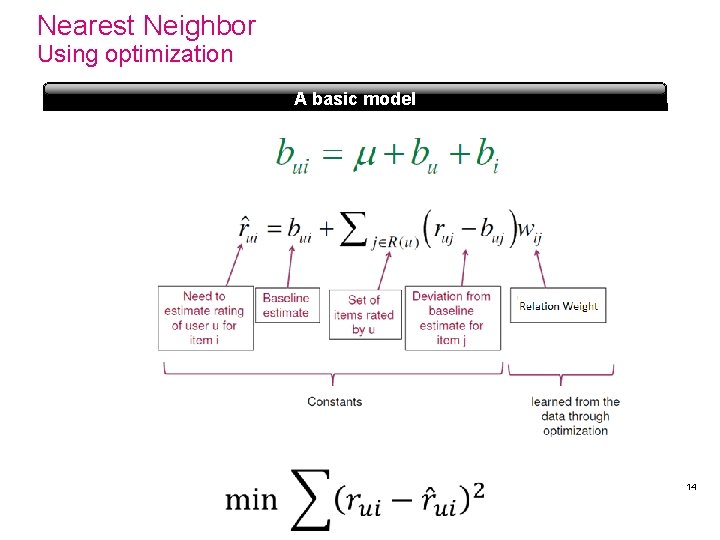

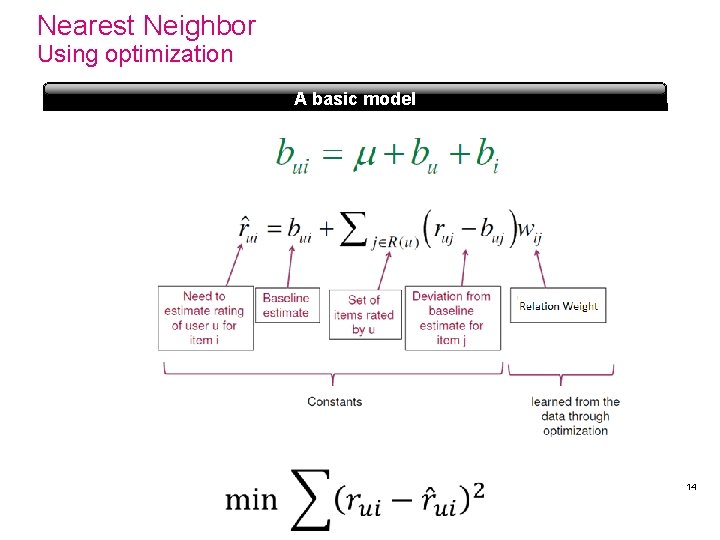

Nearest Neighbor Using Optimization
A basic model

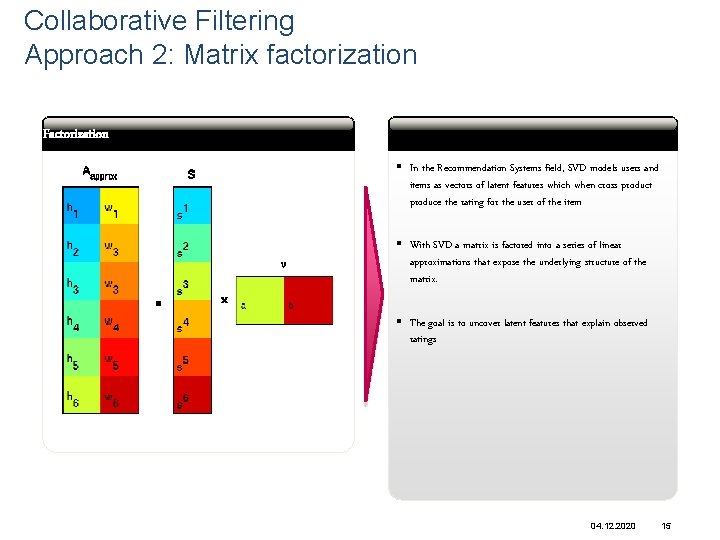

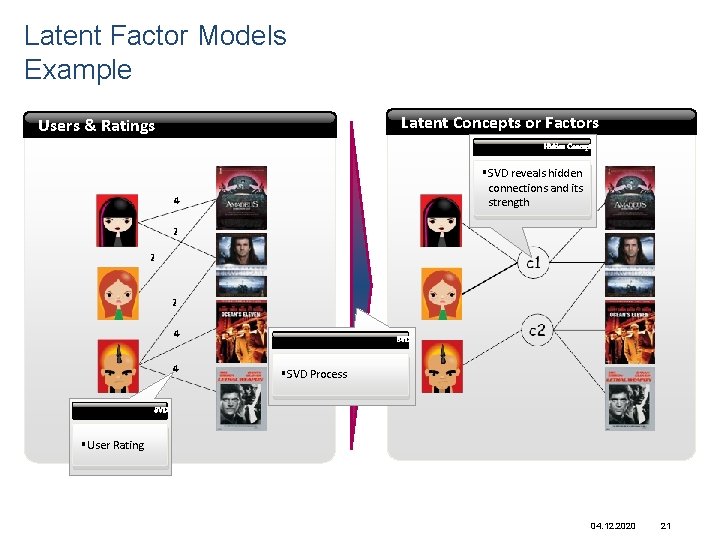

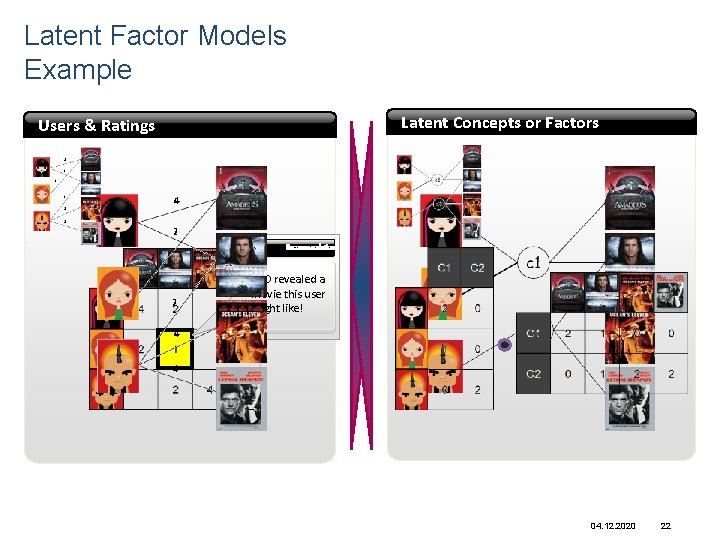

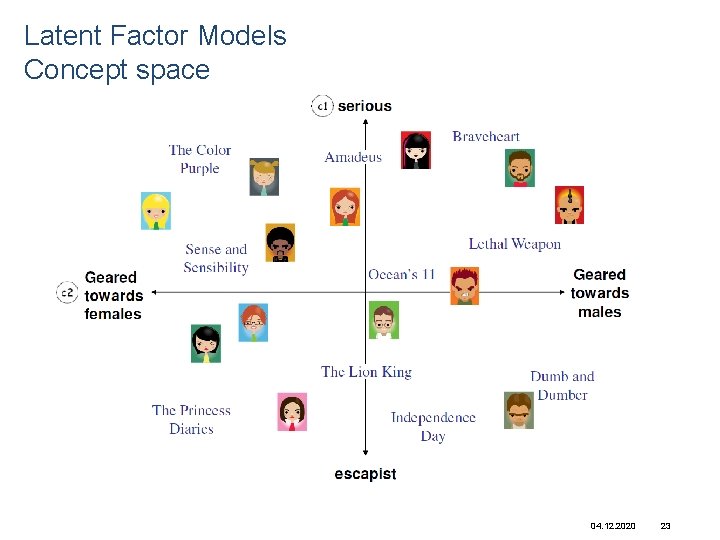

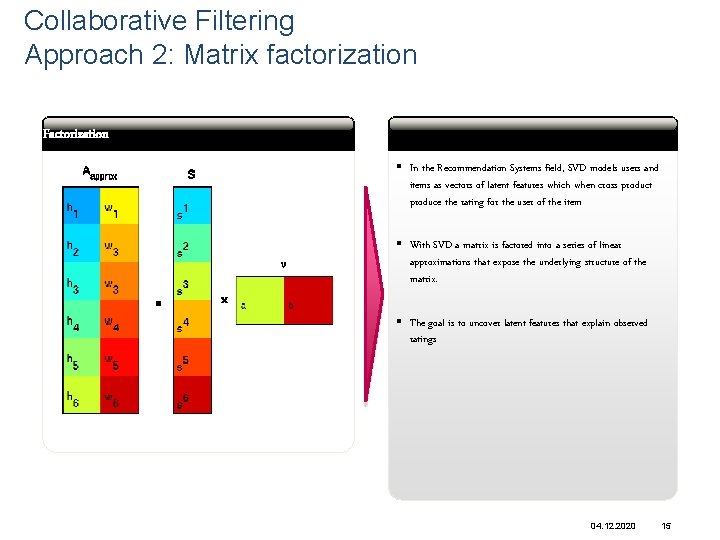

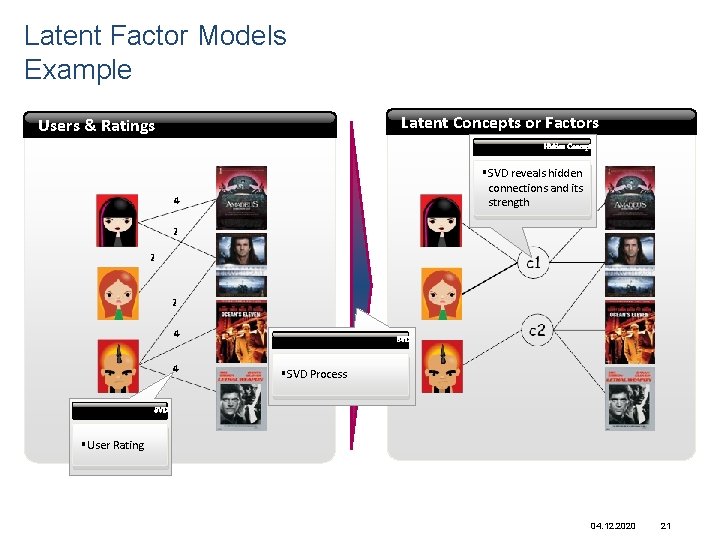

Collaborative Filtering Approach 2: Matrix Factorization
§ In the recommender systems field, SVD models users and items as vectors of latent features whose dot product produces the user's rating of the item.
§ With SVD, a matrix is factored into a series of linear approximations that expose the underlying structure of the matrix.
§ The goal is to uncover latent features that explain the observed ratings.
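A minimal NumPy sketch of the idea on a hypothetical, fully observed toy matrix: SVD splits the matrix into user and item vectors in a shared latent space, and keeping only the strongest k factors gives a low-rank approximation whose entries are dot products of those vectors. Plain SVD needs every cell filled; real rating matrices are sparse, which is why the factors are later estimated by optimization on observed entries only.

```python
import numpy as np

# Hypothetical, fully observed toy rating matrix (users x movies).
R = np.array([
    [5, 5, 4, 1, 1],
    [4, 5, 5, 1, 2],
    [1, 2, 1, 5, 4],
    [2, 1, 1, 4, 5],
], dtype=float)

U, s, Vt = np.linalg.svd(R, full_matrices=False)  # R = U @ diag(s) @ Vt
k = 2                                             # keep the 2 strongest latent factors
user_factors = U[:, :k] * s[:k]                   # each user as a k-dim vector
item_factors = Vt[:k, :].T                        # each movie as a k-dim vector

R_k = user_factors @ item_factors.T               # entry (u, i) = dot(user_u, item_i)
print(np.round(s, 2))                             # singular values, largest first
print(np.round(R_k, 1))
```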

The Netflix Prize
§ Started in Oct. 2006
§ $1,000,000 Grand Prize
§ Training dataset: 100 million ratings (1, 2, 3, 4, 5 stars) from 480K customers on 18K movies
§ Qualifying set (2,817,131 ratings) consisting of:
  § Test set (1,408,789 ratings), used to determine winners
  § Quiz set (1,408,342 ratings), used to calculate leaderboard scores
§ Goal:
  § Improve Netflix's existing algorithm by at least 10%
  § Reduce RMSE from 0.9525 to RMSE < 0.8572
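The 10% goal and the 0.8572 target are linked by simple arithmetic: 0.9525 × 0.9 ≈ 0.85725. The sketch below shows RMSE and the relative improvement measure; the helper names are illustrative, and the three-rating RMSE call uses made-up numbers.

```python
import numpy as np

def rmse(predicted, actual):
    """Root mean squared error between predicted and true ratings."""
    predicted, actual = np.asarray(predicted, float), np.asarray(actual, float)
    return float(np.sqrt(np.mean((predicted - actual) ** 2)))

def improvement_pct(baseline_rmse, new_rmse):
    """Relative RMSE reduction as a percentage of the baseline RMSE."""
    return 100.0 * (baseline_rmse - new_rmse) / baseline_rmse

print(0.9525 * 0.9)                                 # about 0.85725, hence RMSE < 0.8572
print(round(improvement_pct(0.9525, 0.8572), 2))    # 10.01
print(rmse([3.5, 4.0, 2.0], [4, 4, 1]))             # toy predictions vs. true stars
```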

Three Years Later…

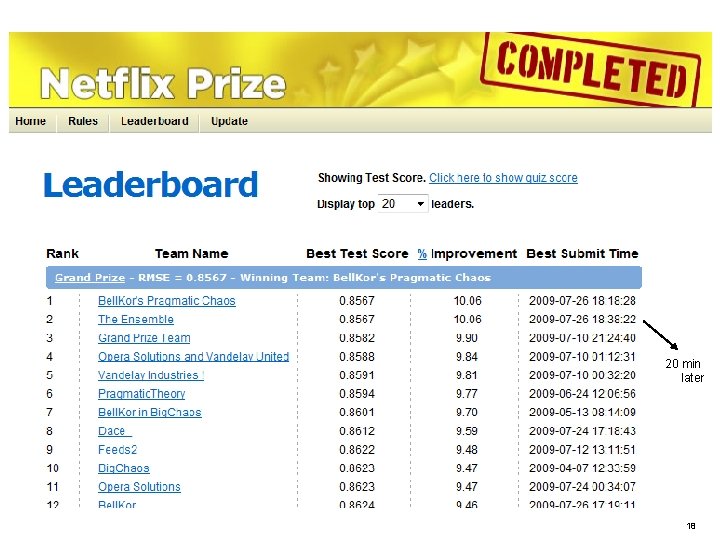

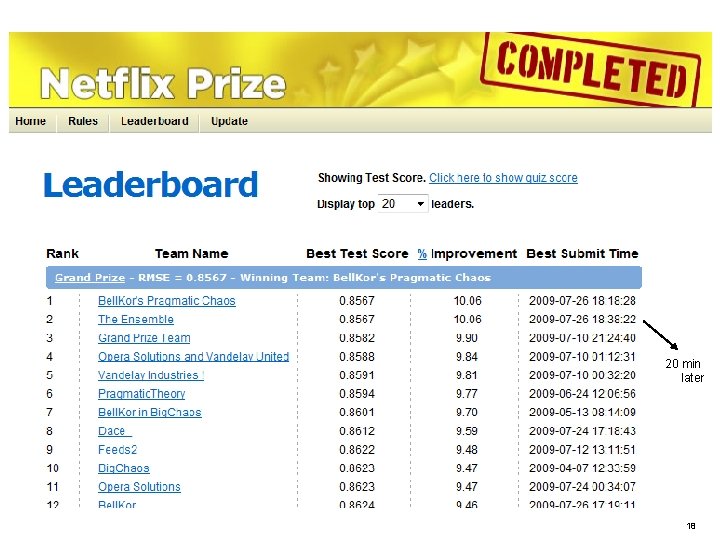

20 min later…

The Prize Goes To…
§ Once a team succeeded in improving the RMSE by 10%, the jury issued a last call, giving all teams 30 days to send their submissions.
§ On July 25, 2009, the team "The Ensemble" achieved a 10.09% improvement.
§ After some dispute…

Lessons Learned from the Netflix Prize
§ Competition is an excellent way for companies to:
  § Outsource their challenges
  § Get PR
  § Hire top talent
§ SVD has become the method of choice in CF.
§ Ensembles are crucial for winning.
§ Regularization is important for alleviating over-fitting.
§ When abundant training data is available, content features (e.g., genre and actors) were found to be useless.
§ Methods developed during competitions are not always useful for real systems.

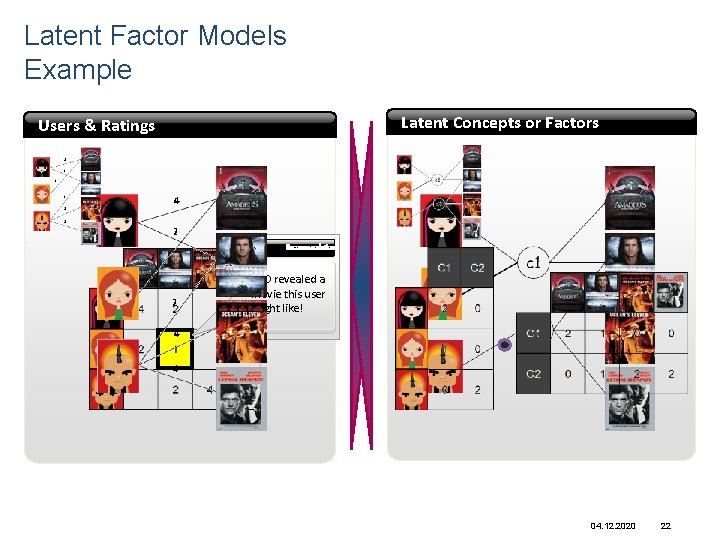

Latent Factor Models: Example
Latent concepts or factors; users & ratings
§ Hidden concept: SVD reveals hidden connections and their strength
§ SVD process
§ User rating

Latent Factor Models: Example
Latent concepts or factors; users & ratings
Recommendation
§ SVD revealed a movie this user might like!

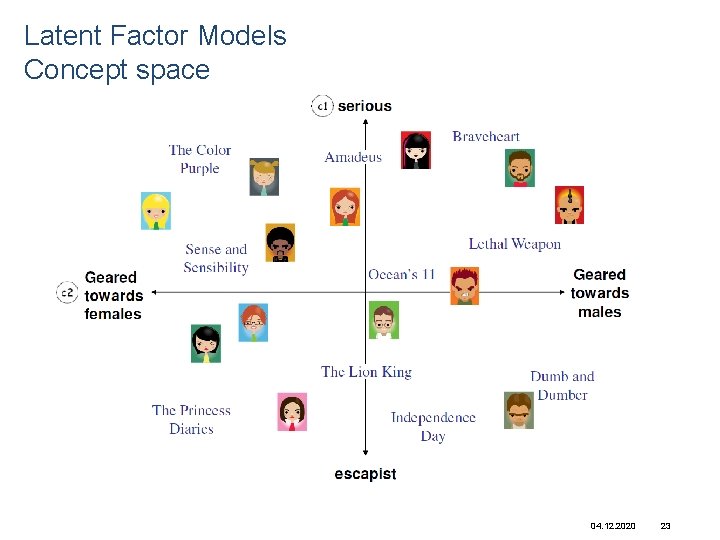

Latent Factor Models: Concept Space

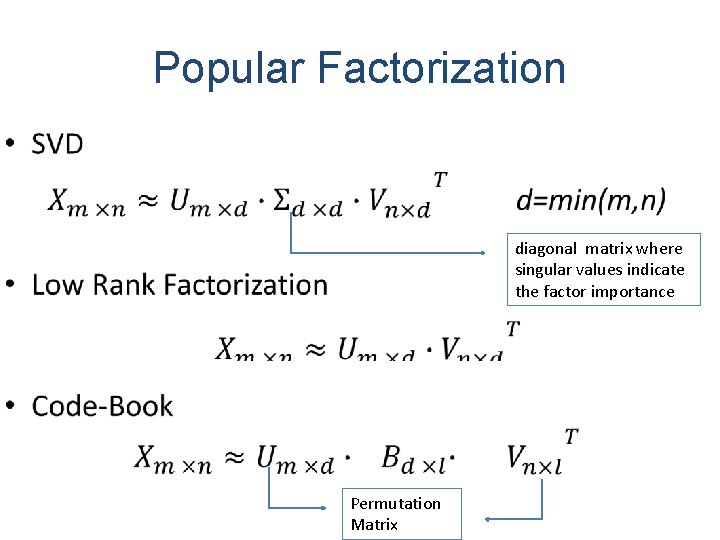

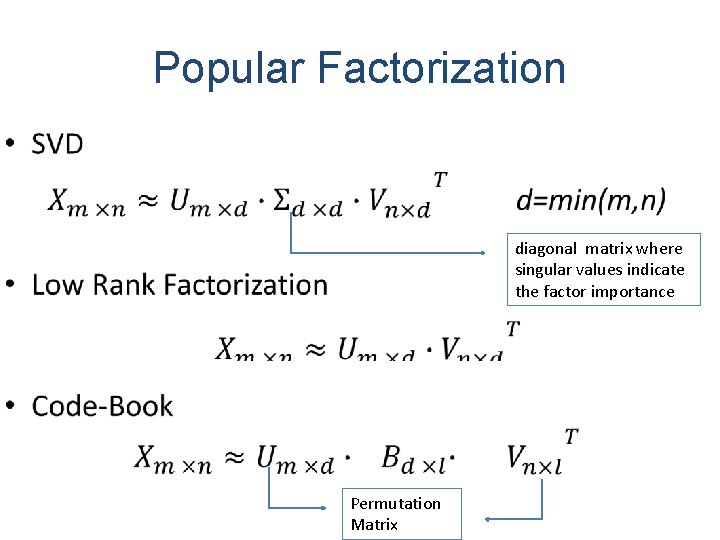

Popular Factorization
• A diagonal matrix whose singular values indicate the importance of each factor
(Figure label: Permutation Matrix)
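For reference, the factorization in standard SVD notation. The symbol names (R, U, Σ, V, k, σ_f) are assumed here, since the slide's figure is not reproduced in the text; the diagonal matrix is Σ, and its singular values are sorted so that the first factors matter most.

$$
R = U\,\Sigma\,V^{\top}, \qquad \Sigma = \operatorname{diag}(\sigma_1, \sigma_2, \ldots, \sigma_r), \qquad \sigma_1 \ge \sigma_2 \ge \cdots \ge \sigma_r \ge 0
$$

$$
R \approx U_k\,\Sigma_k\,V_k^{\top}, \qquad \hat{r}_{ij} = \sum_{f=1}^{k} \sigma_f\, u_{if}\, v_{jf}
$$

Keeping only the k largest singular values gives the best rank-k approximation of R in the least-squares (Frobenius) sense, which is why the singular values can be read as factor importance.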

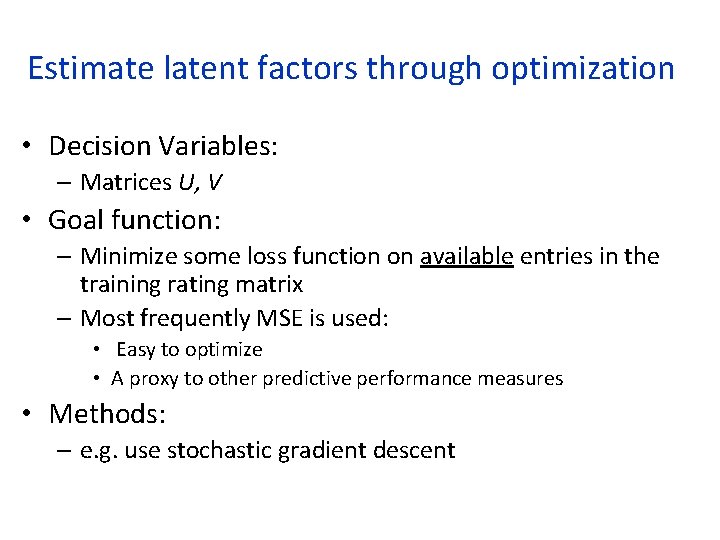

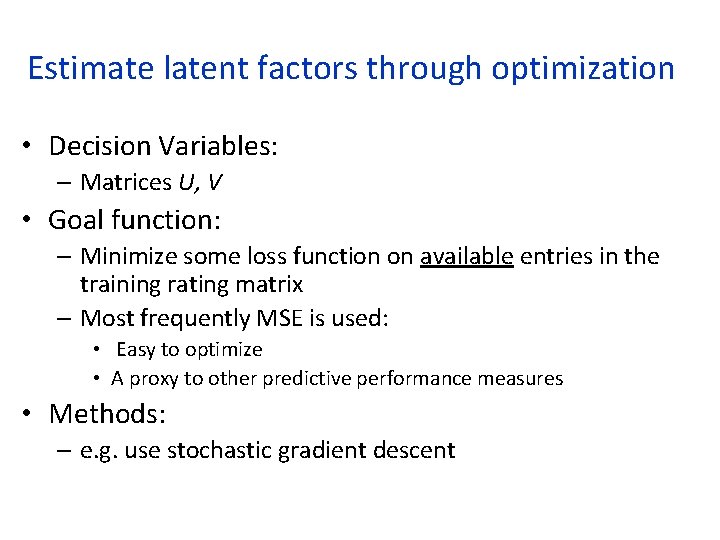

Estimate Latent Factors Through Optimization
• Decision variables:
– Matrices U, V
• Goal function:
– Minimize some loss function on the available entries of the training rating matrix
– Most frequently, MSE is used:
• Easy to optimize
• A proxy for other predictive performance measures
• Methods:
– e.g., stochastic gradient descent
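A minimal sketch of this optimization, assuming hypothetical toy triples: U and V are the decision variables, the loss is squared error on the observed entries only plus an L2 penalty (the regularization the Netflix lessons emphasize), and the updates are plain stochastic gradient descent. The hyperparameters (k, lr, reg, epochs) are illustrative, not tuned.

```python
import numpy as np

def train_mf(ratings, n_users, n_items, k=2, lr=0.01, reg=0.05, epochs=500, seed=0):
    """Fit R ~ U @ V.T by SGD on observed (user, item, rating) triples only."""
    rng = np.random.default_rng(seed)
    U = 0.1 * rng.standard_normal((n_users, k))    # user latent factors
    V = 0.1 * rng.standard_normal((n_items, k))    # item latent factors
    for _ in range(epochs):
        for idx in rng.permutation(len(ratings)):  # visit observed ratings in random order
            u, i, r = ratings[idx]
            err = r - U[u] @ V[i]                  # error on this single rating
            u_old = U[u].copy()
            U[u] += lr * (err * V[i] - reg * U[u])
            V[i] += lr * (err * u_old - reg * V[i])
    return U, V

def mse(U, V, ratings):
    return float(np.mean([(r - U[u] @ V[i]) ** 2 for u, i, r in ratings]))

train = [(0, 0, 5), (0, 1, 4), (1, 0, 4), (1, 2, 1),
         (2, 1, 1), (2, 2, 5), (3, 0, 1), (3, 2, 4)]
U, V = train_mf(train, n_users=4, n_items=3)
print(round(mse(U, V, train), 3))        # training MSE after SGD
print(round(float(U[0] @ V[2]), 2))      # prediction for an unobserved cell
```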

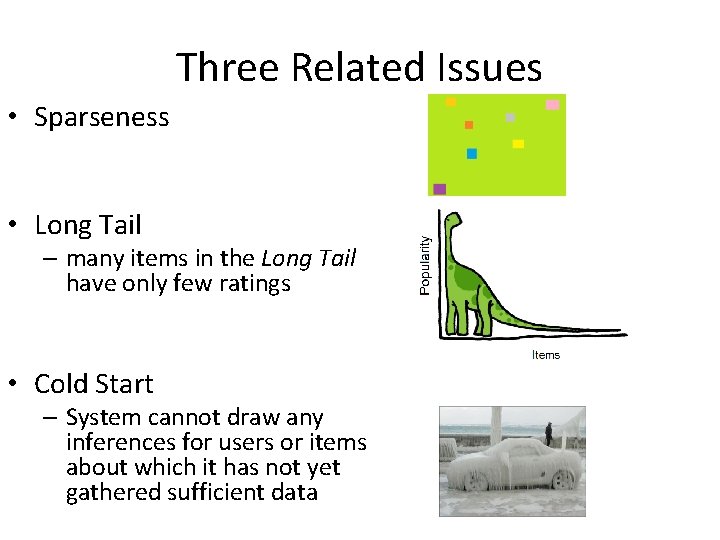

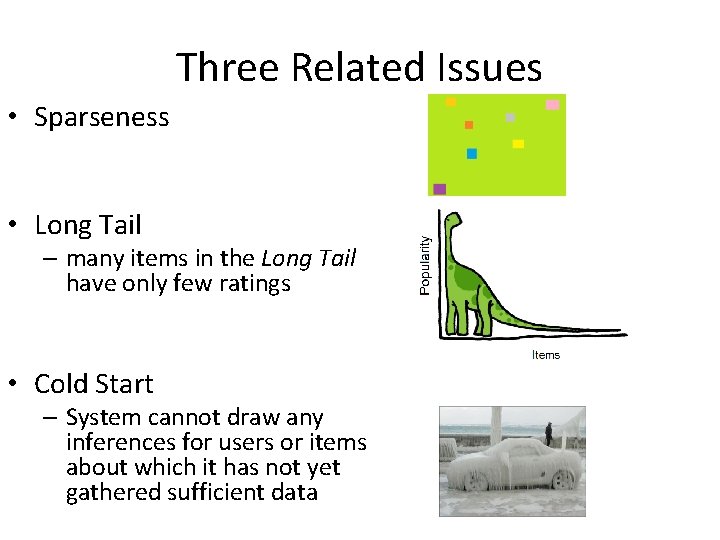

Three Related Issues
• Sparseness
• Long Tail – many items in the long tail have only a few ratings
• Cold Start – the system cannot draw any inferences for users or items about which it has not yet gathered sufficient data
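The three issues can be measured with a few counts on the rating matrix. This is a small sketch on a hypothetical matrix: sparsity is the fraction of empty cells, long-tail items are those with very few ratings (the threshold of at most one rating is an arbitrary choice for the toy data), and cold-start items have no ratings at all.

```python
import numpy as np

nan = np.nan
# Hypothetical rating matrix (users x items); NaN = not rated.
R = np.array([
    [5, 4, nan, nan, nan, 1],
    [4, nan, 3, nan, nan, nan],
    [5, 5, nan, 2, nan, nan],
    [nan, 4, nan, nan, nan, nan],
])

observed = ~np.isnan(R)
density = observed.mean()                        # fraction of cells holding a rating
ratings_per_item = observed.sum(axis=0)
long_tail = np.where(ratings_per_item <= 1)[0]   # items with at most one rating
cold_items = np.where(ratings_per_item == 0)[0]  # items nobody has rated yet

print(f"sparsity: {1 - density:.1%}")
print("ratings per item:", ratings_per_item)
print("long-tail items:", long_tail, "cold-start items:", cold_items)
```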

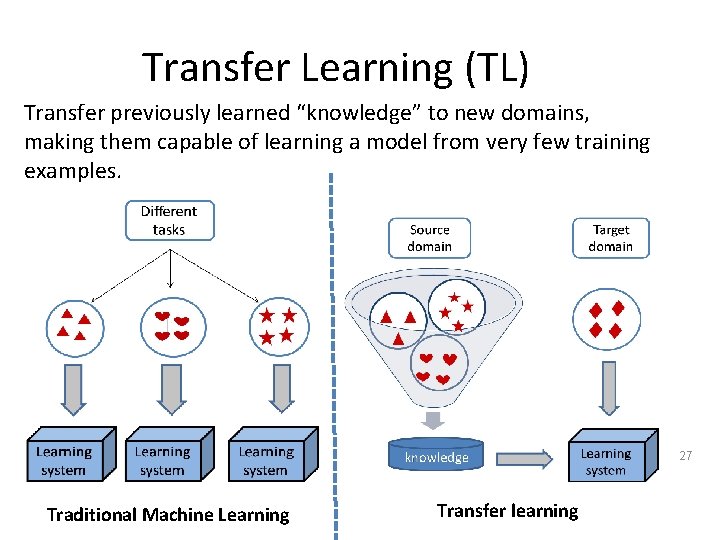

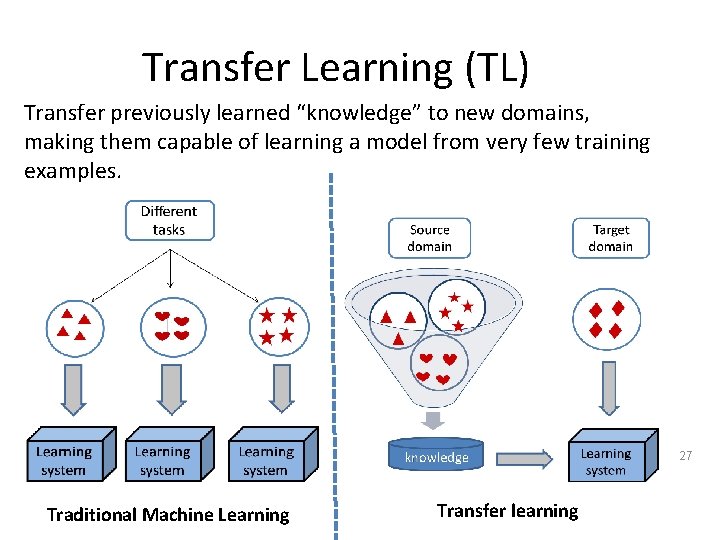

Transfer Learning (TL)
Transfer previously learned "knowledge" to new domains, making them capable of learning a model from very few training examples.
(Figure: traditional machine learning vs. transfer learning.)

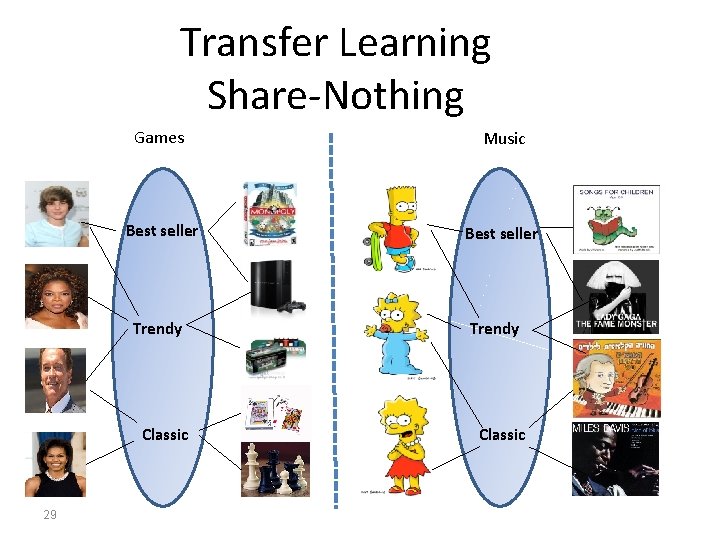

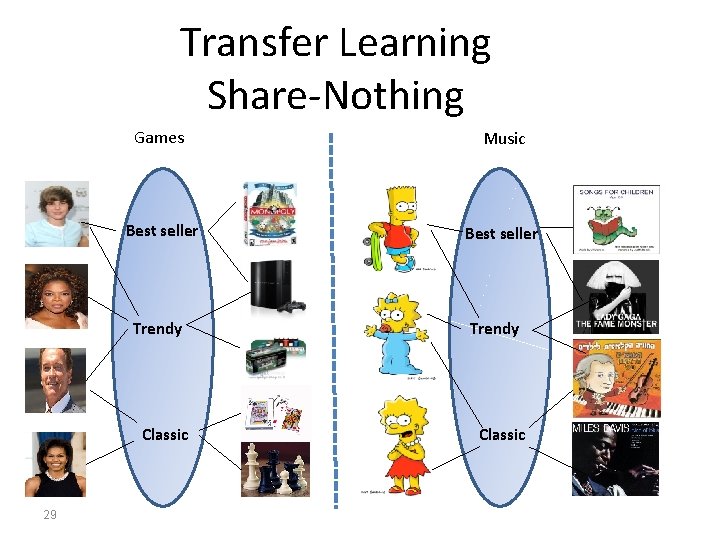

Transfer Learning: Share-Nothing
(Figure: the Games and Music domains.)

Transfer Learning: Share-Nothing
(Figure: the Games and Music domains, each with item groups such as "Best seller", "Trendy" and "Classic".)

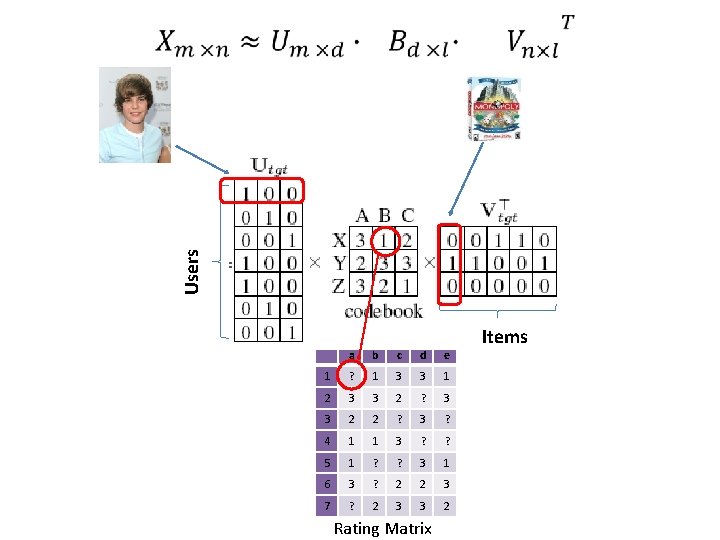

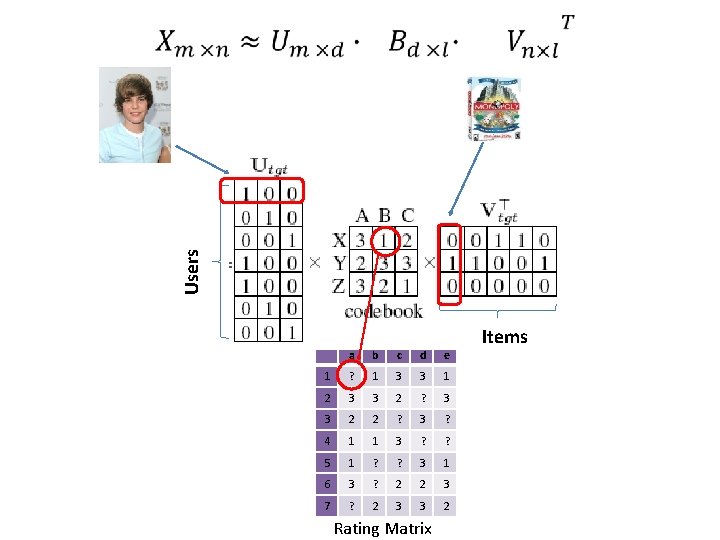

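Rating Matrix (users 1–7 × items a–e; ? = missing rating)

| User | a | b | c | d | e |
|---|---|---|---|---|---|
| 1 | ? | 1 | 3 | 3 | 1 |
| 2 | 3 | 3 | 2 | ? | 3 |
| 3 | 2 | 2 | ? | 3 | ? |
| 4 | 1 | 1 | 3 | ? | ? |
| 5 | 1 | ? | ? | 3 | 1 |
| 6 | 3 | ? | 2 | 2 | 3 |
| 7 | ? | 2 | 3 | 3 | 2 |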

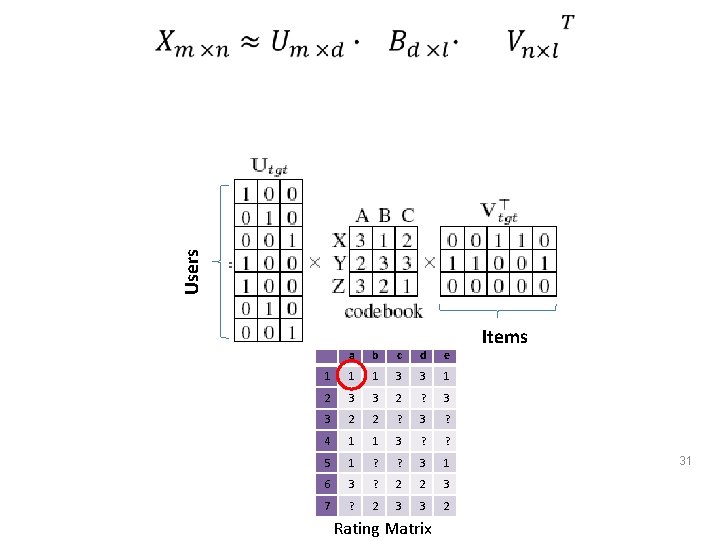

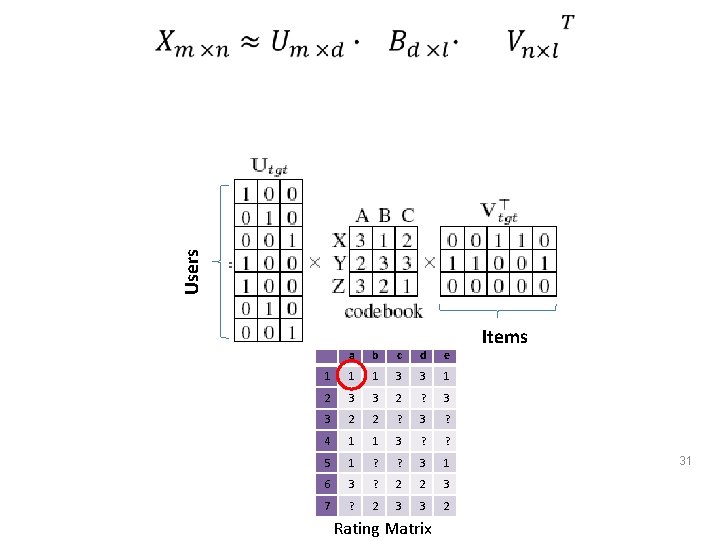

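Rating Matrix (users 1–7 × items a–e; ? = missing rating)

| User | a | b | c | d | e |
|---|---|---|---|---|---|
| 1 | 1 | 1 | 3 | 3 | 1 |
| 2 | 3 | 3 | 2 | ? | 3 |
| 3 | 2 | 2 | ? | 3 | ? |
| 4 | 1 | 1 | 3 | ? | ? |
| 5 | 1 | ? | ? | 3 | 1 |
| 6 | 3 | ? | 2 | 2 | 3 |
| 7 | ? | 2 | 3 | 3 | 2 |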

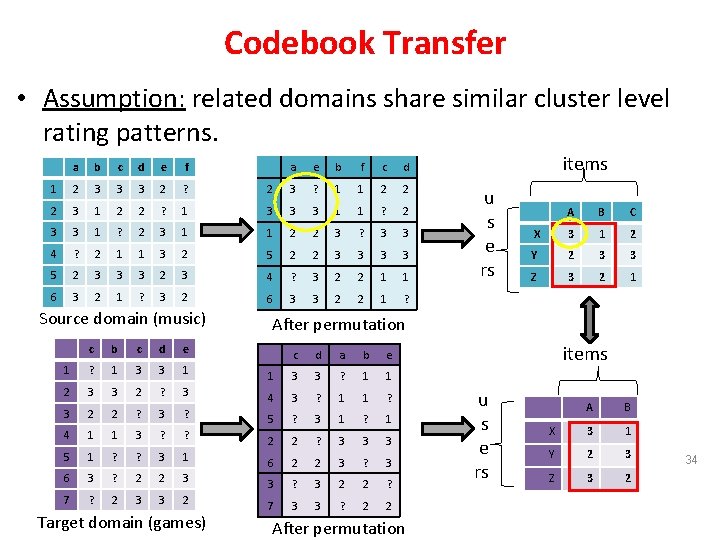

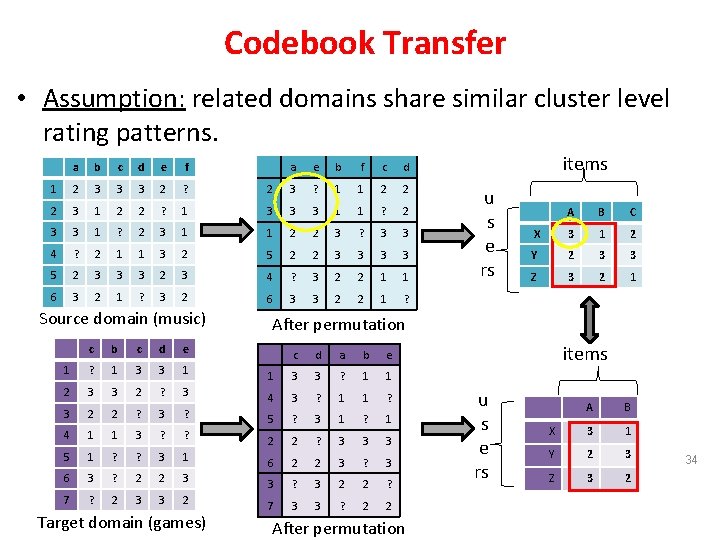

Codebook Transfer
• Assumption: related domains share similar cluster-level rating patterns.
(Figure: the source-domain (music) rating matrix, after permuting its users and items, reveals a cluster-level codebook; the target-domain (games) matrix, after permutation, matches the same patterns.)
Codebook (user clusters X, Y, Z × item clusters A, B, C):

| | A | B | C |
|---|---|---|---|
| X | 3 | 1 | 2 |
| Y | 2 | 3 | 3 |
| Z | 3 | 2 | 1 |
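A simplified sketch of the matching step, using the 3 × 3 codebook shown on this slide and a hypothetical target matrix. The codebook B is taken as given (in codebook transfer it is built from the source domain by co-clustering); each target user and item is assigned to the codebook row or column that best fits its observed ratings, alternating a few times, and missing entries are then read off B. The function name, the random initialization and the hard-assignment loop are illustrative assumptions, not the exact published algorithm.

```python
import numpy as np

def codebook_transfer(X, B, n_iter=10, seed=0):
    """Fill NaN entries of target matrix X using codebook B (user clusters x item clusters)."""
    rng = np.random.default_rng(seed)
    mask = ~np.isnan(X)
    Xz = np.where(mask, X, 0.0)                   # zeros are ignored via the mask
    k, l = B.shape
    item_cl = rng.integers(l, size=X.shape[1])    # random initial item clusters
    for _ in range(n_iter):
        # Users: pick the codebook row with least squared error on observed cells.
        rows = B[:, item_cl]                      # (k, n_items)
        user_err = np.array([((Xz - r) ** 2 * mask).sum(axis=1) for r in rows])
        user_cl = user_err.argmin(axis=0)
        # Items: pick the codebook column with least squared error on observed cells.
        cols = B[user_cl, :].T                    # (l, n_users)
        item_err = np.array([((Xz - c[:, None]) ** 2 * mask).sum(axis=0) for c in cols])
        item_cl = item_err.argmin(axis=0)
    filled = np.where(mask, X, B[np.ix_(user_cl, item_cl)])
    return filled, user_cl, item_cl

B = np.array([[3, 1, 2],     # user cluster X
              [2, 3, 3],     # user cluster Y
              [3, 2, 1]],    # user cluster Z; columns = item clusters A, B, C
             dtype=float)
nan = np.nan
X = np.array([[3, nan, 2, 1],      # hypothetical target-domain ratings
              [2, 3, nan, 3],
              [nan, 2, 1, 2],
              [3, 1, 2, nan]])
filled, users, items = codebook_transfer(X, B)
print(filled)
```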

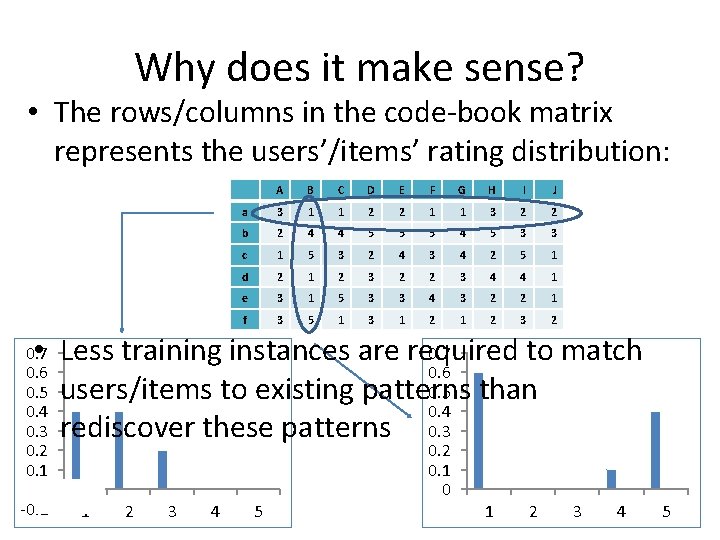

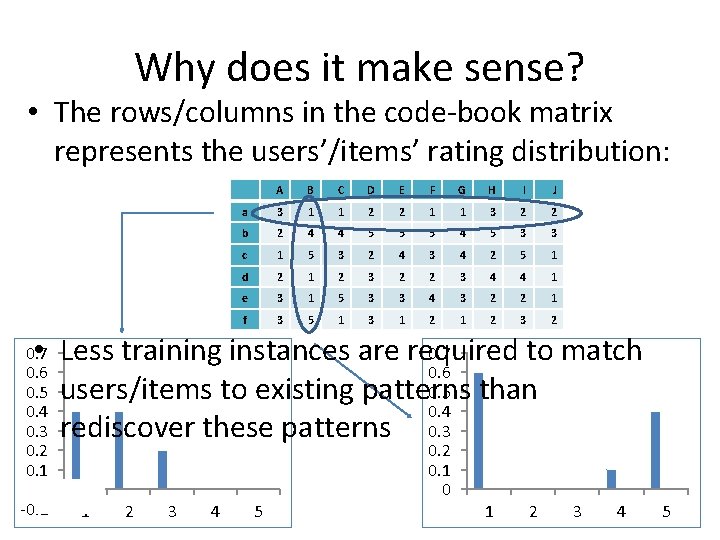

Why does it make sense?
• The rows/columns of the codebook matrix represent the users'/items' rating distributions.
(Figure: an example matrix with rows a–f and columns A–J, with plots of rating distributions over the rating values 1–5.)
• Fewer training instances are required to match users/items to existing patterns than to rediscover these patterns.

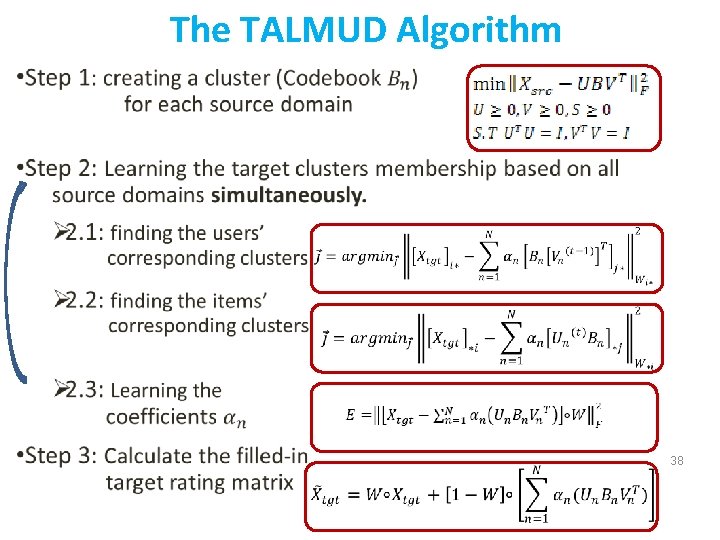

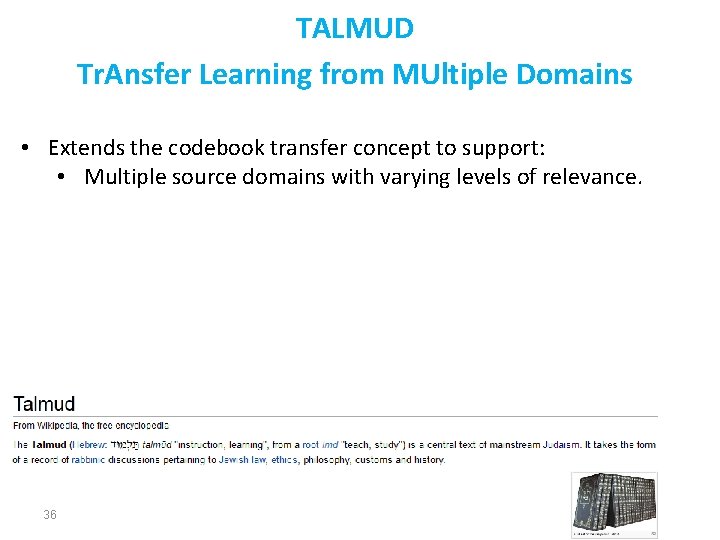

TALMUD – TrAnsfer Learning from MUltiple Domains
• Extends the codebook transfer concept to support:
• Multiple source domains with varying levels of relevance.

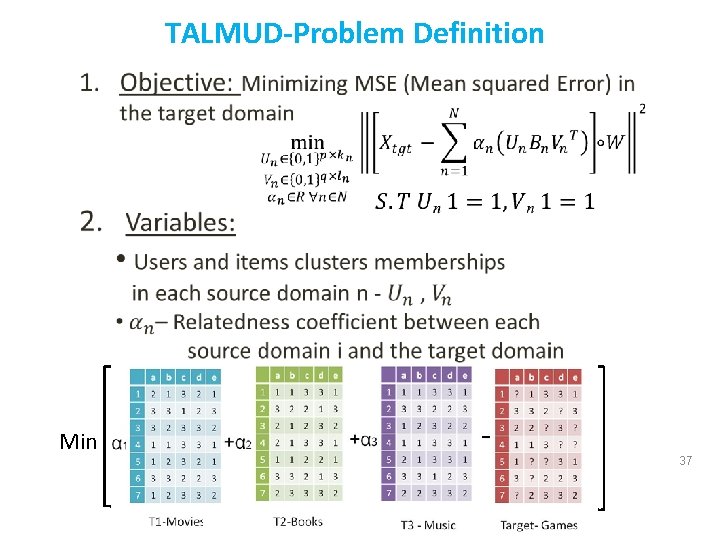

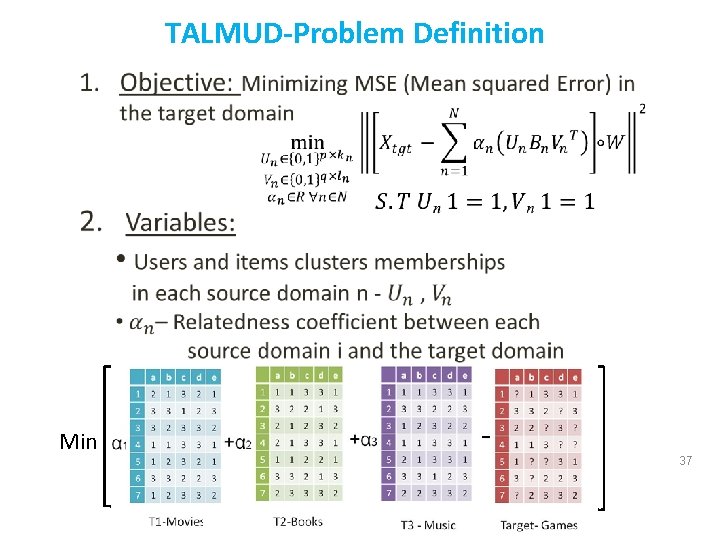

TALMUD – Problem Definition (minimization)
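A sketch of what such a minimization can look like, written as a weighted multi-source extension of the codebook-transfer reconstruction objective. The notation is assumed rather than copied from the slide image: X is the p × q target rating matrix, W its 0/1 mask of observed entries, ∘ the element-wise product, B_n the k_n × l_n codebook learned from source domain n, U_n and V_n hard cluster-membership matrices for the target users and items, and α_n the relevance weight of source n.

$$
\min_{\{U_n,\,V_n,\,\alpha_n\}_{n=1}^{N}} \left\lVert \left[ X - \sum_{n=1}^{N} \alpha_n\, U_n B_n V_n^{\top} \right] \circ W \right\rVert_F^2
\quad \text{s.t.} \quad U_n \in \{0,1\}^{p \times k_n},\; V_n \in \{0,1\}^{q \times l_n},\; U_n \mathbf{1} = \mathbf{1},\; V_n \mathbf{1} = \mathbf{1}
$$

The constraints make each target user and item belong to exactly one cluster per source; with N = 1 and α_1 = 1 the expression reduces to single-source codebook transfer.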

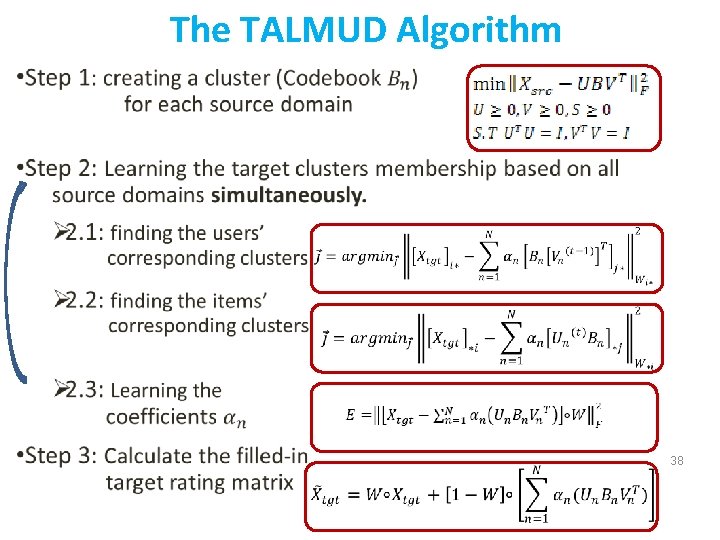

The TALMUD Algorithm

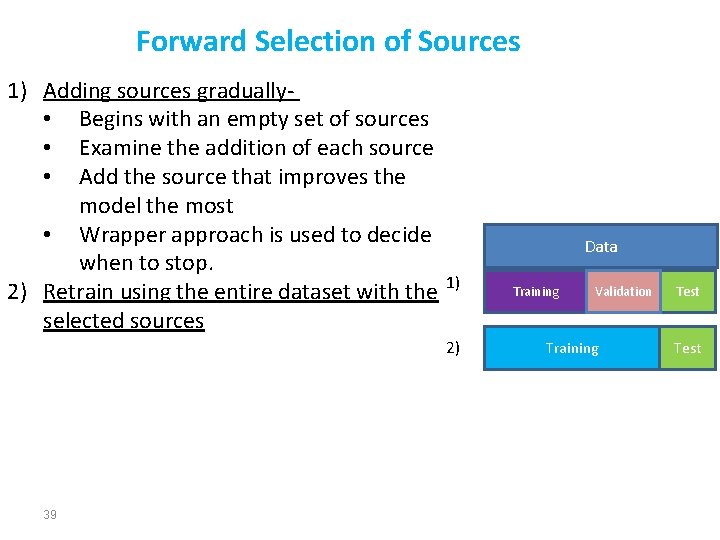

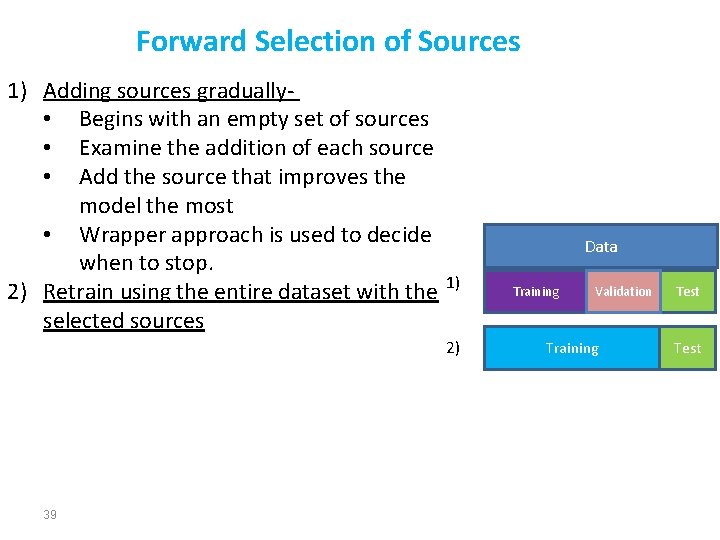

Forward Selection of Sources
1) Adding sources gradually:
• Begin with an empty set of sources
• Examine the addition of each source
• Add the source that improves the model the most
• A wrapper approach is used to decide when to stop
2) Retrain using the entire dataset with the selected sources
(Figure: in step 1 the data is split into training and validation sets; in step 2 into training and test sets.)
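Step 1 can be sketched as a greedy loop. `evaluate` is a hypothetical callback standing in for "train the model on these sources and return the validation error"; stopping as soon as no candidate lowers that error is one simple wrapper-style rule. The toy error function below is made up to mimic the curse of sources: each source brings a gain, but error also grows with the number of sources.

```python
def forward_selection(sources, evaluate):
    """Greedily add the source that lowers validation error most; stop when none helps."""
    selected, best_err = [], float("inf")   # start from an empty set of sources
    remaining = list(sources)
    while remaining:
        errs = {s: evaluate(selected + [s]) for s in remaining}  # try each addition
        cand = min(errs, key=errs.get)
        if errs[cand] >= best_err:           # wrapper stop: no addition improves validation
            break
        selected.append(cand)
        remaining.remove(cand)
        best_err = errs[cand]
    return selected, best_err

# Hypothetical validation-error model: per-source gains plus a penalty on subset size.
gains = {"Netflix": 0.10, "MovieLens": 0.06, "Jester": 0.01}

def toy_eval(subset):
    return 1.0 - sum(gains[s] for s in subset) + 0.03 * len(subset) ** 2

selected, err = forward_selection(gains, toy_eval)
print(selected, round(err, 2))   # ['Netflix'] 0.93
```

Step 2 would then retrain on the full training data using only the returned sources.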

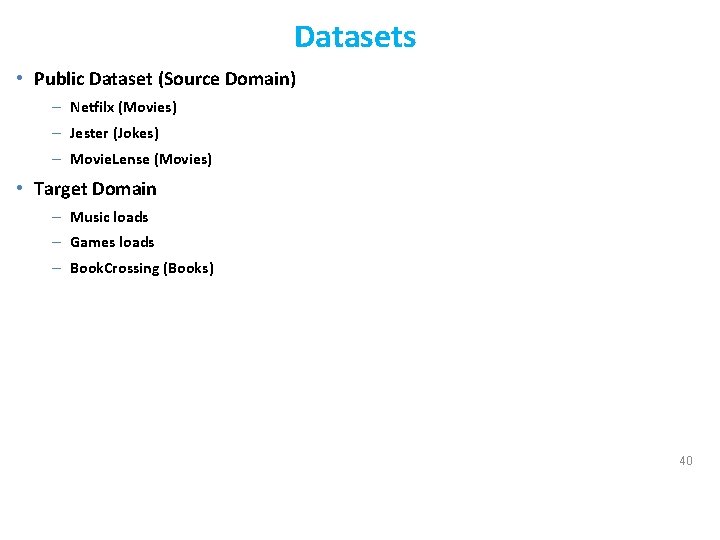

Datasets
• Public datasets (source domains)
– Netflix (movies)
– Jester (jokes)
– MovieLens (movies)
• Target domains
– Music Loads
– Games Loads
– BookCrossing (books)

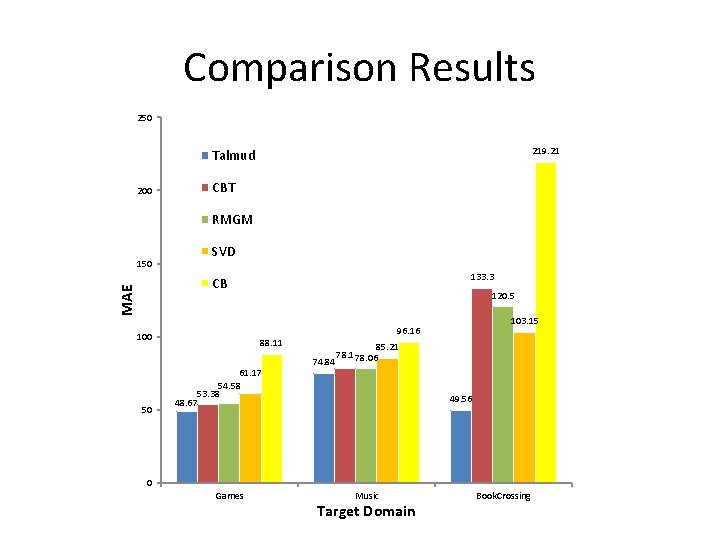

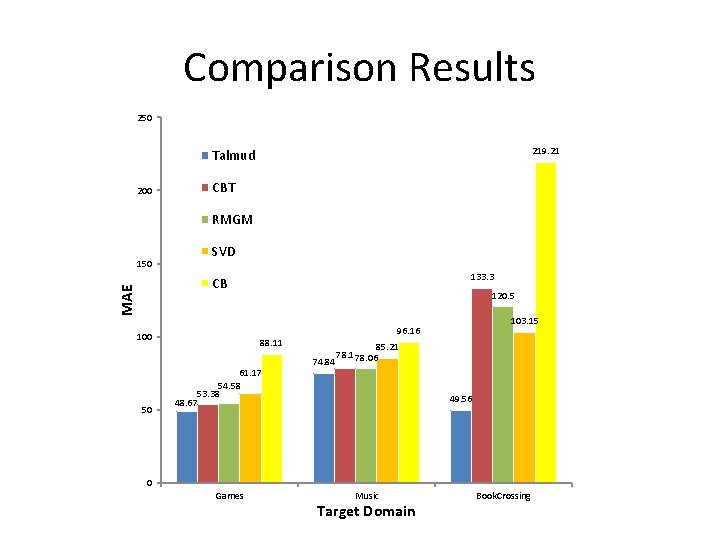

Comparison Results
(Chart: MAE of TALMUD, CBT, RMGM, SVD and CB on the target domains Games, Music and BookCrossing.)

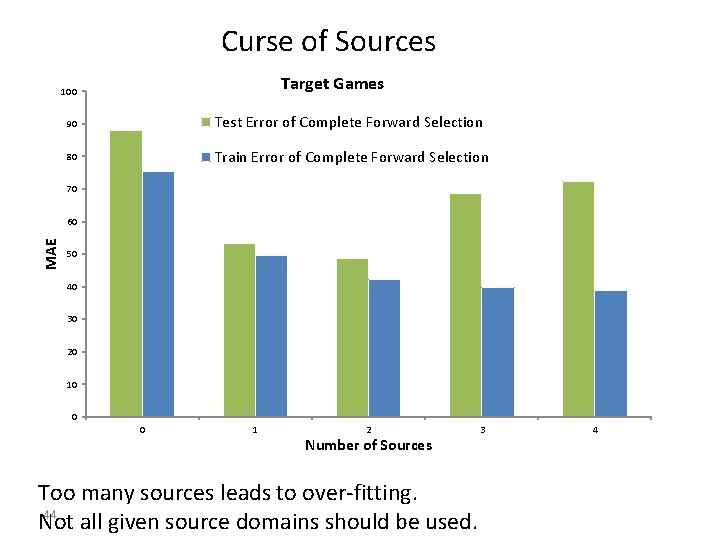

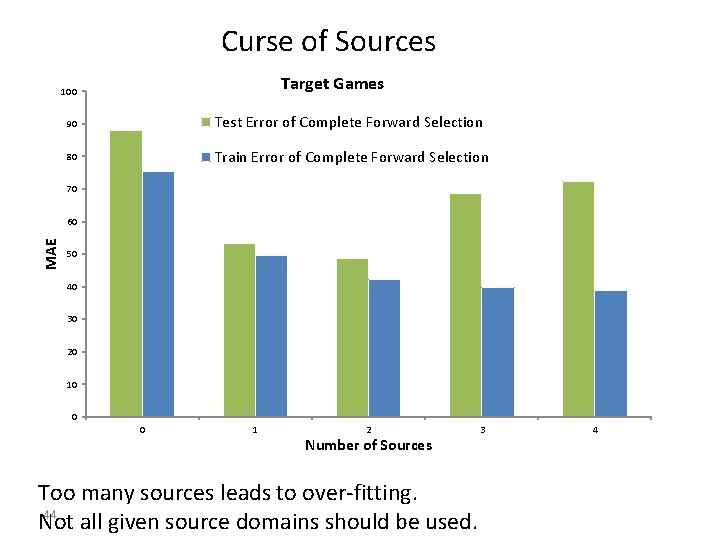

Curse of Sources
(Chart, target Games: train and test MAE of complete forward selection vs. number of sources, 0–4.)
• Too many sources lead to over-fitting.
• Not all given source domains should be used.

And then deep learning comes…

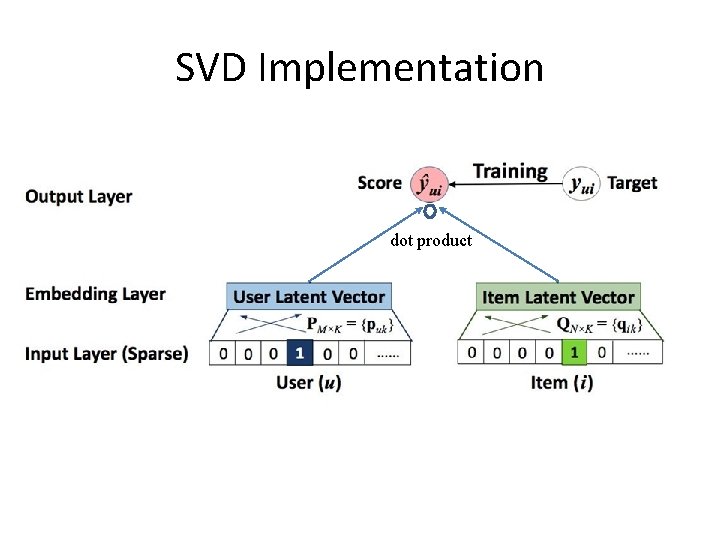

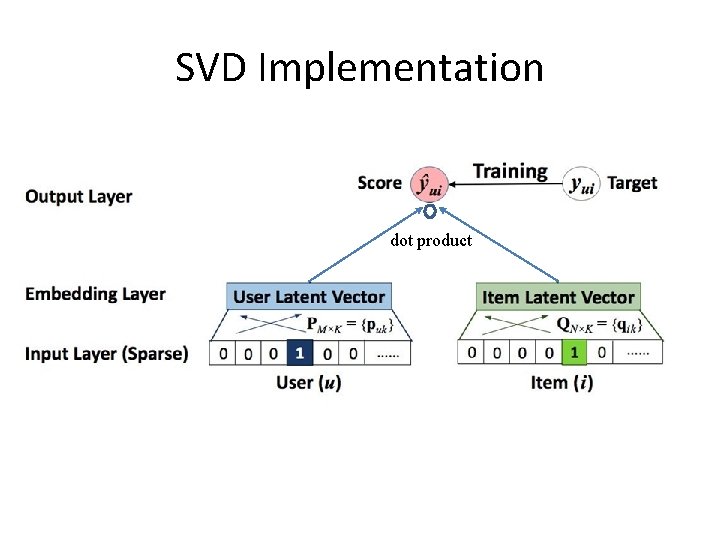

SVD Implementation: Dot Product

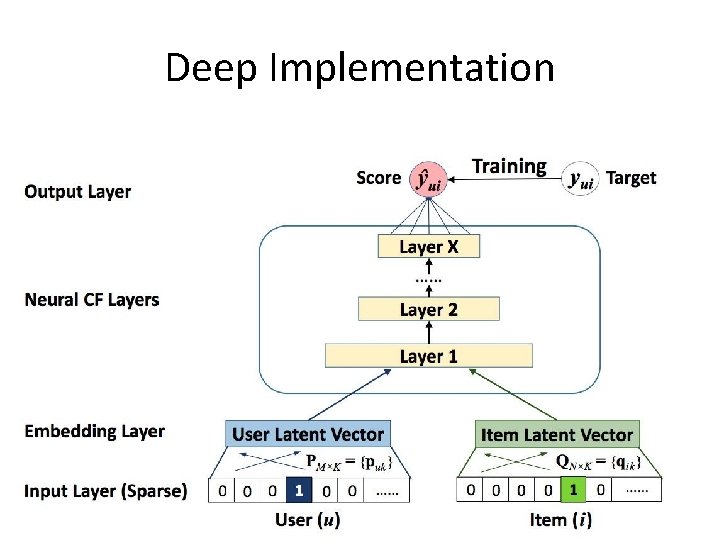

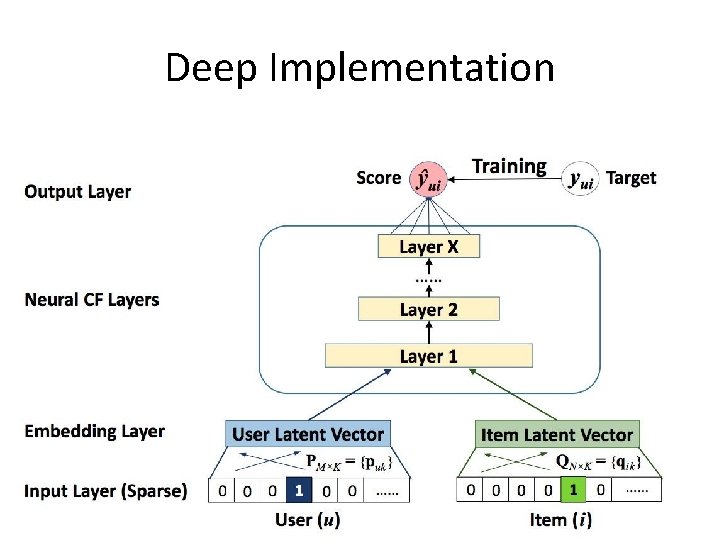

Deep Implementation

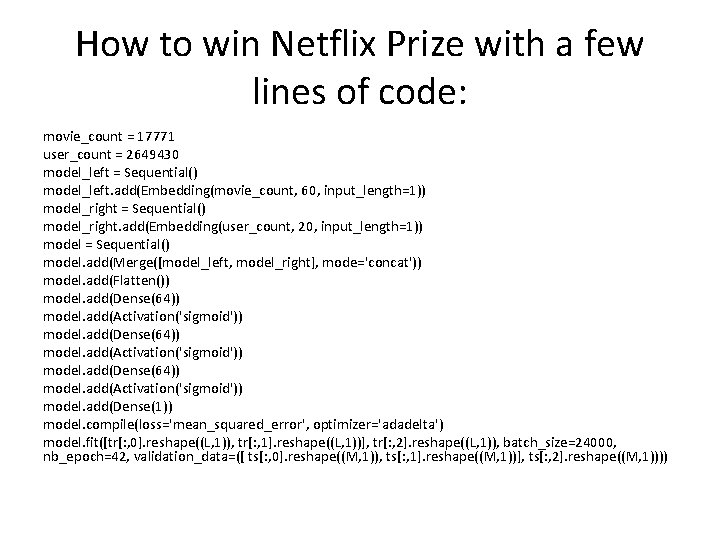

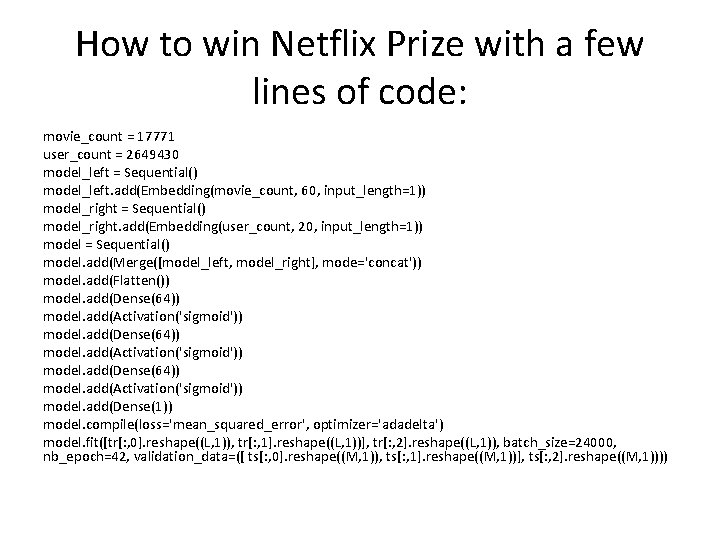
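The two slides above contrast how a score is produced from a user vector and an item vector. The sketch below uses random, untrained vectors and weights purely to show the two computations side by side: the SVD-style score is a dot product, while the deep variant concatenates the vectors and passes them through a small MLP with a sigmoid hidden layer (mirroring the Dense/sigmoid/Dense stack in the Keras code that follows). All sizes are arbitrary assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
n_factors = 4
p_u = rng.standard_normal(n_factors)     # hypothetical user vector
q_i = rng.standard_normal(n_factors)     # hypothetical item vector

# SVD implementation: the score is the dot product of the two latent vectors.
svd_score = float(p_u @ q_i)

# Deep implementation: concatenate, then a hidden sigmoid layer and a linear output.
W1 = rng.standard_normal((2 * n_factors, 8))
b1 = np.zeros(8)
w2 = rng.standard_normal(8)
b2 = 0.0

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

hidden = sigmoid(np.concatenate([p_u, q_i]) @ W1 + b1)
deep_score = float(hidden @ w2 + b2)
print(svd_score, deep_score)
```

The dot product can only combine factors pairwise and linearly, whereas the MLP can learn non-linear interactions between user and item features, at the cost of more parameters to train.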

How to win the Netflix Prize with a few lines of code:

```python
import keras
from keras import layers

movie_count = 17771
user_count = 2649430

# tr / ts: integer arrays with columns (movie_id, user_id, rating) for training / validation
movie_in = keras.Input(shape=(1,))
user_in = keras.Input(shape=(1,))
movie_vec = layers.Embedding(movie_count, 60)(movie_in)  # 60-dim movie embedding
user_vec = layers.Embedding(user_count, 20)(user_in)     # 20-dim user embedding

x = layers.Concatenate()([movie_vec, user_vec])          # replaces the removed Merge(mode='concat')
x = layers.Flatten()(x)
x = layers.Dense(64, activation="sigmoid")(x)
rating = layers.Dense(1)(x)                              # predicted rating

model = keras.Model(inputs=[movie_in, user_in], outputs=rating)
# Keras 1's Adadelta defaulted to lr=1.0; newer Keras versions default to 0.001
model.compile(loss="mean_squared_error",
              optimizer=keras.optimizers.Adadelta(learning_rate=1.0))
model.fit([tr[:, 0:1], tr[:, 1:2]], tr[:, 2:3],
          batch_size=24000, epochs=42,
          validation_data=([ts[:, 0:1], ts[:, 1:2]], ts[:, 2:3]))
```

Item2Vec: Item Embedding
• Represent each item with a low-dimensional vector
• Item similarity = vector similarity
• Learned from users' sessions
• Inspired by Word2Vec
– Words = items
– Sentences = users' sessions

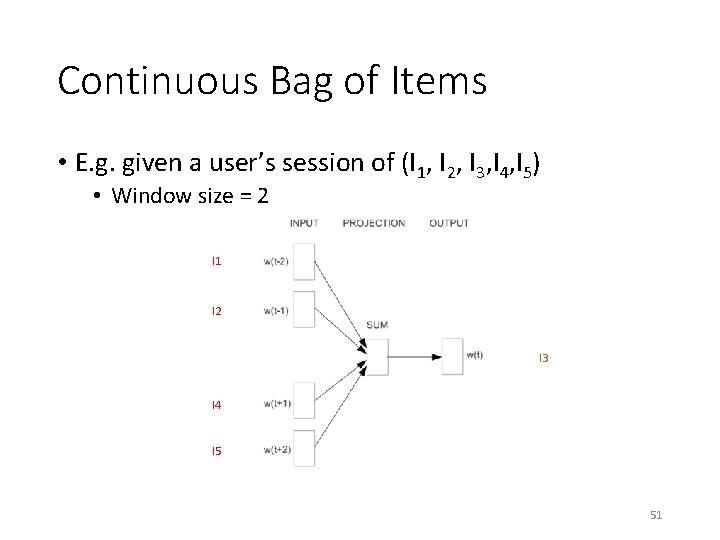

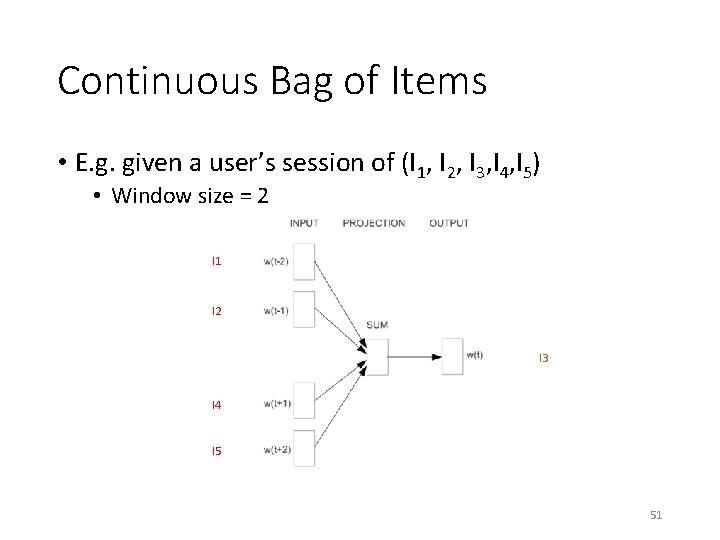

Continuous Bag of Items
• E.g., given a user's session (I1, I2, I3, I4, I5)
• Window size = 2
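A minimal sketch of how a session becomes Continuous Bag of Items training examples: for each position, the items within `window` steps on either side form the context, and the item at that position is the target to predict. Here `window` counts items on each side, which is one common convention; the helper name is illustrative.

```python
def cbow_pairs(session, window=2):
    """(context items, target item) training pairs from one user session."""
    pairs = []
    for t, target in enumerate(session):
        context = session[max(0, t - window):t] + session[t + 1:t + 1 + window]
        pairs.append((context, target))
    return pairs

for context, target in cbow_pairs(["I1", "I2", "I3", "I4", "I5"], window=2):
    print(target, "<-", context)
# e.g. I3 <- ['I1', 'I2', 'I4', 'I5']
```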

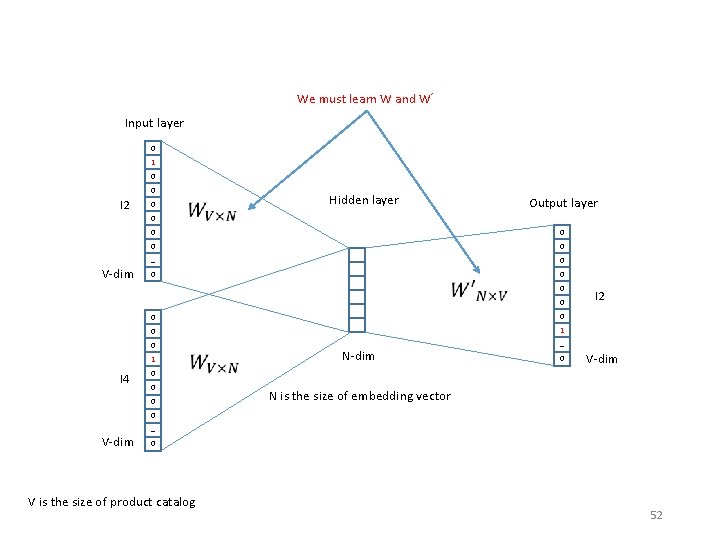

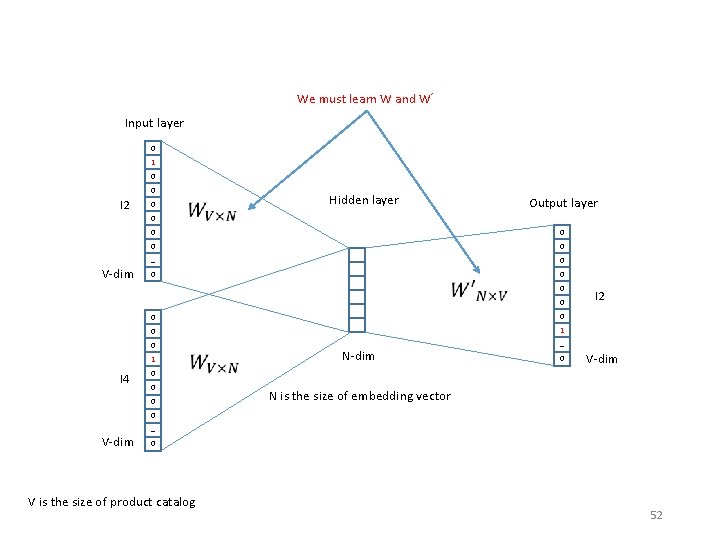

We must learn W and W'
(Figure: the CBOW network. Input layer: V-dim one-hot vectors for the context items I2 and I4; hidden layer: N-dim; output layer: V-dim. N is the size of the embedding vector; V is the size of the product catalog.)
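A toy NumPy sketch of the network on this slide, with hypothetical sizes V = 5 and N = 3: multiplying a one-hot vector by W selects that item's row (its embedding), the hidden layer averages the context rows, W' maps the hidden layer to one score per catalog item, and a softmax turns the scores into probabilities. W and W' are learned by gradient descent on the cross-entropy of the true target; this full-softmax version is for illustration only, since practical implementations use approximations such as negative sampling.

```python
import numpy as np

V, N = 5, 3                                # V = catalog size, N = embedding size (toy values)
rng = np.random.default_rng(0)
W = 0.5 * rng.standard_normal((V, N))      # input -> hidden: row j is item j's embedding
W_out = 0.5 * rng.standard_normal((N, V))  # hidden -> output (W' on the slide)

def one_hot(j):
    x = np.zeros(V)
    x[j] = 1.0
    return x

def softmax(z):
    e = np.exp(z - z.max())                # subtract the max for numerical stability
    return e / e.sum()

def forward(context_ids):
    # x_j @ W selects row j of W, so the hidden layer is the mean of the context rows.
    h = np.mean([one_hot(j) @ W for j in context_ids], axis=0)
    return h, softmax(h @ W_out)           # probability of each catalog item being the target

def train_step(context_ids, target_id, lr=0.1):
    """One gradient-descent step on the loss -log p(target | context)."""
    h, probs = forward(context_ids)
    d_scores = probs - one_hot(target_id)  # gradient w.r.t. the output scores
    d_h = W_out @ d_scores                 # gradient w.r.t. the hidden layer (before updating W')
    W_out[:] -= lr * np.outer(h, d_scores)
    W[context_ids] -= lr * d_h / len(context_ids)
    return -np.log(probs[target_id])

# Context (I2, I4) -> target I3, using 0-based ids 1, 3 -> 2.
losses = [train_step([1, 3], 2) for _ in range(100)]
print(round(losses[0], 3), "->", round(losses[-1], 3))
print(forward([1, 3])[1].round(2))
```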

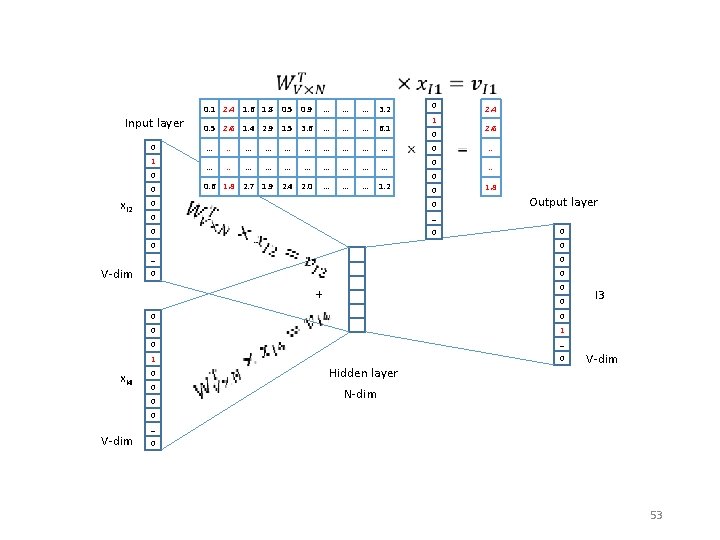

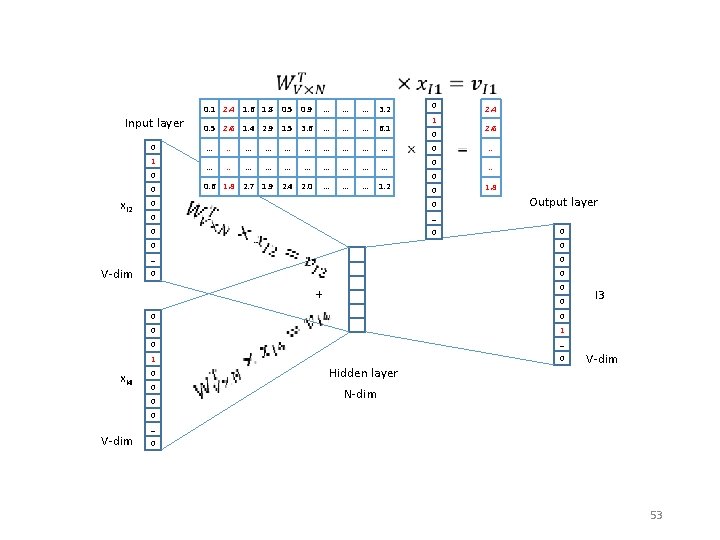

(Figure: the one-hot context vectors x_I2 and x_I4 are multiplied by the weight matrix W; the resulting N-dim vectors form the hidden layer, which feeds the V-dim output layer for the target item I3.)

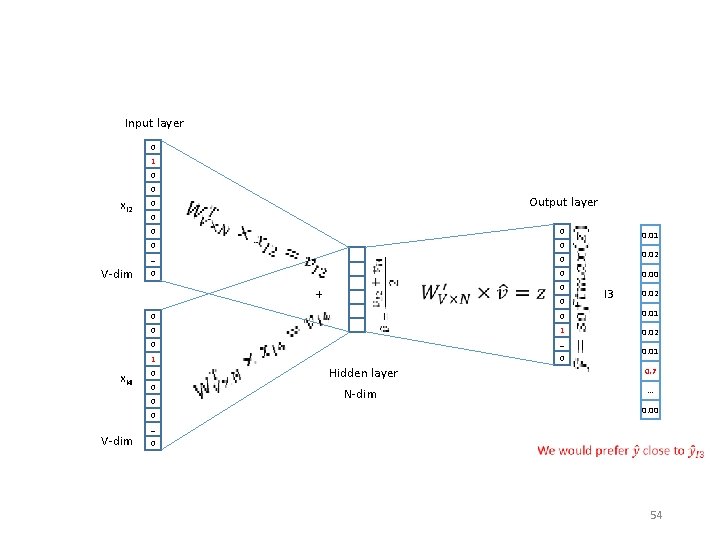

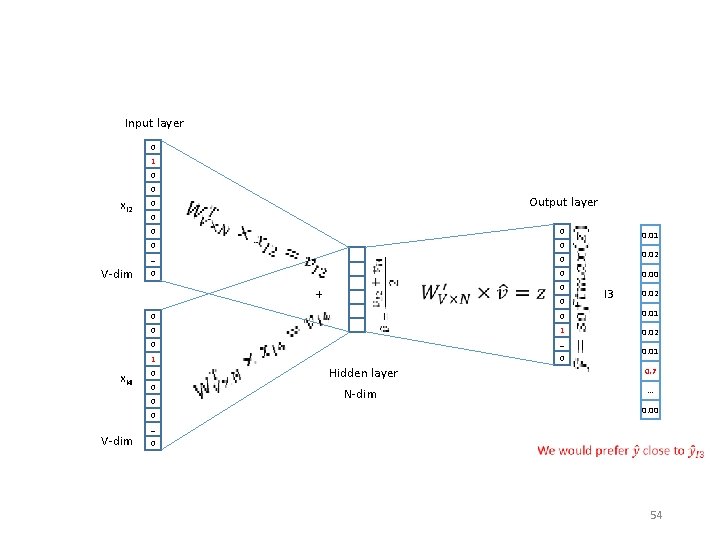

(Figure: given the context x_I2 and x_I4, the V-dim output layer assigns a probability to every item in the catalog; the target item is I3.)

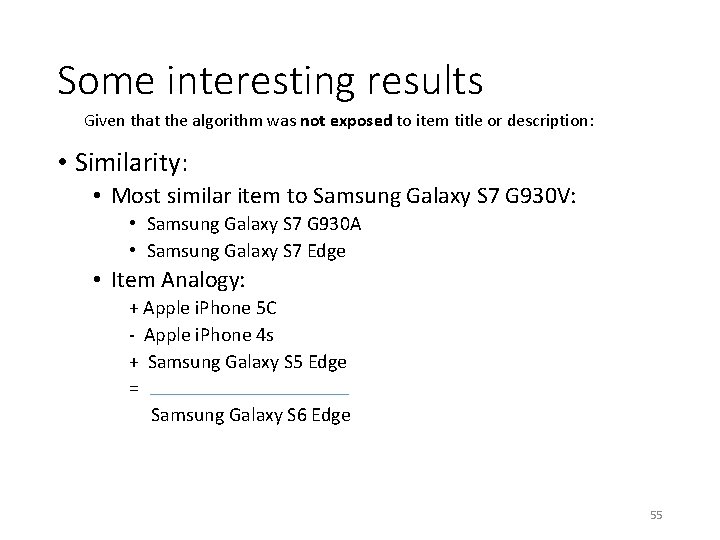

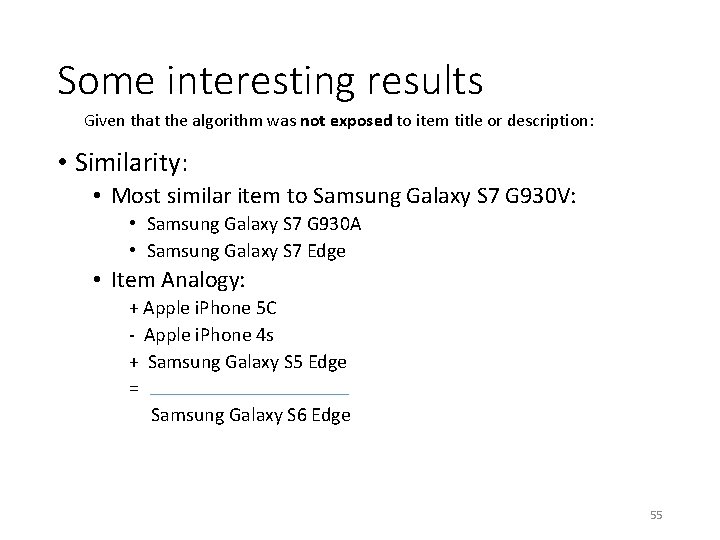

Some Interesting Results
Given that the algorithm was not exposed to item titles or descriptions:
• Similarity:
– Most similar items to Samsung Galaxy S7 G930V:
• Samsung Galaxy S7 G930A
• Samsung Galaxy S7 Edge
• Item analogy:
Apple iPhone 5C - Apple iPhone 4s + Samsung Galaxy S5 Edge = Samsung Galaxy S6 Edge
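The similarity lookup is a nearest-neighbor search by cosine similarity over the learned item vectors. The 3-dimensional vectors below are hypothetical, hand-picked for illustration and not learned from data; real Item2Vec embeddings are learned and much larger.

```python
import numpy as np

# Hypothetical item vectors, chosen only to illustrate the lookup.
emb = {
    "Galaxy S7 G930V": np.array([0.9, 0.1, 0.3]),
    "Galaxy S7 G930A": np.array([0.85, 0.15, 0.35]),
    "Galaxy S7 Edge": np.array([0.8, 0.2, 0.5]),
    "iPhone 4s": np.array([0.1, 0.9, 0.2]),
}

def most_similar(query, k=2):
    """Rank the other items by cosine similarity to the query item's vector."""
    q = emb[query]
    sims = {name: float(v @ q / (np.linalg.norm(v) * np.linalg.norm(q)))
            for name, v in emb.items() if name != query}
    return sorted(sims, key=sims.get, reverse=True)[:k]

print(most_similar("Galaxy S7 G930V"))   # ['Galaxy S7 G930A', 'Galaxy S7 Edge']
```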

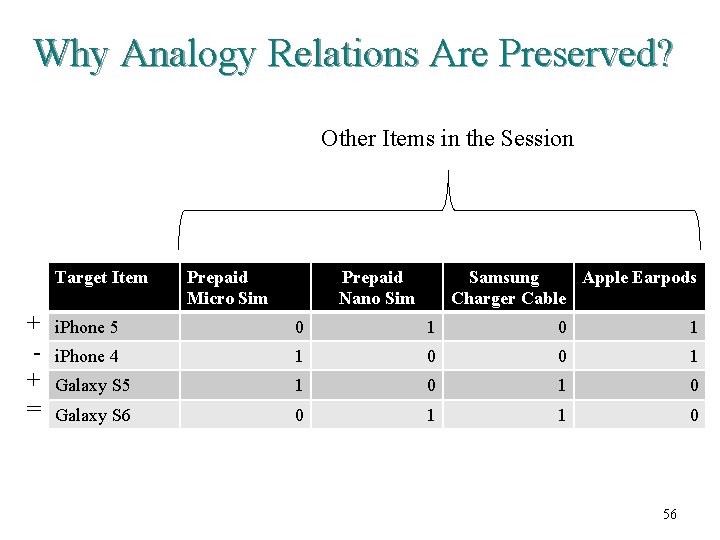

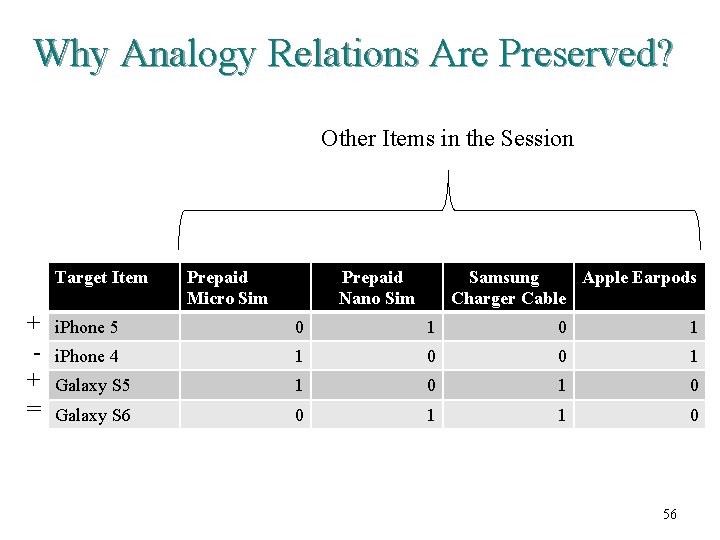

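Why Are Analogy Relations Preserved?
Other items in the session for each target item:

| Target item | Prepaid Micro SIM | Prepaid Nano SIM | Samsung Charger Cable | Apple EarPods |
|---|---|---|---|---|
| iPhone 5 | 0 | 1 | 0 | 1 |
| iPhone 4 | 1 | 0 | 0 | 1 |
| Galaxy S5 | 1 | 0 | 1 | 0 |
| Galaxy S6 | 0 | 1 | 1 | 0 |

iPhone 5 - iPhone 4 + Galaxy S5 = Galaxy S6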
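The analogy can be checked with vector arithmetic, assuming the slide's table encodes each phone by the accessories that co-occur in its sessions: the iPhone 4 and Galaxy S5 with the prepaid micro SIM, the iPhone 5 and Galaxy S6 with the prepaid nano SIM, the iPhones with Apple EarPods and the Galaxies with the Samsung charger cable. Since embeddings are learned from such co-occurrences, the same offsets tend to carry over to the learned vectors.

```python
import numpy as np

# Assumed co-occurrence vectors:
# [Prepaid Micro SIM, Prepaid Nano SIM, Samsung Charger Cable, Apple EarPods]
items = {
    "iPhone 5": np.array([0, 1, 0, 1]),
    "iPhone 4": np.array([1, 0, 0, 1]),
    "Galaxy S5": np.array([1, 0, 1, 0]),
    "Galaxy S6": np.array([0, 1, 1, 0]),
}

def cosine(a, b):
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

# "iPhone 4 -> iPhone 5" swaps micro SIM for nano SIM; apply the same swap to Galaxy S5.
query = items["iPhone 5"] - items["iPhone 4"] + items["Galaxy S5"]
best = max(items, key=lambda name: cosine(query, items[name]))
print(query, best)   # [0 1 1 0] Galaxy S6
```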

Beyond Accuracy: Future Trends in RecSys
• Diversity & serendipity
• Incorporating price in RecSys models
• Explainable RecSys
• Counteracting the effect of the existing RecSys and isolating the users' organic browsing
• Knowledge-based RecSys

Lior rokach

Lior rokach Cct theory

Cct theory Introduction to recommender systems

Introduction to recommender systems Recommender systems: an introduction

Recommender systems: an introduction Weighted hybrid recommender systems

Weighted hybrid recommender systems Lior arazi

Lior arazi Unsent message to lior

Unsent message to lior Avi lior

Avi lior Stratasys

Stratasys Literary devices characterization

Literary devices characterization How long is four score and seven years?

How long is four score and seven years? After twenty years סיכום

After twenty years סיכום Andre courreges biography

Andre courreges biography Twenty years before

Twenty years before Four score and seven years ago our fathers

Four score and seven years ago our fathers Latent factors recommender system

Latent factors recommender system Recommender relationship

Recommender relationship Goat years to human years

Goat years to human years 300 solar years to lunar years

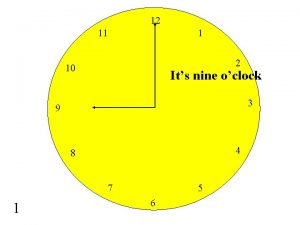

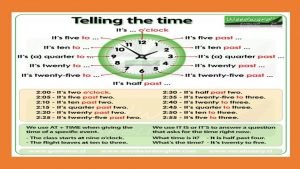

300 solar years to lunar years It's twenty to nine

It's twenty to nine Games to.play in the car

Games to.play in the car 20 questions game

20 questions game Twenty point program

Twenty point program It's twenty to eight

It's twenty to eight Twenty to six

Twenty to six It's twenty past nine

It's twenty past nine Half past sixteen

Half past sixteen A brief description

A brief description Subject questions

Subject questions I can easier teach twenty

I can easier teach twenty Shaving mirror for survival

Shaving mirror for survival In the twenty-first century, sales leaders are

In the twenty-first century, sales leaders are Twenty one pilots: guns for hands

Twenty one pilots: guns for hands Twenty-five to six

Twenty-five to six Twenty questions christmas

Twenty questions christmas A small group usually has between three and twenty people.

A small group usually has between three and twenty people. Six past half

Six past half It's a quarter to two

It's a quarter to two It's twenty-five to ten

It's twenty-five to ten Fiona told the truth to julian change into passive voice

Fiona told the truth to julian change into passive voice Rules of the game questions and answers

Rules of the game questions and answers Twenty first century trends in entrepreneurship

Twenty first century trends in entrepreneurship Twenty questions

Twenty questions 20 questions

20 questions Split personality poe

Split personality poe Aa twenty questions

Aa twenty questions Yourt ube

Yourt ube Decision support systems and intelligent systems

Decision support systems and intelligent systems Principles of complex systems for systems engineering

Principles of complex systems for systems engineering Embedded systems vs cyber physical systems

Embedded systems vs cyber physical systems Engineering elegant systems: theory of systems engineering

Engineering elegant systems: theory of systems engineering Hcsrn

Hcsrn Esri redlands, ca

Esri redlands, ca