
Recommender Systems Martin Ester Simon Fraser University School of Computing Science CMPT 884 Spring 2009 CMPT 884, SFU, Martin Ester, 1 -09 104

Recommender Systems: Outline
• Introduction: motivation, applications, issues
• Collaborative filtering: user-based, item-based, challenges
• Trust-based recommendation: deterministic, random walks, challenges
• Model-based recommendation
[Konstan 2008] [Cohen 2002]

Recommender Systems: Introduction
• search engine users just type in a few keywords
• the search engine overwhelms the user with a flood of results
• the ranking mechanism is based on the similarity between query keywords and web pages and on the prestige of pages
• the search engine’s answers do not take into account user feedback or users’ preferences
Information needs are more complex than keywords or topics: quality and taste

Recommender Systems: Introduction
• Users are not willing to spend a lot of time specifying their personal information needs
• Recommender systems automatically identify information or products relevant for a given user, learning from available data
• Data can be the transactions of all users / customers of a website or the profile of an individual
  - e.g., “users who bought this book also bought ...” (Amazon.com)

Recommender Systems: Personalization Level
• Generic: everyone receives the same recommendations
• Demographic: matches a demographic group
• Personalized: matches an individual, everybody gets different recommendations
• Ephemeral: matches current activity
• Persistent: matches long-term interests

Recommender Systems: Types of Systems
• Filtering interfaces: e-mail filters, clipping services
• Recommendation interfaces: suggestion lists, “top-n”, offers and promotions
• Prediction interfaces: evaluate candidates, predicted ratings

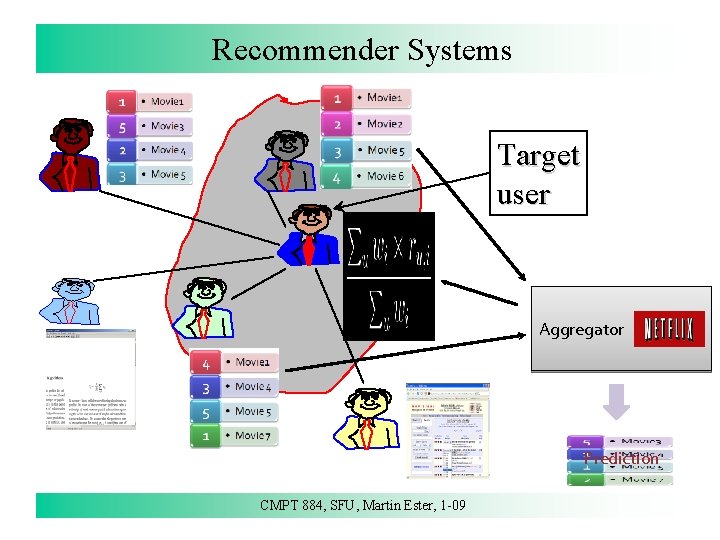

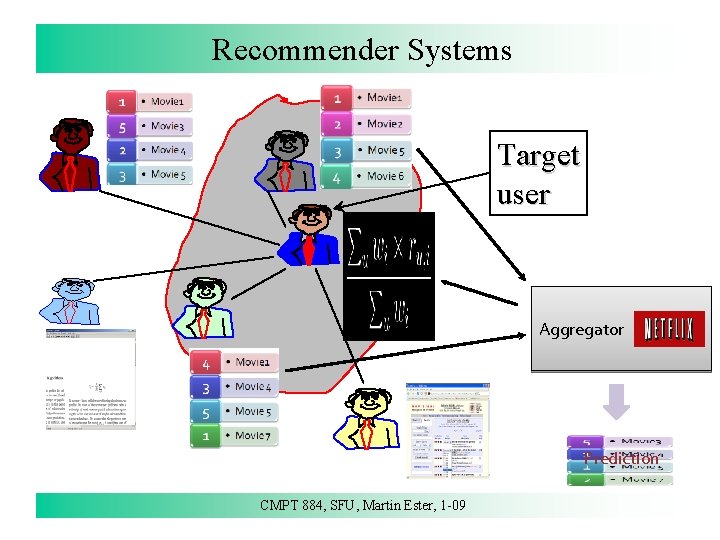

Recommender Systems: Collaborative Filtering
• Main idea
  - users rate items
  - users are correlated with other users
  - personal predictions for unrated items
• Nearest-neighbor approach
  - find people with a history of agreement
  - aggregate their ratings to predict the rating of the target user
  - assumes stable tastes
  - employs data about the target user and other users

Recommender Systems
[figure labels: Target user, Aggregator, Prediction]

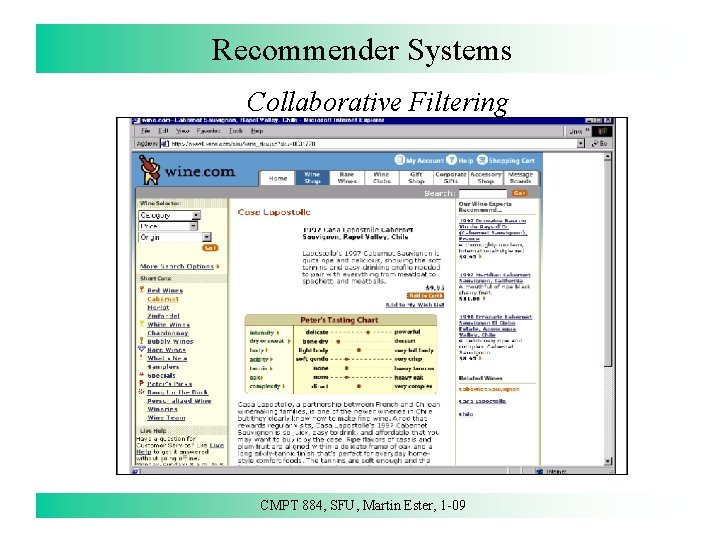

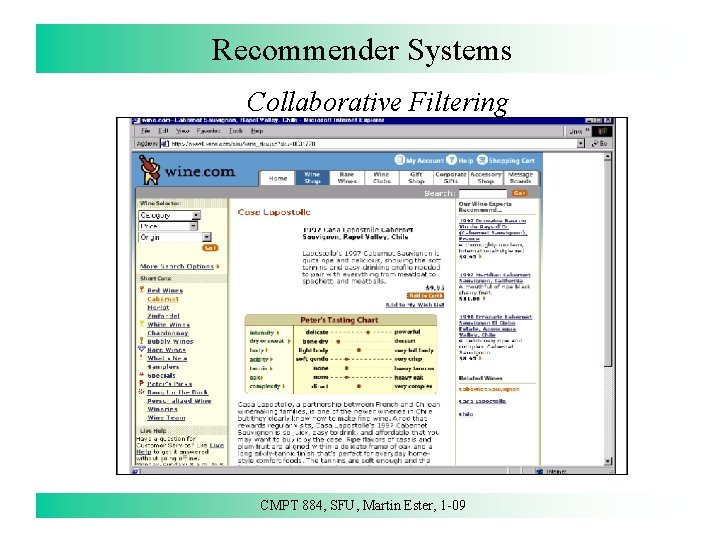

Recommender Systems: Collaborative Filtering

Recommender Systems: Collaborative Filtering
• Recommendation task 1: predicting the rating on a target item for a given user
  - e.g., predicting John’s rating on the movie Star Wars

Recommender Systems: Collaborative Filtering
• Recommendation task 2: recommending a list of items to a given user
  - e.g., recommending a list of top movies for John to watch

Recommender Systems: Applications
• Movie recommendations
• Book recommendations
• Recommendation of friends

Recommender Systems: Privacy and Trustworthiness
• Who knows what about me?
  - personal information revealed
  - identity
• Is the recommendation honest?
  - biases built in by the operator, e.g. the operator wants to sell “old hats” or prefers ads with higher bids
• Vulnerability to external manipulation (fraud)
  - e.g., inserting fraudulent user profiles which rate my product highly

Collaborative Filtering: Introduction
[figure labels: Rating Matrix, Items, Users, Ratings, Similar user]
What is Joe’s rating of Blimp and of Rocky XV?

Collaborative Filtering: Example

Collaborative Filtering: Definitions
• $v_{i,j}$: vote of user i on item j
• $I_i$: set of items for which user i has voted
• the mean vote of user i is $\bar{v}_i = \frac{1}{|I_i|} \sum_{j \in I_i} v_{i,j}$
• the predicted vote for active user a on target item j is a weighted sum of the votes on j by the n “similar” users, scaled by a normalizer and using the weights of the n similar users
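The prediction formula itself did not survive extraction. One common way to write such a weighted sum, consistent with the slide's annotations (a normalizer and the weights of the n similar users), is the mean-centered form below. The mean-centering and the symbols $p_{a,j}$, $w(a,i)$ and $\kappa$ are assumptions introduced for illustration, not recovered from the slide.

```latex
p_{a,j} = \bar{v}_a + \kappa \sum_{i=1}^{n} w(a,i)\,\bigl(v_{i,j} - \bar{v}_i\bigr),
\qquad
\kappa = \frac{1}{\sum_{i=1}^{n} \lvert w(a,i) \rvert}
```

Here $w(a,i)$ is the similarity weight between the active user a and neighbor i, and $\kappa$ normalizes the absolute weights so that they sum to one.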

Collaborative Filtering: Definitions
• K-nearest neighbor
• Pearson correlation coefficient
• Cosine distance
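The three notions listed above can be tied together in a small sketch of user-based prediction. It is a minimal illustration under stated assumptions, not the formulas from the slides: the function names, the toy votes, the choice of user means over all voted items, and the selection of the n neighbors with the largest absolute weight are all hypothetical.

```python
from math import sqrt

def mean_vote(votes):
    """Mean vote of one user over all items the user has voted on."""
    return sum(votes.values()) / len(votes)

def pearson(votes_a, votes_i):
    """Pearson correlation over the items both users voted on."""
    common = set(votes_a) & set(votes_i)
    if len(common) < 2:
        return 0.0
    mean_a, mean_i = mean_vote(votes_a), mean_vote(votes_i)
    num = sum((votes_a[j] - mean_a) * (votes_i[j] - mean_i) for j in common)
    den = sqrt(sum((votes_a[j] - mean_a) ** 2 for j in common)
               * sum((votes_i[j] - mean_i) ** 2 for j in common))
    return num / den if den else 0.0

def cosine(votes_a, votes_i):
    """Cosine similarity, treating each user's votes as a sparse vector."""
    common = set(votes_a) & set(votes_i)
    num = sum(votes_a[j] * votes_i[j] for j in common)
    den = (sqrt(sum(v * v for v in votes_a.values()))
           * sqrt(sum(v * v for v in votes_i.values())))
    return num / den if den else 0.0

def predict(votes, a, j, n=2, sim=pearson):
    """Mean-centered weighted sum over the n most similar users who voted on j."""
    candidates = [(sim(votes[a], votes[i]), i)
                  for i in votes if i != a and j in votes[i]]
    # Assumption: "nearest" means largest absolute weight.
    top = sorted(candidates, key=lambda p: abs(p[0]), reverse=True)[:n]
    norm = sum(abs(w) for w, _ in top)
    if norm == 0:
        return mean_vote(votes[a])  # fall back to the user's own mean
    offset = sum(w * (votes[i][j] - mean_vote(votes[i])) for w, i in top)
    return mean_vote(votes[a]) + offset / norm

# Hypothetical toy data (not from the slides).
votes = {
    "Joe": {"Airplane": 5, "Matrix": 1, "Hidalgo": 4},
    "Ann": {"Airplane": 5, "Matrix": 1, "Hidalgo": 4, "Blimp": 5},
    "Bob": {"Airplane": 1, "Matrix": 5, "Hidalgo": 2, "Blimp": 1},
}
joe_blimp = predict(votes, "Joe", "Blimp")
```

In this toy data Ann agrees with Joe and Bob disagrees, so both neighbors push the prediction for Blimp above Joe's mean vote.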


Collaborative Filtering: Evaluation [Herlocker 2004]
• split users into train/test sets
• for each user a in the test set:
  - split a’s votes into observed (I) and to-predict (P)
  - measure the average absolute deviation between predicted and actual votes in P
  - alternatively, measure the squared deviation between predicted and actual votes in P
• average the error measure over all test users: MAE or RMSE
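The protocol above can be sketched in a few lines. This is an illustration only: the function names are hypothetical, and taking RMSE as the square root of the per-user mean squared errors averaged over test users is one possible reading of "average the error measure over all test users".

```python
from math import sqrt

def user_errors(predicted, actual):
    """Mean absolute and mean squared deviation over one user's to-predict set P."""
    diffs = [predicted[j] - actual[j] for j in actual]
    mae = sum(abs(d) for d in diffs) / len(diffs)
    mse = sum(d * d for d in diffs) / len(diffs)
    return mae, mse

def evaluate(test_users):
    """test_users: list of (predicted, actual) dict pairs, one per test user."""
    per_user = [user_errors(p, a) for p, a in test_users]
    mae = sum(m for m, _ in per_user) / len(per_user)
    rmse = sqrt(sum(s for _, s in per_user) / len(per_user))
    return mae, rmse

# Hypothetical predictions and held-out votes for two test users.
test_users = [
    ({"x": 4, "y": 2}, {"x": 5, "y": 2}),
    ({"z": 1}, {"z": 3}),
]
mae, rmse = evaluate(test_users)
```

RMSE penalizes large individual errors more heavily than MAE, which is why the second user's error of 2 dominates the RMSE here.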

Collaborative Filtering: Evaluation
• There is a trade-off between precision and recall
• Measure also the recall / coverage, i.e. the percentage of (a, i) pairs for which the method can make a recommendation
• F-measure considers both precision and recall
[formula label: max squared error]
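As a small sketch of these measures: coverage follows the definition on the slide, while the F-measure is written as the usual harmonic mean of precision and recall, which is an assumption because the slide's formula was not recovered. Precision is taken as an input since the slide does not define how it is derived from the error. Function names are hypothetical.

```python
def coverage(predictions):
    """Fraction of (a, i) pairs for which a prediction could be made.

    predictions: list with one entry per (a, i) pair, None where the
    method could not make a recommendation.
    """
    if not predictions:
        return 0.0
    return sum(p is not None for p in predictions) / len(predictions)

def f_measure(precision, recall):
    """Harmonic mean of precision and recall (0 if both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Hypothetical example: two of four pairs could be predicted.
cov = coverage([4.0, None, 3.5, None])
f = f_measure(0.8, cov)
```

A method that predicts only when it is very sure can reach high precision with low coverage, and the F-measure makes that trade-off visible in a single number.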

Collaborative Filtering: Evaluation
• so far, only comparison against ground truth
• in industry, one wants to measure the business profit
• user surveys
• in an online system
  - measure click-through rates
  - measure add-on sales

Collaborative Filtering: Challenges
• the user-item rating matrix is very sparse
  - typically 99% of the entries are unknown
  → dimensionality reduction, item-item based CF
• cannot make (accurate) recommendations for cold start users, who have recently joined the system and have rated only very few items (typically 50% of users)
  → trust-based recommendation

Collaborative Filtering: Challenges
• the larger the user community
  - the more variance among the ratings
  - the more the ratings converge to the mean value
  → cluster users and use only the corresponding cluster to make a recommendation
• cannot compute the confidence of a recommendation
  - the system does not know its limits
  → probabilistic methods
• vulnerable to fraud
  - copy a user profile and become the most similar user
  → trust-based recommendation

Collaborative Filtering: Challenges
• need to explain recommendations
• how to reward serendipity in the evaluation?
  - recommendations should not all be of the same kind
• how to evaluate a set of recommendations?
• how to produce the best sequence of recommendations?

Collaborative Filtering

Collaborative Filtering
• leads to a denser, lower-dimensional rating matrix
• can alternatively use Singular Value Decomposition (SVD) or Latent Semantic Indexing (LSI)


Collaborative Filtering: Item-Item Collaborative Filtering [Sarwar et al 2001]
• Many applications have many more users (customers) than items (products)
• Many customers have no similar customers
• Most products have similar products
• Make a recommendation by considering the ratings of the active user for similar products
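The last bullet can be sketched as follows. This is a minimal illustration, assuming plain cosine similarity between item columns and a similarity-weighted average of the active user's own ratings; the function names, the parameter k and the toy ratings are hypothetical and not taken from the slides.

```python
from math import sqrt

def item_cosine(ratings, x, y):
    """Cosine similarity of items x and y over users who rated both."""
    users = [u for u in ratings if x in ratings[u] and y in ratings[u]]
    num = sum(ratings[u][x] * ratings[u][y] for u in users)
    den = (sqrt(sum(ratings[u][x] ** 2 for u in users))
           * sqrt(sum(ratings[u][y] ** 2 for u in users)))
    return num / den if den else 0.0

def predict_item_based(ratings, a, target, k=2):
    """Weighted average of a's ratings on the k items most similar to target."""
    sims = [(item_cosine(ratings, target, j), j)
            for j in ratings[a] if j != target]
    top = sorted((s for s in sims if s[0] > 0), reverse=True)[:k]
    norm = sum(w for w, _ in top)
    if norm == 0:
        return None  # no similar item rated by the active user
    return sum(w * ratings[a][j] for w, j in top) / norm

# Hypothetical toy data: item A behaves like item B and unlike item C.
ratings = {
    "u1": {"A": 5, "B": 5, "C": 1},
    "u2": {"A": 4, "B": 4, "C": 2},
    "a": {"B": 5, "C": 1},
}
pred_k1 = predict_item_based(ratings, "a", "A", k=1)
pred_k2 = predict_item_based(ratings, "a", "A", k=2)
```

Because the prediction uses only the active user's own ratings, it still works when no other user has a similar profile, as long as the target item has similar items.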

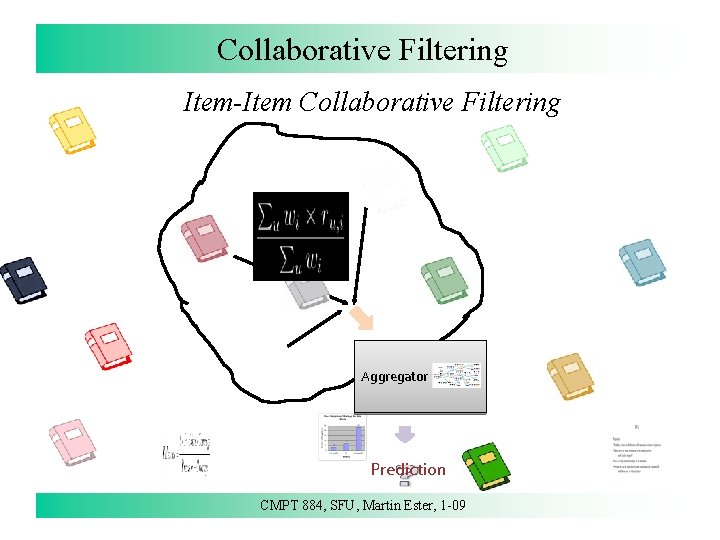

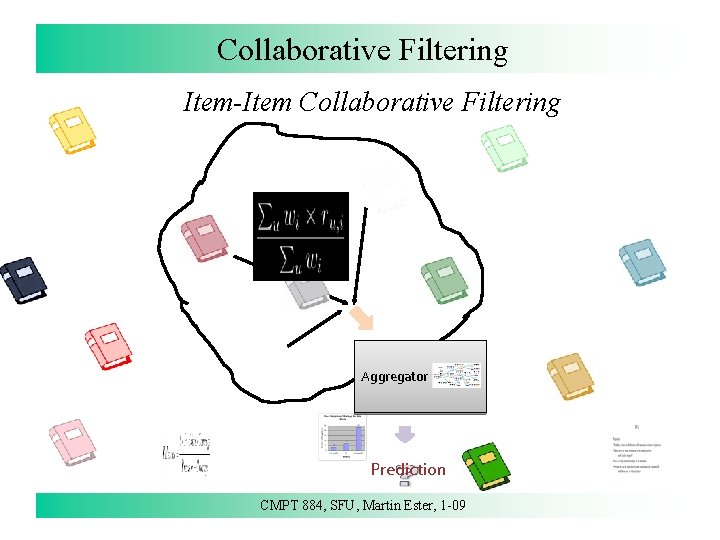

Collaborative Filtering: Item-Item Collaborative Filtering
[figure labels: Aggregator, Prediction]

Collaborative Filtering: Explanations
• Simple visual representations of neighbors’ ratings
• Statement of strong previous performance
  - “MovieLens has predicted correctly 80% of the time for you”

Collaborative Filtering: Explanations
• Complex representations are not accepted by users, e.g.
  - more than one dimension
  - any use of statistical terminology such as correlation, variance, etc.

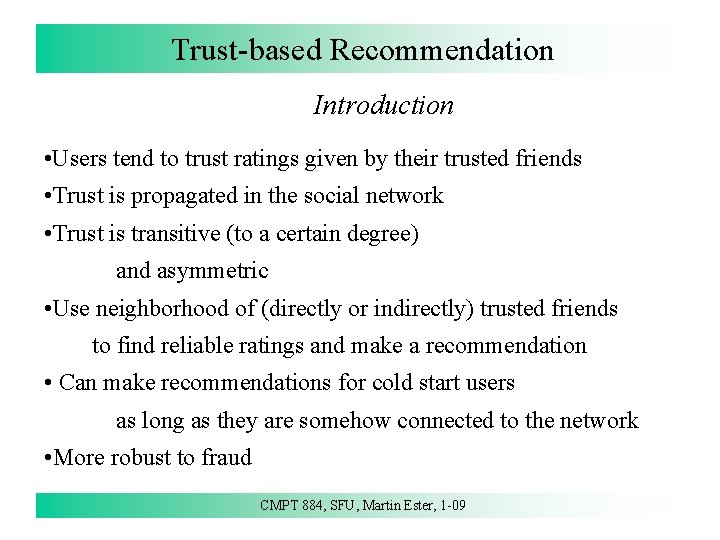

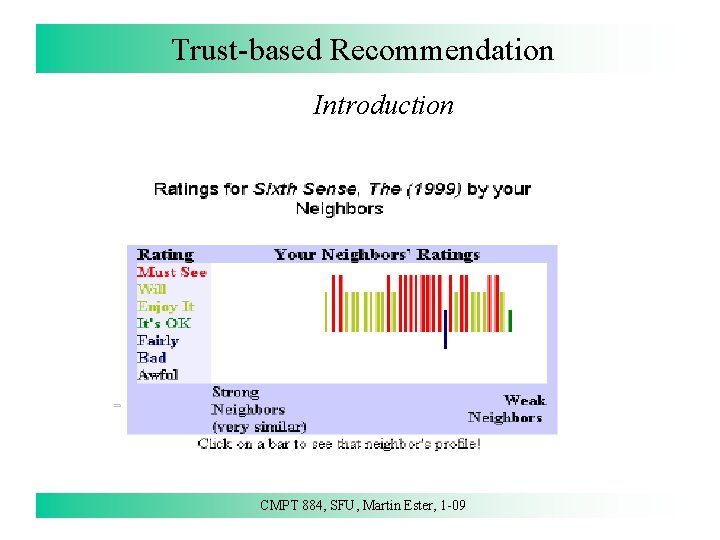

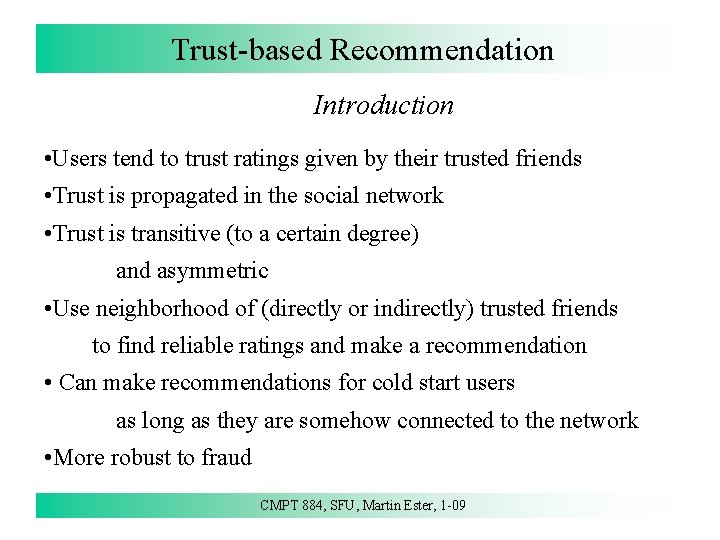

Trust-based Recommendation: Introduction
• Users tend to trust ratings given by their trusted friends
• Trust is propagated in the social network
• Trust is transitive (to a certain degree) and asymmetric
• Use the neighborhood of (directly or indirectly) trusted friends to find reliable ratings and make a recommendation
• Can make recommendations for cold start users as long as they are somehow connected to the network
• More robust to fraud

Trust-based Recommendation: Introduction

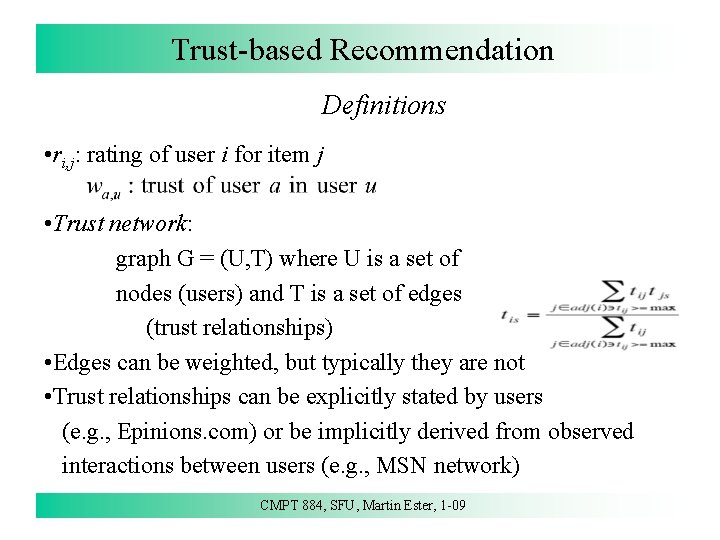

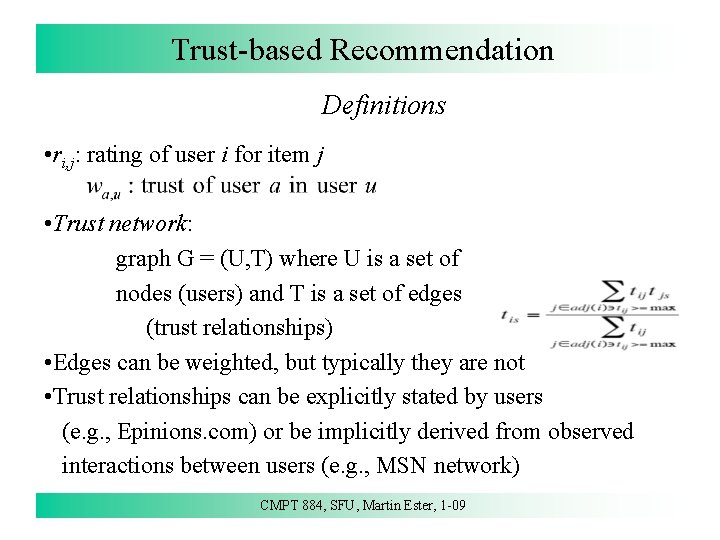

Trust-based Recommendation: Definitions
• $r_{i,j}$: rating of user i for item j
• Trust network: graph G = (U, T) where U is a set of nodes (users) and T is a set of edges (trust relationships)
• Edges can be weighted, but typically they are not
• Trust relationships can be explicitly stated by users (e.g., Epinions.com) or be implicitly derived from observed interactions between users (e.g., MSN network)

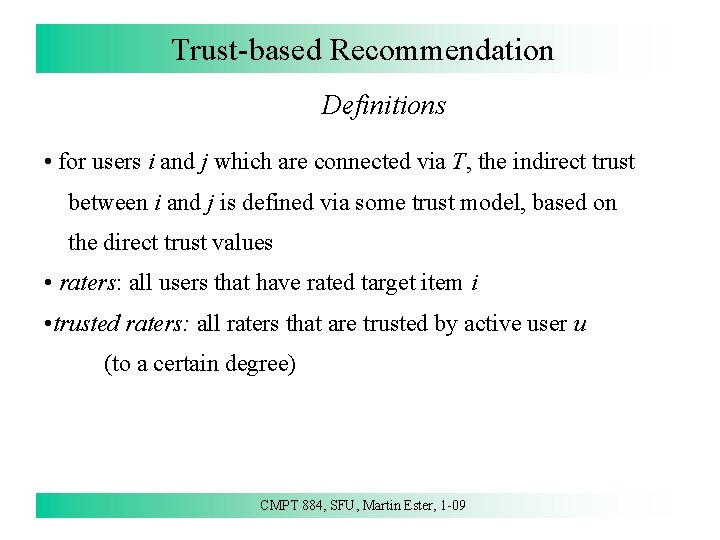

Trust-based Recommendation: Definitions
• for users i and j which are connected via T, the indirect trust between i and j is defined via some trust model, based on the direct trust values
• raters: all users that have rated target item i
• trusted raters: all raters that are trusted by active user u (to a certain degree)

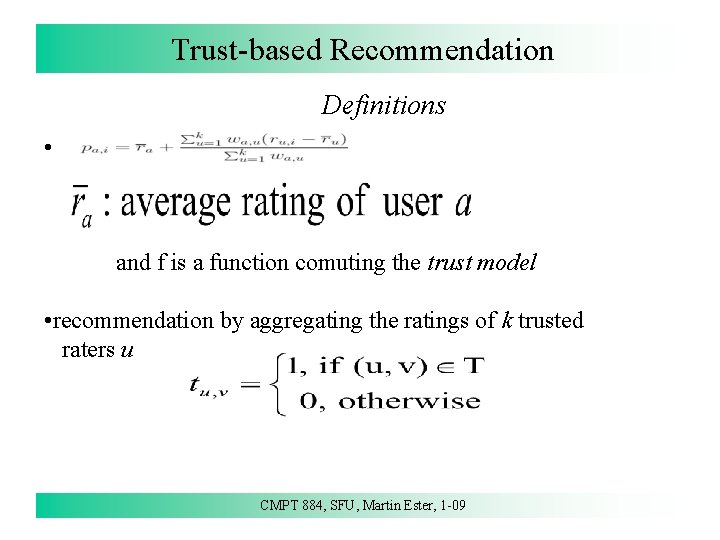

Trust-based Recommendation: Definitions
• f is a function computing the trust model
• recommendation for active user u by aggregating the ratings of the k trusted raters

Trust-based Recommendation: Issues
• How to compute the indirect trust?
• How many of the trusted raters to consider?
• Which ones?
• If using too few, the prediction is not based on a significant number of ratings. If using too many, these raters may only be weakly trusted.
• In a large trust network, one also needs to consider the efficiency of exploring the trust network.


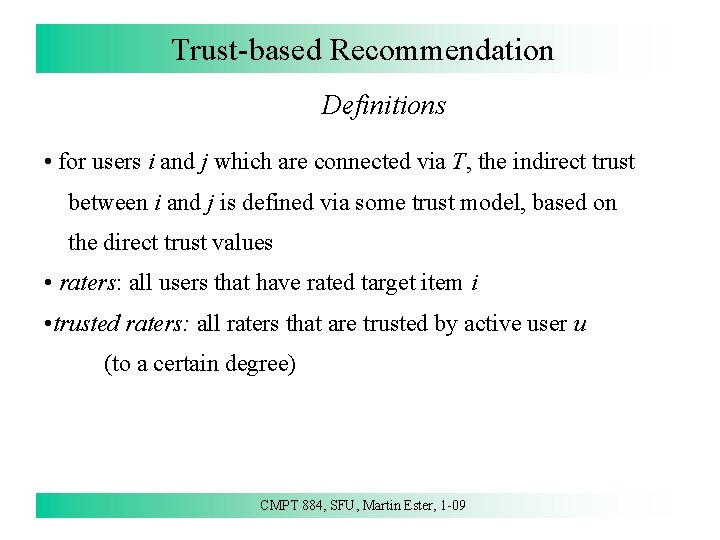

Trust-based Recommendation: TidalTrust [Golbeck 2005]
• the most accurate information will come from the highest trusted neighbors
• in principle, each node should consider only its neighbors with the highest trust rating
• but different nodes have different max trust among their neighbors, which would lead to different levels of trust in different parts of the network
• max: largest trust value such that a path can be found from source to sink with all $t_{ij} \ge max$
• define indirect trust recursively
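The recursive definition appeared on the slide as an image and was not recovered. As a sketch, and assuming the commonly cited TidalTrust form in which only neighbors at or above the threshold max contribute, the indirect trust from node i to the sink s could be written as follows. The notation $\mathrm{adj}(i)$ for the neighbors of i is introduced here for illustration.

```latex
t_{i,s} =
\frac{\displaystyle\sum_{j \in \mathrm{adj}(i),\; t_{i,j} \ge \mathit{max}} t_{i,j}\, t_{j,s}}
     {\displaystyle\sum_{j \in \mathrm{adj}(i),\; t_{i,j} \ge \mathit{max}} t_{i,j}}
```

The recursion ends at nodes that are directly connected to s, where $t_{j,s}$ is the stated direct trust value.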


Trust-based Recommendation: MoleTrust [Massa et al 2007]
• trust model similar to TidalTrust
• major difference in the set of trusted raters considered
• both TidalTrust and MoleTrust perform a breadth-first search of the trust network
• TidalTrust considers all raters at the minimum depth (shortest path distance from the active user)
• MoleTrust considers all raters up to a specified maximum depth
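The difference in rater selection can be illustrated with a plain breadth-first search. The sketch below covers only which raters are selected; it ignores trust weights and the trust threshold, and the function names and toy network are hypothetical.

```python
from collections import deque

def bfs_depths(trust, source, max_depth=None):
    """Shortest-path depth of every user reachable from source."""
    depth = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        if max_depth is not None and depth[u] >= max_depth:
            continue  # do not expand beyond the depth limit
        for v in trust.get(u, ()):
            if v not in depth:
                depth[v] = depth[u] + 1
                queue.append(v)
    return depth

def raters_min_depth(trust, source, raters):
    """TidalTrust-style selection: all raters at the minimum depth."""
    depth = bfs_depths(trust, source)
    reached = {u: d for u, d in depth.items() if u in raters and u != source}
    if not reached:
        return set()
    d_min = min(reached.values())
    return {u for u, d in reached.items() if d == d_min}

def raters_up_to_depth(trust, source, raters, max_depth):
    """MoleTrust-style selection: all raters up to a fixed maximum depth."""
    depth = bfs_depths(trust, source, max_depth)
    return {u for u in depth if u in raters and u != source}

# Hypothetical trust network: a -> b -> d -> f and a -> c -> e.
trust = {"a": ["b", "c"], "b": ["d"], "c": ["e"], "d": ["f"], "e": [], "f": []}
raters = {"d", "e", "f"}  # users who rated the target item
tidal = raters_min_depth(trust, "a", raters)
mole = raters_up_to_depth(trust, "a", raters, max_depth=3)
```

In this toy network the minimum-depth rule stops at depth 2 and never sees f, while the fixed-depth rule with depth 3 includes it.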

Trust-based Recommendation: Discussion
• TidalTrust is likely to find only very few raters
• MoleTrust may consider too many raters
• TidalTrust ignores the actual ratings and their distribution
• MoleTrust even ignores the actual distribution of the raters
  - the maximum depth is independent of a and i


Trust-based Recommendation: Random Walks [Andersen et al 2008]
• perform a random walk in the trust network starting from user a
• if the current user u has a rating for item i, return it
• otherwise, choose a trusted neighbor v randomly with probability proportional to $t_{u,v}$ and go to v
• terminate as soon as a rating is found or some specified max depth is reached
• repeat random walks until the average aggregated rating converges
• use the aggregated rating as the recommendation
  - termination depends on the distribution of raters and ratings
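The procedure above can be sketched as follows. This is an illustration rather than the published algorithm: the function names, the convergence test (change in the running average below `tol` after at least `min_walks` successful walks), the cap `max_walks`, and the toy data are assumptions.

```python
import random

def random_walk_rating(trust, ratings, a, item, max_depth=6, rng=random):
    """One walk from user a; returns a rating for item or None."""
    u = a
    for depth in range(max_depth + 1):
        if item in ratings.get(u, {}):
            return ratings[u][item]
        neighbours = trust.get(u, {})
        if depth == max_depth or not neighbours:
            return None  # depth limit reached or dead end
        users = list(neighbours)
        weights = [neighbours[v] for v in users]
        # Step to neighbour v with probability proportional to t_{u,v}.
        u = rng.choices(users, weights=weights, k=1)[0]
    return None

def recommend(trust, ratings, a, item, max_depth=6,
              max_walks=10000, min_walks=100, tol=1e-4, seed=0):
    """Average the ratings returned by repeated walks until the mean settles."""
    rng = random.Random(seed)
    total, count, prev_avg = 0.0, 0, None
    for _ in range(max_walks):
        r = random_walk_rating(trust, ratings, a, item, max_depth, rng)
        if r is None:
            continue
        total += r
        count += 1
        avg = total / count
        if (count >= min_walks and prev_avg is not None
                and abs(avg - prev_avg) < tol):
            return avg
        prev_avg = avg
    return total / count if count else None

# Hypothetical trust network with weighted edges and ratings for item "i".
chain_trust = {"a": {"b": 1.0}, "b": {"c": 1.0}}
chain_ratings = {"c": {"i": 4}}
fork_trust = {"a": {"b": 1.0, "c": 1.0}}
fork_ratings = {"b": {"i": 2}, "c": {"i": 4}}
chain_result = recommend(chain_trust, chain_ratings, "a", "i")
fork_result = recommend(fork_trust, fork_ratings, "a", "i")
```

Unlike a fixed-depth search, each walk stops as soon as it meets a rater, so how far the method explores depends on where the raters are in the network.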

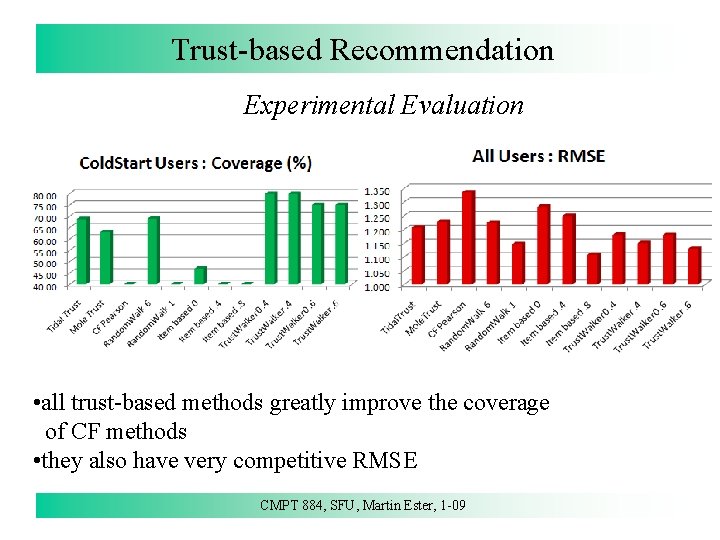

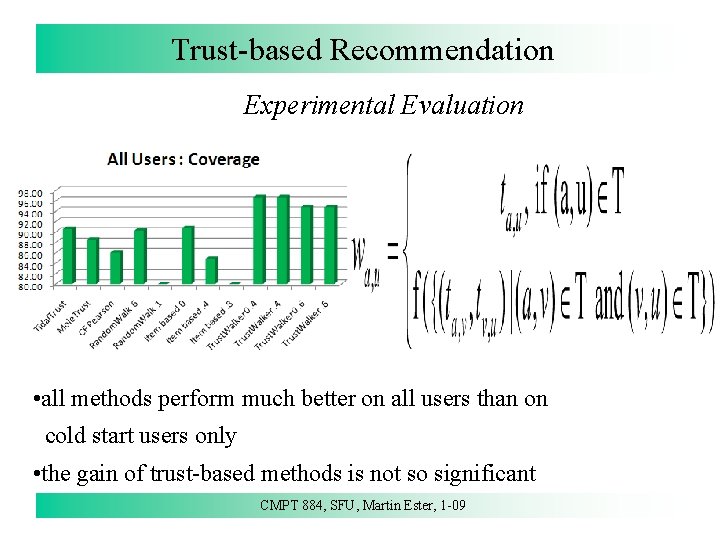

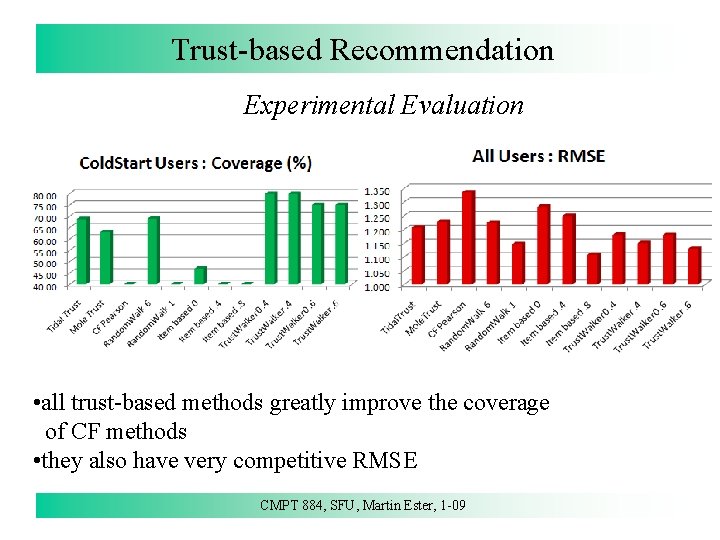

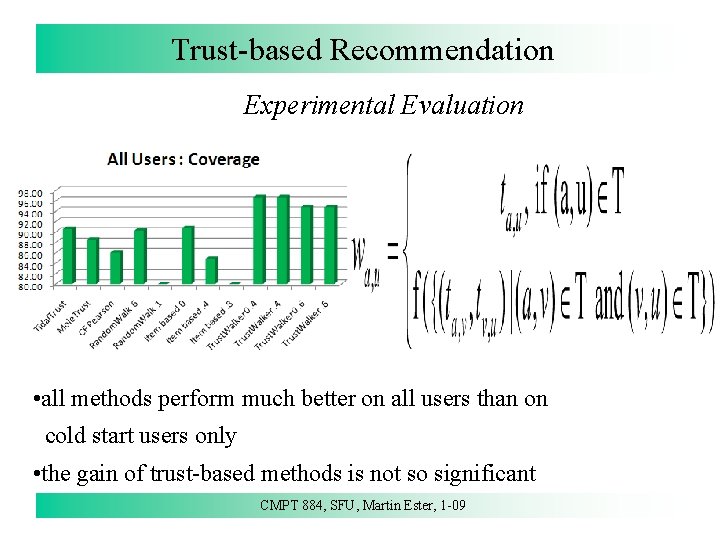

Trust-based Recommendation: Experimental Evaluation
• Epinions dataset (epinions.com)
  - products rated on a scale of [1..5]
  - explicit trust network (binary)
• Distinguish cold start users and all users
• Comparison of various CF and trust-based methods
• Item-based 0 / 0.4 / 0.8: considers only items with similarity at least 0 / 0.4 / 0.8
• Random Walk 1 / 6: considers trusted raters up to depth 1 / 6

Trust-based Recommendation: Experimental Evaluation
• all trust-based methods greatly improve the coverage of CF methods
• they also have very competitive RMSE

Trust-based Recommendation: Experimental Evaluation
• all methods perform much better on all users than on cold start users only
• the gain of trust-based methods is not so significant


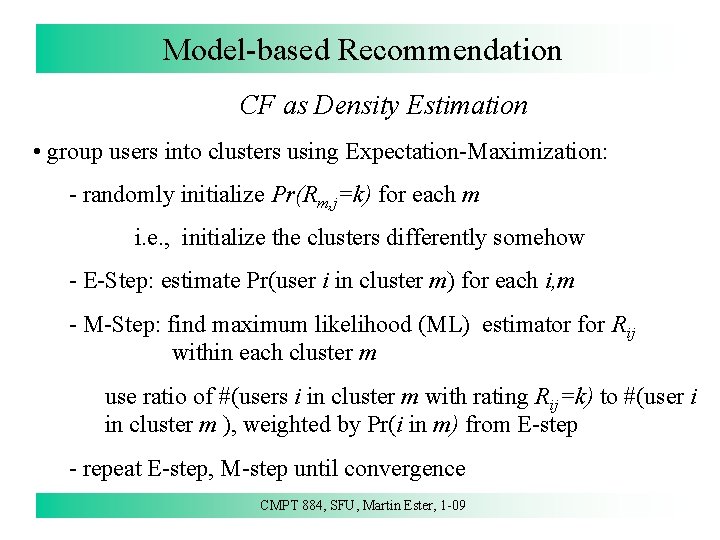

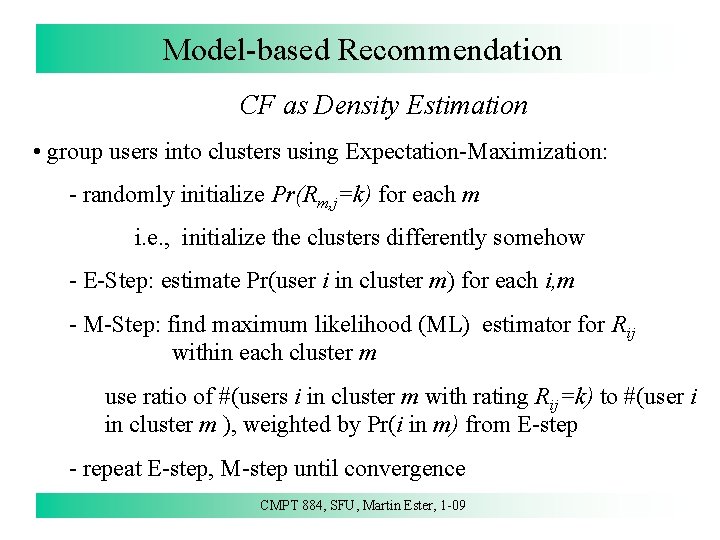

Model-based Recommendation: Introduction [Cohen 2002]
• so far: memory-based methods (CF, trust-based recommendation)
  - no training of a model
• model-based approaches to CF:
  1) CF as density estimation
  2) CF and content-based recommendation as classification


Model-based Recommendation: CF as Density Estimation [Horvitz et al 1998]
• estimate $\Pr(R_{ij} = k)$ for each user i, movie j, and rating k
• use all available data to build a model for this estimator

$R_{ij}$:

|       | Airplane | Matrix | Room with a View | ... | Hidalgo |
|-------|----------|--------|------------------|-----|---------|
| Joe   | 9        | 7      | 2                | ... | 7       |
| Carol | 8        | ?      | 9                | ... | ?       |
| ...   | ...      | ...    | ...              | ... | ...     |
| Kumar | 9        | 3      | ?                | ... | 6       |

Model-based Recommendation: CF as Density Estimation
• a simple model
  - the same model for all users

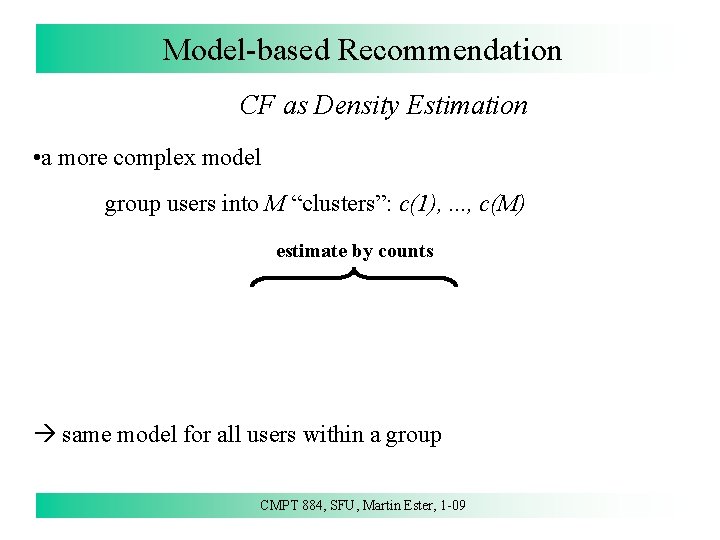

Model-based Recommendation: CF as Density Estimation
• a more complex model
  - group users into M “clusters”: c(1), ..., c(M)
  - estimate by counts
  - the same model for all users within a group

Model-based Recommendation: CF as Density Estimation
• group users into clusters using Expectation-Maximization:
  - randomly initialize $\Pr(R_{m,j} = k)$ for each cluster m, i.e., initialize the clusters differently somehow
  - E-step: estimate Pr(user i in cluster m) for each i, m
  - M-step: find the maximum likelihood (ML) estimator for $R_{ij}$ within each cluster m; use the ratio of #(users i in cluster m with rating $R_{ij} = k$) to #(users i in cluster m), weighted by Pr(i in m) from the E-step
  - repeat E-step and M-step until convergence
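The EM steps above can be sketched in pure Python as a mixture of per-cluster rating distributions. Several choices here are assumptions made for the sketch and are not from the slides: a fixed uniform prior over clusters, a small smoothing constant `alpha` to avoid zero probabilities, a fixed number of iterations instead of a convergence test, and all names and toy data.

```python
import random
from math import exp, log

def em_cluster(ratings, items, values, M=2, iters=50, seed=0, alpha=0.1):
    """Returns (theta, resp): theta[m][j][k] ~ Pr(R_{m,j}=k), resp[i][m] ~ Pr(i in m)."""
    rng = random.Random(seed)
    users = list(ratings)
    # Random initialization so that the clusters start out different.
    theta = []
    for _ in range(M):
        cluster = {}
        for j in items:
            raw = {k: rng.random() + 0.1 for k in values}
            z = sum(raw.values())
            cluster[j] = {k: raw[k] / z for k in values}
        theta.append(cluster)
    resp = {}
    for _ in range(iters):
        # E-step: responsibility of each cluster for each user.
        for i in users:
            logp = [sum(log(theta[m][j][k]) for j, k in ratings[i].items())
                    for m in range(M)]
            top = max(logp)
            w = [exp(lp - top) for lp in logp]
            z = sum(w)
            resp[i] = [x / z for x in w]
        # M-step: responsibility-weighted counts, smoothed by alpha.
        for m in range(M):
            for j in items:
                raters = [i for i in users if j in ratings[i]]
                total = sum(resp[i][m] for i in raters) + alpha * len(values)
                for k in values:
                    count = sum(resp[i][m] for i in raters
                                if ratings[i][j] == k)
                    theta[m][j][k] = (count + alpha) / total
    return theta, resp

def predict_dist(theta, resp, i, j, values):
    """Pr(R_ij = k) as a responsibility-weighted mix of the cluster models."""
    return {k: sum(resp[i][m] * theta[m][j][k] for m in range(len(theta)))
            for k in values}

# Hypothetical toy data: two groups of users with opposite tastes.
ratings = {
    "u1": {"A": 5, "B": 1}, "u2": {"A": 5, "B": 1},
    "u3": {"A": 1, "B": 5}, "u4": {"A": 1, "B": 5},
}
theta, resp = em_cluster(ratings, items=["A", "B"], values=[1, 5], M=2)
dist = predict_dist(theta, resp, "u1", "A", [1, 5])
```

Users with identical ratings always receive identical cluster memberships; which cluster index a group ends up in depends on the random initialization.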


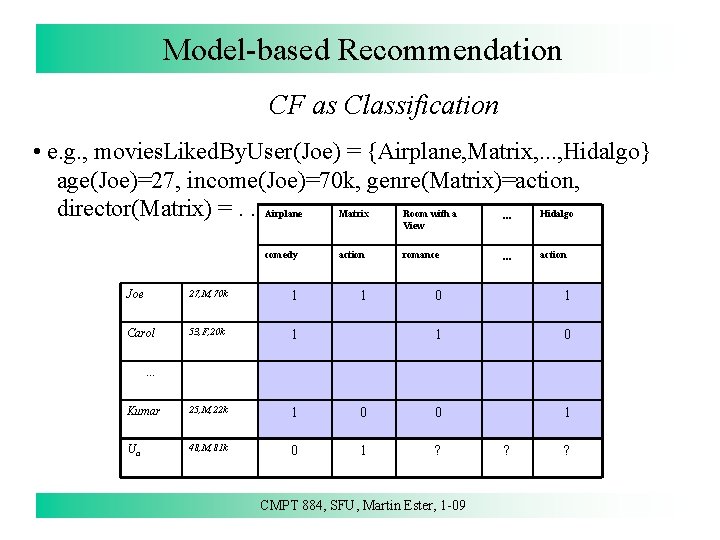

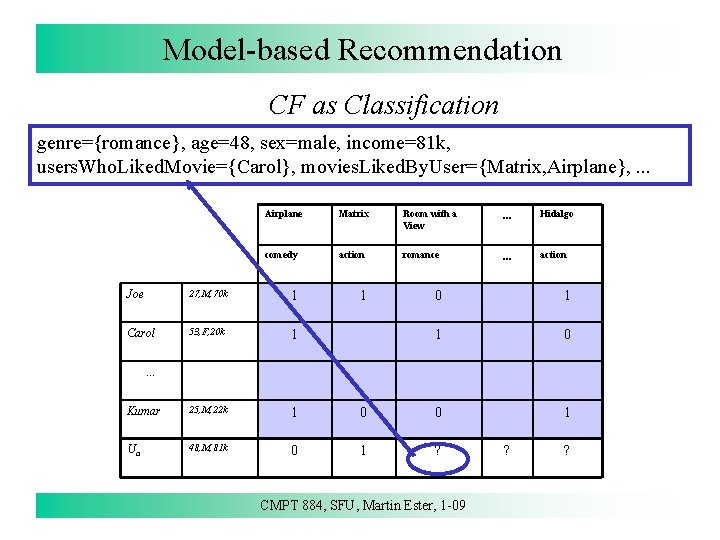

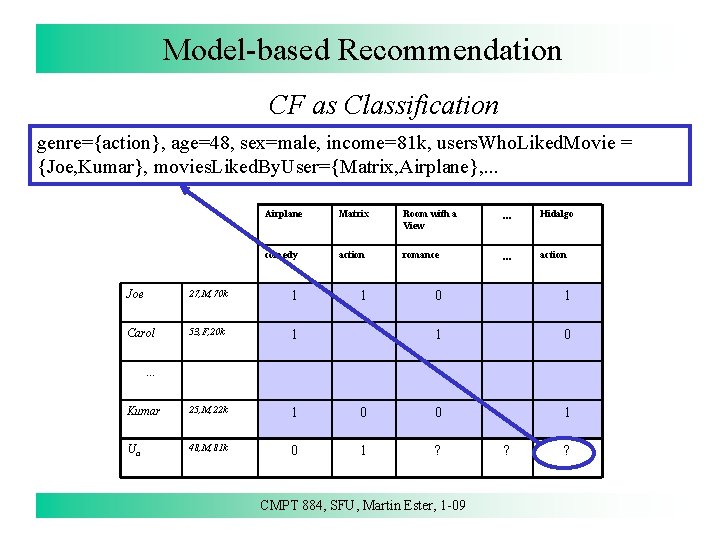

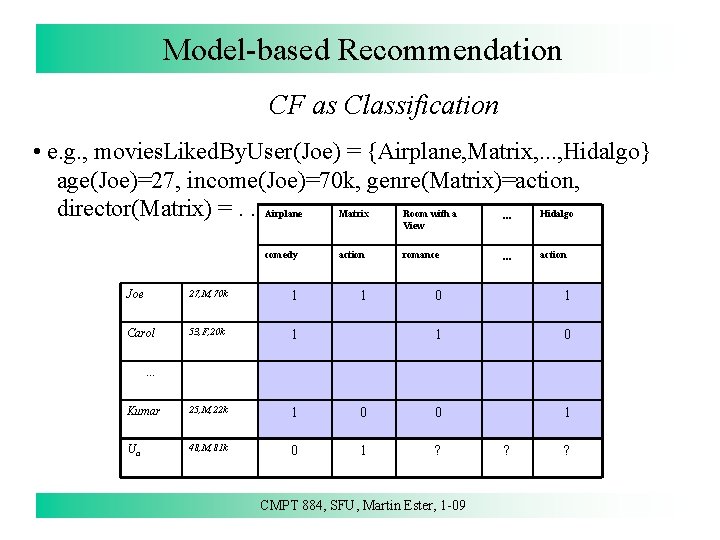

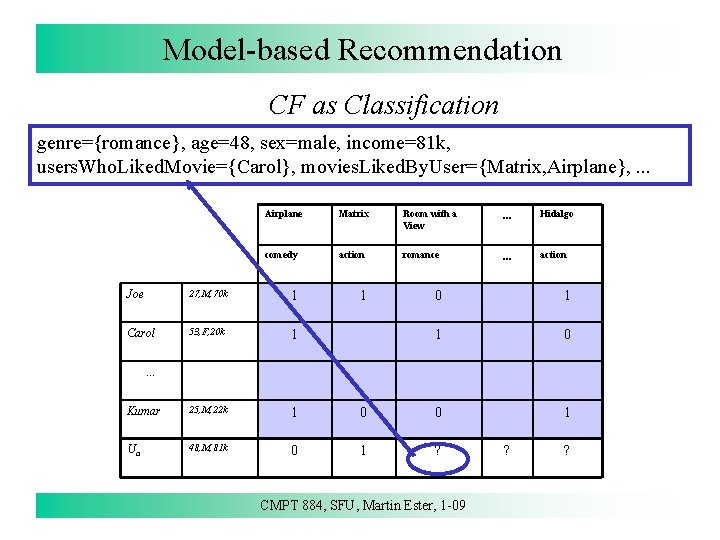

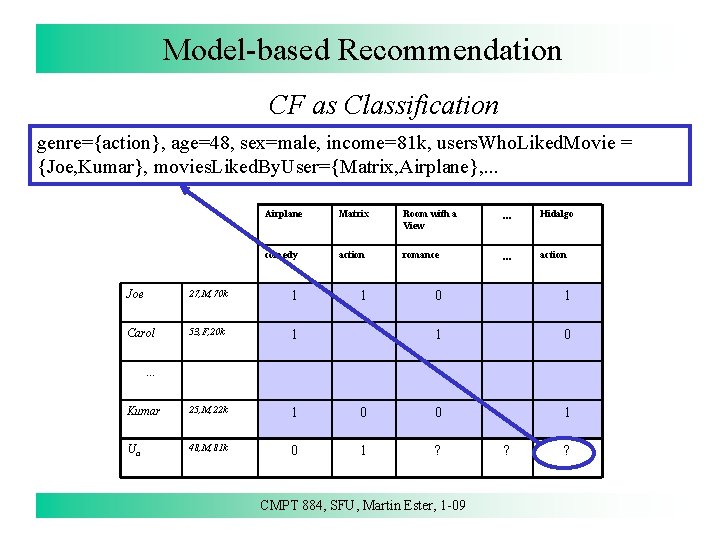

Model-based Recommendation: CF as Classification [Basu et al, 1998]
• Classification task: map a (user, movie) pair into {likes, dislikes}
• Training data: known likes/dislikes; test data: active users
• Features: any properties of the user/movie pair
[table: columns Airplane (comedy), Matrix (action), Room with a View (romance), ..., Hidalgo (action); rows Joe (27, M, 70k), Carol (53, F, 20k), Kumar (25, M, 22k), Ua (48, M, 81k); entries are 1, 0, or ? for unknown]

Model-based Recommendation: CF as Classification
• e.g., moviesLikedByUser(Joe) = {Airplane, Matrix, ..., Hidalgo}, age(Joe) = 27, income(Joe) = 70k, genre(Matrix) = action, director(Matrix) = ...

Model-based Recommendation: CF as Classification
• example features for a (Ua, movie) pair: genre = {romance}, age = 48, sex = male, income = 81k, usersWhoLikedMovie = {Carol}, moviesLikedByUser = {Matrix, Airplane}, ...

Model-based Recommendation: CF as Classification
• example features for a (Ua, movie) pair: genre = {action}, age = 48, sex = male, income = 81k, usersWhoLikedMovie = {Joe, Kumar}, moviesLikedByUser = {Matrix, Airplane}, ...
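The feature vectors on these slides can be assembled mechanically from the like/dislike data. The sketch below shows one way to do it. The function name and data layout are hypothetical, and the like sets are partly assumed: the slides state who liked which movie only for some entries, so the Airplane entries for Carol and Kumar are made up for illustration.

```python
def build_features(user, movie, likes, profiles, genres):
    """Feature dictionary for one (user, movie) pair."""
    age, sex, income = profiles[user]
    return {
        "genre": genres[movie],
        "age": age,
        "sex": sex,
        "income": income,
        # Collaborative features: exclude the pair being classified.
        "usersWhoLikedMovie": {u for u, liked in likes.items()
                               if movie in liked and u != user},
        "moviesLikedByUser": set(likes.get(user, set())) - {movie},
    }

genres = {"Airplane": "comedy", "Matrix": "action",
          "Room with a View": "romance", "Hidalgo": "action"}
profiles = {"Joe": (27, "M", 70000), "Carol": (53, "F", 20000),
            "Kumar": (25, "M", 22000), "Ua": (48, "M", 81000)}
# Partly assumed like sets (see the note above).
likes = {
    "Joe": {"Airplane", "Matrix", "Hidalgo"},
    "Carol": {"Airplane", "Room with a View"},
    "Kumar": {"Airplane", "Hidalgo"},
    "Ua": {"Matrix", "Airplane"},
}
romance_pair = build_features("Ua", "Room with a View", likes, profiles, genres)
action_pair = build_features("Ua", "Hidalgo", likes, profiles, genres)
```

Set-valued features such as usersWhoLikedMovie are what let a rule learner express collaborative conditions like "Joe liked this movie" alongside content conditions like genre.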

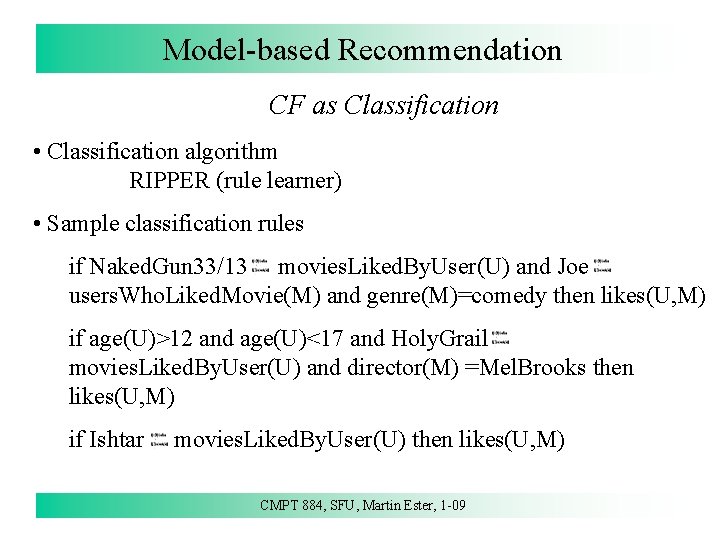

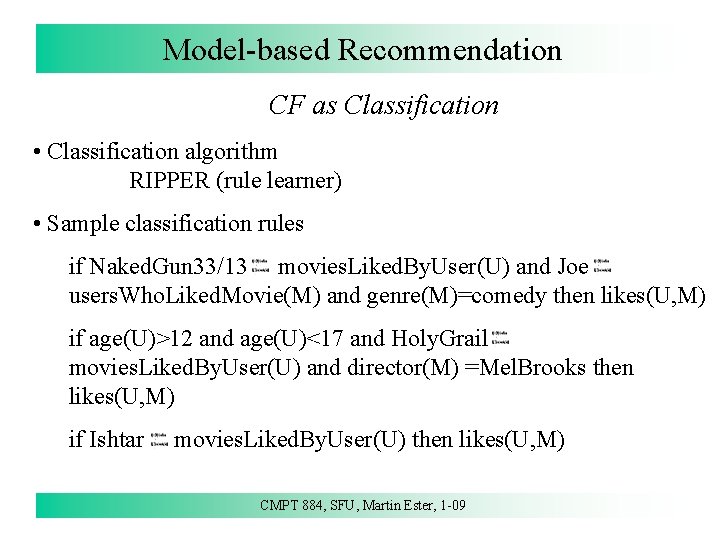

Model-based Recommendation: CF as Classification
• Classification algorithm: RIPPER (rule learner)
• Sample classification rules:
  - if NakedGun33/13 ∈ moviesLikedByUser(U) and Joe ∈ usersWhoLikedMovie(M) and genre(M) = comedy then likes(U, M)
  - if age(U) > 12 and age(U) < 17 and HolyGrail ∈ moviesLikedByUser(U) and director(M) = MelBrooks then likes(U, M)
  - if Ishtar ∈ moviesLikedByUser(U) then likes(U, M)

Model-based Recommendation: CF as Classification
• features
  - collaborative: UsersWhoLikedMovie, UsersWhoDislikedMovie, MoviesLikedByUser
  - content: Actors, Directors, Genre, MPAA rating, ...
  - hybrid: ComediesLikedByUser, DramasLikedByUser, UsersWhoLikedFewDramas, ...
• predict liked(U, M) for the M in the top quartile of U’s ranking, for different feature sets
• evaluate recall and precision w.r.t. actual (U, M) pairs

Model-based Recommendation: CF as Classification
• precision at the same level of recall (about 33%)
• RIPPER with collaborative features only performs worse than memory-based CF by about 5 points of precision (73% vs. 78%)
• RIPPER with hybrid features performs better than memory-based CF by about 5 points of precision (83% vs. 78%)

Recommender Systems: References
• R. Andersen, C. Borgs, J. Chayes, U. Feige, A. Flaxman, A. Kalai, V. Mirrokni, and M. Tennenholtz: Trust-based recommendation systems: an axiomatic approach, WWW 2008
• Chumki Basu, Haym Hirsh, and William W. Cohen: Recommendation as Classification: Using Social and Content-Based Information in Recommendation, AAAI 1998
• William Cohen: Collaborative Filtering, Tutorial, DIMACS Workshop, 2002
• Jennifer Golbeck: Computing and Applying Trust in Web-based Social Networks, Ph.D. Thesis, University of Maryland College Park, 2005
• J. Herlocker et al.: Evaluating Collaborative Filtering Recommender Systems, ACM Transactions on Information Systems, Jan. 2004

Recommender Systems: References
• Eric Horvitz, Jack S. Breese, David Heckerman, David Hovel, Koos Rommelse: The Lumière Project: Bayesian User Modeling for Inferring the Goals and Needs of Software Users, UAI 1998
• Joseph A. Konstan: Introduction to Recommender Systems, Tutorial, SIGMOD 2008
• Paolo Massa, Paolo Avesani: Trust-aware Recommender Systems, ACM RecSys 2007
• Paul Resnick, Neophytos Iacovou, Mitesh Suchak, Peter Bergstrom, John Riedl: GroupLens: An Open Architecture for Collaborative Filtering of Netnews, ACM Conference on Computer Supported Cooperative Work, 1994
• Badrul Sarwar, George Karypis, Joseph Konstan, and John Riedl: Item-Based Collaborative Filtering Recommendation Algorithms, WWW 2001