Recognition: Hidden Markov Models 1



Hidden Markov Model (HMM)
• HMMs let you estimate the probabilities of unobserved (hidden) events from observed data.
• E.g., in speech recognition, the observed data is the acoustic signal, and the words are the hidden variables you are trying to infer.

HMMs and their Usage
• HMMs are very common in computational linguistics:
  • Speech recognition (observed: acoustic signal; hidden: words)
  • Handwriting recognition (observed: image; hidden: words)
  • Part-of-speech tagging (observed: words; hidden: part-of-speech tags)
  • Machine translation (observed: foreign words; hidden: words in the target language)

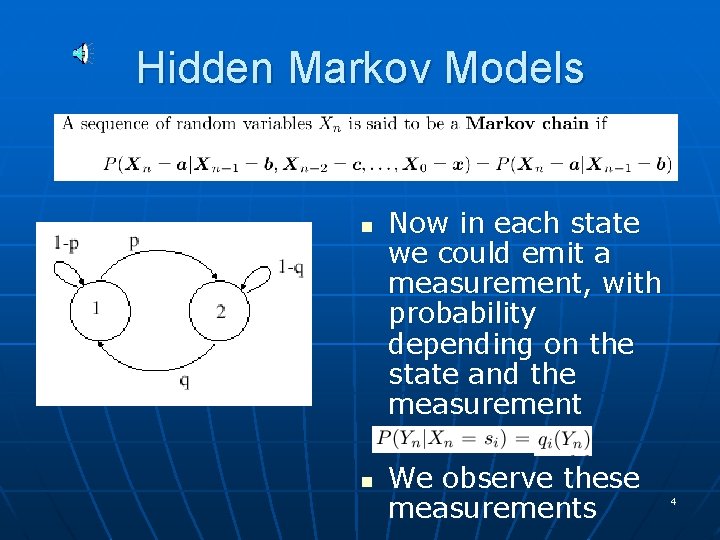

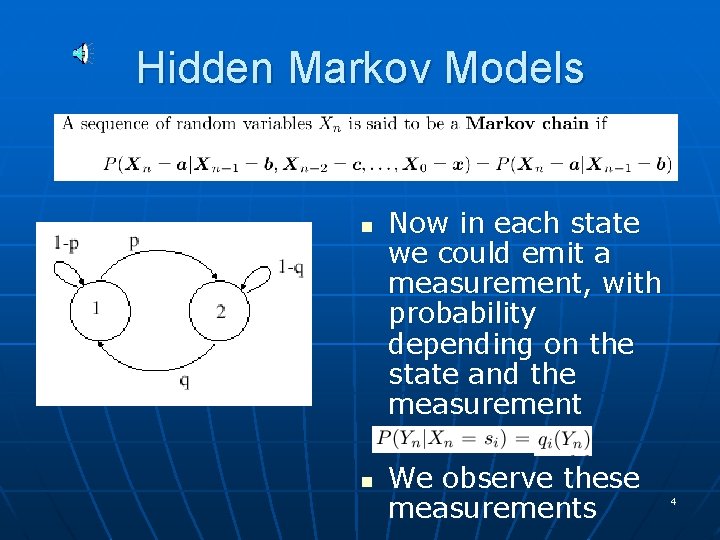

Hidden Markov Models
• Now, in each state we emit a measurement, with a probability that depends on the state and the measurement.
• We observe these measurements; the states themselves stay hidden.

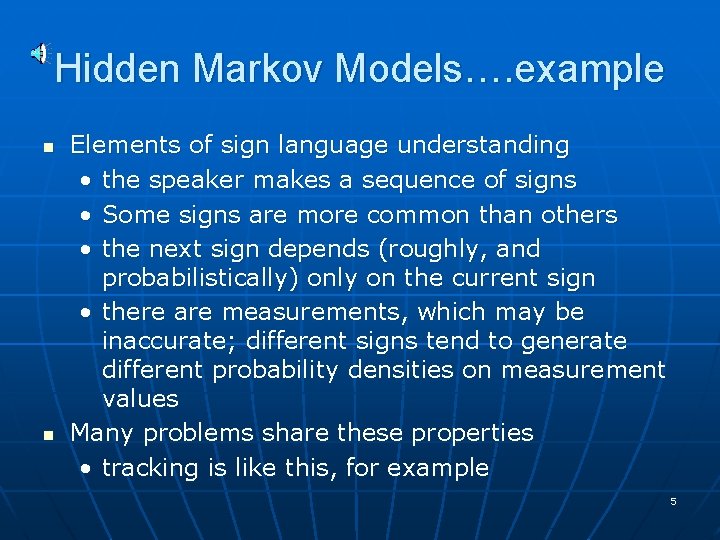
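The generative story on this slide (a hidden state sequence, with one measurement emitted per state) can be sketched as a sampler. This is a minimal illustration assuming a hypothetical two-state HMM with discrete measurements {0, 1, 2}; the names `PI`, `A`, `B` and all numbers are made up for the example.

```python
import random

# Hypothetical two-state HMM with discrete measurements {0, 1, 2} (made-up numbers).
PI = [0.6, 0.4]                  # PI[i]   = P(x_0 = i)
A = [[0.7, 0.3],                 # A[i][j] = P(x_{t+1} = j | x_t = i)
     [0.4, 0.6]]
B = [[0.5, 0.4, 0.1],            # B[i][k] = P(y_t = k | x_t = i)
     [0.1, 0.3, 0.6]]


def draw(probs, rng):
    """Draw an index according to the given categorical probabilities."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


def sample_hmm(T, rng):
    """Sample a hidden state path of length T and the measurements it emits."""
    states, obs = [], []
    x = draw(PI, rng)
    for t in range(T):
        if t > 0:
            x = draw(A[x], rng)       # next state depends only on the current state
        states.append(x)
        obs.append(draw(B[x], rng))   # measurement depends only on the current state
    return states, obs


states, obs = sample_hmm(10, random.Random(0))
print("hidden:  ", states)
print("observed:", obs)
```

A recognizer only ever sees `obs`; the point of the later slides is to recover something like `states` from it.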

Hidden Markov Models: Example
• Elements of sign language understanding:
  • The speaker makes a sequence of signs.
  • Some signs are more common than others.
  • The next sign depends (roughly, and probabilistically) only on the current sign.
  • There are measurements, which may be inaccurate; different signs tend to generate different probability densities on measurement values.
• Many problems share these properties; tracking, for example, is like this.

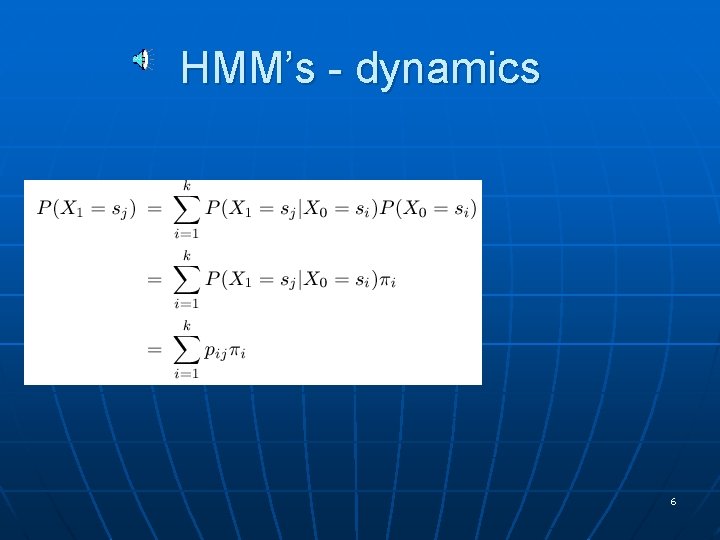

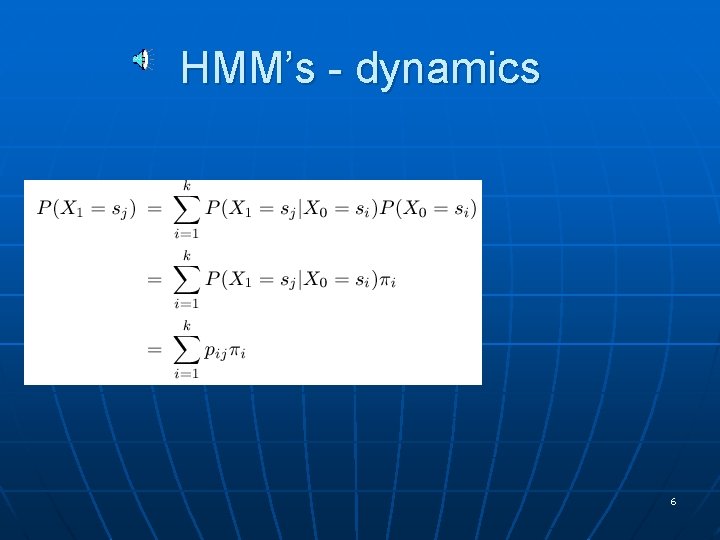

HMMs: Dynamics

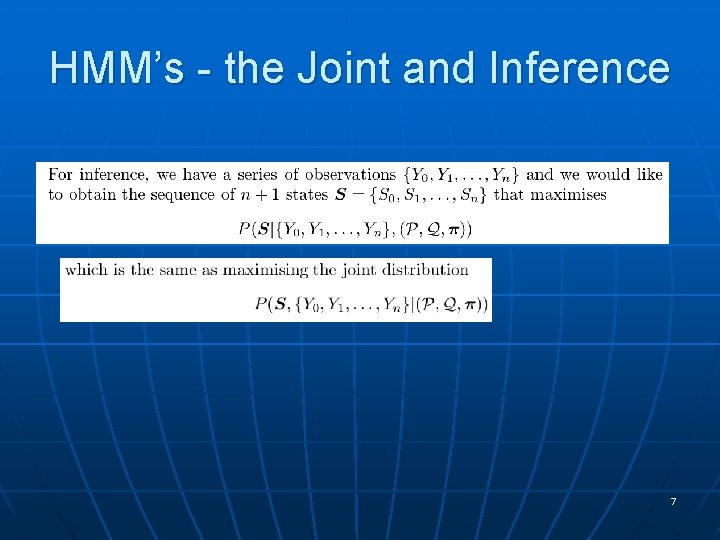

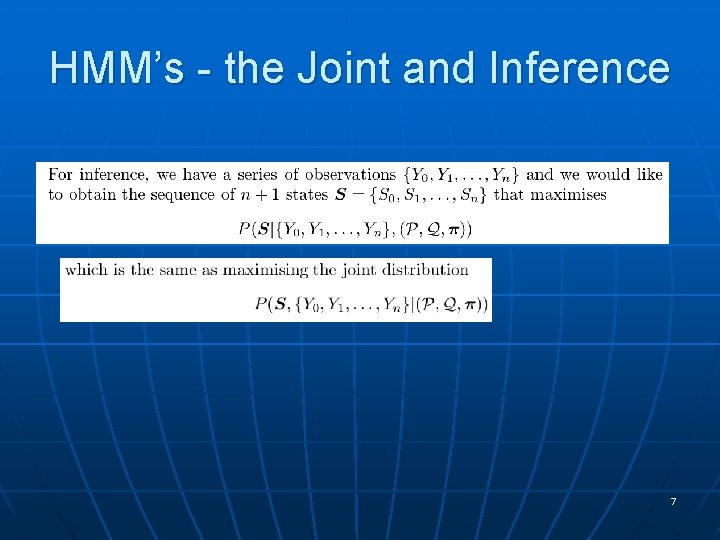
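Assuming, as in the sign language example, that the next state depends only on the current one, the dynamics reduce to a transition matrix. The sketch below (hypothetical matrix `A`, hypothetical helper `step`) propagates the distribution over states forward in time, ignoring measurements.

```python
# Hypothetical transition matrix A[i][j] = P(x_{t+1} = j | x_t = i) (made-up numbers).
A = [[0.7, 0.3],
     [0.4, 0.6]]


def step(p, A):
    """Propagate the state distribution one step: p_{t+1}[j] = sum_i p_t[i] * A[i][j]."""
    n = len(A)
    return [sum(p[i] * A[i][j] for i in range(n)) for j in range(n)]


p = [1.0, 0.0]            # start in state 0 with certainty
for t in range(1, 6):
    p = step(p, A)
    print(t, [round(v, 4) for v in p])
```

With these numbers the distribution goes (0.7, 0.3), (0.61, 0.39), (0.583, 0.417), ... and approaches the stationary distribution (4/7, 3/7), which satisfies p = pA.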

HMMs: The Joint and Inference

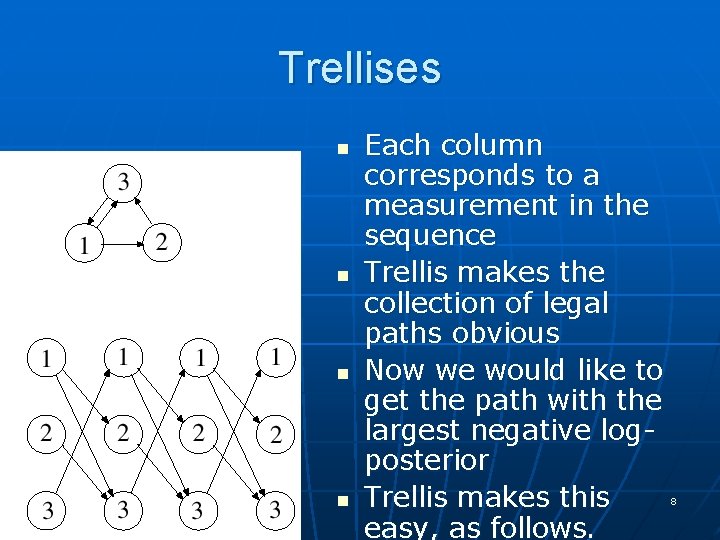

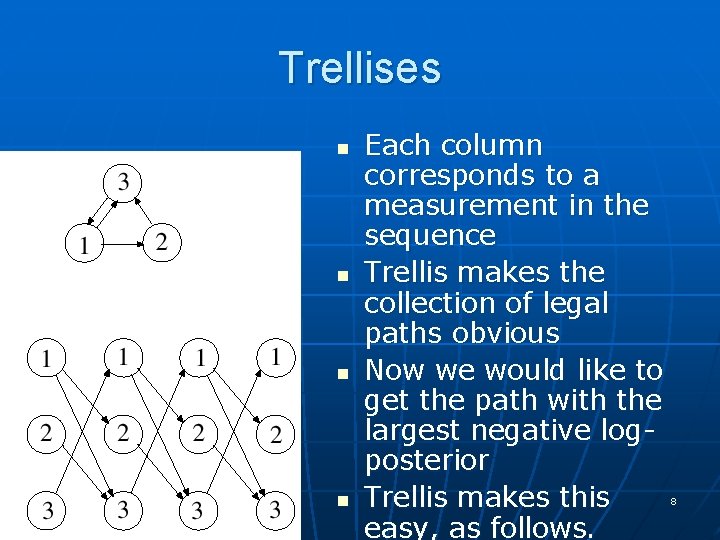

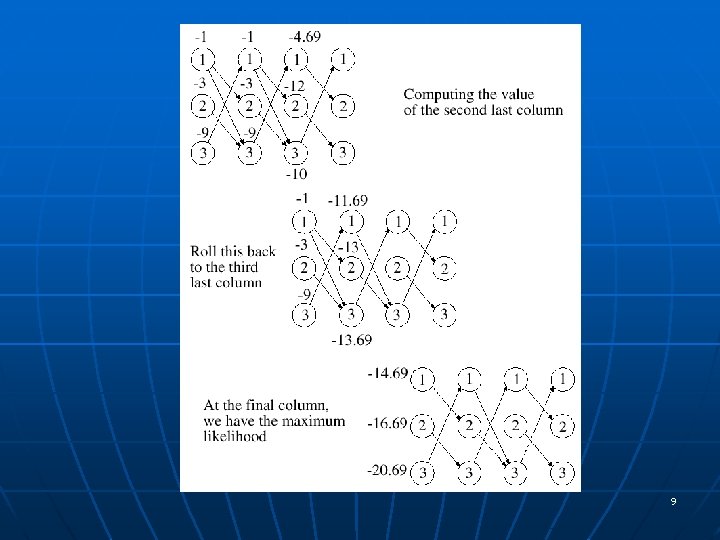
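For a discrete HMM, the joint probability of a state path and the measurements factors into initial, transition, and emission terms, and summing it over all paths gives the likelihood of the measurements. The sketch below assumes the same kind of hypothetical two-state model as before (`PI`, `A`, `B` are illustrative); it uses the standard forward recursion for the sum and checks it against brute-force enumeration.

```python
from itertools import product

# Hypothetical two-state HMM with measurements in {0, 1, 2} (made-up numbers).
PI = [0.6, 0.4]                          # P(x_0 = i)
A = [[0.7, 0.3], [0.4, 0.6]]             # A[i][j] = P(x_t = j | x_{t-1} = i)
B = [[0.5, 0.4, 0.1], [0.1, 0.3, 0.6]]   # B[i][k] = P(y_t = k | x_t = i)


def joint(states, obs):
    """P(x, y) = P(x_0) P(y_0 | x_0) * prod_{t>=1} P(x_t | x_{t-1}) P(y_t | x_t)."""
    p = PI[states[0]] * B[states[0]][obs[0]]
    for t in range(1, len(obs)):
        p *= A[states[t - 1]][states[t]] * B[states[t]][obs[t]]
    return p


def forward(obs):
    """P(y) via alpha_t(j) = P(y_t | x_t = j) * sum_i alpha_{t-1}(i) A[i][j]."""
    n = len(PI)
    alpha = [PI[j] * B[j][obs[0]] for j in range(n)]
    for y in obs[1:]:
        alpha = [B[j][y] * sum(alpha[i] * A[i][j] for i in range(n)) for j in range(n)]
    return sum(alpha)


def posterior(states, obs):
    """P(x | y) = P(x, y) / P(y)."""
    return joint(states, obs) / forward(obs)


obs = [0, 2, 1]
# Summing the joint over all 2**3 state paths gives the same P(y) as the forward
# recursion, which costs O(T * n^2) instead of O(n^T).
brute = sum(joint(s, obs) for s in product(range(len(PI)), repeat=len(obs)))
print(forward(obs), brute)
print(posterior([0, 1, 1], obs))
```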

Trellises
• Each column corresponds to a measurement in the sequence.
• The trellis makes the collection of legal paths obvious.
• Now we would like to find the path with the smallest negative log-posterior (equivalently, the largest posterior).
• The trellis makes this easy, as follows.

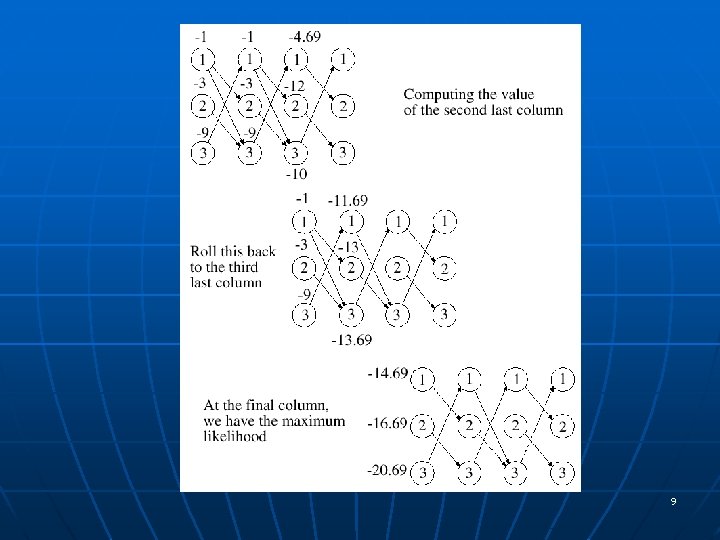


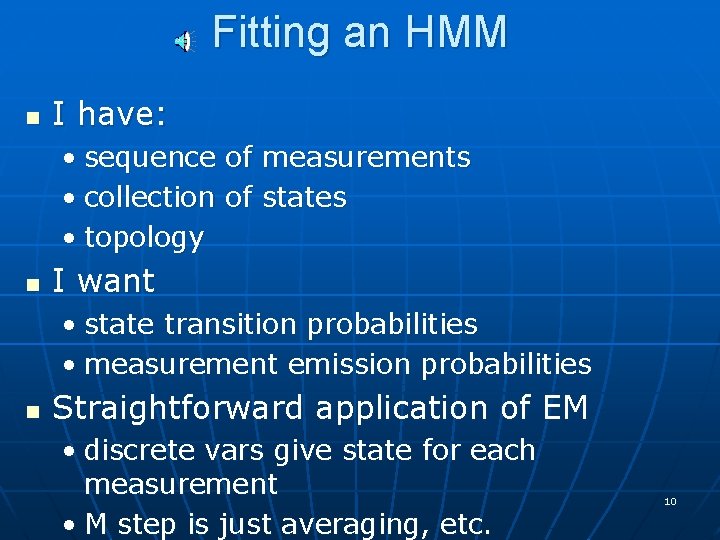
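The dynamic programming on the trellis is usually called the Viterbi algorithm. A minimal sketch, again assuming a hypothetical discrete two-state model: each node keeps the cheapest accumulated cost (negative log joint, which differs from the negative log-posterior only by the constant log P(y)) and a back-pointer, and the best path is read off backwards from the cheapest node in the last column.

```python
import math

# Hypothetical two-state HMM with measurements in {0, 1, 2} (made-up numbers).
PI = [0.6, 0.4]
A = [[0.7, 0.3], [0.4, 0.6]]
B = [[0.5, 0.4, 0.1], [0.1, 0.3, 0.6]]


def viterbi(obs):
    """Return (best state path, its cost), cost = -log P(path, obs)."""
    n = len(PI)
    # Column 0 of the trellis: start cost plus emission cost for each state.
    cost = [-math.log(PI[j]) - math.log(B[j][obs[0]]) for j in range(n)]
    back = []  # back[t][j] = best predecessor of state j in column t + 1
    for y in obs[1:]:
        new_cost, ptr = [], []
        for j in range(n):
            best_i = min(range(n), key=lambda i: cost[i] - math.log(A[i][j]))
            new_cost.append(cost[best_i] - math.log(A[best_i][j]) - math.log(B[j][y]))
            ptr.append(best_i)
        cost = new_cost
        back.append(ptr)
    # Trace back from the cheapest node in the last column.
    j = min(range(n), key=lambda k: cost[k])
    best_cost = cost[j]
    path = [j]
    for ptr in reversed(back):
        j = ptr[j]
        path.append(j)
    return path[::-1], best_cost


path, c = viterbi([0, 2, 1, 1])
print(path, c)   # path -> [0, 1, 0, 0]
```

Working in negative logs turns products of probabilities into sums of costs, which avoids numerical underflow on long sequences.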

Fitting an HMM
• I have:
  • a sequence of measurements
  • a collection of states
  • a topology
• I want:
  • state transition probabilities
  • measurement emission probabilities
• This is a straightforward application of EM:
  • the discrete (hidden) variables give the state for each measurement
  • the M step is just averaging, etc.

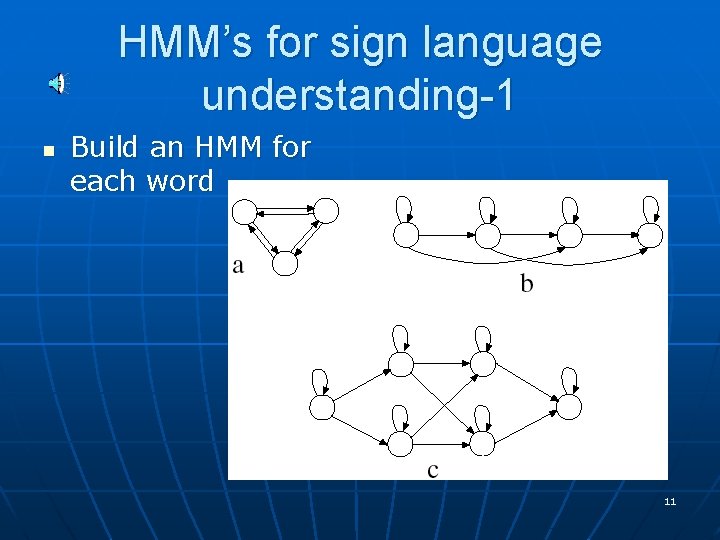

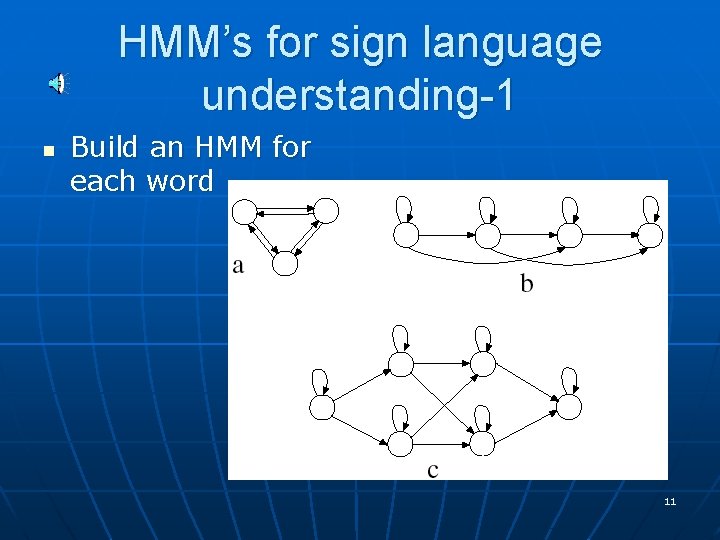
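EM for HMMs is commonly known as the Baum-Welch algorithm. The sketch below assumes discrete (quantized) measurements, a single short training sequence, and made-up starting parameters; real sign language features are continuous and would need, e.g., Gaussian emission densities instead. The E step computes posterior state and transition probabilities with forward-backward; the M step replaces each probability with normalized expected counts.

```python
def forward_backward(obs, PI, A, B):
    """Unscaled forward-backward; fine for short sequences (long ones need scaling or logs)."""
    n, T = len(PI), len(obs)
    alpha = [[0.0] * n for _ in range(T)]
    beta = [[1.0] * n for _ in range(T)]
    for j in range(n):
        alpha[0][j] = PI[j] * B[j][obs[0]]
    for t in range(1, T):
        for j in range(n):
            alpha[t][j] = B[j][obs[t]] * sum(alpha[t - 1][i] * A[i][j] for i in range(n))
    for t in range(T - 2, -1, -1):
        for i in range(n):
            beta[t][i] = sum(A[i][j] * B[j][obs[t + 1]] * beta[t + 1][j] for j in range(n))
    return alpha, beta, sum(alpha[T - 1])


def em_step(obs, PI, A, B):
    """One Baum-Welch (EM) iteration; also returns P(obs) under the input parameters."""
    n, m, T = len(PI), len(B[0]), len(obs)
    alpha, beta, like = forward_backward(obs, PI, A, B)
    # E step: gamma[t][i] = P(x_t = i | obs), xi[t][i][j] = P(x_t = i, x_{t+1} = j | obs).
    gamma = [[alpha[t][i] * beta[t][i] / like for i in range(n)] for t in range(T)]
    xi = [[[alpha[t][i] * A[i][j] * B[j][obs[t + 1]] * beta[t + 1][j] / like
            for j in range(n)] for i in range(n)] for t in range(T - 1)]
    # M step: expected counts, normalized ("just averaging").
    new_PI = gamma[0][:]
    new_A = [[sum(xi[t][i][j] for t in range(T - 1)) / sum(gamma[t][i] for t in range(T - 1))
              for j in range(n)] for i in range(n)]
    new_B = [[sum(gamma[t][i] for t in range(T) if obs[t] == k) / sum(gamma[t][i] for t in range(T))
              for k in range(m)] for i in range(n)]
    return new_PI, new_A, new_B, like


obs = [0, 0, 1, 2, 2, 1, 0, 0, 2, 2]        # made-up measurement sequence
PI = [0.5, 0.5]                              # made-up starting guesses
A = [[0.6, 0.4], [0.3, 0.7]]
B = [[0.5, 0.3, 0.2], [0.2, 0.3, 0.5]]
likes = []
for _ in range(10):
    PI, A, B, like = em_step(obs, PI, A, B)
    likes.append(like)
print(likes[0], likes[-1])                   # in exact arithmetic EM never decreases this
```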

HMMs for Sign Language Understanding (1)
• Build an HMM for each word.

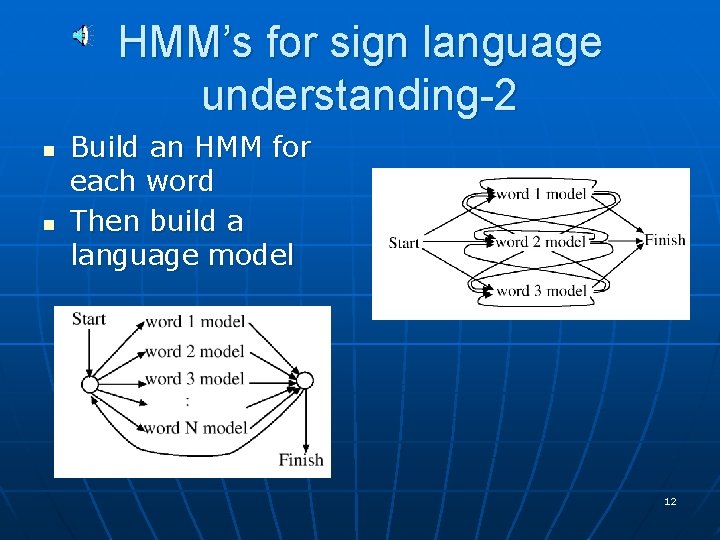

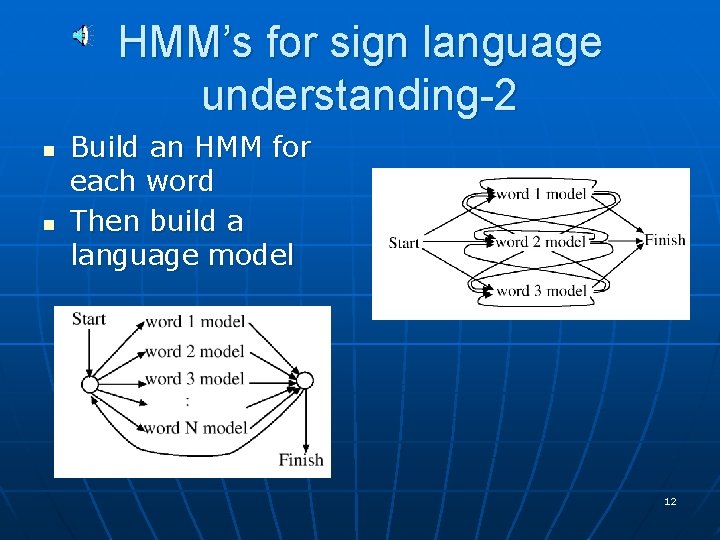
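With one HMM per word, isolated word recognition can be done by scoring the measurement sequence under every word model and picking the most likely word. A minimal sketch, assuming hypothetical words `sign_a` and `sign_b`, each modeled by a two-state left-to-right HMM over three quantized measurement symbols (all numbers made up); the forward recursion runs in log space so that zero-probability transitions and long sequences are handled.

```python
import math


def safe_log(p):
    """log(p), with log(0) = -inf so forbidden transitions are handled."""
    return math.log(p) if p > 0 else -math.inf


def logsumexp(vals):
    m = max(vals)
    if m == -math.inf:
        return -math.inf
    return m + math.log(sum(math.exp(v - m) for v in vals))


def log_forward(obs, PI, A, B):
    """log P(obs | model) via the forward recursion in log space."""
    n = len(PI)
    la = [safe_log(PI[j]) + safe_log(B[j][obs[0]]) for j in range(n)]
    for y in obs[1:]:
        la = [safe_log(B[j][y]) + logsumexp([la[i] + safe_log(A[i][j]) for i in range(n)])
              for j in range(n)]
    return logsumexp(la)


# Hypothetical word models: (PI, A, B) for 2-state left-to-right HMMs.
WORD_MODELS = {
    "sign_a": ([1.0, 0.0], [[0.8, 0.2], [0.0, 1.0]], [[0.8, 0.1, 0.1], [0.1, 0.8, 0.1]]),
    "sign_b": ([1.0, 0.0], [[0.8, 0.2], [0.0, 1.0]], [[0.1, 0.1, 0.8], [0.1, 0.8, 0.1]]),
}


def recognize(obs):
    """Pick the word whose HMM assigns the measurements the highest likelihood."""
    return max(WORD_MODELS, key=lambda w: log_forward(obs, *WORD_MODELS[w]))


print(recognize([0, 0, 1, 1]), recognize([2, 2, 1, 1]))
```

The left-to-right topology (a state can only stay or move forward) is one common choice for word models; it is assumed here, not taken from the slides.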

HMMs for Sign Language Understanding (2)
• Build an HMM for each word.
• Then build a language model.

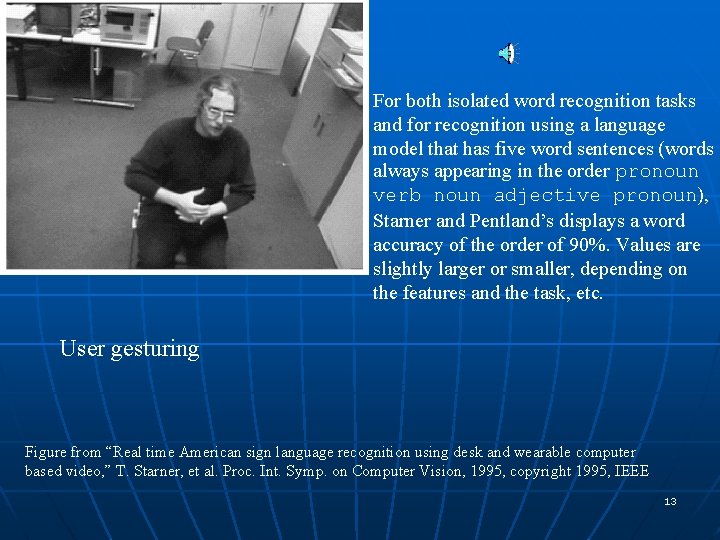

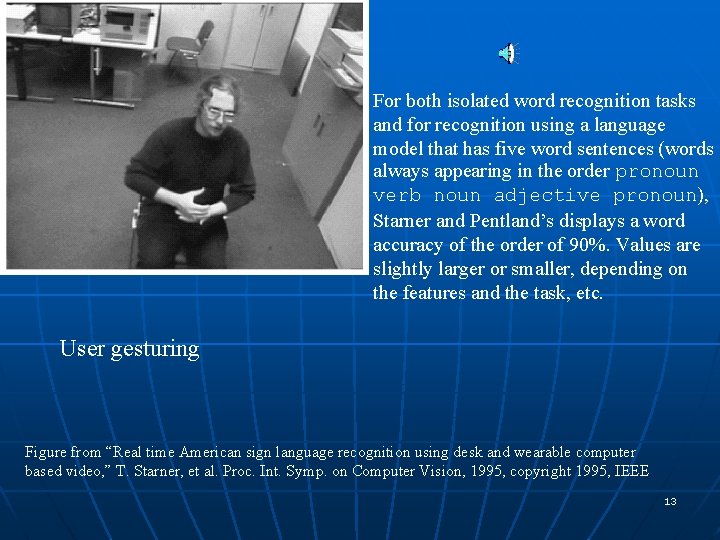
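One simple way to see what the language model adds: treat each word as a state of a higher-level HMM, with word-to-word transitions given by a bigram model and each word's HMM supplying the emission score for a chunk of measurements. The sketch below strongly simplifies by assuming the measurements are already segmented into one chunk per sign, and it uses a hypothetical vocabulary, bigram table, and per-chunk log-likelihoods (all made up). A word-level Viterbi pass then picks the best sentence.

```python
import math

# Hypothetical vocabulary and bigram language model P(w_t | w_{t-1}); "<s>" is sentence start.
VOCAB = ["I", "want", "book"]
BIGRAM = {
    "<s>":  {"I": 0.8, "want": 0.1, "book": 0.1},
    "I":    {"I": 0.05, "want": 0.9, "book": 0.05},
    "want": {"I": 0.1, "want": 0.1, "book": 0.8},
    "book": {"I": 0.4, "want": 0.3, "book": 0.3},
}
# Hypothetical log P(chunk_t | word) scores, as each word's HMM might produce them.
SEG_LOGLIK = [
    {"I": -1.0, "want": -5.0, "book": -6.0},
    {"I": -4.0, "want": -2.1, "book": -2.0},   # measurements alone slightly prefer "book"
    {"I": -6.0, "want": -5.0, "book": -1.0},
]


def decode(seg_loglik, vocab, bigram):
    """Word-level Viterbi: maximize sum_t [log P(w_t | w_{t-1}) + log P(chunk_t | w_t)]."""
    score = {w: math.log(bigram["<s>"][w]) + seg_loglik[0][w] for w in vocab}
    back = []
    for seg in seg_loglik[1:]:
        new_score, ptr = {}, {}
        for w in vocab:
            prev = max(vocab, key=lambda v: score[v] + math.log(bigram[v][w]))
            new_score[w] = score[prev] + math.log(bigram[prev][w]) + seg[w]
            ptr[w] = prev
        score = new_score
        back.append(ptr)
    w = max(vocab, key=lambda v: score[v])
    words = [w]
    for ptr in reversed(back):
        w = ptr[w]
        words.append(w)
    return words[::-1]


print(decode(SEG_LOGLIK, VOCAB, BIGRAM))               # -> ['I', 'want', 'book']
print([max(seg, key=seg.get) for seg in SEG_LOGLIK])  # -> ['I', 'book', 'book']
```

Picking each chunk's best word independently gives "I book book"; the bigram model makes "I want book" win because "want" is far more likely after "I".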

For both isolated word recognition tasks and recognition using a language model with five-word sentences (words always appearing in the order pronoun, verb, noun, adjective, pronoun), Starner and Pentland's system achieves a word accuracy on the order of 90%. Values are slightly larger or smaller depending on the features, the task, etc.

User gesturing. Figure from “Real time American sign language recognition using desk and wearable computer based video,” T. Starner, et al., Proc. Int. Symp. on Computer Vision, 1995, copyright 1995, IEEE

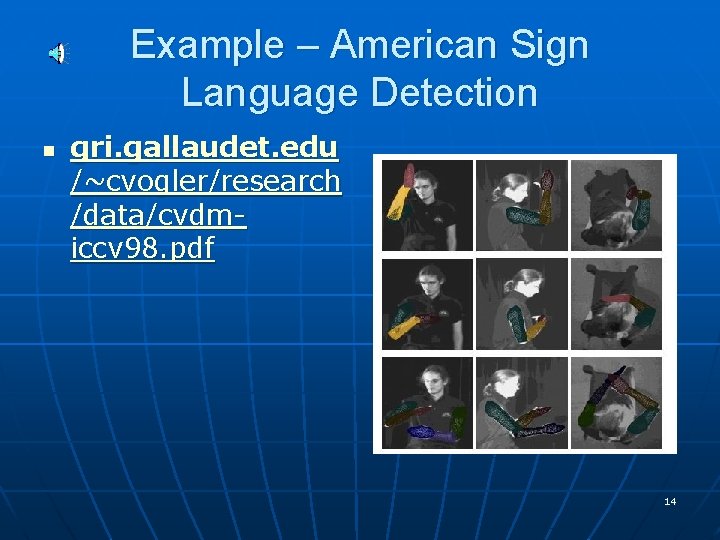

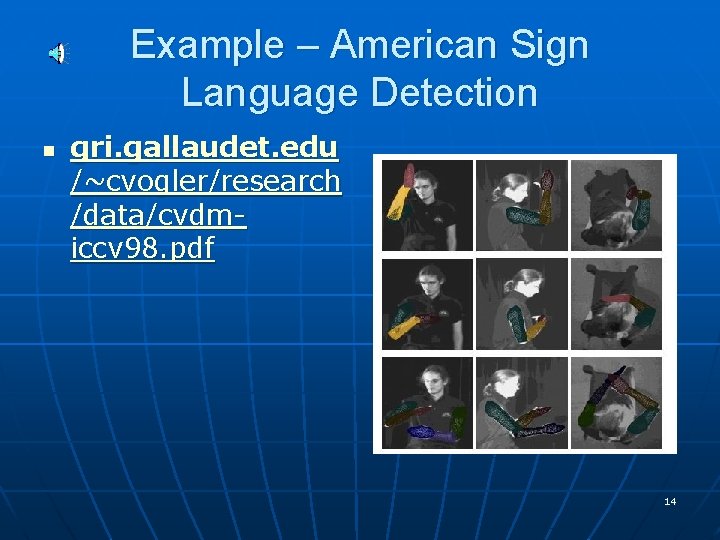

Example: American Sign Language Detection
• gri.gallaudet.edu/~cvogler/research/data/cvdmiccv98.pdf

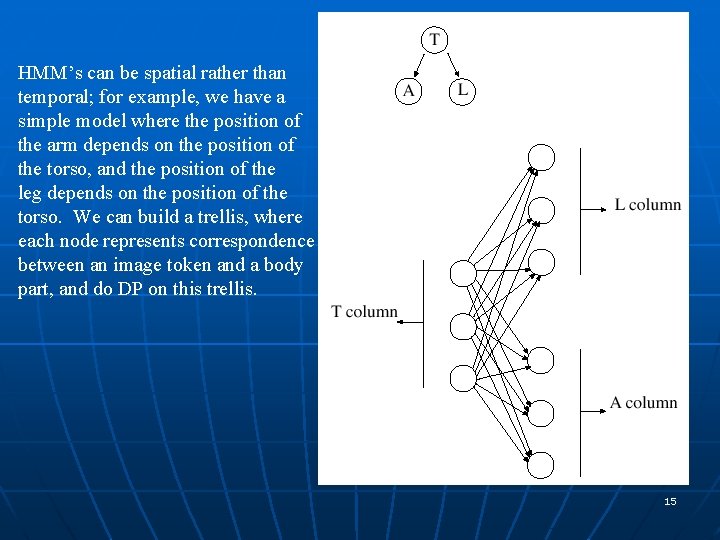

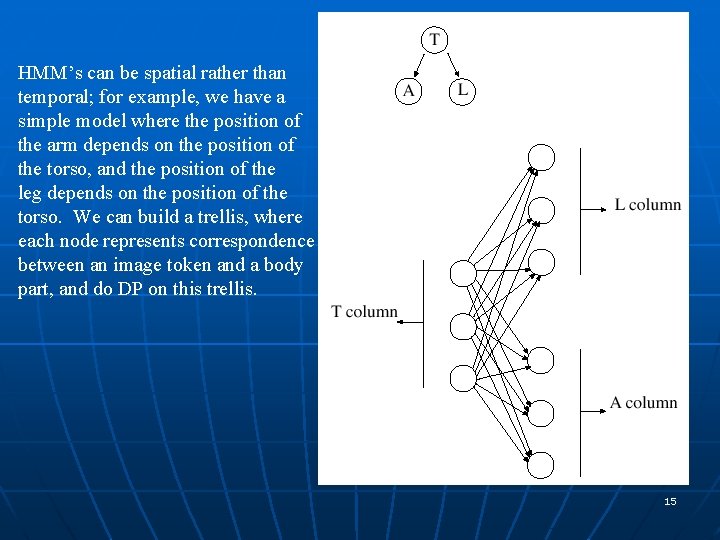

HMMs can be spatial rather than temporal. For example, consider a simple model where the position of the arm depends on the position of the torso, and the position of the leg depends on the position of the torso. We can build a trellis in which each node represents a correspondence between an image token and a body part, and do dynamic programming (DP) on this trellis.
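Because the arm and the leg each depend only on the torso, the model is a star-shaped tree: once the torso's image token is fixed, the arm and the leg can be matched independently, so DP costs far less than trying every combination. A minimal sketch, assuming hypothetical image tokens (2D positions), made-up unary matching costs, and a hypothetical pairwise cost equal to the squared deviation of a limb's offset from an ideal offset relative to the torso.

```python
# Hypothetical candidate image tokens (x, y) for each body part.
TOKENS = {
    "torso": [(0, 0), (5, 0)],
    "arm": [(1, 1), (6, 1), (3, 4)],
    "leg": [(0, -2), (5, -2)],
}
# Hypothetical cost of assigning each part to each token (lower = better match).
UNARY = {
    "torso": [1.0, 0.8],
    "arm": [0.5, 0.9, 0.2],
    "leg": [0.7, 0.6],
}
IDEAL_OFFSET = {"arm": (1, 1), "leg": (0, -2)}  # hypothetical expected offset from torso


def pair_cost(part, t_tok, c_tok):
    """Squared deviation of the child's offset from its ideal offset relative to the torso."""
    dx = c_tok[0] - t_tok[0] - IDEAL_OFFSET[part][0]
    dy = c_tok[1] - t_tok[1] - IDEAL_OFFSET[part][1]
    return dx * dx + dy * dy


def best_assignment():
    """DP on the tree: for each torso token, optimize every child part independently."""
    best = None
    for ti, t_tok in enumerate(TOKENS["torso"]):
        total = UNARY["torso"][ti]
        choice = {"torso": ti}
        for part in ("arm", "leg"):
            ci = min(range(len(TOKENS[part])),
                     key=lambda k: UNARY[part][k] + pair_cost(part, t_tok, TOKENS[part][k]))
            total += UNARY[part][ci] + pair_cost(part, t_tok, TOKENS[part][ci])
            choice[part] = ci
        if best is None or total < best[0]:
            best = (total, choice)
    return best


cost, choice = best_assignment()
print(cost, choice)
```

Here the work grows with (torso tokens) × (arm tokens + leg tokens) rather than with the product of all three counts, which is what makes the DP attractive when each part has many candidate tokens.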


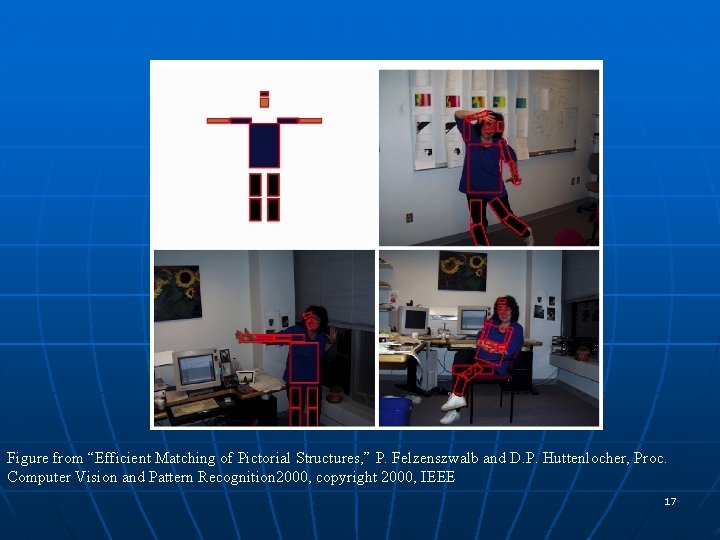

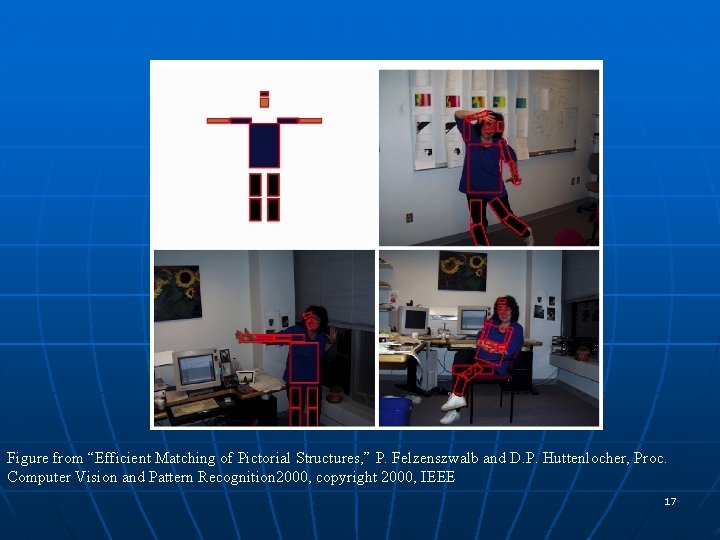

Figure from “Efficient Matching of Pictorial Structures,” P. Felzenszwalb and D. P. Huttenlocher, Proc. Computer Vision and Pattern Recognition 2000, copyright 2000, IEEE

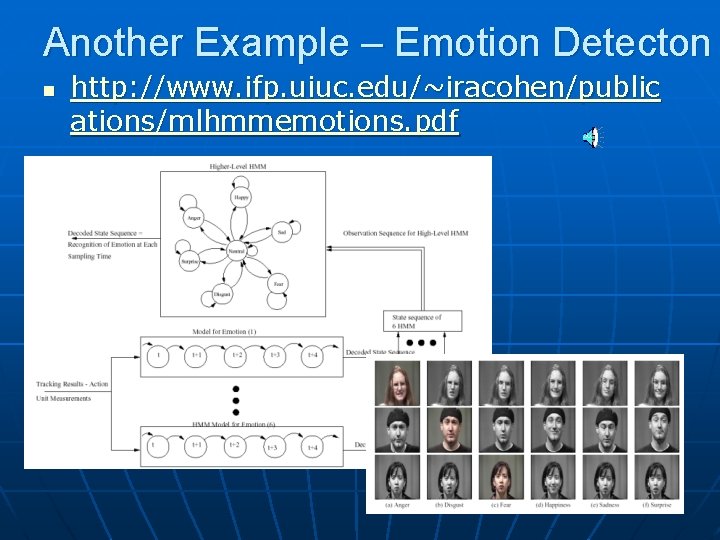

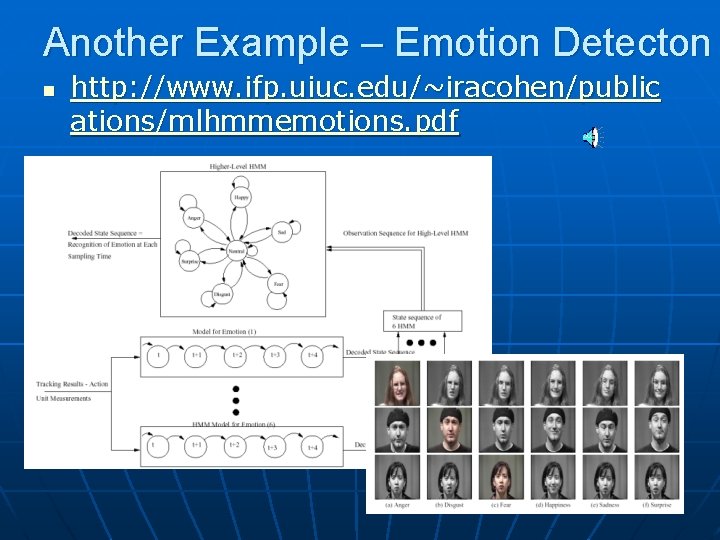

Another Example: Emotion Detection
• http://www.ifp.uiuc.edu/~iracohen/publications/mlhmmemotions.pdf

Advantage of HMM
• It does not just use the current state to do recognition; it also looks at the previous state(s) to understand what is going on.
• This is a powerful idea when such temporal dependencies exist.