Recent Advances in Neural Architecture Search Hao Chen

- Slides: 21

Recent Advances in Neural Architecture Search
Hao Chen
hao.chen01@adelaide.edu.au

Introduction to NAS

- Automate the design of artificial neural networks
- Manually designing neural networks can be time-consuming and error-prone
- Growing interest in neural architecture search (NAS) for
  - Image processing
  - Language modelling

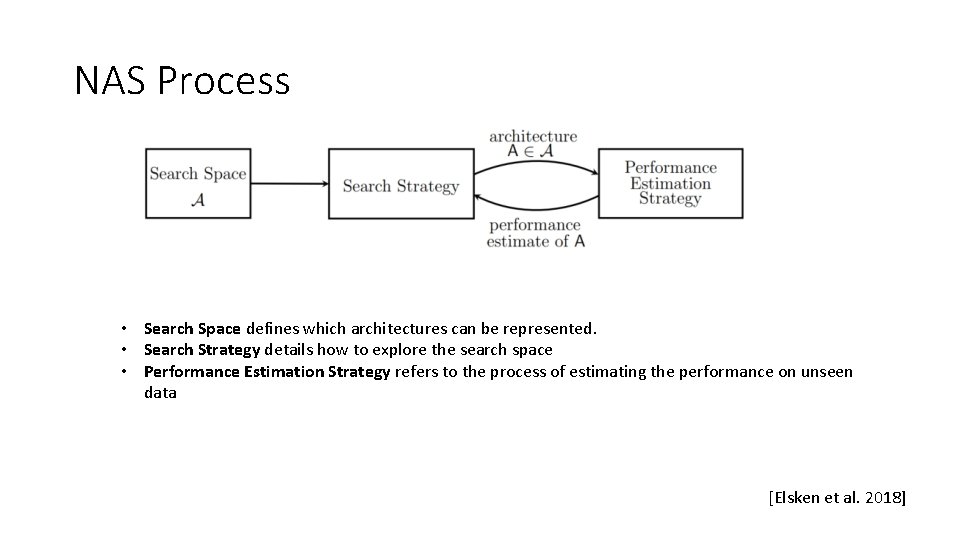

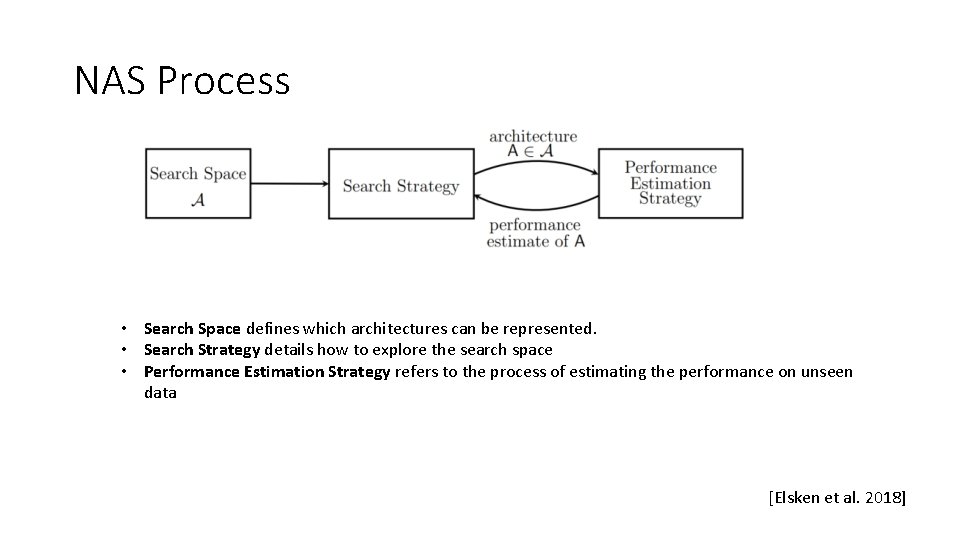

NAS Process

- Search Space defines which architectures can be represented.
- Search Strategy details how to explore the search space.
- Performance Estimation Strategy refers to the process of estimating the performance on unseen data.

[Elsken et al. 2018]
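The three components above can be sketched as a minimal random-search loop. Everything in it is an illustrative assumption rather than material from the slides: the toy search space, the names `sample_architecture` and `estimate_performance`, and the placeholder score, which stands in for training and validating a real network.

```python
import random

# Hypothetical toy search space: one kernel size and one width per layer.
SEARCH_SPACE = {"kernel": [3, 5, 7], "width": [16, 32, 64]}
NUM_LAYERS = 4


def sample_architecture(rng):
    """Search strategy (here: plain random search) draws one point from the space."""
    return [
        {name: rng.choice(choices) for name, choices in SEARCH_SPACE.items()}
        for _ in range(NUM_LAYERS)
    ]


def estimate_performance(arch):
    """Placeholder for performance estimation.

    A real system would train the network and measure validation accuracy;
    this arbitrary score only keeps the loop runnable.
    """
    return sum(layer["width"] / layer["kernel"] for layer in arch)


def random_search(num_trials, seed=0):
    """Sample architectures, score each one, and keep the best seen so far."""
    rng = random.Random(seed)
    best_arch, best_score = None, float("-inf")
    for _ in range(num_trials):
        arch = sample_architecture(rng)
        score = estimate_performance(arch)
        if score > best_score:
            best_arch, best_score = arch, score
    return best_arch, best_score
```

Swapping `sample_architecture` for an evolutionary, RL, or gradient-based proposal step changes the search strategy without touching the other two components.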

![Search Space Design](https://slidetodoc.com/presentation_image_h/75b794d49fd3037c47139c9fc769c2ae/image-4.jpg)

Search Space Design: sequential, multi-branch, and cell search spaces [Elsken et al. 2018]

![Sequential Search Space](https://slidetodoc.com/presentation_image_h/75b794d49fd3037c47139c9fc769c2ae/image-5.jpg)

Sequential Search Space

- ProxylessNAS [Cai et al. 2019]
  - Choose different kernel sizes for MobileNetV2 blocks
- Uniform [Guo et al. 2019] / DetNAS [Chen et al. 2019]
  - Choose different kernel sizes for ShuffleNetV2 blocks

![Multi-Branch Search Space](https://slidetodoc.com/presentation_image_h/75b794d49fd3037c47139c9fc769c2ae/image-6.jpg)

Multi-Branch Search Space

- NAS with RL [Zoph and Le 2017]
  - Sequential layers with skip connections learned with RNN self-attention
- DPC [Chen et al. 2018]
  - 5 branches of 3x3 conv with different dilation rates
- NAS-FPN [Ghiasi et al. 2019]

![Cell Search Space](https://slidetodoc.com/presentation_image_h/75b794d49fd3037c47139c9fc769c2ae/image-7.jpg)

Cell Search Space

- NASNet [Zoph et al. 2018]
  - Normal + reduction cell
  - 7x speed-up with better performance
- DARTS-like

![Search Strategies](https://slidetodoc.com/presentation_image_h/75b794d49fd3037c47139c9fc769c2ae/image-8.jpg)

Search Strategies

- Random Search
  - SMASH [Brock et al. 2017] / One-Shot [Bender et al. 2018]
- Evolutionary Algorithm
  - Regularized evolution [Real et al. 2018]
- Reinforcement Learning
  - REINFORCE: [Zoph and Le 2017], [Pham et al. 2018 1]
  - Proximal policy optimization (PPO): [Zoph et al. 2018]
- Bayesian Optimization
  - GP with string kernel
  - Vizier [Chen et al. 2018]
  - Guided ES [Liu et al. 2018 2]
  - Hyperband Bayesian optimization [Wang et al. 2018]
  - BO and optimal transport [Kandasamy et al. 2018]
- Gradient Based Optimization
  - SMASH [Brock et al. 2017]
  - ENAS [Pham et al. 2018 2]
  - DARTS [Liu et al. 2018 1]
  - …

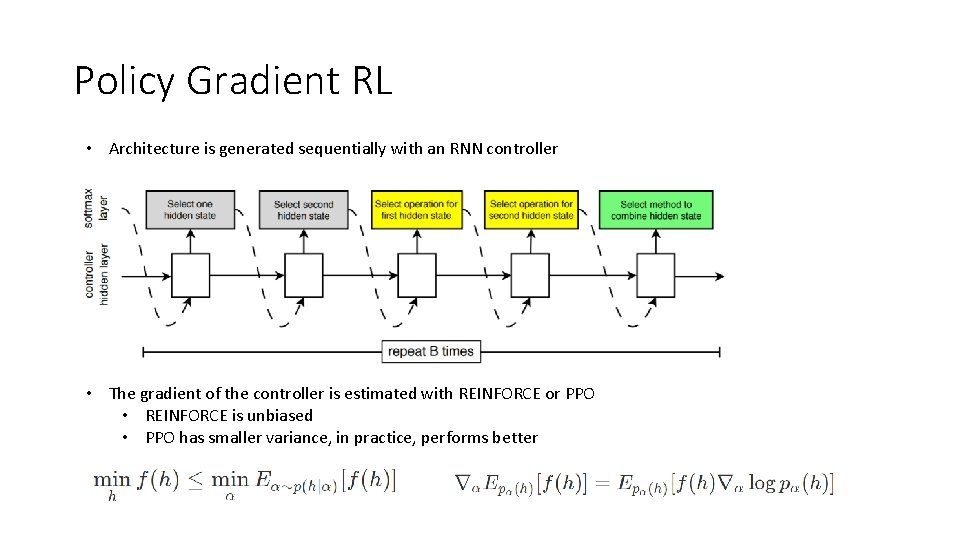

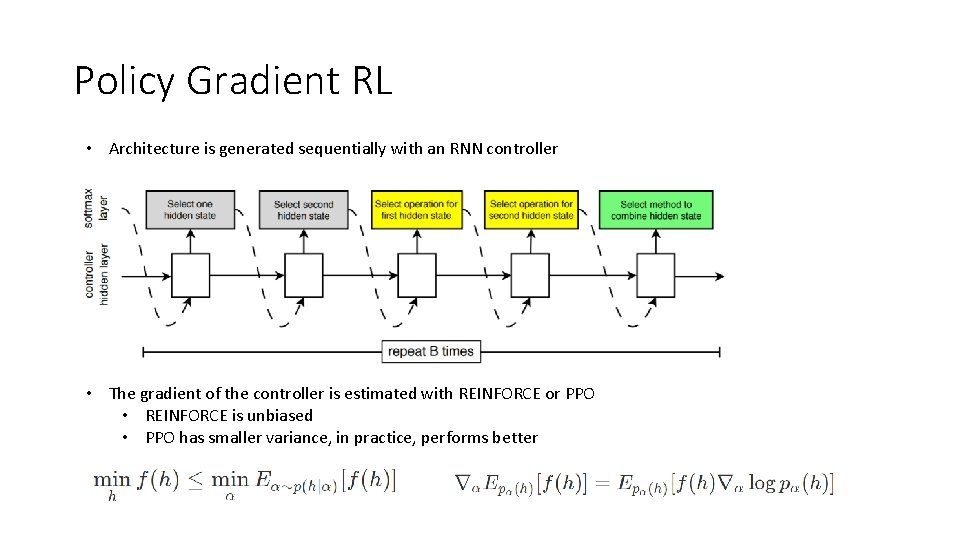

Policy Gradient RL

- Architecture is generated sequentially with an RNN controller
- The gradient of the controller is estimated with REINFORCE or PPO
  - REINFORCE is unbiased
  - PPO has smaller variance and, in practice, performs better
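A minimal sketch of the REINFORCE estimator, under strong simplifying assumptions: the controller is a single softmax over three candidate choices instead of an RNN, the rewards are made-up constants standing in for validation accuracy, and the moving-average baseline is a common variance-reduction choice that the slide does not mention. All function names are hypothetical.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_step(logits, reward_fn, rng, baseline, lr=0.1):
    """One REINFORCE update for a single categorical decision."""
    probs = softmax(logits)
    action = rng.choices(range(len(logits)), weights=probs)[0]
    reward = reward_fn(action)
    advantage = reward - baseline
    # For a softmax policy: d log p(action) / d logit_k = 1[k == action] - p_k
    new_logits = [
        x + lr * advantage * ((1.0 if k == action else 0.0) - probs[k])
        for k, x in enumerate(logits)
    ]
    return new_logits, reward


def toy_reward(action):
    """Hypothetical validation accuracies of three candidate architectures."""
    return [0.2, 0.9, 0.5][action]


def train_controller(num_steps=2000, seed=0):
    """Run REINFORCE with a moving-average baseline; return final probabilities."""
    rng = random.Random(seed)
    logits = [0.0, 0.0, 0.0]
    baseline = 0.0
    for _ in range(num_steps):
        logits, reward = reinforce_step(logits, toy_reward, rng, baseline)
        baseline = 0.9 * baseline + 0.1 * reward
    return softmax(logits)
```

The update uses only sampled rewards and the log-probability gradient, which is why the estimator is unbiased but noisy; PPO replaces this step with a clipped surrogate objective.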

![Evolutionary Strategy](https://slidetodoc.com/presentation_image_h/75b794d49fd3037c47139c9fc769c2ae/image-10.jpg)

Evolutionary Strategy

- AmoebaNet [Real et al. 2018]
  - Aging evolution to explore the space more
  - Connection/op mutation
- DetNAS [Chen et al. 2019]
  - Single path training of supernet
  - Samples are single paths of supernet
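Aging evolution can be sketched as a queue-based tournament loop. This is a toy illustration, not the AmoebaNet implementation: the op set, the fitness function (a stand-in for validation accuracy), and all sizes are assumptions, and only op mutation is shown, not connection mutation.

```python
import collections
import random

OPS = ["conv3x3", "conv5x5", "maxpool", "identity"]  # hypothetical op set


def toy_fitness(arch):
    """Placeholder for validation accuracy: counts 'conv3x3' entries."""
    return sum(op == "conv3x3" for op in arch)


def mutate(arch, rng):
    """Op mutation: replace the op at one random position."""
    child = list(arch)
    child[rng.randrange(len(child))] = rng.choice(OPS)
    return child


def aging_evolution(cycles=200, population_size=20, sample_size=5,
                    arch_len=6, seed=0):
    """Tournament selection where the oldest member is removed each cycle."""
    rng = random.Random(seed)
    population = collections.deque()
    history = []
    while len(population) < population_size:
        arch = [rng.choice(OPS) for _ in range(arch_len)]
        population.append(arch)
        history.append(arch)
    for _ in range(cycles):
        candidates = rng.sample(list(population), sample_size)
        parent = max(candidates, key=toy_fitness)
        child = mutate(parent, rng)
        population.append(child)
        history.append(child)
        population.popleft()  # discard the oldest member, not the worst one
    return max(history, key=toy_fitness), population
```

Removing the oldest member rather than the worst means every architecture eventually leaves the population, so a good design only persists through its descendants; this is the mechanism that keeps the search exploring.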

Comparison of the strategies

- In the field of hyperparameter optimization and AutoML, a cleverly designed random search algorithm can be very reliable [Bergstra 2012].
- According to case studies comparing EA, RL and BO, there is no guarantee that one search strategy is strictly better than the others [Liu et al. 2018 2, Kandasamy et al. 2018].
- However, they are all consistently better than random search [Real et al. 2018].

Computation limitation

- The original NAS paper: 800 GPUs x 28 days (22,400 GPU-days) on 32x32 CIFAR-10 images
- With cell search space and PPO, reduced to 2000 GPU-days

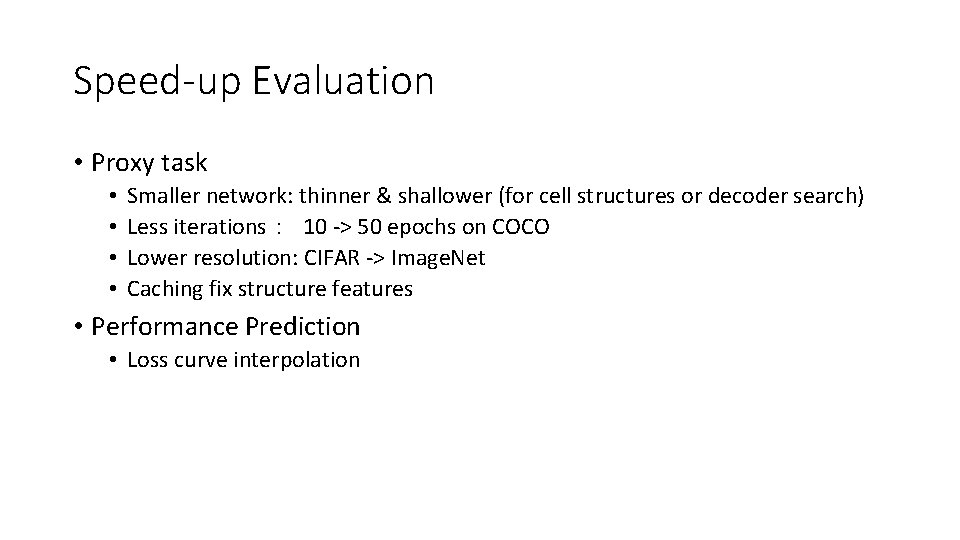

Speed-up Evaluation

- Proxy task
  - Smaller network: thinner & shallower (for cell structures or decoder search)
  - Fewer iterations: 10 -> 50 epochs on COCO
  - Lower resolution: CIFAR -> ImageNet
  - Caching features of fixed structures
- Performance Prediction
  - Loss curve interpolation

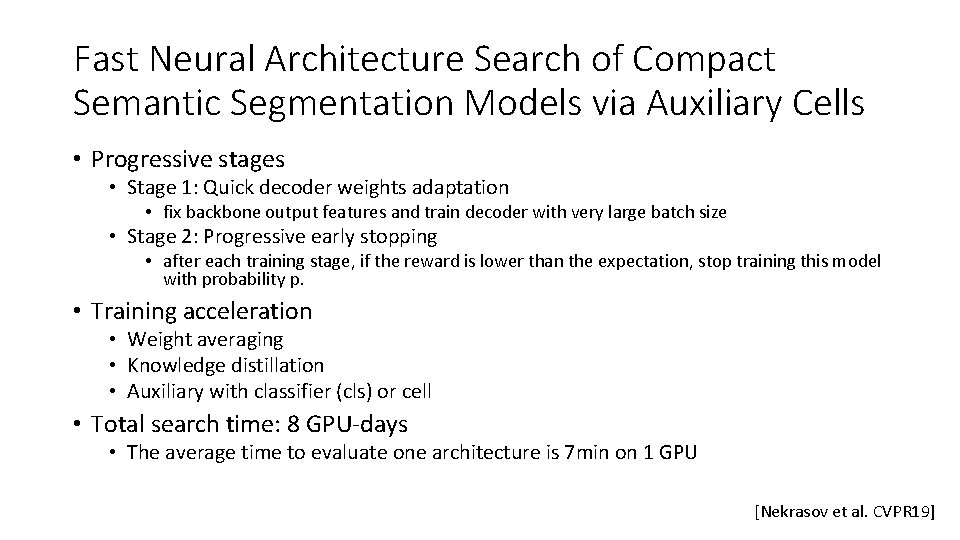

Fast Neural Architecture Search of Compact Semantic Segmentation Models via Auxiliary Cells

- Progressive stages
  - Stage 1: Quick decoder weights adaptation
    - Fix backbone output features and train decoder with very large batch size
  - Stage 2: Progressive early stopping
    - After each training stage, if the reward is lower than the expectation, stop training this model with probability p
- Training acceleration
  - Weight averaging
  - Knowledge distillation
  - Auxiliary with classifier (cls) or cell
- Total search time: 8 GPU-days
- The average time to evaluate one architecture is 7 min on 1 GPU

[Nekrasov et al. CVPR 19]
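The progressive early stopping rule can be sketched as below. The slide does not say how the expectation is computed, so `expected_rewards` is a hypothetical per-stage input, and the function name is illustrative rather than taken from the paper.

```python
import random


def train_with_early_stopping(stage_rewards, expected_rewards, p, rng):
    """Return how many training stages ran before the candidate was stopped.

    stage_rewards[i] is the reward observed after stage i, and
    expected_rewards[i] is the (hypothetical) expectation it is compared to.
    """
    completed = 0
    for reward, expected in zip(stage_rewards, expected_rewards):
        completed += 1
        # Below expectation: abandon this candidate with probability p.
        if reward < expected and rng.random() < p:
            break
    return completed
```

With `p` below 1, a weak candidate still has a chance to continue, which trades some wasted compute for a lower risk of discarding an architecture that merely starts slowly.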

![One-Shot NAS](https://slidetodoc.com/presentation_image_h/75b794d49fd3037c47139c9fc769c2ae/image-15.jpg)

One-Shot NAS

- DARTS [Liu et al. 2018 1]
  - Gradient based bi-level optimization
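The continuous relaxation that makes DARTS differentiable can be sketched on scalars: each edge outputs a softmax-weighted sum of all candidate operations, and the final architecture keeps the operation with the largest weight. The candidate ops and function names here are illustrative assumptions. The bi-level part, which alternates network-weight updates on training data with architecture-weight updates on validation data, is not shown.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical candidate operations acting on a scalar "feature".
CANDIDATE_OPS = {
    "identity": lambda x: x,
    "double": lambda x: 2.0 * x,
    "zero": lambda x: 0.0,
}


def mixed_op(x, alphas):
    """Softmax-weighted sum of all candidate ops (the continuous relaxation)."""
    weights = softmax(alphas)
    return sum(w * op(x) for w, op in zip(weights, CANDIDATE_OPS.values()))


def discretize(alphas):
    """After search, keep only the op with the largest architecture weight."""
    names = list(CANDIDATE_OPS)
    return names[max(range(len(alphas)), key=lambda k: alphas[k])]
```

Because every candidate op runs on every forward pass, memory grows with the number of candidates, which is the cost that single-path methods try to avoid.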

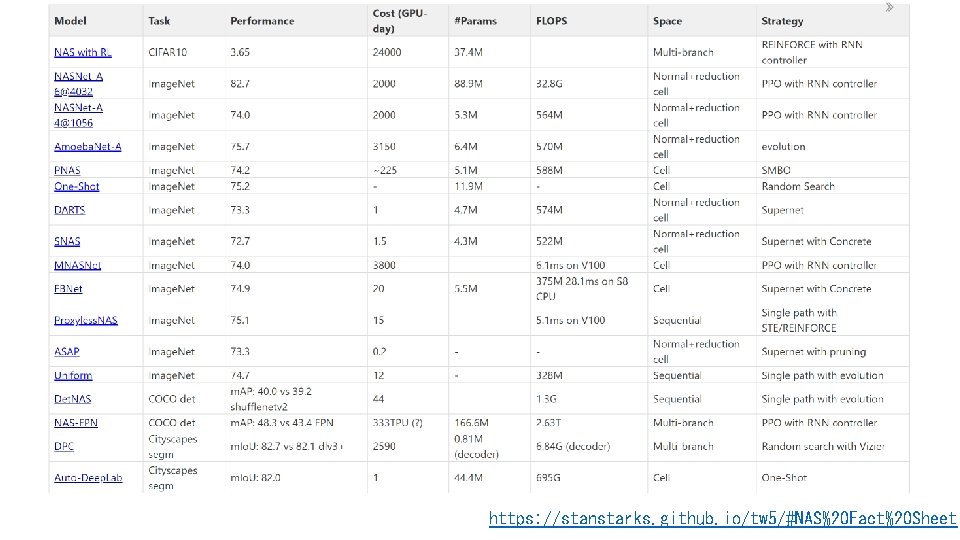

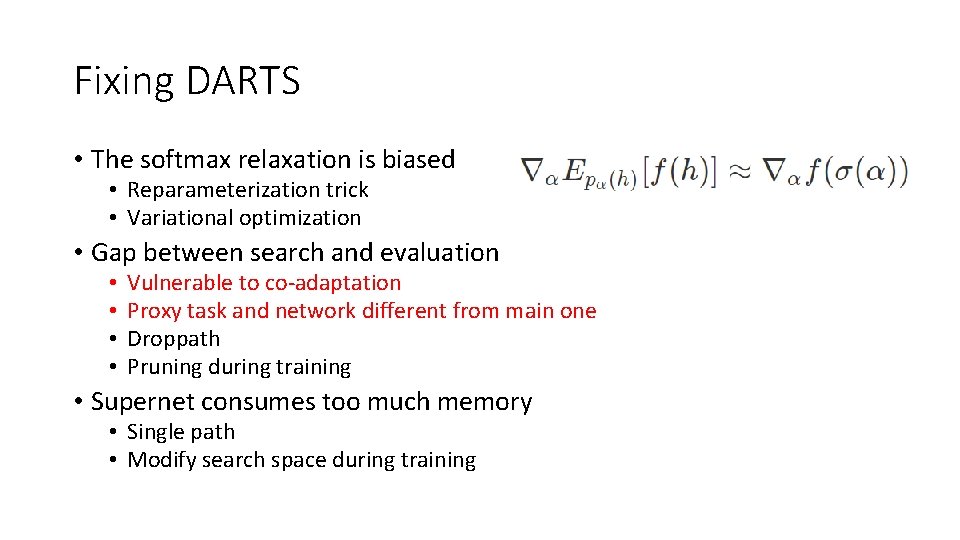

Fixing DARTS

- The softmax relaxation is biased
  - Reparameterization trick
  - Variational optimization
- Gap between search and evaluation
  - Vulnerable to co-adaptation
  - Proxy task and network different from main one
  - Droppath
  - Pruning during training
- Supernet consumes too much memory
  - Single path
  - Modify search space during training

![Better Gradient Estimate?](https://slidetodoc.com/presentation_image_h/75b794d49fd3037c47139c9fc769c2ae/image-17.jpg)

Better Gradient Estimate?

- Temperature dependent sigmoid [Noy et al. 2019]
- Gumbel-softmax trick [Xie et al. 2019]
  - As t -> 0, the bias becomes smaller but the variance goes to infinity
- REBAR/RELAX
  - Unbiased, low-variance estimator
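A minimal sketch of Gumbel-softmax sampling, written with the standard library only. The function name and the clamp on the uniform draw are assumptions made for this example; the sketch shows the sampling step, not the gradient computation used in SNAS.

```python
import math
import random


def gumbel_softmax_sample(logits, temperature, rng):
    """Draw one relaxed one-hot sample with the Gumbel-softmax trick."""
    noisy = []
    for logit in logits:
        u = max(rng.random(), 1e-12)  # guard against log(0)
        gumbel = -math.log(-math.log(u))  # Gumbel(0, 1) noise
        noisy.append((logit + gumbel) / temperature)
    # Stable softmax over the perturbed, temperature-scaled logits.
    m = max(noisy)
    exps = [math.exp(v - m) for v in noisy]
    total = sum(exps)
    return [e / total for e in exps]
```

For the same noise draw, lowering the temperature pushes the sample toward a one-hot vector, which is the low-bias, high-variance regime the slide describes for t -> 0.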

![Dealing with Evaluation Gap](https://slidetodoc.com/presentation_image_h/75b794d49fd3037c47139c9fc769c2ae/image-18.jpg)

Dealing with Evaluation Gap

- One-Shot [Bender et al. 2018]
  - Linear scheduled droppath
- ASAP [Noy et al. 2019]
  - Pruning during training with threshold 0.4/N on connection weights
- PDARTS [Chen et al. 2019 2]
  - Growing the search space on the fly
- Architecture parameters learning is difficult
  - Variational optimization or Bayesian network
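Two of these ideas are simple enough to sketch. Both functions rest on assumptions the slide does not spell out: the drop-path rate is taken to increase linearly from zero over training, and N is taken to be the number of connections currently competing on an edge. The function names are hypothetical.

```python
def linear_drop_path_rate(step, total_steps, final_rate):
    """Drop-path probability that grows linearly from 0 to final_rate."""
    fraction = min(max(step / total_steps, 0.0), 1.0)
    return final_rate * fraction


def prune_connections(weights, factor=0.4):
    """Keep connections whose weight is at least factor / N.

    N is assumed to be the number of connections passed in; weights maps a
    connection name to its (softmax-normalized) architecture weight.
    """
    threshold = factor / len(weights)
    return {name: w for name, w in weights.items() if w >= threshold}
```

Tying the threshold to 1/N means a connection is pruned only when its weight falls well below the uniform share, so the bar rises automatically as fewer connections remain.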

![Single Path Training](https://slidetodoc.com/presentation_image_h/75b794d49fd3037c47139c9fc769c2ae/image-19.jpg)

Single Path Training

- Cell search space
  - ENAS [Pham et al. 2018 2]
  - Unstable batch feature statistics
- Sequential search space
  - ProxylessNAS
    - STE/REINFORCE
  - Uniform/DetNAS
    - Uniform sampling of paths
    - Evolution search
  - w/o proxy task -> direct hardware
  - Limited in search space
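Uniform sampling of single paths can be sketched for a toy sequential supernet. The layer count and the kernel-size labels are hypothetical; in a real supernet each label would select a block whose weights are updated only when that path is sampled.

```python
import random

# Hypothetical sequential supernet: each layer offers several candidate blocks.
SUPERNET_CHOICES = [
    ["k3", "k5", "k7"],
    ["k3", "k5", "k7"],
    ["k3", "k5", "k7"],
]


def sample_single_path(rng):
    """Pick one candidate block per layer, uniformly at random."""
    return [rng.choice(layer) for layer in SUPERNET_CHOICES]


def count_paths():
    """Number of distinct single paths through the supernet."""
    total = 1
    for layer in SUPERNET_CHOICES:
        total *= len(layer)
    return total
```

Only one block per layer is active in each training step, so memory stays close to that of a single network instead of growing with the number of candidates.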

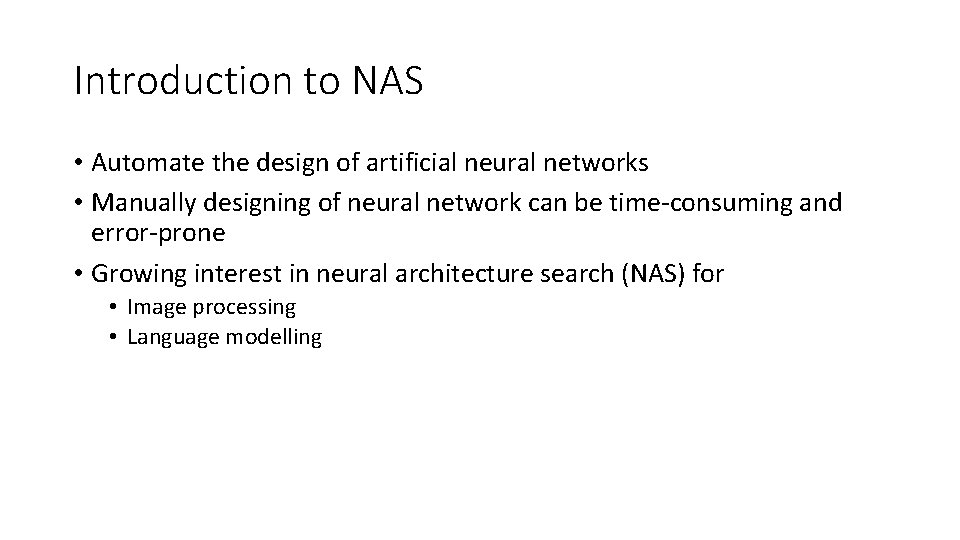

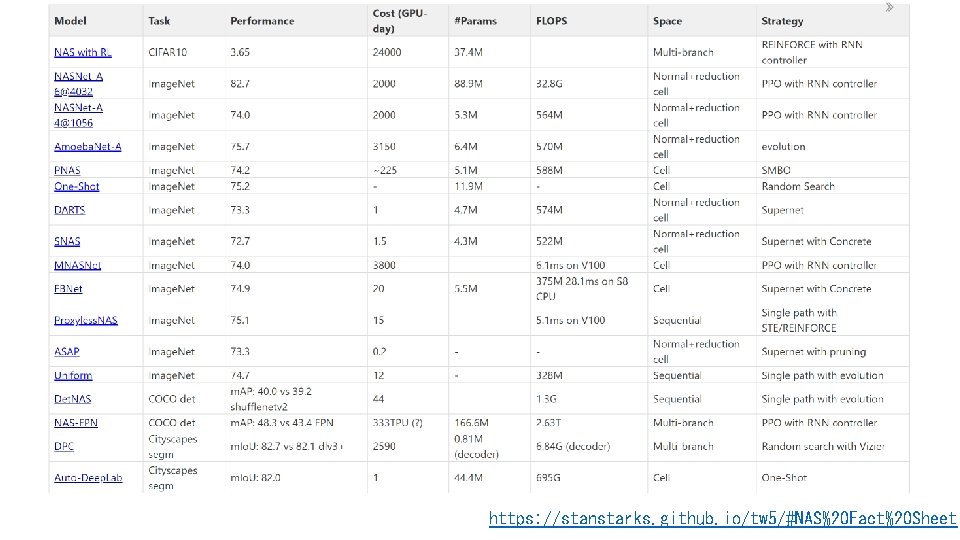

https://stanstarks.github.io/tw5/#NAS%20Fact%20Sheet

![References](https://slidetodoc.com/presentation_image_h/75b794d49fd3037c47139c9fc769c2ae/image-21.jpg)

- [Anonymous 2018] Single Shot Neural Architecture Search Via Direct Sparse Optimization
- [Bender et al. 2018] Understanding and Simplifying One-Shot Architecture Search
- [Bergstra 2012] Random search Hyperparameter Optimization, JMLR 2012
- [Brock et al. 2017] SMASH: One-shot model architecture search through hypernetworks
- [Cai et al. 2019] ProxylessNAS: Direct Neural Architecture Search on Target Task and Hardware, ICLR 2019
- [Chen et al. 2018] Searching for Efficient Multi-Scale Architectures for Dense Image Prediction, NIPS 2018
- [Chen et al. 2019] DetNAS: Neural Architecture Search on Object Detection
- [Chen et al. 2019 2] Progressive Differentiable Architecture Search: Bridging the Depth Gap between Search and Evaluation
- [Elsken et al. 2018] Neural architecture search: A survey, arXiv preprint arXiv:1808.05377 (2018)
- [Ghiasi et al. 2019] NAS-FPN: Learning Scalable Feature Pyramid Architecture for Object Detection, CVPR 2019
- [Guo et al. 2019] Single Path One-Shot Neural Architecture Search with Uniform Sampling
- [Kandasamy et al. 2018] Neural Architecture Search with Bayesian Optimization and Optimal Transport
- [Liu et al. 2018 1] DARTS: Differentiable Architecture Search
- [Liu et al. 2018 2] Progressive Neural Architecture Search
- [Nekrasov et al. 2018] Fast Neural Architecture Search of Compact Semantic Segmentation Models via Auxiliary Cells
- [Noy et al. 2019] ASAP: Architecture Search, Anneal and Prune
- [Pham et al. 2018 1] Faster Discovery of Neural Architectures by Searching for Paths in a Large Model, ICLR 2018
- [Pham et al. 2018 2] Efficient Neural Architecture Search via Parameter Sharing
- [Real et al. 2018] Regularized Evolution for Image Classifier Architecture Search
- [Schulman et al. 2017] Proximal policy optimization algorithms, arXiv preprint arXiv:1707.06347 (2017)
- [Wang et al. 2018] Combination of Hyperband Bayesian Optimization for Hyperparameter Optimization in Deep Learning
- [Xie et al. 2019] SNAS: Stochastic Neural Architecture Search, ICLR 2019
- [Zoph and Le 2017] Neural Architecture Search with Reinforcement Learning, ICLR 2017
- [Zoph et al. 2018] Learning Transferable Architectures for Scalable Image Recognition